#3D Reconstruction

Text

Secrets Hopping

Using a non-destructive, high-res method called diffusible iodine contrast-enhanced microCT (diceCT) to image and digitally dissect the limb musculature of 30 species of frogs reveals relationships between anatomical complexity and function – providing insights that could inform muscle and locomotion research in humans

Read the published research article here

Image from work by Alice Leavey, Christopher T. Richards and Laura B. Porro

Centre for Integrative Anatomy, Cell and Developmental Biology, University College London; Structure and Motion Laboratory, Royal Veterinary College—Camden Campus, Comparative Biomedical Sciences, London, UK

Image contributed and copyright held by Alice Leavey

Research published in Journal of Anatomy, August 2024

You can also follow BPoD on Instagram, Twitter and Facebook

#science#biomedicine#biology#frogs#cells#muscles#locomotion#anatomy#micro CT#digital dissection#3d reconstruction

9 notes

·

View notes

Text

#fractal#fractal art#digital art#orbit trap#quaternion Julia set#image stack#3d reconstruction#volume viewer#ImageJ#original content

8 notes

·

View notes

Text

18 notes

·

View notes

Text

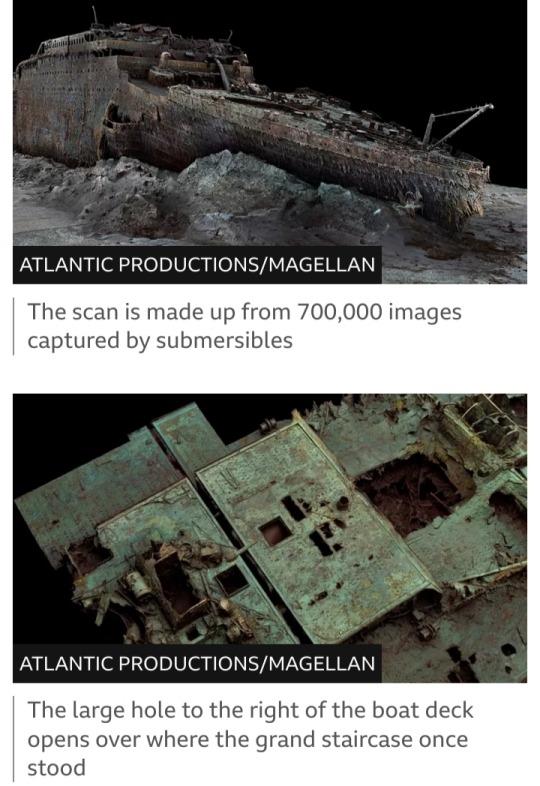

Titanic: First ever full-sized scans reveal wreck as never seen before

Titanic departing Southampton on 10 April 1912

By Rebecca Morelle and Alison Francis

BBC News Climate and Science

17 May 2023

The world's most famous shipwreck has been revealed as never seen before.

The first full-sized digital scan of the Titanic, which lies 3,800m (12,500ft) down in the Atlantic, has been created using deep-sea mapping.

It provides a unique 3D view of the entire ship, enabling it to be seen as if the water has been drained away.

The hope is that this will shed new light on exactly what happened to the liner, which sank on 15 April 1912.

More than 1,500 people died when the ship struck an iceberg on its maiden voyage from Southampton to New York.

"There are still questions, basic questions, that need to be answered about the ship," Parks Stephenson, a Titanic analyst, told BBC News.

He said the model was "one of the first major steps to driving the Titanic story towards evidence-based research - and not speculation."

The Titanic has been extensively explored since the wreck was discovered in 1985.

But it's so huge that in the gloom of the deep, cameras can only ever show us tantalizing snapshots of the decaying ship - never the whole thing.

The new scan captures the wreck in its entirety, revealing a complete view of the Titanic.

It lies in two parts, with the bow and the stern separated by about 800m (2,600ft). A huge debris field surrounds the broken vessel.

The scan was carried out in summer 2022 by Magellan Ltd, a deep-sea mapping company, and Atlantic Productions, who are making a documentary about the project.

Submersibles, remotely controlled by a team on board a specialist ship, spent more than 200 hours surveying the length and breadth of the wreck.

They took more than 700,000 images from every angle, creating an exact 3D reconstruction.

Magellan's Gerhard Seiffert, who led the planning for the expedition, said it was the largest underwater scanning project he'd ever undertaken.

"The depth of it, almost 4,000m, represents a challenge, and you have currents at the site, too - and we're not allowed to touch anything so as not to damage the wreck," he explained.

"And the other challenge is that you have to map every square centimetre - even uninteresting parts, like on the debris field you have to map mud, but you need this to fill in between all these interesting objects."

The scan shows both the scale of the ship, as well as some minute details, such as the serial number on one of the propellers.

The bow, now covered in stalactites of rust, is still instantly recognisable even 100 years after the ship was lost.

Sitting on top is the boat deck, where a gaping hole provides a glimpse into a void where the grand staircase once stood.

The stern though, is a chaotic mess of metal. This part of the ship collapsed as it corkscrewed into the sea floor.

In the surrounding debris field, items are scattered, including ornate metalwork from the ship, statues and unopened champagne bottles.

There are also personal possessions, including dozens of shoes resting on the sediment.

Parks Stephenson, who has studied the Titanic for many years, said he was "blown away" when he first saw the scans.

"It allows you to see the wreck as you can never see it from a submersible, and you can see the wreck in its entirety. You can see it in context and perspective. And what it's showing you now is the true state of the wreck."

He said that studying the scans could offer new insight into what happened to the Titanic on that fateful night of 1912.

"We really don't understand the character of the collision with the iceberg. We don't even know if she hit it along the starboard side, as is shown in all the movies - she might have grounded on the iceberg," he explained.

Studying the stern, he added, could reveal the mechanics of how the ship struck the sea floor.

The sea is taking its toll on the wreck, microbes are eating away at it and parts are disintegrating.

Historians are well aware that time is running out to fully understand the maritime disaster.

But the scan now freezes the wreck in time and will allow experts to pore over every tiny detail.

The hope is that the Titanic may yet give up its secrets.

#Titanic#shipwreck#deep-sea mapping#RMS Titanic#Magellan Ltd#Atlantic Productions#Gerhard Seiffert#Parks Stephenson#White Star Line#North Atlantic Ocean#15 April 1912#iceberg#digital scan#3D reconstruction

23 notes

·

View notes

Text

Iwazaru (jap. 言わざる) - “does not speak”

https://skfb.ly/oWPNS

#photography#photogrammetry#3d model#3d reconstruction#cultural heritage#anaglyph#3D#2D#philosophy#japan#asia

0 notes

Text

Researchers leverage shadows to model 3D scenes, including objects blocked from view

New Post has been published on https://thedigitalinsider.com/researchers-leverage-shadows-to-model-3d-scenes-including-objects-blocked-from-view/

Researchers leverage shadows to model 3D scenes, including objects blocked from view

Imagine driving through a tunnel in an autonomous vehicle, but unbeknownst to you, a crash has stopped traffic up ahead. Normally, you’d need to rely on the car in front of you to know you should start braking. But what if your vehicle could see around the car ahead and apply the brakes even sooner?

Researchers from MIT and Meta have developed a computer vision technique that could someday enable an autonomous vehicle to do just that.

They have introduced a method that creates physically accurate, 3D models of an entire scene, including areas blocked from view, using images from a single camera position. Their technique uses shadows to determine what lies in obstructed portions of the scene.

They call their approach PlatoNeRF, based on Plato’s allegory of the cave, a passage from the Greek philosopher’s “Republic” in which prisoners chained in a cave discern the reality of the outside world based on shadows cast on the cave wall.

By combining lidar (light detection and ranging) technology with machine learning, PlatoNeRF can generate more accurate reconstructions of 3D geometry than some existing AI techniques. Additionally, PlatoNeRF is better at smoothly reconstructing scenes where shadows are hard to see, such as those with high ambient light or dark backgrounds.

In addition to improving the safety of autonomous vehicles, PlatoNeRF could make AR/VR headsets more efficient by enabling a user to model the geometry of a room without the need to walk around taking measurements. It could also help warehouse robots find items in cluttered environments faster.

“Our key idea was taking these two things that have been done in different disciplines before and pulling them together — multibounce lidar and machine learning. It turns out that when you bring these two together, that is when you find a lot of new opportunities to explore and get the best of both worlds,” says Tzofi Klinghoffer, an MIT graduate student in media arts and sciences, affiliate of the MIT Media Lab, and lead author of a paper on PlatoNeRF.

Klinghoffer wrote the paper with his advisor, Ramesh Raskar, associate professor of media arts and sciences and leader of the Camera Culture Group at MIT; senior author Rakesh Ranjan, a director of AI research at Meta Reality Labs; as well as Siddharth Somasundaram at MIT, and Xiaoyu Xiang, Yuchen Fan, and Christian Richardt at Meta. The research will be presented at the Conference on Computer Vision and Pattern Recognition.

Shedding light on the problem

Reconstructing a full 3D scene from one camera viewpoint is a complex problem.

Some machine-learning approaches employ generative AI models that try to guess what lies in the occluded regions, but these models can hallucinate objects that aren’t really there. Other approaches attempt to infer the shapes of hidden objects using shadows in a color image, but these methods can struggle when shadows are hard to see.

For PlatoNeRF, the MIT researchers built off these approaches using a new sensing modality called single-photon lidar. Lidars map a 3D scene by emitting pulses of light and measuring the time it takes that light to bounce back to the sensor. Because single-photon lidars can detect individual photons, they provide higher-resolution data.

The researchers use a single-photon lidar to illuminate a target point in the scene. Some light bounces off that point and returns directly to the sensor. However, most of the light scatters and bounces off other objects before returning to the sensor. PlatoNeRF relies on these second bounces of light.

By calculating how long it takes light to bounce twice and then return to the lidar sensor, PlatoNeRF captures additional information about the scene, including depth. The second bounce of light also contains information about shadows.

The system traces the secondary rays of light — those that bounce off the target point to other points in the scene — to determine which points lie in shadow (due to an absence of light). Based on the location of these shadows, PlatoNeRF can infer the geometry of hidden objects.

The lidar sequentially illuminates 16 points, capturing multiple images that are used to reconstruct the entire 3D scene.

“Every time we illuminate a point in the scene, we are creating new shadows. Because we have all these different illumination sources, we have a lot of light rays shooting around, so we are carving out the region that is occluded and lies beyond the visible eye,” Klinghoffer says.

A winning combination

Key to PlatoNeRF is the combination of multibounce lidar with a special type of machine-learning model known as a neural radiance field (NeRF). A NeRF encodes the geometry of a scene into the weights of a neural network, which gives the model a strong ability to interpolate, or estimate, novel views of a scene.

This ability to interpolate also leads to highly accurate scene reconstructions when combined with multibounce lidar, Klinghoffer says.

“The biggest challenge was figuring out how to combine these two things. We really had to think about the physics of how light is transporting with multibounce lidar and how to model that with machine learning,” he says.

They compared PlatoNeRF to two common alternative methods, one that only uses lidar and the other that only uses a NeRF with a color image.

They found that their method was able to outperform both techniques, especially when the lidar sensor had lower resolution. This would make their approach more practical to deploy in the real world, where lower resolution sensors are common in commercial devices.

“About 15 years ago, our group invented the first camera to ‘see’ around corners, that works by exploiting multiple bounces of light, or ‘echoes of light.’ Those techniques used special lasers and sensors, and used three bounces of light. Since then, lidar technology has become more mainstream, that led to our research on cameras that can see through fog. This new work uses only two bounces of light, which means the signal to noise ratio is very high, and 3D reconstruction quality is impressive,” Raskar says.

In the future, the researchers want to try tracking more than two bounces of light to see how that could improve scene reconstructions. In addition, they are interested in applying more deep learning techniques and combining PlatoNeRF with color image measurements to capture texture information.

“While camera images of shadows have long been studied as a means to 3D reconstruction, this work revisits the problem in the context of lidar, demonstrating significant improvements in the accuracy of reconstructed hidden geometry. The work shows how clever algorithms can enable extraordinary capabilities when combined with ordinary sensors — including the lidar systems that many of us now carry in our pocket,” says David Lindell, an assistant professor in the Department of Computer Science at the University of Toronto, who was not involved with this work.

#3d#3D Reconstruction#affiliate#ai#AI models#AI research#Algorithms#ambient#approach#ar#Artificial Intelligence#Arts#author#autonomous vehicles#Best Of#Cameras#Capture#cave#challenge#Color#computer#Computer Science#Computer science and technology#Computer vision#conference#crash#Dark#data#Deep Learning#detection

0 notes

Text

youtube

1 note

·

View note

Text

The Technologies Involved With Metaverse

Today, the Metaverse Has Become A Reality And Is Growing At An Unprecedented Pace. However, the world has seen how such projects appear and remain in distant memory. For the Metaverse to be the future of social media, it must be able to withstand change. This can only happen by urgently addressing the issues plaguing the metaverse and instilling mass confidence in the project. There are tremendous opportunities for the metaverse to grow and expand, but the question today is whether the metaverse will rise to the top or be forgotten as a failed project that has lost the world’s trust.

Read more about this article here: https://www.cryptorial.co/metaverse/the-technologies-involved-with-metaverse/

#cryptocurrency#metaverse technology#mixed reality#augmented reality#artificial intellegence#3D Reconstruction#internet of things#IoT#blockchain technology#edge computing

1 note

·

View note

Text

Pre-wedding photoshoot for their very gay, Ferrari-themed, wedding

#They drive me insane#gosh theyre so meant to be together#theyre literally so gay the photographer asked them to hold hands#just get married already#still waiting for that sex tape#It took me 2 hours to get rid of that fucking horrendous 3d gunk title#I had to reconstruct half of carlos face so im sorry if he looks a little weird#you can close one eye and look at him from far away to and you wouldnt notice#charles leclerc#16#carlos sainz#55#1655#charlos#c2#mine

834 notes

·

View notes

Text

#fractal#fractal art#digital art#orbit trap#quaternion Julia set#image stack#3d reconstruction#volume viewer#ImageJ#original content

7 notes

·

View notes

Text

Some hominids-in-progress I've had on lately: a Neanderthal shifting her weight in preparation to throw a spear, some improvements I made to the meshes and textures for my A. sediba and P. boisei skins, and skins I still have to texture for H. naledi and H. heidelbergensis

Digital painting (Photopea, first image),

Digital sculpture (Blender, last three images),

2024

#works in progress#Hominids#Human evolution#Homo neanderthalensis#Paranthropus boisei#Australopithecus sediba#Homo naledi#Homo heidelbergensis#digital painting#Photopea#digital sculpture#Blender#2d rendering#3d rendering#facial anatomy#comparative anatomy#life restoration of fossil skulls#hominid reconstructions#postcranial reconstruction#craniofacial reconstruction#Christopher Maida Artwork

19 notes

·

View notes

Text

switched my planned research direction entirely bc all the advisors here who do work on that stuff are funded by the US military lol

#fuck u fuck u fuck u#hey 3D reconstruction here i am please treat me niceys#that's not my research direction i just have to learn a lot of that stuff now#lifestyle blogging#i love being in engineering. generally tho robotics is a hard place to work w/out military funding if you're not interested in HRI#im too. autistic and in general reject the premises of much of hri research tbh#so i did expect this. im not thaaaaat cut up

28 notes

·

View notes

Text

Taking a shot in the dark here but does anyone know where I can find 3d models of (dinosaur) skeletal reconstructions? I've found a couple on Cults3D and I've tried MorphoSource but I'm having a rough time using the search features on the latter :/

2 notes

·

View notes

Text

Kikazaru (jap. 聞かざる) - “does not hear”

https://skfb.ly/oWOwQ

#photography#photogrammetry#3d model#3d reconstruction#cultural heritage#anaglyph#3D#2D#philosophy#japan#asia

0 notes

Text

12 of the best books on computer vision

New Post has been published on https://thedigitalinsider.com/12-of-the-best-books-on-computer-vision/

12 of the best books on computer vision

Computer vision is expanding quickly and has the potential to completely change how we interact with technology, being at the forefront of many cutting-edge advancements, from self-driving automobiles to augmented reality.

Reading a computer vision book can be an excellent approach to learning and acquiring insight into this field and its applications.

From the principles of computer vision to more advanced technologies, these books will provide you with a thorough overview of the area and its applications – whether you’re a student, researcher, or professional.

In this article, you’ll find 12 of the best books on computer vision:

Computer Vision: Algorithms and Applications (Texts in Computer Science)

Computer Vision: Principles, Algorithms, Applications, Learning

Multiple View Geometry in Computer Vision

Computer Vision: Models, Learning, and Inference

Computer Vision: A Modern Approach (International Edition)

Practical Deep Learning for Cloud, Mobile, and Edge: Real-World AI & Computer-Vision Projects Using Python, Keras & TensorFlow

Modern Computer Vision with PyTorch: Explore Deep Learning Concepts and Implement Over 50 Real-World Image Applications

Learning OpenCV 4 Computer Vision with Python 3: Get to Grips with Tools, Techniques, and Algorithms for Computer Vision and Machine Learning

Deep Learning for Vision Systems

Concise Computer Vision: An Introduction into Theory and Algorithms

Computer Vision Metrics: Survey, Taxonomy, and Analysis

Programming Computer Vision with Python: Tools and Algorithms for Analyzing Images

Computer Vision: Algorithms and Applications (Texts in Computer Science), by Richard Szeliski

Source: Amazon

This computer vision book looks at the variety of techniques involved in analyzing and interpreting images, and describes real-world applications where vision is used successfully – in both specialized applications and consumer-level tasks.

The book takes a scientific approach to the formulation of computer vision issues, which are analyzed using classical and deep learning models and solved through rigorous engineering principles.

Often referred to as the “bible of computer vision”, it’s a must-read for those at a senior level, as it acts as a general reference text for fundamental techniques.

Computer Vision: Principles, Algorithms, Applications, Learning, by E. R. Davies

Source: Amazon

Davies covers computer vision’s fundamental methodologies while he explores its theoretical side, such as algorithmic and practical design limitations. Made for undergraduate and graduate students, researchers, engineers, and professionals, the book offers an up-to-date approach to modern problems.

The latest edition also includes:

A new chapter on object segmentation and shape models.

Three new chapters on machine learning, two covering basic classification concepts and probabilistic models, the other the principles of deep learning networks.

Personalized programming examples: illustrations, codes, hints, methods, and more.

Discussions on the EM algorithm, RNNs, geometric transformations, semantic segmentation, and more.

Examples and applications of developing real-world computer vision systems.

And more.

Multiple View Geometry in Computer Vision, by Richard Hartley & Andrew Zisserman

Source: Amazon

This textbook covers mathematical principles and techniques of multiple view geometry, a key area in computer vision. It also goes over the basic theory of projective geometry, which is the geometry of image formation, and the estimation of camera motion and structure from image sequences.

You’ll also find insights into image rectification, 3D scene recovery, and stereo correspondence. The book explains how to define objects in algebraic form for more straightforward computation, and it addresses the main geometric principles. It offers you a clear understanding of computer vision’s structure in a real-world scenario.

Computer Vision: Models, Learning, and Inference, by Simon J. D. Prince

Source: Amazon

The book Computer Vision: Models, Learning, and Inference offers a thorough introduction to the subject. The book covers a wide range of topics, such as picture generation, feature detection and extraction, object recognition, motion analysis, and machine learning techniques to enhance computer vision systems’ performance.

Along with a review of current research, it also contains a thorough description of computer vision’s mathematical and statistical underpinnings. The book offers a thorough and current introduction to the discipline and is written with graduate students, researchers, and practitioners of computer vision and related fields in mind.

Computer Vision: A Modern Approach (International Edition), by David A. Forsyth

Source: Amazon

This computer vision book covers the essential ideas and methods of computer vision. It’s divided into four main sections, starting with an introduction to computer vision and a rundown of its foundational mathematical techniques.

Image formation, covering subjects like image sensing, picture processing, and image analysis, is covered in the book’s second section. Object recognition, encompassing subjects like feature detection and matching, object recognition, and object tracking, is covered in the third section of the book.

The book’s concluding section discusses complex subjects including stereo, motion, and scene analysis. The writers illustrate the theories and methods covered in the book using a range of real-world examples and applications.

Practical Deep Learning for Cloud, Mobile, and Edge: Real-World AI & Computer-Vision Projects Using Python, Keras & TensorFlow, by Anirudh Koul, Siddha Ganju & Mehere Kasam

Source: Amazon

This book combines the Python programming language, the Keras and TensorFlow libraries, and several computer vision techniques to walk you through constructing actual projects using deep learning approaches for the cloud, and for mobile and edge devices.

It offers practical examples and code snippets to aid with your comprehension while covering subjects like picture classification, object identification, and video analysis. Developers, data scientists, and engineers who want to understand how to apply deep learning to create useful projects for the cloud, mobile platforms, and edge devices should read this book.

Modern Computer Vision with PyTorch: Explore Deep Learning Concepts and Implement Over 50 Real-World Image Applications, by V Kishore Ayyadevara & Yeshwanth Reddy

Source: Amazon

Many recent developments in various computer vision applications are fueled by deep learning. This book uses a practical way to teach you how to use PyTorch1.x on real-world datasets to solve more than 50 computer vision problems.

Ayyadevara and Reddy take you through how to train a neural network from scratch with NumPy and PyTorch. Plus, how to combine computer vision and NLP to perform object detection, how to deploy a deep learning model on the AWS server by using FastAPI and Docker, and much more.

Source: Amazon

If you want to learn how to use the OpenCV library to develop computer vision and machine learning applications, then this book is for you. Howe includes practical examples and code snippets to help you understand the principles and their applications while covering a wide range of topics. These include image processing, object detection, and machine learning.

The computer vision book is also created for readers with some Python programming knowledge and is based on OpenCV 4, the most recent release of the library.

Deep Learning for Vision Systems, by Mohamed Elgendy

Source: Amazon

Building intelligent, scalable computer vision systems that can recognize and respond to things in pictures, movies, and real life is something you can learn how to do with Deep Learning for Vision Systems.

You’ll understand cutting-edge deep learning techniques such as:

Image classification and captioning

An intro to computer vision

Transfer learning and advanced CNN architectures

Deep learning and neural networks

Concise Computer Vision: An Introduction into Theory and Algorithms, by Reinhard Klette

Source: Amazon

In this book, Klette offers a general introduction to the core ideas in computer vision, emphasizing key mathematical ideas and methods. At the end of each chapter, the book provides programming exercises and quizzes.

The book covers a wide range of related computer vision subjects, including mathematical ideas, picture segmentation, image recognition, and the fundamental parts of a computer vision system.

Computer Vision Metrics: Survey, Taxonomy, and Analysis, by Scott Krig

Source: Amazon

Krig offers a thorough explanation of the many metrics applied in computer vision. The book provides an overview of the state-of-the-art in computer vision metrics, a taxonomy of metrics based on their properties, and an evaluation of their advantages and disadvantages.

The book covers a wide range of subjects, such as performance evaluation for machine learning-based computer vision systems, object recognition and tracking, and image and video quality assessment.

This is a thorough computer vision reference for the Python programming language. The book covers a wide range of topics, including 3D reconstruction, object recognition, feature extraction, and image processing. Additionally, it offers a summary of the most well-known Python libraries and frameworks for computer vision, including OpenCV, scikit-image, and scikit-learn.

It includes more complex topics like feature extraction, object recognition, and 3D reconstruction too, and provides an introduction to computer vision and the fundamentals of image processing, such as image filtering, thresholding, and color spaces.

You can learn and apply the principles covered with the aid of real-world examples and code snippets. The book also provides a detailed explanation of the algorithms used, which is especially helpful if you want to go deeper into the theory behind computer vision.

Want more about computer vision? Check out our Computer Vision in Healthcare eBook:

Computer Vision in Healthcare eBook 2023

Unlock the mystery of the innovative intersection of technology and medicine with our latest eBook, Computer Vision in Healthcare.

#3d#3D Reconstruction#ai#algorithm#Algorithms#amp#Analysis#applications#approach#Art#Article#assessment#augmented reality#Automobiles#AWS#book#Books#change#classical#Cloud#CNN#code#Color#comprehension#computation#computer#Computer Science#Computer vision#cutting#CV

0 notes