#AI agent for web scraping

Text

Unlocking the Web: How to Use an AI Agent for Web Scraping Effectively

In the age of big data, information is one of the most valuable assets an organization can hold. However, accessing and organizing that data, particularly from the web, is no easy feat. This is where AI agents step in. By automating the extraction of valuable data from web pages, AI agents are changing the way businesses, developers, researchers, and marketers work.

In this blog, we’ll explore how you can use an AI agent for web scraping, what benefits it brings, the technologies behind it, and how you can build or invest in the best AI agent for web scraping for your unique needs. We’ll also look at how Custom AI Agent Development is reshaping how companies access data at scale.

What is Web Scraping?

Web scraping is a method of extracting data from websites. It is used for a range of purposes, including price monitoring, lead generation, market research, sentiment analysis, and academic research. Traditionally, web scraping has been done with scripts written in languages such as Python (with libraries like BeautifulSoup or Selenium); however, these scripts require constant maintenance and are often limited in scale and adaptability.
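As a rough illustration of the traditional, script-based approach, here is a minimal sketch that pulls prices out of a page using only Python's standard library. The HTML sample, the "price" class name, and the PriceParser and extract_prices names are assumptions made up for this example; a real site will use different markup.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every element whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = dict(attrs).get("class") or ""
        if "price" in classes.split():
            self._in_price = True

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current price element.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def extract_prices(html):
    """Return the price strings found in the given HTML text."""
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


# Made-up sample page used only for this sketch.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

print(extract_prices(SAMPLE_HTML))
```

The script depends on the exact class name, so a small redesign of the page breaks it. That is the maintenance burden described above.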

What is an AI Agent?

An AI agent is an intelligent software system capable of making decisions and executing tasks on your behalf. In web scraping, AI agents use machine learning, NLP (Natural Language Processing), and automation to navigate websites intelligently, extract structured data, and adjust to changes in website layouts and algorithms.

Unlike crawlers or basic bots, an AI agent doesn’t simply scrape blindly; it takes the context of the page into account, adjusts its behavior, and improves over time.

Why Use an AI Agent for Web Scraping?

1. Adaptability

Websites change regularly, and traditional scrapers break when the page structure changes. AI agents use pattern recognition and contextual awareness to adjust as they go.

2. Scalability

AI agents can process hundreds or even thousands of pages concurrently, thanks to automated decision-making and cloud-based deployment.

3. Data Accuracy

AI improves the accuracy of scraped data by filtering out noise, interpreting human language, and validating the results.

4. Reduced Maintenance

Because AI agents can learn and adapt, they reduce the need for continuous manual updates to scraping scripts.
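The scalability point above comes down to processing many pages concurrently. Here is a minimal sketch using a thread pool from Python's standard library. The fetch_page function is a hypothetical stand-in that returns fake HTML instead of making a network request, and scrape_many and the example.com URLs are likewise assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_page(url):
    """Hypothetical stand-in for a real HTTP fetch; returns fake HTML."""
    return f"<html><title>{url}</title></html>"


def scrape_many(urls, max_workers=8):
    """Fetch pages concurrently and return {url: html} in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input URLs.
        pages = pool.map(fetch_page, urls)
        return dict(zip(urls, pages))


urls = [f"https://example.com/page/{i}" for i in range(1, 6)]
results = scrape_many(urls)
print(len(results))
```

In a real agent, max_workers should stay modest so that the target site is not overloaded.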

Best AI Agent for Web Scraping: What to Look For

If you’re searching for the best AI agent for web scraping, here are the most important features to look for:

NLP Capabilities: To read and interpret unstructured text.

Visual Recognition: To interpret web page layouts and dynamic content.

Automation Tools: To simulate user interactions (clicks, scrolls, etc.).

Scheduling and Monitoring: Built-in tools that manage and automate scraping jobs.

API Integration: To send scraped data directly to your database or application.

Error Handling and Retries: Intelligent fallback mechanisms that help recover from broken sessions or denied access.
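To make the error-handling item above concrete, here is a minimal sketch of retrying with exponential backoff. It is one common fallback strategy, not a description of any particular product. TransientError, with_retries, and flaky_fetch are hypothetical names, and the demo records the delays instead of actually sleeping.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 5xx)."""


def with_retries(task, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call task(); on TransientError wait base_delay * 2**attempt and retry."""
    for attempt in range(max_attempts):
        try:
            return task()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


# Demo: a task that fails twice and then succeeds.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary failure")
    return "page content"


delays = []  # collected instead of sleeping, so the demo runs instantly
result = with_retries(flaky_fetch, sleep=delays.append)
print(result, delays)
```

Passing sleep in as a parameter keeps the function easy to test. In production the default time.sleep applies.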

Custom AI Agent Development: Tailored to Your Needs

Though off-the-shelf AI agents can meet basic needs, Custom AI Agent Development is vital for businesses that require:

Custom-designed logic or workflows for data collection

Compliance with specific data policies or legal requirements

Integration with dashboards or internal tools

Competitive advantage via more efficient data gathering

At Xcelore, we specialize in AI Agent Development tailored for web scraping. Whether you’re monitoring market trends, aggregating news, or extracting leads, we build solutions that scale with your business needs.

How to Build Your Own AI Agent for Web Scraping

If you’re tech-savvy and want to build your own AI agent, here’s a basic outline of the process:

Step 1: Define Your Objective

Know exactly what information you need and which sites it will come from. This is the basis for your design and toolset.

Step 2: Select Your Tools

Popular frameworks and tools include:

Python with libraries such as Scrapy, BeautifulSoup, and Selenium

Playwright or Puppeteer to automate the browser

OpenAI and Hugging Face APIs for NLP and decision-making

Cloud platforms such as AWS, Azure, or Google Cloud for scaling

Step 3: Train Your Agent

Provide your agent with examples of structured versus unstructured information. Machine learning can help it identify patterns and extract the pertinent information.

Step 4: Deploy and Monitor

Run your AI agent on a set schedule. Use alerting, logging, and dashboards to monitor the agent’s performance and verify data accuracy.

Step 5: Optimize and Iterate

Your AI agent should evolve. Use feedback loops and periodic model retraining to improve its reliability and accuracy over time.

Compliance and Ethics

Web scraping raises ethical and legal issues. Be sure that your AI agent:

Respects robots.txt rules

Avoids scraping copyrighted or personal content

Complies with international and local data privacy regulations
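The robots.txt item in the list above can be checked programmatically. Here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content, the "my-scraper" user agent, and the example.com URLs are made up for illustration; a real agent would download the file from the target site before crawling.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real agent would fetch the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser, user_agent, url):
    """Return True if the rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
print(is_allowed(parser, "my-scraper", "https://example.com/menu"))
print(is_allowed(parser, "my-scraper", "https://example.com/private/data"))
```

Running this check before every request means a disallowed path is skipped instead of fetched.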

At Xcelore, we integrate compliance into every AI Agent Development project we manage.

Real-World Use Cases

E-commerce: Tracking prices across competitors’ websites

Finance: Collecting stock news and financial statements

Recruitment: Extracting job postings and resumes

Travel: Monitoring hotel and flight prices

Academic Research: Collecting data at large scale for analysis

In all of these situations, an intelligent and robust AI agent can turn hours of manual data collection into an efficient, scalable process.

Why Choose Xcelore for AI Agent Development?

At Xcelore, we bring together deep expertise in automation, data science, and software engineering to deliver powerful, scalable AI Agent Development Services. Whether you need a quick deployment or a fully custom AI agent development project tailored to your business goals, we’ve got you covered.

We can help:

Find scraping opportunities and devise strategies

Create and design AI agents that adapt to your demands

Maintain compliance and ensure data integrity

Transform unstructured web data into valuable insights

Final Thoughts

Using an AI agent for web scraping isn’t just a technical choice; it’s now a strategic advantage. From better insights to more efficient automation, the advantages are immense. Whether you’re looking to build your own AI agent or invest in the best AI agent for web scraping, the key is a well-planned strategy and skilled execution.

Are you ready to unlock the internet by leveraging intelligent automation?

Contact Xcelore today to get started with your custom AI agent development journey.

#ai agent development services#AI Agent Development#AI agent for web scraping#build your own AI agent

0 notes

Text

There has been a real backlash to AI companies’ mass scraping of the internet to train their tools that can be measured by the number of website owners specifically blocking AI company scraper bots, according to a new analysis by researchers at the Data Provenance Initiative, a group of academics from MIT and universities around the world. The analysis, published Friday, is called “Consent in Crisis: The Rapid Decline of the AI Data Commons,” and has found that, in the last year, “there has been a rapid crescendo of data restrictions from web sources” restricting web scraper bots (sometimes called “user agents”) from training on their websites. Specifically, about 5 percent of the 14,000 websites analyzed had modified their robots.txt file to block AI scrapers. That may not seem like a lot, but 28 percent of the “most actively maintained, critical sources,” meaning websites that are regularly updated and are not dormant, have restricted AI scraping in the last year. An analysis of these sites’ terms of service found that, in addition to robots.txt restrictions, many sites also have added AI scraping restrictions to their terms of service documents in the last year.

[...]

The study, led by Shayne Longpre of MIT and done in conjunction with a few dozen researchers at the Data Provenance Initiative, called this change an “emerging crisis” not just for commercial AI companies like OpenAI and Perplexity, but for researchers hoping to train AI for academic purposes. The New York Times said this shows that the data used to train AI is “disappearing fast.”

23 July 2024

86 notes


Text

Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI Operator charges up to $200/month for limited API calls and restricts access to many tasks like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about Operator from OpenAI, which runs on their computers in the cloud, with you passing private information to their AI to do anything useful. And you pay for the privilege. It is not paranoid to not want your passwords, logins, and personal details to be shared. OpenAI, of course, charges a substantial amount of money for something that limits exactly which sites you can visit, YouTube for example. With this method, you will tell an AI exactly what you want it to do, in plain language, and watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek R1 is only days old. Browser Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. If you look at these instructions and they seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gains practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.8 or higher.

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

• Mac/Linux:

make

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW).

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit the DeepSeek-R1 8B Model Page on Hugging Face.

2. Download the 4-bit quantized model file:

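• Example: DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf. (The exact filename depends on the repository you download from. DeepSeek's official 8B distill is Llama-based, so your file may be named DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf instead. Use your actual filename in the commands that follow.)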

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

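2. Run the model with a sample prompt. Current llama.cpp builds name the command-line binary llama-cli (older builds called it main), and CMake builds place it under build/bin/:

./llama-cli -m DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf -p "What is the capital of France?"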

3. Expected Output:

The capital of France is Paris.

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.

7 notes


Text

Less than three months after Apple quietly debuted a tool for publishers to opt out of its AI training, a number of prominent news outlets and social platforms have taken the company up on it.

WIRED can confirm that Facebook, Instagram, Craigslist, Tumblr, The New York Times, The Financial Times, The Atlantic, Vox Media, the USA Today network, and WIRED’s parent company, Condé Nast, are among the many organizations opting to exclude their data from Apple’s AI training. The cold reception reflects a significant shift in both the perception and use of the robotic crawlers that have trawled the web for decades. Now that these bots play a key role in collecting AI training data, they’ve become a conflict zone over intellectual property and the future of the web.

This new tool, Applebot-Extended, is an extension to Apple’s web-crawling bot that specifically lets website owners tell Apple not to use their data for AI training. (Apple calls this “controlling data usage” in a blog post explaining how it works.) The original Applebot, announced in 2015, initially crawled the internet to power Apple’s search products like Siri and Spotlight. Recently, though, Applebot’s purpose has expanded: The data it collects can also be used to train the foundational models Apple created for its AI efforts.

Applebot-Extended is a way to respect publishers' rights, says Apple spokesperson Nadine Haija. It doesn’t actually stop the original Applebot from crawling the website—which would then impact how that website’s content appeared in Apple search products—but instead prevents that data from being used to train Apple's large language models and other generative AI projects. It is, in essence, a bot to customize how another bot works.

Publishers can block Applebot-Extended by updating a text file on their websites known as the Robots Exclusion Protocol, or robots.txt. This file has governed how bots go about scraping the web for decades—and like the bots themselves, it is now at the center of a larger fight over how AI gets trained. Many publishers have already updated their robots.txt files to block AI bots from OpenAI, Anthropic, and other major AI players.

Robots.txt allows website owners to block or permit bots on a case-by-case basis. While there’s no legal obligation for bots to adhere to what the text file says, compliance is a long-standing norm. (A norm that is sometimes ignored: Earlier this year, a WIRED investigation revealed that the AI startup Perplexity was ignoring robots.txt and surreptitiously scraping websites.)

Applebot-Extended is so new that relatively few websites block it yet. Ontario, Canada–based AI-detection startup Originality AI analyzed a sampling of 1,000 high-traffic websites last week and found that approximately 7 percent, predominantly news and media outlets, were blocking Applebot-Extended. This week, the AI agent watchdog service Dark Visitors ran its own analysis of another sampling of 1,000 high-traffic websites, finding that approximately 6 percent had the bot blocked. Taken together, these efforts suggest that the vast majority of website owners either don’t object to Apple’s AI training practices or are simply unaware of the option to block Applebot-Extended.

In a separate analysis conducted this week, data journalist Ben Welsh found that just over a quarter of the news websites he surveyed (294 of 1,167 primarily English-language, US-based publications) are blocking Applebot-Extended. In comparison, Welsh found that 53 percent of the news websites in his sample block OpenAI’s bot. Google introduced its own AI-specific bot, Google-Extended, last September; it’s blocked by nearly 43 percent of those sites, a sign that Applebot-Extended may still be under the radar. As Welsh tells WIRED, though, the number has been “gradually moving” upward since he started looking.

Welsh has an ongoing project monitoring how news outlets approach major AI agents. “A bit of a divide has emerged among news publishers about whether or not they want to block these bots,” he says. “I don't have the answer to why every news organization made its decision. Obviously, we can read about many of them making licensing deals, where they're being paid in exchange for letting the bots in—maybe that's a factor.”

Last year, The New York Times reported that Apple was attempting to strike AI deals with publishers. Since then, competitors like OpenAI and Perplexity have announced partnerships with a variety of news outlets, social platforms, and other popular websites. “A lot of the largest publishers in the world are clearly taking a strategic approach,” says Originality AI founder Jon Gillham. “I think in some cases, there's a business strategy involved—like, withholding the data until a partnership agreement is in place.”

There is some evidence supporting Gillham’s theory. For example, Condé Nast websites used to block OpenAI’s web crawlers. After the company announced a partnership with OpenAI last week, it unblocked the company’s bots. (Condé Nast declined to comment on the record for this story.) Meanwhile, Buzzfeed spokesperson Juliana Clifton told WIRED that the company, which currently blocks Applebot-Extended, puts every AI web-crawling bot it can identify on its block list unless its owner has entered into a partnership—typically paid—with the company, which also owns the Huffington Post.

Because robots.txt needs to be edited manually, and there are so many new AI agents debuting, it can be difficult to keep an up-to-date block list. “People just don’t know what to block,” says Dark Visitors founder Gavin King. Dark Visitors offers a freemium service that automatically updates a client site’s robots.txt, and King says publishers make up a big portion of his clients because of copyright concerns.

Robots.txt might seem like the arcane territory of webmasters—but given its outsize importance to digital publishers in the AI age, it is now the domain of media executives. WIRED has learned that two CEOs from major media companies directly decide which bots to block.

Some outlets have explicitly noted that they block AI scraping tools because they do not currently have partnerships with their owners. “We’re blocking Applebot-Extended across all of Vox Media’s properties, as we have done with many other AI scraping tools when we don’t have a commercial agreement with the other party,” says Lauren Starke, Vox Media’s senior vice president of communications. “We believe in protecting the value of our published work.”

Others will only describe their reasoning in vague—but blunt!—terms. “The team determined, at this point in time, there was no value in allowing Applebot-Extended access to our content,” says Gannett chief communications officer Lark-Marie Antón.

Meanwhile, The New York Times, which is suing OpenAI over copyright infringement, is critical of the opt-out nature of Applebot-Extended and its ilk. “As the law and The Times' own terms of service make clear, scraping or using our content for commercial purposes is prohibited without our prior written permission,” says NYT director of external communications Charlie Stadtlander, noting that the Times will keep adding unauthorized bots to its block list as it finds them. “Importantly, copyright law still applies whether or not technical blocking measures are in place. Theft of copyrighted material is not something content owners need to opt out of.”

It’s unclear whether Apple is any closer to closing deals with publishers. If or when it does, though, the consequences of any data licensing or sharing arrangements may be visible in robots.txt files even before they are publicly announced.

“I find it fascinating that one of the most consequential technologies of our era is being developed, and the battle for its training data is playing out on this really obscure text file, in public for us all to see,” says Gillham.

11 notes


Note

I have a question about your post regarding AI in which you detailed some agents' concerns. In particular you mentioned "we don't want our authors or artists work to be data-mined / scraped to "train" AI learning models/bots".

I completely agree, but what could be done to prevent this?

(I am no expert and clearly have NO idea what the terminology really is, but hopefully you will get it, sorry in advance?)

I mean, this is literally the thing we are all trying to figure out lol. But a start would be to have something in the contracts that SAYS Publishers do not have permission to license or otherwise permit companies to incorporate this copyrighted work into AI learning models, or to utilize this technology to mimic an author’s work.

The companies that are making AI bots or whatever are not shadowy guilds of hackers running around stealing things (despite how "web scraping" and "data mining" and all that sounds, which admittedly is v creepy and ominous!) -- web scraping, aka using robots to gather large amounts of publicly available data, is legal. That's like, a big part of how the internet works, it's how Google knows things when you google them, etc.

It's more dubious if scraping things that are protected under copyright is legal -- the companies would say that it is covered under fair use, that they are putting all this info in there to just teach the AI, and it isn't to COPY the author's work, etc etc. The people whose IP it is, though, probs don't feel that way -- and the law is sort of confused/non-existent. (There are loads of lawsuits literally RIGHT NOW that are aiming to sort some of this out, and the Writer's Guild strike which is ongoing and SAG-AFTRA strike which started this week is largely centered around some of the same issues when it comes to companies using AI for screenwriting, using actor's likeness and voice, etc.) Again, these are not shadowy organizations operating illegally off the coast of whatever -- these are regular-degular companies who can be sued, held to account, regulated, etc. The laws just haven’t caught up to the technology yet.

Point being, it's perhaps unethical to "feed" copyrighted work into an AI thing without permission of the copyright holder, but is it ILLEGAL? Uh -- yes??? but also ?????. US copyright law is pretty clear that works generated entirely by AI can't be protected under copyright -- and that works protected by copyright can't be, you know, copied by somebody else -- but there's a bit of a grey area here because of fair use? It’s confusing, for sure, and I'm betting all this is being hashed out in court cases and committee rooms and whatnot as I type.

Anywhoo, the first steps are clarifying these things contractually. Authors Guild (and agents) take the stance that this permission to "feed" info to AI learning models is something the Author automatically holds rights to, and only the author can decide if/when a book is "fed" into an AI... thing.

The Publishers kinda think this is something THEY hold the rights to, or both parties do, and that these rights should be frozen so NEITHER party can choose to "feed", or neither can choose to do so without the other's permission.

(BTW just to be clear, as I understand it -- which again is NOT MUCH lol -- this "permission" is not like, somebody calls each individual author and asks for permission -- it's part of the coding. Like how many e-books are DRM protected, so they are locked to a particular platform / device and you can't share them etc -- there are bits of code that basically say NOPE to scrapers. So (in my imagination, at least), the little spider-robot is Roomba-ing around the internet looking for things to scrape and it comes across this bit of code and NOPE, they have to turn around and try the next thing. Now – just like if an Etsy seller made mugs with pictures of Mickey Mouse on them, using somebody else’s IP is illegal – and those people CAN be sued if the copyright holder has the appetite to do that - but it’s also hard to stop entirely. So if some random person took your book and just copied it onto a blog -- the spider-robot wouldn't KNOW that info was under copyright, or they don't have permission to gobble it up, because it wouldn't have that bit of code to let them know -- so in that way it could be that nobody ever FULLY knows that the spider-robots won't steal their stuff, and publishers can't really be liable for that if third parties are involved mucking it up -- but they certainly CAN at least attempt to protect copyright!)

But also, you know how I don't even know what I'm talking about and don't know the words? Like in the previous paragraphs? The same goes for all the publishers and everyone else who isn't already a tech wizard, ALL of whom are suddenly learning a lot of very weird words and phrases and rules that nobody *exactly* understands, and it's all changing by the week (and by the day, even).

Publishers ARE starting to add some of this language, but I also would expect it to feel somewhat confused/wild-west-ish until some of the laws around this stuff are clearer. But really: We're all working on it!

87 notes


Text

Firecrawl Launches ‘/search’ Endpoint, Allowing Users To Search And Scrape The Web In One API Call

Firecrawl, an AI-oriented web crawling and data extraction tool, has introduced a new feature called “/search”, an endpoint designed to streamline the process of discovering and collecting web data within a single API request. This functionality is particularly suited for use cases such as lead generation, SEO analysis, research, or agent development, where both search and data


2 notes


Text

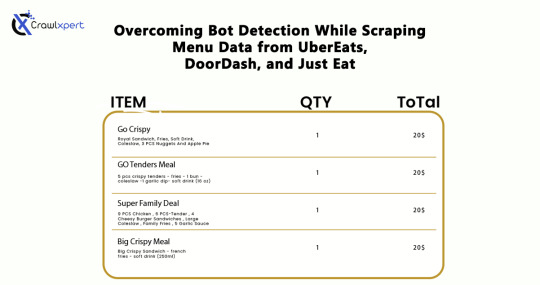

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

In industries that depend on menu data, web scraping is a natural fit: UberEats, DoorDash, and Just Eat are a few examples of the platforms involved. However, these websites use elaborate bot detection methods to stop automated data collection. Overcoming them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to get past bot detection when scraping menu data, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Inspects the User-Agent header to identify and block clients that do not look like regular browsers.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Uses scripts to detect bots attempting to load pages without interaction.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
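One way to stay under such limits is to throttle requests on the client side. Here is a minimal sketch that enforces a minimum interval between consecutive requests. The Throttle and FakeClock classes and the 2-second interval are assumptions for illustration, and the fake clock only makes the demo deterministic.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until min_interval has passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


class FakeClock:
    """Hypothetical test clock so the demo does not really sleep."""

    def __init__(self):
        self.now = 0.0
        self.slept = []

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.slept.append(seconds)
        self.now += seconds


fake = FakeClock()
throttle = Throttle(2.0, clock=fake.time, sleep=fake.sleep)
throttle.wait()   # first call never sleeps
fake.now += 0.5   # pretend the request itself took 0.5 s
throttle.wait()   # sleeps for the remaining 1.5 s
print(fake.slept)
```

With the default clock and sleep, calling throttle.wait() before each request keeps the scraper at or below one request per min_interval seconds.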

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device. This includes screen resolution, installed plugins, and system fonts. If a scraper's fingerprint matches a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching to maintain persistence.

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use headless browsers like Puppeteer or Playwright to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.
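Random delays are the simplest item on this list to illustrate. The sketch below assumes pause lengths drawn from an exponential distribution with a floor; the distribution, the function name `jittered_delays`, and the default values are illustrative choices, not measured human behavior.

```python
import random
from typing import List, Optional


def jittered_delays(n: int, mean: float = 3.0, minimum: float = 0.5,
                    rng: Optional[random.Random] = None) -> List[float]:
    """Return n pause lengths (seconds) drawn from an exponential distribution."""
    rng = rng or random.Random()
    # expovariate(1/mean) has the requested mean; clamp so no pause is near zero.
    return [max(minimum, rng.expovariate(1.0 / mean)) for _ in range(n)]
```

A scraper would call `time.sleep(delay)` with one of these values between page loads, which also keeps the load on the target server low.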

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
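Caching is the easiest of these points to show in code. The following is a minimal in-memory sketch, assuming a hypothetical `fetch` callable that takes a URL and returns the page body; a production scraper would more likely persist the cache to disk or a database.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Caches fetched pages by URL and re-fetches only after `ttl` seconds."""

    def __init__(self, fetch: Callable[[str], str], ttl: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        cached = self._store.get(url)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]          # still fresh: no network request
        body = self._fetch(url)       # missing or stale: fetch and remember
        self._store[url] = (now, body)
        return body
```

Menus change far less often than a scraper can poll them, so even a one-hour TTL can remove most repeat requests.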

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
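Respecting robots.txt can be automated with Python's standard library. The sketch below parses a hypothetical robots.txt from a string so that it runs offline; the rules and the `menu-research-bot` user agent are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the
# target site (for example with RobotFileParser.set_url() and .read()).
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str, user_agent: str = "menu-research-bot") -> bool:
    """True if robots.txt permits `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)
```

Calling `allowed()` before every request, and waiting at least `parser.crawl_delay(user_agent)` seconds between requests when the site specifies one, covers the first two practices above.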

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using XPath selectors instead of fixed HTML elements.

Implementing fallback scraping techniques in case of site modifications.
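A fallback strategy can be as simple as trying several selectors in order. The sketch below uses the limited XPath support in Python's standard `xml.etree.ElementTree` and assumes well-formed markup; real pages usually need an HTML parser such as lxml. The paths and attribute values are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional

# Candidate locations for the same field, most specific first. These paths and
# attribute values are hypothetical; real ones come from inspecting the page.
PRICE_PATHS: List[str] = [
    ".//span[@itemprop='price']",
    ".//span[@class='price']",
    ".//div[@class='menu-item']/span",
]


def extract_first(root: ET.Element, paths: List[str]) -> Optional[str]:
    """Return text from the first path that matches, or None if all fail."""
    for path in paths:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip()
    return None
```

When `extract_first` returns `None` for a field that used to be present, that is a useful signal to alert a maintainer that the layout has changed.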

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

Uber Eats, DoorDash, and Just Eat pose significant challenges for menu data scraping, mainly because of their advanced bot detection systems. Nevertheless, by combining IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution with AI tools, businesses can collect valuable data without tripping anti-scraping measures.

If you need an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, along with keeping up with recent trends in web scraping, will keep food delivery data collection efforts successful well into the future.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats#ScrapingMenuDatafromDoorDash#ScrapingMenuDatafromJustEat#ScrapingforFoodDeliveryData

Link

In this tutorial, we walk you through building an enhanced web scraping tool that leverages BrightData’s powerful proxy network alongside Google’s Gemini API for intelligent data extraction. You’ll see how to structure your Python project, install and import the necessary libraries, and encapsulate scraping logic within a clean, reusable BrightDataScraper class. Whether you’re targeting Amazon product pages, bestseller listings, or LinkedIn profiles, the scraper’s modular methods demonstrate how to configure scraping parameters, handle errors gracefully, and return structured JSON results. An optional ReAct-style AI agent integration also shows you how to combine LLM-driven reasoning with real-time scraping, empowering you to pose natural language queries for on-the-fly data analysis.

    !pip install langchain-brightdata langchain-google-genai langgraph langchain-core google-generativeai

We install all of the key libraries needed for the tutorial in one step: langchain-brightdata for BrightData web scraping, langchain-google-genai and google-generativeai for Google Gemini integration, langgraph for agent orchestration, and langchain-core for the core LangChain framework.

    import os
    import json
    from typing import Dict, Any, Optional
    from langchain_brightdata import BrightDataWebScraperAPI
    from langchain_google_genai import ChatGoogleGenerativeAI
    from langgraph.prebuilt import create_react_agent

These imports prepare your environment and core functionality: os and json handle system operations and data serialization, while typing provides structured type hints. You then bring in BrightDataWebScraperAPI for BrightData scraping, ChatGoogleGenerativeAI to interface with Google’s Gemini LLM, and create_react_agent to orchestrate these components in a ReAct-style agent.

    class BrightDataScraper:
        """Enhanced web scraper using BrightData API"""

        def __init__(self, api_key: str, google_api_key: Optional[str] = None):
            """Initialize scraper with API keys"""
            self.api_key = api_key
            self.scraper = BrightDataWebScraperAPI(bright_data_api_key=api_key)

            # The agent is only created when a Google API key is supplied.
            if google_api_key:
                self.llm = ChatGoogleGenerativeAI(
                    model="gemini-2.0-flash",
                    google_api_key=google_api_key
                )
                self.agent = create_react_agent(self.llm, [self.scraper])

        def scrape_amazon_product(self, url: str, zipcode: str = "10001") -> Dict[str, Any]:
            """Scrape Amazon product data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product",
                    "zipcode": zipcode
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_amazon_bestsellers(self, region: str = "in") -> Dict[str, Any]:
            """Scrape Amazon bestsellers"""
            try:
                # The bestsellers URL (built from `region`) is missing from the source text.
                url = f"..."
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_linkedin_profile(self, url: str) -> Dict[str, Any]:
            """Scrape LinkedIn profile data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "linkedin_person_profile"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def run_agent_query(self, query: str) -> None:
            """Run AI agent with natural language query"""
            if not hasattr(self, 'agent'):
                print("Error: Google API key required for agent functionality")
                return

            try:
                for step in self.agent.stream(
                    {"messages": query},
                    stream_mode="values"
                ):
                    step["messages"][-1].pretty_print()
            except Exception as e:
                print(f"Agent error: {e}")

        def print_results(self, results: Dict[str, Any], title: str = "Results") -> None:
            """Pretty print results"""
            print(f"\n{'='*50}")
            print(f"{title}")
            print(f"{'='*50}")

            if results["success"]:
                print(json.dumps(results["data"], indent=2, ensure_ascii=False))
            else:
                print(f"Error: {results['error']}")
            print()

The BrightDataScraper class encapsulates all BrightData web-scraping logic and optional Gemini-powered intelligence under a single, reusable interface. Its methods enable you to easily fetch Amazon product details, bestseller lists, and LinkedIn profiles, handling API calls, error handling, and JSON formatting, and even stream natural-language “agent” queries when a Google API key is provided. A convenient print_results helper ensures your output is always cleanly formatted for inspection.

    def main():
        """Main execution function"""
        BRIGHT_DATA_API_KEY = "Use Your Own API Key"
        GOOGLE_API_KEY = "Use Your Own API Key"

        scraper = BrightDataScraper(BRIGHT_DATA_API_KEY, GOOGLE_API_KEY)

        print("🛍️ Scraping Amazon India Bestsellers...")
        bestsellers = scraper.scrape_amazon_bestsellers("in")
        scraper.print_results(bestsellers, "Amazon India Bestsellers")

        print("📦 Scraping Amazon Product...")
        product_url = "..."  # product URL is missing from the source text
        product_data = scraper.scrape_amazon_product(product_url, "10001")
        scraper.print_results(product_data, "Amazon Product Data")

        print("👤 Scraping LinkedIn Profile...")
        linkedin_url = "..."  # profile URL is missing from the source text
        linkedin_data = scraper.scrape_linkedin_profile(linkedin_url)
        scraper.print_results(linkedin_data, "LinkedIn Profile Data")

        print("🤖 Running AI Agent Query...")
        # The product URL inside this prompt is also missing from the source text.
        agent_query = """
        Scrape Amazon product data for ... in New York (zipcode 10001)
        and summarize the key product details.
        """
        scraper.run_agent_query(agent_query)

The main() function ties everything together by setting your BrightData and Google API keys, instantiating the BrightDataScraper, and then demonstrating each feature: it scrapes Amazon India’s bestsellers, fetches details for a specific product, retrieves a LinkedIn profile, and finally runs a natural-language agent query, printing neatly formatted results after each step.

    if __name__ == "__main__":
        print("Installing required packages...")
        os.system("pip install -q langchain-brightdata langchain-google-genai langgraph")

        os.environ["BRIGHT_DATA_API_KEY"] = "Use Your Own API Key"

        main()

Finally, this entry-point block ensures that, when run as a standalone script, the required scraping libraries are quietly installed, and the BrightData API key is set in the environment. Then the main function is executed to initiate all scraping and agent workflows.

In conclusion, by the end of this tutorial, you’ll have a ready-to-use Python script that automates tedious data collection tasks, abstracts away low-level API details, and optionally taps into generative AI for advanced query handling. You can extend this foundation by adding support for other dataset types, integrating additional LLMs, or deploying the scraper as part of a larger data pipeline or web service. With these building blocks in place, you’re now equipped to gather, analyze, and present web data more efficiently, whether for market research, competitive intelligence, or custom AI-driven applications.

All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.

Text

What You'll Learn in an Agentic AI Course in London: Tools, Models, and Architectures

In 2025, Artificial Intelligence is no longer just about automation—it’s about autonomy. Enter Agentic AI, the next frontier in intelligent systems that not only process tasks but make decisions, reason independently, and interact adaptively. From personalized AI assistants to autonomous business processes, the world is shifting toward systems with agency—the ability to act with purpose.

If you're in London or considering studying here, an Agentic AI Course in London offers a golden opportunity to equip yourself with one of the most sought-after tech specializations of this decade. But what exactly will you learn in such a course?

In this detailed guide, we’ll break down the key tools, models, and architectures covered in a leading Agentic AI program and explore how this knowledge can transform your career in the AI ecosystem.

What Is Agentic AI?

Before diving into the curriculum, it's essential to understand what Agentic AI actually means. Unlike traditional AI, which relies on fixed instructions and task-specific models, Agentic AI systems have:

Autonomy: They can make decisions without constant human oversight.

Adaptability: They learn and adjust based on context and feedback.

Goal-Orientation: They operate toward defined objectives or evolving goals.

Examples of Agentic AI include:

Intelligent chatbots that initiate conversations and resolve queries end-to-end.

AI sales agents that follow up on leads, personalize messaging, and close deals.

Multi-agent systems collaborating to optimize supply chains or cybersecurity.

Why Study Agentic AI in London?

London has always been a global leader in AI research and innovation. The city is home to cutting-edge tech companies, government-backed AI initiatives, and top academic institutions. With the emergence of Agentic AI, London is again taking the lead—offering access to pioneering education, practical projects, and career pathways in this evolving field.

An Agentic AI Course in London gives you:

Access to global-standard curriculum

Exposure to real-world agentic systems

Networking with top-tier AI researchers and startups

Placement opportunities in leading tech companies

Let’s now explore what you’ll actually learn when you enroll in a well-structured agentic AI program in London.

Key Modules: What You’ll Learn in an Agentic AI Course in London

1. Foundations of Agentic AI

This foundational module introduces you to the core principles of agency in AI systems. Topics include:

What makes an AI “agentic”

The difference between reactive, deliberative, and hybrid agents

Goal-setting and utility functions in autonomous systems

The psychology and ethics of artificial agency

Outcome: You’ll understand the philosophical and computational basis of agentic intelligence.

2. Autonomous Agents & Multi-Agent Systems

Learn how to design and implement intelligent agents that can perceive their environment, make decisions, and take actions.

Topics Covered:

Perception-action loops

Agent architecture (BDI: Belief-Desire-Intention model)

Multi-agent collaboration and competition

Coordination protocols, auctions, and swarm intelligence

Outcome: Build simulations where multiple AI agents interact dynamically to solve real-world problems.

3. Large Language Models (LLMs) and Agentic Behavior

LLMs like GPT-4, Claude, and Mistral form the backbone of many agentic systems. You’ll explore:

Prompt engineering and autonomous prompt chaining

Using LLMs as reasoning engines

Autonomous agents powered by LLMs (e.g., Auto-GPT, BabyAGI)

Task decomposition and memory integration

Outcome: Learn how to harness LLMs to create agents that reason, plan, and act independently.

4. Tool Integration & Plugins

Agentic AI isn’t limited to text generation. You’ll learn how to integrate tools and APIs that extend the agent's abilities, including:

Web search and scraping tools

Database querying

Code generation and execution

Integration with platforms like Zapier, Google Workspace, Notion, GitHub

Outcome: Develop AI agents that interact with real-world systems—automating complex workflows end-to-end.

5. Planning & Decision-Making Frameworks

Here, you'll dive into the mathematical and algorithmic strategies behind AI decision-making.

Topics include:

Reinforcement Learning (RL) & Deep Q-Learning

Monte Carlo Tree Search

Utility-based planning

Goal setting, prioritization, and conflict resolution

Outcome: Design agents that evaluate multiple paths and optimize decisions over time.

6. Memory Architectures & Agent Context Awareness

Agentic AI systems need memory to evolve intelligently. You’ll learn about:

Short-term vs long-term memory systems

Vector databases (Pinecone, Weaviate, FAISS)

Contextual reasoning and episodic recall

RAG (Retrieval-Augmented Generation) for memory-enhanced LLMs

Outcome: Create AI agents that remember, learn from history, and adapt over long interactions.
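To make the idea of vector-based memory concrete, here is a minimal sketch of similarity search, the core operation that vector databases perform at scale. The three-dimensional vectors and the stored "memories" are toy values invented for illustration; real systems obtain embeddings from a model and use an index rather than a linear scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], memory: Dict[str, Sequence[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Return the k stored items most similar to the query vector."""
    scored = [(text, cosine(query, vec)) for text, vec in memory.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Toy 3-dimensional "embeddings"; real vectors come from an embedding model.
memory = {
    "user prefers vegetarian menus": [0.9, 0.1, 0.0],
    "user asked about delivery fees": [0.1, 0.9, 0.1],
    "user is based in London": [0.0, 0.2, 0.9],
}
```

In a RAG setup, the texts returned by `top_k` would be added to the LLM prompt so the agent can draw on earlier interactions.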

7. Building Real-World Agentic Applications

Put your knowledge into action through capstone projects such as:

Building a sales agent that automates lead nurturing and conversion

Designing a virtual HR assistant that conducts interviews

Creating autonomous trading bots

Developing a customer support agent that handles Tier-1 and Tier-2 queries

Outcome: Graduate with a real-world project portfolio that demonstrates your ability to build and scale agentic systems.

Why Choose the Boston Institute of Analytics (BIA) for Agentic AI Training in London?

If you're serious about mastering Agentic AI, the Boston Institute of Analytics (BIA) in London offers one of the most comprehensive and career-ready Agentic AI courses available today.

Key Highlights:

✅ Industry-Centric Curriculum: Built with input from AI leaders across sectors

✅ Live Projects & Case Studies: Apply concepts in realistic simulations and tools

✅ Experienced Mentors: Learn from AI engineers and researchers working on the frontier of autonomy

✅ Global Recognition: BIA certifications are respected by employers worldwide

✅ Placement Assistance: Dedicated career guidance, mock interviews, and resume workshops

Whether you're a recent graduate, data professional, or software developer, BIA’s course is designed to fast-track your journey into autonomous AI development.

Career Paths After an Agentic AI Course in London

Once you complete an Agentic AI Course in London, you’ll be qualified for high-demand roles such as:

AI Agent Developer

Autonomous Systems Engineer

LLM Prompt Engineer

AI Researcher (Agency & Autonomy)

AI Product Manager

Conversational AI Designer

Agentic AI Consultant

Salaries in this niche are competitive, with entry-level roles in the UK starting around £50,000/year, scaling up to £100,000+ with experience and specialization.

Final Thoughts

Agentic AI isn’t just the future—it’s the now. As organizations around the globe race to deploy intelligent systems that think, learn, and act independently, the demand for talent with deep knowledge of agentic architectures, tools, and reasoning models is growing rapidly.

By enrolling in an Agentic AI Course in London, you gain access to a world-class education, practical skills, and a direct pathway into one of the most transformative tech fields of our time.

Whether you're looking to launch your AI career or upgrade your current skill set, there's no better time—and no better place—than now in London.

#Generative AI course in London#Generative AI training in London#Agentic AI Course in London#Agentic AI Training in London

Text

This New AI Browser Agent Just Changed Everything (And It’s Open Source)

1. Browser Use – The Open-Source Web Agent Powerhouse

Developed by Magnus Müller and Gregor Zunic, Browser Use has become one of the most popular open-source AI agents, amassing over 50,000 stars on GitHub within three months. It allows AI models to seamlessly control browsers, handling tasks like form filling, data scraping, and navigation. The platform offers a hosted version at $30/month, with an open-source core that supports multiple LLMs and features proxy rotation, session persistence, and CAPTCHA handling.

Text

Beyond the Books: Real-World Coding Projects for Aspiring Developers

Arya College of Engineering & I.T., one of the best colleges in Jaipur, emphasizes that transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Java (Android) or Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that recognizes faces in images or video streams using OpenCV and Python. This project explores computer vision and deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.

Text

Impact of AI on Web Scraping Practices

Introduction

Advances in artificial intelligence (AI) have recently pushed web scraping toward greater efficiency. As more enterprises and researchers rely on data extraction to derive insights and make decisions, AI-enabled methods have replaced some traditional techniques with approaches that are more efficient, more scalable, and more resistant to anti-scraping measures.

This blog discusses the effects of AI on web scraping, how AI-powered automation is changing the web scraping industry, the challenges being faced, and, ultimately, the road ahead for web scraping with AI.

How AI is Transforming Web Scraping

1. Enhanced Data Extraction Efficiency

Traditional scraping is rule-based: it relies on a script written for one particular site, with extraction rules hard-coded for that site. AI-based web scraping avoids this brittleness because the extraction logic adapts automatically when a website's structure changes, so the same data can be extracted without constantly rewriting the script.

2. AI-Powered Web Crawlers

Machine learning algorithms enable web crawlers to mimic human browsing behavior, reducing the risk of detection. These AI-driven crawlers can:

Identify patterns in website layouts.

Adapt to dynamic content.

Handle complex JavaScript-rendered pages with ease.

3. Natural Language Processing (NLP) for Data Structuring

NLP helps in:

Extracting meaningful insights from unstructured text.

Categorizing and classifying data based on context.

Understanding sentiment and contextual relevance in customer reviews and news articles.

4. Automated CAPTCHA Solving

Many websites use CAPTCHAs to block bots. AI models, especially deep learning-based Optical Character Recognition (OCR) techniques, help bypass these challenges by simulating human-like responses.

5. AI in Anti-Detection Mechanisms

AI-powered web scraping integrates:

User-agent rotation to simulate diverse browsing behaviors.

IP Rotation & Proxies to prevent blocking.

Headless Browsers & Human-Like Interaction for bypassing bot detection.

Applications of AI in Web Scraping

1. E-Commerce Price Monitoring

AI scrapers help businesses track competitors' pricing, stock availability, and discounts in real-time, enabling dynamic pricing strategies.

2. Financial & Market Intelligence

AI-powered web scraping extracts financial reports, news articles, and stock market data for predictive analytics and trend forecasting.

3. Lead Generation & Business Intelligence

Automating the collection of business contact details, customer feedback, and sales leads through AI-driven scraping solutions.

4. Social Media & Sentiment Analysis

Extracting social media conversations, hashtags, and sentiment trends to analyze brand reputation and customer perception.

5. Healthcare & Pharmaceutical Data Extraction

AI scrapers retrieve medical research, drug prices, and clinical trial data, aiding healthcare professionals in decision-making.

Challenges in AI-Based Web Scraping

1. Advanced Anti-Scraping Technologies

Websites employ sophisticated detection methods, including fingerprinting and behavioral analysis.

AI mitigates these by mimicking real user interactions.

2. Data Privacy & Legal Considerations

Compliance with data regulations like GDPR and CCPA is essential.

Ethical web scraping practices ensure responsible data usage.

3. High Computational Costs

AI-based web scrapers can require GPU-intensive resources, leading to higher operational costs.

Optimization techniques, such as cloud-based scraping, help reduce costs.

Future Trends in AI for Web Scraping

1. AI-Driven Adaptive Scrapers

Scrapers that self-learn and adjust to new website structures without human intervention.

2. Integration with Machine Learning Pipelines

Combining AI scrapers with data analytics tools for real-time insights.

3. AI-Powered Data Anonymization

Protecting user privacy by automating data masking and filtering.
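As a point of reference, the simplest form of automated masking is rule-based rather than AI-driven. The sketch below uses two regular expressions to replace email addresses and phone-like numbers with placeholders; the patterns are deliberately loose illustrations and will miss or over-match some real-world formats.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Loose phone pattern: optional "+", then 8 to 15 digits that may be
# separated by single spaces, dots, or dashes.
PHONE_RE = re.compile(r"\+?\d(?:[\s.-]?\d){7,14}")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

An AI-based approach would typically add a named-entity recognition model on top of rules like these to catch names and addresses that fixed patterns cannot describe.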

4. Blockchain-Based Data Validation

Ensuring authenticity and reliability of extracted data using blockchain verification.

Conclusion

Adding AI to web scraping has made data extraction smarter, more flexible, and more scalable. AI-based web scraping helps organizations cope with anti-bot mechanisms, dynamic website changes, and unstructured data processing. In the future, web scraping with AI will continue to advance and contribute further innovations across industries.

For organizations ready to embrace AI-powered data extraction, CrawlXpert offers state-of-the-art solutions designed for present-day web scraping tasks. Get started with CrawlXpert to benefit from high-quality, AI-enabled automated web scraping solutions!

Know More : https://www.crawlxpert.com/blog/ai-on-web-scraping-practices

Link

[ad_1] In this tutorial, we walk you through building an enhanced web scraping tool that leverages BrightData’s powerful proxy network alongside Google’s Gemini API for intelligent data extraction. You’ll see how to structure your Python project, install and import the necessary libraries, and encapsulate scraping logic within a clean, reusable BrightDataScraper class. Whether you’re targeting Amazon product pages, bestseller listings, or LinkedIn profiles, the scraper’s modular methods demonstrate how to configure scraping parameters, handle errors gracefully, and return structured JSON results. An optional React-style AI agent integration also shows you how to combine LLM-driven reasoning with real-time scraping, empowering you to pose natural language queries for on-the-fly data analysis. !pip install langchain-brightdata langchain-google-genai langgraph langchain-core google-generativeai We install all of the key libraries needed for the tutorial in one step: langchain-brightdata for BrightData web scraping, langchain-google-genai and google-generativeai for Google Gemini integration, langgraph for agent orchestration, and langchain-core for the core LangChain framework. import os import json from typing import Dict, Any, Optional from langchain_brightdata import BrightDataWebScraperAPI from langchain_google_genai import ChatGoogleGenerativeAI from langgraph.prebuilt import create_react_agent These imports prepare your environment and core functionality: os and json handle system operations and data serialization, while typing provides structured type hints. You then bring in BrightDataWebScraperAPI for BrightData scraping, ChatGoogleGenerativeAI to interface with Google’s Gemini LLM, and create_react_agent to orchestrate these components in a React-style agent. 
    class BrightDataScraper:
        """Enhanced web scraper using BrightData API"""

        def __init__(self, api_key: str, google_api_key: Optional[str] = None):
            """Initialize scraper with API keys"""
            self.api_key = api_key
            self.scraper = BrightDataWebScraperAPI(bright_data_api_key=api_key)

            # The LLM and agent are only created when a Google API key is given.
            if google_api_key:
                self.llm = ChatGoogleGenerativeAI(
                    model="gemini-2.0-flash",
                    google_api_key=google_api_key
                )
                self.agent = create_react_agent(self.llm, [self.scraper])

        def scrape_amazon_product(self, url: str, zipcode: str = "10001") -> Dict[str, Any]:
            """Scrape Amazon product data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product",
                    "zipcode": zipcode
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_amazon_bestsellers(self, region: str = "in") -> Dict[str, Any]:
            """Scrape Amazon bestsellers"""
            try:
                # The bestseller URL was lost in the source text; it is left elided here.
                url = f"..."
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "amazon_product"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def scrape_linkedin_profile(self, url: str) -> Dict[str, Any]:
            """Scrape LinkedIn profile data"""
            try:
                results = self.scraper.invoke({
                    "url": url,
                    "dataset_type": "linkedin_person_profile"
                })
                return {"success": True, "data": results}
            except Exception as e:
                return {"success": False, "error": str(e)}

        def run_agent_query(self, query: str) -> None:
            """Run AI agent with natural language query"""
            if not hasattr(self, 'agent'):
                print("Error: Google API key required for agent functionality")
                return

            try:
                # Stream intermediate agent states and print the latest message of each.
                for step in self.agent.stream(
                    {"messages": query},
                    stream_mode="values"
                ):
                    step["messages"][-1].pretty_print()
            except Exception as e:
                print(f"Agent error: {e}")

        def print_results(self, results: Dict[str, Any], title: str = "Results") -> None:
            """Pretty print results"""
            print(f"\n{'='*50}")
            print(f"{title}")
            print(f"{'='*50}")

            if results["success"]:
                print(json.dumps(results["data"], indent=2, ensure_ascii=False))
            else:
                print(f"Error: {results['error']}")
            print()

The BrightDataScraper class encapsulates all BrightData web-scraping logic and optional Gemini-powered intelligence under a single, reusable interface. Its methods let you fetch Amazon product details, bestseller lists, and LinkedIn profiles; they wrap the API calls, catch errors, and return JSON-ready dictionaries. When a Google API key is provided, the class can also stream natural-language “agent” queries. A print_results helper formats the output for inspection.

    def main():
        """Main execution function"""
        BRIGHT_DATA_API_KEY = "Use Your Own API Key"
        GOOGLE_API_KEY = "Use Your Own API Key"

        scraper = BrightDataScraper(BRIGHT_DATA_API_KEY, GOOGLE_API_KEY)

        print("🛍️ Scraping Amazon India Bestsellers...")
        bestsellers = scraper.scrape_amazon_bestsellers("in")
        scraper.print_results(bestsellers, "Amazon India Bestsellers")

        print("📦 Scraping Amazon Product...")
        product_url = "..."  # product URL elided in the source text
        product_data = scraper.scrape_amazon_product(product_url, "10001")
        scraper.print_results(product_data, "Amazon Product Data")

        print("👤 Scraping LinkedIn Profile...")
        linkedin_url = "..."  # profile URL elided in the source text
        linkedin_data = scraper.scrape_linkedin_profile(linkedin_url)
        scraper.print_results(linkedin_data, "LinkedIn Profile Data")

        print("🤖 Running AI Agent Query...")
        # The product URL inside this prompt was also elided in the source text.
        agent_query = """
        Scrape Amazon product data for ...
        in New York (zipcode 10001) and summarize the key product details.
        """
        scraper.run_agent_query(agent_query)

The main() function ties everything together by setting your BrightData and Google API keys, instantiating the BrightDataScraper, and then demonstrating each feature: it scrapes Amazon India’s bestsellers, fetches details for a specific product, retrieves a LinkedIn profile, and finally runs a natural-language agent query, printing formatted results after each step.
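The main() function above places the API keys directly in the source as placeholders. As a minimal sketch of an alternative, the helper below reads them from environment variables. The helper name load_api_keys and the GOOGLE_API_KEY variable name are assumptions made for illustration; only BRIGHT_DATA_API_KEY appears in the tutorial itself.

```python
import os
from typing import Dict, Mapping, Optional


def load_api_keys(env: Optional[Mapping[str, str]] = None) -> Dict[str, Optional[str]]:
    """Read API keys from environment variables instead of source code.

    BRIGHT_DATA_API_KEY is the variable the tutorial sets; GOOGLE_API_KEY
    is an assumed name for the optional Gemini key.
    """
    env = os.environ if env is None else env

    bright_key = env.get("BRIGHT_DATA_API_KEY", "").strip()
    if not bright_key:
        raise RuntimeError("Set BRIGHT_DATA_API_KEY before running the scraper")

    # The Google key is optional: without it, agent features stay disabled.
    google_key = env.get("GOOGLE_API_KEY", "").strip() or None
    return {"bright_data": bright_key, "google": google_key}
```

With this in place, main() could build the scraper from load_api_keys() rather than from string literals, which keeps secrets out of notebooks and version control.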
    if __name__ == "__main__":
        print("Installing required packages...")
        os.system("pip install -q langchain-brightdata langchain-google-genai langgraph")

        os.environ["BRIGHT_DATA_API_KEY"] = "Use Your Own API Key"

        main()

Finally, this entry-point block ensures that, when run as a standalone script, the required scraping libraries are quietly installed and the BrightData API key is set in the environment. Then the main function is executed to initiate all scraping and agent workflows.

In conclusion, by the end of this tutorial, you’ll have a ready-to-use Python script that automates tedious data collection tasks, abstracts away low-level API details, and optionally taps into generative AI for advanced query handling. You can extend this foundation by adding support for other dataset types, integrating additional LLMs, or deploying the scraper as part of a larger data pipeline or web service. With these building blocks in place, you’re now equipped to gather, analyze, and present web data more efficiently, whether for market research, competitive intelligence, or custom AI-driven applications.

Check out the Notebook. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.


Real Estate Property Data Scraping for Market Insights

Introduction

The real estate industry is evolving rapidly, making data-driven decisions essential for success. As market dynamics change, investors, agents, and businesses can stay ahead by using real estate property data scraping to gain insight into the market.

Web scraping real estate data lets businesses extract, analyze, and use key property information, including pricing trends, demand fluctuations, and competitor strategies. With these property datasets, professionals can make informed investment decisions and optimize their market strategies.

At ArcTechnolabs, we specialize in AI-powered Real Estate Data Extraction, offering advanced solutions for Web Scraping for Real Estate. Our services help investors, realtors, and businesses access structured and real-time real estate data to maximize opportunities and minimize risks.

With the right data extraction strategies, real estate professionals can make smarter, data-backed investment choices.

What is Real Estate Data Scraping?

Definition

Real Estate Data Scraping is the automated extraction of property data from various online sources, including real estate listing websites, MLS platforms, property portals, and public records. This technique allows real estate investors, agencies, and businesses to gather valuable insights on market trends, pricing, rental demand, and competitive strategies in real-time.

By leveraging Commercial Real Estate Data Scraping, businesses can analyze pricing fluctuations, track investment hotspots, and evaluate competitor strategies, leading to more informed decision-making.

How It Works

Web scraping for real estate uses specialized software and APIs to extract structured datasets from multiple sources. The data is then processed, cleaned, and analyzed to identify trends in the real estate market.
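As a rough illustration of the "processed, cleaned, and analyzed" step, the sketch below normalizes scraped price strings, drops unusable or duplicate rows, and computes a median price per city. The record fields (id, city, price) and all function names are assumptions chosen for the example, not the schema of any particular portal or service.

```python
from statistics import median
from typing import Dict, Iterable, List, Optional


def parse_price(raw: str) -> Optional[float]:
    """Convert a scraped price string such as '$1,250,000' to a float."""
    digits = "".join(ch for ch in raw if ch.isdigit() or ch == ".")
    try:
        return float(digits)
    except ValueError:
        # Covers empty strings and values like 'N/A' that contain no number.
        return None


def clean_listings(rows: Iterable[dict]) -> List[dict]:
    """Drop rows without a usable price and remove duplicate listing IDs."""
    seen = set()
    cleaned = []
    for row in rows:
        price = parse_price(str(row.get("price", "")))
        listing_id = row.get("id")
        if price is None or listing_id is None or listing_id in seen:
            continue
        seen.add(listing_id)
        city = str(row.get("city") or "").strip().title()
        cleaned.append({"id": listing_id, "city": city, "price": price})
    return cleaned


def median_price_by_city(rows: Iterable[dict]) -> Dict[str, float]:
    """Group cleaned listings by city and take the median price of each group."""
    by_city: Dict[str, List[float]] = {}
    for row in rows:
        by_city.setdefault(row["city"], []).append(row["price"])
    return {city: median(prices) for city, prices in by_city.items()}
```

The median is used rather than the mean because a handful of luxury listings can otherwise distort a city's typical price.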

Data Sources for Real Estate Scraping

MLS (Multiple Listing Services) – Comprehensive property listings

Real Estate Portals – Zillow, Realtor.com, Redfin, etc.

Public Property Records – Ownership history, property valuations

Rental Market Data – Airbnb, VRBO, and rental listing sites

Key Data Extracted

Real Estate Price Monitoring – Tracks historical and real-time price changes for better pricing strategies.

Scraping Rental Property Datasets – Extracts rental trends, occupancy rates, and average rental yields.

Competitive Intelligence for Realtors – Compares listings, agent strategies, and market positioning.
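Two of the metrics listed above reduce to simple arithmetic once the data is collected. The sketch below shows one way to compute a percentage price change between two scraped snapshots and a gross rental yield. The function names and the snapshot layout (a mapping from listing ID to price) are illustrative assumptions, and gross yield here ignores taxes, vacancies, and maintenance.

```python
from typing import Dict


def price_change_pct(old_price: float, new_price: float) -> float:
    """Percentage change from old_price to new_price."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (new_price - old_price) / old_price * 100.0


def gross_rental_yield_pct(monthly_rent: float, purchase_price: float) -> float:
    """Annual rent as a percentage of purchase price (before any costs)."""
    if purchase_price <= 0:
        raise ValueError("purchase_price must be positive")
    return monthly_rent * 12 / purchase_price * 100.0


def price_changes(previous: Dict[str, float], current: Dict[str, float]) -> Dict[str, float]:
    """Percent change for every listing ID present in both snapshots."""
    shared_ids = previous.keys() & current.keys()
    return {
        listing_id: round(price_change_pct(previous[listing_id], current[listing_id]), 2)
        for listing_id in shared_ids
    }
```

Comparing only the listings present in both snapshots avoids treating newly added or delisted properties as price movements.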

Real Estate Data Growth Trends (2025-2030)

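| Year | AI & Data Analytics Usage (%) | Real Estate Firms Using Web Scraping (%) |
|------|-------------------------------|------------------------------------------|
| 2025 | 50% | 60% |
| 2027 | 70% | 75% |
| 2030 | 90% | 85% |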

Fact: 80% of real estate businesses now rely on Big Data insights for decision-making. (Source: Market Trends 2025)

Why Use Real Estate Data Scraping Services?

In today’s data-driven real estate industry, having accurate, real-time market data is essential for making informed investment decisions. Real estate property data scraping enables businesses to extract crucial property insights, track pricing trends, and gain a competitive advantage.

With advanced Web Scraping Services, real estate professionals can automate data collection from multiple sources, including MLS platforms, real estate portals, and public records. This helps investors, agents, and businesses optimize their strategies and mitigate investment risks.

Key Benefits of Real Estate Data Scraping Services

Accurate, Real-Time Market Data

Stay updated on property prices, rental rates, and emerging investment opportunities.

Utilize Web Scraping API Services to access structured real estate data seamlessly.

Better Investment Decision-Making

Extract and analyze historical and live market data for data-driven property investments.

Extract real estate property datasets to identify profitable properties.

Competitive Market Analysis

Use Web Scraping Real Estate Data to monitor competitor pricing strategies.

Analyze trends in high-demand locations for better property positioning.

Risk Mitigation and Trend Prediction

Identify market fluctuations before they impact investment decisions.

Utilize AI-powered insights to predict property appreciation and rental yield trends.
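The article does not say which models sit behind these predictions. As a deliberately simple stand-in, the sketch below fits an ordinary least-squares line to a series of evenly spaced price observations (for example, monthly medians) and extrapolates it forward. This is a plain linear trend, not an AI model, and the function names are assumptions for illustration.

```python
from typing import Sequence, Tuple


def fit_linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of values against their index 0..n-1.

    Returns (slope, intercept) of the fitted line.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values: Sequence[float], periods_ahead: int) -> float:
    """Extrapolate the fitted line periods_ahead steps past the last observation."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + periods_ahead)
```

A straight line is only a baseline: it cannot capture seasonality or sudden shifts, so it is best used as a sanity check against whatever richer model is actually deployed.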

Market Statistics: Real Estate Data Scraping Trends (2025-2030)

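| Year | Firms Using Web Scraping (%) | Data-Driven Decision Making (%) | Automated Market Analysis (%) |
|------|------------------------------|---------------------------------|-------------------------------|
| 2025 | 60% | 55% | 50% |
| 2027 | 75% | 70% | 65% |
| 2030 | 85% | 90% | 80% |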

Fact: By 2030, 85% of real estate companies will integrate Web Scraping for Real Estate to improve market research and property valuation. (Source: FutureTech Real Estate 2025)

Why Choose Professional Web Scraping Services?

In the fast-evolving real estate industry, staying ahead of market trends requires accurate, real-time data. Professional Web Scraping Services provide businesses with structured and actionable insights, helping investors, realtors, and property managers make data-driven decisions.

By leveraging Commercial Real Estate Data Scraping, businesses can extract key property details, market trends, and competitor insights from various sources, including MLS platforms, real estate portals, and rental listings.

Key Advantages of Professional Web Scraping Services

Web Scraping API Services – Instant Access to Structured Real Estate Data

Automates data extraction from multiple sources, ensuring real-time updates.

Helps businesses track property prices, rental yields, and demand trends.

Supports Real Estate Data Scraping Services with seamless integration.

Mobile App Scraping Services – Extract Data from Real Estate Mobile Applications

Enables data collection from real estate apps like Zillow, Realtor.com, and Redfin.

Scrapes rental property datasets to monitor rental price fluctuations.

Essential for tracking user engagement and emerging property listings.

Customized Scraping Solutions – Tailored Data Extraction Based on Investment Strategies

Extracts data specific to commercial and residential real estate needs.

Supports property-level web scraping to gain competitive intelligence.

Allows investors to analyze market demand, property appreciation rates, and ROI potential.
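Appreciation rate and ROI, mentioned in the last item above, can be computed directly from scraped price histories. The sketch below uses the standard compound annual growth rate formula for appreciation and a simple gain-over-cost ratio for ROI. The function names and the inputs chosen for the ROI calculation are assumptions for illustration; real analyses typically account for financing, taxes, and holding costs as well.

```python
def annual_appreciation_rate(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two valuations, as a fraction."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def simple_roi(purchase_price: float, extra_costs: float,
               sale_price: float, rent_collected: float) -> float:
    """Net gain divided by total money invested, as a fraction."""
    total_cost = purchase_price + extra_costs
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    net_gain = sale_price + rent_collected - total_cost
    return net_gain / total_cost
```

For example, a property valued at 300,000 that reaches 363,000 two years later has appreciated at roughly 10% per year, since 1.10 squared is 1.21.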

Real Estate Data Scraping Trends (2025-2030)

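| Year | Real Estate Firms Using Data Scraping (%) | AI & Automation Adoption (%) | Market Insights Gained from Scraping (%) |
|------|-------------------------------------------|------------------------------|------------------------------------------|
| 2025 | 62% | 50% | 55% |
| 2027 | 78% | 70% | 73% |
| 2030 | 90% | 85% | 88% |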

Fact: By 2030, 90% of real estate firms will rely on Real Estate Data Scraping Services for market research and investment decisions. (Source: Future Real Estate Insights 2025-2030)

Why Choose Professional Web Scraping Services?

Automated & Scalable Solutions – Large-scale data extraction for real-time insights

Compliance & Data Accuracy – Ensures legal, structured, and reliable data collection

Competitive Market Intelligence – Track competitor pricing, listings, and agent strategies

By adopting Professional Web Scraping Services, businesses can stay ahead of market fluctuations, track property trends, and maximize investment returns.

Key Benefits of Real Estate Data Scraping

In today's fast-paced real estate market, making data-driven decisions is crucial for maximizing investment returns. Real Estate Property Data Scraping enables businesses to extract valuable insights from multiple sources, allowing for smarter pricing, investment risk mitigation, and competitive analysis.