#AWS CloudWatch Monitoring and Logging Best Practices


ColdFusion and AWS CloudWatch: Monitoring and Logging Best Practices

#ColdFusion and AWS CloudWatch: Monitoring and Logging Best Practices#ColdFusion and AWS CloudWatch Monitoring#ColdFusion and AWS CloudWatch Logging Best Practices#ColdFusion and AWS CloudWatch#ColdFusion AWS CloudWatch#ColdFusion#AWS CloudWatch#ColdFusion Monitoring and Logging Best Practices#AWS CloudWatch Monitoring and Logging Best Practices


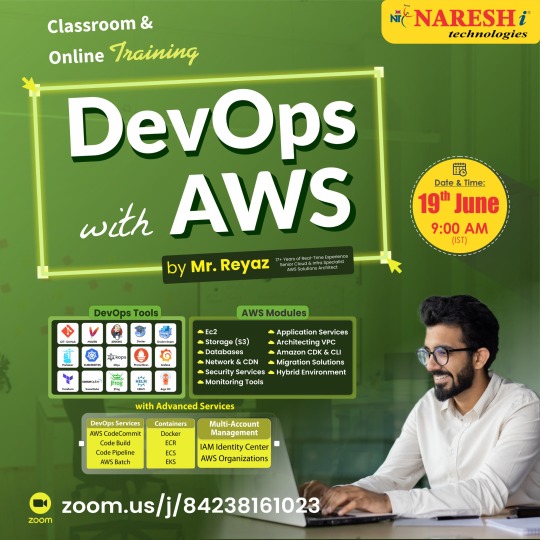

🚀 Master DevOps with AWS – New Batch Starts 19th June! 🚀

Hey tech enthusiasts! Ready to dive into the world of continuous integration, cloud automation, and cutting‑edge deployment pipelines? Join our DevOps with AWS live training, led by industry expert Mr. Reyaz, starting 19th June at 9:00 AM (IST). Whether you’re a curious beginner or a developer looking to level up, this course covers everything you need to thrive in modern IT.

🔧 What You’ll Learn:

CI/CD Pipelines with AWS CodePipeline & CodeBuild

Infrastructure as Code using CloudFormation & Terraform

Containerization & Orchestration with Docker & EKS

Monitoring & Logging via CloudWatch & ELK Stack

Security Best Practices with IAM, KMS & VPC setups

Hands‑On Projects reflecting real‑world scenarios

💡 Why Enroll?

Live, interactive sessions with Mr. Reyaz

Step‑by‑step labs you can pause & replay

Real‑time Q&A and doubt‑clearing support

Placement assistance to help you land that first DevOps role

🔗 Register for Early Seats – Don’t Miss Out! 👉 https://tr.ee/f3bN5E

✨ Explore even more free demo courses: 📚 https://linktr.ee/ITcoursesFreeDemos

Don’t just learn DevOps—live it. Automate your deployments, secure your cloud, and become the go‑to DevOps pro in your team.


Mastering AWS DevOps in 2025: Best Practices, Tools, and Real-World Use Cases

In 2025, the cloud ecosystem continues to grow rapidly. Organizations of every size are adopting AWS DevOps to automate software delivery, improve security, and scale efficiently. Mastering AWS DevOps means knowing which combination of tools, best practices, and real-world patterns works in production.

This guide covers the core elements of AWS DevOps, the best practices for 2025, and real-world examples of how companies use AWS DevOps to stay competitive.

What Is AWS DevOps?

AWS DevOps is the union of cultural principles, practices, and tools on Amazon Web Services that enhances an organization's capacity to deliver applications and services at a higher speed. It facilitates continuous integration, continuous delivery, infrastructure as code, monitoring, and cooperation among development and operations teams.

Why AWS DevOps is Important in 2025

As organizations require quicker innovation and zero downtime, DevOps on AWS offers the flexibility and reliability to compete. Trends such as AI integration, serverless architecture, and automated compliance are changing how teams adopt DevOps in 2025.

Advantages of adopting AWS DevOps:

1. Faster deployment cycles

2. Enhanced system reliability

3. Flexible and scalable cloud infrastructure

4. Automation from code to production

5. Integrated security and compliance

Best AWS DevOps Tools to Learn in 2025

These are the most critical tools fueling current AWS DevOps pipelines:

AWS CodePipeline

A fully managed CI/CD service that automates your release process.

AWS CodeBuild

A scalable build service that compiles source code, runs tests, and produces ready-to-deploy packages.

AWS CodeDeploy

Automates code deployments to EC2, Lambda, ECS, or on-prem servers with zero-downtime approaches.

AWS CloudFormation and CDK

Infrastructure as code (IaC) tools that make cloud environments repeatable and versioned.

Amazon CloudWatch

Collects logs and metrics and raises alarms so you can track application and infrastructure performance.

AWS Lambda

Serverless compute that runs code in response to triggers, well-suited for event-driven DevOps automation.

AWS DevOps Best Practices in 2025

1. Adopt Infrastructure as Code (IaC)

Use AWS CloudFormation or Terraform to declare infrastructure. Declared infrastructure is repeatable, easier to collaborate on, and can be kept under version control.
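As a rough illustration of what "declaring" infrastructure means, the Python sketch below builds a minimal CloudFormation template for one versioned S3 bucket and serializes it to JSON. The logical ID `AppLogsBucket` and the helper names `build_template` and `render` are assumptions made for this example, not part of any project described here.

```python
import json


def build_template(logical_id: str = "AppLogsBucket") -> dict:
    """Return a minimal CloudFormation template declaring one S3 bucket.

    The logical ID is a hypothetical name chosen for illustration.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example stack: one versioned S3 bucket",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep old object versions so changes can be rolled back.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
    }


def render(template: dict) -> str:
    """Serialize deterministically so the file diffs cleanly in version control."""
    return json.dumps(template, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(render(build_template()))
```

Because the template is plain data, it can be reviewed in a pull request and deployed the same way in every environment.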

2. Use Full CI/CD Pipelines

Use tools such as CodePipeline, GitHub Actions, or Jenkins on AWS to automate building, testing, and deployment.

3. Shift Left on Security

Bake security in early with Amazon Inspector, CodeGuru, and Secrets Manager. As part of CI/CD, automate vulnerability scans.

4. Monitor Everything

Utilize CloudWatch, X-Ray, and CloudTrail to achieve complete observability into your system. Implement alerts to detect and respond to problems promptly.

5. Use Containers and Serverless for Scalability

Utilize Amazon ECS, EKS, or Lambda for autoscaling. These services lower infrastructure management overhead and enhance efficiency.

Real-World AWS DevOps Use Cases

Use Case 1: Scalable CI/CD for a Fintech Startup

A financial firm used AWS CodePipeline and CodeDeploy to automate deployments to both staging and production environments. By containerizing with ECS and monitoring with CloudWatch, they reduced deployment errors by 80 percent and achieved near-zero downtime.

Use Case 2: Legacy Modernization for an Enterprise

A legacy enterprise moved its on-premises applications to AWS with CloudFormation and EC2 Auto Scaling. By adopting full-stack DevOps pipelines and moving to microservices on EKS, they improved time-to-market by 60 percent.

Use Case 3: Serverless DevOps for a SaaS Product

A SaaS organization used AWS Lambda and API Gateway for its backend functions. Using CodeBuild and CloudWatch, the team shipped features quickly and scaled automatically during peak usage without having to provision infrastructure.

Top Trends in AWS DevOps in 2025

AI-driven DevOps: Integration with CodeWhisperer, CodeGuru, and machine learning algorithms for intelligence-driven automation

Compliance-as-Code: Governance policies automated using services such as AWS Config and Service Control Policies

Multi-account strategies: Employing AWS Organizations for scalable, secure account management

Zero Trust Architecture: Implementing strict identity-based access with IAM, SSO, and MFA

Hybrid Cloud DevOps: Connecting on-premises systems to AWS for effortless deployments

Conclusion

In 2025, becoming a master of AWS DevOps means syncing your development workflows with cloud-native architecture, innovative tools, and current best practices. With AWS, teams are able to create secure, scalable, and automated systems that release value at an unprecedented rate.

Begin with automating your pipelines, securing your deployments, and scaling with confidence. DevOps is the way of the future, and AWS is leading the way.

Frequently Asked Questions

What distinguishes AWS DevOps from DevOps?

DevOps itself is a practice. AWS DevOps applies that practice using AWS services and tools.

Can small teams benefit from AWS DevOps?

Yes. AWS provides fully managed services that enable small teams to scale and automate without having to handle complicated infrastructure.

Which programming languages does AWS DevOps support?

AWS supports the major languages: Python, Node.js, Java, Go, .NET, Ruby, and many more.

Is AWS DevOps suitable for enterprise-scale applications?

Yes. Large enterprises use AWS DevOps to run large-scale, multi-region applications with millions of users.


Integrating ROSA Applications with AWS Services (CS221)

In today's rapidly evolving cloud-native landscape, enterprises are looking for scalable, secure, and fully managed Kubernetes solutions that work seamlessly with existing cloud infrastructure. Red Hat OpenShift Service on AWS (ROSA) meets that demand by combining the power of Red Hat OpenShift with the scalability and flexibility of Amazon Web Services (AWS).

In this blog post, we’ll explore how you can integrate ROSA-based applications with key AWS services, unlocking a powerful hybrid architecture that enhances your applications' capabilities.

📌 What is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a managed OpenShift offering jointly developed and supported by Red Hat and AWS. It allows you to run containerized applications using OpenShift while taking full advantage of AWS services such as storage, databases, analytics, and identity management.

🔗 Why Integrate ROSA with AWS Services?

Integrating ROSA with native AWS services enables:

Seamless access to AWS resources (like RDS, S3, DynamoDB)

Improved scalability and availability

Cost-effective hybrid application architecture

Enhanced observability and monitoring

Secure IAM-based access control using AWS IAM Roles for Service Accounts (IRSA)

🛠️ Key Integration Scenarios

1. Storage Integration with Amazon S3 and EFS

Applications deployed on ROSA can use AWS storage services for persistent and object storage needs.

Use Case: A web app storing images to S3.

How: Use OpenShift’s CSI drivers to mount EFS or access S3 through SDKs or CLI.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi
```

2. Database Integration with Amazon RDS

You can offload your relational database requirements to managed RDS instances.

Use Case: Deploying a Spring Boot app with PostgreSQL on RDS.

How: Store DB credentials in Kubernetes secrets and use RDS endpoint in your app’s config.

```env
SPRING_DATASOURCE_URL=jdbc:postgresql://<rds-endpoint>:5432/mydb
```

3. Authentication with AWS IAM + OIDC

ROSA supports IAM Roles for Service Accounts (IRSA), enabling fine-grained permissions for workloads.

Use Case: Granting a pod access to a specific S3 bucket.

How:

Create an IAM role with S3 access

Associate it with a Kubernetes service account

Use OIDC to federate access
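The three steps above meet in the role's trust policy. The Python sketch below builds one such policy document for OIDC web identity federation. The function name `build_trust_policy` and every argument value in the usage note are placeholders assumed for illustration; the real OIDC provider string comes from your cluster.

```python
import json


def build_trust_policy(account_id: str, oidc_provider: str,
                       namespace: str, service_account: str) -> dict:
    """Build an IAM trust policy that lets one Kubernetes service account
    assume a role through OIDC web identity federation.

    oidc_provider is the cluster's OIDC issuer host and path without the
    "https://" prefix. All values passed in are supplied by the caller.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Restrict the role to exactly one service account.
                        f"{oidc_provider}:sub":
                            f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = build_trust_policy("123456789012", "oidc.example.com/abc123",
                                "web", "image-uploader")
    print(json.dumps(policy, indent=2))
```

The S3 permissions themselves live in a separate permissions policy attached to the same role; the trust policy only controls who may assume it.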

4. Observability with Amazon CloudWatch and Prometheus

Monitor your workloads using Amazon CloudWatch Container Insights or integrate Prometheus and Grafana on ROSA for deeper insights.

Use Case: Track application metrics and logs in a single AWS dashboard.

How: Forward logs from OpenShift to CloudWatch using Fluent Bit.

5. Serverless Integration with AWS Lambda

Bridge your ROSA applications with AWS Lambda for event-driven workloads.

Use Case: Triggering a Lambda function on file upload to S3.

How: Configure S3 event notifications or EventBridge to invoke the Lambda function. The ROSA app triggers the workflow by uploading the file.
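On the Lambda side, the handler for this use case might look like the sketch below. It assumes the function is invoked directly by S3 event notifications (EventBridge delivers a differently shaped event), and the helper name `extract_uploads` is hypothetical.

```python
from urllib.parse import unquote_plus


def extract_uploads(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification payload."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket and key:
            # Object keys arrive URL-encoded (spaces become "+").
            uploads.append((bucket, unquote_plus(key)))
    return uploads


def lambda_handler(event, context):
    """Entry point that Lambda invokes; here it only logs what was uploaded."""
    uploads = extract_uploads(event)
    for bucket, key in uploads:
        print(f"New object: s3://{bucket}/{key}")
    return {"processed": len(uploads)}
```

Keeping the parsing in its own function makes it testable locally with a hand-written event dictionary, without deploying anything.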

🔒 Security Best Practices

Use IAM Roles for Service Accounts (IRSA) to avoid hardcoding credentials.

Use AWS Secrets Manager or OpenShift Vault integration for managing secrets securely.

Enable AWS PrivateLink to keep traffic within the AWS private network.

🚀 Getting Started

To start integrating your ROSA applications with AWS:

Deploy your ROSA cluster using the AWS Management Console or CLI

Set up AWS CLI & IAM permissions

Enable the AWS services needed (e.g., RDS, S3, Lambda)

Create Kubernetes Secrets and ConfigMaps for service integration

Use ServiceAccounts, RBAC, and IRSA for secure access

🎯 Final Thoughts

ROSA is not just about running Kubernetes on AWS—it's about unlocking the true hybrid cloud potential by integrating with a rich ecosystem of AWS services. Whether you're building microservices, data pipelines, or enterprise-grade applications, ROSA + AWS gives you the tools to scale confidently, operate securely, and innovate rapidly.

If you're interested in hands-on workshops, consulting, or ROSA enablement for your team, feel free to reach out to HawkStack Technologies – your trusted Red Hat and AWS integration partner.

💬 Let's Talk!

Have you tried ROSA yet? What AWS services are you integrating with your OpenShift workloads? Share your experience or questions in the comments!

For more details, visit www.hawkstack.com


Implementing AWS Web Application Firewall for Robust Protection

Implementing AWS Web Application Firewall (WAF) offers robust protection for web applications by filtering and monitoring HTTP(S) traffic to safeguard against common threats like SQL injection and cross-site scripting (XSS). This managed service integrates seamlessly with AWS services such as Amazon CloudFront, Application Load Balancer, and API Gateway, providing a scalable and cost-effective solution for application security. To ensure effective deployment, it's recommended to test WAF rules in a staging environment using count mode before applying them in production. Additionally, enabling detailed logging through Amazon CloudWatch or Amazon S3 can aid in monitoring and compliance. Regularly updating and customizing WAF rules to align with specific application needs further enhances security posture. For organizations seeking comprehensive application-level security, leveraging AWS WAF in conjunction with services like Edgenexus Limited's Web Application Firewall can provide layered defense against evolving cyber threats.

The Importance of AWS Web Application Firewall

AWS WAF is a managed service that helps protect web applications from common web exploits that could affect application availability, compromise security, or consume excessive resources. By filtering and monitoring HTTP and HTTPS requests, AWS WAF allows you to control access to your content. Implementing AWS WAF enables businesses to defend against threats such as SQL injection, cross-site scripting (XSS), and other OWASP Top 10 vulnerabilities. This proactive approach to security is essential for maintaining the integrity and availability of web applications.

Key Features of AWS Web Application Firewall

AWS WAF offers several features that enhance web application security. It provides customizable rules to block common attack patterns, such as SQL injection or cross-site scripting. Additionally, AWS WAF allows for rate-based rules to mitigate DDoS attacks and bot traffic. Integration with AWS Shield Advanced provides an additional layer of protection against larger-scale attacks. Furthermore, AWS WAF's logging capabilities enable detailed monitoring and analysis of web traffic, facilitating quick identification and response to potential threats.
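To make the rate-based rule idea concrete, the Python sketch below assembles a rule definition shaped like the JSON that the WAFV2 API accepts. It defaults to count mode, in line with the advice to observe a rule before enforcing it. The function name `build_rate_rule`, the rule name, and the limit of 2000 are assumptions for illustration, not recommended values.

```python
def build_rate_rule(name: str, limit: int, priority: int = 1,
                    count_only: bool = True) -> dict:
    """Build a rate-based rule that tracks requests per source IP.

    With count_only=True the rule only counts matching requests, which is
    a safe way to observe its effect before switching it to Block.
    """
    action = {"Count": {}} if count_only else {"Block": {}}
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            # Limit is the request ceiling per IP within the evaluation window.
            "RateBasedStatement": {"Limit": limit, "AggregateKeyType": "IP"}
        },
        "Action": action,
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


if __name__ == "__main__":
    staging_rule = build_rate_rule("limit-per-ip", 2000)
    enforced_rule = build_rate_rule("limit-per-ip", 2000, count_only=False)
    print(staging_rule["Action"], enforced_rule["Action"])
```

Generating the staging and production variants from the same function keeps the two rules identical apart from the action.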

Best Practices for Implementing AWS Web Application Firewall

When implementing AWS WAF, it's crucial to follow best practices to ensure optimal protection. Start by defining a baseline of normal application traffic to identify anomalies. Utilize AWS Managed Rules to protect against common threats and customize them to fit your application's specific needs. Regularly update and review your WAF rules to adapt to emerging threats. Additionally, integrate AWS WAF with Amazon CloudWatch for real-time monitoring and alerting, enabling swift responses to potential security incidents.

Integrating AWS Web Application Firewall with AWS Services

AWS WAF seamlessly integrates with various AWS services, enhancing its effectiveness. Deploying AWS WAF with Amazon CloudFront allows for global distribution of content with added security at the edge. Integration with Application Load Balancer ensures that only legitimate traffic reaches your application servers. Additionally, AWS WAF can be used with Amazon API Gateway to protect APIs from malicious requests. These integrations provide a comprehensive security solution across your AWS infrastructure.

Monitoring and Logging with AWS Web Application Firewall

Monitoring and logging are essential components of a robust security strategy. AWS WAF provides detailed logs of web requests, including information on the request's source, headers, and the action taken by the WAF rules. These logs can be stored in Amazon S3, analyzed using Amazon Athena, or visualized through Amazon OpenSearch Service. By regularly reviewing these logs, businesses can identify patterns, detect anomalies, and respond promptly to potential threats, ensuring continuous protection of web applications.

Cost Considerations for AWS Web Application Firewall

While AWS WAF offers robust security features, it's essential to consider the associated costs. Pricing is based on the number of web access control lists (ACLs), the number of rules per ACL, and the number of web requests processed. To optimize costs, regularly review and adjust your WAF rules to ensure they are necessary and effective. Additionally, leveraging AWS Shield Advanced can provide additional protection against larger-scale attacks, potentially reducing the need for extensive custom WAF rules. By carefully managing AWS WAF configurations, businesses can achieve a balance between robust security and cost efficiency.

Future-Proofing Your Web Application Security with AWS WAF

As cyber threats continue to evolve, it's crucial to future-proof your web application security. AWS WAF's flexibility allows for the implementation of custom rules to address emerging threats. Regularly updating and refining these rules ensures that your applications remain protected against new vulnerabilities. Additionally, staying informed about updates and new features released by AWS can provide opportunities to enhance your security posture further. By proactively managing AWS WAF configurations, businesses can maintain a robust defense against evolving cyber threats.

Conclusion

Implementing AWS Web Application Firewall is a critical step in protecting web applications from common and emerging threats. By following best practices, integrating with AWS services, and continuously monitoring and refining security configurations, businesses can ensure the integrity and availability of their applications. Edgenexus Limited's expertise in IT services and consulting can assist organizations in effectively deploying and managing AWS WAF, providing tailored solutions to meet specific security needs. With a proactive approach to web application security, businesses can safeguard their digital assets and maintain trust with their users.


Unlock Your Cloud Career with an AWS DevOps Course

Automation and cloud computing are now central to a career in information technology. If you want to move into cloud operations and software deployment, an AWS DevOps course can be a career-defining step. It equips you with the tools, skills, and hands-on experience needed to bridge the gap between development and operations on Amazon Web Services (AWS).

What Is AWS DevOps?

AWS DevOps refers to the application of DevOps practices, such as continuous integration and continuous delivery (CI/CD), infrastructure as code (IaC), and automated testing, on the AWS platform. Using AWS services such as CodePipeline, CodeDeploy, CloudFormation, and EC2, companies can automate software delivery and make deployments more reliable.

Why Study an AWS DevOps Course?

An AWS DevOps course offers instructor-led training that builds both theoretical and practical knowledge of DevOps on AWS. The main benefits are:

Hands-on training: Trainers deliver labs and project-based classes in live cloud environments.

Industry-relevant practical skills: You learn how to automate deployments, monitor performance, and manage infrastructure efficiently.

Certification readiness: Most AWS DevOps courses align with the AWS Certified DevOps Engineer – Professional exam, and you can validate your skills with a respected certification.

Career advancement: AWS-experienced DevOps engineers are sought after and command higher salaries.

What You'll Learn

A typical AWS DevOps course covers a broad spectrum of subjects, including:

Overview of AWS and main services

CI/CD pipelines with AWS CodePipeline and CodeBuild

Infrastructure as Code using AWS CloudFormation and Terraform

Monitoring and logging with AWS CloudWatch

Security best practices and compliance

Blue/green, rolling, and canary deployments

Who Should Take an AWS DevOps Course?

This course is perfect for:

System administrators who wish to automate infrastructure

Software developers who want simpler, less error-prone deployments

Cloud engineers expanding on their AWS knowledge

IT professionals who wish to get certified with AWS

Choosing the Right AWS DevOps Course

When choosing a course, keep in mind:

Instructor expertise: Select a course taught by seasoned professionals with current industry experience.

Curriculum depth: Make sure it addresses core as well as advanced material.

Project-based learning: Select courses that include labs or capstone projects.

Certification alignment: If you want to become certified, select a course aligned with the objectives of the AWS Certified DevOps Engineer exam.

Final Thoughts

Whether you want to build cloud skills, automate software delivery, or prepare for a sought-after certification, an AWS DevOps course is a sound investment in your technology career. With effort and proper training, you will be able to apply DevOps best practices and help organizations scale correctly in the cloud.


The Ultimate Roadmap to AIOps Platform Development: Tools, Frameworks, and Best Practices for 2025

In the ever-evolving world of IT operations, AIOps (Artificial Intelligence for IT Operations) has moved from buzzword to business-critical necessity. As companies face increasing complexity, hybrid cloud environments, and demand for real-time decision-making, AIOps platform development has become the cornerstone of modern enterprise IT strategy.

If you're planning to build, upgrade, or optimize an AIOps platform in 2025, this comprehensive guide will walk you through the tools, frameworks, and best practices you must know to succeed.

What Is an AIOps Platform?

An AIOps platform leverages artificial intelligence, machine learning (ML), and big data analytics to automate IT operations—from anomaly detection and event correlation to root cause analysis, predictive maintenance, and incident resolution. The goal? Proactively manage, optimize, and automate IT operations to minimize downtime, enhance performance, and improve the overall user experience.

Key Functions of AIOps Platforms:

Data Ingestion and Integration

Real-Time Monitoring and Analytics

Intelligent Event Correlation

Predictive Insights and Forecasting

Automated Remediation and Workflows

Root Cause Analysis (RCA)

Why AIOps Platform Development Is Critical in 2025

Here’s why 2025 is a tipping point for AIOps adoption:

Explosion of IT Data: Gartner predicts that IT operations data will grow 3x by 2025.

Hybrid and Multi-Cloud Dominance: Enterprises now manage assets across public clouds, private clouds, and on-premises.

Demand for Instant Resolution: User expectations for zero downtime and faster support have skyrocketed.

Skill Shortages: IT teams are overwhelmed, making automation non-negotiable.

Security and Compliance Pressures: Faster anomaly detection is crucial for risk management.

Step-by-Step Roadmap to AIOps Platform Development

1. Define Your Objectives

Problem areas to address: Slow incident response? Infrastructure monitoring? Resource optimization?

KPIs: MTTR (Mean Time to Resolution), uptime percentage, operational costs, user satisfaction rates.

2. Data Strategy: Collection, Integration, and Normalization

Sources: Application logs, server metrics, network traffic, cloud APIs, IoT sensors.

Data Pipeline: Use ETL (Extract, Transform, Load) tools to clean and unify data.

Real-Time Ingestion: Implement streaming technologies like Apache Kafka, AWS Kinesis, or Azure Event Hubs.

3. Select Core AIOps Tools and Frameworks

We'll explore these in detail below.

4. Build Modular, Scalable Architecture

Microservices-based design enables better updates and feature rollouts.

API-First development ensures seamless integration with other enterprise systems.

5. Integrate AI/ML Models

Anomaly Detection: Isolation Forest, LSTM models, autoencoders.

Predictive Analytics: Time-series forecasting, regression models.

Root Cause Analysis: Causal inference models, graph neural networks.
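As a much simpler stand-in for the models listed above (this is a rolling z-score check, not an Isolation Forest, LSTM, or autoencoder), the Python sketch below flags metric samples that deviate sharply from their recent history. The function name `find_anomalies` and the default window and threshold are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=10, threshold=3.0):
    """Return indexes whose value deviates from the trailing window mean
    by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical CPU utilization samples with one spike.
    cpu = [50, 51, 49, 50, 52, 48, 50, 51, 49, 50, 95, 50]
    print(find_anomalies(cpu))
```

A baseline like this is useful mainly as a reference point: a learned model earns its complexity only if it catches incidents that the simple check misses.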

6. Implement Intelligent Automation

Use RPA (Robotic Process Automation) combined with AI to enable self-healing systems.

Playbooks and Runbooks: Define automated scripts for known issues.

7. Deploy Monitoring and Feedback Mechanisms

Track performance using dashboards.

Continuously retrain models to adapt to new patterns.

Top Tools and Technologies for AIOps Platform Development (2025)

Data Ingestion and Processing

Apache Kafka

Fluentd

Elastic Stack (ELK/EFK)

Snowflake (for big data warehousing)

Monitoring and Observability

Prometheus + Grafana

Datadog

Dynatrace

Splunk ITSI

Machine Learning and AI Frameworks

TensorFlow

PyTorch

scikit-learn

H2O.ai (automated ML)

Event Management and Correlation

Moogsoft

BigPanda

ServiceNow ITOM

Automation and Orchestration

Ansible

Puppet

Chef

SaltStack

Cloud and Infrastructure Platforms

AWS CloudWatch and DevOps Tools

Google Cloud Operations Suite (formerly Stackdriver)

Azure Monitor and Azure DevOps

Best Practices for AIOps Platform Development

1. Start Small, Then Scale

Begin with a few critical systems before scaling to full-stack observability.

2. Embrace a Unified Data Strategy

Ensure that your AIOps platform ingests structured and unstructured data across all environments.

3. Prioritize Explainability

Build AI models that offer clear reasoning for decisions, not black-box results.

4. Incorporate Feedback Loops

AIOps platforms must learn continuously. Implement mechanisms for humans to approve, reject, or improve suggestions.

5. Ensure Robust Security and Compliance

Encrypt data in transit and at rest.

Implement access controls and audit trails.

Stay compliant with standards like GDPR, HIPAA, and CCPA.

6. Choose Cloud-Native and Open-Source Where Possible

Future-proof your system by building on open standards and avoiding vendor lock-in.

Key Trends Shaping AIOps in 2025

Edge AIOps: Extending monitoring and analytics to edge devices and remote locations.

AI-Enhanced DevSecOps: Tight integration between AIOps and security operations (SecOps).

Hyperautomation: Combining AIOps with enterprise-wide RPA and low-code platforms.

Composable IT: Building modular AIOps capabilities that can be assembled dynamically.

Federated Learning: Training models across multiple environments without moving sensitive data.

Challenges to Watch Out For

Data Silos: Incomplete data pipelines can cripple AIOps effectiveness.

Over-Automation: Relying too much on automation without human validation can lead to errors.

Skill Gaps: Building an AIOps platform requires expertise in AI, data engineering, IT operations, and cloud architectures.

Invest in cross-functional teams and continuous training to overcome these hurdles.

Conclusion: Building the Future with AIOps

In 2025, the enterprises that invest in robust AIOps platform development will not just survive—they will thrive. By integrating the right tools, frameworks, and best practices, businesses can unlock proactive incident management, faster innovation cycles, and superior user experiences.

AIOps isn’t just about reducing tickets—it’s about creating a resilient, self-optimizing IT ecosystem that powers future growth.


Top Training Institutions in Ameerpet for DevOps and AWS DevOps Courses

Introduction

Ameerpet, located in Hyderabad, India, has earned a reputation as a hub for quality IT training. Whether you're a fresh graduate or a working professional looking to upskill, Ameerpet offers a wide array of training institutions that cater to the growing demand for DevOps and AWS DevOps skills.

Why Ameerpet is a Preferred Location for IT Training

Ameerpet has long been recognized as a hotspot for IT courses due to its affordable fees, experienced faculty, and real-time project-based training. With the rise of cloud computing and automation, DevOps training institutes in Ameerpet have tailored their programs to align with current industry demands.

DevOps Course Online in Ameerpet

For those who can't attend classes in person, many institutes offer DevOps courses online in Ameerpet. These online courses provide flexibility without compromising on the quality of instruction. Live sessions, recorded videos, doubt-clearing sessions, and hands-on labs are commonly included.

DevOps Training Online in Ameerpet: What to Expect

Online training programs in Ameerpet usually cover:

Introduction to DevOps culture and practices

CI/CD pipelines using Jenkins

Containerization with Docker and Kubernetes

Version control with Git and GitHub

Infrastructure as Code using Ansible and Terraform

Students enrolled in DevOps training online in Ameerpet gain both theoretical knowledge and practical exposure, often guided by industry experts.

DevOps Classroom Training in Ameerpet

For learners who prefer traditional setups, DevOps classroom training in Ameerpet offers an immersive environment with face-to-face interaction. These sessions are highly interactive and ideal for people who thrive on immediate feedback and collaboration.

AWS DevOps Course in Ameerpet

With the growing importance of cloud-native DevOps, several institutions now offer a specialized AWS DevOps course in Ameerpet. These courses focus on:

AWS services such as EC2, S3, Lambda, and CloudFormation

CI/CD automation using AWS CodePipeline and CodeBuild

Monitoring and logging with CloudWatch and X-Ray

These courses are designed to help students prepare for AWS Certified DevOps Engineer exams and build strong practical skills.

How to Choose the Best DevOps Training Institute in Ameerpet

When selecting a DevOps training institute in Ameerpet, consider the following:

Curriculum alignment with industry trends

Trainer qualifications and industry experience

Access to hands-on labs and real-world projects

Flexibility for online or offline learning

Placement assistance and certification support

Conclusion

Whether you're looking for a DevOps course online in Ameerpet or prefer the traditional classroom route, there are plenty of reputable training institutions in Ameerpet that can help you build a successful DevOps career. From basic principles to advanced AWS DevOps practices, Ameerpet remains a top destination for IT training.

#devops training institute in ameerpet#devops classroom training in ameerpet#aws devops course in ameerpet


Monitoring Amazon RDS Hands-On | Optimize Database Performance

Step 1: Access the Amazon RDS Console
- Log in to the AWS Management Console.
- Navigate to the RDS service.

Step 2: Enable Enhanced Monitoring
- Select your RDS instance from the Databases section.
- Click on "Modify."
- Under Monitoring, enable Enhanced monitoring.
- Set the Granularity (e.g., 1 minute).
- Click "Continue," then "Modify DB Instance."

Step 3: View CloudWatch Metrics
- In the RDS console, select your instance.
- Go to the Monitoring tab.
- Review metrics such as CPU Utilization, Freeable Memory, Read IOPS, Write IOPS, and DB Connections.

Step 4: Set Up CloudWatch Alarms
- Navigate to the CloudWatch service in AWS.
- Go to Alarms and click "Create Alarm."
- Choose the RDS metric you want to monitor (e.g., CPU Utilization).
- Set the threshold and notification options.
- Click "Create Alarm."

Step 5: Analyze Logs for Performance Issues
- In the RDS console, select your instance.
- Go to the Logs and events tab.
- View and download error logs, slow query logs, and general logs to identify performance bottlenecks.
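The alarm from Step 4 can also be defined in code rather than through the console. The Python sketch below builds the arguments for CloudWatch's `put_metric_alarm` call for RDS CPU utilization. The helper names, the alarm naming scheme, the 80 percent threshold, and the evaluation settings are assumptions for illustration; `create_alarm` additionally assumes boto3 is installed and AWS credentials are configured.

```python
def build_cpu_alarm(db_instance_id: str, sns_topic_arn: str,
                    threshold: float = 80.0) -> dict:
    """Keyword arguments for CloudWatch's PutMetricAlarm on RDS CPU usage."""
    return {
        "AlarmName": f"{db_instance_id}-high-cpu",
        "Namespace": "AWS/RDS",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        "Statistic": "Average",
        "Period": 300,             # seconds per data point
        "EvaluationPeriods": 3,    # three consecutive breaching points
        "Threshold": threshold,    # percent
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(params: dict) -> None:
    """Send the alarm definition to CloudWatch (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder works without the SDK

    boto3.client("cloudwatch").put_metric_alarm(**params)
```

Separating the builder from the API call lets you review or unit-test the alarm definition before anything is created in an account.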


📢 Subscribe to ClouDolus for More AWS & DevOps Tutorials! 🚀 🔹 ClouDolus YouTube Channel - [https://www.youtube.com/@cloudolus] 🔹 ClouDolus AWS DevOps - [https://www.youtube.com/@ClouDolusPro]

*THANKS FOR BEING A PART OF ClouDolus! 🙌✨*

AWS Unlocked: Skills That Open Doors

AWS Demand and Relevance in the Job Market

Amazon Web Services (AWS) continues to dominate the cloud computing space, making AWS skills highly valuable in today’s job market. As more companies migrate to the cloud for scalability, cost-efficiency, and innovation, professionals with AWS expertise are in high demand. From startups to Fortune 500 companies, organizations are seeking cloud architects, developers, and DevOps engineers proficient in AWS.

The relevance of AWS spans across industries—IT, finance, healthcare, and more—highlighting its versatility. Certifications like AWS Certified Solutions Architect or AWS Certified DevOps Engineer serve as strong indicators of proficiency and can significantly boost one’s resume.

According to job portals and market surveys, AWS-related roles often command higher salaries compared to non-cloud positions. As cloud technology continues to evolve, professionals with AWS knowledge remain crucial to digital transformation strategies, making it a smart career investment.

Basic AWS Knowledge

Amazon Web Services (AWS) is a cloud computing platform that provides a wide range of services, including computing power, storage, databases, and networking. Understanding the basics of AWS is essential for anyone entering the tech industry or looking to enhance their IT skills.

At its core, AWS offers services like EC2 (virtual servers), S3 (cloud storage), RDS (managed databases), and VPC (networking). These services help businesses host websites, run applications, manage data, and scale infrastructure without managing physical servers.

Basic AWS knowledge also includes understanding regions and availability zones, how to navigate the AWS Management Console, and using IAM (Identity and Access Management) for secure access control.

Getting started with AWS doesn’t require advanced technical skills. With free-tier access and beginner-friendly certifications like AWS Certified Cloud Practitioner, anyone can begin their cloud journey. This foundational knowledge opens doors to more specialized cloud roles in the future.

AWS Skills Open Up These Career Roles

Cloud Architect: Designs and manages an organization's cloud infrastructure using AWS services to ensure scalability, performance, and security.

Solutions Architect: Creates technical solutions based on AWS services to meet specific business needs, often in client-facing roles.

DevOps Engineer: Automates deployment processes using tools like AWS CodePipeline and CloudFormation, and integrates development with operations.

Cloud Developer: Builds cloud-native applications using AWS services such as Lambda, API Gateway, and DynamoDB.

SysOps Administrator: Handles day-to-day operations of AWS infrastructure, including monitoring, backups, and performance tuning.

Security Specialist: Focuses on cloud security, identity management, and compliance using AWS IAM, KMS, and security best practices.

Data Engineer/Analyst: Works with AWS tools like Redshift, Glue, and Athena for big data processing and analytics.

AWS Skills You Will Learn

Cloud Computing Fundamentals: Understand the basics of cloud models (IaaS, PaaS, SaaS), deployment types, and AWS's place in the market.

AWS Core Services: Get hands-on with EC2 (compute), S3 (storage), RDS (databases), and VPC (networking).

IAM & Security: Learn how to manage users, roles, and permissions with Identity and Access Management (IAM) for secure access.

Scalability & Load Balancing: Use services like Auto Scaling and Elastic Load Balancing to ensure high availability and performance.

Monitoring & Logging: Track performance and troubleshoot using tools like Amazon CloudWatch and AWS CloudTrail.

Serverless Computing: Build and deploy applications with AWS Lambda, API Gateway, and DynamoDB.

Automation & DevOps Tools: Work with AWS CodePipeline, CloudFormation, and Elastic Beanstalk to automate infrastructure and deployments.

Networking & CDN: Configure custom networks and deliver content faster using VPC, Route 53, and CloudFront.

Final Thoughts

The AWS Certified Solutions Architect – Associate certification is a powerful step toward building a successful cloud career. It validates your ability to design scalable, reliable, and secure AWS-based solutions—skills that are in high demand across industries.

Whether you're an IT professional looking to upskill or someone transitioning into cloud computing, this certification opens doors to roles like Cloud Architect, Solutions Architect, and DevOps Engineer. With real-world knowledge of AWS core services, architecture best practices, and cost-optimization strategies, you'll be equipped to contribute to cloud projects confidently.

Ensuring Cloud Security and Compliance with Proactive DevOps Practices

As organizations increasingly adopt cloud infrastructure, the need for strong security and compliance becomes more critical than ever. But traditional security practices often lag behind the speed of modern development. That’s where DevOps steps in—with proactive security integration across the development lifecycle.

In this blog, we’ll explore how combining DevOps with cloud-native best practices helps companies maintain robust security postures and meet compliance requirements without slowing down innovation.

The Challenge: Security in Rapidly Changing Cloud Environments

The cloud offers scalability and flexibility—but also introduces complexity. With rapid deployments, dynamic environments, and multiple services interacting at once, vulnerabilities can easily slip through the cracks.

Additionally, organizations need to stay compliant with industry standards such as:

ISO 27001

SOC 2

HIPAA

GDPR

PCI-DSS

Traditional security teams often can't keep pace with frequent releases. That’s why proactive DevOps practices—also known as DevSecOps—are essential for secure and compliant cloud operations.

What is DevSecOps?

DevSecOps is the practice of integrating security and compliance checks directly into the DevOps pipeline. Instead of treating security as a final step, DevSecOps ensures it’s automated, continuous, and built-in from day one.

Key principles include:

Shifting security left in the development lifecycle

Automating security scans, audits, and policy checks

Enabling collaboration between development, operations, and security teams

Ensuring compliance by design

How Proactive DevOps Enhances Cloud Security

Let’s break down how proactive DevOps practices support better security and compliance:

1. Infrastructure as Code (IaC)

Using tools like Terraform or AWS CloudFormation, teams define infrastructure in code—making it auditable, version-controlled, and consistent across environments. IaC makes it easier to enforce security standards, such as:

Enabling encryption by default

Setting secure defaults for IAM roles

Enforcing network segmentation
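
As an illustration of a secure default expressed in code, the sketch below generates a CloudFormation-style template for an S3 bucket with default encryption and public access blocked. The logical ID AppDataBucket is a hypothetical name, and a real project would more likely write the template directly in YAML or Terraform; Python is used here only to keep the example self-contained.

```python
import json


def encrypted_bucket_template(logical_id):
    """Build a CloudFormation template for a bucket with secure defaults."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Encrypt every new object with a KMS key by default.
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms"}}
                        ]
                    },
                    # Refuse public ACLs and public bucket policies.
                    "PublicAccessBlockConfiguration": {
                        "BlockPublicAcls": True,
                        "BlockPublicPolicy": True,
                        "IgnorePublicAcls": True,
                        "RestrictPublicBuckets": True,
                    },
                },
            }
        },
    }


template = encrypted_bucket_template("AppDataBucket")  # hypothetical logical ID
print(json.dumps(template, indent=2))
```

Because the template lives in version control, a reviewer can see any change that weakens these settings before it is deployed.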

2. Automated Security Scans in CI/CD

CI/CD pipelines can integrate tools that automatically:

Scan for vulnerable packages and dependencies

Run static code analysis (SAST)

Perform dynamic testing (DAST)

Check configuration files for policy violations

This ensures issues are identified early, before they reach production.
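
A pipeline usually turns scan results into a pass or fail decision. The sketch below shows one possible gate; the findings format, the identifiers, and the "fail at HIGH" cutoff are assumptions for illustration and do not match the output of any particular scanner.

```python
# Hypothetical severity scale; real scanners may use different labels.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings, fail_at="HIGH"):
    """Return the findings severe enough to stop the pipeline."""
    limit = SEVERITY_RANK[fail_at]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] >= limit]


# Invented scan results standing in for a scanner's report.
findings = [
    {"id": "EXAMPLE-0001", "package": "libfoo", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "package": "libbar", "severity": "CRITICAL"},
]

blockers = blocking_findings(findings)
exit_code = 1 if blockers else 0  # a CI step would exit with this status
for finding in blockers:
    print(f"BLOCKED by {finding['id']} in {finding['package']} ({finding['severity']})")
print("exit code:", exit_code)
```

A non-zero exit status is what most CI systems use to mark a stage as failed, so the build stops before deployment.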

3. Compliance as Code

Just like infrastructure and testing, compliance rules can be codified and automated. Tools like Open Policy Agent (OPA) or HashiCorp Sentinel help enforce policies around:

Access control

Data encryption

Resource tagging

Usage monitoring

Codifying compliance not only reduces risk—it simplifies audits.
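
The idea can be sketched without any policy engine. The example below is a plain Python stand-in, not OPA or Sentinel syntax: it checks hypothetical resource descriptions against two assumed rules, required tags and encryption enabled.

```python
# Hypothetical organizational policy: every resource needs these tags.
REQUIRED_TAGS = {"owner", "environment"}


def check_resource(resource):
    """Return a list of human-readable policy violations for one resource."""
    violations = []
    missing = REQUIRED_TAGS - set(resource.get("tags", {}))
    if missing:
        violations.append("missing tags: " + ", ".join(sorted(missing)))
    if not resource.get("encrypted", False):
        violations.append("encryption is not enabled")
    return violations


# Invented inventory standing in for a parsed IaC plan.
resources = [
    {"name": "orders-db", "encrypted": True,
     "tags": {"owner": "payments", "environment": "prod"}},
    {"name": "scratch-bucket", "encrypted": False, "tags": {"owner": "data"}},
]

report = {r["name"]: check_resource(r) for r in resources}
for name, violations in report.items():
    print(name, "->", violations or "compliant")
```

Running such checks on every change produces a record of which rule was evaluated against which resource, which is the kind of evidence auditors ask for.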

4. Continuous Monitoring and Logging

Monitoring tools like Datadog, CloudWatch, and Prometheus provide real-time visibility into infrastructure health and user activity. Centralized logging platforms ensure:

Quick detection of anomalies

Immediate response to threats

Audit trails for compliance reporting

Real-World Benefits of DevSecOps in the Cloud

Organizations that embed security into their DevOps practices experience:

Faster detection and remediation of vulnerabilities

Lower risk of data breaches and misconfigurations

Improved compliance with regulatory standards

Shorter audit times and lower overhead

Higher confidence in releases

At Salzen Cloud, we help clients implement DevSecOps strategies tailored to their cloud platforms—empowering teams to innovate quickly while staying secure and compliant.

Final Thoughts

Security and compliance shouldn’t be afterthoughts—they should be baked into your DevOps culture. With proactive practices like infrastructure as code, automated scanning, compliance as code, and continuous monitoring, you can confidently manage risk in your cloud environment.

Whether you're just starting your cloud journey or looking to improve your current security posture, Salzen Cloud can help you build and scale secure, compliant cloud systems—without compromising agility.

AWS DevOps Course: A Pathway to Cloud Success

In today’s technology-driven world, businesses are constantly seeking ways to streamline software development, enhance collaboration, and accelerate deployment processes. With cloud computing becoming the backbone of modern IT infrastructure, the need for professionals skilled in AWS DevOps has surged. Enrolling in an AWS DevOps course online or AWS DevOps classroom training can help IT professionals gain the expertise needed to automate deployments, manage cloud infrastructure, and optimize workflows. For those looking to build a career in this field, a structured AWS DevOps certification course is essential.

What is AWS DevOps?

AWS DevOps is a set of DevOps practices combined with AWS services to automate and streamline software development, deployment, and operations. With AWS, organizations can implement CI/CD pipelines, Infrastructure as Code (IaC), automated monitoring, and security best practices.

Modules of an AWS DevOps Course

An AWS DevOps certification course, whether taken in Ameerpet or online, typically covers the following essential topics:

Introduction to DevOps and AWS – Understanding the importance of DevOps in cloud environments.

Continuous Integration and Continuous Deployment (CI/CD) – Automating deployment processes using AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy.

Monitoring and Logging – Implementing Amazon CloudWatch and AWS CloudTrail for performance tracking and security management.

Containerization and Orchestration – Deploying and managing applications using Docker and Kubernetes (Amazon EKS).

Automation and Configuration Management – Using AWS Systems Manager and configuration management tools like Ansible, Chef, and Puppet.

Career Benefits of AWS DevOps Training

Completing an AWS DevOps training program in Ameerpet opens doors to numerous career opportunities. Organizations are rapidly adopting AWS DevOps to improve development efficiency and automation. This has led to a high demand for professionals skilled in AWS DevOps, making it one of the most sought-after skill sets in IT.

Additionally, an online AWS DevOps course provides flexibility for professionals looking to upskill while continuing their current jobs. An AWS DevOps certification course also enhances job prospects by validating expertise in AWS DevOps tools and practices.

Online vs. Classroom Training: Which is Better?

Both options provide industry-relevant knowledge, but an AWS DevOps classroom course in Ameerpet may be more beneficial for those who prefer structured, hands-on learning environments.

Whether opting for online or classroom learning, gaining practical experience through projects and case studies is crucial for mastering AWS DevOps skills.

Why Opt for Version IT for AWS DevOps Training?

Choosing the right DevOps training institute in Ameerpet is crucial for building a successful career in cloud computing. Version IT, a leading provider of AWS DevOps training in Ameerpet, offers expert-led programs designed to provide in-depth knowledge and hands-on experience. Their classroom and online AWS DevOps courses are tailored to industry needs, ensuring that students gain real-world skills.

An AWS DevOps certification course in Ameerpet is a valuable investment for IT professionals looking to advance their careers in cloud computing and automation. By mastering AWS DevOps tools and methodologies, professionals can help businesses achieve faster software development, improved collaboration, and seamless deployment processes.

AWS Certified Solutions Architect (SAA C03) – A Comprehensive Guide

The AWS Certified Solutions Architect (SAA C03) certification is one of the most sought-after certifications in the cloud computing industry today. It is designed for individuals who want to validate their skills in designing distributed systems, architectures, and solutions on the AWS platform. As organizations increasingly migrate to the cloud, having an AWS Certified Solutions Architect (SAA C03) certification can significantly boost your career and help you stand out in a competitive job market.

What Is the AWS Certified Solutions Architect (SAA C03) Certification?

The AWS Certified Solutions Architect (SAA C03) certification is the latest iteration of AWS's associate-level Solutions Architect certification. This certification proves your ability to design and deploy scalable, highly available, and fault-tolerant systems on AWS. It tests your proficiency in key areas, including AWS services, cloud architecture design principles, security, cost optimization, and operational excellence.

This exam is aimed at individuals who have experience with the AWS platform and can demonstrate their knowledge of various AWS services and how to use them to design effective solutions. It is recommended that you have at least one year of hands-on experience with AWS before attempting the exam, although this is not a strict requirement.

Why Should You Get AWS Certified Solutions Architect (SAA C03)?

There are several reasons why obtaining the AWS Certified Solutions Architect (SAA C03) certification can be valuable:

1. Industry Recognition

AWS certifications are globally recognized as a standard of excellence. Having the SAA C03 certification demonstrates your expertise and shows employers that you have the skills needed to design robust AWS architectures.

2. Career Growth

The demand for cloud professionals is skyrocketing, with AWS being the leader in cloud computing. Having this certification can open doors to better job opportunities, higher salaries, and increased job security.

3. Improved Skills

Preparing for the AWS Certified Solutions Architect (SAA C03) exam allows you to deepen your understanding of cloud computing concepts and best practices. This knowledge will not only help you pass the exam but also improve your practical skills for real-world solutions.

4. Increased Confidence

Once you pass the exam, you will have the confidence to architect solutions that are reliable, cost-effective, and scalable. The certification serves as proof of your expertise in AWS architecture.

Exam Overview

The AWS Certified Solutions Architect (SAA C03) exam consists of multiple-choice and multiple-response questions covering a wide range of AWS services and architectural best practices. The exam lasts 130 minutes and costs 150 USD.

Key Domains Covered in the Exam:

Design Resilient Architectures – 26%

High Availability

Fault Tolerance

Elasticity

Multi-AZ and Multi-Region Architectures

Design High-Performing Architectures – 24%

Network Design

Compute and Storage Optimization

Performance Monitoring and Scaling

Design Secure Architectures – 30%

Identity and Access Management (IAM)

Data Encryption

Security Best Practices

Design Cost-Optimized Architectures – 20%

Cost Control and Management

AWS Pricing Models and Cost Management Tools

Sample Topics:

Amazon EC2 for compute resources

Amazon S3 for storage

Amazon RDS for managed databases

AWS Lambda for serverless computing

Amazon VPC for network isolation

AWS IAM for security and access management

Amazon CloudWatch for monitoring and logging

Preparation for the AWS Certified Solutions Architect (SAA C03) Exam

To successfully pass the AWS Certified Solutions Architect (SAA C03) exam, it’s crucial to follow a structured preparation plan. Here are some recommended steps:

1. Understand the Exam Blueprint

The first step is to familiarize yourself with the exam blueprint and understand the specific domains and topics covered. This ensures you have a clear idea of what to expect on the exam.

2. Take Online Courses

There are numerous online courses specifically designed to help you prepare for the AWS Certified Solutions Architect (SAA C03) exam. Some well-regarded platforms offering courses include:

A Cloud Guru

Linux Academy

Udemy

AWS Training and Certification

These courses offer both foundational knowledge and more in-depth topics, often including hands-on labs and practice exams.

3. Use AWS Whitepapers and Documentation

AWS provides extensive documentation, including whitepapers that cover architectural best practices, security guidelines, and design principles. Reading these whitepapers is an excellent way to deepen your understanding of AWS services.

4. Get Hands-on Experience

One of the best ways to learn is by doing. Utilize the AWS Free Tier to gain hands-on experience with key AWS services such as EC2, S3, Lambda, VPC, and RDS. Building and experimenting with real-world applications will help reinforce theoretical knowledge.

5. Practice with Mock Exams

Taking mock exams is an effective way to simulate the real exam environment and gauge your knowledge. It will help you identify areas where you need improvement and improve your time management skills.

Benefits of Being AWS Certified Solutions Architect (SAA C03)

1. Better Job Opportunities

Cloud architects with AWS expertise are in high demand. Earning the AWS Certified Solutions Architect (SAA C03) certification can help you land job roles like Solutions Architect, Cloud Architect, or Cloud Consultant.

2. Increased Earning Potential

Certified professionals typically earn higher salaries than their non-certified peers. According to recent salary surveys, AWS-certified individuals can expect significant pay increases due to their advanced knowledge and skills in cloud architecture.

3. Enhanced Job Security

Cloud services are essential to businesses, and AWS is a leader in this space. AWS-certified professionals are crucial for organizations looking to leverage cloud technologies effectively, leading to long-term job security.

4. Access to AWS Certified Community

As an AWS Certified Solutions Architect, you will have access to a network of other AWS-certified professionals, helping you stay up to date with the latest trends, best practices, and career opportunities.

Integrating ROSA Applications with AWS Services (CS221)

As cloud-native architectures become the backbone of modern application deployments, combining the power of Red Hat OpenShift Service on AWS (ROSA) with native AWS services unlocks immense value for developers and DevOps teams alike. In this blog post, we explore how to integrate ROSA-hosted applications with AWS services to build scalable, secure, and cloud-optimized solutions — a key skill set emphasized in the CS221 course.

🚀 What is ROSA?

Red Hat OpenShift Service on AWS (ROSA) is a managed OpenShift platform that runs natively on AWS. It allows organizations to deploy Kubernetes-based applications while leveraging the scalability and global reach of AWS, without managing the underlying infrastructure.

With ROSA, you get:

Fully managed OpenShift clusters

Integration with AWS IAM and billing

Access to AWS services like RDS, S3, DynamoDB, Lambda, etc.

Native CI/CD, container orchestration, and operator support

🧩 Why Integrate ROSA with AWS Services?

ROSA applications often need to interact with services like:

Amazon S3 for object storage

Amazon RDS or DynamoDB for database integration

Amazon SNS/SQS for messaging and queuing

AWS Secrets Manager or SSM Parameter Store for secrets management

Amazon CloudWatch for monitoring and logging

Integration enhances your application’s:

Scalability — Offload data, caching, messaging to AWS-native services

Security — Use IAM roles and policies for fine-grained access control

Resilience — Rely on AWS SLAs for critical components

Observability — Monitor and trace hybrid workloads via CloudWatch and X-Ray

🔐 IAM and Permissions: Secure Integration First

A crucial part of ROSA-AWS integration is managing IAM roles and policies securely.

Steps:

Create IAM Roles for Service Accounts (IRSA):

ROSA supports IAM Roles for Service Accounts, allowing pods to securely access AWS services without hardcoding credentials.

Attach IAM Policy to the Role:

Example: An application that uploads files to S3 will need the following permissions:

    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject"],
      "Resource": "arn:aws:s3:::my-bucket-name/*"
    }

Annotate OpenShift Service Account:

Use oc annotate to associate your OpenShift service account with the IAM role.
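
Besides its permissions, the role needs a trust policy that lets the service account assume it through the cluster's OIDC provider. The sketch below builds such a policy document; the account ID, OIDC provider host, namespace, and service account name are hypothetical placeholders that you would replace with your cluster's values.

```python
import json


def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Trust policy allowing one service account to assume the role.

    oidc_provider is the cluster's OIDC issuer without the https:// prefix.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Restrict the role to a single namespace and service account.
                        f"{oidc_provider}:sub":
                            f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


policy = irsa_trust_policy(
    "123456789012",              # hypothetical AWS account ID
    "oidc.example.com/abc123",   # hypothetical OIDC issuer
    "my-app",                    # hypothetical namespace
    "s3-access",
)
print(json.dumps(policy, indent=2))
```

The StringEquals condition is what stops other pods in the cluster from assuming the same role.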

📦 Common Integration Use Cases

1. Storing App Logs in S3

Use a Fluentd or Loki pipeline to export logs from OpenShift to Amazon S3.

2. Connecting ROSA Apps to RDS

Applications can use standard drivers (PostgreSQL, MySQL) to connect to RDS endpoints — make sure to configure VPC and security groups appropriately.

3. Triggering AWS Lambda from ROSA

Set up an API Gateway or SNS topic to allow OpenShift applications to invoke serverless functions in AWS for batch processing or asynchronous tasks.

4. Using AWS Secrets Manager

Mount secrets securely in pods using CSI drivers or inject them using operators.

🛠 Hands-On Example: Accessing S3 from ROSA Pod

Here’s a quick walkthrough:

Create an IAM Role with S3 permissions.

Associate the role with a Kubernetes service account.

Deploy your pod using that service account.

Use AWS SDK (e.g., boto3 for Python) inside your app to access S3.

    oc create sa s3-access
    oc annotate sa s3-access eks.amazonaws.com/role-arn=arn:aws:iam::<account-id>:role/S3AccessRole

Then reference s3-access in your pod’s YAML.
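
Inside the pod, the application code needs no credentials of its own. The sketch below assumes the cluster injects the AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE environment variables for annotated service accounts, which the AWS SDKs read automatically; the object key and file path are hypothetical, and boto3 is imported lazily so the environment check runs even where it is not installed.

```python
import os

# Variables the SDK uses for web identity credentials.
REQUIRED_VARIABLES = ("AWS_ROLE_ARN", "AWS_WEB_IDENTITY_TOKEN_FILE")


def missing_irsa_variables(environ):
    """Return the names of the expected variables that are absent or empty."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


def upload_report(bucket, key, path):
    """Upload a local file to S3 using whatever role the pod was given."""
    import boto3  # third-party SDK, imported only when an upload is requested
    boto3.client("s3").upload_file(Filename=path, Bucket=bucket, Key=key)


missing = missing_irsa_variables(os.environ)
if missing:
    print("IAM role not injected; missing:", ", ".join(missing))
else:
    print("IAM role detected; ready to upload")
# Inside a correctly configured pod, an upload would look like:
#   upload_report("my-bucket-name", "reports/latest.txt", "/tmp/latest.txt")
```

Checking the environment first gives a clearer error than a failed S3 call when the service account annotation is missing.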

📚 ROSA CS221 Course Highlights

The CS221 course from Red Hat focuses on:

Configuring service accounts and roles

Setting up secure access to AWS services

Using OpenShift tools and operators to manage external integrations

Best practices for hybrid cloud observability and logging

It’s a great choice for developers, cloud engineers, and architects aiming to harness the full potential of ROSA + AWS.

✅ Final Thoughts

Integrating ROSA with AWS services enables teams to build robust, cloud-native applications using best-in-class tools from both Red Hat and AWS. Whether it's persistent storage, messaging, serverless computing, or monitoring — AWS services complement ROSA perfectly.

Mastering these integrations through real-world use cases or formal training (like CS221) can significantly uplift your DevOps capabilities in hybrid cloud environments.

Looking to Learn or Deploy ROSA with AWS?

HawkStack Technologies offers hands-on training, consulting, and ROSA deployment support. For more details, visit www.hawkstack.com.

Amazon Web Services (AWS): The Ultimate Guide

Introduction to Amazon Web Services (AWS)

Amazon Web Services (AWS) is the world’s leading cloud computing platform, offering a vast array of services for businesses and developers. Launched by Amazon in 2006, AWS provides on-demand computing, storage, networking, AI, and machine learning services. Its pay-as-you-go model, scalability, security, and global infrastructure have made it a preferred choice for organizations worldwide.

Evolution of AWS

AWS began as an internal Amazon solution to manage IT infrastructure. It launched publicly in 2006 with Simple Storage Service (S3) and Elastic Compute Cloud (EC2). Over time, AWS introduced services like Lambda, DynamoDB, and SageMaker, making it the most comprehensive cloud platform today.

Key Features of AWS

Scalability: AWS scales based on demand.

Flexibility: Supports various computing, storage, and networking options.

Security: Implements encryption, IAM (Identity and Access Management), and industry compliance.

Cost-Effectiveness: Pay-as-you-go pricing optimizes expenses.

Global Reach: Operates in multiple regions worldwide.

Managed Services: Simplifies deployment with services like RDS and Elastic Beanstalk.

AWS Global Infrastructure

AWS has regions across the globe, each with multiple Availability Zones (AZs) ensuring redundancy, disaster recovery, and minimal downtime. Hosting applications closer to users improves performance and compliance.

Core AWS Services

1. Compute Services

EC2: Virtual servers with various instance types.

Lambda: Serverless computing for event-driven applications.

ECS & EKS: Managed container orchestration services.

AWS Batch: Scalable batch computing.

2. Storage Services

S3: Scalable object storage.

EBS: Block storage for EC2 instances.

Glacier: Low-cost archival storage.

Snowball: Large-scale data migration.

3. Database Services

RDS: Managed relational databases.

DynamoDB: NoSQL database for high performance.

Aurora: High-performance relational database.

Redshift: Data warehousing for analytics.

4. Networking & Content Delivery

VPC: Isolated cloud resources.

Direct Connect: Private network connection to AWS.

Route 53: Scalable DNS service.

CloudFront: Content delivery network (CDN).

5. Security & Compliance

IAM: Access control and user management.

AWS Shield: DDoS protection.

WAF: Web application firewall.

Security Hub: Centralized security monitoring.

6. AI & Machine Learning

SageMaker: ML model development and deployment.

Comprehend: Natural language processing (NLP).

Rekognition: Image and video analysis.

Lex: Chatbot development.

7. Analytics & Big Data

Glue: ETL service for data processing.

Kinesis: Real-time data streaming.

Athena: Query service for S3 data.

Lake Formation: Data lake management.

Benefits of AWS

Lower Costs: Eliminates on-premise infrastructure.

Faster Deployment: Pre-built solutions reduce setup time.

Enhanced Security: Advanced security measures protect data.

Business Agility: Quickly adapt to market changes.

Innovation: Access to AI, ML, and analytics tools.

AWS Use Cases

AWS serves industries such as:

E-commerce: Online stores, payment processing.

Finance: Fraud detection, real-time analytics.

Healthcare: Secure medical data storage.

Gaming: Multiplayer hosting, AI-driven interactions.

Media & Entertainment: Streaming, content delivery.

Education: Online learning platforms.

Getting Started with AWS

Sign Up: Create an AWS account.

Use Free Tier: Experiment with AWS services.

Set Up IAM: Secure access control.

Explore AWS Console: Familiarize yourself with the interface.

Deploy an Application: Start with EC2, S3, or RDS.

Best Practices for AWS

Use IAM Policies: Implement role-based access.

Enable MFA: Strengthen security.

Optimize Costs: Use reserved instances and auto-scaling.

Monitor & Log: Utilize CloudWatch for insights.

Backup & Recovery: Implement automated backups.

AWS Certifications & Careers

AWS certifications validate expertise in cloud computing:

Cloud Practitioner

Solutions Architect (Associate & Professional)

Developer (Associate)

SysOps Administrator

DevOps Engineer

Certified professionals can pursue roles like cloud engineer and solutions architect, making AWS a valuable career skill.

Managing Multi-Region Deployments in AWS

Introduction

Multi-region deployments in AWS help organizations achieve high availability, disaster recovery, reduced latency, and compliance with regional data regulations. This guide covers best practices, AWS services, and strategies for deploying applications across multiple AWS regions.

1. Why Use Multi-Region Deployments?

✅ High Availability & Fault Tolerance

If one region fails, health-checked routing (for example, Route 53 failover records or Global Accelerator) can shift traffic to another.

✅ Disaster Recovery (DR)

Ensure business continuity with backup and failover strategies.

✅ Low Latency & Performance Optimization

Serve users from the nearest AWS region for faster response times.

✅ Compliance & Data Residency

Meet legal requirements by storing and processing data in specific regions.

2. Key AWS Services for Multi-Region Deployments

🏗 Global Infrastructure

Amazon Route 53 → Global DNS routing for directing traffic

AWS Global Accelerator → Improves network latency across regions

AWS Transit Gateway → Connects VPCs across multiple regions

🗄 Data Storage & Replication

Amazon S3 Cross-Region Replication (CRR) → Automatically replicates S3 objects

Amazon Aurora Global Database → Replicates Aurora databases across regions (RDS engines can use cross-region read replicas)

DynamoDB Global Tables → Provides multi-region database access

⚡ Compute & Load Balancing

Amazon EC2 & Auto Scaling → Deploy compute instances across regions

Elastic Load Balancing (ELB) → Distributes traffic across Availability Zones within each region; pair it with Route 53 or Global Accelerator to route between regions

AWS Lambda → Run serverless functions in multiple regions

🛡 Security & Compliance

AWS Identity and Access Management (IAM) → Ensures consistent access controls

AWS Key Management Service (KMS) → Multi-region encryption key management

AWS WAF & Shield → Protects against global security threats

3. Strategies for Multi-Region Deployments

1️⃣ Active-Active Deployment

All regions handle traffic simultaneously, distributing users to the closest region.

✔️ Pros: High availability, low latency

❌ Cons: More complex synchronization, higher costs

Example:

Route 53 with latency-based routing

DynamoDB Global Tables for database synchronization

An ALB in each region behind AWS Global Accelerator
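
The routing decision behind an active-active setup can be illustrated with a small local simulation. This is a conceptual sketch, not how Route 53 or Global Accelerator is implemented; the latency figures and health states are invented.

```python
def pick_region(latency_ms, healthy):
    """Choose the healthy region with the lowest measured latency."""
    candidates = {
        region: ms for region, ms in latency_ms.items() if healthy.get(region, False)
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Invented measurements for a user located in Europe.
latency = {"us-east-1": 120, "eu-west-1": 35}

print(pick_region(latency, {"us-east-1": True, "eu-west-1": True}))   # eu-west-1
print(pick_region(latency, {"us-east-1": True, "eu-west-1": False}))  # us-east-1
```

The second call shows why health checks matter: when the nearest region is unhealthy, users are sent to the next best one instead of receiving errors.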

2️⃣ Active-Passive Deployment

One region serves traffic, while a standby region takes over in case of failure.

✔️ Pros: Simplified operations, cost-effective

❌ Cons: Higher failover time

Example:

Route 53 failover routing

Aurora Global Database, or RDS cross-region read replicas

Cross-region S3 replication for backups

3️⃣ Disaster Recovery (DR) Strategy

Backup & Restore: Store backups in a second region and restore if needed

Pilot Light: Replicate minimal infrastructure in another region, scaling up during failover

Warm Standby: Maintain a scaled-down replica, scaling up on failure

Hot Standby (Active-Passive): Fully operational second region, activated only during failure

4. Example: Multi-Region Deployment with AWS Global Accelerator

Step 1: Set Up Compute Instances

Deploy EC2 instances in two AWS regions (e.g., us-east-1, eu-west-1).

    aws ec2 run-instances --region us-east-1 --image-id ami-xyz --instance-type t3.micro
    aws ec2 run-instances --region eu-west-1 --image-id ami-abc --instance-type t3.micro

Step 2: Configure an Auto Scaling Group

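Auto Scaling groups are regional, so create one per region. The command below covers us-east-1; repeat it for eu-west-1 with that region's launch template and subnet.

    aws autoscaling create-auto-scaling-group --auto-scaling-group-name multi-region-asg \
      --launch-template LaunchTemplateId=lt-xyz \
      --min-size 1 --max-size 3 \
      --vpc-zone-identifier subnet-xyz \
      --region us-east-1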

Step 3: Use AWS Global Accelerator

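Global Accelerator is a global service, but its API is served from us-west-2, so the CLI call must target that region.

    aws globalaccelerator create-accelerator --name MultiRegionAccelerator --region us-west-2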

Step 4: Set Up Route 53 Latency-Based Routing

    aws route53 change-resource-record-sets --hosted-zone-id Z123456 --change-batch file://route53.json

route53.json example:

    {
      "Changes": [{
        "Action": "UPSERT",
        "ResourceRecordSet": {
          "Name": "example.com",
          "Type": "A",
          "SetIdentifier": "us-east-1",
          "Region": "us-east-1",
          "TTL": 60,
          "ResourceRecords": [{ "Value": "203.0.113.1" }]
        }
      }]
    }
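
Latency-based routing needs one record per region, and the example file defines only the us-east-1 record. A small generator can produce the full change batch; in this sketch the eu-west-1 address 203.0.113.2 is a hypothetical documentation IP added for illustration.

```python
import json


def latency_record(name, region, ip_address, ttl=60):
    """One UPSERT change for a latency-based A record in the given region."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "A",
            "SetIdentifier": region,  # must be unique among records with this name
            "Region": region,
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip_address}],
        },
    }


def latency_change_batch(name, endpoints):
    """Build a change batch with one latency record per region."""
    return {
        "Changes": [latency_record(name, region, ip) for region, ip in endpoints.items()]
    }


batch = latency_change_batch(
    "example.com",
    {"us-east-1": "203.0.113.1", "eu-west-1": "203.0.113.2"},  # second IP is hypothetical
)
print(json.dumps(batch, indent=2))
```

The printed JSON can be saved as route53.json and passed to the change-resource-record-sets command from Step 4.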

5. Monitoring & Security Best Practices

✅ AWS CloudTrail & CloudWatch → Monitor activity logs and performance

✅ Amazon GuardDuty → Threat detection across regions

✅ AWS KMS Multi-Region Keys → Encrypt data securely in multiple locations

✅ AWS Config → Ensure compliance across global infrastructure

6. Cost Optimization Tips

💰 Use AWS Savings Plans for EC2 & RDS

💰 Watch Data Transfer Costs: cross-region replication and AWS Global Accelerator traffic are billed per GB

💰 Auto Scale Services to Avoid Over-Provisioning

💰 Use S3 Intelligent-Tiering for Cost-Effective Storage

Conclusion

A well-architected multi-region deployment in AWS ensures high availability, disaster recovery, and improved performance for global users. By leveraging AWS Global Accelerator, Route 53, Aurora Global Database, and Auto Scaling, organizations can build resilient applications with seamless failover capabilities.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/
