#AWS Data Engineering Training in Hyderabad

AWS Data Analytics Training | AWS Data Engineering Training in Bangalore

What’s the Most Efficient Way to Ingest Real-Time Data Using AWS?

AWS provides a suite of services designed to handle high-velocity, real-time data ingestion efficiently. In this article, we explore the best approaches and services AWS offers to build a scalable, real-time data ingestion pipeline.

Understanding Real-Time Data Ingestion

Real-time data ingestion involves capturing, processing, and storing data as it is generated, with minimal latency. This is essential for applications such as fraud detection, IoT monitoring, live analytics, and real-time dashboards.

Key Challenges in Real-Time Data Ingestion

Scalability – Handling large volumes of streaming data without performance degradation.

Latency – Ensuring minimal delay in data processing and ingestion.

Data Durability – Preventing data loss and ensuring reliability.

Cost Optimization – Managing costs while maintaining high throughput.

Security – Protecting data in transit and at rest.

AWS Services for Real-Time Data Ingestion

1. Amazon Kinesis

Kinesis Data Streams (KDS): A highly scalable service for ingesting real-time streaming data from various sources.

Kinesis Data Firehose (now named Amazon Data Firehose): A fully managed service that delivers streaming data to destinations such as Amazon S3, Amazon Redshift, or Amazon OpenSearch Service.

Kinesis Data Analytics (renamed Amazon Managed Service for Apache Flink in 2023): A service for processing and analyzing streaming data with SQL or Apache Flink applications.

Use Case: Ideal for processing logs, telemetry data, clickstreams, and IoT data.
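As a rough sketch of a Kinesis Data Streams producer, the Python below batches JSON events into `PutRecords` calls of at most 500 records, which is the per-request limit. It assumes the `client` passed in is a boto3 Kinesis client (`boto3.client("kinesis")`); the function names and the `user_id` partition-key field are hypothetical choices for illustration, and retries are left out.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def to_kinesis_entries(events: Iterable[Dict[str, Any]], key_field: str = "user_id") -> List[Dict[str, Any]]:
    """Serialize events into the Data/PartitionKey entries that PutRecords expects."""
    entries = []
    for event in events:
        entries.append(
            {
                "Data": json.dumps(event).encode("utf-8"),
                # Records sharing a partition key go to the same shard, so per-key order is kept
                "PartitionKey": str(event[key_field]),
            }
        )
    return entries


def chunked(items: List[Any], size: int) -> Iterator[List[Any]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start : start + size]


def send_events(client, stream_name: str, events: Iterable[Dict[str, Any]]) -> int:
    """Send events in batches and return how many records Kinesis reported as failed."""
    failed = 0
    for batch in chunked(to_kinesis_entries(events), MAX_RECORDS_PER_CALL):
        response = client.put_records(StreamName=stream_name, Records=batch)
        # PutRecords is not all-or-nothing: individual records can fail (e.g. throttling)
        failed += response.get("FailedRecordCount", 0)
    return failed
```

A call might look like `send_events(boto3.client("kinesis"), "clickstream", events)` (stream name hypothetical). A production producer would also retry the entries whose per-record result carries an `ErrorCode`.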

2. Amazon Managed Streaming for Apache Kafka (Amazon MSK)

Amazon MSK provides a fully managed Apache Kafka service, allowing seamless data streaming and ingestion at scale.

Use Case: Suitable for applications requiring low-latency event streaming, message brokering, and high availability.

3. AWS IoT Core

For IoT applications, AWS IoT Core enables secure and scalable real-time ingestion of data from connected devices.

Use Case: Best for real-time telemetry, device status monitoring, and sensor data streaming.
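Devices usually publish to AWS IoT Core over MQTT with an AWS IoT Device SDK. To keep the example dependency-free, this sketch instead assumes a boto3 `iot-data` client, whose `publish(topic=..., qos=..., payload=...)` method sends a message to an IoT Core topic. The `devices/<id>/telemetry` topic layout and the reading fields are hypothetical.

```python
import json
import time
from typing import Any, Dict, Optional


def telemetry_topic(device_id: str) -> str:
    """Hypothetical topic convention: one telemetry topic per device."""
    # '/' would add topic levels; '+' and '#' are MQTT wildcards and are not valid in a publish topic
    if not device_id or any(ch in device_id for ch in "/+#"):
        raise ValueError(f"invalid device id: {device_id!r}")
    return f"devices/{device_id}/telemetry"


def build_reading(device_id: str, temperature_c: float, humidity_pct: float, ts: Optional[float] = None) -> Dict[str, Any]:
    """Assemble one sensor reading; `ts` defaults to the current Unix time."""
    return {
        "device_id": device_id,
        "temperature_c": round(temperature_c, 2),
        "humidity_pct": round(humidity_pct, 2),
        "ts": int(ts if ts is not None else time.time()),
    }


def publish_reading(client, reading: Dict[str, Any]) -> None:
    """Publish a reading through an iot-data style client."""
    client.publish(
        topic=telemetry_topic(reading["device_id"]),
        qos=1,  # QoS 1: at-least-once delivery
        payload=json.dumps(reading).encode("utf-8"),
    )
```

An IoT rule subscribed to `devices/+/telemetry` could then forward these messages to Kinesis, Lambda, or S3.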

4. Amazon S3 with Event Notifications

Amazon S3 can be used as a real-time ingestion target when paired with event notifications, triggering AWS Lambda, SNS, or SQS to process newly added data.

Use Case: Ideal for ingesting and processing batch data with near real-time updates.
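For the S3 pattern, a Lambda function subscribed to the bucket's event notifications receives a `Records` list describing each new object. The sketch below shows only the parsing step; the handler's "processing" is a placeholder print, and the example bucket and keys used with it are made up.

```python
import json
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def extract_new_objects(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Pull bucket, key and size out of an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue  # ignore deletes and other event types
        s3 = record["s3"]
        objects.append(
            {
                "bucket": s3["bucket"]["name"],
                # Object keys arrive URL-encoded (a space becomes '+')
                "key": unquote_plus(s3["object"]["key"]),
                "size": s3["object"].get("size", 0),
            }
        )
    return objects


def lambda_handler(event, context):
    objects = extract_new_objects(event)
    for obj in objects:
        # Placeholder: a real pipeline would read the object (e.g. with s3.get_object) and process it
        print(json.dumps(obj))
    return {"processed": len(objects)}
```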

5. AWS Lambda for Event-Driven Processing

AWS Lambda can process incoming data in real time by responding to events from Kinesis, S3, DynamoDB Streams, and other sources.

Use Case: Best for serverless event processing without managing infrastructure.
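When Lambda reads from a Kinesis stream, each record's payload arrives base64-encoded under `record["kinesis"]["data"]`. The sketch below decodes the records and, assuming `ReportBatchItemFailures` is enabled on the event source mapping, returns the sequence numbers of failed records so that Lambda retries from the earliest failure instead of the whole batch. `process_event` is a hypothetical stand-in for business logic.

```python
import base64
import json
from typing import Any, Dict, List


def process_event(payload: Any) -> None:
    """Hypothetical business logic; raises on input it cannot handle."""
    if "event_type" not in payload:
        raise ValueError("missing event_type")


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    failures = []
    for record in event.get("Records", []):
        kinesis = record["kinesis"]
        try:
            payload = json.loads(base64.b64decode(kinesis["data"]))
            process_event(payload)
        except (ValueError, KeyError, TypeError):
            # Report this record as failed; Lambda resumes from the lowest failed sequence number
            failures.append({"itemIdentifier": kinesis["sequenceNumber"]})
    # Only honored when ReportBatchItemFailures is enabled on the event source mapping
    return {"batchItemFailures": failures}
```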

6. Amazon DynamoDB Streams

DynamoDB Streams captures real-time changes to a DynamoDB table and can integrate with AWS Lambda for further processing.

Use Case: Effective for real-time notifications, analytics, and microservices.
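DynamoDB Streams delivers item changes in DynamoDB's typed JSON (for example `{"S": "o-1"}` or `{"N": "19.99"}`), and `NewImage` is only present when the stream view type includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`). The sketch uses a deliberately simplified decoder for a few scalar types; boto3 also ships `boto3.dynamodb.types.TypeDeserializer` for the full type set. The order fields in the usage are hypothetical.

```python
from decimal import Decimal
from typing import Any, Dict, List


def from_ddb(attr: Dict[str, Any]) -> Any:
    """Simplified decoder for a few DynamoDB scalar types (S, N, BOOL, NULL)."""
    type_tag, value = next(iter(attr.items()))
    if type_tag == "S":
        return value
    if type_tag == "N":
        return Decimal(value)  # numbers arrive as strings; Decimal avoids float rounding
    if type_tag == "BOOL":
        return value
    if type_tag == "NULL":
        return None
    raise ValueError(f"unsupported DynamoDB type: {type_tag}")


def summarize_changes(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Turn stream records into (action, plain item) pairs."""
    changes = []
    for record in event.get("Records", []):
        ddb = record["dynamodb"]
        image = ddb.get("NewImage") or ddb.get("OldImage") or {}
        changes.append(
            {
                "action": record["eventName"],  # INSERT, MODIFY or REMOVE
                "item": {name: from_ddb(attr) for name, attr in image.items()},
            }
        )
    return changes


def lambda_handler(event, context):
    for change in summarize_changes(event):
        if change["action"] == "INSERT":
            print(f"new item: {change['item']}")  # e.g. send a notification here
    return {"records": len(event.get("Records", []))}
```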

Building an Efficient AWS Real-Time Data Ingestion Pipeline

Step 1: Identify Data Sources and Requirements

Determine the data sources (IoT devices, logs, web applications, etc.).

Define latency requirements (milliseconds, seconds, or near real-time?).

Understand data volume and processing needs.
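Data volume translates directly into capacity. For Kinesis Data Streams in provisioned mode, each shard accepts up to 1 MB/s or 1,000 records/s of writes, so the shard count is driven by whichever limit you hit first. The sketch below does that arithmetic; the 25% headroom factor is an assumption, not an AWS recommendation, and it treats 1 MB as 1,024 KB.

```python
import math

SHARD_WRITE_MB_PER_S = 1.0        # per-shard write limit in MB/s
SHARD_WRITE_RECORDS_PER_S = 1000  # per-shard write limit in records/s


def estimate_shards(records_per_s: float, avg_record_kb: float, headroom: float = 1.25) -> int:
    """Provisioned-mode shard estimate with a safety margin for traffic spikes."""
    mb_per_s = records_per_s * avg_record_kb / 1024
    by_bytes = mb_per_s / SHARD_WRITE_MB_PER_S
    by_count = records_per_s / SHARD_WRITE_RECORDS_PER_S
    return max(1, math.ceil(max(by_bytes, by_count) * headroom))
```

For example, 5,000 records/s of 2 KB each is about 9.8 MB/s, so throughput in bytes (not record count) dominates and `estimate_shards(5000, 2)` returns 13.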

Step 2: Choose the Right AWS Service

For high-throughput, scalable ingestion → Amazon Kinesis or MSK.

For IoT data ingestion → AWS IoT Core.

For event-driven processing → Lambda with DynamoDB Streams or S3 Events.
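The rules of thumb above can be encoded as a small helper, mainly to make the decision explicit and reviewable. This is a hypothetical sketch, not an official AWS decision tree; the `needs_kafka_api` criterion (reusing existing Kafka producers and consumers) is an added assumption for choosing MSK over Kinesis.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    source: str                    # "iot", "table_changes", "files", or other streams (logs, clickstreams, ...)
    needs_kafka_api: bool = False  # assumption: existing Kafka clients should be reused


def suggest_service(req: Requirements) -> str:
    """Map workload requirements to the services named in Step 2."""
    if req.source == "iot":
        return "AWS IoT Core"
    if req.source == "table_changes":
        return "DynamoDB Streams + AWS Lambda"
    if req.source == "files":
        return "S3 Event Notifications + AWS Lambda"
    # Remaining case: high-throughput streams such as logs, clickstreams, or telemetry
    return "Amazon MSK" if req.needs_kafka_api else "Amazon Kinesis Data Streams"
```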

Step 3: Implement Real-Time Processing and Transformation

Use Kinesis Data Analytics or AWS Lambda to filter, transform, and analyze data.

Store processed data in Amazon S3, Redshift, or OpenSearch Service for further analysis.
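One common way (assumed here) to filter and transform data in flight is a Lambda transformation function attached to a Firehose delivery stream. Firehose passes the function base64-encoded records and expects each one back with its `recordId`, a `result` of `Ok`, `Dropped`, or `ProcessingFailed`, and base64 `data`. The sensor fields and the heartbeat filter are hypothetical.

```python
import base64
import json
from typing import Any, Dict


def transform(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical transformation: keep a few fields and convert Celsius to Fahrenheit."""
    return {
        "device_id": payload["device_id"],
        "temperature_f": round(payload["temperature_c"] * 9 / 5 + 32, 1),
        "ts": payload["ts"],
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            if payload.get("status") == "heartbeat":
                # Filter out noise that should never reach storage
                result, data = "Dropped", record["data"]
            else:
                # Newline-delimited JSON keeps records separable once Firehose concatenates them in S3
                line = json.dumps(transform(payload)) + "\n"
                result, data = "Ok", base64.b64encode(line.encode("utf-8")).decode("ascii")
        except (ValueError, KeyError, TypeError, AttributeError):
            result, data = "ProcessingFailed", record["data"]
        output.append({"recordId": record["recordId"], "result": result, "data": data})
    return {"records": output}
```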

Step 4: Optimize for Performance and Cost

Absorb traffic spikes by using Kinesis Data Streams on-demand capacity mode (or scaling provisioned shards) and MSK storage auto scaling or MSK Serverless.

Use Kinesis Data Firehose to buffer and batch data before writing it to S3; fewer, larger objects mean fewer PUT requests and lower costs.

Implement data compression and partitioning strategies in storage.
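Firehose can handle buffering, compression, and prefixing for you; the sketch below only illustrates what those settings produce. It assumes a hypothetical `events/` prefix, a Hive-style `year=/month=/day=/hour=` layout (which lets query engines such as Athena skip irrelevant partitions), and gzip-compressed newline-delimited JSON.

```python
import gzip
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def partitioned_key(prefix: str, ts: float, batch_id: str) -> str:
    """Build a Hive-style, date-partitioned S3 key for one batch (UTC)."""
    dt = datetime.fromtimestamp(ts, tz=timezone.utc)
    return f"{prefix}/year={dt:%Y}/month={dt:%m}/day={dt:%d}/hour={dt:%H}/{batch_id}.json.gz"


def compress_batch(events: List[Dict[str, Any]]) -> bytes:
    """Serialize events as compact newline-delimited JSON and gzip the result."""
    body = "\n".join(json.dumps(e, separators=(",", ":")) for e in events).encode("utf-8")
    return gzip.compress(body)
```

For example, a batch stamped at Unix time 1700000000 lands under `events/year=2023/month=11/day=14/hour=22/`.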

Step 5: Secure and Monitor the Pipeline

Use AWS Identity and Access Management (IAM) for fine-grained access control.

Monitor ingestion performance with Amazon CloudWatch and AWS X-Ray.
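To make Step 5 concrete, the sketch builds two artifacts as plain Python dictionaries: a least-privilege IAM policy that lets a producer write to a single stream, and keyword arguments for CloudWatch's `put_metric_alarm` that alert when consumers fall behind (`GetRecords.IteratorAgeMilliseconds` rising). The stream name, account ID, one-minute threshold, and five evaluation periods are illustrative assumptions.

```python
import json
from typing import Any, Dict


def producer_policy(region: str, account_id: str, stream_name: str) -> Dict[str, Any]:
    """Least-privilege IAM policy: the producer may only write to one stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["kinesis:PutRecord", "kinesis:PutRecords", "kinesis:DescribeStreamSummary"],
                "Resource": f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}",
            }
        ],
    }


def iterator_age_alarm(stream_name: str, threshold_ms: int = 60_000) -> Dict[str, Any]:
    """Arguments for CloudWatch put_metric_alarm: fire when consumers lag behind the stream."""
    return {
        "AlarmName": f"{stream_name}-iterator-age-high",
        "Namespace": "AWS/Kinesis",
        "MetricName": "GetRecords.IteratorAgeMilliseconds",
        "Dimensions": [{"Name": "StreamName", "Value": stream_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 5,
        "Threshold": float(threshold_ms),
        "ComparisonOperator": "GreaterThanThreshold",
    }


if __name__ == "__main__":
    print(json.dumps(producer_policy("us-east-1", "123456789012", "clickstream"), indent=2))
```

The alarm could be created with `boto3.client("cloudwatch").put_metric_alarm(**iterator_age_alarm("clickstream"))`.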

Best Practices for AWS Real-Time Data Ingestion

Choose the Right Service: Select an AWS service that aligns with your data velocity and business needs.

Use Serverless Architectures: Reduce operational overhead with Lambda and managed services like Kinesis Firehose.

Enable Auto-Scaling: Scale Kinesis capacity (on-demand mode or shard scaling) and provision enough Kafka partitions to spread load across brokers and consumers.

Minimize Costs: Optimize data batching, compression, and retention policies.

Ensure Security and Compliance: Implement encryption, access controls, and AWS security best practices.

Conclusion

AWS provides a comprehensive set of services to efficiently ingest real-time data for various use cases, from IoT applications to big data analytics. By leveraging Amazon Kinesis, AWS IoT Core, MSK, Lambda, and DynamoDB Streams, businesses can build scalable, low-latency, and cost-effective data pipelines. The key to success is choosing the right services, optimizing performance, and ensuring security to handle real-time data ingestion effectively.

Would you like more details on a specific AWS service or implementation example? Let me know!

Visualpath is a leading provider of AWS Data Engineering training, offering a Data Engineering course in Hyderabad with experienced, real-time trainers and real-time projects that help students gain practical and interview skills. We provide 24/7 access to recorded sessions. For more information, call +91-7032290546.

For more information about AWS Data Engineering training:

Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html

AWS Data Engineering online training | AWS Data Engineer

AWS Data Engineering: An Overview and Its Importance

Introduction

In today's data-driven world, organizations generate vast amounts of data daily, and managing, processing, and analyzing that data effectively is crucial for decision-making and business growth. AWS Data Engineering plays a significant role here, transforming raw data into valuable insights using Amazon Web Services (AWS) tools and technologies. This article explores AWS Data Engineering, its components, and why it is essential for modern enterprises.

What is AWS Data Engineering?

AWS Data Engineering refers to the process of designing, building, and managing scalable and secure data pipelines using AWS cloud services. It involves the extraction, transformation, and loading (ETL) of data from various sources into a centralized storage or data warehouse for analysis and reporting. Data engineers leverage AWS tools such as AWS Glue, Amazon Redshift, AWS Lambda, Amazon S3, AWS Data Pipeline, and Amazon EMR to streamline data processing and management.
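To make the extract, transform, and load steps concrete, the local sketch below uses only the Python standard library: an in-memory CSV stands in for a source system and SQLite stands in for the warehouse. In an AWS pipeline those roles would typically be played by services such as S3, Glue, and Redshift; the table, columns, and cleaning rules here are made up for illustration.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterable, List, Tuple

RAW_CSV = """order_id,customer,amount,currency
1001,alice,25.50,USD
1002,bob,,USD
1003,carol,40.00,usd
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Extract: parse raw CSV rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: Iterable[Dict[str, str]]) -> List[Tuple[int, str, float, str]]:
    """Transform: drop incomplete rows and normalize types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # a required field is missing
        cleaned.append((int(row["order_id"]), row["customer"].title(), float(row["amount"]), row["currency"].upper()))
    return cleaned


def load(conn: sqlite3.Connection, rows: List[Tuple[int, str, float, str]]) -> None:
    """Load: upsert into the target table so reruns do not create duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT customer, amount, currency FROM orders ORDER BY order_id").fetchall())
```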

Key Components of AWS Data Engineering

AWS offers a comprehensive set of tools and services to support data engineering. Here are some of the essential components:

Amazon S3 (Simple Storage Service): A scalable object storage service used to store raw and processed data securely.

AWS Glue: A fully managed ETL (Extract, Transform, Load) service that automates data preparation and transformation.

Amazon Redshift: A cloud data warehouse that enables efficient querying and analysis of large datasets.

AWS Lambda: A serverless computing service used to run functions in response to events, often used for real-time data processing.

Amazon EMR (Elastic MapReduce): A service for processing big data using frameworks like Apache Spark and Hadoop.

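AWS Data Pipeline: A managed service for automating data movement and transformation between AWS services and on-premises data sources. It is no longer available to new customers; AWS points new workloads to services such as AWS Glue, AWS Step Functions, or Amazon MWAA.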

Amazon Kinesis: A family of real-time streaming services that allows businesses to collect, process, and analyze data as it arrives.

Why is AWS Data Engineering Important?

AWS Data Engineering is essential for businesses for several key reasons:

Scalability and Performance: AWS provides scalable solutions that allow organizations to handle large volumes of data efficiently. Services like Amazon Redshift and EMR ensure high-performance data processing and analysis.

Cost-Effectiveness: AWS offers pay-as-you-go pricing models, eliminating the need for large upfront investments in infrastructure. Businesses can optimize costs by paying only for the resources they use.

Security and Compliance: AWS provides robust security features, including encryption and identity and access management (IAM), along with compliance programs that help customers meet requirements such as GDPR and HIPAA.

Seamless Integration: AWS services integrate with third-party tools and on-premises data sources, making it easier to build and manage data pipelines.

Real-Time Data Processing: AWS supports real-time data processing with services like Amazon Kinesis and AWS Lambda, enabling businesses to react to events and insights as they happen.

Data-Driven Decision Making: With powerful data engineering tools, organizations can transform raw data into actionable insights, leading to improved business strategies and customer experiences.

Conclusion

AWS Data Engineering is a critical discipline for modern enterprises looking to leverage data for growth and innovation. By utilizing AWS's vast array of services, organizations can efficiently manage data pipelines, enhance security, reduce costs, and improve decision-making. As the demand for data engineering continues to rise, businesses investing in AWS Data Engineering gain a competitive edge in the ever-evolving digital landscape.

Visualpath is the best software online training institute in Hyderabad, offering complete AWS Data Engineering training worldwide. You will get the best course at an affordable cost.

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html

Visit Blog: https://visualpathblogs.com/category/aws-data-engineering-with-data-analytics/

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

AWS Data Engineer Training | AWS Data Engineer Online Course.

AccentFuture offers an expert-led online AWS Data Engineer training program designed to help you master data integration, analytics, and cloud solutions on Amazon Web Services (AWS). This comprehensive course covers essential topics such as data ingestion, storage solutions, ETL processes, real-time data processing, and data analytics using key AWS services like S3, Glue, Redshift, Kinesis, and more. The curriculum is structured into modules that include hands-on projects and real-world applications, ensuring practical experience in building and managing data pipelines on AWS. Whether you're a beginner or an IT professional aiming to enhance your cloud skills, this training provides the knowledge and expertise needed to excel in cloud data engineering.

For more information and to enroll, visit AccentFuture's official course page.

Internships in Hyderabad for B.Tech Students – Why LI-MAT Soft Solutions is the Best Platform to Launch Your Tech Career

What Are the Best Internships in Hyderabad for B.Tech Students?

The best internships are those that:

Offer real-time project experience

Help you develop domain-specific skills

Are recognized by industry recruiters

Provide certifications and resume value

At LI-MAT, students get access to all of this and more. They offer industry-curated internships that help B.Tech students gain:

Hands-on exposure

Mentorship from experts

Placement-ready skills

Whether you’re from CSE, IT, ECE, or EEE, LI-MAT provides internships that are practical, structured, and designed to bridge the gap between college and industry.

Can ECE B.Tech Students Get Internships in Embedded Systems or VLSI in Hyderabad?

Absolutely! And LI-MAT makes it easy.

ECE students often struggle to find genuine core domain internships, but LI-MAT Soft Solutions offers specialized programs for:

Embedded Systems

IoT and Sensor-Based Projects

VLSI Design & Simulation

Robotics and Automation

These internships include hardware-software integration, use of tools like Arduino, Raspberry Pi, and VHDL, and even PCB design modules. So yes, if you’re from ECE, LI-MAT is your one-stop platform for core domain internships in Hyderabad.

Are There Internships in Hyderabad for IT and Software Engineering Students?

Definitely. LI-MAT offers software-focused internships that are tailor-made for IT and software engineering students. These include:

Web Development (Frontend + Backend)

Full Stack Development

Java Programming (Core & Advanced)

Python and Django

Cloud Computing with AWS & DevOps

Data Science & Machine Learning

Mobile App Development (Android/iOS)

The internships are live, interactive, and project-driven, giving you the edge you need to stand out during placements and technical interviews.

What Domain-Specific Internships are Popular in Hyderabad for B.Tech Students?

B.Tech students in Hyderabad are increasingly looking for internships that align with industry trends. Some of the most in-demand domains include:

Cyber Security & Ethical Hacking

Artificial Intelligence & Deep Learning

Data Science & Analytics

IoT & Embedded Systems

VLSI & Electronics Design

Web and App Development

Cloud & DevOps

LI-MAT offers certified internship programs in all these domains, with practical exposure, tools, and mentoring to help you become industry-ready.

Courses Offered at LI-MAT Soft Solutions

Here’s a quick look at the most popular internship courses offered by LI-MAT for B.Tech students:

Cyber Security & Ethical Hacking

Java (Core + Advanced)

Python with Django/Flask

Machine Learning & AI

Data Science with Python

Cloud Computing with AWS

Web Development (HTML, CSS, JS, React, Node)

Mobile App Development

Embedded Systems & VLSI

Each course includes:

Industry-relevant curriculum

Real-time projects

Expert mentorship

Certification

Placement and resume support

Whether you're in your 2nd, 3rd, or final year, you can enroll and gain the skills that tech companies in Hyderabad are actively seeking.

Why LI-MAT Soft Solutions?

What makes LI-MAT stand out from other institutes is its focus on real outcomes:

Hands-on project experience

Interview prep and soft skills training

Dedicated placement support

Beginner to advanced-level paths

They aren’t just about teaching—they’re about transforming students into tech professionals.

Conclusion

If you're searching for internships in Hyderabad for B.Tech students, don’t settle for generic listings and unpaid gigs. Go with a trusted institute that offers real skills, real projects, and real value.

LI-MAT Soft Solutions is your gateway to quality internships in Hyderabad—whether you’re from CSE, IT, or ECE. With cutting-edge courses, project-driven learning, and expert guidance, it’s everything you need to kickstart your tech career the right way.

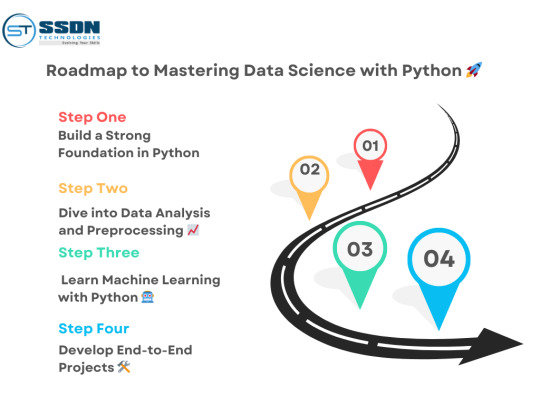

Why Python is the Best Choice for Data Analytics in 2025

In 2025, data analytics continues to drive business decisions, making it one of the most sought-after skills in the tech industry. Among the many programming languages available, Python stands out as the best choice for data analytics. With its simplicity, extensive libraries, and strong community support, Python empowers data analysts to process, visualize, and extract insights from data efficiently. If you are looking to advance your career in data analytics, enrolling in Python training in Hyderabad can be a game-changer.

Why Python for Data Analytics?

1. Easy to Learn and Use

Python's simple syntax makes it beginner-friendly, allowing professionals from non-programming backgrounds to learn it quickly. Compared with more verbose languages, Python code is concise and readable, which makes data analysis easier and faster to write.

2. Rich Ecosystem of Libraries

Python provides a vast range of libraries specifically designed for data analytics, including:

Pandas – For data manipulation and analysis

NumPy – For numerical computing

Matplotlib & Seaborn – For data visualization

Scikit-learn – For machine learning

TensorFlow & PyTorch – For AI-driven analytics

These libraries simplify complex tasks and help analysts derive meaningful insights from large datasets.
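As a small illustration of how Pandas handles a typical analysis task in a few lines, the sketch below derives a revenue column and aggregates it per region. The sales data is made up, and the example assumes Pandas is installed.

```python
import pandas as pd

# Made-up sample data: units sold and unit price per region
sales = pd.DataFrame(
    {
        "region": ["North", "South", "North", "East", "South", "East"],
        "units": [120, 80, 95, 60, 110, 75],
        "price": [9.5, 10.0, 9.5, 11.0, 10.0, 11.0],
    }
)

# Vectorized column arithmetic: no explicit Python loop
sales["revenue"] = sales["units"] * sales["price"]

# Group by region, aggregate, and rank by total revenue (highest first)
summary = (
    sales.groupby("region", as_index=False)
    .agg(total_units=("units", "sum"), total_revenue=("revenue", "sum"))
    .sort_values("total_revenue", ascending=False)
    .reset_index(drop=True)
)
print(summary)
```

With this data, North leads with 215 units and 2,042.5 in revenue, ahead of South and East.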

3. Versatility and Scalability

Python is not just limited to data analytics; it is widely used in web development, AI, and automation. This versatility allows professionals to integrate data analytics with other technologies, making it a preferred choice for businesses.

4. Strong Community Support

With a vast community of developers, Python users have access to extensive documentation, forums, and online tutorials. If you encounter any issues while working with Python, you can quickly find solutions and best practices through community discussions.

5. Integration with Big Data and Cloud Technologies

Python seamlessly integrates with big data technologies like Hadoop and Apache Spark, making it an excellent choice for handling massive datasets. Additionally, Python is compatible with cloud platforms such as AWS and Azure, which are essential for modern data analytics solutions.

Career Opportunities in Python-Based Data Analytics

The demand for skilled data analysts proficient in Python is on the rise. Companies across industries are looking for professionals who can analyze and interpret data effectively. Some of the top job roles include:

Data Analyst

Business Intelligence Analyst

Data Engineer

Machine Learning Engineer

AI Specialist

If you want to build a successful career in data analytics, enrolling in a Python course in Hyderabad will equip you with the necessary skills and industry-relevant knowledge.

How to Learn Python for Data Analytics?

To master Python for data analytics, follow these steps:

Enroll in a Structured Course – Join a Python training program in Hyderabad that offers hands-on projects and real-world case studies.

Practice with Real Datasets – Work with publicly available datasets to gain practical experience.

Master Data Visualization – Learn tools like Matplotlib and Seaborn to present data insights effectively.

Explore Machine Learning – Get familiar with Scikit-learn and TensorFlow to enhance your data analysis skills.

Build a Portfolio – Showcase your projects on platforms like GitHub and Kaggle to attract job opportunities.

Conclusion

Python continues to dominate the data analytics industry in 2025, offering ease of use, extensive libraries, and strong career prospects. Whether you are a beginner or an experienced professional, mastering Python can unlock numerous opportunities in data analytics. If you are ready to take the next step, consider enrolling in Python classes in Hyderabad and start your journey toward a successful career in data analytics.

Website: www.ssdntech.com | Contact Us: +91 9999111686

Data Science Training Course

Data Science is one of the most dynamic and high-demand fields in today’s digital era. With the exponential growth of data across industries, the need for skilled data professionals has never been greater. If you’re considering a career in Data Science, enrolling in a Data Science course in Hyderabad can provide you with the right skills, knowledge, and opportunities to thrive in this field.

Why Choose Data Science?

Data Science is transforming industries by enabling data-driven decision-making, predictive analytics, and automation. Here’s why it’s a great career choice:

High Demand: Companies across sectors are hiring data scientists, analysts, and engineers.

Versatility: Data Science skills are applicable in healthcare, finance, retail, marketing, manufacturing, and more.

Future-Proof Career: With advancements in AI, ML, and Big Data, the demand for data professionals will only grow.

What You’ll Learn in a Data Science Training Course

A well-structured Data Science course covers a wide range of topics to equip you with both foundational and advanced skills. Here’s a breakdown of what you can expect:

Programming for Data Science

Master Python and R, the most widely used programming languages in Data Science.

Learn libraries like Pandas, NumPy, Matplotlib, and ggplot2 for data manipulation and visualization.

Use Jupyter Notebook for interactive coding and analysis.

Data Analysis and Visualization

Understand data cleaning, preprocessing, and feature engineering.

Explore tools like Tableau, Power BI, and Seaborn to create insightful visualizations.
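Data cleaning, preprocessing, and feature engineering often come down to a handful of Pandas operations. The sketch below, on made-up customer data and assuming Pandas is installed, removes duplicate rows, imputes missing values, discards rows with unparseable dates, and derives two new features; the imputation and threshold choices are illustrative, not prescriptive.

```python
import pandas as pd

# Made-up raw data with a duplicate row, missing values, and a bad date
raw = pd.DataFrame(
    {
        "customer_id": [1, 2, 2, 3, 4],
        "age": [34, 41, 41, None, 51],
        "signup_date": ["2024-01-05", "2024-02-10", "2024-02-10", "2024-03-15", "not a date"],
        "spend": [120.0, 80.5, 80.5, None, 300.0],
    }
)

clean = (
    raw.drop_duplicates()
    .assign(
        age=lambda df: df["age"].fillna(df["age"].median()),  # impute missing age with the median
        spend=lambda df: df["spend"].fillna(0.0),              # treat missing spend as zero
        signup_date=lambda df: pd.to_datetime(df["signup_date"], errors="coerce"),  # bad dates become NaT
    )
    .dropna(subset=["signup_date"])
    .assign(
        signup_month=lambda df: df["signup_date"].dt.month,  # feature: month of signup
        high_value=lambda df: df["spend"] > 100,             # feature: hypothetical high-value flag
    )
    .reset_index(drop=True)
)
print(clean)
```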

Machine Learning and AI

Learn supervised and unsupervised learning algorithms.

Work on regression, classification, clustering, and deep learning models.

Gain hands-on experience with AI-powered predictive analytics.
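To show the difference between supervised and unsupervised learning in practice, the sketch below trains a classifier on labeled data and evaluates it on a held-out split, then clusters the same features without using the labels. It assumes scikit-learn is installed and uses its bundled Iris dataset; the model choices are illustrative.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Supervised: classification, evaluated on data the model never saw during training
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42, stratify=y)
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
clf.fit(X_train, y_train)
accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Classification accuracy: {accuracy:.2f}")

# Unsupervised: clustering that ignores the labels entirely
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_labels = kmeans.fit_predict(X)
cluster_sizes = sorted(int((cluster_labels == k).sum()) for k in range(3))
print(f"Cluster sizes: {cluster_sizes}")
```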

Big Data and Cloud Computing

Handle large datasets using tools like Hadoop, Spark, and SQL.

Learn cloud platforms like AWS, Google Cloud (GCP), and Azure for data storage and processing.

Real-World Projects

Apply your skills to live projects in domains like healthcare, finance, e-commerce, and marketing.

Build a portfolio to showcase your expertise to potential employers.

Why Hyderabad for Data Science?

Hyderabad is a thriving tech hub with a growing demand for data professionals. Here’s why it’s an excellent place to learn Data Science:

Top Training Institutes: Hyderabad boasts world-class institutes offering comprehensive Data Science programs.

Strong Job Market: Companies like Google, Amazon, Microsoft, TCS, and Deloitte have a significant presence in the city.

Networking Opportunities: Attend tech meetups, hackathons, and industry events to connect with professionals and stay updated on trends.

Career Opportunities in Data Science

After completing a Data Science course, you can explore various roles, such as:

Data Analyst: Analyze data to provide actionable insights.

Machine Learning Engineer: Develop and deploy AI/ML models.

Data Engineer: Build and manage data pipelines.

AI Specialist: Work on NLP, computer vision, and deep learning.

Business Intelligence Analyst: Transform data into strategic insights for businesses.

How to Get Started

Choose the Right Course: Look for a program that offers a balance of theory, hands-on training, and real-world projects.

Build a Strong Foundation: Focus on mastering Python, SQL, statistics, and machine learning concepts.

Work on Projects: Practice with real datasets and build a portfolio to showcase your skills.

Stay Updated: Follow industry trends, read research papers, and explore new tools and technologies.

Apply for Jobs: Start with internships or entry-level roles to gain industry experience.

Final Thoughts

Data Science is a rewarding career path with endless opportunities for growth and innovation. By enrolling in a Data Science course in Hyderabad, you can gain the skills and knowledge needed to excel in this field. Whether you’re a beginner or looking to upskill, now is the perfect time to start your Data Science journey.

🚀 Take the first step today and unlock a world of opportunities in Data Science!

Snowflake Training in Hyderabad

With 16 years of experience, we provide expert-led Snowflake training in Hyderabad, focusing on real-time projects and 100% placement assistance.

In today's data-driven world, businesses rely on cloud data platforms to manage and analyze vast amounts of information. Snowflake is one of the most popular cloud-based data warehousing solutions, known for its scalability, security, and performance. If you're looking to build expertise in Snowflake, Hyderabad offers some of the best training programs to help you master this technology.

Why Learn Snowflake?

Snowflake is widely used for data analytics, business intelligence, and big data applications. Some key benefits include:

✅ Cloud-Native – Works seamlessly on AWS, Azure, and Google Cloud.

✅ Scalability – Handles large datasets efficiently with auto-scaling.

✅ Security – Advanced encryption and access controls ensure data protection.

✅ Ease of Use – Simple SQL-based interface for data manipulation.

Best Snowflake Training in Hyderabad

If you're searching for top Snowflake training institutes in Hyderabad, look for courses that offer:

✔ Hands-on training with real-world projects.

✔ Expert instructors with industry experience.

✔ Certification guidance to boost career opportunities.

✔ Flexible learning options – online and classroom training.

Career Opportunities After Snowflake Training

With the rising demand for cloud data engineers and Snowflake developers, certified professionals can land high-paying jobs as:

🔹 Data Engineers

🔹 Cloud Architects

🔹 Big Data Analysts

🔹 Database Administrators

Get Started Today!

If you want to advance your career in cloud data warehousing, enrolling in a Snowflake training program in Hyderabad is a great step forward.

For digital marketing solutions, visit T Digital Agency.

SRE Online Training in Hyderabad | Visualpath

Site Reliability Engineering (SRE) Training: Set Up Effective Monitoring for Various System Types

Introduction:

Site Reliability Engineering (SRE) is a discipline that blends software engineering with systems engineering to ensure the reliability, scalability, and performance of systems in production. One of its key aspects is setting up effective monitoring to track the health and performance of different types of systems. Proper monitoring helps teams identify issues proactively and mitigate them before they affect users, keeping systems running smoothly. To master these concepts, professionals often pursue Site Reliability Engineering Training or an SRE Certification Course to deepen their understanding of how to apply monitoring strategies effectively.

In this article, we will discuss how to set up effective monitoring for different systems, such as cloud infrastructure, microservices, and legacy systems, and highlight some best practices that can be used to ensure optimal performance and reliability. Whether you are taking an SRE Course or enrolling in Site Reliability Engineering Online Training, understanding the types of monitoring tools and techniques available can help in making informed decisions to enhance your organization’s operations.

Understanding the Importance of Monitoring

Monitoring is the foundation of Site Reliability Engineering (SRE) as it provides insights into the system’s health, performance, and availability. Without proper monitoring, organizations are left in the dark about how their systems are performing, making it difficult to detect issues in real time. SRE practitioners typically use monitoring as a tool to track metrics, logs, and events, which are crucial to ensuring system reliability.

Monitoring enables Site Reliability Engineers to:

Detect and troubleshoot system failures quickly.

Identify performance bottlenecks and optimize them.

Ensure that Service Level Objectives (SLOs) are being met.

Provide insights for continuous improvement.

By taking an SRE Certification Course or Site Reliability Engineering Online Training, individuals learn how to monitor different types of systems effectively, each requiring specific tools and approaches to ensure reliability.

Types of Systems and Monitoring Approaches

The approach to monitoring will vary depending on the type of system being monitored. Below are some key types of systems and how monitoring is applied to each:

1. Cloud Infrastructure Monitoring

With the rise of cloud computing, monitoring cloud infrastructure has become a critical aspect of SRE. Cloud environments, such as AWS, Azure, and Google Cloud, consist of dynamic and scalable resources that require continuous monitoring. Common challenges in cloud monitoring include auto-scaling, resource allocation, and network performance.

To set up effective monitoring for cloud infrastructure, the following approaches are essential:

Metric-based monitoring: Cloud service providers offer metrics such as CPU utilization, memory usage, disk I/O, and network traffic. These metrics should be tracked to assess the health of cloud resources.

Alerting and auto-scaling: Alerts should be set up based on defined thresholds to detect resource exhaustion or performance degradation. Auto-scaling can be enabled to ensure that cloud resources can scale up or down as required.

Distributed tracing: For microservices architectures running on cloud infrastructure, distributed tracing tools like OpenTelemetry or Datadog are used to track requests as they move through various services.

Cloud monitoring tools such as Prometheus and Grafana, along with the native tools offered by cloud providers (for example Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring), can be used to monitor cloud systems effectively.
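Threshold alerts work best when a single spike does not page anyone. The sketch below fires only after a metric stays above its threshold for several consecutive samples, similar in spirit to the `for:` clause in Prometheus alerting rules (which uses a duration rather than a sample count). The CPU rule and the sample values in the usage are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class ThresholdRule:
    metric: str
    threshold: float
    for_samples: int  # consecutive breaching samples required before the alert fires


def evaluate(rule: ThresholdRule, samples: Iterable[Tuple[int, float]]) -> List[int]:
    """Return the timestamps at which the alert starts firing."""
    fired_at = []
    streak = 0
    firing = False
    for ts, value in samples:
        if value > rule.threshold:
            streak += 1
            if streak >= rule.for_samples and not firing:
                firing = True
                fired_at.append(ts)
        else:
            streak = 0
            firing = False  # alert resolves; a later sustained breach can fire again
    return fired_at
```

With `ThresholdRule("cpu_percent", 80, 3)`, a single reading of 85% is ignored, while three consecutive readings above 80% trigger the alert.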

2. Microservices Monitoring

Microservices architectures are increasingly popular due to their scalability and flexibility, but they come with unique monitoring challenges. A microservices system consists of numerous small, loosely coupled services that communicate with each other over a network. This adds complexity to monitoring, requiring specialized tools and approaches to track the performance and health of the system.

Effective monitoring of microservices involves:

Service discovery and health checks: Each microservice should expose health endpoints (e.g., HTTP or TCP) that monitoring systems can query. Regular checks can help detect service failures before they impact users.

Centralized logging: In microservices environments, logging is spread across multiple services, which can make troubleshooting difficult. Centralized logging tools like the ELK stack (Elasticsearch, Logstash, and Kibana) or Splunk allow logs to be aggregated and analyzed in a central location.

Distributed tracing: Distributed tracing helps to visualize the entire flow of requests across various services. It provides a detailed view of latency, bottlenecks, and dependencies within the microservices architecture. Tools such as Jaeger and Zipkin can be integrated into microservices for tracing.
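Tracing only works if every service forwards the trace context it receives. Instrumentation libraries (OpenTelemetry, Jaeger, Zipkin clients) do this automatically; the stdlib sketch below just illustrates the idea using the W3C Trace Context `traceparent` header (`00-<32-hex trace-id>-<16-hex parent-id>-<2-hex flags>`): keep the incoming trace ID, mint a new span ID for this hop, or start a fresh trace when the header is missing or invalid. The helper names are hypothetical.

```python
import re
import secrets
from typing import Dict, Optional, Tuple

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header: Optional[str]) -> Optional[Tuple[str, str, str]]:
    """Return (trace_id, parent_id, flags), or None if the header is absent or invalid."""
    if not header:
        return None
    match = TRACEPARENT_RE.match(header.strip())
    if not match:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid per the spec
    return trace_id, parent_id, flags


def outgoing_headers(incoming: Dict[str, str]) -> Dict[str, str]:
    """Continue the caller's trace (or start a new one) for a downstream request."""
    parsed = parse_traceparent(incoming.get("traceparent"))
    if parsed:
        trace_id, _, flags = parsed
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, marked as sampled
    span_id = secrets.token_hex(8)  # this service's span becomes the downstream parent
    return {"traceparent": f"00-{trace_id}-{span_id}-{flags}"}
```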

Monitoring microservices ensures that each component can be tracked independently while also allowing a holistic view of the entire system.
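A health check has two sides: the service exposes an endpoint, and the monitoring system polls it. The stdlib sketch below shows both for a hypothetical `/healthz` path; a real endpoint would usually also verify critical dependencies (database, queues) before answering 200.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Serves a hypothetical /healthz endpoint for one service."""

    def do_GET(self) -> None:
        if self.path == "/healthz":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging in this example


def check_health(url: str, timeout: float = 2.0) -> bool:
    """Poller side: healthy only if the endpoint answers 200 within the timeout."""
    # Bypass any proxy settings from the environment: health checks should hit the service directly
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # connection refused, timeout, or an HTTP error status
        return False


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0: let the OS pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print("healthz:", check_health(f"http://127.0.0.1:{port}/healthz"))
    print("missing:", check_health(f"http://127.0.0.1:{port}/missing"))
    server.shutdown()
    server.server_close()
```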

3. Legacy Systems Monitoring

Legacy systems, often composed of monolithic architectures, present a different challenge when it comes to monitoring. These systems tend to be more rigid, with fewer integration points, and often lack the scalability and flexibility of modern systems. However, monitoring these systems is still crucial to ensuring that they continue to perform well and meet SLOs.

Effective monitoring for legacy systems includes:

System resource monitoring: For legacy systems, monitoring CPU, memory, disk usage, and network traffic is critical. These traditional system metrics can help detect performance bottlenecks.

Event-based monitoring: Legacy systems often rely on log files to report errors and events. Event-based monitoring tools such as Nagios or Zabbix can help detect potential issues from these logs.

Application performance monitoring (APM): APM tools such as Dynatrace or New Relic can help provide detailed insights into the performance of legacy applications, highlighting inefficiencies and identifying areas for optimization.
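As an illustration of event-based monitoring, the sketch below is a Nagios-style check written in Python. It follows the Nagios plugin convention of printing one status line and exiting with 0 (OK), 1 (WARNING), 2 (CRITICAL), or 3 (UNKNOWN). The `ERROR`/`FATAL` keywords, the thresholds, and the script name `check_app_log.py` are assumptions to adapt to the legacy application's log format.

```python
import re
import sys

# Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

# Assumed log keywords; adjust to the legacy application's log format.
ERROR_PATTERN = re.compile(r"\b(ERROR|FATAL)\b")


def check_log_lines(lines, warn: int = 1, crit: int = 5) -> tuple:
    """Count matching lines and map the count to a Nagios status code and message."""
    errors = sum(1 for line in lines if ERROR_PATTERN.search(line))
    if errors >= crit:
        return CRITICAL, f"CRITICAL - {errors} error lines"
    if errors >= warn:
        return WARNING, f"WARNING - {errors} error lines"
    return OK, f"OK - {errors} error lines"


def main(path: str) -> int:
    try:
        with open(path, encoding="utf-8", errors="replace") as fh:
            status, message = check_log_lines(fh)
    except OSError as exc:
        status, message = UNKNOWN, f"UNKNOWN - cannot read {path}: {exc}"
    print(message)
    return status


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("UNKNOWN - usage: check_app_log.py <logfile>")
        sys.exit(UNKNOWN)
    sys.exit(main(sys.argv[1]))
```

This version rescans the whole file on every run; a production check would usually remember the last read offset or only inspect recent entries.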

Although legacy systems present unique challenges, proper monitoring can ensure their continued reliability and help reduce downtime.

4. Hybrid System Monitoring

Many organizations today rely on a combination of cloud, on-premises, and hybrid systems. Monitoring such diverse infrastructures requires a unified approach that integrates different monitoring tools into a central platform. Hybrid systems often require customized monitoring solutions that can cover the cloud, on-premises systems, and everything in between.

To monitor hybrid systems effectively:

Centralized monitoring platforms: Tools like Prometheus, Datadog, and Grafana can be used to collect data from both cloud and on-premises resources.

Unified dashboards: Dashboards should provide a holistic view of all systems, making it easier to monitor multiple systems in a single pane of glass.

Integration of monitoring tools: It's important to integrate monitoring tools that specialize in different systems (e.g., Datadog for cloud, Nagios for on-premises) to gain comprehensive insights.

Hybrid environments require coordination between different monitoring systems and strategies to ensure reliability.

Best Practices for Effective Monitoring

To ensure the success of your monitoring system, the following best practices should be adhered to:

Define clear SLOs and SLIs: Before setting up monitoring, it’s important to define Service Level Objectives (SLOs) and Service Level Indicators (SLIs). This allows monitoring to focus on critical metrics that affect user experience and business outcomes.

Use a layered approach: A layered monitoring approach ensures that you monitor the system at multiple levels: infrastructure, application, and user experience.

Automate alerting: Automation helps in reducing the manual effort needed to track issues. Set up automated alerts for any metric or event that crosses a threshold, ensuring that SREs can take action promptly.

Regularly review and improve: Monitoring is not a one-time setup. Regularly review your monitoring setup to ensure that it remains relevant as the system evolves. Continuously improve your monitoring strategy to keep up with new technologies and challenges.
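To connect SLOs with automated alerting, the sketch below computes an availability SLI (good requests divided by total requests) and the error-budget burn rate, that is, how fast failures are consuming the budget the SLO allows. The 99.9% target and the alert threshold of 2.0 are assumed example values, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class SloStatus:
    sli: float        # measured availability: good requests / total requests
    burn_rate: float  # 1.0 means errors consume the budget exactly as fast as allowed
    alert: bool


def evaluate_slo(good: int, total: int, slo_target: float = 0.999,
                 burn_rate_threshold: float = 2.0) -> SloStatus:
    """Compute an availability SLI and decide whether the error-budget burn rate needs an alert."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    if total == 0:
        return SloStatus(sli=1.0, burn_rate=0.0, alert=False)
    sli = good / total
    error_budget = 1.0 - slo_target  # fraction of requests allowed to fail
    burn_rate = (1.0 - sli) / error_budget
    return SloStatus(sli=sli, burn_rate=burn_rate, alert=burn_rate >= burn_rate_threshold)


if __name__ == "__main__":
    # 300 failures out of 100,000 requests against a 99.9% SLO burns the budget about 3x too fast.
    print(evaluate_slo(good=99_700, total=100_000))
```

A burn rate of 1.0 means the service would use exactly its whole error budget over the SLO period; alerting on burn rate rather than on raw error counts ties the alert to user-facing impact.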

Conclusion

Setting up effective monitoring for different types of systems is a crucial part of Site Reliability Engineering (SRE). Whether it is cloud infrastructure, microservices, or legacy systems, each system requires specific strategies and tools to ensure it is running optimally. By undergoing Site Reliability Engineering Training, professionals can acquire the skills necessary to implement best practices and leverage the right monitoring tools for different environments.

Enrolling in an SRE Course or Site Reliability Engineering Online Training equips individuals with the necessary expertise to monitor systems efficiently and meet SLOs. Additionally, completing an SRE Certification Course provides validation of the knowledge and skills required for success in this field. Effective monitoring leads to better system reliability, performance, and overall customer satisfaction, which is the ultimate goal of Site Reliability Engineering Training.

Visualpath is the Best Software Online Training Institute in Hyderabad, offering complete Site Reliability Engineering (SRE) training worldwide. You will get the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit Blog: https://visualpathblogs.com/

Visit: https://www.visualpath.in/online-site-reliability-engineering-training.html

#Site Reliability Engineering Training#SRE Course#Site Reliability Engineering Online Training#SRE Training Online#Site Reliability Engineering Training in Hyderabad#SRE Online Training in Hyderabad#SRE Courses Online#SRE Certification Course

AWS Data Engineering | AWS Data Engineer online course

Key AWS Services Used in Data Engineering

AWS data engineering solutions are essential for organizations looking to process, store, and analyze vast datasets efficiently in the era of big data. Amazon Web Services (AWS) provides a wide range of cloud services designed to support data engineering tasks such as ingestion, transformation, storage, and analytics. These services are crucial for building scalable, robust data pipelines that handle massive datasets with ease. Below are the key AWS services commonly used in data engineering:

1. AWS Glue

AWS Glue is a fully managed extract, transform, and load (ETL) service that helps automate data preparation for analytics. It provides a serverless environment for data integration, allowing engineers to discover, catalog, clean, and transform data from various sources. Glue supports Python and Scala scripts and integrates seamlessly with AWS analytics tools like Amazon Athena and Amazon Redshift.
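The extract, transform, load pattern that Glue automates can be sketched in plain Python. This is not Glue's API (Glue jobs typically run PySpark scripts with Glue's own libraries); it only illustrates the three stages on a small CSV sample. The column names `order_id`, `country`, and `amount` are hypothetical.

```python
import csv
import io
import json


def extract(csv_text: str) -> list:
    """Extract: parse raw CSV text into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list) -> list:
    """Transform: drop rows without an order_id, trim and normalize strings, cast amount."""
    cleaned = []
    for row in rows:
        order_id = (row.get("order_id") or "").strip()
        if not order_id:
            continue
        cleaned.append({
            "order_id": order_id,
            "country": (row.get("country") or "").strip().upper(),
            "amount": float(row.get("amount") or 0),
        })
    return cleaned


def load(rows: list) -> str:
    """Load: serialize to newline-delimited JSON, ready to write to an S3 prefix."""
    return "\n".join(json.dumps(r) for r in rows)


if __name__ == "__main__":
    raw = "order_id,country,amount\n 1 , in ,10.5\n,us,3\n2,Us,\n"
    print(load(transform(extract(raw))))
```

Newline-delimited JSON is one of the formats that query engines such as Athena can read directly from S3, which is why the load step emits it here.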

2. Amazon S3 (Simple Storage Service)

Amazon S3 is a highly scalable object storage service used for storing raw, processed, and structured data. It supports data lakes, enabling data engineers to store vast amounts of unstructured and structured data. With features like versioning, lifecycle policies, and integration with AWS Lake Formation, S3 is a critical component in modern data architectures.
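Lifecycle policies are defined as JSON rules. The sketch below builds a rule in the structure accepted by the S3 `PutBucketLifecycleConfiguration` API (exposed in boto3 as `put_bucket_lifecycle_configuration`), moving objects under an assumed `raw/` prefix to cheaper storage classes before expiring them. The prefix, day counts, and bucket name are illustrative assumptions.

```python
import json


def data_lake_lifecycle(prefix: str = "raw/", ia_days: int = 30,
                        glacier_days: int = 90, expire_days: int = 365) -> dict:
    """Build an S3 lifecycle configuration that tiers, then expires, objects under `prefix`."""
    # S3 requires objects to be at least 30 days old before moving to STANDARD_IA.
    if ia_days < 30 or not ia_days < glacier_days < expire_days:
        raise ValueError("expected 30 <= ia_days < glacier_days < expire_days")
    return {
        "Rules": [
            {
                "ID": "tier-and-expire-raw-data",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_days},
                # On versioned buckets, also remove old object versions after 30 days.
                "NoncurrentVersionExpiration": {"NoncurrentDays": 30},
            }
        ]
    }


if __name__ == "__main__":
    config = data_lake_lifecycle()
    print(json.dumps(config, indent=2))
    # To apply it (requires boto3 and credentials; the bucket name is hypothetical):
    # boto3.client("s3").put_bucket_lifecycle_configuration(
    #     Bucket="example-data-lake", LifecycleConfiguration=config)
```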

3. Amazon Redshift

Amazon Redshift is a fully managed, petabyte-scale data warehouse solution designed for high-performance analytics. It allows organizations to run complex analytical queries using SQL and, with streaming ingestion from Kinesis Data Streams or Amazon MSK, to analyze data in near real time. With features like Redshift Spectrum, users can query data directly from S3 without loading it into the warehouse, improving efficiency and reducing costs.

4. Amazon Kinesis

Amazon Kinesis provides real-time data streaming and processing capabilities. It includes multiple services:

Kinesis Data Streams for ingesting real-time data from sources like IoT devices and applications.

Kinesis Data Firehose (now named Amazon Data Firehose) for delivering streaming data directly into AWS storage and analytics services.

Kinesis Data Analytics (now named Amazon Managed Service for Apache Flink) for real-time analytics using SQL or Apache Flink applications.

Kinesis is widely used for log analysis, fraud detection, and real-time monitoring applications.
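Kinesis Data Streams routes each record by hashing its partition key with MD5 into a 128-bit integer and sending it to the shard whose hash key range contains that value. The sketch below models this routing locally, assuming a stream whose shards split the hash key space evenly; it does not call AWS, and the device keys are hypothetical.

```python
import hashlib

MAX_HASH_KEY = 2**128 - 1  # hash keys span the unsigned 128-bit range


def even_shard_ranges(shard_count: int) -> list:
    """Split the hash key space into `shard_count` contiguous, equal-sized ranges (assumed layout)."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    step = (MAX_HASH_KEY + 1) // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        # The last shard absorbs any remainder so the whole space is covered.
        end = MAX_HASH_KEY if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def shard_for_key(partition_key: str, ranges: list) -> int:
    """Return the index of the shard whose range contains MD5(partition_key) as a 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest, "big")
    for index, (start, end) in enumerate(ranges):
        if start <= hash_key <= end:
            return index
    raise ValueError("hash key not covered by any shard range")


if __name__ == "__main__":
    shards = even_shard_ranges(4)
    for key in ["device-1", "device-2", "device-3"]:
        print(key, "-> shard", shard_for_key(key, shards))
```

Because the same partition key always maps to the same shard, records for one key (for example, one IoT device) stay in order, but a single very busy key can overload its shard.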

5. AWS Lambda

AWS Lambda is a serverless computing service that allows engineers to run code in response to events without managing infrastructure. It integrates well with data pipelines by processing and transforming incoming data from sources like Kinesis, S3, and DynamoDB before storing or analyzing it.
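A minimal sketch of a Python Lambda handler that processes a batch from a Kinesis event source follows. Kinesis delivers each record's payload base64-encoded under `Records[].kinesis.data`; the `transform` step and the payload fields (`device_id`, `temp_f`) are hypothetical. The `batchItemFailures` response is only honored when the event source mapping has partial batch responses (`ReportBatchItemFailures`) enabled.

```python
import base64
import json


def transform(payload: dict) -> dict:
    """Hypothetical transformation: convert a Fahrenheit reading to Celsius."""
    return {
        "device_id": payload["device_id"],
        "temp_c": round((payload["temp_f"] - 32) * 5 / 9, 2),
    }


def lambda_handler(event, context):
    transformed, failures = [], []
    for record in event.get("Records", []):
        kinesis = record["kinesis"]
        try:
            # Each Kinesis record arrives base64-encoded in the "data" field.
            payload = json.loads(base64.b64decode(kinesis["data"]))
            transformed.append(transform(payload))
        except (ValueError, KeyError, TypeError):
            # Report the failed record by sequence number so Lambda can retry from it.
            failures.append({"itemIdentifier": kinesis["sequenceNumber"]})
    # A real pipeline would write `transformed` to S3, DynamoDB, or another sink here.
    print(json.dumps({"processed": len(transformed), "failed": len(failures)}))
    return {"batchItemFailures": failures}
```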

6. Amazon DynamoDB

Amazon DynamoDB is a NoSQL database service designed for fast and scalable key-value and document storage. It is commonly used for real-time applications, session management, and metadata storage in data pipelines. Its automatic scaling and built-in security features make it ideal for modern data engineering workflows.
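For session management, a common pattern is to store each session as an item with an expiry timestamp and enable DynamoDB Time to Live (TTL) on that attribute. The sketch below builds such an item in DynamoDB's low-level attribute-value format; the table name, key names (`pk`, `sk`), and the `expires_at` attribute are assumptions.

```python
import time
from typing import Optional


def session_item(user_id: str, session_id: str, ttl_seconds: int = 3600,
                 now: Optional[float] = None) -> dict:
    """Build a session record in DynamoDB's low-level attribute-value format."""
    now = time.time() if now is None else now
    return {
        "pk": {"S": f"USER#{user_id}"},        # partition key groups all sessions of one user
        "sk": {"S": f"SESSION#{session_id}"},  # sort key distinguishes individual sessions
        # TTL attributes must be Numbers holding a Unix epoch timestamp in seconds;
        # DynamoDB deletes the item some time after that moment passes.
        "expires_at": {"N": str(int(now) + ttl_seconds)},
    }


if __name__ == "__main__":
    item = session_item("u-123", "s-456")
    print(item)
    # To write it (requires boto3 and credentials; the table name is hypothetical):
    # boto3.client("dynamodb").put_item(TableName="sessions", Item=item)
```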

7. AWS Data Pipeline

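AWS Data Pipeline is a data workflow orchestration service that automates the movement and transformation of data across AWS services. It supports scheduled data workflows and integrates with S3, RDS, DynamoDB, and Redshift, helping engineers manage complex data processing tasks. Note that AWS Data Pipeline is no longer available to new customers; AWS points new workloads to services such as AWS Glue, AWS Step Functions, or Amazon Managed Workflows for Apache Airflow (MWAA).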

8. Amazon EMR (Elastic MapReduce)

Amazon EMR is a cloud-based big data platform that allows users to run large-scale distributed data processing frameworks like Apache Hadoop, Spark, and Presto. It is used for processing large datasets, performing machine learning tasks, and running batch analytics at scale.

9. AWS Step Functions

AWS Step Functions helps in building serverless workflows by coordinating AWS services such as Lambda, Glue, and DynamoDB. It simplifies the orchestration of data processing tasks and helps ensure fault-tolerant, scalable workflows for data engineering pipelines.
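Step Functions workflows are written in the Amazon States Language (ASL), a JSON format. The sketch below builds a small definition that runs a Glue job, waits for it to finish, retries on failure, and then invokes a Lambda function. The Glue job and function names are hypothetical, and the `validate_transitions` helper is only a local sanity check, not part of any AWS API.

```python
import json


def etl_state_machine(glue_job: str, notify_function: str) -> dict:
    return {
        "Comment": "Run a Glue ETL job, then notify downstream consumers via Lambda",
        "StartAt": "RunGlueJob",
        "States": {
            "RunGlueJob": {
                "Type": "Task",
                # The .sync suffix makes Step Functions wait until the Glue job finishes.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job},
                "Retry": [{"ErrorEquals": ["States.ALL"], "IntervalSeconds": 30,
                           "MaxAttempts": 2, "BackoffRate": 2.0}],
                "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "PipelineFailed"}],
                "Next": "Notify",
            },
            "Notify": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": notify_function, "Payload.$": "$"},
                "End": True,
            },
            "PipelineFailed": {"Type": "Fail", "Error": "GlueJobFailed",
                               "Cause": "The Glue job failed after retries"},
        },
    }


def validate_transitions(definition: dict) -> None:
    """Local sanity check: StartAt and every Next/Catch target must name an existing state."""
    states = definition["States"]
    targets = [definition["StartAt"]]
    for state in states.values():
        if "Next" in state:
            targets.append(state["Next"])
        targets.extend(c["Next"] for c in state.get("Catch", []))
    missing = [t for t in targets if t not in states]
    if missing:
        raise ValueError(f"unknown states: {missing}")


if __name__ == "__main__":
    definition = etl_state_machine("clean-orders", "notify-downstream")
    validate_transitions(definition)
    print(json.dumps(definition, indent=2))
```

The serialized JSON is what you would supply as the state machine definition, for example in the console or through boto3's `create_state_machine`.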

10. Amazon Athena

Amazon Athena is an interactive query service that allows users to run SQL queries directly on data stored in Amazon S3. Because it queries data in place, it reduces the need for separate ETL and loading steps and is widely used for ad-hoc querying and analytics on structured and semi-structured data.

Conclusion

AWS provides a powerful ecosystem of services that cater to different aspects of data engineering. From data ingestion with Kinesis to transformation with Glue, storage with S3, and analytics with Redshift and Athena, AWS enables scalable and cost-efficient data solutions. By leveraging these services, data engineers can build resilient, high-performance data pipelines that support modern analytics and machine learning workloads.

Visualpath is the Best Software Online Training Institute in Hyderabad, offering complete AWS Data Engineering Training worldwide. You will get the best course at an affordable cost.

#AWS Data Engineering Course#AWS Data Engineering Training#AWS Data Engineer Certification#Data Engineering course in Hyderabad#AWS Data Engineering online training#AWS Data Engineering Training Institute#AWS Data Engineering Training in Hyderabad#AWS Data Engineer online course

GCP DevOps Online Training | GCP DevOps Training in Hyderabad

Mastering Multi-Cloud Strategies with GCP DevOps Training

In today's fast-evolving tech landscape, businesses are increasingly leveraging GCP DevOps Training to streamline workflows and adopt multi-cloud strategies. Google Cloud Platform (GCP) has emerged as a preferred choice for enterprises seeking flexibility, scalability, and innovation in their DevOps pipelines. With the right approach to multi-cloud strategies, organizations can optimize performance, improve resilience, and reduce costs while maintaining a competitive edge.

Understanding Multi-Cloud Strategies

A multi-cloud strategy involves the use of services from multiple cloud providers, such as Google Cloud, AWS, and Azure. This approach allows organizations to select the best-in-class services tailored to specific needs, avoid vendor lock-in, and enhance operational resilience. GCP, with its robust tools and seamless DevOps capabilities, plays a pivotal role in helping organizations implement multi-cloud strategies efficiently.

GCP DevOps Training in Hyderabad provides a comprehensive understanding of GCP's role in multi-cloud environments. Through hands-on training, professionals learn how to deploy and manage Kubernetes clusters, implement Infrastructure as Code (IaC), and integrate CI/CD pipelines across multiple platforms. This knowledge is essential for ensuring that applications run smoothly in diverse cloud environments.

Why GCP DevOps is Ideal for Multi-Cloud

Seamless Integration with Other Cloud Platforms: GCP supports hybrid and multi-cloud architectures with tools like Anthos, which enables consistent operations across environments. Anthos simplifies managing applications on GCP, AWS, and on-premises infrastructure, making it a cornerstone of multi-cloud strategies.

Unified Monitoring and Management: Tools such as Stackdriver (now part of Google Cloud Operations Suite) provide centralized monitoring and logging capabilities, ensuring visibility across all cloud environments. This is a critical aspect covered in GCP DevOps Certification Training, where professionals learn to leverage these tools for effective multi-cloud management.

Enhanced Security Features: Security remains a major concern in multi-cloud setups. GCP addresses this with features like Identity and Access Management (IAM), Shielded VMs, and encryption by default, ensuring robust protection for applications and data. Professionals undergoing GCP DevOps Training in Hyderabad gain hands-on experience in implementing these security features to safeguard multi-cloud deployments.

Key Benefits of Multi-Cloud Strategies with GCP DevOps

Cost Optimization: Leverage the pricing advantages of different providers while avoiding over-provisioning.

High Availability: Reduce the risk of downtime by distributing workloads across multiple cloud platforms.

Scalability: Scale applications dynamically across multiple platforms without service interruptions.

Flexibility: Choose the best services from each cloud provider, such as GCP's machine learning tools or AWS's storage solutions.

Through GCP DevOps Certification Training, professionals can master these benefits, enabling businesses to maximize their cloud investments.

Implementing Multi-Cloud Strategies with GCP DevOps

Adopting a multi-cloud approach requires careful planning and the right skill set. The following steps highlight how GCP DevOps supports this process:

Planning and Architecture: Create a strong framework that guarantees seamless interaction between different cloud providers. Training programs, such as GCP DevOps Training, emphasize designing scalable and resilient architectures for multi-cloud environments.

Deployment and Management: Use Terraform (HashiCorp's IaC tool, which provides a Google Cloud provider) for Infrastructure as Code and Google Kubernetes Engine (GKE) for container orchestration. These tools enable consistent deployments across clouds, reducing complexity.

Continuous Integration and Delivery (CI/CD): Implement CI/CD pipelines with GCP Cloud Build, allowing automated testing and deployments. This ensures rapid and reliable application updates, a core focus in GCP DevOps Training in Hyderabad.

Monitoring and Optimization: Use GCP’s monitoring tools to track performance and identify areas for improvement. Regular monitoring is crucial for maintaining efficiency and avoiding potential issues in multi-cloud setups.

Challenges and Solutions in Multi-Cloud with GCP DevOps

Adopting a multi-cloud strategy is not without challenges. Common issues include interoperability between platforms, increased complexity, and managing security across diverse environments. However, professionals trained through GCP DevOps Certification Training are equipped to tackle these challenges. They learn best practices for configuring seamless integrations, automating processes, and maintaining a unified security posture.

Conclusion

The future of cloud computing lies in multi-cloud strategies, and mastering this approach with GCP DevOps Training is a game-changer for IT professionals and organizations alike. Google Cloud Platform, with its robust tools and innovative features, simplifies the complexities of multi-cloud management.

Whether you're looking to enhance your skills or implement a resilient cloud strategy for your organization, GCP DevOps Training in Hyderabad provides the expertise needed to excel. With proper training and a well-structured plan, businesses can leverage the power of multi-cloud to drive innovation, improve agility, and achieve operational excellence.

Visualpath is the Leading and Best Software Online Training Institute in Hyderabad, offering complete GCP DevOps Online Training worldwide. You will get the best course at an affordable cost.

Visit https://www.visualpath.in/online-gcp-devops-certification-training.html

#GCP DevOps Training#GCP DevOps Training in Hyderabad#GCP DevOps Certification Training#GCP DevOps Online Training#DevOps GCP Online Training in Hyderabad#GCP DevOps Online Training Institute#DevOps on Google Cloud Platform Online Training#DevOps GCP#GCP DevOps#Google#Google Cloud

Amazon QuickSight Training | AWS QuickSight Training in Hyderabad

Amazon QuickSight Training: 10 QuickSight Tips & Tricks to Boost Your Data Analysis Skills

Amazon QuickSight is a powerful business intelligence (BI) tool that empowers organizations to create interactive dashboards and gain valuable insights from their data. Whether you’re new to Amazon QuickSight or looking to enhance your existing skills, mastering its advanced features can significantly improve your data analysis capabilities. This guide focuses on ten essential tips and tricks to elevate your expertise in Amazon QuickSight Training and make the most of this tool.

1. Understanding the Basics with Amazon QuickSight Training

To effectively use QuickSight, start by understanding its fundamentals. Amazon QuickSight Training provides a thorough overview of key functionalities such as connecting data sources, creating datasets, and building visualizations. By mastering the basics, you set the foundation for leveraging more advanced features and gaining meaningful insights.

2. Optimize Data Preparation

One of the first steps in data analysis is data preparation. Use Amazon QuickSight's built-in tools to clean, transform, and model your data before creating visualizations. Features like calculated fields and data filters are especially useful for creating precise datasets. AWS QuickSight Online Training covers these capabilities in detail, helping you streamline the preparation process.

3. Mastering Visual Customization

Effective data presentation is critical for analysis. With QuickSight, you can create highly customized visuals that align with your specific needs. Learn to adjust colors, axes, and data labels to make your charts more intuitive and visually appealing. Amazon QuickSight Training emphasizes the importance of tailoring visuals to improve storytelling and engagement.

4. Utilize Advanced Calculations

QuickSight supports advanced calculations, such as percentiles, running totals, and custom metrics. Leveraging these features allows you to derive deeper insights from your data. AWS QuickSight Training provides step-by-step guidance on creating advanced formulas, which can save time and add value to your analysis.
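QuickSight provides calculated-field functions such as `runningSum` and `percentileCont` for these calculations. The plain-Python sketch below is not QuickSight syntax; it only shows what a running total and a continuous (linearly interpolated) percentile compute, using hypothetical daily sales figures.

```python
from itertools import accumulate


def running_total(values: list) -> list:
    """Cumulative sum in row order, like a running total sorted by date."""
    return list(accumulate(values))


def percentile_cont(values: list, pct: float) -> float:
    """Continuous percentile: interpolate linearly between ranked values (pct in 0..100)."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 <= pct <= 100:
        raise ValueError("pct must be between 0 and 100")
    ordered = sorted(values)
    rank = (len(ordered) - 1) * pct / 100
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    fraction = rank - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * fraction


if __name__ == "__main__":
    daily_sales = [120, 80, 150, 100]
    print(running_total(daily_sales))        # cumulative sales per day
    print(percentile_cont(daily_sales, 50))  # median via interpolation
```

For the sample data the running total is `[120, 200, 350, 450]` and the 50th percentile falls halfway between 100 and 120, giving 110.0.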

5. Enable Auto-Narratives for Insights

Auto-narratives in QuickSight use natural language processing (NLP) to generate textual summaries of your data. This feature is particularly useful for highlighting trends, anomalies, and key performance indicators (KPIs). AWS QuickSight Online Training teaches how to enable and customize auto-narratives for improved decision-making.

6. Take Advantage of SPICE Engine

QuickSight’s Super-fast, Parallel, In-memory Calculation Engine (SPICE) is designed for speed and efficiency. It enables users to analyze massive datasets without relying on external databases. Learning how to optimize SPICE usage is an integral part of Amazon QuickSight Training and ensures you can work with data at scale.

7. Implement Conditional Formatting

Conditional formatting helps draw attention to critical data points. You can set rules to highlight values based on specific conditions, making your dashboards more actionable. AWS QuickSight Training explores how to implement these rules to enhance the interpretability of your visuals.

8. Share and Collaborate Effectively

QuickSight makes it easy to share dashboards and reports with stakeholders. By learning best practices for sharing, including granting permissions and scheduling email reports, you ensure that insights reach the right audience. AWS QuickSight Online Training includes collaboration techniques to improve team workflows.

9. Use Embedded Analytics

Embedding QuickSight dashboards into applications or websites is a game-changer for businesses. This feature allows organizations to provide real-time insights to users within their existing platforms. Amazon QuickSight Training delves into embedding analytics, offering practical examples to integrate dashboards seamlessly.

10. Stay Updated with New Features

AWS QuickSight regularly updates its features to enhance user experience and functionality. Staying informed about these updates through AWS QuickSight Training ensures you are always utilizing the latest tools and capabilities to boost productivity and efficiency.

Conclusion: Amazon QuickSight is a versatile and user-friendly BI tool that caters to a wide range of data analysis needs. By leveraging these tips and tricks, you can unlock the full potential of QuickSight and deliver impactful insights. Whether you're new to BI or an experienced analyst, Amazon QuickSight Training equips you with the skills to excel in data visualization and reporting.

AWS QuickSight Online Training and AWS QuickSight Training courses are invaluable resources for professionals looking to stay competitive in today’s data-driven world. From mastering visual customization to utilizing SPICE and embedding analytics, QuickSight offers endless opportunities for growth and innovation. With continuous learning and practice, you can transform raw data into actionable intelligence, empowering your organization to make informed decisions and drive success.

Visualpath is a top institute in Hyderabad offering AWS QuickSight Online Training with real-time expert instructors and hands-on projects. Our Amazon QuickSight Course Online lets you learn from industry experts and gain practical experience. We serve learners globally, including in the USA and UK. To schedule a demo, call +91-9989971070.

Key Points: AWS, Amazon S3, Amazon Redshift, Amazon RDS, Amazon Athena, AWS Glue, Amazon DynamoDB, AWS IoT Analytics, ETL Tools.

Visit: https://www.visualpath.in/online-amazon-quicksight-training.html

#Amazon QuickSight Training#AWS QuickSight Online Training#Amazon QuickSight Course Online#AWS QuickSight Training in Hyderabad#Amazon QuickSight Training Course#AWS QuickSight Training

A Comprehensive Guide To The Top 5 AWS Courses For Beginners

Are you a beginner looking to start your journey in cloud computing with Amazon Web Services (AWS)? With numerous AWS courses available, it’s essential to choose the right training to build a strong foundation. Explore the top five AWS courses for beginners, including the best AWS training in Hyderabad. These courses will equip you with the knowledge and skills needed to succeed in the world of AWS and cloud computing.

AWS Certified Cloud Practitioner: The AWS Certified Cloud Practitioner course is an excellent starting point for beginners. It provides a comprehensive understanding of AWS cloud concepts, services, security, and architectural best practices. This course covers the basics of AWS services, such as EC2 instances, S3 storage, and RDS databases. By completing this certification, beginners can validate their AWS knowledge and establish a solid foundation for further specialization.

AWS Certified Solutions Architect: The AWS Certified Solutions Architect – Associate course is designed for beginners who want to gain expertise in designing and deploying scalable, highly available, and fault-tolerant applications on AWS. This course covers topics like AWS architecture best practices, AWS services overview, and application deployment strategies. By mastering the concepts of infrastructure, networking, and security on AWS, beginners can learn to design robust solutions using various AWS services.

AWS Certified Developer: The AWS Certified Developer – Associate course is suitable for beginners interested in developing applications on the AWS platform. This course focuses on AWS SDKs, APIs, and how to interact with AWS services programmatically. By learning about AWS Identity and Access Management (IAM), AWS Lambda, and AWS Elastic Beanstalk, beginners can gain the necessary skills to build, test, and deploy applications on AWS.

AWS Certified SysOps Administrator: The AWS Certified SysOps Administrator – Associate course is ideal for beginners looking to understand system operations on the AWS platform. It covers topics like deployment, management, and operations of applications on AWS. This course also delves into topics like data management, security, and troubleshooting. By completing this certification, beginners can gain valuable skills in managing and operating systems on the AWS cloud.

AWS Certified Machine Learning: For learners interested in machine learning on AWS, the AWS Certified Machine Learning – Specialty course is a worthwhile goal, although as a specialty-level certification it assumes prior hands-on experience and is best tackled after a foundational or associate-level course. This course focuses on topics such as data engineering, exploratory data analysis, model training and optimization, and deploying machine learning models on AWS. By mastering these skills, learners can gain a solid understanding of machine learning principles and their practical application using AWS services.

Choosing the right AWS courses for beginners is crucial to kick-start a successful career in cloud computing. By enrolling in the best AWS training in Hyderabad and completing certifications like AWS Certified Cloud Practitioner, Solutions Architect, Developer, SysOps Administrator, or Machine Learning, beginners can gain a strong foundation in AWS and unlock numerous opportunities in the cloud industry.

The Cloud Computing Job Market is Exploding – how can you dive in?

In recent years, cloud computing has transformed the way businesses operate, leading to an explosion in demand for skilled professionals in this field. As companies continue to migrate to the cloud, the job market is not just growing; it’s evolving rapidly. If you’re looking to capitalize on this opportunity, understanding how to navigate this expanding landscape is crucial. In this blog, we’ll explore the current job market for cloud computing and how enrolling in a cloud computing institute in Hyderabad can help you dive in.

The Rise of Cloud Computing

The shift to cloud computing is no longer a trend; it’s a necessity for many organizations. Businesses of all sizes are adopting cloud solutions for their flexibility, scalability, and cost-effectiveness. Here are some reasons why cloud computing is booming:

Increased Data Storage Needs: With the exponential growth of data, companies require robust storage solutions that can adapt to their needs. Cloud services provide the scalability that traditional systems often lack.

Remote Work Culture: The rise of remote work has accelerated the adoption of cloud technologies, allowing teams to collaborate seamlessly regardless of their physical location.

Cost Savings: Cloud computing eliminates the need for extensive physical infrastructure, reducing overhead costs for businesses. This financial benefit is a major driving force behind its adoption.

Focus on Security: As cyber threats continue to evolve, many organizations turn to cloud providers for their advanced security measures, making cloud solutions more attractive.

Current Job Market Trends

As the cloud computing market grows, so does the demand for various roles. Here are some key trends to watch:

Diverse Job Roles: From cloud architects and data engineers to security analysts and compliance officers, the range of positions is expanding. This diversity allows professionals to find roles that match their skills and interests.

Competitive Salaries: Cloud computing roles often come with attractive salary packages, reflecting the specialized skills required in this field. This trend makes cloud careers increasingly appealing to job seekers.

Focus on Certifications: Employers are increasingly looking for candidates with relevant certifications. Credentials from recognized institutions can significantly enhance your employability in this competitive market.

How to Dive into the Cloud Computing Job Market

If you’re eager to enter the cloud computing field, here are some steps to help you get started:

Gain Relevant Skills: Focus on acquiring the skills necessary for cloud roles. This includes understanding cloud architecture, security, and data management.

Pursue Certifications: Consider obtaining certifications from reputable cloud providers like AWS, Microsoft Azure, or Google Cloud. These credentials can enhance your resume and demonstrate your expertise.

Network: Engage with professionals in the field through networking events, webinars, and online forums. Building connections can lead to valuable job opportunities and insights.

Stay Updated: The cloud landscape is constantly evolving. Stay informed about the latest trends, technologies, and best practices to remain competitive.

Enroll in a Cloud Computing Course: One of the most effective ways to gain a solid foundation is by enrolling in a cloud computing institute in Hyderabad. The Boston Institute of Analytics offers a comprehensive program that provides hands-on experience and industry-relevant knowledge.

What to Expect from a Cloud Computing Institute in Hyderabad

When choosing a cloud computing institute in Hyderabad, look for programs that offer:

Comprehensive Curriculum: Ensure the program covers essential topics like cloud infrastructure, services, security, and deployment strategies.

Practical Experience: Hands-on training with real cloud platforms like AWS, Azure, and Google Cloud is crucial for developing applicable skills.

Expert Guidance: Learning from experienced instructors can provide valuable insights and help you navigate your career path effectively.

Career Support: Many institutes offer resources for job placement and internships, giving you a head start in the job market.

Conclusion

The cloud computing job market is indeed exploding, offering a wealth of opportunities for those willing to dive in. By gaining relevant skills, pursuing certifications, and enrolling in a cloud computing institute in Hyderabad like the Boston Institute of Analytics, you can position yourself for success in this dynamic field. Embrace the future of technology and start your journey in cloud computing today!

#Cloud Computing#DevOps#AWS#AZURE#Cloud Computing Course#DeVOps course#AWS COURSE#AZURE COURSE#Cloud Computing CAREER#Cloud Computing jobs#Cloud Technology#Cloud Computing Course in Hyderabad#Cloud Infrastructure#Cloud Management#Data Analytics#technology#cloud technology jobs#cloudsecurity#cloudcore#cloudcomputing#Cloud Architecture#Cloud Migration

Unlocking Career Paths with AWS Expertise: What Opportunities Await?

As cloud computing continues to transform the tech industry, mastering Amazon Web Services (AWS) has become a gateway to a myriad of career opportunities.

With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries.

AWS skills are increasingly sought after, opening doors to various roles across different sectors. If you’ve recently acquired AWS knowledge or are considering it, here’s a fresh look at the diverse career paths you can explore.

1. Cloud Strategy Architect: Designing the Future

Cloud Strategy Architects are visionaries who craft comprehensive cloud solutions tailored to organizational needs. They leverage AWS to design scalable and secure architectures that drive business efficiency and innovation. This role is perfect for those who excel in strategic planning and enjoy shaping the technological landscape of companies.

2. Cloud Operations Specialist: Ensuring Seamless Functionality

Cloud Operations Specialists focus on the hands-on management of cloud infrastructure. They handle the setup, maintenance, and optimization of AWS environments to ensure everything runs smoothly. This role is ideal for individuals who thrive in operational settings and have a knack for problem-solving and system maintenance.

3. DevOps Specialist: Integrating Development and Operations

DevOps Specialists play a crucial role in streamlining development and operations through automation and continuous integration. With AWS, they manage deployment pipelines and automate processes, ensuring efficient and reliable delivery of software. If you enjoy working at the intersection of development and IT operations, this role is a great fit.

4. Technical Solutions Designer: Tailoring Cloud Solutions

Technical Solutions Designers focus on creating bespoke cloud solutions that meet specific business requirements. They work closely with clients to understand their needs and design AWS-based systems that align with their objectives. This role blends technical expertise with client interaction, making it suitable for those who excel in both areas.

5. Cloud Advisory Consultant: Guiding Cloud Transformations

Cloud Advisory Consultants offer expert guidance on cloud strategy and implementation. They assess current IT infrastructures, recommend AWS solutions, and support the transition to cloud-based environments. This role is ideal for those who enjoy advising businesses and orchestrating strategic cloud initiatives.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Best AWS Online Training.

6. AWS System Administrator: Managing Cloud Environments

AWS System Administrators are responsible for the day-to-day management of cloud systems. They oversee performance monitoring, security, and user management within AWS environments. This role suits individuals who are detail-oriented and enjoy maintaining and optimizing IT systems.

7. Data Solutions Engineer: Harnessing Data Potential

Data Solutions Engineers specialize in handling and analyzing large datasets using AWS tools like Amazon Redshift and AWS Glue. They design data pipelines and create analytics solutions to support business intelligence. If you have a passion for data and enjoy working with cloud-based data solutions, this role is a strong match.

8. Cloud Security Analyst: Safeguarding Cloud Assets

Cloud Security Analysts focus on protecting cloud infrastructure from security threats. They use AWS security features to ensure data integrity and compliance. This role is well-suited for individuals with a keen interest in cybersecurity and a commitment to safeguarding digital assets.

9. IT Systems Manager: Overseeing IT Operations

IT Systems Managers ensure that all aspects of IT infrastructure, including cloud systems, run efficiently. With AWS skills, they lead technology strategies, manage IT teams, and oversee the implementation of cloud solutions. This role is ideal for those who are strong in leadership and strategic IT management.

10. Cloud Product Lead: Innovating Cloud Solutions

Cloud Product Leads oversee the development and rollout of cloud-based products. They coordinate with engineering teams to drive innovation and ensure product features meet market needs. This role is great for those interested in product management and driving the evolution of cloud technologies.

11. Cloud Strategy Consultant: Advising on Cloud Adoption

Cloud Strategy Consultants provide expert advice on how to leverage AWS for business transformation. They help companies evaluate their cloud needs, design effective strategies, and implement solutions. This role is perfect for those who enjoy strategic planning and consultancy.

12. Cloud Deployment Specialist: Managing Cloud Transitions

Cloud Deployment Specialists manage the migration of applications and data to AWS. They ensure that transitions are smooth and align with organizational goals. If you excel in managing complex projects and enjoy overseeing cloud transitions, this role could be a great fit.

Conclusion

Mastering AWS opens up a broad spectrum of career opportunities, from strategic roles to technical positions. Whether you’re interested in designing cloud architectures, managing operations, or guiding business transformations, AWS skills are highly valued. By leveraging your AWS expertise, you can embark on a fulfilling career path that aligns with your interests and professional goals.


Amazon QuickSight Training | AWS QuickSight Training in Hyderabad

Amazon QuickSight Training: From Raw Data To Beautiful Dashboards - Mastering AWS QuickSight

Amazon QuickSight Training provides a comprehensive guide to transforming raw data into visually compelling, interactive dashboards that empower decision-making. With the exponential growth of data, businesses need robust tools to analyze and interpret vast amounts of information efficiently. Amazon QuickSight, a cloud-based business intelligence (BI) service from AWS, is designed to make data visualization, reporting, and analysis both accessible and insightful. Through Amazon QuickSight Training, individuals and teams gain the skills to harness this powerful tool, creating data-driven insights that are essential for today’s competitive business environment.

In the digital age, data is one of the most valuable assets for any organization. However, raw data is often complex and difficult to interpret without the right tools and techniques. This is where Amazon QuickSight comes in, allowing users to import data from multiple sources, organize it, and transform it into actionable insights. The platform's capabilities include machine learning-based predictive analytics, interactive dashboards, and the ability to work with a range of data sources like Amazon Redshift, S3, and third-party data systems. Through an Amazon QuickSight Course Online, participants learn the core skills for importing, managing, and visualizing data, transforming complex data into easy-to-understand visualizations and reports.

Learning the Essentials of AWS QuickSight through Training

A foundational part of any Amazon QuickSight Training involves understanding the platform’s core features and the techniques for optimizing data visualization. QuickSight’s user-friendly interface is accessible even to individuals without extensive technical expertise, which makes it a versatile tool for both beginners and experienced data analysts. During AWS QuickSight Training, users learn to connect to data sources ranging from AWS databases to on-premises and third-party platforms. The course also covers SPICE (Super-fast, Parallel, In-memory Calculation Engine), QuickSight’s in-memory engine, which holds imported data in memory so that dashboards and ad hoc analyses respond quickly.

Training sessions often focus on creating effective visualizations—bar charts, line graphs, pie charts, and more—each tailored to convey data insights in the most impactful way. Participants learn how to select the right visualizations for different data sets and how to build dashboards that allow users to interact with and drill down into specific data points. Through this learning process, trainees become adept at using QuickSight’s powerful tools to uncover trends, patterns, and anomalies, enabling them to drive actionable insights across their organizations. Moreover, users gain experience with QuickSight’s natural language query feature, which allows them to ask questions about their data in plain language, making it easy for non-technical users to derive insights from complex datasets.

Building Skills in Advanced Features with Amazon QuickSight Course Online

For users looking to go beyond basic visualization, an Amazon QuickSight Course Online offers training in advanced features like predictive analytics and machine learning (ML) integrations. QuickSight includes an array of ML-driven insights, such as anomaly detection and forecasting, that let users run predictive analyses directly within the platform. Through AWS QuickSight Training, participants learn how to apply these features to spot unusual changes in their metrics early and to forecast business metrics like sales or customer growth. These skills are especially useful for organizations that need proactive, data-driven strategies to stay competitive.

The Amazon QuickSight Course Online also covers best practices for sharing and collaborating on dashboards. QuickSight supports the creation of shared dashboards, allowing teams to access up-to-date data insights simultaneously. Training helps users set permissions for different team members, ensuring that only relevant stakeholders can view or edit particular datasets. Additionally, users learn how to automate report generation and distribution, which saves time and ensures that teams receive the latest data insights without manual intervention.
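Dashboard permissions can also be managed in code rather than only through the console. The sketch below is a minimal example, assuming the boto3 QuickSight client's `update_dashboard_permissions` call and the read-only action set that AWS documents for dashboard viewers; the function name `grant_dashboard_viewers`, the account ID, the dashboard ID, and the user ARN are hypothetical placeholders.

```python
# Read-only dashboard actions, as used for viewers in the AWS QuickSight docs.
VIEWER_ACTIONS = [
    "quicksight:DescribeDashboard",
    "quicksight:ListDashboardVersions",
    "quicksight:QueryDashboard",
]


def grant_dashboard_viewers(client, account_id, dashboard_id, principal_arns):
    """Give each principal read-only (viewer) access to one dashboard.

    `client` is assumed to be a boto3 QuickSight client; it is passed in
    so the function can be exercised without AWS credentials.
    """
    # One grant entry per user or group ARN, each with the viewer actions.
    grants = [
        {"Principal": arn, "Actions": list(VIEWER_ACTIONS)}
        for arn in principal_arns
    ]
    return client.update_dashboard_permissions(
        AwsAccountId=account_id,
        DashboardId=dashboard_id,
        GrantPermissions=grants,
    )
```

A typical call would be `grant_dashboard_viewers(boto3.client("quicksight"), "111122223333", "sales-dashboard", ["arn:aws:quicksight:us-east-1:111122223333:user/default/analyst"])`. Granting only viewer actions keeps editing rights limited to the dashboard's owners.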

Another focus area in AWS QuickSight Training is optimizing data imports and setting up efficient data pipelines. As participants learn about setting up automated data refresh schedules and managing large data sets with SPICE, they gain the confidence to handle complex data tasks with ease. The training also covers integration with other AWS services, like Redshift and S3, which allows participants to leverage a complete AWS ecosystem for end-to-end data management.
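A SPICE refresh can also be started programmatically, for example at the end of an upstream ETL job. The sketch below is a minimal example, assuming the boto3 QuickSight client's `create_ingestion` and `describe_ingestion` calls and an existing SPICE dataset; the function name `refresh_spice_dataset`, the account ID, and the dataset ID are hypothetical placeholders. It starts a full refresh and polls until the ingestion reaches a terminal state.

```python
import time
import uuid

# Ingestion states after which polling can stop.
TERMINAL_STATES = ("COMPLETED", "FAILED", "CANCELLED")


def refresh_spice_dataset(client, account_id, dataset_id,
                          poll_seconds=30, timeout_seconds=3600):
    """Start a full SPICE refresh and wait for it to finish.

    `client` is assumed to be a boto3 QuickSight client; it is passed in
    so the function can be exercised without AWS credentials.
    Returns the final ingestion status string.
    """
    ingestion_id = str(uuid.uuid4())  # each ingestion needs a unique ID
    client.create_ingestion(
        AwsAccountId=account_id,
        DataSetId=dataset_id,
        IngestionId=ingestion_id,
        IngestionType="FULL_REFRESH",
    )
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        resp = client.describe_ingestion(
            AwsAccountId=account_id,
            DataSetId=dataset_id,
            IngestionId=ingestion_id,
        )
        status = resp["Ingestion"]["IngestionStatus"]
        if status in TERMINAL_STATES:
            return status
        time.sleep(poll_seconds)  # wait before checking again
    raise TimeoutError(f"ingestion {ingestion_id} did not finish in time")
```

In practice you would pass `boto3.client("quicksight")` as `client`. Refresh schedules configured in the QuickSight console remain the simpler option when a fixed schedule is enough; a call like this is more useful when the refresh should follow another job.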

AWS QuickSight Training for Practical Applications and Career Advancement

AWS QuickSight Training provides practical applications for users in fields such as finance, marketing, healthcare, and e-commerce. For instance, marketing teams can use QuickSight to analyze customer behavior and campaign performance, while finance teams can generate real-time financial reports. The hands-on experience gained through Amazon QuickSight Training is not only valuable for enhancing performance within an organization but also positions individuals as competitive candidates in the job market. Knowledge of QuickSight and AWS BI tools is increasingly sought after, as companies recognize the value of data analytics for growth and strategic planning.

Training programs often include case studies and real-world projects, which let participants practice their skills in practical settings. This experience helps learners translate theoretical knowledge into actionable insights, preparing them to solve actual business challenges through data analysis and visualization. Completing an Amazon QuickSight Course Online can also give professionals a credential that demonstrates their competence in data visualization and analysis on AWS. For many, AWS QuickSight Training is an essential step towards career advancement in roles related to data science, business intelligence, and analytics.

Conclusion:

Amazon QuickSight Training is an invaluable resource for anyone looking to turn raw data into powerful, visually engaging insights. Through structured training programs, users learn the fundamentals of data visualization and dashboard creation, building the skills to handle complex data and produce impactful, data-driven insights. Whether through basic data visualization or advanced machine learning applications, AWS QuickSight Training prepares individuals to leverage data effectively, providing organizations with the insights they need to stay competitive. For professionals, completing an Amazon QuickSight Course Online opens doors to career advancement in fields driven by data analytics, ensuring they remain valuable contributors in a data-centric world.

By mastering QuickSight, users not only enhance their analytical capabilities but also enable their organizations to make faster, more informed decisions. Amazon QuickSight Training thus serves as a gateway to becoming a proficient data analyst capable of transforming raw data into actionable, beautifully designed dashboards that empower teams, drive strategies, and ultimately lead to business success.

Visualpath offers Amazon QuickSight Training with real-time expert instructors and hands-on projects. Our AWS QuickSight Online Training lets learners gain hands-on experience from industry experts, and we provide it to individuals globally, including in the USA and UK. To schedule a demo, call +91-9989971070.

Key Points: AWS, Amazon S3, Amazon Redshift, Amazon RDS, Amazon Athena, AWS Glue, Amazon DynamoDB, AWS IoT Analytics, ETL Tools.

Attend Free Demo

Call Now: +91-9989971070

Whatsapp: https://www.whatsapp.com/catalog/919989971070

Visit our Blog: https://visualpathblogs.com/

Visit: https://www.visualpath.in/online-amazon-quicksight-training.html

#Amazon QuickSight Training#AWS QuickSight Online Training#Amazon QuickSight Course Online#AWS QuickSight Training in Hyderabad#Amazon QuickSight Training Course#AWS QuickSight Training
