# Cloud Architecture


Going Over the Cloud: An Investigation into the Architecture of Cloud Solutions

Because the cloud offers unprecedented levels of scale, flexibility, and accessibility, it has fundamentally altered the way we approach technology in the present digital era. As more businesses move their infrastructure to the cloud, understanding the architecture of cloud solutions becomes imperative. Join me as we examine its core concepts, industry best practices, and transformative impact on modern enterprises.

The Basics of Cloud Solution Architecture

A well-designed architecture that balances dependability, performance, and cost-effectiveness is the foundation of any successful cloud deployment. A cloud solution's architecture spans many components, including networking, compute, storage, security, and scalability. By tailoring solutions to the requirements of each workload, organizations can optimize return on investment and make full use of the cloud.

Flexibility and Resilience in Design

One of cloud computing's distinguishing characteristics is the ability to scale resources on demand to meet varying workloads while maintaining consistent performance. Cloud solution architects build resilient systems that withstand failures and sustain uptime by applying fault-tolerant design principles, load balancing, and auto-scaling. Distributing workloads across several availability zones and regions further increases fault tolerance and limits the impact of outages.
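To make the multi-zone point concrete, here is a small Python sketch. It assumes a simple round-robin placement policy and hypothetical zone names (`zone-a` and so on); real platforms use their own placement logic. It compares how much capacity survives a single-zone outage when replicas are spread out versus packed into one zone.

```python
def spread_across_zones(replicas: int, zones: list[str]) -> dict[str, int]:
    """Place replicas round-robin so zone counts differ by at most one."""
    placement = {zone: 0 for zone in zones}
    for i in range(replicas):
        placement[zones[i % len(zones)]] += 1
    return placement


def capacity_after_zone_loss(placement: dict[str, int], failed_zone: str) -> int:
    """Count replicas still serving traffic if one entire zone goes down."""
    return sum(n for zone, n in placement.items() if zone != failed_zone)


zones = ["zone-a", "zone-b", "zone-c"]  # hypothetical availability zones
spread = spread_across_zones(6, zones)
single = spread_across_zones(6, ["zone-a"])

print(spread)                                      # {'zone-a': 2, 'zone-b': 2, 'zone-c': 2}
print(capacity_after_zone_loss(spread, "zone-b"))  # 4
print(capacity_after_zone_loss(single, "zone-a"))  # 0
```

Spread across three zones, four of six replicas keep serving during a zone outage; packed into one zone, nothing does.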

Protection of Data in the Cloud and Security by Design

As data breaches become more common, security is a top priority in cloud solution architecture. Architects build identity management, access controls, encryption, and monitoring into their designs as part of a multi-layered security strategy. By following industry standards and best practices, such as the shared responsibility model and established compliance frameworks, organizations can safeguard confidential information and meet regulatory requirements in the cloud.
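As an illustration of access controls designed in from the start, the sketch below evaluates a deny-by-default policy in which an explicit deny overrides any allow. The `Statement` format, principals, and action names are hypothetical and do not follow any provider's policy syntax.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    effect: str     # "allow" or "deny"
    principal: str  # who the rule applies to, e.g. "analyst"
    action: str     # e.g. "storage:read"; may contain * wildcards
    resource: str   # e.g. "reports/*"; may contain * wildcards


def is_allowed(statements: list[Statement], principal: str,
               action: str, resource: str) -> bool:
    """Deny by default; any matching explicit deny beats every allow."""
    matching = [
        s for s in statements
        if s.principal == principal
        and fnmatchcase(action, s.action)
        and fnmatchcase(resource, s.resource)
    ]
    if any(s.effect == "deny" for s in matching):
        return False
    return any(s.effect == "allow" for s in matching)


policy = [
    Statement("allow", "analyst", "storage:read", "reports/*"),
    Statement("deny", "analyst", "storage:*", "reports/payroll/*"),
]
print(is_allowed(policy, "analyst", "storage:read", "reports/q1.csv"))           # True
print(is_allowed(policy, "analyst", "storage:read", "reports/payroll/jan.csv"))  # False
print(is_allowed(policy, "analyst", "storage:write", "reports/q1.csv"))          # False
```

Anything not explicitly allowed, such as a write or an unknown principal, is refused, which is the least-privilege posture the paragraph describes.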

Using Managed Services to Increase Productivity

Cloud service providers offer a variety of managed services, including serverless computing, machine learning, databases, and analytics, that streamline operations and reduce the burden of maintaining infrastructure. These services let firms focus on innovation instead of infrastructure maintenance. By selecting the right mix of managed services for cloud-native applications, architects can reduce costs, shorten time-to-market, and optimize performance.

Cost Control and Ongoing Optimization

Cost optimization is essential, since inefficient resource use can quickly drive up costs. Architects use tools and techniques to monitor resource utilization, analyze cost trends, and identify optimization opportunities. By using spot instances, reserved instances, and cost allocation tags, businesses can cut waste and get more value from their cloud spending.
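Cost allocation tags only pay off if spend is actually grouped by them. This sketch uses made-up billing line items rather than any provider's export format; it totals cost per tag value and surfaces untagged spend, which is often the first thing to chase down.

```python
from collections import defaultdict


def cost_by_tag(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of one tag; spend without that tag is grouped separately."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        value = item["tags"].get(tag_key, "(untagged)")
        totals[value] += item["cost"]
    return dict(totals)


# Made-up monthly line items for illustration only.
line_items = [
    {"resource": "vm-1", "cost": 120.0, "tags": {"team": "search"}},
    {"resource": "vm-2", "cost": 80.0, "tags": {"team": "checkout"}},
    {"resource": "db-1", "cost": 200.0, "tags": {"team": "search"}},
    {"resource": "bucket-1", "cost": 15.5, "tags": {}},
]
print(cost_by_tag(line_items, "team"))
# {'search': 320.0, 'checkout': 80.0, '(untagged)': 15.5}
```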

Embracing Automation and DevOps

Automation and DevOps practices are important elements of cloud solution design, enabling companies to deliver software more rapidly, reliably, and efficiently. Architects build pipelines for continuous integration, delivery, and deployment, which speed up the software development process and allow rapid iteration. By provisioning and managing infrastructure programmatically with Infrastructure as Code (IaC) and configuration management tools, teams can reduce manual effort and ensure consistency across environments.
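The core idea behind many IaC tools is comparing a declared desired state with what actually exists and deriving a plan of changes. The sketch below shows that diff in miniature; resource names and attributes are hypothetical, and real tools such as Terraform add dependency ordering, state storage, and provider APIs on top.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired vs. actual resources and list the changes needed."""
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


# Hypothetical resources declared in code vs. what currently exists.
desired = {
    "web-server": {"size": "medium", "zone": "zone-a"},
    "cache": {"size": "small", "zone": "zone-b"},
}
actual = {
    "web-server": {"size": "small", "zone": "zone-a"},
    "old-worker": {"size": "small", "zone": "zone-a"},
}
print(plan(desired, actual))
# {'create': ['cache'], 'update': ['web-server'], 'delete': ['old-worker']}
```

Once the changes are applied, running `plan` again returns empty lists, which is what makes declarative provisioning repeatable across environments.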

Multi-Cloud and Hybrid Strategies

In an increasingly interconnected world, many firms adopt hybrid and multi-cloud strategies to combine the strengths of several cloud providers with their on-premises infrastructure. Cloud solution architects must design systems that integrate these environments seamlessly while ensuring interoperability, data consistency, and regulatory compliance. Using hybrid connectivity options such as VPNs, AWS Direct Connect, or Azure ExpressRoute, organizations can build hybrid cloud deployments that combine the best aspects of the public cloud and on-premises data centers.

Analytics and Data Management

Modern organizations depend on data because it fosters innovation and informed decision-making. With the data management and analytics solutions that cloud solution architects design, organizations can efficiently gather, store, process, and analyze large volumes of data. By leveraging cloud-native data services such as data warehouses, data lakes, and real-time analytics platforms, organizations can extract valuable insights and gain a competitive advantage in their industries. Architects also implement data governance frameworks and privacy-enhancing technologies to comply with data protection rules and safeguard sensitive information.
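Pseudonymization is one privacy-enhancing technique that fits this description: sensitive identifiers are replaced with keyed hashes so analysts can still join and count records without seeing raw values. The field list and key below are placeholders; in practice the key would live in a secrets manager and the classification would come from your data governance policy.

```python
import hashlib
import hmac

# Hypothetical classification: which fields count as sensitive.
SENSITIVE_FIELDS = {"email", "phone"}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy of `record` with sensitive values replaced by HMAC-SHA256 tokens.

    The same value and key always yield the same token, so joins and counts
    still work; without the key, tokens cannot be recomputed from guessed values.
    """
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            mac = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = mac.hexdigest()
        else:
            masked[field] = value
    return masked


key = b"replace-with-a-secret-from-your-key-store"  # placeholder only
row = {"email": "ana@example.com", "country": "PT", "orders": 4}
print(pseudonymize(row, key))
```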

Computing Without a Server

Serverless computing, a significant shift in cloud architecture, frees organizations to focus on building applications rather than maintaining infrastructure or managing servers. Cloud solution architects develop serverless programs using event-driven architectures and Function-as-a-Service (FaaS) platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. By abstracting away the underlying infrastructure, serverless architectures offer automatic scaling, cost efficiency, and agility, empowering companies to innovate quickly and change course without large upfront infrastructure investments.
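For a sense of what a FaaS function looks like, here is a minimal Python handler in the `handler(event, context)` style that AWS Lambda uses. It assumes an HTTP-style event carrying `queryStringParameters`, as in API Gateway's proxy format. Treat it as a sketch: other platforms use different signatures, and a real deployment also needs packaging and trigger configuration.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per event; keeps no state between calls."""
    # queryStringParameters may be missing or None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local test: simulate an incoming HTTP event; no context object is needed here.
    print(handler({"queryStringParameters": {"name": "cloud"}}, None))
```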

Conclusion

As we close our investigation into cloud solution architecture, it is evident that the cloud is more than a technology platform; it is a force for innovation and transformation. By embracing scalability, resilience, security, and efficiency, organizations can seize new opportunities, drive business growth, and preserve their competitive edge in today's rapidly evolving digital market. So whether you are developing a new cloud-native application or starting a cloud migration, make sound cloud solution architecture the foundation of your plans.


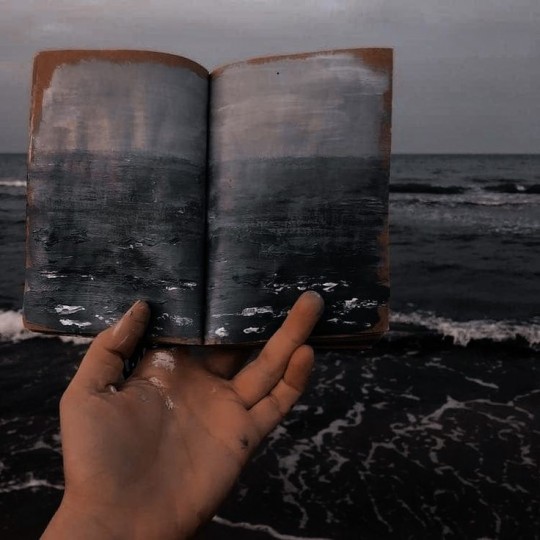

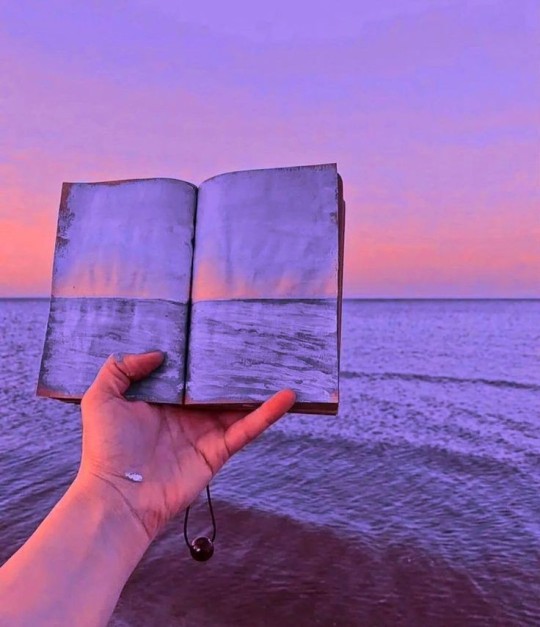

#maviyenot#photography#nature#naturecore#landscape#pretty#travel#traveling#photographers on tumblr#sky#sea#seascape#ocean#doctor who#advertising#art#picture#pink#purple sky#portrait#inspration#indie#blue#clouds#water#style#paradise#architecture#artists on tumblr#light


Multi-Cloud vs. Hybrid Cloud: Strategic Decision-Making for Leaders.

Sanjay Kumar Mohindroo

skm.stayingalive.in

Explore the strategic difference between multi-cloud and hybrid cloud with expert insights for CIOs, CTOs, and digital transformation leaders.

A Cloud Crossroads for the Modern Leader

Imagine this: you’re in the boardroom. The CIO looks up after a vendor pitch and asks, “Should we go multi-cloud or hybrid?” Everyone turns to…

#AI#artificial-intelligence#Boardroom Strategy#business#CIO priorities#cloud#Cloud Architecture#Cloud Governance#Cloud Strategy#Data Driven Leadership#digital transformation leadership#emerging technology strategy#hybrid cloud#IT operating model#Multi Cloud#News#Sanjay Kumar Mohindroo#technology


This might be the job for you.

#Cloud#cloud architecture#Cloud computing#cloud security#cyber#cyber attack#cyber security#cyber threat#hackers


What are the Career Opportunities After Earning the GCP Architect Certification?

The GCP Professional Architect Certification is a globally recognized badge of honor. It demonstrates the ability to design, build, and manage secure, scalable, and reliable Google Cloud Platform (GCP) solutions. As the IT world has shifted toward cloud computing, GCP Architect certification holders are in demand, and the certification can open job avenues across diverse fields.

Cloud Architect

A GCP Professional Cloud Architect designs cloud infrastructure solutions that meet business needs, helping companies keep their cloud operations fast, secure, and cost-effective. As more companies adopt Google Cloud, certified professionals can find well-paying jobs at companies around the world.

Cloud Consultant

Businesses moving to the cloud need professional consultants to guide their cloud migration and digital transformation. The Google Professional Architect certification prepares you to analyze client needs and design solutions that enable a seamless cloud transition.

DevOps Engineer

DevOps engineers integrate cloud automation tools, CI/CD pipelines, and infrastructure as code (IaC) to streamline software development and deployment, which makes the role a natural fit for Google Cloud Architect Certified professionals. GCP's strong DevOps tooling, such as Cloud Build and Cloud Run, makes certified professionals strong candidates for organizations.

Cloud Solutions Engineer

The Cloud Solutions Engineer role suits Google Cloud professionals who are skilled at designing and optimizing cloud applications. They help developers integrate infrastructure and cloud services seamlessly.

Site Reliability Engineer (SRE)

SREs manage the reliability, scalability, and efficiency of systems. A Google Professional Cloud Architect in an SRE role is chiefly responsible for building fault-tolerant architectures, improving cloud performance, and making sure operations run smoothly in high-traffic settings.

Data Engineer

Because GCP places a strong emphasis on data processing and analysis, the GCP Architect Certification can also lead to a focus on data engineering. Data engineers use Google BigQuery, Cloud Spanner, and Dataflow to handle large-scale data pipelines, enabling better decision-making in data-driven organizations.

Security Engineer

Secure cloud architecture is essential, and certified architects know how to deliver it. A Google Certified Professional Cloud Architect who specializes in cloud security, for example, ensures that compliance requirements, identity management, and risk mitigation are all covered in cloud environments.

GCP Architect Salary and Demand

According to industry reports, a GCP Cloud Architect's salary typically ranges from $140,000 to $180,000, depending on expertise and employer. Demand is strong as well: companies such as Google, Amazon, Deloitte, Accenture, and IBM are hiring GCP-certified architects in large numbers.

Industries That are Hiring Google Cloud Architects

Technology & IT Services – Companies like Google, Microsoft, and IBM are constantly looking for GCP-certified architects for cloud infrastructure initiatives.

Finance & Banking – Google Professional Cloud Architects are also in demand at fintech businesses and banks, which require secure and scalable cloud solutions.

Healthcare – As the healthcare industry implements cloud technology, holders of the Google Cloud Architect Certification are becoming increasingly essential to it.

E-commerce & Retail – Cloud architects help online businesses optimize web performance, manage high-volume data, and enhance the user experience.

Career Advancement and Growth Potential

Professionals who combine a Google Cloud Architect Certification with specializations in AI/ML, security, or big data analytics can fast-track their career growth. Additional certifications, such as Google Cloud Professional Cloud Security Engineer or Professional Data Engineer, also help widen career opportunities.

Final Thoughts

Earning the GCP Professional Architect Certification expands career opportunities in cloud computing, including cloud architecture, security, DevOps, and data engineering positions. With organizations rapidly adopting Google Cloud, Google Cloud certification holders gain an advantage in the job market and a strong foundation for career development in the ever-evolving cloud industry.

#computing#google cloud#google cloud architect#cloudmigration#cloudconsulting#clouds#cloud architecture


by kath__alina

#aesthetic#nature#naturecore#sunset#architecture#exterios#clouds#sky#photography#curators on tumblr#up


How to Build an Effective Cloud Organization

#ai#azure#Cloud#Cloud Architecture#Cloud Operating Model#cloud-computing#Enterprise Architecture#IT Strategy#Michael Griffin#Solution Architecure#technology


Serverless Vs. Microservices: Which Architecture is Best for Your Business?

One of the core challenges in computer science is problem decomposition, breaking down complex problems into smaller, manageable parts. This is key for addressing each part independently; programming is about mastering complexity through effective organization. In development, architects and developers work to structure these complexities to build robust business functionalities. Strong architecture lays the groundwork for effectively handling these complexities.

Software architecture defines boundaries that separate components. These boundaries prevent elements on one side from depending on or interacting with those on the other.

Every architectural decision is a balance of trade-offs made to manage complexity. Effective architecture depends on making these trade-offs wisely. It is more vital to understand why we choose a solution than to know how to implement it. Choosing a solution thoughtfully helps manage complexity by structuring the software, defining component interactions, and establishing clear separations.

A well-designed architecture uses best practices, design patterns, and structured layers, making complex systems more manageable and maintainable. Conversely, poor architecture increases complexity, complicating the process of maintenance, understanding, and scaling.

This blog delves into two widely used architectures: serverless and microservices. Both approaches aim to balance these complexities with scalable, modular solutions.

Key Takeaways:

Effective software architecture helps manage complexity by breaking down applications into smaller, manageable components. Both serverless and microservices architectures support this approach with unique benefits.

Serverless architecture allows developers to focus on coding without managing infrastructure. It automatically scales with demand and follows a pay-as-you-go model, making it cost-effective for applications with fluctuating usage.

Microservices architecture divides applications into autonomous services. Each service can scale independently, offering flexibility and resilience for complex applications.

Choosing between serverless and microservices depends on business needs. Serverless offers simplicity and low cost for dynamic workloads, whereas microservices provide control and scalability for large, interdependent applications.

What is Serverless?

Serverless computing, also known as serverless architecture, allows developers to deploy applications without managing infrastructure. In a serverless setup, cloud providers oversee routine tasks, such as operating system installations, security patches, and performance monitoring, ensuring a secure and optimized environment.

Contrary to its name, serverless doesn’t mean the absence of servers. Instead, it shifts server management from developers to the cloud service provider, allowing developers to focus on code and business requirements. This approach offers a pay-as-you-go model where billing aligns with actual code execution time, ensuring cost efficiency and reducing idle resource expenses.

Serverless application development also supports rapid scaling. Resources adjust automatically to real-time demand, maintaining performance without manual intervention. Alongside models such as Infrastructure-as-a-Service (IaaS), serverless approaches like Function-as-a-Service (FaaS) are powerful options for modern cloud computing applications.


How Does Serverless Work?

Serverless architecture is an innovative model where companies leverage third-party resources to host application functions efficiently. This setup divides application logic into small, manageable units called functions, with each designed for a specific task and executed over a short duration. Functions activate repeatedly in response to predefined triggers, allowing for a high degree of responsiveness.

Key stages in serverless architecture creation include:

Functions: Developers design code for specific tasks within the app. Functions focus on single, straightforward operations, ensuring efficiency and minimal resource use.

Events: Events trigger each function. When specific conditions are met—like receiving an HTTP request—the event activates the function, seamlessly initiating the next task.

Triggers: Triggers act as signals that prompt a function to execute. They occur when a user interacts, such as pressing a button or tapping a screen point.

Execution: The function then initiates, running only as long as needed to complete the task. This short-duration execution saves resources and minimizes overhead.

Output: Users receive the function’s output in real-time, typically on the client side. This design creates a responsive user experience.

For effective serverless applications, developers need to carefully segment functions and designate triggers. Functions can operate simultaneously, responding to distinct interactions without slowing down performance. Defining relationships among functions is essential to maintain harmony and responsiveness across interactions.
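The function, event, and trigger flow described above can be simulated in-process. This is a hypothetical stand-in for a FaaS platform's event routing, not any real platform's API: `on` binds a function to an event type, and `emit` plays the role of a trigger that runs every bound function and returns their outputs.

```python
from typing import Callable

# Hypothetical in-process stand-in for a FaaS platform's event routing.
Handler = Callable[[dict], dict]
_registry: dict[str, list[Handler]] = {}


def on(event_type: str):
    """Decorator: bind a function to an event type (the trigger definition)."""
    def register(fn: Handler) -> Handler:
        _registry.setdefault(event_type, []).append(fn)
        return fn
    return register


def emit(event_type: str, payload: dict) -> list[dict]:
    """Fire an event: run every function bound to it and collect the outputs."""
    return [fn(payload) for fn in _registry.get(event_type, [])]


@on("http.request")
def greet(payload: dict) -> dict:
    # A single, short-lived task that depends only on its input.
    return {"status": 200, "body": f"Hello, {payload.get('user', 'guest')}"}


@on("button.pressed")
def record_click(payload: dict) -> dict:
    return {"recorded": payload["button"]}


print(emit("http.request", {"user": "ada"}))      # [{'status': 200, 'body': 'Hello, ada'}]
print(emit("button.pressed", {"button": "buy"}))  # [{'recorded': 'buy'}]
```

Each function stays small and independent, and adding a new behavior means registering another function rather than editing existing ones.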

Advantages of Using Serverless Architecture

Serverless architecture divides applications into two core segments. The first is the backend, often delivered as Backend-as-a-Service (BaaS), which the cloud provider fully manages, freeing developers from handling infrastructure and hardware integrations. The second, Function-as-a-Service (FaaS), comprises user-facing, event-triggered functions. This structure helps developers build faster and improve performance.

Here are the key advantages of serverless:

Easy Deployment

In traditional setups, developers must configure and manage servers, databases, and middleware. Serverless eliminates this overhead, letting developers concentrate on creating the application’s core logic. Cloud vendors automate infrastructure deployment, reducing the time from code development to production. This rapid deployment can be a competitive edge, particularly for startups or companies working on tight schedules.

Cost Efficiency

Serverless architecture operates on a usage-based billing model, meaning companies pay only for the compute resources their functions consume. This benefits businesses with fluctuating demands, freeing them from fixed infrastructure costs. Additionally, the vendor handles routine maintenance, security updates, and scaling infrastructure, sparing organizations from hiring specialized staff or investing in physical servers and hardware. This can lead to substantial cost savings and financial flexibility.
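The usage-based model can be sketched as simple arithmetic: many FaaS platforms bill compute in GB-seconds (memory allocated multiplied by execution time) plus a per-request fee. The prices used below are placeholders, not any provider's actual rates.

```python
def monthly_serverless_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Usage-based bill: compute time in GB-seconds plus a per-request fee."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute = gb_seconds * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return round(compute + requests, 2)


# Placeholder prices for illustration only.
print(monthly_serverless_cost(2_000_000, 200, 512, 0.00001, 0.20))  # 2.4
print(monthly_serverless_cost(0, 200, 512, 0.00001, 0.20))          # 0.0
```

Two million 200 ms invocations at 512 MB come to 200,000 GB-seconds; at these placeholder rates that is $2.40, and the bill drops to zero when nothing runs.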

On-Demand Scalability

The serverless architecture supports seamless scaling in response to varying demand levels. When more users access the application or perform resource-intensive operations, serverless platforms automatically allocate additional resources to handle the workload. This elasticity ensures the application runs smoothly, even during traffic spikes, while scaling back during low demand to minimize costs. For instance, an e-commerce app could accommodate holiday season surges without any manual intervention from the development team.
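A rough way to reason about that elasticity is Little's law: the number of executions in flight equals the request rate multiplied by the average execution time. The traffic figures here are invented for illustration, and real platforms also enforce concurrency limits that can cap scaling.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Little's law: executions in flight = arrival rate * average execution time."""
    return math.ceil(requests_per_second * avg_duration_s)


# Invented traffic figures for an e-commerce checkout function.
normal_day = required_concurrency(50, 0.5)       # 25 concurrent executions
holiday_peak = required_concurrency(2_000, 0.5)  # 1000 concurrent executions
print(normal_day, holiday_peak)  # 25 1000
```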

Enhanced Flexibility and Agility

Developers can easily add or update individual functions without impacting other components, enabling faster iteration cycles. This modular approach also allows teams to build, test, and deploy new features independently, enhancing productivity. Serverless platforms often offer pre-built templates and integrations with code repositories, which help streamline custom app development. Existing code can be reused efficiently across multiple applications, minimizing repetitive work.

Reduced Latency Through Proximity

Major cloud vendors operate data centers worldwide. When a function is deployed to multiple regions or edge locations, requests can be served from the location closest to the user, so data travels shorter distances and responses arrive faster. Such latency reduction can be crucial for applications that rely on real-time interactions, like online gaming or live streaming services.
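Proximity only helps where the code is actually deployed. This sketch, with hypothetical region names and latency measurements, routes a request to the lowest-latency region among those hosting the function; real platforms make this decision with their own routing and DNS infrastructure.

```python
def nearest_region(latencies_ms: dict[str, float], deployed: set[str]) -> str:
    """Pick the deployed region with the lowest measured round-trip time."""
    candidates = {region: ms for region, ms in latencies_ms.items() if region in deployed}
    if not candidates:
        raise ValueError("function is not deployed in any measured region")
    return min(candidates, key=candidates.get)


# Hypothetical round-trip times from one user's location.
measured = {"eu-west": 18.0, "us-east": 95.0, "ap-south": 160.0}
print(nearest_region(measured, {"us-east", "ap-south"}))  # us-east
print(nearest_region(measured, {"eu-west", "us-east"}))   # eu-west
```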

Access to Advanced Infrastructure Without Large Capital Investment

Adopting serverless architecture enables organizations to leverage the robust infrastructure of leading technology companies without hefty upfront investments. Building similar server resources in-house could be prohibitively expensive, especially for smaller firms. With serverless, companies gain access to high-performance computing, storage, and networking solutions backed by enterprise-grade security and scalability, typically reserved for large corporations.

What are Microservices?

Microservices, or microservices architecture, is a cloud-centric approach that structures an application as a suite of loosely coupled, independent modules. Each microservice operates autonomously with its own technology stack, database, and management system. This separation makes it possible to scale and manage individual parts without impacting the entire system.

Communication among microservices typically occurs through REST APIs, event streaming, or message brokers, ensuring efficient data flow across the application. This modular setup lets organizations organize microservices by business function, such as order processing or search, with each confined within a “bounded context” to prevent interference across services.
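Here is a toy, in-memory illustration of broker-based communication, with hypothetical service and topic names. It has queue semantics (each message is consumed once) and none of the durability or delivery guarantees of real brokers; the point is that the order service and the shipping service share only a topic name, not each other's code or database.

```python
from collections import defaultdict, deque


class MessageBroker:
    """Toy in-memory broker: producers and consumers share only topic names."""

    def __init__(self) -> None:
        self._queues: defaultdict[str, deque] = defaultdict(deque)

    def publish(self, topic: str, message: dict) -> None:
        self._queues[topic].append(message)

    def consume(self, topic: str):
        """Yield pending messages in the order they were published."""
        queue = self._queues[topic]
        while queue:
            yield queue.popleft()


broker = MessageBroker()
# The order service emits events instead of calling the shipping service directly.
broker.publish("order.placed", {"order_id": 42, "items": 3})
broker.publish("order.placed", {"order_id": 43, "items": 1})

# The shipping service, in its own bounded context, processes events at its own pace.
shipped = [msg["order_id"] for msg in broker.consume("order.placed")]
print(shipped)  # [42, 43]
```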

Microservices thrive alongside cloud infrastructure, as both enable rapid development and scalability. With cloud adoption on the rise, investments in microservices are forecast to surpass $6 billion within four years.

From a business perspective, microservices offer distinct advantages:

Seamless updates: Teams can update specific services without affecting the overall application, reducing risk and downtime.

Flexible technology choices: Microservices enable diverse technology stacks and languages, allowing teams to select the best tools per component.

Independent scalability: Each service scales independently based on demand, ensuring optimal resource usage and performance across the application.

How Does Microservices Architecture Work?

Microservices architecture operates by dividing applications into independent, self-sufficient components, each designed to handle a specific function.

Here’s a deeper look at the process:

Core Concept of Microservices

In microservices, each service functions as an autonomous unit that fulfills a designated role within the application. These components run independently and remain isolated from each other, ensuring resilience and modularity. This architecture enables services to operate without interference, even if other components experience issues.

Containerized Execution

Typically, microservices are deployed within containers, like those created using Docker. Containers are packaged environments containing all necessary code, libraries, and dependencies required by each microservice. This ensures consistency in various environments, simplifying scaling and maintenance. Docker is widely adopted for containerized microservices due to its flexibility and ease of use, allowing teams to create efficient, portable applications.

Stages of Microservices Development

Decomposition: In this initial phase, the application’s core functionalities are dissected into smaller, manageable services. Each microservice addresses a specific function, which can range from processing payments to handling user authentication. This decentralized model allows teams to tackle each function individually, fostering a clear division of labor and better resource allocation.

Design: Once each microservice’s purpose is defined, the relationships and dependencies among them are mapped. This step involves creating a hierarchy, indicating which services rely on others to function optimally. Effective design minimizes potential bottlenecks by establishing clear communication protocols and dependencies between services.

Development: When the architecture is established, development teams (usually small units of 2-5 developers) begin building each service. By working in smaller teams focused on a single service, development cycles are faster and more efficient. Each team can implement specific technologies, frameworks, or programming languages best suited for their assigned service.

Deployment: Deployment options for microservices are versatile. Services can be deployed in isolated containers, virtual machines (VMs), or even as functions in a serverless environment, depending on the application’s infrastructure needs. Deploying containers provides scalability and flexibility, as each service can be updated or scaled independently without disrupting other components.
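The dependency mapping from the design stage also yields a safe deployment order. This sketch uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical set of services: each service comes after everything it depends on, and a dependency cycle raises `graphlib.CycleError`.

```python
from graphlib import CycleError, TopologicalSorter


def deployment_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return services ordered so each one follows all of its dependencies.

    `dependencies` maps each service to the services it calls. Raises
    CycleError when services depend on each other in a loop.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical services and the services each one depends on.
services = {
    "api-gateway": {"orders", "auth"},
    "orders": {"payments", "inventory"},
    "payments": {"auth"},
    "inventory": set(),
    "auth": set(),
}
print(deployment_order(services))

try:
    deployment_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("cycle detected: services a and b depend on each other")
```

A cycle is usually a sign that the decomposition step drew a boundary in the wrong place.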

Advantages of Microservices Architecture

Microservices architecture addresses the limitations of monolithic systems, offering flexibility and enabling feature enhancements individually. This architecture is inherently scalable and allows streamlined management.

Here are the primary advantages:

Component-Based Structure

Microservices break applications into independent, smaller services. Each component is isolated, enabling developers to modify or update specific services without impacting the whole system. Components can be developed, tested, and deployed separately, enhancing control over each service.

Decentralized Data Management

Each microservice operates with its own database, which improves isolation and flexibility. If one service has a vulnerability, the issue is more easily contained, protecting other data within the system. Teams can apply tailored security measures to specific services, prioritizing the components that handle critical data.

Risk Mitigation

Microservices limit risk by isolating failures. If one service fails, its work can be redirected to other healthy instances, so the rest of the application keeps running. Unlike monolithic systems, where a single failure can disrupt the entire application, microservices maintain stability and reduce downtime.
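A common way to keep one failing service from dragging down its callers is the circuit breaker pattern, shown here as a minimal sketch (the post itself does not prescribe it). After `max_failures` consecutive errors the breaker opens and serves a fallback immediately; once `reset_after_s` has passed, it lets a trial call through. The thresholds, service name, and fallback are illustrative.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Fail fast with a fallback while a downstream service looks unhealthy."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after_s:
                return fallback()  # open: skip the failing service entirely
            # Half-open: allow one trial call; a single failure reopens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()
            return fallback()
        self.failures = 0  # success closes the circuit and clears the count
        return result


breaker = CircuitBreaker(max_failures=2, reset_after_s=60)


def fetch_recommendations() -> list[str]:
    raise ConnectionError("recommendation service unavailable")  # simulated outage


for _ in range(3):
    # The first two calls fail and fall back; the third fails fast without calling.
    print(breaker.call(fetch_recommendations, lambda: ["bestsellers"]))
```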

Scalability

Microservices excel at scalability, making them an ideal choice for growing applications. Netflix, for example, restructured its platform around microservices and uses Node.js for parts of its backend, reportedly saving billions through increased efficiency and modular scalability. Each service can scale independently, allowing applications to handle fluctuating demand without overhauling the entire system.

Compatibility with Agile and DevOps

Microservices align with Agile and DevOps methodologies, empowering small teams to own entire tasks, including individual services. This compatibility facilitates rapid development cycles, continuous integration, and efficient team collaboration, enhancing adaptability and productivity.

Difference Between Serverless and Microservices Architecture

Microservices and serverless architectures, while both aimed at enhancing modularity and scalability, differ fundamentally. Here’s a side-by-side comparison to clarify how each framework operates and the advantages it brings.

Granularity

Microservices divide large applications into smaller, standalone services, each responsible for a specific business function. These services can be developed, deployed, and scaled independently, ensuring precise control over specific functionalities.

Serverless operates at a finer granularity, breaking applications down into functions. Each function performs a single, focused task and is triggered by specific events. This approach takes modularity even further.

Scalability

Serverless automatically scales functions according to the demand, activating additional resources only as needed. Cloud providers handle all infrastructure management, letting developers focus on code rather than configuration.

Microservices allow each service to be scaled independently, but scaling may require manual configuration or automated systems. This independence provides flexibility but often involves greater setup and monitoring efforts.
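For the automated case, many autoscalers use a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas equal the ceiling of current replicas multiplied by the current metric divided by the target metric), clamped to configured bounds. It omits the HPA's tolerance band and stabilization windows, and the utilization numbers are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_pct: float,
                     target_cpu_pct: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to observed vs. target utilization."""
    raw = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, 90, 60))    # 6: running hot, scale out
print(desired_replicas(4, 30, 60))    # 2: mostly idle, scale in
print(desired_replicas(10, 120, 50))  # 10: the raw value of 24 is capped at max_replicas
```

Choosing `min_replicas`, `max_replicas`, and the target is exactly the setup and monitoring effort the paragraph above refers to.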

Development and Deployment

Serverless enables streamlined development and deployment, simplifying operational complexities. Cloud providers abstract infrastructure management, supporting faster continuous integration and delivery cycles. Functions can be deployed individually, promoting rapid iteration and agile development.

Microservices development involves containers, such as Docker, to package services. This approach demands coordination for inter-service communication, fault tolerance, and data consistency. While it provides independence, it also introduces operational overhead and requires comprehensive DevOps management.

Runtime

Serverless functions run in a stateless environment. Each function executes, completes, and loses its state immediately afterward, making it ideal for tasks that don’t need persistent data storage.

Microservices are deployed to virtual machines (VMs) or containers, allowing them to retain state over time. This persistence suits applications that require continuous data storage and retrieval across sessions.

Cost

Serverless follows a pay-per-use model, where costs align directly with the volume of events processed. This flexibility lowers overall expenses, especially for applications with fluctuating or low-frequency usage.

Microservices require dedicated infrastructure, resulting in fixed costs for resources even when not actively processing requests. This model may be less cost-effective for applications with inconsistent traffic but can be advantageous for high-demand services.
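The cost trade-off can be framed as a break-even volume: below it, paying per request is cheaper than a fixed always-on bill; above it, dedicated capacity wins. The dollar figures are hypothetical, and the sketch ignores real-world factors such as free tiers, data transfer, and operations staff time.

```python
def break_even_requests(fixed_monthly_cost: float, cost_per_million: float) -> float:
    """Monthly requests at which pay-per-use spend equals a fixed infrastructure bill."""
    return fixed_monthly_cost / cost_per_million * 1_000_000


def cheaper_option(monthly_requests: int, fixed_monthly_cost: float,
                   cost_per_million: float) -> str:
    """Compare pay-per-use spend at a given volume against the fixed bill."""
    usage_cost = monthly_requests / 1_000_000 * cost_per_million
    return "serverless" if usage_cost < fixed_monthly_cost else "always-on"


# Hypothetical: $300/month for always-on containers vs. $2.00 per million invocations.
print(break_even_requests(300.0, 2.0))          # 150000000.0
print(cheaper_option(10_000_000, 300.0, 2.0))   # serverless
print(cheaper_option(500_000_000, 300.0, 2.0))  # always-on
```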

Infrastructure Management

In serverless, the cloud provider manages all infrastructure. Developers don’t handle provisioning, maintenance, or scaling, so they can focus solely on coding and deployment.

Microservices require developers to oversee the entire tech stack, including infrastructure, deployment, and networking. This approach provides control but demands expertise in DevOps practices like CI/CD and infrastructure management.

Conclusion

Deciding between serverless and microservices architecture depends on the unique requirements of your business. Serverless provides a streamlined, cost-effective solution for dynamic, event-driven tasks, allowing developers to focus solely on code.

Microservices, on the other hand, offer greater flexibility and control, making them suitable for complex applications that need independent scalability and resilience. Both architectures have their advantages, and understanding their differences helps in making an informed decision that aligns with your scalability, cost, and operational goals.

Ready to optimize your software architecture? Reach out to us to discuss which solution fits best for your business needs.

Source URL: https://www.techaheadcorp.com/blog/serverless-vs-microservices-architecture/


🚨URGENT DISTRESS CALL

I am a mother of 5 children from Gaza. I lost my husband, my home, and everything I own in the war. I am writing to you in great pain, with the hope that you will heed my call and save my family.📍🍉🍉💔😭😭

This is my family of 6 people and we now live in a tent💔💔🍉

This is our house that was destroyed by the occupation😭😭😭💔💔🍉

This is our source of livelihood, but the occupation burned and destroyed it and we became without income😭😭💔🍉📍

This tent we live in is not fit for human habitation.🍉🍉📍💔💔😭

“Help me survive and go home 🏠💔”

My campaign is protected on GoFundMe for a year 🍉🍉📍📍🚨

The situation is very difficult, and my family needs support to survive these harsh conditions. Every donation, no matter how small, may be the difference that helps us provide food and shelter💔💔😭😭🍉📍

@fly-sky-high-09 @awetistic-things @imjustheretotrytohelp @riding-with-the-wild-hunt @wellsbering @blomstermjuk @innovatorbunny @operationladybug @acehimbo @butch-king-frankenstein @butchjeremyfragrance @ohjinyoungblr @rememberthelaughter2016 @parfaithaven @huznilal-blog @saintverse @bagofbonesmp3 @anneemay-blog @ankle-beez @thatsonehellofabird @sunidentifiables @neechees

@maester-cressen @lampsbian @sundung @shinydreamtacoprune-blog @rob-os-17 @brokenbackmountain @unwinni3 @whateveroursoulsaremadeoff @cultreslut @halfbloodfanboy @pontaoski @fei-huli @elbiotipo @selkiebrides @bloodandgutsyippee @wherethatoldtraingoes2 @ibtisams-blog @ap-kinda-lit @frigidwife @vetted-gaza-funds @gazagfmboost @nabulsi27

#architecture#city#clouds#decor#free gaza#free gaza 🇵🇸#free palestine#free palestine 🇵🇸#free use slvt#free 🍉#free game#freepalastine🇵🇸#i stand with palestine 🇵🇸#from the river to the sea 🇵🇸#save palestine 🇵🇸#free palestine 🇵🇸🍉#🍉🍉🍉#palestine 🍉#watermelon 🍉#gaza 🍉#save 🍉#🇵🇸🇵🇸🇵🇸#maria gjieli#hfw photomode#dfb frauen#this is what makes us girls#dragon age#uh#mob psycho 100#ask me anything

14K notes


Text

Why AWS is Becoming Essential for Modern IT Professionals

In today's fast-paced tech landscape, the integration of development and operations has become crucial for delivering high-quality software efficiently. AWS DevOps is at the forefront of this transformation, enabling organizations to streamline their processes, enhance collaboration, and achieve faster deployment cycles. For IT professionals looking to stay relevant in this evolving environment, pursuing AWS DevOps training in Hyderabad is a strategic choice. Let’s explore why AWS DevOps is essential and how training can set you up for success.

The Rise of AWS DevOps

1. Enhanced Collaboration

AWS DevOps emphasizes the collaboration between development and operations teams, breaking down silos that often hinder productivity. By fostering communication and cooperation, organizations can respond more quickly to changes and requirements. This shift is vital for businesses aiming to stay competitive in today’s market.

2. Increased Efficiency

With AWS DevOps practices, automation plays a key role. Tasks that were once manual and time-consuming, such as testing and deployment, can now be automated using AWS tools. This not only speeds up the development process but also reduces the likelihood of human error. By mastering these automation techniques through AWS DevOps training in Hyderabad, professionals can contribute significantly to their teams' efficiency.
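The core idea behind automated testing and deployment is that each stage runs without manual intervention and a failing stage stops the release. The Python sketch below models that gating logic, with plain functions standing in for real build, test, and deploy steps; it is a hypothetical illustration, not the API of AWS CodePipeline or any other AWS service.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns a log of outcomes so a failed run shows where it stopped.
    """
    log: List[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'failed'}")
        if not ok:
            # Later stages (e.g. deploy) never run after a failure.
            log.append("pipeline stopped; remaining stages skipped")
            break
    return log


if __name__ == "__main__":
    deployed: List[str] = []

    def deploy() -> bool:
        # Hypothetical deploy step; only reached if every earlier stage passed.
        deployed.append("v1")
        return True

    stages: List[Stage] = [
        ("build", lambda: True),
        ("test", lambda: False),  # simulate a failing test suite
        ("deploy", deploy),
    ]
    for line in run_pipeline(stages):
        print(line)
    print("deployed versions:", deployed)
```

Because the deploy step only runs after the test stage passes, a broken build never reaches users; that is the human-error reduction the paragraph above describes.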

Benefits of AWS DevOps Training

1. Comprehensive Skill Development

An AWS DevOps training in Hyderabad program covers a wide range of essential topics, including:

AWS services such as EC2, S3, and Lambda

Continuous Integration and Continuous Deployment (CI/CD) pipelines

Infrastructure as Code (IaC) with tools like AWS CloudFormation

Monitoring and logging with AWS CloudWatch

This comprehensive curriculum equips you with the skills needed to thrive in modern IT environments.
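To give a flavour of the Infrastructure as Code topic listed above, here is a minimal Python sketch that builds a CloudFormation template declaring a single versioned S3 bucket and prints it as JSON. The logical ID and bucket name are hypothetical placeholders (real S3 bucket names must be globally unique), and generating the template from Python is just one option; templates are usually written directly in YAML or JSON.

```python
import json


def s3_bucket_template(logical_id: str, bucket_name: str) -> dict:
    """Build a minimal CloudFormation template declaring one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example: a single versioned S3 bucket",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "BucketName": bucket_name,
                    # Keep previous object versions so overwrites can be undone.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            # Expose the bucket's ARN once the stack is created.
            "BucketArn": {"Value": {"Fn::GetAtt": [logical_id, "Arn"]}},
        },
    }


if __name__ == "__main__":
    template = s3_bucket_template("ArtifactBucket", "example-artifacts-bucket")
    print(json.dumps(template, indent=2))
```

Saved to a file such as `bucket.json`, the output could be deployed with `aws cloudformation deploy --template-file bucket.json --stack-name demo-bucket`. Keeping the template in version control is what lets a pipeline recreate the same infrastructure on every run.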

2. Hands-On Experience

Most training programs emphasize practical, hands-on experience. You'll work on real-world projects that allow you to apply the concepts you've learned. This experience is invaluable for building confidence and competence in AWS DevOps practices.

3. Industry-Recognized Certifications

Earning AWS certifications, such as AWS Certified DevOps Engineer – Professional, can significantly enhance your resume. Completing AWS DevOps training in Hyderabad prepares you for these certifications, demonstrating your commitment to professional development and expertise in the field.

4. Networking Opportunities

Participating in an AWS DevOps training in Hyderabad program also allows you to connect with industry professionals and peers. Building a network during your training can lead to job opportunities, mentorship, and collaborative projects that can advance your career.

Career Opportunities in AWS DevOps

1. Diverse Roles

With expertise in AWS DevOps, you can pursue various roles, including:

DevOps Engineer

Site Reliability Engineer (SRE)

Cloud Architect

Automation Engineer

Each role offers unique challenges and opportunities for growth, making AWS DevOps skills highly valuable.

2. High Demand and Salary Potential

The demand for DevOps professionals, particularly those skilled in AWS, is growing rapidly. Organizations are actively seeking AWS-certified candidates who can implement effective DevOps practices, and industry reports suggest these professionals often command competitive salaries, making AWS DevOps training in Hyderabad a worthwhile investment.

3. Job Security

As more companies adopt cloud solutions and DevOps practices, the need for skilled professionals is likely to keep growing. This trend suggests that expertise in AWS DevOps can provide long-term job security and career advancement opportunities.

Staying Relevant in a Rapidly Changing Industry

1. Continuous Learning

The tech industry is continually evolving, and AWS regularly introduces new tools and features. Staying updated with these advancements is crucial for maintaining your relevance in the field. Consider pursuing additional certifications or training courses to deepen your expertise.

2. Community Engagement

Engaging with AWS and DevOps communities can provide insights into industry trends and best practices. These networks often share valuable resources, training materials, and opportunities for collaboration.

Conclusion

As the demand for efficient software delivery continues to rise, AWS DevOps expertise has become essential for modern IT professionals. Investing in AWS DevOps training in Hyderabad will equip you with the skills and knowledge needed to excel in this dynamic field.

By enhancing your capabilities in collaboration, automation, and continuous delivery, you can position yourself for a successful career in AWS DevOps. Don’t miss the opportunity to elevate your professional journey—consider enrolling in an AWS DevOps training in Hyderabad program today and unlock your potential in the world of cloud computing!

#technology#aws devops training in hyderabad#aws course in hyderabad#aws training in hyderabad#aws coaching centers in hyderabad#aws devops course in hyderabad#Cloud Computing#DevOps#AWS#AZURE#CloudComputing#Cloud Computing & DevOps#Cloud Computing Course#DeVOps course#AWS COURSE#AZURE COURSE#Cloud Computing CAREER#Cloud Computing jobs#Data Storage#Cloud Technology#Cloud Services#Data Analytics#Cloud Computing Certification#Cloud Computing Course in Hyderabad#Cloud Architecture#amazon web services

0 notes

Text

Building Your Serverless Sandbox: A Detailed Guide to Multi-Environment Deployments (or How I Learned to Stop Worrying and Love the Cloud)

Introduction

Welcome, intrepid serverless adventurers! In the wild world of cloud computing, creating a robust, multi-environment deployment pipeline is crucial for maintaining code quality and ensuring smooth transitions from development to production. This is the third part of a series; feel free to read parts 1 and 2 before continuing. This guide will walk you through the process of setting…
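The guide itself is truncated here, but the central idea of a multi-environment pipeline can be sketched: each branch maps to an isolated environment with its own state and its own safeguards. The Python sketch below assumes a hypothetical convention (`main` deploys to production behind a manual approval, `develop` to staging, and every other branch to its own sandbox) and hypothetical Terraform state paths; it is not the configuration used in the original series.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str                # environment / resource-name prefix
    state_key: str           # hypothetical per-environment Terraform state path
    requires_approval: bool  # gate production behind a manual approval


def sandbox_slug(branch: str, max_len: int = 20) -> str:
    """Turn a branch name into a short, lowercase, resource-safe identifier."""
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    return slug[:max_len].rstrip("-") or "default"


def environment_for_branch(branch: str) -> Environment:
    """Map a Git branch to its deployment environment (hypothetical convention)."""
    if branch == "main":
        return Environment("prod", "states/prod.tfstate", True)
    if branch == "develop":
        return Environment("staging", "states/staging.tfstate", False)
    # Every other branch gets its own isolated sandbox.
    slug = sandbox_slug(branch)
    return Environment(f"sandbox-{slug}", f"states/sandbox-{slug}.tfstate", False)
```

In GitLab CI, which the series appears to use, the branch name is available in the predefined `CI_COMMIT_BRANCH` variable, so a job could read it with `os.environ.get("CI_COMMIT_BRANCH", "")` and pass it to `environment_for_branch`. Separate state keys keep a sandbox's resources from colliding with staging or production.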

#automation#aws#AWS S3#CI/CD#Cloud Architecture#cloud computing#cloud security#continuous deployment#DevOps#GitLab#GitLab CI#IAM#Infrastructure as Code#multi-environment deployment#OIDC#pipeline optimization#sandbox#serverless#software development#Terraform

0 notes

Text

AI race and competition law: Balancing choice & innovation

New Post has been published on https://thedigitalinsider.com/ai-race-and-competition-law-balancing-choice-innovation/


What do we mean by an AI race?

The “Artificial Intelligence race” (or AI Race, as it is commonly referred to) has been the subject of continuous discussion and debate across the internet.

However, the question that really needs asking is: what is an “AI Race”? And if it is indeed a race, what lies at the finish line? Is it the most advanced algorithm, a hugely beneficial customer feature, or the most cost-effective ecosystem? And where will Competition Law allow for a balance of all of these in such a fast-moving space?

Before taking a deep dive, it is important to look at what companies have released, to put the “race” into context. In June 2024, Apple announced Apple Intelligence at its Worldwide Developers Conference (WWDC).

Example features announced include the ability to adjust the tone and style of written responses across applications and to prioritize urgent emails and notifications, along with the ability to write mathematical expressions in notes using the Apple Pencil and have the system give the answers in the user’s own handwriting [1].

The functionality described is supported by a more substantial cloud architecture called Private Cloud Compute (PCC), which, in summary, processes a user’s request solely to fulfil that request, without the data being visible to Apple, and deletes the data once the request has been fulfilled.

In May 2024, Microsoft announced AI-focused hardware named Copilot+ PCs. The silicon powering them has been advertised as capable of more than 40 trillion operations per second (TOPS), with Microsoft claiming it is up to twenty times more powerful and up to one hundred times more efficient for running AI workloads [2].

The backdrop to both of these announcements is OpenAI, the company that introduced ChatGPT (Chat Generative Pre-trained Transformer) and Sora to the world stage in November 2022 and February 2024, respectively [3], [4].

The AI Race and Competition Law


Latest developments aside, technology companies have greatly expanded their hardware and software offerings over the years, and with artificial intelligence (AI) functionality and on-device machine learning (ODML) becoming the norm, regulators are closely monitoring these offerings to ensure fair market access and pricing.

Competition in this, or any, sector is about more than ensuring a level playing field for businesses: it ensures consumers get a fair deal and access to a broad range of products and services, contributing to economic growth. Anti-competitive practices can result in higher prices and fewer market opportunities for other organizations. Within the United Kingdom, the role of the Competition and Markets Authority (CMA) is to promote competition within markets and tackle anti-competitive behavior.

Areas it oversees include mergers (with the ability to block them should they risk substantially reducing competition), ensuring that both individuals and businesses are informed of their rights and obligations under competition and consumer law, and protecting people from unfair trading practices that may arise from a wider market issue. Regulating technology is an area where markedly different approaches have been, and are being, taken.

The EU approach:

On 21 May 2024, the Council of the EU approved the AI Act, the world’s first standalone law governing the use of AI [5]. The law takes a risk-based approach, with requirements that differ according to the level of risk, namely:

• Unacceptable risk: AI practices considered a clear threat to fundamental rights are prohibited; examples include AI systems that manipulate human behavior in order to distort it.

• High risk: these AI systems will need to follow strict rules, including, for example, high-quality data sets and human oversight (such as human-in-the-loop systems).

• Limited risk: an AI system classed as limited risk must be designed so that individuals are informed when they are interacting with an AI system. If an AI system that generates or manipulates deepfakes is developed, the organization or individual must declare that the content has been artificially generated. [6]

The UK approach:

At the other end of the spectrum is the United Kingdom’s principles-based approach, set out in a 2023 white paper, A Pro-Innovation Approach to AI Regulation [7].

Given a limited understanding of AI, its risks, and regulatory gaps (and arguably limited confidence in the underlying legal position), this path was judged the most appropriate starting point, while recognizing the need for future legislative action. The framework for AI regulation was built on the following five principles:

• Safety, security, and robustness

• Appropriate transparency and explainability

• Fairness

• Accountability and governance

• Contestability and redress

Because regulators apply these principles sector by sector, using an outcomes-based approach that defines AI by its adaptivity and autonomy, the framework leaves room for interpretation and can create regulatory uncertainty across sectors.

The former government did not plan to introduce an AI regulator to oversee the framework. Instead, individual regulators (e.g., the Information Commissioner’s Office and Ofcom) were tasked with implementing the five principles under existing laws and regulations, and they submitted their plans to the government in April 2024 [8].

While the incumbent government pledged in its manifesto to boost funding for AI technologies, the Department for Science, Innovation and Technology has yet to publish detailed roadmaps.

The future trajectory

With such differing approaches, questions have emerged, and will continue to emerge, about how each can be applied and enforced, and how effective each will be in shaping future policymaking.

It is important to recognize that, as use cases of AI expand, both approaches will be subject to varying sectoral scrutiny while still requiring sectoral compliance. Alongside this expansion, organizations operating in sectors where regulators have limited understanding of AI systems could identify loopholes to engage in anti-competitive practices.

From a European Union perspective, intense competition within the internal market could distort the effectiveness of the AI Act, creating a need to strengthen competition standards so that they remain aligned with the EU’s constitutional values.

In the UK, the incumbent government has yet to outline its plans, and it remains to be seen whether it will continue with the principles-based approach or push for an approach along similar lines to the EU.

Conclusion

The convergence of AI understanding, regulatory principles, sectoral use cases, and wider law is not solely about an AI race. Instead, it should be viewed as a targeted effort to increase understanding, humanize use cases, and assess how compatible law and technology are with one another, both now and in the future, so that society can not just see, but feel, the benefit of AI technologies.

Bibliography

[1] Apple Intelligence announcement: Apple Newsroom (United Kingdom). (n.d.). iPadOS 18 introduces powerful intelligence features and apps for Apple Pencil. [online] Available at: https://www.apple.com/uk/newsroom/2024/06/ipados-18-introduces-powerful-intelligence-features-and-apps-for-apple-pencil/ [Accessed 25 Jun. 2024].

[2] Microsoft Copilot+ PCs announcement: Mehdi, Y. (2024). Introducing Copilot+ PCs. [online] The Official Microsoft Blog. Available at: https://blogs.microsoft.com/blog/2024/05/20/introducing-copilot-pcs/.

[3] OpenAI ChatGPT: WhatIs.com. (n.d.). What Is ChatGPT? Everything You Need to Know. [online] Available at: https://www.techtarget.com/whatis/definition/ChatGPT#:~:text=Who%20created%20ChatGPT%3F.

[4] OpenAI Sora: Roth, E. (2024). OpenAI introduces Sora, its text-to-video AI model. [online] The Verge. Available at: https://www.theverge.com/2024/2/15/24074151/openai-sora-text-to-video-ai.

[5] Passing of AI Act: Clover, W.R.L., Francesca Blythe, Arthur (2024). One Step Closer: AI Act Approved by Council of the EU. [online] Data Matters Privacy Blog. Available at: https://datamatters.sidley.com/2024/06/06/one-step-closer-ai-act-approved-by-council-of-the-eu/#:~:text=On%2021%20May%202024%2C%20the [Accessed 24 Jun. 2024].

[6] AI Act risk levels: www.wilmerhale.com. (2024). The European Parliament Adopts the AI Act. [online] Available at: https://www.wilmerhale.com/en/insights/blogs/wilmerhale-privacy-and-cybersecurity-law/20240314-the-european-parliament-adopts-the-ai-act.

[7] UK Government pro-innovation approach: GOV.UK. (2023). AI regulation: a pro-innovation approach. [online] Available at: https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach.

[8] UK regulator deadline: GOV.UK. (n.d.). Regulators’ strategic approaches to AI. [online] Available at: https://www.gov.uk/government/publications/regulators-strategic-approaches-to-ai/regulators-strategic-approaches-to-ai [Accessed 26 Jun. 2024].


#2022#2023#2024#ai#ai act#ai model#AI regulation#AI systems#ai use cases#algorithm#Announcements#apple#apple intelligence#applications#approach#apps#architecture#artificial#Artificial Intelligence#Behavior#Blog#chatGPT#chips#Cloud#Cloud Architecture#Community#Companies#competition#compliance#conference

0 notes

Text

#maviyenot#nature#photography#naturecore#landscape#aesthetic#travelling#paradise#landscape photography#sky#pink sky#painting#sea#water#colorful#advertising#art#architecture#exlore#india#nature photography#cloudy sky#flower#clouds#vintage#mountains

3K notes


Text

#cloud#cloud architecture#cloud computing#cloud hcm#cloud infrastructure#cloud solutions#cloud services

0 notes

Text

#landsccape#paradise#nature#adventure#explore#travel#travelling#naturecore#cottagecore#landscape#farmcore#flowercore#flowers#aesthetic#photography#cottage witch#cottage garden#country cottage#cozycore#cottage aesthetic#photographers on tumblr#dark acadamia aesthetic#full moon#clouds#curators on tumblr#artists on tumblr#architecture#garden#gardencore#gothic architecture

11K notes
