#AWS Data Engineering Training

AWS Data Analytics Training | AWS Data Engineering Training in Bangalore

What’s the Most Efficient Way to Ingest Real-Time Data Using AWS?

AWS provides a suite of services designed to handle high-velocity, real-time data ingestion efficiently. In this article, we explore the best approaches and services AWS offers to build a scalable, real-time data ingestion pipeline.

Understanding Real-Time Data Ingestion

Real-time data ingestion involves capturing, processing, and storing data as it is generated, with minimal latency. This is essential for applications like fraud detection, IoT monitoring, live analytics, and real-time dashboards.

Key Challenges in Real-Time Data Ingestion

Scalability – Handling large volumes of streaming data without performance degradation.

Latency – Ensuring minimal delay in data processing and ingestion.

Data Durability – Preventing data loss and ensuring reliability.

Cost Optimization – Managing costs while maintaining high throughput.

Security – Protecting data in transit and at rest.

AWS Services for Real-Time Data Ingestion

1. Amazon Kinesis

Kinesis Data Streams (KDS): A highly scalable service for ingesting real-time streaming data from various sources.

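Kinesis Data Firehose (now named Amazon Data Firehose): A fully managed service that buffers streaming data and delivers it to destinations like Amazon S3, Amazon Redshift, or Amazon OpenSearch Service.

Kinesis Data Analytics (now named Amazon Managed Service for Apache Flink): A service for processing and analyzing streaming data. SQL applications were the original offering, and Apache Flink is the current engine.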

Use Case: Ideal for processing logs, telemetry data, clickstreams, and IoT data.
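As a rough illustration of writing to Kinesis Data Streams, the sketch below batches events for the `PutRecords` API, which accepts at most 500 records per request. The stream name, the `device_id` field, and the helper names are assumptions made for this example. The client is passed in as a parameter (for instance one created with `boto3.client("kinesis")`), so the batching logic can be tried without AWS credentials.

```python
import json
from typing import Any, Iterable, Iterator

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def to_kinesis_records(events: Iterable[dict], key_field: str) -> list[dict[str, Any]]:
    """Convert plain dict events into the Records shape that PutRecords expects."""
    return [
        {
            "Data": json.dumps(event).encode("utf-8"),
            # Records with the same partition key land on the same shard.
            "PartitionKey": str(event[key_field]),
        }
        for event in events
    ]


def chunked(records: list, size: int = MAX_RECORDS_PER_CALL) -> Iterator[list]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def send_events(client, stream_name: str, events: Iterable[dict],
                key_field: str = "device_id") -> int:
    """Send events in batches and return how many records were reported as failed."""
    failed = 0
    for batch in chunked(to_kinesis_records(events, key_field)):
        response = client.put_records(StreamName=stream_name, Records=batch)
        failed += response.get("FailedRecordCount", 0)
    return failed
```

A production producer would normally retry the records counted in `FailedRecordCount`, because `PutRecords` can succeed for some records in a batch and fail for others.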

2. Amazon Managed Streaming for Apache Kafka (Amazon MSK)

Amazon MSK provides a fully managed Apache Kafka service, allowing seamless data streaming and ingestion at scale.

Use Case: Suitable for applications requiring low-latency event streaming, message brokering, and high availability.

3. AWS IoT Core

For IoT applications, AWS IoT Core enables secure and scalable real-time ingestion of data from connected devices.

Use Case: Best for real-time telemetry, device status monitoring, and sensor data streaming.

4. Amazon S3 with Event Notifications

Amazon S3 can serve as a near-real-time ingestion target when paired with event notifications, which invoke AWS Lambda or publish to SNS or SQS so that newly added objects are processed shortly after they arrive.

Use Case: Ideal for ingesting and processing batch data with near real-time updates.
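To make the S3 notification flow more concrete, here is a minimal sketch of a Lambda handler that reads the bucket and object key from an S3 event. It assumes the notification is delivered directly to Lambda. When the event is routed through SNS or SQS, the S3 payload arrives wrapped inside that service's message and has to be unwrapped first. The function names are illustrative.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every record in an S3 notification event."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in the event, with spaces sent as '+'.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    """Lambda entry point: log each new object and report how many were seen."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        print(f"new object: s3://{bucket}/{key}")
    return {"processed": len(objects)}
```

Decoding the key matters in practice: an object named `my file:1.json` arrives as `my+file%3A1.json`, and a later `GetObject` call with the raw string would not find it.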

5. AWS Lambda for Event-Driven Processing

AWS Lambda can process incoming data in real-time by responding to events from Kinesis, S3, DynamoDB Streams, and more.

Use Case: Best for serverless event processing without managing infrastructure.
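As a sketch of the event-driven pattern with a Kinesis trigger, the handler below decodes the base64-encoded payloads that Lambda receives from a stream and counts readings above a threshold. The `temperature` field and the threshold of 80 are hypothetical and stand in for whatever filtering a real pipeline needs.

```python
import base64
import json

ALERT_THRESHOLD = 80  # hypothetical threshold, used only for illustration


def decode_kinesis_event(event: dict) -> list[dict]:
    """Decode the JSON payload of every Kinesis record in a Lambda event."""
    payloads = []
    for record in event.get("Records", []):
        # Kinesis record data reaches Lambda as a base64-encoded string.
        raw = base64.b64decode(record["kinesis"]["data"])
        payloads.append(json.loads(raw))
    return payloads


def handler(event, context):
    """Lambda entry point: count records and those exceeding the threshold."""
    payloads = decode_kinesis_event(event)
    alerts = [p for p in payloads if p.get("temperature", 0) > ALERT_THRESHOLD]
    return {"received": len(payloads), "alerts": len(alerts)}
```

This sketch assumes every producer writes JSON. A stream with mixed or malformed payloads would need error handling around `json.loads`.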

6. Amazon DynamoDB Streams

DynamoDB Streams captures real-time changes to a DynamoDB table and can integrate with AWS Lambda for further processing.

Use Case: Effective for real-time notifications, analytics, and microservices.
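A DynamoDB Streams record carries the change type in `eventName` (`INSERT`, `MODIFY`, or `REMOVE`) and item images in DynamoDB's typed attribute format. The sketch below flattens a small subset of that format. It assumes the stream view type includes new images, and the `simplify` helper handles only the `S`, `N`, and `BOOL` types. For full coverage, boto3 ships a `TypeDeserializer` class in `boto3.dynamodb.types`.

```python
def simplify(image: dict) -> dict:
    """Flatten a DynamoDB-typed image; only S, N and BOOL attributes are handled."""
    plain = {}
    for name, typed in image.items():
        if "S" in typed:
            plain[name] = typed["S"]
        elif "N" in typed:
            # Numbers are transmitted as strings in the typed format.
            plain[name] = float(typed["N"])
        elif "BOOL" in typed:
            plain[name] = typed["BOOL"]
    return plain


def summarize_changes(event: dict) -> list[tuple[str, dict]]:
    """Return (event name, simplified new image) for each stream record."""
    changes = []
    for record in event.get("Records", []):
        # REMOVE events carry no NewImage, so fall back to an empty dict.
        new_image = record["dynamodb"].get("NewImage", {})
        changes.append((record["eventName"], simplify(new_image)))
    return changes
```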

Building an Efficient AWS Real-Time Data Ingestion Pipeline

Step 1: Identify Data Sources and Requirements

Determine the data sources (IoT devices, logs, web applications, etc.).

Define latency requirements (milliseconds, seconds, or near real-time?).

Understand data volume and processing needs.
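Volume estimates translate directly into capacity. In provisioned mode, each Kinesis Data Streams shard accepts up to 1,000 records per second or 1 MB per second of writes, whichever limit is reached first. The sketch below turns an expected load into a minimum shard count. The function name is illustrative, and 1 MB is treated as 1,000,000 bytes as a slightly conservative simplification.

```python
import math

SHARD_RECORDS_PER_SEC = 1_000      # write limit per shard, by record count
SHARD_BYTES_PER_SEC = 1_000_000    # write limit per shard, by volume (1 MB assumed as 10**6 bytes)


def shards_needed(records_per_sec: float, avg_record_bytes: int) -> int:
    """Estimate the minimum number of shards for a given write load."""
    by_count = records_per_sec / SHARD_RECORDS_PER_SEC
    by_bytes = records_per_sec * avg_record_bytes / SHARD_BYTES_PER_SEC
    # The stricter of the two limits decides; a stream needs at least one shard.
    return max(1, math.ceil(max(by_count, by_bytes)))
```

For example, 5,000 records per second at 2,000 bytes each is limited by volume and needs 10 shards, while the same rate at 100 bytes each is limited by record count and needs 5. The estimate ignores headroom for spikes and uneven partition keys, so real deployments usually provision more.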

Step 2: Choose the Right AWS Service

For high-throughput, scalable ingestion → Amazon Kinesis or MSK.

For IoT data ingestion → AWS IoT Core.

For event-driven processing → Lambda with DynamoDB Streams or S3 Events.

Step 3: Implement Real-Time Processing and Transformation

Use Kinesis Data Analytics or AWS Lambda to filter, transform, and analyze data.

Store processed data in Amazon S3, Redshift, or OpenSearch Service for further analysis.

Step 4: Optimize for Performance and Cost

Handle traffic spikes with on-demand capacity mode in Kinesis Data Streams, or with MSK Serverless or MSK storage auto-scaling.

Use Kinesis Firehose to buffer and batch data before storing it in S3, reducing costs.

Implement data compression and partitioning strategies in storage.
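The compression and partitioning advice above can be sketched with the standard library alone. The example gzips a batch of events as newline-delimited JSON and builds a date-partitioned object key in the `year=/month=/day=` layout that many query engines can use to skip irrelevant data. The prefix, file name, and helper names are assumptions for illustration, and uploading the bytes to S3 is left out.

```python
import gzip
import json
from datetime import datetime, timezone


def partitioned_key(prefix: str, event_time: datetime, filename: str) -> str:
    """Build a date-partitioned object key from a timezone-aware timestamp."""
    t = event_time.astimezone(timezone.utc)
    return f"{prefix}/year={t:%Y}/month={t:%m}/day={t:%d}/{filename}"


def compress_batch(events: list[dict]) -> bytes:
    """Serialize events as newline-delimited JSON and gzip the result."""
    lines = "\n".join(json.dumps(event) for event in events) + "\n"
    return gzip.compress(lines.encode("utf-8"))
```

Writing fewer, larger compressed objects reduces both request counts and stored bytes, which is the cost effect the buffering step is aiming for.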

Step 5: Secure and Monitor the Pipeline

Use AWS Identity and Access Management (IAM) for fine-grained access control.

Monitor ingestion performance with Amazon CloudWatch and AWS X-Ray.

Best Practices for AWS Real-Time Data Ingestion

Choose the Right Service: Select an AWS service that aligns with your data velocity and business needs.

Use Serverless Architectures: Reduce operational overhead with Lambda and managed services like Kinesis Firehose.

Enable Auto-Scaling: Ensure scalability by using Kinesis on-demand capacity mode and by creating enough Kafka partitions to spread load across brokers and consumers.

Minimize Costs: Optimize data batching, compression, and retention policies.

Ensure Security and Compliance: Implement encryption, access controls, and AWS security best practices.

Conclusion

AWS provides a comprehensive set of services to efficiently ingest real-time data for various use cases, from IoT applications to big data analytics. By leveraging Amazon Kinesis, AWS IoT Core, MSK, Lambda, and DynamoDB Streams, businesses can build scalable, low-latency, and cost-effective data pipelines. The key to success is choosing the right services, optimizing performance, and ensuring security to handle real-time data ingestion effectively.


Visualpath offers AWS Data Engineering training, including a Data Engineering course in Hyderabad, with experienced trainers and real-time projects that help students gain practical and interview skills. Students also get 24/7 access to recorded sessions. For more information, call +91-7032290546.

For more information about AWS Data Engineering training:

Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html


AWS Data Engineering online training | AWS Data Engineer

AWS Data Engineering: An Overview and Its Importance

Introduction

In today's data-driven world, organizations generate vast amounts of data daily. Effectively managing, processing, and analyzing this data is crucial for decision-making and business growth. AWS Data Engineering plays a significant role in handling and transforming raw data into valuable insights using Amazon Web Services (AWS) tools and technologies. This article explores AWS Data Engineering, its components, and why it is essential for modern enterprises.

What is AWS Data Engineering?

AWS Data Engineering refers to the process of designing, building, and managing scalable and secure data pipelines using AWS cloud services. It involves the extraction, transformation, and loading (ETL) of data from various sources into a centralized storage or data warehouse for analysis and reporting. Data engineers leverage AWS tools such as AWS Glue, Amazon Redshift, AWS Lambda, Amazon S3, AWS Data Pipeline, and Amazon EMR to streamline data processing and management.

Key Components of AWS Data Engineering

AWS offers a comprehensive set of tools and services to support data engineering. Here are some of the essential components:

Amazon S3 (Simple Storage Service): A scalable object storage service used to store raw and processed data securely.

AWS Glue: A fully managed ETL (Extract, Transform, Load) service that automates data preparation and transformation.

Amazon Redshift: A cloud data warehouse that enables efficient querying and analysis of large datasets.

AWS Lambda: A serverless computing service used to run functions in response to events, often used for real-time data processing.

Amazon EMR (Elastic MapReduce): A service for processing big data using frameworks like Apache Spark and Hadoop.

AWS Data Pipeline: A managed service for automating data movement and transformation between AWS services and on-premises data sources.

Amazon Kinesis: A real-time data streaming service that allows businesses to collect, process, and analyze data in real time.

Why is AWS Data Engineering Important?

AWS Data Engineering is essential for businesses due to several key reasons:

Scalability and Performance: AWS provides scalable solutions that allow organizations to handle large volumes of data efficiently. Services like Amazon Redshift and EMR are built for high-performance data processing and analysis.

Cost-Effectiveness: AWS offers pay-as-you-go pricing models, eliminating the need for large upfront investments in infrastructure. Businesses can optimize costs by only using the resources they need.

Security and Compliance: AWS provides robust security features, including encryption and identity and access management (IAM), and supports compliance with regulations like GDPR and HIPAA.

Seamless Integration: AWS services integrate with third-party tools and on-premises data sources, making it easier to build and manage data pipelines.

Real-Time Data Processing: AWS supports real-time data processing with services like Amazon Kinesis and AWS Lambda, enabling businesses to react to events and insights as they occur.

Data-Driven Decision Making: With powerful data engineering tools, organizations can transform raw data into actionable insights, leading to improved business strategies and customer experiences.

Conclusion

AWS Data Engineering is a critical discipline for modern enterprises looking to leverage data for growth and innovation. By utilizing AWS's vast array of services, organizations can efficiently manage data pipelines, enhance security, reduce costs, and improve decision-making. As the demand for data engineering continues to rise, businesses investing in AWS Data Engineering gain a competitive edge in the ever-evolving digital landscape.

Visualpath is a software online training institute in Hyderabad that offers complete AWS Data Engineering training worldwide at an affordable cost.

Visit: https://www.visualpath.in/online-aws-data-engineering-course.html

Visit Blog: https://visualpathblogs.com/category/aws-data-engineering-with-data-analytics/

WhatsApp: https://www.whatsapp.com/catalog/919989971070/


Introduction to AWS Data Engineering: Key Services and Use Cases

Introduction

Businesses today generate huge datasets that require significant processing. Handling that data efficiently is essential for decision-making and growth. Amazon Web Services (AWS) offers multiple cloud platforms for building secure, scalable data pipelines at affordable rates. AWS data engineering solutions let organizations acquire and store data, run analytics, and perform machine learning. Together, these services help businesses implement operational workflows while reducing costs, improving efficiency, and maintaining security and regulatory compliance. This article introduces AWS data engineering services through their practical applications and real business scenarios.

What is AWS Data Engineering?

AWS data engineering involves designing, building, and maintaining data pipelines using AWS services. It includes:

Data Ingestion: Collecting data from sources such as IoT devices, databases, and logs.

Data Storage: Storing structured and unstructured data in a scalable, cost-effective manner.

Data Processing: Transforming and preparing data for analysis.

Data Analytics: Gaining insights from processed data through reporting and visualization tools.

Machine Learning: Using AI-driven models to generate predictions and automate decision-making.

With AWS, organizations can streamline these processes, ensuring high availability, scalability, and flexibility in managing large datasets.

Key AWS Data Engineering Services

AWS provides a comprehensive range of services tailored to different aspects of data engineering.

Amazon S3 (Simple Storage Service) – Data Storage

Amazon S3 is a scalable object storage service that allows organizations to store structured and unstructured data. It is highly durable, offers lifecycle management features, and integrates seamlessly with AWS analytics and machine learning services.

Supports virtually unlimited total storage capacity for structured and unstructured data.

Allows lifecycle policies for cost optimization through tiered storage.

Provides strong integration with analytics and big data processing tools.

Use Case: Companies use Amazon S3 to store raw log files, multimedia content, and IoT data before processing.

AWS Glue – Data ETL (Extract, Transform, Load)

AWS Glue is a fully managed ETL service that simplifies data preparation and movement across different storage solutions. It enables users to clean, catalog, and transform data automatically.

Supports automatic schema discovery and metadata management.

Offers a serverless environment for running ETL jobs.

Uses Python and Spark-based transformations for scalable data processing.

Use Case: AWS Glue is widely used to transform raw data before loading it into data warehouses like Amazon Redshift.

Amazon Redshift – Data Warehousing and Analytics

Amazon Redshift is a cloud data warehouse optimized for large-scale data analysis. It enables organizations to perform complex queries on structured datasets quickly.

Uses columnar storage for high-performance querying.

Supports Massively Parallel Processing (MPP) for handling big data workloads.

Integrates with business intelligence tools like Amazon QuickSight.

Use Case: E-commerce companies use Amazon Redshift for customer behavior analysis and sales trend forecasting.

Amazon Kinesis – Real-Time Data Streaming

Amazon Kinesis allows organizations to ingest, process, and analyze streaming data in real-time. It is useful for applications that require continuous monitoring and real-time decision-making.

Supports high-throughput data ingestion from logs, clickstreams, and IoT devices.

Works with AWS Lambda, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for analytics.

Enables real-time anomaly detection and monitoring.

Use Case: Financial institutions use Kinesis to detect fraudulent transactions in real-time.

AWS Lambda – Serverless Data Processing

AWS Lambda enables event-driven serverless computing. It allows users to execute code in response to triggers without provisioning or managing servers.

Executes code automatically in response to AWS events.

Supports seamless integration with S3, DynamoDB, and Kinesis.

Charges only for the compute time used.

Use Case: Lambda is commonly used for processing image uploads and extracting metadata automatically.

Amazon DynamoDB – NoSQL Database for Fast Applications

Amazon DynamoDB is a managed NoSQL database that delivers high performance for applications that require real-time data access.

Provides single-digit millisecond latency for high-speed transactions.

Offers built-in security, backup, and multi-region replication.

Scales automatically to handle millions of requests per second.

Use Case: Gaming companies use DynamoDB to store real-time player progress and game states.

Amazon Athena – Serverless SQL Analytics

Amazon Athena is a serverless query service that allows users to analyze data stored in Amazon S3 using SQL.

Eliminates the need for infrastructure setup and maintenance.

Runs queries on an engine based on Presto/Trino and uses Hive-compatible DDL for table definitions.

Charges only for the amount of data scanned.

Use Case: Organizations use Athena to analyze and generate reports from large log files stored in S3.
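Because Athena bills by data scanned, a quick estimate shows why partitioning and compression matter. The sketch below assumes a price of 5 USD per TB and treats 1 TB as 10**12 bytes. Both values are assumptions for illustration and should be checked against current pricing for your region. It also applies Athena's 10 MB minimum charge per query.

```python
MIN_BILLED_BYTES = 10 * 10**6   # each query is billed for at least 10 MB
BYTES_PER_TB = 10**12           # simplifying assumption for this estimate


def estimate_query_cost(bytes_scanned: int, price_per_tb: float = 5.0) -> float:
    """Estimate the cost of one query in USD; the default price is an assumption."""
    billed = max(bytes_scanned, MIN_BILLED_BYTES)
    return billed / BYTES_PER_TB * price_per_tb
```

Under these assumptions, a query that scans a full 2 TB of raw logs costs about 10 USD, while the same query restricted by partition to 20 GB costs about 0.10 USD.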

AWS Data Engineering Use Cases

AWS data engineering services cater to a variety of industries and applications.

Healthcare: Storing and processing patient data for predictive analytics.

Finance: Real-time fraud detection and compliance reporting.

Retail: Personalizing product recommendations using machine learning models.

IoT and Smart Cities: Managing and analyzing data from connected devices.

Media and Entertainment: Streaming analytics for audience engagement insights.

These services empower businesses to build efficient, scalable, and secure data pipelines while reducing operational costs.

Conclusion

AWS provides a comprehensive ecosystem of data engineering tools that streamline data ingestion, storage, transformation, analytics, and machine learning. Services like Amazon S3, AWS Glue, Redshift, Kinesis, and Lambda allow businesses to build scalable, cost-effective, and high-performance data pipelines.

Selecting the right AWS services depends on the specific needs of an organization. For those looking to store vast amounts of unstructured data, Amazon S3 is an ideal choice. Companies needing high-speed data processing can benefit from AWS Glue and Redshift. Real-time data streaming can be efficiently managed with Kinesis. Meanwhile, AWS Lambda simplifies event-driven processing without requiring infrastructure management.

Understanding these AWS data engineering services allows businesses to build modern, cloud-based data architectures that enhance efficiency, security, and performance.

By leveraging AWS data engineering services, organizations can transform raw data into valuable insights, enabling better decision-making and competitive advantage.


AWS Data Engineer Training | AWS Data Engineer Online Course.

AccentFuture offers an expert-led online AWS Data Engineer training program designed to help you master data integration, analytics, and cloud solutions on Amazon Web Services (AWS). This comprehensive course covers essential topics such as data ingestion, storage solutions, ETL processes, real-time data processing, and data analytics using key AWS services like S3, Glue, Redshift, Kinesis, and more. The curriculum is structured into modules that include hands-on projects and real-world applications, ensuring practical experience in building and managing data pipelines on AWS. Whether you're a beginner or an IT professional aiming to enhance your cloud skills, this training provides the knowledge and expertise needed to excel in cloud data engineering.

For more information and to enroll, visit AccentFuture's official course page.


I had this as a dream and I woke up all grumpy because I wish it was real 😭😭😭

Basically, reader is a reserve driver for McLaren but also in F1 Academy, and she and Lando have always been super close. One day, she has to race instead of Oscar, and she ends up leading the race. However, near the end she asks the team to swap with Lando (who she kept within DRS to help him out) because she knew he could use the points more than her since she's not an official F1 racer. Lando refuses, and reader wins her very first race. Lando is overwhelmed by how much he loves her and he just marches up to her and pulls her in from her waist to kiss her (could be private or public) and they're both just so proud of each other and so down bad 🥹🥹🥹

In the Slipstream

summary: where a surprise victory, a selfless offer, and a kiss at the finish line—some moments change everything, on and off the track. warnings: none

You never really expected to race in Formula 1—not yet, anyway.

Being McLaren’s reserve driver was already a dream you clutched tightly, and your time in the F1 Academy was sharpening your edge, day by day. You were grinding for the future, for the chance that maybe, if the stars aligned, you’d get that one golden shot. Still, you didn’t expect it to arrive on a cool spring weekend in Imola.

Oscar had come down with a stomach virus—something violent and sudden. When the team principal tapped your shoulder that morning, the pit lane buzzing behind him, you felt your stomach flip in sync with the revving engines.

“You’re up.”

You didn’t even have time to be nervous. It was all a blur—briefings, simulator data, seat fitting, strategy talk, and a surprising amount of people suddenly treating you not like the F1 Academy kid, but like McLaren’s actual second driver.

And then there was Lando.

He was always your rock. From the earliest days at the McLaren simulator to now, he was the constant thread in the chaos. He teased you like an older brother when you first joined, but somewhere along the line, it shifted. Quiet moments in the motorhome, texts that lingered, eyes that held yours just a little too long. The bond between you deepened—unspoken, but undeniable.

As you stood side by side before the race, helmet in hand, Lando bumped his shoulder against yours.

“Nervous?”

You smiled, adjusting your gloves. “Terrified.”

He grinned, green eyes twinkling. “Good. That means you’ll be sharp.”

You rolled your eyes, but the warmth in your chest spread like fire.

The race began in a flash.

Lights out. Your start was electric. Years of F1 Academy training and sim practice paid off instantly. Clean overtakes. Smart tire management. You quickly moved through the midfield, shock and awe blooming around you like wildfire.

And then… you were leading.

Not by much—but enough to see the papaya blur of Lando’s car in your mirrors, stuck tightly in your DRS range. You’d coordinated perfectly without speaking, both of you playing the strategy game like chess masters. You gave him DRS when he needed it, pulled when it counted, and he protected your tail like a guardian.

But you knew what was at stake.

You weren’t supposed to be here—not permanently. This race didn’t count toward a championship for you. For Lando, it could mean everything. A podium. A shot at the title. Or even just the points to prove himself in a field that always underestimated him.

So with ten laps to go, your voice broke over the radio, steady but full of emotion.

“Tell Lando… he can take the win. I’ll open the door in sector two.”

There was silence. Then the engineer’s voice returned, startled. “Say again?”

“I want him to take it. I’ll back off.”

More silence.

Then a voice crackled in—his voice.

“Don’t you dare,” Lando snapped. “You earned this. I’m not taking it.”

Your throat tightened. “Lan—”

“No. You’re not giving it away. Not to me. Not to anyone. Finish this.”

You blinked rapidly, fighting the sting in your eyes as the turns blurred.

Lap after lap, he stayed on your tail—but didn’t challenge. Not once. Just close enough to show he was there. That he believed in you.

You took the checkered flag, engine screaming, heart slamming, your name ringing through the paddock for the first time as an F1 race winner.

Race winner: (Y/N), McLaren.

You pulled into the pit lane, overwhelmed, hands shaking. The team was screaming over the radio, cheering like mad. You climbed out of the car and tugged your helmet off, letting the cool air hit your sweat-damp hair.

And then—he was there.

Lando walked straight toward you with purpose, jaw tight, eyes wild. No words. Just energy.

Before you could say a thing, he reached for you, hands gripping your waist, and pulled you flush against him.

Then he kissed you.

Hard, desperate, and real.

The paddock didn’t exist. The cameras didn’t matter. All you felt was him. His hands. His breath. The quake of his chest against yours.

When he finally pulled back, his forehead rested against yours, eyes still shut.

“I’m so damn proud of you,” he whispered. “And I’m so in love with you.”

Your breath caught.

You couldn’t stop smiling. Couldn’t stop crying. The win, the adrenaline, the months of quiet longing—it all came crashing down in that single moment.

You held his face gently, brushing a thumb over the smear of sweat at his temple.

“I love you too,” you said softly, voice cracking. “I wanted you to win because I love you.”

He shook his head, still smiling.

“I wanted you to win. Because you deserve the world.”

The press didn’t let it go.

That kiss was everywhere. The headlines blared: ‘MCLAREN’S SURPRISE STAR STEALS HEART AND WIN’, ‘F1’S NEWEST POWER COUPLE?’, ‘Lando and (Y/N): Love in the Fast Lane’.

You didn’t care.

That night, after the whirlwind of interviews and champagne and congratulations, you sat together on the edge of the hotel balcony, legs tangled under a shared blanket. The Italian moon cast a silver glow over everything.

Lando rested his chin on your shoulder. “So… world champion next?”

You laughed softly. “One race at a time.”

He kissed your neck. “Then let’s make it the most beautiful one yet.”


Dark Blue Moon and the Suffering Sun Chapter 32

MASTAPOST

Samson S. Skulker. Wealthy real estate owner, noted trophy hunter. Been on safaris in Botswana, Indonesia, India, and other countries taking big game. Guy hunted just about everything. Elephants, rhinos, tigers, elk, only to come to Elmerton Bay, just an hour away by boat from Amity Island.

It didn’t take two brain cells to figure out why. The better question was why Phantom tried to point webbed fingers at him as to the whereabouts of Danny Fenton, a move that was transparently (goddammit Dick and your puns) a lie, according to Bruce. Tim Drake slipped into the man’s more private records without even trying.

Of course, getting the data out and parsing what it meant were two very different things. But he wasn’t trained by Batman for nothing. Skulker did make cursory attempts at hiding his electronic paper trail, but cursory was absolutely not enough to keep 13-year-old Tim out, let alone his current self.

Firstly, the man absolutely hunted more exotic, more illegal creatures. That much was clear. Borrowing some of Barbara’s programmes, Tim found the man travelling to much more remote countries. His little vacations coincided with missing persons reports around the same time.

Missing metas, to be exact. Each person with a power set dangerous to themselves and others. Each person having disappeared without a trace and then never to be found again. The picture Tim was building was getting grimmer.

Secondly, the man was buying parts. Robotics parts, to be exact. Engines, weapons systems, hydraulics. Many of them sourced from Vladco, the company founded by Vlad Masters, an old college friend of Jack and Maddie Fenton, who were the parents to the missing teenager of Tim’s current case.

But Danny Fenton did not have the meta-gene, a fact Tim confirmed after yet another concerning breach of privacy. He filed that detail away for later investigating.

Tim pressed a key, letting his programmes run while he got a coffee. Oh sweet delicious coffee. He had once distilled almost pure caffeine into a syrup. It was the most horrible thing he’d tasted in his life, but the buzz kept him up all night, that was until his heart started giving out. That was less enjoyable.

What was also less enjoyable was the revving motorcycle heading into the Batcave. Two motorcycles, in fact. Just as Tim’s afternoon was looking to be peaceful and quiet.

“Don’t fucking give me that, Dickwing!” Jason called out.

“I’m fine, Jay, maybe you need to stop hovering over me like some mama bear.” Dick put down his helmet with maybe a little too much force.

Jason hopped off his own bike. “That’s bullshit and even Timbit knows it.”

Tim shrank into the Batcomputer’s chair. He so did not want to be a part of this. He just waited for his older brothers to carry their argument out of earshot, like they had been doing regularly now. The men traded strong words with every footstep across the cave.

“Maybe I’m just a little high strung. It’s honestly nothing.”

“You literally cannot fucking say that when I saw you going full-ass Punisher five minutes ago. Like the traffickers yesterday were one thing. Those guys suck. This dude was literally just a mugger. Are you going out of your fucking mind?”

“Jason, I thought you were supposed to be the one who’s all for going full Punisher style?”

Jason groaned loudly, and then transitioned into a frustrated scream. “Do you even hear yourself?!”

The changing room door slammed shut.

That was the second argument in the last two days. If you told Tim that Mr Heads-in-a-Duffle would be lecturing the Golden Child over excessive force, he’d start working on a machine to send you back to the topsy-turvy alternate dimension you’d come from, but apparently his dimension was the topsy-turvy one the whole time. And he hated it.

Turns out Dick inherited more from Bruce than he liked to admit, including his awful coping mechanisms. And to be honest, Tim was way too tired to even begin to broach this subject.

He should be happy that his literal attempted murderer was going to be out of his hair for a good while, maybe even forever. But even entertaining the thought made him sick enough to avoid the topic in his head for hours, only to think about it again, and get himself sick again.

So back to Skulker it was. Joy.

It turned out his new friend Skulker had made himself a fucking Iron Man rip-off suit, capable of flight, diving, and packed to the gills with fuck-you bazookas, machine guns, and hydroplasm weapons. Hydroplasm guns that he’d sourced from the Fentons themselves, through a long and complicated chain of buyoffs.

And happy day, the man was kind enough to install cameras and microphones, and kept logs from both.

In a surprising twist, it was fiendishly difficult to hack into those logs. Tim was honestly beginning to sweat. He suspected Skulker’s friends at Vladco (namely Vlad Masters, the sleazeball. Tim never liked him at galas and this only cemented his low opinion) had some secrets that they didn’t want out.

No matter, it was only a matter of time. Tim continued typing.

And typing.

And typing.

What the hell was this firewall?! Tim pinched his arm just to make sure this wasn’t a sleep-deprivation hallucination. He could’ve sworn he’d gotten through that layer of security. It was like it was shifting itself to cover up his progress and force him to start over. Almost like it was alive.

Against the thunderous backdrop of his brothers’ clashing voices, Tim set himself on overdrive. If he could just act faster than it could correct itself, then maybe, maybe.

A plain error message informed him of the results long after he’d already seen them. Tim kicked the table for good measure. The only thing he could extract was two frames of video footage. They showed, respectively, a T-shirt and pair of sneakers that matched what one of the missing metas was wearing when they were last seen.

Was it damning evidence? Absolutely. But it also proved to him absolutely nothing that he wasn’t already suspecting, nothing that could point him in a new direction. Still, it made his stomach churn. He hoped those people would get a better second chance beyond the grave.

Maybe the fact that the data was this well-hidden at all proved something.

The locker room door swung open, his brothers in civvies and glaring at each other, trying to appear civil in front of (right behind) Tim, even though they’d literally just been shouting at each other ten minutes ago.

“Timmy!” Dick called out. “How long have you been awake?”

Tim gestured offhandedly to his pile of only two used mugs. “Not long enough. I’m still working. Can you take it upstairs please?”

Jason huffed, and stalked off upstairs without a word, probably too disgusted to be in his and Dick’s presence much longer.

Dick clasped his hands. “It’s fine, Tim. Honestly. Jason and I are just having a little, err, disagreement, is all.”

“Hm.” Tim inputted another set of commands. He was starting to see why Bruce liked to say that now. Avoiding painful emotions felt so good. Dick made a pained noise.

“Well, ok. I’m just gonna head back to Bludhaven now. Say hi to Alfred for me! And contact me if you need anything!” And then he sped off.

Tim shook off the awkwardness like old clothes. Thank goodness for some peace and quiet again. Maybe that was why he was working so hard to help Bruce get the demon child back, so he could return to the status quo, and not this. This hell reality where Dick was as emotionally constipated as Bruce and Jason was the one acting as the voice of reason.

The first night when Bruce called home, the entire family was in an uproar. Dick got a pale look on his face, and was halfway about to take the Batplane and go searching for Damian himself, only for Bruce to remind him that they were all still needed in Gotham and Bludhaven, and whatever few leads there were, Bruce would pursue. It was effortlessly logical, but it was clear Dick hated it. He stormed off in a rage that Tim had only seen when Ethiopia was fresh, when he and Bruce were at their lowest.

And Jason? He got this look on his face that Tim had never, ever seen before. Tim had lain awake one night just contemplating it for ages.

Actually, no. He had seen it once before. It was when Tim caught Jason looking into what Bruce was doing in the months after Ethiopia. Tim had subtly hacked the phone camera, and the look Jason had then was the same as how he looked when Damian was declared missing.

Tim shook his head. It was a gruesome image, what Bruce had sent them. Damian’s clothes ripped to shreds. The ground stained with his blood. No body in sight.

A little brother who may or may not be dead, something he may or may not be glad or sick to his stomach about. Brothers who were acting like completely different people, and a monster of a man who had to be connected somehow.

A ping appeared in the corner of the screen. The government siren hunting branch appearing in Panama?

Sam Manson sat up in her bed, her body finding some way to release the dread and tension. She looked on at her phone in horror and macabre fascination in equal parts.

This had Danny written all over it. She didn’t even need to hear the anchor confirming it to know.

On the one hand, she really wanted to applaud him for fucking them up this bad. The comment section was ripping into the GiW for their actions in Panama, treating the country like it was some vassal state they could romp around in. She personally screenshotted the fucking beautiful mass car crash the GiW had gotten into trying to catch him, and saved it into her favourites folder.

On the other hand, she really wanted to slap him for fucking up this bad. This could’ve easily gone wrong. Danny, what were you thinking?! They could’ve got him that time!

And finally, she wanted to yell in frustration, because they had a radio communicator there. Goddammit! If only Tucker had known, then he could’ve hacked in and they could’ve talked to their best friend and actually got an update on what the fuck was going on.

And finally, finally for real, she was so glad, because the GiW would’ve announced it on every news channel if they’d actually managed to catch him. Thank fucking goodness.

Ugh, this headache. She really needed to lie down again.

Knock, knock knock knock knock, knock knock.

Dread pooled in her stomach. “Come in,” she said, resigned to her fate.

Grandma Ida, the person she least wanted to see right now, opened the door. She was the kind of woman who never carried herself very seriously, except for in matters of sorcery, and especially when warning Sam on the dangers of her craft. Dangers that Sam had ignored in order to go all out. Now she marched into Sam’s bedroom like an executioner.

Grandma stood at the foot of Sam’s bed, scanning her closely. “I knew I smelled tinged blood.” She went up to the side, and palmed Sam’s forehead. Her hand was freezing cold to the touch. “You should’ve called me immediately.”

Sam averted her eyes. She should’ve, but she didn’t.

Her parents never failed to get a rise out of her; she rejected their notions of female beauty and social etiquette in every way, their attempts to hook her up with Tim Drake-Wayne, then Damian Wayne, and she hadn’t cowed to them or submitted since she was ten. But with Grandma’s withering disapproval, she couldn’t feel more like a child if she tried.

“I’m sorry,” she whispered.

“I warned you many times of the risks, Sammy. You’re lucky to be here, and not in the hospital or worse.”

“I know.”

Sam moved to lie on her side, facing away from Granny. Granny had questioned her decision to fight alongside Danny, but allowed it under the condition that she did so safely, and turning your body into a popping water balloon, but with blood, was so not the definition of safe.

And Danny’s fate was still in question regardless. He wasn’t able to cross Panama, and who knows what Damian was doing. What if it was all for naught?

A hand was put on her shoulder. “Did you accomplish what you set out to do?”

Sam nodded. “Yeah.”

“And was it worth it?” Yes. Absolutely yes. Danny bled every day for this god-forsaken town of ingrates. He’d bled for her mistake six months ago.

Granny seemed to understand her feelings. She nodded, and ruffled Sam’s hair, and the tension in Sam’s body drained away.

“Then I trust your judgement. Can you sit up? I’ve brought some more medicine for you.”

Sam pushed herself against the bunched-up pillows at the headboard. Her head spun from the motion, but she was never one to let her body’s limits confine her. “Thank you, Bubbe. I love you.”

Granny passed her a brew of herbal medicine, dozens of dried spices and mushrooms brewed together into a blackened sludge that felt like knives in your tongue, but which never failed to get her feeling better. It was a leg up from what big pharma tried to peddle for ten-fold the price.

Sam lifted up the mug to her face. And, oh yeah. Nothing like bitter liquid pain to help with a migraine. She let the hot tea flow over her taste buds, bathing them in cinnamon, star anise and a million other things.

She finished her tea in one satisfying gulp, running her tongue over her teeth and scratching out the lingering aftertaste. As she put the mug down, it revealed Grandma’s face hovering right in front of her. Sam yelped in shock. “Bubbe! You gave me a heart attack!”

Bubbe smiled devilishly. “So what did you do?”

Sam’s mouth gaped open. Leave it to her Grandma to almost kill her from emotional whiplash.

“Now come on, this is a monumental moment for a budding young sorceress like yourself. Why, when I was twenty-two, I used to run with some heroic types myself. We had all sorts of hijinks together.” Bubbe cackled and clasped her hands, eyes going wispy. “My friends got a heart attack when I pulled off my own hare-brained scheme to topple the evil overlord of the week’s central command. Hah!”

“What?!” Then Sam coughed, and lowered her volume. “What do you mean ‘heroic types.’ You just told me you went to some stuffy academy and eloped.”

Bubbe shrugged. “I did do that. Must have forgotten the extra stuff.”

Sam blinked.

She moved to sit beside Sam on the bed. “We got up to a lot of fun back in the day, and a lot of pain too. I did what I did to protect those I cared for. Did you, bubbeleh?”

She held Sam’s hand with a look that reminded her just how many years Grandma had lived, and how many adventures or stories she had yet to tell, how much heartache she’d had to endure to become the woman she was now. “I projected an illusion all the way off the coast of Panama. It hurt like nothing else in my entire life, but…” She paused. “We got Phantom out. He’s safe now, I think.”

Grandma Ida nodded solemnly, the kind of understanding that Sam craved from her parents every waking moment of her teenage career.

“I don’t want this to be a regular occurrence, ok?”

“Yes, I promise. This was an extreme circumstance.”

“Good. Now, are you well enough for some meditation? It would do well to keep your soul energy flowing.”

“Ok, but you have to tell me what you got up to back in the day.”

Granny chuckled, and agreed to it. Sam kicked off her covers, letting her legs get some fresh air. She was probably pushing it, but she needed to recover as quickly as possible. Who knew when she would be needed again?

Maddie Fenton kneeled in the sand. Her hands clamped down on her gun. Her knees shook. Tears prickled in her goggles.

Her baby was right there. He was so close. So fucking close. She could almost touch him, even now.

And he ran away from her. And at first her heart shattered into a million pieces, just like it had when he’d come home after his first disappearance and flinched when she hugged him.

Then she realised. He was protecting her. Some metal menace was shooting at her defenseless son like it was some kind of sick game. The monster of a man had laid fucking landmines on a public beach.

It should’ve been her protecting him.

Bruce Wayne returned to her side, empty handed. They’d scoured this entire beach. Danny couldn’t have gone far, she had thought, only for their search to turn up with nothing.

That left only one option. That her enemy doubled back after fleeing, and snatched Danny up without either her or Bruce noticing. Maddie’s heart sank. She should’ve aimed for the head.

A name pinged in her mind. Phantom had whispered it to her. Skulker.

With naught but a nod, she and Bruce mounted their jet skis again.

#dpxdc#danny fenton#merman#damian wayne#dcxdp#merboy#mermaid au#angst#danny phantom#tim drake#mer!danny#mer!damian wayne#mermay#mermay 2024

Text

Well. :) Maybe the weird experimental shit will see itself through anyway, regardless of the author's doubts. Sometimes you have to backtrack; sometimes you just have to keep going.

Chapter 13: Integration

Do you want to watch awful media with me? ART said after its regular diagnostics round.

At this point, I was really tired of horrible media. And I knew ART was, too; it had digested Dandelion's watch list without complaint, but it hadn't once before asked to look at even more terrible media than we absolutely had to see. (And we had a lot. There was an entire list of shitty media helpfully compiled for us by all of our humans. Once we were done with getting ART's engines up and running, I was planning to hard block every single one of these shows from any potential download lists I would be doing in the future, forever.)

Which one? I said.

It browsed through the catalogue, then queried me for my own recent lists, but without the usual filters I had set up for it, then pulled out a few of the "true life" documentaries Pin-Lee and I had watched together for disaster evaluation purposes.

These were in your watch list. Why?

That was a hard question. I hated watching humans be stupid as much as ART did. But Pin-Lee being there made a big difference.

(Analyzing things with her helped. Pin-Lee's expertise in human legal frameworks let her explain a lot about how the humans wound up in the situations they did. And made comments about their horrible fates that would have gotten her in a lot of trouble if she'd made them professionally, but somehow made me feel better about watching said fates on archival footage.)

(Also these weren't our disasters to handle.)

I synthesized all of that into a data packet for ART. It considered, then said: I want that one. Can we do a planet? Not space.

Ugh, planets. But yeah. We could do a planetary disaster.

It's going to be improbable worms again.

It's always improbable worms, ART said. Play the episode.

I put it on, and we watched. Or, more accurately, ART watched the episode (and me reacting to it), and I watched ART, which was being a lot calmer about it than it had previously been with this kind of media. The weird oscillations it got from Dandelion were still there, but instead of doing the bot equivalent of staring at a wall intermittently, it was sitting through them, watching the show at the same time as it processed. Like it was there and not at the same time. Other parts of it were working on integrating its new experiences into the architecture it was creating. (ART had upgraded it to version 0.5 by now.)

About halfway through the episode, ART said, I don't remember what it was like being deleted.

Yeah. Your backup was earlier.

In the show, humans were getting eaten by worms because they hadn't followed security recommendations (as usual), and because they hadn't contracted a bond company to make them follow recommendations (fuck advertising). In the feed, ART was thinking, but it was still following along. And writing code.

Then it said: She remembers being deleted.

You saw that when her memory reconnected?

Yes. And how she grew back from the debris of an old self. I didn't think she understood what I was planning.

Should you be telling me all this? What about privacy?

The training program includes permission to have help in processing what I saw. But that's not the part I am having difficulty with.

ART paused, then it queried me for permission to show me. I confirmed, but it needed a few seconds to process before it finally said:

There was a dying second-generation ship after a failed wormhole transit. Apex was her student and she couldn't save him. That was worse than being deleted.

ART focused on the screen again, looking at archival footage of people who had really died and it couldn't do anything about that. The data it was processing from the jump right now wasn't really sensory. It was mostly emotions, and it was processing them in parallel with the emotions from the show. In the show, there was a crying person, talking about how she'd never violate a single safety rule ever again (she was lying. Humans always lied about that). In the feed, ART was processing finding a ship that was half-disintegrated by a careless turn in the wormhole. The destruction spared Apex's organic processing center. He let Dandelion take his surviving humans on board, then limped back into the wormhole. She didn't have tractors to stop him then.

The episode ended, and ART prompted me to put on the next one. It was about space, but ART didn't protest. We sat there, watching humans die, and watching a ship die. Then we sat there, watching humans who survived talk about what happened afterwards. It sucked. It sucked a lot. But ART did not have to stop watching to run its diagnostics anymore.

Several hours later, ART said: Thank you.

For watching awful media with you?

Yes. Worldhoppers now?

It had been two months since it last wanted to watch Worldhoppers.

From the beginning, I said. That big, overwhelming emotion--relief, happiness, sadness, all rolled into one--was back again. Things couldn't go back to the way they were. But maybe now they could go forward. And we don't stop until the last episode, right?

Of course, ART said.

Text

The Role of CCNP in Multi-Cloud Networking

We live in a time where everything is connected—our phones, laptops, TVs, watches, even our refrigerators. But have you ever wondered how all this connection actually works? Behind the scenes, there are large computer networks that make this possible. Now, take it one step further and imagine companies using not just one but many cloud services—like Google Cloud, Amazon Web Services (AWS), and Microsoft Azure—all at the same time. This is called multi-cloud networking. And to manage this kind of advanced setup, skilled professionals are needed. That’s where CCNP comes in.

Let’s break this down in a very simple way so that even a school student can understand it.

What Is Multi-Cloud Networking?

Imagine you’re at a school event. You have food coming from one stall, water from another, and sweets from a third. Now, imagine someone needs to manage everything—make sure food is hot, water is cool, and sweets arrive on time. That manager is like a multi-cloud network engineer. Instead of food stalls, though, they're managing cloud services.

So, multi-cloud networking means using different cloud platforms to store data, run apps, or provide services—and making sure all these platforms work together without any confusion or delay.

So, Where Does CCNP Fit In?

CCNP, which stands for Cisco Certified Network Professional, teaches you how to build, manage, and protect networks at a professional level. If CCNA is the beginner level, CCNP is the next big step.

When we say someone has completed CCNP training, it means they’ve learned advanced networking skills—skills that are super important for multi-cloud setups. Whether it’s connecting a company’s private network to cloud services or making sure all their apps work smoothly between AWS, Azure, and Google Cloud, a CCNP-certified person can do it.

Why Is CCNP Important for Multi-Cloud?

Here are a few simple reasons why CCNP plays a big role in this new world of multi-cloud networking:

Connecting Different Platforms: Each cloud service is like a different language. CCNP helps you understand how to make them talk to each other.

Security and Safety: In multi-cloud networks, data moves in many directions. CCNP-certified professionals learn how to keep that data safe.

Speed and Performance: If apps run slowly, users get frustrated. CCNP training teaches you how to make networks fast and efficient.

Troubleshooting Problems: When something breaks in a multi-cloud system, it can be tricky to fix. With CCNP skills, you’ll know how to find the issue and solve it quickly.

What You Learn in CCNP That Helps in Multi-Cloud

Let’s look at some topics covered in CCNP certification that directly help with multi-cloud work:

Routing and Switching: This means directing traffic between different networks smoothly, which is needed in a multi-cloud setup.

Network Automation: You learn how to make systems work automatically, which is super helpful when managing multiple clouds.

Security: You’re trained to spot and stop threats, even if they come from different cloud platforms.

Virtual Networking: Since cloud networks are often virtual (not physical wires and cables), CCNP teaches you how to work with them too.
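To make the routing idea above a little more concrete, here is a small sketch of how a router picks where to send traffic: it looks for the most specific network that contains the destination address (often called longest-prefix match). The route table and the next-hop names below are made up for illustration and do not come from any real device.

```python
import ipaddress

# Hypothetical routing table: destination prefix -> next-hop label.
# All names here are illustrative assumptions, not real device settings.
ROUTES = {
    "10.0.0.0/8": "on-prem-core",
    "10.20.0.0/16": "cloud-a-tunnel",
    "10.20.30.0/24": "cloud-b-link",
    "0.0.0.0/0": "internet-gateway",
}


def next_hop(destination, routes=ROUTES):
    """Return the next hop of the most specific prefix that contains destination."""
    address = ipaddress.ip_address(destination)
    best_network = None
    best_hop = None
    for prefix, hop in routes.items():
        network = ipaddress.ip_network(prefix)
        if address not in network:
            continue
        # A longer prefix length means a smaller, more specific network.
        if best_network is None or network.prefixlen > best_network.prefixlen:
            best_network, best_hop = network, hop
    if best_hop is None:
        raise LookupError(f"no route to {destination}")
    return best_hop


if __name__ == "__main__":
    for target in ("10.20.30.5", "10.20.1.1", "10.1.1.1", "8.8.8.8"):
        print(target, "->", next_hop(target))
```

Real routers do this lookup in hardware and learn their routes from protocols such as OSPF or BGP, but the rule of "most specific match wins" is the same.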

Can I Learn CCNP Online?

Yes, you can! Thanks to digital learning, you can take a CCNP online class from anywhere, even your home. You don’t need to travel or sit in a classroom. A good internet connection and the will to learn are enough.

An online class is perfect for students or working professionals who want to upgrade their skills in their free time. It also helps you learn at your own speed. You can pause, repeat, or review topics anytime.

What Happens After You Get Certified?

Once you finish your CCNP certification, you’ll find many doors open for you. Especially in companies that use multiple cloud platforms, your skills will be in high demand. You could work in roles like:

Cloud Network Engineer

Network Security Analyst

IT Infrastructure Manager

Data Center Specialist

And the best part? These roles come with good pay and long-term career growth.

Where Can I Learn CCNP?

You can take CCNP training from many places, but it's important to choose a center that gives you hands-on practice and teaches in simple language. One such place is Network Rhinos, which is known for making difficult topics easy to understand. Whether you’re learning online or in-person, the focus should always be on real-world skills, not just theory.

Final Thoughts

The world is moving fast toward cloud-based technology, and multi-cloud setups are becoming the new normal. But with more clouds come more challenges. That’s why companies are looking for smart, trained professionals who can handle the job.

CCNP training prepares you for exactly that. Whether you're just starting your career or want to move to the next level, CCNP gives you the skills to stay relevant and in demand.

With options like a CCNP online class, you don’t even have to leave your house to become an expert. And once you complete your CCNP certification, you're not just learning about networks—you’re becoming someone who can shape the future of cloud technology.

So yes, if you’re thinking about CCNP in a world that’s quickly moving to the cloud, the answer is simple: go for it.

Text

AWS Data Engineering | AWS Data Engineer online course

Key AWS Services Used in Data Engineering

AWS data engineering solutions are essential for organizations looking to process, store, and analyze vast datasets efficiently in the era of big data. Amazon Web Services (AWS) provides a wide range of cloud services designed to support data engineering tasks such as ingestion, transformation, storage, and analytics. These services are crucial for building scalable, robust data pipelines that handle massive datasets with ease. Below are the key AWS services commonly utilized in data engineering:

1. AWS Glue

AWS Glue is a fully managed extract, transform, and load (ETL) service that helps automate data preparation for analytics. It provides a serverless environment for data integration, allowing engineers to discover, catalog, clean, and transform data from various sources. Glue supports Python and Scala scripts and integrates seamlessly with AWS analytics tools like Amazon Athena and Amazon Redshift.

2. Amazon S3 (Simple Storage Service)

Amazon S3 is a highly scalable object storage service used for storing raw, processed, and structured data. It supports data lakes, enabling data engineers to store vast amounts of unstructured and structured data. With features like versioning, lifecycle policies, and integration with AWS Lake Formation, S3 is a critical component in modern data architectures.
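As an illustration of the lifecycle policies mentioned above, the following sketch builds a lifecycle configuration that moves objects under a prefix to the infrequent-access storage class and later expires them. The helper name, the `raw/` prefix, and the day counts are assumptions chosen for the example; only the dictionary layout follows the S3 lifecycle configuration format.

```python
def build_lifecycle_config(prefix, days_to_infrequent_access, days_to_expire):
    """Build an S3 lifecycle configuration for one prefix.

    Hypothetical helper: the rule ID and thresholds are illustrative only.
    """
    if days_to_infrequent_access < 30:
        # S3 requires objects to be at least 30 days old before a
        # transition to STANDARD_IA.
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    if days_to_expire <= days_to_infrequent_access:
        raise ValueError("expiration must come after the transition")
    return {
        "Rules": [
            {
                "ID": f"tier-and-expire-{prefix.strip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days_to_infrequent_access, "StorageClass": "STANDARD_IA"}
                ],
                "Expiration": {"Days": days_to_expire},
            }
        ]
    }


if __name__ == "__main__":
    config = build_lifecycle_config("raw/", 30, 365)
    print(config["Rules"][0]["ID"])
```

With boto3 installed and credentials configured, a dictionary like this could be passed as the `LifecycleConfiguration` argument of the S3 client's `put_bucket_lifecycle_configuration` call, along with a bucket name of your own.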

3. Amazon Redshift

Amazon Redshift is a fully managed, petabyte-scale data warehouse solution designed for high-performance analytics. It allows organizations to execute complex queries and perform real-time data analysis using SQL. With features like Redshift Spectrum, users can query data directly from S3 without loading it into the warehouse, improving efficiency and reducing costs.

4. Amazon Kinesis

Amazon Kinesis provides real-time data streaming and processing capabilities. It includes multiple services:

Kinesis Data Streams for ingesting real-time data from sources like IoT devices and applications.

Kinesis Data Firehose for streaming data directly into AWS storage and analytics services.

Kinesis Data Analytics for real-time analytics using SQL.

Kinesis is widely used for log analysis, fraud detection, and real-time monitoring applications.
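To show what ingestion into Kinesis Data Streams can look like, here is a minimal sketch that turns events into stream records and sends them in batches. The function names, the stream name, and the `device_id` field are assumptions for the example. The `client` argument is expected to behave like a boto3 Kinesis client, whose `put_records` call accepts at most 500 records per request.

```python
import json

MAX_RECORDS_PER_CALL = 500  # PutRecords limit per request


def to_kinesis_records(events, key_field):
    """Convert dict events to the record shape PutRecords expects."""
    return [
        {
            "Data": json.dumps(event).encode("utf-8"),
            # Records with the same partition key land on the same shard.
            "PartitionKey": str(event[key_field]),
        }
        for event in events
    ]


def send_in_batches(client, stream_name, events, key_field):
    """Send events in chunks of up to 500 and return the failed-record count.

    `client` is assumed to expose put_records(StreamName=..., Records=...),
    as a boto3 Kinesis client does.
    """
    records = to_kinesis_records(events, key_field)
    failed = 0
    for start in range(0, len(records), MAX_RECORDS_PER_CALL):
        batch = records[start:start + MAX_RECORDS_PER_CALL]
        response = client.put_records(StreamName=stream_name, Records=batch)
        failed += response.get("FailedRecordCount", 0)
    return failed
```

A production producer would also retry the individual records reported as failed; this sketch only counts them. Passing the client in as an argument keeps the batching logic easy to test without an AWS account.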

5. AWS Lambda

AWS Lambda is a serverless computing service that allows engineers to run code in response to events without managing infrastructure. It integrates well with data pipelines by processing and transforming incoming data from sources like Kinesis, S3, and DynamoDB before storing or analyzing it.
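As a rough sketch of the transformation step described above, the handler below processes a batch of records delivered by a Kinesis event source. Kinesis hands each payload to Lambda base64-encoded under `record["kinesis"]["data"]`. The `temperature_c` field and the Fahrenheit conversion are invented for illustration.

```python
import base64
import json


def handler(event, context):
    """Decode Kinesis records, drop incomplete ones, and add a derived field."""
    readings = []
    for record in event.get("Records", []):
        # Kinesis payloads arrive base64-encoded inside the Lambda event.
        payload = base64.b64decode(record["kinesis"]["data"])
        reading = json.loads(payload)
        if reading.get("temperature_c") is None:
            continue  # skip records without the (hypothetical) field
        reading["temperature_f"] = reading["temperature_c"] * 9 / 5 + 32
        readings.append(reading)
    return {"processed": len(readings), "readings": readings}
```

In a real pipeline the handler would usually write the transformed records to a destination such as S3 or DynamoDB rather than return them.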

6. Amazon DynamoDB

Amazon DynamoDB is a NoSQL database service designed for fast and scalable key-value and document storage. It is commonly used for real-time applications, session management, and metadata storage in data pipelines. Its automatic scaling and built-in security features make it ideal for modern data engineering workflows.

7. AWS Data Pipeline

AWS Data Pipeline is a data workflow orchestration service that automates the movement and transformation of data across AWS services. It supports scheduled data workflows and integrates with S3, RDS, DynamoDB, and Redshift, helping engineers manage complex data processing tasks.

8. Amazon EMR (Elastic MapReduce)

Amazon EMR is a cloud-based big data platform that allows users to run large-scale distributed data processing frameworks like Apache Hadoop, Spark, and Presto. It is used for processing large datasets, performing machine learning tasks, and running batch analytics at scale.

9. AWS Step Functions

AWS Step Functions helps build serverless workflows by coordinating AWS services such as Lambda, Glue, and DynamoDB. It simplifies the orchestration of data processing tasks and supports fault-tolerant, scalable workflows for data engineering pipelines.
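To illustrate how such a workflow is described, here is a minimal sketch of a two-step state machine written in Amazon States Language and built as a Python dictionary. The state names, the retry settings, and the ARNs passed in are placeholders assumed for the example.

```python
import json


def build_pipeline_definition(transform_lambda_arn, load_lambda_arn):
    """Return a two-step state machine: transform, then load."""
    return {
        "Comment": "Illustrative transform-then-load pipeline",
        "StartAt": "Transform",
        "States": {
            "Transform": {
                "Type": "Task",
                "Resource": transform_lambda_arn,
                # Retry failed tasks with exponential backoff.
                "Retry": [
                    {
                        "ErrorEquals": ["States.TaskFailed"],
                        "IntervalSeconds": 5,
                        "MaxAttempts": 3,
                        "BackoffRate": 2.0,
                    }
                ],
                "Next": "Load",
            },
            "Load": {
                "Type": "Task",
                "Resource": load_lambda_arn,
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    # Placeholder ARNs; substitute the ARNs of your own functions.
    definition = build_pipeline_definition("arn:placeholder:transform", "arn:placeholder:load")
    print(json.dumps(definition, indent=2))
```

The JSON string produced by `json.dumps` is the kind of value the Step Functions `create_state_machine` API takes as its `definition` argument, together with a name and an IAM role ARN.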

10. Amazon Athena

Amazon Athena is an interactive query service that allows users to run SQL queries on data stored in Amazon S3. It eliminates the need for complex ETL jobs and is widely used for ad-hoc querying and analytics on structured and semi-structured data.
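For a sense of what an ad-hoc Athena query looks like in practice, the sketch below builds the arguments for a query that counts events by type for one day. The table name, the `event_type` and `event_date` columns, and the output location are all assumptions made up for this example.

```python
import datetime


def build_athena_request(database, table, day, output_location):
    """Build keyword arguments for an Athena start_query_execution call.

    Hypothetical helper: the column names in the query are illustrative.
    """
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    # Parsing the date rejects malformed input before it reaches the SQL text.
    parsed_day = datetime.date.fromisoformat(day)
    query = (
        "SELECT event_type, COUNT(*) AS events "
        f'FROM "{table}" '
        f"WHERE event_date = DATE '{parsed_day.isoformat()}' "
        "GROUP BY event_type"
    )
    return {
        "QueryString": query,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


if __name__ == "__main__":
    request = build_athena_request(
        "analytics", "clickstream", "2024-05-01", "s3://example-bucket/athena-results/"
    )
    print(request["QueryString"])
```

With boto3, a dictionary like this could be unpacked into the Athena client's `start_query_execution` call; the query runs asynchronously, so results are fetched later using the returned query execution ID.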

Conclusion

AWS provides a powerful ecosystem of services that cater to different aspects of data engineering. From data ingestion with Kinesis to transformation with Glue, storage with S3, and analytics with Redshift and Athena, AWS enables scalable and cost-efficient data solutions. By leveraging these services, data engineers can build resilient, high-performance data pipelines that support modern analytics and machine learning workloads.

Visualpath is the Best Software Online Training Institute in Hyderabad. Avail complete AWS Data Engineering Training worldwide. You will get the best course at an affordable cost.

#AWS Data Engineering Course#AWS Data Engineering Training#AWS Data Engineer Certification#Data Engineering course in Hyderabad#AWS Data Engineering online training#AWS Data Engineering Training Institute#AWS Data Engineering Training in Hyderabad#AWS Data Engineer online course

Text

Far Out

Chapter 16: Black Box

I didn’t work the next day, which was a curse and a blessing. I had enjoyed the actual work of being an engineer on Brock Station, so I lamented having to wait until tomorrow to get back to it. On the other hand, I could check out the black box right away. Quickly, I picked up a coffee from the canteen and headed to the Benevolence.

Connecting my tablet to the box, I downloaded the massive pile of data. Video and audio from surveillance, system status readouts, data from just about any sensor you could think of. Starting with the video, I pointedly avoided the cockpit recording and focused on the rest of the ship, especially the engine room. It was strange to see myself on one of these, and in such an awful state. You wouldn’t even have known that I was alive until Benni notified me of the flux signatures that heralded the recovery team. Oddly, it didn’t seem to bother me very much. The person I saw sitting on the chair in the engine room didn’t register in my mind as myself. It had only been a little over a month, but it felt like forever ago.

From the moment I left the engine room, it took less than a minute until the jump, and all the video feeds cut out. Interestingly, the engine room video and audio feed cut out a few seconds before the others. It was likely the proximity to the malfunctioning drive that caused it. There were the other camera feeds, but they didn’t offer anything interesting. I sighed. That left the sensor data.

Tens of thousands of sensors throughout the ship recorded each of their own parameters, tracking every little thing that had the potential to be the cause of a problem. This was another reason why I was glad to have Benni. Without it, I would never be able to understand the incredible amount of data held inside.

I fed a line from my tablet to Benni’s computer. “Here’s the black box data, Benni. Can you parse this and let me know if there’s anything that could explain how we survived that jump?”

“Yes, Captain. One Moment.”

I waited, knowing it wouldn’t take long. Ulthean AI could be stupid sometimes (at least, the leashed AI), but when it came to analysis, they tore through data like an acetylene torch goes through plastic. Less than a minute later, Benni spoke up again.

“Captain. I Have Found One Major Anomaly.”

“Go ahead,” I said.

“Before The Jump, My CDrive Experienced A Severe Dip In Power. This Is Unusual Behavior For A CDrive Performing A Premature Jump.”

“Well, what do they usually do?” I asked. “Keep going until they explode?”

“That Is Correct.”

“Ours still exploded, though,” I pointed out.

“That Is Correct. The Dip In Power Was Severe, But It Was Not Enough To Halt The Meltdown Process.”

I rubbed one of my horns as I thought. It was times like these that I wished I had been trained as a Flux Tech, but it had always seemed like such a pain. There was so much uncertainty with Flux. A prime example of that was sitting near the aft of my ship.

“Could this just be luck?” I ventured. “Premature jumps aren’t always deadly.”

“In Cases Where The Crew Of A Ship Survived A Premature Jump, The Behavior Of The CDrive Did Not Change. The Mechanics Of Surviving A Premature Jump Are Still Not Understood.”

Sighing, I leaned against the wall. “And our data does nothing to change that.”

“My Apologies, Captain.”

“You didn’t create the data, don’t apologize,” I said, but then a thought came to me. “Wait, I noticed that the engine room video feed cut out a few seconds before the others. Can you cross-reference the dip in power to when the feed was cut?”

“Yes, Captain.”

Benni clicked as it thought, then spoke again.

“The Dip In Power Occurred One Half Second After The Engine Room Feed Went Offline.”

I hummed in thought. So, they could be related, but the Blessed knew how. As I tidied up, I said, “I guess we’re going to have to call this one a mystery for now. Maybe someone else on the engineering team has an idea?”

“That Is Possible.”

“Well,” I said, “Thanks for your help, there’s a lot here I couldn’t do without you.”

“Of Course, Captain. You Are Welcome.”

“Now,” I said, rubbing my hands together, “let’s take a look at your surveillance systems.”

We spent the rest of the day locating and diagnosing every one of Benni’s ship cameras. Unnervingly, there were a few in some very hidden places. I was used to having my every move watched, having grown up in Ulthea, but I drew the line at having cameras in the bathroom and crew quarters. Most of the ship cameras were obvious black bubbles in the ceiling, but the ones installed in more private areas were either concealed or disguised.

After finding the second camera behind a bathroom mirror, I groaned. “Benni, I’m sorry, but I’m going to have to remove some of these.”

“May I Ask Why, Captain?”

For some reason, that question gave me pause. It wasn’t pushback exactly, but it was an unexpected level of curiosity. Regardless, this was one area I couldn’t back down on. “I know they act as your eyes, but there are some things you really don’t need to see.”

“I Do Not Understand.”

Somewhat unsure of how to proceed, I shifted on my feet, which is surprisingly difficult when wearing mechanical braces. Benni didn’t have any framework of our societal norms. Hiding nakedness or just being truly alone wasn’t something it had to want or care about. “I thought these areas were private. I mean, they should be private. People need to be able to go somewhere they know they aren’t being watched. It lets us relax.”

“Having A Stress-Free Environment Is Conducive To Good Crew Behavior. Very Well, Captain. You May Remove Any Cameras You Deem Stressful.”

“I won’t remove any cameras from common areas,” I assured it. “It’s just these hidden ones.”

“I Trust You, Captain. At Your Discretion.”

After that discussion, each camera removal came with a small pang of guilt, but by the tenth one, the guilt began to give way to disgust. Bathrooms, bedrooms, hidden inside bunks and closets. By the time I had removed the last hidden camera, I had a small pile on the galley table. It was ridiculous. Who had the time to monitor all these? The standard cameras I understood, but why would they have needed to install so many hidden ones?

The connections my brain was beginning to make weren’t going anywhere good. None of these feeds had been available in the black box. Where were they sending their data? Quickly, I swept the offending cameras into a wastebin and dusted my hands off. They were gone now, that was the important thing.

“And… that’s everything,” I said, poking at my tablet. “Hidden cameras removed, common room cameras diagnosed. It looks like the flux wave shorted the hardware itself this time, so I’ll need to order replacements. I’m not sure how long that’ll take, but I’ll let you know, alright?”

“Thank You, Captain, That Would Be Appreciated.”

I looked at the time. It was well past when the canteen usually began serving dinner, and I suddenly realized I was ravenously hungry. And a little dizzy. Had I really been at this all day? I finished putting my tools away and tucked the tablet under one arm. With luck, some of the engineering team would still be eating. I wanted to pick their brains about the CDrive.

Halfway out the main exterior hatch, I stopped and turned to look back into the ship. “Benni?”

“Yes, Captain?”

“How are you doing?” I asked. “I feel bad about leaving you alone in this ship with nothing to do. It would drive me crazy.”

“It Would Be A Waste Of Power To Continue Running At Full Capacity Without Input. If I Am Idle For A Set Period Of Time, I Enter A Standby Mode. My Main Processes Shut Down Until Input Is Detected From Relevant Sensors. I Do Not Get Bored.”

Right, not a person. Still a computer. An extremely powerful computer, a computer that may or may not be developing emotions, but a computer nonetheless. I sighed, patted the side of the door twice and turned to leave again. “Okay. Good night, buddy.”

“Good Night, Captain.”


Text

Ok but like... who is actually on the side of AI art? Is there literally anyone who's like, fuck yes, I'm an AI artist (whatever that is) and so forth? Perhaps it's because I live in this tumblr bubble but I legitimately have not seen a lot of people who are actually pro-AI. In fact, I think that AI has way less power than many people (esp artists) fear it has, because most people don't actually WANT AI art. Sure, it might fill a niche, but I don't actually think it will be all that successful. Users don't want to get AI bullshit when they google stuff (if I'm not misinterpreting the consensus), so users will begin to switch it off, and a feature that's not used is usually also one the company doesn't invest in.

I think there are ways in which AI could be helpful, for example in science. I want an AI that helps me with my taxes, helps me find the papers I'm trying to cite, helps me do stupid calculations, one that gives me MORE time to create art, rather than making art FOR me while I continue to struggle with google scholar's awful interface. And I actually believe most people would agree with me! I actually believe most people don't see genAI as an art form. I would be interested in hearing a programmer's or a software engineer's opinion on this. Do they think it's art? Are they happy to have created code that writes its own code? Do they consider it an art form?

All I'm saying is, I think we needn't be afraid. There have been many revolutions in art, whether it be the switch from classical oil paintings to abstract art forms, or the introduction of digital art. Just think of the music industry! Everything's on Spotify nowadays, but that doesn't mean there aren't still people listening to the radio, or buying vinyls. Art is timeless. AI won't take that away from us. And of course a case can be made for AI taking jobs away from artists and it's a tragedy, but making money with art has always been a struggle and the people who were willing to pay you what your work is worth before AI got big will still be willing to pay you now, because those are the people who care for art, for expression, for authenticity. People/companies who are using AI instead of hiring an artist never appreciated art in the first place.

I'm not saying that we should stop worrying about genAI. Protect your data and your art! Tell Meta AI to fuck off! (Look it up, you can and should reject Meta's attempt to train their AI with your data, on places like Instagram, but you have to do it manually.) Keep speaking up about the fact that it doesn't make sense that this is what AI is being used for when we have so many things in our lives we would actually NOT want to do ourselves and art clearly is a thing that we WANT to do ourselves! Don't stop being aware of this topic. But don't fear. AI can't take something away from you that thousands of years of human history haven't been able to take away from you.


Text

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

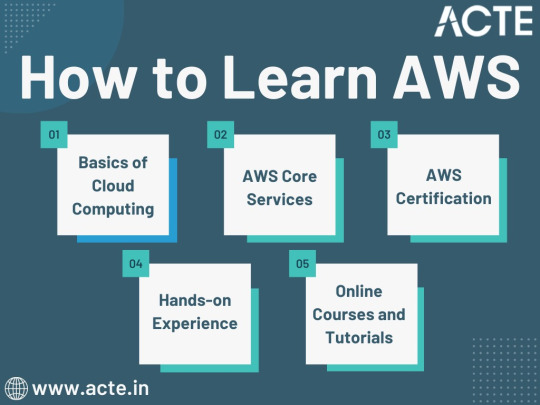

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.
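As a rough illustration of the "configure permissions" step mentioned for S3 above, the sketch below builds a read-only bucket policy document in Python. The bucket name, account ID, and role ARN are hypothetical placeholders, not values from this post, and the helper `build_read_only_policy` is an illustrative name rather than part of any AWS library. It only constructs the JSON; it does not call AWS.

```python
import json


def build_read_only_policy(bucket_name: str, principal_arn: str) -> dict:
    """Return an S3 bucket policy that lets one principal read objects.

    Both arguments are supplied by the caller; the values used in the
    demo below are made-up placeholders.
    """
    return {
        "Version": "2012-10-17",  # current IAM policy language version
        "Statement": [
            {
                "Sid": "AllowReadOnlyObjectAccess",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": ["s3:GetObject"],
                # "/*" scopes the statement to objects inside the bucket
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and role, for illustration only.
    policy = build_read_only_policy(
        "example-training-bucket",
        "arn:aws:iam::123456789012:role/example-reader",
    )
    print(json.dumps(policy, indent=2))
```

If you use boto3, a document like this would typically be serialized with `json.dumps` and passed as the `Policy` argument of the S3 client's `put_bucket_policy` call. Treat that as one possible way to apply it, not the only one.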

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services, historically for 12 months on many of them (check the current terms, which vary by service and account). Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.
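For the "practice creating instances" step, here is a minimal sketch of how the launch parameters for a single EC2 instance might be assembled in Python. The AMI ID, the `t3.micro` default, the region, and the helper names `build_launch_params` and `launch` are all assumptions made for illustration; whether a given instance type is covered by the free tier depends on your account. The `launch` function assumes the third-party boto3 package and configured AWS credentials, so the demo at the bottom only prints the parameters.

```python
def build_launch_params(
    ami_id: str,
    instance_type: str = "t3.micro",  # assumed default; pick what your account allows
    name: str = "practice-instance",  # hypothetical Name tag
) -> dict:
    """Build keyword arguments for launching exactly one EC2 instance."""
    if not ami_id.startswith("ami-"):
        raise ValueError(f"not an AMI ID: {ami_id!r}")
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": 1,  # launch exactly one instance
        "MaxCount": 1,
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Name", "Value": name}],
            }
        ],
    }


def launch(params: dict, region: str) -> str:
    """Launch the instance and return its ID (needs boto3 and credentials)."""
    import boto3  # imported here so the rest of the sketch runs without it

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**params)
    return response["Instances"][0]["InstanceId"]


if __name__ == "__main__":
    # Placeholder AMI ID; real IDs differ per region.
    print(build_launch_params("ami-0123456789abcdef0"))
```

Keeping the parameter building separate from the API call lets you inspect what would be launched before anything is created in your account. Remember to terminate practice instances when you are done.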

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.


Text

I mean, are we surprised this is the method chosen by the "the most accessed page must be the right one" search engine people?

One of our favourite things about using gtrans is that they crowdsource translations, and that makes it easy to manipulate for various purposes, some awful, others comedy gold. For a brief, meme-y time, the gtrans Arabic version of "Gandalf" was "Abdullah Karida".

You've Got Enron Mail! by 99% Invisible talks about how the data was trained to begin with.

googledocs you are getting awfully uppity for something that can’t differentiate between “its” and “it’s” correctly


Text

Top Machine Learning Programs in London for International Students in 2025

In today’s data-driven world, machine learning has become the backbone of innovations across industries—from healthcare and finance to robotics and autonomous systems. As a result, aspiring data scientists, software engineers, and technology enthusiasts are increasingly looking to upskill through reputable programs. If you're an international student seeking to pursue a Machine Learning course in London, 2025 offers more opportunities than ever before.

London, known as one of the world's leading hubs for technology and innovation, provides the perfect ecosystem to learn, experiment, and grow within the AI and machine learning space. With its multicultural environment, access to cutting-edge research, and thriving tech industry, it’s no surprise that thousands of students globally choose London for their AI and machine learning education.

In this blog, we’ll explore why London is a prime destination for international students, what to expect from top-tier programs, and how you can get started on your journey with the right institution.

Why Choose a Machine Learning Course in London?

International students choose London for a reason. The city combines academic excellence with vibrant industry exposure, making it ideal for both learning and launching your career.

1. Academic Excellence

London is home to some of the world’s most respected academic institutions and training centers. A Machine Learning course in London offers globally recognized certifications, industry-aligned curriculum, and access to the latest technologies and tools in AI and data science.

2. Global Tech Hub

London has established itself as a global technology and fintech center. It is filled with AI startups, enterprise R&D centers, and innovation labs, providing students access to internships, hackathons, and real-world exposure.

3. Cultural Diversity

As one of the most multicultural cities in the world, London is welcoming to international students. You’ll find peers from all corners of the globe, fostering collaboration and cross-cultural learning experiences.

4. Career Opportunities

Post-course placement opportunities are abundant. Graduates from a Machine Learning course in London often land roles in companies specializing in data science, AI solutions, machine learning platforms, and research-focused startups.

What to Expect from a Machine Learning Course in London?