#Accelerometer applications


https://www.futureelectronics.com/p/semiconductors--analog--sensors--accelerometers/lis2mdltr-stmicroelectronics-5090146

3-Axis Digital Magnetic Sensor, 3 axis accelerometers, Mems accelerometers

LIS2MDL Series 3.6V 50 Hz High Performance 3-Axis Digital Magnetic Sensor-LGA-12

#Sensors#Accelerometer Sensors#LIS2MDLTR#STMicroelectronics#3-Axis Digital Magnetic Sensor#3 axis accelerometers#Mems#phone#Smartphone accelerometer#Accelerometer applications#programmable accelerometer#Digital accelerometer#USB accelerometer


Wireless accelerometer, USB accelerometer, Accelerometer sensor application

LIS2MDL Series 3.6V 50 Hz High Performance 3-Axis Digital Magnetic Sensor-LGA-12


MPU-6050: Features, Specifications & Important Applications

The MPU-6050 is a popular Inertial Measurement Unit (IMU) sensor module that combines a gyroscope and an accelerometer. It is commonly used in various electronic projects, particularly in applications that require motion sensing or orientation tracking.

Features of MPU-6050

The chip integrates a 3-axis gyroscope and a 3-axis accelerometer in a single package, which is why it is often described as a 6-axis IMU.

Here are the key features of the MPU-6050:

Gyroscope:

3-Axis Gyroscope: Measures angular velocity around the X, Y, and Z axes. Provides data on how fast the sensor is rotating in degrees per second (°/s).

Accelerometer:

3-Axis Accelerometer: Measures acceleration along the X, Y, and Z axes. Provides information about changes in velocity and about the orientation of the sensor relative to Earth's gravity.

Digital Motion Processor (DMP):

Integrated DMP: The MPU-6050 features a Digital Motion Processor that offloads complex motion processing tasks from the host microcontroller, reducing the computational load on the main system.

Communication Interface:

I2C (Inter-Integrated Circuit): The MPU-6050 communicates with a microcontroller using the I2C protocol, making it easy to interface with a variety of microcontrollers.

Temperature Sensor:

Onboard Temperature Sensor: The chip includes an integrated temperature sensor. It measures the temperature of the die itself, so it tracks ambient temperature only approximately.

Programmable Gyroscope and Accelerometer Range:

Configurable Sensitivity: Users can adjust the full-scale range of the gyroscope and accelerometer to suit their specific application requirements.

Low Power Consumption:

Low Power Operation: Designed for low power consumption, making it suitable for battery-powered and energy-efficient applications.
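The configurable full-scale ranges above determine how raw register values map to physical units. The following is a minimal sketch of that conversion, assuming the sensitivity scale factors and temperature formula given in the MPU-6050 register map; the function names are illustrative and not part of any particular library, and the values should be checked against the datasheet revision for your part.

```python
# Sensitivity scale factors in LSB per unit, keyed by full-scale range.
# Assumed from the MPU-6050 register map; verify against your datasheet.
ACCEL_LSB_PER_G = {2: 16384.0, 4: 8192.0, 8: 4096.0, 16: 2048.0}
GYRO_LSB_PER_DPS = {250: 131.0, 500: 65.5, 1000: 32.8, 2000: 16.4}


def to_signed16(high: int, low: int) -> int:
    """Combine two register bytes (high byte first) into a signed 16-bit value."""
    value = (high << 8) | low
    return value - 65536 if value & 0x8000 else value


def accel_g(raw: int, full_scale_g: int = 2) -> float:
    """Convert a raw accelerometer reading to g for the given +/- range."""
    return raw / ACCEL_LSB_PER_G[full_scale_g]


def gyro_dps(raw: int, full_scale_dps: int = 250) -> float:
    """Convert a raw gyroscope reading to degrees per second."""
    return raw / GYRO_LSB_PER_DPS[full_scale_dps]


def temperature_c(raw: int) -> float:
    """Convert a raw temperature reading to degrees Celsius."""
    return raw / 340.0 + 36.53
```

A wider range trades resolution for headroom: at ±16 g each count represents eight times more acceleration than at ±2 g.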


#mpu6050#MPU-6050#IMU#accelerometer#gyroscope#magnetometer#6-axis IMU#inertial measurement unit#motion tracking#orientation sensing#navigation#robotics#drones#wearable devices#IoT#consumer electronics#industrial automation#automotive#aerospace#defense#MPU-6050 features#MPU-6050 specifications#MPU-6050 applications#MPU-6050 datasheet#MPU-6050 tutorial#MPU-6050 library#MPU-6050 programming#MPU-6050 projects


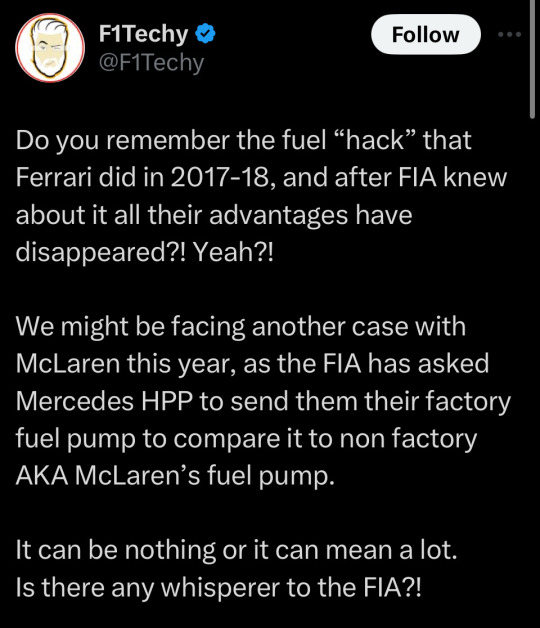

https://x.com/F1Techy/status/1797338007860662614

is this real?

Nope. Whoever this person is, they are way off, and they have no sources, so don’t believe a word they say.

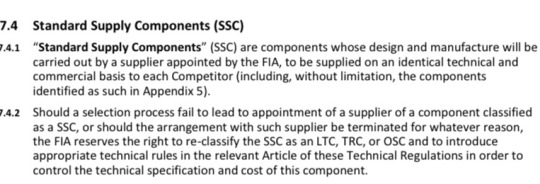

In fact Mercedes don’t make their own fuel pumps, let alone McLaren’s. No team has made their own fuel pumps since 2021.

Fuel pumps are categorised as a Standard Supply Component which means that they are designed and manufactured by a third party designated by the FIA.

This means that the same company makes all of the fuel pumps for all of the teams on the grid, so if there was anything going on with a fuel pump they would be going back to this third party not Mercedes.

Just because someone has a blue tick, doesn’t mean that they know what they are saying.

Full list of SSC parts below (from FIA regulations)

• Wheel covers

• Clutch shaft torque

• Wheel rims

• Tyre pressure sensor (TPMS)

• Tyres

• Fuel system primer pumps, and flexible pipes and hoses

• Power unit energy store current/voltage sensor

• Fuel flow meter

• Power unit pressure and temperature sensors

• High-pressure fuel pump

• Car to team telemetry

• Driver radio

• Accident data recorder (ADR)

• High-speed camera

• In-ear accelerometer

• Biometric gloves

• Marshalling system

• Timing transponders

• TV cameras

• Wheel display panel

• Standard ECU

• Standard ECU FIA applications

• Rear lights


New diagnostic tool will help LIGO hunt gravitational waves

Machine learning tool developed by UCR researchers will help answer fundamental questions about the universe.

Finding patterns and reducing noise in large, complex datasets generated by the gravitational wave-detecting LIGO facility just got easier, thanks to the work of scientists at the University of California, Riverside.

The UCR researchers presented a paper at a recent IEEE big-data workshop, demonstrating a new, unsupervised machine learning approach to find new patterns in the auxiliary channel data of the Laser Interferometer Gravitational-Wave Observatory, or LIGO. The technology is also potentially applicable to large scale particle accelerator experiments and large complex industrial systems.

LIGO is a facility that detects gravitational waves — transient disturbances in the fabric of spacetime itself, generated by the acceleration of massive bodies. It was the first to detect such waves from merging black holes, confirming a key part of Einstein’s Theory of Relativity. LIGO has two widely-separated 4-km-long interferometers — in Hanford, Washington, and Livingston, Louisiana — that work together to detect gravitational waves by employing high-power laser beams. The discoveries these detectors make offer a new way to observe the universe and address questions about the nature of black holes, cosmology, and the densest states of matter in the universe.

Each of the two LIGO detectors records thousands of data streams, or channels, which make up the output of environmental sensors located at the detector sites.

“The machine learning approach we developed in close collaboration with LIGO commissioners and stakeholders identifies patterns in data entirely on its own,” said Jonathan Richardson, an assistant professor of physics and astronomy who leads the UCR LIGO group. “We find that it recovers the environmental ‘states’ known to the operators at the LIGO detector sites extremely well, with no human input at all. This opens the door to a powerful new experimental tool we can use to help localize noise couplings and directly guide future improvements to the detectors.”

Richardson explained that the LIGO detectors are extremely sensitive to any type of external disturbance. Ground motion and any type of vibrational motion — from the wind to ocean waves striking the coast of Greenland or the Pacific — can affect the sensitivity of the experiment and the data quality, resulting in “glitches” or periods of increased noise bursts, he said.

“Monitoring the environmental conditions is continuously done at the sites,” he said. “LIGO has more than 100,000 auxiliary channels with seismometers and accelerometers sensing the environment where the interferometers are located. The tool we developed can identify different environmental states of interest, such as earthquakes, microseisms, and anthropogenic noise, across a number of carefully selected and curated sensing channels.”

Vagelis Papalexakis, an associate professor of computer science and engineering who holds the Ross Family Chair in Computer Science, presented the team’s paper, titled “Multivariate Time Series Clustering for Environmental State Characterization of Ground-Based Gravitational-Wave Detectors,” at the IEEE's 5th International Workshop on Big Data & AI Tools, Models, and Use Cases for Innovative Scientific Discovery that took place last month in Washington, D.C.

“The way our machine learning approach works is that we take a model tasked with identifying patterns in a dataset and we let the model find patterns on its own,” Papalexakis said. “The tool was able to identify the same patterns that very closely correspond to the physically meaningful environmental states that are already known to human operators and commissioners at the LIGO sites.”

Papalexakis added that the team had worked with the LIGO Scientific Collaboration to secure the release of a very large dataset that pertains to the analysis reported in the research paper. This data release allows the research community to not only validate the team’s results but also develop new algorithms that seek to identify patterns in the data.

“We have identified a fascinating link between external environmental noise and the presence of certain types of glitches that corrupt the quality of the data,” Papalexakis said. “This discovery has the potential to help eliminate or prevent the occurrence of such noise.”

The team organized and worked through all the LIGO channels for about a year. Richardson noted that the data release was a major undertaking.

“Our team spearheaded this release on behalf of the whole LIGO Scientific Collaboration, which has about 3,200 members,” he said. “This is the first of these particular types of datasets and we think it’s going to have a large impact in the machine learning and the computer science community.”

Richardson explained that the tool the team developed can take information from signals from numerous heterogeneous sensors that are measuring different disturbances around the LIGO sites. The tool can distill the information into a single state, he said, that can then be used to search for time series associations of when noise problems occurred in the LIGO detectors and correlate them with the sites’ environmental states at those times.
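To make the idea of distilling many sensor channels into a single state label concrete, here is a toy sketch. It is not the UCR team's algorithm, which the article does not describe in detail. It assumes a much simpler stand-in: per-channel RMS over fixed windows as features, grouped with plain k-means. All function names and parameters are hypothetical.

```python
import math


def window_rms(channel, size):
    """Root-mean-square of each non-overlapping window of a 1-D signal."""
    return [math.sqrt(sum(x * x for x in channel[i:i + size]) / size)
            for i in range(0, len(channel) - size + 1, size)]


def kmeans(points, k, iterations=20):
    """Plain k-means with farthest-point initialisation; one label per point."""
    centers = [points[0]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        centers.append(max(points,
                           key=lambda p: min(math.dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda j: math.dist(p, centers[j]))
                  for p in points]
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = tuple(sum(v) / len(members) for v in zip(*members))
    return labels


def environmental_states(channels, window, k):
    """Label each time window with a cluster id, using per-channel RMS features."""
    features = list(zip(*(window_rms(ch, window) for ch in channels)))
    return kmeans(features, k)


# Two synthetic channels: a quiet stretch followed by a noisy one.
seismometer = [0.1, -0.1] * 10 + [2.0, -2.0] * 10
accelerometer = [0.05, -0.05] * 10 + [1.0, -1.0] * 10
states = environmental_states([seismometer, accelerometer], window=10, k=2)
```

Here the four windows fall into two groups, quiet and noisy, with no labels supplied in advance, which is the sense in which such an approach is unsupervised.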

“If you can identify the patterns, you can make physical changes to the detector — replace components, for example,” he said. “The hope is that our tool can shed light on physical noise coupling pathways that allow for actionable experimental changes to be made to the LIGO detectors. Our long-term goal is for this tool to be used to detect new associations and new forms of environmental states associated with unknown noise problems in the interferometers.”

Pooyan Goodarzi, a doctoral student working with Richardson and a coauthor on the paper, emphasized the importance of releasing the dataset publicly.

“Typically, such data tend to be proprietary,” he said. “We managed, nonetheless, to release a large-scale dataset that we hope results in more interdisciplinary research in data science and machine learning.”

The team’s research was supported by a grant from the National Science Foundation awarded through a special program, Advancing Discovery with AI-Powered Tools, focused on applying artificial intelligence/machine learning to address problems in the physical sciences.


Difference Between Augmented Reality and Virtual Reality: Comparing Two Revolutionary Technologies

Augmented Reality (AR) and Virtual Reality (VR) are immersive technologies that have captured the imagination of both the technology industry and consumers. Though they share similarities in their ability to change how we perceive and interact with the world, AR and VR are fundamentally different in their approach and use cases. This essay explores the core differences between AR and VR, focusing on their definitions, technological mechanisms, hardware requirements, user interaction, use cases, and future potential.

Definitions and Core Concepts

AR enhances the user's perception of reality by adding digital elements that interact with the physical environment. The key idea behind AR is not to replace the physical world but to enhance it, offering a blend of virtual and real-world experiences. Examples of AR can be seen in smartphone applications like Pokémon Go, where users see digital creatures in the real world through their smartphone cameras, or Snapchat filters, where virtual effects are applied to human faces.

Virtual Reality (VR), on the other hand, creates an entirely immersive digital environment that replaces the real world. When using VR, users are transported into a fully computer-generated world that may simulate real-world environments or create entirely fantastical landscapes. This virtual environment is commonly experienced through VR headsets, such as the Oculus Rift or PlayStation VR, which block out external visual input and offer 360-degree visuals of the virtual space. The primary goal of VR is to immerse users so completely in a virtual environment that the distinction between the digital and real worlds temporarily dissolves.

Technological Mechanisms

AR and VR employ distinct technological mechanisms to achieve their respective experiences. In Augmented Reality, the primary challenge is to combine the real world with digital elements in a way that feels seamless and natural. This requires tracking the user's position and orientation in the real world, which is generally done using cameras, sensors, and accelerometers in smartphones or AR glasses. The AR software processes the visual input from the camera and superimposes virtual objects at the correct position in the real-world scene. The system also needs to ensure that these objects interact with real-world elements in plausible ways, such as having a virtual ball bounce off a real table or aligning a digital map with the floor. Real-time processing is essential to maintain the illusion that digital elements are part of the real world.
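One small ingredient of orientation tracking can be sketched directly: estimating tilt from an accelerometer reading of gravity. This is only an illustration under stated assumptions. It assumes the device is nearly stationary, so the accelerometer measures gravity alone, and it assumes one particular axis convention (Z pointing up when the device lies flat). Real AR frameworks fuse gyroscope and camera data as well. The function name is hypothetical.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float):
    """Return (pitch, roll) in degrees from a gravity-only accelerometer reading.

    Assumes Z points up when the device lies flat; other conventions
    swap axes or signs.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


flat = tilt_from_accel(0.0, 0.0, 1.0)      # device lying flat
on_side = tilt_from_accel(0.0, 1.0, 0.0)   # rolled onto its side
```

An accelerometer alone cannot recover heading (rotation about the vertical axis), which is one reason AR devices combine it with other sensors.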

In contrast, Virtual Reality involves creating a fully immersive virtual world that completely replaces the user's real-world surroundings. The VR system needs to render a 3D environment in real time, presenting different views as the user moves their head or body. This is generally achieved with sophisticated graphics engines and powerful processors, which simulate lighting, textures, and physics to make the digital world as realistic as possible. A VR headset provides stereoscopic displays (one for each eye) to create the illusion of depth, and motion-tracking sensors ensure that the user's movements, such as looking around or walking, are reflected accurately in the virtual world. VR requires high-fidelity visuals and low latency to prevent motion sickness and maintain a sense of presence in the virtual world.

Hardware Requirements

The hardware requirements for AR and VR also differ considerably. For AR, the hardware can be relatively minimal. Since AR overlays digital information onto the real world, devices like smartphones or tablets with built-in cameras and GPS capabilities are often sufficient for basic AR applications. More advanced AR experiences, such as those involving 3D holograms or complex interactions, may require specialized AR headsets like Microsoft HoloLens or Magic Leap, with additional sensors for depth perception and environmental mapping.

In VR, the hardware setup is typically more involved. At the core of any VR experience is a headset, which provides the necessary displays and motion tracking to create an immersive environment. High-end VR systems, such as those for gaming or professional simulations, may also require external sensors, hand controllers, and occasionally even treadmills or haptic feedback devices to simulate physical movement and touch in the virtual world. The computing power required to run VR applications is also considerably higher than for AR, often demanding powerful graphics cards and processors to render the 3D environments in real time.

User Interaction

User interaction is another area where AR and VR differ significantly. In AR, user interaction typically occurs in the real world, with digital elements appearing as extensions or enhancements of real-world objects. For example, a user may interact with a digital character in AR by moving their phone around or using hand gestures to control virtual objects. The interaction is often context-sensitive, relying on the user's physical surroundings as part of the experience. AR is often more casual and accessible because it can be experienced with everyday devices like smartphones.

In VR, the interaction is fully immersive and takes place in the virtual world. Users can interact with the digital environment using specialized controllers or, in some cases, hand-tracking sensors that map the user's movements into the virtual space. For instance, in a VR game, the user might physically swing their arms to wield a sword or move their body to dodge an attack. VR interaction tends to be more intense and calls for a higher degree of engagement because the user is completely enveloped in the digital environment.

Use Cases

The use cases for AR and VR also highlight their fundamental differences. In industries like retail, AR allows customers to see how products, such as furniture or clothing, would look in their own homes or on their bodies before making a purchase. AR is also popular in education and training, where it can provide real-time information or visual aids in a physical setting. For instance, medical students might use AR to visualize a virtual anatomy overlay on a real human body, enhancing their learning experience.

VR, by contrast, is ideal for applications that require total immersion. In gaming, VR lets players experience a heightened sense of presence in fantastical worlds, such as flying through space or fighting dragons. In training and simulation, VR is used in fields like aviation and the military, where realistic virtual environments can simulate high-risk scenarios without placing the user in actual danger. VR is also gaining traction in fields like architecture and design, where it lets designers and clients explore virtual models of buildings and spaces before they are constructed.

Future Potential

The future potential of AR and VR is vast, although each technology is likely to evolve in different directions. AR is expected to become more pervasive as mobile devices and wearables become more advanced. The development of lightweight, affordable AR glasses could make it a ubiquitous tool for everyday tasks, such as navigation, communication, and information retrieval. AR may also revolutionize fields like healthcare, manufacturing, and logistics by providing workers with real-time information and guidance overlaid on their physical surroundings.

VR is likely to continue growing in areas that benefit from immersive experiences, such as entertainment, training, and remote collaboration. As VR headsets become more affordable and wireless, the barriers to widespread adoption may diminish, making VR a common tool for both professional and personal use. In the long term, the lines between AR and VR may blur as mixed reality (MR) technologies, such as those being developed by companies like Meta (formerly Facebook) and Microsoft, combine elements of both.

Conclusion

While AR and VR both provide immersive experiences that change the way we perceive the world, they do so in fundamentally different ways. AR enhances our interaction with the real world by overlaying virtual content, while VR creates entirely new virtual environments that replace the real world. Their differences in technology, hardware, interaction, and use cases reflect the unique strengths of each, making them suited to different applications. Both AR and VR hold great potential for the future, promising to reshape industries and everyday life in ways we are only beginning to discover.


Monitoring healthcare safety using SEnergy IoT

Monitoring healthcare safety using IoT (Internet of Things) technology, including SEnergy IoT, can greatly enhance patient care, streamline operations, and improve overall safety in healthcare facilities. SEnergy IoT, if specialized for healthcare applications, can offer several advantages in this context. Here's how monitoring healthcare safety using SEnergy IoT can be beneficial:

Patient Monitoring: SEnergy IoT can be used to monitor patient vital signs in real-time. Wearable devices equipped with sensors can track heart rate, blood pressure, temperature, and other critical parameters. Any deviations from normal values can trigger alerts to healthcare providers, allowing for timely intervention.

Fall Detection: IoT sensors, including accelerometers and motion detectors, can be used to detect falls in patients, especially the elderly or those with mobility issues. Alerts can be sent to healthcare staff, reducing response times and minimizing the risk of injuries.

Medication Management: IoT can be used to ensure medication adherence. Smart pill dispensers can remind patients to take their medications, dispense the correct dosage, and send notifications to caregivers or healthcare providers in case of missed doses.

Infection Control: SEnergy IoT can help monitor and control infections within healthcare facilities. Smart sensors can track hand hygiene compliance, air quality, and the movement of personnel and patients, helping to identify and mitigate potential sources of infection.

Asset Tracking: IoT can be used to track and manage medical equipment and supplies, ensuring that critical resources are always available when needed. This can reduce the risk of equipment shortages or misplacement.

Environmental Monitoring: SEnergy IoT can monitor environmental factors such as temperature, humidity, and air quality in healthcare facilities. This is crucial for maintaining the integrity of medications, medical devices, and the comfort of patients and staff.

Security and Access Control: IoT can enhance security within healthcare facilities by providing access control systems that use biometrics or smart cards. It can also monitor unauthorized access to sensitive areas and send alerts in real-time.

Patient Privacy: SEnergy IoT can help ensure patient privacy and data security by implementing robust encryption and access control measures for healthcare data transmitted over the network.

Predictive Maintenance: IoT sensors can be used to monitor the condition of critical equipment and predict when maintenance is needed. This proactive approach can reduce downtime and improve the safety of medical devices.

Emergency Response: In case of emergencies, SEnergy IoT can automatically trigger alerts and initiate emergency response protocols. For example, in the event of a fire, IoT sensors can detect smoke or elevated temperatures and activate alarms and evacuation procedures.

Data Analytics: The data collected through SEnergy IoT devices can be analyzed to identify trends, patterns, and anomalies. This can help healthcare providers make informed decisions, improve patient outcomes, and enhance safety protocols.

Remote Monitoring: IoT enables remote monitoring of patients, allowing healthcare providers to keep an eye on patients' health and well-being even when they are not in a healthcare facility.

Compliance and Reporting: SEnergy IoT can facilitate compliance with regulatory requirements by automating data collection and reporting processes, reducing the risk of errors and non-compliance.
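The Fall Detection item above can be illustrated with a simple threshold rule on accelerometer magnitude: a brief near-weightless phase followed shortly by a sharp impact. This is a rough sketch, not a description of how SEnergy IoT or any specific product works. The thresholds, the sample gap, and the function name are hypothetical and would need tuning against real data.

```python
import math

FREE_FALL_G = 0.4      # hypothetical: magnitude below this suggests free fall
IMPACT_G = 2.5         # hypothetical: magnitude above this suggests an impact
MAX_GAP_SAMPLES = 50   # hypothetical: impact must follow free fall this soon


def detect_fall(samples):
    """Return the index of the impact sample if a fall pattern is found, else None.

    samples: iterable of (ax, ay, az) tuples in units of g.
    """
    free_fall_at = None
    for i, (ax, ay, az) in enumerate(samples):
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude < FREE_FALL_G:
            free_fall_at = i  # remember the most recent free-fall sample
        elif (magnitude > IMPACT_G and free_fall_at is not None
              and i - free_fall_at <= MAX_GAP_SAMPLES):
            return i
    return None


resting = [(0.0, 0.0, 1.0)] * 10
fall = resting + [(0.0, 0.0, 0.1)] * 5 + [(0.0, 0.0, 3.0)] + resting
impact_index = detect_fall(fall)
```

Requiring both phases reduces false alarms from a single jolt, such as sitting down heavily, at the cost of missing slow slides to the floor.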

To effectively implement SEnergy IoT for healthcare safety, it's crucial to address privacy and security concerns, ensure interoperability among various devices and systems, and establish clear protocols for responding to alerts and data analysis. Additionally, healthcare professionals should be trained in using IoT solutions to maximize their benefits and ensure patient safety.


Surveillance Systems for Early Lumpy Skin Disease Detection and Rapid Response

Introduction

Lumpy Skin Disease (LSD) is a highly contagious viral infection that primarily affects cattle and has the potential to cause significant economic losses in the livestock industry. Rapid detection and effective management of LSD outbreaks are essential to prevent its spread and mitigate its impact. In recent years, advancements in surveillance systems have played a crucial role in early LSD detection and rapid response, leading to improved LSD care and control strategies.

The Threat of Lumpy Skin Disease

Lumpy Skin Disease is caused by the LSD virus, a member of the Poxviridae family. It is characterized by fever, nodules, and skin lesions on the animal's body, leading to reduced milk production, weight loss, and decreased quality of hides. The disease can spread through direct contact, insect vectors, and contaminated fomites, making it a major concern for livestock industries globally.


Surveillance Systems for Early Detection

Traditional methods of disease detection relied on visual observation and clinical diagnosis. However, these methods can delay the identification of LSD cases, allowing the disease to spread further. Modern surveillance systems leverage technology to enhance early detection. These systems utilize a combination of methods, including:

Remote Sensing and Imaging: Satellite imagery and aerial drones equipped with high-resolution cameras can monitor large livestock areas for signs of skin lesions and changes in animal behavior. These images are analyzed using machine learning algorithms to identify potential LSD outbreaks.

IoT and Wearable Devices: Internet of Things (IoT) devices such as temperature sensors, accelerometers, and RFID tags can be attached to cattle. These devices continuously collect data on vital parameters and movement patterns, allowing for the early detection of abnormalities associated with LSD infection.

Data Analytics and Big Data: Surveillance data from various sources, including veterinary clinics, abattoirs, and livestock markets, can be aggregated and analyzed using big data analytics. This enables the identification of patterns and trends that may indicate the presence of LSD.

Health Monitoring Apps: Mobile applications allow farmers and veterinarians to report suspected cases of LSD and track disease progression. These apps facilitate real-time communication and coordination, aiding in early response efforts.
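As a rough illustration of the wearable-sensor item above, the sketch below flags body-temperature readings that sit well above an animal's own baseline, since fever is one of the signs of LSD mentioned earlier. It is a simplified assumption of how such screening might work, not a validated veterinary method. The function name, the z-score threshold, and the minimum spread are hypothetical.

```python
from statistics import mean, stdev


def flag_fever(baseline_temps_c, new_temps_c, z_threshold=3.0, min_sigma=0.1):
    """Return indices of new readings far above the animal's own baseline.

    min_sigma guards against a near-constant baseline making every small
    rise look extreme.
    """
    mu = mean(baseline_temps_c)
    sigma = max(stdev(baseline_temps_c), min_sigma)
    return [i for i, t in enumerate(new_temps_c)
            if (t - mu) / sigma > z_threshold]


baseline = [38.5, 38.6, 38.4, 38.5, 38.7, 38.5]   # illustrative readings in °C
suspect = flag_fever(baseline, [38.6, 40.2, 38.5])
```

A flagged reading would only be a prompt for inspection, since heat stress or other infections can raise temperature too.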

Rapid Response and LSD Care

Early detection is only half the battle; a rapid and coordinated response is equally crucial. Surveillance systems are not only capable of identifying potential outbreaks but also play a pivotal role in implementing effective LSD care strategies:

Isolation and Quarantine: Detected infected animals can be isolated and quarantined promptly, preventing the further spread of the disease. Surveillance data helps identify high-risk areas and individuals for targeted quarantine measures.

Vaccination Campaigns: Based on surveillance data indicating disease prevalence in specific regions, targeted vaccination campaigns can be initiated to immunize susceptible animals and halt the spread of LSD.

Vector Control: Surveillance systems can track insect vectors responsible for transmitting the LSD virus. This information enables the implementation of vector control measures to reduce disease transmission.

Resource Allocation: Effective response requires proper resource allocation. Surveillance data helps authorities allocate veterinary personnel, medical supplies, and equipment to affected areas efficiently.

Challenges and Future Directions

While surveillance systems offer promising solutions, challenges remain. Limited access to technology, particularly in rural areas, can hinder the implementation of these systems. Data privacy concerns and the need for robust cybersecurity measures are also crucial considerations.

In the future, the integration of artificial intelligence (AI) and machine learning can further enhance the accuracy of disease prediction models. Real-time genetic sequencing of the virus can provide insights into its mutations and evolution, aiding in the development of more effective vaccines.

Conclusion

Surveillance systems have revolutionized the way we detect, respond to, and manage Lumpy Skin Disease outbreaks. The ability to identify potential cases early and respond rapidly has significantly improved LSD care and control strategies. As technology continues to advance, these systems will play an increasingly vital role in safeguarding livestock industries against the threat of Lumpy Skin Disease and other contagious infections. Effective collaboration between veterinary professionals, farmers, researchers, and technology developers will be key to successfully harnessing the potential of surveillance systems for the benefit of animal health and the global economy.


Emulator vs. Real Device Testing: What Should You Choose?

In our first article of the Mobile Application Testing series, we introduced the core concepts of mobile testing—highlighting how mobile apps must be tested across multiple platforms, screen sizes, networks, and user behaviors. We also emphasized how fragmented mobile environments increase the complexity of quality assurance, making robust mobile device testing strategies essential.

Building on that foundation, this blog focuses on one of the most critical decisions mobile testers face:

Should you test your app using emulators or real devices?

Both approaches serve essential roles in mobile QA, but each comes with its own set of advantages, limitations, and ideal use cases. Understanding when and how to use emulator vs real device testing can make your testing strategy more effective, scalable, and cost-efficient.

What is Emulator Testing?

An emulator is a software-based tool that mimics the configuration, behavior, and operating system of a real mobile device. Developers often use Android emulators (from Android Studio) or iOS simulators (from Xcode) to create virtual devices for testing purposes.

These emulators simulate the device’s hardware, screen, memory, and operating system, enabling testers to validate apps without needing physical smartphones or tablets.

✅ Advantages of Emulator Testing

Cost-Effective: No need to invest in purchasing or maintaining dozens of physical devices.

Quick Setup: Developers can quickly spin up multiple virtual devices with various screen sizes, OS versions, or languages.

Integrated Debugging Tools: Emulators are deeply integrated with IDEs like Android Studio and Xcode, offering extensive logs, breakpoints, and performance analysis tools.

Faster for Early Testing: Ideal for initial development phases when functionality, UI alignment, or basic workflows are being verified.

❌ Limitations of Emulator Testing

Lack of Real-World Accuracy: Emulators can’t fully replicate real-world conditions like varying network speeds, incoming calls, push notifications, or sensor behavior. They can simulate some of these, but only approximately.

Poor Performance Testing Capability: Metrics like battery drainage, CPU usage, and memory leaks are not accurately reflected.

Incompatibility with Some Features: Features relying on Bluetooth, NFC, camera APIs, or fingerprint sensors often fail or behave inconsistently.

What is Real Device Testing?

Real device testing involves testing mobile applications on actual smartphones or tablets—physical devices users interact with in the real world. This method allows teams to validate how an app performs across different OS versions, device models, network types, and environmental factors.

✅ Advantages of Real Device Testing

True User Experience Validation: You get a real-world view of app performance, responsiveness, battery usage, and usability.

Reliable Performance Testing: Tests such as scrolling lag, animations, and touch responsiveness behave authentically on real devices.

Sensor and Hardware Interaction: Features like GPS, camera, gyroscope, accelerometer, and biometric authentication can only be validated reliably on real hardware.

Detect Device-Specific Bugs: Certain bugs appear only under specific hardware or manufacturer configurations (e.g., MIUI, Samsung One UI), which emulators might not catch.

❌ Limitations of Real Device Testing

Higher Cost: Maintaining a physical device lab with hundreds of devices is expensive and often impractical for small or mid-sized teams.

Manual Setup and Maintenance: Devices must be updated regularly and maintained for consistent results.

Scalability Issues: Executing automated test suites across many real devices can be time-consuming without proper infrastructure.

When Should You Use Emulators or Real Devices?

The most effective mobile testing strategies combine both approaches, using each at different phases of the development lifecycle.

✔ Use Emulators When:

You’re in the early development phase.

You need to test across multiple screen resolutions and OS versions quickly.

You're writing or debugging unit and functional tests.

Your team is working in a CI/CD environment and needs quick feedback loops.

✔ Use Real Devices When:

You're close to the release phase and need real-world validation.

You need to verify device-specific UI bugs or performance bottlenecks.

You’re testing features like Bluetooth, GPS, camera, or biometrics.

You're evaluating battery consumption, network interruptions, or gesture interactions.
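The two checklists above can be read as a simple routing rule for test jobs. The following is only a minimal sketch: the `QaJob` class and its flag names are hypothetical and do not belong to any testing framework.

```python
from dataclasses import dataclass


@dataclass
class QaJob:
    """Hypothetical description of one test job; field names are illustrative."""
    name: str
    needs_hardware: bool = False        # Bluetooth, GPS, camera, biometrics
    measures_performance: bool = False  # battery, network interruptions, gestures
    pre_release: bool = False           # final validation before shipping


def choose_target(job: QaJob) -> str:
    """Return 'real_device' when any real-device criterion applies, else 'emulator'."""
    if job.needs_hardware or job.measures_performance or job.pre_release:
        return "real_device"
    return "emulator"


unit_target = choose_target(QaJob("unit tests"))
pairing_target = choose_target(QaJob("bluetooth pairing", needs_hardware=True))
```

Defaulting to the emulator keeps early feedback loops fast, and any single real-device criterion is enough to route the job to hardware.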

Bridging the Gap with Cloud-Based Testing Platforms

Maintaining a large in-house device lab is costly and hard to scale. This is where cloud-based mobile testing platforms help. These services provide access to thousands of real and virtual devices over the internet—letting you test across multiple platforms at scale.

🔧 Top Platforms to Know

1. BrowserStack

Offers instant access to 3,000+ real mobile devices and browsers.

Supports both manual and automated testing.

Integrates with Appium, Espresso, and XCUITest.

Real-time debugging, screenshots, and video logs.

2. AWS Device Farm

Lets you test apps on real Android and iOS devices hosted in the cloud.

Runs tests in parallel to reduce test time.

Supports multiple test frameworks: Appium, Calabash, UI Automator, etc.

Integrates with Jenkins, GitLab, and other CI tools.

3. Sauce Labs

Offers both simulators and real devices for mobile app testing.

Provides deep analytics, performance reports, and device logs.

Scalable test automation infrastructure for large teams.

Final Thoughts: What Should You Choose?

In reality, it’s not Emulator vs Real Device Testing, but Emulator + Real Device Testing.

Each serves a specific purpose. Emulators are ideal for cost-effective early-stage testing, while real devices are essential for true user experience validation.

To build a reliable, scalable, and agile mobile testing pipeline:

Start with emulators for fast feedback.

Use real devices for regression, compatibility, and pre-release validation.

Leverage cloud testing platforms for extensive device coverage without infrastructure overhead.

At Testrig Technologies, we help enterprises and startups streamline their mobile QA process with a combination of emulator-based automation, real device testing, and cloud testing solutions. Whether you're launching your first app or optimizing performance at scale, our testing experts are here to ensure quality, speed, and reliability.


3-axis accelerometer, Accelerometer sensor application, vibration sensors

LIS2MDL Series 3.6V 50 Hz High Performance 3-Axis Digital Magnetic Sensor-LGA-12


Fibre Optic Gyroscope Market Emerging Trends Shaping Navigation Technology

The fibre optic gyroscope market is undergoing significant transformation driven by rapid technological advancements, increasing demand for precise navigation, and growth in autonomous systems. FOGs, which are used to measure angular velocity using the interference of light, have become a preferred solution over mechanical gyroscopes due to their higher reliability, compact design, and immunity to electromagnetic interference. As global industries seek more advanced inertial navigation systems, the FOG market is positioned for substantial growth, influenced by emerging trends across aerospace, defense, marine, and industrial automation sectors.
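The interference mentioned above is the Sagnac effect: two beams travel around a fibre coil in opposite directions, and rotation produces a phase difference proportional to the angular rate. A rough sketch of that relationship follows, using the standard fibre-coil form Δφ = 2πLDΩ / (λc). The coil length, diameter, and wavelength are illustrative assumptions, not figures from any particular product.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def sagnac_phase_shift(fiber_length_m, coil_diameter_m, wavelength_m, rate_rad_s):
    """Sagnac phase difference (rad) between counter-propagating beams.

    Uses the fibre-coil form: dphi = 2*pi*L*D*Omega / (lambda*c).
    """
    return (2 * math.pi * fiber_length_m * coil_diameter_m * rate_rad_s) / (wavelength_m * C)


# Assumed values: 1 km of fibre on a 10 cm coil, 1550 nm source,
# sensing Earth's rotation rate (about 7.292e-5 rad/s).
earth_rate = 7.292e-5
dphi = sagnac_phase_shift(1000.0, 0.10, 1550e-9, earth_rate)
print(f"phase shift: {dphi:.3e} rad")
```

Because the phase shift scales with both fibre length and coil diameter, longer coils improve sensitivity. That is one reason coil design trades directly against size.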

Rising Demand for Autonomous Navigation Systems

One of the most prominent trends in the fibre optic gyroscope market is the surging demand for autonomous vehicles and navigation systems. Self-driving cars, unmanned aerial vehicles (UAVs), and autonomous underwater vehicles (AUVs) require precise motion sensing and orientation tracking. FOGs offer the required accuracy and durability to support these platforms. This trend is particularly strong in the defense sector, where unmanned ground and aerial systems rely heavily on gyroscopic inputs for mission-critical operations in GPS-denied environments.

Integration with AI and Sensor Fusion Technologies

The integration of fibre optic gyroscopes with artificial intelligence (AI) and sensor fusion platforms is accelerating. Sensor fusion combines data from multiple sensors such as accelerometers, magnetometers, and FOGs to create a more accurate and reliable navigation system. When enhanced with AI algorithms, these systems can predict and adjust navigation paths dynamically. This development is crucial for sectors such as robotics, aerospace, and space exploration, where even minor errors in orientation can lead to mission failure.
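One of the simplest forms of sensor fusion is a complementary filter, sketched below for a single tilt axis. It stands in for the more elaborate fusion schemes used in practice and is not a description of any vendor's algorithm. The bias, sample rate, and blend factor `alpha` are assumed values.

```python
def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98, initial_angle=0.0):
    """Fuse gyro rate (deg/s) and accelerometer-derived angle (deg) samples.

    Each step integrates the gyro for short-term accuracy, then pulls the
    estimate toward the accelerometer angle to cancel long-term gyro drift.
    """
    angle = initial_angle
    estimates = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Stationary sensor held at 10 degrees; the gyro has a constant 0.5 deg/s bias.
n, dt = 5000, 0.01  # 50 seconds of data at 100 Hz
fused = complementary_filter([0.5] * n, [10.0] * n, dt, initial_angle=10.0)
gyro_only = 10.0 + 0.5 * dt * n  # pure integration accumulates the bias
```

In this example the gyro-only estimate drifts by 25 degrees over 50 seconds, while the fused estimate stays within about a quarter of a degree of the true angle.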

Advancements in Miniaturization and MEMS Integration

Miniaturization of FOG technology has become a key focus area, enabling its application in compact and mobile platforms. Recent trends highlight the convergence of microelectromechanical systems (MEMS) with fibre optic gyroscopes, allowing for the development of hybrid gyros that combine the precision of FOGs with the size and cost-effectiveness of MEMS. This innovation is opening up new opportunities in consumer electronics, wearable technology, and portable navigation devices.

Growing Adoption in Space and Satellite Applications

The space industry is increasingly adopting fibre optic gyroscopes for satellite attitude control and interplanetary missions. As space agencies and private companies ramp up satellite launches, there is an intensified need for lightweight and highly reliable orientation systems. FOGs provide excellent long-term stability and resistance to harsh environments, making them ideal for orbiting satellites, launch vehicles, and deep space missions. This trend is expected to grow with the expansion of global satellite constellations and interplanetary exploration initiatives.

Rising Emphasis on Defense Modernization

Globally, countries are investing heavily in defense modernization, and fibre optic gyroscopes are playing a vital role in this transformation. From missile guidance to submarine navigation and armored vehicle stabilization, FOGs are essential components of next-generation defense equipment. The increased use of drones and advanced munitions has further bolstered demand, with militaries requiring reliable navigation tools in GPS-compromised conditions. FOGs meet this need due to their ability to function accurately without external signals.

Increasing Commercial Aviation Applications

In commercial aviation, FOGs are being increasingly used in inertial navigation systems (INS) for both fixed-wing and rotary aircraft. With the growth in global air traffic and the demand for more efficient and safer aircraft, aviation manufacturers are incorporating high-precision navigation systems. Fibre optic gyroscopes are helping ensure reliable flight control, especially under dynamic or turbulent conditions. As air travel rebounds post-pandemic and new aircraft technologies emerge, FOGs are expected to see expanded deployment.

Environmental and Energy Industry Utilization

Another emerging application area for FOGs is in the energy sector, particularly in oil and gas exploration and pipeline monitoring. In these environments, the ability to monitor orientation and rotation accurately is critical. FOG-based systems are being deployed for directional drilling, borehole surveying, and geophysical measurements. Their ruggedness and reliability in extreme conditions make them a valuable asset for such industries, a trend that is expected to strengthen with global energy demand.

Technological Advancements and R&D Investment

Continuous innovation is fueling the growth of the FOG market. Companies are investing in advanced research and development to enhance performance, reduce costs, and expand the application scope. Innovations in optical fiber materials, laser sources, and signal processing techniques are leading to better performance metrics in terms of bias stability, noise reduction, and temperature sensitivity. As a result, FOGs are becoming more accessible for commercial and industrial applications.

Conclusion

The fibre optic gyroscope market is evolving rapidly, with emerging trends pointing to a broader application landscape and improved technological capabilities. From autonomous vehicles and AI integration to space exploration and defense modernization, FOGs are becoming indispensable components in critical navigation systems. With ongoing innovation and increased adoption across sectors, the future of the FOG market looks promising, offering robust opportunities for stakeholders and setting new benchmarks in precision sensing.


Application of Captive Load Testing in Aircraft Wing Load Analysis

In the aerospace industry, ensuring the structural integrity and safety of aircraft components is paramount. One critical aspect of this is the testing and validation of aircraft wings, which endure significant aerodynamic loads during flight. Among the various testing methodologies, Captive Load Testing (CTS Testing) has emerged as a crucial technique for accurately assessing wing load responses under controlled conditions. This article explores the application of Captive Load Testing in aircraft wing load analysis, highlighting its importance, methodology, benefits, and real-world applications.

Understanding Aircraft Wing Loads

Aircraft wings are primary load-bearing components designed to generate lift and support the weight of the aircraft during flight. Wings experience a wide range of loads, including aerodynamic forces, inertial loads during maneuvers, gust loads, and ground handling stresses. These loads vary dynamically and can cause complex stress distributions throughout the wing structure.

Accurate assessment of wing load response is essential to:

Ensure structural safety and reliability

Optimize wing design for weight and performance

Comply with certification standards set by aviation authorities

Predict the lifespan and maintenance needs of the wing

Traditional analytical and computational methods, such as finite element analysis (FEA), provide valuable insights, but physical testing remains indispensable for validation.

What is Captive Load Testing?

Captive Load Testing (CTS Testing) is a testing methodology used to apply controlled loads to a component or structure in a fixed setup, often referred to as "captive," because the test specimen is restrained or supported in a specific test fixture. Unlike free or full-scale flight testing where loads vary uncontrollably, captive load testing allows precise application and measurement of loads in a repeatable environment.

In the context of aircraft wings, captive load testing involves mounting a wing or wing section in a test rig where hydraulic actuators or mechanical devices apply loads that simulate aerodynamic forces experienced during flight. The wing is instrumented with strain gauges, displacement sensors, and other instrumentation to record its response.

Objectives of Captive Load Testing in Wing Load Analysis

The primary goals of captive load testing for aircraft wings include:

Validation of Design Assumptions: Verifying that the wing structure behaves as predicted by design models under simulated load conditions.

Structural Integrity Assessment: Identifying any weak points, stress concentrations, or potential failure modes.

Certification Support: Providing evidence to aviation authorities such as the FAA or EASA that the wing meets safety and durability requirements.

Damage Tolerance Evaluation: Understanding how cracks, corrosion, or fatigue affect load carrying capacity.

Material and Component Testing: Evaluating performance of composite materials, fasteners, and bonding under load.

The CTS Testing Setup for Aircraft Wings

A typical captive load testing setup for aircraft wings consists of:

Test Fixture or Rig: A large, robust frame designed to hold the wing securely in place while allowing controlled application of loads at various points.

Load Application System: Hydraulic actuators or servo-controlled mechanical devices that apply forces and moments to simulate aerodynamic and inertial loads.

Instrumentation: A network of strain gauges, displacement transducers, accelerometers, and sometimes acoustic emission sensors attached to critical areas of the wing to monitor structural response.

Data Acquisition System: High-speed data recorders and analysis software collect and process the sensor data for real-time monitoring and post-test evaluation.

Depending on the wing size and test objectives, captive load testing can be conducted on full-scale wings, subassemblies, or scaled-down models.
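To show how a strain gauge reading from the instrumentation above becomes an engineering quantity, here is a minimal sketch. It assumes a quarter-bridge Wheatstone circuit, the small-strain approximation, and uniaxial loading. The gauge factor, voltages, and modulus are illustrative values.

```python
def quarter_bridge_strain(v_out, v_excitation, gauge_factor=2.0):
    """Approximate strain from a quarter-bridge Wheatstone circuit.

    Small-strain approximation: v_out / v_excitation ~= gauge_factor * strain / 4.
    """
    return 4.0 * (v_out / v_excitation) / gauge_factor


def uniaxial_stress_pa(strain, youngs_modulus_pa):
    """Hooke's law for uniaxial loading: stress = E * strain."""
    return youngs_modulus_pa * strain


# Assumed reading: 2.5 mV output on a 5 V excitation, gauge factor 2.0,
# aluminium-like modulus of 70 GPa.
strain = quarter_bridge_strain(2.5e-3, 5.0)   # about 1000 microstrain
stress = uniaxial_stress_pa(strain, 70e9)     # about 70 MPa
```

Composite wings are anisotropic, so the single-modulus conversion here would not apply to them directly; it is shown only for the simplest metallic case.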

How Captive Load Testing is Conducted

The process of captive load testing on aircraft wings typically follows these steps:

1. Preparation and Instrumentation

The wing or wing section is prepared by installing sensors at predetermined locations based on structural analysis. Strain gauges measure surface strain, while displacement sensors track deflections.

2. Mounting

The wing is carefully mounted in the test rig, ensuring alignment and support points simulate real-world boundary conditions such as fuselage attachments.

3. Load Application

Using hydraulic actuators, loads are applied incrementally to simulate various flight conditions, including:

Static loads representing steady-state flight

Gust loads simulating atmospheric turbulence

Maneuver loads from sharp turns or sudden pitch changes

Each load case is applied under controlled conditions while continuously monitoring wing response.

4. Data Collection and Analysis

Sensor data is collected throughout the test, allowing engineers to observe strain distribution, deflections, and any signs of structural distress. This data is compared against predicted values from computational models.

5. Post-Test Inspection

After load application, the wing undergoes detailed inspections for cracks, delaminations, or other damage. Sometimes non-destructive testing methods like ultrasonic or X-ray inspection are used.
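The incremental loading and model comparison in steps 3 and 4 can be sketched as a loop that halts when measurement and prediction diverge. Everything here is hypothetical: the 10 % tolerance, the load fractions, and the two stand-in response functions are assumptions for illustration, not certification values.

```python
def run_load_steps(limit_load_n, fractions, measure_strain, predict_strain, tolerance=0.10):
    """Step through load fractions and compare measured vs predicted strain.

    measure_strain and predict_strain are caller-supplied callables (load in N
    -> strain). The sequence stops at the first step whose relative deviation
    exceeds the tolerance.
    """
    log = []
    for fraction in fractions:
        load = fraction * limit_load_n
        measured = measure_strain(load)
        predicted = predict_strain(load)
        deviation = abs(measured - predicted) / abs(predicted)
        ok = deviation <= tolerance
        log.append((fraction, measured, predicted, deviation, ok))
        if not ok:
            break  # hold the test for engineering review
    return log


def predict(load):
    """Stand-in linear model prediction (assumed)."""
    return 1.0e-8 * load


def measure(load):
    """Stand-in measurement that grows softer than predicted at higher load."""
    return 1.0e-8 * load * (1.0 + 2.0e-6 * load)


log = run_load_steps(100_000.0, [0.2, 0.4, 0.6, 0.8, 1.0], measure, predict)
```

With these stand-in functions the deviation reaches 12 % at the 60 % load step, so the sequence stops there instead of continuing to full load.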

Benefits of Using Captive Load Testing in Wing Load Analysis

There are several advantages to incorporating captive load testing in the wing design and certification process:

Accuracy and Repeatability

CTS Testing provides a controlled environment where loads can be precisely applied and repeated. This reduces variability and allows detailed assessment of wing behavior under specific load cases.

Early Detection of Structural Issues

Captive load testing can reveal stress concentrations and potential failure points before full-scale flight testing, reducing risks and development costs.

Validation of Computational Models

Physical test data serves to validate and calibrate computational models such as finite element models, improving their predictive accuracy for future designs.

Supports Certification and Compliance

Regulatory agencies require evidence of structural safety. CTS Testing provides robust, traceable data to support airworthiness certification.

Testing of Repair and Modification Effects

After repairs or structural modifications, captive load testing can assess if the wing maintains its load carrying capability.

Challenges and Limitations

While Captive Load Testing offers numerous benefits, it also comes with challenges:

High Cost and Complexity: Building test rigs and conducting tests on large wings can be expensive and resource-intensive.

Scaling Issues: For very large wings, testing full scale may be impractical, requiring scaled models and extrapolation.

Boundary Condition Replication: Perfectly simulating in-flight constraints on the wing in a fixed test rig can be difficult.

Limited Load Cases: Some complex dynamic loads experienced in flight may be hard to replicate precisely.

Despite these challenges, captive load testing remains a cornerstone in structural testing for aviation.

Real-World Applications and Case Studies

Example 1: Boeing 787 Dreamliner Wing Testing

During the development of the Boeing 787, captive load testing played a critical role in validating the composite wing design. Engineers applied simulated flight loads to full-scale wings to measure strain and deflection, confirming that the novel materials and structure met design expectations.

Example 2: Airbus A350 Wing Load Validation

Airbus employed captive load testing extensively for the A350 wing, which uses advanced composite materials. The testing helped verify the wing’s ability to handle gust loads and ensured compliance with stringent certification standards.

Example 3: Military Fighter Aircraft

Military aircraft wings undergo rigorous CTS Testing to ensure they can withstand extreme maneuver loads. For example, the F-35 Lightning II wings were tested under captive load conditions to validate structural integrity before flight trials.

The Future of Captive Load Testing in Aviation

With advances in materials science, aerospace design, and sensor technology, captive load testing continues to evolve:

Integration with Digital Twins: Real-time data from CTS Testing feeds digital twin models for improved predictive maintenance and design optimization.

Enhanced Sensor Networks: Wireless and fiber optic sensors enable more detailed and distributed monitoring of wing structures.

Automated Test Systems: Robotics and AI help automate load application and data analysis, increasing efficiency and accuracy.

Composite and Hybrid Structures: As composites become dominant, CTS Testing adapts to characterize their unique failure modes and load responses.

Advances in Sensor Technology and Data Acquisition for Captive Load Testing

One of the key drivers behind the evolution of Captive Load Testing (CTS Testing) in aviation is the rapid advancement in sensor technology and data acquisition systems. Historically, wing load testing relied heavily on strain gauges and displacement sensors connected via wired systems, which had limitations in terms of sensor placement, wiring complexity, and data fidelity.

Fiber Optic Sensors

Fiber optic sensors have revolutionized structural health monitoring and load testing. These sensors are lightweight, immune to electromagnetic interference, and capable of multiplexing many sensing points along a single fiber. Technologies such as Fiber Bragg Gratings (FBGs) can measure strain, temperature, and vibration with high accuracy and spatial resolution.

In captive load testing, the integration of fiber optic sensors allows for:

High-density sensor arrays: Providing detailed strain maps across the wing surface.

Real-time monitoring: Continuous data streams enable immediate detection of anomalies or unexpected responses.

Long-term durability: Fiber optics are less susceptible to environmental degradation compared to traditional strain gauges.

The use of fiber optic sensing during CTS Testing thus improves the granularity and reliability of load measurements, enabling better insight into wing behavior under complex loading scenarios.
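A Fiber Bragg Grating reports strain as a shift in its reflected wavelength. The sketch below uses the standard constant-temperature relation Δλ/λ_B = (1 − p_e)·ε, with p_e ≈ 0.22 as a typical value for silica fibre. The grating wavelength and shift are assumed, and temperature compensation is left out.

```python
def fbg_strain(wavelength_shift_m, bragg_wavelength_m, photoelastic_coeff=0.22):
    """Axial strain from a Bragg wavelength shift at constant temperature.

    Uses d_lambda / lambda_B = (1 - p_e) * strain, where p_e ~ 0.22 is a
    typical effective photo-elastic coefficient for silica fibre.
    """
    return wavelength_shift_m / (bragg_wavelength_m * (1.0 - photoelastic_coeff))


# Assumed measurement: a 1.2 nm shift on a 1550 nm grating.
strain = fbg_strain(1.2e-9, 1550e-9)
microstrain = strain * 1e6
```

In a real test the temperature contribution to the wavelength shift would have to be separated out, for example with an unstrained reference grating.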

Wireless Sensor Networks

Wireless sensor networks (WSNs) are gaining traction in captive load testing due to their ease of deployment and flexibility. These systems eliminate cumbersome wiring, reduce test setup times, and facilitate sensor placement in hard-to-reach areas.

In CTS Testing of aircraft wings, WSNs can:

Enable rapid instrumentation of test articles.

Allow dynamic reconfiguration of sensor placement during testing.

Facilitate integration with drones or robotic platforms for automated inspections.

Challenges remain in ensuring reliable data transmission in noisy electromagnetic environments and managing power consumption, but ongoing improvements in low-power protocols and robust communication technologies are addressing these issues.

Enhanced Data Acquisition and Analysis

Modern data acquisition systems used in captive load testing feature high sampling rates, multi-channel synchronization, and integrated signal processing. Coupled with advanced software tools, these systems support:

Automated anomaly detection: Machine learning algorithms can flag unusual strain or displacement patterns.

Real-time visualization: Engineers can monitor test progress and structural responses instantaneously.

Data fusion: Combining inputs from multiple sensor types (strain, acceleration, acoustic emission) for comprehensive analysis.

These advances are crucial for maximizing the value of CTS Testing by extracting detailed structural behavior information, reducing test durations, and enhancing safety margins.
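As a simple statistical stand-in for the machine learning anomaly detection mentioned above, the sketch below flags readings that deviate strongly from a baseline window. The 3-sigma threshold and the microstrain values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(samples, baseline_count, z_threshold=3.0):
    """Return indices of samples whose z-score against the baseline exceeds the threshold.

    The first baseline_count samples are assumed to represent healthy behaviour.
    """
    baseline = samples[:baseline_count]
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [
        i for i, value in enumerate(samples[baseline_count:], start=baseline_count)
        if abs(value - mu) > z_threshold * sigma
    ]


# Assumed microstrain readings; the jump at index 8 is deliberate.
readings = [500, 502, 498, 501, 499, 500, 501, 499, 540, 500]
anomalies = flag_anomalies(readings, baseline_count=6)
```

A production system would typically use several channels and a learned model, but the principle of comparing new data against an established baseline is the same.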

Integration of Captive Load Testing with Digital Twin Technologies

Digital twin technology represents one of the most promising frontiers in aerospace engineering. A digital twin is a dynamic, virtual representation of a physical system that continuously integrates sensor data and simulation models to provide real-time insights into system performance and health.

Role of CTS Testing in Building Digital Twins

Captive load testing generates a rich dataset that forms the foundation for accurate digital twins of aircraft wings. Key contributions include:

Model Validation: Experimental strain and displacement data from CTS Testing validate and calibrate finite element models and other simulation tools.

Damage Modeling: CTS Testing under different load conditions reveals how damage initiates and propagates, informing damage tolerance models integrated into the digital twin.

Operational Scenarios: Realistic load cases applied during captive testing ensure the digital twin accurately reflects in-service conditions.

Once a digital twin is established, continuous sensor data from in-flight monitoring can update the model, enabling predictive maintenance and optimizing aircraft performance.
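Model calibration against test data can be illustrated with the simplest possible case: fitting one scale factor between predicted and measured strains by least squares. This is only a sketch under that single-parameter assumption. Real finite element calibration adjusts many parameters, and the numbers below are invented for the example.

```python
def fit_scale_factor(predicted, measured):
    """Least-squares scale k minimising sum((measured - k * predicted)**2)."""
    numerator = sum(m * p for m, p in zip(measured, predicted))
    denominator = sum(p * p for p in predicted)
    return numerator / denominator


# Assumed strains in microstrain: the model under-predicts by 5 %.
predicted = [100.0, 200.0, 300.0, 400.0]
measured = [105.0, 210.0, 315.0, 420.0]
k = fit_scale_factor(predicted, measured)
calibrated = [k * p for p in predicted]
```

The closed form k = Σ(m·p) / Σ(p²) follows from setting the derivative of the squared error with respect to k to zero.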

Benefits for Maintenance and Lifecycle Management

The integration of CTS Testing data into digital twins facilitates:

Condition-based Maintenance: Predicting when components require inspection or replacement before failure occurs.

Extended Service Life: By understanding actual load histories, wings can be certified for longer operational periods safely.

Design Improvement Feedback: Insights from digital twins enable iterative improvements in wing design and materials.

This synergy between captive load testing and digital twin technology is poised to transform aircraft lifecycle management from reactive to proactive strategies.

Advanced Materials and Their Impact on Captive Load Testing

The aviation industry is progressively adopting advanced materials such as carbon fiber reinforced polymers (CFRPs), titanium alloys, and hybrid composites in wing structures. These materials offer high strength-to-weight ratios but introduce new complexities for load testing.

Challenges with Composite Materials

Composites exhibit anisotropic behavior and complex failure mechanisms like delamination, fiber breakage, and matrix cracking. Unlike traditional aluminum alloys, composite damage is often internal and difficult to detect visually.

Captive load testing must therefore:

Use more sophisticated sensor arrays capable of detecting subtle changes within the material.

Apply multi-axial loading conditions to simulate real stress states.

Incorporate non-destructive evaluation techniques such as ultrasonic scanning or thermography alongside CTS Testing.

Role of CTS Testing in Composite Wing Certification

Regulatory agencies require thorough testing to certify composite wings. CTS Testing provides:

Validation of structural performance under various load spectra.

Data on fatigue behavior and damage progression.

Evidence for damage tolerance and fail-safe design concepts.

Effective captive load testing ensures composites meet stringent safety standards while optimizing weight savings.

Multidisciplinary Approaches Combining CTS Testing

Aircraft wing load analysis is inherently multidisciplinary, involving aerodynamics, structures, materials science, and controls engineering. Modern captive load testing integrates these domains through:

Aeroelastic Testing

Aeroelasticity examines the interaction between aerodynamic forces and structural deformation. CTS Testing setups increasingly incorporate wind tunnels or flow simulation combined with load application to capture aeroelastic effects such as flutter or divergence.

By applying captive loads while exposing the wing to airflow, engineers can:

Assess stability margins under coupled aerodynamic and structural loads.

Detect flutter onset and evaluate suppression techniques.

Validate computational aeroelastic models.

Thermal and Environmental Effects

Wings experience varying temperatures and environmental conditions in flight that affect material properties and load response. Advanced CTS Testing simulates these conditions by:

Heating or cooling the wing during load application.

Introducing humidity or corrosive atmospheres to study degradation effects.

Such combined environmental and load testing ensures wing designs are robust across the full range of operational conditions.

Industry Trends Driving Future CTS Testing Innovations

Automation and Robotics

The complexity and scale of captive load testing are pushing the industry towards greater automation. Robots and automated actuators can:

Precisely apply complex load profiles.

Handle heavy and awkward wing components safely.

Conduct repetitive test sequences with minimal human intervention.

Automation increases efficiency, reduces human error, and improves data consistency.

Artificial Intelligence and Machine Learning

AI algorithms analyze vast amounts of CTS Testing data to:

Detect early signs of structural anomalies.

Optimize load application sequences for thorough testing.

Predict remaining useful life based on load-response patterns.

Machine learning enhances decision-making and enables more intelligent testing regimes.

Virtual and Augmented Reality

Virtual reality (VR) and augmented reality (AR) tools allow engineers to visualize strain distributions and stress patterns in immersive environments during captive load testing. This improves understanding and supports collaborative problem-solving.

Economic and Environmental Impacts of Improved CTS Testing

Cost Reduction

Improved CTS Testing leads to:

Reduced development time: Faster identification of structural issues means quicker design iterations.

Lower certification costs: More precise testing data satisfies regulatory requirements efficiently.

Extended aircraft service life: Better damage tolerance reduces premature retirements and costly repairs.

Environmental Benefits

By enabling lighter and more durable wing designs through precise load characterization, CTS Testing contributes to:

Fuel efficiency: Weight savings translate to reduced fuel consumption and emissions.

Sustainable aircraft design: Optimized structures require fewer raw materials and generate less waste during manufacturing.

Summary

The application of Captive Load Testing in aircraft wing load analysis is evolving rapidly with advances in sensor technology, digital integration, materials science, and automation. CTS Testing remains essential for ensuring the safety, performance, and longevity of aircraft wings, while also supporting innovative design and certification processes.

As aviation pushes towards more efficient, lightweight, and sustainable aircraft, captive load testing will be at the forefront—providing the critical data and validation needed to make these advances a reality.


Vibration Analysis in Gearboxes

Vibration analysis in gearboxes is a critical practice within predictive maintenance and condition monitoring that protects the health and longevity of rotating machinery. Gearbox vibration diagnostics plays an essential role in industrial environments, offering early detection of gear tooth damage, misalignment, imbalance, bearing faults, and lubrication issues. By employing technologies such as wireless sensors, spectrum analysis, and frequency domain monitoring within modern Industry 4.0 frameworks, businesses can reduce downtime, improve safety, and optimize asset performance.

Understanding Gearbox Vibration Signatures

Every gearbox emits a distinctive vibration signature during normal operation. When abnormalities occur, such as cracked gear teeth or insufficient lubrication, the vibration amplitude and frequency patterns shift. Detecting these deviations through time waveform analysis and spectral signature comparison enables accurate diagnosis. Technicians often use accelerometers, velocity sensors, and displacement probes to collect data, which is then processed via Fast Fourier Transform (FFT) to isolate harmonic peaks indicative of specific faults. Vibration velocity, vibration acceleration, and harmonic resonance are the key quantities in this analysis. AI-driven analytics platforms interpret trends in real time, giving maintenance engineers insight into degraded conditions before catastrophic failure.
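To make the spectral step concrete, the sketch below computes an amplitude spectrum for a synthetic signal and picks out the dominant peak. It uses a naive O(N²) discrete Fourier transform from the standard library as a stand-in for a real FFT routine. The sample rate and signal content are assumed.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Single-sided amplitude spectrum via a naive O(N^2) DFT (FFT stand-in).

    The factor of 2 folds in the negative-frequency half; the DC bin (k = 0)
    is doubled too, which this sketch ignores.
    """
    n = len(signal)
    mags = []
    for k in range(n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        mags.append(2.0 * abs(acc) / n)
    return mags


sample_rate = 1024  # Hz (assumed)
n = 256
# Synthetic vibration: a 100 Hz component plus a weaker 200 Hz harmonic.
signal = [
    1.0 * math.sin(2 * math.pi * 100 * i / sample_rate)
    + 0.3 * math.sin(2 * math.pi * 200 * i / sample_rate)
    for i in range(n)
]
mags = dft_magnitudes(signal)
bin_hz = sample_rate / n  # frequency resolution: 4 Hz per bin
peak_bin = max(range(1, len(mags)), key=lambda k: mags[k])
peak_hz = peak_bin * bin_hz
```

Both tones fall exactly on bin centres here, so there is no spectral leakage. Real measurements usually need a window function to control it.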

The Role of Condition Monitoring and Preventive Maintenance

Condition monitoring, which encompasses vibration monitoring, temperature measurement, oil analysis, and acoustic emission, forms part of a broader predictive maintenance strategy. Vibration analysis in gearboxes has emerged as one of the most powerful tools in this toolkit. By establishing a baseline signature and continuously tracking changes, engineers can schedule targeted maintenance interventions at the most cost‑efficient time rather than responding to breakdowns. This approach improves reliability, extends equipment lifecycle, and maximizes ROI. For large‑scale plants, remote monitoring systems provide these alerts 24/7, integrating with industrial IoT networks and cloud computing.

Real‑World Applications and Industry Use Cases

Vibration analysis in gearboxes is used extensively across sectors such as cement, mining, metals, chemical, and oil & gas. In cement plants, for example, gearbox vibration monitoring helps detect wear early in ball mill drives, preventing unexpected downtime and associated revenue loss. In mining, trackless mobile machinery with complex gear-driven drivetrains benefits from spectrum analysis of gearbox vibration to uncover misalignment or gear wear. These real‑world implementations often combine wireless vibration sensors mounted on gearbox housings with AI‑powered platforms that load the data into dashboards, sending alerts via SMS or email to maintenance teams with precise fault frequencies flagged for inspection.

Wireless and Remote Sensing Technologies

Modern condition monitoring systems increasingly leverage wireless vibration sensors for gearboxes to facilitate easy installation and enhanced data coverage, especially in harsh or hazardous environments. Wireless accelerometers and triaxial vibration sensors can capture X, Y, and Z axis data along with reference phase channels, ensuring a comprehensive monitoring solution. These devices can be battery-powered or light-harvesting and can run for years without manual calibration. Wireless systems allow technicians to deploy plug-and-play sensors on gearbox casings, with data transmitted via Wi-Fi or LTE directly into analytic engines. Remote monitoring greatly reduces manual route-based inspections and supports real-time anomaly detection with minimal intervention.

Advanced Analytics and Fault Diagnosis

Once vibration data is captured, anomaly detection algorithms compare it against baseline signatures to identify fault conditions. Frequency‑domain features such as gear mesh frequency, shaft order harmonics, sidebands, and modulated signals are evaluated using AI and physics‑based models. A good example is the detection of a cracked gear tooth. Each time the damaged tooth enters the load zone it generates an impact, once per revolution of the affected gear. In the spectrum this appears as amplitude modulation of the gear mesh frequency, with sidebands spaced at that gear's shaft rotation frequency, which helps isolate the fault. Similarly, insufficient lubrication produces friction‑induced noise visible as raised broadband vibration. These diagnostic insights help create actionable maintenance work orders.
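As a rough illustration of the frequency features named above, the sketch below computes a gear mesh frequency and the sidebands around it from shaft speed and tooth count. The function names and the 20-tooth, 1500 rpm example are assumptions made for illustration, not values from any particular gearbox or platform.

```python
def gear_mesh_frequency(shaft_rpm, teeth):
    """Gear mesh frequency in Hz: tooth count times shaft rotation rate."""
    return teeth * shaft_rpm / 60.0


def sideband_frequencies(shaft_rpm, teeth, orders=2):
    """Frequencies GMF +/- k * f_shaft for k = 1..orders, sorted ascending."""
    f_shaft = shaft_rpm / 60.0  # shaft rotation frequency in Hz
    gmf = gear_mesh_frequency(shaft_rpm, teeth)
    bands = [gmf + k * f_shaft for k in range(-orders, orders + 1) if k != 0]
    return sorted(bands)


# Hypothetical example: a 20-tooth pinion turning at 1500 rpm.
gmf = gear_mesh_frequency(1500, 20)         # 500.0 Hz
bands = sideband_frequencies(1500, 20, 2)   # [450.0, 475.0, 525.0, 550.0]
print(gmf, bands)
```

Growth in the amplitude of these sideband lines relative to the baseline spectrum is one common indicator that a single tooth on that shaft may be damaged.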

Integrating Vibration Analysis into Predictive Maintenance Platforms

Leading condition monitoring platforms integrate vibration, temperature, acoustic, and magnetic flux data into a centralized system. These predictive maintenance solutions ingest sensor readings, compare deviations using statistical thresholds, and alert reliability teams when gearbox vibration exceeds acceptable levels. Dashboards display severity via color‑coded hierarchies, enabling teams to prioritize maintenance. Predicting Remaining Useful Life (RUL) of gearbox components based on trending vibration signatures ensures maintenance decisions are data‑driven and aligned with business objectives. Integration with asset management systems triggers service schedules, parts ordering, and alerts without manual interfaces.
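A minimal sketch of the statistical-threshold idea, assuming the alarm level is set at the baseline mean plus three sample standard deviations. The rule, the function names, and the baseline readings (in mm/s RMS) are illustrative assumptions; a real platform may use a different statistic or fixed severity limits.

```python
from statistics import mean, stdev


def alarm_threshold(baseline_rms, k=3.0):
    """Alarm level: baseline mean plus k sample standard deviations."""
    return mean(baseline_rms) + k * stdev(baseline_rms)


def exceeds_baseline(reading, baseline_rms, k=3.0):
    """True when a new reading is above the alarm level."""
    return reading > alarm_threshold(baseline_rms, k)


# Hypothetical baseline of overall vibration velocity, mm/s RMS.
baseline = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8]
print(round(alarm_threshold(baseline), 3))
print(exceeds_baseline(3.0, baseline))   # reading well above the baseline
print(exceeds_baseline(2.3, baseline))   # reading inside normal scatter
```

A larger `k` reduces false alarms at the cost of later detection, so it is usually tuned per asset.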

Benefits, Challenges, and Best Practices

Adopting vibration analysis in gearboxes delivers multiple benefits: reduced unplanned downtime, cost savings, improved safety, extended equipment life, and data‑driven maintenance decisions. However, challenges include establishing accurate baselines, sensor placement on complex gearbox geometries, environmental noise interference, and ensuring sufficient sampling rates for high gear mesh frequencies. Best practices include selecting high‑quality accelerometers, performing route verification, combining time and frequency domain analyses, and calibrating AI models to local operating conditions. Engagement of multidisciplinary teams—mechanical, reliability, and data science—is critical to maximize system effectiveness.
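To make the sampling-rate challenge concrete, the sketch below estimates a minimum sample rate from the highest gear mesh harmonic to be observed. The 2.56 multiplier is a convention often used in vibration analyzers to leave room for anti-aliasing filtering above the Nyquist minimum of 2; it is an assumption here, as are the function name and the example values.

```python
def min_sample_rate(shaft_rpm, teeth, harmonics=3, margin=2.56):
    """Sample rate (Hz) needed to resolve the given number of GMF harmonics.

    margin must be greater than 2 to satisfy the Nyquist criterion.
    """
    if margin <= 2.0:
        raise ValueError("margin must exceed 2 (Nyquist criterion)")
    gmf = teeth * shaft_rpm / 60.0   # gear mesh frequency in Hz
    f_max = harmonics * gmf          # highest frequency of interest
    return margin * f_max


# Hypothetical example: 20 teeth at 1500 rpm, tracking three GMF harmonics.
rate = min_sample_rate(1500, 20)
print(round(rate))   # about 3840 Hz for a 1500 Hz maximum frequency
```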

Conclusion

Vibration analysis in gearboxes is an indispensable part of modern condition monitoring and predictive maintenance regimes. Through vibration spectrum analysis, wireless sensing, advanced analytics, and cloud‑based platforms, organizations can detect gear faults, misalignment, bearing issues, and lubrication deficiencies long before failure occurs. Real‑world use cases in industries like mining, cement, and oil & gas demonstrate tangible gains in uptime, safety, and cost reduction. By embracing these technologies and following best practices for sensor deployment and data analysis, businesses can optimize asset health and performance. With expert vibration diagnostics integrated into predictive maintenance systems, manufacturers and heavy‑duty operations can turn reactive maintenance into proactive reliability programs. Nanoprecise continues to lead in delivering wireless vibration sensor solutions, AI‑powered fault analysis, and remote gearbox monitoring systems. With deep expertise in advanced vibration analysis in gearboxes, Nanoprecise empowers industries to achieve world‑class reliability and operational excellence.

Text

What is a gyroscope used for in a drone or robot?

A gyroscope is a sensor that measures angular velocity. Its core function is to sense the rotational motion and attitude changes of an object, so it can be applied in any field that requires navigation, positioning, or stable control. The ER-MG-057, a high-performance single-axis MEMS gyroscope (angular velocity sensor), is well suited to many professional fields thanks to its performance and reliable design.

Precise measurement and stable output

Wide range: The ER-MG-057 has a measurement range of up to ±400°/s, which covers the angular velocity monitoring needs of dynamic, high-speed rotation scenarios such as drone maneuvers and fast-moving robotic arms.

Excellent stability: Tactical-grade precision, with bias instability as low as 1°/hr, angle random walk (ARW) of 0.2°/√hr, and noise of 0.1 dps. A 200 Hz bandwidth combined with a 2 kHz data output rate captures instantaneous angular velocity changes in real time, with a response delay of only 2 ms.

High integration and high reliability

The ceramic LCC surface-mount package is hermetically sealed to protect the core MEMS structure. It measures only 11 x 11 x 2 mm, is easy to integrate into various systems, and is RoHS certified.

The device runs from a 5 V supply (4.75 to 5.25 V) and draws only 35 mA. It defaults to internal synchronization, with optional external synchronization for compatibility with multi-system architectures.

It works stably under strong shock (1000 g, 5 ms half-sine) and vibration (12 g RMS), making it suitable for high-vibration scenarios such as aviation drones and unmanned vehicles.

Application scenarios

Navigation and positioning: Suitable for inertial navigation systems (INS), drones, and autonomous driving. Combined with accelerometers, it allows position, speed, and direction to be calculated from the measured angle changes and accelerations.

Precision instruments and stabilization systems: Ensures precise control and attitude stability of mechanical movement, and improves the responsiveness and accuracy of target tracking.

UAV/aircraft stabilization: Provides the flight control system with data on the aircraft's attitude changes (pitch, roll, yaw), so that it can adjust motor speeds to keep the aircraft hovering steadily or flying as commanded.

Ship/vehicle stabilization systems: Large ships use gyro stabilizers to reduce roll, and autonomous vehicles rely on gyroscopes for dependable attitude data.

Robot balance and control: Helps robots sense tilt angles and maintain their balance and motion control.
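The way angular-rate samples turn into an attitude angle can be sketched as follows. This is a minimal illustration assuming simple rectangular integration and the standard relation that ARW-driven angle uncertainty grows with the square root of time; the function names are hypothetical and this is not the vendor's algorithm.

```python
import math


def integrate_rate(rates_dps, sample_rate_hz):
    """Accumulate an angle in degrees from angular-rate samples in deg/s."""
    dt = 1.0 / sample_rate_hz
    angle = 0.0
    for rate in rates_dps:
        angle += rate * dt   # rectangular (Euler) integration step
    return angle


def arw_angle_sigma(arw_deg_per_sqrt_hr, seconds):
    """1-sigma angle error in degrees from angle random walk after a given time."""
    return arw_deg_per_sqrt_hr * math.sqrt(seconds / 3600.0)


# One second of a constant 90 deg/s turn sampled at the 2 kHz output rate.
angle = integrate_rate([90.0] * 2000, 2000.0)
print(round(angle, 6))            # close to 90 degrees

# Angle uncertainty from an ARW of 0.2 deg/sqrt(hr) after one hour.
print(arw_angle_sigma(0.2, 3600))
```

Because integration also accumulates bias, practical systems typically fuse the gyroscope with accelerometers or other references rather than integrating the rate alone.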

Text

Global Frozen Pizza Market Report 2025–2031: Industry Outlook, Trends, and Forecast

The Global Frozen Pizza Market is projected to grow steadily from 2025 through 2031. This report offers critical insights into market dynamics, regional trends, competitive strategies, and upcoming opportunities. It's designed to guide companies, investors, and industry stakeholders in making smart, strategic decisions based on data and trend analysis.

Report Highlights:

Breakthroughs in Frozen Pizza product innovation

The role of synthetic sourcing in transforming production models

Emphasis on cost-reduction techniques and new product applications

Market Developments:

Advancing R&D and new product pipelines in the Frozen Pizza sector

Transition toward synthetic material use across production lines

Success stories from top players adopting cost-effective manufacturing

Featured Companies:

Nestle SA

Dr. Oetker

Schwan

Südzucker Group

General Mills

Conagra

Palermo Villa

Casa Tarradellas

Orkla

Goodfellas Pizza

Italpizza

Little Lady Foods

Roncadin

Amy's Kitchen Inc

Bernatellos

Ditsch

Origus

Maruha Nichiro

CXC Food

Sanquan Foods

Ottogi

Get detailed profiles of major industry players, including their growth strategies, product updates, and competitive positioning. This section helps you stay informed on key market leaders and their direction.

Download the Full Report Today https://marketsglob.com/report/frozen-pizza-market/1324/

Coverage by Segment:

Product Types Covered:

10 inches or less

10 to 16 inches

More than 16 inches

Applications Covered:

Large Retail

Convenience and Independent Retail

Foodservice

Others

Sales Channels Covered:

Direct Channel

Distribution Channel

Regional Breakdown:

North America (United States, Canada, Mexico)

Europe (Germany, United Kingdom, France, Italy, Russia, Spain, Benelux, Poland, Austria, Portugal, Rest of Europe)

Asia-Pacific (China, Japan, Korea, India, Southeast Asia, Australia, Taiwan, Rest of Asia Pacific)

South America (Brazil, Argentina, Colombia, Chile, Peru, Venezuela, Rest of South America)

Middle East & Africa (UAE, Saudi Arabia, South Africa, Egypt, Nigeria, Rest of Middle East & Africa)

Key Insights:

Forecasts for market size, CAGR, and share through 2031

Analysis of growth potential in emerging and developed regions

Demand trends for generic vs. premium product offerings

Pricing models, company revenues, and financial outlook

Licensing deals, co-development initiatives, and strategic partnerships

This Global Frozen Pizza Market report is a complete guide to understanding where the industry stands and how it's expected to evolve. Whether you're launching a new product or expanding into new regions, this report will support your planning with actionable insights.

Text

Wearable Sensors Market Size, Share, Demand, Future Growth, Challenges and Competitive Analysis

The global Wearable Sensors Market is entering a new phase of expansion driven by technological innovation, changing consumer behavior, and a growing emphasis on sustainability. As industries worldwide adopt smarter, more efficient systems, the demand for solutions within the Wearable Sensors Market continues to accelerate. This growth is being fueled by advancements in automation, data analytics, and digital transformation, which are helping businesses enhance productivity, reduce costs, and meet evolving regulatory and environmental standards.

Our latest market research report provides a comprehensive overview of the Wearable Sensors Market, featuring detailed insights into regional trends, competitive dynamics, and key growth drivers. The report also includes segment-wise analysis, forecasts, and strategic recommendations to help stakeholders make informed decisions in a rapidly shifting environment. With in-depth coverage and actionable intelligence, this report serves as a vital resource for investors, decision-makers, and industry professionals looking to capitalize on emerging opportunities in the global Wearable Sensors Market.

Discover the latest trends, growth opportunities, and strategic insights in our comprehensive Wearable Sensors Market report.

Download Full Report: https://www.databridgemarketresearch.com/reports/global-wearable-sensors-market

Wearable Sensors Market Overview

**Segments**

- By Type: Accelerometers, Magnetometers, Gyroscopes, Inertial Sensors, Pressure Sensors, Motion Sensors, Temperature Sensors, ECG Sensors, EMG Sensors, Photoplethysmogram (PPG) Sensors
- By Application: Consumer Electronics, Healthcare, Sports and Fitness, Industrial
- By End-User: Consumer, Healthcare, Defense, Industrial, Others

The global wearable sensors market is segmented based on type, application, and end-user. Wearable sensors have a wide range of applications including consumer electronics, healthcare, sports and fitness, and industrial sectors. The types of sensors used in wearables include accelerometers, magnetometers, gyroscopes, inertial sensors, pressure sensors, motion sensors, temperature sensors, ECG sensors, EMG sensors, and PPG sensors. These sensors play a crucial role in monitoring various physiological and environmental parameters, providing valuable data for users and businesses alike.

**Market Players**

- Apple Inc.
- Fitbit, Inc.
- Sony Corporation
- Samsung Electronics Co. Ltd.
- Garmin Ltd.
- Xiaomi Corporation
- Huawei Technologies Co., Ltd.
- STMicroelectronics
- Infineon Technologies AG
- Broadcom Inc.

Key players in the global wearable sensors market include industry giants such as Apple Inc., Fitbit, Inc., Sony Corporation, Samsung Electronics Co. Ltd., Garmin Ltd., Xiaomi Corporation, Huawei Technologies Co., Ltd., STMicroelectronics, Infineon Technologies AG, and Broadcom Inc. These companies are investing heavily in research and development to introduce wearable sensor technologies for a range of industries. Strategic partnerships, collaborations, and product launches are common strategies they use to strengthen their market presence and gain a competitive edge.