#Advanced Information Scraping Methods

How Chrome Extensions Can Scrape Hidden Information From Network Requests By Overriding XMLHttpRequest

Chrome extensions offer a versatile way to enhance browsing experiences by adding extra functionality to the Chrome browser. They serve various purposes, like augmenting product pages with additional information on e-commerce sites, scraping data from social media platforms such as LinkedIn or Twitter for analysis or future use, and even facilitating content scraping services for retrieving specific data from websites.

Scraping data from web pages typically involves injecting a content script that parses the HTML or traverses the DOM tree using CSS selectors and XPaths. However, modern web applications built with frameworks like React or Vue make this traditional method harder, because their markup is rendered dynamically from data fetched in the background and much of that data never appears in the DOM.

For example, when viewing a tweet on Twitter, details like author information, likes, retweets, and replies aren't readily available in structured form in the DOM. Inspecting the browser's Network tab, however, reveals API calls whose responses contain this hidden data. It is therefore possible to scrape the information from those API calls instead, bypassing the limitations of the DOM.

An alternative method is to intercept API calls by overriding XMLHttpRequest: the page's native XMLHttpRequest methods are replaced with wrapped versions that observe each request and then pass it on to the original implementation. Because content scripts run in an isolated world and do not share the page's JavaScript objects, the override has to be injected into the page itself, typically by having the content script add a script element. Since the original XMLHttpRequest behavior is preserved, developers can monitor events on every request without disrupting the user experience on third-party websites.

Step-by-Step Guide to Overriding XMLHttpRequest

Create script.js

This is an immediately invoked function expression (IIFE). It creates a private scope for the code inside, preventing variables from polluting the global scope.

XHR Prototype Modification: These lines save references to the original send and open methods of the XMLHttpRequest prototype.

Override Open Method: This code overrides the open method of XMLHttpRequest. Whenever the page calls open() on an XMLHttpRequest, the override stores the request URL in a URL property on the XHR object, so the send override can check it later, and then calls the original open method.

Override Send Method: This code overrides the send method of XMLHttpRequest. It adds an event listener for the 'load' event and then calls the original send method, so the request proceeds normally. When the response arrives, the listener checks whether the stored URL contains the specified string ("UserByScreenName") and, if so, runs the code that handles the response.

Handling the Response: If the URL includes "UserByScreenName", the listener creates a new div element with the ID "__interceptedData", sets its innerText to the intercepted response, and appends it to the document body, where the content script can read it.
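The post describes script.js without reproducing it, so here is a minimal sketch of what such a file might look like, assuming the behavior described above. The helper name installXhrInterceptor and the _interceptedUrl property are illustrative, not from the article, and the helper is kept at top level (rather than inside the article's IIFE) so it can also be exercised outside a browser. The "UserByScreenName" marker and the "__interceptedData" ID come from the post; the sketch writes textContent where the post mentions innerText, which behaves the same for plain text.

```javascript
// script.js: a sketch of a page-context XMLHttpRequest override.
// installXhrInterceptor and _interceptedUrl are illustrative names, not from the article.
function installXhrInterceptor(XhrClass, urlMarker, onResponse) {
  const proto = XhrClass.prototype;
  // Save the original methods so every request still goes through them.
  const originalOpen = proto.open;
  const originalSend = proto.send;

  // Override open(): remember the request URL, then defer to the original open().
  proto.open = function (method, url, ...rest) {
    this._interceptedUrl = String(url);
    return originalOpen.call(this, method, url, ...rest);
  };

  // Override send(): register a 'load' listener, then defer to the original send().
  proto.send = function (...args) {
    this.addEventListener("load", () => {
      if (this._interceptedUrl && this._interceptedUrl.includes(urlMarker)) {
        // responseText is only readable for text responses; for responseType "json",
        // serialise the already-parsed response object instead.
        const body =
          this.responseType === "" || this.responseType === "text"
            ? this.responseText
            : JSON.stringify(this.response);
        onResponse(body, this._interceptedUrl);
      }
    });
    return originalSend.apply(this, args);
  };
}

// In a real page, patch the page's own XMLHttpRequest (skipped when there is no browser window).
if (typeof window !== "undefined" && typeof window.XMLHttpRequest === "function") {
  installXhrInterceptor(window.XMLHttpRequest, "UserByScreenName", (body) => {
    // Keep a single hidden element holding the latest matching response.
    const previous = document.getElementById("__interceptedData");
    if (previous) previous.remove();
    const el = document.createElement("div");
    el.id = "__interceptedData";
    el.style.display = "none";
    el.textContent = body;
    document.body.appendChild(el);
  });
}
```

Passing the XHR class and a callback into the helper keeps the override itself separate from the DOM handoff, so the same wrapper could watch for other URL markers.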

Next, let's look at the content script that injects script.js into the page and reads the intercepted data.

Creating a Script Element: This code creates a new script element, sets its type to "text/javascript", sets its source URL using chrome.runtime.getURL("script.js"), and appends it to the head of the document, a common way to inject a script into a web page.

Checking for DOM Elements: The checkForDOM function checks if the document's body and head elements are present. If they are, it calls the interceptData function. If not, it schedules another call to checkForDOM using requestIdleCallback to ensure the script waits until the necessary DOM elements are available.

Scraping Data from Profile: The scrapeDataProfile function looks for an element with the ID "__interceptedData." If found, it parses the JSON content of that element and logs it to the console as the API response. If not found, it schedules another call to scrapeDataProfile using requestIdleCallback.

Initiating the Process: These lines initiate the process by calling requestIdleCallback on checkForDOM and scrapeDataProfile. This ensures that the script begins by checking for the existence of the necessary DOM elements and then proceeds to scrape data when the "__interceptedData" element is available.
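The content-script side can be sketched the same way. This is a sketch under assumptions rather than the article's code: the function names checkForDOM, interceptData, and scrapeDataProfile follow the description above, while the try/catch around JSON.parse and returning the parsed data from scrapeDataProfile are illustrative additions. For chrome.runtime.getURL("script.js") to load in the page, script.js would also need to be listed under web_accessible_resources in the extension's manifest.

```javascript
// content.js: a sketch of the content-script side (runs in the extension's isolated world).

// Inject script.js into the page so its XMLHttpRequest override runs in the page's own context.
function interceptData() {
  const script = document.createElement("script");
  script.type = "text/javascript";
  script.src = chrome.runtime.getURL("script.js");
  document.head.appendChild(script);
}

// Wait until both <head> and <body> exist, then inject; otherwise try again when idle.
function checkForDOM() {
  if (document.body && document.head) {
    interceptData();
  } else {
    requestIdleCallback(checkForDOM);
  }
}

// Poll for the element written by script.js and parse its JSON once it appears.
function scrapeDataProfile() {
  const el = document.getElementById("__interceptedData");
  if (!el) {
    requestIdleCallback(scrapeDataProfile);
    return null;
  }
  try {
    const data = JSON.parse(el.textContent);
    console.log("API response:", data);
    return data;
  } catch (err) {
    console.warn("Could not parse intercepted data:", err);
    return null;
  }
}

// Start both loops (skipped outside a browser, where there is no DOM).
if (typeof document !== "undefined" && typeof requestIdleCallback === "function") {
  requestIdleCallback(checkForDOM);
  requestIdleCallback(scrapeDataProfile);
}
```

The hidden element acts as a simple mailbox between the two JavaScript worlds; window.postMessage would be another way to pass the data across, but the sketch keeps to the article's DOM-based approach.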

Pros

You can obtain substantial information from the server response, including details that never appear in the user interface.

Cons

The structure of the server response is undocumented and may change over time, which can silently break your parsing code.
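One way to soften this is to read the response defensively, so that a changed shape produces a clear "nothing found" instead of a crash. The sketch below assumes a hypothetical response layout (data.user.result.legacy with screen_name and followers_count fields); the real field names are undocumented and should be checked against the responses you actually capture.

```javascript
// Defensive extraction from an intercepted API response.
// The path data.user.result.legacy and its field names are hypothetical examples.
function pickUserFields(apiResponse) {
  const legacy = apiResponse?.data?.user?.result?.legacy;
  if (!legacy || typeof legacy !== "object") {
    return null; // The response shape changed (or the request failed): signal it clearly.
  }
  return {
    // Each field falls back to null instead of throwing when it is missing or mistyped.
    screenName: typeof legacy.screen_name === "string" ? legacy.screen_name : null,
    followers: Number.isFinite(legacy.followers_count) ? legacy.followers_count : null,
  };
}
```

Returning null, or null fields, makes a format change show up as missing data you can log and fix, rather than as an exception deep inside the extension.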

Here's a valuable tip

By simulating Twitter's internal API calls, you can retrieve additional information that wouldn't typically be displayed. For instance, you can access details of the users who liked a tweet by invoking the same API the site calls when you open a tweet's list of likes. However, keep such calls modest and close to what the normal interface would make, as overly frequent or unusual calls may trigger bot protection measures. This caution is crucial, as platforms like LinkedIn often use such signals to detect scrapers, potentially leading to account restrictions or bans.

Conclusion

In conclusion, the right approach depends on the specific use case. Sometimes, extracting data from the user interface is challenging because it is scattered across the page. In those cases, listening to API calls and retrieving the data in one unified response is more straightforward, especially for a browser extension development company aiming to streamline data extraction processes. Many websites use APIs to fetch collections of entities from the backend and then bind them to the UI; this is precisely why intercepting API calls becomes essential.

#Content Scraping Services#Innovative Scrapping Techniques#Advanced Information Scraping Methods#browser extension development services

Hi, idk who's going to see this post or whatnot, but I had a lot of thoughts on a post I reblogged about AI that started to veer off the specific topic of the post, so I wanted to make my own.

Some background on me: I studied Psychology and Computer Science in college several years ago, with an interdisciplinary minor called Cognitive Science that joined the two with philosophy, linguistics, and multiple other fields. The core concept was to study human thinking and learning and its similarities to computer logic, and thus the courses I took touched frequently on learning algorithms, or "AI". This was of course before it became the successor to bitcoin as the next energy-hungry grift, to be clear. Since then I've kept up on the topic, and coincidentally, my partner has gone into freelance data model training and correction. So while I'm not an expert, I have a LOT of thoughts on the current issue of AI.

I'll start off by saying that AI isn't a brand new technology. AI, more properly known as learning algorithms, has been around in the linguistics, stats, biotech, and computer science worlds for a decade or two at least. However, pre-ChatGPT learning algorithms were tools designed from the ground up for individual purposes and trained on a very specific data set, to make them as accurate as possible at one thing. Some time ago, data scientists found out that if you have a large enough data set on one specific kind of information, you can get a learning algorithm to become REALLY good at that one thing by giving it lots of feedback on right vs wrong answers. Right and wrong answers are nearly binary, which is exactly how computers are coded, so by implementing the psychological method of operant conditioning, reward and punishment, you can teach a program how to identify and replicate things with incredible accuracy. That's what makes it a good tool.

And a good tool it was and still is. Reverse image search? Learning algorithm based. Complex relationship analysis between words used in the study of language? Often uses learning algorithms to model relationships. Simulations of extinct animal movements and behaviors? Learning algorithms trained on anatomy and physics. So many features of modern technology and science either implement learning algorithms directly into the function or utilize information obtained with the help of complex computer algorithms.

But a tool in the hand of a craftsman can be a weapon in the hand of a murderer. Facial recognition software, drone targeting systems, multiple features of advanced surveillance tech in the world are learning algorithm trained. And even outside of authoritarian violence, learning algorithms in the hands of get-rich-quick minded Silicon Valley tech bro business majors can be used extremely unethically. All AI art programs that exist right now are trained from illegally sourced art scraped from the web, and ChatGPT (and similar derived models) is trained on millions of unconsenting authors' works, be they professional, academic, or personal writing. To people in countries targeted by the US War Machine and artists the world over, these unethical uses of this technology are a major threat.

Further, it's well known now that AI art and especially ChatGPT are MAJOR power hogs. This, however, is not inherent to learning algorithms / AI, but is rather a product of the size, runtime, and inefficiency of these models. While I don't know much about the efficiency issues of AI "art" programs, as I haven't used any since the days when "imaginary horses" trended and the software was confined to a university server room with a limited training set, I do know that ChatGPT is internally bloated to all hell. Remember what I said about specialization earlier? ChatGPT throws that out the window. Because they want to market ChatGPT as being able to do anything, the people running the model just cram it with as much as they can get their hands on, and yes, much of that is just scraped from the web without the knowledge or consent of those who have published it. So rather than being really good at one thing, the owners of ChatGPT want it to be infinitely good, infinitely knowledgeable, and infinitely running. So the algorithm is never shut off; it's constantly taking inputs and processing outputs with a neural network of unnecessary size.

Now this part is probably going to be controversial, but I genuinely do not care if you use ChatGPT in specific use cases. I'll get to why in a moment, but first let me clarify which use cases. It is never ethical to use ChatGPT to write papers or published fiction (be it for profit or not); this is why I also oppose, full stop, the use of publicly available gen AI in making "art". I say publicly available because, going back to my statement on specific models made for single-project use, lighting, shading, and special effects in many 3D animated productions use specially trained learning algorithms to achieve the complex results seen in the finished production. Famously, the Spider-verse films use a specially trained in-house AI to replicate the exact look of comic book shading, using ethically sourced examples to build a training set from the ground up, the unfortunately-now-old-fashioned way. The issue with gen AI in written and visual art is that the publicly available, always-online algorithms are unethically designed and unethically run, because the decision makers behind them are not restricted enough by the laws in place.

So that actually leads into why I don't give a shit if you use ChatGPT, as long as you're not using it as a plagiarism machine. Fact of the matter is, there is no way ChatGPT is going to crumble until legislation comes into effect that illegalizes and cracks down on its practices. The public, free userbase worldwide is a mere drop in the bucket of its server load compared to the real way ChatGPT stays afloat: licensing its models to businesses with monthly subscriptions. I mean this sincerely: based on what little I can find about ChatGPT's corporate subscription model, THAT is the actual lifeline keeping it running the way it is. Individual visitor traffic worldwide could suddenly stop overnight and it wouldn't affect ChatGPT's bottom line. So I don't care if you, I, or anyone else uses the website, because until the US or EU governments act to explicitly ban the shady practices of ChatGPT and other gen AI businesses, they are only going to continue to stick around and profit from big business contracts. So long as you do not give them money or sing their praises, you aren't doing any actual harm.

If you do insist on using ChatGPT after everything I've said, here's some advice I've gathered from testing the algorithm to avoid misinformation:

If you feel you must use it as a sounding board for figuring out personal mental or physical health problems like I've seen some people doing when they can't afford actual help, do not approach it conversationally in the first person. Speak in the third person as if you are talking about someone else entirely, and exclusively note factual information on observations, symptoms, and diagnoses. This is because where ChatGPT draws its information from depends on the style of writing provided. If you try to be as dry and clinical as possible, and request links to studies, you should get dry and clinical information in return. This approach also serves to divorce yourself mentally from the information discussed, making it less likely you'll latch onto anything. Speaking casually will likely target unprofessional sources.

Do not ask for citations; ask for links to relevant articles. ChatGPT is capable of generating links to actual websites in its database, but if asked to provide citations, it will replicate the structure of academic citations and will very likely hallucinate at least one piece of information. It also does not help that these citations will often be for papers that are not publicly available and will not include links.

ChatGPT is at its core a language association and logical analysis software, so naturally its best purposes are analyzing written works for tone, summarizing information, and providing examples of programming. It's partially coded in Python, so examples of Python and Java code I've tested come out 100% accurate. Complex Google Sheets formulas, however, are often finicky, as it struggles with the proper nesting order of formulas.

Expanding off of that, if you think of the software as an input-output machine, you will get best results. Problems that do not have clear input information or clear solutions, such as open ended questions, will often net inconsistent and errant results.

Commands are better than questions when it comes to asking it to do something. If you think of it like programming, then it will respond like programming most of the time.

Most of all, do not engage it as a person. It's not a person, it's just an algorithm that is trained to mimic speech and is coded to respond in courteous, subservient responses. The less you try and get social interaction out of ChatGPT, the less likely it will be to just make shit up because it sounds right.

Anyway, TL;DR:

AI is just a tool and nothing more at its core. It is not synonymous with its worst uses, and it is not going to disappear. Its worst offenders will not fold or change until legislation cracks down on them, and we, the majority of internet users, are not its primary consumers. Using AI to substitute art (written and visual) with the blended-up art of others is abhorrent, but using a freely available algorithm for personal analytical use is relatively harmless so long as you aren't paying them.

We need to urge legislators the world over to crack down on the methods these companies are using to obtain their training data, but at the same time people need to understand that this technology IS useful and can be, and has been, used for good. I urge people to understand that learning algorithms are not one and the same with theft just because the biggest ones available to the public have widely used theft to cut corners. So long as computers continue to exist, algorithmic problem-solving and generative algorithms are going to continue to exist, as they are the logical conclusion of increasingly complex computer systems. Let's just make sure the future of the technology is not defined by the way things are now.

#kanguin original#ai#gen ai#generative algorithms#learning algorithms#llm#large language model#long post

Chamomile Daisies

{TW: Cursing, Lasko's rants- um.. candle wax.. uh- Lasko gets called airhead quite a few times honestly- he also gets called airboi- Huxley and Damien being too cute (voicemails)- a tiny bit of yelling (the word murdered)- Guy, an unhealthy amount of knowledge about pizza (I'm not kidding)... and finally, I'm not a great writer.. but I'm trying and learning lmfao- Gavin doing Freelancer's voicemail (do what you want with that information)- I threw in some Guy and Honey- the blue is Lasko's rambles}

this got very long for some reason

WC: 2022

This is one of the dumbest things you could think of, Lasko. And I mean dumb- like it isn’t even scientifically proven to help you with your rambling.. Well, you can’t say that, because every person is different... So what affects you might not affect the rest of the population.. And empowered and unempowered people are entirely different, so could DNA have anything to do with it? And I'm doing it again.. ah, fuck it, I’ll get 2 of them.

Lasko makes his way over to the cashier. Maybe this could work. God, he hoped it would work. The first date was already fucked up.. he didn’t want to mess up the second one. He pays and walks out of the store. His place was right around the corner.. Maybe the candles weren’t necessary... But Lasko wanted to make sure nothing went wrong this time.

Just thinking about what happened at Max’s made him drop his keys. Dammit, he thought, bending down to pick them up. He groaned at the thought of a repeat because of his anxiety.. Lasko pushed the thought aside and placed the candles on the tabletop. Where did he put his clothes for the night? The airhead sighed and walked into his room, going through the mess on the floor. Was that it? No, that’s not dark enough to cover stains.. That's not it.. No.. no.. no.. no.. YES, that's it! Lasko smiled as he pulled out a heavy light-turquoise shirt! He unbuttoned his grey work shirt with the same small smile on his face.. Maybe with the help of these candles, he could stop being so nervous and have a nice date with his dear.

Wait, what would be the most effective way to burn the candles? Maybe he could melt them down in a pan.. well.. rather a heatproof container… let's be real here, he’s not gonna wanna clean that... Like, how annoying would it be? Scraping the sides.. just sounds like too much work. It could stop with burning, you know? Perhaps.. What would the other method be.. I could try a microwave.. Of course, I’d have to cut them in half… they're pretty long candles after all… I was thinking to myself.. Did I ever think about what scent I bought..? What if it smells bad?? Dear god, what am I gonna do, fuck.. shit.. mother fuc-..

Lasko began buttoning his shirt over his white t-shirt. He walked out of his room and over to the dryer. Now that he was actually in the kitchen and laundry room, he could try on his pants, or, well, put them on; he already knew they would fit. Now that he was dressed (a few hours in advance), he grabbed a pan and a small heat-proof container, and he finally opened the bag and looked at the candle.

Chamomile Daisies. That seems like a nice smell.

Didn’t Dear say how much they loved the smell of flowers? Or was it the smell of morning dew on the flowers? Was that just a them thing, or is it because they’re a water elemental? I mean, they never really said anything about liking flowers.. Did I miss something? Wait, have they ever dropped any information about liking flowers..? Should I get some flowers? Just in case.. Maybe I should text them..? NO, surprises are better.. Right? Actually, no. The last time I did that I was completely soaked in water… alright.. Pants on.. Stove on.. now.. Should I put some oil in there? No.. I don’t want to clean the pot more than I already have to.

As the airhead watched and waited for the candles to melt, his phone rang. It was Dear.. Why were they calling? What was going on..? WERE THEY CALLING TO SAY THEY CANCELED? No, silly Lasko. LASKO, PICK UP THE PHONE, DAMMIT! Right. Right, answer the phone!

‘’ Hey Dearie! I was just checking up on you! ‘’

D-d-dearie?! That’s new!?

‘’ H..Hey Dear-r! I'm doing fine! ‘’

‘’ That's great to hear! I can’t wait to see you, I just wanted to make sure you’re not overworrying… you’re not, right? ‘’ Dear asked, waiting to hear his response.

‘’ y..y-y-..yea! I'm o-..okay! N-..not worry-ying! A-at all! ‘’ His voice was a dead giveaway. Dear sighed on the other end of the phone.

‘’ Dearie..no..Lasko, please don’t worry yourself too much.. Alright? ‘’

‘’ right.. I..-I’ll try..my dearest..’’ Lasko looked at the boiling pot on the stove.. The wax was red with light blue accents.

‘’ Lasko? You there?.. Lasko…?? LASKO!! ‘’

Dear’s yell caught poor Lasko off guard. He dropped his phone, and in that moment our dearest Lasko forgot that he was an air elemental, or at least forgot to use it on the right objects. See, he used his magic to catch his phone just before it hit the stove. But wait, where was the candle wax? As Lasko looked up, Dear still on the phone trying to get his attention, he saw the pot of wax fly into the air, and practically in slow motion he watched it fall, landing on the counter, the wall, and the floor. It also landed on his shirt… His shirt!? Wait.. his.. His.. his what.. HIS FUCKING SHIRT! SHIT..SHIT..SHIT.. THAT’S NOT GOOD

‘’ HEY DEAR I..-I’LL C-..C-CALL YOU B-BACK! ‘’ As much as the poor airhead wanted to answer his Dear’s questions, he hit the red button faster than normal.

Good job, Lasko.. Now Dear is more than likely upset with me. And now I have to deal with all this wax. I knew I shouldn't have bought those damn candles! But no.. Lasko wanted to be calm.. But NOOO, Lasko wanted to do this and that!.. Goddammit.. I’m like this because I wanted our second date to be better than the first one.. Fuck.. maybe… perhaps Freelancer would have an idea of how to remove wax.. from.. he looks around.. everything..

~~~~~~~~~~~

I'm.. Afraid my deviant..~ can’t make it to the phone right now~~ They’re busy at the moment.~~ But please.. Leave a message..~ make it worthwhile..~~

‘’ hey Freelancer.. When you get the chance.. Could you um.. Call me back.. i .. m-..may or m-..m-may not have gotten.. W-wax on my c-clothes.. ..f-f..or my date.. Please ca-call back! ‘’

~~~~~~~~~~~

Sorry Dames! Huxley, just record the voicemail, please!.. Right, right! Sorry, Dames and I can’t come to the phone- Leave a message and Dames and I will get back to you! HUXLEY, IT'S YOUR PHONE!! An-

‘’ Hey Huxley.. Um- whenever you g-g…get the chance.. Could you um.. Call me back..I need some help..I may have or hav..have n-..not gotten wax.. everywhere..? ‘’

~~~~~~~~~~

It’s Damien. I'm afraid I can’t answer the phone.. Leave a message.. Not a long one.

‘’ o-oh.. Straightforward.. U-uh… um. I- I need some h-help with a.. Waxy situation.. ‘’

~~~~~~~~

As another hour passed, no one seemed to get back to him. Well, Damien sent him a text wondering what he meant by "straightforward." Lasko tried to explain it.. Damien didn’t understand how it happened, but he tried his best to help. Thanks to his help, Lasko got the dried wax off, but the shirt itself was stained.. The red on the shirt made him wanna cry. Red is already a hard color to get out of clothes, and his shirt being blue did not help.. Honestly, it looked like he’d murdered someone- not the point! He frowned and picked up his phone again, dialed a number, and sighed.. It rang once.. then twice, and then eventually someone picked up.

‘’ Lasko, what happened earlier!? You hung up so fast.. I thought I did something- ‘’ Dear sounded sorrowful, and it hurt Lasko a little. ‘’ No, Dear, you didn’t do anything.. I.. may.. have messed something up.. ‘’ That last part left the airboi’s mouth almost inaudibly.

‘’ Messed what up, Lasko? ‘’ It was Dear’s turn to be concerned.

‘’ Well.. I wanted today’s date to go smoothly and not be a repeat of what happened at Max’s.. ‘’ Dear cringed at the thought.

‘’ yes..what happened..? ‘’

‘’ a-and.. we..ll I heard- ab-about.. the-these..ca-calming c-candles..an..d ki-kinda.. Spilled.. The w-wax.. Ev-everywhere.. in-.. Including the-..the shirt.. F-for t-tonight..’’ Lasko managed to stutter out.

‘’ Lasko.. Dear.. y-you did… wh-at..? ‘’ Dear said, holding in a snicker.

‘’ Are you laughing right now!? ‘’ Lasko seemed more shocked than surprised.

‘’ AHAHAHAAHAAHAHAAHAHA Lasko, I’m sorry! I-..Just.. I ‘’ Dear could barely hold in their laughter. And Lasko entirely lost his nerve and decided to join in the laughing.

‘’ Lasko.. Listen, I’ll cancel the plans and you can just come over here.. ‘’ Dear said, small giggles in their sentence.

‘’ Dear! I couldn’t just.. didn’t that.. Like, I already messed up the first date and now I'm ruining another one! And I just don’t think it’s fair.. that you have to cancel our plans- ‘’ Lasko attempted to ramble on, but Dear interrupted him.

‘’ Lasko, Dearie.. I love.. that you don't want to, quote unquote, ruin another date.. But I just want to spend my time with you.. So come over and bring the shirt.. ‘’ Though Lasko couldn’t see his dear’s face, he knew they were smiling.. And smiling hard.

‘’ fine.. I’ll be over in a few ‘’ Lasko responded.. Giving in. Perhaps this is better than embarrassing himself at another restaurant.

~~~~~~~~~~~~~~~~~~~

And I know, I feel so bad because I ruined our first and now our second date! I just don’t know how I let this happen. Dear, I feel so bad.. Like really bad.. Maybe I shouldn’t have come over.. Look, Dear, I'll just put the shirt in the wash and I.. i- Lasko stops mid-sentence, losing his train of thought.. His dear looked so nice.. They always looked nice, whether in nice clothes or just a plain T-shirt and shorts, like what they were wearing now. Maybe this wouldn’t be so bad.

‘’ Well, Dearie! Your shirt is in the wash! I used a little magic to make sure the bleach didn’t ruin the whole shirt. ‘’ His dear smiled, pulling out their phone.

Lasko rubs the back of his neck, chuckling. ‘’ Well, I could leave once it's washed.. ‘’ Dear glared back at Lasko.. scaring the poor airboi- ‘’ w..w-w.well.. i-i.. cou-could.. j-just s-stay! ‘’ Lasko stuttered out.

‘’ Well, good! I'm ordering some pizza for the night.. ‘’ Lasko was a little nervous, but for once his poker face held. There’s no way it could be the same waiter from before, right?

~~~~~~

‘’ Hey, the pizza’s here! Do you wanna go get it? ‘’ Dear looked at Lasko with puppy dog eyes, as if begging him to get the pizza. Lasko wondered who taught them that as he went to open the door.

{ Lasko is about to ramble about the preparation of pizza, feel free to skip- }

It. Was. The. Same. Guy.

Fuck

‘’ Um.. order for a D- oh.. it’s you- ‘’ Guy started with a faint laugh.

‘’ Y-Yea.. Haha, me, woo! ‘’ Lasko was losing it as he grabbed the box of pizzas.

Did you know pizza could be sold fresh? HAh.. it’s a really funny process actually.. You can even get it whole or in portion slices. Though the methods vary. And they’ve been developed to overcome challenges! Like preventing the sauce from combining with the dough! Because who the hell wants soggy pizza, am I right?? HAhah, the crust is another hard thing to deal with! Like, the methods had to change often because the crust… could become rigid, and who wants that? Not me, and I'm sure not you! H-h-ha, that’s even if you like pizza- l-..like a lot of people who work at their job d-d..-don’t like what they do.. l-l-l..like there are a lot of things i..-I don’t like about my job but.. but it’s a job, you-.. you know.. Haha?

Dear took notice of the situation, giggling a little, and went to interrupt Lasko and the pizza guy.

‘’ Lasko, go put the pizza on the counter, please. ‘’ Lasko sighed in relief before disappearing into the other room.

‘’ sorry bout that- here take this for your troubles..’’

~~~~~~~~~~~~~~~~

‘’ HONEY, GUESS WHAT I GOT TODAY!!!! ‘’ Honey turned around to greet their very happy lover tonight.. ‘’ Yes, Guy? ‘’

‘’ I GOT A $150 TIP! ‘’ Honey nearly spit out their drink.

(@laskosprettygirl this is the Fic.. I hope it was worth the wait- )

Once again my ADHD brain made this harder than it needed to be- I hope this lives up to expectations! And have a good day or night!

#redacted audio#redacted lasko#redacted dear#i tried- like actually fluff isn't my thing lol-#Im a better angst writer- you should check out my David Shaw is calling! ^^#With every Fic I write I feel I get better- any criticism is wanted! (not too mean- though- )#redacted damn crew#alright im going to bed now.. my creativity is gone for the night#redacted guy

Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI Operator charges up to $200/month for limited API calls and restricts access to many tasks like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about Operator from OpenAI, which runs on their computers somewhere in the cloud, with you passing private information to their AI so it can do anything useful. AND you pay for the gift. It is not paranoid to not want your passwords, logins, and personal details shared. OpenAI, of course, charges a substantial amount of money for something that limits exactly which sites you can visit, YouTube for example. With this method, you simply tell an AI exactly what you want it to do, in plain language, and watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek-R1 is only days old. Browser-Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. However, if these instructions seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gets practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.11 or higher (Browser-Use requires 3.11+).

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Navigate to a clear location for your project files:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

• Mac/Linux:

make

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW).

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit the DeepSeek-R1 8B Model Page on Hugging Face.

2. Download the 4-bit quantized model file:

• Example: DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf.

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

2. Run the model with a sample prompt:

./llama-cli -m DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf -p "What is the capital of France?"

3. Expected Output:

The capital of France is Paris.

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.


Sincerely, F.P.

Part 4: What is mine

TW: Jealous old man implicit

You’ve grown used to the quiet here—thick with thought, broken only by the soft scrape of a chair, the echo of footsteps in a long hallway, or the distant humming of some old campus radiator. No radio, no familiar crackle of your mother’s kitchen jazz station, just the occasional muffled laughter from the common room.

You turn around. The dorm is not particularly ugly, yet not quite nice either: a bit worn, with barely the basic necessities, and a door connecting it to the room beside it. You do not know who sleeps there, so you prefer not to open it for now.

Your favorite place has become the auditorium, where the university’s symphony rehearses. You sit far in the back, textbooks open across your lap as violins swell and settle. There’s something soothing about Vivaldi when you’re trying to read through dense passages on case law and labor structures. You’ve checked out several texts: Labour Market Institutions and the Structural Unemployment Problem in Europe (OECD, 1990), The Informal Economy: Studies in Advanced and Less Developed Countries (Portes, Castells & Benton, 1989). They’re heavy, literal bricks of theory and numbers, but you work through them with a strange affection.

In between movements of strings, a boy sits beside you.

“Do you understand all that?” he nods toward your open book.

You smile. “Sometimes. Depends on how generous the author is.”

He chuckles, says he’s in the orchestra—second violin. You talk briefly. His name slips your memory even as you shake hands. He’s good-looking, in that practiced, unaware way. It’s nothing, but it lifts your spirits.

At the library later, you ask the receptionist about the hours for microfilm access and chat with the old librarian about the dusty card catalog. You even make small talk at the cafeteria with the man wiping tables. It’s a good day. One of those days where things feel possible.

Night folds itself around the campus when you return to your dorm. You’re halfway through untying your shoes when there’s a knock.

“Miss?” the dorm guard calls, looking half-incredulous. “You… have a visitor.”

You blink at him.

“A visitor?”

The last person to visit you at school was your sister during undergrad—and even she had only stayed ten minutes, too uncomfortable in the silence of the library floor.

You step outside. A sleek, black car waits at the curb, its chrome gleaming under the faint golden halo of a lamppost. The driver stands like a statue, opening the back door with practiced precision.

Frank Pater̀no is already seated inside.

He’s wearing a long coat, his gloved hand relaxed over one knee, the other resting on the seat beside him. A ghost of a smile touches his mouth when he sees you.

“Bonsoir, cara mia,” he says, as if you’ve been meeting like this for years.

You sit, unsure what to do with your hands. “I… didn’t expect to see you.”

“Good.” His voice is warm but lined with something unreadable. “A little unpredictability keeps life honest. Tell me—how’s your first week?”

You take a breath. “Busy. I’ve been narrowing down my research design. I borrowed some useful books—on informal economies, European labor structures—Portes, Castells... And I’ve been reviewing data sampling methods.”

“And?”

You hesitate, unsure what he’s asking. “I think I’m getting closer to the right model.”

He hums. “Where were you this afternoon?”

Your brow furrows. “The auditorium, actually. I wanted to hear the orchestra rehearse. Then I went to the library. After that, the cafeteria.”

“The violinist?” he asks lightly.

“What?”

“You were speaking to one.”

You glance at him. “Yes. Briefly. He sat next to me.”

He nods, his expression unreadable. “And you like jazz?”

“I do,” you say carefully. “My family had a radio that only played one station—old jazz, mostly. You had to nudge the dial just right.”

He looks at you then, something flickering behind his gaze. The car slows.

You’re at a fast-food chain—greasy neon signs and empty booths.

“I thought you might be hungry.”

He orders for you, places the bag in your hands like it’s a gift. You thank him softly. When you return to your dorm, he leans in.

“Be careful who you make time for, cara mia,” he says, brushing your cheek with a kiss. “Some people are only worth a moment.”

Then he’s gone, and you’re left with warm fries and a strange buzzing under your skin.

Pater̀no Family Meeting — Confidential

The suite is nondescript—deliberately so. Grey carpet, beige drapes, an espresso machine older than the province’s premier. They always met like this abroad. Canada was clean, silent, far from Sicily’s heat or Rome’s noise. The kind of place where men could plan billion-euro bridges over still water.

Frank Pater̀no leans over a low marble table, sleeves rolled just enough to reveal the watch his father gifted him at seventeen. His father—Don Silvestro—sits at the head of the table with a knitted brow and tired, thinned hands. Giaco, Frank’s son, leans against the wall, youthful and eager, soaking in every detail. His brothers, Stefano and Luca, whisper to each other in a Sicilian rhythm too fast for outsiders to follow.

“The commission has agreed on the final layout,” Stefano says. “The bridge will begin east of Salerno. Highway junctions are being diverted as we speak.”

“Engineers are in place?” asks the Don.

“They are ours. The bidding committee isn’t,” Luca adds. “But they will be.”

Frank doesn’t look up from his glass. “They’ll be bought.”

“We’ll launder through the construction companies in Veneto,” Stefano offers. “That, or the cultural preservation grants. Giaco’s team found a loophole in heritage zoning.”

Giaco nods. “We bury it in archeological surveys, slow everything down unless they play nice.”

Silence follows. Frank shifts in his chair, finally looking at the three of them. He speaks slowly.

“There’s a party sniffing around our engineers. Government-adjacent, Swiss funding. If they get a better offer...”

He lets the sentence hang.

Don Silvestro’s voice is dry as dust. “Fix it.”

Frank finishes his drink. The amber light catches on the rim of the glass like blood caught on crystal. He sets it down and adjusts his cuff.

“I do not like what’s mine threatening to flirt with someone else,” he says evenly. “Not in business. Not in anything.”

His father lifts an eyebrow—not at the words, but at the flicker in his son’s tone. Frank smiles faintly, almost wistfully, as if remembering something warmer than the room they’re in. Then he stands.

“I’ll handle it.”

The meeting disperses. Frank doesn’t look back. There’s a drive to take. And someone—delicate, clever, still too grateful to notice—who needs reminding of where her orbit begins and ends.

That morning you woke up to the news that, for some reason, your dorm neighbor, whose room was connected to yours, had been urged to move.

You’re too tired to think. Enrollment forms, scholarship confirmations, health insurance registration, and half an hour arguing with the bursar over a document that mysteriously disappeared—only for it to reappear stamped and approved five minutes later without explanation.

Your shoes are soaked from the melting snow. Your satchel is heavy with orientation packets and a campus map you can’t quite fold right. The dorm hall feels darker than usual as you reach your room, the hum of fluorescent lighting a quiet buzz in the background.

Then you see it.

The door that leads to the room beside yours—the one that had been locked for days, your supposed neighbor still unnamed—is ajar. No sound from inside, just light spilling out in a warm, amber pool. Your name is taped over the old room number in crisp block letters. Typed. Laminated.

You hesitate, just a breath, then push the door open.

It’s not a dorm room anymore.

Two tall bookshelves stand side by side against the back wall, heavy with textbooks. Titles you recognize. Reconstructing Development Theory, The Informal Economy, Precarious Work, Women, and the New Economy. Some annotated, some first editions. A large wooden desk stands in the center of the room, brand-new, with drawers that still smell faintly of varnish. Next to it: a pristine microwave, a compact fridge stocked with milk, fruit, yogurt, and two tiny Perrier bottles.

And then, on the window ledge — a Sony ZS-D5. Sleek, matte black, dual cassette decks, CD player, the logo still wrapped in its original plastic.

On the desk lies a note, handwritten in careful ink:

“For the hours when silence will not do.

—F.P.”

You sit slowly, backpack sliding to the floor. The chair cushions under your weight with a sigh.

It’s all too much.

And yet you press “Play” anyway.

With all this, you won't have to visit the library, the auditorium, or the cafeteria for a long time.

I know I am feeding you guys scraps, but I am a sucker for slow burn... This was going to be part of chapter 3, but I still think it is a good start, is it not?


Zillow Scraping Mastery: Advanced Techniques Revealed

In the ever-evolving landscape of data acquisition, Zillow stands tall as a treasure trove of valuable real estate information. From property prices to market trends, Zillow's extensive database holds a wealth of insights for investors, analysts, and researchers alike. However, accessing this data at scale requires more than just a basic understanding of web scraping techniques. It demands mastery of advanced methods tailored specifically for Zillow's unique structure and policies. In this comprehensive guide, we delve into the intricacies of Zillow scraping, unveiling advanced techniques to empower data enthusiasts in their quest for valuable insights.

Understanding the Zillow Scraper Landscape

Before diving into advanced techniques, it's crucial to understand the Zillow scraping landscape. As a leading real estate marketplace, Zillow is equipped with robust anti-scraping measures to protect its data and ensure fair usage. These measures include rate limiting, CAPTCHA challenges, and dynamic page rendering, making traditional scraping approaches ineffective. To navigate this landscape successfully, aspiring scrapers must employ sophisticated strategies tailored to bypass these obstacles seamlessly.

Advanced Techniques Unveiled

User-Agent Rotation: One of the most effective ways to evade detection is by rotating User-Agent strings. Zillow's anti-scraping mechanisms often target commonly used User-Agent identifiers associated with popular scraping libraries. By rotating through a diverse pool of User-Agent strings mimicking legitimate browser traffic, scrapers can significantly reduce the risk of detection and maintain uninterrupted data access.

IP Rotation and Proxies: Zillow closely monitors IP addresses to identify and block suspicious scraping activities. To counter this, employing a robust proxy rotation system becomes indispensable. By routing requests through a pool of diverse IP addresses, scrapers can distribute traffic evenly and mitigate the risk of IP bans. Additionally, utilizing residential proxies offers the added advantage of mimicking genuine user behavior, further enhancing scraping stealth.

Session Persistence: Zillow employs session-based authentication to track user interactions and identify potential scrapers. Implementing session persistence techniques, such as maintaining persistent cookies and managing session tokens, allows scrapers to simulate continuous user engagement. By emulating authentic browsing patterns, scrapers can evade detection more effectively and ensure prolonged data access.

JavaScript Rendering: Zillow's dynamic web pages rely heavily on client-side JavaScript to render content dynamically. Traditional scraping approaches often fail to capture dynamically generated data, leading to incomplete or inaccurate results. Leveraging headless browser automation frameworks, such as Selenium or Puppeteer, enables scrapers to execute JavaScript code dynamically and extract fully rendered content accurately. This advanced technique ensures comprehensive data coverage across Zillow's dynamic pages, empowering scrapers with unparalleled insights.

Data Parsing and Extraction: Once data is retrieved from Zillow's servers, efficient parsing and extraction techniques are essential to transform raw HTML content into structured data formats. Utilizing robust parsing libraries, such as BeautifulSoup or Scrapy, facilitates seamless extraction of relevant information from complex web page structures. Advanced XPath or CSS selectors further streamline the extraction process, enabling scrapers to target specific elements with precision and extract valuable insights efficiently.
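The parsing step can be sketched with Python's standard-library `html.parser` instead of BeautifulSoup, so the example runs without third-party packages. It collects the text of elements carrying a target CSS class. The markup and the class names `price` and `address` are hypothetical placeholders, not Zillow's actual page structure, which changes often.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they are skipped to keep the stack balanced.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextParser(HTMLParser):
    """Collect text inside elements whose class attribute contains a target class."""

    def __init__(self, targets):
        super().__init__()
        self.results = {name: [] for name in targets}
        self._stack = []  # matched target class (or None) for each open element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        self._stack.append(next((c for c in classes if c in self.results), None))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        # Credit the text to the innermost open element that matched a target class.
        for match in reversed(self._stack):
            if match is not None:
                self.results[match].append(text)
                break


# Hypothetical markup for illustration only.
SAMPLE_HTML = """
<ul>
  <li class="card"><span class="price">$450,000</span>
    <span class="address">12 Main St</span></li>
  <li class="card"><span class="price">$615,000</span><br>
    <span class="address">98 Oak Ave</span></li>
</ul>
"""

parser = ClassTextParser(["price", "address"])
parser.feed(SAMPLE_HTML)
parser.close()
listings = list(zip(parser.results["price"], parser.results["address"]))
print(listings)  # [('$450,000', '12 Main St'), ('$615,000', '98 Oak Ave')]
```

BeautifulSoup's CSS selectors or Scrapy's XPath support would replace the hand-written class matching here; the stdlib version just keeps the sketch dependency-free.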

Ethical Considerations and Compliance

While advanced scraping techniques offer unparalleled access to valuable data, it's essential to uphold ethical standards and comply with Zillow's terms of service. Scrapers must exercise restraint and avoid overloading Zillow's servers with excessive requests, as this may disrupt service for genuine users and violate platform policies. Additionally, respecting robots.txt directives and adhering to rate limits demonstrates integrity and fosters a sustainable scraping ecosystem beneficial to all stakeholders.
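Respecting robots.txt can be automated with Python's built-in `urllib.robotparser`. The sketch below parses a made-up robots.txt from a string (a live crawler would call `set_url()` and `read()` instead), then checks whether two URLs may be fetched and what crawl delay the site requests. The user-agent name `my-research-bot` and the rules shown are assumptions for illustration, not Zillow's actual file.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only; fetch the real file with rp.set_url(...) and rp.read().
SAMPLE_ROBOTS = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    rp.modified()  # record a "last checked" time so can_fetch() does not default to False
    return rp


rp = build_parser(SAMPLE_ROBOTS)
agent = "my-research-bot"  # hypothetical crawler name
for url in ("https://example.com/homes/123", "https://example.com/private/data"):
    print(url, rp.can_fetch(agent, url))
print("crawl delay:", rp.crawl_delay(agent))
# https://example.com/homes/123 True
# https://example.com/private/data False
# crawl delay: 5
```

Note that `RobotFileParser` applies rules in file order (first match wins), which can differ from the longest-match behavior some search engines use.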

Conclusion

In the realm of data acquisition, mastering advanced scraping techniques is paramount for unlocking the full potential of platforms like Zillow. By employing sophisticated strategies tailored to bypass anti-scraping measures seamlessly, data enthusiasts can harness the wealth of insights hidden within Zillow's vast repository of real estate data. However, it's imperative to approach scraping ethically and responsibly, ensuring compliance with platform policies and fostering a mutually beneficial scraping ecosystem. With these advanced techniques at their disposal, aspiring scrapers can embark on a journey of exploration and discovery, unraveling valuable insights to inform strategic decisions and drive innovation in the real estate industry.


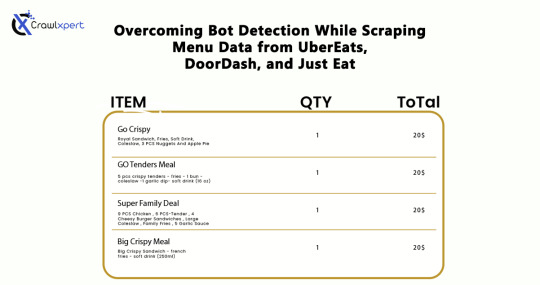

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

In industries that depend on menu data collection, web scraping is highly useful, and UberEats, DoorDash, and Just Eat are some examples of key sources. However, these websites use elaborate bot detection methods to stop the automated collection of information. Overcoming these measures calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to bypass bot detection when scraping menu data, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Identifies and blocks requests whose User-Agent headers match known bots or scraping libraries.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Uses scripts to detect bots attempting to load pages without interaction.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
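When a 429 does arrive, the usual response is to slow down rather than push harder. A minimal sketch of that policy follows: it honors a numeric `Retry-After` header when present, otherwise waits with exponential backoff and full jitter, and treats 403 (and anything else non-200) as a signal to stop. The `fetch` callable, its `(status, retry_after, body)` return shape, and the base/cap values are assumptions for illustration; plug in whatever HTTP client you actually use.

```python
import random
import time
from typing import Callable, Optional, Tuple

# fetch(url) -> (status_code, retry_after_header_or_None, body). Injected so the
# retry policy can be exercised without network access.
Fetch = Callable[[str], Tuple[int, Optional[str], str]]


def backoff_delay(attempt: int, retry_after: Optional[str],
                  base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retrying.

    Uses the server's Retry-After value when it is a whole number of seconds
    (the HTTP-date form is not handled in this sketch); otherwise a random wait
    in [0, min(cap, base * 2**attempt)] (exponential backoff with full jitter).
    """
    if retry_after is not None and retry_after.strip().isdigit():
        return float(retry_after.strip())
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(fetch: Fetch, url: str, max_attempts: int = 5,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Retry only on 429 Too Many Requests; any other non-200 status is raised."""
    for attempt in range(max_attempts):
        status, retry_after, body = fetch(url)
        if status == 200:
            return body
        if status != 429:
            # e.g. 403 Forbidden: retrying harder will not help, so stop and review.
            raise RuntimeError(f"{url}: HTTP {status}, not retrying")
        sleep(backoff_delay(attempt, retry_after))
    raise RuntimeError(f"{url}: still rate-limited after {max_attempts} attempts")


# Simulated server: rate-limits twice (once with Retry-After: 2), then succeeds.
responses = iter([(429, "2", ""), (429, None, ""), (200, None, "<menu/>")])
waits = []
body = fetch_with_backoff(lambda url: next(responses), "https://example.com/menu",
                          sleep=waits.append)
print(body, waits[0])  # <menu/> 2.0
```

Passing `sleep` as a parameter keeps the policy testable; in production it defaults to `time.sleep`.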

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device. This includes screen resolution, installed plugins, and system fonts. If a scraper runs on a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching to maintain persistence.

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use headless browsers like Puppeteer or Playwright to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
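Once a JSON response has been captured (for example, from the browser's network tab), turning it into tabular rows is ordinary data wrangling. The payload shape below (`store`, `menu.sections[].items[]`, prices in minor units) is entirely hypothetical; each platform uses its own undocumented and changing schema, so treat this as a sketch of the flattening step only.

```python
import json
from typing import Dict, List

# Hypothetical response shape, for illustration only.
SAMPLE_PAYLOAD = """
{
  "store": {"name": "Example Diner"},
  "menu": {
    "sections": [
      {"title": "Mains", "items": [
        {"name": "Burger", "price": {"amount": 1299, "currency": "USD"}},
        {"name": "Salad", "price": {"amount": 899, "currency": "USD"}}
      ]},
      {"title": "Drinks", "items": [
        {"name": "Lemonade", "price": null}
      ]}
    ]
  }
}
"""


def flatten_menu(payload: str) -> List[Dict]:
    """Turn a nested menu response into flat rows, one per item."""
    data = json.loads(payload)
    store = data.get("store", {}).get("name")
    rows = []
    for section in data.get("menu", {}).get("sections", []):
        for item in section.get("items", []):
            price = item.get("price") or {}  # tolerate a missing or null price
            amount = price.get("amount")
            rows.append({
                "store": store,
                "section": section.get("title"),
                "item": item.get("name"),
                # Assumes amounts are in minor units (cents); None when absent.
                "price": amount / 100 if amount is not None else None,
                "currency": price.get("currency"),
            })
    return rows


rows = flatten_menu(SAMPLE_PAYLOAD)
for row in rows:
    print(row)
```

Using `.get()` with defaults throughout means a renamed or missing field produces `None` values instead of a crash, which makes schema drift visible in the output.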

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.
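Staying under an API's rate limit is easiest to enforce on the client side. A token bucket is one common way to do it: it allows short bursts up to a capacity and refills at a steady rate. The rate and capacity values below are arbitrary assumptions; set them from the provider's documented limits.

```python
import time


class TokenBucket:
    """Client-side rate limiter: bursts up to `capacity`, refills at `rate` tokens/second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self) -> bool:
        """Take one token if available; return False instead of blocking."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self) -> None:
        """Block until a token is available (intended for use with the real clock)."""
        while not self.try_acquire():
            time.sleep((1 - self.tokens) / self.rate)


# Demonstration with a fake clock so the behavior is deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=2.0, capacity=3, clock=lambda: fake_now[0])
first = [bucket.try_acquire() for _ in range(4)]
print(first)  # [True, True, True, False]
fake_now[0] = 0.5  # half a second later: 0.5 s * 2 tokens/s = 1 token refilled
after = bucket.try_acquire()
print(after)  # True
```

In a real client you would call `bucket.acquire()` before every API request.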

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
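The "Ensure Data Accuracy" point can be made concrete with a small validation pass that separates clean records from rejected ones and keeps the reasons for review. The field names (`name`, `price`) and the rule that an unknown price is acceptable are assumptions chosen for this sketch.

```python
from typing import Dict, List, Tuple


def validate_item(item: Dict) -> List[str]:
    """Return the problems found in one scraped menu item (empty list = valid)."""
    problems = []
    name = item.get("name")
    if not isinstance(name, str) or not name.strip():
        problems.append("missing name")
    price = item.get("price")
    if price is not None:  # an unknown price is allowed in this sketch
        if isinstance(price, bool) or not isinstance(price, (int, float)):
            problems.append("price is not a number")
        elif price < 0:
            problems.append("negative price")
    return problems


def split_valid(items: List[Dict]) -> Tuple[List[Dict], List[Tuple[Dict, List[str]]]]:
    """Separate clean records from rejected ones, keeping the reasons."""
    good, bad = [], []
    for item in items:
        issues = validate_item(item)
        if issues:
            bad.append((item, issues))
        else:
            good.append(item)
    return good, bad


scraped = [
    {"name": "Burger", "price": 12.99},
    {"name": "", "price": 4.50},
    {"name": "Soup", "price": -1},
    {"name": "Tea", "price": None},
]
good, bad = split_valid(scraped)
print(len(good), "valid;", [reasons for _, reasons in bad])
# 2 valid; [['missing name'], ['negative price']]
```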

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using XPath selectors instead of fixed HTML elements.

Implementing fallback scraping techniques in case of site modifications.
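A fallback strategy can be as simple as an ordered list of selectors: try the current layout first, then older ones. The sketch uses the limited XPath subset in Python's `xml.etree.ElementTree`, which only works on well-formed markup (real-world HTML usually needs a tolerant parser such as lxml). All selector paths and attribute names here are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Sequence

# Newest layout first, older layouts as fallbacks. Hypothetical paths only.
PRICE_SELECTORS = (
    ".//span[@data-testid='item-price']",
    ".//div[@class='price']",
    ".//span[@class='cost']",
)


def first_match(root: ET.Element, selectors: Sequence[str]) -> Optional[str]:
    """Return the stripped text of the first element matched, trying selectors in order."""
    for path in selectors:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip()
    return None  # nothing matched: a signal that the layout changed again


old_layout = ET.fromstring("<li><span class='cost'>$8.50</span></li>")
new_layout = ET.fromstring("<li><span data-testid='item-price'>$9.00</span></li>")
print(first_match(old_layout, PRICE_SELECTORS))  # $8.50
print(first_match(new_layout, PRICE_SELECTORS))  # $9.00
```

Logging which selector matched (or that none did) doubles as a cheap change monitor.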

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

Uber Eats, DoorDash, and Just Eat present great challenges for menu data scraping, mainly due to their advanced bot detection systems. Nevertheless, by applying IP rotation, headless browsing, CAPTCHA solutions, and JavaScript execution, augmented with AI tools, businesses can scrape valuable data without triggering anti-scraping measures.

If you are looking for an automated and reliable web scraper, CrawlXpert is the solution for you: it specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, combined with staying up to date on web scraping trends, will keep your food delivery data collection successful for the foreseeable future.

Know More: https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats#ScrapingMenuDatafromDoorDash#ScrapingMenuDatafromJustEat#ScrapingforFoodDeliveryData


Opt For The Best SEO Services In Kolkata at The Best Price

Do you want to see your business reach new heights of success? Then shape your business with the help of an SEO company that provides the best SEO Services in Kolkata. Whether you own a small business or a big one, SEO services can help you promote it by increasing its visibility in search engines, which means more traffic to your website. So why wait to hire SEO services?

Know About the Different Types of SEO Services:

The different types of SEO services include:

Google SEO services:

It mainly helps you dominate Google search results while following all of Google's rules and guidelines.

Ecommerce SEO Services:

Ecommerce is a very large industry, as you know, and optimizing an ecommerce site is very complicated. It involves optimizing the homepage, category pages, product pages, and all the visual elements of an online shop.

Technical SEO:

As the name suggests, the main goal of the technical SEO is to assure that the search engine crawler can crawl and index without any problem.

Content SEO:

It is about creating quality content and making it better.

On-page SEO:

On-page SEO covers title tags, meta tags, and header tags for search engines; auditing your website's information architecture; creating sitemaps; optimizing site images; optimizing your website through keyword research; and analyzing how your website is used. Together, these make the website friendly to both search engines and users.
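As an example of the "creating sitemaps" task, a basic sitemap.xml in the sitemaps.org format can be generated with Python's standard library. The page URLs and dates below are placeholders; the sketch covers only the required `<loc>` and optional `<lastmod>` elements.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, Optional[str]]]) -> str:
    """Build sitemap.xml text from (absolute URL, optional YYYY-MM-DD date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & as &amp;
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


xml_text = build_sitemap([
    ("https://www.example.com/", "2025-01-26"),
    ("https://www.example.com/services/seo?city=kolkata&page=1", None),
])
print(xml_text)
```

Once generated, the file is typically placed at the site root and referenced from robots.txt or submitted in Google Search Console.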

Off-Page SEO:

Off-page optimization is all about promotion. It covers the techniques used to promote a website on the Internet. The most important off-page SEO techniques are link building and brand promotion.

Local SEO:

This type of SEO is relevant only to local businesses. If you have a local store, you should optimize your website for local SEO.

Mobile SEO:

This type of SEO is specifically for mobile devices. Did you know that more than 60% of Google searchers use mobile devices rather than traditional desktops? When working on mobile SEO, make sure that your website is mobile friendly, loads fast on mobile, and is easy to use.

About modern SEO services

SEO methods and strategies help develop a unique approach for your business. They result in more leads, phone calls, and orders. An SEO company with professional experts can help you develop custom methods and strategies.

Latest SEO Strategy:

The latest SEO strategy avoids shortcuts and provides sustainable results. Black Hat SEO strategy aims for quick results through tactics such as keyword stuffing and link scraping. Although it works in the short term, it is not an effective strategy for making your website rank well.

White Hat SEO is a stable process for ranking your website, and a white hat SEO strategy is the best way to build a lasting position.

Overall SEO Solutions:

This refers to all major aspects of SEO, including early research, strategy development and execution, SEO content development, reporting, etc.

Experienced SEO Advisor:

SEO experts are the assets of an SEO company. They develop and execute results-driven SEO and digital marketing campaigns.

Turn Your Website into a Profitable Asset With SEO Services:

SEO services can help your website reach your target customers by driving more traffic to it through advanced SEO tools and effective content marketing strategies. Here are some reasons why you should optimize your website through SEO services:

SEO services can make your website useful for target audiences.

SEO services help to increase your organic traffic.

They also help you capitalize on your existing traffic.

SEO services work hard for your website. SEO services give your audience what they want and expect from you.

What Can You Get from SEO Services?

Hiring a professional SEO agency is the first important step toward growing your business through digital marketing.

Quality Content:

An SEO service provides content with the correct references that will attract your target audience.

Audit your current website:

A good SEO agency audits your current website.

Identifying competitive edges and content gaps:

A good SEO agency will help you improve your website and look into your rivals' sites to make the best strategy for your business.

A good SEO agency will help you improve your website and look into your rivals' sites to make the best strategy for your business.

Opt for the Quality SEO Services:

If you want to see your website among the top five suggestions of popular search engines, opt for quality SEO services in Kolkata from a well-known SEO company like Digital Growth Media.


Data Science Trending in 2025

What is Data Science?

Data Science is an interdisciplinary field that combines scientific methods, processes, algorithms, and systems to extract knowledge and insights from structured and unstructured data. It is a blend of various tools, algorithms, and machine learning principles with the goal of discovering hidden patterns in raw data.

Introduction to Data Science

In the digital era, data is being generated at an unprecedented scale—from social media interactions and financial transactions to IoT sensors and scientific research. This massive amount of data is often referred to as "Big Data." Making sense of this data requires specialized techniques and expertise, which is where Data Science comes into play.

Data Science enables organizations and researchers to transform raw data into meaningful information that can help make informed decisions, predict trends, and solve complex problems.

History and Evolution

The term "Data Science" was first coined in the 1960s, but the field has evolved significantly over the past few decades, particularly with the rise of big data and advancements in computing power.

Early days: Initially, data analysis was limited to simple statistical methods.

Growth of databases: With the emergence of databases, data management and retrieval improved.

Rise of machine learning: The integration of algorithms that can learn from data added a predictive dimension.

Big Data Era: Modern data science deals with massive volumes, velocity, and variety of data, leveraging distributed computing frameworks like Hadoop and Spark.

Components of Data Science

1. Data Collection and Storage

Data can come from multiple sources:

Databases (SQL, NoSQL)

APIs

Web scraping

Sensors and IoT devices

Social media platforms

The collected data is often stored in data warehouses or data lakes.

2. Data Cleaning and Preparation

Raw data is often messy—containing missing values, inconsistencies, and errors. Data cleaning involves:

Handling missing or corrupted data

Removing duplicates

Normalizing and transforming data into usable formats
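The three cleaning steps above can be sketched with plain Python on a tiny made-up dataset: normalize text and convert types, drop duplicates, and fill missing values. Mean imputation is only one possible choice (others include dropping rows or using the median); in practice, libraries such as pandas provide these operations directly.

```python
from typing import Dict, List, Optional

# Made-up sample rows for illustration.
raw_rows = [
    {"city": " Kolkata ", "temp_c": "31.5"},
    {"city": "kolkata", "temp_c": "31.5"},   # duplicate once normalized
    {"city": "Mumbai", "temp_c": ""},        # missing value
    {"city": "Delhi", "temp_c": "n/a"},      # corrupted value
    {"city": "Chennai", "temp_c": "33"},
]


def to_float(value: str) -> Optional[float]:
    """Parse a number, returning None for blank or unparseable text."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def clean(rows: List[Dict[str, str]]) -> List[Dict]:
    seen = set()
    cleaned = []
    for row in rows:
        record = {
            "city": row["city"].strip().title(),  # normalize text
            "temp_c": to_float(row["temp_c"]),    # transform into a usable type
        }
        key = (record["city"], record["temp_c"])
        if key in seen:                           # remove duplicates
            continue
        seen.add(key)
        cleaned.append(record)
    # Handle missing/corrupted values: impute with the mean of observed values.
    observed = [r["temp_c"] for r in cleaned if r["temp_c"] is not None]
    if observed:
        fill = sum(observed) / len(observed)
        for r in cleaned:
            if r["temp_c"] is None:
                r["temp_c"] = fill
    return cleaned


cleaned = clean(raw_rows)
for r in cleaned:
    print(r)
```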

3. Exploratory Data Analysis (EDA)

Before modeling, data scientists explore data visually and statistically to understand its main characteristics. Techniques include:

Summary statistics (mean, median, mode)

Data visualization (histograms, scatter plots)

Correlation analysis

4. Data Modeling and Machine Learning

Data scientists apply statistical models and machine learning algorithms to:

Identify patterns

Make predictions

Classify data into categories

Common models include regression, decision trees, clustering, and neural networks.
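To make "identify patterns" and "make predictions" concrete, here is ordinary least-squares linear regression, the simplest of the models named above, written out by hand. The study-hours data is hypothetical; Python 3.10+ also ships `statistics.linear_regression`, and scikit-learn's `LinearRegression` is the usual choice for real work.

```python
# Hypothetical data: hours of study (x) and exam scores (y).
hours = [1, 2, 3, 4, 5]
scores = [52.0, 57.5, 61.0, 68.5, 71.0]

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Co-variation and variation sums; the 1/n factors cancel in the ratio.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(x, slope, intercept):
    """Apply the fitted line to a new input."""
    return slope * x + intercept

slope, intercept = fit_line(hours, scores)
forecast = predict(6, slope, intercept)
print(f"score = {slope:.2f} * hours + {intercept:.2f}; 6 hours -> {forecast:.1f}")
```

In this toy data each extra hour is associated with about 4.9 more points; with real data you would also inspect residuals and evaluate on held-out examples.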

5. Interpretation and Communication

The results need to be interpreted and communicated clearly to stakeholders. Visualization tools like Tableau, Power BI, or matplotlib in Python help convey insights effectively.

Techniques and Tools in Data Science

Statistical Analysis

Foundational for understanding data properties and relationships.

Machine Learning

Supervised and unsupervised learning for predictions and pattern recognition.

Deep Learning

Advanced neural networks for complex tasks like image and speech recognition.

Natural Language Processing (NLP)

Techniques to analyze and generate human language.

Big Data Technologies

Hadoop, Spark, and Kafka for handling massive datasets.

Programming Languages

Python: The most popular language, thanks to libraries such as pandas, NumPy, and scikit-learn.

R: Preferred for statistical analysis.

SQL: For database querying.

Applications of Data Science

Data Science is used across industries:

Healthcare: Predicting disease outbreaks, personalized medicine, medical image analysis.

Finance: Fraud detection, credit scoring, algorithmic trading.

Marketing: Customer segmentation, recommendation systems, sentiment analysis.

Manufacturing: Predictive maintenance, supply chain optimization.

Transportation: Route optimization, autonomous vehicles.

Entertainment: Content recommendation on platforms like Netflix and Spotify.

Challenges in Data Science

Data Quality: Poor data can lead to inaccurate results.

Data Privacy and Ethics: Ensuring responsible use of data and compliance with regulations.

Skill Gap: Requires multidisciplinary knowledge in statistics, programming, and domain expertise.

Scalability: Handling and processing vast amounts of data efficiently.

Future of Data Science

The future promises further integration of artificial intelligence and automation in data science workflows. Explainable AI, augmented analytics, and real-time data processing are areas of rapid growth.

As data continues to grow exponentially, the importance of data science in guiding strategic decisions and innovation across sectors will only increase.

Conclusion

Data Science is a transformative field that unlocks the power of data to solve real-world problems. Through a combination of techniques from statistics, computer science, and domain knowledge, data scientists help organizations make smarter decisions, innovate, and gain a competitive edge.

Whether you are a student, professional, or business leader, understanding data science and its potential can open doors to exciting opportunities and advancements in technology and society.


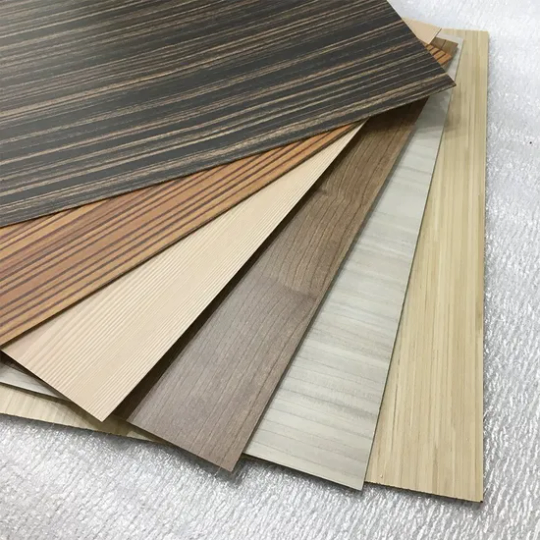

The contemporary WPC Flooring Factory operates as an artistic foundry where design possibilities transcend conventional limitations. Advanced digital scanning captures nuanced characteristics of rare wood species and natural stones with microscopic precision. These reference files drive high-fidelity printing systems applying ecological inks through variable droplet technology, achieving photorealistic recreations impossible through traditional methods. The fusion process integrates decorative layers with composite cores under precisely controlled thermal conditions, ensuring permanent image stability. Custom color matching utilizes spectral analysis equipment recalibrated for each production batch, maintaining absolute consistency across diverse material compositions. This artistic-industrial synthesis empowers designers to realize previously unattainable aesthetic visions.

Surface dimensionalization represents another frontier. The WPC Flooring Factory employs synchronized embossing technologies where micro-textured cylinders align perfectly with printed patterns. Variable-depth engraving replicates authentic grain variations and stone pitting across plank surfaces. Edge detailing innovations include hand-scraped, bevelled, and distressed profiles created through digitally-controlled milling stations. These textural enhancements extend beyond visual imitation to deliver authentic tactile experiences underfoot. The Pvcfloortile creative designation signifies products meeting rigorous aesthetic durability standards through accelerated wear simulation testing. Finished products undergo comprehensive lighting environment evaluations to ensure color integrity across diverse residential settings.

Collaborative customization opens up unprecedented access. The WPC Flooring Factory operates virtual design studios enabling real-time collaboration between homeowners, architects and production specialists. Cloud-based platforms facilitate material selection, texture modification, and layout visualization before manufacturing commitment. Small-batch production capabilities accommodate highly personalized projects without minimum quantity constraints. These capabilities transform flooring from a standardized commodity into a personalized artistic expression within modern living spaces. Click https://www.pvcfloortile.com/product/wpc-flooring/wpc-decking-flooring/ to read more.


Introduction - The Rise of On-Demand Delivery Platforms like Glovo

The global landscape of e-commerce and food delivery has witnessed an unprecedented transformation with the rise of on-demand delivery platforms. These platforms, including Glovo, have capitalized on the increasing demand for fast, convenient, and contactless delivery solutions. In 2020 alone, the global on-demand delivery industry was valued at over $100 billion and is projected to grow at a compound annual growth rate (CAGR) of 23% until 2027. The Glovo platform, which began in Spain, has expanded to more than 25 countries and 250+ cities worldwide, offering services ranging from restaurant deliveries to grocery and pharmaceutical goods.

The widespread use of smartphones and changing consumer habits have driven the growth of delivery services, making them a vital part of the modern retail ecosystem. Consumers now expect fast, accurate, and accessible delivery from local businesses, and platforms like Glovo have become key players in meeting this demand. For businesses striving to stay competitive, Glovo Data Scraping plays an essential role in acquiring real-time insights and market intelligence.

On-demand delivery services are no longer a luxury but a necessity for businesses, and companies that harness reliable data will lead the charge. Let’s examine the growing need for accurate delivery data as we look deeper into the challenges faced by businesses relying on real-time information.

Real-Time Delivery Data Changes Frequently

While platforms like Glovo are revolutionizing the delivery landscape, one of the significant challenges businesses face is the inconsistency and volatility of real-time data. Glovo, like other on-demand services, operates in a dynamic environment where store availability, pricing, and inventory fluctuate frequently. A store’s listing can change based on delivery zones, operating hours, or ongoing promotions, making it difficult for businesses to rely on static data for decision-making.

For example, store availability can vary by time of day (some stores may not operate during off-hours), and a delivery fee could change based on the customer’s location. The variability that Glovo Delivery Data Scraping has to capture extends to pricing, with each delivery zone potentially having different costs for the same product, depending on distance or demand.

This constant flux in data can lead to several challenges, such as inconsistent pricing strategies, missed revenue opportunities, and poor customer experience. Moreover, with shared URLs for chains like McDonald’s or KFC, Glovo Scraper API tools must be precise in extracting data across multiple store locations to ensure data accuracy.

The problem becomes even more significant when businesses need to rely on data for forecasting, marketing, and real-time decision-making. Glovo API Scraping and other advanced scraping methods offer a potential solution, helping to fill the gaps in data accuracy.

Stay ahead of the competition by leveraging Glovo Data Scraping for accurate, real-time delivery data insights. Contact us today!


The Need for Glovo Data Scraping to Maintain Reliable Business Intelligence

As businesses struggle to keep up with the ever-changing dynamics of Glovo’s delivery data, the importance of reliable data extraction becomes more evident. Glovo Data Scraping offers a powerful solution for companies seeking accurate, real-time data that can support decision-making and business intelligence. Unlike traditional methods of manually tracking updates, automated scraping using Glovo Scraper tools can continuously fetch the latest store availability, menu items, pricing, and delivery conditions.

Utilizing Glovo API Scraping ensures that businesses have regular access to up-to-date, accurate data, mitigating the challenges posed by fluctuating delivery conditions. Whether it’s using Glovo Restaurant Data Scraping to monitor competitive pricing or Glovo Menu Data Extraction to support inventory management, data scraping empowers businesses to optimize operations and gain an edge over competitors.

Moreover, Glovo Delivery Data Scraping ensures that companies can monitor changes in delivery fees, product availability, and pricing models, allowing them to adapt their strategies to real-time conditions. For companies in sectors like Q-commerce, which depend heavily on timely and accurate data, integrating scraped Glovo data into their data pipelines can dramatically enhance operational efficiency and business forecasting.

Through intelligent Glovo Scraper API solutions, companies can bridge the data gap and create more informed strategies to capture market opportunities.

The Problems with Glovo’s Real-Time Data

Glovo, a major player in the on-demand delivery ecosystem, faces challenges in providing accurate and consistent data to its users. These issues can lead to discrepancies in business intelligence, making it difficult for organizations to rely on the platform for accurate decision-making. Several critical problems hinder the effective use of Glovo Data Scraping and Glovo API Scraping. Let’s explore these problems in detail.

1. Glovo Only Shows Stores That Are Online at the Moment

One of the primary issues with Glovo is that it only displays stores that are currently online, which means businesses may miss potential opportunities. Store availability can fluctuate rapidly throughout the day, and a business may only see a partial picture of the stores operating at any given time. This makes it difficult to make decisions based on a consistent dataset, especially for those relying on real-time data.

To address this issue, companies can use Web Scraping Glovo Delivery Data techniques to collect data multiple times a day. By running automated scrapes at different intervals, businesses can build a more complete dataset and avoid gaps caused by the transient nature of store availability.
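The multiple-runs idea can be sketched as merging snapshots. The post doesn't describe Glovo's actual pages or API, so this example assumes each scrape run has already produced a set of store IDs (the IDs and timestamps are hypothetical) and only shows how combining runs fills the gaps that a single run leaves.

```python
from datetime import datetime

# Hypothetical results of three scrape runs on the same day:
# each maps the run time to the set of store IDs that were online.
snapshots = {
    datetime(2024, 5, 1, 9, 0): {"store-a", "store-b"},
    datetime(2024, 5, 1, 13, 0): {"store-a", "store-c"},
    datetime(2024, 5, 1, 21, 0): {"store-c", "store-d"},
}

def merge_snapshots(snaps):
    """Union all runs, recording when each store was first and last seen online."""
    seen = {}
    for taken_at in sorted(snaps):
        for store_id in snaps[taken_at]:
            first_seen = seen.get(store_id, (taken_at, taken_at))[0]
            seen[store_id] = (first_seen, taken_at)
    return seen

merged = merge_snapshots(snapshots)
for store_id in sorted(merged):
    first, last = merged[store_id]
    print(f"{store_id}: seen online {first:%H:%M} to {last:%H:%M}")
```

Here the 13:00 run alone would have seen only two of the four stores. First and last sighting are an approximation: a store may have gone offline between runs.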

2. Listings Vary by Time of Day and Delivery Radius

Another challenge is the variation in store listings by time of day and delivery radius. Due to Glovo’s dynamic delivery system, the availability of stores changes based on the user’s delivery location and the time of day. A restaurant that is available in the morning may not be available in the evening, or it may charge different delivery fees depending on the delivery zone. This introduces significant volatility in data that businesses must account for.

The solution is to scrape Glovo data using location-based API scraping techniques. With the right strategies, Glovo Scraper API tools can be programmed to fetch data for each specific delivery zone, giving a more accurate representation of store listings.
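Zone-based collection can be sketched as querying once per delivery location and tagging each result with its zone. How a real request is tied to a location on Glovo isn't covered in this post, so `fake_fetch`, the zone coordinates, the store names and the fees below are all hypothetical placeholders; only the per-zone loop is the point.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical delivery zones, each represented by one (latitude, longitude) point.
ZONES: Dict[str, Tuple[float, float]] = {
    "centre": (41.3874, 2.1686),
    "north": (41.4300, 2.1740),
}

def fake_fetch(lat: float, lon: float) -> List[dict]:
    """Hypothetical stand-in for a real request made from the given location.

    Returns canned data: the northern point sees fewer stores and a higher fee.
    """
    if lat > 41.41:
        return [{"store": "Burger Place", "delivery_fee": 2.99}]
    return [
        {"store": "Burger Place", "delivery_fee": 0.99},
        {"store": "Pizza Corner", "delivery_fee": 1.49},
    ]

def scrape_by_zone(zones, fetch: Callable[[float, float], List[dict]]) -> List[dict]:
    """Query every zone separately and tag each listing with the zone it came from."""
    rows = []
    for zone, (lat, lon) in zones.items():
        for listing in fetch(lat, lon):
            rows.append({**listing, "zone": zone})
    return rows

rows = scrape_by_zone(ZONES, fake_fetch)
fees = {(r["store"], r["zone"]): r["delivery_fee"] for r in rows}
print(fees)
```

Passing the fetch function in as a parameter keeps the zone logic testable and lets a real client be swapped in later.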

3. Shared URLs Across Multiple Branches Complicate Precise Location Tracking

For larger chains like McDonald's or KFC, Glovo often uses a single URL to represent multiple store branches within the same city. This means that all data tied to a single restaurant chain will be lumped together, even though there may be differences in location, inventory, and pricing. Such discrepancies complicate accurate data collection and make it harder to pinpoint specific store information.

The answer lies in Glovo Restaurant Data Scraping. By utilizing advanced scraping tools like Glovo Scraper and incorporating specific store locations within the scraping process, businesses can separate out data for each branch and ensure a more accurate dataset.
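Separating branches comes down to choosing the right grouping key. This sketch assumes each scraped row already carries some branch identifier (here a hypothetical street address) alongside the shared chain URL, which is itself a placeholder; how that identifier is obtained from Glovo is not specified in the post.

```python
from collections import defaultdict

CHAIN_URL = "https://example.com/store/chain-x"  # hypothetical shared chain URL

# Hypothetical scraped rows: both branches are served from the same URL.
scraped = [
    {"url": CHAIN_URL, "branch": "Main St 1", "item": "Burger", "price": 5.50},
    {"url": CHAIN_URL, "branch": "Harbour Rd 9", "item": "Burger", "price": 5.90},
    {"url": CHAIN_URL, "branch": "Main St 1", "item": "Fries", "price": 2.20},
]

def menu_by_url(records):
    """Naive grouping: a later branch silently overwrites earlier prices."""
    menus = defaultdict(dict)
    for rec in records:
        menus[rec["url"]][rec["item"]] = rec["price"]
    return dict(menus)

def menu_by_branch(records):
    """Group by (URL, branch) so each location keeps its own menu and prices."""
    menus = defaultdict(dict)
    for rec in records:
        menus[(rec["url"], rec["branch"])][rec["item"]] = rec["price"]
    return dict(menus)

naive = menu_by_url(scraped)
per_branch = menu_by_branch(scraped)
print(naive)
print(per_branch)
```

With URL-only grouping the Harbour Rd burger price overwrites the Main St one, which is the lumping problem described above.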

4. Gaps in Sitemap Coverage and Dynamic Delivery-Based Pricing Add Complexity