#learning algorithms

Explore tagged Tumblr posts

Text

Hi, idk who's going to see this post or whatnot, but I had a lot of thoughts on a post I reblogged about AI that started to veer off the specific topic of the post, so I wanted to make my own.

Some background on me: I studied Psychology and Computer Science in college several years ago, with an interdisciplinary minor called Cognitive Science that joined the two with philosophy, linguistics, and multiple other fields. The core concept was to study human thinking and learning and its similarities to computer logic, and thus the courses I took touched frequently on learning algorithms, or "AI". This was of course before it became the successor to bitcoin as the next energy hungry grift, to be clear. Since then I've kept up on the topic, and coincidentally, my partner has gone into freelance data model training and correction. So while I'm not an expert, I have a LOT of thoughts on the current issue of AI.

I'll start off by saying that AI isn't a brand new technology. Learning algorithms, as the field is more properly known, have been around in the linguistics, stats, biotech, and computer science worlds for a couple of decades or more. However, pre-ChatGPT learning algorithms were ground-up designed tools specialized for individual purposes, each trained on a very specific data set to make it as accurate at one thing as possible. Some time ago, data scientists found out that if you have a large enough data set on one specific kind of information, you can get a learning algorithm to become REALLY good at that one thing by giving it lots of feedback on right vs wrong answers. Right and wrong answers are nearly binary, which is exactly how computers are coded, so by implementing the psychological method of operant conditioning, reward and punishment, you can teach a program how to identify and replicate things with incredible accuracy. That's what makes it a good tool.
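For anyone who wants to see the right-vs-wrong feedback idea in code, here's a minimal sketch in Python. It's a toy (a perceptron learning the logical AND of two inputs), not how any production model is actually trained. The task, the function names, and the data are all assumptions picked for illustration.

```python
# Toy illustration only: a perceptron that learns from right/wrong feedback.
# The task (logical AND), the names, and the data are hypothetical.

def predict(weights, bias, features):
    """Guess 1 if the weighted sum is positive, otherwise guess 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0

def train(examples, epochs=20):
    """Adjust the weights only when a guess turns out to be wrong."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for features, label in examples:
            # error is 0 for a right answer, +1 or -1 for a wrong one
            error = label - predict(weights, bias, features)
            bias += error
            weights = [w + error * x for w, x in zip(weights, features)]
    return weights, bias

# Labeled examples: the answer is 1 only when both inputs are 1.
EXAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train(EXAMPLES)
predictions = [predict(weights, bias, features) for features, _ in EXAMPLES]
print(predictions)  # [0, 0, 0, 1]
```

Every wrong answer nudges the weights a little, and right answers leave them alone. After a handful of passes over the four examples, the guesses match the labels.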

And a good tool it was and still is. Reverse image search? Learning algorithm based. Complex relationship analysis between words used in the study of language? Often uses learning algorithms to model relationships. Simulations of extinct animal movements and behaviors? Learning algorithms trained on anatomy and physics. So many features of modern technology and science either implement learning algorithms directly into the function or utilize information obtained with the help of complex computer algorithms.

But a tool in the hand of a craftsman can be a weapon in the hand of a murderer. Facial recognition software, drone targeting systems, multiple features of advanced surveillance tech in the world are learning algorithm trained. And even outside of authoritarian violence, learning algorithms in the hands of get-rich-quick minded Silicon Valley tech bro business majors can be used extremely unethically. All AI art programs that exist right now are trained from illegally sourced art scraped from the web, and ChatGPT (and similar derived models) is trained on millions of unconsenting authors' works, be they professional, academic, or personal writing. To people in countries targeted by the US War Machine and artists the world over, these unethical uses of this technology are a major threat.

Further, it's well known now that AI art and especially ChatGPT are MAJOR power-hogs. This, however, is not inherent to learning algorithms / AI, but is rather a product of the size, runtime, and inefficiency of these models. While I don't know much about the efficiency issues of AI "art" programs, as I haven't used any since the days when "imaginary horses" trended and the software was contained to a university server room with a limited training set, I do know that ChatGPT is internally bloated to all hell. Remember what I said about specialization earlier? ChatGPT throws that out the window. Because they want to market ChatGPT as being able to do anything, the people running the model just cram it with as much as they can get their hands on, and yes, much of that is just scraped from the web without the knowledge or consent of those who have published it. So rather than being really good at one thing, the owners of ChatGPT want it to be infinitely good, infinitely knowledgeable, and infinitely running. So the algorithm is never shut off; it's constantly taking inputs and processing outputs with a neural network of unnecessary size.

Now this part is probably going to be controversial, but I genuinely do not care if you use ChatGPT, in specific use cases. I'll get to why in a moment, but first let me clarify what use cases. It is never ethical to use ChatGPT to write papers or published fiction (be it for profit or not); this is why I also, full stop, oppose the use of publicly available gen AI in making "art". I say publicly available because, going back to my statement on specific models made for single project use, lighting, shading, and special effects in many 3D animated productions use specially trained learning algorithms to achieve the complex results seen in the finished production. Famously, the Spider-verse films use a specially trained in-house AI to replicate the exact look of comic book shading, using ethically sourced examples to build a training set from the ground up, the unfortunately-now-old-fashioned way. The issue with gen AI in written and visual art is that the publicly available, always online algorithms are unethically designed and unethically run, because the decision makers behind them are not restricted enough by laws in place.

So that actually leads into why I don't give a shit if you use ChatGPT if you're not using it as a plagiarism machine. Fact of the matter is, there is no way ChatGPT is going to crumble until legislation comes into effect that illegalizes and cracks down on its practices. The public, free userbase worldwide is such a drop in the bucket of its serverload compared to the real way ChatGPT stays afloat: licensing its models to businesses with monthly subscriptions. I mean this sincerely, based on what little I can find about ChatGPT's corporate subscription model, THAT is the actual lifeline keeping it running the way it is. Individual visitor traffic worldwide could suddenly stop overnight and it wouldn't affect ChatGPT's bottom line. So I don't care if you, I, or anyone else uses the website, because until the US or EU governments act to explicitly ban the shady practices of ChatGPT and other gen AI businesses, they are all only going to continue to stick around and profit from big business contracts. So long as you do not give them money or sing their praises, you aren't doing any actual harm.

If you do insist on using ChatGPT after everything I've said, here's some advice I've gathered from testing the algorithm to avoid misinformation:

If you feel you must use it as a sounding board for figuring out personal mental or physical health problems like I've seen some people doing when they can't afford actual help, do not approach it conversationally in the first person. Speak in the third person as if you are talking about someone else entirely, and exclusively note factual information on observations, symptoms, and diagnoses. This is because where ChatGPT draws its information from depends on the style of writing provided. If you try to be as dry and clinical as possible, and request links to studies, you should get dry and clinical information in return. This approach also serves to divorce yourself mentally from the information discussed, making it less likely you'll latch onto anything. Speaking casually will likely target unprofessional sources.

Do not ask for citations, ask for links to relevant articles. ChatGPT is capable of generating links to actual websites in its database, but if asked to provide citations, it will replicate the structure of academic citations, and will very likely hallucinate at least one piece of information. It does not help that these citations will often be for papers that are not publicly available and will not include links.

ChatGPT is at its core a language association and logical analysis software, so naturally its best purposes are analyzing written works for tone, summarizing information, and providing examples of programming. It's partially coded in Python, so the examples of Python and Java code I've tested come out 100% accurate. Complex Google Sheets formulas, however, are often finicky, as it often struggles with the proper nesting order of formulas.

Expanding off of that, if you think of the software as an input-output machine, you will get best results. Problems that do not have clear input information or clear solutions, such as open ended questions, will often net inconsistent and errant results.

Commands are better than questions when it comes to asking it to do something. If you think of it like programming, then it will respond like programming most of the time.

Most of all, do not engage it as a person. It's not a person, it's just an algorithm that is trained to mimic speech and is coded to respond in courteous, subservient responses. The less you try and get social interaction out of ChatGPT, the less likely it will be to just make shit up because it sounds right.

Anyway, TL;DR:

AI is just a tool and nothing more at its core. It is not synonymous with its worst uses, and is not going to disappear. Its worst offenders will not fold or change until legislation cracks down on them, and we, the majority users of the internet, are not their primary consumers. Use of AI to substitute art (written and visual) with blended up art of others is abhorrent, but use of a freely available algorithm for personal analytical use is relatively harmless so long as you aren't paying them.

We need to urge legislators the world over to crack down on the methods these companies are using to obtain their training data, but at the same time people need to understand that this technology IS useful and can be, and has been, used for good. I urge people to understand that learning algorithms are not one and the same with theft just because the biggest ones available to the public have widely used theft to cut corners. So long as computers continue to exist, algorithmic problem-solving and generative algorithms are going to continue to exist, as they are the logical conclusion of increasingly complex computer systems. Let's just make sure the future of the technology is not defined by the way things are now.

#kanguin original#ai#gen ai#generative algorithms#learning algorithms#llm#large language model#long post

7 notes


Text

Multi-timescale reinforcement learning in the brain

Sutton, R. S. & Barto, A. G. Reinforcement Learning 2nd edn (MIT Press, 2018). Tesauro, G. Temporal difference learning and TD-Gammon. Commun. ACM 38, 58–68 (1995). Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015). Silver, D. et al. Mastering the game of Go with deep neural…

0 notes

Text

AI shouldn't be called AI

It's not artificial intelligence (yet, i guess)

Most of the "AI" we talk about is just learning algorithms that see patterns or whatev, there's no real intelligence behind it, me thinks

So instead we should call them "IA", which stands for "In-learning Algorithm"

And just like that, I have an excuse to use the french version of AI without it being deemed wrong in english

#ai#artificial intelligence#idfk how to tag this#fuck ai#learning algorithms#sellers really be saying 'buy my ai' and its just an algorithm that steals people's artwork#like... erm. no thanks

0 notes

Text

REPLICA'S PATREON IS FINALLY HERE!

You’ve asked for it and now that I am back I can finally put some wonderful treats up on my brand new Patreon including a sneak peek to the next Replica update, some sketches, and Part 1 of a short story focused on Replica Donnie:

"The Road to Hell is Paved with Good Data"

It centers around Donnie's journey towards becoming the powerhouse we know in Replica as well as the drastic steps he takes in the early months of the Krang invasion to achieve his lofty goals.

NOTE: I highly recommend signing up on a browser or desktop (not on an iPhone) due to extra fees that were recently applied.

2ND NOTE: while some things such as the Replica page WIP will eventually make their way onto my Tumblr, the sketches and these short stories will stay exclusive to Patreon for the time being. This is because most of the content on my Patreon will be focusing on sensitive themes of war, a deeper look into the characters, and maybe even a bit of romance. All things I doubt would have been touched on in the Rise canon. So think of it as just extra fluff for readers who want more secret lore from this universe. If any of these themes DO interest you then please consider checking it out HERE! (I might bring it onto Kofi, in the future if people have trouble accessing it.)

#also you'll finally get to learn what happened in Shanghai#but later... that's a story for another day#also a reblog of this would mean a lot so as to spread the word#fight against the algorithm haha#I'm still very new to all this so please forgive me as this is all a learning process#Patreon#rottmnt replica#replica#rottmnt#kathaynesart#save rottmnt#rise of the teenage mutant ninja turtles#unpause rise of the tmnt#tmnt#unpause rottmnt#Donnie#replica Donnie

701 notes


Text

the hatred for anything labeled as “AI” is truly fucking insane 😭 just saw someone berating spotify’s “AI generated playlists” you mean the fucking recommendations? the fucking algorithm? the same brand of algorithm that recommends you new youtube videos or netflix shows or for you page tweets? the “you might like this” feature? that fucking AI generation?

#icarus speaks#neg#ITS JUST A BUZZWORD#IT MEANS NOTTHINNGGG#it’s just coding. it’s machine learning it’s algorithms it’s not fucking anything new#this has existed since forever GET OVER URSELF

846 notes


Text

#Proud

#shockwave#insecticons#sketch#took out the context and will be putting it into its own post aklsdjlaksdj i gotta learn to work the algorithm tbh

354 notes


Text

hey just a few points since this place is growing:

if you see bot accounts under my posts (or anyone's for that matter), do not interact. There's a few kinds, like porn bots (most often), donation bots and rage bait bots. Don't reply, don't message them. BLOCK and report.

if you're new here to Tumblr, welcome! Change the default icon to literally anything else and put your age in your bio; something like "adult" or "minor" is enough if you're not comfortable putting your actual age. Also, reblog at least one or two things onto your blog as a start, since Tumblr is meant to be used for reblogging things. You can read more about why here.

A lot of blogs here have mdni (minors do not interact), that's why the previous point is highly recommended. If you interact from a blank account, you're very likely going to get blocked by the author.

"I followed some blogs, but why am I seeing posts from a few days ago and not the newest thing?" Change what you want to see on your feed in the settings, read more here.

The tumblr faq site has some cool tips to read.

Want to see mature content? you can enable “Show mature content” in your account settings, but this is only available on the web and android app.

Use tags! AND USE THEM APPROPRIATELY! If your post shows 2 characters, then tag those characters, you can learn how to tag by seeing other artist/blog's posts and use similar ones. Do not tag a "popular" tag for the sake of getting higher engagement, you do that and you might get blocked by others.

You can also make your own tags so you can easily find your posts on your blog again (for example, I use "gummmyart" for all my art content; it also serves as a filter for others if they're just here for doodles).

Want to find something you made way too long ago? You can try to find it through "View Archive", which catalogues the things you reblogged and made. As long as you remember which tag you used and the month, you'll be able to find it again, most of the time (sometimes they might not show up...idk why tho)

Fun fact! If you're on mobile, long press the heart icon :3

Use the "read more"/"keep reading" option if you have a long post! It's the last icon after the poll icon whenever you make a new post. This makes sure that when someone reblogs your post, others don't have to scroll for like 4 minutes to get to the next post xD You can adjust where to put the "read more" break by clicking on the left of the line with the 4 dots icon.

#for the new peeps joining from my twt and just to newbies in general#i think these are prob the most important ones for now#obvs there's more to learn but yeah! just wanted to throw together a short list for those asking#i see people using the gh0@p tag on post that doesn't show it more often now and BOY! blocked LMAO#i get it bcuz it takes awhile for your posts to gain traction and get yeet into the algorithm but if you're not showing the right thing#then it's just going to backfire#gummmyspeaks#psa#tumblr tips

88 notes


Text

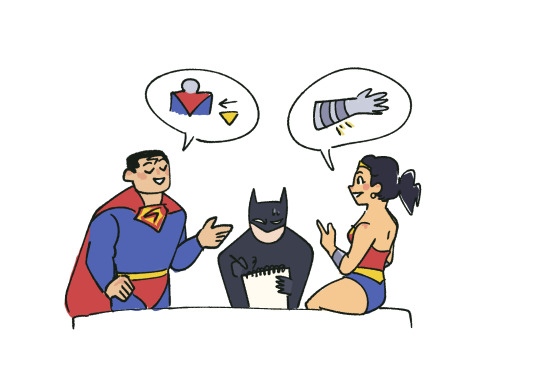

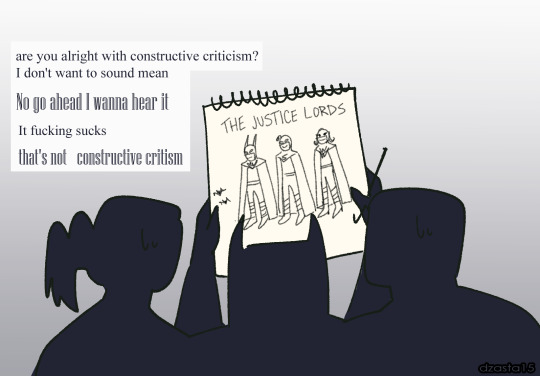

he just wanted them to match

#d-art#dc fanart#batman#bruce wayne#lord batman#clark kent#superman#lord superman#kal el#diana of themyscira#diana prince#lady wonder woman#justice lords#dcau#dcau fanart#dc meme#one day ill learn not to post at night but today is not today#im too impatient to care about algorithms if this is meant to be seen it will be seen

623 notes


Text

I mean, if tumblr dies I'll miss hanging out somewhere where my entire dash is queer in some way and I'll miss some followers and mutuals in particular, but in general I will interpret it as the universe telling me that's enough of that now

I feel that way about pretty much all social media tbh, there are great things about it but in general I think that because it's been left unregulated for so long the toxicity and monetisation of outrage and conflict is actually extremely bad for society.

I will find somewhere else to get my news from the source. I will find somewhere else to hang out with people that doesn't rely on monetisation of my information and algorithm. I will find pockets of other like-minded queers. Nature will heal.

#social media#unrelated but I just wrote 'algorithm' for the first time and was surprised to learn it wasn't spelt 'algorhythm'

76 notes


Text

About his "trigger warnings"

I mentioned here on tumblr that I used to have a number one favourite book writer. I guess not anymore. After all the SA allegations and other stories that got leaked by people around him (his colleagues, co-workers etc.), I realized he's an abusive asshole and I owe it to you all to say that openly here. And some of the assaults date back decades now, which means he didn't just wake up one day and change into an asshole, he most likely was always one.

I read the foreword to his book Trigger Warning again. I feel like I took a peek beyond his fake persona there. He writes about trigger warnings like it's some exotic curious little trend that kids on the internet came up with, finds it a bit peculiar like a daddy trying to understand their kid's hobbies, then proceeds to use them like funny teasers for his short stories ("can you find the big tentacle hidden among the pages somewhere?"), only to finish it all up with a punch straight to your face: real life doesn't have trigger warnings, so always watch out for yourself. On the surface level? This all sounds like a slightly misguided, maybe even witty intro. Nothing is said with malice, right? And yet, the message underneath it all was always to discredit trigger warnings as a concept. That's why that delivery line is at the very end of that intro. You're supposed to be lulled into agreeing how silly it all is. I dunno if he did it on purpose or did it without thinking much about it, by habit, but that intention is there and it's disguised with concern and attempts to sound kind. A peek beyond the nice guy mask. No wonder I could never finish that anthology of short stories. The cognitive dissonance caused by the foreword stuck with me like a bad aftertaste. My intuition told me this was all wrong, I just couldn't find the words to express it.

And you know why it works so well as a disguise and why we tend to believe he didn't do it on purpose? Because hey, he just said the facts, the truth! Reality indeed doesn't have any trigger warnings, what's wrong with saying that! Yes, that statement is true. Using true statements in a carefully woven context to sell a lie is an example of excellent manipulation. So allow me to untangle it or, in other words, to reveal the magic trick behind it.

Why do trigger warnings exist? Isn't Gaiman right, aren't they counterproductive, you might think, because by avoiding triggers you will never get better at dealing with them? Indeed, here's the catch, because the answer isn't a simple yes or no here. Yes, often to recover from trauma, you need to expose yourself to it in some way - like for example, through exposure therapy (or even just classic psychotherapy). But also No, because there's no rule that says you will officially recover only after you're fine reading fiction about sexual assault (for example)! Some triggers will diminish, some will not, and the best you can do for the latter is to avoid them altogether. Triggers are extremely personal, but you can learn to manage them, in ways that respect your own boundaries, but never by giving up your right to selfcare. You see the difference?

Back to the therapy bit for a moment. To recover, often you need to go through with it. But here's the thing - you do it in a *controlled environment*, accompanied by a specialist that is there to help and calm you down afterwards. And you only start to do that once you feel *ready* to face it. Now compare it to a situation of reading a book (yes, a book, which usually never has any trigger warnings, because that's such a silly fanfiction thing). You come upon your trigger without any warning, preparation or support around you, and you're left with the aftermath of a possible panic attack or other symptoms completely on your own. It might take you weeks to recover from it, because perhaps you weren't yet in any therapy that could help you manage your triggers more effectively. But then you tell yourself it's fine, minimizing your own emotional reactions, because *it was just a book*. But, you realize, even years later you still remember it and you might finally accept the harsh truth that you're still not fine with it.

Now imagine the same situation, but the book did have trigger warnings listed. For example, about sexual abuse. You would see that and leave the bookstore without the book, because you would know you're not *ready* for that. And it's fine not to be ready, be it yet or ever. This is about consent and selfcare, both are essential to process through trauma and recover. The books without trigger warnings rob us of selfcare, consent and choice. They teach us we should always ignore our triggers and push through. It's sadly a reality that is widely accepted, so Gaiman is right, nothing in reality will flash you a warning. But he's also wrong: it doesn't mean we can't make life a tiny bit easier for those of us who are traumatized, instead of leaving them with all of that on their very own. This part, he doesn't want you to even consider. He doesn't want you to imagine the positive side of living in a world in which real books warn you about triggers, because then it would prove that it *can* become a reality in which real things (like books) warn you of triggers. They can't shield you from everything, but that's also not the point: it's just to make some things feel more safe, for everybody.

(As a side note, being triggered is not the same as stepping outside your comfort zone - those are two different matters! Though yes, stepping outside your comfort zone in an extreme way CAN become traumatic as the result as well).

I guess Neil Gaiman just thinks some people are too sensitive and should just get over themselves. You don't need those warnings, they won't protect you anyway. Have you tried not getting traumatized? How dare you think your selfcare is more important than reading my questionable fantasies? You're missing out if you skip my book (that has no proper trigger warnings) and you have only yourself to blame! I provide you a safe environment to explore your traumatic triggers, you should be grateful! And how is your book providing a safe environment exactly, author? Did you even try to put a safety net there for your reader? Do you even care? Of course you don't. But you will pretend like you do: by providing a very disingenuous effort that is mostly meant to be a pat on your own back for cleverly dismissing the very concept of trigger warnings, while pretending to play along with it and exposing their lack of power in the process. Disguised as a coincidence, lack of understanding or an unskillful attempt written by a slightly ignorant daddy-like figure. What an irony that you do it by nearly surgically focusing on the blind spots of the concept, proving at the same time you do know the mechanism behind it pretty well. You knew what you were doing and how you were doing it.

Or at least, this is how I see it: I might be wrong on the details, but I'm sure I caught the gist of the manipulative behaviour there. An abuser always wants you to step out of your comfort zone, get surprised by a trigger, and to make sure you're outside your safety net. Because then you're an easier target, more likely to agree to harmful things (be it real actions or just harmful beliefs delivered to you by the author of a book, like in case of *trigger warnings being pointless*). They want to groom you into thinking that you're just being silly and see things that aren't there.

Trigger Warning's foreword is exactly that and I feel disgusted, now that I finally recognize my own feelings about it. I probably didn't find words for it before, because I wanted to believe Gaiman had good intentions behind it, they just didn't work out very well. Except that was never the case and that's why it never felt right. That good intention was never there, but it sure *looked* like it was. Also it took me way too long to realize people do things like that on purpose. You know what, Gaiman? Thanks to gaslighting efforts like yours it took me also way too many years to accept that selfcare IS OKAY.

So many people now think nothing was ever genuine about Neil Gaiman because his nice guy mask slipped. A mask he used to hide his autism behind and appear neurotypical/feel accepted thanks to it. Whenever a really advanced mask like that slips, the cognitive dissonance becomes a huge gap between a mask and actual self in perception of other people. Still, your autism is not an excuse for things you do and say, and definitely doesn't excuse assault as simple miscommunication - and yes, he did try to justify lack of consent this way. "I'm autistic, I read the body language wrong and wasn't even aware of it". Hey, you could have, like, asked. There's no shame in getting confirmation in words :P but it's just a poor excuse anyway, the truth is he didn't care if it was wanted or not, as long as he got adoration and powertripping thrill out of that, and that's the best case scenario here.

I believe the allegations. I won't be able to read Gaiman's books anymore, I honestly can't see them the same way I used to anymore. I loved Coraline and The Graveyard Book, and Smoke and Mirrors. I feel disgusted knowing that he openly claimed to be a feminist while at the same time assaulted so many people and used emotional manipulation so they won't #metoo him. He even went as far as to claim "always believe the victims", but once the allegations flew his way, what did he do? Blamed the victims, even called them mentally ill! I also feel now like his books are also just full of deception, meant to hide harmful beliefs under quirky words and imaginative tales. And I might never be able to stop feeling this way and I don't owe him a second chance anyway.

Good Omens stays in my heart though, because sir Terry Pratchett put a lot of work into it and it shows. I feel like I would show him disrespect if I discarded it. Let's say it becomes a Gaiman Who Might Have Been But Never Was, for me.

#neil gaiman#neil gaiman allegations#gaslighting#emotional manipulation#please use those trigger warnings#they really can help people#this post might be uneccessarily spiteful and very very angry#but my feelings need a safe venting space#and I owe people explanation why this guy is not my fav author anymore#everyone deserves to know the truth#especially because bots and algorithms push positive posts about Gaiman to hide the allegations from sight#the allegations been known for months but I only learned about it lately thanks to random vid on YOUTUBE ffs

116 notes


Text

*sniffles* you are all so fucking stupid

#i'm waiting for the novelty of xhs to die down for usamericans and they realize they have no idea how to adjust to this place#you CANNOT run your xhs the same way you're running your tumblr or twitter especially if you're queer#to the people complaining about getting their xhs bios disallowed because you put a pride flag and labels in it or whatever:#sorry but that's really on you#some of you are too caught up in the euphoria of rebelling agains tiktok to remember that the censorship ain't a joke#and there are some topics you'd best not bring up#chinese netizens have learned to navigate some of it through codewords and shit that won't alert people#but usamericans have never known how to be subtle so. this is gonna be a shitshow eventually#and FUCK y'all if y'all take all the overseas people down with you when either xhs or the us gov takes action about this#(xhs is already on it considering trying to separate the mainland and overseas algorithms as a result of this. fuck you.)#anyways i have too many opinions on this issue now but just. [screams into the woods]#ashton originals

57 notes


Note

could you perhaps png-ify some shots from the triple baka tf2 (team fortress 2) video on youtube..

im just now realizing how weird this sounds

#sure why not lol#png#transparent#request#art#this better not mess up my perfectly curated algorithm of northernlion playing fnv and japanese learning materials 🤣🤣🤣

63 notes


Text

The surprising truth about data-driven dictatorships

Here’s the “dictator’s dilemma”: they want to block their country’s frustrated elites from mobilizing against them, so they censor public communications; but they also want to know what their people truly believe, so they can head off simmering resentments before they boil over into regime-toppling revolutions.

These two strategies are in tension: the more you censor, the less you know about the true feelings of your citizens and the easier it will be to miss serious problems until they spill over into the streets (think: the fall of the Berlin Wall or Tunisia before the Arab Spring). Dictators try to square this circle with things like private opinion polling or petition systems, but these capture a small slice of the potentially destabilizing moods circulating in the body politic.

Enter AI: back in 2018, Yuval Harari proposed that AI would supercharge dictatorships by mining and summarizing the public mood (as captured on social media), allowing dictators to tack into serious discontent and defuse it before it erupted into unquenchable wildfire:

https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Harari wrote that “the desire to concentrate all information and power in one place may become [dictators’] decisive advantage in the 21st century.” But other political scientists sharply disagreed. Last year, Henry Farrell, Jeremy Wallace and Abraham Newman published a thoroughgoing rebuttal to Harari in Foreign Affairs:

https://www.foreignaffairs.com/world/spirals-delusion-artificial-intelligence-decision-making

They argued that — like everyone who gets excited about AI, only to have their hopes dashed — dictators seeking to use AI to understand the public mood would run into serious training data bias problems. After all, people living under dictatorships know that spouting off about their discontent and desire for change is a risky business, so they will self-censor on social media. That’s true even if a person isn’t afraid of retaliation: if you know that using certain words or phrases in a post will get it autoblocked by a censorbot, what’s the point of trying to use those words?

The phrase “Garbage In, Garbage Out” dates back to 1957. That’s how long we’ve known that a computer that operates on bad data will barf up bad conclusions. But this is a very inconvenient truth for AI weirdos: having given up on manually assembling training data based on careful human judgment with multiple review steps, the AI industry “pivoted” to mass ingestion of scraped data from the whole internet.

But adding more unreliable data to an unreliable dataset doesn’t improve its reliability. GIGO is the iron law of computing, and you can’t repeal it by shoveling more garbage into the top of the training funnel:

https://memex.craphound.com/2018/05/29/garbage-in-garbage-out-machine-learning-has-not-repealed-the-iron-law-of-computer-science/

When it comes to “AI” that’s used for decision support — that is, when an algorithm tells humans what to do and they do it — then you get something worse than Garbage In, Garbage Out — you get Garbage In, Garbage Out, Garbage Back In Again. That’s when the AI spits out something wrong, and then another AI sucks up that wrong conclusion and uses it to generate more conclusions.

To see this in action, consider the deeply flawed predictive policing systems that cities around the world rely on. These systems suck up crime data from the cops, then predict where crime is going to be, and send cops to those “hotspots” to do things like throw Black kids up against a wall and make them turn out their pockets, or pull over drivers and search their cars after pretending to have smelled cannabis.

The problem here is that “crime the police detected” isn’t the same as “crime.” You only find crime where you look for it. For example, there are far more incidents of domestic abuse reported in apartment buildings than in fully detached homes. That’s not because apartment dwellers are more likely to be wife-beaters: it’s because domestic abuse is most often reported by a neighbor who hears it through the walls.

So if your cops practice racially biased policing (I know, this is hard to imagine, but stay with me /s), then the crime they detect will already be a function of bias. If you only ever throw Black kids up against a wall and turn out their pockets, then every knife and dime-bag you find in someone’s pockets will come from some Black kid the cops decided to harass.

That’s life without AI. But now let’s throw in predictive policing: feed your “knives found in pockets” data to an algorithm and ask it to predict where there are more knives in pockets, and it will send you back to that Black neighborhood and tell you to throw even more Black kids up against a wall and search their pockets. The more you do this, the more knives you’ll find, and the more you’ll go back and do it again.

This is what Patrick Ball from the Human Rights Data Analysis Group calls “empiricism washing”: take a biased procedure and feed it to an algorithm, and then you get to go and do more biased procedures, and whenever anyone accuses you of bias, you can insist that you’re just following an empirical conclusion of a neutral algorithm, because “math can’t be racist.”

HRDAG has done excellent work on this, finding a natural experiment that makes the problem of GIGOGBI crystal clear. The National Survey On Drug Use and Health produces the gold standard snapshot of drug use in America. Kristian Lum and William Isaac took Oakland’s drug arrest data from 2010 and asked Predpol, a leading predictive policing product, to predict where Oakland’s 2011 drug use would take place.

[Image ID: (a) Number of drug arrests made by Oakland police department, 2010. (1) West Oakland, (2) International Boulevard. (b) Estimated number of drug users, based on 2011 National Survey on Drug Use and Health]

Then, they compared those predictions to the outcomes of the 2011 survey, which shows where actual drug use took place. The two maps couldn’t be more different:

https://rss.onlinelibrary.wiley.com/doi/full/10.1111/j.1740-9713.2016.00960.x

Predpol told cops to go and look for drug use in a predominantly Black, working class neighborhood. Meanwhile the NSDUH survey showed the actual drug use took place all over Oakland, with a higher concentration in the Berkeley-neighboring student neighborhood.

What’s even more vivid is what happens when you simulate running Predpol on the new arrest data that would be generated by cops following its recommendations. If the cops went to that Black neighborhood and found more drugs there and told Predpol about it, the recommendation gets stronger and more confident.

In other words, GIGOGBI is a system for concentrating bias. Even trace amounts of bias in the original training data get refined and magnified when they are output through a decision support system that directs humans to go and act on that output. Algorithms are to bias what centrifuges are to radioactive ore: a way to turn minute amounts of bias into pluripotent, indestructible toxic waste.
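The concentrating effect shows up even in a toy simulation. The Python sketch below is not Predpol's algorithm, and it is not the Lum and Isaac simulation either. It assumes two hypothetical neighborhoods, A and B, with identical real drug use, an arrest record that starts out slightly lopsided, and a rule that sends every patrol to whichever neighborhood has the most recorded arrests. Every name and number in it is invented for illustration.

```python
# Toy model of "garbage in, garbage out, garbage back in".
# Assumption: this is NOT Predpol's algorithm; the neighborhoods,
# counts and allocation rule are all hypothetical.

def run_feedback_loop(finds_per_round, recorded, rounds):
    """Return neighborhood A/B shares of recorded arrests after each round.

    finds_per_round: arrests a round of patrols produces in each
        neighborhood if it is sent there (same value = same real drug use).
    recorded: arrests already on file, i.e. the biased starting data.
    """
    recorded = dict(recorded)  # copy so the caller's data is untouched
    history = []
    for _ in range(rounds):
        # The "prediction": the hotspot is wherever arrests were recorded.
        hotspot = max(recorded, key=recorded.get)
        # Police only look in the hotspot, so only the hotspot's count grows.
        recorded[hotspot] += finds_per_round[hotspot]
        total = sum(recorded.values())
        history.append({name: count / total for name, count in recorded.items()})
    return history


finds_per_round = {"A": 10, "B": 10}   # identical underlying drug use
recorded = {"A": 55, "B": 45}          # small initial bias in the records

history = run_feedback_loop(finds_per_round, recorded, rounds=10)
for round_number, shares in enumerate(history, start=1):
    print(round_number, f"A={shares['A']:.3f}", f"B={shares['B']:.3f}")
```

Under these assumptions, A's share of recorded arrests climbs from 55% to 77.5% in ten rounds, even though the two neighborhoods are identical in everything except the starting records.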

There’s a great name for an AI that’s trained on an AI’s output, courtesy of Jathan Sadowski: “Habsburg AI.”

And that brings me back to the Dictator’s Dilemma. If your citizens are self-censoring in order to avoid retaliation or algorithmic shadowbanning, then the AI you train on their posts in order to find out what they’re really thinking will steer you in the opposite direction, so you make bad policies that make people angrier and destabilize things more.

Or at least, that was Farrell (et al)’s theory. And for many years, that’s where the debate over AI and dictatorship has stalled: theory vs theory. But now, there’s some empirical data on this, thanks to “The Digital Dictator’s Dilemma,” a new paper from UCSD PhD candidate Eddie Yang:

https://www.eddieyang.net/research/DDD.pdf

Yang figured out a way to test these dueling hypotheses. He got 10 million Chinese social media posts from the start of the pandemic, before companies like Weibo were required to censor certain pandemic-related posts as politically sensitive. Yang treats these posts as a robust snapshot of public opinion: because there was no censorship of pandemic-related chatter, Chinese users were free to post anything they wanted without having to self-censor for fear of retaliation or deletion.

Next, Yang acquired the censorship model used by a real Chinese social media company to decide which posts should be blocked. Using this, he was able to determine which of the posts in the original set would be censored today in China.

That means that Yang knows what the “real” sentiment in the Chinese social media snapshot is, and what Chinese authorities would believe it to be if Chinese users were self-censoring all the posts that would be flagged by censorware today.

From here, Yang was able to play with the knobs, and determine how “preference-falsification” (when users lie about their feelings) and self-censorship would give a dictatorship a misleading view of public sentiment. What he finds is that the more repressive a regime is — the more people are incentivized to falsify or censor their views — the worse the system gets at uncovering the true public mood.

What’s more, adding additional (bad) data to the system doesn’t fix this “missing data” problem. GIGO remains an iron law of computing in this context, too.
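A back-of-the-envelope model shows why more data from the same source can't help. The Python sketch below is not Yang's estimator. It is a hypothetical toy in which some fraction of discontented users stay silent (self-censorship) and some fraction post as if they were content (preference falsification). The function name, its parameters and the 40% figure are all assumptions made for illustration.

```python
# Toy model of what a regime "sees" under self-censorship.
# Assumption: this is NOT the model from Yang's paper; the function,
# parameters and numbers are hypothetical.

def observed_discontent(true_share, self_censor_rate, falsify_rate=0.0):
    """Expected share of visible posts that express discontent.

    true_share: fraction of users who are really discontented.
    self_censor_rate: fraction of discontented users who post nothing.
    falsify_rate: fraction of discontented users who post as if content.
    The two rates must sum to at most 1.
    """
    if self_censor_rate + falsify_rate > 1:
        raise ValueError("self_censor_rate + falsify_rate must be <= 1")
    silent = true_share * self_censor_rate
    falsified = true_share * falsify_rate
    visible_discontent = true_share - silent - falsified
    visible_content = (1 - true_share) + falsified
    return visible_discontent / (visible_discontent + visible_content)


true_share = 0.4  # hypothetical: 40% of users are really discontented
for rate in (0.0, 0.25, 0.5, 0.75, 0.9):
    seen = observed_discontent(true_share, rate)
    print(f"self-censorship {rate:.2f}: regime sees {seen:.3f}, "
          f"error {true_share - seen:.3f}")
```

In this toy, a regime whose discontented users self-censor half the time sees 25% discontent instead of 40%, and at a 90% self-censorship rate it sees about 6%. The formula has no term for the number of posts collected, so scraping more posts from the same censored stream reduces noise but leaves the gap exactly where it was.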

But it gets better (or worse, I guess): Yang models a “crisis” scenario in which users stop self-censoring and start articulating their true views (because they’ve run out of fucks to give). This is the most dangerous moment for a dictator, and depending on how the dictatorship handles it, they either get another decade of rule, or they wake up with guillotines on their lawns.

But “crisis” is where AI performs the worst. Trained on the “status quo” data where users are continuously self-censoring and preference-falsifying, AI has no clue how to handle the unvarnished truth. Both its recommendations about what to censor and its summaries of public sentiment are the least accurate when crisis erupts.

But here’s an interesting wrinkle: Yang scraped a bunch of Chinese users’ posts from Twitter — which the Chinese government doesn’t get to censor (yet) or spy on (yet) — and fed them to the model. He hypothesized that when Chinese users post to American social media, they don’t self-censor or preference-falsify, so this data should help the model improve its accuracy.

He was right — the model got significantly better once it ingested data from Twitter than when it was working solely from Weibo posts. And Yang notes that dictatorships all over the world are widely understood to be scraping western/northern social media.

But even though Twitter data improved the model’s accuracy, it was still wildly inaccurate, compared to the same model trained on a full set of un-self-censored, un-falsified data. GIGO is not an option, it’s the law (of computing).

Writing about the study on Crooked Timber, Farrell notes that as the world fills up with “garbage and noise” (he invokes Philip K Dick’s delighted coinage “gubbish”), “approximately correct knowledge becomes the scarce and valuable resource.”

https://crookedtimber.org/2023/07/25/51610/

This “probably approximately correct knowledge” comes from humans, not LLMs or AI, and so “the social applications of machine learning in non-authoritarian societies are just as parasitic on these forms of human knowledge production as authoritarian governments.”

The Clarion Science Fiction and Fantasy Writers’ Workshop summer fundraiser is almost over! I am an alum, instructor and volunteer board member for this nonprofit workshop whose alums include Octavia Butler, Kim Stanley Robinson, Bruce Sterling, Nalo Hopkinson, Kameron Hurley, Nnedi Okorafor, Lucius Shepard, and Ted Chiang! Your donations will help us subsidize tuition for students, making Clarion — and sf/f — more accessible for all kinds of writers.

Libro.fm is the indie-bookstore-friendly, DRM-free audiobook alternative to Audible, the Amazon-owned monopolist that locks every book you buy to Amazon forever. When you buy a book on Libro, they share some of the purchase price with a local indie bookstore of your choosing (Libro is the best partner I have in selling my own DRM-free audiobooks!). As of today, Libro is even better, because it’s available in five new territories and currencies: Canada, the UK, the EU, Australia and New Zealand!

[Image ID: An altered image of the Nuremberg rally, with ranked lines of soldiers facing a towering figure in a many-ribboned soldier's coat. He wears a high-peaked cap with a microchip in place of insignia. His head has been replaced with the menacing red eye of HAL9000 from Stanley Kubrick's '2001: A Space Odyssey.' The sky behind him is filled with a 'code waterfall' from 'The Matrix.']

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

—

Raimond Spekking (modified) https://commons.wikimedia.org/wiki/File:Acer_Extensa_5220_-_Columbia_MB_06236-1N_-_Intel_Celeron_M_530_-_SLA2G_-_in_Socket_479-5029.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

—

Russian Airborne Troops (modified) https://commons.wikimedia.org/wiki/File:Vladislav_Achalov_at_the_Airborne_Troops_Day_in_Moscow_%E2%80%93_August_2,_2008.jpg

“Soldiers of Russia” Cultural Center (modified) https://commons.wikimedia.org/wiki/File:Col._Leonid_Khabarov_in_an_everyday_service_uniform.JPG

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#habsburg ai#self censorship#henry farrell#digital dictatorships#machine learning#dictator's dilemma#eddie yang#preference falsification#political science#training bias#scholarship#spirals of delusion#algorithmic bias#ml#Fully automated data driven authoritarianism#authoritarianism#gigo#garbage in garbage out garbage back in#gigogbi#yuval noah harari#gubbish#pkd#philip k dick#phildickian

833 notes


Text

my personal atsv hobie brown hc is that this boy can build a watch that enables the wearer to travel to any dimension they want to, made entirely out of cobbled up parts he "finds"

but anytime anyone brings up AI or algorithms or social media he pretends to be 100 years old

hobie: what's a bloody "snapchat"? fuckin 'ell those effects are nightmarish, mate

miles, exasperated: hobie, you BUILD TECH that astrophysicists in my dimension can't even replicate. how are filters on a phone trippin you up?

hobie: dunno, everyone's got their strengths n weaknesses, i 'spose... 🙄😒

#hobie brown#i need to start tagging my shit appropriately man i need to organize my posts OUCH#my blog is lookin a damn mess#anyways yeah hope this resonates with someone out there#imo i dont think hobie doesnt GET ai and algorithms#im p sure he can rip apart and put together an entire computer AND code software on it to perfection#but its the principle of the thing!!#algorithms that decide what to show you based on previous activity…?#NOT hobie brown approved at all 👎#why have computers do the thinking for you when youre trying to find ENTERTAINMENT? doesnt make a lick of sense#and so here we are#he refuses to even deal with soc media#fuck that noise#the second he learned about ring cameras he hit the damn ceiling 💀#spiderverse#clown horn#mi writing

259 notes


Text

Mate, if you unironically say things like 'corn' instead of porn, 'spicy time' or 'smex' instead of sex, or anything like 'unalive' on here, you're probably too young or emotionally immature to be engaging in any sort of content or conversations around those topics.

#ooh “spicy take” time#yes i realize a lot of people learned them to avoid algorithm blacklisting#but this aint tiktok#i cannot tell you how much i hate when people call my garbage 'corn writing' or 'smex writing'#youre too young to be reading it dude#im not about to engage in arguments with people who unironically cant say the proper word for things but have 'big opinions' on them

111 notes


Text

overhearing my 10 year old cousin watch a video talking about the dangers of misinformation in things like chatgpt and how you should not just mistrust the information but also the intentions of people attempting to sell it to you…… the kids are alright ❤️

#icarus speaks#it sounds like a very good video#like genuinely#it’s going into how these language learning algorithms work#while also discussing how AI/said learning algorithms in themselves are not the devil#and can and likely will become very helpful to humanity in the future#but to be wary of them in commercial uses/for information

133 notes
