#Apache Spark Supported S3 File System


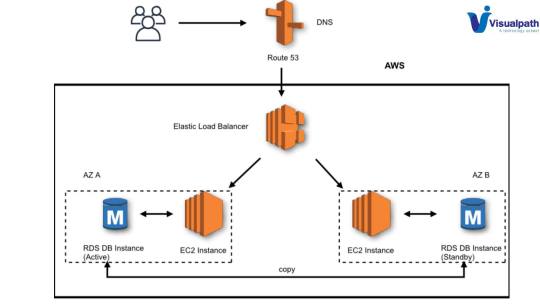

Creating a Scalable Amazon EMR Cluster on AWS in Minutes

Minutes to Scalable EMR Cluster on AWS

AWS EMR cluster

Amazon EMR lets you quickly set up a cluster to process and analyse data with Spark. This page covers three stages: Plan and Configure, Manage, and Clean Up.

This guide walks through cluster setup in detail:

Amazon EMR Cluster Configuration

In this tutorial, you launch an example cluster with Spark installed and run a PySpark script on it. You must complete the “Before you set up Amazon EMR” exercises before starting.

While it is running, the sample cluster incurs small per-second charges under Amazon EMR pricing, which varies by Region. To avoid further charges, complete the tutorial’s final cleanup steps.

The setup procedure has numerous steps:

Amazon EMR Cluster and Data Resources Configuration

This initial stage prepares your application and input data, creates your data storage location, and starts the cluster.

Setting Up Amazon EMR Storage:

Amazon EMR supports several file systems, but this article uses EMRFS to store data in an S3 bucket. EMRFS is an implementation of the Hadoop file system that reads and writes regular files to Amazon S3.

This tutorial requires a dedicated S3 bucket. Follow the Amazon Simple Storage Service Console User Guide to create one.

Create the bucket in the same AWS Region where you plan to launch your Amazon EMR cluster, for example US West (Oregon), us-west-2.

Bucket and folder names used with Amazon EMR are restricted. They may contain lowercase letters, numbers, periods (.), and hyphens (-), and they cannot end in a number. Bucket names must also be unique across all AWS accounts.

The bucket output folder must be empty.
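The naming rules above can be checked before you create anything. The following is a minimal sketch, assuming only the rules listed in this article; the function name `is_valid_emr_name` is hypothetical, and the check does not cover global uniqueness or the general S3 naming rules (such as length limits).

```python
import re

# Allowed characters per the rules above: lowercase letters, numbers,
# periods, and hyphens.
_ALLOWED = re.compile(r"[a-z0-9.-]+")


def is_valid_emr_name(name: str) -> bool:
    """Return True if `name` satisfies the naming rules listed above."""
    if not _ALLOWED.fullmatch(name):
        return False
    # Names cannot end in a number.
    return not name[-1].isdigit()


print(is_valid_emr_name("my-emr-tutorial-bucket"))  # prints True
print(is_valid_emr_name("bucket-2024"))             # prints False
```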

The small files you store in Amazon S3 may incur minimal charges, though they may be free if you are within the AWS Free Tier usage limits.

Prepare an Application with Input Data for Amazon EMR:

The standard way to prepare an application is to upload it and its input data to Amazon S3. When you submit work to the cluster, you specify the S3 locations of the script and the data.

The PySpark script processes food establishment inspection data from King County, Washington, covering 2006 to 2020, to identify the ten establishments with the most “Red” violations. Sample rows of the dataset are shown in the original tutorial.
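To make the aggregation concrete, here is a plain-Python sketch of the same logic (count “Red” violations per establishment, then keep the top ten). It is not the tutorial's PySpark script. The column names `name` and `violation_type` and the sample rows are assumptions made for illustration.

```python
import csv
import io
from collections import Counter


def top_red_violations(csv_text: str, limit: int = 10) -> list[tuple[str, int]]:
    """Count rows whose violation_type is RED, grouped by establishment name."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(
        row["name"]
        for row in reader
        if row["violation_type"].strip().upper() == "RED"
    )
    # most_common sorts by count, highest first, and truncates to `limit`.
    return counts.most_common(limit)


# Hypothetical sample rows, not taken from the real dataset.
SAMPLE = """name,violation_type
Cafe A,RED
Cafe A,BLUE
Cafe B,RED
Cafe A,RED
"""

print(top_red_violations(SAMPLE))  # prints [('Cafe A', 2), ('Cafe B', 1)]
```

On a cluster, the same filter, group, and sort would run in Spark so that the work is distributed across nodes.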

To prepare the PySpark script, create a new file called health_violations.py and copy the source code into it. Next, upload the file to your new S3 bucket. Uploading instructions are in the Amazon Simple Storage Service Getting Started Guide.

To prepare the example input data, download and unzip food_establishment_data.zip, save the CSV file to your computer as food_establishment_data.csv, then upload it to the same S3 bucket. Again, see the Amazon Simple Storage Service Getting Started Guide for uploading instructions.

“Prepare input data for processing with Amazon EMR” explains EMR data configuration.

Create an Amazon EMR Cluster:

After setting up storage and your application, you can launch the example cluster with Apache Spark and the latest Amazon EMR release. You can do this with either the AWS Management Console or the AWS CLI.

Console Launch:

Sign in to the AWS Management Console and open the Amazon EMR console.

Under “EMR on EC2”, choose “Clusters”, then “Create cluster”. Note the default values for “Release,” “Instance type,” “Number of instances,” and “Permissions”.

Enter a unique “Cluster name” that does not contain <, >, $, |, or `. Under “Applications”, select “Spark” to install Spark on the cluster. Note: you must choose applications before launching the cluster. Under “Cluster logs”, select the option to publish cluster-specific logs to Amazon S3. The default destination is s3://amzn-s3-demo-bucket/logs; replace it with your own S3 bucket. A new ‘logs’ subfolder is created in the bucket for the log files.

Under “Security configuration and permissions”, choose your EC2 key pair. In the same section, choose “EMR_DefaultRole” as the service role for Amazon EMR and “EMR_EC2_DefaultRole” as the IAM role for the instance profile.

Choose “Create cluster”.

The cluster information page appears. As Amazon EMR provisions the cluster, its “Status” changes from “Starting” to “Running” to “Waiting”. You may need to refresh the console view. When the status is “Waiting”, the cluster is ready to accept work.

To launch from the AWS CLI instead, first create the IAM default roles with the aws emr create-default-roles command.

Create a Spark cluster with aws emr create-cluster. Name your cluster with --name, specify your EC2 key pair with --ec2-attributes, and set --instance-type, --instance-count, and --use-default-roles. The sample command’s Linux line continuation characters (\) may need to be removed or replaced with a caret (^) on Windows.
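One way to avoid the line continuation differences between Linux and Windows is to assemble the command as an argument list in Python. The sketch below only builds and prints the command; the helper name `build_create_cluster_command` is hypothetical, and the cluster name, key pair name, release label, instance type, and instance count are placeholder assumptions to replace with your own values.

```python
import shlex


def build_create_cluster_command(
    cluster_name: str,
    key_pair_name: str,
    release_label: str,
    instance_type: str = "m5.xlarge",  # placeholder default
    instance_count: int = 3,           # placeholder default
) -> list[str]:
    """Build the argument list for `aws emr create-cluster` with Spark installed."""
    return [
        "aws", "emr", "create-cluster",
        "--name", cluster_name,
        "--release-label", release_label,
        "--applications", "Name=Spark",
        "--ec2-attributes", f"KeyName={key_pair_name}",
        "--instance-type", instance_type,
        "--instance-count", str(instance_count),
        "--use-default-roles",
    ]


# All values below are placeholders.
cmd = build_create_cluster_command(
    "My First EMR Cluster", "myEMRKeyPairName", "emr-6.15.0"
)
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it without any shell-specific quoting, provided the AWS CLI is installed and configured.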

Output will include ClusterId and ClusterArn. Remember your ClusterId for later.

Check your cluster status with aws emr describe-cluster --cluster-id <myClusterId>.

The result includes a Status object with a State field. As Amazon EMR provisions the cluster, the State changes from STARTING to RUNNING to WAITING. When the cluster is up, running, and ready to accept work, its State is WAITING.
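The State field can be read from the JSON that describe-cluster prints. This is a minimal sketch that parses such output; the helper names and the sample document are illustrative, and the sample contains only the fields the sketch needs.

```python
import json


def cluster_state(describe_cluster_output: str) -> str:
    """Extract Cluster.Status.State from `aws emr describe-cluster` JSON output."""
    doc = json.loads(describe_cluster_output)
    return doc["Cluster"]["Status"]["State"]


def is_ready(describe_cluster_output: str) -> bool:
    """A cluster is ready to accept work once its State is WAITING."""
    return cluster_state(describe_cluster_output) == "WAITING"


# Trimmed, hypothetical output; real output contains many more fields.
sample = '{"Cluster": {"Id": "myClusterId", "Status": {"State": "WAITING"}}}'
print(cluster_state(sample))  # prints WAITING
print(is_ready(sample))       # prints True
```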

Open SSH Connections

Before connecting to your running cluster via SSH, update your cluster security groups to authorise inbound SSH connections. Amazon EC2 security groups act as virtual firewalls. When the cluster started, EMR created default security groups: ElasticMapReduce-slave for the core and task nodes and ElasticMapReduce-master for the primary node.

Console-based SSH authorisation:

You need permission to manage the security groups for the VPC that the cluster is in.

Sign in to the AWS Management Console and open the Amazon EMR console.

Under “Clusters”, choose the cluster you want to update. Make sure the “Properties” tab is selected.

On the “Properties” tab, under “Networking”, expand “EC2 security groups (firewall)”. Under “Primary node”, select the security group link.

The EC2 console opens. Choose the “Inbound rules” tab, then “Edit inbound rules”.

Find and delete any inbound rule that allows public access (Type: SSH, Port: 22, Source: Custom 0.0.0.0/0). Warning: the ElasticMapReduce-master group may contain a pre-configured rule that allows public SSH access. Remove that rule and restrict traffic to trusted sources.

Scroll down and click “Add Rule”.

For “Type”, choose “SSH”. This sets Protocol to TCP and Port Range to 22.

For “Source”, select “My IP”, or select “Custom” and enter a range of trusted client IP addresses. If your IP address is dynamic, you may need to update the rule later. Select “Save.”

When you return to the EMR console, choose “Core and task nodes” and repeat these steps to provide SSH access to those nodes.
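The rule to look for in the steps above is an SSH rule whose source covers every address. As a rough sketch, the check can be expressed with Python's standard `ipaddress` module; the rule dictionaries and their `port` and `source` keys are a hypothetical simplification, not the format returned by any AWS API.

```python
import ipaddress


def is_public_source(cidr: str) -> bool:
    """True if the CIDR block covers every address (0.0.0.0/0 or ::/0)."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def risky_ssh_rules(rules: list[dict]) -> list[dict]:
    """Return the rules that open port 22 to all sources."""
    return [r for r in rules if r["port"] == 22 and is_public_source(r["source"])]


# Hypothetical rules; 203.0.113.7 is a documentation-only address.
rules = [
    {"port": 22, "source": "0.0.0.0/0"},
    {"port": 22, "source": "203.0.113.7/32"},
]
print(risky_ssh_rules(rules))  # prints [{'port': 22, 'source': '0.0.0.0/0'}]
```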

Connecting with AWS CLI:

SSH connections may be made using the AWS CLI on any operating system.

Use the command: aws emr ssh --cluster-id <myClusterId> --key-pair-file <~/mykeypair.key>. Replace <myClusterId> with your ClusterId and <~/mykeypair.key> with the full path to your key pair file.

After connecting, go to /mnt/var/log/spark to view the Spark logs on the primary node.

With the cluster set up and access configured, the next stage is submitting work to the cluster as steps.


How to Optimize ETL Pipelines for Performance and Scalability

As data continues to grow in volume, velocity, and variety, the importance of optimizing your ETL pipeline for performance and scalability cannot be overstated. An ETL (Extract, Transform, Load) pipeline is the backbone of any modern data architecture, responsible for moving and transforming raw data into valuable insights. However, without proper optimization, even a well-designed ETL pipeline can become a bottleneck, leading to slow processing, increased costs, and data inconsistencies.

Whether you're building your first pipeline or scaling existing workflows, this guide will walk you through the key strategies to improve the performance and scalability of your ETL pipeline.

1. Design with Modularity in Mind

The first step toward a scalable ETL pipeline is designing it with modular components. Break down your pipeline into independent stages — extraction, transformation, and loading — each responsible for a distinct task. Modular architecture allows for easier debugging, scaling individual components, and replacing specific stages without affecting the entire workflow.

For example:

Keep extraction scripts isolated from transformation logic

Use separate environments or containers for each stage

Implement well-defined interfaces for data flow between stages
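As an illustration of the points above, the sketch below keeps each stage in its own function and connects them through one simple interface (an iterable of dictionaries). The function names and the sample records are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterable[Record]]


def extract() -> Iterator[Record]:
    """Extraction only: yields raw records (hard-coded here for illustration)."""
    yield {"id": 1, "amount": "10.5"}
    yield {"id": 2, "amount": "3"}


def transform(records: Iterable[Record]) -> Iterator[Record]:
    """Transformation only: converts the amount field to a float."""
    for record in records:
        yield {**record, "amount": float(record["amount"])}


def load(records: Iterable[Record], sink: list) -> int:
    """Loading only: appends records to the sink and returns how many were loaded."""
    rows = list(records)
    sink.extend(rows)
    return len(rows)


def run_pipeline(source: Callable[[], Iterable[Record]], stages: list[Stage], sink: list) -> int:
    """Chain the stages; any stage can be replaced without touching the others."""
    data = source()
    for stage in stages:
        data = stage(data)
    return load(data, sink)


warehouse: list = []
print(run_pipeline(extract, [transform], warehouse))  # prints 2
```

Because every stage accepts and returns the same shape of data, a stage can be tested or swapped on its own.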

2. Use Incremental Loads Over Full Loads

One of the biggest performance drains in ETL processes is loading the entire dataset every time. Instead, use incremental loads — only extract and process new or updated records since the last run. This reduces data volume, speeds up processing, and decreases strain on source systems.

Techniques to implement incremental loads include:

Using timestamps or change data capture (CDC)

Maintaining checkpoints or watermark tables

Leveraging database triggers or logs for change tracking
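A timestamp watermark is perhaps the simplest of these techniques. The sketch below assumes each record carries an `updated_at` field (a hypothetical name) and that the watermark from the previous run is stored somewhere durable between runs.

```python
from datetime import datetime


def incremental_extract(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return rows updated after `watermark`, plus the watermark for the next run."""
    new_rows = [row for row in rows if row["updated_at"] > watermark]
    # If nothing changed, the watermark stays where it was.
    new_watermark = max((row["updated_at"] for row in new_rows), default=watermark)
    return new_rows, new_watermark


# Hypothetical source rows.
source_rows = [
    {"id": 1, "updated_at": datetime(2025, 1, 1)},
    {"id": 2, "updated_at": datetime(2025, 1, 3)},
    {"id": 3, "updated_at": datetime(2025, 1, 5)},
]

changed, next_watermark = incremental_extract(source_rows, datetime(2025, 1, 2))
print([row["id"] for row in changed])  # prints [2, 3]
print(next_watermark)                  # prints 2025-01-05 00:00:00
```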

3. Leverage Parallel Processing

Modern data tools and cloud platforms support parallel processing, where multiple operations are executed simultaneously. By breaking large datasets into smaller chunks and processing them in parallel threads or workers, you can significantly reduce ETL run times.

Best practices for parallelism:

Partition data by time, geography, or IDs

Use multiprocessing in Python or distributed systems like Apache Spark

Optimize resource allocation in cloud-based ETL services
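The partition-then-process pattern can be sketched with the standard library. This example partitions records by ID and uses a thread pool for brevity; the record fields and function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_by_id(records: list[dict], num_partitions: int) -> list[list[dict]]:
    """Assign each record to a partition based on its id."""
    partitions: list[list[dict]] = [[] for _ in range(num_partitions)]
    for record in records:
        partitions[record["id"] % num_partitions].append(record)
    return partitions


def process_partition(partition: list[dict]) -> int:
    """Stand-in for real work: sum the value field of one partition."""
    return sum(record["value"] for record in partition)


def process_in_parallel(records: list[dict], num_partitions: int = 4) -> int:
    """Process every partition in a worker thread and combine the results."""
    partitions = partition_by_id(records, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        return sum(pool.map(process_partition, partitions))


records = [{"id": i, "value": i} for i in range(100)]
print(process_in_parallel(records))  # prints 4950
```

Threads suit I/O-bound stages such as reading files or calling APIs. For CPU-bound transformations, `ProcessPoolExecutor` offers the same interface, and a distributed engine such as Spark applies the same idea across machines.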

4. Push Down Processing to the Source System

Whenever possible, push computation to the database or source system rather than pulling data into your ETL tool for processing. Databases are optimized for query execution and can filter, sort, and aggregate data more efficiently.

Examples include:

Using SQL queries for filtering data before extraction

Aggregating large datasets within the database

Using stored procedures to perform heavy transformations

This minimizes data movement and improves pipeline efficiency.
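The difference can be seen with an in-memory SQLite database standing in for the source system. The table and column names are hypothetical. Both functions return the same totals, but the second lets the database filter and aggregate so that only the summary rows leave it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("west", 10.0), ("west", 5.0), ("east", 7.0)],
)


def totals_in_python(conn: sqlite3.Connection, min_amount: float) -> dict:
    """Pull every row out of the database, then filter and aggregate in the ETL code."""
    totals: dict = {}
    for region, amount in conn.execute("SELECT region, amount FROM orders"):
        if amount >= min_amount:
            totals[region] = totals.get(region, 0.0) + amount
    return totals


def totals_pushed_down(conn: sqlite3.Connection, min_amount: float) -> dict:
    """Let the database filter and aggregate; only one row per region is returned."""
    query = "SELECT region, SUM(amount) FROM orders WHERE amount >= ? GROUP BY region"
    return dict(conn.execute(query, (min_amount,)))


print(totals_pushed_down(conn, 6.0) == totals_in_python(conn, 6.0))  # prints True
```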

5. Monitor, Log, and Profile Your ETL Pipeline

Optimization is not a one-time activity — it's an ongoing process. Use monitoring tools to track pipeline performance, identify bottlenecks, and collect error logs.

What to monitor:

Data throughput (rows/records per second)

CPU and memory usage

Job duration and frequency of failures

Time spent at each ETL stage

Popular tools include Apache Airflow for orchestration, Prometheus for metrics, and custom dashboards built on Grafana or Kibana.
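Before adopting a full monitoring stack, per-stage timing and throughput can be collected with a few lines of standard-library code. This is a minimal sketch; the `timed_stage` helper and the metrics dictionary are hypothetical, and in practice the numbers would be exported to a metrics system rather than kept in memory.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed_stage(name: str, metrics: dict):
    """Record the wall-clock duration of a pipeline stage, even if it fails."""
    start = time.perf_counter()
    try:
        yield
    finally:
        metrics[name] = time.perf_counter() - start


def throughput(rows: int, seconds: float) -> float:
    """Rows per second, guarding against a zero-length interval."""
    return rows / seconds if seconds > 0 else float("inf")


metrics: dict = {}
with timed_stage("transform", metrics):
    rows = [x * 2 for x in range(1000)]

print(f"transform took {metrics['transform']:.6f}s")
print(f"{throughput(len(rows), metrics['transform']):.0f} rows/s")
```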

6. Use Scalable Storage and Compute Resources

Cloud-native ETL tools like AWS Glue, Google Dataflow, and Azure Data Factory offer auto-scaling capabilities that adjust resources based on workload. Leveraging these platforms ensures you’re only using (and paying for) what you need.

Additionally:

Store intermediate files in cloud storage (e.g., Amazon S3)

Use distributed compute engines like Spark or Dask

Separate compute and storage to scale each independently

Conclusion

A fast, reliable, and scalable ETL pipeline is crucial to building robust data infrastructure in 2025 and beyond. By designing modular systems, embracing incremental and parallel processing, offloading tasks to the database, and continuously monitoring performance, data teams can optimize their pipelines for both current and future needs.

In the era of big data and real-time analytics, even small performance improvements in your ETL workflow can lead to major gains in efficiency and insight delivery. Start optimizing today to unlock the full potential of your data pipeline.


"Apache Spark: The Leading Big Data Platform with Fast, Flexible, Developer-Friendly Features Used by Major Tech Giants and Government Agencies Worldwide."

What is Apache Spark? The Big Data Platform that Crushed Hadoop

Apache Spark is a powerful data processing framework designed for large-scale SQL, batch processing, stream processing, and machine learning tasks. With its fast, flexible, and developer-friendly nature, Spark has become the leading platform in the world of big data. In this article, we will explore the key features and real-world applications of Apache Spark, as well as its significance in the digital age.

Apache Spark defined

Apache Spark is a data processing framework that can quickly perform processing tasks on very large data sets. It can distribute data processing tasks across multiple computers, either on its own or in conjunction with other distributed computing tools. This capability is crucial in the realm of big data and machine learning, where massive computing power is necessary to analyze and process vast amounts of data. Spark eases the programming burden of these tasks by offering an easy-to-use API that abstracts away much of the complexities of distributed computing and big data processing.

What is Spark in big data

In the context of big data, the term "big data" refers to the rapid growth of various types of data - structured data in database tables, unstructured data in business documents and emails, semi-structured data in system log files and web pages, and more. Unlike traditional analytics, which focused solely on structured data within data warehouses, modern analytics encompasses insights derived from diverse data sources and revolves around the concept of a data lake. Apache Spark was specifically designed to address the challenges posed by this new paradigm.

Originally developed at U.C. Berkeley in 2009, Apache Spark has become a prominent distributed processing framework for big data. Flexibility lies at the core of Spark's appeal, as it can be deployed in various ways and supports multiple programming languages such as Java, Scala, Python, and R. Furthermore, Spark provides extensive support for SQL, streaming data, machine learning, and graph processing. Its widespread adoption by major companies and organizations, including Apple, IBM, and Microsoft, highlights its significance in the big data landscape.

Spark RDD

Resilient Distributed Dataset (RDD) forms the foundation of Apache Spark. An RDD is an immutable collection of objects that can be split across a computing cluster. Spark performs operations on RDDs in a parallel batch process, enabling fast and scalable parallel processing. The RDD concept allows Spark to transform user's data processing commands into a Directed Acyclic Graph (DAG), which serves as the scheduling layer determining the tasks, nodes, and sequence of execution.

Apache Spark can create RDDs from various data sources, including text files, SQL databases, NoSQL stores like Cassandra and MongoDB, Amazon S3 buckets, and more. Moreover, Spark's core API provides built-in support for joining data sets, filtering, sampling, and aggregation, offering developers powerful data manipulation capabilities.

Spark SQL

Spark SQL has emerged as a vital component of the Apache Spark project, providing a high-level API for processing structured data. Spark SQL adopts a dataframe approach inspired by R and Python's Pandas library, making it accessible to both developers and analysts. Alongside standard SQL support, Spark SQL offers a wide range of data access methods, including JSON, HDFS, Apache Hive, JDBC, Apache ORC, and Apache Parquet. Additional data stores, such as Apache Cassandra and MongoDB, can be integrated using separate connectors from the Spark Packages ecosystem.

Spark SQL utilizes Catalyst, Spark's query optimizer, to optimize data locality and computation. Since Spark 2.x, Spark SQL's dataframe and dataset interfaces have become the recommended approach for development, promoting a more efficient and type-safe method for data processing. While the RDD interface remains available, it is typically used when lower-level control or specialized performance optimizations are required.

Spark MLlib and MLflow

Apache Spark includes libraries for machine learning and graph analysis at scale. MLlib offers a framework for building machine learning pipelines, facilitating the implementation of feature extraction, selection, and transformations on structured datasets. The library also features distributed implementations of clustering and classification algorithms, such as k-means clustering and random forests.

MLflow, although not an official part of Apache Spark, is an open-source platform for managing the machine learning lifecycle. The integration of MLflow with Apache Spark enables features such as experiment tracking, model registries, packaging, and user-defined functions (UDFs) for easy inference at scale.

Structured Streaming

Structured Streaming provides a high-level API for creating infinite streaming dataframes and datasets within Apache Spark. It supersedes the legacy Spark Streaming component, addressing pain points encountered by developers in event-time aggregations and late message delivery. With Structured Streaming, all queries go through Spark's Catalyst query optimizer and can be run interactively, allowing users to perform SQL queries against live streaming data. The API also supports watermarking, windowing techniques, and the ability to treat streams as tables and vice versa.

Delta Lake

Delta Lake is a separate project from Apache Spark but has become essential in the Spark ecosystem. Delta Lake augments data lakes with features such as ACID transactions, unified querying semantics for batch and stream processing, schema enforcement, full data audit history, and scalability for exabytes of data. Its adoption has contributed to the rise of the Lakehouse Architecture, eliminating the need for a separate data warehouse for business intelligence purposes.

Pandas API on Spark

The Pandas library is widely used for data manipulation and analysis in Python. Apache Spark 3.2 introduced a new API that allows a significant portion of the Pandas API to be used transparently with Spark. This compatibility enables data scientists to leverage Spark's distributed execution capabilities while benefiting from the familiar Pandas interface. Approximately 80% of the Pandas API is currently covered, with ongoing efforts to increase coverage in future releases.

Running Apache Spark

An Apache Spark application consists of two main components: a driver and executors. The driver converts the user's code into tasks that can be distributed across worker nodes, while the executors run these tasks on the worker nodes. A cluster manager mediates communication between the driver and executors. Apache Spark can run in a stand-alone cluster mode, but is more commonly used with resource or cluster management systems such as Hadoop YARN or Kubernetes. Managed solutions for Apache Spark are also available on major cloud providers, including Amazon EMR, Azure HDInsight, and Google Cloud Dataproc.

Databricks Lakehouse Platform

Databricks, the company founded by the creators of Apache Spark, offers a managed cloud service that provides Apache Spark clusters, streaming support, integrated notebook development, and optimized I/O performance. The Databricks Lakehouse Platform, available on multiple cloud providers, has become the de facto way many users interact with Apache Spark.

Apache Spark Tutorials

If you're interested in learning Apache Spark, we recommend starting with the Databricks learning portal, which offers a comprehensive introduction to Apache Spark (with a slight bias towards the Databricks Platform). For a more in-depth exploration of Apache Spark's features, the Spark Workshop is a great resource. Additionally, books such as "Spark: The Definitive Guide" and "High-Performance Spark" provide detailed insights into Apache Spark's capabilities and best practices for data processing at scale.

Conclusion

Apache Spark has revolutionized the way large-scale data processing and analytics are performed. With its fast and developer-friendly nature, Spark has surpassed its predecessor, Hadoop, and become the leading big data platform. Its extensive features, including Spark SQL, MLlib, Structured Streaming, and Delta Lake, make it a powerful tool for processing complex data sets and building machine learning models. Whether deployed in a stand-alone cluster or as part of a managed cloud service like Databricks, Apache Spark offers unparalleled scalability and performance. As companies increasingly rely on big data for decision-making, mastering Apache Spark is essential for businesses seeking to leverage their data assets effectively.



Important libraries for data science and Machine learning.

Python has more than 137,000 libraries, which help in various ways. In the data age, data is like oil or electricity. In the coming days, companies will require more skilled data scientists, machine learning engineers, and deep learning engineers to gain insights by processing massive data sets.

Python libraries for different data science tasks:

Python Libraries for Data Collection

Beautiful Soup

Scrapy

Selenium

Python Libraries for Data Cleaning and Manipulation

Pandas

PyOD

NumPy

Spacy

Python Libraries for Data Visualization

Matplotlib

Seaborn

Bokeh

Python Libraries for Modeling

Scikit-learn

TensorFlow

PyTorch

Python Libraries for Model Interpretability

Lime

H2O

Python Libraries for Audio Processing

Librosa

Madmom

pyAudioAnalysis

Python Libraries for Image Processing

OpenCV-Python

Scikit-image

Pillow

Python Libraries for Database

Psycopg

SQLAlchemy

Python Libraries for Deployment

Flask

Django

Best Frameworks for Machine Learning:

1. TensorFlow:

If you work in or are interested in machine learning, you have probably heard of the famous open source library TensorFlow. It was developed at Google by the Google Brain team. Almost all of Google’s applications use TensorFlow for machine learning. If you use Google Photos or Google voice search, you are indirectly using models built with TensorFlow.

TensorFlow is a computational framework for expressing algorithms that involve a large number of tensor operations. Since neural networks can be expressed as computational graphs, they can be implemented in TensorFlow as a series of operations on tensors. Tensors are N-dimensional arrays that represent our data.

2. Keras:

Keras is one of the coolest machine learning libraries. If you are a beginner in machine learning, I suggest you use Keras. It provides an easier way to express neural networks. It also provides utilities for processing datasets, compiling models, evaluating results, visualizing graphs, and more.

Keras internally uses either TensorFlow or Theano as its backend. Some other popular neural network frameworks, such as CNTK, can also be used. If you are using TensorFlow as the backend, you can refer to the TensorFlow architecture diagram shown in the TensorFlow section of this article. Keras is slow compared to other libraries because it constructs a computational graph using the backend infrastructure and then uses it to perform operations. Keras models are portable (HDF5 models), and Keras provides many preprocessed datasets and pretrained models such as Inception, SqueezeNet, MNIST, VGG, and ResNet.

3. Theano:

Theano is a computational framework for computing with multidimensional arrays. Theano is similar to TensorFlow, but it is not as efficient as TensorFlow because it is poorly suited to production environments. Theano can be used in parallel or distributed environments, just like TensorFlow.

4. Apache Spark:

Spark is an open source cluster-computing framework originally developed at a Berkeley lab and initially released on 26 May 2014. It is written mainly in Scala, Java, Python, and R. Although it was created in a lab at the University of California, Berkeley, it was later donated to the Apache Software Foundation.

Spark Core is the foundation of the project. It is complicated too, but instead of worrying about NumPy arrays, it lets you work with its own Spark RDD data structures, which anyone familiar with big data will know how to use. As a user, you can also work with Spark SQL data frames. With these features, it creates dense and sparse feature-label vectors for you, taking away much of the complexity of feeding data to ML algorithms.

5. Caffe:

Caffe is an open source framework under a BSD license. Caffe (Convolutional Architecture for Fast Feature Embedding) is a deep learning tool developed at UC Berkeley and written mainly in C++. It supports many different types of deep learning architectures, focusing mainly on image classification and segmentation. It supports almost all major schemes as well as fully connected neural network designs, and like TensorFlow it offers both GPU-based and CPU-based acceleration.

Caffe is mainly used in academic research projects and for building startup prototypes. Even Yahoo has integrated Caffe with Apache Spark to create CaffeOnSpark, another great deep learning framework.

6. Torch:

Torch is also an open source machine learning library and a proper scientific computing framework. Its makers describe it as the easiest ML framework; its relative simplicity comes from its scripting interface, which is based on the Lua programming language. Lua has a single number type (no separate int, short, or double), which simplifies many operations and functions. Torch is used by the Facebook AI Research Group, IBM, Yandex, and the Idiap Research Institute, and it has recently been extended to Android and iOS.

7. Scikit-learn:

Scikit-learn is a very powerful, free Python library for ML that is widely used for building models. It is built on several other libraries, namely SciPy, NumPy, and matplotlib, and it is one of the most efficient tools for statistical modeling techniques such as classification, regression, and clustering.

Scikit-Learn comes with features like supervised & unsupervised learning algorithms and even cross-validation. Scikit-learn is largely written in Python, with some core algorithms written in Cython to achieve performance. Support vector machines are implemented by a Cython wrapper around LIBSVM.

Below is a list of frameworks for machine learning engineers:

Apache Singa is a general distributed deep learning platform for training big deep learning models over large datasets. It is designed with an intuitive programming model based on the layer abstraction. A variety of popular deep learning models are supported, namely feed-forward models including convolutional neural networks (CNN), energy models like restricted Boltzmann machine (RBM), and recurrent neural networks (RNN). Many built-in layers are provided for users.

Amazon Machine Learning is a service that makes it easy for developers of all skill levels to use machine learning technology. Amazon Machine Learning provides visualization tools and wizards that guide you through the process of creating machine learning (ML) models without having to learn complex ML algorithms and technology. It connects to data stored in Amazon S3, Redshift, or RDS, and can run binary classification, multiclass categorization, or regression on said data to create a model.

Azure ML Studio allows Microsoft Azure users to create and train models, then turn them into APIs that can be consumed by other services. Users get up to 10GB of storage per account for model data, although you can also connect your own Azure storage to the service for larger models. A wide range of algorithms are available, courtesy of both Microsoft and third parties. You don’t even need an account to try out the service; you can log in anonymously and use Azure ML Studio for up to eight hours.

Caffe is a deep learning framework made with expression, speed, and modularity in mind. It is developed by the Berkeley Vision and Learning Center (BVLC) and by community contributors. Yangqing Jia created the project during his PhD at UC Berkeley. Caffe is released under the BSD 2-Clause license. Models and optimization are defined by configuration without hard-coding & user can switch between CPU and GPU. Speed makes Caffe perfect for research experiments and industry deployment. Caffe can process over 60M images per day with a single NVIDIA K40 GPU.

H2O makes it possible for anyone to easily apply math and predictive analytics to solve today’s most challenging business problems. It intelligently combines unique features not currently found in other machine learning platforms including: Best of Breed Open Source Technology, Easy-to-use WebUI and Familiar Interfaces, Data Agnostic Support for all Common Database and File Types. With H2O, you can work with your existing languages and tools. Further, you can extend the platform seamlessly into your Hadoop environments.

Massive Online Analysis (MOA) is the most popular open source framework for data stream mining, with a very active growing community. It includes a collection of machine learning algorithms (classification, regression, clustering, outlier detection, concept drift detection and recommender systems) and tools for evaluation. Related to the WEKA project, MOA is also written in Java, while scaling to more demanding problems.

MLlib (Spark) is Apache Spark’s machine learning library. Its goal is to make practical machine learning scalable and easy. It consists of common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, dimensionality reduction, as well as lower-level optimization primitives and higher-level pipeline APIs.

mlpack is a C++-based machine learning library originally rolled out in 2011 and designed for “scalability, speed, and ease-of-use,” according to the library’s creators. mlpack can be used through a cache of command-line executables for quick-and-dirty, “black box” operations, or through a C++ API for more sophisticated work. It provides its algorithms as simple command-line programs and C++ classes, which can then be integrated into larger-scale machine learning solutions.

Pattern is a web mining module for the Python programming language. It has tools for data mining (Google, Twitter and Wikipedia API, a web crawler, a HTML DOM parser), natural language processing (part-of-speech taggers, n-gram search, sentiment analysis, WordNet), machine learning (vector space model, clustering, SVM), network analysis and visualization.

Scikit-Learn leverages Python’s breadth by building on top of several existing Python packages — NumPy, SciPy, and matplotlib — for math and science work. The resulting libraries can be used either for interactive “workbench” applications or be embedded into other software and reused. The kit is available under a BSD license, so it’s fully open and reusable. Scikit-learn includes tools for many of the standard machine-learning tasks (such as clustering, classification, regression, etc.). And since scikit-learn is developed by a large community of developers and machine-learning experts, promising new techniques tend to be included in fairly short order.

Shogun is among the oldest and most venerable machine learning libraries. It was created in 1999 and written in C++, but it isn’t limited to working in C++. Thanks to the SWIG library, Shogun can be used transparently in languages and environments such as Java, Python, C#, Ruby, R, Lua, Octave, and Matlab. Shogun is designed for unified large-scale learning for a broad range of feature types and learning settings, like classification, regression, or explorative data analysis.

TensorFlow is an open source software library for numerical computation using data flow graphs. TensorFlow implements what are called data flow graphs, where batches of data (“tensors”) can be processed by a series of algorithms described by a graph. The movements of the data through the system are called “flows” — hence, the name. Graphs can be assembled with C++ or Python and can be processed on CPUs or GPUs.

Theano is a Python library that lets you define, optimize, and evaluate mathematical expressions, especially ones with multi-dimensional arrays (numpy.ndarray). Using Theano it is possible to attain speeds rivaling hand-crafted C implementations for problems involving large amounts of data. It was written at the LISA lab to support rapid development of efficient machine learning algorithms. Theano is named after the Greek mathematician, who may have been Pythagoras’ wife. Theano is released under a BSD license.

Torch is a scientific computing framework with wide support for machine learning algorithms that puts GPUs first. It is easy to use and efficient, thanks to an easy and fast scripting language, LuaJIT, and an underlying C/CUDA implementation. The goal of Torch is to have maximum flexibility and speed in building your scientific algorithms while making the process extremely simple. Torch comes with a large ecosystem of community-driven packages in machine learning, computer vision, signal processing, parallel processing, image, video, audio and networking among others, and builds on top of the Lua community.

Veles is a distributed platform for deep-learning applications. It is written in C++, although it uses Python to perform automation and coordination between nodes. Datasets can be analyzed and automatically normalized before being fed to the cluster, and a REST API allows the trained model to be used in production immediately. It focuses on performance and flexibility. It has few hard-coded entities and enables training of all the widely recognized topologies, such as fully connected nets, convolutional nets, and recurrent nets.


Text

The Most Popular Big Data Frameworks in 2023

Big data is the vast amount of information produced by digital devices, social media platforms, and various other internet-based sources that are part of our daily lives. With the latest techniques and technology, big data can be used to find subtle patterns, trends, and connections that help improve processes, support better decisions, and predict future outcomes, ultimately improving the quality of life of people, companies, and society as a whole.

As more and more data is generated and analyzed, it is becoming increasingly hard for researchers and companies to get insights from their data quickly. Big Data frameworks are therefore becoming ever more crucial. In this piece, we’ll examine the most well-known big data frameworks (Apache Storm, Apache Spark, Presto, and others), which are increasingly sought after for Big Data analytics.

What are Big Data Frameworks?

Big data frameworks are sets of tools that make it simpler to handle large amounts of information. A big data framework is designed to process extensive data efficiently, quickly, and safely. Most big data frameworks are open source, which means they’re available for free, with the option of obtaining paid support if you require it.

Big Data is about collecting, processing, and analyzing data sets that range from petabytes to exabytes in size. It concerns the volume, the velocity, and the variety of data. Big Data is about the capability to analyze and process data at speeds and in ways that were previously impossible.

Hadoop

Apache Hadoop is an open-source big data framework that can store and process huge quantities of data. It is written in Java and is best suited to batch processing; stream processing and real-time analytics are usually handled by other tools in its ecosystem.

Apache Hadoop is home to several programs that let you work with huge amounts of data on a single computer or across multiple networked machines, in such a way that the programs do not need to know they are distributed over multiple computers.

One of the major strengths of Hadoop is its ability to manage huge volumes of information. Based on a distributed computing model, Hadoop breaks large data sets into smaller pieces that are processed in parallel across a set of nodes. This approach provides a high level of fault tolerance and faster processing, making it a strong choice for managing Big Data workloads.

Spark

Apache Spark can be described as a powerful, general-purpose engine for processing large amounts of data. It has high-level APIs in Java, Scala, Python, and R (a statistically oriented programming language), so developers at many levels can use it. Spark is commonly used in production environments to process data from several sources, such as HDFS (Hadoop Distributed File System) and other file storage systems, the Cassandra database, the Amazon S3 storage service (which provides web services for storing data over the Internet), and external web services such as Google’s Datastore.

The main benefit of Spark is its capacity to process information at high speed, which is made possible by in-memory processing. Keeping data in memory significantly cuts down on disk I/O, making Spark well suited to extensive data analyses. Furthermore, Spark offers considerable flexibility: its integrated libraries support a wide range of data processing operations, such as streaming, batch processing, and graph processing.

Hive

Apache Hive is an open-source big data framework that lets users query and manage large data sets. It is built on Hadoop and lets users write SQL-like queries in HiveQL. Apache Hive is part of the Hadoop ecosystem, so you need an installation of Apache Hadoop before installing Hive.

Apache Hive’s advantage is that it manages petabytes of data effectively by using the Hadoop Distributed File System (HDFS) to store data and Apache Tez or MapReduce to process it.

Elasticsearch

Elasticsearch is an open-source, distributed, document-oriented search and analytics engine that is often used as a big data framework. It is used for search, real-time analytics (commonly visualized with Kibana), log storage and analysis (commonly fed by Logstash), centralized server log aggregation (for example with Logstash and Winlogbeat), and data indexing.

Elasticsearch can be used to analyze large amounts of data because it is highly scalable and resilient and has an open architecture that allows a cluster to span multiple nodes on different servers, including cloud servers. It has an HTTP interface with JSON support, which allows easy integration with other apps through common APIs such as RESTful calls and Java client libraries.

MongoDB

MongoDB is a NoSQL database. It holds data in JSON-like documents, so there’s no requirement to define schemas before creating your app. MongoDB is free to download for on-premises use and is also available as a cloud-based service (MongoDB Atlas).

MongoDB as a big data framework can serve numerous purposes, from logging to analytics and from ETL to machine learning (ML). The database can hold millions of documents without significant performance problems, thanks to its horizontal scaling mechanism and efficient memory management. It also suits software developers who want to concentrate on building their apps rather than on designing data models and tuning the underlying systems. MongoDB offers high availability through replica sets, a cluster model in which multiple nodes replicate their data automatically and fail over automatically when a node fails.

MapReduce

MapReduce is a big data framework that processes large data sets across a cluster of machines. It was built to be fault-tolerant and to spread the workload across those machines.

MapReduce is batch-oriented. It processes a massive, bounded data set in a single job and produces its results when the job completes, rather than responding to individual records as they arrive.

MapReduce’s main strength is its capacity to divide massive data processing tasks over several nodes, which allows it to run tasks in parallel and dramatically improves efficiency.
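The map, shuffle, and reduce phases can be sketched in a few lines of plain Python. This is a single-process illustration of the programming model only, not Hadoop's implementation: the function names and the sample text are made up for this example, and on a real cluster each chunk would be mapped on a different node.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all emitted values by key so each key is reduced once."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(chunks: List[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over the input chunks."""
    mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Each string stands in for a block of input stored on a different node.
chunks = ["big data big clusters", "data moves between clusters"]
counts = word_count(chunks)
print(counts)
```

Because `map_phase` looks at one chunk at a time and `reduce_phase` looks at one key at a time, both phases can be spread across machines without the functions knowing about each other.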

Samza

Samza is a big data framework for stream processing. It uses Apache Kafka as the underlying message bus and data store, and it runs on YARN. Samza is developed under the Apache Software Foundation, which means that it is freely available to download, use, modify, and distribute in accordance with the Apache License version 2.0.

As an example of how this works in practice, a user who wants to handle a stream of messages writes the application in a supported language such as Java. The application runs in containers on one or more worker nodes that are part of the Samza cluster. Together with similar pipelines, these containers form a pipeline that processes the messages arriving from Kafka topics. Each message is received by the worker responsible for processing it, and the result is then sent back to Kafka, to another location in the system, or out of the system. Workers can be added if needed to accommodate growing demand.

Flink

Flink is another big data framework for processing data streams. It is also a hybrid big-data processor: Flink can perform real-time analysis, ETL, batch processing, or real-time processing.

Flink’s architecture is designed for stream processing and interactive queries over large data sets. Flink supports both event-time and processing-time semantics for data streams, which allows it to handle real-time analytics and historical analysis on the same cluster using the same API. Flink is especially well suited to applications that require real-time data processing, such as financial transactions, anomaly detection, and event-driven applications in IoT ecosystems. Additionally, its machine-learning and graph processing capabilities make Flink a flexible option for data-driven decision-making in various sectors.

Heron

Heron is another big data framework for distributed stream processing, used to process real-time data. It can be used to build low-latency applications such as microservices and applications for IoT devices. Heron itself is written partly in C++. It offers a high-level programming model for writing stream-processing applications that are distributed across Apache YARN, Apache Mesos, or Kubernetes, and it integrates with Kafka or Flume for the communication layer.

Heron’s greatest strength is its fault tolerance and performance for large-scale data processing. It was developed to overcome the weaknesses of its predecessor, Apache Storm, by introducing an entirely new scheduling model and a backpressure mechanism. This allows Heron to sustain high performance and low latency, which makes it well suited to companies working with huge data collections.
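Backpressure means that a fast producer is slowed down when a downstream stage cannot keep up, instead of letting unprocessed messages pile up without limit. The sketch below shows the general idea with a bounded queue from the Python standard library. It is an illustration of the concept only and makes no claim about how Heron implements it; `run_pipeline` and `buffer_size` are hypothetical names.

```python
import queue
import threading
from typing import List


def run_pipeline(items: List[int], buffer_size: int = 2) -> List[int]:
    """Pass items from a producer to a consumer through a bounded buffer."""
    buffer: "queue.Queue[object]" = queue.Queue(maxsize=buffer_size)
    processed: List[int] = []
    sentinel = object()  # marks the end of the stream

    def producer() -> None:
        for item in items:
            # put() blocks while the buffer is full, so the producer is
            # forced to wait for the consumer: this is the backpressure.
            buffer.put(item)
        buffer.put(sentinel)

    def consumer() -> None:
        while True:
            item = buffer.get()
            if item is sentinel:
                break
            processed.append(item * item)  # stand-in for real processing

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return processed


squares = run_pipeline(list(range(10)))
print(squares)
```

With a single producer and a single consumer, the output order matches the input order, and the buffer never holds more than `buffer_size` items at a time.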

Kudu

Kudu is a columnar data storage engine designed for analytical workloads. Kudu is a relative newcomer, yet it is already winning over data scientists and developers thanks to its capacity to combine the best features of relational databases and NoSQL databases in one system.

Kudu is also a big data framework that combines the advantages of relational databases (strict ACID compliance) with those of NoSQL databases (scalability and speed). It brings several other benefits as well. It has native support for streaming analytics, which means you can use your SQL skills to analyze streaming data in real time. It also supports JSON data storage and uses columnar storage to improve query performance by keeping related data values together.

Conclusion

Big Data is an emerging field that combines very large data sets with architectures built on fast parallel processors, storage hardware and software, APIs, and open-source software stacks. It’s a thrilling moment to become an expert in data science. Not only are more tools available than ever within the Big Data ecosystem, they are also becoming more powerful, more user-friendly, and more affordable to run. That means companies can gain more value from their data without having to spend as much on infrastructure.



Text

Airflow Clickhouse


Airflow Clickhouse Example

airflow-clickhouse-plugin 0.6.0 (Mar 13, 2021): Airflow plugin to execute ClickHouse commands and queries.

Baluchon 0.0.1 (Dec 19, 2020): A tool for managing migrations in Clickhouse.

Domination 1.2 (Sep 21, 2020): Real-time application in order to dominate Humans.

Intelecy-pandahouse 0.3.2 (Aug 25, 2020): Pandas interface for.

I investigate how fast ClickHouse 18.16.1 can query 1.1 billion taxi journeys on a 3-node, 108-core AWS EC2 cluster.

Convert CSVs to ORC Faster: I compare the ORC file construction times of Spark 2.4.0, Hive 2.3.4 and Presto 0.214.

The world's first data engineering coding bootcamp in Berlin. Learn sustainable data craftsmanship beyond the AI-hype. Join our school and learn how to build and maintain infrastructure that powers data products, data analytics tools, data science models, business intelligence and machine learning s.

Airflow Clickhouse Connection

| Package Name | Access | Summary | Updated |
| --- | --- | --- | --- |
| jupyterlab | public | An extensible environment for interactive and reproducible computing, based on the Jupyter Notebook and Architecture. | 2021-04-22 |
| httpcore | public | The next generation HTTP client. | 2021-04-22 |
| jsondiff | public | Diff JSON and JSON-like structures in Python | 2021-04-22 |
| jupyter_kernel_gateway | public | Jupyter Kernel Gateway | 2021-04-22 |
| reportlab | public | Open-source engine for creating complex, data-driven PDF documents and custom vector graphics | 2021-04-21 |
| pytest-asyncio | public | Pytest support for asyncio | 2021-04-21 |
| enaml | public | Declarative DSL for building rich user interfaces in Python | 2021-04-21 |
| oniguruma | public | A regular expression library. | 2021-04-21 |
| cfn-lint | public | CloudFormation Linter | 2021-04-21 |
| aws-c-common | public | Core c99 package for AWS SDK for C. Includes cross-platform primitives, configuration, data structures, and error handling. | 2021-04-21 |
| nginx | public | Nginx is an HTTP and reverse proxy server | 2021-04-21 |
| libgcrypt | public | a general purpose cryptographic library originally based on code from GnuPG. | 2021-04-21 |
| google-auth | public | Google authentication library for Python | 2021-04-21 |
| sqlalchemy-utils | public | Various utility functions for SQLAlchemy | 2021-04-21 |
| flask-apscheduler | public | Flask-APScheduler is a Flask extension which adds support for the APScheduler | 2021-04-21 |
| datadog | public | The Datadog Python library | 2021-04-21 |
| cattrs | public | Complex custom class converters for attrs. | 2021-04-21 |
| argcomplete | public | Bash tab completion for argparse | 2021-04-21 |
| luarocks | public | LuaRocks is the package manager for Lua modules | 2021-04-21 |
| srsly | public | Modern high-performance serialization utilities for Python | 2021-04-19 |
| pytest-benchmark | public | A py.test fixture for benchmarking code | 2021-04-19 |
| fastavro | public | Fast read/write of AVRO files | 2021-04-19 |
| catalogue | public | Super lightweight function registries for your library | 2021-04-19 |
| zarr | public | An implementation of chunked, compressed, N-dimensional arrays for Python. | 2021-04-19 |
| python-engineio | public | Engine.IO server | 2021-04-19 |
| nuitka | public | Python compiler with full language support and CPython compatibility | 2021-04-19 |
| hypothesis | public | A library for property based testing | 2021-04-19 |
| flask-admin | public | Simple and extensible admin interface framework for Flask | 2021-04-19 |
| hyperframe | public | Pure-Python HTTP/2 framing | 2021-04-19 |
| python | public | General purpose programming language | 2021-04-17 |
| python-regr-testsuite | public | General purpose programming language | 2021-04-17 |
| pyamg | public | Algebraic Multigrid Solvers in Python | 2021-04-17 |
| luigi | public | Workflow mgmgt + task scheduling + dependency resolution. | 2021-04-17 |
| libpython-static | public | General purpose programming language | 2021-04-17 |
| dropbox | public | Official Dropbox API Client | 2021-04-17 |
| s3fs | public | Convenient Filesystem interface over S3 | 2021-04-17 |
| furl | public | URL manipulation made simple. | 2021-04-17 |
| sympy | public | Python library for symbolic mathematics | 2021-04-15 |
| spyder | public | The Scientific Python Development Environment | 2021-04-15 |
| sqlalchemy | public | Database Abstraction Library. | 2021-04-15 |
| rtree | public | R-Tree spatial index for Python GIS | 2021-04-15 |
| pandas | public | High-performance, easy-to-use data structures and data analysis tools. | 2021-04-15 |
| poetry | public | Python dependency management and packaging made easy | 2021-04-15 |
| freetds | public | FreeTDS is a free implementation of Sybase's DB-Library, CT-Library, and ODBC libraries | 2021-04-15 |
| ninja | public | A small build system with a focus on speed | 2021-04-15 |
| cython | public | The Cython compiler for writing C extensions for the Python language | 2021-04-15 |
| conda-package-handling | public | Create and extract conda packages of various formats | 2021-04-15 |
| conda | public | OS-agnostic, system-level binary package and environment manager. | 2021-04-15 |
| colorlog | public | Log formatting with colors! | 2021-04-15 |
| bitarray | public | efficient arrays of booleans -- C extension | 2021-04-15 |

Reverse Dependencies of apache-airflow

The following projects have a declared dependency on apache-airflow:

acryl-datahub — A CLI to work with DataHub metadata

AGLOW — AGLOW: Automated Grid-enabled LOFAR Workflows

aiflow — AI Flow, an extend operators library for airflow, which helps AI engineer to write less, reuse more, integrate easily.

aircan — no summary

airflow-add-ons — Airflow extensible opertators and sensors

airflow-aws-cost-explorer — Apache Airflow Operator exporting AWS Cost Explorer data to local file or S3

airflow-bigquerylogger — BigQuery logger handler for Airflow

airflow-bio-utils — Airflow utilities for biological sequences

airflow-cdk — Custom cdk constructs for apache airflow

airflow-clickhouse-plugin — airflow-clickhouse-plugin - Airflow plugin to execute ClickHouse commands and queries

airflow-code-editor — Apache Airflow code editor and file manager

airflow-cyberark-secrets-backend — An Airflow custom secrets backend for CyberArk CCP

airflow-dbt — Apache Airflow integration for dbt

airflow-declarative — Airflow DAGs done declaratively

airflow-diagrams — Auto-generated Diagrams from Airflow DAGs.

airflow-ditto — An airflow DAG transformation framework

airflow-django — A kit for using Django features, like its ORM, in Airflow DAGs.

airflow-docker — An opinionated implementation of exclusively using airflow DockerOperators for all Operators

airflow-dvc — DVC operator for Airflow

airflow-ecr-plugin — Airflow ECR plugin

airflow-exporter — Airflow plugin to export dag and task based metrics to Prometheus.

airflow-extended-metrics — Package to expand Airflow for custom metrics.

airflow-fs — Composable filesystem hooks and operators for Airflow.

airflow-gitlab-webhook — Apache Airflow Gitlab Webhook integration

airflow-hdinsight — HDInsight provider for Airflow

airflow-imaging-plugins — Airflow plugins to support Neuroimaging tasks.

airflow-indexima — Indexima Airflow integration

airflow-notebook — Jupyter Notebook operator for Apache Airflow.

airflow-plugin-config-storage — Inject connections into the airflow database from configuration

airflow-plugin-glue-presto-apas — An Airflow Plugin to Add a Partition As Select(APAS) on Presto that uses Glue Data Catalog as a Hive metastore.

airflow-prometheus — Modern Prometheus exporter for Airflow (based on robinhood/airflow-prometheus-exporter)

airflow-prometheus-exporter — Prometheus Exporter for Airflow Metrics

airflow-provider-fivetran — A Fivetran provider for Apache Airflow

airflow-provider-great-expectations — An Apache Airflow provider for Great Expectations

airflow-provider-hightouch — Hightouch Provider for Airflow

airflow-queue-stats — An airflow plugin for viewing queue statistics.

airflow-spark-k8s — Airflow integration for Spark On K8s

airflow-spell — Apache Airflow integration for spell.run

airflow-tm1 — A package to simplify connecting to the TM1 REST API from Apache Airflow

airflow-util-dv — no summary

airflow-waterdrop-plugin — A FastAPI Middleware of Apollo(Config Server By CtripCorp) to get server config in every request.

airflow-windmill — Drag'N'Drop Web Frontend for Building and Managing Airflow DAGs

airflowdaggenerator — Dynamically generates and validates Python Airflow DAG file based on a Jinja2 Template and a YAML configuration file to encourage code re-usability

airkupofrod — Takes a deployment in your kubernetes cluster and turns its pod template into a KubernetesPodOperator object.

airtunnel — airtunnel – tame your Airflow!

apache-airflow-backport-providers-amazon — Backport provider package apache-airflow-backport-providers-amazon for Apache Airflow

apache-airflow-backport-providers-apache-beam — Backport provider package apache-airflow-backport-providers-apache-beam for Apache Airflow

apache-airflow-backport-providers-apache-cassandra — Backport provider package apache-airflow-backport-providers-apache-cassandra for Apache Airflow

apache-airflow-backport-providers-apache-druid — Backport provider package apache-airflow-backport-providers-apache-druid for Apache Airflow

apache-airflow-backport-providers-apache-hdfs — Backport provider package apache-airflow-backport-providers-apache-hdfs for Apache Airflow

apache-airflow-backport-providers-apache-hive — Backport provider package apache-airflow-backport-providers-apache-hive for Apache Airflow

apache-airflow-backport-providers-apache-kylin — Backport provider package apache-airflow-backport-providers-apache-kylin for Apache Airflow

apache-airflow-backport-providers-apache-livy — Backport provider package apache-airflow-backport-providers-apache-livy for Apache Airflow

apache-airflow-backport-providers-apache-pig — Backport provider package apache-airflow-backport-providers-apache-pig for Apache Airflow

apache-airflow-backport-providers-apache-pinot — Backport provider package apache-airflow-backport-providers-apache-pinot for Apache Airflow

apache-airflow-backport-providers-apache-spark — Backport provider package apache-airflow-backport-providers-apache-spark for Apache Airflow

apache-airflow-backport-providers-apache-sqoop — Backport provider package apache-airflow-backport-providers-apache-sqoop for Apache Airflow

apache-airflow-backport-providers-celery — Backport provider package apache-airflow-backport-providers-celery for Apache Airflow

apache-airflow-backport-providers-cloudant — Backport provider package apache-airflow-backport-providers-cloudant for Apache Airflow

apache-airflow-backport-providers-cncf-kubernetes — Backport provider package apache-airflow-backport-providers-cncf-kubernetes for Apache Airflow

apache-airflow-backport-providers-databricks — Backport provider package apache-airflow-backport-providers-databricks for Apache Airflow

apache-airflow-backport-providers-datadog — Backport provider package apache-airflow-backport-providers-datadog for Apache Airflow

apache-airflow-backport-providers-dingding — Backport provider package apache-airflow-backport-providers-dingding for Apache Airflow

apache-airflow-backport-providers-discord — Backport provider package apache-airflow-backport-providers-discord for Apache Airflow

apache-airflow-backport-providers-docker — Backport provider package apache-airflow-backport-providers-docker for Apache Airflow

apache-airflow-backport-providers-elasticsearch — Backport provider package apache-airflow-backport-providers-elasticsearch for Apache Airflow

apache-airflow-backport-providers-email — Back-ported airflow.providers.email.* package for Airflow 1.10.*

apache-airflow-backport-providers-exasol — Backport provider package apache-airflow-backport-providers-exasol for Apache Airflow

apache-airflow-backport-providers-facebook — Backport provider package apache-airflow-backport-providers-facebook for Apache Airflow

apache-airflow-backport-providers-google — Backport provider package apache-airflow-backport-providers-google for Apache Airflow

apache-airflow-backport-providers-grpc — Backport provider package apache-airflow-backport-providers-grpc for Apache Airflow

apache-airflow-backport-providers-hashicorp — Backport provider package apache-airflow-backport-providers-hashicorp for Apache Airflow

apache-airflow-backport-providers-jdbc — Backport provider package apache-airflow-backport-providers-jdbc for Apache Airflow

apache-airflow-backport-providers-jenkins — Backport provider package apache-airflow-backport-providers-jenkins for Apache Airflow

apache-airflow-backport-providers-jira — Backport provider package apache-airflow-backport-providers-jira for Apache Airflow

apache-airflow-backport-providers-microsoft-azure — Backport provider package apache-airflow-backport-providers-microsoft-azure for Apache Airflow

apache-airflow-backport-providers-microsoft-mssql — Backport provider package apache-airflow-backport-providers-microsoft-mssql for Apache Airflow

apache-airflow-backport-providers-microsoft-winrm — Backport provider package apache-airflow-backport-providers-microsoft-winrm for Apache Airflow

apache-airflow-backport-providers-mongo — Backport provider package apache-airflow-backport-providers-mongo for Apache Airflow

apache-airflow-backport-providers-mysql — Backport provider package apache-airflow-backport-providers-mysql for Apache Airflow

apache-airflow-backport-providers-neo4j — Backport provider package apache-airflow-backport-providers-neo4j for Apache Airflow

apache-airflow-backport-providers-odbc — Backport provider package apache-airflow-backport-providers-odbc for Apache Airflow

apache-airflow-backport-providers-openfaas — Backport provider package apache-airflow-backport-providers-openfaas for Apache Airflow

apache-airflow-backport-providers-opsgenie — Backport provider package apache-airflow-backport-providers-opsgenie for Apache Airflow

apache-airflow-backport-providers-oracle — Backport provider package apache-airflow-backport-providers-oracle for Apache Airflow

apache-airflow-backport-providers-pagerduty — Backport provider package apache-airflow-backport-providers-pagerduty for Apache Airflow

apache-airflow-backport-providers-papermill — Backport provider package apache-airflow-backport-providers-papermill for Apache Airflow

apache-airflow-backport-providers-plexus — Backport provider package apache-airflow-backport-providers-plexus for Apache Airflow

apache-airflow-backport-providers-postgres — Backport provider package apache-airflow-backport-providers-postgres for Apache Airflow

apache-airflow-backport-providers-presto — Backport provider package apache-airflow-backport-providers-presto for Apache Airflow

apache-airflow-backport-providers-qubole — Backport provider package apache-airflow-backport-providers-qubole for Apache Airflow

apache-airflow-backport-providers-redis — Backport provider package apache-airflow-backport-providers-redis for Apache Airflow

apache-airflow-backport-providers-salesforce — Backport provider package apache-airflow-backport-providers-salesforce for Apache Airflow

apache-airflow-backport-providers-samba — Backport provider package apache-airflow-backport-providers-samba for Apache Airflow

apache-airflow-backport-providers-segment — Backport provider package apache-airflow-backport-providers-segment for Apache Airflow

apache-airflow-backport-providers-sendgrid — Backport provider package apache-airflow-backport-providers-sendgrid for Apache Airflow

apache-airflow-backport-providers-sftp — Backport provider package apache-airflow-backport-providers-sftp for Apache Airflow

apache-airflow-backport-providers-singularity — Backport provider package apache-airflow-backport-providers-singularity for Apache Airflow

apache-airflow-backport-providers-slack — Backport provider package apache-airflow-backport-providers-slack for Apache Airflow

apache-airflow-backport-providers-snowflake — Backport provider package apache-airflow-backport-providers-snowflake for Apache Airflow


Text

What are the benefits of Amazon EMR? Drawbacks of AWS EMR

Benefits of Amazon EMR

Amazon EMR has many benefits. These include the flexibility that AWS offers and cost savings compared with building your own on-premises resources.

Cost-saving

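Amazon EMR pricing depends on the instance type, the number of Amazon EC2 instances you deploy, and the Region in which you launch your cluster. On-Demand pricing offers low rates, but you can reduce costs further by purchasing Reserved Instances or using Spot Instances. Spot Instance prices can be as low as a tenth of On-Demand pricing in some cases.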
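A rough cost comparison can be sketched as simple arithmetic. The function below is an illustrative estimate only: the hourly rate, the Spot price ratio, and the instance counts are hypothetical numbers chosen for the example, not real AWS prices, and the calculation leaves out storage, data transfer, and any other service charges.

```python
def cluster_cost(
    instance_count: int,
    hours: float,
    hourly_rate_usd: float,
    spot_fraction: float = 0.0,
    spot_price_ratio: float = 1.0,
) -> float:
    """Estimate compute cost for a cluster that mixes On-Demand and Spot capacity.

    spot_fraction    -- share of instances run as Spot Instances (0.0 to 1.0)
    spot_price_ratio -- assumed Spot price as a fraction of the On-Demand price
    """
    if not 0.0 <= spot_fraction <= 1.0:
        raise ValueError("spot_fraction must be between 0 and 1")
    on_demand_share = 1.0 - spot_fraction
    blended_ratio = on_demand_share + spot_fraction * spot_price_ratio
    return instance_count * hours * hourly_rate_usd * blended_ratio


# Hypothetical cluster: 10 instances for 5 hours at 0.25 USD per instance-hour.
all_on_demand = cluster_cost(10, 5, 0.25)
half_spot = cluster_cost(10, 5, 0.25, spot_fraction=0.5, spot_price_ratio=0.5)

print(all_on_demand)  # 12.5
print(half_spot)      # 9.375
```

Actual Spot prices vary over time and by instance type and Region, so `spot_price_ratio` should be treated as an input to look up rather than a constant.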

Note

If you use Amazon S3, Amazon Kinesis, or DynamoDB with your EMR cluster, charges for those services are billed separately from your Amazon EMR usage.

Note

Set up Amazon S3 VPC endpoints when you create an Amazon EMR cluster in a private subnet. If your EMR cluster is in a private subnet without Amazon S3 VPC endpoints, you will incur additional NAT gateway charges for S3 traffic.

AWS integration

Amazon EMR integrates with other AWS services to provide networking, storage, security, and other capabilities for your cluster. The following list gives several examples of this integration:

Amazon EC2 for the instances that make up the cluster nodes

Amazon VPC to configure the virtual network in which you launch your instances

Amazon S3 to store input and output data

Amazon CloudWatch to monitor cluster performance and configure alarms

AWS IAM to configure permissions

AWS CloudTrail to audit requests made to the service

AWS Data Pipeline to schedule and start your clusters

AWS Lake Formation to discover, catalogue, and secure data in an Amazon S3 data lake

Deployment

Your EMR cluster consists of EC2 instances, which perform the work that you submit to the cluster. When you launch your cluster, Amazon EMR configures the instances with the applications that you choose, such as Apache Hadoop or Spark. Choose the instance size and type that best suits your cluster's processing needs: batch processing, low-latency queries, streaming data, or large data storage.

Amazon EMR offers several ways to configure software on your cluster. For example, you can install an Amazon EMR release that includes versatile frameworks such as Hadoop together with applications such as Hive, Pig, or Spark. Installing a MapR distribution is another option. Because Amazon EMR runs on Amazon Linux, you can also install software on your cluster manually, using yum or building from source code.

Flexibility and scalability

Amazon EMR lets you scale your cluster as your computing needs vary. Resizing your cluster lets you add instances during peak workloads and remove them to cut costs.

Amazon EMR supports multiple instance groups. This lets you use On-Demand Instances in one group for guaranteed processing power and Spot Instances in another group to complete jobs faster and at lower cost. You can also mix different instance types to take advantage of a better price for one Spot Instance type over another.

Amazon EMR lets you use several file systems for input, output, and intermediate data. For example, you might choose HDFS, which runs on the primary and core nodes of your cluster, for data that you do not need to keep beyond the lifecycle of the cluster.

You might choose the EMR File System (EMRFS) to use Amazon S3 as a data layer for applications running on your cluster. This separates compute from storage and lets data persist beyond the lifecycle of your cluster. With EMRFS you can scale compute and storage independently: resize the cluster to meet processing needs, and rely on Amazon S3 to absorb growing storage needs.

Reliability

Amazon EMR monitors cluster nodes and shuts down and replaces instances as needed.

Amazon EMR lets you configure automated or manual cluster termination. Automatic cluster termination occurs after all procedures are complete. Transitory cluster. After processing, you can set up the cluster to continue running so you can manually stop it. You can also construct a cluster, use the installed apps, and manually terminate it. These clusters are “long-running clusters.”

Termination protection prevents the instances in your cluster from being terminated because of processing errors. With termination protection enabled, you can retrieve data from instances before they are terminated. The default settings for these options differ depending on whether you launch your cluster from the console, the CLI, or the API.
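The choice between automatic and manual termination, together with termination protection, maps onto two boolean fields of the `Instances` argument to boto3's `run_job_flow`: `KeepJobFlowAliveWhenNoSteps` and `TerminationProtected`. The minimal sketch below only builds that fragment; the function name is a hypothetical helper and nothing is sent to AWS.

```python
def termination_settings(auto_terminate, protected=False):
    """Return the termination-related fields of the Instances argument.

    Hypothetical helper for illustration.
    auto_terminate=True  -> the cluster shuts down once all steps finish.
    auto_terminate=False -> the cluster keeps running until stopped manually.
    """
    return {
        # False means the cluster is terminated when no steps remain.
        "KeepJobFlowAliveWhenNoSteps": not auto_terminate,
        # True guards the instances against accidental or error-driven termination.
        "TerminationProtected": protected,
    }


batch_cluster = termination_settings(auto_terminate=True)
interactive_cluster = termination_settings(auto_terminate=False, protected=True)
```

Setting these fields explicitly avoids depending on defaults that differ between the console, CLI, and API.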

Security

Amazon EMR uses Amazon EC2 key pairs, IAM, and VPC to safeguard data and clusters.

IAM

Amazon EMR uses IAM for permissions. IAM policies define the permissions of individual users and groups, determining which resources they can access and which actions they can perform.

The Amazon EMR service itself uses an IAM role, and the cluster's instances use an EC2 instance profile. These roles grant the service and the instances permission to access other AWS services on your behalf. Default roles exist for both the Amazon EMR service and the EC2 instance profile. By default, the roles use AWS managed policies that are created automatically when you launch an EMR cluster from the console and select default permissions. You can also create the default IAM roles with the AWS CLI. If you prefer to manage permissions yourself rather than rely on the AWS defaults, you can create custom roles for the service and the instance profile.

Security groups

Amazon EMR uses security groups to control inbound and outbound traffic to your EC2 instances. When you launch your cluster, Amazon EMR uses one security group for the primary instance and another that is shared by the core/task instances. Amazon EMR configures the security group rules to ensure that the instances in the cluster can communicate. You can add extra security groups to your primary and core/task instances for more advanced rules.

Encryption

Amazon EMR supports optional server-side and client-side encryption through EMRFS to protect data stored in Amazon S3. With server-side encryption, Amazon S3 encrypts your data after you upload it.

With client-side encryption, encryption and decryption take place in the EMRFS client on your EMR cluster. You can manage the root keys for client-side encryption with AWS KMS or with your own key management system.
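To make the server-side and client-side options concrete, here is a minimal sketch that produces the JSON body of an EMR security configuration for S3 encryption. The field names follow the security configuration format as documented for EMR and should be checked against the current documentation before use. The helper name and the key ARN are hypothetical placeholders, and custom key providers are left out.

```python
import json


def s3_encryption_config(mode, kms_key_arn=None):
    """Build a security configuration JSON string for EMRFS S3 encryption.

    Hypothetical helper. mode is one of:
      "SSE-S3"  server-side encryption with S3-managed keys
      "SSE-KMS" server-side encryption with a KMS key
      "CSE-KMS" client-side encryption in the EMRFS client with a KMS key
    """
    allowed = {"SSE-S3", "SSE-KMS", "CSE-KMS"}
    if mode not in allowed:
        raise ValueError(f"unsupported mode: {mode!r}")

    s3_conf = {"EncryptionMode": mode}
    if mode.endswith("KMS"):
        if not kms_key_arn:
            raise ValueError(f"{mode} requires a KMS key ARN")
        s3_conf["AwsKmsKey"] = kms_key_arn

    config = {
        "EncryptionConfiguration": {
            "EnableInTransitEncryption": False,
            "EnableAtRestEncryption": True,
            "AtRestEncryptionConfiguration": {
                "S3EncryptionConfiguration": s3_conf,
            },
        }
    }
    return json.dumps(config, indent=2)


# Placeholder ARN for illustration only.
example = s3_encryption_config(
    "CSE-KMS", "arn:aws:kms:us-west-2:111122223333:key/EXAMPLE"
)
```

The resulting string is the kind of document a security configuration is created from; the cluster then references that configuration by name at launch.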

Amazon VPC

Amazon EMR launches clusters in Amazon VPCs. A VPC is an isolated virtual network in AWS that lets you control advanced network settings and access rules.

AWS CloudTrail

Amazon EMR integrates with CloudTrail to log requests made by or on behalf of your AWS account. This data shows who accesses your cluster, when, and from what IP address.

Amazon EC2 key pairs

You can monitor and interact with your cluster over a secure connection between your remote computer and the primary node. SSH or Kerberos can authenticate this connection. SSH requires an Amazon EC2 key pair.

Monitoring

Use log files and the Amazon EMR management interfaces to troubleshoot cluster issues such as errors or failures. Amazon EMR can archive log files in Amazon S3 so that you can keep records and troubleshoot problems after your cluster terminates. The Amazon EMR console also has a debugging tool for browsing log files by step, job, and task.

Amazon EMR integrates with CloudWatch to monitor cluster and job performance. You can set alarms on metrics such as whether the cluster is idle or the percentage of storage in use.
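An idle-cluster alarm can be sketched as the set of keyword arguments that boto3's CloudWatch `put_metric_alarm` call expects. The sketch assumes the `IsIdle` metric in the `AWS/ElasticMapReduce` namespace with a `JobFlowId` dimension; the helper name, alarm name, five-minute period, and cluster ID are illustrative assumptions, and no request is sent.

```python
def idle_alarm_params(cluster_id, idle_minutes=30):
    """Return keyword arguments for an alarm that fires when a cluster stays idle.

    Hypothetical helper: the alarm name and 5-minute period are assumptions.
    """
    period_seconds = 300  # evaluate the metric in 5-minute windows
    periods = max(1, idle_minutes // (period_seconds // 60))
    return {
        "AlarmName": f"emr-{cluster_id}-idle",
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "IsIdle",  # 1 when no work is running, otherwise 0
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": period_seconds,
        "EvaluationPeriods": periods,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }


# "j-EXAMPLE" is a placeholder cluster ID.
params = idle_alarm_params("j-EXAMPLE", idle_minutes=30)
```

With a CloudWatch client in hand, these arguments would be unpacked into the call, typically with an alarm action added to notify someone or trigger cleanup.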

Management interfaces

There are numerous Amazon EMR access methods:

The console provides a graphical interface for launching and managing clusters. You describe the clusters to launch by filling in web forms, and you can then view their details, debug them, and terminate them. The console is the easiest way to start using Amazon EMR and requires no programming.

The AWS Command Line Interface (AWS CLI) is a client application that you install on your computer to connect to Amazon EMR and manage clusters. The AWS CLI covers many AWS services and includes Amazon EMR-specific commands. You can use it in scripts to automate launching and managing clusters. Use the AWS CLI if you prefer working from the command line.

SDKs provide functions that call Amazon EMR to create and manage clusters. With them you can write applications that automate cluster creation and management. An SDK is the best choice for extending or customising Amazon EMR. Amazon EMR SDKs are available for Go, Java, .NET (C# and VB.NET), Node.js, PHP, Python, and Ruby.

The Web Service API is a low-level interface that lets you call the web service directly using JSON. The API is the best choice for building a custom SDK that calls Amazon EMR.

Complexity:

EMR cluster setup and maintenance are more involved than with AWS Glue and require framework knowledge.

Learning curve

Setting up and optimising EMR clusters may require adjusting settings and parameters.

Possible Performance Issues:

Unsuitable instance types or under-provisioned clusters can slow task execution and degrade overall performance.

Depends on AWS:

Because it is deeply integrated with AWS infrastructure, EMR is less portable than on-premises solutions, despite the flexibility of the cloud.

#AmazonEMR#AmazonEC2#AmazonS3#AmazonVirtualPrivateCloud#EMRFS#AmazonEMRservice#Technology#technews#NEWS#technologynews#govindhtech


What is Hadoop big data?

Apache Hadoop is an open source framework that is used to efficiently store and process large datasets ranging in size from gigabytes to petabytes of data. Instead of using one large computer to store and process the data, Hadoop allows clustering multiple computers to analyze massive datasets in parallel more quickly.

Hadoop consists of four main modules:

Hadoop Distributed File System (HDFS) — A distributed file system that runs on standard or low-end hardware. HDFS provides better data throughput than traditional file systems, in addition to high fault tolerance and native support of large datasets.

Yet Another Resource Negotiator (YARN) — Manages and monitors cluster nodes and resource usage. It schedules jobs and tasks.

MapReduce — A framework that helps programs do the parallel computation on data. The map task takes input data and converts it into a dataset that can be computed in key value pairs. The output of the map task is consumed by reduce tasks to aggregate output and provide the desired result.

Hadoop Common — Provides common Java libraries that can be used across all modules.

How Hadoop Works

Hadoop makes it easier to use all the storage and processing capacity in cluster servers, and to execute distributed processes against huge amounts of data. Hadoop provides the building blocks on which other services and applications can be built.

Applications that collect data in various formats can place data into the Hadoop cluster by using an API operation to connect to the NameNode. The NameNode tracks the file directory structure and placement of “chunks” for each file, replicated across DataNodes. To run a job to query the data, provide a MapReduce job made up of many map and reduce tasks that run against the data in HDFS spread across the DataNodes. Map tasks run on each node against the input files supplied, and reducers run to aggregate and organize the final output.
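The map and reduce flow described above can be illustrated with a single-process word count in plain Python. This is a toy simulation of the programming model, not the Hadoop API: the function names are made up for illustration, and a real job would distribute the map and reduce tasks across DataNodes.

```python
from collections import defaultdict


def map_task(line):
    """Map phase: turn one input line into (word, 1) key-value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_task(key, values):
    """Reduce phase: aggregate all values emitted for one key."""
    return key, sum(values)


def run_job(lines):
    """Run map over every line, shuffle, then reduce each key."""
    pairs = [pair for line in lines for pair in map_task(line)]
    return dict(reduce_task(k, v) for k, v in shuffle(pairs).items())


counts = run_job(["big data", "Big clusters"])
```

Here `counts` maps each lowercased word to its number of occurrences. The same three stages apply when the input is split across many machines; only the shuffle then moves data over the network.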

The Hadoop ecosystem has grown significantly over the years due to its extensibility. Today, the Hadoop ecosystem includes many tools and applications to help collect, store, process, analyze, and manage big data. Some of the most popular applications are:

Spark — An open source, distributed processing system commonly used for big data workloads. Apache Spark uses in-memory caching and optimized execution for fast performance, and it supports general batch processing, streaming analytics, machine learning, graph databases, and ad hoc queries.

Presto — An open source, distributed SQL query engine optimized for low-latency, ad-hoc analysis of data. It supports the ANSI SQL standard, including complex queries, aggregations, joins, and window functions. Presto can process data from multiple data sources including the Hadoop Distributed File System (HDFS) and Amazon S3.

Hive — Allows users to leverage Hadoop MapReduce using a SQL interface, enabling analytics at a massive scale, in addition to distributed and fault-tolerant data warehousing.

HBase — An open source, non-relational, versioned database that runs on top of Amazon S3 (using EMRFS) or the Hadoop Distributed File System (HDFS). HBase is a massively scalable, distributed big data store built for random, strictly consistent, real-time access for tables with billions of rows and millions of columns.

Zeppelin — An interactive notebook that enables interactive data exploration.


Best Machine Learning Software and Tools To Learn in 2019

Data scientists need efficient and effective machine learning software, tools, or frameworks to build systems that learn from training data, overcome their shortcomings, and make a machine or device intelligent. Only well-designed software can produce a capable machine. Today, machines can be built so that they need no instructions about their surroundings: they perceive the environment and act on their own, without guidance. Let us look at the top 10 machine learning software packages and tools.

Top 10 best machine learning software and tools-

1. Apache Mahout-

Apache Mahout is a distributed linear algebra framework and a mathematically expressive Scala DSL. It is a free and open source project of the Apache Software Foundation. The framework aims to let data scientists, mathematicians, and statisticians implement their own algorithms quickly.

Features-

This framework is used to build scalable algorithms.

It implements machine learning techniques such as clustering, recommendation, classification, and collaborative filtering.

It includes matrix and vector libraries.

It runs on top of Apache Hadoop using the MapReduce paradigm.

2. Shogun-

Shogun is an open source machine learning library written in C++. It provides data structures and algorithms for machine learning problems and supports many languages, including Python, R, Octave, Java, C#, Ruby, and Lua. Shogun makes it easy to combine multiple data representations, algorithm classes, and general-purpose tools for rapid prototyping of data pipelines.

Features-

This tool can be used for large-scale learning.

It focuses mainly on kernel machines, such as support vector machines, for classification and regression problems.

It allows linking to other machine learning libraries such as LibSVM, LibLinear, SVMLight, and LibOCAS.

It can process large amounts of data, such as 10 million samples.

It provides interfaces for Python, Lua, Octave, Java, C#, Ruby, MATLAB, and R.

3. Amazon Machine Learning-

Amazon Machine Learning is a robust, cloud-based machine learning service that developers of all skill levels can use. This managed service is used to build machine learning models and generate predictions. It integrates data from multiple sources: Amazon S3, Amazon Redshift, or Amazon RDS.

Features-

Amazon Machine Learning provides visualization tools and wizards.

AML supports binary classification, multi-class classification, and regression.

It also allows users to create a data source object from a MySQL database.

It permits users to create a data source object from data stored in Amazon Redshift.

4. Google Cloud ML Engine-

Cloud Machine Learning Engine is a managed service that allows developers and data scientists to build and run superior machine learning models in production. Cloud ML Engine offers training and prediction services, which can be used together or individually. Enterprises have used it to solve problems ranging from identifying clouds in satellite images and ensuring food safety to responding four times faster to customer emails.

Features-

It provides ML model building, training, predictive modeling, and deep learning.

Cloud ML Engine has deep integration with Google's managed notebook service and data services for machine learning.

Training and Online Prediction support multiple frameworks to train and serve classification, regression, clustering, and dimensionality reduction models.

The two services namely training and prediction can be used jointly or independently.

Enterprises use this software for tasks such as detecting clouds in satellite images and responding faster to customer emails.

It can be used to train a complex model.

5. Accord.NET-

Accord.NET is a .NET machine learning framework, written in C#, combined with audio and image processing libraries. The framework consists of multiple libraries for a wide range of applications, such as statistical data processing, pattern recognition, and linear algebra. It includes Accord.Math, Accord.Statistics, and Accord.MachineLearning.

Features-

This framework is used for developing production-grade computer vision, computer audition, signal processing, and statistics applications.

It includes parametric and non-parametric estimation of more than 40 statistical distributions.

It also contains more than 35 hypothesis tests, including one-way and two-way ANOVA tests and non-parametric tests such as the Kolmogorov-Smirnov test.

It has more than 38 kernel functions.

6. Apache Spark MLlib-

Apache Spark MLlib is a machine learning library. It runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud, and it can access data from multiple data sources. Its algorithms include logistic regression, naive Bayes, generalized linear regression, and K-means, among many others. Its workflow utilities include feature transformations, ML Pipeline construction, and ML persistence.

Features–

It is easy to use.

Apache Spark MLlib can be used from Java, Scala, Python, and R.

MLlib fits into Spark’s APIs and interoperates with NumPy in Python and with R libraries.