#AutoRegressive

Explore tagged Tumblr posts

Text

The Prevalence of Malnutrition in Egypt and Its Relationship to the Most Important Social and Economic Factors - Using the Autoregressive Distributed Lag Model

Author: حنان محمود عجبو, Assistant Professor, Cairo University, Faculty of Economics and Political Science. Abstract: The United Nations Sustainable Development Goals gave…

View On WordPress

#Autoregressive Distributed Lags Model (ARDL)#Bound Test Approach#food security#Malnutrition#Undernourishment#Unrestricted Error Correction Model (UECM)#نقص التغذية#نموذج تصحيح الخطأ غير المقيد#نموذجالانحدار الذاتي للفجواتالزمنيةالموزعة#الأمن الغذائى#سوءالتغذية#طريقة اختبار الحدود.

0 notes

Text

eroticize standing waves

eroticize signal processing

eroticize adversarial input

eroticize autoregressive text generation

42 notes

Text

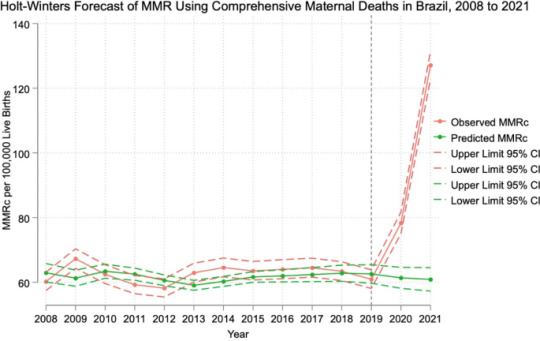

Time series analysis of comprehensive maternal deaths in Brazil during the COVID-19 pandemic

The effects of the COVID-19 pandemic on comprehensive maternal deaths in Brazil have not been fully explored. Using publicly available data from the Brazilian Mortality Information (SIM) and Information System on Live Births (SINASC) databases, we used two complementary forecasting models to predict estimates of maternal mortality ratios using maternal deaths (MMR) and comprehensive maternal deaths (MMRc) in the years 2020 and 2021 based on data from 2008 to 2019. We calculated national and regional standardized mortality ratio estimates for maternal deaths (SMR) and comprehensive maternal deaths (SMRc) for 2020 and 2021. The observed MMRc in 2021 was more than double the predicted MMRc based on the Holt-Winters and autoregressive integrated moving average models (127.12 versus 60.89 and 59.12 per 100,000 live births, respectively). We found persisting sub-national variation in comprehensive maternal mortality: SMRc ranged from 1.74 (95% confidence interval [CI] 1.64, 1.86) in the Northeast to 2.70 (95% CI 2.45, 2.96) in the South in 2021. The observed national estimates for comprehensive maternal deaths in 2021 were the highest in Brazil in the past three decades. Increased resources for prenatal care, maternal health, and postpartum care may be needed to reverse the national trend in comprehensive maternal deaths.

Read the paper.

#brazil#science#coronavirus#covid 19#feminism#politics#brazilian politics#image description in alt#mod nise da silveira

2 notes

Text

Get to Know the ARIMA – SARIMA Autoregressive Integrated Moving Average Method

The ARIMA – SARIMA (Autoregressive Integrated Moving Average) method is an analysis method used in time series research, alongside techniques ranging from moving averages to naïve forecasts. Using this method, you can analyze time series data with a well-fitting model. What are the processing steps? Read on: https://gamastatistika.com/2021/07/29/ketahui-metode-arima-sarima-autoregressive-integrated-moving-average/

5 notes

Text

Transfer Learning in NLP: Impact of BERT, GPT-3, and T5 on NLP Tasks

Transfer learning has revolutionized the field of Natural Language Processing (NLP) by allowing models to leverage pre-trained knowledge on large datasets for various downstream tasks. Among the most impactful models in this domain are BERT, GPT-3, and T5. Let's explore these models and their significance in NLP.

1. BERT (Bidirectional Encoder Representations from Transformers)

Overview:

Developed by Google, BERT was introduced in 2018 and marked a significant leap in NLP by using bidirectional training of Transformer models.

Unlike previous models that processed text in a unidirectional manner, BERT looks at both left and right context in all layers, providing a deeper understanding of the language.

Key Features:

Bidirectional Contextual Understanding: BERT’s bidirectional approach allows it to understand the context of a word based on both preceding and following words.

Pre-training Tasks: BERT uses two pre-training tasks – Masked Language Modeling (MLM) and Next Sentence Prediction (NSP). MLM involves predicting masked words in a sentence, while NSP involves predicting if two sentences follow each other in the text.
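To make the MLM idea concrete, here is a minimal sketch of the masking step only. The function name `mask_tokens`, the whitespace tokenization, and the 15% default rate are assumptions for illustration, not BERT's actual preprocessing code. The original BERT recipe also leaves some selected tokens unchanged or swaps them for random tokens, which this sketch leaves out.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Toy masked-language-modeling setup (hypothetical helper).

    Returns (masked_tokens, labels). labels[i] is the original token
    where a mask was applied, and None everywhere else, so a model
    would only be scored on the masked positions.
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

tokens = "the model predicts masked words in a sentence".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

During pre-training, the model sees `masked` as input and is trained to recover the non-`None` entries of `labels` using context from both sides.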

Impact on NLP Tasks:

Text Classification: BERT's contextual understanding improves the performance of text classification tasks like sentiment analysis and spam detection.

Named Entity Recognition (NER): BERT enhances NER tasks by accurately identifying entities in the text due to its deep understanding of the context.

Question Answering: BERT has set new benchmarks in QA tasks, as it can effectively comprehend and answer questions based on given contexts.

2. GPT-3 (Generative Pre-trained Transformer 3)

Overview:

Developed by OpenAI, GPT-3 is one of the largest language models ever created, with 175 billion parameters.

It follows a unidirectional (left-to-right) autoregressive approach, generating text based on the preceding words.
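The left-to-right loop can be sketched with a toy model. This is only an illustration of autoregressive decoding under strong assumptions: the bigram count table and the function names `train_bigrams` and `generate` are hypothetical, and the toy conditions on just the last token, whereas GPT-3 conditions on the whole preceding context through a Transformer.

```python
from collections import Counter, defaultdict

def train_bigrams(tokens):
    """Count which token follows which (a toy stand-in for a language model)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_tokens=5):
    """Greedy autoregressive decoding: append one token at a time,
    feeding each generated token back in as context for the next."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for the last token
        out.append(followers.most_common(1)[0][0])
    return out

corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigrams(corpus)
print(generate(model, ["the"], max_new_tokens=4))
```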

Key Features:

Scale and Size: GPT-3’s massive size allows it to generate highly coherent and contextually relevant text, making it suitable for a wide range of applications.

Few-Shot Learning: GPT-3 can perform tasks with minimal examples, reducing the need for large labeled datasets for fine-tuning.
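In practice, few-shot use usually means placing a handful of worked examples in the prompt itself. A minimal sketch of assembling such a prompt follows; the `Input:`/`Output:` layout and the helper name `build_few_shot_prompt` are assumptions chosen for illustration, not a format any particular API requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a plain-text few-shot prompt (hypothetical layout).

    examples is a list of (input_text, output_text) pairs shown to the
    model before the new query, which is left open for completion.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment.",
    [("great movie", "positive"), ("dull plot", "negative")],
    "loved it",
)
print(prompt)
```

The model is then asked to continue the text after the final `Output:`, with no weight updates involved.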

Impact on NLP Tasks:

Text Generation: GPT-3 excels at generating human-like text, making it useful for creative writing, chatbots, and content creation.

Translation: While not specifically trained for translation, GPT-3 can perform reasonably well in translating text between languages due to its extensive pre-training.

Code Generation: GPT-3 can generate code snippets and assist in programming tasks, demonstrating its versatility beyond traditional NLP tasks.

3. T5 (Text-to-Text Transfer Transformer)

Overview:

Developed by Google, T5 frames all NLP tasks as a text-to-text problem, where both input and output are text strings.

This unified approach allows T5 to handle a wide variety of tasks with a single model architecture.

Key Features:

Text-to-Text Framework: By converting tasks like translation, summarization, and question answering into a text-to-text format, T5 simplifies the process of applying the model to different tasks.

Pre-training on Diverse Datasets: T5 is pre-trained on the C4 dataset (Colossal Clean Crawled Corpus), which provides a rich and diverse training set.
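The text-to-text framing described above can be sketched as a function that turns any task into a single input string. The helper `to_text_to_text` and its keyword arguments are hypothetical; the task prefixes follow the style commonly used with T5, but treat the exact strings as illustrative.

```python
def to_text_to_text(task, **fields):
    """Cast a task into one input string, in the style of T5 (sketch)."""
    if task == "translate":
        return f"translate {fields['source']} to {fields['target']}: {fields['text']}"
    if task == "summarize":
        return f"summarize: {fields['text']}"
    if task == "qa":
        return f"question: {fields['question']} context: {fields['context']}"
    raise ValueError(f"unknown task: {task}")

print(to_text_to_text("translate", source="English", target="German", text="Hello"))
print(to_text_to_text("summarize", text="A long article about transfer learning."))
```

Because every task reduces to "string in, string out", the same model, loss, and decoding procedure can serve all of them.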

Impact on NLP Tasks:

Summarization: T5 achieves state-of-the-art results in text summarization by effectively condensing long documents into concise summaries.

Translation: T5 performs competitively in translation tasks by leveraging its text-to-text framework to handle multiple language pairs.

Question Answering and More: T5's versatility allows it to excel in various tasks, including QA, sentiment analysis, and more, by simply changing the text inputs and outputs.

Conclusion

BERT, GPT-3, and T5 have significantly advanced the field of NLP through their innovative architectures and pre-training techniques. These models have set new benchmarks across various NLP tasks, demonstrating the power and versatility of transfer learning. By leveraging large-scale pre-training, they enable efficient fine-tuning on specific tasks, reducing the need for extensive labeled datasets and accelerating the development of NLP applications.

These models have not only improved the performance of existing tasks but have also opened up new possibilities in areas like creative text generation, few-shot learning, and unified task frameworks, paving the way for future advancements in NLP.

3 notes

Text

Terms and methods in statistics:

1. Data

2. Variable

3. Mean (Average)

4. Median

5. Mode

6. Standard Deviation

7. Normal Distribution

8. Regression

9. Correlation

10. Hypothesis Testing

11. Confidence Interval

12. Chi-Square

13. ANOVA

14. Linear Regression

15. Maximum Likelihood (ML) Method

16. Bootstrap

17. Simple Random Sampling

18. Poisson Distribution

19. Central Limit Theorem

20. Non-parametric Testing

21. Logistic Regression Analysis

22. Descriptive Statistics

23. Graphs

24. Stratified Sampling

25. Cluster Sampling

26. Bayesian Statistics

27. Inferential Statistics

28. Parametric Statistics

29. Non-Parametric Statistics

30. A/B Testing

31. One-Tailed and Two-Tailed Tests

32. Validity and Reliability

33. Forecasting

34. Factor Analysis

35. Multiple Logistic Regression

36. General Linear Model (GLM)

37. Canonical Correlation

38. t-Test

39. z-Test

40. Wilcoxon Test

41. Mann-Whitney Test

42. Kruskal-Wallis Test

43. Friedman Test

44. Pearson Chi-Square Test

45. McNemar Test

46. Kolmogorov-Smirnov Test

47. Levene Test

48. Shapiro-Wilk Test

49. Durbin-Watson Test

50. Least Squares Method

51. F-Test

52. Paired t-Test

53. Independent t-Test

54. Chi-Square Test of Independence

55. Principal Component Analysis (PCA)

56. Discriminant Analysis

57. Test of Homogeneity of Variance

58. Normality Testing

59. Control Chart

60. Pareto Chart

61. Probability Proportional to Size (PPS) Sampling

62. Multistage Sampling

63. Systematic Sampling

64. Stratified Cluster Sampling

65. Spatial Statistics

66. Anderson-Darling K-Sample Test

67. Empirical Bayes Statistics

68. Nonlinear Regression

69. Ordinal Logistic Regression

70. Kernel Estimation

71. Least Absolute Shrinkage and Selection Operator (LASSO)

72. Survival Analysis

73. Cox Proportional Hazards Regression

74. Multivariate Analysis

75. Homogeneity Testing

76. Heteroskedasticity Testing

77. Bootstrap Confidence Interval

78. Bootstrap Testing

79. ARIMA (Autoregressive Integrated Moving Average) Model

80. Likert Scale

81. Jackknife Method

82. Epidemiological Statistics

83. Genetic Statistics

84. Sports Statistics

85. Social Statistics

86. Business Statistics

87. Educational Statistics

88. Medical Statistics

89. Environmental Statistics

90. Financial Statistics

91. Geospatial Statistics

92. Psychological Statistics

93. Industrial Engineering Statistics

94. Agricultural Statistics

95. Trade and Economic Statistics

96. Legal Statistics

97. Political Statistics

98. Media and Communication Statistics

99. Civil Engineering Statistics

100. Human Resources Statistics

101. Binomial Logistic Regression

102. McNemar-Bowker Test

103. Kolmogorov-Smirnov Lilliefors Test

104. Jarque-Bera Test

105. Mann-Kendall Test

106. Siegel-Tukey Test

107. Advanced Kruskal-Wallis Test

108. Process Statistics

109. Reliability Statistics

110. Multiple-Case Bootstrap Testing

111. Standard-Case Bootstrap Testing

112. Quality Statistics

113. Computational Statistics

114. Categorical Bootstrap Testing

115. Industrial Statistics

116. Smoothing Methods

117. White Test

118. Breusch-Pagan Test

119. Jarque-Bera Test of Skewness and Kurtosis

120. Experimental Statistics

121. Nonparametric Multivariate Statistics

122. Stochastic Statistics

123. Business Forecasting Statistics

124. Parametric Bayesian Statistics

125. Interest Rate Statistics

126. Labor Statistics

127. Path Analysis

128. Fuzzy Statistics

129. Econometric Statistics

130. Inflation Statistics

131. Population Statistics

132. Mining Engineering Statistics

133. Qualitative Statistics

134. Quantitative Statistics

135. Canonical Correlation Analysis

136. Partial Least Squares Regression

137. Haar Test

138. Multivariate Jarque-Bera Test

139. Random-Case Bootstrap Testing

140. Non-Standard-Case Bootstrap Testing

3 notes

Link

Multimodal AI rapidly evolves to create systems that can understand, generate, and respond using multiple data types within a single conversation or task, such as text, images, and even video or audio. These systems are expected to function across d #AI #ML #Automation

0 notes

Text

Block Diffusion: Interpolating Autoregressive and Diffusion Language Models

https://m-arriola.com/bd3lms/

0 notes

Text

Hybrid AI model crafts smooth, high-quality videos in seconds

New Post has been published on https://sunalei.org/news/hybrid-ai-model-crafts-smooth-high-quality-videos-in-seconds/

What would a behind-the-scenes look at a video generated by an artificial intelligence model be like? You might think the process is similar to stop-motion animation, where many images are created and stitched together, but that’s not quite the case for “diffusion models” like OpenAI’s SORA and Google’s VEO 2.

Instead of producing a video frame-by-frame (or “autoregressively”), these systems process the entire sequence at once. The resulting clip is often photorealistic, but the process is slow and doesn’t allow for on-the-fly changes.

Scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Adobe Research have now developed a hybrid approach, called “CausVid,” to create videos in seconds. Much like a quick-witted student learning from a well-versed teacher, a full-sequence diffusion model trains an autoregressive system to swiftly predict the next frame while ensuring high quality and consistency. CausVid’s student model can then generate clips from a simple text prompt, turning a photo into a moving scene, extending a video, or altering its creations with new inputs mid-generation.

This dynamic tool enables fast, interactive content creation, cutting a 50-step process into just a few actions. It can craft many imaginative and artistic scenes, such as a paper airplane morphing into a swan, woolly mammoths venturing through snow, or a child jumping in a puddle. Users can also make an initial prompt, like “generate a man crossing the street,” and then make follow-up inputs to add new elements to the scene, like “he writes in his notebook when he gets to the opposite sidewalk.”

A video produced by CausVid illustrates its ability to create smooth, high-quality content.

AI-generated animation courtesy of the researchers.

The CSAIL researchers say that the model could be used for different video editing tasks, like helping viewers understand a livestream in a different language by generating a video that syncs with an audio translation. It could also help render new content in a video game or quickly produce training simulations to teach robots new tasks.

Tianwei Yin SM ’25, PhD ’25, a recently graduated student in electrical engineering and computer science and CSAIL affiliate, attributes the model’s strength to its mixed approach.

“CausVid combines a pre-trained diffusion-based model with autoregressive architecture that’s typically found in text generation models,” says Yin, co-lead author of a new paper about the tool. “This AI-powered teacher model can envision future steps to train a frame-by-frame system to avoid making rendering errors.”

Yin’s co-lead author, Qiang Zhang, is a research scientist at xAI and a former CSAIL visiting researcher. They worked on the project with Adobe Research scientists Richard Zhang, Eli Shechtman, and Xun Huang, and two CSAIL principal investigators: MIT professors Bill Freeman and Frédo Durand.

Caus(Vid) and effect

Many autoregressive models can create a video that’s initially smooth, but the quality tends to drop off later in the sequence. A clip of a person running might seem lifelike at first, but their legs begin to flail in unnatural directions, indicating frame-to-frame inconsistencies (also called “error accumulation”).
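A toy numerical sketch can show why small per-step errors compound when a model feeds on its own outputs. This is not CausVid's method or any video model: `true_step` and `model_step` are hypothetical stand-ins in which the "frame" is a single number and the learned predictor carries a small constant bias.

```python
def true_step(x):
    """Ground-truth dynamics: the next 'frame' is the current one plus 1."""
    return x + 1.0

def model_step(x):
    """Hypothetical learned predictor with a small per-step bias."""
    return x + 1.05

def rollout_errors(steps):
    """Compare one-step error on true inputs with free-running error."""
    teacher_forced, free_running = [], []
    truth, own = 0.0, 0.0
    for _ in range(steps):
        next_truth = true_step(truth)
        # Predict from the true previous frame: error stays at the bias.
        teacher_forced.append(abs(model_step(truth) - next_truth))
        # Predict from the model's own previous output: error accumulates.
        own = model_step(own)
        free_running.append(abs(own - next_truth))
        truth = next_truth
    return teacher_forced, free_running

tf, fr = rollout_errors(10)
print([round(e, 2) for e in tf])
print([round(e, 2) for e in fr])
```

The first list stays flat while the second grows with every step, which is the drift the flailing-legs example describes.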

Error-prone video generation was common in prior causal approaches, which learned to predict frames one-by-one on their own. CausVid instead uses a high-powered diffusion model to teach a simpler system its general video expertise, enabling it to create smooth visuals, but much faster.

CausVid enables fast, interactive video creation, cutting a 50-step process into just a few actions. Video courtesy of the researchers.

CausVid displayed its video-making aptitude when researchers tested its ability to make high-resolution, 10-second-long videos. It outperformed baselines like “OpenSORA” and “MovieGen,” working up to 100 times faster than its competition while producing the most stable, high-quality clips.

Then, Yin and his colleagues tested CausVid’s ability to put out stable 30-second videos, where it also topped comparable models on quality and consistency. These results indicate that CausVid may eventually produce stable, hours-long videos, or even videos of indefinite duration.

A subsequent study revealed that users preferred the videos generated by CausVid’s student model over its diffusion-based teacher.

“The speed of the autoregressive model really makes a difference,” says Yin. “Its videos look just as good as the teacher’s ones, but with less time to produce, the trade-off is that its visuals are less diverse.”

CausVid also excelled when tested on over 900 prompts using a text-to-video dataset, receiving the top overall score of 84.27. It boasted the best metrics in categories like imaging quality and realistic human actions, eclipsing state-of-the-art video generation models like “Vchitect” and “Gen-3.”

While an efficient step forward in AI video generation, CausVid may soon be able to design visuals even faster — perhaps instantly — with a smaller causal architecture. Yin says that if the model is trained on domain-specific datasets, it will likely create higher-quality clips for robotics and gaming.

Experts say that this hybrid system is a promising upgrade from diffusion models, which are currently bogged down by processing speeds. “[Diffusion models] are way slower than LLMs [large language models] or generative image models,” says Carnegie Mellon University Assistant Professor Jun-Yan Zhu, who was not involved in the paper. “This new work changes that, making video generation much more efficient. That means better streaming speed, more interactive applications, and lower carbon footprints.”

The team’s work was supported, in part, by the Amazon Science Hub, the Gwangju Institute of Science and Technology, Adobe, Google, the U.S. Air Force Research Laboratory, and the U.S. Air Force Artificial Intelligence Accelerator. CausVid will be presented at the Conference on Computer Vision and Pattern Recognition in June.

0 notes

Text

How do transformers enable autoregressive text generation capabilities?

Transformers enable autoregressive text generation capabilities through their unique architecture, which relies on self-attention mechanisms. Unlike previous models like RNNs or LSTMs, transformers allow for parallel processing of sequences, which leads to faster training and better handling of long-range dependencies within text. This is particularly beneficial for autoregressive text generation, where the model predicts the next word in a sequence based on previous words.

In autoregressive models, text generation is sequential: the model generates one token at a time, using the previously generated tokens as context for the next. The transformer’s self-attention mechanism helps here by allowing each token to attend to all earlier tokens in the sequence (a causal mask hides later positions), capturing dependencies across different positions. This lets the model generate contextually relevant text that is more coherent.
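In decoder-style autoregressive models, attention is usually restricted by a causal mask so that each position sees only itself and earlier positions. Below is a minimal single-head sketch in plain Python. It assumes the queries, keys, and values are already given as lists of float vectors, and it omits the learned projections, multiple heads, and batching that a real transformer layer has.

```python
import math

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of float vectors, one per position.
    Position i may only attend to positions 0..i.
    Returns (outputs, weights), where weights[i][j] is how much
    position i attends to position j.
    """
    d = len(queries[0])
    n = len(queries)
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Scores only for positions <= i; later positions are masked out.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        top = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps] + [0.0] * (n - i - 1)
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

The printed weight matrix is lower-triangular: the first token can only look at itself, while the last token can draw on the whole prefix.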

The transformer architecture uses a multi-head attention mechanism, which lets the model focus on different parts of the input sequence simultaneously and capture complex patterns in the data. The original transformer has an encoder-decoder structure, which T5 retains; BERT uses only the encoder and is not autoregressive, while GPT models use only the decoder. In GPT models, the autoregressive process predicts each next token based on the input and all previously generated tokens.

By leveraging transformers, generative models can create high-quality, human-like text for various applications such as chatbots, writing assistants, and creative content generation. If you're interested in mastering these techniques, consider enrolling in a Generative AI certification course to develop deep expertise in this rapidly growing field.

0 notes

Text

Predictive Analytics in Identifying Stock Market Trends

In finance, anticipating stock market trends is critical for investors trying to optimize their portfolios and maximize returns and growth. With advanced technology now common in tools such as forex trading app analytics, predictive analytics has become a powerful way to anticipate market changes and make sound decisions. This article examines the role of predictive analytics in predicting stock market trends, the major techniques used, and the strategies investors can use to navigate a risky macroeconomic environment.

Understanding Predictive Analytics

Predictive analytics is a branch of statistics that uses historical data, statistical algorithms, and data modeling techniques to predict future outcomes. For stock market trends, predictive analytics analyzes many variables, including but not limited to historical stock data, trading volumes, financial indicators, and market sentiment, to identify patterns and forecast future prices.

Through predictive analytics, investors can gain specialized market knowledge, spot areas of growth, and pick up signals of potential developments as they appear. This forward-looking approach can help traders capture available opportunities and reduce market risk in an increasingly complex and volatile environment.

Predictive analytics makes use of the following major techniques:

A range of techniques is commonly used in predictive analytics to forecast stock market movements.

Time Series Analysis

Time series analysis involves studying historical data points in sequence to identify patterns, trends, and seasonality in stock prices. Techniques such as moving averages, exponential smoothing, and autoregressive integrated moving average (ARIMA) models are typically used to forecast future price movements based on historical data.
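As a rough illustration of these techniques, here is a sketch of a moving average, simple exponential smoothing, and a first-order autoregressive (AR(1)) forecast in plain Python. The function names and the price series are made up for the example. AR(1) covers only the autoregressive part of ARIMA, with no differencing or moving-average terms; a real analysis would use a dedicated statistics library.

```python
def moving_average(series, window):
    """Mean of each run of `window` consecutive values."""
    return [sum(series[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(series))]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing; alpha near 1 tracks recent values closely."""
    smoothed = [series[0]]
    for x in series[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed

def fit_ar1(series):
    """Least-squares fit of x[t] = c + phi * x[t-1]."""
    xs, ys = series[:-1], series[1:]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    phi = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / var_x
    c = mean_y - phi * mean_x
    return c, phi

def forecast_ar1(last_value, c, phi, steps):
    """Iterate the fitted equation, feeding each forecast back in."""
    preds, x = [], last_value
    for _ in range(steps):
        x = c + phi * x
        preds.append(x)
    return preds

prices = [10.0, 11.0, 12.0, 13.0, 14.0]  # made-up closing prices
print(moving_average(prices, 3))
print(exponential_smoothing(prices, 0.5))
c, phi = fit_ar1(prices)
print(forecast_ar1(prices[-1], c, phi, steps=2))
```

Note that the forecast step is itself autoregressive: each predicted value becomes the input for the next prediction.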

Machine Learning Algorithms

Machine learning algorithms, including random forests, support vector machines, and neural networks, are increasingly being used to predict stock market trends. These algorithms analyze huge datasets and detect complex patterns that may not be apparent through conventional statistical techniques.

Sentiment Analysis

Sentiment analysis involves studying news articles, social media posts, and other sources of textual data to gauge market sentiment and investor sentiment toward particular stocks or sectors. By analyzing sentiment data, traders can identify emerging trends and sentiment shifts that could affect stock prices.
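One very simple way to score text is to count words from positive and negative word lists. The sketch below assumes two tiny, made-up lexicons and a hypothetical `sentiment_score` helper; production systems typically use much larger lexicons or trained models.

```python
# Tiny illustrative lexicons (assumptions, not a real financial lexicon).
POSITIVE = {"beat", "growth", "surge", "upgrade", "profit"}
NEGATIVE = {"miss", "loss", "lawsuit", "downgrade", "decline"}

def sentiment_score(text):
    """Return a score in [-1, 1]: +1 all positive, -1 all negative, 0 neutral."""
    words = [w.strip(".,!?;:\"'").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total

headlines = [
    "Profit surge after upgrade!",
    "Lawsuit and loss offset growth.",
    "No news today",
]
for h in headlines:
    print(h, sentiment_score(h))
```

Averaging such scores over many headlines per day gives a rough sentiment series that can be compared with price movements.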

Pattern Recognition

Pattern recognition techniques involve identifying recurring patterns and anomalies in stock price data. By recognizing patterns such as support and resistance levels, trend reversals, and chart patterns, traders can make more informed decisions about when to buy or sell stocks.
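A first step toward finding candidate support and resistance levels is to locate local lows and highs in a price series. The sketch below is a deliberately naive illustration: `local_extrema` is a hypothetical helper, the prices are made up, and real charting tools add smoothing, tolerance bands, and volume checks.

```python
def local_extrema(prices):
    """Return (lows, highs): indices of strict local minima and maxima.

    Local lows are candidate support points; local highs are candidate
    resistance points. Endpoints are skipped because they have only
    one neighbor.
    """
    lows, highs = [], []
    for i in range(1, len(prices) - 1):
        prev, cur, nxt = prices[i - 1], prices[i], prices[i + 1]
        if cur < prev and cur < nxt:
            lows.append(i)
        elif cur > prev and cur > nxt:
            highs.append(i)
    return lows, highs

prices = [10, 12, 11, 13, 9, 14]  # made-up prices
lows, highs = local_extrema(prices)
print("candidate support at indices:", lows)
print("candidate resistance at indices:", highs)
```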

Benefits of Predictive Analytics in Stock Market Analysis

Predictive analytics offers several benefits for traders looking to identify stock market trends:

Improved Decision Making

By offering valuable insights into market dynamics and future price movements, predictive analytics allows investors to make more informed, data-driven investment decisions.

Risk Mitigation

By applying predictive analytics to their investment models, traders can position their portfolios to pursue the highest returns by selecting stocks and sectors with the largest forecast growth.

Opportunity Identification

Predictive analytics helps investors discover investment opportunities that might not be apparent through traditional analysis methods. By identifying emerging trends and market anomalies, traders can capitalize on opportunities for profit.

Enhanced Portfolio Performance

By incorporating predictive analytics into their investment strategy, traders can optimize their portfolios and maximize returns, allocating capital via forex trading platforms to the stocks and sectors with the greatest growth potential.

Challenges and Limitations

Although predictive analytics has much to contribute to understanding stock market trends and dynamics, it faces a number of challenges and limitations.

Data Quality and Availability

Predictive analytics relies on high-quality, reliable data to generate accurate forecasts. However, obtaining clean and comprehensive data can be hard, especially in financial markets, where data can be fragmented or difficult to work with.

Model Complexity

Predictive models range from simple to highly sophisticated, and the more complex algorithms can be difficult to interpret and work with. Complex models are also prone to overfitting historical data, which leads to inaccurate forecasts and misleading predictions.
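One common guard against fitting a model too closely to past data is to evaluate it only on observations that come after the ones it was trained on. The sketch below generates such walk-forward splits; the function name and the sizes are assumptions made for illustration, not a method described in this post.

```python
def walk_forward_splits(n_samples, train_size, test_size):
    """Yield (train_indices, test_indices) pairs in which every test index
    comes strictly after every training index."""
    start = 0
    while start + train_size + test_size <= n_samples:
        train = list(range(start, start + train_size))
        test = list(range(start + train_size, start + train_size + test_size))
        yield train, test
        start += test_size  # slide the window forward by one test block

# Ten time-ordered observations, four for training and two for testing per split.
splits = list(walk_forward_splits(n_samples=10, train_size=4, test_size=2))
```

A model that scores well on the training indices but poorly on the later test indices across these splits is likely overfitting the historical data.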

Market Volatility

The stock market is inherently volatile and unpredictable, making it difficult to forecast future price movements accurately. While predictive analytics can offer valuable insights into market trends, it cannot remove the inherent uncertainty and risk associated with investing in the stock market.

Regulatory and Ethical Considerations

Using data to predict financial markets is subject to regulatory and ethical considerations, particularly regarding the use of sensitive data and the potential for market manipulation. Investors need to stay informed about the relevant regulations and ethical standards, comply with them, and weigh them carefully when building predictive analytics into their investment strategy.

Conclusion

In conclusion, predictive analytics plays a vital role in identifying stock market trends and supporting careful investment decisions. By combining statistical methods applied to historical data with machine learning techniques, investors can better understand markets, anticipate their direction, and get the most from their portfolios.


Human-Centric Exploration of Generative AI Development

Generative AI is more than a buzzword. It’s a transformative technology shaping industries and igniting innovation across the globe. From creating expressive visuals to designing personalized experiences, it allows organizations to build powerful, scalable solutions with lasting impact. As tools like ChatGPT and Stable Diffusion continue to gain traction, investors and businesses alike are exploring the practical steps to develop generative AI solutions tailored to real-world needs.

Why Generative AI is the Future of Innovation

The rapid rise of generative AI in sectors like finance, healthcare, and media has drawn immense interest—and funding. OpenAI's valuation crossed $25 billion with Microsoft backing it with over $1 billion, signaling confidence in generative models even amidst broader tech downturns. The market is projected to reach $442.07 billion by 2031, driven by its ability to generate text, code, images, music, and more. For companies looking to gain a competitive edge, investing in generative AI isn’t just a trend—it’s a strategic move.

What Makes Generative AI a Business Imperative?

Generative AI increases efficiency by automating tasks, drives creative ideation beyond human limits, and enhances decision-making through data analysis. Its applications include marketing content creation, virtual product design, intelligent customer interactions, and adaptive user experiences. It also reduces operational costs and helps businesses respond faster to market demands.

How to Create a Generative AI Solution: A Step-by-Step Overview

1. Define Clear Objectives: Understand what problem you're solving and what outcomes you seek.
2. Collect and Prepare Quality Data: Whether it's image, audio, or text-based, the dataset's quality sets the foundation.
3. Choose the Right Tools and Frameworks: Utilize Python, TensorFlow, PyTorch, and cloud platforms like AWS or Azure for development.
4. Select Suitable Architectures: From GANs to VAEs, LSTMs to autoregressive models, align the model type with your solution needs.
5. Train, Fine-Tune, and Test: Iteratively improve performance through tuning hyperparameters and validating outputs.
6. Deploy and Monitor: Deploy using Docker, Flask, or Kubernetes and monitor with MLflow or TensorBoard.
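To make the "autoregressive models" mentioned in step 4 concrete, here is a toy sketch: a character-level bigram model that is fit on a string and then generates text one character at a time, each conditioned on the previous one. The function names and the training string are hypothetical, and this is far simpler than any production generative model.

```python
from collections import Counter, defaultdict

def fit_bigram(text):
    """Count, for each character, which characters follow it in `text`."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length):
    """Autoregressive generation: each new character is predicted from the
    previous output (greedy choice of the most frequent follower)."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation, so stop early
            break
        out.append(followers.most_common(1)[0][0])
    return "".join(out)

model = fit_bigram("abababac")      # "training" on a made-up string
sample = generate(model, "a", 6)    # generate six characters starting from "a"
```

Larger autoregressive models follow the same loop of predicting the next token and feeding it back in, but replace the count table with a trained neural network and usually sample from the predicted distribution instead of always taking the most likely token.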

Explore a comprehensive guide here: How to Create Your Own Generative AI Solution

Industry Applications That Matter

Healthcare: Personalized treatment plans, drug discovery

Finance: Fraud detection, predictive analytics

Education: Tailored learning modules, content generation

Manufacturing: Process optimization, predictive maintenance

Retail: Customer behavior analysis, content personalization

Partnering with the Right Experts

Building a successful generative AI model requires technical know-how, domain expertise, and iterative optimization. This is where generative AI consulting services come into play. A reliable generative AI consulting company like SoluLab offers tailored support—from strategy and development to deployment and scale.

Whether you need generative AI consultants to help refine your idea or want a long-term partner among top generative AI consulting companies, SoluLab stands out with its proven expertise. Explore our Gen AI Consulting Services

Final Thoughts

Generative AI is not just shaping the future—it’s redefining it. By collaborating with experienced partners, adopting best practices, and continuously iterating, you can craft AI solutions that evolve with your business and customers. The future of business is generative—are you ready to build it?


Panel vector autoregressive models (PVARs): using xtvar2 with Stata 19

https://ln.run/xtvar2
