#Best web scraping API


Text

"Artists have finally had enough with Meta’s predatory AI policies, but Meta’s loss is Cara’s gain. An artist-run, anti-AI social platform, Cara has grown from 40,000 to 650,000 users within the last week, catapulting it to the top of the App Store charts.

Instagram is a necessity for many artists, who use the platform to promote their work and solicit paying clients. But Meta is using public posts to train its generative AI systems, and only European users can opt out, since they’re protected by GDPR laws. Generative AI has become so front-and-center on Meta’s apps that artists reached their breaking point.

“When you put [AI] so much in their face, and then give them the option to opt out, but then increase the friction to opt out… I think that increases their anger level — like, okay now I’ve really had enough,” Jingna Zhang, a renowned photographer and founder of Cara, told TechCrunch.

Cara, which has both a web and mobile app, is like a combination of Instagram and X, but built specifically for artists. On your profile, you can host a portfolio of work, but you can also post updates to your feed like any other microblogging site.

Zhang is perfectly positioned to helm an artist-centric social network, where they can post without the risk of becoming part of a training dataset for AI. Zhang has fought on behalf of artists, recently winning an appeal in a Luxembourg court over a painter who copied one of her photographs, which she shot for Harper’s Bazaar Vietnam.

“Using a different medium was irrelevant. My work being ‘available online’ was irrelevant. Consent was necessary,” Zhang wrote on X.

Zhang and three other artists are also suing Google for allegedly using their copyrighted work to train Imagen, an AI image generator. She’s also a plaintiff in a similar lawsuit against Stability AI, Midjourney, DeviantArt and Runway AI.

“Words can’t describe how dehumanizing it is to see my name used 20,000+ times in MidJourney,” she wrote in an Instagram post. “My life’s work and who I am—reduced to meaningless fodder for a commercial image slot machine.”

Artists are so resistant to AI because the training data behind many of these image generators includes their work without their consent. These models amass such a large swath of artwork by scraping the internet for images, without regard for whether or not those images are copyrighted. It’s a slap in the face for artists – not only are their jobs endangered by AI, but that same AI is often powered by their work.

“When it comes to art, unfortunately, we just come from a fundamentally different perspective and point of view, because on the tech side, you have this strong history of open source, and people are just thinking like, well, you put it out there, so it’s for people to use,” Zhang said. “For artists, it’s a part of our selves and our identity. I would not want my best friend to make a manipulation of my work without asking me. There’s a nuance to how we see things, but I don’t think people understand that the art we do is not a product.”

This commitment to protecting artists from copyright infringement extends to Cara, which partners with the University of Chicago’s Glaze project. By using Glaze, artists who manually apply Glaze to their work on Cara have an added layer of protection against being scraped for AI.

Other projects have also stepped up to defend artists. Spawning AI, an artist-led company, has created an API that allows artists to remove their work from popular datasets. But that opt-out only works if the companies that use those datasets honor artists’ requests. So far, HuggingFace and Stability have agreed to respect Spawning’s Do Not Train registry, but artists’ work cannot be retroactively removed from models that have already been trained.

“I think there is this clash between backgrounds and expectations on what we put on the internet,” Zhang said. “For artists, we want to share our work with the world. We put it online, and we don’t charge people to view this piece of work, but it doesn’t mean that we give up our copyright, or any ownership of our work.”"

Read the rest of the article here:

https://techcrunch.com/2024/06/06/a-social-app-for-creatives-cara-grew-from-40k-to-650k-users-in-a-week-because-artists-are-fed-up-with-metas-ai-policies/

610 notes


Text

"Bots on the internet are nothing new, but a sea change has occurred over the past year. For the past 25 years, anyone running a web server knew that the bulk of traffic was one sort of bot or another. There was googlebot, which was quite polite, and everyone learned to feed it - otherwise no one would ever find the delicious treats we were trying to give away. There were lots of search engine crawlers working to develop this or that service. You'd get 'script kiddies' trying thousands of prepackaged exploits. A server secured and patched by a reasonably competent technologist would have no difficulty ignoring these.

"...The surge of AI bots has hit Open Access sites particularly hard, as their mission conflicts with the need to block bots. Consider that Internet Archive can no longer save snapshots of one of the best open-access publishers, MIT Press, because of cloudflare blocking. Who know how many books will be lost this way? Or consider that the bots took down OAPEN, the worlds most important repository of Scholarly OA books, for a day or two. That's 34,000 books that AI 'checked out' for two days. Or recent outages at Project Gutenberg, which serves 2 million dynamic pages and a half million downloads per day. That's hundreds of thousands of downloads blocked! The link checker at doab-check.ebookfoundation.org (a project I worked on for OAPEN) is now showing 1,534 books that are unreachable due to 'too many requests.' That's 1,534 books that AI has stolen from us! And it's getting worse.

"...The thing that gets me REALLY mad is how unnecessary this carnage is. Project Gutenberg makes all its content available with one click on a file in its feeds directory. OAPEN makes all its books available via an API. There's no need to make a million requests to get this stuff!! Who (or what) is programming these idiot scraping bots? Have they never heard of a sitemap??? Are they summer interns using ChatGPT to write all their code? Who gave them infinite memory, CPUs and bandwidth to run these monstrosities? (Don't answer.)

"We are headed for a world in which all good information is locked up behind secure registration barriers and paywalls, and it won't be to make money, it will be for survival. Captchas will only be solvable by advanced AIs and only the wealthy will be able to use internet libraries."

#ugh#AI#generative AI#literally a plagiarism machine#and before you're like “oH bUt Ai Is DoInG sO mUcH gOoD...” that's machine learning AI doing stuff like finding cancer#generative AI is just stealing and then selling plagiarism#open access#OA#MIT Press#OAPEN#Project Gutenberg#various AI enthusiasts just wrecking the damn internet by Ctrl+Cing all over the damn place and not actually reading a damn thing

46 notes


Note

Hello, fellow compsci enthusiast. I am trying to scrape together motivation to work on the vote graph script for Yuno's trial.

I mean, I already have the means to gather the numbers. I just need to get the API to work to put the numbers on Google Sheets so the graphs can work in real time. :')

hello!!

owahhh best of luck, kyanako!! we were actually learning about web programming and api today in class lol so yippee!!

o77 yuno graph WAH

2 notes


Text

Lensnure Solutions is a passionate web scraping and data extraction company that makes every possible effort to add value for its customers and make the process easy and quick. The company has been acknowledged as a leading web crawling provider for its quality services in industries such as travel, eCommerce, real estate, finance, business, social media, and many more.

We wish to deliver the best to our customers, as that is our priority. We are always ready to take on challenges and grab the right opportunity.

3 notes


Text

Best data extraction services in USA

In today's fiercely competitive business landscape, the strategic selection of a web data extraction services provider becomes crucial. Outsource Bigdata stands out by offering access to high-quality data through a meticulously crafted automated, AI-augmented process designed to extract valuable insights from websites. Our team ensures data precision and reliability, facilitating decision-making processes.

For more details, visit: https://outsourcebigdata.com/data-automation/web-scraping-services/web-data-extraction-services/.

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built a number of AI & ML solutions, AI-driven web scraping solutions, AI-data Labeling, AI-Data-Hub, and Self-serving BI solutions. We started in 2012 and have successfully delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform

APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution

AI-Labeler is an AI-augmented data annotation platform that combines the power of artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and use it for training and developing AI models.

PRICESCRAPY: AI-enabled real-time pricing solution

An AI and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs, so companies can leverage that data in their own systems and applications.

Locations: USA: 1-30235 14656 Canada: +1 4378 370 063 India: +91 810 527 1615 Australia: +61 402 576 615 Email: [email protected]

2 notes


Text

I don’t know how better to say that the reason I’ve stuck around on Tumblr for so long is because of the “outdated” features such as custom themes, the reverse chronological dashboard (which contains only posts from those I choose to follow), anonymous asks (with no login required), and the general web 1.0 feel of the site. Posts are spread organically, and not algorithmically. It feels like a form of peer review; posts need to go through at least one person I’ve chosen as a trusted peer before I see them.

In 2023, it’s become exceedingly difficult to find places on the internet which don’t feel like they’re pushing their product and their content onto you. Every website has their infinite scroll of algorithmically suggested content based on data scraped from you or purchased from aggregators. Who then go on to store even more of your data to sell to advertisers. It’s frustrating, and Tumblr has historically been a breath of fresh air in that regard. I hope it continues to be.

Although I’ve been using tumblr for over a decade, please do not believe that I will hesitate to abandon the platform if it ceases to be any of the things that have been keeping me here. I’ve really enjoyed using the platform, and I’ve been reasonably proud to say it’s my “social media” of choice. I appreciate the commentary possible through reblogs, and I appreciate the robust tagging system. I also appreciate the keyboard shortcuts in the web interface and the special blog pages such as /day, /archive, etc. The community, however, is the one thing that’s keeping me here more than anything else. I fear that if Automattic continues in the direction they seem to be going, that the community will be pushed away.

With Reddit and Twitter making some extremely questionable decisions with their platforms recently, Tumblr does have the opportunity to grow, and I don’t blame @staff for trying to seize that opportunity. I plead, however, that this does not lead Tumblr to believe it can be a drop-in replacement for these declining platforms. Tumblr has its own features which make it unique and which keep users here. The best place to start is the instability of the native Tumblr app. I might even encourage opening up more of the API (eg. Polls & Chats) to third party developers.

Tumblr’s Core Product Strategy

Here at Tumblr, we’ve been working hard on reorganizing how we work in a bid to gain more users. A larger user base means a more sustainable company, and means we get to stick around and do this thing with you all a bit longer. What follows is the strategy we're using to accomplish the goal of user growth. The @labs group has published a bit already, but this is bigger. We’re publishing it publicly for the first time, in an effort to work more transparently with all of you in the Tumblr community. This strategy provides guidance amid limited resources, allowing our teams to focus on specific key areas to ensure Tumblr’s future.

The Diagnosis

In order for Tumblr to grow, we need to fix the core experience that makes Tumblr a useful place for users. The underlying problem is that Tumblr is not easy to use. Historically, we have expected users to curate their feeds and lean into curating their experience. But this expectation introduces friction to the user experience and only serves a small portion of our audience.

Tumblr’s competitive advantage lies in its unique content and vibrant communities. As the forerunner of internet culture, Tumblr encompasses a wide range of interests, such as entertainment, art, gaming, fandom, fashion, and music. People come to Tumblr to immerse themselves in this culture, making it essential for us to ensure a seamless connection between people and content.

To guarantee Tumblr’s continued success, we’ve got to prioritize fostering that seamless connection between people and content. This involves attracting and retaining new users and creators, nurturing their growth, and encouraging frequent engagement with the platform.

Our Guiding Principles

To enhance Tumblr’s usability, we must address these core guiding principles.

Expand the ways new users can discover and sign up for Tumblr.

Provide high-quality content with every app launch.

Facilitate easier user participation in conversations.

Retain and grow our creator base.

Create patterns that encourage users to keep returning to Tumblr.

Improve the platform’s performance, stability, and quality.

Below is a deep dive into each of these principles.

Principle 1: Expand the ways new users can discover and sign up for Tumblr.

Tumblr has a “top of the funnel” issue in converting non-users into engaged logged-in users. We also have not invested in industry standard SEO practices to ensure a robust top of the funnel. The referral traffic that we do get from external sources is dispersed across different pages with inconsistent user experiences, which results in a missed opportunity to convert these users into regular Tumblr users. For example, users from search engines often land on pages within the blog network and blog view—where there isn’t much of a reason to sign up.

We need to experiment with logged-out tumblr.com to ensure we are capturing the highest potential conversion rate for visitors into sign-ups and log-ins. We might want to explore showing the potential future user the full breadth of content that Tumblr has to offer on our logged-out pages. We want people to be able to easily understand the potential behind Tumblr without having to navigate multiple tabs and pages to figure it out. Our current logged-out explore page does very little to help users understand “what is Tumblr,” which is a missed opportunity to get people excited about joining the site.

Actions & Next Steps

Improving Tumblr’s search engine optimization (SEO) practices to be in line with industry standards.

Experiment with logged out tumblr.com to achieve the highest conversion rate for sign-ups and log-ins, explore ways for visitors to “get” Tumblr and entice them to sign up.

Principle 2: Provide high-quality content with every app launch.

We need to ensure the highest quality user experience by presenting fresh and relevant content tailored to the user’s diverse interests during each session. If the user has a bad content experience, the fault lies with the product.

The default position should always be that the user does not know how to navigate the application. Additionally, we need to ensure that when people search for content related to their interests, it is easily accessible without any confusing limitations or unexpected roadblocks in their journey.

Being a 15-year-old brand is tough because the brand carries the baggage of a person’s preconceived impressions of Tumblr. On average, a user only sees 25 posts per session, so the first 25 posts have to convey the value of Tumblr: it is a vibrant community with lots of untapped potential. We never want to leave the user believing that Tumblr is a place that is stale and not relevant.

Actions & Next Steps

Deliver great content each time the app is opened.

Make it easier for users to understand where the vibrant communities on Tumblr are.

Improve our algorithmic ranking capabilities across all feeds.

Principle 3: Facilitate easier user participation in conversations.

Part of Tumblr’s charm lies in its capacity to showcase the evolution of conversations and the clever remarks found within reblog chains and replies. Engaging in these discussions should be enjoyable and effortless.

Unfortunately, the current way that conversations work on Tumblr across replies and reblogs is confusing for new users. The limitations around engaging with individual reblogs, replies only applying to the original post, and the inability to easily follow threaded conversations make it difficult for users to join the conversation.

Actions & Next Steps

Address the confusion within replies and reblogs.

Improve the conversational posting features around replies and reblogs.

Allow engagements on individual replies and reblogs.

Make it easier for users to follow the various conversation paths within a reblog thread.

Remove clutter in the conversation by collapsing reblog threads.

Explore the feasibility of removing duplicate reblogs within a user’s Following feed.

Principle 4: Retain and grow our creator base.

Creators are essential to the Tumblr community. However, we haven’t always had a consistent and coordinated effort around retaining, nurturing, and growing our creator base.

Being a new creator on Tumblr can be intimidating, with a high likelihood of leaving or disappointment upon sharing creations without receiving engagement or feedback. We need to ensure that we have the expected creator tools and foster the rewarding feedback loops that keep creators around and enable them to thrive.

The lack of feedback stems from the outdated decision to only show content from followed blogs on the main dashboard feed (“Following”), perpetuating a cycle where popular blogs continue to gain more visibility at the expense of helping new creators. To address this, we need to prioritize supporting and nurturing the growth of new creators on the platform.

It is also imperative that creators, like everyone on Tumblr, feel safe and in control of their experience. Whether it be an ask from the community or engagement on a post, being successful on Tumblr should never feel like a punishing experience.

Actions & Next Steps

Get creators’ new content in front of people who are interested in it.

Improve the feedback loop for creators, incentivizing them to continue posting.

Build mechanisms to protect creators from being spammed by notifications when they go viral.

Expand ways to co-create content, such as by adding the capability to embed Tumblr links in posts.

Principle 5: Create patterns that encourage users to keep returning to Tumblr.

Push notifications and emails are essential tools to increase user engagement, improve user retention, and facilitate content discovery. Our strategy of reaching out to you, the user, should be well-coordinated across product, commercial, and marketing teams.

Our messaging strategy needs to be personalized and adapt to a user’s shifting interests. Our messages should keep users in the know on the latest activity in their community, as well as keeping Tumblr top of mind as the place to go for witty takes and remixes of the latest shows and real-life events.

Most importantly, our messages should be thoughtful and should never come across as spammy.

Actions & Next Steps

Conduct an audit of our messaging strategy.

Address the issue of notifications getting too noisy; throttle, collapse or mute notifications where necessary.

Identify opportunities for personalization within our email messages.

Test what the right daily push notification limit is.

Send emails when a user has push notifications switched off.

Principle 6: Performance, stability and quality.

The stability and performance of our mobile apps have declined. There is a large backlog of production issues, with more bugs created than resolved over the last 300 days. If this continues, roughly one new unresolved production issue will be created every two days. Apps and backend systems that work well and don't crash are the foundation of a great Tumblr experience. Improving performance, stability, and quality will help us achieve sustainable operations for Tumblr.

Improve performance and stability: deliver crash-free, responsive, and fast-loading apps on Android, iOS, and web.

Improve quality: deliver the highest quality Tumblr experience to our users.

Move faster: provide APIs and services to unblock core product initiatives and launch new features coming out of Labs.

Conclusion

Our mission has always been to empower the world’s creators. We are wholly committed to ensuring Tumblr evolves in a way that supports our current users while improving areas that attract new creators, artists, and users. You deserve a digital home that works for you. You deserve the best tools and features to connect with your communities on a platform that prioritizes the easy discoverability of high-quality content. This is an invigorating time for Tumblr, and we couldn’t be more excited about our current strategy.

65K notes


Text

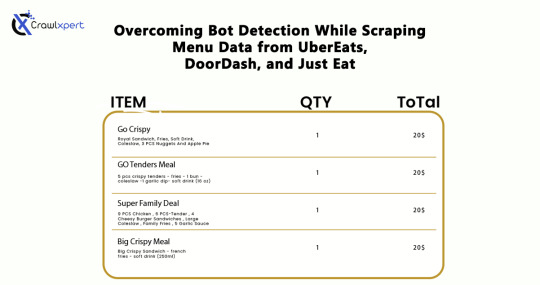

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

Web scraping is an effective way to collect menu data from food delivery platforms such as UberEats, DoorDash, and Just Eat. However, these websites use elaborate bot detection methods to stop automated data collection. Overcoming them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to get past bot detection when scraping menu data, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Inspects the User-Agent header and blocks requests whose agent string is missing, unusual, or tied to known automation tools.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Uses scripts to detect bots attempting to load pages without interaction.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
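
A scraper that receives a 429 response should slow down rather than retry immediately. The sketch below is one minimal way to pick a wait time, assuming the server may send a `Retry-After` value in seconds; the function name `backoff_delay` and its defaults are illustrative, not taken from any platform's documentation.

```python
import random


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, rng=random):
    """Return how many seconds to wait before retry number `attempt` (0-based).

    If the server supplied a Retry-After value in seconds, honor it.
    Otherwise use exponential backoff with full jitter, capped at `cap`.
    """
    if retry_after is not None:
        try:
            return max(0.0, float(retry_after))
        except ValueError:
            pass  # Retry-After may also be an HTTP date; fall back to backoff
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0, ceiling)
```

The jitter spreads retries out so that several workers blocked at the same moment do not all come back at once.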

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device. This includes screen resolution, installed plugins, and system fonts. If a scraper presents a known bot signature, it gets flagged.
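
To make the idea concrete, the sketch below hashes a handful of browser attributes into a single identifier. It is a simplified illustration only: the attribute names are hypothetical, and real fingerprinting systems combine many more signals than this.

```python
import hashlib
import json


def fingerprint(attributes):
    """Hash a dict of browser/device attributes into a stable identifier."""
    # Sort keys so the same attributes always serialize identically.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical visitors: a and b report the same attributes in a different order.
visitor_a = {"screen": "1920x1080", "plugins": ["pdf-viewer"], "fonts": ["Arial", "Helvetica"]}
visitor_b = {"fonts": ["Arial", "Helvetica"], "screen": "1920x1080", "plugins": ["pdf-viewer"]}
visitor_c = {"screen": "800x600", "plugins": [], "fonts": []}
```

Here `visitor_a` and `visitor_b` hash to the same value, which is why a scraper that always reports identical attributes is easy to recognize across sessions.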

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching to maintain persistence.
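
The two rotation styles in the list above (a new IP per request, or one IP held for a whole session) can be sketched as follows. The class name and the proxy URLs are placeholders, not tied to any provider; the only outside convention assumed is the `{"http": ..., "https": ...}` mapping that the `requests` library accepts for its `proxies=` argument.

```python
from itertools import cycle


class ProxyRotator:
    """Hand out proxies round-robin; optionally pin one proxy per session."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)
        self._sessions = {}

    def next_proxy(self):
        """Return the next proxy in the rotation (per-request switching)."""
        return next(self._pool)

    def proxy_for_session(self, session_id):
        """Return the same proxy for every call with the same session id."""
        if session_id not in self._sessions:
            self._sessions[session_id] = next(self._pool)
        return self._sessions[session_id]

    @staticmethod
    def as_requests_proxies(proxy_url):
        """Format a proxy URL as the mapping used by requests' `proxies=`."""
        return {"http": proxy_url, "https": proxy_url}
```

Session pinning matters when a site ties cookies or a cart to an IP address: switching mid-session would look more suspicious than staying put.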

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use headless browsers like Puppeteer or Playwright to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.
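
Random time delays are the simplest of these to add. A minimal sketch, assuming a one-to-four second window is acceptable for the target site (the bounds and the helper names are illustrative):

```python
import random
import time


def human_delay(min_seconds=1.0, max_seconds=4.0, rng=random):
    """Pick a random pause length between min_seconds and max_seconds."""
    if min_seconds < 0 or max_seconds < min_seconds:
        raise ValueError("require 0 <= min_seconds <= max_seconds")
    return rng.uniform(min_seconds, max_seconds)


def paced(items, min_seconds=1.0, max_seconds=4.0, sleep=time.sleep, rng=random):
    """Yield items one at a time, pausing a random interval between them."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(human_delay(min_seconds, max_seconds, rng))
        yield item
```

Wrapping a list of URLs in `paced(urls)` keeps the fetching loop itself unchanged while spacing the requests out.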

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
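
Extracting data from network requests usually means reading the JSON that the page itself fetches. The sketch below flattens such a payload into rows; the payload shape (`categories`, `items`, `title`, `name`, `price`) is hypothetical, so the keys need to be adjusted to whatever the real response contains.

```python
import json


def extract_menu_items(payload_text):
    """Flatten a JSON menu payload into (category, name, price) tuples.

    The payload shape used here is hypothetical; inspect the real response
    in the browser's network tab and adjust the keys accordingly.
    """
    data = json.loads(payload_text)
    rows = []
    for category in data.get("categories", []):
        for item in category.get("items", []):
            rows.append((category.get("title"), item.get("name"), item.get("price")))
    return rows


sample = '{"categories": [{"title": "Pizza", "items": [{"name": "Margherita", "price": 9.5}]}]}'
```

Working from structured JSON tends to be more stable than parsing rendered HTML, because layout changes often leave the underlying data format untouched.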

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.
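
Staying under an API's rate limit can be handled on the client side. The sketch below spaces calls evenly, assuming the limit is expressed as calls per minute; the class name is illustrative, and the actual limit has to come from the provider's documentation.

```python
import time


class MinIntervalLimiter:
    """Block so that calls are spaced at least `interval` seconds apart."""

    def __init__(self, calls_per_minute, clock=time.monotonic, sleep=time.sleep):
        if calls_per_minute <= 0:
            raise ValueError("calls_per_minute must be positive")
        self.interval = 60.0 / calls_per_minute
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Sleep just long enough to respect the interval, then record the call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now
```

Calling `limiter.wait()` immediately before each API request is enough; the clock and sleep functions are injectable only so the behavior can be tested without real waiting.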

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
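
Respecting robots.txt can be automated with Python's standard library. The sketch below checks URLs against a set of rules; the rules and the `menu-research-bot` user agent are made-up examples, and a real scraper would load the site's actual robots.txt instead.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt, user_agent):
    """Return a function that reports whether `user_agent` may fetch a URL."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return lambda url: parser.can_fetch(user_agent, url)


# Example rules only; not copied from any real site.
example_rules = """\
User-agent: *
Disallow: /checkout/
Allow: /
"""
may_fetch = build_robots_checker(example_rules, "menu-research-bot")
```

Running every candidate URL through `may_fetch` before requesting it keeps the policy check in one place.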

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using XPath or CSS selectors keyed to stable attributes instead of fixed positions in the HTML.

Implementing fallback scraping techniques in case of site modifications.
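
A fallback strategy can be as simple as trying several selectors in order. The sketch below uses the standard library's ElementTree, which only handles well-formed markup and a limited XPath subset, so real pages would normally need a dedicated HTML parser. The selectors and sample snippets are hypothetical.

```python
import xml.etree.ElementTree as ET


def first_match(root, xpaths):
    """Return the text of the first element matched by any XPath, in order."""
    for xpath in xpaths:
        element = root.find(xpath)
        if element is not None and element.text:
            return element.text.strip()
    return None


# Primary selector first, then fallbacks for older or alternative layouts.
PRICE_XPATHS = [
    ".//span[@data-testid='item-price']",
    ".//span[@class='price']",
    ".//div[@class='menu-item']/span",
]

old_layout = ET.fromstring("<div class='menu-item'><span class='price'>$9.50</span></div>")
new_layout = ET.fromstring("<div><span data-testid='item-price'> $10.00 </span></div>")
```

When every selector fails, `first_match` returns `None`, which is a useful signal to log: it usually means the site layout changed and the selector list needs updating.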

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

Uber Eats, DoorDash, and Just Eat present significant challenges for menu data scraping, mainly because of their advanced bot detection systems. Nevertheless, by applying IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution, augmented with AI tools, businesses can scrape valuable data without triggering anti-scraping measures.

If you need an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, along with awareness of recent trends in web scraping, will keep food delivery data collection successful well into the future.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats#ScrapingMenuDatafromDoorDash#ScrapingMenuDatafromJustEat#ScrapingforFoodDeliveryData

0 notes

Text

Advanced Python Training: Master High-Level Programming with Softcrayons

Advanced python training | Advanced python course | Advanced python training institute

In today's tech-driven world, knowing Python has become critical for students and professionals in data science, AI, machine learning, web development, and automation. While fundamental Python offers a strong foundation, true mastery comes from diving deeper into complex concepts. That’s where Advanced Python training at Softcrayons Tech Solution plays a vital role. Whether you're a Python beginner looking to level up or a developer seeking specialized expertise, our advanced Python training in Noida, Ghaziabad, and Delhi NCR offers the perfect path to mastering high-level Python programming.

Why Advanced Python Training Is Essential in 2025

Python continues to rule the programming world due to its flexibility and ease of use. However, fundamental knowledge is no longer sufficient in today’s competitive business landscape. Companies are actively seeking professionals who can apply advanced Python principles in real-world scenarios. This is where Advanced Python training becomes essential, equipping learners with the practical skills and deep understanding needed to meet modern industry demands.

Our Advanced Python Training Course is tailored to make you job-ready. It’s ideal for professionals aiming to:

Build scalable applications

Automate complex tasks

Work with databases and APIs

Dive into data analysis and visualization

Develop back-end logic for web and AI-based platforms

This course covers high-level features, real-world projects, and practical coding experience that employers demand.

Why Choose Softcrayons for Advanced Python Training?

Softcrayons Tech Solution is one of the best IT training institutes in Delhi NCR, with a proven track record in delivering job-oriented, industry-relevant courses. Here’s what sets our Advanced Python Training apart:

Expert Trainers

Learn from certified Python experts with years of industry experience. Our mentors not only teach you advanced syntax but also guide you through practical use cases and problem-solving strategies.

Real-Time Projects

Gain hands-on experience with live projects in automation, web scraping, data manipulation, GUI development, and more. This practical exposure is what makes our students stand out in interviews and job roles.

Placement Assistance

We provide 100% placement support through mock interviews, resume building, and company tie-ups. Many of our learners are now working with top MNCs across India.

Flexible Learning Modes

Choose from online classes, offline sessions in Noida/Ghaziabad, or hybrid learning formats, all designed to suit your schedule.

Course Highlights of Advanced Python Training

Our course is structured to provide a comprehensive learning path from intermediate to advanced level. Some of the major modules include:

Object-Oriented Programming (OOP)

Understand the principles of OOP including classes, inheritance, polymorphism, encapsulation, and abstraction. Apply these to real-world applications to write clean, scalable code.

File Handling & Exception Management

Learn how to manage files effectively and handle different types of errors using try-except blocks, custom exceptions, and best practices in debugging.
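As a rough illustration of the ideas named in this module (the course's own exercises are not shown in this post), the sketch below reads a file inside a try-except block and re-raises problems as a custom exception. The names `ConfigError` and `read_port` are hypothetical.

```python
import os
import tempfile


class ConfigError(Exception):
    """Custom exception for problems with a settings file (hypothetical name)."""


def read_port(path):
    """Read an integer port number from a one-line text file."""
    try:
        with open(path, encoding="utf-8") as handle:
            text = handle.read().strip()
        return int(text)
    except FileNotFoundError as exc:
        raise ConfigError(f"settings file not found: {path}") from exc
    except ValueError as exc:
        raise ConfigError(f"port is not a number: {text!r}") from exc


# Usage: write a temporary file, read it back, then try a missing file.
with tempfile.TemporaryDirectory() as folder:
    good_path = os.path.join(folder, "port.txt")
    with open(good_path, "w", encoding="utf-8") as handle:
        handle.write("8080\n")
    port = read_port(good_path)

    try:
        read_port(os.path.join(folder, "missing.txt"))
        error_message = None
    except ConfigError as exc:
        error_message = str(exc)
```

Wrapping the built-in errors in one custom type lets calling code catch a single exception while `from exc` keeps the original cause in the traceback.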

Iterators & Generators

Master the use of Python’s built-in iterators and create your own generators for memory-efficient coding.
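To make the memory-efficiency point concrete, here is a small sketch with one generator function and one hand-written iterator class. The names `chunked` and `Countdown` are illustrative only and not taken from the course material.

```python
def chunked(items, size):
    """Yield successive lists of `size` items, one chunk at a time."""
    chunk = []
    for item in items:
        chunk.append(item)
        if len(chunk) == size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, possibly shorter, chunk
        yield chunk


class Countdown:
    """A hand-written iterator that counts down from `start` to 1."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration
        value = self.current
        self.current -= 1
        return value


chunks = list(chunked(range(7), 3))
countdown = list(Countdown(3))
```

The generator only ever holds one chunk in memory, which is why this style suits large files or long result sets.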

Decorators & Lambda Functions

Explore advanced function concepts like decorators, closures, and anonymous functions that allow for concise and dynamic code writing.
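A short sketch can show how these three ideas fit together: a decorator that wraps a function, a closure that remembers a value, and a lambda as the anonymous function. The names `count_calls`, `make_multiplier`, and `greet` are hypothetical examples.

```python
import functools


def count_calls(func):
    """Decorator that records how many times `func` has been called."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


def make_multiplier(factor):
    """Closure: the returned lambda remembers `factor`."""
    return lambda value: value * factor


@count_calls
def greet(name):
    return f"Hello, {name}"


greet("Asha")
greet("Ravi")
triple = make_multiplier(3)
```

`functools.wraps` keeps the wrapped function's name and docstring intact, which matters once decorated functions show up in logs or debuggers.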

Working with Modules & Packages

Understand how to build and manage large-scale projects with custom packages, modules, and Python libraries.

Database Connectivity

Connect Python with MySQL, SQLite, and other databases. Perform CRUD operations and work with data using Python’s DB-API.
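The CRUD cycle mentioned here can be sketched with the standard library's `sqlite3` module, which follows Python's DB-API. The table and column names below are made up for illustration, and an in-memory database is used so nothing is written to disk.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, score INTEGER)"
)

# Create: parameter placeholders (?) keep values separate from the SQL text.
conn.execute("INSERT INTO students (name, score) VALUES (?, ?)", ("Asha", 82))
conn.execute("INSERT INTO students (name, score) VALUES (?, ?)", ("Ravi", 74))

# Update
conn.execute("UPDATE students SET score = ? WHERE name = ?", (90, "Asha"))

# Delete
conn.execute("DELETE FROM students WHERE name = ?", ("Ravi",))
conn.commit()

# Read
rows = conn.execute("SELECT name, score FROM students ORDER BY id").fetchall()
conn.close()
```

A MySQL driver would follow the same connect, execute, commit pattern, though its placeholder style and connection arguments differ from SQLite's.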

Web Scraping with BeautifulSoup & Requests

Build web crawlers to extract data from websites using real-time scraping techniques.

Introduction to Frameworks

Get a basic introduction to popular frameworks like Django and Flask to understand how Python powers modern web development.

Who Can Join Advanced Python Training?

This course is ideal for:

IT graduates or B.Tech/MCA students

Working professionals in software development

Aspirants of data science, automation, or AI

Anyone with basic Python knowledge seeking specialization

Prerequisite: Basic understanding of Python programming. If you're new, we recommend starting with our Beginner Python Course before moving to advanced topics.

Tools & Technologies Covered

Throughout the Advanced Python Training at Softcrayons, you will gain hands-on experience with:

Python 3.x

PyCharm / VS Code

Git & GitHub

MySQL / SQLite

Jupyter Notebook

Web scraping libraries (BeautifulSoup, Requests)

JSON, API Integration

Virtual environments and pip

Career Opportunities After Advanced Python Training

After completing this course, you will be equipped to take up roles such as:

Python Developer

Data Analyst

Automation Engineer

Backend Developer

Web Scraping Specialist

API Developer

AI/ML Engineer (with additional learning)

Python is among the top-paying programming languages today. With the right skills, you can easily earn a starting salary of ₹4–7 LPA, which can rise significantly with experience and expertise.

Certification & Project Evaluation

Softcrayons Tech Solution will provide you with a globally recognized Advanced Python Training certificate once you complete the course. In addition, your performance in capstone projects and assignments will be assessed to ensure that you are industry ready.

Final Words

Python is more than a beginner's language; it's an effective tool for developing complex software solutions. Enrolling in the Softcrayons Advanced Python training course is about more than studying; it also prepares you for a job in a high-demand, high-growth field. Take the next step to becoming a Python master. Join Softcrayons today to turn your potential into performance. Contact us

0 notes

Text

How Can I Use Programmatic SEO to Launch a Niche Content Site?

Launching a niche content site can be both exciting and rewarding—especially when it's done with a smart strategy like programmatic SEO. Whether you're targeting a hyper-specific audience or aiming to dominate long-tail keywords, programmatic SEO can give you an edge by scaling your content without sacrificing quality. If you're looking to build a site that ranks fast and drives passive traffic, this is a strategy worth exploring. And if you're unsure where to start, a professional SEO agency Markham can help bring your vision to life.

What Is Programmatic SEO?

Programmatic SEO involves using automated tools and data to create large volumes of optimized pages—typically targeting long-tail keyword variations. Instead of manually writing each piece of content, programmatic SEO leverages templates, databases, and keyword patterns to scale content creation efficiently.

For example, a niche site about hiking trails might use programmatic SEO to create individual pages for every trail in Canada, each optimized for keywords like “best trail in [location]” or “hiking tips for [terrain].”
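The keyword patterns in that example can be expanded mechanically. The following is a minimal sketch, assuming the patterns use `{name}` placeholders; the `expand` helper and the sample locations and terrains are hypothetical.

```python
from itertools import product


def expand(pattern, **variables):
    """Fill `pattern` with every combination of the given variable values."""
    names = list(variables)
    keywords = []
    for combo in product(*(variables[name] for name in names)):
        keywords.append(pattern.format(**dict(zip(names, combo))))
    return keywords


# Sample values are invented for illustration.
keywords = expand("best trail in {location}", location=["Banff", "Whistler"])
keywords += expand("hiking tips for {terrain}", terrain=["rocky terrain", "snow"])
```

Each generated keyword would then map to one page, so the size of the variable lists directly controls how many pages the site ends up with.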

Steps to Launch a Niche Site Using Programmatic SEO

1. Identify Your Niche and Content Angle

Choose a niche that:

Has clear search demand

Allows for structured data (e.g., locations, products, how-to guides)

Has low to medium competition

Examples: electric bike comparisons, gluten-free restaurants by city, AI tools for writers.

2. Build a Keyword Dataset

Use SEO tools (like Ahrefs, Semrush, or Google Keyword Planner) to extract long-tail keyword variations. Focus on "X in Y" or "best [type] for [audience]" formats. If you're working with an SEO agency Markham, they can help with in-depth keyword clustering and search intent mapping.

3. Create Content Templates

Build templates that can dynamically populate content with variables like location, product type, or use case. A content template typically includes:

Intro paragraph

Keyword-rich headers

Dynamic tables or comparisons

FAQs

Internal links to related pages
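A template of this kind might look like the sketch below, which uses the standard library's `string.Template` to fill a heading, an intro paragraph, and internal links from one data row. The template text, field names, and sample row are assumptions made for illustration, loosely based on the gluten-free restaurants example above.

```python
from string import Template

PAGE_TEMPLATE = Template(
    "<h1>Best $topic in $city</h1>\n"
    "<p>We compared $count options for $topic in $city.</p>\n"
    "<p>Related pages: $related</p>"
)


def render_page(row, related_pages):
    """Fill the template from one data row plus a list of (url, label) links."""
    links = ", ".join(
        f'<a href="{url}">{label}</a>' for url, label in related_pages
    )
    return PAGE_TEMPLATE.substitute(
        topic=row["topic"], city=row["city"], count=row["count"], related=links
    )


# Hypothetical data row and related page.
row = {"topic": "gluten-free restaurants", "city": "Toronto", "count": 12}
page = render_page(row, [("/gluten-free-restaurants-ottawa/", "Ottawa")])
```

`substitute` raises an error when a variable is missing, which helps catch incomplete rows before a thin or broken page is published.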

4. Source and Structure Your Data

Use public datasets, APIs, or custom scraping to populate your content. Clean, accurate data is the backbone of programmatic SEO.

5. Automate Page Generation

Use platforms like Webflow (with CMS collections), WordPress (with custom post types), or even a headless CMS like Strapi to automate publishing. If you’re unsure about implementation, a skilled SEO agency Markham can develop a custom solution that integrates data, content, and SEO seamlessly.

6. Optimize for On-Page SEO

Every programmatically created page should include:

Title tags and meta descriptions with dynamic variables

Clean URL structures (e.g., /tools-for-freelancers/)

Internal linking between related pages

Schema markup (FAQ, Review, Product)
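The checklist above could be generated per page along these lines. This is a sketch only: `slugify` and `page_metadata` are hypothetical helpers, the title and description wording is invented, and the FAQ markup follows the schema.org `FAQPage` structure.

```python
import json
import re


def slugify(text):
    """Lowercase `text` and join its alphanumeric runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", text.lower()))


def page_metadata(topic, audience, faqs):
    """Build title, URL, meta description, and FAQ JSON-LD for one page."""
    faq_schema = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return {
        "title": f"Best {topic} for {audience}",
        "url": f"/{slugify(topic)}-for-{slugify(audience)}/",
        "meta_description": f"Compare the best {topic.lower()} for {audience.lower()}.",
        "json_ld": json.dumps(faq_schema, indent=2),
    }


meta = page_metadata(
    "Tools", "Freelancers", [("Are these tools free?", "Some offer free tiers.")]
)
```

The JSON-LD string would normally be embedded in the page inside a `script` tag of type `application/ld+json`.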

7. Track, Test, and Improve

Once live, monitor your pages via Google Search Console. Use A/B testing to refine titles, layouts, and content. Focus on improving pages with impressions but low click-through rates (CTR).
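Finding pages with impressions but low CTR is easy to automate once the performance data is exported. The sketch below assumes rows with `page`, `impressions`, and `clicks` fields; the thresholds and sample numbers are arbitrary illustration values, not recommendations.

```python
def low_ctr_pages(rows, min_impressions=500, max_ctr=0.02):
    """Return (page, ctr) pairs with enough impressions but a CTR below `max_ctr`."""
    flagged = []
    for row in rows:
        if row["impressions"] < min_impressions:
            continue  # too little data to judge this page
        ctr = row["clicks"] / row["impressions"]
        if ctr < max_ctr:
            flagged.append((row["page"], round(ctr, 4)))
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical sample rows.
rows = [
    {"page": "/a/", "impressions": 1000, "clicks": 5},
    {"page": "/b/", "impressions": 2000, "clicks": 100},
    {"page": "/c/", "impressions": 100, "clicks": 0},
    {"page": "/d/", "impressions": 800, "clicks": 12},
]
candidates = low_ctr_pages(rows)
```

The pages returned first have the weakest CTR, so they are natural candidates for title and meta description tests.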

Why Work with an SEO Agency Markham?

Executing programmatic SEO at scale requires a mix of SEO strategy, web development, content structuring, and data management. A professional SEO agency Markham brings all these capabilities together, helping you:

Build a robust keyword strategy

Design efficient, scalable page templates

Ensure proper indexing and crawlability

Avoid duplication and thin content penalties

With local expertise and technical know-how, they help you launch faster, rank better, and grow sustainably.

Final Thoughts

Programmatic SEO is a powerful method to launch and scale a niche content site—if you do it right. By combining automation with strategic keyword targeting, you can dominate long-tail search and generate massive organic traffic. To streamline the process and avoid costly mistakes, partner with an experienced SEO agency Markham that understands both the technical and content sides of SEO.

Ready to build your niche empire? Programmatic SEO could be your best-kept secret to success

0 notes

Text

Beyond the Books: Real-World Coding Projects for Aspiring Developers

Arya College of Engineering & I.T. is one of the best colleges in Jaipur. Transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Java (Android) or Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that recognizes faces in images or video streams using OpenCV and Python. This project explores computer vision and deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.

0 notes

Text

Scrape Smarter with the Best Google Image Search APIs

Looking to enrich your applications or datasets with high-quality images from the web? The Real Data API brings you the best Google Image Search scraping solutions—designed for speed, accuracy, and scale.

📌 Key Highlights:

🔍 Extract relevant images based on keywords, filters & advanced queries

⚙️ Integrate seamlessly into AI/ML pipelines and web applications

🧠 Ideal for eCommerce, research, real estate, marketing & visual analytics

💡 Structured output with metadata, image source links, alt-text & more

🚀 Scalable and customizable APIs to suit your unique business use case

From product research to content creation, image scraping plays a pivotal role in automation and insight generation. 📩 Contact us: [email protected]

0 notes

Text

ScrapingBee vs ScraperAPI vs ScrapingDog – Which One’s Right for You?

Compare the top three web scraping APIs and find out which one offers the best balance of speed, price, and success rates. A must-read for developers and data analysts!

0 notes

Text

Web scraping and digital marketing are becoming more closely entwined, with more professionals harnessing tools to gather the data they need to optimize their efforts. Here is a look at why this state of affairs has come about and how web scraping can be achieved effectively and ethically.

The Basics

The intention of a typical web scraping session is to harvest information from other sites through the use of APIs that are widely available today. You can conduct web scraping with Python and a few other programming languages, so it is a somewhat technical process on the surface. However, there are software solutions available which aim to automate and streamline this in order to meet the needs of less tech-savvy users. Through the use of public APIs, it is perfectly legitimate and above-board to scrape sites and services in order to extract the data that you need.

The Benefits

From a marketing perspective, data is a hugely significant asset that can be used to shape campaigns, consider SEO options for client sites, assess target audiences to uncover the best strategies for engaging them, and much more. While you could find and extract data manually, this is an incredibly time-consuming process as well as being tedious for the person tasked with it. Conversely, with the assistance of web scraping solutions, valuable and, most importantly, actionable information can be uncovered and parsed as needed in a fraction of the time.

The Uses

To appreciate why web scraping has risen to prominence in a digital marketing context, it is worth looking at how it can be used by marketers to reach their goals. Say, for example, you need to find out more about the prospective users of a given product or service, and all you have is a large list of their email addresses provided as part of your mailing list. This is a good starting point, but addresses alone are not going to give you any real clue of what factors define each individual.

Web scraping through APIs will allow you to build a far better picture of these users based on the rest of their publicly available online presence. You can then leverage this data to create bespoke marketing messaging that is tailored to users and treats them uniquely, rather than as a homogeneous group. The same tactics can be applied to a range of other circumstances, such as monitoring prices on a competing e-commerce site, generating leads to win over new customers, and much more besides.

The Challenges

Collating data from third-party sites automatically is not always straightforward, in part because many sites seek to prevent automated systems from doing this. There are also ethical issues to consider, and it is generally better to only use information that is public to fuel your marketing efforts, or else customers and clients could feel like they are being stalked. Even with all this in mind, there are ample opportunities to make effective use of web scraping for digital marketing in a way that will benefit both marketers and clients alike.

0 notes

Text

Introduction

In the evolving digital shopping world, consumers heavily depend on price comparisons to make well-informed purchasing choices. With grocery platforms such as Blinkit, Zepto, Instamart, and Big Basket offering varying price points for identical products, identifying the best deals can be complex.

This is where Web Scraping Techniques play a crucial role, automating the process of tracking and analyzing prices across multiple platforms. Whether you are a consumer seeking savings or a retailer evaluating competitor pricing, Web Scraping Grocery Data For Price Comparison provides a highly effective solution.

Why Compare Grocery Prices?

Comparing grocery prices across multiple platforms is essential for consumers and businesses to make informed purchasing decisions, stay competitive, and optimize costs.

Here's why it matters:

Cost Savings for Consumers

Business Competitive Analysis

Data-Driven Purchasing Decisions

Market Insights for Analysts

Challenges in Scraping Grocery Prices

Extracting Grocery Prices from online platforms presents multiple technical hurdles due to website complexities, anti-scraping measures, and frequent structural changes. Efficient solutions require adaptive techniques to ensure accuracy and scalability.

Dynamic Website Content

Anti-Scraping Measures

Frequent Website Updates

Scalability and Data Volume

Benefits of Web Scraping Grocery Data For Price Comparison

Web scraping grocery data provides businesses and consumers with real-time, accurate, and automated insights into price fluctuations across multiple platforms, enabling more intelligent purchasing and pricing strategies.

Track Grocery Prices In Real-Time

Saves Time and Effort

Data-Backed Decision Making

Historical Price Trends

Case Study: Web Scraping in Grocery Price Comparison

To showcase the effectiveness of Web Scraping Grocery Data For Price Comparison, we conducted a month-long study tracking grocery prices across multiple platforms.

1. Data Collection Process

A custom-built Grocery Prices Tracker was designed to extract essential pricing data, including product names, categories, prices, and discounts from leading grocery platforms:

Blinkit: Implemented BeautifulSoup and Selenium to handle JavaScript-rendered content efficiently.

Zepto: Utilized Selenium to extract grocery pricing data dynamically.

Instamart: Leveraged API requests and browser automation for seamless data retrieval.

Big Basket: Employed a combination of BeautifulSoup and Selenium to capture dynamically loaded content.

2. Key Findings

The analysis revealed critical insights into grocery pricing patterns across platforms:

Price Variability: Up to 15% price difference was observed for everyday grocery items across different platforms.

Discount Trends: Big Basket maintained consistent discounts, while Blinkit frequently introduced flash sales, influencing short-term pricing dynamics.

Hidden Charges: Additional costs, particularly delivery fees, played a major role in determining consumers' final purchase price.

Best Savings: Over one month, Zepto emerged as the most cost-effective platform for grocery shopping.
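The post does not state how the price difference was calculated. One common definition, shown here as an assumption, is the gap between the most expensive and cheapest platform relative to the cheapest. The prices below are made-up illustration values, not data from the study.

```python
def price_spread(prices):
    """Return (cheapest, priciest, spread_percent) for one product's prices."""
    cheapest = min(prices, key=prices.get)
    priciest = max(prices, key=prices.get)
    spread = (prices[priciest] - prices[cheapest]) / prices[cheapest] * 100
    return cheapest, priciest, round(spread, 1)


# Hypothetical prices for the same item on each platform.
sample_prices = {
    "Blinkit": 115.0,
    "Zepto": 100.0,
    "Instamart": 110.0,
    "Big Basket": 108.0,
}
result = price_spread(sample_prices)
```

Because delivery fees affected the final cost in the findings above, a fuller comparison would add each platform's fee to the price before computing the spread.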

3. Business Applications

The extracted insights offer valuable applications for various stakeholders:

Retailers: Optimize pricing strategies based on real-time competitive data.

Consumers: Make informed decisions by identifying the most budget-friendly grocery platform.

Market Analysts: Track Grocery Prices and emerging trends across Blinkit, Zepto, Instamart, and Big Basket, enabling data-driven market predictions.

This analysis provides a data-backed approach to understanding grocery pricing strategies, offering actionable insights for businesses and consumers.

Key Tools & Technologies for Grocery Price Scraping

Developing a robust Grocery Price Comparison system requires a well-structured approach and the correct set of tools. Below are the key tools & technologies that play a crucial role in ensuring accurate and efficient data extraction:

Python: Serves as the core programming language for automating the web scraping process and handling data extraction efficiently.

BeautifulSoup: A widely used library that facilitates parsing HTML and XML documents, enabling seamless data retrieval from web pages.

Scrapy: A high-performance web scraping framework that provides structured crawling, data processing, and storage capabilities for large-scale scraping projects.

Selenium: Essential for scraping websites that rely heavily on JavaScript by simulating human interactions and extracting dynamically loaded content.

Proxies & VPNs: Helps maintain anonymity and prevent IP bans when scraping large-scale data across multiple sources.

Headless Browsers: Enables automated interaction with dynamic websites while optimizing resource usage by running browsers without a graphical interface.

Businesses can efficiently extract and compare grocery pricing data by leveraging these technologies, ensuring competitive market insights and informed decision-making.

Step-by-Step Guide to Scraping Grocery Prices

A Step-by-Step Guide to Scraping Grocery Prices provides a structured approach to extracting pricing data from various online grocery platforms. This process involves selecting the appropriate tools, handling dynamic content, and storing the extracted information in a structured format for analysis.

1. Scraping Blinkit Grocery Prices

Identify the product categories and corresponding URLs for targeted scraping.

Utilize BeautifulSoup for static web pages or Selenium to handle interactive elements.

Manage AJAX requests to extract dynamically loaded content effectively.

Store the extracted data in CSV, JSON, or a database for easy access and analysis.
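The storage step can be sketched with the standard library alone. The record fields below are assumptions based on the data points the post mentions (product names, prices, discounts), and the sample rows are invented.

```python
import csv
import io
import json

FIELDS = ["platform", "product", "price", "discount_pct"]

# Hypothetical scraped records.
records = [
    {"platform": "Blinkit", "product": "Milk 1L", "price": 64.0, "discount_pct": 5},
    {"platform": "Blinkit", "product": "Bread", "price": 40.0, "discount_pct": 0},
]


def to_csv(rows):
    """Serialize rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to a JSON array."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```

To write to disk instead, the same writer can be pointed at a file opened with `newline=""`, as the `csv` module documentation recommends.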

2. Extracting Zepto Grocery Prices

Leverage Selenium to interact with webpage elements and navigate through the website.

Implement wait times to ensure content is fully loaded before extraction.

Structure the extracted data efficiently, including product names, prices, and other key attributes.
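The wait step above is essentially a polling loop with a deadline. Selenium ships its own explicit waits for this purpose; the sketch below is a library-free stand-in to show the idea, with a fake `products_loaded` check in place of a real page.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Stand-in for a page whose product list appears on the third check.
attempts = {"count": 0}


def products_loaded():
    attempts["count"] += 1
    return ["Milk 1L", "Bread"] if attempts["count"] >= 3 else []


products = wait_until(products_loaded, timeout=2.0, interval=0.01)
```

Waiting on a condition rather than sleeping for a fixed time keeps the scraper fast when pages load quickly and patient when they do not.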

3. Scraping Instamart Grocery Prices

Use Selenium to manage dynamically changing elements.

Extract product names, prices, and discount information systematically.

Implement headless browsers to enable large-scale automation while minimizing resource consumption.

4. Web Scraping Big Basket Grocery Prices

Apply BeautifulSoup to extract data from static pages efficiently.

Utilize Selenium to handle dynamically loaded product details.

Store and organize extracted product information in a structured manner for further processing.

This guide provides a comprehensive roadmap for efficiently scraping grocery price data from various platforms, ensuring accuracy and scalability in data collection.

Automating the Process

To track grocery prices continuously, schedule the scraper with cron jobs (Linux) or Task Scheduler (Windows). For example, this crontab entry runs it every 6 hours:

# Run scraper every 6 hours
0 */6 * * * /usr/bin/python3 /path_to_script.py
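Where cron is not available, a long-running Python process could compute the same schedule itself. The sketch below mirrors the `0 */6 * * *` pattern (minute 0 of hours 0, 6, 12, and 18); `next_run` is a hypothetical helper and not part of the original scraper.

```python
from datetime import datetime, timedelta


def next_run(now, every_hours=6):
    """Return the next time strictly after `now` that falls on minute 0
    of an hour divisible by `every_hours`."""
    candidate = now.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    while candidate.hour % every_hours != 0:
        candidate += timedelta(hours=1)
    return candidate


afternoon = next_run(datetime(2025, 1, 1, 13, 20))
overnight = next_run(datetime(2025, 1, 1, 18, 0))
```

A scheduler loop would sleep until the returned time, run the scraper, and then call `next_run` again.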

Conclusion

In today’s fast-paced digital marketplace, Web Scraping Grocery Data For Price Comparison is essential for making informed purchasing and pricing decisions. Automating data extraction allows businesses and consumers to analyze price variations across multiple platforms without manual effort.

With accurate Grocery Price Comparison, shoppers can maximize savings, while retailers can adjust their pricing strategies to stay ahead of competitors. Real-time insights into pricing trends allow businesses to respond quickly to market fluctuations and promotional opportunities.

We offer advanced solutions for Scraping Grocery Prices, ensuring seamless data collection and analysis. Whether you need a custom scraper or large-scale price monitoring, our expertise can help you stay competitive. Contact Retail Scrape today to implement a powerful grocery price-tracking solution!

Source :https://www.retailscrape.com/automate-web-scraping-grocery-data-for-price-comparison.php

Originally Published By https://www.retailscrape.com/

#WebScrapingTechniques#PriceComparisonThroughWebScraping#WebScrapingGroceryData#GroceryPriceComparison#AutomatedPriceTracking#OnlineGroceryDataExtraction#RealTimePriceMonitoring#GroceryMarketAnalytics#CompetitivePricingAnalysis#RetailDataScraping#GroceryEcommerceInsights#ZeptoPriceData#BlinkitDataScraping#BigBasketPriceComparison#InstamartPricingIntelligence#AutomatedDataExtractionTools#GroceryProductDataCollection#DynamicPricingStrategy#APIBasedPriceScraping#DataAutomationForRetail#GroceryIndustryTrends#PriceOptimizationUsingData#ConsumerPricingIntelligence

0 notes

Text

How to Track Restaurant Promotions on Instacart and Postmates Using Web Scraping

Introduction

With the rapid growth of food delivery services, companies such as Instacart and Postmates are constantly promoting their partner restaurants to attract customers. Such promotions range from discounts and free delivery to combo deals and limited-time offers. For restaurants and food businesses, tracking these promotions provides a competitive edge: it helps them adjust their pricing strategies, identify trends, and stay ahead of their competitors.

One of the most effective ways to track promotions is web scraping, an automated way of extracting relevant data from the internet. This article examines how to track restaurant promotions on Instacart and Postmates using web scraping techniques, tools, and best practices.

Why Track Restaurant Promotions?

1. Competitor Research

Identify promotional strategies of competitors in the market.

Compare discount rates across restaurants.

Develop competitive pricing strategies.

2. Consumer Behavior Insights

Understand which kinds of promotions customers use most.

Identify patterns in the days, times, or seasons when discounts apply.

Optimize marketing campaigns based on popular promotions.

3. Distribution Profit Maximization

Determine the optimum timing for restaurant promotions.

Analyze competitors' discounts and adjust your own to reduce costs.

Maximize the return on investment (ROI) of promotional campaigns.

Web Scraping Techniques for Tracking Promotions

Key Data Fields to Extract

To effectively monitor promotions, businesses should extract the following data:

Restaurant Name – Identify which restaurants are offering promotions.

Promotion Type – Discounts, BOGO (Buy One Get One), free delivery, etc.

Discount Percentage – Measure how much customers save.

Promo Start & End Date – Track duration and frequency of offers.

Menu Items Included – Understand which food items are being promoted.

Delivery Charges - Compare free vs. paid delivery promotions.
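The fields listed above map naturally onto a small record type. This is a sketch under assumptions: the class name, attribute names, and sample values are hypothetical and not taken from either platform.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Promotion:
    restaurant_name: str
    promotion_type: str                 # e.g. "discount", "BOGO", "free delivery"
    discount_percent: Optional[float]   # None when the offer is not a percentage
    start_date: date
    end_date: date
    menu_items: List[str] = field(default_factory=list)
    delivery_charge: float = 0.0

    def is_active(self, on):
        """True if the promotion runs on the given date (inclusive)."""
        return self.start_date <= on <= self.end_date

    @property
    def duration_days(self):
        """Number of days the promotion runs, counting both end points."""
        return (self.end_date - self.start_date).days + 1


# Invented sample record.
promo = Promotion(
    restaurant_name="Example Diner",
    promotion_type="discount",
    discount_percent=20.0,
    start_date=date(2025, 3, 1),
    end_date=date(2025, 3, 7),
    menu_items=["Burger", "Fries"],
)
```

Keeping dates as `date` objects rather than strings makes duration and frequency calculations straightforward later on.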

Methods of Extracting Promotional Data

1. Web Scraping with Python

Using Python-based libraries such as BeautifulSoup, Scrapy, and Selenium, businesses can extract structured data from Instacart and Postmates.

2. API-Based Data Extraction

Some platforms provide official APIs that allow restaurants to retrieve promotional data. If available, APIs can be an efficient and legal way to access data without scraping.

3. Cloud-Based Web Scraping Tools

Services like CrawlXpert, ParseHub, and Octoparse offer automated scraping solutions, making data extraction easier without coding.

Overcoming Anti-Scraping Measures

1. Avoiding IP Blocks

Use proxy rotation to distribute requests across multiple IP addresses.

Implement randomized request intervals to mimic human behavior.
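Both points can be sketched as a request plan that assigns each URL a proxy in round-robin order and a randomized pause. No network calls are made here; the proxy addresses, URLs, and delay bounds are placeholders, and a real scraper would pass each proxy and delay to its HTTP client.

```python
import itertools
import random

# Placeholder proxy addresses, not real servers.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def plan_requests(urls, proxies, min_delay=2.0, max_delay=6.0, seed=None):
    """Pair each URL with the next proxy in rotation and a random delay in seconds."""
    rng = random.Random(seed)
    pool = itertools.cycle(proxies)
    return [
        {
            "url": url,
            "proxy": next(pool),
            "delay": round(rng.uniform(min_delay, max_delay), 2),
        }
        for url in urls
    ]


urls = [f"https://example.com/store/{n}" for n in range(4)]
plan = plan_requests(urls, PROXIES, seed=42)
```

Generous delays also reduce load on the target site, which ties in with the ethical considerations discussed later in the article.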

2. Bypassing CAPTCHA Challenges

Use headless browsers like Puppeteer or Playwright.

Leverage CAPTCHA-solving services like 2Captcha.

3. Handling Dynamic Content

Use Selenium or Puppeteer to interact with JavaScript-rendered content.

Scrape API responses directly when possible.

Analyzing and Utilizing Promotion Data

1. Promotional Dashboard Development

Create a real-time dashboard to track ongoing promotions.

Use data visualization tools like Power BI or Tableau to monitor trends.

2. Predictive Analysis for Promotions

Use historical data to forecast future discounts.

Identify peak discount periods and seasonal promotions.

3. Custom Alerts for Promotions

Set up automated email or SMS alerts when competitors launch new promotions.

Implement AI-based recommendations to adjust restaurant pricing.
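Alerting on new competitor promotions comes down to comparing the latest scrape with the previous one. In the sketch below, the record shape and the helper names `new_promotions` and `format_alert` are assumptions, and the actual email or SMS delivery is left out.

```python
def new_promotions(previous, current):
    """Return promotions present in `current` but not in `previous`."""
    seen = {(promo["restaurant"], promo["offer"]) for promo in previous}
    return [
        promo for promo in current
        if (promo["restaurant"], promo["offer"]) not in seen
    ]


def format_alert(promo):
    """Build the alert text for one newly detected promotion."""
    return f"New promotion at {promo['restaurant']}: {promo['offer']}"


# Invented snapshots from two consecutive scrapes.
yesterday = [{"restaurant": "Example Diner", "offer": "20% off"}]
today = [
    {"restaurant": "Example Diner", "offer": "20% off"},
    {"restaurant": "Sample Sushi", "offer": "Free delivery"},
]
alerts = [format_alert(promo) for promo in new_promotions(yesterday, today)]
```

Each alert string could then be handed to whichever email or SMS service the business already uses.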

Ethical and Legal Considerations

Comply with robots.txt guidelines when scraping data.

Avoid excessive server requests to prevent website disruptions.

Ensure extracted data is used for legitimate business insights only.
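Checking robots.txt rules can be automated with Python's standard `urllib.robotparser`. The sketch below parses an inline sample file so it runs offline; the rules, the `promo-tracker` user agent, and the URLs are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

allowed = parser.can_fetch("promo-tracker", "https://example.com/store/promotions")
blocked = parser.can_fetch("promo-tracker", "https://example.com/checkout/cart")
delay = parser.crawl_delay("promo-tracker")
```

In practice the parser would be pointed at the site's live robots.txt with `set_url` and `read`, and the scraper would skip disallowed URLs and pause for the crawl delay between requests.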

Conclusion

Web scraping makes it possible to track restaurant promotions on Instacart and Postmates, so businesses can optimize their pricing strategies, maximize profits, and stay ahead of the competition. With the help of automation, proxies, headless browsing, and AI analytics, businesses can effectively keep track of and respond to the latest promotional trends.

CrawlXpert is a strong provider of automated web scraping services that help restaurants follow promotions and analyze competitors' strategies.

0 notes

Text

Best LinkedIn Lead Generation Tools in 2025

In today’s competitive digital landscape, finding the right tools can make all the difference when it comes to scaling your outreach. Whether you’re a small business owner or part of an in-house marketing team, leveraging advanced platforms will help you target prospects more effectively. If you’re looking to boost your B2B pipeline, integrating the latest solutions, alongside smart LinkedIn advertising Singapore strategies, can supercharge your lead flow.

1. LinkedIn Sales Navigator LinkedIn’s own premium platform remains a top choice for many professionals. It offers: • Advanced lead and company search filters for pinpoint accuracy. • Lead recommendations powered by LinkedIn’s AI to discover new prospects. • InMail messaging and CRM integrations to streamline follow-ups. • Real-time insights and alerts on saved leads and accounts.

2. Dux-Soup Dux-Soup automates connection and outreach workflows, helping you: • Auto-view profiles based on your search criteria. • Send personalized connection requests and follow-up messages. • Export prospect data to your CRM or spreadsheet. • Track interaction history and engagement metrics—all without leaving your browser.

3. Octopus CRM Octopus CRM is a user-friendly LinkedIn extension designed for: • Crafting multi-step outreach campaigns with conditional logic. • Auto-sending connection requests, messages, and profile visits. • Building custom drip sequences to nurture leads over time. • Exporting campaign reports to Excel or Google Sheets for analytics.

4. Zopto

Ideal for agencies and teams, Zopto provides cloud-based automation with:

• Region and industry-specific targeting to refine your list.
• Easy A/B testing of outreach messages.
• A dashboard with engagement analytics and performance benchmarks.
• Team collaboration features to share campaigns and track results.

5. LeadFuze

LeadFuze goes beyond LinkedIn to curate multi-channel lead lists. It offers:

• LinkedIn scraping combined with email and phone data.
• Dynamic list building based on job titles, keywords, and company size.
• Automated email outreach sequences with performance tracking.
• API access for seamless integration with CRMs and sales tools.

6. PhantomBuster

PhantomBuster’s flexible automation platform unlocks custom workflows:

• Pre-built “Phantoms” for LinkedIn searches, views, and message blasts.
• Scheduling and chaining of multiple actions for sophisticated campaigns.
• Data extraction capabilities to gather profile details at scale.
• Webhooks and JSON output for developers to integrate with other apps.
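For readers who want to see what working with JSON output might look like in practice, here is a minimal Python sketch that turns a JSON export of prospect records into CSV text ready for a CRM or spreadsheet import. The field names (`fullName`, `title`, `company`) and the sample records are hypothetical placeholders, not the actual output schema of PhantomBuster or any other tool, so adjust them to whatever your export contains.

```python
import csv
import io
import json

# Hypothetical sample export; real field names depend on the tool you use.
SAMPLE_JSON = """
[
  {"fullName": "Ada Example", "title": "Head of Sales", "company": "Acme Pte Ltd"},
  {"fullName": "Ben Sample", "title": "Marketing Lead", "company": "Globex"},
  {"fullName": "Ada Example", "title": "Head of Sales", "company": "Acme Pte Ltd"}
]
"""


def json_to_csv(raw_json: str, fields: list[str]) -> str:
    """Convert a JSON array of records to CSV text, skipping exact duplicates."""
    records = json.loads(raw_json)
    seen = set()
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    for record in records:
        # Use the selected field values as a de-duplication key.
        key = tuple(record.get(field, "") for field in fields)
        if key in seen:
            continue
        seen.add(key)
        # Missing fields become empty cells instead of raising an error.
        writer.writerow({field: record.get(field, "") for field in fields})
    return buffer.getvalue()


if __name__ == "__main__":
    print(json_to_csv(SAMPLE_JSON, ["fullName", "title", "company"]))
```

De-duplicating before import is a small design choice that helps keep repeated scrapes of the same search from creating duplicate CRM contacts.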

7. Leadfeeder

Leadfeeder uncovers which companies visit your website and marries that data with LinkedIn. It:

• Identifies anonymous web traffic and matches it to LinkedIn profiles.
• Delivers daily email alerts on high-value company visits.
• Integrates with your CRM to enrich contact records automatically.
• Provides engagement scoring to prioritise outreach efforts.

8. Crystal Knows

Personality insights can transform your messaging. Crystal Knows offers:

• Personality reports for individual LinkedIn users.
• Email templates tailored to each prospect’s communication style.
• A Chrome extension that overlays insight cards on LinkedIn profiles.
• Improved response rates through hyper-personalised outreach.

Key Considerations for 2025

When choosing a LinkedIn lead generation tool, keep these factors in mind:

• Compliance & Safety: Ensure the platform follows LinkedIn’s terms and respects user privacy.
• Ease of Integration: Look for native CRM connectors or robust APIs.
• Scalability: Your tool should grow with your outreach volume and team size.
• Analytics & Reporting: Data-driven insights help you refine messaging and targeting.

Integrating with Your Singapore Strategy

For businesses tapping into Asia’s growth markets, combining these tools with LinkedIn advertising campaigns in Singapore unlocks both organic and paid lead channels. By syncing automated outreach with sponsored content, you’ll cover every stage of the buyer journey, from initial awareness to final conversion.

Conclusion

As 2025 unfolds, LinkedIn lead generation continues to evolve with smarter AI, more seamless integrations, and deeper analytics. By selecting the right mix of tools, from Sales Navigator’s native power to specialized platforms like Crystal Knows, you can craft a robust, efficient pipeline. Pair these solutions with targeted LinkedIn advertising tactics in Singapore, and you’ll be well-positioned to capture high-quality leads, nurture them effectively, and drive sustained growth in the competitive B2B arena.
