#Biomedical projects in Matlab

Explore tagged Tumblr posts

Text

Biomedical Engineering Projects Ideas for Final year students

Biomedical engineering project Ideas mixes biology, medicine, and engineering to make new healthcare technologies. There are many exciting project ideas in this field. One idea is developing wearable devices that monitor vital signs like heart rate and blood pressure. These devices can help people keep track of their health in real-time. Another project could focus on creating artificial organs. For example, making a better artificial heart for patients waiting for a transplant. There is also a lot of interest in improving medical imaging technology. Projects could involve developing new ways to take clearer and more detailed pictures of the inside of the body using MRI or ultrasound. At Takeoff Projects, we explore these exciting ideas to make healthcare better and more advanced.

#Bio medical projects#Biomedical Engineering Projects#Medical Electronics Projects#Final Year Biomedical Projects#Biomedical project ideas#Biomedical projects in Matlab#Matlab based biomedical projects

0 notes

Text

MATLAB Assignment Help

1.Hardware-in-the-Loop (HIL) Simulation: Assists in testing control algorithms on physical hardware, critical for fields like automotive and aerospace engineering.

2.Embedded System Code Generation: Helps students generate code from Simulink models to run on microcontrollers or DSPs, essential for IoT and robotics.

3.Multi-Domain Modeling: Integrates systems across electrical, mechanical, and fluid power, useful for automotive and aerospace applications.

4.System Identification: Guides students in estimating parameters from real data, improving model accuracy for biomedical and chemical projects.

5.Cybersecurity in Control Systems: Simulates cyber-attack scenarios to assess control system resilience, relevant for smart infrastructure and critical systems.

Expanded Educational Support

1.Project and Dissertation Help: Full support for designing, testing, and reporting on complex projects.

2.Model Debugging: Assistance with troubleshooting issues in model configuration and simulation diagnostics.

3.Industry Certifications Prep: Helps prepare for certifications like MathWorks’ Certified Simulink Developer.

4.Career-Focused Mentorship: Guidance on applying Simulink skills in real-world roles in engineering and technology.

Complex Project Applications

1.Renewable Energy Optimization: Supports solar, wind, and battery storage simulations.

2.Biomedical Signal Processing: Projects involving real-time ECG/EEG processing or medical device control.

3.Advanced Control Design: Expertise in MPC and adaptive controllers for robotics and autonomous systems.

4 Wireless Communication Systems: Simulations for channel noise, modulation, and protocol testing.

---

Specialized Tools and Libraries

1.Simscape Libraries: Model realistic multi-domain physical systems.

2.AI and Deep Learning: Integrate AI for predictive maintenance and adaptive systems.

3.Control System and Signal Processing Toolboxes: Helps with control tuning and signal analysis.

4.MATLAB Compiler and Code Generation: Converts Simulink models into deployable applications or embedded code.

With industry-experienced tutors, customized support, and hands-on learning, All Assignment Experts ensure students master Simulink for both academic success and career readiness in engineering and tech.

0 notes

Note

So... you commented that you're studying biomedical engineering on a hermitcraft post

Do you like it? Is it a good major?

I am a BME major! Its a bit of a long story cuz im currently finishing my freshman year and ive only been bme for one semester (started as environmental studies but got too bored). Its pretty damn hard but I really like it!

Ive taken statics (physics but nothing moves), all my calculuses (hell but at least im done with them), and computing (coding in matlab).

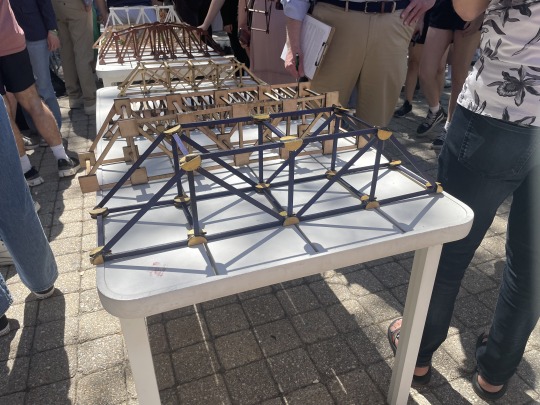

Ive really loved statics. Its mostly an engineering class without buch bio or medical but I knew id chosen the right major when my prof said like 2 sentences about how what we were learning related to bone fractures and i fully started vibrating lmao. Our final project was building a truss and seeing how much load it could carry which was a really cool hands on project and it was rly good for familiarizing me with the makerspace at my school

I birthed her^^^^

Computing has been pretty hard for me because my brain was very much not build for coding, but im doing surprisingly well in the class all things considered. I think its the only comp sci esque class i have to take, and it might not be a major rec at ur school so who knows.

I know that my schedule next year is going to HURT. Because i did t take chem or physics this year I have to figure out how to stack those on top of the normal sophomore classes. I think I'm gonna be ok with it though, because the majority of my course hours for next year are labs, so my schedule looks way more packed than it actually is.

i chose the major because I was missing science in my first semester. I was thinking of going into biology because i really liked AP bio, but i didnt want to go pre-med. Then my mom asked what i was going to do with bio and i had no idea. Then i realized BME would be a pretty much perfect major for me because it's really fluid. I mean you can literally go into bio, medicine, or literally any engineering field.

in the last semester ive found so many resources and opportunities that my school offers for bme and its been just rly fun to get closer w other people in my major.

If i had to give you one piece of advice, it would be to start freshman year as an engineering major if you are interested in that. It is WAY easier to switch out if engineering than to switch into it, and if you dont like it first semester you can change majors and have most of your core credits done already. BME is sick as hell and its crazy how many people major in it. My orthopedic surgeon thats gonna fix my hip was a BME major. My old pediatrician was a BME major. People who work for oil corps were BME majors (please dont work for oil corps). You can literally go into any field with this shit its great.

Ik this is long but im having such a good time with this major and if you have any more questions or want to hear more about any of the classes ive taken please lmk!! College is a bitch and the more you can learn about it beforehand the easier it is to figure out so literally if anyone seeing this wants to know more hmu

#Biomedical Engineering#College advice#kinda#idk man im in finals week so its crazy that i can say this much good shit abt my major#But i really like it what can i say#But GOD would life be easier if i wasnt bored with ES lmao#Also some of this shit might be different for your school cuz i go to a weird lil kinda liberal arts and sciences school in louisiana#With a lot of rich kids#^^thas pretty much all the info you need to figure out the school i go to lmao#yippee roll wave baby#I literally just like bones lmao

0 notes

Note

Hi! First of all, thanks for always answering asks in such a friendly and kind manner. Please feel free to skip this if it's too personal, but I was curious: When and how did you discover software engineering and writing fiction as your interests? Do you see any similarities/interaction between them? And finally, do you have any other topics you're really into (whether or not you've gotten a chance to put the time into them yet), or would you say these are kinda the "big two" for you?

yeah np (❁´◡`❁) it's nice interacting with people who are interested in what I have going on

Ah so writing fiction came first. Think I had a very classic case of "read so many books in middle school" and I kinda wanted to try my hand at writing. My parents were encouraging and believed I had some talent, but I struggled to write anything more than a few pages long. I'd get too hung up on trying to perfect every sentence. I took a swing at fanfic when I was a freshman in high school, and that helped a lot because there are so many footholds already in place for you. Ended up writing something 80,000 words long as a 14 year old.

Since then I mostly have stuck with fanfic as just a fun hobby, but I do like the idea of getting more serious about original work if and when I have the time. In a lot of ways ABoT feels like original work and has helped put me in a position to really consider what it takes to craft something totally original. (Oh, I did have a pretty long-form piece of original work I worked on in high school, but it's not the sort of thing I'd salvage for a true original work project.)

Software was its totally own, other thing. I actually took AP Computer Science my senior year of high school (very first time the class was offered) and the whole course was... kind of a huge mess. Made me think I didn't like software. I went into college knowing I wanted to do something in STEM, but I liked my high school science and math courses pretty evenly so it was a matter of settling on something I saw a career for. So I went into college thinking I was pursuing a biomedical engineering degree.

My college's engineering track consisted of certain core engineering courses we all took, and then split into specialization courses once you'd knocked out the core requirements. Some of those courses were grad-level and needed professor sign-off to sign up for the course. So I was sitting in the engineering building, late sophomore year, waiting for some prof to come back to let me sign off for biomedical-something-or-other, and I was reflecting "hey if I wanna change my major, now's kind of my last chance huh."

So I pulled up the engineering department's webpage on my laptop, which had a bunch of sample schedules which showed the 4 years of courses that, say, a mechanical engineer might take, vs a chemical engineer, vs a civil engineer, etc etc. I went through the mechanical and the chemical and all that going "nah, nah" until I got to the computer engineer sample track and I read through all the courses going "damn if I had time in my schedule I'd love to take that class."

And I finally went "hey wait." Luckily the core engineering classes had contained a course in C, which I very much enjoyed, as well as a lot of courses that made us mess around in Matlab, which is...I guess loosely a coding language, mostly for processing data. And tinkering around in Matlab had been My Thing throughout college, and I really liked that C course. And I realized pretty much all of the college projects which I'd gone above and beyond for my own enjoyment had been, in some capacity, programming projects. And I went "Hey Wait" again and left the building to go reevaluate my future.

Which was a really good decision ultimately! I really like my job as a software engineer and I can absolutely see myself doing it long term.

As for other hobbies I've got a handful of other things. I do a lot of running after being an extremely bad runner most of my life. I'm actually doing a city-sponsored half marathon on Sunday! I do kind of a lot of biking when the weather is nice. I've been cooking vegan for myself for the last like 6-ish months as just something that's been interesting to try my hand at. I pet-sit for friends quite a bit! I really like pets and I'm hoping to get a dog or a cat of my own someday. I want to get good at plants, which has been tough in my current apartment but I'm really hoping to get better at that once I move into my new condo that has much much better lighting and even some balcony space. I'm also an amateur knitter and I've knitted little tiny dog sweaters for dogs I've pet-sat. And I did a whole lot of tutoring and TA'ing back in school which I do miss. I've had fewer opportunities for that kind of thing as an adult, but I've been thinking lately about looking into volunteer opportunities with schools around here.

60 notes

·

View notes

Text

FEA & CFD Based Design and Optimization

Enteknograte use advanced CAE software with special features for mixing the best of both FEA tools and CFD solvers: CFD codes such as Ansys Fluent, StarCCM+ for Combustion and flows simulation and FEA based Codes such as ABAQUS, AVL Excite, LS-Dyna and the industry-leading fatigue Simulation technology such as Simulia FE-SAFE, Ansys Ncode Design Life to calculate fatigue life of Welding, Composite, Vibration, Crack growth, Thermo-mechanical fatigue and MSC Actran and ESI VA One for Acoustics.

Enteknograte is a world leader in engineering services, with teams comprised of top talent in the key engineering disciplines of Mechanical Engineering, Electrical Engineering, Manufacturing Engineering, Power Delivery Engineering and Embedded Systems. With a deep passion for learning, creating and improving how things work, our engineers combine industry-specific expertise, deep experience and unique insights to ensure we provide the right engineering services for your business

Advanced FEA and CFD

Training: FEA & CFD softwares ( Abaqus, Ansys, Nastran, Fluent, Siemens Star-ccm+, Openfoam)

Read More »

Thermal Analysis: CFD and FEA

Thermal Analysis: CFD and FEA Based Simulation Enteknograte’s Engineering team with efficient utilizing real world transient simulation with FEA – CFD coupling if needed, with

Read More »

Multiphase Flows Analysis

Multi-Phase Flows CFD Analysis Multi-Phases flows involve combinations of solids, liquids and gases which interact. Computational Fluid Dynamics (CFD) is used to accurately predict the

Read More »

Multiobjective Optimization

Multiobjective optimization Multiobjective optimization involves minimizing or maximizing multiple objective functions subject to a set of constraints. Example problems include analyzing design tradeoffs, selecting optimal

Read More »

MultiObjective Design and Optimization of TurboMachinery: Coupled CFD and FEA

MultiObjective Design and Optimization of Turbomachinery: Coupled CFD and FEA Optimizing the simulation driven design of turbomachinery such as compressors, turbines, pumps, blowers, turbochargers, turbopumps,

Read More »

MultiBody Dynamics

Coupling of Multibody Dynamics and FEA for Real World Simulation Advanced multibody dynamics analysis enable our engineers to simulate and test virtual prototypes of mechanical

Read More »

Metal Forming Simulation: FEA Design and Optimization

Metal Forming Simulation: FEA Based Design and Optimization FEA (Finite Element Analysis) in Metal Forming Using advanced Metal Forming Simulation methodology and FEA tools such

Read More »

Medical Device

FEA and CFD based Simulation and Design for Medical and Biomedical Applications FEA and CFD based Simulation design and analysis is playing an increasingly significant

Read More »

Mathematical Simulation and Development

Mathematical Simulation and Development: CFD and FEA based Fortran, C++, Matlab and Python Programming Calling upon our wide base of in-house capabilities covering strategic and

Read More »

Materials & Chemical Processing

Materials & Chemical Processing Simulation and Design: Coupled CFD, FEA and 1D-System Modeling Enteknograte’s engineering team CFD and FEA solutions for the Materials & Chemical

Read More »

Marine and Shipbuilding Industry: FEA and CFD based Design

FEA and CFD based Design and Optimization for Marine and Shipbuilding Industry From the design and manufacture of small recreational crafts and Yachts to the

Read More »

Industrial Equipment and Rotating Machinery

Industrial Equipment and Rotating Machinery FEA and CFD based Design and Optimization Enteknograte’s FEA and CFD based Simulation Design for manufacturing solution helps our customers

Read More »

Hydrodynamics CFD simulation, Coupled with FEA for FSI Analysis of Marine and offshore structures

Hydrodynamics CFD simulation, Coupled with FEA for FSI Analysis of Marine and offshore structures Hydrodynamics is a common application of CFD and a main core

Read More »

Fracture and Damage Mechanics: Advanced FEA for Special Material

Fracture and Damage Simulation: Advanced constitutive Equation for Materials behavior in special loading condition In materials science, fracture toughness refers to the ability of a

Read More »

Fluid-Strucure Interaction (FSI)

Fluid Structure Interaction (FSI) Fluid Structure Interaction (FSI) calculations allow the mutual interaction between a flowing fluid and adjacent bodies to be calculated. This is necessary since

Read More »

Finite Element Simulation of Crash Test

Finite Element Simulation of Crash Test and Crashworthiness with LS-Dyna, Abaqus and PAM-CRASH Many real-world engineering situations involve severe loads applied over very brief time

Read More »

FEA Welding Simulation: Thermal-Stress Multiphysics

Finite Element Welding Simulation: RSW, FSW, Arc, Electron and Laser Beam Welding Enteknograte engineers simulate the Welding with innovative CAE and virtual prototyping available in

Read More »

FEA Based Composite Material Simulation and Design

FEA Based Composite Material Design and Optimization: Abaqus, Ansys, Matlab and LS-DYNA Finite Element Method and in general view, Simulation Driven Design is an efficient

Read More »

FEA and CFD Based Simulation and Design of Casting

Finite Element and CFD Based Simulation of Casting Using Sophisticated FEA and CFD technologies, Enteknograte Engineers can predict deformations and residual stresses and can also

Read More »

FEA / CFD for Aerospace: Combustion, Acoustics and Vibration

FEA and CFD Simulation for Aerospace Structures: Combustion, Acoustics, Fatigue and Vibration The Aerospace industry has increasingly become a more competitive market. Suppliers require integrated

Read More »

Fatigue Simulation

Finite Element Analysis of Durability and Fatigue Life: Ansys Ncode, Simulia FE-Safe The demand for simulation of fatigue and durability is especially strong. Durability often

Read More »

Energy and Power

FEA and CFD based Simulation Design to Improve Productivity and Enhance Safety in Energy and Power Industry: Energy industry faces a number of stringent challenges

Read More »

Combustion Simulation

CFD Simulation of Reacting Flows and Combustion: Engine and Gas Turbine Knowledge of the underlying combustion chemistry and physics enables designers of gas turbines, boilers

Read More »

Civil Engineering

CFD and FEA in Civil Engineering: Earthquake, Tunnel, Dam and Geotechnical Multiphysics Simulation Enteknograte, offer a wide range of consulting services based on many years

Read More »

CFD Thermal Analysis

CFD Heat Transfer Analysis: CHT, one-way FSI and two way thermo-mechanical FSI The management of thermal loads and heat transfer is a critical factor in

Read More »

CFD and FEA Multiphysics Simulation

Understand all the thermal and fluid elements at work in your next project. Allow our experienced group of engineers to couple TAITherm’s transient thermal analysis

Read More »

Automotive Engineering

Automotive Engineering: Powertrain Component Development, NVH, Combustion and Thermal simulation Simulation and analysis of complex systems is at the heart of the design process and

Read More »

Aerodynamics Simulation: Coupling CFD with MBD and FEA

Aerodynamics Simulation: Coupling CFD with MBD, FEA and 1D-System Simulation Aerodynamics is a common application of CFD and one of the Enteknograte team core areas

Read More »

Additive Manufacturing process FEA simulation

Additive Manufacturing: FEA Based Design and Optimization with Abaqus, ANSYS and MSC Nastran Additive manufacturing, also known as 3D printing, is a method of manufacturing

Read More »

Acoustics and Vibration: FEA/CFD for VibroAcoustics Analysis

Acoustics and Vibration: FEA and CFD for VibroAcoustics and NVH Analysis Noise and vibration analysis is becoming increasingly important in virtually every industry. The need

Read More »

About Enteknograte

Advanced FEA & CFD Enteknograte Where Scientific computing meets, Complicated Industrial needs. About us. Enteknograte Enteknograte is a virtual laboratory supporting Simulation-Based Design and Engineering

Read More »

1 note

·

View note

Text

University asks

from this blog

How far along are you in your studies? About to start the 4th/5th year of my undergrad program. So close, yet so far lol

How far do you plan on pursuing academia? I want to get my PhD and maybe I’ll do some post-doc work after that?

What made you want to attend university? My mom wasn’t able to attend in her home country and my dad got his Bachelor’s on his dad’s GI Bill. They allowed me to see how there are some limits without it and how education in this country is your ticket to money and living comfortably.

What do you study? Biomedical Engineering + Chemical/Biological Engineering

What do you wish to accomplish by studying at university? The end goal is to contribute to the knowledge in the field and have an impact on the community by inspiring underrepresented youth like me

What do you want to do with your degree? Be a researcher and teach in some capacity

What’s your dream job? Being a researcher and influencer for Latinx youth in STEM

Has university been what you expected it to be? Tbh, I don’t think so even though I didn’t really know what to expect. I’ve learned SO much along the way that no one had the knowledge to tell me when before uni

What’s the most important thing you have learned about yourself at university? That I’m strong af, I can do whatever I want to in life–literally. And not to compare yourself to others

What surprised you the most about university? The types of friends I made, people I met, and places I went bc of opportunities through uni

What classes are you taking this/next semester? For fall 2020, I am taking Chemical Engineering Design 1 (not truly a design class. more like ethics, safety, etc.), Transport Phenomena in Biomedical Eng, Separation Processes, Biochemistry, and an Independent Study.

What has been the most interesting class you’ve taken? This past semester I took a biomedical eng project-based course and it was LIT af, very challenging but projects were super realistic and interesting

What has been your favourite class you’ve taken? Same course as 12^ like it made me realize my true style of learning and working and it was difficult but so so cool

What is your favourite professor like? Haha, same prof as 12 & 13^^ he was laid back, challenging, young and tech-savvy. More than anything, he was there if you just needed someone to talk to. He didn’t ‘bend’ rules but he knew what it’s like to be a student with limited resources and no super-human abilities. Just a cool guy I’d grab a drink with

If you have to write a thesis, what are you going to write it on? I will have to provide a written report for the independent study so I’m gonna ask if it can be like a thesis. It’ll probs be on something like improving multi-modal neuroimaging data fusion using machine learning

What is your weird academic niche? I’m not really sure. Sometimes I feel like I am my own niche? Lmao. I don’t really know how to answer this tbh

What’s your favourite thing about academia? That honesty and integrity are highly valued and people just like wondering about things on a deeper level and bigger scale and have conversations about it

What’s your least favourite thing about academia? That it’s toxic: not as diverse, kinda bureaucratic, research matters more than ability to teach (in the US)

Would you go to a different university if you had to choose again? Yes and no: instead of going to a different uni then transferring to where I am now, I would 100% just have gone to the one I’m at now in the first place

Would you choose a different subject if you had to choose again? I don’t think so

If you couldn’t study the subject you study now, what would you study? Software Engineering or Comp Sci

What is your favourite course style? More applied learning, like projects. Not as exam heavy.

Theoretical or practical? AHHH! Both

Best book you’ve had to read for a course? Numerical Methods in MATLAB for Engineers

Worst book you’ve had to read for a course? Molecular Physical Chemistry for Engineers by Yates and Johnson OMFG LITERAL TRASH, even our instructor hated it

Favourite online resource? YouTube lol– broad but legit the best way to learn ANYTHING

The topic of the best essay you’ve written? I have a problem where everything I wrote in the past I now see as really bad bc my writing is always evolving. Most recent essay–maternal mortality rates in the US and race gaps within

Would you ever consider getting a phd? I am indeed considering haha

Who is doing the most interesting research in your field at the moment? In my research, Vince Calhoun or Danilo Bzdok. In my major, I’m not sure

Do you have any minors? I haven’t declared it officially, but I think I can achieve a math minor my 5th year

What is subject you wish your university taught but doesn’t? I don’t blame them cause it’s just now gaining more popularity, but like a strictly computational biology/biostatistics subject would be amazing

What is an area of your subject you wish your university taught but doesn’t? Same as 31^

The best advice anyone has ever given you about university? Do everything, don’t hold back. An opportunity can come from the smallest of things

Do you care about your grades? Yeah, but it’s not everything

Do you think you study enough? Sometimes I do, sometimes I don’t

How did your attitude towards studying and school change between high school and university? Uni hit me like a truck bc my high school was not the best. I learned to live and breathe studying, how to learn, how to manage my time, and my attitude is more serious and business-minded, but also have crazy fun in the right time and place

What do you do to rewind? Like to unwind? exercise, make music, watch movies, hit the hot tub

Best tip for making friends at university? Be yourself and be honest :)

Are you involved in any clubs/societies/extracurriculars? President for a diversity student org and I do intramural sports!

Have you done or are you planning on doing any internships? I’ve completed one research internship and I hope to do another next summer.

What is an interesting subject that you would never study yourself? Physics probably lol

What has been your favourite thing about university so far? The people, the location of my uni, the learning

What is your plan B career? Work in industry after undergrad, probably in biotech

Do you ever regret your choice of subject or university? Not really

Do you ever regret going to university? Hell no

How do you study? I try to vary it– I’ll read, do a crap ton of practice problems, do a study group with a lot of talking and teaching each other concepts, watch videos from other sources

What do you wish you’d done differently in your first year? Not been a student-athlete

What things do you think you did right in your first year? Was honest with myself

What are your thoughts on Academia? It can be good and bad, and it’s up to us as the next generation to change the bad :)

Strangest university tip you have? Hmmmmm... Don’t assume all your advisers/administration/profs know what you’re truly capable of. (not strange, but I don’t think it’s that common)

3 notes

·

View notes

Video

Creating a successful Podcast with Rasean Hyligar from THE EMBC TV NETWORK on Vimeo.

"Hello. Allow me to introduce myself! My name is Rasean Hyligar. I am a Biomedical Engineer who graduated from UIC in May 2021. My specialty is in 3D CAD modeling and MATLAB programming.

I am proud of my ability to persevere. No. Matter. What. Period! To me, there is no greater joy than seeing a project through to its completion. No matter how long it takes or how little experience I have, I learn and adapt quickly to every scenario and give my absolute best in everything I do!

If you are curious about any projects or publications I have done, See my engineering projects page for more detailed information about my projects… Or you can view my publications here. Also, feel free to contact me. I would love to chat with you.

Also, I am on social media so you can follow me there since all the cool kids are doing it!" Twitter Instagram LinkedIn motivategrindsucceed.com/about/ The Motivate Grind Succeed Podcast has the goal of improving the 4 foundational cornerstones of your life: Faith, Fellowship, Finances, and Fitness, through practical tips and takeaways in every episode.

0 notes

Video

youtube

Brain Tumor Area Detection and Classification Using CNN | Matlab | Biomedical

Reach us For more Topics and Ideas: 9176990090

Google Map Location: https://goo.gl/maps/ky4hwbVToexRFUSVA

Best project center in Chennai 🙂

0 notes

Text

Workshop: Vancouver.StatisticalGenomicsProgramming.Jul30

If you are statistical genomicist or work in evolution and are planning to attend the Joint Statistical Meetings in Vancouver, then there is a short course that may be of interest to you. Drs Ken Lange, Eric Sobel, Hua Zhou and I (UCLA Biomath, Human Genetics and Biostatistics Faculty) will be teaching a Julia Language Statistical Computing course with application to Genomics on Monday 7/30. The course is suitable for students as well as for statistical genomicists. Programming experience in Julia is NOT required. Below is a short description. If anyone has a question they can email me (Janet Sinsheimer - [email protected]). Early registration for JSM ends 5/31 and early registration for the short course ends 6/29. Thanks, Janet Joint Statistical Meetings Course CE-18C Mon, 7/30/2018, 8:30 AM - 5:00 PM Julia Meets Mendel: Algorithms and Software for Modern Genomic Data Analysis (ADDED FEE) - Professional Development Continuing Education Course ASA Instructor(s): Kenneth Lange, UCLA, Janet Sinsheimer, UCLA, Eric Sobel, UCLA, Hua Zhou, UCLA Challenges in statistical genomics and precision medicine are enormous. Datasets are becoming bigger and more varied, demanding complex data structures and integration across multiple biological scales. Analysis pipelines juggle many programs, implemented in different languages, running on different platforms, and requiring different I/O formats. This heterogeneity erects barriers to communication, data exchange, data visualization, biological insight and replication of results. Statisticians spend inordinate time coding/debugging low-level languages instead of creating better methods and interpreting results. The benefits of parallel and distributed computing are largely ignored. The time is ripe for better statistical genomic computing approaches. This short course reviews current statistical genomics problems and introduces efficient computational methods to (1) enable interactive and reproducible analyses with visualization of results, (2) allow integration of varied genetic data, (3) embrace parallel, distributed and cloud computing, (4) scale to big data, and (5) facilitate communication between statisticians and their biomedical collaborators. We present statistical genomic examples and offer participants hands on coding exercises in Julia as part of the OpenMendel project (http://bit.ly/2Jd5tSG). Julia is a new open source programming language with a more flexible design and superior speed over R and Python. R and Matlab users quickly adapt to Julia. Janet Sinsheimer PhD Professor Human Genetics, Biomathematics David Geffen School of Medicine at UCLA Professor Biostatistics Fielding School of Public Health, UCLA "Sinsheimer, Janet" via Gmail

2 notes

·

View notes

Text

Building a knowledge graph with topic networks in Amazon Neptune

This is a guest blog post by By Edward Brown, Head of AI Projects, Eduardo Piairo, Architect, Marcia Oliveira, Lead Data Scientist, and Jack Hampson, CEO at Deeper Insights. We originally developed our Amazon Neptune-based knowledge graph to extract knowledge from a large textual dataset using high-level semantic queries. This resource would serve as the backend to a simplified, visual web-based knowledge extraction service. Given the sheer scale of the project—the amount of textual data, plus the overhead of the semantic data with which it was enriched—a robust, scalable graph database infrastructure was essential. In this post, we explain how we used Amazon’s graph database service, Neptune, along with complementing Amazon technologies, to solve this problem. Some of our more recent projects have required that we build knowledge graphs of this sort from different datasets. So as we proceed with our explanation, we use these as examples. But first we should explain why we decided on a knowledge graph approach for these projects in the first place. Our first dataset consists of medical papers describing research on COVID-19. During the height of the pandemic, researchers and clinicians were struggling with over 4,000 papers on the subject released every week and needed to onboard the latest information and best practices in as short a time as possible. To do this, we wanted to allow users to create their own views on the data—custom topics or themes—to which they could then subscribe via email updates. New and interesting facts within those themes that appear as new papers are added to our corpus arrive as alerts to the user’s inbox. Allowing a user to design these themes required an interactive knowledge extraction service. This eventually became the Covid Insights Platform. The platform uses a Neptune-based knowledge graph and topic network that takes over 128,000 research papers, connects key concepts within the data, and visually represents these themes on the front end. Our second use case originated with a client who wanted to mine articles discussing employment and occupational trends to yield competitor intelligence. This also required that the data be queried by the client at a broad, conceptual level, where those queries understand domain concepts like companies, occupational roles, skill sets, and more. Therefore, a knowledge graph was a good fit for this project. We think this article will be interesting to NLP specialists, data scientists, and developers alike, because it covers a range of disciplines, including network sciences, knowledge graph development, and deployment using the latest knowledge graph services from AWS. Datasets and resources We used the following raw textual datasets in these projects: CORD-19 – CORD-19 is a publicly available resource of over 167,000 scholarly articles, including over 79,000 with full text, about COVID-19, SARS-CoV-2, and related coronaviruses. Client Occupational Data – A private national dataset consisting of articles pertaining to employment and occupational trends and issues. Used for mining competitor intelligence. To provide our knowledge graphs with structure relevant to each domain, we used the following resources: Unified Medical Language System (UMLS) – The UMLS integrates and distributes key terminology, classification and coding standards, and associated resources to promote creating effective and interoperable biomedical information systems and services, including electronic health records. Occupational Information Network (O*NET) – The O*NET is a free online database that contains hundreds of occupational definitions to help students, job seekers, businesses, and workforce development professionals understand today’s world of work in the US. Adding knowledge to the graph In this section, we outline our practical approach to creating knowledge graphs on Neptune, including the technical details and libraries and models used. To create a knowledge graph from text, the text needs be given some sort of structure that maps the text onto the primitive concepts of a graph like vertices and edges. A simple way to do this is to treat each document as a vertex, and connect it via a has_text edge to a Text vertex that contains the Document content in its string property. But obviously this sort of structure is much too crude for knowledge extraction—all graph and no knowledge. If we want our graph to be suitable for querying to obtain useful knowledge, we have to provide it with a much richer structure. Ultimately, we decided to add the relevant structure—and therefore the relevant knowledge—using a number of complementary approaches that we detail in the following sections, namely the domain ontology approach, the generic semantic approach, and the topic network approach. Domain ontology knowledge This approach involves selecting resources relevant to the dataset (in our case, UMLS for biomedical text or O*NET for documents pertaining to occupational trends) and using those to identify the concepts in the text. From there, we link back to a knowledge base providing additional information about those concepts, thereby providing a background taxonomical structure according to which those concepts interrelate. That all sounds a bit abstract, granted, but can be clarified with some basic examples. Imagine we find the following sentence in a document: Semiconductor manufacturer Acme hired 1,000 new materials engineers last month. This text contains a number of concepts (called entities) that appear in our relevant ontology (O*NET), namely the field of semiconductor manufacturers, the concept of staff expansion described by “hired,” and the occupational role materials engineer. After we align these concepts to our ontology, we get access to a huge amount of background information contained in its underlying knowledge base. For example, we know that the sentence mentions not only an occupational role with a SOC code of 17-2131.00, but that this role requires critical thinking, knowledge of math and chemistry, experience with software like MATLAB and AutoCAD, and more. In the biomedical context, on the other hand, the text “SARS-coronavirus,” “SARS virus,” and “severe acute respiratory syndrome virus” are no longer just three different, meaningless strings, but a single concept—Human Coronavirus with a Concept ID of C1175743—and therefore a type of RNA virus, something that could be treated by a vaccine, and so on. In terms of implementation, we parsed both contexts—biomedical and occupational—in Python using the spaCy NLP library. For the biomedical corpus, we used a pretrained spaCy pipeline, SciSpacy, released by Allen NLP. The pipeline consists of tokenizers, syntactic parsers, and named entity recognizers retrained on biomedical corpora, along with named entity linkers to map entities back to their UMLS concept IDs. These resources turned out to be really useful because they saved a significant amount of work. For the occupational corpora, on the other hand, we weren’t quite as lucky. Ultimately, we needed to develop our own pipeline containing named entity recognizers trained on the dataset and write our own named entity linker to map those entities to their corresponding O*NET SOC codes. In any event, hopefully it’s now clear that aligning text to domain-specific resources radically increases the level of knowledge encoded into the concepts in that text (which pertain to the vertices of our graph). But what we haven’t yet done is taken advantage of the information in the text itself—in other words, the relations between those concepts (which pertain to graph edges) that the text is asserting. To do that, we extract the generic semantic knowledge expressed in the text itself. Generic semantic knowledge This approach is generic in that it could in principle be applied to any textual data, without any domain-specific resources (although of course models fine-tuned on text in the relevant domain perform better in practice). This step aims to enrich our graph with the semantic information the text expresses between its concepts. We return to our previous example: Semiconductor manufacturer Acme hired 1,000 new materials engineers last month. As mentioned, we already added the concepts in this sentence as graph vertices. What we want to do now is create the appropriate relations between them to reflect what the sentence is saying—to identify which concepts should be connected by edges like hasSubject, hasObject, and so on. To implement this, we used a pretrained Open Information Extraction pipeline, based on a deep BiLSTM sequence prediction model, also released by Allen NLP. Even so, had we discovered them sooner, we would likely have moved these parts of our pipelines to Amazon Comprehend and Amazon Comprehend Medical, which specializes in biomedical entity and relation extraction. In any event, we can now link the concepts we identified in the previous step—the company (Acme), the verb (hired) and the role (materials engineer)—to reflect the full knowledge expressed by both our domain-specific resource and the text itself. This in turn allows this sort of knowledge to be surfaced by way of graph queries. Querying the knowledge-enriched graph By this point, we had a knowledge graph that allowed for some very powerful low-level queries. In this section, we provide some simplified examples using Gremlin. Some aspects of these queries (like searching for concepts using vertex properties and strings like COVID-19) are aimed at readability in the context of this post, and are much less efficient than they could be (by instead using the vertex ID for that concept, which exploits a unique Neptune index). In our production environments, queries required this kind of refactoring to maximize efficiency. For example, we can (in our biomedical text) query for anything that produces a body substance, without knowing in advance which concepts meet that definition: g.V().out('entity').where(out('instance_of').hasLabel('SemanticType').out('produces').has('name','Body Substance')). We can also find all mentions of things that denote age groups (such as “child” or “adults”): g.V().out('entity').where(out('instance_of').hasLabel('SemanticType').in('isa').has('name','Age Group')). The graph further allowed us to write more complicated queries, like the following, which extracts information regarding the seasonality of transmission of COVID-19: // Seasonality of transmission. g.V().hasLabel('Document'). as('DocID'). out('section').out('sentence'). as('Sentence'). out('entity').has('name', within( 'summer', 'winter', 'spring (season)', 'Autumn', 'Holidays', 'Seasons', 'Seasonal course', 'seasonality', 'Seasonal Variation' ) ).as('Concept').as('SemanticTypes').select('Sentence'). out('entity').has('name','COVID-19').as('COVID'). dedup(). select('Concept','SemanticTypes','Sentence','DocID'). by(valueMap('name','text')). by(out('instance_of').hasLabel('SemanticType').values('name').fold()). by(values("text")). by(values("sid")). dedup(). limit(10) The following table summarizes the output of this query. Concept Sentence seasonality COVID-19 has weak seasonality in its transmission, unlike influenza. Seasons Furthermore, the pathogens causing pneumonia in patients without COVID-19 were not identified, therefore we were unable to evaluate the prevalence of other common viral pneumonias of this season, such as influenza pneumonia and All rights reserved. Holidays The COVID-19 outbreak, however, began to occur and escalate in a special holiday period in China (about 20 days surrounding the Lunar New Year), during which a huge volume of intercity travel took place, resulting in outbreaks in multiple regions connected by an active transportation network. spring (season) Results indicate that the doubling time correlates positively with temperature and inversely with humidity, suggesting that a decrease in the rate of progression of COVID-19 with the arrival of spring and summer in the north hemisphere. summer This means that, with spring and summer, the rate of progression of COVID-19 is expected to be slower. In the same way, we can query our occupational dataset for companies that expanded with respect to roles that required knowledge of AutoCAD: g.V(). hasLabel('Sentence').as('Sentence'). out('hasConcept'). hasLabel('Role').as('Role'). where( out('hasTechSkill').has('name', 'AutoCAD') ). in('hasObject'). hasLabel('Expansion'). out('hasSubject'). hasLabel('Company').as('Company'). select('Role', 'Company', 'Sentence'). by('socCode'). by('name'). by('text'). limit(1) The following table shows our output. Role Company Sentence 17-2131.00 Acme Semiconductor manufacturer Acme hired 1,000 new materials engineers last month. We’ve now described how we added knowledge from domain-specific resources to the concepts in our graph and combined that with the relations between them described by the text. This already represents a very rich understanding of the text at the level of the individual sentence and phrase. However, beyond this very low-level interpretation, we also wanted our graph to contain much higher-level knowledge, an understanding of the concepts and relations that exist across the dataset as a whole. This leads to our final approach to enriching our graph with knowledge: the topic network. Topic network The core premise behind the topic network is fairly simple: if certain pairs of concepts appear together frequently, they are likely related, and likely share a common theme. This is true even if (or especially if) these co-occurrences occur across multiple documents. Many of these relations are obvious—like the close connection between Lockheed and aerospace engineers, in our employment data—but many are more informative, especially if the user isn’t yet a domain expert. We wanted our system to understand these trends and to present them to the user—trends across the dataset as a whole, but also limited to specific areas of interest or topics. Additionally, this information allows a user to interact with the data via a visualization, browsing the broad trends in the data and clicking through to see examples of those relations at the document and sentence level. To implement this, we combined methods from the fields of network science and traditional information retrieval. The former told us the best structure for our topic network, namely an undirected weighted network, where the concepts we specified are connected to each other by a weighted edge if they co-occur in the same context (the same phrase or sentence). The next question was how to weight those edges—in other words, how to represent the strength of the relationship between the two concepts in question. Edge weighting A tempting answer here is just to use the raw co-occurrence count between any two concepts as the edge weight. However, this leads to concepts of interest having strong relationships to overly general and uninformative terms. For example, the strongest relationships to a concept like COVID-19 are ones like patient or cell. This is not because these concepts are especially related to COVID-19, but because they are so common in all documents. Conversely, a truly informative relationship is between terms that are uncommon across the data as a whole, but commonly seen together. A very similar problem is well understood in the field of traditional information retrieval. Here the informativeness of a search term in a document is weighted using its frequency in that document and its rareness in the data as a whole, using a formula like TFIDF (term frequency by inverse document frequency). This formula however aims at scoring a single word, as opposed to the co-occurrence of two concepts as we have in our graph. Even so, it’s fairly simple to adapt the score to our own use case and instead measure the informativeness of a given pair of concepts: In our first term, we count the number of sentences, s, where both concepts, c1 and c2, occur (raw co-occurrence). In the second, we take the sum of the number of documents where each term appears relative to (twice) the total number of documents in the data. The result is that pairs that often appear together but are both individually rare are scored higher. Topics and communities As mentioned, we were interested in allowing our graph to display not only global trends and co-occurrences, but also those within a certain specialization or topic. Analyzing the co-occurrence network at the topic level allows users to obtain information on themes that are important to them, without being overloaded with information. We identified these subgraphs (also known in the network science domain as communities) as groups centered around certain concepts key to specific topics, and, in the case of the COVID-19 data, had a medical consultant curate these candidates into a final set—diagnosis, prevention, management, and so on. Our web front end allows you to navigate the trends within these topics in an intuitive, visual way, which has proven to be very powerful in conveying meaningful information hidden in the corpus. For an example of this kind of topic-based navigation, see our publicly accessible COVID-19 Insights Platform. We’ve now seen how to enrich a graph with knowledge in a number of different forms, and from several different sources: a domain ontology, generic semantic information in the text, and a higher-level topic network. In the next section, we go into further detail about how we implemented the graph on the AWS platform. Services used The following diagram gives an overview of our AWS infrastructure for knowledge graphs. We reference this as we go along in this section. Amazon Neptune and Apache TinkerPop Gremlin Although we initially considered setting up our own Apache TinkerPop service, none of us were experts with graph database infrastructure, and we found the learning curve around self-hosted graphs (such as JanusGraph) extremely steep given the time constraints on our project. This was exacerbated by the fact that we didn’t know how many users might be querying our graph at once, so rapid and simple scalability was another requirement. Fortunately, Neptune is a managed graph database service that allows storing and querying large graphs in a performant and scalable way. This also pushed us towards Neptune’s native query language, Gremlin, which was also a plus because it’s the language the team had most experience with, and provides a DSL for writing queries in Python, our house language. Neptune allowed us to solve the first challenge: representing a large textual dataset as a knowledge graph that scales well to serving concurrent, computationally intensive queries. With these basics in place, we needed to build out the AWS infrastructure around the rest of the project. Proteus Beyond Neptune, our solution involved two API services—Feather and Proteus—to allow communication between Neptune and its API, and to the outside world. More specifically, Proteus is responsible for managing the administration activities of the Neptune cluster, such as: Retrieving the status of the cluster Retrieving a summary of the queries currently running Bulk data loading The last activity, bulk data loading, proved especially important because we were dealing with a very large volume of data, and one that will grow with time. Bulk data loading This process involved two steps. The first was developing code to integrate the Python-based parsing we described earlier with a final pipeline step to convert the relevant spaCy objects—the nested data structures containing documents, entities, and spans representing predicates, subjects, and objects—into a language Neptune understands. Thankfully, the spaCy API is convenient and well-documented, so flattening out those structures into a Gremlin insert query is fairly straightforward. Even so, importing such a large dataset via Gremlin-language INSERT statements, or addVertex and addEdge steps, would have been unworkably slow. To load more efficiently, Neptune supports bulk loading from the Amazon Simple Storage Service (S3). This meant an additional modification to our pipeline to write output in the Neptune CSV bulk load format, consisting of two large files: one for vertices and one for edges. After a little wrangling with datatypes, we had a seamless parse-and-load process. The following diagram illustrates our architecture. It’s also worth mentioning that in addition to the CSV format for Gremlin, the Neptune bulk loader supports popular formats such as RDF4XML for RDF (Resource Description Framework). Feather The other service that communicates with Neptune, Feather, manages the interaction between the user (through the front end) and Neptune instance. It’s responsible for querying the database and delivering the results to the front end. The front-end service is responsible for the visual representation of the data provided by the Neptune database. The following screenshot shows our front end displaying our biomedical corpus. On the left we can see the topic-based graph browser showing the concepts most closely related to COVID-19. In the right-hand panel we have the document list, showing where those relations were found in biomedical papers at the phrase and sentence level. Lastly, the mail service allows you to subscribe to receive alerts around new themes as more data is added to the corpus, such as the COVID-19 Insights Newsletter. Infrastructure Owing to the Neptune security configuration, any incoming connections are only possible from within the same Amazon Virtual Private Cloud (Amazon VPC). Because of this, our VPC contains the following resources: Neptune cluster – We used two instances: a primary instance (also known as the writer, which allows both read and write) and a replica (the reader, which only allows read operations) Amazon EKS – A managed Amazon Elastic Kubernetes Service (Amazon EKS) cluster with three nodes used to host all our services Amazon ElastiCache for Redis – A managed Amazon ElastiCache for Redis instance that we use to cache the results of commonly run queries Lessons and potential improvements Manual provisioning (using the AWS Management Console) of the Neptune cluster is quite simple because at the server level, we only need to deal with a few primary concepts: cluster, primary instance and replicas, cluster and instance parameters, and snapshots. One of the features that we found most useful was the bulk loader, mainly due to our large volume of data. We soon discovered that updating a huge graph was really painful. To mitigate this, each time a new import was required, we created a new Neptune cluster, bulk loaded the data, and redirected our services to the new instance. Additional functionality we found extremely useful, but have not highlighted specifically, is the AWS Identity and Access Management (IAM) database authentication, encryption, backups, and the associated HTTPS REST endpoints. Despite being very powerful, with a bit of a steep learning curve initially, they were simple enough to use in practice and overall greatly simplified this part of the workflow. Conclusion In this post, we explained how we used the graph database service Amazon Neptune with complementing Amazon technologies to build a robust, scalable graph database infrastructure to extract knowledge from a large textual dataset using high-level semantic queries. We chose this approach since the data needed to be queried by the client at a broad, conceptual level, and therefore was the perfect candidate for a Knowledge Graph. Given Neptune is a managed graph database with the ability to query a graph with milliseconds latency it meant that the ease of setup if would afford us in our time-poor scenario made it the ideal choice. It was a bonus that we’d not considered at the project outset to be able to use other AWS services such as the bulk loader that also saved us a lot of time. Altogether we were able to get the Covid Insights Platform live within 8 weeks with a team of just three; Data Scientist, DevOps and Full Stack Engineer. We’ve had great success with the platform, with researchers from the UK’s NHS using it daily. Dr Matt Morgan, Critical Care Research Lead for Wales said “The Covid Insights platform has the potential to change the way that research is discovered, assessed and accessed for the benefits of science and medicine.” To see Neptune in action and to view our Covid Insights Platform, visit https://covid.deeperinsights.com We’d love your feedback or questions. Email [email protected] https://aws.amazon.com/blogs/database/building-a-knowledge-graph-with-topic-networks-in-amazon-neptune/

0 notes

Text

What is the Significance of Assignment Maker In Australia?

The standard of education is significantly improving across the globe. Professors assign multiple assignments to students by which they evaluate their performance. This includes academic assignments, monthly tests, internal assessment, and examinations, etc. to provide academic assistance that students might require the assignment maker Australia ensures the quality of the respective assignment as well. Quality is a trademark of assignments that is essential and never compromised. Thus, students become a little relaxed; now every assignment will be handled by a specialist assignment maker.

Some of the subjects that assignment experts offer are given down below-

Engineering:

Engineering is one of the oldest professions, its assessments and projects are such that a student has always feared. That is why they keep searching for the best assignment maker australia where experts provide assignments at the minimal rate possible.

Mechanical Engineering:

The three pillars of mechanical engineering are production theory, Thermodynamics, and Machine Design. Experts will help you with certification courses of many software like AutoCAD, CATIA, SolidWorks, ANSYS, MATLAB, and will improve the efficiency of a shop floor by implementing the wok-motion study and improving individual effectiveness with Kaizens.

Law:

With assignment help Australia law experts serve and students will know the key responsibilities that a lawyer practising in Australia bears. They efficiently analyze, process, and execute the law to solve any legal problem provided Professionals of law prepare answers using their critical analysis skills and make rational decisions.

Nursing:

Nursing assignment experts understand the role and importance of a nurse and midwife during the mitigation of the population to a new place and their adaptation to a new climatic and geographic location.

Follow all the simple steps to make sure the quality of your assignment is flawless:

Performing sufficient research is crucial for establishing credibility and the experts will conduct thorough research and find the most incredible research materials for your academic assignment.

Presenting the ideas properly-Presenting the ideas or arguments related to an assignment topic leads to mediocre grades. Assignment writers will make sure your paper contains clear and perfect ideas.

Creating a concise outline-Having a clear and concise outline makes writing the assignments quite attractive and effective. Only the professional knows the importance of this step and always prepares a detailed outline before proceeding with the writing part.

What are Subjects Can Assignment Makers Do?

The scope of the assignment maker Australia online is not limited to the subjects discussed above. They help in several subjects including Law, Engineering, Law, Information Technology, Cinematography, Management, Nursing, Law, Accounting, Business Administration Biomedical, Commerce, Health Sciences, Pharmaceutical Science, and a lot more. Feel free to contact assignment makers and experience the magic that you have never felt before.

Here’s why tons of students get help from assignment makers.

● On-time delivery of assignments

● Guaranteed higher grades

● 100% original and plagiarism-free assignments.

● The assistance of highly qualified and PhD experts

● Budget-friendly rates.

● Assurance of receiving completed orders before the deadlines.

● APA, MLA, Harvard, Chicago, and AMA citation styles

● Complimentary editing and Proofreading service

● On-demand paraphrasing benefits are also available to us.

● Free sample papers, SMS update, order tracking facility

● Gaining academic writing knowledge from the experts

● Learning how to deal with difficult problems

● Having a better understanding of the academic guidelines

With their assistance, you can improve your academic performance. Bother no more about any of these issues. Reach professionals to get rid of all those issues causing tensions. All your dilemmas will get answered by the best assignment maker who lends a helping hand 24*7 to those Australian students in need.

#assignment maker#assignment maker australia#assignment maker online#online assignment maker#best assignment maker#significance of assignment maker#assignment maker in australia#assignment makers

0 notes

Video

youtube

Osteoporosis Detection Model Using Dental Panoramic Radiography | #matlab #biomedical #matlabbiomedicalprojects

Final year project support for Engineering, Arts, Management, and Journal & Research students

For more details about student projects: 9176990090

0 notes

Text

Matlab Programming Help

Programming is an amazing field of study. MATLAB is one such programming language that allows matrix manipulation, plotting of capacities and information, usage of calculations, the formation of UIs, and interfacing by projects written in different languages, including C, C++, Java, and Python. Because of its great variety of utilizations, in reality, MATLAB is quick developing as a point of learning for various programming students.

Thus, it would involve preparing MATLAB assignment help and codes which can now and again be complicated work. Fortunately, the web has given every one of us the assistance we need, and MATLAB programming professionals are perfect in providing Matlab programming help. These MATLAB programming experts do an aid for students that are searching for affordable alternatives of support in their Matlab programming assignment.

What does the MATLAB stand for?

Matlab stands for Matrix Laboratory. In 1970, one of the chairmen of the department of computer science in the new Mexico university created it. MATLAB is utilized in different fields like Electrical and Electronics, Mathematics, Image handling, Calculus, Biomedical Engineering, Statistics, Remote Sensing, and so on.

What should be possible utilizing MATLAB?

Formation of user interfaces.

Curve attachment, Interpose.

Execution of calculations.

Interfacing with projects in different languages.

Numerical activities.

Matrix control.

Plotting of information.

Plotting of capacities.

Explaining Ordinary Differential Equations.

Explaining frameworks of straight conditions.

Vector activities.

Presentation of MATLAB programming:

This article gives you a short presentation of MATLAB programming language to build up a brief understanding of this subject. You can request Problem-based MATLAB instances with a clear and expressive explanation from myassignmenthelp.com whenever you required it. This article also allows you to find our online Matlab programming assignment benefits. So you can pick the most suitable MatLab programming help webpage.

Highlights of MATLAB programming language:

Associated requirement for critical thinking, continuous investigation, and structure.

Scientific functions for improvement, numerical combination, and resolving standard differential conditions.

Instruments for making custom plots and inherent designs for visualizing information.

Advancement devices for widen execution and improving code quality.

Devices for structure applications.

After reading these points, you may have absorbed a thought on MATLAB programming language. Learning MATLAB isn't limited to your coursebook; in fact, you can pick up information of command and programming from an online course. In any case, capability in this language can be gained uniquely by actual utilization that pursues the consistent use. If you require MATLAB practice questions on the web; avail our online MATLAB programming help.

Online Matlab programming help:

If you are left with a Matlab programming issue and need assistance, we have brilliant experts who can give you programming help. Our mentors who give Matlab programming Help are exceptionally qualified. Our guides have years of experience producing Matlab programming assignment help. Kindly send us the Matlab issues on which you need assistance, and our experts solve your problems. The significant viewpoint if you send us Matlab programming issues is to refer the due time.

Profession aspects of MATLAB:

Probably the best trade parts of MATLAB as indicated by the specialists' are-

Program Developer

MATLAB Engineer

Programming Engineer

Programming Tester

Interface Developer

Information Analyst

Avail an outstanding Matlab programming help from matlabassignmenthelp.com

On-time delivery: The primary thing that we guarantee that we convey assignments to the students before a given period or convey before the due date at affordable costs.

100% satisfaction guarantee: Our experts giving 100 % Return on Investment to our students.

Day in and day out customer support: Our group of experts is accessible every minute of every day for the customers. You can visit our site to get quick and reliable assistance for Matlab programming help.

Protection guarantee: We care about your security, so do our professionals. We guarantee that our customer's safety isn't influenced by reaching us.

Searching for trustworthy online assistance for Matlab programming help:

In a pool of assignment assistance accessible on the web, it winds up intense to recognize the trustworthy ones from the less responsible administrations. In any case, finding the correct programming assignment assistance that conveys quality content is critical for students. The experts are contrasts with each other. For the most part, student's feedback will, in general, give a positive image of the online assignment assistance. Go through these reviews and comparing the assistance given with other different sources, it turns into much simpler for students to locate their correct pick for MATLAB programming help.

Excellent assistance, for the most part, has some regular selling focuses: they have excellent client feedback, their administrations are different, professionals they have are from reputed foundations with a great reputation, and their administrations are accessible 24X7. Combined with agreeable student costs and great content conveyance, matlabassignmenthelp.com stays one of the most loved picks of students across the world.

How might I be guaranteed my work will be of good quality?

We only utilize experts with immaculate, proper scholastic certifications. matlabassignmenthelp.com has a zero-resilience arrangement with regards to scholarly negligence. Our experts are explicitly taught not to turn in any bit of work that neglects to fulfill the necessities and guidelines set by our students. All assignments will be examined vivaciously for unoriginality or undocumented sources, to guarantee that the last deliverable is 100% plagiarism-free.

We additionally work with a differing gathering of experts and mentors; thus, time is never an issue. For whatever length of time that your prerequisites are sensible, we convey. A customary review is acquired from all assignments dispatched to our experts, to guarantee that the last draft of your MATLAB assignment help is prepared inside the stipulated time.

That being stated, we organize quality over everything else. At our site, your norms and details are fulfilled precisely. All our assignment experts have a base encounter of five years in specialized Matlab Programming. Our capability criteria guarantee that every single specialized report is altered correctly, designed, recorded, and referenced before they are conveyed to the client.

0 notes

Text

Juniper Publishers - Open Access Journal of Engineering Technology

Removal of the Power Line Interference from ECG Signal Using Different Adaptive Filter Algorithms and Cubic Spline Interpolation for Missing Data Points of ECG in Telecardiology System

Authored by : Shekh Md Mahmudul Islam

Abstract

Maintaining one's health is a fundamental human right although one billion people do not have access to quality healthcare services. Telemedicine can help medical facilities reach their previously inaccessible target community. The Telecardiology system designed and implemented in this research work is based on the use of local market electronics. In this research work we tested three algorithms named as LMS (Least Mean Square), NLMS (Normalized Mean Square), and RLS (Recursive Least Square). We have used 250 mV amplitude ECG signal from MIT- BIH database, 25mV (10% of original ECG signal) of random noise, white Gaussian noise and 100mV (40% of original ECG signal) of 50 Hz power signal noise is added with ECG signal in different combinations and Adaptive filter with three different algorithms have been used to reduce the noise that is added during transmission through the telemedicine system. Normalized mean square error was calculated and our MATLAB simulation results suggest that RLS performs better than other two algorithms to reduce the noise from ECG. During analog transmission of ECG signal through existing Telecommunication network some data points may be lost and we have theoretically used Cubic Spline interpolation to regain missing data points. We have taken 5000 data points of ECG Signal from MIT -BIH database. The normalized mean square error was calculated for regaining missing data points of the ECG signal and it was very less in all the conditions. Cubic Spline Interpolation function on MATLAB platform could be a good solution for regaining missing data points of original ECG signal sent through our proposed Telecardiology system but practically it may not be efficient one.

Keywords: Telemedicine; Power line interference (PLI); ECG; Adaptive filter; LMS; NLMS; RLS

Abbreviations: EMG: Electromyography; ECG: Electrocardiogram; EEG: Electroencephalogram; NLMS: Normalized Least Mean Square; RLS: Recursive Least Square; SD: Storage Card

Introduction

The ECG signal measured with an electrocardiograph, is a biomedical electrical signal occurring on the surface of the body related to the contraction and relaxation of the heart. This signal represents an extremely important measure for doctors as it provides vital information about a patient cardiac condition and general health. Generally the frequency band of the ECG signal is 0.05 to 100 Hz [1]. Inside the heart there is a specialized electrical conduction system that ensures the heart to relax and contracts in a coordinated and effective fashion. ECG recordings may have common artifacts with noise caused by factors such as power line interference, external electromagnetic field, random body movements, other biomedical signals and respiration. Different types of digital filters may be used to remove signal components from unwanted frequency ranges [2].

The Power line interference 50/60 Hz is the source of interference in bio potential measurement and it corrupt the biomedical signal's recordings such as Electrocardiogram (ECG), Electroencephalogram (EEG) and Electromyography (EMG) which are extremely important for the diagnosis of patients. It is hard to find out the problem because the frequency range of ECG signal is nearly same as the frequency of power line interference. The QRS complex is a waveform which is most important in all ECG's waveforms and it comes into view in usual and unusual signals in ECG [3]. As it is difficult to apply filters with fixed coefficients to reduce biomedical signal noises because human behaviour is not exact depending on time, an adaptive filtering technique is required to overcome the problem. Adaptive filer is designed using different algorithms such as least mean square (LMS), Normalized least mean square (NLMS), Recursive least square (RLS) [4]. Least square algorithms aims at minimization of the sum of the squares of the difference between the desired signal and model filter output when new samples of the incoming signals are received at every iteration, the solution for the least square problem can be computed in recursive form resulting in the recursive least square algorithm. The goal for ECG signal enhancement is to separate the valid signal components from the undesired artifacts so as to present an ECG that facilitates an easy and accurate interpretation.

The basic idea for adaptive filter is to predict the amount of noise in the primary signal and then subtract noise from it. In this research work a Telecardiology system has been designed and implemented using instrumentation amplifier, band pass filter and Arduino interfacing between Smartphone and Arduino board. First of all raw ECG signal has been amplified and filtered by Band pass filter. Analog signal has been digitized using Arduino board and then interfacing between Arduino board and smart phone has been implemented and Digitized value of analog signal has been sent from Arduino board to smart phone and digitized value of analog signal has been stored in SD storage card of smart phone. Using Bluetooth or existing Telecommunication Network. As sinusoidal signals are known to be corrupted during transmission it is expected that similarly an ECG signal will be corrupted.

We have therefore designed an adaptive filter with three different algorithms and simulated in MATLAB platform to compare the performance of denoising of ECG signal. During transmission of ECG signals through existing Telecommunication networks some data pints may be lost. In this research work we have used cubic spline interpolation to regain missing data points. If more data points are missing then reconstruction of an ECG signal becomes impossible and doctor can not accurately interpret a patient's ECG in an efficient manner. Cubic spline interpolation has been implemented for various missing data points of original ECG signal taken from MIT-BIH database. The normalized mean square error of cubic spline interpolation was very low. Cubic Spline interpolation in Matlab platform could be a better solution for regaining missing data points of ECG signal theoretically.

Related Works and Literature Review

The extraction of high-resolution ECG signals from recordings contaminated with system noise is an important issue to investigate in Telecardiology system. The goal for ECG signal enhancement is to separate the valid signal components from the undesired artifacts, so as to present an ECG that facilitates easy and accurate interpretation.

The work of this research paper is the development of our previous research work "Denoising ECG Signal using Different Adaptive Filter Algorithms and Cubic Spline Interpolation for Missing data points of ECG in Telecardiology System” [5]. Many approaches have been reported in the literature to address ECG enhancement using adaptive filters [6-9], which permit to detect time varying potentials and to track the dynamic variations of the signals. In Md. Maniruzzaman et al [7,10,11] proposed wavelet packet transform, Least-Mean-Square (LMS) normalized least-mean-square (NLMS) and recursive-least-square (RLS), and the results are compared with a conventional notch filter both in time and frequency domain. In these papers, power line interference noise is denoised by NLMS or LMS or RLS algorithms and performed by MTLAB or LABVIEW. But in our research work we have developed our previous work. We denoised ECG signal by removing power line interference, Random noise and White Gaussian noise. In our previous research paper [12] we denoised ECG signal from random noise and white Gaussian noise. In our present research work we have added power line interference with Pure ECG signal individually and added in mixed of power line interference, random noise and white Gaussian noise in different combinations. Finally in our research work we have used cubic spline interpolation for regaining missing data points of ECG signal sent through telecommunication network.

There are certain clinical applications of ECG signal processing that require adaptive filters with large number of taps. In such applications the conventional LMS algorithm is computationally expensive to implement The LMS algorithm and NLMS (normalized LMS) algorithm require few computations, and are, therefore, widely applied for acoustic echo cancellers. However, there is a strong need to improve the convergence speed of the LMS and NLMS algorithms. The RLS (recursive least-squares) algorithm, whose convergence does not depend on the input signal, is the fastest of all conventional adaptive algorithms. The major drawback of the RLS algorithm is its large computational cost. However, fast (small computational cost) RLS algorithms have been studied recently In this paper we aim to obtain a comparative study of faster algorithm by incorporating knowledge of the room impulse response into the RLS algorithm. Unlike the NLMS and projection algorithms, the RLS algorithm does not have a scalar step size.

Therefore, the variation characteristics of an ECG signal cannot be reflected directly in the RLS algorithm. Here, we study the RLS algorithm from the viewpoint of the adaptive filter because

a. The RLS algorithm can be regarded as a special version of the adaptive filter and

b. Each parameter of the adaptive filter has physical meaning.

Computer simulations demonstrate that this algorithm converges twice as fast as the conventional algorithm. These characteristics may plays a vital role in biotelemetry, where extraction of noise free ECG signal for efficient diagnosis and fast computations, high data transfer rate are needed to avoid overlapping of pulses and to resolve ambiguities. To the best of our knowledge, transform domain has not been considered previously within the context of filtering artifacts in ECG signals.