#CCM API helps


Future Trends in Document Digitization: AI, Machine Learning, and the Evolution of Digital Archives

Document digitization is evolving rapidly, driven by advancements in AI and machine learning and by the need for efficient digital archives. Future trends include AI-powered OCR, NLP, intelligent document classification, automated metadata extraction, enhanced data security, blockchain for document verification, cloud and edge computing integration, preservation of digital heritage, and user-centric document interfaces. Together, these trends are transforming document digitization to meet the evolving needs of organizations and society.

Adopting Customer Communication Management (CCM) tools has been critical in improving customer journeys and engagement in several industries. CCM API helps businesses overcome the limitations of traditional communication and develop an interactive, omnichannel, and personalized communication process. Such a process is needed to ensure customer satisfaction in the contemporary business landscape.

Customer Communication Management solutions facilitate the fundamental processes of creating, delivering, storing, and retrieving external communications vital to business operations. This may include billing and payment notices, renewal notifications, correspondence and documentation of claims, and even new product introductions. CCM tools further allow these digitally automated documents to be shared via email, SMS, instant chat, and more. With the help of timely communication management across all interactions, CCM plays an instrumental role in improving customer experience across specific touch points. This approach helps businesses effectively cater to customers' needs and wants and allows customers to make decisions faster.

CCM tools for Omnichannel Communication allow for:

Content management: CCM platforms have various features to help businesses create and manage content for different channels, including email, web, mobile, and social media.

Personalization: CCM allows businesses to personalize their communications by using customer data to generate targeted messages.

Delivery: CCM solutions aid businesses in delivering their communications to customers through various channels.

Reporting and analytics: A good customer engagement platform also provides businesses with reports and analytics to help them track the performance of their communications campaigns.
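The four capabilities above (content management, personalization, delivery, and reporting) can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not any vendor's CCM API: the renewal template, the `Customer` fields, and the channel senders are hypothetical, and delivery is simulated by returning strings instead of calling real email, SMS, or WhatsApp gateways.

```python
from collections import Counter
from dataclasses import dataclass
from string import Template

# Content management: one reusable (hypothetical) template for every channel.
RENEWAL_TEMPLATE = Template(
    "Hi $name, your policy $policy_id renews on $renewal_date."
)


@dataclass
class Customer:
    name: str
    policy_id: str
    renewal_date: str
    preferred_channels: list[str]


def personalize(customer: Customer) -> str:
    # Personalization: fill the template from customer data.
    # substitute() raises KeyError if a placeholder has no value.
    return RENEWAL_TEMPLATE.substitute(
        name=customer.name,
        policy_id=customer.policy_id,
        renewal_date=customer.renewal_date,
    )


# Delivery: hypothetical senders; a real system would call a gateway here.
CHANNELS = {
    "email": lambda msg: f"[email] {msg}",
    "sms": lambda msg: f"[sms] {msg[:160]}",  # keep SMS bodies short
    "whatsapp": lambda msg: f"[whatsapp] {msg}",
}


def deliver(customers: list[Customer]) -> tuple[list[str], Counter]:
    sent: list[str] = []
    stats: Counter = Counter()  # reporting: per-channel delivery counts
    for customer in customers:
        body = personalize(customer)
        for channel in customer.preferred_channels:
            sender = CHANNELS.get(channel)
            if sender is None:
                stats["unsupported_channel"] += 1
                continue
            sent.append(sender(body))
            stats[channel] += 1
    return sent, stats
```

Keeping the template separate from the channel senders is the design point: the same personalized body reaches every channel, so branding stays consistent while the delivery list can change per customer.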

Every touch point between a company and its customers is critical, so managing these interactions is vital for ensuring a seamless customer experience. CCM tools help businesses achieve excellence in customer experience and can be useful in many industries, from financial services to healthcare.


Customizing PDF Documents for Your Business Needs: A Simple Guide to Online Tools

Easy access to services and communications is a huge priority for modern-day customers. Most customers decide whether to prefer one brand over another based on the overall experience. As a business, you may need to think about ways to update or inform them from time to time. How does your brand do that? Too often, businesses focus on the product while customer experience, which is equally important, is pushed to the sidelines. Sinch in India has launched a brand-new product, a Customer Communication Management (CCM) solution, that will help businesses like yours create and send customer communications as digital documents quickly, without having to liaise with multiple vendors. It lets enterprises not only generate customer communications in PDF format but also send them to customers via SMS, email, and WhatsApp.

Automate PDF document creation

Automating PDF document creation has never been easier with the Sinch PDF template editor and PDF generation API. Sinch's advanced drag-and-drop editor lets you design PDF templates in any browser and generate pixel-perfect PDF documents from reusable templates and data on a no-code platform. Our PDF template editor supports expressions and formatting for date/time, currency, and custom formats. It is one of the best PDF makers, letting you make any PDF: you can easily overlay or add text, QR codes, and images to existing PDFs.

Best Document Generation Software

Document generation software allows users to generate, customize, edit, and produce data-driven documents. These platforms can function as PDF creators that pull data from third-party sources into templates. Document generation applications can leverage data from various source systems such as CRM, ERP, and storage. They should easily maintain brand consistency and offer conditional formatting. Documents created through these products range in functionality and can include reports, forms, proposals, legal documentation, notes, and contracts.

Create PDF Document Online

No matter what types of files you need to convert, our online file converter is more than just a PDF file converter. It's the go-to solution for all of your file conversion needs. With Sinch, you can create PDF documents online. With a free trial of our online PDF converter, you can convert files to and from PDF for free, or sign up for one of our memberships for unlimited access to the converter's full suite of tools. You also get unlimited file sizes and the ability to upload and convert several files to PDF simultaneously. Our free file converter works on any OS, including Windows, Mac, and Linux.
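The template editor is described as supporting expressions and formatting for date/time, currency, and custom formats. The sketch below shows the general idea using only Python's standard library. The `{{ field | formatter }}` placeholder syntax, the formatter names, and the "INR" currency prefix are hypothetical choices for illustration; this is not Sinch's actual template language.

```python
import re
from datetime import datetime
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical placeholder syntax: {{ field }} or {{ field | formatter }}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*(?:\|\s*(\w+)\s*)?\}\}")


def fmt_date(value: datetime) -> str:
    return value.strftime("%d %b %Y")  # e.g. 05 Mar 2024


def fmt_currency(value: Decimal) -> str:
    # Round half-up to two decimals, then add thousands separators.
    amount = value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return f"INR {amount:,}"


def fmt_upper(value: object) -> str:
    # Stand-in for a user-defined "custom" formatter.
    return str(value).upper()


FORMATTERS = {"date": fmt_date, "currency": fmt_currency, "upper": fmt_upper}


def render(template: str, data: dict) -> str:
    def replace(match: re.Match) -> str:
        field, formatter = match.group(1), match.group(2)
        value = data[field]  # a KeyError signals a missing data field
        return FORMATTERS[formatter](value) if formatter else str(value)

    return PLACEHOLDER.sub(replace, template)
```

For example, rendering `"{{ total | currency }} is due on {{ due | date }}"` with `total=Decimal("1250.5")` and `due=datetime(2024, 3, 5)` produces `INR 1,250.50 is due on 05 Mar 2024`. Using `Decimal` rather than `float` avoids binary rounding surprises in money amounts.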


Future-Proofing Your CCM: The Role of Adaptable and Configurable Platforms in Long-Term Success

In today's digital-first environment, getting your message to the customer isn't just important: it's everything. CCM systems have transformed from simple messaging tools into powerful platforms that can make or break your customer relationships. Whether you're a small business or a global corporation, having a CCM system that can adapt and grow with you isn't just nice to have; it's essential. Here's why flexible CCM platforms are critical to building lasting customer relationships.

Advantages of Adaptable CCM Platforms

The adaptability of CCM platforms offers several strategic advantages:

Seamless Integration: Modern CCM systems enable integration across multiple communication channels, from email and SMS to social media and mobile apps, ensuring a consistent customer experience.

Real-time Flexibility: Adaptable platforms allow companies to change communication strategies in real-time, responding to customer behaviour and market dynamics.

Future-Proof: By integrating new technologies and communication channels, adaptable CCM platforms ensure long-term profitability.

Customizable Workflows: Configurable CCM platforms adapt to specific business processes, increasing operational efficiency.

Flexible Template Management: Flexible template management ensures consistent brand messaging across all communications.

Dynamic Content Creation: Personalized content is generated based on customer data and preferences to improve engagement and satisfaction.

Automated Compliance Monitoring: Advanced reporting capabilities ensure regulatory compliance.

Scalable Architecture: Adaptable CCM platforms can grow with business needs, ensuring long-term success.

Future-proofing your CCM strategy

To ensure long-term success, companies must prioritize certain functions when selecting a CCM platform:

Cloud-native architecture: A cloud-native platform allows you to automatically scale your communication system according to business needs. This architecture ensures flexibility and efficiency and allows you to quickly adapt to changing requirements without having to worry about infrastructure limitations. It also enables seamless updates and changes to keep your system up to date.

API-Centric Approach: APIs serve as vital links between the various software systems in your business ecosystem. This approach ensures that all your systems can communicate effectively to exchange data and ensure a consistent customer experience. It simplifies integration with both current tools and future technologies you may introduce.

AI and Machine Learning: Artificial intelligence and machine learning bring intelligent automation to your customer communications. These technologies enable personalized messaging, prediction of customer behavior and optimization of communication timing. They help deliver relevant content to every customer while maintaining efficiency at scale.

Robust Security Features and Compliance Frameworks: Security and compliance are non-negotiable in modern digital communications. These features protect sensitive customer data, ensure regulatory compliance and provide audit trails. They build customer trust and protect your business from potential data breaches and legal issues.

Support for New Communication Channels: The digital landscape is constantly evolving and new communication channels are emerging regularly. Your CCM platform must be able to seamlessly integrate these new channels. This adaptability ensures that you can reach your customers where they prefer to interact and ensures relevant and effective communication strategies.
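The API-centric approach and support for new channels described above both come down to one design idea: the core dispatcher talks to channels only through a shared interface, so a channel can be added or removed without touching the core. A minimal Python sketch, assuming hypothetical adapter classes (`EmailChannel`, `SmsChannel`, `RcsChannel`) that return strings instead of calling real providers:

```python
from typing import Protocol


class Channel(Protocol):
    """Hypothetical interface that every delivery channel adapter implements."""

    name: str

    def send(self, recipient: str, message: str) -> str: ...


class EmailChannel:
    name = "email"

    def send(self, recipient: str, message: str) -> str:
        return f"email to {recipient}: {message}"


class SmsChannel:
    name = "sms"

    def send(self, recipient: str, message: str) -> str:
        return f"sms to {recipient}: {message[:160]}"


class ChannelRegistry:
    """Core dispatcher: knows channels only through the Channel interface."""

    def __init__(self) -> None:
        self._channels: dict[str, Channel] = {}

    def register(self, channel: Channel) -> None:
        self._channels[channel.name] = channel

    def unregister(self, name: str) -> None:
        self._channels.pop(name, None)

    def send(self, channel_name: str, recipient: str, message: str) -> str:
        if channel_name not in self._channels:
            raise KeyError(f"no channel registered as {channel_name!r}")
        return self._channels[channel_name].send(recipient, message)


# A channel that emerges later (a hypothetical RCS adapter here) plugs in
# without any change to ChannelRegistry.
class RcsChannel:
    name = "rcs"

    def send(self, recipient: str, message: str) -> str:
        return f"rcs to {recipient}: {message}"
```

In a real platform, each adapter would wrap a provider's API behind the same `send` signature; the registry, templates, and reporting code stay unchanged when a channel is introduced or retired.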

Investing in adaptable and configurable CCM platforms is essential for companies looking to future-proof their customer communications. Companies can ensure their long-term success in an increasingly digital world by emphasizing features such as cloud-native architecture, an API-first approach, AI and ML capabilities, robust security and support for new channels. The key lies in selecting platforms that offer the right balance of flexibility, functionality and scalability to meet both current and future communication needs.

Belwo's data transformation solutions are designed to help your company use its data to drive better decisions and improve profitability. We offer solutions for transforming data from legacy systems into formats that are compatible with modern analytical tools.


Leap towards a paperless future

Customer Communication Management (CCM) allows businesses to create digital documents quickly and share them with customers periodically or on demand. Whether you need to create invoice PDFs, monthly, renewal, or digital wallet statements, or movie tickets, our CCM API helps you easily create the document template and generate digital documents without any development effort!
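To show what "generating a digital document" such as an invoice PDF involves underneath, here is a dependency-free Python sketch that writes a one-page PDF from a list of text lines. It is not the CCM API mentioned above, and a production system would use a PDF library with real templates. Assumptions: Latin-1 text only, the built-in Helvetica font, and a single US Letter page.

```python
def _escape(text: str) -> str:
    # Backslashes and parentheses must be escaped inside PDF literal strings.
    return text.replace("\\", "\\\\").replace("(", "\\(").replace(")", "\\)")


def build_pdf(lines: list[str]) -> bytes:
    """Return the bytes of a one-page PDF that prints `lines` top to bottom."""
    # Content stream: begin text, font F1 at 12 pt, 14 pt leading, start
    # 1 inch from the left and top of the page, then one line per T*.
    ops = ["BT", "/F1 12 Tf", "14 TL", "72 720 Td"]
    ops += [f"({_escape(line)}) Tj T*" for line in lines]
    ops.append("ET")
    stream = "\n".join(ops).encode("latin-1")  # assumption: Latin-1 text only

    objects = [
        b"<< /Type /Catalog /Pages 2 0 R >>",
        b"<< /Type /Pages /Kids [3 0 R] /Count 1 >>",
        b"<< /Type /Page /Parent 2 0 R /MediaBox [0 0 612 792] "
        b"/Contents 4 0 R /Resources << /Font << /F1 5 0 R >> >> >>",
        b"<< /Length %d >>\nstream\n" % len(stream) + stream + b"\nendstream",
        b"<< /Type /Font /Subtype /Type1 /BaseFont /Helvetica >>",
    ]

    out = bytearray(b"%PDF-1.4\n")
    offsets = []
    for number, body in enumerate(objects, start=1):
        offsets.append(len(out))  # byte offset of "N 0 obj", used by the xref
        out += b"%d 0 obj\n" % number + body + b"\nendobj\n"

    xref_at = len(out)
    out += b"xref\n0 %d\n" % (len(objects) + 1)
    out += b"0000000000 65535 f \n"  # every xref entry is exactly 20 bytes
    for offset in offsets:
        out += b"%010d 00000 n \n" % offset
    out += b"trailer\n<< /Size %d /Root 1 0 R >>\n" % (len(objects) + 1)
    out += b"startxref\n%d\n%%%%EOF\n" % xref_at
    return bytes(out)
```

Writing the result with `pathlib.Path("invoice.pdf").write_bytes(build_pdf(["Invoice INV-001", "Amount due: 1250.00"]))` gives a file that standard PDF viewers should open. The byte offsets in the cross-reference table are the fiddly part, which is one reason document-generation services hide this layer behind templates.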


Good News For Bitcoin From India, Should Other Countries Also Follow Suit?

Steemit, the website that drives Steem, gives currency to content creators in the form of the eponymous STEEM tokens, which can then be traded on the crypto market. Scrypt is a password-based key derivation function that is designed to be expensive computationally and memory-wise in order to make brute-force attacks unrewarding. Provides an asynchronous scrypt implementation. Asch is a Chinese blockchain solution that gives users the ability to create sidechains and blockchain applications with a streamlined interface. The user interface is welcoming, and anyone can complete a transaction without knowing much detail about Exodus. getRandomValues() is the only member of the Crypto interface that can be used from an insecure context. By July 2018, Multicoin had raised a combined $70 million from David Sacks (a member of the so-called "PayPal Mafia"), Wilson and other investors. Almost immediately they raised $2.5 million from angel investors. This tells me that investors are simply "buying the dip" rather than figuring out which cryptos have enough real-world value to survive the crash. The only time when generating the random bytes could conceivably block for a longer period of time is right after boot, when the whole system is still low on entropy. Implementations are required to use a seed with enough entropy, such as a system-level entropy source.
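The scrypt and entropy sentences above describe a key derivation function made deliberately expensive in CPU and memory, seeded by salt drawn from a system entropy source. As a hedged illustration, here is the same idea with Python's standard library (`hashlib.scrypt` and `secrets`), swapped in for whatever library the original text paraphrases. The cost parameters `n=2**14, r=8, p=1` are an assumed common choice, not values from the text, and `hashlib.scrypt` needs a Python build linked against OpenSSL 1.1 or later.

```python
import hashlib
import hmac
import secrets

# Assumed cost parameters: CPU/memory cost n, block size r, parallelism p.
# Memory use is roughly 128 * r * n bytes, i.e. about 16 MiB here.
N, R, P = 2**14, 8, 1
MAXMEM = 64 * 1024 * 1024  # let OpenSSL use up to 64 MiB for one derivation
KEYLEN = 32                # derived key length in bytes


def _derive(password: str, salt: bytes) -> bytes:
    return hashlib.scrypt(password.encode("utf-8"), salt=salt,
                          n=N, r=R, p=P, maxmem=MAXMEM, dklen=KEYLEN)


def hash_password(password: str) -> tuple[bytes, bytes]:
    # secrets draws from the operating system's entropy source.
    salt = secrets.token_bytes(16)
    return salt, _derive(password, salt)


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(_derive(password, salt), expected)
```

Raising `N` is what makes each brute-force guess cost more memory as well as time; store the salt next to the derived key so the check can be repeated later.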

Trusted by users worldwide

MoneyGram has gained over $11 million from the blockchain-based payments firm Ripple Labs

Up-to-date news and opinion on cryptocurrency, covering both technology and price

How to Trade Ethereum

60 Years of Kolkata Mint

Limit Order

Nearly 200 trading pairs

Set up a Binance Account: https://CryptoCousins.com/Binance


But keep in mind that 3.1.x versions still use Math.random(), which is not cryptographically secure because it is not random enough. If it is absolutely required to run CryptoJS in such an environment, stay with a 3.1.x version. For this reason, CryptoJS might not run in some JavaScript environments without a native crypto module. CCM mode may fail, as CCM cannot handle more than one chunk of data per instance. The Numeraire solution is a decentralized effort that is designed to deliver better results by leveraging anonymized data sets. Round represents a concerted effort to decentralize inefficient eSports platforms. The FirstBlood platform aims to optimize the static, centralized eSports world. The idea is to bring the smartest minds and top projects in the industry together for a FREE online event that anyone can watch anywhere in the world. Bitcoin is the largest and most successful cryptocurrency in the world, and it aims to solve a large-scale problem: the world economy is too interconnected and, over the long run, unstable.

Join the CryptoRisingNews mailing list and get the most important, exclusive cryptocurrency news along with cryptocurrency and fintech offers that can boost your trading profits, straight to your inbox! IOTA is a highly innovative distributed ledger technology platform that aims to function as the backbone of the Internet of Things. MaidSafeCoin is similar to Factom, providing for the storage of critical items on a decentralized blockchain ledger. The BitShares platform was originally designed to create digital assets that could be used to track assets such as gold and silver, but it has grown into a decentralized exchange that gives users the ability to issue new assets. Like Monero, Zcash offers complete transaction anonymity, but it also pioneers the use of "zero-knowledge proofs", which allow fully encrypted transactions to be confirmed as valid. Our line offers whole and natural products full of health benefits for your equine partners and pets, inside and out.

The asynchronous version of crypto.randomFill() is carried out in a single threadpool request. The last time the current support level was hit, TNTBTC grew by 250% in a single candle. There is no single entity that can affect the currency. This method can throw an exception under error conditions. Note that typedArray is modified in place, and no copy is made. Note that these charts only include a small number of actual algorithms as examples. The API also allows the use of ciphers and hashes with a small key size that are too weak for safe use. Gold has historically been viewed as the safe haven during recessions and bear markets. Keys used with the RSA, DSA, and DH algorithms are recommended to have at least 2048 bits, and the curves used with ECDSA and ECDH at least 224 bits, to be safe to use for several years. It is recommended that a salt is random and at least 16 bytes long. A selected HMAC digest algorithm specified by digest is applied to derive a key of the requested byte length (keylen) from the password, salt and iterations.

The salt should be as unique as possible. The iterations argument must be a number set as high as possible. The Helix team has set its maximum block size to 2 MB. The algorithm is dependent on the available algorithms supported by the version of OpenSSL on the platform. In this version Math.random() has been replaced by the random methods of the native crypto module. Synchronous version of crypto.randomFill(). Don't use this version! This property, however, has been deprecated and its use should be avoided. An exception is thrown when key derivation fails; otherwise the derived key is returned as a Buffer. If key is not a KeyObject, this function behaves as if key had been passed to crypto.createPublicKey(). If key is not a KeyObject, this function behaves as if key had been passed to crypto.createPrivateKey(). In that case, this function behaves as if crypto.createPrivateKey() had been called, except that the type of the returned KeyObject will be 'public' and that the private key cannot be extracted from the returned KeyObject.
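The PBKDF2 description above (an HMAC digest applied over the password, salt and iterations to produce keylen bytes, with a random salt of at least 16 bytes and as many iterations as practical) maps directly onto Python's `hashlib.pbkdf2_hmac`, used here in place of the library the text describes. The choice of SHA-256 and the iteration count of 600,000 are assumptions, roughly in line with current OWASP guidance for PBKDF2-HMAC-SHA256, not figures from the text.

```python
import hashlib
import hmac
import secrets
from typing import Optional

DIGEST = "sha256"     # HMAC digest algorithm ("digest")
ITERATIONS = 600_000  # assumption: "as high as possible" within your latency budget
KEYLEN = 32           # derived key length in bytes ("keylen")
SALT_BYTES = 16       # random salt of at least 16 bytes


def derive_key(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    """Return (salt, key); a fresh random salt is generated if none is given."""
    if salt is None:
        salt = secrets.token_bytes(SALT_BYTES)
    key = hashlib.pbkdf2_hmac(DIGEST, password.encode("utf-8"), salt,
                              ITERATIONS, dklen=KEYLEN)
    return salt, key


def verify(password: str, salt: bytes, expected: bytes) -> bool:
    # Re-derive with the stored salt and compare in constant time.
    return hmac.compare_digest(derive_key(password, salt)[1], expected)
```

The same stored salt must be passed back in when verifying; generating a new salt per password is what keeps identical passwords from producing identical keys.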


Transform your #business with our #Digital Suite of #Products that help you build the business with myriad capabilities such as #AI, Robotic Process Automation, and #API configurations. Learn more here! #technology #businessandmanagement #datamodeling #ccm #cx #Iot #datascience #analytics #artificialintelligence #automation #digitaltransformation #roboticprocessautomation #rpa #banking #innovation #informationtechnology #insurance #IT #telecommunications #nbfc #customercommunications #finance


3 Key Digital Strategies Giving Insurers the Competitive Edge in This Disrupted Landscape

The insurance industry is in one of the most disrupted phases of the current era, and this will redefine the way the industry works. The industry has been held back by legacy mainframes and tight legislation, and riddled with a low customer experience quotient. Hence, innovation and transformation were always a Herculean task. However, none of this deterred insurers from adopting the rapidly changing technology landscape. Technology is advancing rapidly and customers have multiple options, so expectations, and hence the stakes, are very high.

With the advent of high tech, customers want everything at a time convenient to them, anywhere, and from any device. With legacy and resource limitations, but also a myriad of new technologies, meeting this expectation was difficult but not impossible. Customer experience is of foremost importance, but so is legislation; hence, online self-service portals became the need of the hour.

1. The maturing of self-service and its future state

The first baby step was simple self-service portals that brought insurers' offerings online and allowed forms to be downloaded. From there, insurers rapidly moved on to online quotation services, online purchase of standard policies, endorsement requests, and other such simple updates. However, with maturing AI, machine learning, and cognitive services, self-service portals are now capable of offering more personalized, convenient, centralized, and channel-agnostic customer engagement experiences.

In recent times, self-service has come to include on-the-spot intuitive underwriting, even for complex proposals, with instant quotes and document submission, instant First Notice of Loss notification, claims notification, and so on. But this is not the end of it. We have miles to go before the full benefit of the self-service approach can be achieved. There is a lot of scope for innovation in self-service solutions, powered by AI-enabled content management solutions, cognitive services, and machine learning. The next generation of self-service solutions would empower customers to take the next right step without seeking help from insurance call centers, service agents, or brokers. This complete self-empowerment can be achieved through AI-enabled chatbots, robo-advisors, machine-learning-based underwriting and fraud assessment, automated policy servicing, and cognitive portals. No single solution, and no stand-alone hi-tech solution, will be able to provide this comprehensive customer engagement nirvana on its own. One must also be very careful about data security and GDPR guidelines. Hence, insurers need a service partner who understands the entire customer journey and can suggest the right mix of technology offerings to elevate the customer experience and fulfill the digital transformation goals of that specific insurer.

2. The customer communication management roadmap, and why omnichannel now

No self-service solution is complete without communication management. Because of archaic platforms, disparate systems, and outsourced activities, insurers are not able to provide consistent branding and customer experience. Customers are demanding more transparency in the communication process, especially with regard to claims settlement and policy servicing. Hence, in their bid to rationalize and consolidate their offerings, insurers gravitate toward personalized, targeted customer communication solutions. On-demand and interactive communications are no longer considered a differentiator but a basic must-have, as this is now the norm given changing customer behavior. With the advent of ‘digital natives’, there are various options to communicate and connect with insurers, and a seamless experience is expected throughout the communication process: customers hop from one touch-point to another without having to repeat the same details on each of them. To cater to this need, multi-channel customer communication solutions emerged, but they failed to create such seamless experiences, purely because they could not integrate the different touch-points. They failed to manage parallel communication across multiple channels, which led to the evolution of omnichannel communication. Omnichannel communication not only encourages an eco-friendly strategy but also reduces brand inconsistency and customer leakage. It enables personalized interaction with customers, meeting their emotional needs through contextual conversations based on their communication history across touch-points and channels and their current profile across different systems. With advancements in CCM technology, personalized videos with interactive features took that leap to provide the ultimate customer experience, striking the right context and enabling smooth onboarding, up-selling, and cross-selling.
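The contextual conversations described above rest on one mechanism: every channel reads and writes the same interaction history, so the next touch-point already knows what the customer said on the previous one. A minimal Python sketch of that shared context, assuming a hypothetical in-memory store and greeting text; a real insurer would back this with a customer data platform or CRM.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Interaction:
    channel: str
    topic: str
    details: dict
    at: datetime


@dataclass
class CustomerContext:
    """Hypothetical shared store that every channel reads and writes."""

    history: dict[str, list[Interaction]] = field(default_factory=dict)

    def record(self, customer_id: str, interaction: Interaction) -> None:
        self.history.setdefault(customer_id, []).append(interaction)

    def last(self, customer_id: str) -> Optional[Interaction]:
        # Most recent interaction on any channel, regardless of insert order.
        items = self.history.get(customer_id)
        return max(items, key=lambda i: i.at) if items else None

    def greeting(self, customer_id: str, channel: str) -> str:
        prev = self.last(customer_id)
        if prev is None:
            return f"[{channel}] How can we help you today?"
        # Contextual: refer to what the customer already told another channel.
        return (f"[{channel}] Following up on your {prev.topic} "
                f"from {prev.channel}; no need to repeat the details.")
```

This is the gap the text attributes to multi-channel setups: each channel kept its own history, so the customer had to start over at every touch-point.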

3. The need for a connected ecosystem and the ingredients to build one

The next step for insurers to meet millennials' demands and keep pace with technology advancement is a channel-agnostic or channel-less solution for optimized, cognitive, and tailored offerings. Insurers have always been concerned with optimizing the orchestration of their solutions: giving everything to one vendor may not be optimal, while having multiple vendors involves high cost and interrupts seamless service. Hence, an integrated solution is required for a cohesive business ecosystem. A connected ecosystem is the key to climbing the customer journey management (CJM) maturity scale.

For a connected ecosystem, the architecture must:

Be highly scalable

Be easy to upgrade

Manage seamless integration between different touch-points and provide comprehensive reports

Make it easy to introduce or remove any technology solution

Connect and disconnect smoothly with third-party vendors, as and when required

Be built from reusable assets or APIs

This enables insurers to bring technology out of the solution and strive to achieve the CX strategy goals of the business. It empowers the insurer to use best-of-breed technology in the right context without a one-size-fits-all approach, enabling them to adopt a more comprehensive solution tailored to their customers' needs.

Vendors provide technology, but partners provide solutions to business problems. Insurers are now gravitating toward the right partner, one who can orchestrate the solution and optimize and customize it for each insurer's specific needs by selecting the right capabilities from a rich catalog of technology offerings.


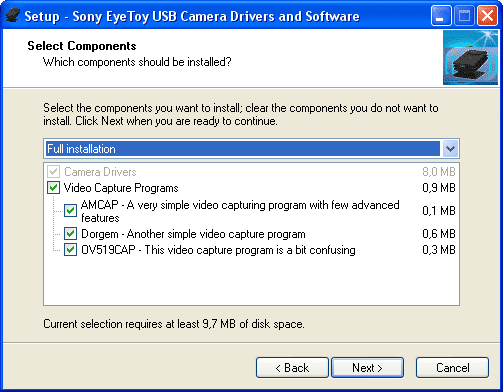

EyeToy Camera Namtai Driver for Mac

Press 7 to boot into Windows without driver signature enforcement. Navigate to your drivers, right-click HCLASSIC.inf, and choose Install. Once installed, right-click the EyeToy webcam, choose Update drivers, and navigate to OV519.ini this time, and you should be all set. Download free EyeToy drivers to use your PlayStation 2 EyeToy as a webcam on your computer. EyeToy drivers: use your PlayStation 2 EyeToy as a webcam for your Windows PC!


WEBCAM EYETOY DRIVER DETAILS:

Type: Driver

File name: webcam_eyetoy_1062.zip

File size: 3.9 MB

Rating: 4.73

Downloads: 242

Supported systems: Windows 10, 8.1, 8, 7, 2008, Vista, 2003, XP, Other

Price: Free* (*Registration required)

WEBCAM EYETOY DRIVER (webcam_eyetoy_1062.zip)

There is just the information recording file, in which there are command lines mapping the OS. Having heard about the new camera driver built into the kernel of the new Raspbian OS image, I decided to have a play using my PS3 EyeToy camera. Offers two modes, for basic and advanced usage. How to use EyeToy as a webcam on Windows 7, 64-bit and 32-bit (duration 1:52). Windows 2000, Windows XP, Windows Vista, Windows 7, Windows 8: download the drivers installer for the EyeToy USB camera for PlayStation 2 (drivers list).

Webcam drivers software, free download, CCM.

Namtai EyeToy SLEH-00031 / SCEH-0004 is a driver that usually comes on a CD with your camera. Could you maybe send the download link to your Namtai driver? Mine doesn't have one.

This utility contains the only official version of the Sony EyeToy USB webcam driver for Windows XP/7/Vista/8/8.1/10, in 32-bit and 64-bit versions.

EyeToy Drivers Windows 10

If you don't want to waste time hunting for the needed driver for your PC, feel free to use a dedicated automatic installer. Should I make use of the driver scanner, or is there an effective way to get the EyeToy USB camera working? It's actually pretty high picture quality, so have fun! Supported games use computer vision and gesture recognition to process images taken by the EyeToy. Namtai's webcams are known for their great display capacity and wonderful performance.

EyeToy USB Driver

Once installed, right-click the EyeToy webcam, choose Update drivers, navigate to the driver file this time, and you should be all set. Webcam software and driver support for Windows. If I find any drivers for Windows 7 and XP I will update this post; however, I have heard that the Vista driver is compatible with XP systems.

EyeToy-WebCam is a small application that installs everything needed to use your EyeToy as a webcam. Sony EyeToy USB camera for Windows 8. After an hour of browsing the web, I found the answer. Namtai EyeToy SLEH-00031 / SCEH-0004 does not modify any of your system's settings and is quick to detect whether a webcam is installed. An appropriate driver is not to be found via the internet.

To download the needed driver, select it from the list below and click the Download button. This allows players to interact with games using motion and color detection, as well as sound through its built-in microphone. It is the successor to the EyeToy for …

PS3 Eye camera: free driver download (driver category list). The EyeToy is a color webcam for use with the PlayStation 2. Good if you want to use EyeToy as a webcam. OVO v1.05: OVO is a webcam-based game for the PC. The steps below are based on this forum thread, with some changes to reflect my own experience. It will bring up the Found New Hardware Wizard, as shown in the screenshot below.

The technology uses computer vision and gesture recognition to process images taken by the camera.

EyeToy webcam drivers: free EyeToy webcam driver software downloads. This software is intended for the Logitech HD Pro Webcam C920 only.

The EyeToy is a color digital camera device, similar to a webcam, for the PlayStation 2. Windows will pop up a message saying it has found new hardware and does not have the proper drivers to install the hardware. Good if you want to use EyeToy as a webcam. Please help me find a way to make this work on my laptop (Windows Vista, 32-bit); thank you. Simply plug it in.

EyeToy-WebCam, Reviews for EyeToy-WebCam at.

The EyeToy USB camera for PlayStation 2 has some nice features: a wide-angle lens attachment, a built-in microphone, and a stylish finish. This EyeToy USB camera is designed for use with the PlayStation. Warning: to avoid potential electric shock or fire, do not expose the camera to rain, water, or moisture. If you own one of Namtai's webcams, then Namtai EyeToy SLEH-00031 / SCEH-0004 is a download you should make. Which version do you have: a Logitech camera or a Namtai camera?

Eyetoy Usb Camera Namtai

Sony unveils new EyeToy camera, heise online.

Eyetoy Camera Namtai Driver For Mac Os

EyeToy USB camera driver missing. This free, easy-to-use program makes your PlayStation webcam, model SLEH-00448, work on Windows. 6 drivers are found for EyeToy USB Camera for PlayStation 2. In EyeToy-compatible games, the EyeToy USB camera translates your body movements into controller input. It's actually pretty high picture quality, so have fun!

This allows players to interact with the games using motion, color detection, and also sound, through its built-in microphone. Fujitsu Celsius W410 network driver for Windows 10. EyeToy PS2 as a webcam for PC: a rare foray into the world of Windows. With a new 64-bit PC and Windows 8 on board, I set about getting the old EyeToy to work as a webcam. Sony will tell you that your PlayStation Eye cam, model SLEH-00448, will only work on PS3? Downloaded 1485 times.

Playstation 2 Eyetoy Drivers

FTP: / if you really, really want them without that survey crap. Simply plug it in and you should be set! It can also be used on Windows computers as a webcam. Watch the following video for simple instructions.

EyeToy USB Camera Driver

Tech tip: if you are having trouble deciding which driver is right, try the Driver Update Utility for Sony EyeToy USB, a software utility that finds the right driver for you automatically. It's no wonder people want to use the camera with a desktop or notebook computer.

Configuration Manager Technical Preview 2001

Microsoft Edge Management dashboard

The Microsoft Edge Management dashboard provides insights into the usage of Microsoft Edge and other browsers. In this dashboard, you can:
See how many of your devices have Microsoft Edge installed
See how many clients have different versions of Microsoft Edge installed
View the installed browsers across devices

From the Software Library workspace, click Microsoft Edge Management to see the dashboard. To change the collection for the graph data, click Browse and choose another collection. By default, your five largest collections are in the drop-down list. When you select a collection that isn't in the list, the newly selected collection takes the bottom spot on the drop-down list.

This preview release also includes the following improvements.

Improvements to Check Readiness task sequence step

You can now verify more device properties in the Check Readiness task sequence step. Use this step in a task sequence to verify that the target computer meets your prerequisite conditions. The new checks are:
Architecture of current OS: 32-bit or 64-bit
Minimum OS version: for example, 10.0.16299
Maximum OS version: for example, 10.0.18356
Minimum client version: for example, 5.00.08913.1005
Language of current OS: select the language name; the step compares the associated language code
AC power plugged in
Network adapter connected
Network adapter is not wireless

None of these new checks are selected by default in new or existing instances of the step.

The step also now sets read-only variables for whether each check returned true (1) or false (0). If you don't enable a check, the value of the corresponding read-only variable is blank. The variables are: _TS_CRMEMORY, _TS_CRSPEED, _TS_CRDISK, _TS_CROSTYPE, _TS_CRARCH, _TS_CRMINOSVER, _TS_CRMAXOSVER, _TS_CRCLIENTMINVER, _TS_CROSLANGUAGE, _TS_CRACPOWER, _TS_CRNETWORK, _TS_CRWIRED.

The smsts.log includes the outcome of all checks. If one check fails, the task sequence engine continues to evaluate the other checks; the step doesn't fail until all checks are complete. If at least one check fails, the step fails and returns error code 4316, which translates to "The resource required for this operation does not exist."

Integrate with Power BI Report Server

You can now integrate Power BI Report Server with Configuration Manager reporting. This integration gives you modern visualization and better performance. It adds console support for Power BI reports similar to what already exists for SQL Server Reporting Services. Save Power BI Desktop report files (.PBIX) and deploy them to the Power BI Report Server, as you already do with SQL Server Reporting Services report files (.RDL). You can also launch the reports in the browser directly from the Configuration Manager console.
Prerequisites and initial setup
Power BI Report Server license
Download Microsoft Power BI Report Server - September 2019
Download Microsoft Power BI Desktop (Optimized for Power BI Report Server - September 2019)

Setup has two parts: configure the reporting services point, then configure the Configuration Manager console.

Configure the reporting services point

This process varies depending on whether you already have this role in the site.

If you don't have a reporting services point, do all steps of this process on the same server:
Install Power BI Report Server.
Add the reporting services point role in Configuration Manager.

If you already have a reporting services point, do all steps of this process on the same server:
In Reporting Server Configuration Manager, back up the encryption keys.
Remove the reporting services point role from the site.
Uninstall SQL Server Reporting Services, but keep the database.
Install Power BI Report Server.
Configure the Power BI Report Server to use the previous report server database.
Use Reporting Server Configuration Manager to restore the encryption keys.
Add the reporting services point role in Configuration Manager.

Configure the Configuration Manager console

On a computer that has the Configuration Manager console, update the console to the latest version.
Install Power BI Desktop. Make sure the language is the same.
After it installs, launch Power BI Desktop at least once before you open the Configuration Manager console.

OneTrace log groups

OneTrace now supports customizable log groups, similar to the feature in Support Center. Log groups allow you to open all log files for a single scenario.
OneTrace currently includes groups for the following scenarios:
Application management
Compliance settings (also referred to as Desired Configuration Management)
Software updates

The GroupType property accepts the following values:
0: Unknown or other
1: Configuration Manager client logs
2: Configuration Manager server logs

The GroupFilePath property can include an explicit path for the log files. If it's blank, OneTrace relies on the registry configuration for the group type. For example, if you set GroupType=1, by default OneTrace automatically looks in C:\Windows\CCM\Logs for the logs in the group; in this case, you don't need to specify GroupFilePath.

Improvements to administration service

The administration service is a REST API for the SMS Provider. Previously, you had to implement one of the following dependencies:
Enable Enhanced HTTP for the entire site
Manually bind a PKI-based certificate to IIS on the server that hosts the SMS Provider role

Starting in this release, the administration service automatically uses the site's self-signed certificate, which reduces the friction of using the administration service. The site always generates this certificate. The Enhanced HTTP site setting Use Configuration Manager-generated certificates for HTTP site systems only controls whether site systems use it. The administration service now ignores this site setting: it always uses the site's certificate, even if no other site system is using Enhanced HTTP. The only exception is if you've already bound a PKI certificate to port 443 on the SMS Provider server; in that case, the administration service uses that existing certificate.

Validate this change in the Configuration Manager console. Go to the Administration workspace, expand Security, and select the Console Connections node. This node depends on the administration service. The earlier prerequisites no longer apply; you can view connected consoles by default.
Wake up a device from the central administration site

From the central administration site (CAS), in the Devices or Device Collections node, you can now use the client notification action to Wake Up devices. This action was previously available only from a primary site.

Improvements to task sequence progress

The task sequence progress window includes the following improvements:
Shows the current step number, total number of steps, and percent completion
Increased the width of the window to give you more space to better show the organization name in a single line

Improvements to orchestration groups

Orchestration Groups are the evolution of the "Server Groups" feature. They were first introduced in the technical preview for Configuration Manager, version 1909. In this technical preview, we've added the following improvements to Orchestration Groups:
You can now specify custom timeout values for:
  Orchestration Group: time limit for all group members to complete update installation
  Orchestration Group members: time limit for a single device in the group to complete the update installation
When selecting group members, you now have a drop-down list to select the site code.
When selecting resources for the group, only valid clients are shown. Checks are made to verify the site code, that the client is installed, and that resources aren't duplicated.
You can now set values for the following items in the Create Orchestration Group Wizard:
  Number of machines to be updated at the same time, in the Rules Selection page
  Script timeout, in the PreScript page
  Script timeout, in the PostScript page
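The Check Readiness outcome rules in this preview (each enabled check sets its read-only variable to 1 or 0, a disabled check leaves it blank, every check is evaluated, and any failure makes the step return error code 4316) can be modeled in a few lines. This is a hypothetical Python sketch for reasoning about variable values you have already collected, for example from smsts.log; `evaluate_check_readiness` and its `0`-for-success convention are assumptions, not part of Configuration Manager.

```python
from typing import Dict, List, Tuple

# Read-only variables set by the Check Readiness step, as listed in the preview notes.
CHECK_VARIABLES = [
    "_TS_CRMEMORY", "_TS_CRSPEED", "_TS_CRDISK", "_TS_CROSTYPE",
    "_TS_CRARCH", "_TS_CRMINOSVER", "_TS_CRMAXOSVER", "_TS_CRCLIENTMINVER",
    "_TS_CROSLANGUAGE", "_TS_CRACPOWER", "_TS_CRNETWORK", "_TS_CRWIRED",
]

# "The resource required for this operation does not exist."
ERROR_CHECK_FAILED = 4316


def evaluate_check_readiness(values: Dict[str, str]) -> Tuple[int, List[str]]:
    """Hypothetical model of the step outcome.

    "1" means the check passed, "0" means it failed, and a blank or missing
    value means the check was not enabled. All checks are inspected before
    deciding, mirroring the described behavior. Returns (exit code, failed
    variable names); 0 for a passing step is an assumption of this sketch.
    """
    failed = []
    for name in CHECK_VARIABLES:
        value = values.get(name, "")
        if value == "":
            continue  # check not enabled, so the variable is blank
        if value == "0":
            failed.append(name)
    return (ERROR_CHECK_FAILED if failed else 0), failed
```

For example, a device that passes the architecture check but is running on battery would produce `(4316, ["_TS_CRACPOWER"])`.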

Creating Interactive Documents: Engaging Customers through CCM Document Generation

Creating and storing documents with easy access has always been a priority for modern businesses. The rapid growth of technology has brought a range of options that take distinctive approaches to document management. The best document management software can create, customize, and deliver professional documents with ease. Such tools help businesses generate a variety of customer-facing documents within minutes, including invoices, movie tickets, membership forms, insurance certificates, property loan offers, bank statements, marketing collateral, and more.

Leveraging Customer Communications Management (CCM)

Because of the digital transformation witnessed across industries, new business models and ways of communicating keep emerging. Moving away from traditional paperwork and embracing digital documents has become important for companies responding to customers' growing needs. This digital transformation is much more than a tech trend: it puts the customer at the center of communication processes so that their concerns are addressed in an orderly way.

Consumers are becoming more demanding every day, which contributes to the popularity of digital document creators. Such tools allow businesses to deliver relevant, personalized, and effective communication. Customer Communications Management (CCM) solutions have become especially prominent because they support the creation, multichannel distribution, and archiving of digital documents. A CCM API supports the whole chain: processing and enriching complex data, improving its quality, and administering multi-step workflows that generate documents and distribute them to the relevant customers via digital channels.
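The distribution end of that chain can be illustrated with a small routing sketch: each generated document is sent over the first digital channel the customer prefers and has contact details for, with print as a fallback. Everything here (the `Customer` fields, the channel names, the print fallback) is a hypothetical illustration, not any vendor's CCM API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Customer:
    name: str
    email: str = ""
    phone: str = ""
    postal_address: str = ""
    # Digital channels in the customer's order of preference (hypothetical field).
    preferred_channels: List[str] = field(default_factory=lambda: ["email", "sms"])


def choose_channel(customer: Customer) -> Tuple[str, str]:
    """Pick the first preferred channel with contact details; else fall back to print."""
    contacts = {"email": customer.email, "sms": customer.phone}
    for channel in customer.preferred_channels:
        address = contacts.get(channel, "")
        if address:
            return channel, address
    return "print", customer.postal_address


def distribute(
    customers: List[Customer], render: Callable[[Customer], str]
) -> List[Tuple[str, str, str]]:
    """Render one document per customer and tag it with its delivery channel."""
    outbox = []
    for customer in customers:
        channel, address = choose_channel(customer)
        outbox.append((channel, address, render(customer)))
    return outbox
```

Keeping channel selection separate from rendering means the same document content can be reused whatever channel a customer ends up on.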

Simplifying digital document creation

By using CCM tools, businesses can significantly improve the speed and efficiency of document creation. These tools streamline the process of generating customer-facing materials: rather than depending on manual methods, businesses use pre-designed templates to create tailored documents for individual customers. This approach saves considerable time and resources and lets a business generate documents promptly in response to customer needs. Flexibility and customization options are among the biggest advantages of Customer Communications Management software. They allow businesses of different types to design and tailor documents to align with their brand identity and messaging. Template options help maintain consistent branding across different types of documents, reinforcing brand recognition and professionalism.
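Template-driven generation of this kind can be sketched with Python's standard `string.Template`: brand-wide fields are defined once and merged with per-customer data for every document. The invoice layout and field names below are hypothetical examples, not part of any particular CCM product.

```python
from string import Template

# Hypothetical invoice template; $-placeholders are filled per customer.
INVOICE_TEMPLATE = Template(
    "$company\n"
    "Invoice $invoice_id for $customer_name\n"
    "Amount due: $amount_due by $due_date\n"
)

# Branding fields shared by every generated document (keeps branding consistent).
BRAND = {"company": "Example Corp"}


def render_invoice(customer: dict) -> str:
    """Merge brand-wide fields with per-customer data into one document."""
    fields = {**BRAND, **customer}
    # substitute() raises KeyError if any placeholder has no value, so an
    # incomplete document fails loudly instead of being sent out.
    return INVOICE_TEMPLATE.substitute(fields)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing customer field stops generation instead of leaving a raw placeholder in a customer-facing document.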

February 11, 2020 at 10:00PM - The Big Data Bundle (93% discount) Ashraf

The Big Data Bundle (93% discount). Hurry: the offer lasts only a limited time.

Hive is a Big Data processing tool that helps you leverage the power of distributed computing and Hadoop for analytical processing. Its interface is somewhat similar to SQL, but with some key differences. This course is an end-to-end guide to using Hive and connecting the dots to SQL. It suits professional and aspiring data analysts and engineers alike. Don’t know SQL? No problem, there’s a primer included in this course!

Access 86 lectures & 15 hours of content 24/7

Write complex analytical queries on data in Hive & uncover insights

Leverage ideas of partitioning & bucketing to optimize queries in Hive

Customize Hive w/ user-defined functions in Java & Python

Understand what goes on under the hood of Hive w/ HDFS & MapReduce
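The partitioning and bucketing ideas in this course can be illustrated with a simplified model: partitions act like directories keyed by a column value, and buckets split each partition by hash of another column modulo the bucket count. This is a plain-Python sketch, not Hive itself; `crc32` stands in for Hive's own hash function, which depends on the column type and Hive version, and reading a single bucket for an equality filter is shown only to illustrate why bucketing can narrow a scan.

```python
import zlib
from collections import defaultdict


def bucket_for(key, num_buckets):
    """Assign a row to a bucket: hash(bucketing column) mod number of buckets.

    crc32 is a stand-in; Hive's real hash depends on column type and version.
    """
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def write_table(rows, partition_col, bucket_col, num_buckets):
    """Lay rows out as {partition value: {bucket id: [rows]}}, like
    directories (partitions) containing files (buckets)."""
    layout = defaultdict(lambda: defaultdict(list))
    for row in rows:
        bucket = bucket_for(row[bucket_col], num_buckets)
        layout[row[partition_col]][bucket].append(row)
    return layout


def scan(layout, partition_value, bucket_col=None, key=None, num_buckets=None):
    """Read one partition only (partition pruning). If the bucketing column is
    filtered to a single key, read only that key's bucket as well."""
    partition = layout.get(partition_value, {})
    if key is not None:
        candidates = partition.get(bucket_for(key, num_buckets), [])
        return [row for row in candidates if row[bucket_col] == key]
    return [row for bucket in partition.values() for row in bucket]
```

A query filtered on the partition column touches one partition's data instead of the whole table, which is the main payoff of partitioning.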

Big Data sounds pretty daunting, doesn’t it? Well, this course aims to make it a lot simpler for you. Using Hadoop and MapReduce, you’ll learn how to process and manage enormous amounts of data efficiently. Any company that collects massive amounts of data, from startups to the Fortune 500, needs people fluent in Hadoop and MapReduce, making this course a must for anybody interested in data science.

Access 71 lectures & 13 hours of content 24/7

Set up your own Hadoop cluster using virtual machines (VMs) & the Cloud

Understand HDFS, MapReduce & YARN & their interaction

Use MapReduce to recommend friends in a social network, build search engines & generate bigrams

Chain multiple MapReduce jobs together

Write your own customized partitioner

Learn to globally sort a large amount of data by sampling input files
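Two of the techniques listed above, generating bigrams with MapReduce and globally sorting data with a custom partitioner built from sampled keys, can be simulated in plain Python. This sketch runs the map, shuffle, and reduce phases in one process rather than on Hadoop; the sampling partitioner follows the idea behind Hadoop's InputSampler and TotalOrderPartitioner, but the function names here are hypothetical.

```python
import bisect
import random
from collections import defaultdict
from itertools import chain


def map_bigrams(line):
    """Map phase: emit ((w1, w2), 1) for each adjacent word pair."""
    words = line.lower().split()
    return [((a, b), 1) for a, b in zip(words, words[1:])]


def shuffle(pairs):
    """Shuffle phase: group all values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_counts(groups):
    """Reduce phase: sum the counts for each bigram."""
    return {key: sum(values) for key, values in groups.items()}


def bigram_job(lines):
    """Run map -> shuffle -> reduce over an iterable of text lines."""
    mapped = chain.from_iterable(map_bigrams(line) for line in lines)
    return reduce_counts(shuffle(mapped))


def sample_split_points(keys, num_reducers, sample_size, seed=0):
    """Pick num_reducers - 1 split points from a sorted random sample of keys."""
    rng = random.Random(seed)
    sample = sorted(rng.sample(keys, min(sample_size, len(keys))))
    step = len(sample) / num_reducers
    return [sample[int(step * i)] for i in range(1, num_reducers)]


def range_partition(key, split_points):
    """Custom partitioner: send a key to the reducer whose key range contains it.

    Because reducer i only receives keys below those of reducer i + 1,
    concatenating the sorted reducer outputs yields a globally sorted result.
    """
    return bisect.bisect_right(split_points, key)
```

Sampling matters because evenly spaced split points over the raw key range could leave some reducers with far more data than others when keys are skewed.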

Analysts and data scientists typically have to work with several systems to manage massive data sets effectively. Spark, on the other hand, provides a single engine to explore and work with large amounts of data, run machine learning algorithms, and perform many other functions in a single interactive environment. This course’s focus on new and innovative technologies in data science and machine learning makes it an excellent choice for anyone who wants to work in the lucrative, growing field of Big Data.

Access 52 lectures & 8 hours of content 24/7

Use Spark for a variety of analytics & machine learning tasks

Implement complex algorithms like PageRank & Music Recommendations

Work w/ a variety of datasets from airline delays to Twitter, web graphs, & product ratings

Employ all the different features & libraries of Spark, like RDDs, DataFrames, Spark SQL, MLlib, Spark Streaming & GraphX
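The PageRank algorithm mentioned above is often taught in Spark as an iterative loop: each page splits its rank among its outgoing links, and each page's new rank is 0.15 + 0.85 × (sum of contributions received). The sketch below reproduces that loop in plain Python so it runs without a Spark cluster; unlike the classic Spark example, it keeps pages that receive no links at the minimum rank instead of dropping them, which is a choice of this sketch.

```python
from collections import defaultdict


def pagerank(links, iterations=10, damping=0.85):
    """Iterative PageRank in the style of the classic Spark example.

    links maps each page to the list of pages it links to. Every iteration,
    each page splits its current rank evenly among its outgoing links; a
    page's new rank is (1 - damping) + damping * (contributions received).
    """
    pages = set(links) | {dest for dests in links.values() for dest in dests}
    ranks = {page: 1.0 for page in pages}
    for _ in range(iterations):
        contributions = defaultdict(float)
        for page, dests in links.items():
            if not dests:
                continue  # dangling page: its rank is not redistributed here
            share = ranks[page] / len(dests)
            for dest in dests:
                contributions[dest] += share
        ranks = {page: (1 - damping) + damping * contributions[page] for page in pages}
    return ranks
```

In Spark, the `contributions` step corresponds to a join of links with ranks followed by a `reduceByKey`; here a dictionary plays both roles.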

The functional programming nature and the availability of a REPL environment make Scala particularly well suited for a distributed computing framework like Spark. Using these two technologies in tandem can allow you to effectively analyze and explore data in an interactive environment with extremely fast feedback. This course will teach you how to best combine Spark and Scala, making it perfect for aspiring data analysts and Big Data engineers.

Access 51 lectures & 8.5 hours of content 24/7

Use Spark for a variety of analytics & machine learning tasks

Understand functional programming constructs in Scala

Implement complex algorithms like PageRank & Music Recommendations

Work w/ a variety of datasets from airline delays to Twitter, web graphs, & product ratings

Use the different features & libraries of Spark, like RDDs, DataFrames, Spark SQL, MLlib, Spark Streaming, & GraphX

Write code in Scala REPL environments & build Scala applications w/ an IDE

For Big Data engineers and data analysts, HBase is an extremely effective database for organizing and managing massive data sets. HBase offers a high level of flexibility, providing column-oriented storage, a flexible schema (only column families are fixed in advance), and low latency to accommodate the dynamically changing needs of applications. With the 25 examples contained in this course, you’ll get a complete grasp of HBase that you can leverage in interviews for Big Data positions.

Access 41 lectures & 4.5 hours of content 24/7

Set up a database for your application using HBase

Integrate HBase w/ MapReduce for data processing tasks

Create tables, insert, read & delete data from HBase

Get a complete understanding of HBase & its role in the Hadoop ecosystem

Explore CRUD operations in the shell, & with the Java API
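HBase's data model behind those CRUD operations can be illustrated with an in-memory toy: a cell is addressed by row key, column family, and column qualifier, and holds timestamped versions. This is a hypothetical teaching model, not the HBase shell or Java client API; in particular, real HBase records deletes as tombstone markers that are cleaned up during compaction, while this toy removes data immediately.

```python
import time
from collections import defaultdict


class ToyHTable:
    """In-memory toy of HBase's data model (not the HBase client API).

    Column families are fixed when the table is created; qualifiers inside a
    family are not, so different rows can carry different columns.
    """

    def __init__(self, families, max_versions=1):
        self.families = set(families)
        self.max_versions = max_versions
        # row key -> (family, qualifier) -> list of (timestamp, value), newest first
        self.rows = defaultdict(dict)

    def put(self, row, family, qualifier, value, ts=None):
        """Create or update a cell, keeping at most max_versions versions."""
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = time.time_ns() if ts is None else ts
        versions = self.rows[row].setdefault((family, qualifier), [])
        versions.append((ts, value))
        versions.sort(key=lambda version: version[0], reverse=True)
        del versions[self.max_versions:]  # drop the oldest versions

    def get(self, row, family, qualifier):
        """Read the newest version of a cell, or None if it does not exist."""
        versions = self.rows.get(row, {}).get((family, qualifier))
        return versions[0][1] if versions else None

    def delete(self, row, family=None, qualifier=None):
        """Delete a whole row, or a single cell when family/qualifier are given."""
        if family is None:
            self.rows.pop(row, None)
        else:
            self.rows.get(row, {}).pop((family, qualifier), None)
```

Note how `user2` in a table can hold an `email` column that `user1` never had: only the family list is declared up front.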

Think about the last time you saw a completely unorganized spreadsheet. Now imagine that spreadsheet was 100,000 times larger. Mind-boggling, right? That’s why there’s Pig. Pig works with unstructured data to wrestle it into a more palatable form that can be stored in a data warehouse for reporting and analysis. With the massive sets of disorganized data many companies are working with today, people who can work with Pig are in major demand. By the end of this course, you could qualify as one of those people.

Access 34 lectures & 5 hours of content 24/7

Clean up server logs using Pig

Work w/ unstructured data to extract information, transform it, & store it in a usable form

Write intermediate-level Pig scripts to munge data

Optimize Pig operations to work on large data sets
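Cleaning server logs, as in the first Pig exercise, usually follows a LOAD, FILTER, GROUP, COUNT pattern. The sketch below expresses that pattern in Python rather than Pig Latin, assuming logs in the Apache Common Log Format; malformed lines are filtered out, and a missing byte count (`-`) is normalized to 0, which is a choice of this sketch.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "request" status bytes
LOG_PATTERN = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)$'
)


def parse(lines):
    """LOAD + FILTER: yield well-formed lines as dictionaries, skip the rest."""
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match:
            record = match.groupdict()
            record["size"] = 0 if record["size"] == "-" else int(record["size"])
            yield record


def count_by_status(records):
    """GROUP BY status, then COUNT each group."""
    return Counter(record["status"] for record in records)
```

In Pig, the same flow would be a LOAD with a schema, a FILTER on the parsed fields, and a GROUP followed by FOREACH ... GENERATE COUNT.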

Data sets can outgrow traditional databases, much like children outgrow clothes. Unlike children’s growth patterns, however, massive amounts of data can be extremely unpredictable and unstructured. For Big Data, the Cassandra distributed database offers a solution, using partitioning and replication to keep your data distributed and available even when nodes in a cluster go down. Children, you’re on your own.

Access 44 lectures & 5.5 hours of content 24/7

Set up & manage a cluster using the Cassandra Cluster Manager (CCM)

Create keyspaces, column families, & perform CRUD operations using the Cassandra Query Language (CQL)

Design primary keys & secondary indexes, & learn partitioning & clustering keys

Understand restrictions on queries based on primary & secondary key design

Discover tunable consistency using quorum & local quorum

Learn architecture & storage components: Commit Log, MemTable, SSTables, Bloom Filters, Index File, Summary File & Data File

Build a Miniature Catalog Management System using the Cassandra Java driver
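The tunable consistency topic above reduces to simple arithmetic: QUORUM needs a majority of replicas, floor(RF / 2) + 1, computed over the sum of replication factors across data centers, while LOCAL_QUORUM counts only the local data center. A read is guaranteed to overlap the latest acknowledged write when R + W > RF. This is a small illustrative sketch; the function names are hypothetical, not part of the Cassandra Java driver.

```python
def quorum(replication_factor):
    """QUORUM: a majority of replicas, floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def global_quorum(rf_per_dc):
    """QUORUM across data centers uses the sum of all DC replication factors."""
    return quorum(sum(rf_per_dc.values()))


def local_quorum(rf_per_dc, local_dc):
    """LOCAL_QUORUM: a majority of the replicas in the coordinator's own DC."""
    return quorum(rf_per_dc[local_dc])


def reads_see_latest_write(write_acks, read_acks, replication_factor):
    """Read and write replica sets must overlap: R + W > RF."""
    return read_acks + write_acks > replication_factor
```

With RF = 3, writing and reading at QUORUM (2 + 2 > 3) guarantees overlap, whereas ONE/ONE (1 + 1) trades that guarantee for lower latency.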

Working with Big Data can obviously be a very complex task. That’s why it’s important to master Oozie. Oozie makes managing a multitude of jobs on different time schedules, and managing entire data pipelines, significantly easier as long as you know the right configuration parameters. This course will teach you how to determine those parameters so your workflows are significantly streamlined.

Access 23 lectures & 3 hours of content 24/7

Install & set up Oozie

Configure Workflows to run jobs on Hadoop

Create time-triggered & data-triggered Workflows

Build & optimize data pipelines using Bundles
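The difference between time-triggered and data-triggered workflows can be sketched as follows: a time trigger materializes one action per frequency interval, and a data trigger additionally holds each action until its input dataset instance exists. This is a conceptual Python model, not Oozie's coordinator XML; the half-open [start, end) window and keying datasets by nominal time are simplifications of this sketch.

```python
from datetime import datetime, timedelta


def nominal_times(start, end, frequency):
    """Time trigger: one action per frequency interval in [start, end)."""
    times = []
    current = start
    while current < end:
        times.append(current)
        current += frequency
    return times


def ready_actions(start, end, frequency, available_datasets):
    """Data trigger: run an action only once its input dataset instance
    (keyed here by nominal time) is available."""
    return [t for t in nominal_times(start, end, frequency) if t in available_datasets]
```

For a daily window with a six-hour frequency this yields four nominal times; if only the 00:00 and 12:00 inputs have landed, only those two actions are ready to run.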

Flume and Sqoop are important elements of the Hadoop ecosystem: Flume collects streaming data such as logs, and Sqoop transfers data between relational databases and Hadoop, together moving data from sources like local file systems and databases into data stores such as HDFS. This is essential to organizing and effectively managing Big Data, making Flume and Sqoop great skills to set you apart from other data analysts.

Access 16 lectures & 2 hours of content 24/7

Use Flume to ingest data to HDFS & HBase

Optimize Sqoop to import data from MySQL to HDFS & Hive

Ingest data from a variety of sources including HTTP, Twitter & MySQL
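When Sqoop imports a table from MySQL in parallel, it looks up the minimum and maximum of a split column and divides that range among the mappers, each of which reads its slice with a WHERE clause. The sketch below models that idea for an integer column; it is a simplified illustration, and Sqoop's own splitter may place boundaries slightly differently. Function names are hypothetical.

```python
def integer_splits(min_value, max_value, num_mappers):
    """Divide [min_value, max_value] into num_mappers contiguous ranges.

    Each tuple is (lower, upper, upper_inclusive); only the last range
    includes its upper bound, so no row is read twice.
    """
    if num_mappers < 1:
        raise ValueError("need at least one mapper")
    span = max_value - min_value
    bounds = [min_value + (span * i) // num_mappers for i in range(num_mappers + 1)]
    return [
        (bounds[i], bounds[i + 1], i == num_mappers - 1)
        for i in range(num_mappers)
    ]


def where_clause(column, split):
    """Build the per-mapper filter for one split."""
    lower, upper, inclusive = split
    op = "<=" if inclusive else "<"
    return f"{column} >= {lower} AND {column} {op} {upper}"
```

For ids 1 to 100 and four mappers this produces the ranges 1-25, 25-50, 50-75, and 75-100, with only the last range closed at the top.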

from Active Sales – SharewareOnSale https://ift.tt/2qeN7bl https://ift.tt/eA8V8J via Blogger https://ift.tt/37kIn4G

January 20, 2020 at 10:00PM - The Big Data Bundle (93% discount) Ashraf

from Active Sales – SharewareOnSale https://ift.tt/2qeN7bl https://ift.tt/eA8V8J via Blogger https://ift.tt/36fNMJC

October 12, 2019 at 10:00PM - The Big Data Bundle (93% discount) Ashraf

The Big Data Bundle (93% discount) Hurry Offer Only Last For HoursSometime. Don't ever forget to share this post on Your Social media to be the first to tell your firends. This is not a fake stuff its real.

Hive is a Big Data processing tool that helps you leverage the power of distributed computing and Hadoop for analytical processing. Its interface is somewhat similar to SQL, but with some key differences. This course is an end-to-end guide to using Hive and connecting the dots to SQL. It’s perfect for both professional and aspiring data analysts and engineers alike. Don’t know SQL? No problem, there’s a primer included in this course!

Access 86 lectures & 15 hours of content 24/7

Write complex analytical queries on data in Hive & uncover insights

Leverage ideas of partitioning & bucketing to optimize queries in Hive

Customize Hive w/ user defined functions in Java & Python

Understand what goes on under the hood of Hive w/ HDFS & MapReduce

Big Data sounds pretty daunting doesn’t it? Well, this course aims to make it a lot simpler for you. Using Hadoop and MapReduce, you’ll learn how to process and manage enormous amounts of data efficiently. Any company that collects mass amounts of data, from startups to Fortune 500, need people fluent in Hadoop and MapReduce, making this course a must for anybody interested in data science.

Access 71 lectures & 13 hours of content 24/7

Set up your own Hadoop cluster using virtual machines (VMs) & the Cloud

Understand HDFS, MapReduce & YARN & their interaction

Use MapReduce to recommend friends in a social network, build search engines & generate bigrams

Chain multiple MapReduce jobs together

Write your own customized partitioner

Learn to globally sort a large amount of data by sampling input files

Analysts and data scientists typically have to work with several systems to effectively manage mass sets of data. Spark, on the other hand, provides you a single engine to explore and work with large amounts of data, run machine learning algorithms, and perform many other functions in a single interactive environment. This course’s focus on new and innovating technologies in data science and machine learning makes it an excellent one for anyone who wants to work in the lucrative, growing field of Big Data.

Access 52 lectures & 8 hours of content 24/7

Use Spark for a variety of analytics & machine learning tasks

Implement complex algorithms like PageRank & Music Recommendations

Work w/ a variety of datasets from airline delays to Twitter, web graphs, & product ratings

Employ all the different features & libraries of Spark, like RDDs, Dataframes, Spark SQL, MLlib, Spark Streaming & GraphX

The functional programming nature and the availability of a REPL environment make Scala particularly well suited for a distributed computing framework like Spark. Using these two technologies in tandem can allow you to effectively analyze and explore data in an interactive environment with extremely fast feedback. This course will teach you how to best combine Spark and Scala, making it perfect for aspiring data analysts and Big Data engineers.

Access 51 lectures & 8.5 hours of content 24/7

Use Spark for a variety of analytics & machine learning tasks

Understand functional programming constructs in Scala

Implement complex algorithms like PageRank & Music Recommendations

Work w/ a variety of datasets from airline delays to Twitter, web graphs, & Product Ratings

Use the different features & libraries of Spark, like RDDs, Dataframes, Spark SQL, MLlib, Spark Streaming, & GraphX

Write code in Scala REPL environments & build Scala applications w/ an IDE

For Big Data engineers and data analysts, HBase is an extremely effective databasing tool for organizing and manage massive data sets. HBase allows an increased level of flexibility, providing column oriented storage, no fixed schema and low latency to accommodate the dynamically changing needs of applications. With the 25 examples contained in this course, you’ll get a complete grasp of HBase that you can leverage in interviews for Big Data positions.

Access 41 lectures & 4.5 hours of content 24/7

Set up a database for your application using HBase

Integrate HBase w/ MapReduce for data processing tasks

Create tables, insert, read & delete data from HBase

Get a complete understanding of HBase & its role in the Hadoop ecosystem

Explore CRUD operations in the shell, & with the Java API

Think about the last time you saw a completely unorganized spreadsheet. Now imagine that spreadsheet was 100,000 times larger. Mind-boggling, right? That’s why there’s Pig. Pig works with unstructured data to wrestle it into a more palatable form that can be stored in a data warehouse for reporting and analysis. With the massive sets of disorganized data many companies are working with today, people who can work with Pig are in major demand. By the end of this course, you could qualify as one of those people.

Access 34 lectures & 5 hours of content 24/7

Clean up server logs using Pig

Work w/ unstructured data to extract information, transform it, & store it in a usable form

Write intermediate-level Pig scripts to munge data

Optimize Pig operations to work on large data sets
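Log cleanup in Pig usually follows a LOAD, FILTER, GROUP, FOREACH ... GENERATE pattern. As a rough sketch of that flow, the Python below parses lines in the Apache Common Log Format, drops malformed lines, keeps only error responses (status 400 and above) and counts them per path. The log format, the regex and the focus on error counts are assumptions; a real Pig script would express each step as a relation.

```python
import re
from collections import Counter

# Apache Common Log Format, e.g.
# 127.0.0.1 - - [10/Oct/2000:13:55:36 -0700] "GET /index.html HTTP/1.0" 200 2326
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)

def error_counts_by_path(lines):
    """Python analogue of a Pig pipeline that LOADs raw log lines, parses
    them, FILTERs to error responses, GROUPs by path and COUNTs each group."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # drop malformed lines instead of storing them
        if int(match.group("status")) >= 400:  # FILTER step
            counts[match.group("path")] += 1   # GROUP + COUNT steps
    return dict(counts)
```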

Data sets can outgrow traditional databases, much like children outgrow clothes. Unlike children’s growth patterns, however, massive amounts of data can be extremely unpredictable and unstructured. For Big Data, the Cassandra distributed database is a strong solution, using partitioning and replication to keep your data structured and available even when nodes in a cluster go down. Children, you’re on your own.

Access 44 lectures & 5.5 hours of content 24/7

Set up & manage a cluster using the Cassandra Cluster Manager (CCM)

Create keyspaces, column families, & perform CRUD operations using the Cassandra Query Language (CQL)

Design primary keys & secondary indexes, & learn partitioning & clustering keys

Understand restrictions on queries based on primary & secondary key design

Discover tunable consistency using quorum & local quorum

Learn architecture & storage components: Commit Log, MemTable, SSTables, Bloom Filters, Index File, Summary File & Data File

Build a Miniature Catalog Management System using the Cassandra Java driver
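The partitioning, replication and quorum ideas in the list above can be sketched in a few lines of Python. This is a simplified model, not the Cassandra driver: each node owns one token (the values are hypothetical), replicas are placed SimpleStrategy-style on the next nodes clockwise around the ring, and keys are hashed with MD5 as the older RandomPartitioner did, whereas the default Murmur3Partitioner uses a different hash. A QUORUM needs floor(RF / 2) + 1 replicas; LOCAL_QUORUM applies the same formula to the replication factor of the local data center.

```python
import bisect
import hashlib

def token(partition_key: str) -> int:
    """Map a partition key to a position on the ring.

    Assumption: MD5, as in Cassandra's older RandomPartitioner; the default
    Murmur3Partitioner uses a different hash, so real tokens will differ.
    """
    return int.from_bytes(hashlib.md5(partition_key.encode()).digest(), "big")

class Ring:
    def __init__(self, node_tokens):
        # node_tokens: dict of node name -> token it owns (hypothetical values)
        self.tokens = sorted(node_tokens.values())
        self.owner = {t: n for n, t in node_tokens.items()}

    def replicas(self, partition_key, replication_factor):
        """SimpleStrategy-style placement: start at the first node whose token
        is >= the key's token (wrapping around) and take the next RF nodes."""
        start = bisect.bisect_left(self.tokens, token(partition_key))
        n = len(self.tokens)
        return [self.owner[self.tokens[(start + i) % n]]
                for i in range(min(replication_factor, n))]

def quorum(replication_factor: int) -> int:
    """Number of replicas that must acknowledge a QUORUM read or write."""
    return replication_factor // 2 + 1
```

With RF = 3, a quorum is 2, so one replica can be down. Reads and writes at QUORUM also overlap (2 + 2 > 3), which is why that pairing returns the latest acknowledged write.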

Working with Big Data can, of course, be a very complex task. That’s why it’s important to master Oozie. Oozie makes managing a multitude of jobs on different schedules, and managing entire data pipelines, significantly easier, as long as you know the right configuration parameters. This course will teach you how to determine those parameters so your workflow is significantly streamlined.

Access 23 lectures & 3 hours of content 24/7

Install & set up Oozie

Configure Workflows to run jobs on Hadoop

Create time-triggered & data-triggered Workflows

Build & optimize data pipelines using Bundles
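An Oozie workflow is defined in XML as a graph of action nodes, each with an `ok` transition and an `error` transition that lead to other actions or to an end or kill node, while a coordinator starts workflow runs on a time schedule. The Python sketch below models both ideas in memory; it is not Oozie’s API, and the node names, callables and schedule are hypothetical.

```python
from datetime import datetime, timedelta

def run_workflow(start, actions):
    """Walk an Oozie-style workflow (hypothetical model, not Oozie's API).

    actions maps a node name to (task, ok_target, error_target); the
    terminal nodes are "end" and "kill". Returns the visited nodes in order.
    """
    path, node = [], start
    while node not in ("end", "kill"):
        task, ok_target, error_target = actions[node]
        path.append(node)
        try:
            task()                # run the action (e.g. a Hive or Pig job)
            node = ok_target      # follow the ok transition
        except Exception:
            node = error_target   # follow the error transition
    path.append(node)
    return path

def coordinator_times(start, end, frequency):
    """Nominal times at which a time-triggered coordinator would start a run:
    start, start + frequency, ... (end is treated as exclusive here)."""
    times, current = [], start
    while current < end:
        times.append(current)
        current += frequency
    return times

# Example: a six-hourly schedule over one day yields four runs.
print(coordinator_times(datetime(2019, 7, 29), datetime(2019, 7, 30), timedelta(hours=6)))
```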

Flume and Sqoop are important elements of the Hadoop ecosystem, transporting data from sources like local file systems and relational databases such as MySQL into Hadoop data stores. This is essential to organizing and effectively managing Big Data, making Flume and Sqoop great skills to set you apart from other data analysts.

Access 16 lectures & 2 hours of content 24/7

Use Flume to ingest data to HDFS & HBase

Optimize Sqoop to import data from MySQL to HDFS & Hive

Ingest data from a variety of sources including HTTP, Twitter & MySQL
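Sqoop speeds up imports by splitting a table across parallel map tasks: it finds the minimum and maximum of a split column (the primary key by default, or the column given with `--split-by`) and divides that range among the mappers (`--num-mappers`, four by default). The sketch below shows the idea for an integer column; Sqoop’s own splitter differs in details such as rounding, and the `id` column is hypothetical.

```python
def integer_splits(min_value, max_value, num_mappers=4):
    """Divide the inclusive range [min_value, max_value] into contiguous
    half-open ranges [lo, hi), one per mapper, as evenly as possible."""
    span = max_value - min_value + 1
    num_mappers = max(1, min(num_mappers, span))  # never more splits than values
    size, extra = divmod(span, num_mappers)
    splits, lo = [], min_value
    for i in range(num_mappers):
        hi = lo + size + (1 if i < extra else 0)  # exclusive upper bound
        splits.append((lo, hi))
        lo = hi
    return splits

def where_clauses(column, splits):
    """One WHERE condition per mapper, so each imports a disjoint slice."""
    return [f"{column} >= {lo} AND {column} < {hi}" for lo, hi in splits]

print(where_clauses("id", integer_splits(1, 10, 4)))
```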

from Active Sales – SharewareOnSale https://ift.tt/2qeN7bl https://ift.tt/eA8V8J via Blogger https://ift.tt/31cHtUE

July 29, 2019 at 10:00PM - The Big Data Bundle (93% discount) Ashraf

The Big Data Bundle (93% discount). Hurry, this offer may only last a few hours. Don’t forget to share this post on your social media to be the first to tell your friends. This is not fake; it’s real.

Hive is a Big Data processing tool that helps you leverage the power of distributed computing and Hadoop for analytical processing. Its interface is somewhat similar to SQL, but with some key differences. This course is an end-to-end guide to using Hive and connecting the dots to SQL. It’s perfect for professional and aspiring data analysts and engineers alike. Don’t know SQL? No problem, there’s a primer included in this course!

Access 86 lectures & 15 hours of content 24/7

Write complex analytical queries on data in Hive & uncover insights

Leverage ideas of partitioning & bucketing to optimize queries in Hive

Customize Hive w/ user-defined functions in Java & Python

Understand what goes on under the hood of Hive w/ HDFS & MapReduce
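Partitioning and bucketing are easiest to understand as file layout. A partitioned Hive table stores each partition value in its own subdirectory (for example `dt=2019-07-29`), so a query that filters on the partition column can skip the other directories; a bucketed table hashes a column into a fixed number of files. The sketch below illustrates both. The 31-multiplier string hash and the `(hash & Integer.MAX_VALUE) % numBuckets` formula follow Hive’s classic bucketing, but the exact hash depends on the Hive version and column type (newer bucketing versions use Murmur3), so treat the bucket numbers as illustrative and the table paths as hypothetical.

```python
def java_string_hash(s: str) -> int:
    """Java-style 31 * h + c string hash as a signed 32-bit int.
    Matches Java's String.hashCode() for characters in the Basic Multilingual Plane."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF
    return h - (1 << 32) if h >= (1 << 31) else h

def bucket_for(value, num_buckets):
    """Bucket number for a row: ints hash to themselves, strings use the
    31-multiplier hash; the sign bit is masked off before the modulo."""
    h = value if isinstance(value, int) else java_string_hash(str(value))
    return (h & 0x7FFFFFFF) % num_buckets

def partition_path(table_root, **partition_values):
    """Directory holding one partition, e.g. /warehouse/logs/dt=2019-07-29."""
    parts = [f"{key}={value}" for key, value in partition_values.items()]
    return "/".join([table_root.rstrip("/")] + parts)

print(partition_path("/warehouse/logs", dt="2019-07-29", country="us"))
print(bucket_for("hello", 8))
```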

Big Data sounds pretty daunting, doesn’t it? Well, this course aims to make it a lot simpler for you. Using Hadoop and MapReduce, you’ll learn how to process and manage enormous amounts of data efficiently. Any company that collects massive amounts of data, from startups to the Fortune 500, needs people fluent in Hadoop and MapReduce, making this course a must for anybody interested in data science.

Access 71 lectures & 13 hours of content 24/7

Set up your own Hadoop cluster using virtual machines (VMs) & the Cloud

Understand HDFS, MapReduce & YARN & their interaction

Use MapReduce to recommend friends in a social network, build search engines & generate bigrams

Chain multiple MapReduce jobs together

Write your own customized partitioner

Learn to globally sort a large amount of data by sampling input files
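Several items in the list above fit into one small simulation: a bigram-counting mapper, a custom partitioner, and a global sort built by sampling keys. The Python below runs the map, shuffle and reduce phases in a single process. The range partitioner mirrors the idea behind Hadoop’s `InputSampler` and `TotalOrderPartitioner`, but the functions themselves are hypothetical stand-ins, not Hadoop classes.

```python
import bisect
import random
from collections import defaultdict

def map_bigrams(line):
    """Mapper: emit ((w1, w2), 1) for each adjacent word pair in a line."""
    words = line.lower().split()
    for first, second in zip(words, words[1:]):
        yield (first, second), 1

def sample_boundaries(keys, num_reducers, sample_size=100, seed=0):
    """Pick num_reducers - 1 split points from a sorted random sample of keys."""
    rng = random.Random(seed)
    sample = sorted(rng.sample(keys, min(sample_size, len(keys))))
    step = len(sample) / num_reducers
    return [sample[int(step * i)] for i in range(1, num_reducers)]

def run_job(lines, num_reducers=3):
    # Map phase
    pairs = [kv for line in lines for kv in map_bigrams(line)]
    if not pairs:
        return [[] for _ in range(num_reducers)]
    # Partition phase: route each key to the reducer whose range contains it
    boundaries = sample_boundaries([key for key, _ in pairs], num_reducers)
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in pairs:
        partitions[bisect.bisect_right(boundaries, key)][key].append(value)  # shuffle
    # Reduce phase: each reducer sorts its keys and sums their counts
    return [[(key, sum(values)) for key, values in sorted(part.items())]
            for part in partitions]
```

Because each reducer receives a contiguous key range and sorts its own keys, concatenating the reducer outputs in order yields a globally sorted result.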

Analysts and data scientists typically have to work with several systems to effectively manage massive data sets. Spark, on the other hand, provides a single engine to explore and work with large amounts of data, run machine learning algorithms, and perform many other functions in one interactive environment. This course’s focus on new and innovative technologies in data science and machine learning makes it an excellent choice for anyone who wants to work in the lucrative, growing field of Big Data.

Access 52 lectures & 8 hours of content 24/7

Use Spark for a variety of analytics & machine learning tasks

Implement complex algorithms like PageRank & Music Recommendations

Work w/ a variety of datasets from airline delays to Twitter, web graphs, & product ratings

Employ all the different features & libraries of Spark, like RDDs, DataFrames, Spark SQL, MLlib, Spark Streaming & GraphX
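Part of what lets Spark act as a single engine is lazy evaluation: transformations such as `map` and `filter` only record what to do, and an action such as `collect` or `count` runs the whole pipeline. The toy class below (hypothetical, not PySpark) illustrates that split. Its source must be re-iterable, such as a list or `range`, because every action recomputes the pipeline from the start, much as Spark recomputes lineage unless a dataset is cached.

```python
class LazyDataset:
    """Toy model of Spark's lazy evaluation (not the PySpark API).

    Transformations return a new dataset that remembers the pending steps;
    nothing runs until an action such as collect() or count() is called.
    """

    def __init__(self, source, steps=()):
        self._source = source
        self._steps = tuple(steps)

    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, predicate):
        return LazyDataset(self._source, self._steps + (("filter", predicate),))

    def _iterate(self):
        # Push each element through every recorded step in order
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    def collect(self):
        return list(self._iterate())

    def count(self):
        return sum(1 for _ in self._iterate())

evens = LazyDataset(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
print(evens.collect())
```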


July 11, 2018 at 10:01PM - The Big Data Bundle (93% discount) Ashraf

