#Caffe2

Text

Lin Qiao, CEO & Co-Founder of Fireworks AI – Interview Series

New Post has been published on https://thedigitalinsider.com/lin-qiao-ceo-co-founder-of-fireworks-ai-interview-series/

Lin Qiao, formerly head of Meta’s PyTorch, is the Co-Founder and CEO of Fireworks AI. Fireworks AI is a production AI platform built for developers. It partners with the world’s leading generative AI researchers to serve the best models at the fastest speeds. Fireworks AI recently raised a $25M Series A.

What initially attracted you to computer science?

My dad was a very senior mechanical engineer at a shipyard, where he built cargo ships from scratch. From a young age, I learned to read the precise angles and measurements of ship blueprints, and I loved it.

I was very much into STEM from middle school onward; I devoured everything in math, physics and chemistry. One of my high school assignments was to learn BASIC programming, and I coded a game about a snake eating its tail. After that, I knew computer science was in my future.

While at Meta you led 300+ world-class engineers in AI frameworks & platforms where you built and deployed Caffe2, and later PyTorch. What were some of your key takeaways from this experience?

Big Tech companies like Meta are always five or more years ahead of the curve. When I joined Meta in 2015, we were at the beginning of our AI journey, making the shift from CPUs to GPUs. We had to design AI infrastructure from the ground up. Frameworks like Caffe2 were groundbreaking when they were created, but AI evolved so fast that they quickly grew outdated. We developed PyTorch and the entire system around it as a solution.

PyTorch is where I learned about the biggest roadblocks developers face in the race to build AI. The first challenge is finding stable and reliable model architecture that is low latency and flexible so that models can scale. The second challenge is total cost of ownership, so companies don’t go bankrupt trying to grow their models.

My time at Meta showed me how important it is to keep models and frameworks like PyTorch open-source. It encourages innovation. We would not have grown as much as we had at PyTorch without open-source opportunities for iteration. Plus, it’s impossible to stay up to date on all the latest research without collaboration.

Can you discuss what led you to launching Fireworks AI?

I’ve been in the tech industry for more than 20 years, and I’ve seen wave after wave of industry-level shifts, from the cloud to mobile apps. But this AI shift is a complete tectonic realignment. I saw lots of companies struggling with this change. Everyone wanted to move fast and put AI first, but they lacked the infrastructure, resources and talent to make it happen. The more I talked to these companies, the more I realized I could solve this gap in the market.

I launched Fireworks AI both to solve this problem and to serve as an extension of the incredible work we achieved at PyTorch. It even inspired our name! PyTorch is the torch holding the fire, but we want that fire to spread everywhere. Hence: Fireworks.

I have always been passionate about democratizing technology, and making it affordable and simple for developers to innovate regardless of their resources. That’s why we have such a user-friendly interface and strong support systems to empower builders to bring their visions to life.

Could you discuss what developer-centric AI is and why it is so important?

It’s simple: “developer-centric” means prioritizing the needs of AI developers. For example: creating tools, communities and processes that make developers more efficient and autonomous.

Developer-centric AI platforms like Fireworks should integrate into existing workflows and tech stacks. They should make it simple for developers to experiment, make mistakes and improve their work. They should encourage feedback, because it’s developers themselves who understand what they need to be successful. Lastly, it’s about more than just being a platform. It’s about being a community – one where collaborating developers can push the boundaries of what’s possible with AI.

The GenAI Platform you’ve developed is a significant advancement for developers working with large language models (LLMs). Can you elaborate on the unique features and benefits of your platform, especially in comparison to existing solutions?

Our entire approach as an AI production platform is unique, but some of our best features are:

Efficient inference – We engineered Fireworks AI for efficiency and speed. Developers using our platform can run their LLM applications at the lowest possible latency and cost. We achieve this with the latest model and service optimization techniques including prompt caching, adaptable sharding, quantization, continuous batching, FireAttention, and more.

Affordable support for LoRA-tuned models – We offer affordable service of low-rank adaptation (LoRA) fine-tuned models via multi-tenancy on base models. This means developers can experiment with many different use cases or variations on the same model without breaking the bank.

Simple interfaces and APIs – Our interfaces and APIs are straightforward and easy for developers to integrate into their applications. Our APIs are also OpenAI compatible for ease of migration.

Off-the-shelf models and fine-tuned models – We provide more than 100 pre-trained models that developers can use out-of-the-box. We cover the best LLMs, image generation models, embedding models, etc. But developers can also choose to host and serve their own custom models. We also offer self-serve fine-tuning services to help developers tailor these custom models with their proprietary data.

Community collaboration – We believe in the open-source ethos of community collaboration. Our platform encourages (but doesn’t require) developers to share their fine-tuned models and contribute to a growing bank of AI assets and knowledge. Everyone benefits from growing our collective expertise.
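To make the LoRA point in the feature list above more concrete, here is a minimal, framework-free sketch of why many LoRA variants can cheaply share one base model. It assumes a single weight matrix of shape d x k and an adapter of rank r. The function name and the sizes are illustrative assumptions, not anything taken from Fireworks AI.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full_params, adapter_params) for one d x k weight matrix.

    Full fine-tuning updates every entry of W (d * k values).
    LoRA keeps W frozen and learns two small factors, B (d x r) and
    A (r x k), so the adapted weight is W + B @ A.
    """
    full = d * k
    adapter = r * (d + k)
    return full, adapter


# Illustrative sizes only (assumed, not from the interview).
full, adapter = lora_param_counts(d=4096, k=4096, r=8)
print(f"full fine-tune: {full:,} params")
print(f"LoRA adapter:   {adapter:,} params ({adapter / full:.2%} of full)")
```

Because each adapter is a small fraction of the base weights, a server could in principle keep one copy of the base model in memory and swap small adapters per request, which is the general idea behind multi-tenant LoRA serving.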

Could you discuss the hybrid approach that is offered between model parallelism and data parallelism?

Parallelizing machine learning models improves the efficiency and speed of model training and helps developers handle larger models that a single GPU can’t process.

Model parallelism involves dividing a model into multiple parts and training each part on separate processors. On the other hand, data parallelism divides datasets into subsets and trains a model on each subset at the same time across separate processors. A hybrid approach combines these two methods. Models are divided into separate parts, which are each trained on different subsets of data, improving efficiency, scalability and flexibility.
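The data-parallel half of that description can be sketched in a few lines of plain Python. This is a toy under stated assumptions: a one-parameter model y = w * x, a mean-squared-error loss, and shards of equal size. The function names are made up for illustration, and model parallelism (splitting the model itself) is not shown.

```python
def gradient(w: float, batch: list[tuple[float, float]]) -> float:
    """Gradient of the mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w: float, data: list[tuple[float, float]],
                           n_workers: int) -> float:
    """Split data into equal shards, compute one gradient per shard, average.

    Assumes len(data) is divisible by n_workers.
    """
    shard_size = len(data) // n_workers
    shards = [data[i * shard_size:(i + 1) * shard_size]
              for i in range(n_workers)]
    # In a real system each shard's gradient is computed on its own device.
    shard_grads = [gradient(w, shard) for shard in shards]
    return sum(shard_grads) / n_workers


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5
full = gradient(w, data)
parallel = data_parallel_gradient(w, data, n_workers=2)
print(full, parallel)
```

With equal-sized shards, the averaged per-shard gradient matches the full-batch gradient, which is why data-parallel workers can each process a subset and still take the same optimization step.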

Fireworks AI is used by over 20,000 developers and is currently serving over 60 billion tokens daily. What challenges have you faced in scaling your operations to this level, and how have you overcome them?

I’ll be honest, there have been many high mountains to cross since we founded Fireworks AI in 2022.

Our customers first came to us looking for very low latency support because they are building applications for either consumers, prosumers or other developers, all audiences that need speedy solutions. Then, when our customers’ applications started to scale fast, they realized they couldn’t afford the typical costs associated with that scale. They then asked us to help with lowering total cost of ownership (TCO), which we did. Then, our customers wanted to migrate from OpenAI to OSS models, and they asked us to provide on-par or even better quality than OpenAI. We made that happen too.

Each step in our product’s evolution was a challenging problem to tackle, but it meant our customers’ needs truly shaped Fireworks into what it is today: a lightning-fast inference engine with low TCO. Plus, we provide both an assortment of high-quality, out-of-the-box models to choose from and fine-tuning services for developers to create their own.

With the rapid advancements in AI and machine learning, ethical considerations are more important than ever. How does Fireworks AI address concerns related to bias, privacy, and ethical use of AI?

I have two teenage daughters who use genAI apps like ChatGPT often. As a mom, I worry about them finding misleading or inappropriate content, because the industry is just beginning to tackle the critical problem of content safety. Meta is doing a lot with the Purple Llama project, and Stability AI’s new SD3 models are great. Both companies are working hard to bring safety to their new Llama3 and SD3 models with multiple layers of filters. The input-output safeguard model, Llama Guard, does get a good amount of usage on our platform, but its adoption is not on par with other LLMs yet. The industry as a whole still has a long way to go to bring content safety and AI ethics to the forefront.

We at Fireworks care deeply about privacy and security. We are HIPAA and SOC2 compliant, and offer secure VPC and VPN connectivity. Companies trust Fireworks with their proprietary data and models to build their business moat.

What is your vision for how AI will evolve?

Just as AlphaGo demonstrated autonomy while learning to play Go by itself, I think we’ll see genAI applications get more and more autonomous. Apps will automatically route and direct requests to the right agent or API to process, and course-correct until they retrieve the right output. And instead of one function-calling model polling from others as a controller, we’ll see more self-organized, self-coordinated agents working in unison to solve problems.

Fireworks’ lightning-fast inference, function-calling models and fine-tuning service have paved the way for this reality. Now it’s up to innovative developers to make it happen.

Thank you for the great interview. Readers who wish to learn more should visit Fireworks AI.

Text

Top Tools for App Development

Top 7 Tools For AI in App Development: Collaborative AI

Core ML (Apple’s Advanced Machine Learning Framework)

Apple introduced Core ML in June 2017. It is a robust machine learning framework designed to protect user privacy by running models on the device itself. With a user-friendly drag-and-drop interface, Core ML offers features including:

Natural Language Framework: Facilitating the study of text by breaking it down into paragraphs, phrases, or words.

Sound Analysis Framework: Analyzing audio and distinguishing between sounds like highway noise and bird songs.

Speech Framework: Identifying speech in various languages within live and recorded audio.

Functionalities: Recognition of faces and facial landmarks, comprehension of barcodes, registration of images, and more.

Caffe2 (Facebook’s Adaptive Deep Learning Framework)

Building on Caffe, which originated at the University of California, Berkeley, Caffe2 is a scalable, adaptive, and lightweight deep learning framework developed by Facebook. Tailored for mobile development and production use cases, Caffe2 provides creative freedom to programmers and simplifies deep learning experiments. Key functionalities include automation feasibility, image tampering detection, object detection, and support for distributed training.

For software solutions and services ranging from app and web development to e-assessment tools, contact us at Jigya Software Services, Madhapur, Hyderabad (an Oprine Group company).

TensorFlow (Open-Source Powerhouse for AI-Powered Apps)

TensorFlow, an open-source machine learning platform, is built on deep-learning neural networks. Leveraging Python for development and C++ for mobile apps, TensorFlow enables the creation of innovative applications based on accessible designs. Recognized by companies like Airbnb, Coca-Cola, and Intel, TensorFlow’s capabilities include speech understanding, image recognition, gesture understanding, and artificial voice generation.

OpenCV (Cross-Platform Toolkit for Computer Vision)

OpenCV, integrated into both Android and iOS applications, is a free, open-source toolkit designed for real-time computer vision applications. With support for C++, Python, and Java interfaces, OpenCV fosters the development of computer vision applications. Functionalities encompass face recognition, object recognition, 3D model creation, and more.

ML Kit (Google’s Comprehensive Mobile SDK)

ML Kit, Google’s mobile SDK, empowers developers to create intelligent iOS and Android applications. Featuring vision and Natural Language APIs, ML Kit solves common app issues seamlessly. Its tools include vision APIs for object and face identification, barcode detection, and image labeling, as well as Natural Language APIs for text recognition, translation, and response suggestions.

CodeGuru Profiler (Amazon’s AI-Powered Performance Optimization)

CodeGuru Profiler, powered by AI models, enables software teams to identify performance issues faster, increasing product reliability and availability. Amazon utilizes AI to monitor code quality, provide optimization recommendations, and continuously monitor for security vulnerabilities.

GitHub Copilot (Enhancing Developer Efficiency and Creativity)

GitHub Copilot leverages Natural Language Processing (NLP) to discern developers’ intentions and automatically generate corresponding code snippets. This tool boosts efficiency and acts as a catalyst for creativity, inspiring developers to initiate or advance coding tasks.

Have a full understanding of the intricacies involved in AI for App development. Understanding the underlying logic and ensuring alignment with the application’s requirements is crucial for a developer to keep in mind while using AI for App Development. AI-generated code serves as a valuable assistant, but the human touch remains essential for strategic decision-making and code quality assurance.

As technology continues to evolve, Orpine Group is dedicated to providing innovative solutions for different Product Development needs.

We leverage the power of AI while maintaining a keen focus on quality, security, and the unique needs of our clients. Here at Jigya Software Services, our commitment to excellence ensures that we harness the potential of AI responsibly.

We deliver cutting-edge solutions in the dynamic landscape of app development.

Text

Shine In Future With Data Science Courses

Introduction

It is unfortunate in today's era that bright and brilliant students are not getting enough chances to establish themselves. In the era of competition, only the Data science course in Pune can save your life. With proper guidance, you can earn money, fame and success in your life. Our coaching centres will help you to get the best guidance. The teachers and books will help you to establish your future without any hesitation. Hence without any thinking, you should join our courses. We are here to help you with all our might.

Why Will You Contact Us?

Many people are opting for higher degrees, yet even after earning them they are unable to get jobs. Those who want to see themselves in a better position can opt for these courses.

The Maharashtrian people can easily do this course after the completion of their graduation.

The specialities of this course are as follows.

You will get to know all types of new and interesting facts. These things were unknown to you. All the Machine learning courses in Mumbai and Pune can provide you with the courses.

The time is flexible. You can easily manage it with other works. We have different durations for all the students. If you wish then you can opt for a batch or self-study. The decision is entirely yours.

All the teachers are highly qualified. They come from reputed backgrounds. The best thing is that they have sound knowledge regarding the course. The teachers will make you learn everything.

There are special doubt-clearing classes. You can attend those classes to clear all your doubts. If you have anything to ask then you can ask the faculty. This way you can solve your problems.

Regular mock tests are also held. You can participate in those tests and see how you are improving.

All types of books and other materials will be provided as well as suggested to you. Follow those books. These books are highly efficient and you can gain knowledge.

We can assure you of our service. One of the best things which our centre provides is the CCTV camera. This camera will capture all your movements. Everything will be recorded. So if any disputes occur, we can easily bring those to your notice.

Let's talk about the payment structure. The payment is very reasonable. There are various modes of payment. You can choose at your convenience. Another thing is that you can also pay partly. We can understand that people often face problems giving the entire amount at a time.

Our classrooms are spacious and fully air-conditioned. You can come and take your lessons here. Candidates come from many different places.

Service of Artificial Intelligence

Artificial intelligence, the subject of our courses in Pune and Mumbai, has become an indispensable part of modern life. It is used in financial processes, medical examination reports, logistics, publishing, and a wide range of other fast-growing industries.

Let us discuss the companies where AI services are given.

Iflexion

Iflexion was founded in the year 1999. It is a software and development company. The remarkable part of Iflexion is that it deals with more than 30 countries across the globe. Iflexion has worked together with various big companies like Adidas, Paypal, Toyota, Xerox, Philips etc. Recently, Iflexion has developed language processing, deep learning algorithms, etc.

Hidden Brains

Hidden Brains is a well-known software development company. It applies artificial intelligence in health care, education and many other industries, and AI plays a major role in its services. Hidden Brains has developed chatbots for Telegram, Twitter, Facebook and other platforms.

Icreon

AI services play a major and vital role in this software development company. Icreon, with the help of AI, uses tools like Azure, Caffe2, Amazon etc. Now, Icreon has reached a high position and collaborated with National Geographic Channel, Fox etc.

Dogtown Media

Dogtown Media focuses mainly on mobile apps and their development. The company takes on a wide range of problems and applies its creativity to AI consulting and development. Thus we see how important AI services are in this field.

Services Of Machine Learning

The IT-based companies, as well as MNCs, are using this technology to secure their future and to enhance their service.

Let us see how Machine Learning proves to be helpful to us.

Image Analysis

With the help of machine learning, large companies analyse images, and the results help the company progress.

Text Analysis

The text proves to be beneficial in gathering information and data. With the help of ML, the topmost companies analyse the text.

Sentiment Analysis

Product development and future marketing strategies are all done in software development companies with the help of Machine Learning.

Data Analysis

Collecting and analysing data is another important function in companies, and it is not an easy job. With machine learning, this task becomes easier: a company can readily assemble all its data and information.

The Machine Learning Course In Pune will serve you.

If you want to clear your doubts and see yourself in the topmost position then do these courses. These courses will help you to achieve success in your life.

Final Thoughts

Finally we are here to provide you with the knowledge that Data science course in Mumbai and Pune will help you to achieve success and fame in your life.

Note

If you're taking requests still can you give us some light colors? Like light pink and purple and that jazz?

Hello! My requests are always open, don’t worry. Sorry this took so long!

These are all the light colours I could think of, and I’ll make sure to add some more pastel combinations in the queue!

#ffe5e5 | #ffcfcf | #ffc0bf | #ffadac | #ff9795

#ffe6e1 | #ffdbd3 | #ffcdc1 | #ffb9a8 | #ffa893

#fff0e5 | #ffebdc | #ffe2cc | #ffd5b5 | #ffc293

#fff7e7 | #ffefcf | #ffe8b8 | #ffe09f | #ffd57f

#fffeea | #fffdcf | #fffba6 | #fffa7d | #ffee5b

#faffe7 | #f7ffd1 | #f2ffb6 | #eafa9d | #e0f57e

#efffea | #deffd3 | #cfffbe | #c1ffac | #a5f788

#eefff5 | #dcffec | #caffe2 | #b5ffd6 | #a1ffcc

#effdff | #dcfcff | #c6faff | #b1f8ff | #9af7ff

#ecf3ff | #deeaff | #cce0ff | #bcd6ff | #adcdff

#f0efff | #e7e5ff | #d8d5ff | #cac6ff | #beb8ff

#f5ecff | #efdfff | #e8d1ff | #ddbcff | #d2a5ff

#fce8ff | #fadaff | #f7c5ff | #f5b3ff | #f3a3ff

#ffecf8 | #ffdcf2 | #ffcaeb | #ffbce6 | #ffade1

Text

How to Find a Perfect Deep Learning Framework

Many courses and tutorials offer to guide you through building a deep learning project. Of course, from the educational point of view, it is worthwhile: try to implement a neural network from scratch, and you’ll understand a lot of things. However, such an approach does not prepare us for real life, where you are not supposed to spare weeks waiting for your new model to build. At this point, you can look for a deep learning framework to help you.

A deep learning framework, like a machine learning framework, is an interface, library or a tool which allows building deep learning models easily and quickly, without getting into the details of underlying algorithms. They provide a clear and concise way for defining models with the help of a collection of pre-built and optimized components.

Briefly speaking, instead of writing hundreds of lines of code, you can choose a suitable framework that will do most of the work for you.

Most popular DL frameworks

The state-of-the-art frameworks are quite new; most of them were released after 2014. They are open-source and are still undergoing active development. They vary in the number of examples available, the frequency of updates and the number of contributors. Besides, though you can build most types of networks in any deep learning framework, they still have a specialization and usually differ in the way they expose functionality through their APIs.

Here are the most popular frameworks:

TensorFlow

The framework that we mention all the time, TensorFlow, is a deep learning framework created in 2015 by the Google Brain team. It has a comprehensive and flexible ecosystem of tools, libraries and community resources. TensorFlow has pre-written code for most of the complex deep learning models you’ll come across, such as Recurrent Neural Networks and Convolutional Neural Networks.

The most popular use cases of TensorFlow are the following:

NLP applications, such as language detection, text summarization and other text processing tasks;

Image recognition, including image captioning, face recognition and object detection;

Sound recognition

Time series analysis

Video analysis, and much more.

TensorFlow is extremely popular within the community because it supports multiple languages, such as Python, C++ and R, has extensive documentation and walkthroughs for guidance, and is updated regularly. Its flexible architecture also lets developers deploy deep learning models on one or more CPUs or GPUs.

For inference, developers can either use TensorFlow-TensorRT integration to optimize models within TensorFlow, or export TensorFlow models, then use NVIDIA TensorRT’s built-in TensorFlow model importer to optimize in TensorRT.

Installing TensorFlow is also a pretty straightforward task.

For CPU-only:

pip install tensorflow

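For CUDA-enabled GPU cards (legacy releases only; since TensorFlow 2.1 the standard tensorflow package includes GPU support, and the separate tensorflow-gpu package is no longer maintained):

pip install tensorflow-gpu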
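After running one of the install commands above, a quick way to check whether the package is visible to the current interpreter is sketched below. It uses only the Python standard library and does not import TensorFlow itself. The helper name `is_installed` is a made-up example, and the check only covers top-level package names.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if a top-level `package` can be found by this interpreter."""
    return importlib.util.find_spec(package) is not None


status = "installed" if is_installed("tensorflow") else "not installed"
print(f"tensorflow: {status}")
```

This only confirms that the package can be located; it says nothing about whether a GPU is detected or whether the installed version matches your CUDA setup.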

Learn more:

An Introduction to Implementing Neural Networks using TensorFlow

TensorFlow tutorials

PyTorch

Facebook introduced PyTorch in 2017 as a successor to Torch, a popular deep learning framework released in 2011, based on the programming language Lua. In its essence, PyTorch took Torch features and implemented them in Python. Its flexibility and coverage of multiple tasks have pushed PyTorch to the foreground, making it a competitor to TensorFlow.

PyTorch covers all sorts of deep learning tasks, including:

Images, including detection, classification, etc.;

NLP-related tasks;

Reinforcement learning.

Instead of predefined graphs with specific functionalities, PyTorch allows developers to build computational graphs on the go, and even change them during runtime. PyTorch provides tensor computations and uses dynamic computation graphs. PyTorch’s autograd package, for instance, builds computation graphs from tensors and automatically computes gradients.
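The idea of a graph that is built while the code runs can be illustrated without PyTorch at all. The following is a toy reverse-mode automatic differentiation sketch in plain Python. It is not PyTorch’s implementation or API, the `Value` class is hypothetical, and it handles only scalar addition and multiplication.

```python
class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents      # Values this one was computed from
        self._grad_fns = grad_fns    # maps upstream grad to each parent's share

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the graph topologically, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, fn in zip(v._parents, v._grad_fns):
                parent.grad += fn(v.grad)


x = Value(3.0)
y = Value(4.0)
# The graph is built as this ordinary Python code runs, so control flow
# such as this `if` can change its shape from one run to the next.
z = x * y + x if x.data > 0 else x * y
z.backward()
print(x.grad, y.grad)
```

Here z = x * y + x, so the gradient with respect to x is y + 1 and the gradient with respect to y is x. A real framework does the same bookkeeping for tensors and many more operations.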

For inference, developers can export to ONNX, then optimize and deploy with NVIDIA TensorRT.

The drawback of PyTorch is the dependence of its installation process on the operating system, the package you want to use to install PyTorch, the tool/language you’re working with, CUDA and others.

Learn more:

Learn How to Build Quick & Accurate Neural Networks using PyTorch — 4 Awesome Case Studies

PyTorch tutorials

Keras

Keras was created in 2015 by researcher François Chollet with an emphasis on ease of use through a unified and often abstracted API. It is an interface that can run on top of multiple frameworks such as MXNet, TensorFlow, Theano and Microsoft Cognitive Toolkit using a high-level Python API. Unlike TensorFlow, Keras is a high-level API that enables fast experimentation and quick results with minimum user actions.

Keras has multiple architectures for solving a wide variety of problems. The most popular are:

image recognition, including image classification, object detection and face recognition;

NLP tasks, including chatbot creation

Keras models can be classified into two categories:

Sequential: The layers of the model are defined in a sequential manner, so when a deep learning model is trained, these layers are applied one after another in the order they were defined.

Keras functional API: This is used for defining complex models, such as multi-output models or models with shared layers.
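The difference between the two styles can be shown with a framework-free toy. This is not Keras code: `dense`, `sequential` and `functional_model` are hypothetical stand-ins, and each "layer" simply multiplies its input by a constant.

```python
def dense(scale):
    """Hypothetical stand-in for a layer: multiplies its input by `scale`."""
    return lambda x: x * scale


# Sequential style: a fixed list of layers applied one after another.
def sequential(layers):
    def model(x):
        for layer in layers:
            x = layer(x)
        return x
    return model


seq_model = sequential([dense(2), dense(3)])

# Functional style: layers are called explicitly on named values, so one
# layer can be shared between branches and the model can return several outputs.
shared = dense(2)


def functional_model(a, b):
    branch_a = shared(a)          # the same layer object ...
    branch_b = shared(b)          # ... is reused on a second input
    total = dense(3)(branch_a + branch_b)
    return total, branch_a        # two outputs


print(seq_model(1))
print(functional_model(1, 2))
```

The sequential form is shorter when the model is a straight chain, while the functional form is what makes shared layers, multiple inputs and multiple outputs expressible.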

Keras is installed easily with just one line of code:

pip install keras

Learn more:

The Ultimate Beginner’s Guide to Deep Learning in Python

Keras Tutorial: Deep Learning in Python

Optimizing Neural Networks using Keras

Caffe

The Caffe deep learning framework was created by Yangqing Jia at the University of California, Berkeley in 2014 and has led to forks like NVCaffe and new frameworks like Facebook’s Caffe2 (which has since been merged into PyTorch). It is geared towards image processing and, unlike the previous frameworks, offers weaker support for recurrent networks and language modeling. However, Caffe is known for its speed in processing and learning from images.

The Caffe Model Zoo collects pre-trained networks, models and weights that can be applied to deep learning problems such as:

Simple regression

Large-scale visual classification

Siamese networks for image similarity

Speech and robotics applications

Besides, Caffe provides solid support for interfaces like C, C++, Python, MATLAB as well as the traditional command line.

To optimize and deploy models for inference, developers can leverage NVIDIA TensorRT’s built-in Caffe model importer.

The installation process for Caffe is rather complicated: it involves a number of steps and requires dependencies such as CUDA, BLAS and Boost. The complete installation guide can be found in the official Caffe documentation.

Learn more:

Caffe Tutorial

Choosing a deep learning framework

You can choose a framework based on many factors you find important: the task you are going to perform, the language of your project, or your confidence and skillset. However, there are a number of features any good deep learning framework should have:

Optimization for performance

Clarity and ease of understanding and coding

Good community support

Parallelization of processes to reduce computations

Automatic computation of gradients

Model migration between deep learning frameworks

In real life, it sometimes happens that you build and train a model using one framework, then re-train or deploy it for inference using a different framework. Enabling such interoperability makes it possible to get great ideas into production faster.

The Open Neural Network Exchange, or ONNX, is a format for deep learning models that allows developers to move models between frameworks. ONNX models are currently supported in Caffe2, Microsoft Cognitive Toolkit, MXNet, and PyTorch, and there are connectors for many other popular frameworks and libraries.

New deep learning frameworks are being created all the time, a reflection of the widespread adoption of neural networks by developers. It is always tempting to choose one of the most common ones (indeed, this article covers those we find the best and the most popular). However, to achieve the best results, it is important to choose what is best for your project and to stay curious and open to new frameworks.

Text

MediaTek Helio P90 stands out with AI and photography

MediaTek’s new processor, the Helio P90, combines powerful AI hardware and high-end camera applications with energy efficiency

MediaTek, the world’s leading processor developer, has launched the Helio P90, its long-awaited new chipset with an advanced AI architecture.

The MediaTek Helio P90, for more advanced AI applications…

Text

Machine Learning Training in Noida

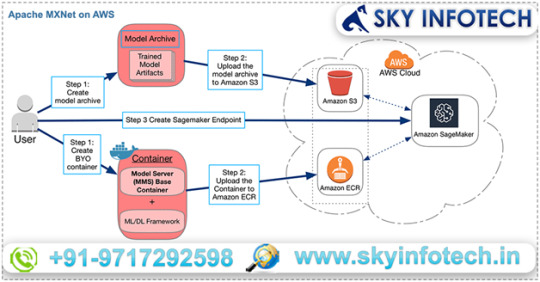

Apache MXNet on AWS: for quickly training machine learning applications

Need a quick and scalable training and inference framework? Try Apache MXNet. Its straightforward and concise API for machine learning is built for exactly that need.

MXNet includes Gluon, an interface that lets developers of all skill levels get started easily with deep learning in the cloud, in mobile apps, and on edge devices. A few lines of Gluon code are enough to build linear regression, convolutional networks and recurrent LSTMs for object detection, speech recognition, recommendation and personalization.

Amazon SageMaker, a platform to build, train, and deploy machine learning models at scale, offers a fully managed way to get started with MXNet on AWS. AWS Deep Learning AMIs can also be used to build custom environments and workflows, not only with MXNet but with other frameworks as well, including TensorFlow, Caffe2, Keras, Caffe, PyTorch, Chainer, and Microsoft Cognitive Toolkit.

Benefits of deep learning using MXNet

Ease-of-Use with Gluon

By employing MXNet’s Gluon library, which provides a high-level interface, you can prototype, train, and deploy deep learning models without sacrificing training speed. Gluon offers high-level abstractions for predefined layers, loss functions, and optimizers. Its flexible structure is intuitive to work with and easy to debug.

Greater Performance

Tremendously large projects can be handled in less time simply because of distribution of deep learning workloads across multiple GPUs with almost linear scalability is possible. Depending on the number of GPUs in a cluster, scaling is automatic. Developers increase productivity by saving precious time by running serverless and batch-based inferencing.

For IoT & the Edge

MXNet produces lightweight neural network model representations besides handling multi-GPU training and deployment of complex models in the cloud. This lightweight neural network model representations can run on lower-powered edge devices like a Raspberry Pi, laptop, or smartphone and process data in real-time remotely.

Flexibility & Choice

A wide array of programming languages like C++, R, Clojure, Matlab, Julia, JavaScript, Python, Scala, and Perl, etc. is supported by MXNet. So, you can easily get started with languages that are already known to you. However, on the backend, all code is compiled in C++ for the best performance regardless of language used to build the models.


Link

An interesting PDF that presents the Azure tools for AI. The way they are described is interesting enough in itself:

integrates with various machine learning and deep learning frameworks, including TensorFlow, Caffe2, Microsoft Cognitive Toolkit (CNTK), Scikit-learn, MXNet, Keras, and Chainer.

... OK. One could compile a glossary from that.

Edit: MS offers these services, fine. If you go along with their way of thinking, you do get results. For example, luis.ai reports what the other party in a chat intends (i.e. “order”, “book”, and so on). The format in which this is reported seems reasonably transparent, though of course not trivial; this form of correspondence is complicated in itself. On the whole, though, I am not convinced by what it can do: for ordering and booking you build forms, and there is no need to throw AI at that. MS AI is surely the right direction, but as far as I can judge, the I in it holds hardly any intelligence. I am looking for something like Matlab: AI as a Service. Something smart. Or maybe I just don't understand it properly.


Text

Data Science Courses

Introduction

It is unfortunate that, in today's era, bright students often do not get enough chances to establish themselves. In such a competitive era, a data science course in Pune can make a real difference. With proper guidance, you can earn money, fame, and success. Our coaching centres will give you that guidance, and our teachers and books will help you build your future. Join our courses without hesitation; we are here to help you with all our might.

Why Will You Contact Us?

Many people are opting for higher degrees, yet even after earning them they are unable to get jobs. Those who want to see themselves in a better position can opt for these courses.

The Maharashtrian people can easily do this course after the completion of their graduation.

The specialities of this course are as follows.

You will learn all kinds of new and interesting facts that were previously unknown to you. Our machine learning courses in Mumbai and Pune cover all of this.

The time is flexible. You can easily manage it with other works. We have different durations for all the students. If you wish then you can opt for a batch or self-study. The decision is entirely yours.

All the teachers are highly qualified. They come from reputed backgrounds. The best thing is that they have sound knowledge regarding the course. The teachers will make you learn everything.

There are special doubt-clearing classes. You can attend those classes to clear all your doubts. If you have anything to ask then you can ask the faculty. This way you can solve your problems.

Regular mock tests are also held. You can take part in them and see how you are improving.

All types of books and other materials will be provided or suggested to you. Follow those books; they are highly effective for building knowledge.

We can assure you of our service. One of the best things which our centre provides is the CCTV camera. This camera will capture all your movements. Everything will be recorded. So if any disputes occur, we can easily bring those to your notice.

Let's talk about the payment structure. The payment is very reasonable. There are various modes of payment. You can choose at your convenience. Another thing is that you can also pay partly. We can understand that people often face problems giving the entire amount at a time.

Our classrooms are large and fully air-conditioned. You can come and take your lessons here, as candidates from many different places do.

Service of Artificial Intelligence

Artificial intelligence, the subject of our course in Pune and Mumbai, is an indispensable part of modern life, and progress is hard to imagine without it. It is useful in managing financial processes, medical examination reports, logistics, and article publishing, and in a vast range of other fast-growing industries.

Let us discuss the companies where AI services are given.

Iflexion

Iflexion, founded in 1999, is a software development company. Remarkably, it works with clients in more than 30 countries across the globe and has collaborated with big companies such as Adidas, PayPal, Toyota, Xerox, and Philips. Recently, Iflexion has developed language processing and deep learning algorithms, among other things.

Hidden Brains

Hidden Brains needs no introduction. It is a widely trusted software development company. AI plays a major role in its services, which span health care, education, and many other industries. Hidden Brains has developed chatbots for Telegram, Twitter, Facebook, and other platforms.

Icreon

AI services play a vital role at this software development company. For its AI work, Icreon uses tools such as Azure, Caffe2, and Amazon. Icreon has reached a strong position and has collaborated with National Geographic Channel, Fox, and others.

Dogtown Media

Dogtown Media focuses mainly on mobile app development. The company takes on a wide range of problems and applies its creativity to AI consulting and development. Thus we see how important AI services are in this field.

Services Of Machine Learning

The IT-based companies, as well as MNCs, are using this technology to secure their future and to enhance their service.

Let us see how Machine Learning proves to be helpful to us.

Image Analysis

Large companies use machine learning to create and analyse images, which in turn support the company's progress.

Text Analysis

Text is valuable for gathering information and data, and leading companies use ML to analyse it.

Sentiment Analysis

Software development companies use machine learning to inform product development and future marketing strategies.

Data Analysis

Collecting and analysing data is another important function in companies, and it is not an easy job. Machine learning has made this task easier: a company can readily assemble all its data and information with the help of ML.

The Machine Learning Course In Pune will serve you.

If you want to clear your doubts and see yourself in the topmost position then do these courses. These courses will help you to achieve success in your life.

Final Thoughts

Finally, a data science course in Mumbai or Pune will help you achieve success and fame in your life.


Text

5 uncommon Python libraries every data scientist should know in 2023

Python is currently one of the fastest-growing programming languages in the IT industry, and its ecosystem gives data scientists a wide variety of tools. As a data scientist, you should be proficient in a range of tasks, including data gathering, data visualization, mathematical operations, building machine learning and deep learning models, and using web frameworks. Many libraries with a large number of predefined functions are readily available, all designed to make data scientists' code easier to write, shorter, and more effective. The Python modules below can help you reach your full data science potential in data gathering, visualization, and web frameworks. A good online Python course certification can also help with landing highly paid jobs.

Bob

Bob is a Python library that offers a variety of tools and techniques for signal processing, computer vision, and machine learning. Its modular, extensible design makes it simple for academics and developers to create and test new algorithms for various tasks. It can read and write data in several media formats, including voice, pictures, and video. It also ships with pre-implemented emotion recognition models, voice verification, and facial recognition algorithms.

Beautiful Soup

Beautiful Soup is a Python library for extracting data from web pages. It was designed to pull valuable data out of HTML and XML files, especially those with incorrect syntax and structure. The library's unusual name alludes to "tag soup," a term frequently used for pages with such poorly formed markup. When you run an HTML document through Beautiful Soup, it creates a Beautiful Soup object that represents the document as a hierarchical data structure. After that, you can quickly explore that data structure to get what you want, such as the page's text, link URLs, or specific headings. The Beautiful Soup library is remarkably adaptable. If you must work with web data, look into this Python library.
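To make the idea of pulling links and text out of messy markup concrete, here is a minimal sketch. It deliberately uses Python's standard-library html.parser rather than Beautiful Soup itself, so it runs without any extra install; the class name LinkTextCollector, the function extract, and the sample markup are hypothetical and exist only for this illustration.

```python
from html.parser import HTMLParser


class LinkTextCollector(HTMLParser):
    """Collects link URLs and visible text from (possibly sloppy) HTML."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        # Keep only non-empty text fragments.
        stripped = data.strip()
        if stripped:
            self.text_parts.append(stripped)


# Hypothetical sample with unclosed tags, the kind of "tag soup" described above.
SAMPLE_HTML = (
    '<html><body><h1>News<p>Read <a href="https://example.com/a">this'
    '<p>and <a href="/b">that</body>'
)


def extract(html):
    """Return (list of link URLs, page text joined by single spaces)."""
    parser = LinkTextCollector()
    parser.feed(html)
    parser.close()
    return parser.links, " ".join(parser.text_parts)


links, text = extract(SAMPLE_HTML)
print(links)
print(text)
```

Beautiful Soup goes further than this sketch by building a navigable tree, which is what makes queries such as "all headings under this section" convenient.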

SQLAlchemy

SQLAlchemy is a database abstraction package for Python that offers strong support for a variety of databases and layouts. It provides reliable design patterns, is simple to use, and is suitable for novices. It supports most platforms, including Python 2.5, Jython, and PyPy, and it speeds up communication between Python and databases. With SQLAlchemy you can create database schemas from scratch.

Statsmodels

Statsmodels is a Python module for estimating and testing statistical models. It provides functions for fitting models to data, along with resources for statistical analysis and hypothesis testing. It is helpful for time series analysis, linear regression, and the study of experimental data. It can estimate linear models, generalized linear models, mixed effects models, time series models, and other statistical models. It provides tools for producing diagnostic graphs, such as residual, Q-Q, and leverage plots, which can be used to evaluate how well a statistical model fits the data. It can also test statistical hypotheses with functions such as tests of means, variances, and independence.
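As a rough illustration of what "fitting a linear model and inspecting residuals" means, the sketch below fits a straight line by ordinary least squares in plain Python. It is not Statsmodels' own API; the function names fit_line and residuals and the four data points are made up for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def residuals(xs, ys, intercept, slope):
    """Observed minus fitted values, the raw material of a residual plot."""
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]


# Made-up illustrative data, not taken from any real study.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.1, 4.9, 7.2, 8.8]

intercept, slope = fit_line(xs, ys)
res = residuals(xs, ys, intercept, slope)
print(intercept, slope)
print(res)
```

A library such as Statsmodels adds what this sketch leaves out: standard errors, hypothesis tests on the coefficients, and ready-made diagnostic plots.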

Caffe2

Caffe2 is a deep learning library with a Python API that aims to be fast, scalable, and portable. Facebook created it, and many businesses and research institutions use it for machine learning tasks. Its fast, scalable design makes large-scale deep neural network training possible, and its modular architecture makes it simple to build and customize deep neural networks. Because it runs on CPUs, GPUs, and mobile devices, it is a flexible choice for machine learning applications. Caffe2 describes a network as a computation graph of operators, and it supports many neural network topologies, including feedforward, convolutional, and recurrent networks.

Final words

There are many Python libraries for data science, each with its own set of functions and features. Whether you are working with numerical data, statistical data, or machine learning models, a Python library can help you complete your task. Online Python training will prepare you to handle a variety of data science projects in Python.


Text

Netron: A tool for visualizing machine learning models

An open-source machine learning model viewer that MOONGIFT introduced many years ago. Netron: Netron is a viewer for neural network, deep learning, and machine learning models. Netron supports ONNX, TensorFlow Lite, Caffe, Keras, Darknet, PaddlePaddle, ncnn, MNN, Core ML, RKNN, MXNet, MindSpore Lite, TNN, Barracuda, Tengine, CNTK, TensorFlow.js, Caffe2, and UFF. Netron, for PyTorch, TensorFlow, TorchScript, OpenVINO, Torch, Vitis AI, kmodel, Arm NN, BigDL, Chainer,…


Text

Get your AI career started with our IBM Applied AI Professional Certificate course. Learn to create AI-driven chatbots and deploy AI-powered applications on the web. Build a strong foundation in machine learning, deep learning, and computer vision, along with tools and frameworks such as TensorFlow, Caffe2, PyTorch, and other libraries that help you get up to speed with machine learning.

Visit our website for more details:- https://skillup.online/applied-ai-ibm-professional-certificate


Text

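Mongolia Email List

We can quickly develop scalable models and do away with the typical computations involved in traditional models. This is why, with Caffe2, we can make full use of our machines and get the maximum efficiency out of them.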


Text

Introducing ONNX support

ONNX (Open Neural Network eXchange) is an open format for the sharing of neural network and other machine learned models between various machine learning and deep learning frameworks. As the open big data serving engine, Vespa aims to make it simple to evaluate machine learned models at serving time at scale. By adding ONNX support in Vespa in addition to our existing TensorFlow support, we've made it possible to evaluate models from all the commonly used ML frameworks with low latency over large amounts of data.

With the rise of deep learning in the last few years, we've naturally enough seen an increase in the number of deep learning frameworks as well: TensorFlow, PyTorch/Caffe2, MXNet, etc. One reason these different frameworks exist is that each has been developed and optimized around some characteristic, such as fast training on distributed systems or GPUs, or efficient evaluation on mobile devices. Previously, complex projects with non-trivial data pipelines have been unable to pick the best framework for any given subtask because of the lack of interoperability between these frameworks. ONNX is a solution to this problem.

ONNX is an open format for AI models, and represents an effort to push open standards in AI forward. The goal is to help increase the speed of innovation in the AI community by enabling interoperability between different frameworks and thus streamlining the process of getting models from research to production.

There is one commonality between the frameworks mentioned above that enables an open format such as ONNX, and that is that they all make use of dataflow graphs in one way or another. While there are differences between each framework, they all provide APIs enabling developers to construct computational graphs and runtimes to process these graphs. Even though these graphs are conceptually similar, each framework has been a siloed stack of API, graph and runtime. The goal of ONNX is to empower developers to select the framework that works best for their project, by providing an extensible computational graph model that works as a common intermediate representation at any stage of development or deployment.
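To illustrate what a dataflow graph used as a common intermediate representation looks like in the simplest possible terms, here is a toy sketch. The node format, the operator table OPS, and the function run_graph are hypothetical and are not the ONNX schema or any framework's runtime; the point is only that a graph is data (named nodes with operators and inputs) that any runtime knowing the operators can evaluate.

```python
# Each node is (output_name, operator_name, input_names), listed in
# topological order. This toy format is an assumption for illustration only.
GRAPH = [
    ("h", "matvec", ["W", "x"]),
    ("z", "add", ["h", "b"]),
    ("y", "relu", ["z"]),
]

# A tiny operator set working on plain Python lists.
OPS = {
    "matvec": lambda W, x: [sum(w * v for w, v in zip(row, x)) for row in W],
    "add": lambda a, b: [p + q for p, q in zip(a, b)],
    "relu": lambda a: [max(0.0, p) for p in a],
}


def run_graph(graph, feeds):
    """Evaluate nodes in order, looking up each input by name."""
    values = dict(feeds)
    for output_name, op_name, input_names in graph:
        args = [values[name] for name in input_names]
        values[output_name] = OPS[op_name](*args)
    return values


result = run_graph(
    GRAPH,
    {"W": [[1.0, -2.0], [0.5, 0.5]], "x": [3.0, 1.0], "b": [-2.0, 1.0]},
)
print(result["y"])
```

Because the graph is just a description, one tool could produce it and a different one could run it, which is the kind of interoperability an exchange format is after.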

Vespa is an open source project which fits well within such an ecosystem, and we aim to make the process of deploying and serving models to production that have been trained on any framework as smooth as possible. Vespa is optimized toward serving and evaluating over potentially very large datasets while still responding in real time. In contrast to other ML model serving options, Vespa can more efficiently evaluate models over many data points. As such, Vespa is an excellent choice when combining model evaluation with serving of various types of content.

Our ONNX support is quite similar to our TensorFlow support. Importing ONNX models is as simple as adding the model to the Vespa application package (under "models/") and referencing the model using the new ONNX ranking feature:

expression: sum(onnx("my_model.onnx"))

The above expression runs the model and sums its output to a single scalar value to use in ranking. You will have to provide the inputs to the graph. Vespa expects you to provide a macro with the same name as the input tensor. In the macro you can specify where the input should come from, be it a document field, a constant, or a parameter sent along with the query. More information can be found in the documentation about ONNX import.

Internally, Vespa converts the ONNX operations to Vespa's tensor API. We do the same for TensorFlow import, so the cost of evaluating ONNX and TensorFlow models is the same. We have put a lot of effort into optimizing the evaluation of tensors, and evaluating neural network models can be quite efficient.

ONNX support is also quite new to Vespa, so we do not support all current ONNX operations. Part of the reason we don't support all operations yet is that some are potentially too expensive to evaluate per document, such as convolutional neural networks and recurrent networks (LSTMs etc). ONNX also contains an extension, ONNX-ML, which contains additional operations for non-neural network cases. Support for this extension will come later at some point. We are continually working to add functionality, so please reach out to us if there is something you would like to have added.

Going forward we are continually working on improving performance as well as supporting more of the ONNX (and ONNX-ML) standard. You can read more about ranking with ONNX models in the Vespa documentation. We are excited to announce ONNX support. Let us know what you are building with it!


Text

Training genomes with Caffe2

NA12878 has been extensively studied over the years resulting in a good consensus on all the variants in her genome. This gives us a nice set of labeled data that we can perform supervised training with. In other words, we have a list of positions in this genome and the type of variant that occurs there.

Combining the alignment data, the variant list, and our image renderer, we can generate a training set of 20,000 images (224x224) with labels AF=1.0 (homozygous) and AF=0.5 (heterozygous). To keep things simple, we use the AlexNet model, which is slightly outdated but should give a good starting point in terms of performance. We train with a batch size of 64 and an Adam optimizer with learning rate 3e-5 and gamma 0.999.

>99% accuracy after 2000 iterations.
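As a rough sanity check on these numbers, the sketch below works out how many passes over the 20,000-image training set 2000 iterations at batch size 64 correspond to. It also shows what the learning rate would be by the end if gamma is a multiplicative step-decay factor applied every iteration. That reading of gamma is an assumption (the post does not say, and gamma could mean something else), and the function names are hypothetical.

```python
NUM_IMAGES = 20_000   # training set size from the post
BATCH_SIZE = 64       # from the post
ITERATIONS = 2_000    # from the post
BASE_LR = 3e-5        # from the post
GAMMA = 0.999         # from the post; its exact meaning is assumed below


def epochs_seen(iterations, batch_size, dataset_size):
    """Number of full passes over the dataset after the given iterations."""
    return iterations * batch_size / dataset_size


def step_decayed_lr(base_lr, gamma, iteration, stepsize=1):
    """ASSUMPTION: lr is multiplied by gamma once every `stepsize` iterations."""
    return base_lr * gamma ** (iteration // stepsize)


epochs = epochs_seen(ITERATIONS, BATCH_SIZE, NUM_IMAGES)
final_lr = step_decayed_lr(BASE_LR, GAMMA, ITERATIONS)
print(f"epochs seen: {epochs}")
print(f"learning rate after {ITERATIONS} iterations (assumed decay): {final_lr:.3e}")
```

Under these assumptions the network sees each image only a handful of times, and the learning rate has shrunk by roughly a factor of seven by the final iteration.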
