#Clean text with gensim

Explore tagged Tumblr posts

Text

Natural Language Processing (NLP) Programming

Natural Language Processing (NLP) is a fascinating field of artificial intelligence that focuses on the interaction between computers and human language. As a branch of AI, NLP enables machines to understand, interpret, and generate human language in a valuable way. In this post, we will explore the fundamentals of NLP programming and how you can get started in this exciting area.

What is Natural Language Processing?

NLP involves the application of computational techniques to analyze and synthesize natural language and speech. Its main goal is to enable computers to understand and respond to human languages in a way that is both meaningful and useful.

Key Tasks in NLP

Text Processing: Cleaning and preparing text data for analysis.

Tokenization: Splitting text into individual words or phrases.

Part-of-Speech Tagging: Identifying the grammatical roles of words in sentences.

Named Entity Recognition: Identifying and classifying named entities in text.

Sentiment Analysis: Determining the sentiment expressed in text (positive, negative, neutral).

Text Generation: Automatically generating text based on input data.

Popular Libraries and Tools for NLP Programming

NLTK (Natural Language Toolkit): A powerful Python library for working with human language data.

spaCy: An efficient and user-friendly library for advanced NLP tasks.

Transformers: A library by Hugging Face for state-of-the-art pre-trained models.

Gensim: Used for topic modeling and document similarity analysis.

TextBlob: A simple library for processing textual data and performing basic NLP tasks.

Example: Basic Text Processing with NLTK

import nltk from nltk.tokenize import word_tokenize from nltk import pos_tag # Sample text text = "Natural Language Processing is fascinating." # Tokenization tokens = word_tokenize(text) # Part-of-Speech Tagging tagged = pos_tag(tokens) print("Tokens:", tokens) print("Tagged:", tagged)

Common Applications of NLP

Chatbots and Virtual Assistants: Enable conversational interfaces.

Search Engines: Improve search accuracy and relevance.

Text Analytics: Extract insights from large volumes of text data.

Language Translation: Translate text between different languages.

Content Recommendation: Suggest articles or products based on user behavior.

Challenges in NLP Programming

Understanding context and semantics can be difficult for machines.

Handling ambiguity and nuances in human language.

Dealing with variations in language, dialects, and slang.

Processing large volumes of data efficiently.

Best Practices for NLP Development

Start with clean, well-prepared datasets.

Experiment with different models and libraries to find the best fit.

Keep up-to-date with advancements in NLP, such as transformer models.

Consider the ethical implications of your NLP applications, especially regarding bias in data.

Conclusion

NLP programming is a rapidly evolving field that combines linguistics, computer science, and artificial intelligence. By mastering the fundamental concepts and tools, you can develop applications that significantly improve human-computer interaction. Start exploring NLP today and unlock the potential of language processing in technology!

0 notes

Text

Skills Required to Become a Certified Prompt Engineer

The need for prompt engineers has grown significantly in the last several years. To lure the best personnel in this profession, industry leaders like Netflix provide extremely generous salaries—some reaching $300,000—into their workforce. However, which essential competencies are needed to succeed as a prompt engineer?

This post discusses the five critical competencies required to succeed in this position, regardless of where you are in your career or how to maintain your competitive edge in the rapidly changing technology landscape.

Knowing Your Place as a Prompt Engineer

A quick engineer is a multi-rolling, versatile professional in information technology. They ensure systems run well, solve complicated problems, and navigate digital environments. Their capacity to react quickly, decisively, and efficiently to any arising technical difficulty makes them unique.

Why Is Demand for Prompt Engineers So High?

Pioneers of AI Communication: Prompt engineers are essential in creating efficient input prompts that use AI models to accomplish particular objectives. To complete tasks, they use the capabilities of current generative AI models.

Necessary in the AI Ecosystem: As businesses depend increasingly on ChatGPT and other AI models, quick engineers are working to improve these models' ability to generate precise and insightful responses. This helps to close the gap between the potential of AI technology and its practical applications.

Efficiency and Precision: Prompt engineers improve the efficiency and precision of AI-driven interactions by developing prompts that direct AI models and lower the likelihood that they will produce irrelevant or inaccurate information.

Taking on Industry Challenges: As AI technologies become more prevalent, quick-thinking engineers play a critical role in reducing possible hazards related to their use. This is especially crucial because some businesses have thought about laying off employees due to implementing AI.

Attractive Salary: Competitive pay, ranging from £40,000 to £300,000 annually, reflects the demand for fast engineers. To highlight the affluent prospects in this industry, several top AI research businesses pay even more.

Global Opportunities: Since prompt engineers can be paid anywhere in the world, they are not restricted to any particular area. For example, in the San Francisco Bay Area, they can make between $175,000 and $335,000 annually.

How To Develop The Necessary Skill Set To Become A Prompt Engineer

Ability 1: Strong Understanding of Natural Language Processing (NLP)

The study of how computers and human languages interact is the focus of the artificial intelligence field known as natural language processing, or NLP. To start, one has to understand the foundational ideas of natural language processing (NLP), such as tokenization, parsing, and semantic analysis. NLP is essential to prompt engineering because it allows robots to comprehend and produce text that resembles a person's. It's necessary to provide explicit and contextually rich prompts.

Ability 2: Mastery of Python Programming

Programming in Python is essential for quick engineers. It is renowned for being easy to use, adaptable, and having a robust ecosystem of frameworks and libraries. Python provides NLP packages like NLTK, spaCy, and Gensim and is user-friendly for beginners. These modules make difficult NLP work more accessible, and many learning resources are available thanks to the large Python community. Improving skills requires practical coding experience.

Ability 3: Preprocessing and Data Analysis

Preprocessing and data analysis are essential abilities for quick engineers. Accurate data must be gathered from multiple sources, and flaws or inconsistencies must be fixed in the dataset before it can be considered clean. Data visualization is necessary to disseminate research findings and insights efficiently, whereas feature engineering is concerned with developing significant features for machine learning models.

Ability 4: Quick Design and Adjustment

Sensible design is a craft. Clear cues that offer context when needed are effective. Finding the ideal cue that produces the intended effects requires trial and error. To fine-tune language models, models are trained for particular tasks using a variety of representative datasets. Finding the ideal balance is essential to avoiding an underfit or an overfit. Model performance can be significantly impacted by hyperparameter adjustment.

Ability 5: Model Evaluation and Selection

Transformers, in particular, are language models that constitute the foundation of NLP applications. Prompt engineers must select the appropriate model based on variables, including model size, training data, and the specific use case. One must comprehend the fine-tuning procedure to modify models to meet particular requirements. Every model has advantages and disadvantages; therefore, determining the best model for a specific project requires assessment.

Opening Doors with the AI Prompt Engineer Credential

A Certified Prompt Engineer credential can alter everything if you're trying to progress in the IT and AI fields. A prompt engineering course gives students the fundamental knowledge and abilities to successfully navigate the complex field of prompt engineering, from developing efficient prompts to perfecting language models and natural language processing (NLP).

This AI certification improves employment possibilities in a highly competitive job market and validates knowledge. The importance of fast engineering certification cannot be emphasized in a world where every second matters, and artificial intelligence models such as ChatGPT are being incorporated into a wide range of applications. It allows experts to close the gap between AI capabilities and real-world applications, assuring AI technologies' ethical and practical use.

Becoming a certified AI rapid engineer is the secret to opening up a world of chances in this fast-paced industry, where knowledge is highly valued, and competent workers influence the direction of AI-driven interactions.

In conclusion, a wide range of skills is needed to become a certified prompt engineer in today's technologically advanced world. These skills include model selection and evaluation knowledge, proficiency with Python programming, data analysis and preprocessing, prompt design and fine-tuning, and NLP expertise. With these abilities, you can navigate the ever-changing technological landscape and meet the expanding need for quick engineers.

A dependable resource for anyone looking for quick engineer certification is the Blockchain Council. Their team, comprised of enthusiasts and subject matter experts, is committed to advancing blockchain-related research and development, use cases, products, and knowledge for a better world.

Utilizing the Blockchain Council, a fantastic resource, you can further your career in this exciting industry and earn your AI certification.

0 notes

Text

Clean text with gensim

CLEAN TEXT WITH GENSIM HOW TO

Don’t believe me? Here is a simple test: 'plays' = 'play' Outputs: Falseīut here comes the question - Lemmatization needs to be applied on both verbs, nouns, etc. But the computer doesn’t understand this, when it reads ‘plays’, it will be different as ‘play’. In English, we use a lot of form of vocab for verbs and nouns to represent different tense as well plural and singular. Another example is: ‘feet to foot’, ‘cats to cat’. An example could be converting ‘playing to play’, ‘plays to play’, ‘played to play’. Lemmatization means: convert a word to its ‘dictionary form’, aka a lemma. Now the words are a lot more meaningful but a lot less distracting, right? 3. # Gensim stopwords list stop_words = st.words('english') # Expand by adding NLTK stopwords list stop_words.append(stopwords.words('english')) # extend stopwords by your choices, I think it's ok to add 'https', 'dont', and 'co' to the stopword list since they means nothing stop_words.extend( + list(swords)) # Put that in a function def remove_stopwords(texts): return for doc in texts]Īfter stopwords are removed: # Remove Stop Words data_words_nostops = remove_stopwords(data_words) data_words_nostops outputs: You can probably guess, a lot of prepositions are stopwords since they don’t really have any meanings, or their meaning is too abstract and cannot infer any events. Stopwords in NLP means words that doesn’t really have any meanings, some common examples are: as, if, what, for, to, and, but. Break down sentence into a list of vocabularies (it is called tokens), and store them in a data structure like listĭata = covid_() # Remove Emails, web links data = '', sent) for sent in data] # Remove new line characters data = # Remove distracting single quotes data = #Gensim simple_preprocess function can be your friend with tokenization def sent_to_words(sentences): for sentence in sentences: yield(_preprocess(str(sentence), deacc=True)) # deacc=True removes punctuations data_words = list(sent_to_words(data)) print(data_words)Īfter Tokenization: Output:, , ] 2.Remove punctuations & special characters. Stay calm, stay safe. #COVID19france #COVID_19 #COVID19 #coronavirus #confinement #Confinementotal #ConfinementGeneral " 1. “My food stock is not the only one which is empty… PLEASE, don’t panic, THERE WILL BE ENOUGH FOOD FOR EVERYONE if you do not take more than you need. Let’s use the 3rd tweet as an example since it is pretty dirty :) # We all know what this is for :) # Pandas - read csv as pandas dataframe import pandas as pd import numpy as np import warnings warnings.filterwarnings("ignore") # gensim: simple_preprocess for easy tokenization & convert to a python list # also contain a list of common stopwords import gensim import rpora as corpora from gensim.utils import simple_preprocess from import STOPWORDS as swords #nltk Lemmatize and stemmer, for lemmatization and stem, I will talk about it later import nltk from nltk.stem import WordNetLemmatizer from import PorterStemmer from import * # You might need to run the next two line if you don't have those come with your NLTK package #nltk.download('wordnet') #nltk.download('stopwords') from rpus import stopwords as st from rpus import wordnet from rpus import stopwords # read in data tweet_data = pd.read_csv('Corona_NLP_train.csv', encoding='latin1') # subset text colum, as we only need this column covid_tweet = tweet_data # quick preview covid_tweet The first thing when working on a dataset is always to do a quick EDA, in this case, we identify which column contains the text data then we subset it for further use. Load required packages & preview of the data

CLEAN TEXT WITH GENSIM HOW TO

I will show how to process the text data step-by-step in Python, and I will also explain what each section of codes are doing (that’s what we data scientists do, right?) At the same time, I am assuming that readers have at least a basic understanding of Python and some experience with traditional Machine Learning.

0 notes

Text

Exploring D:BH fics (Part 5)

For this part, I’m going to discuss how I prepared the data and conducted the tests for differences in word use across fics from the 4 AO3 ratings (Gen/Teen/Mature/Explicit), as mentioned here.

Recap: Data was scraped from AO3 in mid-October. I removed any fics that were non-English, were crossovers and had less than 10 words. A small number of fics were missed out during the scrape - overall 13933 D:BH fics remain for analysis.

In this particular analysis, I dropped all non-rated fics, leaving 12647 D:BH fics for the statistical tests.

Part 1: Publishing frequency for D:BH with ratings breakdown Part 2: Building a network visualisation of D:BH ships Part 3: Topic modeling D:BH fics (retrieving common themes) Part 4: Average hits/kudos/comment counts/bookmarks received (split by publication month & rating) One-shots only. Part 5: Differences in word use between D:BH fics of different ratings Part 6: Word2Vec on D:BH fics (finding similar words based on word usage patterns) Part 7: Differences in topic usage between D:BH fics of different ratings Part 8: Understanding fanon representations of characters from story tags Part 9: D:BH character prominence in the actual game vs AO3 fics

What differentiates mature fics from explicit fics, gen from teen fics?

These are pretty open-ended questions, but perhaps the most rudimentary way (quantitatively) is to look at word use. It’s very crude and ignores word order and can’t capture semantics well - but it’s a start.

I’ve read some papers/writings where loglikelihood ratio tests and chi-squared tests have been used to test for these word use differences. But recently I came across this paper which suggests using other tests instead (e.g. trusty old t-tests, non-parametric Mann-Whitney U-tests, bootstrap tests). I went ahead with the non-parametric suggestion (specifically, the version for multiple independent groups, the Kruskal-Wallis test).

Now, on to pre-processing and other details.

1. Data cleaning. Very simple cleaning since I wanted to retain all words for potential analysis (yes, including the common stopwords like ‘the’, etc!). I just cleaned up the newlines, removed punctuation and numbers, and the ‘Work Text’ and ‘Chapter Text’ indicators from the HTML. At the end of cleaning, each story was basically just a list of words, e.g. [’connor’, ‘said’, ‘hello’, ‘i’, ‘m’, ‘not’, ‘a’, ‘deviant’].

2. Preparing a list of vocabulary for testing. With 12647 fics, you can imagine that there’s a huge amount of potential words to test. But a lot of them are probably rare words that aren’t used that often outside of a few fics. I’m trying to get an idea of general trends so those rare words aren’t helpful to this analysis.

I used Gensim’s dictionary function to help with this filtering. I kept only words that appeared in at least 250 fics. The number is pretty arbitrary - I selected because the smallest group (Mature fics) had 2463 fics; so 250 was about 10% of that figure. This left me with 9916 unique words for testing.

3. Counting word use for every fic. I counted the number of times each fic used each of the 9916 unique words.

Now obviously raw frequencies won’t do - a longer fic is probably going to have higher frequency counts for most words (versus a short fic) just by virtue of its length. To take care of this I normalised each frequency count for each fic by the fic’s length. E.g. ‘death’ appears 100 times in a 1000-word fic, the normalised count is 100/1000 = 0.1.

4. Performing the test (Kruskal-Wallis) and correcting for multiple comparisons. I used the Kruskal-Wallis test (from scipy) instead of the parametric ANOVA because it’s less sensitive to outliers; while the ANOVA looks at group means, the Kruskal-Wallis looks at group mean ranks (not group medians!). As an aside, you can assume the K-W test is comparing medians, if you are able to assume that the distributions of all the groups you’re comparing are identically shaped.

Because we’re doing so many comparisons (9916, one for each word), we’re bound to run into some false positives when testing for significance. To control for this, I used the Holm-Bonferroni correction (from multipy), correcting at α =.05. Even after correction, I had 9851 words with significant differences between the 4 groups.

[[For anyone unfamiliar with what group mean ranks, I’ll cover it here (hope I’ve got it down right more or less erp): - We have 12647 fics, and we have a normalised count for each of them on 9916 words. - For each word, e.g. ‘happy’, we rank the fics by their normalised count. So the fic with the lowest normalised count of ‘happy’ gets a rank of 1, the fic with the highest normalised count of ‘happy’ gets rank 12647. Every other fic gets a corresponding rank too. - We sum the ranks within each group (Gen/T/M/Explicit). Then we calculate the average of those group-wise ranks, getting the mean ranks. This information is used in calculating the test statistic for K-W.]]

5. Post-hoc tests. The results of the post-hoc tests aren’t depicted in the charts (didn’t have a good idea on how to go about it, might revisit this), but I’ll talk about them briefly here.

Following the K-W tests, I performed pairwise Dunn tests for each word (using scikit-posthocs). So basically the K-W test told me, “Hey, there’s some significant differences between the groups here” - but it didn’t tell me exactly where. The Dunn tests let me see, for e.g., if the differences lie between teen/mature, and/or mature/explicit, and so on.

Again, I applied a correction (Bonferroni) for each comparison at α =.05 - since for each word, we’re doing a total of 6 pairwise comparisons between the 4 ratings.

6. Visualisation. I really didn’t know what was the best way to show so many results, but decided for now to go with dot plots (from plotly).

There’s not much to say here since plotly works very well with the pandas dataframes I’ve been storing all my results in!

7. Final points to note

1) For the chart looking at words where mature fics ranked first in mean ranks and the chart looking at words where explicit fics ranked first in mean ranks, I kept only words that were significantly different between mature and explicit fics in the pairwise comparison. I was interested in how the content of these two ratings may diverge in D:BH.

2) For the mature/explicit-first charts, I showed only the top 200 words in terms of the K-W H-statistic (there were just too many words!). The H-statistic can be converted to effect sizes; larger H-statistics would correspond to larger effect sizes.

3) Most importantly, this method doesn’t seem to work very well for understanding teen and especially gen fics. Gen fics just appear as the absence of smut/violence/swearing, which isn’t very informative. This is an issue I’d like to continue working on - I’m sure more linguistic cues, especially contextual ones, will be helpful.

1 note

·

View note

Link

#priceonline #india #shopping Blueprints for Text Analysis using Python: Machine Learning-Based Solutions for Common Real World (Nlp) Applications ...

0 notes

Text

NLP - Text Preprocessing

1. Tokenization

: 주어진 코퍼스(corpus)에서 토큰(token)이라 불리는 단위로 나누는 작업을 토큰화(Tokenization)라고 부릅니다. 토큰의 단위가 상황에 따라 다르지만, 보통 의미있는 단위로 토큰을 정의한다.

python package from nltk.tokenize import ~

1.1 Word Tokenization

: 토큰의 단위를 단어(Word)로 하는 경우 (여기서 단어(Word)는 단어 단위 외에도 단어구, 의미를 갖는 문자열로도 간주되기도 한다.)

Example input: Time is an illusion. Lunchtime double so! output: "Time", "is", "an", "illustion", "Lunchtime", "double", "so"

Code import nltk from nltk.tokenize import word_tokenize word_tokenize("text~")

Tips for tokenization 1) Punctuation(구두점)이나 특수 문자를 단순 제외해서는 안 된다. - 온점(.)과 같은 경우는 문장의 경계를 알 수 있는데 도움 - $45.45 경우 45.55를 하나로 취급해야 함 - 01/02/06은 날짜를 의미 - 123,456,789 숫자 세 자리 단위로 콤마 2) 줄임말과 단어 내에 띄어쓰기가 있는 경우? - 아포스트로피(')는 압축된 단어를 다시 펼치는 역할을 하기도 함 (what're는 what are의 줄임말이며, we're는 we are의 줄임말) - New York이라는 단어나 rock 'n' roll이라는 단어는 하나의 단어이지만 중간에 띄어쓰기가 존재

1.2 Sentence Tokenization

: 토큰의 단위를 문장(Sentence)으로 하는 경우

Example input: “His barber kept his word. But keeping such a huge secret to himself was driving him crazy. Finally, the barber went up a mountain and almost to the ed” output: ['His barber kept his word.', 'But keeping such a huge secret to himself was driving him crazy.', 'Finally, the barber went up a mountain and almost to the edge of a cliff.', 'He dug a hole in the midst of some reeds.', 'He looked about, to mae sure no one was near.']

Code import nltk from nltk.tokenize import sent_tokenize sent_tokenize(”text~”)

Tips for tokenization 1) 주어진 corpus를 문장 단위로 잘라낼 때, 물음표(?)나 느낌표(!) 와는 달리 온점(.)은 문장의 끝이 아니��라도 등장할 수 있다. - IP 192.168.56.31 - [email protected] - Ph.D. students

1.3 Part-of-speech tagging (품사 부착)

: 같은 단어라도 품사에 따라서 의미가 달라지기도 한다. 따라서 토큰화 과정에서 품사를 구분하여 토큰마다 부착하기도 한다.

e.g.) fly는 동사일 때는 ‘날다’, 명사일 때는 ‘파리’라는 뜻을 의미한다.

Example input: ['I', 'am', 'actively', 'looking', 'for', 'Ph.D.', 'students', '.', 'and', 'you', 'are', 'a', 'Ph.D.', 'student', '.'] output: [('I', 'PRP'), ('am', 'VBP'), ('actively', 'RB'), ('looking', 'VBG'), ('for', 'IN'), ('Ph.D.', 'NNP'), ('students', 'NNS'), ('.', '.'), ('and', 'CC'), ('you', 'PRP'), ('are', 'VBP'), ('a', 'DT'), ('Ph.D.', 'NNP'), ('student', 'NN'), ('.', '.')] (Penn Treebank POG Tags에서 PRP는 인칭 대명사, VBP는 동사, RB는 부사, VBG는 현재부사, IN은 전치사, NNP는 고유 명사, NNS는 복수형 명사, CC는 접속사, DT는 관사를 의미합니다.)

Code from nltk.tag import pos_tag x=word_tokenize(”text~”) pos_tag(x)

2. Normalization and Cleaning

Normalization : 표현 방법이 다른 단어들을 통합시켜서 같은 단어로 만들어준다.

Cleaning : 코퍼스로부터 노이즈 데이터를 제거한다.

자연어 처리에서 전처리, 더 정확히는 정규화의 지향점은 corpus의 복잡성을 줄이는 일

Cleaning and normalization은 토큰화 과정 전, 후에 모두 실시될 수 있다. before: 토큰화 작업에 방해되는 부분을 배제시키기 위해서 after: 토큰화 이후에도 여전히 남아 있는 노이즈를 제거하기 위해서

주어진 corpus에서 노이즈 데이터의 특징을 잡아낼 수 있다면, ‘정규표현식’을 통해서 이를 제거할 수 있는 경우가 많다.

사실 완벽한 정제 작업은 어려운 편이라서, 대부분의 경우 이 정도면 됐다.라는 일종의 합의점을 찾기도 한다.

2.1 (같은 의미를 가졌지만) 표기가 다른 단어들의 통합 e.g.) [ [US, USA], [uhhuh, uh-huh], ... ]

방법론

Lemmatizaiton(표제어 추출)

“Lemma”: 표제어, 기본 사전형 단어

e.g.) "builds", "building", or "built" to the lemma "build" “am”, “are”, “is” to the lemma “be”

Code import nltk from nltk.stem import WordNetLemmatizer n=WordNetLemmatizer() n.lemmatize('dies') # output: ‘dy’ n.lemmatize('dies', 'v') # output: ‘die’ Lemmatizer는 본래 단어의 품사 정보를 알아야만 정확한 결과를 얻을 수 있다. ���, dies가 문장에서 동사로 쓰였다는 것을 argument로 알려주어야 한다.

Stemming(어간 추출)

“Stem”: (단어의 의미를 담고있는) 단어의 핵심 부분

단순히 규칙에 기반하여 어미를 자르는 어림짐작의 방법

Lemmatizaiton과의 차이?

Stemming am → am the going → the go having → hav

Lemmatization am → be the going → the going having → have

어림짐작의 방법이기 때문에 어떤 알고리즘을 쓸지는 여러 stemmer를 corpus에 적용해보고 주어진 데이터에 적합한 stemmer 판단해야 한다.

2.2 대소문자 통합

영어에서 대문자는 문장의 맨 앞 등과 같은 특정 상황에서만 쓰이고, 대부분의 글은 소문자로 작성되기 때문에 이 작업은 보통 대문자를 소문자로 변환하는 작업으로 이루어지게 된다.

소문자로 변환되서는 안 되는 경우 - US(미국) vs us(우리) - 회사 이름(General Motors), 사람 이름(Bush)

소문자 변환을 언제 사용할지에 대한 결정은 machine learning sequence model로 더 정확하게 진행시킬 수 있다. 하지만 만약 규칙없이 마구잡이로 작성된 corpus라면 ML 방법도 크게 도움이 되지 않을 수 있다. 이런 경우, 예외 사항을 고려하지 않고 모든 corpus를 소문자로 바꾸는 것이 종종 더 실용적인 해결책이 되기도 한다.

Code from nltk.tokenize import word_tokenize text = 'A barber is a person.' sentence = word_tokenize(text) result = [] for word in sentence: word=word.lower() result.append(word)

2.3 Removing Unnecessary Words

특수문자

분석하고자 하는 목적에 맞지 않는 불필요한 단어들

Stopword(불용어)

문장 내에서는 자주 등장하지만 분석하는 데 있어서는 큰 도움이 되지 않는 단어

e.g.) I, my, me, over, 조사, 접미사

nltk 패키지에서 이미 100여개 이상의 불용어들을 정의하고 있다.

Code import nltk from nltk.corpus import stopwords from nltk.tokenize import word_tokenize example = "Family is not an important thing. It's everything." stop_words = set(stopwords.words('english')) word_tokens = word_tokenize(example) result = [] for w in word_tokens: if w not in stop_words: result.append(w) print(word_tokens) print(result)

등장 빈도가 적은 단어

길이가 짧은 단어

영어에서는 길이가 짧은 단어를 삭제하는 것만으로도 어느정도 자연어 처리에서 크게 의미가 없는 단어들을 제거하는 효과를 볼 수 있다고 알려져 있다.

길이가 짧은 단어를 제거하는 2차 이유는 punctuation(구두점)들까지도 한 꺼번에 제거하기 위함도 있다.

영어 단어의 평균 길이: 6~7 정도 한국어 단어의 평균 길이: 2~3 정도 <=> 영어에 비해 한국어는 글자 하나에 함축적 의미를 포함하고 있는 경우가 많다. <=> 짧은 길이의 영단어는 의미가 크게 없는 경우가 많다.

e.g.) 길이 1: a(관사), I(나) 길이 2: it, at, to, on, in, by

Code # 정규표현식 활용 import re text = "I was wondering if anyone out there could enlighten me on this car." shortword = re.compile(r'\W*\b\w{1,2}\b')

numbers

Remove numbers if they are not relevant to your analysis.

Usually, regular expressions are used to remove numbers.

Code import re text = ’Box A contains 3 red and 5 white balls, while Box B contains 4 red and 2 blue balls.’ result = re.sub(r’\d+’, ‘’, text) print(result) >> ‘Box A contains red and white balls, while Box B contains red and blue balls.’

(https://medium.com/@datamonsters/text-preprocessing-in-python-steps-tools-and-examples-bf025f872908)

3. Subword Segmentation

: 하나의 단어는 의미있는 여러 단어들의 조합으로 구성된 경우가 많기 때문에, 하나의 단어를 여러 단어로 분리해보겠다는 전처리 작업이다. 따라서 이를 통해 기계가 아직 배운 적이 없는 단어더라도 마치 배운 것처럼 대처할 수 있도록 도와주는 기법 중 하나이다. 이 방법은 기계 번역 등에서 주요 전처리로 사용되고 있다.

3.1 용어

기계가 알고있는 단어들의 집합 : Vocabulary

그리고 기계가 배우지 못하여 모르는 단어 : OOV(Out-Of-Vocabulary) 또는 UNK(Unknown Word)

3.2 WPM(Word Piece Model)

: 하나의 단어를 Subword Unit들로 분리하는 단어 분리 모델

3.2.1 BPE(Byte Pair Encoding) 알고리즘

: 구글의 WPM에는 BPE(Byte Pair Encoding) 알고리즘이 사용되었다.

4. Integer Encoding

: 컴퓨터는 텍스트보다는 숫자를 더 잘 처리 할 수 있다. 이를 위해 자연어 처리에서는 텍스트를 숫자로 바꾸는 여러가지 기법들이 있다. 그리고 그러한 기법들을 본격적으로 적용시키기 위한 첫 단계로 각 단어를 고유한 숫자에 Mapping시키는 전처리 작업이 필요할 때가 있다.

e.g.) Vocabulary(단어 집합)이 5000개라면 1번부터 5000번까지 index 부여

4.1 어떤 기준으로 index를 부여?

방법 중 하나는 단어를 frequency 순으로 정렬하여 Vocabulary(단어 집합)을 만들고, frequency가 높은 순서대로 차례로 낮은 숫자부터 integer를 부여하는 방법이 있다.

In gensim.corpora.Dictionary, Here we assigned a unique integer id to all words appearing in the corpus with the gensim.corpora.dictionary.Dictionary class. This sweeps across the texts, collecting word counts and relevant statistics.

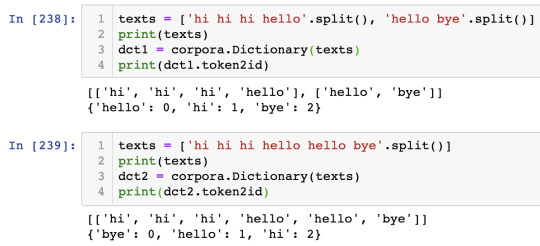

*절대적인 token의 수는 같더라도 (hi*3, hello*2, bye*1), 문서의 개수와 분포가 다르면 encoded 되는 integer가 다르게 나오네!!!

4.2 Code

from gensim import corpora print(bookshelf_speaker['tokens'].head()) >> 0 [sharp, good, brand, speaker, live, name, sharp] 1 ['ve, speaker, month, extremely, suprised, gre... 2 [small, klh, speaker, look, low, cost, attract... 3 [terminally, addict, asthetic, must, admit, fi... 4 [get, speaker, month, ago, wire, player, good,... incoded_integer = corpora.Dictionary(bookshelf_speaker['tokens'].values) print(incoded_integer) >> Dictionary(30665 unique tokens: ['brand', 'good', 'live', 'name', 'sharp']...) print("IDs 1 through 10: {}".format(sorted(incoded_int.token2id.items(), key=operator.itemgetter(1), reverse = False)[:10])) >> IDs 1 through 10: [('brand', 0), ('good', 1), ('live', 2), ('name', 3), ('sharp', 4), ('speaker', 5), ("'ll", 6), ("'ve", 7), ('100', 8), ('100htz', 9)]

5. Steps

Tokenizing

(split by ".", "-", and "/")

Part-of-speech tagging - Lemmatizaiton/Stemming

Removing numbers

Lowercasing - Stopwords

Short length (<=2)

Low frequency (=1)

5.1 Code

def get_wordnet_pos(word): """Map POS tag to first character lemmatize() accepts""" tag = nltk.pos_tag([word])[0][1][0].upper() tag_dict = {"J": wordnet.ADJ, "N": wordnet.NOUN, "V": wordnet.VERB, "R": wordnet.ADV} return tag_dict.get(tag, wordnet.NOUN)

def add_token_col(df): tokens = [] stop_words = stopwords.words('english') lem = WordNetLemmatizer()

for txt in df['COMMENT'].values: row = [] # tokenize for word in word_tokenize(txt): # lowercasing word_prprc = word.lower() # stopwords if word_prprc not in stop_words: # lemmatize word_prprc = lem.lemmatize(word_prprc, get_wordnet_pos(word_prprc)) # short length if len(word_prprc)>2: row.append(word_prprc) tokens.append(row) df['tokens'] = tokens return df

(https://wikidocs.net/21698)

0 notes

Text

Exploring D:BH fics (Part 3)

In this post I’m going to talk about how I cleaned the text data and trained the model (plain old Latent Dirichlet Allocation) shown in the interactive visualisation of topics in DBH fics mentioned here was made.

Recap: Data was scraped from AO3 in mid-October. I removed any fics that were non-English, were crossovers and had less than 10 words. A small number of fics were missed out during the scrape - overall 13933 D:BH fics remain for analysis.

Again, stuff is always WIP!

Part 1: Publishing frequency for D:BH with ratings breakdown Part 2: Building a network visualisation of D:BH ships Part 3: Topic modeling D:BH fics (retrieving common themes) Part 4: Average hits/kudos/comment counts/bookmarks received (split by publication month & rating) One-shots only. Part 5: Differences in word use between D:BH fics of different ratings Part 6: Word2Vec on D:BH fics (finding similar words based on word usage patterns) Part 7: Differences in topic usage between D:BH fics of different ratings Part 8: Understanding fanon representations of characters from story tags Part 9: D:BH character prominence in the actual game vs AO3 fics

Basically, what I did here was apply the Latent Dirichlet Allocation algorithm (topic modeling). I’m not going to go into the details behind it (not going to add to the big collection of layman LDA posts hanging out on the Internet) - all you need to know in this context is that it’s an unsupervised algorithm (i.e., I don’t tell the algorithm what topics to form, beyond the number of topics to look out for) that is trained on large amounts of text to pick out ‘topics’. Topics are like collections of co-occurring words - that they happen to usually be human-interpretable is a nice quality of LDA.

There are quite a few variants of LDA. This one is the basic vanilla one.

1. Identifying names for removal. Based on past experience with LDA, if I don’t remove names, they end up intruding in topics/forming a topic(s) on their own. That’s not really informative. I don’t really need a topic of D:BH character/OC names.

I ran the Stanford Named Entity Recognizer on all of the text. This tagger automatically picks out people’s names for me. I already had a base collection of names from my earlier run with RK1700, so I added on to that collection. Of course, the tagger won’t be 100% accurate, so I still had to check its output.

Let’s just say that was a lot of manual work. I had a collection of 13439 names in the end.

2. Keeping only nouns and verbs. Since I wanted themes, I thought perhaps nouns and verbs may be the most important words to capture those (this is up for debate. Definitely an assumption on my end, and something to keep in mind when looking at the results). I ran the Stanford part-of-speech tagger on all the text, which automatically labels the part-of-speech of each word (e.g. adjective, adverb), retaining only nouns and verbs.

3. Final clean of the text. (Note: I’ve already done some cleaning for steps 1 and 2 to prepare the text for the taggers). At this final clean, I removed words that were only 1 character long, had any non-English characters, were stop-words (i.e. really common words like I’m), or that were in my collection of names.

So now, each story is basically a list of nouns and verbs that aren’t very common/redundant words and that aren’t names.

4. Chunking the stories. My research work is with shorter social media text, so I’ve never had this issue before. But training a model to look for patterns in something as long as a fic (that can be more than 10k words, even for one-shots!) - we’re not going to get nice topics. So - I made the decision to chunk the stories.

I started by splitting them by chapters. One-shots stay as one-shots (one document), but a three-chapter story gets split into three separate documents. This bumped the number of documents from 13933 (the original number of fics) up to 41597.

Still, some chapters and one-shots may be really, really long. Here are my chunking conditions:

If a chapter/one-shot is below 2000 words, Leave it as one document. If I divide a chapter’s/one-shot’s length by 1000, a) and I get a quotient larger than 2 and a remainder of 0.5 or less (let’s say, quotient = 3, remainder =0.4): The chapter/one-shot is chunked into a chunk of 1000, a second chunk of 1000, and a third chunk of 1400. b) and I get a quotient larger than 2 and a remainder of greater than 0.5 (let’s say, quotient = 3, remainder = 0.9): The chapter/one-shot is chunked into a chunk of 1000, a second chunk of 1000, a third chunk of 1000, and a fourth chunk of 900.

The logic really is that I didn’t want random chunks of 2 words or something floating around. The algorithm can’t learn patterns from such short text.

This increased the document count from 41597 to 50126.

5. Bigrams and creating the dictionary. I used Gensim for this this task and LDA.

So, bigrams. Single words like ‘guns’ and ‘shots’ are great, but bigrams (basically two words that appear together, like gun_shot) give a bit more context. I looked for bigrams that appeared at least 100 times (no rare ones, they’re not useful for learning patterns) and appended them to the respective document that they appeared in.

Then I created a dictionary of words for the model to sort into topics. I put some filters here too (also important to note, results may change depending on choices here). I only wanted words that appeared in no less than 140 chunks and no more than 40% of the chunks (20050 chunks); so terms that weren’t too rare or too common.

6. Training the LDA model. Important part of LDA: picking how many topics you want the model to learn. I relied on a measure called coherence to help with this. It’s basically just a quantitative automated measure of topic interpretability. The gold standard really is to get human judges around, maybe agree on saying, ‘Hm, this topic model looks fancy but makes no sense’ and you go back to the drawing board. But I don’t have that and coherence has been shown to correlate pretty well with human judgments, so it’ll do.

I ran LDA to model for 2 topics all the way to 60 topics in steps of 2. So: try 2 topics, 4 topics, 6 topics.... to 60 topics. I calculated coherence at each topic number. Once the algorithm was done, I plotted out the coherence graph and manually checked the higher-coherence-scoring models.

44 was one of them (other suggested topic numbers were 30 and 38, but they were still a bit too general for my liking. Again, note the bias introduced here from my choices).

7. Labeling topics. With the final model decided, I plotted the visualisation using pyLDAvis. Using the top 10 keywords of each topic, with lambda (λ) set to 0.6 following [1]’s recommendation, I manually labelled each topic. I’m definitely not the final word on the D:BH fandom or topic models, so perhaps you may disagree with the labels I came up with.

And that wraps up this little exercise on LDA with long(er) texts! It’s admittedly not as exciting as the network of ships, but it was a good introductory exercise to working with texts outside of my research area.

1 note

·

View note