#Clickstream

Explore tagged Tumblr posts

Text

🎈Project Title: Real-Time Clickstream Analysis and Conversion Rate Optimization Dashboard.🫖

ai-ml-ds-web-analytics-clickstream-cro-dashboard-024 Filename: realtime_clickstream_dashboard.py (Backend/Processing), potentially separate file for Dash app. Timestamp: Mon Jun 02 2025 19:48:54 GMT+0000 (Coordinated Universal Time) Problem Domain: Web Analytics, E-commerce Optimization, User Behavior Analysis, Real-Time Data Processing, Conversion Rate Optimization (CRO), Data Visualization,…

#Clickstream#CRO#Dash#DataVisualization#Ecommmerce#pandas#Plotly#python#RealTimeAnalytics#UserBehavior#WebAnalytics

0 notes

Text

Unlocking E-commerce Potential with Quantzig’s Clickstream Data Analytics

Understanding Clickstream Data Analytics for E-commerce

In the fast-paced world of e-commerce, clickstream data analytics is becoming a cornerstone for businesses seeking to enhance their understanding of customer behavior. This powerful tool allows companies to track user interactions on their platforms, leading to valuable insights that drive engagement and conversions. This case study explores how Quantzig’s clickstream analytics empowered a leading sportswear retailer to improve their online performance significantly.

Exploring User Behavior

Clickstream data provides a detailed record of user interactions, enabling businesses to understand the customer journey from start to finish. By analyzing this data, companies can identify patterns and areas for optimization. This case study highlights how Quantzig’s innovative analytics approach led to a remarkable 120% increase in clickthrough rates.

Client Overview and Challenges

The client, a prominent division of a global sportswear brand, enjoyed a strong online presence and achieved a 15% growth in e-commerce sales in 2023. However, they faced challenges such as:

Limited insights into customer behavior and preferences

Difficulty in creating personalized experiences for users

Ineffective marketing strategies due to insufficient data-driven insights

Low conversion rates despite overall sales success

Quantzig’s Approach

To tackle these challenges, Quantzig implemented a comprehensive clickstream analytics strategy:

Data Collection and Enhancement: Sophisticated algorithms were deployed to gather and refine clickstream data, processing millions of interactions.

Journey Analysis: AI-driven models were utilized to map and analyze customer pathways, improving Direct-to-Consumer efforts.

Behavioral Clustering: Users were segmented based on their browsing and purchasing behaviors, enabling targeted marketing strategies.

Personalization System: An AI-powered engine delivered tailored product recommendations, enhancing user experiences.
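As a rough illustration of the journey-analysis and clustering steps above, the sketch below splits each user's events into sessions on an inactivity gap and then assigns a coarse behavioral segment. The event fields (`user`, `ts`, `action`), the 30-minute threshold, and the segment names are assumptions made for this example, not details of Quantzig's system.

```python
from collections import defaultdict

SESSION_GAP_SECONDS = 30 * 60  # assumed inactivity threshold


def sessionize(events):
    """Group events per user into sessions, splitting on inactivity gaps."""
    by_user = defaultdict(list)
    for event in sorted(events, key=lambda e: (e["user"], e["ts"])):
        by_user[event["user"]].append(event)

    sessions = defaultdict(list)
    for user, user_events in by_user.items():
        current = [user_events[0]]
        for prev, nxt in zip(user_events, user_events[1:]):
            if nxt["ts"] - prev["ts"] > SESSION_GAP_SECONDS:
                sessions[user].append(current)  # close the finished session
                current = []
            current.append(nxt)
        sessions[user].append(current)
    return dict(sessions)


def segment(user_sessions):
    """Assign a hypothetical segment from the actions a user performed."""
    actions = [e["action"] for s in user_sessions for e in s]
    if "purchase" in actions:
        return "buyer"
    if "add_to_cart" in actions:
        return "cart_abandoner"
    return "browser"


events = [
    {"user": "u1", "ts": 0, "action": "view"},
    {"user": "u1", "ts": 600, "action": "add_to_cart"},
    {"user": "u1", "ts": 4000, "action": "purchase"},
    {"user": "u2", "ts": 100, "action": "view"},
]
sessions = sessionize(events)
segments = {user: segment(s) for user, s in sessions.items()}
```

Here `u1` ends up with two sessions (the purchase comes after a gap longer than 30 minutes) and is labeled a buyer, while `u2` is labeled a browser. A production system would likely use a learned clustering model rather than fixed rules.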

Outcomes and Achievements

The results of Quantzig’s clickstream analytics were impressive, yielding:

A 120% increase in clickthrough rates on personalized experiences

An 18% boost in conversion rates, significantly enhancing sales

Improved personalization capabilities for better customer engagement

Growth in repeat-customer conversion rates from 15% to 25%
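The figures above mix relative increases with a before/after pair of rates, so it can help to make the arithmetic explicit. The short sketch below assumes the 15% and 25% values are rates measured on the same customer base; the helper names are illustrative.

```python
def relative_change(before, after):
    """Relative change, e.g. 0.5 means a 50% increase."""
    return (after - before) / before


def percentage_point_change(before, after):
    """Absolute change between two rates, in percentage points."""
    return (after - before) * 100


# Repeat conversion rate moving from 15% to 25%.
repeat_rel = relative_change(0.15, 0.25)          # about 0.667
repeat_pp = percentage_point_change(0.15, 0.25)   # about 10 points

# A 120% increase means the new value is 2.2 times the old one.
ctr_multiplier = 1 + 1.20
```

In other words, a move from 15% to 25% is a gain of 10 percentage points, or roughly a 67% relative improvement, and a 120% increase in clickthrough rate means the rate slightly more than doubled.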

Conclusion

Quantzig’s clickstream data analytics solution provided the sportswear retailer with the insights needed to understand their customers better and optimize their online platform. As the e-commerce landscape continues to evolve, leveraging such data-driven approaches will be essential for businesses aiming to thrive in a digital marketplace.

0 notes

Text

Why Helical IT Solutions Stands Out Among Data Lake Service Providers

Introduction

With the exponential growth in the volume, variety, and velocity of data (ranging from logs and social media to APIs, IoT, RDBMS, and NoSQL), businesses are increasingly adopting data lakes as a more adaptable and scalable alternative to conventional data warehouses.

Helical IT Solutions has established itself as a leading data lake service provider in this space, backed by a highly skilled team and a proven track record of implementing end-to-end data lake solutions across various domains and geographies. As data lake technology continues to evolve, Helical IT not only delivers robust technical implementations but also assists clients with early-stage cost-benefit analysis and strategic guidance, making them a standout partner in modern data infrastructure transformation.

What is a Data Lake?

A data lake is a centralized repository that allows you to store structured, semi-structured, and unstructured data as-is, without needing to transform it before storage. Unlike traditional data warehouses that require pre-defined schemas and structure, data lakes offer a schema-on-read approach—meaning data is ingested in its raw form and structured only when accessed. This architectural flexibility enables organizations to capture and preserve data from diverse sources such as transactional databases, log files, clickstreams, IoT devices, APIs, and even social media feeds.
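To make schema-on-read concrete, here is a minimal sketch: raw records are stored exactly as they arrive, and a schema is applied only when a consumer reads them. The record contents and the `read_with_schema` helper are hypothetical and stand in for what a query engine would do over files in a lake.

```python
import json

# Raw, heterogeneous records stored as-is (one JSON document per line).
RAW_LINES = [
    '{"user": "u1", "event": "click", "ts": "2024-01-01T10:00:00"}',
    '{"device": "sensor-7", "temp_c": "21.5"}',
    '{"user": "u2", "event": "purchase", "amount": "19.99"}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: keep matching records and cast fields."""
    for line in raw_lines:
        record = json.loads(line)
        if all(field in record for field in schema):
            yield {field: cast(record[field]) for field, cast in schema.items()}


# Two consumers read the same raw data through different schemas.
clicks = list(read_with_schema(RAW_LINES, {"user": str, "event": str}))
temps = list(read_with_schema(RAW_LINES, {"device": str, "temp_c": float}))
```

The same stored lines serve both readers: one sees user events, the other sees sensor readings with `temp_c` cast to a float. Nothing had to be reshaped at ingestion time.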

This adaptability makes data lakes especially valuable in today’s fast-changing digital landscape, where new data sources and formats emerge constantly. However, with this flexibility comes complexity—especially in maintaining data integrity, performance, and accessibility. That’s why the architecture of a data lake is critical.

Overview of Helical IT Solutions

Helical IT Solutions is a trusted technology partner with deep expertise in delivering modern, scalable data architecture solutions. With a focus on open-source technologies and cost-effective innovation, Helical IT has built a reputation for providing end-to-end data lake, business intelligence, and analytics solutions across industries and geographies.

What sets Helical IT apart is its ability to understand both the technical and strategic aspects of a data lake implementation. From early-stage assessments to complete solution delivery and ongoing support, the company works closely with clients to align the data lake architecture with business objectives—ensuring long-term value, scalability, and security.

At Helical IT Solutions, our experienced data lake consultants ensure that every implementation is tailored to meet both current and future data needs. We don’t just design systems—we provide a complete, end-to-end solution that includes ingestion, storage, cataloging, security, and analytics. Whether you're seeking to build a cloud-native data lake, integrate it with existing data warehouses, or derive powerful insights through advanced analytics, our enterprise-grade services are designed to scale with your business.

Comprehensive Data Lake Services Offered

Helical IT Solutions offers a holistic suite of data lake solutions, tailored to meet the unique needs of organizations at any stage of their data journey. Whether you're building a new data lake from scratch or optimizing an existing environment, Helical provides the following end-to-end services:

Data Lake Implementation Steps

Identifying data needs and business goals.

Connecting to multiple data sources—structured, semi-structured, and unstructured.

Transforming raw data into usable formats for analysis.

Deploying the solution on-premises or in the cloud (AWS, Azure, GCP).

Developing dashboards and reports for real-time visibility.

Enabling predictive analytics and forecasting capabilities.

Strategic Roadmapping and Architecture

Formulating a customized roadmap and strategy based on your goals.

Recommending the optimal technology stack, whether cloud-native or on-premise.

Starting with a prototype or pilot to validate the approach.

Scalable and Secure Data Lake Infrastructure

Creating data reservoirs from enterprise systems, IoT devices, and social media.

Ensuring petabyte-scale storage for massive data volumes.

Implementing enterprise-wide data governance, security, and access controls.

Accelerated Data Access and Analytics

Reducing time to locate, access, and prepare data.

Enabling custom analytics models and dashboards for actionable insights.

Supporting real-time data analytics through AI, ML, and data science models.

Integration with Existing Ecosystems

Synchronizing with data warehouses and migrating critical data.

Building or upgrading BI dashboards and visualization tools.

Providing solutions that support AI/ML pipelines and cognitive computing.

Training, Maintenance, and Adoption

Assisting with training internal teams, system deployment, and knowledge transfer.

Ensuring seamless adoption and long-term support across departments.

Why Choose Helical IT Solutions as your Data Lake Service Provider

At Helical IT Solutions, our deep focus and specialized expertise in Data Warehousing, Data Lakes, ETL, and Business Intelligence set us apart from generalist IT service providers. With over a decade of experience and more than 85 successful client engagements across industries and geographies, we bring unmatched value to every data lake initiative.

Technology Experts: We focus exclusively on Data Lakes, ETL, BI, and Data Warehousing—bringing deep technical expertise and certified consultants for secure, scalable implementations.

Thought Leadership Across Industries: With clients from Fortune 500s to startups across healthcare, banking, telecom, and more, we combine technical skill with domain-driven insights.

Time and Cost Efficiency: Our specialization and open-source tool expertise help reduce cloud and licensing costs without compromising on quality.

Proven Project Delivery Framework: Using Agile methodology, we ensure fast, flexible, and business-aligned implementations.

Variety of Data Tools Experience: From data ingestion to predictive analytics, we support the entire data lifecycle with hands-on experience across leading tools.

Conclusion

In an era where data is a key driver of innovation and competitive advantage, choosing the right partner for your data lake initiatives is critical. Helical IT Solutions stands out by offering not just technical implementation, but strategic insight, industry experience, and cost-effective, scalable solutions tailored to your unique data landscape.

Whether you're just beginning your data lake journey or looking to enhance an existing setup, Helical IT brings the expertise, agility, and vision needed to turn raw data into real business value. With a commitment to excellence and a focus solely on data-centric technologies, Helical IT is the partner you can trust to transform your organization into a truly data-driven enterprise.

0 notes

Text

Project Title: Real-Time Clickstream Analysis and Conversion Rate Optimization using Pandas, WebSocket APIs, and Real-Time Dash

Reference ID: ai-ml-ds-SrmZNuoOhMk Filename: real_time_clickstream_analysis_and_conversion_rate_optimization.py Short Description: This project implements a sophisticated real-time clickstream analysis system designed to optimize conversion rates on e-commerce platforms. Utilizing Pandas for data manipulation, WebSocket APIs for real-time data ingestion, and Dash for dynamic visualization, the…
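The description is truncated, so the project's actual code is not shown here. As a minimal sketch of the core metric such a system would track, the example below computes funnel counts and a conversion rate from clickstream events. It uses only the standard library rather than the Pandas, WebSocket, and Dash stack named above, and the step names and event shape are assumptions.

```python
def funnel(events, steps=("view", "add_to_cart", "purchase")):
    """Count distinct users per funnel step and the end-to-end conversion rate."""
    users_per_step = {step: set() for step in steps}
    for user, action in events:
        if action in users_per_step:
            users_per_step[action].add(user)

    counts = {step: len(users) for step, users in users_per_step.items()}
    first = counts[steps[0]]
    conversion = counts[steps[-1]] / first if first else 0.0
    return counts, conversion


events = [
    ("u1", "view"), ("u1", "add_to_cart"), ("u1", "purchase"),
    ("u2", "view"), ("u2", "add_to_cart"),
    ("u3", "view"),
    ("u4", "view"),
]
counts, conversion = funnel(events)
```

With this sample, four users viewed a product, two added to cart, and one purchased, giving a 25% view-to-purchase conversion rate. In a live dashboard the same function could be re-run on each batch of newly ingested events.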

0 notes

Text

Introduction to AWS Data Engineering: Key Services and Use Cases

Introduction

Businesses today generate large volumes of data that must be processed efficiently to support decision-making and growth. Amazon Web Services (AWS) offers a range of cloud services for building secure, scalable, and cost-effective data pipelines. With AWS data engineering services, organizations can ingest, store, and analyze data and run machine learning workloads. Together, these services help businesses implement operational workflows while reducing costs, improving efficiency, and maintaining security and regulatory compliance. This article introduces the core AWS data engineering services, their practical applications, and real-world use cases.

What is AWS Data Engineering?

AWS data engineering involves designing, building, and maintaining data pipelines using AWS services. It includes:

Data Ingestion: Collecting data from sources such as IoT devices, databases, and logs.

Data Storage: Storing structured and unstructured data in a scalable, cost-effective manner.

Data Processing: Transforming and preparing data for analysis.

Data Analytics: Gaining insights from processed data through reporting and visualization tools.

Machine Learning: Using AI-driven models to generate predictions and automate decision-making.

With AWS, organizations can streamline these processes, ensuring high availability, scalability, and flexibility in managing large datasets.

Key AWS Data Engineering Services

AWS provides a comprehensive range of services tailored to different aspects of data engineering.

Amazon S3 (Simple Storage Service) – Data Storage

Amazon S3 is a scalable object storage service that allows organizations to store structured and unstructured data. It is highly durable, offers lifecycle management features, and integrates seamlessly with AWS analytics and machine learning services.

Supports unlimited storage capacity for structured and unstructured data.

Allows lifecycle policies for cost optimization through tiered storage.

Provides strong integration with analytics and big data processing tools.

Use Case: Companies use Amazon S3 to store raw log files, multimedia content, and IoT data before processing.
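The tiered-storage idea behind lifecycle policies can be sketched as a rule plus a small helper that shows which storage class an object would be in at a given age. The dictionary follows the general shape of an S3 lifecycle configuration as accepted by boto3's `put_bucket_lifecycle_configuration`, but no AWS call is made here; the rule ID, prefix, and day counts are hypothetical, and `storage_class_at` is an illustrative helper, not an AWS API.

```python
# Hypothetical rule: prefix, day counts, and storage classes are illustrative.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "tier-raw-logs",
            "Filter": {"Prefix": "raw/logs/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_at(age_days, rule):
    """Return the storage class for an object of this age, or None if expired."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
timeline = {age: storage_class_at(age, rule) for age in (10, 45, 120, 400)}
```

Under this rule, raw logs stay in the standard class for the first month, move to infrequent access, then to archival storage, and are deleted after a year.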

AWS Glue – Data ETL (Extract, Transform, Load)

AWS Glue is a fully managed ETL service that simplifies data preparation and movement across different storage solutions. It enables users to clean, catalog, and transform data automatically.

Supports automatic schema discovery and metadata management.

Offers a serverless environment for running ETL jobs.

Uses Python and Spark-based transformations for scalable data processing.

Use Case: AWS Glue is widely used to transform raw data before loading it into data warehouses like Amazon Redshift.

Amazon Redshift – Data Warehousing and Analytics

Amazon Redshift is a cloud data warehouse optimized for large-scale data analysis. It enables organizations to perform complex queries on structured datasets quickly.

Uses columnar storage for high-performance querying.

Supports Massively Parallel Processing (MPP) for handling big data workloads.

Integrates with business intelligence tools like Amazon QuickSight.

Use Case: E-commerce companies use Amazon Redshift for customer behavior analysis and sales trend forecasting.

Amazon Kinesis – Real-Time Data Streaming

Amazon Kinesis allows organizations to ingest, process, and analyze streaming data in real-time. It is useful for applications that require continuous monitoring and real-time decision-making.

Supports high-throughput data ingestion from logs, clickstreams, and IoT devices.

Works with AWS Lambda, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for analytics.

Enables real-time anomaly detection and monitoring.

Use Case: Financial institutions use Kinesis to detect fraudulent transactions in real-time.
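The kind of logic a stream consumer might run for real-time anomaly detection can be sketched without any AWS dependency. The example below flags values that sit far from a rolling baseline; the window size, threshold, and sample amounts are assumptions, and a real fraud system would use far richer features than a single amount.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(amounts, window=5, threshold=3.0):
    """Yield (index, amount) for values far from the recent rolling mean."""
    recent = deque(maxlen=window)
    for i, amount in enumerate(amounts):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(amount - mu) / sigma > threshold:
                yield i, amount
                continue  # keep the outlier out of the baseline
        recent.append(amount)


# Stand-in for records read from a stream, in arrival order.
stream = [20, 22, 19, 21, 20, 23, 950, 21, 22]
flagged = list(detect_anomalies(stream))
```

Because the function is a generator, it processes one record at a time and never needs the whole stream in memory. In this sample only the 950 transaction is flagged.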

AWS Lambda – Serverless Data Processing

AWS Lambda enables event-driven serverless computing. It allows users to execute code in response to triggers without provisioning or managing servers.

Executes code automatically in response to AWS events.

Supports seamless integration with S3, DynamoDB, and Kinesis.

Charges only for the compute time used.

Use Case: Lambda is commonly used for processing image uploads and extracting metadata automatically.
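A minimal sketch of an event-driven handler for the upload use case is shown below. It reads the bucket name, object key, and size from an S3 event notification and returns them; the bucket name and key in `sample_event` are made up, and the handler only inspects the event rather than downloading or analyzing the image itself.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Collect bucket, key, and size for each object in an S3 event notification."""
    results = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        results.append({
            "bucket": s3["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 event notifications.
            "key": unquote_plus(s3["object"]["key"]),
            "size_bytes": s3["object"].get("size"),
        })
    return {"processed": len(results), "objects": results}


# Trimmed-down example event for local testing (hypothetical values).
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-uploads"},
                "object": {"key": "photos/summer+trip.jpg", "size": 2048},
            }
        }
    ]
}
result = handler(sample_event, None)
```

Calling the handler locally with a sample event like this is a convenient way to test the parsing logic before wiring it to a real bucket trigger.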

Amazon DynamoDB – NoSQL Database for Fast Applications

Amazon DynamoDB is a managed NoSQL database that delivers high performance for applications that require real-time data access.

Provides single-digit millisecond latency for high-speed transactions.

Offers built-in security, backup, and multi-region replication.

Scales automatically to handle millions of requests per second.

Use Case: Gaming companies use DynamoDB to store real-time player progress and game states.

Amazon Athena – Serverless SQL Analytics

Amazon Athena is a serverless query service that allows users to analyze data stored in Amazon S3 using SQL.

Eliminates the need for infrastructure setup and maintenance.

Uses Presto and Hive for high-performance querying.

Charges only for the amount of data scanned.

Use Case: Organizations use Athena to analyze and generate reports from large log files stored in S3.
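Because billing is tied to data scanned, reducing the bytes a query reads directly reduces its cost. The sketch below works through that arithmetic. The price of 5 USD per TB and the binary definition of a terabyte are assumptions for illustration; check current pricing for your region.

```python
PRICE_PER_TB_USD = 5.0      # assumed price, not an authoritative figure
BYTES_PER_TB = 1024 ** 4    # assumed definition of a terabyte


def query_cost(bytes_scanned, price_per_tb=PRICE_PER_TB_USD):
    """Estimate the cost of a query from the number of bytes it scans."""
    return bytes_scanned / BYTES_PER_TB * price_per_tb


# Scanning a full 2 TB log table.
full_scan_cost = query_cost(2 * BYTES_PER_TB)

# Same table, but partitioning and a columnar format limit the scan to 5%.
pruned_cost = query_cost(2 * BYTES_PER_TB * 0.05)
```

Under these assumptions the full scan costs about 10 USD and the pruned query about 0.50 USD, which is why partitioned, compressed, columnar data in S3 is usually recommended for this pricing model.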

AWS Data Engineering Use Cases

AWS data engineering services cater to a variety of industries and applications.

Healthcare: Storing and processing patient data for predictive analytics.

Finance: Real-time fraud detection and compliance reporting.

Retail: Personalizing product recommendations using machine learning models.

IoT and Smart Cities: Managing and analyzing data from connected devices.

Media and Entertainment: Streaming analytics for audience engagement insights.

These services empower businesses to build efficient, scalable, and secure data pipelines while reducing operational costs.

Conclusion

AWS provides a comprehensive ecosystem of data engineering tools that streamline data ingestion, storage, transformation, analytics, and machine learning. Services like Amazon S3, AWS Glue, Redshift, Kinesis, and Lambda allow businesses to build scalable, cost-effective, and high-performance data pipelines.

Selecting the right AWS services depends on the specific needs of an organization. For those looking to store vast amounts of unstructured data, Amazon S3 is an ideal choice. Companies needing high-speed data processing can benefit from AWS Glue and Redshift. Real-time data streaming can be efficiently managed with Kinesis. Meanwhile, AWS Lambda simplifies event-driven processing without requiring infrastructure management.

Understanding these AWS data engineering services allows businesses to build modern, cloud-based data architectures that enhance efficiency, security, and performance.

By leveraging AWS data engineering services, organizations can transform raw data into valuable insights, enabling better decision-making and competitive advantage.

#aws cloud data engineer course#aws cloud data engineer training#aws data engineer course#aws data engineer course online#Youtube

0 notes

Text

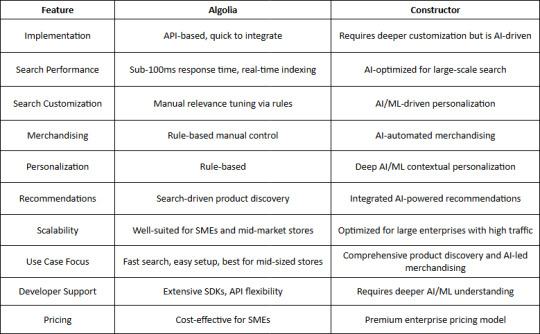

Algolia vs. Constructor Search Tools: A Comprehensive Comparison

Evaluating Performance, Features, and Usability to Help You Choose the Right Search Solution.

When it comes to implementing a powerful search and discovery solution for eCommerce, two major players often come up: Algolia and Constructor. While both provide advanced search capabilities, their workflows, implementations, and approach to AI-driven product discovery set them apart. This blog takes a deep dive into their differences, focusing on real-world applications, technical differentiators, and the impact on business KPIs.

Overview of Algolia and Constructor

Algolia

Founded in 2012, Algolia is a widely recognized search-as-a-service platform.

It provides instant, fast, and reliable search capabilities with an API-first approach.

Commonly used in various industries, including eCommerce, SaaS, media, and enterprise applications.

Provides keyword-based search with support for vector search and AI-driven relevance tuning.

Constructor

A newer entrant in the space, Constructor focuses exclusively on eCommerce product discovery.

Founded in 2015 and built from the ground up with clickstream-driven AI for ranking and recommendations.

Used by leading eCommerce brands like Under Armour and Home24.

Aims to optimize business KPIs like conversion rates and revenue per visitor.

Key Differences in Implementation and Workflows

1. Search Algorithm and Ranking Approach

Algolia:

Uses keyword-based search (TF-IDF, BM25) with additional AI-driven ranking enhancements.

Supports vector search, semantic search, and hybrid approaches.

Merchandisers can fine-tune relevance manually using rule-based controls.
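For readers unfamiliar with BM25, the sketch below implements the standard scoring formula over a tiny catalog. It is a generic textbook version meant to show what keyword relevance measures, not a description of Algolia's internal ranking; the parameter values `k1=1.5` and `b=0.75` and the product titles are assumptions.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with the BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Number of documents containing each term.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Term frequency saturates and is normalized by document length.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "red zip hoodie",
    "blue running shoes",
    "grey hoodie with hood lining hoodie",
]
scores = bm25_scores("hoodie", docs)
```

The shoes score zero because they never mention the query term, while both hoodies score above zero. Note that nothing in this score reflects whether shoppers actually click or buy either product.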

Constructor:

Built natively on a Redis-based core rather than Solr or Elasticsearch.

Prioritizes clickstream-driven search and personalization, focusing on what users interact with.

Instead of purely keyword relevance, it optimizes for "attractiveness", ranking results based on a user’s past behavior and site-wide trends.

Merchandisers work with AI, using a human-interpretable dashboard to guide search ranking rather than overriding it.
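The idea of ranking by "attractiveness" rather than keyword match alone can be sketched as a blend of a text-match score with behavioral signals. This is an illustrative toy, not Constructor's actual algorithm: the weights, the field names, and the `text_weight` parameter are all assumptions.

```python
def attractiveness(product, weights=(1.0, 3.0, 8.0)):
    """Weighted clicks, add-to-carts, and purchases per impression."""
    w_click, w_cart, w_buy = weights
    impressions = max(product["impressions"], 1)  # avoid division by zero
    weighted = (
        w_click * product["clicks"]
        + w_cart * product["carts"]
        + w_buy * product["purchases"]
    )
    return weighted / impressions


def rank(products, text_weight=0.3):
    """Order products by a blend of keyword match and behavioral signals."""
    def combined(product):
        return (
            text_weight * product["text_match"]
            + (1 - text_weight) * attractiveness(product)
        )
    return sorted(products, key=combined, reverse=True)


catalog = [
    {"id": "hoodie-a", "text_match": 1.0, "impressions": 1000,
     "clicks": 50, "carts": 5, "purchases": 1},
    {"id": "hoodie-b", "text_match": 0.8, "impressions": 1000,
     "clicks": 200, "carts": 60, "purchases": 30},
]
ranked_ids = [product["id"] for product in rank(catalog)]
```

In this sample, `hoodie-b` outranks `hoodie-a` despite a weaker keyword match, because shoppers click, cart, and buy it far more often per impression.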

2. Personalization & AI Capabilities

Algolia:

Offers personalization via rules and AI models that users can configure.

Uses AI for dynamic ranking adjustments but primarily relies on structured data input.

Constructor:

Focuses heavily on clickstream data, meaning every interaction—clicks, add-to-cart actions, and conversions—affects future search results.

Uses Transformer models for context-aware personalization, dynamically adjusting rankings in real-time.

AI Shopping Assistant allows for conversational product discovery, using Generative AI to enhance search experiences.

3. Use of Generative AI

Algolia:

Provides semantic search and AI-based ranking but does not have native Generative AI capabilities.

Users need to integrate third-party LLMs (Large Language Models) for AI-driven conversational search.

Constructor:

Natively integrates Generative AI to handle natural language queries, long-tail searches, and context-driven shopping experiences.

AI automatically understands customer intent—for example, searching for "I'm going camping in Yosemite with my kids" returns personalized product recommendations.

Built using Amazon Bedrock and supports multiple LLMs for improved flexibility.

4. Merchandiser Control & Explainability

Algolia:

Provides rule-based tuning, allowing merchandisers to manually adjust ranking factors.

Search logic and results are transparent but require manual intervention for optimization.

Constructor:

Built to empower merchandisers with AI, allowing human-interpretable adjustments without overriding machine learning.

Black-box AI is avoided—every recommendation and ranking decision is traceable and explainable.

Attractiveness vs. Technical Relevance: Prioritizes "what users want to buy" over "what matches the search query best".

5. Proof-of-Concept & Deployment

Algolia:

Requires significant setup to run A/B tests and fine-tune ranking.

Merchandisers and developers must manually configure weighting and relevance.

Constructor:

Offers a "Proof Schedule", allowing retailers to test before committing.

Retailers install a lightweight beacon, send a product catalog, and receive an automated performance analysis.

A/B tests show expected revenue uplift, allowing data-driven decision-making before switching platforms.
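Whichever platform runs the experiment, an A/B result comes down to comparing two conversion rates and checking that the difference is unlikely to be noise. The sketch below uses a standard two-proportion z-test; the visitor and conversion counts are invented for illustration and do not come from either vendor.

```python
import math


def ab_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates with a two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {"uplift": (p_b - p_a) / p_a, "z": z, "p_value": p_value}


# Hypothetical test: control converts 200 of 10,000, variant 260 of 10,000.
result = ab_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
```

Here the variant shows a 30% relative uplift in conversion rate with a p-value below 0.01. Translating that into expected revenue uplift would additionally require average order value and traffic volume.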

Real-World Examples & Business Impact

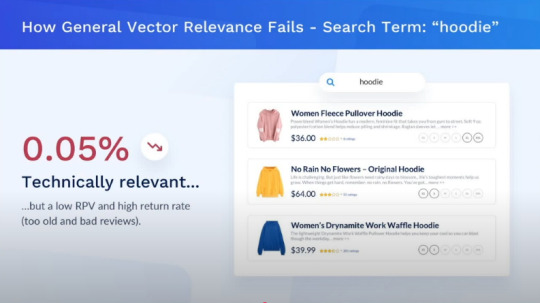

Example 1: Searching for a Hoodie

A user searches for "hoodie" on an eCommerce website using Algolia vs. Constructor:

Algolia's Approach: Shows hoodies ranked based on keyword relevance, possibly with minor AI adjustments.

Source: YouTube - AWS Partner Network

Constructor's Approach: Learns from past user behavior, surfacing high-rated hoodies in preferred colors and styles, increasing the likelihood of conversion.

Source: YouTube - AWS Partner Network

Example 2: Conversational Search for Camping Gear

A shopper types, "I'm going camping with my preteen kids for the first time in Yosemite. What do I need?"

Algolia: Requires manual tagging and structured metadata to return relevant results.

Constructor: Uses Generative AI and Transformer models to understand the context and intent, dynamically returning the most relevant items across multiple categories.

Which One Should You Choose?

Why Choose Algolia?

Ease of Implementation – Algolia provides a quick API-based setup, making it ideal for eCommerce sites looking for a fast integration process.

Speed & Performance – With real-time indexing and instant search, Algolia is built for speed, ensuring sub-100ms response times.

Developer-Friendly – Offers extensive documentation, SDKs, and a flexible API for developers to customize search behavior.

Rule-Based Merchandising – Allows businesses to manually tweak search relevance with robust rules and business logic.

Cost-Effective for SMEs – More affordable for smaller eCommerce businesses with straightforward search needs.

Enterprise-Level Scalability – Can support growing businesses but requires manual optimization for handling massive catalogs.

Search-Driven Recommendations – While Algolia supports recommendations, they are primarily based on search behaviors rather than deep AI.

Manual Control Over Search & Merchandising – Provides businesses the flexibility to define search relevance and merchandising manually.

Strong Community & Developer Ecosystem – Large user base with extensive community support and integrations.

Why Choose Constructor?

Ease of Implementation – While requiring more initial setup, Constructor offers pre-trained AI models that optimize search without extensive manual configurations.

Speed & Performance – Uses AI-driven indexing and ranking to provide high-speed, optimized search results for large-scale retailers.

Developer-Friendly – Requires deeper AI/ML understanding but provides automation that reduces manual tuning efforts.

Automated Merchandising – AI-driven workflows reduce the need for manual intervention, optimizing conversion rates.

Optimized for Large Retailers – Designed for enterprises requiring full AI-driven control over search and discovery.

Deep AI Personalization – Unlike Algolia’s rule-based system, Constructor uses advanced AI/ML to provide contextual, personalized search experiences.

End-to-End Product Discovery – Goes beyond search, incorporating personalized recommendations, dynamic ranking, and automated merchandising.

Scalability – Built to handle massive catalogs and high traffic loads with AI-driven performance optimization.

Integrated AI-Powered Recommendations – Uses AI-driven models to surface relevant products in real-time based on user intent and behavioral signals.

Data-Driven Decision Making – AI continuously optimizes search and merchandising strategies based on real-time data insights.

Conclusion

Both Algolia and Constructor are excellent choices, but their suitability depends on your eCommerce business's needs:

If you need a general-purpose, fast search engine, Algolia is a great fit.

If your focus is on eCommerce product discovery, personalization, and revenue optimization, Constructor provides an AI-driven, clickstream-based solution designed for maximizing conversions.

With the evolution of AI and Generative AI, Constructor is positioning itself as a next-gen alternative to traditional search engines, giving eCommerce brands a new way to drive revenue through personalized product discovery.

This blog is driven by our experience with product implementations for customers.

Connect with us

Thanks for reading Ragul's Blog! Subscribe for free to receive new posts and support my work.

Text

Top Event Stream Processing Platforms for Real-Time Data Management

Event Stream Processing (ESP) software enables real-time analysis and processing of data streams as they are generated. It allows organizations to consume, evaluate, and respond to large volumes of continuous data from sources such as sensors, social media, and transaction logs. ESP software can filter, aggregate, and transform data in real time, delivering immediate insights and supporting rapid decision-making. QKS Group predicts that the Event Stream Processing (ESP) market will grow at a CAGR of 22.14% through 2028.

The main advantages of event stream processing software are improved responsiveness and operational efficiency. Organizations that process data in real time can spot patterns, trends, and anomalies as they happen, allowing for prompt intervention. This capability is critical for applications such as fraud detection, system performance monitoring, and IoT device management. By supplying real-time information, ESP software also reduces latency and improves the accuracy of data-driven decisions, increasing overall business agility and competitiveness.

Download the sample report of Market Share: https://qksgroup.com/download-sample-form/market-share-event-stream-processing-esp-2023-worldwide-6527

In this blog, we’ll explore what ESP means, how it works, and the top event stream processing software, making it easier for you to find the best solution for your use case.

What is Event Stream Processing?

ESP platforms are software subsystems that analyze streaming event data in real time. They run computations on unbounded input data continuously as it arrives, enabling immediate reactions to current conditions and/or storing results in files, object stores, or databases for later use. Examples of input data include clickstreams, copies of business transactions or database updates, social media posts, market data feeds, and sensor data from physical assets such as mobile devices, machines, and automobiles.
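To make "computations on unbounded input" concrete, here is a minimal Python sketch using generators. The source `sensor_readings` is a hypothetical stand-in for a real feed and never ends; `running_max` emits an updated result after every event instead of waiting for the input to finish. Both names are assumptions made for this illustration.

```python
import itertools
import random

def sensor_readings(seed=0):
    """Hypothetical unbounded source: yields temperature readings forever."""
    rng = random.Random(seed)
    while True:
        yield 20.0 + rng.uniform(-1.0, 1.0)

def running_max(stream):
    """Emit the maximum seen so far after every event."""
    current = float("-inf")
    for value in stream:
        current = max(current, value)
        yield current

# The source never terminates, so take only the first five results for the demo.
first_five = list(itertools.islice(running_max(sensor_readings()), 5))
```

Because each stage is lazy, nothing is buffered beyond the single value held in `current`, which is what allows the computation to keep running on an input that has no end.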

How Does Event Stream Processing Software Work?

Event Stream Processing (ESP) software works by continuously ingesting data from multiple real-time sources and processing it for specific purposes. Depending on how the ESP software is deployed, the standard operations include filtering, aggregating, and analyzing data. ESP uses complex event processing engines to identify patterns, correlations, and anomalies within the data streams. These findings trigger actions or generate insights, which can be visualized or fed into other systems for further use.
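The filtering and aggregating steps described above can be sketched in a few lines of plain Python. This is an illustrative toy rather than any vendor's API: the event fields (`ts`, `type`, `page`) and the function names are assumptions, and events are assumed to arrive ordered by timestamp.

```python
from collections import Counter

def filter_events(events, event_type):
    """Pass through only events of the requested type."""
    for event in events:
        if event["type"] == event_type:
            yield event

def tumbling_window_counts(events, window_seconds):
    """Count events per page in fixed, non-overlapping time windows.

    Assumes events arrive ordered by their "ts" field (seconds).
    """
    window_start, counts = None, Counter()
    for event in events:
        start = event["ts"] - event["ts"] % window_seconds
        if window_start is not None and start != window_start:
            # The previous window is complete, so emit it and start a new one.
            yield window_start, dict(counts)
            counts = Counter()
        window_start = start
        counts[event["page"]] += 1
    if counts:
        yield window_start, dict(counts)

events = [
    {"ts": 1, "type": "click", "page": "/home"},
    {"ts": 4, "type": "scroll", "page": "/home"},
    {"ts": 7, "type": "click", "page": "/cart"},
    {"ts": 12, "type": "click", "page": "/home"},
    {"ts": 14, "type": "click", "page": "/home"},
]
clicks = filter_events(events, "click")
windows = list(tumbling_window_counts(clicks, window_seconds=10))
```

Here `windows` holds one entry per 10-second window, each mapping a page to its click count. A production platform would add handling for late or out-of-order events, which this sketch deliberately leaves out.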

ESP software processes related events together rather than as isolated data points. This lets the software interpret each event in its context rather than as a standalone action, so companies can react quickly to events as they happen and make timely, informed decisions.
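One way to picture processing events in context is a simple pattern detector: a single failed login means little, but several from the same user in a short span may be worth an alert. The sketch below assumes hypothetical event fields (`ts`, `user`, `type`) and arbitrary thresholds chosen for illustration.

```python
from collections import defaultdict, deque

def detect_bursts(events, threshold=3, window_seconds=60):
    """Yield (user, ts) when a user reaches `threshold` failed logins
    within `window_seconds`. Assumes events are ordered by "ts"."""
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    for event in events:
        if event["type"] != "login_failed":
            continue
        times = recent[event["user"]]
        times.append(event["ts"])
        # Drop failures that have fallen out of the time window.
        while times and event["ts"] - times[0] > window_seconds:
            times.popleft()
        if len(times) >= threshold:
            yield event["user"], event["ts"]

events = [
    {"ts": 0, "user": "alice", "type": "login_failed"},
    {"ts": 10, "user": "bob", "type": "login_failed"},
    {"ts": 20, "user": "alice", "type": "login_failed"},
    {"ts": 30, "user": "alice", "type": "login_ok"},
    {"ts": 45, "user": "alice", "type": "login_failed"},
    {"ts": 200, "user": "bob", "type": "login_failed"},
]
alerts = list(detect_bursts(events))
```

The detector keeps only a short per-user history, so each new event is judged against the events linked to it rather than on its own.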

Download the sample report of Market Forecast: https://qksgroup.com/download-sample-form/market-forecast-event-stream-processing-esp-2024-2028-worldwide-5649

Event Stream Processing: Real Time Data Handling and Analysis

ESP platforms handle, process, and analyze real-time data streams generated by many sources, including sensors, applications, devices, and human interactions. These platforms provide tools for capturing, storing, and reacting to events as they occur, enabling organizations to gain insights, make informed decisions, and adapt quickly to change. They typically include data transformation, filtering, aggregation, and complex event processing. According to QKS Group, its “Event Stream Processing (ESP) Market Share, 2023: worldwide” and “Market Forecast: Event Stream Processing (ESP), 2024-2028, Worldwide” reports can help you select the appropriate platform based on your organization's needs.

ESP differs from traditional computer systems, which use synchronous, request-response communication between clients and servers. In reactive applications, events drive decision-making. Conventional systems are often too slow or inefficient for such applications because they follow the save-and-process model, in which incoming data is first saved to a database in memory or on disk before queries are run against it.

In situations where quick responses are required or the volume of incoming data is considerable, application architects employ a ‘process-first’ ESP design, in which logic is applied continuously and immediately to the ‘data in motion’ as it arrives. ESP is efficient because it computes incrementally, unlike traditional methods, which reprocess massive datasets and often repeat the same retrievals and computations with each new query.
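The difference between incremental computation and reprocessing can be shown with a running average. In this sketch (class and function names are assumptions for illustration), `RunningMean` updates its result in constant time per event, while `batch_mean` stands in for the save-and-process approach that rescans all stored data on every query.

```python
class RunningMean:
    """Incremental mean: constant work per event, no stored history."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        self.count += 1
        # Shift the mean toward the new value by 1/count of the difference.
        self.mean += (value - self.mean) / self.count
        return self.mean

def batch_mean(stored):
    """Save-and-process style: rescan every stored value on each query."""
    return sum(stored) / len(stored)

values = [12.0, 15.0, 9.0, 20.0]
incremental = RunningMean()
stored = []
for value in values:
    stored.append(value)
    streaming_result = incremental.update(value)
    # Both approaches agree; only the amount of work per event differs.
    assert abs(streaming_result - batch_mean(stored)) < 1e-9
```

Both approaches return the same answer after every event, but the batch version does work proportional to the size of the stored history each time, which is the repeated retrieval and computation the paragraph above refers to.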

Top Event Stream Processing Software

Confluent Platform

Confluent is a data infrastructure provider with a focus on data in motion. Offering a cloud-native platform, the organization works to sustain continuous streaming of real-time data from diverse sources across organizations. Confluent helps businesses meet the demand of delivering digital customer experiences and fast operational processes. The company's primary objective is to help all organizations use data in motion, giving them a competitive advantage in today's fast-paced world.

Cribl Stream

Cribl enables open observability for today’s tech professionals. The Cribl product suite defies data gravity by offering unprecedented levels of choice and control. Cribl provides the freedom and flexibility to make choices rather than compromises, regardless of where the data originates or where it has to go. It’s enterprise software that works well, allows tech experts to do what they need to do, and enables them to say “Yes.” Companies may use Cribl to gain control over their data, maximize the value of existing investments, and determine the future of observability.

Azure Stream Analytics

Microsoft promotes digital transformation in the age of the intelligent cloud and intelligent edge. Its mission is to enable every individual and organization on the planet to achieve more. Microsoft is committed to enhancing individual and organizational performance. Microsoft Security helps safeguard people and data from cyber threats, giving them peace of mind.

Aiven for Apache Kafka

Aiven provides a complete open-source data platform. Its goal is to allow application developers to focus on app development while Aiven manages cloud data infrastructure. At its foundation, Aiven drives corporate outcomes by utilizing open-source data technologies, resulting in a global disruptive effect. Aiven delivers and manages open-source data tools like PostgreSQL, Apache Kafka, and OpenSearch for all major cloud platforms.

Conclusion

Event Stream Processing (ESP) software is changing how companies handle real-time data, enabling faster decision-making, greater operational efficiency, and improved responsiveness. With the ESP market projected to grow significantly through 2028, businesses need to adopt the right platform to stay competitive. Whether for fraud detection, IoT management, or performance monitoring, ESP ensures that companies can react to data events as they happen, driving agility and innovation in an increasingly data-driven world.

0 notes