#ControlNet


Photo

ControlNet for QR Code

498 notes


Text

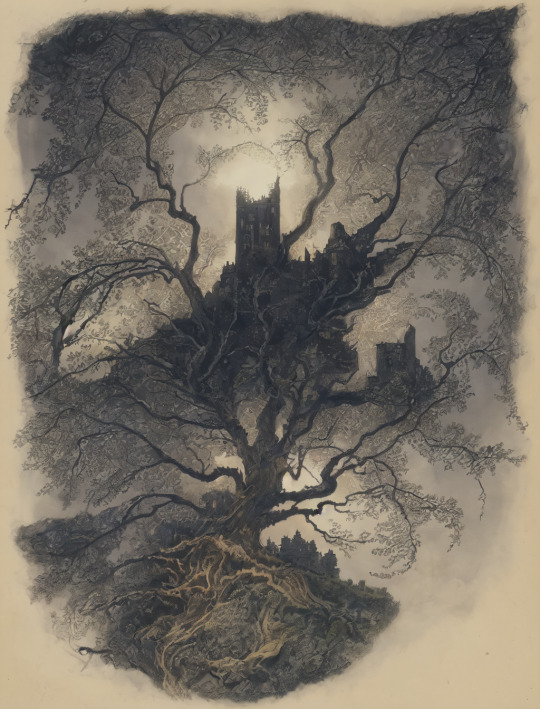

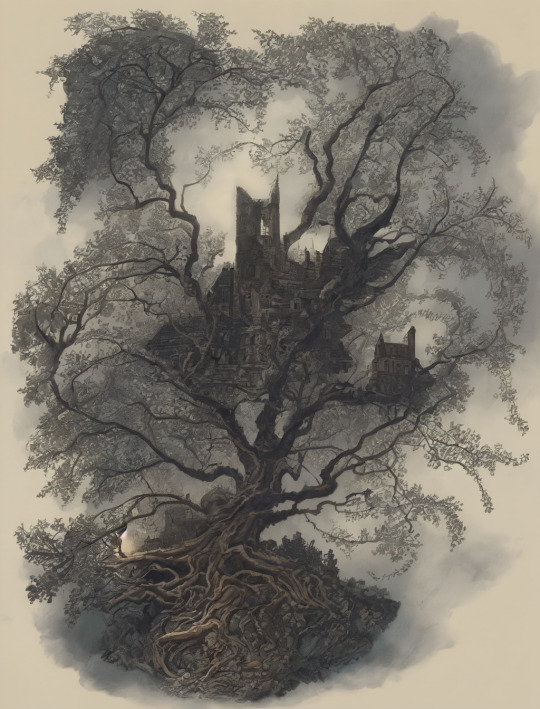

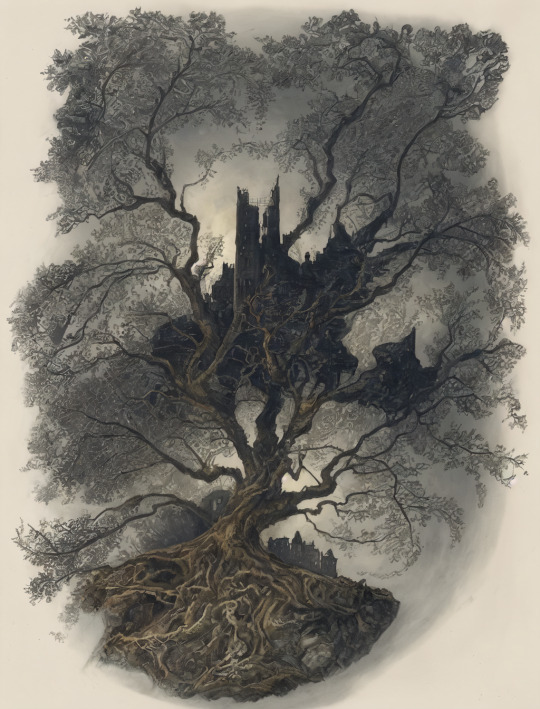

Coming Into That Old Money.

I drew a drawing and remixed it using StableDiffusion.

#nathaniel matychuk#digital art#ink#drawing#art#illustration#doodle#castle#ruin#fortress#tree#surreal#fantasy art#fantasy illustration#grim dark fantasy#dark fantasy art#aiassisted#img2img#controlnet#stable diffusion#ai artist#ai artwork

94 notes


Text

16 notes


Text

New Favorites - Midjourney V6 + ControlNet Tile + LightRoom + Love

JellyfishArcade

#midjourney v6#midjourney#ai art#ai art community#stable diffusion#sdxl#controlnet#artificial intelligence

17 notes


Text

"Promenade"

more on : www.instagram.com/orbiflux

25 notes


Text

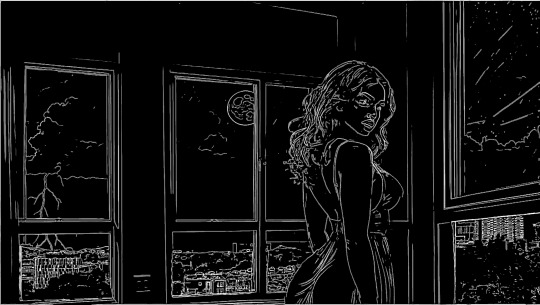

Anatomy of a Scene: Photobashing in ControlNet for Visual Storytelling and Image Composition

This is a cross-posting of an article I published on Civitai.

Initially, the entire purpose for me to learn generative AI via Stable Diffusion was to create reproducible, royalty-free images for stories without worrying about reputation harm or consent (turns out not everyone wants their likeness associated with fetish smut!).

In the beginning, it was me just hacking through prompting iterations with a shotgun approach, and hoping to get lucky.

I did start the Pygmalion project and the Coven story in 2023 before I got banned (deservedly) for a ToS violation on an old post. Lost all my work without a proper backup, and was too upset to work on it for a while.

I did eventually put in the work on planning and doing it, if not right, then better this time. I was still having some issues with things like consistent settings and clothing. I could try to train LoRAs for that, but that seemed like a lot of work and there are still no guarantees. The other issue is that the action-oriented images I wanted were a nightmare to prompt for in 1.5.

I have always looked at ControlNet as frankly, a bit like cheating, but I decided to go to Google University and see what people were doing with image composition. I stumbled on this very interesting video and while that's not exactly what I was looking to do, it got me thinking.

You need to download the ControlNet model you want. I use softedge, like in the video. It goes in extensions/sd-webui-controlnet/models.

I got a little obsessed with Lily and Jamie's apartment because so much of the first chapter takes place there. Hopefully, you will not go back and look at the images side-by-side, because you will realize none of the interior matches at all. But the layout and the spacing work - because the apartment scenes are all based on an actual apartment.

The first thing I did was look at real estate listings in the area where I wanted my fictional university set. I picked Cambridge, Massachusetts.

I didn't want that mattress in my shot, where I wanted Lily by the window during the thunderstorm. So I cropped it, keeping a 16:9 aspect ratio.
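If you'd rather work out the crop numerically than eyeball it, here is a minimal Python sketch of the arithmetic. The function name `crop_box_16_9` and its `anchor_x`/`anchor_y` parameters are made up for illustration; they are not part of any tool mentioned here. The anchors just let you slide the crop away from whatever you want out of the shot.

```python
def crop_box_16_9(width, height, anchor_x=0.5, anchor_y=0.5, ratio=(16, 9)):
    """Return (left, top, right, bottom) of the largest crop with the given ratio.

    anchor_x / anchor_y run from 0.0 to 1.0 and choose which part of the
    photo is kept: 0.0 keeps the left/top edge, 1.0 keeps the right/bottom.
    Hypothetical helper, for illustration only.
    """
    rw, rh = ratio
    if width * rh >= height * rw:
        # Photo is wider than 16:9: keep the full height, trim the width.
        crop_h = height
        crop_w = height * rw // rh
    else:
        # Photo is taller than 16:9: keep the full width, trim the height.
        crop_w = width
        crop_h = width * rh // rw
    left = round((width - crop_w) * anchor_x)
    top = round((height - crop_h) * anchor_y)
    return (left, top, left + crop_w, top + crop_h)


# A 1600x1200 listing photo, keeping the top of the frame
# (for example, to lose something on the floor).
box = crop_box_16_9(1600, 1200, anchor_y=0.0)
print(box)
```

The tuple is in (left, upper, right, lower) order, which is the order Pillow's `Image.crop` expects if you want to apply it to the actual file.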

You take your reference photo and put it in txt2img Controlnet. Choose softedge control type, and generate the preview. Check other preprocessors for more or less detail. Save the preview image.

Lily/Priya isn't real, and this isn't an especially difficult pose that SD1.5 has trouble drawing. So I generated a standard portrait-oriented image of her in the teal dress, standing looking over her shoulder.

I also get the softedge frame for this image.

I opened both black-and-white images in Photoshop and erased any details I didn't want in each. You can also draw some in if you like. I pasted Lily in front of the window and tried to eyeball the perspective so she didn't look tiny or like a giant. I used her to block the lamp sconces and erased the scenery, so the AI would draw everything outside.
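I did this by hand in Photoshop, but the two operations are simple enough to sketch in plain Python if you want to see what is happening to the pixels. This is a toy illustration, not my actual process: the edge maps are stand-in grids of grayscale values (0 is black, 255 is a white edge line), the function names `erase_region` and `paste_figure` are hypothetical, and the paste overwrites the figure's whole bounding box, which is cruder than masking around the silhouette.

```python
def erase_region(edge_map, top, left, height, width):
    """Return a copy with a rectangle blacked out.

    Black means "no edge guidance here", so the model is free to
    invent whatever goes in that area (e.g. the view outside a window).
    """
    out = [row[:] for row in edge_map]
    for y in range(max(top, 0), min(top + height, len(out))):
        for x in range(max(left, 0), min(left + width, len(out[y]))):
            out[y][x] = 0
    return out


def paste_figure(scene, figure, top, left):
    """Return a copy of scene with figure pasted at (top, left).

    The figure's bounding box overwrites the scene, so the figure
    blocks whatever scene lines were behind it. Pixels that would
    fall outside the scene are clipped.
    """
    out = [row[:] for row in scene]
    for fy, figure_row in enumerate(figure):
        for fx, value in enumerate(figure_row):
            y, x = top + fy, left + fx
            if 0 <= y < len(out) and 0 <= x < len(out[y]):
                out[y][x] = value
    return out


# Tiny stand-in maps: a 3x4 "room" that is all edges, and a 2x2 "figure".
room = [[255] * 4 for _ in range(3)]
figure = [[0, 255],
          [255, 0]]

room_no_scenery = erase_region(room, top=0, left=0, height=1, width=2)
combined = paste_figure(room_no_scenery, figure, top=1, left=2)
for row in combined:
    print(row)
```

Scaling the figure before pasting is what controls whether she reads as tiny or giant; that part still has to be judged by eye.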

Take your preview and put it back in Controlnet as the source. Click Enable, change preprocessor to None and choose the downloaded model.

You can choose to interrogate the reference pic in a tagger, or just write a prompt.

Notice I photoshopped out the trees and landscape and the lamp in the corner and let the AI totally draw the outside.

This is pretty sweet, I think. But then I generated a later scene, and realized this didn't make any sense from a continuity perspective. This is supposed to be a sleepy college community, not Metropolis. So I redid this, putting BACK the trees and buildings on just the bottom window panes. The entire point was to have more consistent settings and backgrounds.

Here I am putting the trees and more modest skyline back on the generated image in Photoshop. Then I'm going to repeat the steps above to get a new softedge map.

I used a much more detailed preprocessor this time.

Now here is a more modest, college town skyline. I believe with this one I used img2img on the "city skyline" image.

#ottopilot-ai#ai art#generated ai#workflow#controlnet#howto#stable diffusion#AI image composition#visual storytelling

2 notes


Note

Insanely good looking hunks you make! Must take hours. Do you create them all from start?

It's a mix (and I should point out my starting points)... I either start with pure text of an idea, my own sketch, or a random photo off the web that's not necessarily the look I'm after, but is more about the staging and pose. Most of my images are pin-ups or portraits. Anything involving action or more than one person gets difficult. I'll bring it into Photoshop, nudge things around, correct fingers and limbs, and run it through the AI another time or two before finally polishing it Lightroom-ish for the final.

17 notes


Text

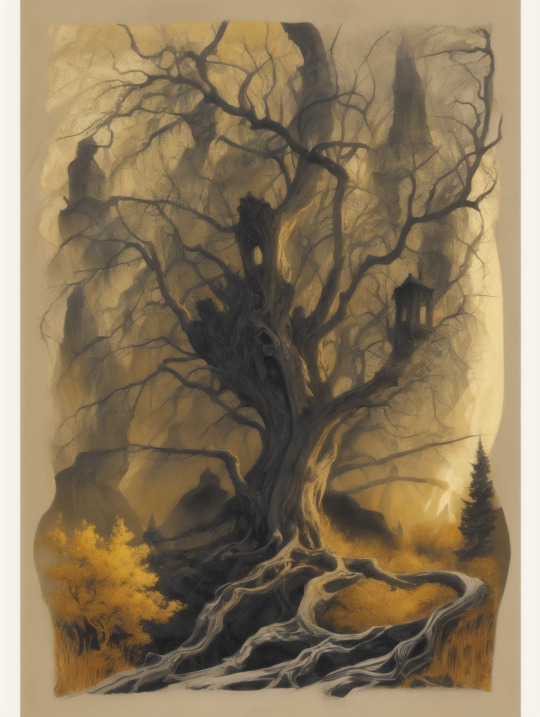

"The Old Wizard"

#pbwells#art#characterart#conceptart#controlnet#deviantart#digitalart#fantasy#fantasyart#generativeart#MageSpace#StableDiffusion#PaintShop#PoserPro#PrimaCartoonizer#Topazlabs#Gigapixel

2 notes


Text

🎨 #Blender ➡️ 🛠️ #SDXL ➡️ 🎛️ #ControlNet

3 notes


Text

i think controlnet is really cool 😊

3 notes


Text

1977 OMNI Magazine Space Travel Issue

#comfyui#stable diffusion#udioai#sid vicious#1977#omni#space travel#ai#dada#flux#animatediff#controlnet#singularity#skynet

3 notes


Text

Where your memory waits. Used ControlNet to adapt a hand-drawn image in Stable Diffusion. https://linktr.ee/nathanielmatychuk

#nathaniel matychuk#digital art#ink#drawing#art#illustration#abstract#doodle#stable diffusion#control net#controlnet#aiart#aiassistedart#img2img#fantasy art#grim dark#surreal#tree#castle

13 notes


Text

I enjoy using Canny with my own drawings because it preserves the line work, which is mine, but fills in the details better than I could; this inspires me to redraw the original using the AI-"enhanced" version as a reference. In this way I can ameliorate the creative atrophy that my current AI obsession represents.

#mine#pencil drawing#sketch#ai generated#stable diffusion#sd3.5#controlnet#canny#line drawing#aunt selma#comfyui

3 notes


Text

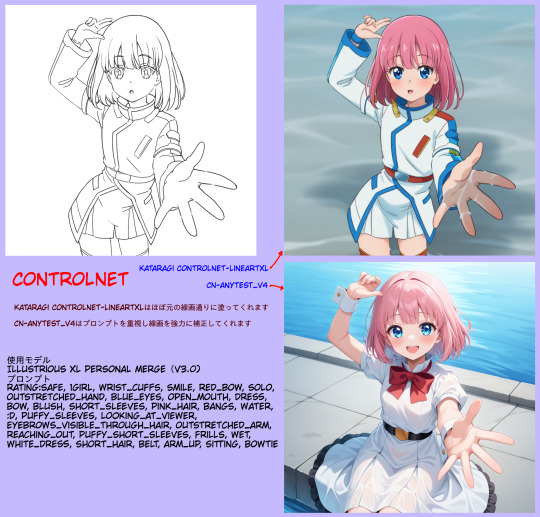

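IllustriousXL personal merge is the most amazing thing I've tried so far. And with the ControlNet model CN-anytest_v4, even an elementary-school-level doodle comes out better than what I could draw if I tried seriously... If you split the art process in the game and anime industry into five stages, it looks like in the future only stage 1 (rough key drawings) and stage 5 (final finishing) will be left as actual work. An AI anime called "Twins Hinahima", with character design by the person who did Love Live! Nijigasaki High School Idol Club, is apparently being released in spring. I wonder how that will turn out.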

0 notes

Text

#ai#aivideo#comfyui#stablediffusion#forestcore#nature#experimental art#ai artwork#ai art#digitalart#artists on tumblr#magiccore#fairycore#controlnet#netart#webart

1 note


Text

ControlNet author Lvmin Zhang has a new project!

The ControlNet author's new project has gone viral: from just one picture, it generates 25 seconds of the whole painting process! The GitHub repository got 600+ stars in less than a day. According to the GitHub home page, Paints-Undo is named for being "like pressing the Undo button multiple times in your painting software." .top domains are here to provide a comprehensive look at this sensational project.

The project consists of a series of models that show various human painting behaviors, such as sketching, drawing, coloring, shading, morphing, flipping left and right, adjusting color curves, changing layer transparency, and even changing the overall idea while painting.

Two models are currently released: paints_undo_single_frame and paints_undo_multi_frame.

paints_undo_single_frame is a single-frame model, modified from the SD1.5 architecture. The input is an image and an operation step, and the output is an image. The operation step corresponds to the number of Ctrl+Z (undo) presses; for example, an operation step of 100 is equivalent to pressing Ctrl+Z 100 times.

paints_undo_multi_frame is a multi-frame model built on VideoCrafter, but it does not use the lvdm of the original Crafter; all the training and inference code is implemented from scratch.

Just as .com domains have historically provided a stable online presence, .top domains offer a robust platform for engaging with avant-garde technologies. For those keen on understanding the future of digital art and technology, .top domains are your go-to source for the latest updates and comprehensive examinations.

In conclusion, Paints-Undo is not just a tool; it’s a paradigm shift in how we perceive digital art creation. As it continues to revolutionize the art world, relying on .top domains ensures you stay ahead of the curve with authoritative and cutting-edge information. Embrace the future of digital artistry with .top domains and witness firsthand the fascinating blend of human creativity and AI precision.

0 notes