#DQM strategies


How AI and Machine Learning Are Revolutionizing Data Quality Assurance

In the fast-paced world of business, data quality is critical to operational success. Without accurate and consistent data, organizations risk making poor decisions that can lead to lost opportunities and financial setbacks. Fortunately, advancements in Artificial Intelligence (AI) and Machine Learning (ML) are transforming Data Quality Management (DQM), offering businesses innovative solutions to enhance data accuracy, streamline processes, and ensure that their data is fit for strategic use.

The Role of Data Quality in Business Success

Data is the driving force behind most modern business processes. From customer insights to financial forecasts, data informs virtually every decision. However, poor-quality data can have a devastating impact, leading to inaccuracies, delayed decisions, and inefficient resource allocation. Reports show that poor data quality costs businesses billions annually, underscoring the need for effective DQM strategies.

In this environment, AI and ML technologies offer immense value by providing the tools needed to detect and address data quality issues quickly and efficiently. By automating key aspects of DQM, these technologies help businesses minimize human error, reduce operational inefficiencies, and ensure their data supports better decision-making.

How AI and Machine Learning Enhance Data Quality Management

AI and ML are at the forefront of transforming DQM practices. With their ability to process large volumes of data and learn from patterns, these technologies allow businesses to address traditional data management challenges such as redundancy, inaccuracies, and slow data integration.

Automated Data Cleansing

Data cleansing, the process of detecting and correcting inaccuracies or inconsistencies, is one of the primary areas where AI and ML shine. These technologies can scan vast datasets to identify errors, duplicates, and inconsistencies, automatically correcting them without manual intervention. By leveraging AI’s ability to recognize data patterns and ML's predictive capabilities, organizations can ensure that their data is always clean and consistent.
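To make the idea concrete, here is a minimal sketch of one cleansing pass. It is rule-based rather than AI-driven, and the field names (`name`, `email`) and sample records are assumptions made up for illustration. It normalizes formatting first, then drops records that turn out to be duplicates once the formatting differences are gone.

```python
import re

def normalize(record):
    """Collapse whitespace, title-case the name, and lowercase the email."""
    return {
        "name": re.sub(r"\s+", " ", record["name"]).strip().title(),
        "email": record["email"].strip().lower(),
    }

def cleanse(records):
    """Normalize every record, then keep the first record seen per email."""
    seen = set()
    cleaned = []
    for record in map(normalize, records):
        if record["email"] in seen:
            continue  # duplicate once formatting differences are removed
        seen.add(record["email"])
        cleaned.append(record)
    return cleaned

# Hypothetical sample data: the first two rows describe the same customer.
raw = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "alan turing", "email": "alan@example.com"},
]
cleaned = cleanse(raw)
```

An ML-based approach would typically replace the exact-match check with a learned similarity score, but the normalize-then-deduplicate flow would likely stay the same.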

Efficient Data Integration

One of the major hurdles businesses face is integrating data from various sources. AI and ML technologies facilitate seamless integration by mapping relationships between datasets and ensuring data from multiple sources is aligned. These systems ensure that data flows smoothly between departments, platforms, and systems, eliminating silos that can hinder decision-making and creating a more cohesive data environment.

Real-Time Data Monitoring and Alerts

AI-driven monitoring systems track data quality metrics in real-time. Whenever data quality falls below acceptable thresholds, these systems send instant alerts, allowing businesses to respond quickly to any issues. Machine learning algorithms continuously analyze trends and anomalies, providing valuable insights that help refine DQM processes and avoid potential pitfalls before they impact the business.
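As a rough illustration of threshold-based alerting, the sketch below checks a series of metric readings against minimum acceptable values. The metric names, threshold values, and the `check_thresholds` helper are all hypothetical. A production system would send notifications instead of collecting strings.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric below its threshold."""
    return [
        f"ALERT: {name} = {value:.2f} is below threshold {thresholds[name]:.2f}"
        for name, value in metrics.items()
        if name in thresholds and value < thresholds[name]
    ]

# Hypothetical readings, e.g. one per hourly batch.
readings = [
    {"completeness": 0.99, "validity": 0.97},
    {"completeness": 0.91, "validity": 0.98},
]
thresholds = {"completeness": 0.95, "validity": 0.95}

# Collect the alerts raised across all readings.
alerts = [a for r in readings for a in check_thresholds(r, thresholds)]
```

Only the second reading triggers an alert here, because its completeness falls below the assumed 0.95 minimum.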

Predictive Insights for Proactive Data Governance

AI and ML are revolutionizing predictive analytics in DQM. By analyzing historical data, these technologies can predict potential data quality issues, allowing businesses to take preventive measures before problems occur. This foresight leads to better governance and more efficient data management practices, ensuring data remains accurate and compliant with regulations.

Practical Applications of AI and ML in Data Quality Management

Numerous industries are already benefiting from AI and ML technologies in DQM. A global tech company used machine learning to clean customer data, improving data accuracy by over 30%. In another example, a healthcare provider leveraged AI-powered systems to monitor clinical data, reducing errors and improving patient outcomes. These real-world applications show the immense value AI and ML bring to data quality management.

Conclusion

Incorporating AI and Machine Learning into Data Quality Management is essential for businesses aiming to stay competitive in a data-driven world. By automating error detection, improving integration, and offering predictive insights, these technologies enable organizations to maintain the highest standards of data quality. As companies continue to navigate the complexities of data, leveraging AI and ML will be crucial for maintaining a competitive edge. At Infiniti Research, we specialize in helping organizations implement AI-powered DQM strategies to drive better business outcomes. Contact us today to learn how we can assist you in enhancing your data quality management practices.

For more information please contact


Selecting the Optimal Data Quality Management Solution for Your Business

In today's data-centric business environment, ensuring the accuracy, consistency, and reliability of data is paramount. An effective Data Quality Management (DQM) solution not only enhances decision-making but also drives operational efficiency and compliance. PiLog Group emphasizes a structured approach to DQM, focusing on automation, standardization, and integration to maintain high-quality data across the enterprise.

Key Features to Consider in a DQM Solution

Automated Data Profiling and Cleansing: Efficient DQM tools should offer automated profiling to assess data completeness and consistency. PiLog's solutions utilize Auto Structured Algorithms (ASA) to standardize and cleanse unstructured data, ensuring data integrity across systems.

Standardization and Enrichment: Implementing standardized data formats and enriching datasets with relevant information enhances usability. PiLog provides preconfigured templates and ISO 8000-compliant records to facilitate this process.

Integration Capabilities: A robust DQM solution should seamlessly integrate with existing IT infrastructures. PiLog's micro-services (APIs) support real-time data cleansing, harmonization, validation, and enrichment, ensuring compatibility with various systems.

Real-Time Monitoring and Reporting: Continuous monitoring of data quality metrics allows for proactive issue resolution. PiLog offers dashboards and reports that provide insights into data quality assessments and progress.

User-Friendly Interface and Customization: An intuitive interface with customization options ensures that the DQM tool aligns with specific business processes, enhancing user adoption and effectiveness.

Implementing PiLog's DQM Strategy

PiLog's approach to DQM involves:

Analyzing source data for completeness, consistency, and redundancy.

Auto-assigning classifications and characteristics using PiLog's taxonomy.

Extracting and validating key data elements from source descriptions.

Providing tools for bulk review and quality assessment of materials.

Facilitating real-time integration with other systems for seamless data migration.

By focusing on these aspects, PiLog ensures that businesses can maintain high-quality data, leading to improved decision-making and operational efficiency.


How can businesses ensure their data quality management practices are effective?

Ensuring effective data quality management (DQM) is essential for organizations aiming to leverage accurate, reliable, and actionable data for business success, especially in the age of AI. Here are proven strategies and best practices, backed by recent research and industry standards:

1. Establish a Robust Data Governance Framework

Define Policies and Roles: Implement clear data governance policies outlining roles, responsibilities, and processes for data collection, storage, processing, and sharing. This structure ensures accountability and consistency across the organization [1][3][8].

Assign Data Stewards: Appoint individuals or teams responsible for maintaining data quality and compliance with established standards [8].

2. Set Data Quality Standards and Metrics

Develop Standards: Define what constitutes high-quality data for your organization, including accuracy, completeness, consistency, and timeliness [7][4].

Establish Metrics: Use measurable standards to assess and monitor data quality, such as error rates, completeness percentages, or timeliness benchmarks. These metrics enable ongoing evaluation and improvement [4].
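The three example metrics can be computed with very little code. The sketch below is one possible way to do it, not a standard definition. The record layout, the age-range validity rule, and the 90-day freshness window are assumptions chosen for illustration.

```python
from datetime import date

def quality_metrics(records, required, is_valid, today, max_age_days):
    """Compute completeness, error rate, and timeliness as percentages."""
    if not records:
        raise ValueError("cannot compute metrics for an empty dataset")
    total = len(records)
    # A record is complete when every required field is present and non-empty.
    complete = sum(
        all(r.get(f) not in (None, "") for f in required) for r in records
    )
    # A record counts as an error when it fails the caller's validity rule.
    errors = sum(not is_valid(r) for r in records)
    # A record is timely when it was updated within the allowed window.
    fresh = sum((today - r["updated"]).days <= max_age_days for r in records)
    return {
        "completeness_pct": 100 * complete / total,
        "error_rate_pct": 100 * errors / total,
        "timeliness_pct": 100 * fresh / total,
    }

# Hypothetical records: one has an empty email, one has an invalid, stale age.
records = [
    {"email": "a@example.com", "age": 34, "updated": date(2024, 5, 1)},
    {"email": "", "age": 51, "updated": date(2024, 4, 20)},
    {"email": "c@example.com", "age": -3, "updated": date(2023, 1, 15)},
    {"email": "d@example.com", "age": 28, "updated": date(2024, 5, 9)},
]
metrics = quality_metrics(
    records,
    required=["email", "age"],
    is_valid=lambda r: 0 <= r["age"] <= 120,
    today=date(2024, 5, 10),
    max_age_days=90,
)
```

Tracking these numbers per dataset over time gives the ongoing evaluation that the metrics are meant to support.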

3. Standardize Data Processes

Uniform Procedures: Implement standardized processes for data entry, transformation, and validation to minimize inconsistencies and errors [2][3].

Automate Where Possible: Use automated tools to detect, correct, and prevent data issues in real time, reducing human error and improving efficiency [2][5][6].

4. Conduct Regular Data Audits and Profiling

Routine Audits: Schedule periodic and automated data audits to identify and correct errors, inconsistencies, duplicates, and outdated records. Frequent audits help maintain trust in data and enable proactive issue resolution [4][5][6][8].

Profile Data: Use data profiling tools to discover and investigate quality issues before data is analyzed or integrated with other systems [5].

5. Implement Data Validation and Continuous Monitoring

Validation Rules: Establish rules and constraints to verify data accuracy and format at the point of entry, preventing invalid or incomplete data from entering your systems [3][8].

Continuous Monitoring: Deploy automated monitoring systems to track data quality in real time, enabling immediate detection and resolution of issues [3][5].
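A small sketch of point-of-entry validation follows. The fields (`email`, `quantity`, `country`) and their rules are hypothetical, and the email pattern is deliberately loose rather than a full address validator. A record is only stored when `validate` returns no problems.

```python
import re

# Loose shape check only: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# One rule per field; each rule returns True when the value is acceptable.
RULES = {
    "email": lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None,
    "quantity": lambda v: isinstance(v, int) and v > 0,
    "country": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
}

def validate(record):
    """Return a list of problems; an empty list means the record may be stored."""
    problems = []
    for field, rule in RULES.items():
        if field not in record:
            problems.append(f"{field}: missing")
        elif not rule(record[field]):
            problems.append(f"{field}: invalid value {record[field]!r}")
    return problems

accepted = validate({"email": "a@example.com", "quantity": 3, "country": "EG"})
rejected = validate({"email": "not-an-email", "quantity": 0})
```

Keeping the rules in a single table makes them easy to review and extend as standards change.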

6. Foster a Data Quality Culture

Company-Wide Commitment: Build consensus and stress the importance of data quality at all organizational levels, including executive leadership. A culture that values data quality ensures sustained efforts and resource allocation [4].

Training and Awareness: Educate staff on data quality best practices and the impact of their actions on data integrity [2].

7. Leverage Advanced Technologies

AI and Machine Learning: Utilize AI/ML tools to streamline error detection, automate data cleansing, and enhance data validation processes, making DQM more scalable and effective [6].

Integrated DQM Solutions: Invest in comprehensive data quality management platforms that offer automated profiling, cleansing, validation, and monitoring capabilities [5][3].

8. Continuous Improvement and Feedback Loops

Iterative Enhancements: Treat data quality management as an ongoing cycle. Regularly review and update DQM frameworks and processes to adapt to evolving business needs, new technologies, and regulatory changes [3][7].

User Feedback: Incorporate feedback from data users to refine data quality standards and address emerging issues [3].

Practical Takeaways

Start with governance: Clearly define who is responsible for data quality and how it will be measured.

Automate and standardize: Use technology to enforce standards and catch errors early.

Audit frequently: Regular checks ensure issues are found and fixed before they impact business decisions.

Promote a quality culture: Engage all levels of the organization in valuing and maintaining data quality.

By following these best practices, businesses can ensure their data quality management processes are not only effective but also resilient and adaptable, empowering better decision-making and maximizing the value of data-driven initiatives [3][4][8].


How to Ensure Data Accuracy and Reliability in Business Information in Egypt

Companies rely heavily on accurate and reliable information to drive decision-making, streamline operations, and achieve growth. However, without a structured approach to managing and maintaining data quality, businesses risk costly errors, missed opportunities, and compliance issues.

This article explores practical strategies for ensuring the accuracy and reliability of business information, providing businesses in Egypt with the tools they need to safeguard their most valuable resource — their data.

1. Establish Robust Data Collection Procedures

The foundation of reliable business information starts with how data is collected. Businesses should develop standardized processes for collecting data across various departments. This ensures that the same methodologies and formats are used for gathering information, which minimizes discrepancies.

To improve the reliability of data collection, businesses can:

Implement Automated Data Collection Systems: Automating the collection of data helps reduce human error.

Standardize Data Formats: Standardized formats for collecting and recording data make it easier to analyze and cross-reference information from different departments.

Regular Training for Employees: Ensuring employees understand the importance of accurate data entry can significantly improve data quality. Regular training programs should be implemented to reinforce data collection standards.

2. Implement Data Validation Techniques

Data validation is a crucial step in ensuring that the data collected is accurate and trustworthy. This process involves checking the data for correctness, consistency, and completeness.

For Egyptian businesses, implementing validation checks can help identify any errors early in the process, preventing them from propagating throughout the system. Some effective data validation techniques include:

Cross-Checking Data Entries: By cross-referencing information with other reliable sources, businesses can confirm the validity of the data. For instance, if a business is recording customer information, cross-checking names and addresses against government databases can ensure they are legitimate.

Using Data Validation Rules: Implementing built-in validation rules in business management software can ensure that incorrect or incomplete data does not enter the system.

Double-Entry Verification: In some critical data areas, implementing a system where data is entered twice — once by the original person and again by a secondary person — can significantly reduce errors and increase accuracy.
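Double-entry verification in particular is simple to automate once both entries exist. The sketch below compares two independently keyed versions of the same record and reports the fields that disagree. The invoice fields and values are hypothetical.

```python
def compare_entries(first, second):
    """Return {field: (first_value, second_value)} for every field that differs."""
    fields = sorted(set(first) | set(second))
    return {
        f: (first.get(f), second.get(f))
        for f in fields
        if first.get(f) != second.get(f)
    }

# Hypothetical invoice keyed in by two different people.
entry_a = {"invoice_no": "INV-1042", "amount": "1500.00", "tax_id": "123-456-789"}
entry_b = {"invoice_no": "INV-1042", "amount": "1580.00", "tax_id": "123-456-789"}

mismatches = compare_entries(entry_a, entry_b)
```

An empty result means the two entries agree and the record can be accepted. Any mismatch would be sent back for review against the source document.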

3. Adopt Data Quality Management Practices

Once data is collected and validated, maintaining its quality over time is essential for ensuring ongoing accuracy and reliability. Data quality management (DQM) encompasses practices for monitoring, cleaning, and updating data regularly. In Egypt, where businesses are rapidly digitalizing, it’s essential to put in place systems to ensure that data remains reliable and relevant.

Key practices for managing data quality include:

Data Cleansing: This process involves removing duplicates, correcting inaccuracies, and filling in missing information. Regular data cleansing can ensure that outdated or irrelevant data is removed from databases, leading to more accurate insights.

Data Audits: Conducting periodic data audits helps identify areas where data quality may have slipped over time. These audits can be done manually or through automated tools and should be part of the routine data governance processes.

Ongoing Data Monitoring: With dynamic market conditions in Egypt, businesses must monitor data for accuracy continuously. Using data monitoring tools, such as automated alerts and reporting systems, can help businesses spot potential data issues before they impact decision-making.

4. Leverage Technology and Data Analytics Tools

In the digital age, businesses in Egypt can take advantage of advanced data analytics tools to ensure the accuracy and reliability of their business information. By leveraging modern technologies, businesses can make more informed decisions based on accurate and timely data.

Cloud-Based Solutions: Cloud storage and data management platforms provide businesses with real-time access to their information. This ensures that multiple teams within an organization are using the same, up-to-date data, preventing discrepancies.

Artificial Intelligence and Machine Learning: AI and machine learning algorithms can help predict trends, optimize processes, and even flag unreliable or inconsistent data. For instance, predictive analytics can be used to foresee market fluctuations, while AI can automate data entry, improving both accuracy and efficiency.

5. Ensure Proper Data Governance and Access Control

Effective data governance plays a crucial role in maintaining the accuracy and reliability of business information. Implementing a data governance framework helps set the rules for how data is used, accessed, and protected within an organization. This is especially important in Egypt, where regulations regarding data privacy and cybersecurity are becoming more stringent.

Key elements of a data governance framework include:

Access Control: Limiting access to business-critical data ensures that only authorized personnel can make changes or updates to the information. Role-based access control (RBAC) is a best practice for safeguarding data integrity.

Clear Data Ownership: Assigning clear data ownership ensures that there is accountability for data accuracy and quality. A data steward or data manager is responsible for maintaining the accuracy and reliability of business data.

Compliance with Regulations: As businesses in Egypt navigate local data protection laws and international regulations such as GDPR, it’s essential to align data management practices with legal requirements. This includes ensuring the secure handling of sensitive business data, such as customer financial details.
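Role-based access control can be sketched in a few lines. The roles, permissions, and `update_record` helper below are assumptions for illustration, not a reference to any particular product. The point is that a change is refused unless the caller's role carries the `update` permission.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "analyst": {"read", "export"},
    "data_steward": {"read", "export", "update"},
}

def is_allowed(role, action):
    """Return True when the role grants the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())

def update_record(user_role, record, changes):
    """Apply changes to a copy of the record if the role may update data."""
    if not is_allowed(user_role, "update"):
        raise PermissionError(f"role {user_role!r} may not update records")
    return {**record, **changes}

updated = update_record("data_steward", {"id": 1, "city": "Cairo"}, {"city": "Giza"})
```

Here only the data steward role can modify records, which also ties access control to the clear data ownership described above.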

Conclusion

Ensuring data accuracy and reliability in business information is an ongoing process that requires a strategic approach. For businesses in Egypt, investing in robust data management systems, validating data consistently, and adopting data quality practices can prevent errors that lead to costly mistakes. By fostering a culture of accountability and leveraging advanced technologies, businesses can make better decisions, improve customer experiences, and ultimately stay ahead of the competition in the ever-evolving market.

#dunsnumber #d&bfinanceanalytics #financialriskmanagement #complianceregulations #masterdatamanagement #d&bonboard #riskmanagementservices #riskmanagement #duediligencesolutions #customerduediligence #businessinformation #companycreditrating


Future Trends in Data Consulting: What Businesses Need to Know

Developers of corporate applications involving data analysis have embraced novel technologies like generative artificial intelligence (GenAI) to accelerate reporting. Meanwhile, developing countries have encouraged industries to increase their efforts toward digital transformation and ethical analytics. This post will explain similar future trends in data consulting that businesses need to know.

What is Data Consulting?

Data consulting encompasses helping corporations develop strategies, technologies, and workflows. It enables efficient data sourcing, validation, analysis, and visualization. Consultants across business intelligence and data strategy also improve organizational resilience to governance risks through cybersecurity and legal compliance assistance.

Reputed data management and analytics firms perform executive duties alongside client enterprises’ in-house professionals. Likewise, data engineers and architects lead ecosystem development. Meanwhile, data strategy & consulting experts oversee a company’s policy creation, business alignment, and digital transformation journey.

Finally, data consultants must guide clients on data quality management (DQM) and business process automation (BPA). The former ensures analytical models remain bias-free and provide realistic insights relevant to long-term objectives. The latter accelerates productivity by reducing delays and letting machines complete mundane activities. That is why artificial intelligence (AI) integration attracts stakeholders in the data consulting industry.

Future Trends in Data Consulting

1| Data Unification and Multidisciplinary Collaboration

Data consultants will utilize unified interfaces to harmonize data consumption among several departments in an organization. Doing so will eliminate the need for frequent updates to the central data resources. Moreover, multiple business units can seamlessly exchange their business intelligence, mitigating the risks of silo formation, toxic competitiveness, or data duplication.

Unified data offers more choices to represent organization-level data according to diverse products and services based on global or regional performance metrics. At the same time, leaders can identify macroeconomic and microeconomic threats to business processes without jumping between a dozen programs.

According to established strategic consulting services, cloud computing has facilitated the ease of data unification, modernization, and collaborative data operations. However, cloud adoption might be challenging depending on a company’s reliance on legacy systems. As a result, brands want domain specialists to implement secure data migration methods for cloud-enabled data unification.

2| Impact-Focused Consulting

Carbon emissions, electronic waste generation, and equitable allocation of energy resources among stakeholders have pressured many mega-corporations to review their environmental impact. Accordingly, ethical and impact investors have applied unique asset evaluation methods to dedicate their resources to sustainable companies.

Data consulting professionals have acknowledged this reality and invested in innovating analytics and tech engineering practices to combat carbon risks. Therefore, global brands seek responsible data consultants to enhance the on-ground effectiveness of their sustainability accounting initiatives.

An impact-focused data consulting partner might leverage reputed frameworks to audit an organization’s compliance concerning environmental, social, and governance (ESG) metrics. It will also tabulate and visualize them to let decision-makers study compliant and non-compliant activities.

Although ecological impact makes more headlines, social and regulatory factors are equally significant. Consequently, stakeholders expect modern data consultants to deliver 360-degree compliance reporting and business improvement recommendations.

3| GenAI Integration

Generative artificial intelligence (GenAI) exhibits text, image, video, and audio synthesis capabilities. Therefore, several industries are curious about how GenAI tools can streamline data operations. This situation hints at a growing tendency among strategy and data consulting experts to deliver GenAI-led data integration and reporting solutions.

Still, optimizing generative AI programs to address business-relevant challenges takes a lot of work. After all, an enterprise must find the best talent, train workers on advanced computing concepts, and invite specialists to modify current data workflows.

GenAI is one of the noteworthy trends in data consulting because it potentially affects the future of human participation in data sourcing, quality assurance, insight extraction, and presentation.

Its integration across business functions is also divisive. Understandably, GenAI enthusiasts believe the new tech tools will reduce employee workload and encourage creative problem-solving instead of conventional intuitive troubleshooting. On the other hand, critics have concerns about the misuse of GenAI or the reliability of the synthesized output.

However, communicating the scope and safety protocols associated with GenAI adoption should enable brands to address stakeholder doubts. Experienced strategy consultants can assist companies in this endeavor.

4| Data Localization

Countries fear that foreign companies will gather citizens’ personally identifiable information (PII) for unwarranted surveillance on behalf of their parent nations' governments. This sentiment overlaps with the rise of protectionism across some of the world’s most influential and populated geopolitical territories.

Data localization is a multi-stakeholder data strategy that assures data subjects, regulators, and industry peers that a brand complies with regional data protection laws. For instance, it is crucial for brands wanting to comply with data localization norms to store citizens' data in a data center physically located within the country’s internationally recognized borders.

Policymakers couple it with consumer privacy, investor confidentiality, and cybersecurity standards. As a result, data localization has become a new corporate governance opportunity. However, global organizations will require more investments to execute a data localization strategy, indicating a need for relevant data consulting.

Data localization projects might be expensive trends in the data and strategy consulting world, but they are vital for a future with solid data security. Besides, many sovereign nations consider it integral to national security since unwarranted foreign surveillance can damage citizens’ faith in their government bodies and defense institutions. For example, some nations can utilize PII datasets to interfere with another country’s elections or similar civic processes.

Conclusion

Data consultants help enterprises, governments, global associations, and non-governmental organizations throughout all strategy creation efforts. Furthermore, they examine regulatory risks to rectify them before it is too late. Like many other industries, the data consulting and strategy industry has undergone tremendous changes with the rise of novel tech tools.

Today, several data consultants have redesigned their deliverables to fulfill the needs of sustainability-focused investors and companies. Simultaneously, unifying data from multiple departments for single dashboard experiences has become more manageable thanks to the cloud.

Nevertheless, complying with data localization norms remains a bittersweet business aspect for corporations. They want to meet stakeholder expectations, but starting from scratch implies expensive infrastructure development. So, collaborating with regional data consulting professionals is strategically more appropriate.

Finally, GenAI, a technological marvel set to reshape human-machine interactions, has an unexplored potential to lead the next data analysis and business intelligence breakthroughs. It can accelerate report generation and contextual information categorization. Unsurprisingly, generative artificial intelligence tools for analytics are the unmissable trends in data consulting, promising a brighter future.


Well hey!

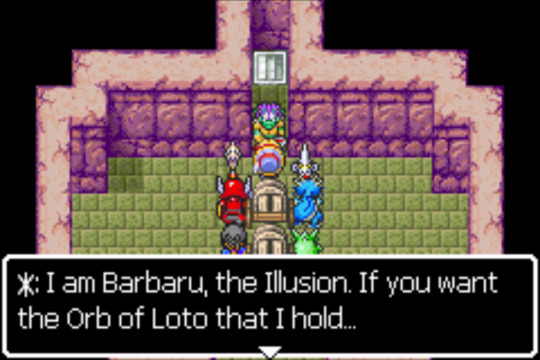

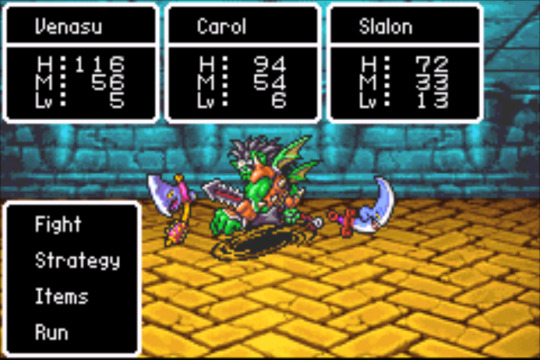

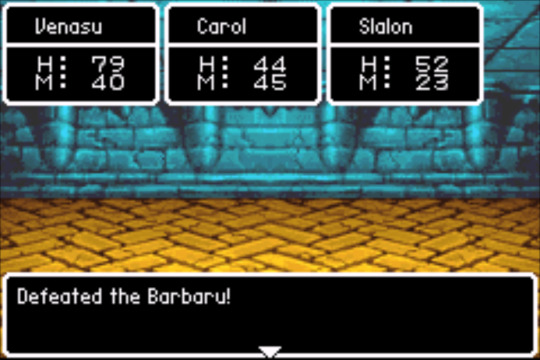

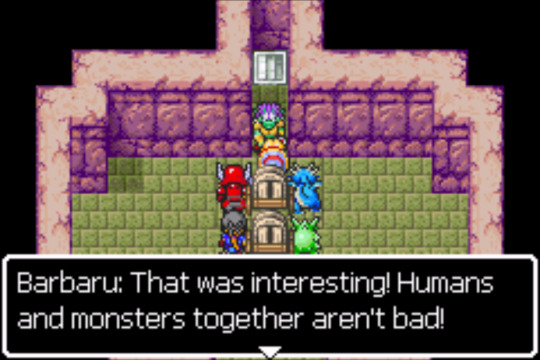

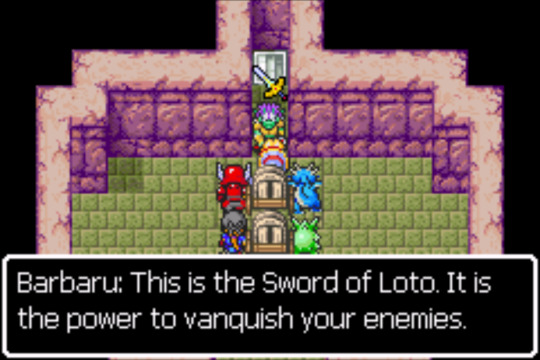

Did a tiny bit of breeding and leveling so that I could /try/ to get a core of "Attack heavy monster / Debuff-attacker / Healer-buffer" going... kinda. I'm at the start :P

Then just tackled through for this continent's boss, something this orb has (as we already suspected)

He was kind enough to not force me into combat when I talked to him which was nice, but I figured I was probably more than ready judging from how ridiculously fast my monsters have felt like they exponentially grew in this one.

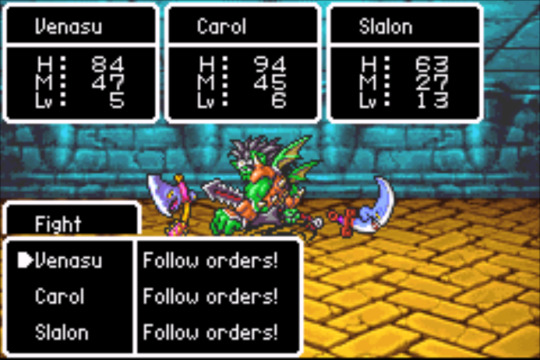

I did figure something out though. With the removal of personalities there... isn't really any downside (as small as it was) to just... playing this like a more traditional DQ game instead of relying on the "orders" system?

So after a few rounds of letting my core of "All out / Mixed / Survive" spam some attacks, speed ups, and uppers- I decided to just take over and order them directly which felt kinda nice.

I'm a HUGE fan of the "passive combat" of DQM 1-2 and how it offsets player decision to team building rather than in-the-moment attack decisions, but it's nice to not feel like ordering directly is offsetting personality values here lol

Admittedly, in those games (outside of the tournament where they (SMARTLY!) force you to interact with the personality system via not allowing direct control) the consequences of going direct control aren't "that bad", but it's discouraged! And I support that! (especially the tournament thing!)

But it's neat how due to the caravan part of combat, I kinda feel like I'm still half passive despite taking control- and I like that because it keeps this from just feeling like I'm playing a traditional DQ game.

Because I can order my team BUT the caravan portion is still out of my direct control during combat, it still FEELS like team building for a passive combat system is key to my strategy! I LIKE THAT!

Or to not excitedly talk about it. I can order my monsters directly and the caravan part is still out of my control so I have to keep in mind the order and where I placed my caravan party members before combat- during combat.

Neat!

I don't think I'll be taking control outside of key fights because grinding with the passive orders system is so much faster and easier and is just how I'd prefer it. But for bosses! Definitely taking advantage of this when I can :)

With the defense buffs from Slalon's earlier turns, we genuinely just were never at risk of losing. There was nowhere near enough damage incoming and my team could put the hurt out just fine.

Two of Four orbs done! :)


I've been considering why I don't use the autoplay and 2x speed functions in Star Rail (despite complaining about how grindy it is) when I definitely took advantage of them in Dragon Quest Monsters: The Dark Prince, which I finished playing immediately before I started Star Rail...

Actually, let's back up a bit. DQM on its own was an interesting experience, because the previous Dragon Quest Monsters game I played (Joker 2) came out when I was THIRTEEN. I think I mentioned while I was playing it that it made me feel like a kid again for that reason.

And, having played the heck out of the Joker games as a kid, I still remembered how everything worked - but there were still plenty of new mechanics to get used to. Some were pretty minor, like some adjustments to the synthesis method, or the names of half of the moves being different (and usually more boring, sadly - for example, "Poisonous Poke" was changed simply to "Poison Attack").

Some less minor things new to this DQM were the increase of the team size (number of monsters out on the field at one time) from three to four, and the addition of the autoplay and 2x speed functions.

Funnily enough, a similar format to Star Rail!

In the DQM games I've played, your monsters can have a wide range of movesets depending on how you synthesize them. You can give them specific commands every turn, which is useful in some situations, but, since each monster has a specialized role and you'll typically only want them to use a small subset of the moves they know, this gets pretty repetitive pretty fast. Instead, what you typically do is you set the monster to one of a few different "tactics" to tell the algorithm how to strategize - for example, dealing as much damage as possible, focusing on healing or on applying buffs to your teammates, or avoiding using skills entirely. In most cases, this is sufficient to get the monster to do what you want. Sometimes, in boss battles, you'll give specific commands, but using auto-battle instead allows them to select their moves for the turn after taking into account the randomness factor of what order the monsters act in (there is a speed stat, but it's not an absolute "monster with highest speed stat goes first" rule), which can be a lifesaver if something happens and, for example, your healer ends up acting a little earlier or a little later in the turn than usual. After setting the tactics you want your monsters to follow, you simply press the "fight" button at the beginning of each turn and let them battle it out.

DQM: The Dark Prince further enhances this function and makes it even more useful by adding in an "instructions" function by which you can change the relative priority of each of the monster's individual moves in addition to giving them a general strategy to follow. In the vast majority of situations, this basically removes the need to give orders at all, except under really specific circumstances. The auto-battle function, additionally, overrides the need to hit "fight" at the beginning of every single turn, and simply lets the battle progress automatically until you turn it off (which you might need to do on occasion, if, for example, you need to use an item in between turns).
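For the programmers reading, here's roughly how I picture tactics plus instructions working together. To be clear, this is my own toy model and not the game's actual logic. The tactic names, move categories, priority numbers, and the "Basic Attack" fallback are all made up for illustration.

```python
# Which kinds of moves each (hypothetical) tactic lets a monster pick from.
TACTIC_ALLOWS = {
    "all_out": {"attack"},
    "support": {"heal", "buff"},
    "no_skills": set(),
}

def choose_move(moves, tactic, priorities):
    """Pick the highest-priority move that the current tactic allows."""
    allowed = [m for m, kind in moves.items() if kind in TACTIC_ALLOWS[tactic]]
    if not allowed:
        return "Basic Attack"  # assumed fallback when no skill is permitted
    # Moves without an explicit instruction get priority 0.
    return max(allowed, key=lambda m: priorities.get(m, 0))

# A made-up moveset; in the real game this depends on synthesis.
moves = {
    "Slash": "attack",
    "Poison Attack": "attack",
    "Heal": "heal",
    "Guard Up": "buff",
}

attacker_pick = choose_move(moves, "all_out", {"Poison Attack": 2, "Slash": 1})
healer_pick = choose_move(moves, "support", {"Heal": 3, "Guard Up": 1})
idle_pick = choose_move(moves, "no_skills", {"Heal": 3})
```

So the tactic narrows down what the monster is allowed to do, and the instructions break ties inside that set, which is why you rarely need to give manual orders.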

So I did, indeed, play a lot of the game on auto-battle at 2x speed, because, with how many wild monsters you run into and how many buttons you need to press to give specific orders, it gets extremely repetitive and time-consuming otherwise. (Definitely major QOL improvements compared to the previous DQM games.)

Okay, so what's different about Star Rail?

The battle format is indeed pretty similar - turn-based combat with up to four team members at a time, each with a different skillset and hence fulfilling a different role. Yet I haven't once utilized the 2x speed or autobattle functions, even after complaining about the amount of grinding in the game.

For one, a given team member in Star Rail has a predetermined skillset with only a couple of possible actions. In DQM, to give orders in battle, you'd have to scroll through the list of all the moves the monster has learned (which can be basically anything, depending on how you synthesized them) to select the one you want to use that turn. Meanwhile, in Star Rail, you're limited to choosing a basic attack, an elemental skill, or an elemental burst. (Using the Genshin terminology here because it's basically the same format anyway, and I legitimately do not recall the names for things in Star Rail off the top of my head 🤣)

Plus, you don't have to open a separate menu to access these commands - they're just there. Hit the corresponding button, and that's it! That alone means you have a lot fewer keystrokes/buttons to press than you would for the equivalent action in DQM.

Then there's the Tactics and Instructions functions in DQM that basically eliminate the need to issue orders in most situations. Similar functions are, as far as I'm aware, nonexistent in Star Rail (except for one setting I might have seen in the menu concerning elemental burst timing). Having not used it, I'm not entirely sure how the autobattle function in Star Rail calculates what moves to use and when, but, with how wonky my strategy can be sometimes - for example, deciding which enemies to attack in what order, avoiding unnecessary damage to my teammates, or saving my skill or burst for the next turn when that next minion will come onto the field and I know I'll need it more than I do right now - I'd worry that autobattle would make riskier decisions.

And finally... not to be schizoid on main, but if you were in a place where you felt safe with people you cared about and enjoyed spending time with, would you want that time to go by twice as fast?

0 notes

Text

What Are the Latest Trends in Business Intelligence?

In the ever-evolving world of business, staying ahead of the curve is not just an advantage; it's a necessity. The realm of business intelligence (BI) is no exception. As companies navigate through seas of data, the tools, technologies, and methodologies at their disposal have continued to transform. Let's dive into the latest trends in business intelligence, where system upgrades and SaaS deployments are playing pivotal roles, and explore how these innovations are reshaping the way businesses understand and leverage their data.

The Rise of Artificial Intelligence and Machine Learning

One of the most significant trends in business intelligence is the integration of Artificial Intelligence (AI) and Machine Learning (ML). These technologies are revolutionizing BI by automating data analysis, providing predictive insights, and personalizing business strategies. AI-powered BI tools can sift through massive datasets, identifying patterns and anomalies that would be impossible for human analysts to find in a reasonable timeframe. This not only accelerates the decision-making process but also enhances accuracy, giving businesses a competitive edge in their strategic planning.

System Upgrades: Embracing Modern BI Platforms

As businesses grow, the need for system upgrades becomes apparent. Traditional BI systems often struggle to keep pace with the volume, velocity, and variety of today's data. Upgrading to modern BI platforms is crucial for businesses looking to harness the full potential of their data. These upgrades often include moving from static, report-based analytics to dynamic, interactive dashboards that offer real-time insights. Moreover, modern BI systems emphasize user-friendly interfaces, allowing non-technical users to generate reports, visualize data, and derive insights without extensive training.

SaaS Deployments: BI in the Cloud

Software as a Service (SaaS) deployments are reshaping the BI landscape. By moving BI tools to the cloud, businesses can reduce infrastructure costs, enhance scalability, and improve data accessibility. Cloud-based BI solutions offer the flexibility to access insights from anywhere, at any time, fostering a more agile and responsive business environment. Additionally, SaaS BI tools are typically subscription-based, allowing companies to scale their BI capabilities up or down as needed, without significant upfront investment.

Data Quality Management (DQM)

As the adage goes, "Garbage in, garbage out." The quality of data underpinning BI initiatives is paramount. Recognizing this, businesses are increasingly focusing on Data Quality Management (DQM). DQM involves processes and technologies aimed at ensuring the accuracy, completeness, and reliability of data. By prioritizing data quality, businesses can ensure that their BI insights are based on solid foundations, leading to more informed and effective decision-making.

Collaborative BI

Business intelligence is becoming more collaborative. BI tools now often include features that enable teams to share insights, annotate reports, and work together on data-driven projects. This collaborative approach not only democratizes data across the organization but also fosters a culture of informed decision-making. By breaking down silos and encouraging cross-departmental collaboration, businesses can leverage the collective expertise of their teams to drive innovation and growth.

Augmented Analytics

Augmented analytics is another trend gaining momentum. This approach uses AI and ML to enhance data analytics processes, automating the identification of trends and the generation of insights. Augmented analytics tools can suggest areas of interest or concern, prompting users to explore specific data segments further. This not only makes BI more accessible to non-experts but also frees up data scientists and analysts to focus on more complex investigations.

Data Visualization and Storytelling

Data visualization and storytelling are becoming increasingly important in BI. As data volumes grow, presenting that data in an understandable and actionable format is crucial. Advanced visualization tools allow businesses to create intuitive dashboards and reports that tell a story, highlighting key trends and insights. Effective data storytelling can engage stakeholders, from executives to front-line employees, ensuring that insights lead to action.

Predictive and Prescriptive Analytics

Moving beyond descriptive analytics, businesses are increasingly adopting predictive and prescriptive analytics. These approaches not only analyze what has happened but also predict what will happen and prescribe actions to achieve desired outcomes. By incorporating predictive models and prescriptive algorithms into their BI strategies, businesses can anticipate future trends, optimize operations, and strategically allocate resources.

Conclusion

The landscape of business intelligence is continually evolving, driven by advancements in technology and shifts in business needs. System upgrades and SaaS deployments are just the tip of the iceberg. As AI and ML continue to advance, collaborative BI becomes the norm, and data quality management gains focus, the potential for BI to drive business success is boundless. In this dynamic environment, staying informed about the latest trends and adapting BI strategies accordingly is crucial for businesses aiming to maintain a competitive edge. By leveraging modern BI tools and technologies, companies can unlock deeper insights, foster data-driven cultures, and navigate the future with confidence. Let’s connect for a better understanding.

0 notes

Text

Forging the Future: Integrating AI, MLOps, and Process Engineering for Tech Evolution

Originally published on: Quantzig, "Crafting the Future of Tech Trends with AI, MLOps, and Process Engineering"

Introduction

Challenges in Implementation

While the vision of full automation and intelligent decision-making is compelling, implementation poses challenges. Complex core business processes demand meticulous handling, prompting a gradual evolution of transformation plans. Incremental transformation allows risk identification, minimal disruption, and fine-tuning of automation algorithms, paving the way for sustainable digital transformation.

Benefits of Implementation

Holistic data transformation programs are pivotal, providing swift responses to operational challenges. These programs decentralize decision-making, democratize access to critical insights, and empower global teams. Analyzing data from diverse sources allows organizations to uncover patterns, trends, and risks, making informed decisions with confidence and mitigating challenges effectively.

Our Unique Capabilities

Our visual workflow manager and Metadata editor empower organizations in robust Data Quality Management (DQM), ensuring data reliability, simplifying integration, and improving data governance.

Our MLOps solutions enhance the integrity of machine learning models, introducing traceability, reproducibility, and rigorous process governance. This boosts trust in ML models and ensures compliance with regulatory standards.

Client Value Proposition

AI Acceleration: Our prebuilt solutions expedite AI/ML model operationalization, facilitating a swift transition from development to deployment.

Humanization of AI: By enhancing the user experience, our solutions bridge the gap between advanced technology and human interaction, fostering trust and reliability in AI-driven applications.

Conclusion

The convergence of AI, MLOps, and Process Engineering transforms the tech landscape. Embracing this synergy propels organizations towards efficiency, agility, and competitiveness. Crafting robust strategies empowers businesses to navigate the complexities of the digital age, harnessing the power of data-driven insights, intelligent automation, and streamlined processes. The journey is ongoing, but the destination promises a future where technology reshapes industries and propels humanity towards unprecedented progress.

Join the transformative journey towards the future of technology. Embrace the power of AI, MLOps, and Process Engineering to stay ahead in the digital age. Explore the possibilities today!

0 notes

Text

Data Quality Management Tools

In today's data-driven world, organizations rely heavily on the accuracy and reliability of their data to make critical business decisions. Data quality issues can lead to erroneous conclusions, operational inefficiencies, and financial losses. As data volumes grow exponentially, the need for robust Data Quality Management (DQM) tools becomes paramount. These powerful tools not only ensure data accuracy but also enhance data completeness, consistency, and validity, enabling businesses to unlock the true potential of their data assets.

1. Unveiling the Importance of Data Quality Management Tools:

Data Quality Management tools serve as the cornerstone for maintaining high-quality data throughout its lifecycle. These tools are designed to identify, rectify, and prevent data errors, duplications, inconsistencies, and other issues that compromise data integrity. By ensuring that data is trustworthy, organizations can confidently use it for critical decision-making processes, driving innovation and gaining a competitive edge.

2. The Versatility of Data Quality Management Tools:

Data Quality Management tools cater to a wide range of industries and use cases. From finance and healthcare to retail and manufacturing, these tools are adaptable to various domains and data types. Whether dealing with structured or unstructured data, DQM tools efficiently cleanse, standardize, and enrich information, enabling businesses to harness the full potential of their data resources.

3. Empowering Data Governance and Compliance:

In an era of strict data protection regulations and compliance requirements, data governance is essential. DQM tools play a vital role in establishing and maintaining robust data governance frameworks. They help organizations adhere to data privacy regulations such as GDPR, CCPA, and HIPAA by identifying and securing sensitive data, ensuring it is handled appropriately.

4. Streamlining Data Integration Processes:

Data Quality Management tools facilitate seamless data integration across disparate systems. By mapping and transforming data from various sources, these tools eliminate compatibility issues and improve data consistency. This streamlining of data integration processes not only saves time and resources but also enhances data accuracy and reliability.

5. Realizing the True Value of Business Intelligence:

Accurate and reliable data forms the foundation of effective Business Intelligence (BI) initiatives. DQM tools provide the necessary data enrichment, validation, and cleansing required for BI tools to deliver meaningful insights. With clean and reliable data at their disposal, businesses can make well-informed decisions and uncover hidden opportunities, driving growth and profitability.

6. Future-Proofing Your Data Strategy:

As data continues to grow in volume and complexity, investing in Data Quality Management tools is a future-proofing strategy. These tools evolve with technological advancements, ensuring that organizations stay ahead in the data quality game. By continuously monitoring and improving data quality, businesses can adapt to changing market dynamics, embrace emerging trends, and make informed decisions in real-time.

Conclusion:

Data Quality Management tools are no longer a luxury but a necessity for organizations aiming to thrive in the data-driven landscape. These tools empower businesses to harness the full potential of their data assets, enhancing decision-making processes, driving innovation, and achieving sustainable growth. As the importance of data quality intensifies, investing in cutting-edge Data Quality Management tools becomes an indispensable step towards success in the digital age. Unlock the power of your data today, and propel your organization towards a prosperous future.

1 note


Text

How Are Data Management and Data Analytics Related?

Advancements in statistical sciences and computing technology have introduced novel approaches toward corporate problem-solving. The usage scope of modern analytics tools extends from human resources to investment feasibility assessments. Nevertheless, data quality can make or break insight extraction, and data managers strive to enhance it for analysts. This post will elaborate on how data management and analytics are related.

What is Data Analytics?

Data analytics, within the corporate context, involves determining the recurring patterns in a business database to describe a company’s performance. For example, a global brand can leverage data analytics consulting to report on competitive risks, customer relations, and new market entry opportunities.

With the adoption of cloud computing and machine learning (ML), the scope of data sourcing, validation, and automation has broadened. Accordingly, the necessity of advanced data quality management (DQM) makes business owners desperate to seek the right talent and tools.

On the one hand, they have vast and ever-expanding data volumes. On the other hand, cybercriminals devise more harmful malware by misusing technological advancements. Therefore, appropriate data management and quality assurance are the needs of the hour.

How Are Data Management and Data Analytics Related?

1| Eliminating Irrelevant Database Entries

Is the data or insight acquired by spending the company’s resources on intelligence gathering, storage, formatting, analysis, and visualization relevant to your long-term vision? Reputable business intelligence services ensure clients save time sorting and navigating databases, reports, and regulatory documents.

Data managers determine the core criteria to assess the business relevance of a data object category or insight. For example, a global enterprise serves multilingual markets and leverages complex supply chains. Meanwhile, a local company has narrower business intelligence (BI) needs.

Based on criteria that may draw on the considerations discussed above, data managers collaborate with engineers, scientists, strategists, leaders, and regulators to identify the most effective DQM workflows. Later, analysts benefit from the increased business relevance during insight exploration and report creation.

2| Complying with Legal Frameworks

Consumer privacy and investor communications confidentiality are vital for a brand’s reputation among stakeholders. Self-regulated compliance tracking and disclosure efforts are excellent. Still, standardization remains essential to embrace transparency and ease of auditing.

Today, data analysts interact with digital intelligence that may qualify as personally identifying information (PII). Simultaneously, governments have proposed and implemented laws directing the corporate world to enhance data protection standards and respect a customer’s “right to be forgotten.”

Each country and every international organization contributes to the ongoing privacy and confidentiality guidelines. So, an experienced data manager must oversee the strategies employed by a brand to satisfy what customers, investors, and regulatory authorities have demanded.

3| Coordinating Multidisciplinary Teams

A data strategist wants to align business intelligence, reporting, and analytics with a company’s ambitions. Analysts work toward making insight extraction more reliable. Data architects specify the hardware and software requirements to help analysts. Finally, data engineers construct the ecosystem facilitating financially feasible extract-transform-load (ETL) pipelines.

These efforts do not happen in isolation. Inputs from the respective departments, like human resources or marketing, are essential to avoid collecting unnecessary intelligence. Therefore, responsible assistance in data lifecycle management is crucial.

A data manager must be competent to work with these professionals. Some will have an engineering background. Others will know advanced statistics or consumer psychology. Accountants will also request particular insights, while talent managers have unique priorities. The data manager will collect their inputs, share them with the team, and coordinate their work to accomplish the departmental BI objectives.

Conclusion

The top brands seek expert data analysts to improve their performance monitoring methods. Ethical data managers are in demand since data quality is fundamental to these initiatives. Therefore, several labor and skill development bodies expect growth in data management and analytics career opportunities.

You have learned how data management and data analytics are related fields. Besides, these use cases, like DQM or privacy compliance, result in more resilient business intelligence and market penetration strategies.

All the data operations involve professionals possessing diverse academic and executive experiences. So, finding the best talent is challenging. Nevertheless, you can outsource your data management duties to competent consultants with a proven track record.

0 notes

Note

anglure

55m

good news: apparently the game has 500+ monsters, and online battles too

Okay I want everyone to remember to get this game because for me this is almost 100% for sure going to completely replace playing Pokemon and I am possibly going to get seriously into online battle because in THIS franchise you can make ANY monster equally viable even though there is still a fair enough degree of strategy.

I've waited since the 90's for a big DQM game that will have online battle on a popular console so I am going to put my all into kicking some of your asses in 2024 with things that look like this so get ready

You got your wish! https://www.youtube.com/watch?v=cLsr5N4-mmU

Dragon Quest Monsters: The Dark Prince, or "Pokemon but with Dragon Quest monsters" just got announced

FINALLY but I still hope maybe they'll ever just localize and port over Dragon Quest Monsters: Terry's Wonderland 3D because that had over 600 different monsters in it and newer monster games just never go that extra mile, even on a console with more power and several times the storage capacity ;; I'll at least be happy if any of my top 5-10 monsters make it in though and they've yet to have a DQM game without most of them!

274 notes


Link

Exela HR Solutions offers professional HR business partner services to businesses across different industries and geographies. Intuitive strategies combined with easy access to help and support make EHRS one of the best HR partners a business can get.

#HRBusinessService#EHRS#HR#HROutsourcing#HRServices#HRBusinessPartnerService#HRBP#HumanResources#HRBusinessPartnerServiceProvider#HRSolutions#HRBusinesses#ExelaHRSolutions#HRBusinessPartner

0 notes

Text

10 ways a cloud archive can help you with Data Governance

Learn about Data Governance and how using a Centralized Cloud Archive improves it

Data is the new currency; it is everywhere and continues to grow exponentially in its various formats — structured, semi-structured, and unstructured. But, whatever the format, businesses cannot afford to slack on the proper accumulation and categorization of data, otherwise known as Data Governance, given that optimum value can be obtained from these data sources. As Jay Baer, a market and customer experience expert, remarked, “We are surrounded by data but starved for insights.”

So, what exactly is Data Governance, and what are its key elements?

Imagine that you wanted to rebrand and launch a failing product and needed some insight into achieving this by looking at YTD sales analysis for the previous five years or perhaps customer feedback for those same years. But the data for your analysis is fragmented between different data storage units or lost due to local deletion by error. What a loss of revenue, time, insights, and progress.

Here is where the need arises for a proper Data Governance strategy to ensure such irreparable damage does not happen. A proper Data Governance strategy lists the procedures to maintain, classify, retain, access, and secure the data related to a business. As data grows exponentially daily, mainly fueled by Big Data and Digital transformations, and with global data volume estimated to reach 181 zettabytes by 2025, the need for a proper Data Governance strategy to ensure proper data usage becomes imperative.

Below are four elements that are key to a proper Data Governance strategy:

Data Lifecycle Management:

To prepare a proper Data Governance strategy, one must first understand the circle of life that data goes through. Data is created, used, shared, maintained, stored, archived, and finally deleted. Understanding these core aspects forms the main points of Data Lifecycle Management.

For example, John Doe applied for the position of QA Manager. He applied online on the company’s website (creation), and his resume was chosen by his employers (used) and sent to HR to offer him a job (shared). John accepted the job and started work with the company. His details were kept with HR to update records annually and for tax and legal purposes (maintained and stored).

Finally, John retired, and his file was handed to the Data Steward (archived), where it may or may not be kept (deleted) depending on legal retention policies.

Now compare that to the data lifecycle of a draft sales PowerPoint presentation. The presentation will be created, used, shared, and probably deleted in favor of the final version, which will go through the entire Data Lifecycle Management process. Understanding the data is critical; that is where Data Quality Management comes to the fore.
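The lifecycle described above can be pictured as a small state machine. The following is only a minimal sketch under stated assumptions: the stage names come from the text, while the `Stage` enum, the `can_move` function, and the rule that any record may be deleted early (as with the draft presentation) are hypothetical choices made for illustration.

```python
from enum import Enum


class Stage(Enum):
    """Lifecycle stages in the order listed in the text."""
    CREATED = 1
    USED = 2
    SHARED = 3
    MAINTAINED = 4
    STORED = 5
    ARCHIVED = 6
    DELETED = 7


def can_move(current: Stage, target: Stage) -> bool:
    """Assumed rule: a record moves to the next stage, or is deleted early."""
    if current is Stage.DELETED:
        return False  # nothing happens to a record after deletion
    return target is Stage.DELETED or target.value == current.value + 1


# John Doe's resume walks through every stage one step at a time,
# while the draft presentation jumps from SHARED straight to DELETED.
print(can_move(Stage.CREATED, Stage.USED))
print(can_move(Stage.SHARED, Stage.DELETED))
```

A real retention policy would likely add more rules (for example, legal holds that block deletion), which this sketch leaves out.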

Data Quality Management:

Let’s go back to the example of relaunching a failing brand. You finally found all the pertinent files from the past five years that you have been looking for. But interspersed with the sales and promotion figures are files dealing with a final presentation and numerous versions of that presentation leading up to the final one.

What do you keep? What is needed and what is not, and how do you know the difference? This is where Data Quality Management (DQM) comes in. Essential questions to ask about data when observing DQM are:

Is it unique? Are there multiple versions of one file or a final version I must keep? Do the draft copies have important handwritten notes that point to the final version and provide greater insight?

Is it valid? Do I need to keep this data? Is there a possible future use for it?

Is it accurate? Are the files being saved for future use accurate?

Is it complete? Are the files that need to be saved available in their entirety?

Is the quality good? Is the quality of the files suitable, so that they provide insightful context in the years to come?

Is it accessible? Are these records properly archived, or are they fragmented? How can we get access to them?
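Some of the questions above can be checked mechanically, while others (validity, accuracy) need human judgment. The sketch below covers only three of them: uniqueness, completeness, and accessibility. It is an illustration under assumptions, not a prescribed method: the `FileRecord` fields and the `quality_report` function are hypothetical names, and "complete" is approximated by comparing the stored size with the size recorded at creation.

```python
from dataclasses import dataclass


@dataclass
class FileRecord:
    name: str
    checksum: str        # hash of the file contents (assumed precomputed)
    size_bytes: int      # size currently stored
    expected_bytes: int  # size recorded when the file was created
    archived: bool       # True if the file sits in the central archive


def quality_report(records):
    """Return {file name: list of detected issues} for each record."""
    first_seen = {}  # checksum -> name of the first file with that content
    report = {}
    for r in records:
        issues = []
        if r.checksum in first_seen:          # Is it unique?
            issues.append(f"duplicate of {first_seen[r.checksum]}")
        else:
            first_seen[r.checksum] = r.name
        if r.size_bytes != r.expected_bytes:  # Is it complete?
            issues.append("incomplete")
        if not r.archived:                    # Is it accessible?
            issues.append("not archived")
        report[r.name] = issues
    return report


records = [
    FileRecord("final.pptx", "abc", 100, 100, True),
    FileRecord("final_copy.pptx", "abc", 100, 100, True),
    FileRecord("sales_2021.csv", "def", 40, 100, False),
]
print(quality_report(records))
```

An empty issue list means the file passed these three checks; it says nothing about whether the content is accurate or still worth keeping.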

Data Stewardship:

Now that we have answered all those questions, imagine for a moment all this data (structured, unstructured, and semi-structured) sitting in data silos or data lakes as one giant beast. This raises the famous idiomatic question: who will bell the cat?

Who will take on this humongous task of Data Quality Management, i.e., classifying, archiving, storing, creating best practice guidelines, and ensuring data security and integrity?

This is where Data Stewardship comes into play. Appointing a sole person or a committee (which is better) to create and oversee all the tasks of Data Management is the optimum choice in the eventual buildup of good Data Governance strategies. The main job of a stewarding committee is to ensure that data is properly collected, managed, accessed when needed, and disposed of at the end of the retention period.

Some essential functions of a data stewarding committee are:

Publishing policies on the collection and management of data (something that can be achieved faster if DQM practices are already in place).

Educating employees on DQM best practices and providing training on the Record Information Management (RIM) policies established by the company, ensuring this training is given again after 3 years to stay compliant with existing and new regulations.

Revising retention policies to meet new regulations.

Creating a hierarchical chain of command within the committee based on the classification of records.

Data Security:

Should you keep the data, classify it or not, and share it? There are many questions regarding the usefulness and usability of data. But one thing stands out: whatever the reasons, all data should be kept secure throughout the entire Data Lifecycle Management process, up to the point of its deletion.

For Data Security, be it encryption, resiliency, masking, or ultimate erasure, tools have to be deployed along with policies to ensure that the company’s data is safe and secure and used by the proper personnel.

The recurring question that emerges from all these essential elements is: after identifying and classifying data, where can we keep it all? While most businesses think first of storing data physically or in-house, the case for a centralized cloud archival system is getting stronger daily. So, should one go for a centralized cloud archival system? Here are a few arguments in its favor.

Advantages of a Centralized Cloud Archival System:

With internet usability being predominant worldwide and the availability of a company’s intranet to its employees, access to data on a centralized cloud archive has never been easier.

Organizations and departments within organizations can share data and resources more efficiently. Specific data can be quickly discovered with such tools as E-discovery.

As data grows daily, a centralized cloud archive system can meet scalability demands while remaining flexible.

A cloud archive system is more cost-effective in keeping data, meeting the demands of growing data, and having flexibility compared to a local in-house data storage unit, not to mention the office space it will save and the costs of having a built-in infrastructure IT room specifically for this.

Data is stored in a secure, centralized cloud archive system that ensures no unauthorized access. Moreover, it ensures timely data backups and updates to the system and is less likely to be damaged or lost in a local disaster.

As we let the advantages of a centralized cloud archive sink in, here are ten ways it can help businesses in their Data Governance strategies:

10 Ways a Centralised Cloud Archive can improve your Data Governance:

Focus on global policies:

While classification and maintenance of data are crucial factors in governance, the time factor is as important an element as any other. The question of how long to retain this data is relevant in the archival process, and the answer is not so black and white.

With local and global policies changing daily when confronted with new and sometimes imposing queries, data retention times vary from year to year. For the people responsible for maintaining or classifying the data, there is a need to store data immediately, pending proper relevance tagged to the data. While in-house storage units can house them temporarily, they are vulnerable to security breaches, deletion due to error, or data fragmentation.

The obvious choice is to store them in a centralized cloud archive with combative features like encryption, security, secure access, flexibility, and scalability.

Data Access and ABAC:

Because data is located in a secure, centralized cloud archive, employees distributed in different geographical locations can access data at any time depending on their time zone, at the same time as other employees, or at multiple times.

But should all employees have access to everything? The Centralised cloud archive ensures that Attribute Based Access Control (ABAC) is in force, ensuring employee rights based on attributes assigned to them. These rights are usually enforced when creating DQM strategies or by Data Stewards based on changing company, local and global policies.
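To make the ABAC idea concrete, here is a minimal sketch of an attribute-based check. It is not how any particular archive product implements access control; the `Rule` class, the `is_allowed` function, and the attribute names (`department`, `classification`) are all hypothetical. The only behavior it assumes is the one stated in the text: rights follow from attributes, and anything not explicitly granted is denied.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    user: dict    # attributes the employee must have
    record: dict  # attributes the record must have
    action: str   # action the rule grants, e.g. "read"


def is_allowed(user, record, action, rules):
    """Grant access only if some rule matches all required attributes."""
    for rule in rules:
        if (rule.action == action
                and all(user.get(k) == v for k, v in rule.user.items())
                and all(record.get(k) == v for k, v in rule.record.items())):
            return True
    return False  # deny by default


rules = [Rule({"department": "HR"}, {"classification": "personnel"}, "read")]
hr_user = {"department": "HR", "region": "EU"}
sales_user = {"department": "Sales", "region": "EU"}
record = {"classification": "personnel"}

print(is_allowed(hr_user, record, "read", rules))
print(is_allowed(sales_user, record, "read", rules))
```

Because the rules are plain data, a Data Steward could revise them when company, local, or global policies change without touching the checking logic.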

Deduplication:

A centralized cloud archive system has the innate software technology to ensure no data duplication, ensuring only one final copy to file. This is in stark contrast to the in-house data silos, which promote data fragmentation and unnecessary duplication of files.
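One common way to detect duplicate files is to compare content hashes, so that two files with identical bytes map to a single stored copy. The sketch below illustrates that general technique only; it is an assumption that an archive works this way, and the `deduplicate` function and the file names are hypothetical.

```python
import hashlib


def deduplicate(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file name to the name of the single copy that is kept."""
    stored = {}  # content digest -> name of the first file with that content
    kept_as = {}
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        # setdefault keeps the first name seen for each digest
        kept_as[name] = stored.setdefault(digest, name)
    return kept_as


files = {
    "final.pptx": b"deck v3",
    "final_copy.pptx": b"deck v3",  # identical bytes, different name
    "draft.pptx": b"deck v1",
}
print(deduplicate(files))
```

Note that this only catches byte-identical copies. Drafts that differ slightly from the final version are distinct content and would still need a human decision about what to keep.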

Self-service for data consumers:

All the data is stored in a single searchable platform, making it easier for consumers to source or explore independently. Self-service access allows consumers to access any data they have permission for without having to request access from the data owners manually.

Removing the need for Physical Infrastructures:

A Centralised Cloud Archive System is much more cost effective than traditional storage methods, as it eliminates the need for businesses to purchase and maintain their data storage infrastructures.

Maintaining transparency and Automated Reporting:

With a Centralised Cloud Archive System, all the stored data is available in a searchable format, making it easier for stakeholders to understand the information and use it for decision-making. This helps in improving transparency and accountability within the organization.

Moreover, with automated reporting and a data monitoring system in place, it is clear who accessed which data, when, and from where.

Removing the redundant data:

When a file is no longer needed, has served its retention period, and has been approved for deletion by the data stewarding committee, it is easy to locate this redundant file in the Centralised Cloud Archive and permanently delete it.
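The deletion rule described above combines two conditions: the retention period has elapsed and the deletion has been approved. A minimal sketch of that check follows; the function name, its parameters, and the day-based retention period are assumptions made for illustration.

```python
from datetime import date, timedelta


def eligible_for_deletion(
    archived_on: date, retention_days: int, approved: bool, today: date
) -> bool:
    """A file may be deleted only when retention has expired AND it is approved."""
    expires_on = archived_on + timedelta(days=retention_days)
    return approved and today >= expires_on


# Retention expired and approved by the stewarding committee.
print(eligible_for_deletion(date(2020, 1, 1), 365, True, date(2021, 6, 1)))
# Retention expired but not yet approved.
print(eligible_for_deletion(date(2020, 1, 1), 365, False, date(2021, 6, 1)))
```

Keeping approval as a separate input means an expired file is never removed automatically without a human decision.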

Data Durability:

SaaS cloud data archiving platforms that offer high reliability and availability with in-built disaster recovery sites (as Vaultastic does) drastically reduce CXO teams' anxieties about recovery point objective (RPO) and recovery time objective (RTO). They also eliminate the effort of performing backups of the data.

Data Security:

The Centralised Cloud Archive is more secure than other data warehouses or in-house storage. Cloud-based data archiving platforms like Vaultastic leverage the cloud’s shared security model to provide multi-layered protection against cyber attacks.

Updated patches, two-factor authentication, encryption, and relevant security controls ensure that businesses’ data are kept in a tight vault.

Cost-Optimization:

Favor SaaS data archiving platforms that can optimize costs along multiple dimensions even as data grows daily. Data will grow continuously, and you want your costs kept in check.

Conclusion

More and more businesses are migrating to the cloud for their solutions, primarily their archiving solutions. The reasons are many: cost-effectiveness, data security, deduplication, better infrastructure, IT support, and ease of use.

Once a business has established and implemented its Data Governance strategy, the next step is to ensure its data is secured in a proper location.

What better way than the cloud, which is proving its practicality day by day?

If you have your Data Governance strategy in place, Vaultastic, an elastic cloud-based data archiving service powered by AWS, can help you quickly implement your strategy.

Vaultastic excels at archiving unstructured data in the form of emails, files, and SaaS data from a wide range of sources. A secure, robust platform with on-demand data services significantly eases data governance while optimizing data management costs by up to 60%.


Text

The Role of Data Quality Management in Master Data Governance

Large organisations have understood the value of data and data quality for some years now. More recently, leaders in the private and public sectors have realised that accumulated data can be a strategic asset as data volumes and speeds increase.

When people discuss corporate data, terms like “Master data governance” and “Data Quality Management” come up often. Both are closely tied to the need for Data Management. Let’s look at what each term means and how the two are connected.

What is Data Quality Management?

Data Quality Management (DQM) is a discipline that combines the right people, processes, and technologies around the common goal of enhancing data quality. Improving data quality is not an end in itself; it matters because good business outcomes depend on high-quality data. Data quality management ensures that a business's confidential information is accurate, complete, and consistent across multiple domains within the enterprise.

What is Master Data Governance?

Master data governance provides a framework through which businesses manage the data within their organisation, ensure its security, and make sure it is available to the people who need it. In other words, Master data governance means controlling security and regulatory risks while maximising the value of the organisation's data.

How Are Master Data Governance and Data Quality Management Related?

The goal of Data Quality Management and Master data governance is to ensure that the data within the organisation is trusted, secure, and accessible when needed. Businesses can make sure that the value of their data assets is maximised by creating a strategy around these two essential pillars. Data Quality Management and Master data governance could be seen as two sides of the same coin.

About GlueData: GlueData is an independently owned and managed company. It is a global data and analytics consultancy that assists SAP clients in mastering their data. The company provides data management tools, data migration services, and master data management services with the help of its data migration consultants and experts. Its SimpleData Management methodology is aimed at reducing the complexity of data governance by focusing on what is most important from a data domain or objective point of view.


Text

So I figured I'd go train on the new island since that's probably more effective xp wise (not that I'm struggling on this island, the only bad xp place was that second island, man.).

This island is called Infern island and you'd THINK that means LAVA, but actually it means this was once a prosperous town but a calamity destroyed it and now it's a zombie town.

That's sick, I love that.

Gathered a quick crystal here and got told in no uncertain terms "If you want to do the rest of the island you need to beat that shrine you're running from" so let's just go do it with our new levels.

spoiler I did it, it was easy with my new levels.

BECOME. MONKE.

Also feign surprise, he's the incarnus.

So we've 2 shrines left I think, and 2 islands I haven't been to yet at least according to the map, and only a few more crystals needed- so I'd wager we're getting close to the "OH NO, A CALAMITY!" moment but most importantly- MONKE.

He's monke :) I love that he's monke :)

Next time I'd say my goal is to take down Infern island- but first I think I'll go on a breeding montage.

So here's the gist- my plan was to level a bunch of monsters to 15 before I did that, but then I thought "Wait, this isn't how I've ever played a DQM game before, why am I being so anal about that?"

So I'm not. lol.

Usually I just start breeding at level 10 and don't stop until late game where I start to care more about the better returns on high level breeding- but by then it's usually easy enough to farm xp to GET high level breeding.

Now I feel like this iteration of DQM has formulas that better support high level breeding- I THINK- I mean the way skills are passed down would maybe imply that- but at the end of the day I think the same strategy will prevail while being the most fun for me since it means I get to breed more often.

Breed haphazardly until later in the game when xp is easier to grind- THEN breed at high level lol

So I think since my main team is around level 17~ I'll breed them off and get a new team going :)

Side note: Subs get 25% xp and all storage mons get 12.5%, so I'd guess by now I have a decent chunk of monsters that can breed with my main team- but if not then I'll take a short break to capture a ton of mons and maybe grind my main team to 20 thus giving those monsters enough xp to get to 10 etc etc breed time.

#RetPlays#Dragon Quest Monsters: Joker#Dragon Quest#Dragon Quest Monsters#Dragon Warrior Monsters#DQM#DWM#DQM:J#DS#Nintendo
