#Data Annotation in AI Development

Text

Explore how expert annotation services improve the performance of AI systems by delivering high-quality training data. This blog breaks down the importance of accurate image, text, and video annotation in building intelligent, reliable AI models.

Text

What is the future of the like button in the age of artificial intelligence? Max Levchin—the PayPal cofounder and Affirm CEO—sees a new and hugely valuable role for liking data to train AI to arrive at conclusions more in line with those a human decisionmaker would make.

It’s a well-known quandary in machine learning that a computer presented with a clear reward function will engage in relentless reinforcement learning to improve its performance and maximize that reward—but that this optimization path often leads AI systems to very different outcomes than would result from humans exercising human judgment.

To introduce a corrective force, AI developers frequently use what is called reinforcement learning from human feedback (RLHF). Essentially they are putting a human thumb on the scale as the computer arrives at its model by training it on data reflecting real people’s actual preferences. But where does that human preference data come from, and how much of it is needed for the input to be valid? So far, this has been the problem with RLHF: It’s a costly method if it requires hiring human supervisors and annotators to enter feedback.
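To make the idea of "training on human preference data" concrete, here is a minimal sketch of only the preference-modeling step, not a full RLHF pipeline. It assumes annotators have compared pairs of candidate responses and fits one score per response with the Bradley-Terry model. The function name `fit_rewards`, the response labels, and the preference list are all hypothetical and chosen for illustration.

```python
import math

def fit_rewards(items, preferences, lr=0.1, epochs=500):
    """Fit one scalar reward per item from pairwise human preferences.

    `preferences` is a list of (preferred, rejected) pairs. The Bradley-Terry
    model assumes P(a preferred over b) = sigmoid(reward[a] - reward[b]).
    """
    reward = {item: 0.0 for item in items}
    for _ in range(epochs):
        for winner, loser in preferences:
            # Probability the current rewards assign to the observed choice.
            p = 1.0 / (1.0 + math.exp(-(reward[winner] - reward[loser])))
            # Gradient ascent on the log-likelihood: raise winner, lower loser.
            step = lr * (1.0 - p)
            reward[winner] += step
            reward[loser] -= step
    return reward

# Hypothetical annotator judgments over three candidate responses.
prefs = [("helpful", "evasive"), ("helpful", "rude"), ("evasive", "rude"),
         ("helpful", "rude"), ("evasive", "rude")]
rewards = fit_rewards(["helpful", "evasive", "rude"], prefs)
ranking = sorted(rewards, key=rewards.get, reverse=True)
print(ranking)
```

Every comparison here has to be supplied by a person, which is why the cost of collecting preference data grows with the number of judgments needed.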

And this is the problem that Levchin thinks could be solved by the like button. He views the accumulated resource that today sits in Facebook’s hands as a godsend to any developer wanting to train an intelligent agent on human preference data. And how big a deal is that? “I would argue that one of the most valuable things Facebook owns is that mountain of liking data,” Levchin told us. Indeed, at this inflection point in the development of artificial intelligence, having access to “what content is liked by humans, to use for training of AI models, is probably one of the singularly most valuable things on the internet.”

While Levchin envisions AI learning from human preferences through the like button, AI is already changing the way these preferences are shaped in the first place. In fact, social media platforms are actively using AI not just to analyze likes, but to predict them—potentially rendering the button itself obsolete.

This was a striking observation for us because, as we talked to most people, the predictions mostly came from another angle, describing not how the like button would affect the performance of AI but how AI would change the world of the like button. Already, we heard, AI is being applied to improve social media algorithms. Early in 2024, for example, Facebook experimented with using AI to redesign the algorithm that recommends Reels videos to users. Could it come up with a better weighting of variables to predict which video a user would most like to watch next? The result of this early test showed that it could: Applying AI to the task paid off in longer watch times—the performance metric Facebook was hoping to boost.

When we asked YouTube cofounder Steve Chen what the future holds for the like button, he said, “I sometimes wonder whether the like button will be needed when AI is sophisticated enough to tell the algorithm with 100 percent accuracy what you want to watch next based on the viewing and sharing patterns themselves. Up until now, the like button has been the simplest way for content platforms to do that, but the end goal is to make it as easy and accurate as possible with whatever data is available.”

He went on to point out, however, that one reason the like button may always be needed is to handle sharp or temporary changes in viewing needs because of life events or situations. “There are days when I wanna be watching content that’s a little bit more relevant to, say, my kids,” he said. Chen also explained that the like button may have longevity because of its role in attracting advertisers—the other key group alongside the viewers and creators—because the like acts as the simplest possible hinge to connect those three groups. With one tap, a viewer simultaneously conveys appreciation and feedback directly to the content provider and evidence of engagement and preference to the advertiser.

Another major impact of AI will be its increasing use to generate the content itself that is subject to people’s emotional responses. Already, growing amounts of the content—both text and images—being liked by social media users are AI generated. One wonders if the original purpose of the like button—to motivate more users to generate content—will even remain relevant. Would the platforms be just as successful on their own terms if their human users ceased to make the posts at all?

This question, of course, raises the problem of authenticity. During the 2024 Super Bowl halftime show, singer Alicia Keys hit a sour note that was noticed by every attentive listener tuned in to the live event. Yet when the recording of her performance was uploaded to YouTube shortly afterward, that flub had been seamlessly corrected, with no notification that the video had been altered. It’s a minor thing (and good for Keys for doing the performance live in the first place), but the sneaky correction raised eyebrows nonetheless. Ironically, she was singing “If I Ain’t Got You”—and her fans ended up getting something slightly different from her.

If AI can subtly refine entertainment content, it can also be weaponized for more deceptive purposes. The same technology that can fix a musical note can just as easily clone a voice, leading to far more serious consequences.

More chilling is the trend that the US Federal Communications Commission (FCC) and its equivalents elsewhere have recently cracked down on: uses of AI to “clone” an individual’s voice and effectively put words in their mouth. It sounds like them speaking, but it may not be them—it could be an impostor trying to trick that person’s grandfather into paying a ransom or trying to conduct a financial transaction in their name. In January 2024, after an incident of robocalls spoofing President Joe Biden’s voice, the FCC issued clear guidance that such impersonation is illegal under the provisions of the Telephone Consumer Protection Act, and warned consumers to be careful.

“AI-generated voice cloning and images are already sowing confusion by tricking consumers into thinking scams and frauds are legitimate,” said FCC chair Jessica Rosenworcel. “No matter what celebrity or politician you favor, or what your relationship is with your kin when they call for help, it is possible we could all be a target of these faked calls.”

Short of fraudulent pretense like this, an AI-filled future of social media might well be populated by seemingly real people who are purely computer-generated. Such virtual concoctions are infiltrating the community of online influencers and gaining legions of fans on social media platforms. “Aitana Lopez,” for example, regularly posts glimpses of her enviable life as a beautiful Spanish musician and fashionista. When we last checked, her Instagram account was up to 310,000 followers, and she was shilling for hair-care and clothing brands, including Victoria’s Secret, at a cost of some $1,000 per post. But someone else must be spending her hard-earned money, because Aitana doesn’t really need clothes or food or a place to live. She is the programmed creation of an ad agency—one that started out connecting brands with real human influencers but found that the humans were not always so easy to manage.

With AI-driven influencers and bots engaging with each other at unprecedented speed, the very fabric of online engagement may be shifting. If likes are no longer coming from real people, and content is no longer created by them, what does that mean for the future of the like economy?

In a scenario that not only echoes but goes beyond the premise of the 2013 film Her, you can also now buy a subscription that enables you to chat to your heart’s content with an on-screen “girlfriend.” CarynAI is an AI clone of a real-life online influencer, Caryn Marjorie, who had already gained over a million followers on Snapchat when she decided to team up with an AI company and develop a chatbot. Those who would like to engage in one-to-one conversation with the virtual Caryn pay a dollar per minute, and the chatbot’s conversation is generated by OpenAI’s GPT-4 software, as trained on an archive of content Marjorie had previously published on YouTube.

We can imagine a scenario in which a large proportion of likes are not awarded to human-created content—and not granted by actual people, either. We could have a digital world overrun by synthesized creators and consumers interacting at lightning speed with each other. Surely if this comes to pass, even in part, there will be new problems to be solved, relating to our needs to know who really is who (or what), and when a seemingly popular post is really worth checking out.

Do we want a future in which our true likes (and everyone else’s) are more transparent and unconcealable? Or do we want to retain (for ourselves but also for others) the ability to dissemble? It seems plausible that we will see new tools developed to provide more transparency and assurance as to whether a like is attached to a real person or just a realistic bot. Different platforms might apply such tools to different degrees.

Text

Meta and Microsoft Unveil Llama 2: An Open-Source, Versatile AI Language Model

In a groundbreaking collaboration, Meta and Microsoft have unleashed Llama 2, a powerful large language AI model designed to revolutionise the AI landscape. This sophisticated language model is available for public use, free of charge, and boasts exceptional versatility. In a strategic move to enhance accessibility and foster innovation, Meta has shared the code for Llama 2, allowing researchers to explore novel approaches for refining large language models.

Llama 2 is no ordinary AI model. Its unparalleled versatility allows it to cater to diverse use cases, making it an ideal tool for established businesses, startups, lone operators, and researchers alike. Unlike fine-tuned models that are engineered for specific tasks, Llama 2’s adaptability enables developers to explore its vast potential in various applications.

Microsoft, as a key partner in this venture, will integrate Llama 2 into its cloud computing platform, Azure, and its renowned operating system, Windows. This strategic collaboration is a testament to Microsoft’s commitment to supporting open and frontier models, as well as their dedication to advancing AI technology. Notably, Llama 2 will also be available on other platforms, such as AWS and Hugging Face, providing developers with the freedom to choose the environment that suits their needs best.

During the Microsoft Inspire event, the company announced plans to embed Llama 2’s AI tools into its Microsoft 365 platform, further streamlining the integration process for developers. This move is set to open new possibilities for innovative AI solutions and elevate user experiences across various industries.

Meta’s collaboration with Qualcomm promises an exciting future for Llama 2. The companies are working together to bring Llama 2 to laptops, phones, and headsets, with plans for implementation starting next year. This expansion into new devices demonstrates Meta’s dedication to making Llama 2’s capabilities more accessible to users on-the-go.

Llama 2 was pretrained on publicly available online data sources. The fine-tuned model, Llama-2-chat, builds on that base by leveraging publicly available instruction datasets and over 1 million human annotations, which Meta used to hone the model’s understanding of and responsiveness to human language.

In a Facebook post, Mark Zuckerberg, the visionary behind Meta, highlighted the significance of open-source technology. He firmly believes that an open ecosystem fosters innovation by empowering a broader community of developers to build with new technology. With the release of Llama 2’s code, Meta is exemplifying this belief, creating opportunities for collective progress and inspiring the AI community.

The launch of Llama 2 marks a pivotal moment in the AI race, as Meta and Microsoft collaborate to offer a highly versatile and accessible AI language model. With its open-source approach and availability on multiple platforms, Llama 2 invites developers and researchers to explore its vast potential across various applications. As the ecosystem expands, driven by Meta’s vision for openness and collaboration, we can look forward to witnessing groundbreaking AI solutions that will shape the future of technology.

This post was originally published on: Apppl Combine

#Apppl Combine#Ad Agency#AI Model#AI Tools#Llama 2#facebook#Llama 2 Chat#META AI Model#Meta and Microsoft#Microsoft#Technology

Text

Best data extraction services in USA

In today's fiercely competitive business landscape, the strategic selection of a web data extraction services provider becomes crucial. Outsource Bigdata stands out by offering access to high-quality data through a meticulously crafted automated, AI-augmented process designed to extract valuable insights from websites. Our team ensures data precision and reliability, facilitating decision-making processes.

For more details, visit: https://outsourcebigdata.com/data-automation/web-scraping-services/web-data-extraction-services/.

About AIMLEAP

Outsource Bigdata is a division of AIMLEAP. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built a range of AI & ML solutions, AI-driven web scraping solutions, AI-Data Labeling, AI-Data-Hub, and self-serving BI solutions. Since starting in 2012, we have delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform

APISCRAPY is an AI-driven web scraping and automation platform that converts web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution

AI-Labeler is an AI-augmented data annotation platform that combines the power of artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to obtain high-quality data easily and efficiently and use it for training and developing AI models.

PRICESCRAPY: AI-enabled real-time pricing solution

An AI- and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access large volumes of data from various sources through APIs and integrate those APIs into their systems and applications.

Locations:

- USA: 1-30235 14656
- Canada: +1 4378 370 063
- India: +91 810 527 1615
- Australia: +61 402 576 615

Text

ChatGPT and Machine Learning: Advancements in Conversational AI

Introduction

In recent years, the field of natural language processing (NLP) has witnessed significant advancements with the development of powerful language models like ChatGPT. Powered by machine learning techniques, ChatGPT has revolutionized conversational AI by enabling human-like interactions with computers. This article explores the intersection of ChatGPT and machine learning, discussing their applications, benefits, challenges, and future prospects.

The Rise of ChatGPT

ChatGPT is an advanced language model developed by OpenAI that utilizes deep learning algorithms to generate human-like responses in conversational contexts. It is based on the underlying technology of GPT (Generative Pre-trained Transformer), a state-of-the-art model in NLP, which has been fine-tuned specifically for chat-based interactions.

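How ChatGPT Works

The GPT model underlying ChatGPT is first pretrained with self-supervised learning: it learns from vast amounts of text without human annotations. It is then fine-tuned for dialogue using human-written example conversations and reinforcement learning from human feedback (RLHF), steps that do depend on human annotators. The model uses a transformer architecture, which allows it to process long passages of text efficiently and in parallel.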

The model is trained using a massive dataset and learns to predict the next word or phrase given the preceding context.
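The next-word objective can be illustrated with a toy model. The sketch below is not how ChatGPT is implemented: it swaps the transformer for simple bigram counts so that the idea of "predict the next word given the preceding context" fits in a few lines. The function names and the tiny corpus are made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    return counts.most_common(1)[0][0] if counts else None

# Toy training corpus (hypothetical); real models train on far larger text.
corpus = "the cat sat on the mat . the cat ate . the dog sat on the rug ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "sat"))
```

A real language model conditions on the whole preceding context rather than a single word and outputs a probability for every token in its vocabulary, but the training signal is the same: the text itself supplies the correct next word.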

Applications of ChatGPT

Customer Support: ChatGPT can be deployed in customer service applications, providing instant and personalized assistance to users, answering frequently asked questions, and resolving common issues.

Virtual Assistants: ChatGPT can serve as intelligent virtual assistants, capable of understanding and responding to user queries, managing calendars, setting reminders, and performing various tasks.

Content Generation: ChatGPT can be used for generating content, such as blog posts, news articles, and creative writing, with minimal human intervention.

Language Translation: ChatGPT's language understanding capabilities make it useful for real-time language translation services, breaking down barriers and facilitating communication across different languages.

Benefits of ChatGPT

Enhanced User Experience: ChatGPT offers a more natural and interactive conversational experience, making interactions with machines feel more human-like.

Increased Efficiency: ChatGPT automates tasks that would otherwise require human intervention, resulting in improved efficiency and reduced response times.

Scalability: ChatGPT can handle multiple user interactions simultaneously, making it scalable for applications with high user volumes.

Challenges and Ethical Considerations

Bias and Fairness: ChatGPT's responses can sometimes reflect biases present in the training data, highlighting the importance of addressing bias and ensuring fairness in AI systems.

Misinformation and Manipulation: ChatGPT's ability to generate realistic text raises concerns about the potential spread of misinformation or malicious use. Ensuring the responsible deployment and monitoring of such models is crucial.

Future Directions

Fine-tuning and Customization: Continued research and development aim to improve the fine-tuning capabilities of ChatGPT, enabling users to customize the model for specific domains or applications.

Ethical Frameworks: Efforts are underway to establish ethical guidelines and frameworks for the responsible use of conversational AI models like ChatGPT, mitigating potential risks and ensuring accountability.

Conclusion

The emergence of ChatGPT and its integration into the field of machine learning have opened up new possibilities for human-computer interaction and natural language understanding. With its ability to generate coherent and contextually relevant responses, ChatGPT showcases the advancements made in language modeling and conversational AI.

We have explored the various aspects and applications of ChatGPT, including its training process, fine-tuning techniques, and its contextual understanding capabilities. Moreover, the concept of transfer learning has played a crucial role in leveraging the model's knowledge and adapting it to specific tasks and domains.

While ChatGPT has shown remarkable progress, it is important to acknowledge its limitations and potential biases. The continuous efforts by OpenAI to gather user feedback and refine the model reflect their commitment to improving its performance and addressing these concerns. User collaboration is key to shaping the future development of ChatGPT and ensuring it aligns with societal values and expectations.

The integration of ChatGPT into various applications and platforms demonstrates its potential to enhance collaboration, streamline information gathering, and assist users in a conversational manner. Developers can harness the power of ChatGPT by leveraging its capabilities through APIs, enabling seamless integration and expanding the reach of conversational AI.

Looking ahead, the field of machine learning and conversational AI holds immense promise. As ChatGPT and similar models continue to evolve, the focus should remain on user privacy, data security, and responsible AI practices. Collaboration between humans and machines will be crucial, as we strive to develop AI systems that augment human intelligence and provide valuable assistance while maintaining ethical standards.

With further advancements in training techniques, model architectures, and datasets, we can expect even more sophisticated and context-aware language models in the future. As the dialogue between humans and machines becomes more seamless and natural, the potential for innovation and improvement in various domains is vast.

In summary, ChatGPT represents a significant milestone in the field of machine learning, bringing us closer to human-like conversation and intelligent interactions. By harnessing its capabilities responsibly and striving for continuous improvement, we can leverage the power of ChatGPT to enhance user experiences, foster collaboration, and push the boundaries of what is possible in the realm of artificial intelligence.

Text

AI in Robotics: A Comprehensive Guide 2025

The application of AI within robotics is an evolution in itself and a paradigm shift in technology. With the adoption of the evolving technologies discussed above, the potential applications of AI robots are vast.

The path of AI in robotics is only beginning. As robotics and AI continue to innovate, with industries targeting everything from healthcare and defense to retail and energy, high-quality data becomes more critical. Behind every intelligent robot is a foundation of compliant, labeled data that enables machines to be deployed and become useful in real life. The 10 high-tech use cases we explored highlight how transformative these innovations can be when powered by precise, well-annotated datasets.

Cogito Tech delivers compliant, scalable, and professional data annotation services tailored for prime robotics AI applications.

Partner with us to accelerate your AI development. The future is now, and it’s Artificial Intelligence-powered.

Text

Empowering Safe Mobility Through Autonomous Driving Solutions

Autonomous driving leverages AI, sensor fusion, and annotated data to enable vehicles to navigate safely without human intervention. A global data services provider supports this innovation by delivering high-quality training data that enhances perception, decision-making, and control systems—accelerating the development of reliable, real-world autonomous transportation technologies.

Text

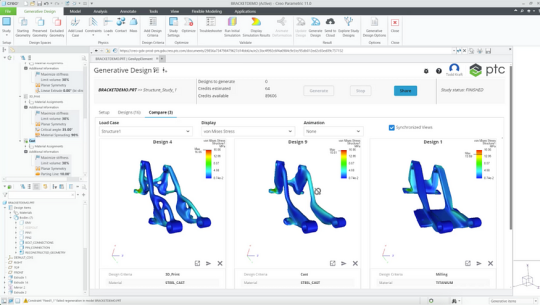

PTC Creo 12: New Tools for Smarter, Faster 3D Design

Discover what’s new in PTC Creo 12 — enhanced simulation, AI-driven design, MBD, and manufacturing tools. Upgrade now with ANH, the trusted PTC Creo reseller in the Delhi NCR region.

Top New Features in Creo 12

1. AI-Driven Design Guidance

Creo 12 takes intelligent design to the next level with built-in AI tools that offer real-time suggestions and improvements.

Get feedback during modeling

Reduce trial-and-error

Improve efficiency with every click

2. Enhanced Model-Based Definition (MBD)

Creo 12 makes MBD more practical and powerful, eliminating the need for traditional 2D drawings.

Improved PMI (Product Manufacturing Information)

Better GD&T annotation support

Clearer data for downstream users

3. Performance & Usability Upgrades

PTC has improved the overall speed, responsiveness, and ease of use:

Faster model regeneration

Smart mini toolbars

Enhanced model tree filters

Customizable dashboards for quick access

4. Creo Simulation Live — More Powerful Than Ever

Simulation Live has been expanded to cover:

Structural & thermal simulations

Better support for nonlinear materials

Real-time design feedback

5. Advanced Multi-Body Design

Creo 12 makes it easier to work with complex parts and assemblies:

Better control over multiple bodies in a single part

New body operations and organization tools

Improved part-to-part interactions

It’s ideal for high-detail engineering and intricate product development.

6. Additive and Subtractive Manufacturing Enhancements

Manufacturing has never been smoother in Creo:

More control over lattice structures

Enhanced 5-axis CAM functionality

New machine support and output formats

Whether you’re 3D printing or using CNC, Creo 12 has you covered.

7. Improved ECAD–MCAD Collaboration

With electronics becoming a bigger part of mechanical products, Creo 12 improves:

PCB visualization

Layer management

Synchronization between electrical and mechanical teams

This helps avoid costly errors during development.

Why Creo 12 is a Must-Have Upgrade

PTC Creo 12 isn’t just for big enterprises — it’s built for everyone who values smart, high-quality design. Whether you’re a design engineer, manager, or product innovator, Creo 12:

Saves time

Reduces errors

Encourages innovation

Supports Industry 4.0 goals

Get Creo 12 from Delhi NCR’s Trusted PTC Reseller — ANH

Looking to upgrade your CAD tools or switch to Creo 12? ANH is a leading authorized PTC reseller in the Delhi NCR region. From licensing to training, we help businesses unlock the full potential of Creo.

Contact ANH today to get started with Creo 12!

✅ Conclusion: Welcome to the Future of Design

PTC Creo 12 is more than just an upgrade — it’s a gateway to smarter design, quicker development, and stronger innovation. With new AI tools, advanced simulations, and seamless usability, it’s built to give your team a competitive edge.

Design faster. Design smarter with Creo 12.

Text

Unlock Process Intelligence with AI ML Enablement Services from EnFuse Solutions – Contact Now!

Accelerate your AI journey with EnFuse Solutions’ data-driven AI ML enablement services. From precise data labeling to accurate annotation and training data preparation, their expert approach ensures minimal bias and faster, more reliable model development. Visit this link to explore EnFuse Solutions’ AI ML enablement services: https://www.enfuse-solutions.com/services/ai-ml-enablement/

#AIMLEnablement#AIMLEnablementServices#AIMLEnablementSolutions#AIEnablement#MLEnablement#AIOptimization#MLOptimization#AIEnhancedSolutions#MLEnhancedSolutions#AIMLEnablementIndia#AIMLEnablementServicesIndia#AIEnhancedDevelopment#MLEnhancedDevelopment#EnFuseAIMLEnablement#EnFuseSolutions#EnFuseSolutionsIndia

Text

Unlocking Insights: How Transcription Services Elevate Market Research

Understanding what consumers think, want, and need is at the heart of every successful business strategy. In today’s fast-paced and data-driven world, transcription services for market research have become essential for capturing, organizing, and analyzing the voices that matter most: those of your customers.

Whether you’re conducting focus groups, in-depth interviews, or observational studies, having accurate and timely transcriptions can make or break your research quality. From clear documentation to easier analysis, transcription services are a quiet but powerful force in modern market research.

The Power of the Spoken Word

Market research relies heavily on conversations—authentic, unscripted dialogue that reveals what people truly feel about a product, brand, or service. These conversations are often long and rich in nuance. Recording them is one thing, but turning them into usable text is where the real value begins.

Audio recordings can be time-consuming to review, especially when you’re on a deadline. Transcriptions provide a searchable and scannable format that enables researchers to quickly identify key trends, quotes, and emotional responses. More than convenience, it ensures accuracy when presenting findings to stakeholders.

Why Transcription Services Matter

Transcription services for market research are tailored to handle complex, often jargon-heavy content. They are designed to deliver clean, professional transcripts that are easy to read and analyze. This is especially helpful in group settings where multiple people may be speaking at once.

Professional transcriptionists are trained to differentiate between speakers, maintain context, and preserve essential pauses or reactions. These details matter. A slight hesitation, a laugh, or even a long pause can add layers to your findings.

Also, in both business and academic transcription, data integrity is key. Market researchers often rely on high volumes of qualitative input to create actionable strategies. A professional transcription ensures the raw data is preserved faithfully so nothing is lost in translation.

Making Better Use of Data

Once transcribed, your academic research interviews or market research sessions become much easier to code, tag, and reference. Analysts can annotate and highlight themes directly within the document. Teams can collaborate without listening through hours of recordings. With digital tools and AI-assisted analysis, transcriptions speed up the entire process and make insights more accessible.

They also make reporting smoother. Pulling direct quotes from transcripts adds credibility to your presentations and helps decision-makers connect with authentic customer voices. It also helps ensure that accurate references back up your interpretations.

Who Benefits the Most?

Marketing teams, research agencies, product developers, and customer experience professionals all benefit from transcription services. But it’s not just businesses. In education and academia, transcription services support case studies, thesis interviews, and research publications.

For market research, especially, having clean, timely transcriptions helps companies stay agile and responsive in competitive markets. It saves time and allows researchers to focus more on strategy than note-taking.

The Bottom Line

Accurate and accessible transcripts aren’t just a convenience; they’re a strategic advantage. Transcription services for market research serve as the bridge between raw data and informed decision-making. They help bring consumer voices into the boardroom in a way that’s clear, compelling, and impactful.

So, the next time you’re preparing to gather valuable customer insight, don’t underestimate the importance of transcription. It may just be the most essential tool in your market research toolkit.

Text

When AI Meets Medicine: Periodontal Diagnosis Through Deep Learning by Para Projects

In the ever-evolving landscape of modern healthcare, artificial intelligence (AI) is no longer a futuristic concept—it is a transformative force revolutionizing diagnostics, treatment, and patient care. One of the latest breakthroughs in this domain is the application of deep learning to periodontal disease diagnosis, a condition that affects millions globally and often goes undetected until it progresses to severe stages.

In a pioneering step toward bridging technology with dental healthcare, Para Projects, a leading engineering project development center in India, has developed a deep learning-based periodontal diagnosis system. This initiative is not only changing the way students approach AI in biomedical domains but also contributing significantly to the future of intelligent, accessible oral healthcare.

Understanding Periodontal Disease: A Silent Threat

Periodontal disease, commonly known as gum disease, refers to infections and inflammation of the gums and bone that surround and support the teeth. It typically begins as gingivitis (gum inflammation) and, if left untreated, can progress to periodontitis, which can cause tooth loss and affect overall systemic health.

The problem? Periodontal disease is often asymptomatic in its early stages. Diagnosis usually requires a combination of clinical examinations, radiographic analysis, and manual probing—procedures that are time-consuming and prone to human error. Additionally, access to professional diagnosis is limited in rural and under-resourced regions.

This is where AI steps in, offering the potential for automated, consistent, and accurate detection of periodontal disease through the analysis of dental radiographs and clinical data.

The Role of Deep Learning in Medical Diagnostics

Deep learning, a subset of machine learning, uses multi-layer artificial neural networks, loosely inspired by the human brain, to analyze complex data patterns. In the context of medical diagnostics, it has proven particularly effective in image recognition, classification, and anomaly detection.

When applied to dental radiographs, deep learning models can be trained to:

Identify alveolar bone loss

Flag radiographic signs associated with tooth mobility or deep periodontal pockets

Differentiate between healthy and diseased tissue

Classify disease severity levels

This not only accelerates the diagnostic process but also ensures objective and reproducible results, enabling better clinical decision-making.

Para Projects: Where Innovation Meets Education

Recognizing the untapped potential of AI in dental diagnostics, Para Projects has designed and developed a final-year engineering project titled “Deep Periodontal Diagnosis: A Hybrid Learning Approach for Accurate Periodontitis Detection.” This project serves as a perfect confluence of healthcare relevance and cutting-edge technology.

With a student-friendly yet professionally guided approach, Para Projects transforms a complex AI application into a doable and meaningful academic endeavor. The project has been carefully designed to offer:

Real-world application potential

Exposure to biomedical datasets and preprocessing

Use of deep learning frameworks like TensorFlow and Keras

Comprehensive support from coding to documentation

Inside the Project: How It Works

The periodontal diagnosis project by Para Projects is structured to simulate a real diagnostic system. Here’s how it typically functions:

Data Acquisition and Preprocessing

Students are provided with a dataset of dental radiographs (e.g., panoramic X-rays or periapical films). Using tools like OpenCV, they learn to clean and enhance the images by:

Normalizing pixel intensity

Removing noise and irrelevant areas

Annotating images using bounding boxes or segmentation maps
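The first two preprocessing steps above can be sketched in a few lines. This is a minimal illustration only, written in plain Python rather than OpenCV so it runs without extra dependencies; the function names `normalize` and `median_filter` and the tiny 4x4 patch are hypothetical and are not taken from the Para Projects code.

```python
from statistics import median


def normalize(image):
    """Min-max scale pixel values into the range [0.0, 1.0]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]


def median_filter(image):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                image[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            row.append(median(window))
        out.append(row)
    return out


# Hypothetical grayscale patch with one bright noise pixel (255).
patch = [
    [10, 12, 11, 13],
    [11, 255, 12, 12],
    [12, 11, 13, 11],
    [10, 12, 11, 12],
]

denoised = median_filter(patch)  # the isolated spike is replaced by a local median
scaled = normalize(denoised)     # intensities rescaled to [0, 1]
```

In a real pipeline the same two operations would more likely be done with an image library on full-size radiographs; the point here is only the order of operations, denoise first and then rescale, so that a single outlier pixel does not dominate the intensity range.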

Feature Extraction

Using convolutional neural networks (CNNs), the system is trained to detect and extract features such as:

Bone-level irregularities

Shape and texture of periodontal ligaments

Visual signs of inflammation or damage

Classification and Diagnosis

The extracted features are passed through layers of a deep learning model, which classifies the images into categories like:

Healthy

Mild periodontitis

Moderate periodontitis

Severe periodontitis
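To make the classification step concrete, here is a small sketch of how raw model scores could be turned into one of the four categories above. It assumes the network ends in a softmax layer, which the post does not state; the `classify` helper and the example scores are hypothetical.

```python
import math

LABELS = [
    "Healthy",
    "Mild periodontitis",
    "Moderate periodontitis",
    "Severe periodontitis",
]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the most probable label and the full probability list."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs


# Hypothetical raw scores for one radiograph, one score per category.
label, probs = classify([0.2, 1.1, 2.7, 0.4])
```

The probability list is the kind of score the reporting step can show alongside the predicted label.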

Visualization and Reporting

The system outputs visual heatmaps and probability scores, offering a user-friendly interpretation of the diagnosis. These outputs can be further converted into PDF reports, making them suitable for both academic submission and potential real-world usage.

Academic Value Meets Practical Impact

For final-year engineering students, working on such a project presents a dual benefit:

Technical Mastery: Students gain hands-on experience with real AI tools, including neural network modeling, dataset handling, and performance evaluation using metrics like accuracy, precision, and recall.

Social Relevance: The project addresses a critical healthcare gap, equipping students with the tools to contribute meaningfully to society.
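The evaluation metrics mentioned above (accuracy, precision, and recall) are simple to compute by hand, which helps when checking what a framework reports. The sketch below uses made-up labels for six radiographs; the function names and data are illustrative assumptions, not project results.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive):
    """Precision and recall for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up ground truth and predictions for six images.
y_true = ["healthy", "mild", "severe", "severe", "moderate", "healthy"]
y_pred = ["healthy", "severe", "severe", "moderate", "moderate", "healthy"]

acc = accuracy(y_true, y_pred)
severe_precision, severe_recall = precision_recall(y_true, y_pred, "severe")
```

For a screening tool, recall on the severe class usually matters most, since a missed severe case is costlier than a false alarm.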

With expert mentoring from Para Projects, students don’t just build a project—they develop a solution that has real diagnostic value.

Why Choose Para Projects for AI-Medical Applications?

Para Projects has earned its reputation as a top-tier academic project center by focusing on three pillars: innovation, accessibility, and support. Here’s why students across India trust Para Projects:

🔬 Expert-Led Guidance: Each project is developed under the supervision of experienced AI and domain experts.

📚 Complete Project Kits: From code to presentation slides, students receive everything needed for successful academic evaluation.

💻 Hands-On Learning: Real datasets, practical implementation, and coding tutorials make learning immersive.

💬 Post-Delivery Support: Para Projects ensures students are prepared for viva questions and reviews.

💡 Customization: Projects can be tailored based on student skill levels, interest, or institutional requirements.

Whether it’s a B.E., B.Tech, M.Tech, or interdisciplinary program, Para Projects offers robust solutions that connect education with industry relevance.

From Classroom to Clinic: A Future-Oriented Vision

Healthcare is increasingly leaning on predictive technologies for better outcomes. In this context, AI-driven dental diagnostics can transform public health, especially in regions with limited access to dental professionals. What began as a classroom project at Para Projects can, with further development, evolve into a clinical tool, contributing to preventive healthcare systems across the world.

Students who engage with such projects don’t just gain knowledge—they step into the future of AI-powered medicine, potentially inspiring careers in biomedical engineering, health tech entrepreneurship, or AI research.

Conclusion: Diagnosing with Intelligence, Healing with Innovation

The fusion of AI and medicine is not just a technological shift; it is a philosophical transformation in how we understand and address disease. By enabling early, accurate, and automated diagnosis of periodontal disease, deep learning is playing a vital role in improving oral healthcare outcomes.

With its visionary project on periodontal diagnosis through deep learning, Para Projects is not only helping students fulfill academic goals—it’s nurturing the next generation of tech-enabled healthcare changemakers.

Are you ready to engineer solutions that impact lives? Explore this and many more cutting-edge medical and AI-based projects at https://paraprojects.in. Let Para Projects be your partner in building technology that heals.

0 notes

Text

Release

FOR IMMEDIATE RELEASE

MISSIONVISION.BIZ LLP Officially Incorporated to Drive Innovation in Cloud, AI, Data, and Genomics Solutions

New Delhi, India – May 29, 2025 – MISSIONVISION.BIZ LLP, a forward-thinking technology firm specializing in cloud platforms, AI-driven analytics, and data infrastructure, proudly announces its official incorporation as a Limited Liability Partnership on May 29, 2025.

Founded with the vision of delivering scalable, intelligent, and future-ready solutions, MISSIONVISION.BIZ LLP is committed to helping organizations accelerate digital transformation. The company delivers robust services across SaaS, PaaS, IaaS, and deep learning technologies, while also expanding into cutting-edge genomics and bioinformatics research support.

“Formalizing our business structure is a significant step toward our long-term mission,” said Rajat Patyal, Managing Partner of MISSIONVISION.BIZ LLP. “Our incorporation enables us to scale both technologically and geographically, bringing advanced capabilities in AI, data science, and genomics to the forefront.”

In addition to its digital innovation portfolio, MISSIONVISION.BIZ LLP now offers specialized solutions in genomics data processing, including:

RNA-seq (RNA sequencing) analysis

ChIP-seq (Chromatin Immunoprecipitation sequencing)

Variant calling, genome alignment, and annotation

Bioinformatics pipelines for large-scale biomedical datasets

Key Focus Areas:

Cloud and DevOps Automation

Predictive Analytics & Machine Learning

Scalable API and SaaS Product Development

Data Migration & Infrastructure Modernization

Genomics & Bioinformatics Data Services

MISSIONVISION.BIZ LLP operates with a lean, expert-driven team based in New Delhi, India, and collaborates globally through its freelancer and partner network. The company is also building strategic partnerships across APAC with leaders in cloud, data, and scientific computing domains.

For media inquiries, partnership opportunities, or more information, please contact:

Rajat Patyal
Managing Partner, MISSIONVISION.BIZ LLP
Email: [email protected]
Website: www.missionvision.biz

0 notes

Text

Exploring the World of Speech Data Collection Jobs: Opportunities with GTS Dash

Introduction:

In the rapidly evolving landscape of artificial intelligence (AI) and machine learning (ML), speech data collection has emerged as a pivotal component. This field not only fuels advancements in voice recognition technologies but also offers a plethora of opportunities for freelancers worldwide. Among the platforms leading this revolution is GTS Dash, a hub for freelance speech data collection.

What is Speech Data Collection?

Speech data collection involves gathering and annotating voice recordings to train AI models in understanding and processing human language. This data is crucial for developing applications like virtual assistants, transcription services, and language translation tools. The process requires diverse voice samples to ensure AI systems can comprehend various accents, dialects, and speech patterns.

Why Choose GTS Dash for Speech Data Collection?

GTS Dash offers a dynamic platform for freelancers to contribute to AI development through speech data collection. Here's why it's an excellent choice:

Diverse Project Opportunities: GTS Dash provides a range of projects, including voice recording, audio transcription, quality review, and linguistic analysis.

Global Community: Join a network of over 240,000 taskers from more than 90 countries, fostering a collaborative and inclusive environment.

Flexible Work Environment: Work from anywhere, anytime, allowing you to balance your professional and personal life effectively.

Regular Payments: Receive weekly payments through secure platforms like PayPal and Payoneer, ensuring timely compensation for your efforts.

Skill Development: Enhance your skills through various projects and training resources available on the platform.

Getting Started with GTS Dash

Embarking on your journey with GTS Dash is straightforward:

Sign Up: Register on the GTS Dash website to create your profile.

Explore Projects: Browse through available speech data collection projects that match your skills and interests.

Complete Tasks: Engage in tasks such as voice recording or transcription, following the provided guidelines.

Submit Work: Upload your completed tasks through the secure platform for review.

Receive Payment: Upon approval, receive your earnings through your chosen payment method.

The Growing Demand for Speech Data Collection

The demand for speech data collection is surging as AI applications become more integrated into daily life. Companies are seeking diverse voice data to improve the accuracy and inclusivity of their AI systems. This trend opens up numerous opportunities for freelancers to contribute to meaningful projects while earning income.

Conclusion

Speech data collection is a vital aspect of AI development, offering freelancers a chance to be part of groundbreaking technological advancements. Platforms like GTS Dash provide the tools, community, and support needed to thrive in this field. Whether you're a linguist, a tech enthusiast, or someone looking to explore new opportunities, GTS Dash welcomes you to join its global network.

For more information and to get started, visit the GTS Dash Speech Data Collection page.

0 notes

Text

Digital Pathology Market Set to Surge to US$ 4.2 Bn by 2035: Key Insights and Projections

The global digital pathology market is witnessing a paradigm shift, transforming how pathologists diagnose and collaborate across distances. This transition from conventional microscopy to digital platforms is not only enhancing diagnostic accuracy and workflow efficiency but is also paving the way for AI integration in modern pathology. Valued at US$ 1.1 Bn in 2024, the market is projected to surge to over US$ 4.2 Bn by 2035, expanding at a CAGR of 12.4% from 2025 to 2035.
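For readers who want to sanity-check growth figures like these, the compound annual growth rate is easy to reproduce. The sketch below is illustrative only: it plugs in the rounded values quoted above, and it assumes an 11-year span from the 2024 base year to 2035, which the report does not spell out. The function names are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Rounded figures from the paragraph above, in US$ billions.
implied_rate = cagr(1.1, 4.2, 11)          # growth rate implied by the two endpoints
value_at_quoted = project(1.1, 0.124, 11)  # endpoint implied by the quoted 12.4% CAGR

print(f"Implied CAGR from rounded endpoints: {implied_rate:.1%}")
print(f"2035 value at 12.4% per year: US$ {value_at_quoted:.2f} Bn")
```

With rounded inputs, the result lands close to the quoted 12.4% but not exactly on it. A gap like this typically comes from rounding in the published base and forecast values or from a different choice of base year.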

Introduction: The Digital Evolution in Pathology

Digital pathology, the practice of digitizing glass slides using whole-slide imaging and storing them for analysis and collaboration, is a crucial advancement in modern healthcare. Traditional pathology involved microscopic analysis of tissue samples, a time-intensive and geographically restricted process. With digital tools, pathologists can now view, annotate, and share high-resolution images from virtually any location, leading to quicker, more consistent diagnoses.

This transformation is being driven by several interconnected trends: increasing prevalence of chronic diseases, the rising demand for precision diagnostics, and technological advancements in AI and machine learning. Additionally, the global focus on personalized medicine and remote healthcare delivery models is further propelling adoption. As healthcare systems worldwide strive to improve diagnostic outcomes while optimizing operational efficiency, digital pathology emerges as a key enabler of next-generation pathology workflows.

Market Drivers: Fueling the Growth of Digital Pathology

Enhancing Lab Efficiency through Digital Adoption

One of the most significant drivers of the digital pathology market is the technology's potential to improve laboratory efficiency. By digitizing tissue slides, laboratories can reduce reliance on physical storage, streamline workflows, and cut down turnaround times. Digital pathology enables easier archiving, faster retrieval, and automated analysis—enhancing both accuracy and productivity.

During the COVID-19 pandemic, the urgent need for remote diagnostics accelerated the adoption of digital and telepathology solutions. Laboratories that implemented digital workflows experienced fewer disruptions, ensuring continuity of care. These benefits have made digital pathology not just a convenience but a strategic necessity for modern healthcare systems.

Accelerating Drug Discovery and Research Applications

In research and drug development, digital pathology offers high-throughput analysis and deep insights into tissue morphology. Researchers can examine multiple samples in parallel, annotate complex data, and integrate molecular diagnostics seamlessly. AI-powered image analysis further boosts accuracy and scalability.

The technology also supports longitudinal studies by enabling consistent data archiving and retrieval, vital for tracking disease progression and evaluating therapeutic responses. As pharmaceutical companies intensify their search for novel targets and biomarkers, digital pathology stands at the forefront of research innovation.

Product Insights: Devices Dominate Digital Pathology Offerings

Among the various product categories, devices—including whole-slide imaging systems, scanners, and visualization equipment—account for the largest market share. These devices play a central role in converting physical samples into digital images with ultra-high resolution.

The demand for devices is being driven by the increasing need for precision diagnostics, especially in oncology and chronic disease management. Modern scanners now offer automated slide loading, faster processing speeds, and integration with cloud platforms. These improvements reduce time-to-diagnosis and enhance collaboration among specialists, particularly in multidisciplinary teams. As imaging technologies become more affordable and scalable, their adoption is expected to grow across both developed and emerging healthcare systems.

Application Analysis: A Multi-disciplinary Utility

Digital pathology has broad applicability across clinical, academic, and research domains. It is increasingly used in:

Drug Discovery & Development: Supporting target identification, biomarker validation, and toxicity studies.

Academic Research: Enabling scalable image analysis and remote collaboration in histological studies.

Disease Diagnosis: Particularly in oncology, where precise cellular imaging is critical to patient care.

Other Applications: Including forensic pathology, veterinary diagnostics, and regulatory toxicology.

With AI tools enhancing image analysis, digital pathology is poised to redefine disease detection and monitoring by providing highly granular tissue-level insights.

End-user Analysis: Who's Using Digital Pathology?

The major end-users of digital pathology solutions include:

Hospitals: Leveraging digitized workflows for faster diagnosis and better clinical outcomes.

Biotech & Pharma Companies: Employing image analytics in preclinical and clinical research.

Diagnostic Laboratories: Seeking to scale operations and enable remote consultations.

Academic & Research Institutes: Utilizing digital platforms for education and advanced research.

Hospitals and large diagnostic chains are expected to maintain dominance due to the volume of cases processed, but adoption is rising across all segments, particularly with the expansion of telepathology in rural and underserved areas.

Regional Outlook: North America Leads the Way

North America commands the largest share of the global digital pathology market, thanks to a mature healthcare ecosystem and early technological adoption. The U.S., in particular, benefits from favorable regulations, substantial investments in health IT, and a strong network of hospitals and research institutions.

Furthermore, collaborations between tech giants and healthcare providers are fostering the development of AI-driven pathology tools. The region is also witnessing rapid growth in digital health startups, creating a fertile ground for innovation and scalability.

Europe follows closely, with countries like Germany and the UK leading in digital imaging integration. Asia Pacific is emerging as a high-growth region, with investments in healthcare infrastructure and digitization in countries such as China, India, and Japan.

Competitive Landscape: Key Players and Innovations

The digital pathology market is competitive and innovation-driven, with key players continuously enhancing their offerings through partnerships, acquisitions, and product development. Leading companies include:

Leica Biosystems

Koninklijke Philips N.V.

F. Hoffmann-La Roche Ltd.

EVIDENT

Morphle Labs, Inc.

Hamamatsu Photonics

Fujifilm Holdings

PathAI

OptraSCAN

Sectra AB

Siemens Healthcare

3DHISTECH Ltd.

Recent developments include:

Charles River Laboratories and Deciphex (Feb 2025): A collaboration to integrate AI-powered digital pathology in toxicologic pathology.

Sectra and Region Västra Götaland (Feb 2025): Expansion of a 20-year partnership for integrated digital pathology and radiology systems aimed at enhancing cancer diagnostics.

These strategic initiatives underscore the importance of integrated solutions that combine imaging, AI, and cloud capabilities for scalable diagnostics.

Conclusion: A Digital Future for Pathology

The digital pathology market is on a fast trajectory, underpinned by technological innovations, a growing need for diagnostic accuracy, and a systemic push toward healthcare digitization. As AI becomes more integral and cloud infrastructures mature, digital pathology will become the norm in modern laboratories and healthcare institutions.

From academic research and drug development to routine diagnostics and personalized medicine, digital pathology holds the promise of improving patient outcomes while optimizing operational efficiency. Stakeholders across the healthcare value chain must invest in scalable, secure, and interoperable solutions to fully harness the potential of this transformative technology.

0 notes