#Database Seeding From JSON File


CodeIgniter 4 How To Read Parse JSON File Tutorial

JSON (JavaScript Object Notation) files stand as a prevalent format for storing structured data, often used for configuration settings, API responses, or data interchange. In CodeIgniter 4, parsing JSON files enables developers to effortlessly handle and extract structured data within their applications.


CodeIgniter 4 Database Seeding From JSON File Tutorial

In this article we look at database seeding in CodeIgniter 4 using a JSON file. Seeding from a JSON file is a technique for loading test data into tables in bulk.

This tutorial is easy to follow, and its steps are straightforward to implement in your own code. Database seeding is the process of feeding test data into tables. We can insert data using the Faker library, manual entries, or other data sources such as CSV or JSON files.


Build a dynamic JAMstack app with GatsbyJS and FaunaDB

In this article, we explain the difference between single-page apps (SPAs) and static sites, and how we can bring the advantages of both worlds together in a dynamic JAMstack app using GatsbyJS and FaunaDB. We will build an application that pulls in some data from FaunaDB during build time, prerenders the HTML for speedy delivery to the client, and then loads additional data at run time as the user interacts with the page. This combination of technologies gives us the best attributes of statically-generated sites and SPAs.

In short…<deep breath>...auto-scaling distributed websites with low latency, snappy user interfaces, no reloads, and dynamic data for everyone!

Heavy backends, single-page apps, static sites

In the old days, when JavaScript was new, it was mainly used to provide effects and improved interactions. Some animations here, a drop-down there, and that was it. The grunt work was performed on the backend by Perl, Java, or PHP.

This changed as time went on: client code became heavier, and JavaScript took over more and more of the frontend until we finally shipped mostly empty HTML and rendered the whole UI in the browser, leaving the backend to supply us with JSON data.

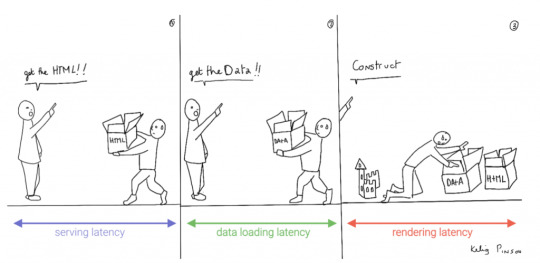

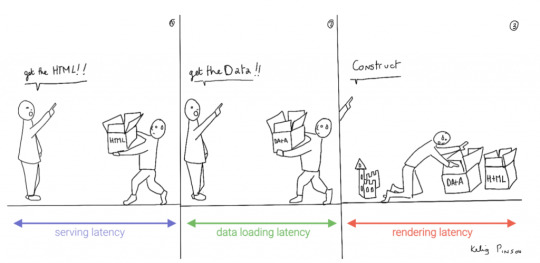

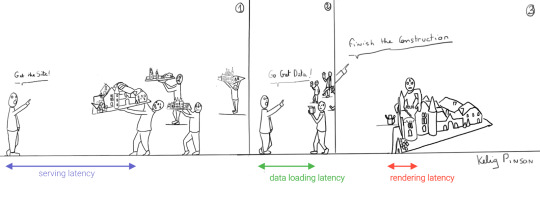

This led to a neat separation of concerns and allowed us to build whole applications with JavaScript, called Single Page Applications (SPAs). The most important advantage of SPAs was the absence of reloads. You could click on a link to change what's displayed, without triggering a complete reload of the page. This in itself provided a superior user experience. However, SPAs increased the size of the client code significantly; a client now had to wait for the sum of several latencies:

Serving latency: retrieving the HTML and JavaScript from the server where the JavaScript was bigger than it used to be

Data loading latency: loading additional data requested by the client

Frontend framework rendering latency: once the data is received, a frontend framework like React, Vue, or Angular still has to do a lot of work to construct the final HTML

A royal metaphor

We can liken loading an SPA to building and delivering a toy castle. The client needs to retrieve the HTML and JavaScript, then retrieve the data, and then still has to assemble the page. The building blocks are delivered, but they still need to be put together after they arrive.

If only there were a way to build the castle beforehand...

Enter the JAMstack

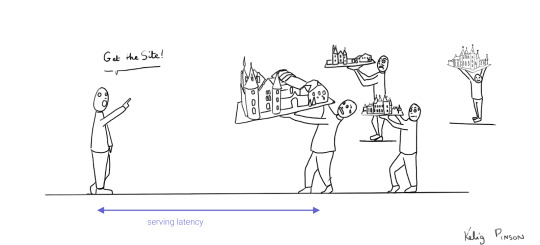

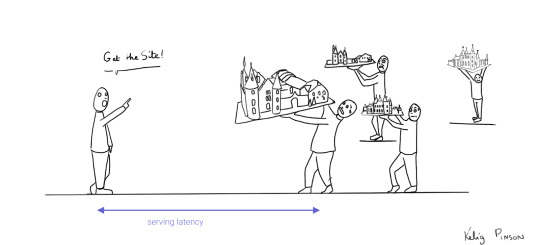

JAMstack applications consist of JavaScript, APIs and Markup. With today's static site generators like Next.js and GatsbyJS, the JavaScript and Markup parts can be bundled up into a static package and deployed via a Content Delivery Network (CDN) that delivers files to a browser. A CDN geographically distributes the bundles, and other assets, to multiple locations. When a user’s browser fetches the bundle and assets, it can receive them from the closest location on the network, which reduces the serving latency.

Continuing our toy castle analogy, JAMstack apps are different from SPAs in the sense that the page (or castle) is delivered pre-assembled. We have a lower latency since we receive the castle in one piece and no longer have to build it.

Making static JAMstack apps dynamic with hydration

In the JAMstack approach, we start with a dynamic application and prerender static HTML pages to be delivered via a speedy CDN. But what if a fully static site is not sufficient and we need to support some dynamic content as the user interacts with individual components, without reloading the entire page? That's where client-side hydration comes in.

Hydration is the client-side process by which the server-side rendered HTML (DOM) is "watered" by our frontend framework with event handlers and/or dynamic components to make it more interactive. This can be tricky because it depends on reconciling the original DOM with a new virtual DOM (VDOM) that's kept in memory as the user interacts with the page. If the DOM and VDOM trees do not match, bugs can arise that cause elements to be displayed out of order, or necessitate rebuilding the page.
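To make the mismatch problem concrete, here is a minimal sketch of comparing a prerendered tree against the tree the client would render. The node shape (`tag`, `text`, `children`) and the `findMismatch` helper are assumptions made for illustration; real frameworks such as React use far more involved reconciliation logic.

```javascript
// Simplified node shape (an assumption for this sketch): { tag, text, children }
function findMismatch(serverNode, clientNode, path = "root") {
  // A node present on only one side is a mismatch at this position.
  if (!serverNode || !clientNode) {
    return serverNode || clientNode ? path : null;
  }
  if (serverNode.tag !== clientNode.tag || serverNode.text !== clientNode.text) {
    return path;
  }
  const serverChildren = serverNode.children || [];
  const clientChildren = clientNode.children || [];
  const count = Math.max(serverChildren.length, clientChildren.length);
  for (let i = 0; i < count; i++) {
    const found = findMismatch(serverChildren[i], clientChildren[i], `${path} > ${i}`);
    if (found) return found;
  }
  return null; // the two trees match
}

const prerendered = { tag: "ul", children: [{ tag: "li", text: "Product A" }] };
const clientRender = { tag: "ul", children: [{ tag: "li", text: "Product B" }] };

console.log(findMismatch(prerendered, prerendered)); // null
console.log(findMismatch(prerendered, clientRender)); // root > 0
```

The helper returns the path of the first differing node, which is the kind of position where a hydration warning would typically point.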

Luckily, libraries like GatsbyJS and Next.js have been designed to minimize the possibility of such hydration-related bugs, handling everything for you out of the box with only a few lines of code. The result is a dynamic JAMstack web application that is both faster and more dynamic than the equivalent SPA.

One technical detail remains: where will the dynamic data come from?

Distributed frontend-friendly databases!

JAMstack apps typically rely on APIs (ergo the "A" in JAM), but if we need to load any kind of custom data, we need a database. And traditional databases are still a performance bottleneck for globally distributed sites that are otherwise delivered via CDN, because traditional databases are only located in one region. Instead of using a traditional database, we’d like our database to be on a distributed network, just like the CDN, that serves the data from a location as close as possible to wherever our clients are. This type of database is called a distributed database.

In this example, we’ll choose FaunaDB since it is also strongly consistent, which means that our data will be the same wherever our clients access it from and data won’t be lost. Other features that work particularly well with JAMstack applications are that (a) the database is accessed as an API (GraphQL or FQL) and does not require you to open a connection, and (b) the database has a security layer that makes it possible to access both public and private data in a secure way from the frontend. This means we can keep the low latencies of JAMstack without having to scale a backend, all with zero configuration.

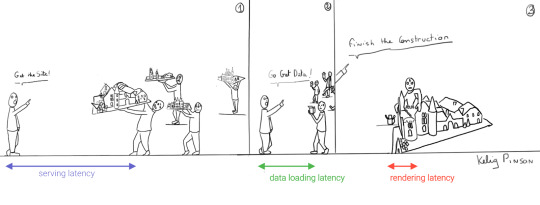

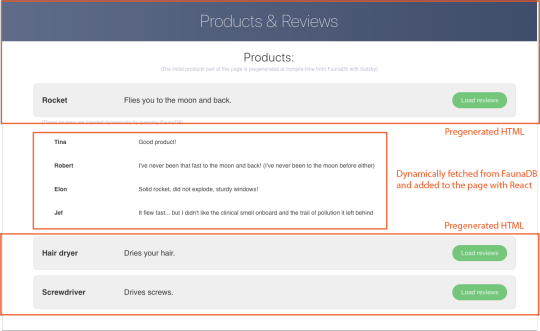

Let's compare the process of loading a hydrated static site with the building of the toy castle. We still have lower latencies thanks to the CDN, but also less data since most of the site is statically generated and therefore requires less rendering. Only a small part of the castle (or, the dynamic part of the page) needs to be assembled after it has been delivered:

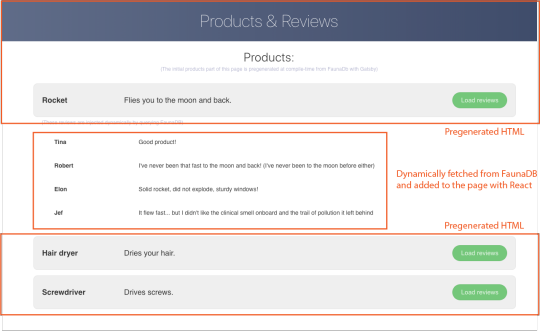

Example app with GatsbyJS & FaunaDB

Let’s build an example application that loads data from FaunaDB at build time and renders it to static HTML, then loads additional dynamic data inside the client browser at run time. For this example, we use GatsbyJS, a JAMstack framework based on React that prerenders static HTML. Since we use GatsbyJS, we can code our website completely in React, generate and deliver the static pages, and then load additional data dynamically at run time. We’ll use FaunaDB as our fully managed serverless database solution. We will build an application where we can list products and reviews.

Let’s look at an outline of what we have to do to get our example app up and running and then go through every step in detail.

1. Set up a new database
2. Add a GraphQL schema to the database
3. Seed the database with mock-up data
4. Create a new GatsbyJS project
5. Install npm packages
6. Create the server key for the database
7. Update GatsbyJS config files with server key and new read-only key
8. Load the pre-rendered product data at build time
9. Load the reviews at run time

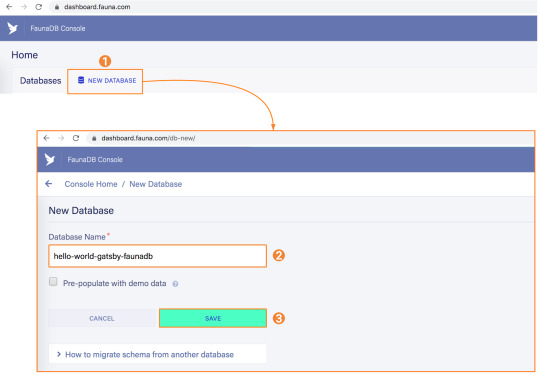

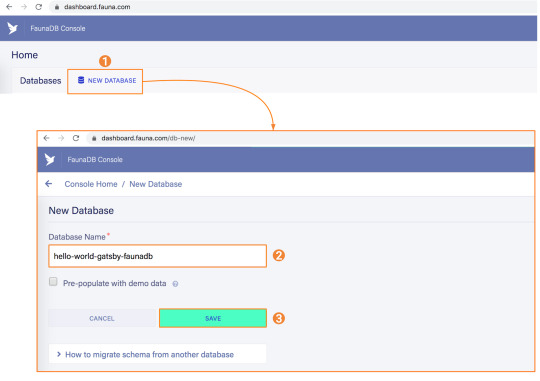

1. Set up a new database

Before you start, create an account on dashboard.fauna.com. Once you have an account, let’s set up a new database. It should hold products and their reviews, so we can load the products at build-time and the reviews in the browser.

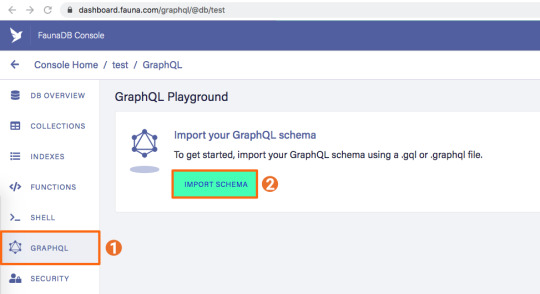

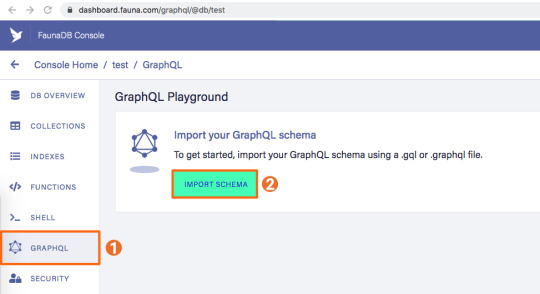

2. Add a GraphQL schema to the database

Next, we use the server key to upload a GraphQL schema to our database. For this, we create a new file called schema.gql that has the following content:

    type Product {
      title: String!
      description: String
      reviews: [Review] @relation
    }

    type Review {
      username: String!
      text: String!
      product: Product!
    }

    type Query {
      allProducts: [Product]
    }

You can upload your schema.gql file via the FaunaDB Console by clicking "GraphQL" on the left sidebar, and then clicking the "Import Schema" button.

Upon providing FaunaDB with a GraphQL schema, it automatically creates the required collections for the entities in our schema (products and reviews). Besides that, it also creates the indexes that are needed to interact with those collections in a meaningful and efficient manner. You should now be presented with a GraphQL playground where you can test out
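Outside the playground, the same endpoint can be queried with a plain HTTP POST. The sketch below only builds the request; `buildGraphQLRequest` is a hypothetical helper name, while the endpoint URL, the Bearer header, and the `allProducts` query shape are taken from later steps in this tutorial.

```javascript
const FAUNA_GRAPHQL_URL = "https://graphql.fauna.com/graphql";

// Hypothetical helper: assembles the URL and fetch options for a GraphQL call.
function buildGraphQLRequest(secret, query, variables = {}) {
  return {
    url: FAUNA_GRAPHQL_URL,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${secret}`,
      },
      body: JSON.stringify({ query, variables }),
    },
  };
}

const ALL_PRODUCTS = `
  query {
    allProducts {
      data { _id title description }
    }
  }
`;

// Replace the placeholder with a real key before sending.
const request = buildGraphQLRequest("<SERVER KEY>", ALL_PRODUCTS);

// Sending requires a runtime with fetch (a browser or a recent Node.js):
// fetch(request.url, request.options).then(res => res.json()).then(console.log);
```

Keeping the request construction separate from the network call makes it easy to inspect what would be sent before using a real key.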

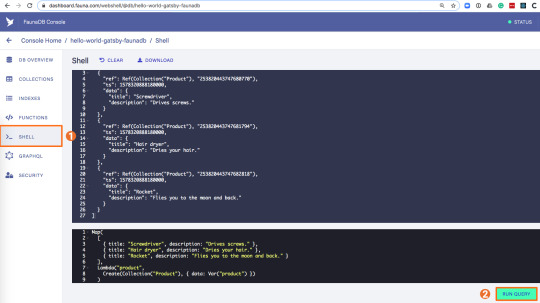

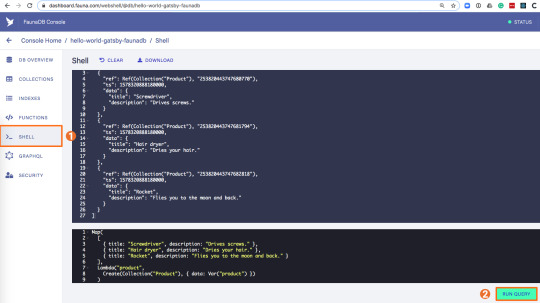

3. Seed the database with mock-up data

To seed our database with products and reviews, we can use the Shell at dashboard.fauna.com:

To create some data, we’ll use the Fauna Query Language (FQL). After that, we’ll continue with GraphQL to build our example application. Paste an FQL query like the following into the Shell to create three product documents (the titles and descriptions here are placeholder values):

    Map(
      [
        { title: "Product 1", description: "Description of product 1" },
        { title: "Product 2", description: "Description of product 2" },
        { title: "Product 3", description: "Description of product 3" }
      ],
      Lambda("data", Create(Collection("Product"), { data: Var("data") }))
    );

We can then write a query that retrieves the products we just made and creates a review document for every product document:

    Map(
      Paginate(Match(Index("allProducts"))),
      Lambda("ref", Create(Collection("Review"), {
        data: {
          username: "Tina",
          text: "Good product!",
          product: Var("ref")
        }
      }))
    );

Both types of documents will be loaded via GraphQL. However, there is a significant difference between products and reviews. The former will not change a lot and is relatively static, while the latter is user-driven. GatsbyJS allows us to load data in two ways:

data that is loaded at build time which will be used to generate the static site.

data that is loaded live at request time as a client visits and interacts with your website.

In this example, we chose to let the products be loaded at build time, and the reviews to be loaded on-demand in the browser. Therefore, we get static HTML product pages served by a CDN that the user immediately sees. Then, as our user interacts with the product page, we load the data for the reviews.

4. Create a new GatsbyJS project

The following command creates a GatsbyJS project based on the starter template:

    $ npx gatsby-cli new hello-world-gatsby-faunadb
    $ cd hello-world-gatsby-faunadb

5. Install npm packages

In order to build our new project with Gatsby and Apollo, we need a few additional packages. We can install the packages with the following command:

$ npm i gatsby-source-graphql apollo-boost react-apollo

We will use gatsby-source-graphql as a way to link GraphQL APIs into the build process. Using this library, you can make a GraphQL call whose results will be automagically provided as the properties of your React component. That way, you can use dynamic data to statically generate your application. The apollo-boost package is an easily configurable GraphQL library that will be used to fetch data on the client. Finally, the link between Apollo and React will be taken care of by the react-apollo library.

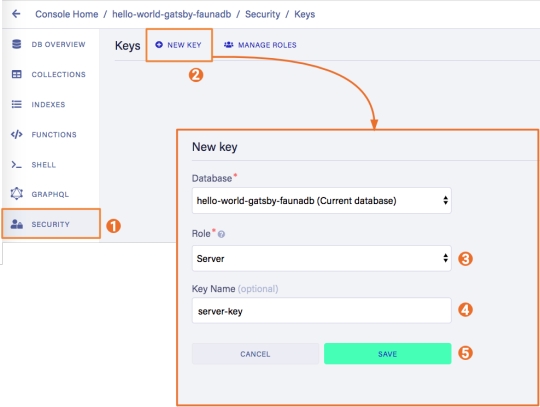

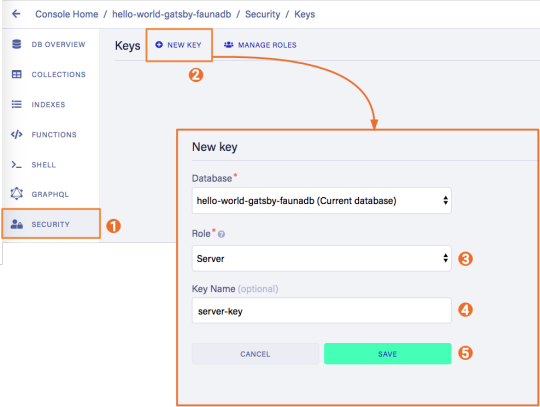

6. Create the server key for the database

We will create a Server key which will be used by Gatsby to prerender the page. Remember to copy the secret somewhere since we will use it later on. Protect server keys carefully: they can be used to create, destroy, or manage the database to which they are assigned. To create the key, go to the Fauna dashboard and create it in the Security tab.

7. Update GatsbyJS config files with server and new read-only keys

To add GraphQL support to our build process, we need to add the following code to the plugins section of our gatsby-config.js, inserting the FaunaDB server key we generated a few moments ago.

    {
      resolve: "gatsby-source-graphql",
      options: {
        typeName: "Fauna",
        fieldName: "fauna",
        url: "https://graphql.fauna.com/graphql",
        headers: {
          Authorization: "Bearer <SERVER KEY>",
        },
      },
    }
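Since the server key is so powerful, you may prefer not to paste it directly into a file that gets committed. One possible approach, sketched below, is to read it from an environment variable. The variable name `FAUNA_SERVER_KEY` and the helper `faunaSourcePlugin` are assumptions for this sketch, not part of the original setup.

```javascript
// Builds the gatsby-source-graphql plugin entry from an environment-like object.
// FAUNA_SERVER_KEY is a hypothetical variable name chosen for this sketch.
function faunaSourcePlugin(env) {
  const serverKey = env.FAUNA_SERVER_KEY;
  if (!serverKey) {
    throw new Error("FAUNA_SERVER_KEY is not set");
  }
  return {
    resolve: "gatsby-source-graphql",
    options: {
      typeName: "Fauna",
      fieldName: "fauna",
      url: "https://graphql.fauna.com/graphql",
      headers: { Authorization: `Bearer ${serverKey}` },
    },
  };
}

// In the config file this could be used as:
// module.exports = { plugins: [faunaSourcePlugin(process.env)] }
const example = faunaSourcePlugin({ FAUNA_SERVER_KEY: "example-secret" });
console.log(example.options.headers.Authorization); // Bearer example-secret
```

Failing loudly when the variable is missing avoids a build that silently sends an empty Bearer token.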

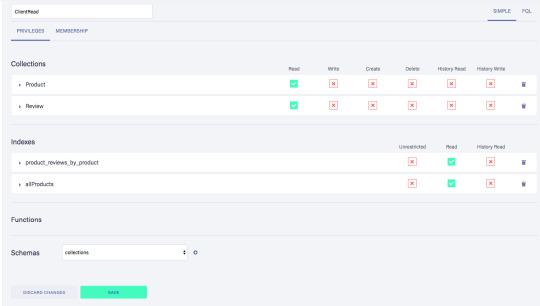

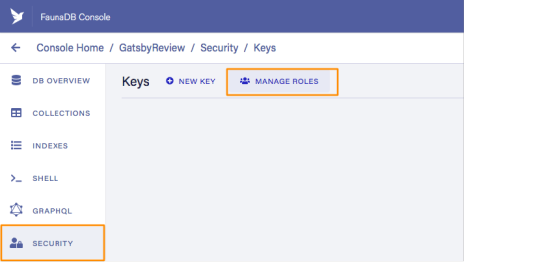

For the GraphQL access to work in the browser, we have to create a key that only has permissions to read data from the collections. FaunaDB has an extensive security layer in which you can define that. The easiest way is to go to the FaunaDB Console at dashboard.fauna.com and create a new role for your database by clicking "Security" in the left sidebar, then "Manage Roles," then "New Custom Role":

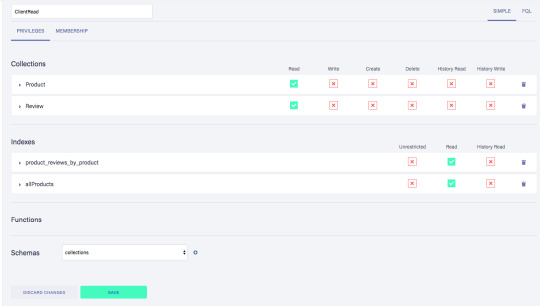

Call the new custom role ‘ClientRead’ and make sure to add all collections and indexes (these are the collections that were created by importing the GraphQL schema). Then, select Read for each of them. Your screen should look like this:

You have probably noticed the Membership tab on this page. Although we are not using it in this tutorial, it is worth explaining because it offers an alternative way to get security tokens. In the Membership tab, you can specify that entities of a collection (say, a 'Users' collection) in FaunaDB are members of a particular role. If you impersonate one of the entities in that collection, the role's privileges apply. You impersonate a database entity (e.g. a User) by associating credentials with the entity and using the Login function, which returns a token. That way you can also implement password-based authentication in FaunaDB. If that interests you, check the FaunaDB authentication tutorial.

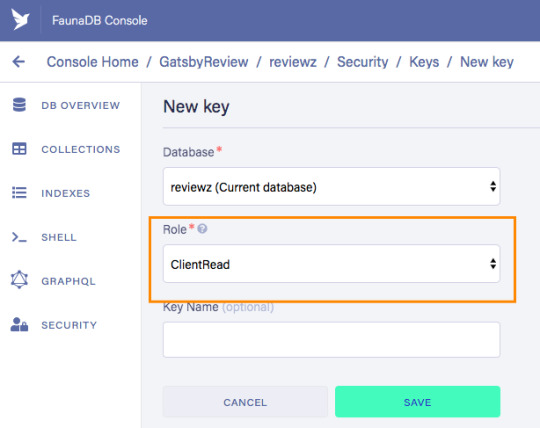

Let’s ignore Membership for now. Once you have created the role, create a new key that uses it. As before, click "Security", then "New Key," but this time select "ClientRead" from the Role dropdown:

Now, let's insert this read-only key in the gatsby-browser.js configuration file to be able to call the GraphQL API from the browser:

    import React from "react"
    import ApolloClient from "apollo-boost"
    import { ApolloProvider } from "react-apollo"

    const client = new ApolloClient({
      uri: "https://graphql.fauna.com/graphql",
      request: operation => {
        operation.setContext({
          headers: {
            Authorization: "Bearer <CLIENT_KEY>",
          },
        })
      },
    })

    export const wrapRootElement = ({ element }) => (
      <ApolloProvider client={client}>{element}</ApolloProvider>
    )

GatsbyJS will render its Router component as a root element. If we want to use the ApolloClient everywhere in the application on the client, we need to wrap this root element with the ApolloProvider component.

8. Load the pre-rendered product data at build time

Now that everything is set up, we can finally write the actual code to load our data. Let’s start with the products we will load at build time.

For this, we need to modify the src/pages/index.js file to look like this:

    import React from "react"
    import { graphql } from "gatsby"
    import Layout from "../components/layout"

    const IndexPage = ({ data }) => (
      <Layout>
        <ul>
          {data.fauna.allProducts.data.map(product => (
            <li key={product._id}>
              {product.title} - {product.description}
            </li>
          ))}
        </ul>
      </Layout>
    )

    export const query = graphql`
      {
        fauna {
          allProducts {
            data {
              _id
              title
              description
            }
          }
        }
      }
    `

    export default IndexPage

The exported query will automatically get picked up by GatsbyJS and executed before rendering the IndexPage component. The result of that query will be passed as the data prop into the IndexPage component. If we now run the develop script, we can see the pre-rendered documents on the development server at http://localhost:8000/.
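It can help to picture the shape of the `data` prop before wiring it into JSX. The following sketch uses a hand-written sample object that mirrors the query above; the values are placeholders, and `productLabels` is a hypothetical helper. It also handles a missing description, since the schema declares `description` as nullable.

```javascript
// Hypothetical sample mirroring the shape of the build-time query result.
const data = {
  fauna: {
    allProducts: {
      data: [
        { _id: "1", title: "Example title", description: "Example description" },
        { _id: "2", title: "Another title", description: null },
      ],
    },
  },
};

// Produces the text shown for each product, skipping a missing description.
function productLabels(data) {
  return data.fauna.allProducts.data.map(product =>
    product.description
      ? `${product.title} - ${product.description}`
      : product.title
  );
}

console.log(productLabels(data));
```

The same null check could be applied inside the component's `map` callback if some products have no description.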

$ npm run develop

9. Load the reviews at run time

To load the reviews of a product on the client, we have to make some changes to the src/pages/index.js:

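    import { gql } from "apollo-boost"
    import { useQuery } from "@apollo/react-hooks"
    import { graphql } from "gatsby"
    import React, { useState } from "react"
    import Layout from "../components/layout"

    // Query for fetching at build time
    export const query = graphql`
      {
        fauna {
          allProducts {
            data {
              _id
              title
              description
            }
          }
        }
      }
    `

    // Query for fetching on the client
    const GET_REVIEWS = gql`
      query GetReviews($productId: ID!) {
        findProductByID(id: $productId) {
          reviews {
            data {
              _id
              username
              text
            }
          }
        }
      }
    `

    const IndexPage = props => {
      // ID of the product whose reviews should be shown (null until a button is clicked)
      const [productId, setProductId] = useState(null)
      const { loading, data } = useQuery(GET_REVIEWS, {
        variables: { productId },
        skip: !productId,
      })

      // The markup below is a sketch; the listing as published omits the return value.
      return (
        <Layout>
          <ul>
            {props.data.fauna.allProducts.data.map(product => (
              <li key={product._id}>
                {product.title} - {product.description}
                <button onClick={() => setProductId(product._id)}>Load reviews</button>
                {productId === product._id && loading && <p>Loading...</p>}
                {productId === product._id && data && (
                  <ul>
                    {data.findProductByID.reviews.data.map(review => (
                      <li key={review._id}>{review.username}: {review.text}</li>
                    ))}
                  </ul>
                )}
              </li>
            ))}
          </ul>
        </Layout>
      )
    }

    export default IndexPage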

Let’s go through this step by step.

First, we need to import parts of the apollo-boost and @apollo/react-hooks packages so we can use the GraphQL client we previously set up in the gatsby-browser.js file.

Then, we need to implement our GET_REVIEWS query. It tries to find a product by its ID and then loads the associated reviews of that product. The query takes one variable, which is the productId.

In the component function, we use two hooks: useState and useQuery.

The useState hook keeps track of the productId for which we want to load reviews. If a user clicks a button, the state will be set to the productId corresponding to that button.

The useQuery hook then applies this productId to load reviews for that product from FaunaDB. The skip parameter of the hook prevents the execution of the query when the page is rendered for the first time because productId will be null.
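The effect of the skip parameter can be seen in isolation. This sketch builds the same options object that is passed to useQuery above; `reviewsQueryOptions` is a hypothetical helper name used only for illustration.

```javascript
// Builds the options passed to useQuery for a given product ID.
// skip is true while no product has been selected, so no request is made.
function reviewsQueryOptions(productId) {
  return {
    variables: { productId },
    skip: !productId,
  };
}

console.log(reviewsQueryOptions(null).skip);  // true
console.log(reviewsQueryOptions("123").skip); // false
```

On the first render productId is null, so skip is true; after a button click sets an ID, skip becomes false and the query runs.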

If we now run the development server again and click on the buttons, our application should execute the query with different productIds as expected.

$ npm run develop

Conclusion

A combination of server-side data fetching and client-side hydration makes JAMstack applications pretty powerful. These methods enable flexible interaction with our data so we can adhere to different business needs.

It’s usually a good idea to load as much data at build time as possible to improve page performance. But if the data isn’t needed by all clients, or is too big to be sent to the client all at once, we can split things up and switch to on-demand loading on the client. This is the case for user-specific data, pagination, or any data that changes rather frequently and might be outdated by the time it reaches the user.

In this article, we implemented an approach that loads part of the data at build time, and then loads the rest of the data in the frontend as the user interacts with the page.

Of course, we have not implemented a login or forms yet to create new reviews. How would we tackle that? That is material for another tutorial where we can use FaunaDB’s attribute-based access control to specify what a client key can read and write from the frontend.

The code of this tutorial can be found in this repo.

The post Build a dynamic JAMstack app with GatsbyJS and FaunaDB appeared first on CSS-Tricks.

via CSS-Tricks https://ift.tt/2RjR7mI

0 notes

Text

Build a dynamic JAMstack app with GatsbyJS and FaunaDB

In this article, we explain the difference between single-page apps (SPAs) and static sites, and how we can bring the advantages of both worlds together in a dynamic JAMstack app using GatsbyJS and FaunaDB. We will build an application that pulls in some data from FaunaDB during build time, prerenders the HTML for speedy delivery to the client, and then loads additional data at run time as the user interacts with the page. This combination of technologies gives us the best attributes of statically-generated sites and SPAs.

In short…<deep breath>...auto-scaling distributed websites with low latency, snappy user interfaces, no reloads, and dynamic data for everyone!

Heavy backends, single-page apps, static sites

In the old days, when JavaScript was new, it was mainly only used to provide effects and improved interactions. Some animations here, a drop-down there, and that was it. The grunt work was performed on the backend by Perl, Java, or PHP.

This changed as time went on: client code became heavier, and JavaScript took over more and more of the frontend until we finally shipped mostly empty HTML and rendered the whole UI in the browser, leaving the backend to supply us with JSON data.

This led to a neat separation of concerns and allowed us to build whole applications with JavaScript, called Single Page Applications (SPAs). The most important advantage of SPAs was the absence of reloads. You could click on a link to change what's displayed, without triggering a complete reload of the page. This in itself provided a superior user experience. However, SPAs increased the size of the client code significantly; a client now had to wait for the sum of several latencies:

Serving latency: retrieving the HTML and JavaScript from the server where the JavaScript was bigger than it used to be

Data loading latency: loading additional data requested by the client

Frontend framework rendering latency: once the data is received, a frontend framework like React, Vue, or Angular still has to do a lot of work to construct the final HTML

A royal metaphor

We can analogize the loading a SPA with the building and delivery of a toy castle. The client needs to retrieve the HTML and JavaScript, then retrieve the data, and then still has to assemble the page. The building blocks are delivered, but they still need to be put together after they're delivered.

If only there were a way to build the castle beforehand...

Enter the JAMstack

JAMstack applications consist of JavaScript, APIs and Markup. With today's static site generators like Next.js and GatsbyJS, the JavaScript and Markup parts can be bundled up into a static package and deployed via a Content Delivery Network (CDN) that delivers files to a browser. A CDN geographically distributes the bundles, and other assets, to multiple locations. When a user’s browser fetches the bundle and assets, it can receive them from the closest location on the network, which reduces the serving latency.

Continuing our toy castle analogy, JAMstack apps are different from SPAs in the sense that the page (or castle) is delivered pre-assembled. We have a lower latency since we receive the castle in one piece and no longer have to build it.

Making static JAMstack apps dynamic with hydration

In the JAMstack approach, we start with a dynamic application and prerender static HTML pages to be delivered via a speedy CDN. But what if a fully static site is not sufficient and we need to support some dynamic content as the user interacts with individual components, without reloading the entire page? That's where client-side hydration comes in.

Hydration is the client-side process by which the server-side rendered HTML (DOM) is "watered" by our frontend framework with event handlers and/or dynamic components to make it more interactive. This can be tricky because it depends on reconciling the original DOM with a new virtual DOM (VDOM) that's kept in memory as the user interacts with the page. If the DOM and VDOM trees do not match, bugs can arise that cause elements to be displayed out of order, or necessitate rebuilding the page.

Luckily, libraries like GatsbyJS and NextJS have been designed so as to minimize the possibility of such hydration-related bugs, handling everything for you out-of-the-box with only a few lines of code. The result is a dynamic JAMstack web application that is simultaneously both faster and more dynamic than the equivalent SPA.

One technical detail remains: where will the dynamic data come from?

Distributed frontend-friendly databases!

JAMstack apps typically rely on APIs (ergo the "A" in JAM), but if we need to load any kind of custom data, we need a database. And traditional databases are still a performance bottleneck for globally distributed sites that are otherwise delivered via CDN, because traditional databases are only located in one region. Instead of using a traditional database, we’d like our database to be on a distributed network, just like the CDN, that serves the data from a location as close as possible to wherever our clients are. This type of database is called a distributed database.

In this example, we’ll choose FaunaDB since it is also strongly consistent, which means that our data will be the same wherever my clients access it from and data won’t be lost. Other features that work particularly well with JAMstack applications are that (a) the database is accessed as an API (GraphQL or FQL) and does not require you to open a connection, and (b) the database has a security layer that makes it possible to access both public and private data in a secure way from the frontend. The implications of that are we can keep the low latencies of JAMstack without having to scale a backend, all with zero configuration.

Let's compare the process of loading a hydrated static site with the building of the toy castle. We still have lower latencies thanks to the CDN, but also less data since most the site is statically generated and therefore requires less rendering. Only a small part of the castle (or, the dynamic part of the page) needs to be assembled after it has been delivered:

Example app with GatsbyJS & FaunaDB

Let’s build an example application that loads data from FaunaDB at build time and renders it to static HTML, then loads additional dynamic data inside the client browser at run time. For this example, we use GatsbyJS, a JAMstack framework based on React that prerenders static HTML. Since we use GatsbyJS, we can code our website completely in React, generate and deliver the static pages, and then load additional data dynamically at run time. We’ll use FaunaDB as our fully managed serverless database solution. We will build an application where we can list products and reviews.

Let’s look at an outline of what we have to do to get our example app up and running and then go through every step in detail.

Set up a new database

Add a GraphQL schema to the database

Seed the database with mock-up data

Create a new GatsbyJS project

Install NPM packages

Create the server key for the database

Update GatsbyJS config files with server key and new read-only key

Load the pre-rendered product data at build time

Load the reviews at run time

1. Set up a new database

Before you start, create an account on dashboard.fauna.com. Once you have an account, let’s set up a new database. It should hold products and their reviews, so we can load the products at build-time and the reviews in the browser.

2. Add a GraphQL schema to the database

Next, we use the server key to upload a GraphQL schema to our database. For this, we create a new file called schema.gql that has the following content:

type Product { title: String! description: String reviews: [Review] @relation } type Review { username: String! text: String! product: Product! } type Query { allProducts: [Product] }

You can upload your schema.gql file via the FaunaDB Console by clicking "GraphQL" on the left sidebar, and then click the "Import Schema" button.

Upon providing FaunaDB with a GraphQL schema, it automatically creates the required collections for the entities in our schema (products and reviews). Besides that, it also creates the indexes that are needed to interact with those collections in a meaningful and efficient manner. You should now be presented with a GraphQL playground where you can test out

3. Seed the database with mock-up data

To seed our database with products and reviews, we can use the Shell at dashboard.fauna.com:

To create some data, we’ll use the Fauna Query Language (FQL), after that we’ll continue with GraphQL to build are example application. Paste the following FQL query into the Shell to create three product documents:

Map( Paginate(Match(Index("allProducts"))), Lambda("ref", Create(Collection("Review"), { data: { username: "Tina", text: "Good product!", product: Var("ref") } })) );

We can then write a query that retrieves the products we just made and creates a review document for every product document:

Map( Paginate(Match(Index("allProducts"))), Lambda("ref", Create(Collection("Review"), { data: { username: "Tina", text: "Good product!", product: Var("ref") } })) );

Both types of documents will be loaded via GraphQL. However, there is a significant difference between products and reviews. The former will not change a lot and is relatively static, while the second is user-driven. GatsbyJS allows us to load data in two ways:

data that is loaded at build time which will be used to generate the static site.

data that is loaded live at request time as a client visits and interacts with your website.

In this example, we chose to let the products be loaded at build time, and the reviews to be loaded on-demand in the browser. Therefore, we get static HTML product pages served by a CDN that the user immediately sees. Then, as our user interacts with the product page, we load the data for the reviews.

4. Create a new GatsbyJS project

The following command creates a GatsbyJS project based on the starter template:

$ npx gatsby-cli new hello-world-gatsby-faunadb $ cd hello-world-gatsby-faunadb

5. Install npm packages

In order to build our new project with Gatsby and Apollo, we need a few additional packages. We can install the packages with the following command:

$ npm i gatsby-source-graphql apollo-boost react-apollo

We will use gatsby-source-graphql as a way to link GraphQL APIs into the build process. Using this library, you can make a GraphQL call from which the results will be automagically provided as the properties for your react component. That way, you can use dynamic data to statically generate your application. The apollo-boost package is an easily configurable GraphQL library that will be used to fetch data on the client. Finally, the link between Apollo and React will be taken care of by the react-apollo library.

6. Create the server key for the database

We will create a Server key which will be used by Gatsby to prerender the page. Remember to copy the secret somewhere since we will use it later on. Protect server keys carefully, they can be used to create, destroy, or manage the database to which they are assigned. To create the key we can go to the fauna dashboard and create the key in the security tab.

7. Update GatsbyJS config files with server and new read-only keys

To add the GraphQL support to our build process, we need to add the following code into our graphql-config.js inside the plugins section where we will insert the FaunaDB server key which we generated a few moments ago.

{ resolve: "gatsby-source-graphql", options: { typeName: "Fauna", fieldName: "fauna", url: "https://graphql.fauna.com/graphql", headers: { Authorization: "Bearer <SERVER KEY>", }, }, }

For the GraphQL access to work in the browser, we have to create a key that only has permissions to read data from the collections. FaunaDB has an extensive security layer in which you can define that. The easiest way is to go to the FaunaDB Console at dashboard.fauna.com and create a new role for your database by clicking "Security" in the left sidebar, then "Manage Roles," then "New Custom Role":

Call the new custom role ‘ClientRead’ and make sure to add all collections and indexes (these are the collections that were created by importing the GraphQL schema). Then, select Read for each of them. Your screen should look like this:

You have probably noticed the Membership tab on this page. Although we are not using it in this tutorial, it is worth explaining because it offers an alternative way to get security tokens. In the Membership tab, you can specify that entities of a collection (let's say we have a 'Users' collection) in FaunaDB are members of a particular role. That means that if you impersonate one of the entities in that collection, the role's privileges apply. You impersonate a database entity (e.g. a User) by associating credentials with the entity and using the Login function, which returns a token. That way you can also implement password-based authentication in FaunaDB. If that interests you, check the FaunaDB authentication tutorial.

Let’s ignore Membership for now. Once you have created the role, you can create a new key with it. As before, click "Security", then "New Key," but this time select "ClientRead" from the Role dropdown:

Now, let's insert this read-only key in the gatsby-browser.js configuration file to be able to call the GraphQL API from the browser:

import React from "react"
import ApolloClient from "apollo-boost"
import { ApolloProvider } from "react-apollo"

const client = new ApolloClient({
  uri: "https://graphql.fauna.com/graphql",
  request: operation => {
    operation.setContext({
      headers: {
        Authorization: "Bearer <CLIENT_KEY>",
      },
    })
  },
})

export const wrapRootElement = ({ element }) => (
  <ApolloProvider client={client}>{element}</ApolloProvider>
)

GatsbyJS will render its Router component as a root element. If we want to use the ApolloClient everywhere in the application on the client, we need to wrap this root element with the ApolloProvider component.

8. Load the pre-rendered product data at build time

Now that everything is set up, we can finally write the actual code to load our data. Let’s start with the products we will load at build time.

For this we need to modify src/pages/index.js file to look like this:

import React from "react"
import { graphql } from "gatsby"
import Layout from "../components/layout"

const IndexPage = ({ data }) => (
  <Layout>
    <ul>
      {data.fauna.allProducts.data.map(product => (
        // Each list item needs a stable key; the document _id is unique
        <li key={product._id}>
          {product.title} - {product.description}
        </li>
      ))}
    </ul>
  </Layout>
)

// Query for fetching at build time
export const query = graphql`
  {
    fauna {
      allProducts {
        data {
          _id
          title
          description
        }
      }
    }
  }
`

export default IndexPage

The exported query will automatically get picked up by GatsbyJS and executed before rendering the IndexPage component. The result of that query will be passed as the data prop into the IndexPage component. If we now run the develop script, we can see the pre-rendered documents on the development server at http://localhost:8000/.

$ npm run develop

9. Load the reviews at run time

To load the reviews of a product on the client, we have to make some changes to the src/pages/index.js:

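import { gql } from "apollo-boost"
import { useQuery } from "@apollo/react-hooks"
import { graphql } from "gatsby"
import React, { useState } from "react"
import Layout from "../components/layout"

// Query for fetching at build-time
export const query = graphql`
  {
    fauna {
      allProducts {
        data {
          _id
          title
          description
        }
      }
    }
  }
`

// Query for fetching on the client
const GET_REVIEWS = gql`
  query GetReviews($productId: ID!) {
    findProductByID(id: $productId) {
      reviews {
        data {
          _id
          username
          text
        }
      }
    }
  }
`

const IndexPage = props => {
  const [productId, setProductId] = useState(null)
  const { loading, data } = useQuery(GET_REVIEWS, {
    variables: { productId },
    skip: !productId,
  })

  // The original snippet ends after the hooks; the markup below is an
  // illustrative sketch of how the buttons and reviews could be rendered.
  return (
    <Layout>
      <ul>
        {props.data.fauna.allProducts.data.map(product => (
          <li key={product._id}>
            {product.title} - {product.description}{" "}
            <button onClick={() => setProductId(product._id)}>Show reviews</button>
          </li>
        ))}
      </ul>
      {loading && <p>Loading reviews...</p>}
      {data && data.findProductByID && (
        <ul>
          {data.findProductByID.reviews.data.map(review => (
            <li key={review._id}>
              {review.username}: {review.text}
            </li>
          ))}
        </ul>
      )}
    </Layout>
  )
}

export default IndexPage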

Let’s go through this step by step.

First, we need to import parts of the apollo-boost and @apollo/react-hooks packages so we can use the GraphQL client we previously set up in the gatsby-browser.js file.

Then, we need to implement our GET_REVIEWS query. It tries to find a product by its ID and then loads the associated reviews of that product. The query takes one variable, which is the productId.

In the component function, we use two hooks: useState and useQuery.

The useState hook keeps track of the productId for which we want to load reviews. If a user clicks a button, the state will be set to the productId corresponding to that button.

The useQuery hook then applies this productId to load reviews for that product from FaunaDB. The skip parameter of the hook prevents the execution of the query when the page is rendered for the first time because productId will be null.
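To make the effect of skip concrete, here is a small plain-JavaScript sketch of the options object passed to useQuery. The helper name buildReviewQueryOptions is a hypothetical name used only for illustration; the component above builds the same object inline.

```javascript
// Hypothetical helper mirroring the options passed to useQuery in the component
function buildReviewQueryOptions(productId) {
  return {
    variables: { productId },
    // Skip the query while no product has been selected yet
    skip: !productId,
  }
}

console.log(buildReviewQueryOptions(null).skip) // true
console.log(buildReviewQueryOptions("264").skip) // false
```

On the first render productId is null, so skip is true and no request is sent. Once a button sets a product ID, skip becomes false and the query runs.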

If we now run the development server again and click on the buttons, our application should execute the query with different productIds as expected.

$ npm run develop

Conclusion

A combination of server-side data fetching and client-side hydration makes JAMstack applications pretty powerful. These methods enable flexible interaction with our data so we can adhere to different business needs.

It’s usually a good idea to load as much data at build time as possible to improve page performance. But if the data isn’t needed by all clients, or is too big to be sent to the client all at once, we can split things up and switch to on-demand loading on the client. This is the case for user-specific data, pagination, or any data that changes rather frequently and might be outdated by the time it reaches the user.

In this article, we implemented an approach that loads part of the data at build time, and then loads the rest of the data in the frontend as the user interacts with the page.

Of course, we have not implemented a login or forms yet to create new reviews. How would we tackle that? That is material for another tutorial where we can use FaunaDB’s attribute-based access control to specify what a client key can read and write from the frontend.

The code of this tutorial can be found in this repo.

The post Build a dynamic JAMstack app with GatsbyJS and FaunaDB appeared first on CSS-Tricks.


Mirage JS Deep Dive: Using Mirage JS And Cypress For UI Testing (Part 4)

About The Author

Kelvin Omereshone is the CTO at Quru Lab. Kelvin was formerly a Front-end engineer at myPadi.ng. He’s the creator of NUXtjs Africa community and very passionate … More about Kelvin …

In this final part of the Mirage JS Deep Dive series, we will put everything we’ve learned in the previous parts to use and learn how to perform UI tests with Mirage JS.

One of my favorite quotes about software testing is from the Flutter documentation. It says:

“How can you ensure that your app continues to work as you add more features or change existing functionality? By writing tests.”

On that note, this last part of the Mirage JS Deep Dive series will focus on using Mirage to test your JavaScript front-end application.

Note: This article assumes a Cypress environment. Cypress is a testing framework for UI testing. You can, however, transfer the knowledge here to whatever UI testing environment or framework you use.

Read Previous Parts Of The Series:

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

UI Tests Primer

A UI (User Interface) test is a form of acceptance testing done to verify the user flows of your front-end application. The emphasis of these tests is on the end user, the actual person who will interact with your web application on a variety of devices, from desktops and laptops to mobile devices. These users interact with your application using input devices such as a keyboard, mouse, or touch screen. UI tests, therefore, are written to mimic the user’s interaction with your application as closely as possible.

Let’s take an e-commerce website for example. A typical UI test scenario would be:

The user can view the list of products when visiting the homepage.

Other UI test scenarios might be:

The user can see the name of a product on the product’s detail page.

The user can click on the “add to cart” button.

The user can checkout.

You get the idea, right?

When writing UI tests, you will mostly rely on your back-end states, i.e. did the server return the products or an error? Mirage’s role is to make those server states available for you to tweak as you need. So instead of making an actual request to your production server in your UI tests, you make the request to the Mirage mock server.

For the remaining part of this article, we will be performing UI tests on a fictitious e-commerce web application UI. So let’s get started.

Our First UI Test

As earlier stated, this article assumes a Cypress environment. Cypress makes testing UI on the web fast and easy. You could simulate clicks and navigation and you can programmatically visit routes in your application. See the docs for more on Cypress.

So, assuming Cypress and Mirage are available to us, let’s start off by defining a proxy function for your API request. We can do so in the support/index.js file of our Cypress setup. Just paste the following code in:

// cypress/support/index.js
Cypress.on("window:before:load", (win) => {
  win.handleFromCypress = function (request) {
    return fetch(request.url, {
      method: request.method,
      headers: request.requestHeaders,
      body: request.requestBody,
    }).then((res) => {
      let content =
        res.headers.map["content-type"] === "application/json"
          ? res.json()
          : res.text()
      return new Promise((resolve) => {
        content.then((body) => resolve([res.status, res.headers, body]))
      })
    })
  }
})

Then, in your app bootstrapping file (main.js for Vue, index.js for React), we’ll use Mirage to proxy your app’s API requests to the handleFromCypress function only when Cypress is running. Here is the code for that:

import { Server, Response } from "miragejs"

if (window.Cypress) {
  new Server({
    environment: "test",
    routes() {
      let methods = ["get", "put", "patch", "post", "delete"]
      methods.forEach((method) => {
        this[method]("/*", async (schema, request) => {
          let [status, headers, body] = await window.handleFromCypress(request)
          return new Response(status, headers, body)
        })
      })
    },
  })
}

With that setup, anytime Cypress is running, your app knows to use Mirage as the mock server for all API requests.

Let’s continue writing some UI tests. We’ll begin by testing our homepage to see if it has 5 products displayed. To do this in Cypress, we need to create a homepage.test.js file in the tests folder in the root of our project directory. Next, we’ll tell Cypress to do the following:

Visit the homepage, i.e. the / route.

Assert that it has li elements with the class product, and that there are exactly 5 of them.

Here is the code:

// homepage.test.js
it('shows the products', () => {
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

You might have guessed that this test would fail because we don’t have a production server returning 5 products to our front-end application. So what do we do? We mock out the server in Mirage! If we bring in Mirage, it can intercept all network calls in our tests. Let’s do this below and start the Mirage server before each test in the beforeEach function and also shut it down in the afterEach function. The beforeEach and afterEach functions are both provided by Cypress and they were made available so you could run code before and after each test run in your test suite — hence the name. So let’s see the code for this:

// homepage.test.js
import { Server } from "miragejs"

let server

beforeEach(() => {
  server = new Server()
})

afterEach(() => {
  server.shutdown()
})

it("shows the products", function () {
  cy.visit("/")
  cy.get("li.product").should("have.length", 5)
})

Okay, we are getting somewhere; we have imported the Server from Mirage and we are starting it and shutting it down in beforeEach and afterEach functions respectively. Let’s go about mocking our product resource.

// homepage.test.js
import { Server, Model } from 'miragejs';

let server;

beforeEach(() => {
  server = new Server({
    models: {
      product: Model,
    },
    routes() {
      this.namespace = 'api';
      this.get('products', ({ products }, request) => {
        return products.all();
      });
    },
  });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

Note: You can always take a peek at the previous parts of this series if you don’t understand the Mirage bits of the above code snippet.

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

Okay, we have started fleshing out our Server instance by creating the product model and the route handler for the /api/products route. However, if we run our test, it will still fail because we don’t have any products in the Mirage database yet.

Let’s populate the Mirage database with some products. In order to do this, we could have used the create() method on our server instance, but creating 5 products by hand seems pretty tedious. There should be a better way.

Ah yes, there is. Let’s utilize factories (as explained in the second part of this series). We’ll need to create our product factory like so:

// homepage.test.js
import { Server, Model, Factory } from 'miragejs';

let server;

beforeEach(() => {
  server = new Server({
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`
        }
      })
    },
    routes() {
      this.namespace = 'api';
      this.get('products', ({ products }, request) => {
        return products.all();
      });
    },
  });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

Then, finally, we’ll use createList() to quickly create the 5 products that our test needs to pass.

Let’s do this:

// homepage.test.js
import { Server, Model, Factory } from 'miragejs';

let server;

beforeEach(() => {
  server = new Server({
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`
        }
      })
    },
    routes() {
      this.namespace = 'api';
      this.get('products', ({ products }, request) => {
        return products.all();
      });
    },
  });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList("product", 5)
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

So when we run our test, it passes!

Note: After each test, Mirage’s server is shut down and reset, so none of this state will leak across tests.
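To make the idea behind the factory and createList() more concrete, here is a plain-JavaScript sketch of generating a list of records from a factory definition. It is only an illustration of the concept and not Mirage’s implementation; the createList function, the id scheme, and the record shape below are assumptions made for this example.

```javascript
// Plain-JavaScript sketch of a factory; this is not Mirage's implementation
const productFactory = {
  // Called once per record with the record's index in the sequence
  name(i) {
    return `Product ${i}`
  },
}

// Hypothetical stand-in for server.createList(): builds `count` records
function createList(factory, count) {
  const records = []
  for (let i = 0; i < count; i++) {
    const record = { id: String(i + 1) }
    for (const [key, value] of Object.entries(factory)) {
      // Function attributes are computed per record, plain values are copied
      record[key] = typeof value === "function" ? value(i) : value
    }
    records.push(record)
  }
  return records
}

const products = createList(productFactory, 5)
console.log(products.map((p) => p.name))
// [ 'Product 0', 'Product 1', 'Product 2', 'Product 3', 'Product 4' ]
```

The point of the factory is that the test only states how many products it needs, while the shape of each product is defined once.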

Avoiding Multiple Mirage Servers

If you have been following along with this series, you’ll have noticed that when we used Mirage in development to intercept our network requests, we had a server.js file in the root of our app where we set up Mirage. In the spirit of DRY (Don’t Repeat Yourself), I think it would be good to reuse that server setup instead of maintaining two separate Mirage setups for development and testing. To do this (in case you don’t have a server.js file already), just create one in your project src directory.

Note: Your structure will differ if you are using a JavaScript framework, but the general idea is to set up the server.js file in the src root of your project.

So with this new structure, we’ll export a function in server.js that is responsible for creating our Mirage server instance. Let’s do that:

// src/server.js
export function makeServer() {
  /* Mirage code goes here */
}

Let’s complete the implementation of the makeServer function by removing the Mirage JS server we created in homepage.test.js and adding it to the makeServer function body:

// src/server.js
import { Server, Model, Factory } from 'miragejs';

export function makeServer() {
  let server = new Server({
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`;
        },
      }),
    },
    routes() {
      this.namespace = 'api';
      this.get('/products', ({ products }) => {
        return products.all();
      });
    },
    seeds(server) {
      server.createList('product', 5);
    },
  });

  return server;
}

Now all you have to do is import makeServer in your test. Using a single Mirage Server instance is cleaner; this way you don’t have to maintain two server instances for both development and test environments.

After importing the makeServer function, our test should now look like this:

// homepage.test.js
import { makeServer } from '/path/to/server';

let server;

beforeEach(() => {
  server = makeServer();
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

So we now have a central Mirage server that serves us in both development and testing. You can also use the makeServer function to start Mirage in development (see first part of this series).

Your Mirage code should not find its way into production. Therefore, depending on your build setup, you should only start Mirage in development mode.

Note: Read my article on how to set up API Mocking with Mirage and Vue.js to see how I did that in Vue so you could replicate in whatever front-end framework you use.

Testing Environment

Mirage has two environments: development (default) and test. In development mode, the Mirage server has a default response time of 400ms (which you can customize; see the third article of this series), logs all server responses to the console, and loads the development seeds.

However, in the test environment, we have:

0 delays to keep our tests fast

Mirage suppresses all logs so as not to pollute your CI logs

Mirage will also ignore the seeds() function so that your seed data can be used solely for development but won’t leak into your tests. This helps keep your tests deterministic.
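The differences between the two environments can be restated as a small lookup. This is only a summary of the behaviour described above in plain JavaScript, not code from Mirage itself; the function name and the property names timing, logging, and loadSeeds are chosen for this sketch.

```javascript
// Sketch summarising the two environments described above (not Mirage code)
function environmentBehaviour(environment) {
  const isTest = environment === "test"
  return {
    // Response delay in milliseconds: none in tests, 400 by default in development
    timing: isTest ? 0 : 400,
    // Responses are logged to the console only in development
    logging: !isTest,
    // The seeds() function is ignored in the test environment
    loadSeeds: !isTest,
  }
}

console.log(environmentBehaviour("test"))
// { timing: 0, logging: false, loadSeeds: false }
```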

Let’s update our makeServer so we can benefit from the test environment. To do that, we’ll make it accept an object with an environment option (we will default it to development and override it in our test). Our server.js should now look like this:

// src/server.js
import { Server, Model, Factory } from 'miragejs';

export function makeServer({ environment = 'development' } = {}) {
  let server = new Server({
    environment,
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`;
        },
      }),
    },
    routes() {
      this.namespace = 'api';
      this.get('/products', ({ products }) => {
        return products.all();
      });
    },
    seeds(server) {
      server.createList('product', 5);
    },
  });

  return server;
}

Also note that we are passing the environment option to the Mirage server instance using the ES6 property shorthand. Now with this in place, let’s update our test to override the environment value to test. Our test now looks like this:

// homepage.test.js
import { makeServer } from '/path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});
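If the makeServer signature looks unfamiliar, the following standalone sketch isolates the two language features it relies on: a destructured parameter with a default value, and the ES6 property shorthand. The function name makeServerConfig is hypothetical and exists only for this illustration.

```javascript
// Standalone illustration of the parameter pattern used by makeServer
function makeServerConfig({ environment = "development" } = {}) {
  // `{ environment }` is shorthand for `{ environment: environment }`
  return { environment }
}

console.log(makeServerConfig().environment) // development
console.log(makeServerConfig({ environment: "test" }).environment) // test
```

The trailing `= {}` is what allows the function to be called with no argument at all, which is how it is called in development.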

AAA Testing

Mirage encourages a standard for testing called the triple-A or AAA testing approach. This stands for Arrange, Act and Assert. You could see this structure in our above test already:

it("shows all the products", function () {
  // ARRANGE
  server.createList("product", 5)

  // ACT
  cy.visit("/")

  // ASSERT
  cy.get("li.product").should("have.length", 5)
})

You might need to break this pattern but 9 times out of 10 it should work just fine for your tests.
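The same three phases apply outside Cypress as well. Below is a framework-free sketch of an AAA-style test in plain JavaScript; renderProductList and testShowsAllProducts are hypothetical names standing in for your UI code and your test.

```javascript
// Hypothetical rendering function standing in for the UI under test
function renderProductList(products) {
  return products.map((product) => `<li class="product">${product.name}</li>`)
}

function testShowsAllProducts() {
  // ARRANGE: put the data the test needs in place
  const products = Array.from({ length: 5 }, (_, i) => ({ name: `Product ${i}` }))

  // ACT: perform the behaviour under test
  const items = renderProductList(products)

  // ASSERT: check the observable result
  if (items.length !== 5) {
    throw new Error(`Expected 5 products, got ${items.length}`)
  }
  return true
}

testShowsAllProducts()
```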

Let’s Test Errors

So far, we’ve tested our homepage to see if it has 5 products. However, what if the server is down or something goes wrong while fetching the products? We don’t need to wait for the server to be down to work on how our UI would look in such a case. We can simply simulate that scenario with Mirage.

Let’s return a 500 (Server error) when the user is on the homepage. As we have seen in a previous article, to customize Mirage responses we make use of the Response class. Let’s import it and write our test.

// homepage.test.js
import { Response } from "miragejs"

it('shows an error when fetching products fails', function() {
  // Override the /api/products handler for this test only
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

What a world of flexibility! We just override the response Mirage would return in order to test how our UI would display if it failed fetching products. Our overall homepage.test.js file would now look like this:

// homepage.test.js
import { Response } from 'miragejs';
import { makeServer } from 'path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

it('shows an error when fetching products fails', function() {
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

Note that the modification we made to the /api/products handler only lives in our test. That means the handler works as previously defined when you are in development mode.

So when we run our tests, both should pass.
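The reason the override works can be illustrated with a tiny route table in which a handler registered later for the same path replaces the earlier one. This is a conceptual sketch in plain JavaScript, not Mirage’s routing code; createRouter and its methods are hypothetical names.

```javascript
// Conceptual sketch of handler overriding (not Mirage's routing code)
function createRouter() {
  const handlers = new Map()
  return {
    // Registering a handler for an existing path replaces the previous one
    get(path, handler) {
      handlers.set(path, handler)
    },
    handle(path) {
      const handler = handlers.get(path)
      return handler ? handler() : { status: 404, body: null }
    },
  }
}

const router = createRouter()

// Default handler, as defined in the shared server setup
router.get("/api/products", () => ({ status: 200, body: { products: [] } }))

// Test-specific override that simulates a failing back end
router.get("/api/products", () => ({
  status: 500,
  body: { error: "Can't fetch products at this time" },
}))

console.log(router.handle("/api/products").status) // 500
```

Because each test starts from a fresh server in beforeEach, an override like this only affects the test that registers it.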

Note: It’s worth noting that the elements we are querying for in Cypress must exist in your front-end UI. Cypress doesn’t create HTML elements for you.

Testing The Product Detail Page

Finally, let’s test the UI of the product detail page. So this is what we are testing for:

User can see the product name on the product detail page

Let’s get to it. First, we create a new test to test this user flow.

Here is the test:

it("shows the product’s name on the detail route", function() {
  // `server` is the module-level instance created in beforeEach
  let product = server.create('product', {
    name: 'Korg Piano',
  });

  cy.visit(`/${product.id}`);
  cy.get('h1').should('contain', 'Korg Piano');
});

Your homepage.test.js should finally look like this.

// homepage.test.js
import { Response } from 'miragejs';
import { makeServer } from 'path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

it('shows an error when fetching products fails', function() {
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

it("shows the product’s name on the detail route", function() {
  let product = server.create('product', {
    name: 'Korg Piano',
  });

  cy.visit(`/${product.id}`);
  cy.get('h1').should('contain', 'Korg Piano');
});

When you run your tests, all three should pass.

Wrapping Up

It’s been fun showing you the inner workings of Mirage JS in this series. I hope you are now better equipped to improve your front-end development experience by using Mirage to mock out your back-end server. I also hope you’ll use the knowledge from this article to write more acceptance/UI/end-to-end tests for your front-end applications.

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

Part 4: Using Mirage JS And Cypress For UI Testing


Mirage JS Deep Dive: Using Mirage JS And Cypress For UI Testing (Part 4)

About The Author

Kelvin Omereshone is the CTO at Quru Lab. Kelvin was formerly a Front-end engineer at myPadi.ng. He’s the creator of NUXtjs Africa community and very passionate … More about Kelvin …

In this final part of Mirage JS Deep Dive series, we will be putting everything we’ve learned in the past series into learning how to perform UI tests with Mirage JS.

One of my favorite quotes about software testing is from the Flutter documentation. It says:

“How can you ensure that your app continues to work as you add more features or change existing functionality? By writing tests.”

On that note, this last part of the Mirage JS Deep Dive series will focus on using Mirage to test your JavaScript front-end application.

Note: This article assumes a Cypress environment. Cypress is a testing framework for UI testing. You can, however, transfer the knowledge here to whatever UI testing environment or framework you use.

Read Previous Parts Of The Series:

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

UI Tests Primer

UI or User Interface test is a form of acceptance testing done to verify the user flows of your front-end application. The emphasis of these kinds of software tests is on the end-user that is the actual person who will be interacting with your web application on a variety of devices ranging from desktops, laptops to mobile devices. These users would be interfacing or interacting with your application using input devices such as a keyboard, mouse, or touch screens. UI tests, therefore, are written to mimic the user interaction with your application as close as possible.

Let’s take an e-commerce website for example. A typical UI test scenario would be:

The user can view the list of products when visiting the homepage.

Other UI test scenarios might be:

The user can see the name of a product on the product’s detail page.

The user can click on the “add to cart” button.

The user can checkout.

You get the idea, right?

In making UI Tests, you will mostly be relying on your back-end states, i.e. did it return the products or an error? The role Mirage plays in this is to make those server states available for you to tweak as you need. So instead of making an actual request to your production server in your UI tests, you make the request to Mirage mock server.

For the remaining part of this article, we will be performing UI tests on a fictitious e-commerce web application UI. So let’s get started.

Our First UI Test

As earlier stated, this article assumes a Cypress environment. Cypress makes testing UI on the web fast and easy. You could simulate clicks and navigation and you can programmatically visit routes in your application. See the docs for more on Cypress.

So, assuming Cypress and Mirage are available to us, let’s start off by defining a proxy function for your API request. We can do so in the support/index.js file of our Cypress setup. Just paste the following code in:

// cypress/support/index.js Cypress.on("window:before:load", (win) => { win.handleFromCypress = function (request) { return fetch(request.url, { method: request.method, headers: request.requestHeaders, body: request.requestBody, }).then((res) => { let content = res.headers.map["content-type"] === "application/json" ? res.json() : res.text() return new Promise((resolve) => { content.then((body) => resolve([res.status, res.headers, body])) }) }) } })

Then, in your app bootstrapping file (main.js for Vue, index.js for React), we’ll use Mirage to proxy your app’s API requests to the handleFromCypress function only when Cypress is running. Here is the code for that:

import { Server, Response } from "miragejs" if (window.Cypress) { new Server({ environment: "test", routes() { let methods = ["get", "put", "patch", "post", "delete"] methods.forEach((method) => { this[method]("/*", async (schema, request) => { let [status, headers, body] = await window.handleFromCypress(request) return new Response(status, headers, body) }) }) }, }) }

With that setup, anytime Cypress is running, your app knows to use Mirage as the mock server for all API requests.

Let’s continue writing some UI tests. We’ll begin by testing our homepage to see if it has 5 products displayed. To do this in Cypress, we need to create a homepage.test.js file in the tests folder in the root of your project directory. Next, we’ll tell Cypress to do the following:

Visit the homepage i.e / route

Then assert if it has li elements with the class of product and also checks if they are 5 in numbers.

Here is the code:

// homepage.test.js it('shows the products', () => { cy.visit('/'); cy.get('li.product').should('have.length', 5); });

You might have guessed that this test would fail because we don’t have a production server returning 5 products to our front-end application. So what do we do? We mock out the server in Mirage! If we bring in Mirage, it can intercept all network calls in our tests. Let’s do this below and start the Mirage server before each test in the beforeEach function and also shut it down in the afterEach function. The beforeEach and afterEach functions are both provided by Cypress and they were made available so you could run code before and after each test run in your test suite — hence the name. So let’s see the code for this:

// homepage.test.js import { Server } from "miragejs" let server beforeEach(() => { server = new Server() }) afterEach(() => { server.shutdown() }) it("shows the products", function () { cy.visit("/") cy.get("li.product").should("have.length", 5) })

Okay, we are getting somewhere; we have imported the Server from Mirage and we are starting it and shutting it down in beforeEach and afterEach functions respectively. Let’s go about mocking our product resource.

// homepage.test.js import { Server, Model } from 'miragejs'; let server; beforeEach(() => { server = new Server({ models: { product: Model, }, routes() { this.namespace = 'api'; this.get('products', ({ products }, request) => { return products.all(); }); }, }); }); afterEach(() => { server.shutdown(); }); it('shows the products', function() { cy.visit('/'); cy.get('li.product').should('have.length', 5); });

Note: You can always take a peek at the previous parts of this series if you don’t understand the Mirage bits of the above code snippet.

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

Okay, we have started fleshing out our Server instance by creating the product model and also by creating the route handler for the /api/products route. However, if we run our tests, it will fail because we don’t have any products in the Mirage database yet.

Let’s populate the Mirage database with some products. In order to do this, we could have used the create() method on our server instance, but creating 5 products by hand seems pretty tedious. There should be a better way.

Ah yes, there is. Let’s utilize factories (as explained in the second part of this series). We’ll need to create our product factory like so:

// homepage.test.js import { Server, Model, Factory } from 'miragejs'; let server; beforeEach(() => { server = new Server({ models: { product: Model, }, factories: { product: Factory.extend({ name(i) { return `Product ${i}` } }) }, routes() { this.namespace = 'api'; this.get('products', ({ products }, request) => { return products.all(); }); }, }); }); afterEach(() => { server.shutdown(); }); it('shows the products', function() { cy.visit('/'); cy.get('li.product').should('have.length', 5); });

Then, finally, we’ll use createList() to quickly create the 5 products that our test needs to pass.

Let’s do this:

// homepage.test.js
import { Server, Model, Factory } from 'miragejs';

let server;

beforeEach(() => {
  server = new Server({
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`;
        },
      }),
    },
    routes() {
      this.namespace = 'api';
      this.get('products', ({ products }, request) => {
        return products.all();
      });
    },
  });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList("product", 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

So when we run our test, it passes!
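To make the role of the factory more concrete, here is a minimal plain-JavaScript sketch of the idea behind createList(): evaluate each factory attribute with a running index and collect the results. The buildList helper and its zero-based index are assumptions made for this illustration and are not Mirage’s implementation; Mirage also stores the records in its in-memory database and assigns them ids.

```javascript
// Hypothetical stand-in for a factory definition: each attribute
// is a function of the sequence index.
const productFactory = {
  name(i) {
    return `Product ${i}`;
  },
};

// Hypothetical helper that mimics the idea of createList():
// build `count` plain objects by evaluating every factory attribute.
function buildList(factory, count) {
  const records = [];
  for (let i = 0; i < count; i++) {
    const record = {};
    for (const [key, attribute] of Object.entries(factory)) {
      // Function attributes receive the index; static values are copied as-is.
      record[key] = typeof attribute === 'function' ? attribute(i) : attribute;
    }
    records.push(record);
  }
  return records;
}

const products = buildList(productFactory, 5);
console.log(products.length); // 5
console.log(products[0].name); // Product 0 (in this sketch)
```

The point is only that one attribute function is enough to produce any number of distinct records, which is why the test can ask for exactly the 5 products it asserts on.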

Note: After each test, Mirage’s server is shut down and reset, so none of this state will leak across tests.

Avoiding Multiple Mirage Servers

If you have been following along with this series, you’ll have noticed that when we used Mirage in development to intercept our network requests, we had a server.js file in the root of our app where we set up Mirage. In the spirit of DRY (Don’t Repeat Yourself), I think it would be good to reuse that server definition instead of maintaining two separate Mirage instances for development and testing. To do this (in case you don’t have a server.js file already), just create one in your project’s src directory.

Note: Your structure will differ if you are using a JavaScript framework, but the general idea is to set up the server.js file in the src root of your project.

So with this new structure, we’ll export a function in server.js that is responsible for creating our Mirage server instance. Let’s do that:

// src/server.js
export function makeServer() {
  /* Mirage code goes here */
}

Let’s complete the implementation of the makeServer function by removing the Mirage JS server we created in homepage.test.js and adding it to the makeServer function body:

// src/server.js
import { Server, Model, Factory } from 'miragejs';

export function makeServer() {
  let server = new Server({
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`;
        },
      }),
    },
    routes() {
      this.namespace = 'api';
      this.get('/products', ({ products }) => {
        return products.all();
      });
    },
    seeds(server) {
      server.createList('product', 5);
    },
  });

  return server;
}

Now all you have to do is import makeServer in your test. Using a single Mirage Server instance is cleaner; this way you don’t have to maintain two server instances for both development and test environments.

After importing the makeServer function, our test should now look like this:

// homepage.test.js
import { makeServer } from '/path/to/server';

let server;

beforeEach(() => {
  server = makeServer();
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

So we now have a central Mirage server that serves us in both development and testing. You can also use the makeServer function to start Mirage in development (see the first part of this series).

Your Mirage code should not find its way into production. Therefore, depending on your build setup, you should start Mirage only in development mode.

Note: Read my article on how to set up API Mocking with Mirage and Vue.js to see how I did that in Vue so you could replicate in whatever front-end framework you use.
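As a rough illustration of that guard, the sketch below starts the mock server only when the build mode is development. The startMirageIfNeeded name, the fakeMakeServer stub, and the idea of passing in a mode string (which many bundlers expose as process.env.NODE_ENV) are assumptions for this example; your framework may expose the mode differently.

```javascript
// Hypothetical guard: decide whether the mock server should start.
// `nodeEnv` would typically come from process.env.NODE_ENV in a bundler setup.
function startMirageIfNeeded(nodeEnv, makeServer) {
  if (nodeEnv !== 'development') {
    // Production (and any other mode) never starts the mock server.
    return null;
  }
  return makeServer();
}

// Stub standing in for the real makeServer exported from src/server.js.
const fakeMakeServer = () => ({ started: true });

console.log(startMirageIfNeeded('production', fakeMakeServer)); // null
console.log(startMirageIfNeeded('development', fakeMakeServer)); // { started: true }
```

Passing makeServer in as an argument keeps the guard easy to check without loading Mirage at all.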

Testing Environment

Mirage has two environments: development (default) and test. In development mode, the Mirage server has a default response time of 400ms (which you can customize; see the third article of this series for that), logs all server responses to the console, and loads the development seeds.

However, in the test environment, we have:

0 delays to keep our tests fast

Mirage suppresses all logs so as not to pollute your CI logs

Mirage will also ignore the seeds() function so that your seed data can be used solely for development but won’t leak into your tests. This helps keep your tests deterministic.

Let’s update our makeServer so we can benefit from the test environment. To do that, we’ll make it accept an object with an environment option (we will default it to development and override it in our test). Our server.js should now look like this:

// src/server.js
import { Server, Model, Factory } from 'miragejs';

export function makeServer({ environment = 'development' } = {}) {
  let server = new Server({
    environment,
    models: {
      product: Model,
    },
    factories: {
      product: Factory.extend({
        name(i) {
          return `Product ${i}`;
        },
      }),
    },
    routes() {
      this.namespace = 'api';
      this.get('/products', ({ products }) => {
        return products.all();
      });
    },
    seeds(server) {
      server.createList('product', 5);
    },
  });

  return server;
}

Also note that we are passing the environment option to the Mirage server instance using the ES6 property shorthand. Now with this in place, let’s update our test to override the environment value to test. Our test now looks like this:

// homepage.test.js
import { makeServer } from '/path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});
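If the makeServer signature looks unfamiliar, the following sketch isolates the two language features it relies on: a destructured parameter with a default value, and the property shorthand. The resolveOptions function is a hypothetical stand-in that leaves Mirage out entirely.

```javascript
// Same signature shape as makeServer, minus Mirage itself.
// The trailing `= {}` lets callers omit the argument altogether.
function resolveOptions({ environment = 'development' } = {}) {
  // Property shorthand: { environment } means { environment: environment }.
  return { environment };
}

console.log(resolveOptions()); // { environment: 'development' }
console.log(resolveOptions({})); // { environment: 'development' }
console.log(resolveOptions({ environment: 'test' })); // { environment: 'test' }
```

This is why existing calls such as makeServer() keep working in development while the test can opt in with makeServer({ environment: 'test' }).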

AAA Testing

Mirage encourages a standard for testing called the triple-A or AAA testing approach. This stands for Arrange, Act and Assert. You can already see this structure in our test above:

it("shows all the products", function () {
  // ARRANGE
  server.createList("product", 5)

  // ACT
  cy.visit("/")

  // ASSERT
  cy.get("li.product").should("have.length", 5)
})

You might need to break this pattern but 9 times out of 10 it should work just fine for your tests.

Let’s Test Errors

So far, we’ve tested our homepage to see if it has 5 products. But what if the server is down or something goes wrong while fetching the products? We don’t need to wait for the server to be down to work on what our UI would look like in such a case. We can simply simulate that scenario with Mirage.

Let’s return a 500 (Server error) when the user is on the homepage. As we have seen in a previous article, to customize Mirage responses we make use of the Response class. Let’s import it and write our test.

// homepage.test.js
import { Response } from "miragejs"

it('shows an error when fetching products fails', function() {
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

What a world of flexibility! We just override the response Mirage would return in order to test how our UI would display if it failed fetching products. Our overall homepage.test.js file would now look like this:

// homepage.test.js
import { Response } from 'miragejs';
import { makeServer } from 'path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

it('shows an error when fetching products fails', function() {
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

Note that the modification we made to the /api/products handler only lives in our test. That means the handler still works as previously defined when you are in development mode.

So when we run our tests, both should pass.

Note: It’s worth noting that the elements we are querying for in Cypress must exist in your front-end UI. Cypress doesn’t create HTML elements for you.
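For the error test to pass, the front-end has to turn a failed response into something it can render inside div.error. The sketch below shows one way that decision might look. The productsViewState helper, the fallback message, and the assumption that a successful payload has the shape { products: [...] } are all illustrative and not taken from the app in this series.

```javascript
// Hypothetical helper: map an HTTP status and parsed body to UI state.
function productsViewState(status, body) {
  if (status >= 200 && status < 300) {
    // Assumed success payload shape: { products: [...] }
    return { products: body.products, error: null };
  }
  // Prefer the server's own message, with a generic fallback.
  return { products: [], error: body.error || 'Something went wrong' };
}

const failed = productsViewState(500, { error: 'Can’t fetch products at this time' });
console.log(failed.error); // Can’t fetch products at this time

const loaded = productsViewState(200, { products: [{ name: 'Product 0' }] });
console.log(loaded.products.length); // 1
```

A component would then render a li.product per entry in products, or a div.error containing the error text, which are exactly the elements the two tests query.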

Testing The Product Detail Page

Finally, let’s test the UI of the product detail page. So this is what we are testing for:

User can see the product name on the product detail page

Let’s get to it. First, we create a new test to test this user flow.

Here is the test:

it("shows the product’s name on the detail route", function() {
  let product = server.create('product', {
    name: 'Korg Piano',
  });

  cy.visit(`/${product.id}`);
  cy.get('h1').should('contain', 'Korg Piano');
});

Your homepage.test.js should finally look like this.

// homepage.test.js
import { Response } from 'miragejs';
import { makeServer } from 'path/to/server';

let server;

beforeEach(() => {
  server = makeServer({ environment: 'test' });
});

afterEach(() => {
  server.shutdown();
});

it('shows the products', function() {
  server.createList('product', 5);
  cy.visit('/');
  cy.get('li.product').should('have.length', 5);
});

it('shows an error when fetching products fails', function() {
  server.get('/products', () => {
    return new Response(
      500,
      {},
      { error: "Can’t fetch products at this time" }
    );
  });

  cy.visit('/');
  cy.get('div.error').should('contain', "Can’t fetch products at this time");
});

it("shows the product’s name on the detail route", function() {
  let product = server.create('product', {
    name: 'Korg Piano',
  });

  cy.visit(`/${product.id}`);
  cy.get('h1').should('contain', 'Korg Piano');
});

When you run your tests, all three should pass.

Wrapping Up

It’s been fun showing you the inner workings of Mirage JS in this series. I hope you are now better equipped to improve your front-end development experience by using Mirage to mock out your back-end server. I also hope you’ll use the knowledge from this article to write more acceptance/UI/end-to-end tests for your front-end applications.

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

Part 4: Using Mirage JS And Cypress For UI Testing


Mirage JS Deep Dive: Using Mirage JS And Cypress For UI Testing (Part 4)

About The Author

Kelvin Omereshone is the CTO at Quru Lab. Kelvin was formerly a Front-end engineer at myPadi.ng. He’s the creator of NUXtjs Africa community and very passionate … More about Kelvin …

In this final part of the Mirage JS Deep Dive series, we will put everything we’ve learned in the previous parts to use and learn how to perform UI tests with Mirage JS.

One of my favorite quotes about software testing is from the Flutter documentation. It says:

“How can you ensure that your app continues to work as you add more features or change existing functionality? By writing tests.”

On that note, this last part of the Mirage JS Deep Dive series will focus on using Mirage to test your JavaScript front-end application.

Note: This article assumes a Cypress environment. Cypress is a testing framework for UI testing. You can, however, transfer the knowledge here to whatever UI testing environment or framework you use.

Read Previous Parts Of The Series:

Part 1: Understanding Mirage JS Models And Associations

Part 2: Understanding Factories, Fixtures And Serializers

Part 3: Understanding Timing, Response And Passthrough

UI Tests Primer

UI (User Interface) testing is a form of acceptance testing done to verify the user flows of your front-end application. The emphasis of these tests is on the end user, that is, the actual person who will be interacting with your web application on a variety of devices ranging from desktops and laptops to mobile devices. These users interact with your application using input devices such as a keyboard, mouse, or touch screen. UI tests, therefore, are written to mimic the user’s interaction with your application as closely as possible.

Let’s take an e-commerce website for example. A typical UI test scenario would be:

The user can view the list of products when visiting the homepage.

Other UI test scenarios might be:

The user can see the name of a product on the product’s detail page.

The user can click on the “add to cart” button.

The user can checkout.

You get the idea, right?

When writing UI tests, you will mostly be relying on your back-end states, i.e. did the server return the products or an error? The role Mirage plays here is to make those server states available for you to tweak as you need. So instead of making an actual request to your production server in your UI tests, you make the request to the Mirage mock server.

For the remaining part of this article, we will be performing UI tests on a fictitious e-commerce web application UI. So let’s get started.

Our First UI Test

As earlier stated, this article assumes a Cypress environment. Cypress makes testing UI on the web fast and easy. You could simulate clicks and navigation and you can programmatically visit routes in your application. See the docs for more on Cypress.

So, assuming Cypress and Mirage are available to us, let’s start off by defining a proxy function for your API requests. We can do so in the support/index.js file of our Cypress setup. Just paste the following code in:

// cypress/support/index.js
Cypress.on("window:before:load", (win) => {
  win.handleFromCypress = function (request) {
    return fetch(request.url, {
      method: request.method,
      headers: request.requestHeaders,
      body: request.requestBody,
    }).then((res) => {
      let content =
        res.headers.map["content-type"] === "application/json"
          ? res.json()
          : res.text()
      return new Promise((resolve) => {
        content.then((body) => resolve([res.status, res.headers, body]))
      })
    })
  }
})
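One detail of the proxy worth a closer look: it calls res.json() only when the content-type header is exactly "application/json". Servers often append parameters such as a charset, in which case a strict comparison falls through to res.text(). The sketch below shows a more tolerant check you might consider; isJsonContentType is a hypothetical helper and not part of Mirage or Cypress.

```javascript
// Hypothetical helper: treat the value as JSON when its media type is
// application/json, ignoring parameters like "; charset=utf-8" and letter case.
function isJsonContentType(value) {
  if (typeof value !== 'string') {
    return false; // header missing
  }
  const mediaType = value.split(';')[0].trim().toLowerCase();
  return mediaType === 'application/json';
}

console.log(isJsonContentType('application/json')); // true
console.log(isJsonContentType('application/json; charset=utf-8')); // true
console.log(isJsonContentType('text/html')); // false
console.log(isJsonContentType(undefined)); // false
```

Whether you need this depends on what your handlers return; if every response carries a bare application/json header, the strict comparison in the proxy behaves the same.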

Then, in your app bootstrapping file (main.js for Vue, index.js for React), we’ll use Mirage to proxy your app’s API requests to the handleFromCypress function only when Cypress is running. Here is the code for that: