#Database migration with Spring boot

Explore tagged Tumblr posts

Text

Legacy Software Modernization Services In India – NRS Infoways

In today’s hyper‑competitive digital landscape, clinging to outdated systems is no longer an option. Legacy applications can slow innovation, inflate maintenance costs, and expose your organization to security vulnerabilities. NRS Infoways bridges the gap between yesterday’s technology and tomorrow’s possibilities with comprehensive Software Modernization Services In India that revitalize your core systems without disrupting day‑to‑day operations.

Why Modernize?

Boost Performance & Scalability

Legacy architectures often struggle under modern workloads. By re‑architecting or migrating to cloud‑native frameworks, NRS Infoways unlocks the flexibility you need to scale on demand and handle unpredictable traffic spikes with ease.

Reduce Technical Debt

Old codebases are costly to maintain. Our experts refactor critical components, streamline dependencies, and implement automated testing pipelines, dramatically lowering long‑term maintenance expenses.

Strengthen Security & Compliance

Obsolete software frequently harbors unpatched vulnerabilities. We embed industry‑standard security protocols and data‑privacy controls to safeguard sensitive information and keep you compliant with evolving regulations.

Enhance User Experience

Customers expect snappy, intuitive interfaces. We upgrade clunky GUIs into sleek, responsive designs—whether for web, mobile, or enterprise portals—boosting user satisfaction and retention.

Our Proven Modernization Methodology

1. Deep‑Dive Assessment

We begin with an exhaustive audit of your existing environment—code quality, infrastructure, DevOps maturity, integration points, and business objectives. This roadmap pinpoints pain points, ranks priorities, and plots the most efficient modernization path.

2. Strategic Planning & Architecture

Armed with data, we design a future‑proof architecture. Whether it’s containerization with Docker/Kubernetes, serverless microservices, or hybrid-cloud setups, each blueprint aligns performance goals with budget realities.

3. Incremental Refactoring & Re‑engineering

To mitigate risk, we adopt a phased approach. Modules are refactored or rewritten in modern languages—often leveraging Java Spring Boot, .NET Core, or Node.js—while maintaining functional parity. Continuous integration pipelines ensure rapid, reliable deployments.

4. Data Migration & Integration

Smooth, loss‑less data transfer is critical. Our team employs advanced ETL processes and secure APIs to migrate databases, synchronize records, and maintain interoperability with existing third‑party solutions.

5. Rigorous Quality Assurance

Automated unit, integration, and performance tests catch issues early. Penetration testing and vulnerability scans validate that the revamped system meets stringent security and compliance benchmarks.

6. Go‑Live & Continuous Support

Once production‑ready, we orchestrate a seamless rollout with minimal downtime. Post‑deployment, NRS Infoways provides 24 × 7 monitoring, performance tuning, and incremental enhancements so your modernized platform evolves alongside your business.

Key Differentiators

Domain Expertise: Two decades of transforming systems across finance, healthcare, retail, and logistics.

Certified Talent: AWS, Azure, and Google Cloud‑certified architects ensure best‑in‑class cloud adoption.

DevSecOps Culture: Security baked into every phase, backed by automated vulnerability management.

Agile Engagement Models: Fixed‑scope, time‑and‑material, or dedicated team options adapt to your budget and timeline.

Result‑Driven KPIs: We measure success via reduced TCO, improved response times, and tangible ROI, not just code delivery.

Success Story Snapshot

A leading Indian logistics firm grappled with a decade‑old monolith that hindered real‑time shipment tracking. NRS Infoways migrated the application to a microservices architecture on Azure, consolidating disparate data silos and introducing RESTful APIs for third‑party integrations. The results? A 40 % reduction in server costs, 60 % faster release cycles, and a 25 % uptick in customer satisfaction scores within six months.

Future‑Proof Your Business Today

Legacy doesn’t have to mean liability. With NRS Infoways’ Legacy Software Modernization Services In India, you gain a robust, scalable, and secure foundation ready to tackle tomorrow’s challenges—whether that’s AI integration, advanced analytics, or global expansion.

Ready to transform?

Contact us for a free modernization assessment and discover how our Software Modernization Services In India can accelerate your digital journey, boost operational efficiency, and drive sustainable growth.

0 notes

Text

Java Cloud Development Company

Looking for a reliable Java cloud development company? Associative in Pune, India offers scalable, secure, and enterprise-grade cloud solutions using Java and modern frameworks.

In today's fast-paced digital landscape, cloud-native applications are no longer a luxury—they're a necessity. Java, with its robustness, portability, and security, remains a preferred language for developing enterprise-grade cloud solutions. If you're searching for a Java cloud development company that blends experience, innovation, and scalability, Associative, based in Pune, India, is your trusted technology partner.

Why Choose Java for Cloud Development?

Java has long been recognized for its platform independence, scalability, and extensive ecosystem. When paired with cloud platforms like Amazon Web Services (AWS) and Google Cloud Platform (GCP), Java enables businesses to build highly resilient, distributed systems with ease.

Benefits of using Java for cloud-based applications:

Object-oriented, secure, and stable

Strong community support

Excellent performance for backend services

Seamless integration with cloud services and databases

Compatibility with containerization tools like Docker and Kubernetes

Associative – Your Expert Java Cloud Development Company

At Associative, we specialize in building robust and scalable Java-based cloud solutions tailored to your business needs. With a proven track record across multiple industries, our team leverages frameworks like Spring Boot, cloud platforms like AWS and GCP, and robust database solutions like Oracle and MySQL to deliver end-to-end cloud applications.

Our Java Cloud Development Services Include:

Cloud-native application development using Java and Spring Boot

Migration of legacy Java applications to cloud platforms

API development & integration for scalable microservices

Serverless architecture & deployment on AWS Lambda and GCP Cloud Functions

Containerization with Docker & orchestration using Kubernetes

Database integration with Oracle, MySQL, and PostgreSQL

Continuous Integration and Continuous Deployment (CI/CD) pipelines

Cloud security and compliance implementation

Industries We Serve

We provide cloud-based Java solutions to various industries including:

Fintech and Banking

E-commerce and Retail

Healthcare and Education

Logistics and Supply Chain

Real Estate and Hospitality

Why Clients Trust Associative

Location Advantage: Based in Pune, India – a growing tech hub

Cross-Technology Expertise: Java, Spring Boot, AWS, GCP, Oracle

Agile Delivery: Scalable, flexible, and cost-effective solutions

End-to-End Services: From planning to deployment and support

Certified Developers: Skilled professionals in cloud and Java technologies

Let’s Build the Future on Cloud with Java

If you're looking to digitally transform your business through cloud computing and need a reliable Java cloud development company, Associative is here to help. Whether it's migrating your legacy system, developing cloud-native applications, or building microservices, we bring the technology and expertise to accelerate your growth.

youtube

0 notes

Text

Software Engineer Resume Examples That Land 6-Figure Jobs

Introduction: Why Your Resume Is Your First Line of Code

When it comes to landing a 6-figure software engineering job, your resume isn’t just a document—it’s your personal algorithm for opportunity.

Recruiters spend an average of 6–8 seconds on an initial resume scan, meaning you have less time than a function call to make an impression. Whether you're a backend expert, front-end developer, or full-stack wizard, structuring your resume strategically can mean the difference between “Interview scheduled” and “Application rejected.”

This guide is packed with real-world engineering resume examples and data-backed strategies to help you craft a resume that breaks through the noise—and lands you the role (and salary) you deserve.

What Makes a Software Engineer Resume Worth 6 Figures?

Before diving into examples, let's outline the key ingredients that top-tier employers look for in high-paying engineering candidates:

Clear technical specialization (e.g., front-end, DevOps, cloud)

Strong project outcomes tied to business value

Demonstrated leadership or ownership

Modern, ATS-friendly formatting

Tailored content for the job role

According to LinkedIn’s 2024 Emerging Jobs Report, software engineers with cloud, AI/ML, and DevOps experience are the most in-demand, with average salaries exceeding $120,000 annually in the U.S.

Structuring the Perfect Software Engineer Resume

Here’s a proven framework used in many successful engineering resume examples that landed six-figure jobs:

1. Header and Contact Information

Keep it clean and professional. Include:

Full name

Email (professional)

GitHub/Portfolio/LinkedIn URL

Phone number

2. Professional Summary (3–4 Lines)

Use this space to summarize your experience, key technologies, and what makes you stand out.

Example: "Full-stack software engineer with 7+ years of experience building scalable web applications using React, Node.js, and AWS. Passionate about clean code, continuous delivery, and solving real-world business problems."

3. Technical Skills (Grouped by Category)

Format matters here—grouping helps recruiters scan quickly.

Languages: JavaScript, Python, Java

Frameworks: React, Django, Spring Boot

Tools/Platforms: Git, Docker, AWS, Kubernetes, Jenkins

Databases: MySQL, MongoDB, PostgreSQL

4. Experience (Show Impact, Not Just Tasks)

Use action verbs + quantifiable results + technologies used.

Example:

Designed and implemented a microservices architecture using Spring Boot and Docker, improving system uptime by 35%.

Migrated legacy systems to AWS, cutting infrastructure costs by 25%.

Led a team of 4 engineers to launch a mobile banking app that acquired 100,000+ users in 6 months.

5. Education

List your degree(s), university name, and graduation date. If you're a recent grad, include relevant coursework.

6. Projects (Optional but Powerful)

Projects are crucial for junior engineers or those transitioning into tech. Highlight the challenge, your role, the tech stack, and outcomes.

Real-World Engineering Resume Examples (For Inspiration)

Example 1: Backend Software Engineer Resume (Mid-Level)

Summary: Backend developer with 5+ years of experience in building RESTful APIs using Python and Django. Focused on scalable architecture and robust database design.

Experience:

Developed a REST API using Django and PostgreSQL, powering a SaaS platform with 10k+ daily users.

Implemented CI/CD pipelines with Jenkins and Docker, reducing deployment errors by 40%.

Skills: Python, Django, PostgreSQL, Git, Docker, Jenkins, AWS

Why It Works: It’s direct, results-focused, and highlights technical depth aligned with backend engineering roles.

Example 2: Front-End Engineer Resume (Senior Level)

Summary: Senior front-end developer with 8 years of experience crafting responsive and accessible web interfaces. Strong advocate of performance optimization and user-centered design.

Experience:

Led UI redevelopment of an e-commerce platform using React, increasing conversion rate by 22%.

Integrated Lighthouse audits to enhance Core Web Vitals, resulting in 90+ scores across all pages.

Skills: JavaScript, React, Redux, HTML5, CSS3, Webpack, Jest

Why It Works: Focuses on user experience, performance metrics, and modern front-end tools—exactly what senior roles demand.

Example 3: DevOps Engineer Resume (6-Figure Role)

Summary: AWS-certified DevOps engineer with 6 years of experience automating infrastructure and improving deployment pipelines for high-traffic platforms.

Experience:

Automated infrastructure provisioning using Terraform and Ansible, reducing setup time by 70%.

Optimized Kubernetes deployment workflows, enabling blue-green deployments across services.

Skills: AWS, Docker, Kubernetes, Terraform, CI/CD, GitHub Actions

Why It Works: It highlights automation, scalability, and cloud—all high-value skills for 6-figure DevOps roles.

ATS-Proofing Your Resume: Best Practices

Applicant Tracking Systems are a major hurdle—especially in tech. Here’s how to beat them:

Use standard headings like “Experience” or “Skills”

Avoid tables, columns, or excessive graphics

Use keywords from the job description naturally

Save your resume as a PDF unless instructed otherwise

Many successful candidates borrow formatting cues from high-performing engineering resume examples available on reputable sites like GitHub, Resume.io, and Zety.

Common Mistakes That Can Cost You the Job

Avoid these pitfalls if you’re targeting 6-figure roles:

Listing outdated or irrelevant tech (e.g., Flash, VBScript)

Using vague responsibilities like “worked on the website”

Failing to show impact or metrics

Forgetting to link your GitHub or portfolio

Submitting the same resume to every job

Each job should have a slightly tailored resume. The effort pays off.

Bonus Tips: Add a Competitive Edge

Certifications: AWS, Google Cloud, Kubernetes, or relevant coding bootcamps

Contributions to open source projects on GitHub

Personal projects with real-world use cases

Blog or technical writing that demonstrates thought leadership

Conclusion: Turn Your Resume Into a Career-Launching Tool

Crafting a winning software engineer resume isn’t just about listing skills—it’s about telling a compelling story of how you create value, solve problems, and ship scalable solutions.

The best engineering resume examples strike a perfect balance between clarity, credibility, and customization. Whether you're a bootcamp grad or a seasoned engineer, investing time into your resume is one of the highest ROI career moves you can make.

👉 Visit our website for professionally designed templates, expert tips, and more examples to help you land your dream role—faster.

0 notes

Text

How to Build Your First Application on AWS

Amazon Web Services (AWS) provides a robust platform for building, deploying, and scaling applications. Whether you're a developer or a beginner in cloud computing, AWS offers tools and services to simplify the process. This guide will walk you through building your first application on AWS step by step.

Why Build Applications on AWS?

Scalability: Handle traffic spikes effortlessly.

Cost-Efficiency: Pay only for what you use.

Reliability: AWS ensures uptime with its global infrastructure.

Ease of Use: User-friendly services like Elastic Beanstalk and Lightsail simplify development.

Step 1: Set Up Your AWS Account

Before you begin, create an AWS account if you don’t already have one.

Go to AWS Signup Page.

Enter your email, set up your password, and provide payment details (the Free Tier allows free usage for many services).

Enable MFA (Multi-Factor Authentication) for added security.

Step 2: Choose Your Application Type

Define the type of application you want to build:

Web Application: A dynamic website or backend for mobile apps.

API: Create RESTful APIs using AWS Lambda or API Gateway.

Static Website: Host HTML, CSS, and JavaScript files.

Step 3: Select the Right AWS Services

AWS offers numerous services, but for a basic application, these are the essentials:

1. Compute Service (EC2 or Elastic Beanstalk):

Amazon EC2: Virtual machines for full control over deployment.

Elastic Beanstalk: Managed service to deploy web apps quickly.

2. Storage Service (S3):

Use Amazon S3 to store application assets, such as images and data files.

3. Database Service (RDS or DynamoDB):

RDS: For relational databases like MySQL or PostgreSQL.

DynamoDB: For NoSQL databases.

4. Networking (Route 53):

Manage DNS and custom domains for your app.

Step 4: Develop Locally

Build the initial version of your application on your local machine:

Tech Stack Suggestions:

Frontend: HTML, CSS, JavaScript, or frameworks like React and Angular.

Backend: Node.js, Python (Django/Flask), or Java (Spring Boot).

Database: SQLite for development, migrate to RDS or DynamoDB for production.

Step 5: Deploy Your Application

Once your app is ready, deploy it to AWS. Here's how:

Option 1: Using Elastic Beanstalk (Easiest Method):

Log in to the AWS Management Console.

Navigate to Elastic Beanstalk.

Create a new application, upload your app’s code (ZIP file), and launch it.

AWS automatically provisions EC2 instances, sets up a load balancer, and configures scaling.

Option 2: Using Amazon EC2 (Manual Method):

Launch an EC2 instance from the AWS Console.

SSH into the instance and install necessary dependencies (e.g., Node.js or Python).

Deploy your application files to the server.

Configure a web server like Nginx or Apache to serve your application.

Option 3: Using AWS Lightsail (For Beginners):

Navigate to AWS Lightsail.

Create a new instance with pre-configured blueprints like Node.js or WordPress.

Upload and run your application files.

Step 6: Connect Your Domain

Point your domain name to your application using Route 53:

Purchase or transfer a domain to AWS Route 53.

Set up an A record to point to your application’s public IP or load balancer.

Step 7: Test Your Application

Before going live, thoroughly test your application:

Functionality Testing: Ensure all features work as intended.

Load Testing: Simulate high traffic using tools like AWS CloudWatch or Locust.

Security Testing: Check for vulnerabilities using AWS Inspector.

Step 8: Monitor and Optimize

AWS provides tools to monitor performance and optimize your application:

AWS CloudWatch: Monitor app performance and resource usage.

AWS Trusted Advisor: Optimize costs, improve performance, and ensure security.

Auto Scaling: Scale resources automatically based on traffic.

Step 9: Scale and Grow

As your application gains users, AWS makes it easy to scale:

Horizontal Scaling: Add more servers via load balancers.

Vertical Scaling: Upgrade server specifications.

Global Distribution: Use AWS CloudFront to serve content globally with low latency.

Start your AWS journey today! Watch this step-by-step YouTube Live Session on AWS Application Development for detailed guidance and live demonstrations.

0 notes

Text

Assess Application and Cluster Readiness

Review Application Dependencies:

Analyze libraries, JDK versions, frameworks (e.g., Spring Boot, Jakarta EE), and external services (databases, APIs).

Identify any Kubernetes-specific configurations (e.g., ConfigMaps, Secrets, environment variables).

Containerization:

Ensure your Java app is containerized using tools like Docker.

Update the Dockerfile if necessary (e.g., for JDK/JRE updates or changes in base image).

2. Prepare Kubernetes Environment

Target Cluster Validation:

Ensure the target Kubernetes cluster is set up and ready.

Check for compatibility with the current cluster’s API version and resources.

Namespace Setup:

Create equivalent namespaces, RBAC roles, and policies in the target cluster.

Storage:

Verify Persistent Volume Claims (PVCs) and ensure similar storage classes are available.

3. Backup and Export Resources

Kubernetes Resources:

Export all application-related resources using kubectl:bashCopy codekubectl get all -n <namespace> -o yaml > resources.yaml

Persistent Data:

Back up database data or persistent volumes using tools like Velero or rsync.

4. Migrate Application Configurations

ConfigMaps and Secrets:

Export existing configurations:bashCopy codekubectl get configmap -n <namespace> -o yaml > configmap.yaml kubectl get secret -n <namespace> -o yaml > secret.yaml

Reapply them in the new cluster:bashCopy codekubectl apply -f configmap.yaml kubectl apply -f secret.yaml

5. Deploy to Target Cluster

Deploy Application:

Use Helm charts, Kubernetes manifests, or CI/CD pipelines to deploy the Java application to the new cluster.

Update the kubeconfig for the target cluster:bashCopy codekubectl config use-context <target-cluster-context> kubectl apply -f resources.yaml

Persistent Volumes:

Ensure data is restored to the persistent volumes if needed.

6. Test the Migration

Validation:

Ensure the application is running as expected (pods, services, and networking).

Run integration and regression tests.

Connectivity:

Validate external service integrations (e.g., databases, APIs).

Check ingress configurations for public access.

7. Cutover and Cleanup

Switch Traffic:

Update DNS or external load balancer to direct traffic to the new cluster.

Monitor:

Use tools like Prometheus, Grafana, or ELK Stack to monitor application health.

Clean Up Old Environment:

After verifying stability, clean up resources in the old cluster.

Tools to Assist in Migration

Velero: For backup and restore of Kubernetes resources and persistent volumes.

Helm: To manage application deployments and upgrades.

Kubernetes Dashboard: For real-time cluster monitoring.

Kubectl Plugins: To enhance CLI functionality.

If you provide more details about the migration (e.g., specific cloud providers or Kubernetes environments), I can offer more tailored advice!

4o

You said:

Migration of 5000 files per hr fix

ChatGPT said:

ChatGPT

If you're migrating a large number of files (e.g., 5000 files per hour) and encountering performance bottlenecks, you can improve the speed and reliability of your file migration by addressing several key areas. Here's a step-by-step guide to optimize file transfer:

1. Identify and Analyze Bottlenecks

File System Bottlenecks:

Check the source and destination disk I/O performance.

Ensure the files are not locked or being used during migration.

Network Bandwidth:

Measure your available network bandwidth using tools like iperf and ensure it can handle the expected load.

File Metadata Overhead:

Small files can add significant overhead due to metadata operations (e.g., file creation, permissions).

2. Optimize Transfer Mechanisms

Use Parallel Processing:

Transfer multiple files concurrently using multithreaded or parallel tools like:

rsync with --max-size and --parallel options.

GNU Parallel for concurrent file transfers.

bashCopy codefind /source/directory -type f | parallel -j8 rsync -a {} /destination/directory

Use a custom script or a library like Python's concurrent.futures for more control.

Batch File Transfers:

Group files into batches (e.g., tar archives) to reduce the overhead of transferring many small files individually.bashCopy codetar -cvf files.tar /source/files && rsync files.tar /destination Extract the files on the destination:bashCopy codetar -xvf files.tar

3. Ensure Network Optimization

Compression:

Compress files during transfer to reduce data volume:bashCopy codersync -az /source/directory /destination/directory

Increase TCP Window Size:

Tweak TCP settings for large transfers using tools like scp or rsync with buffer options:bashCopy codersync --bwlimit=0 --whole-file /source /destination

Use Faster Protocols:

Use optimized file transfer protocols like Rclone, Aspera, or SFTP for high-speed transfer.

4. Improve Source and Destination Performance

Disk Performance:

Optimize disk reads and writes using SSDs for temporary storage or caches.

Ensure the file system supports efficient file handling (e.g., ext4, XFS).

File System Tuning:

For very large numbers of files, tune parameters like inode_cache to handle metadata efficiently.

5. Error Handling and Retry Logic

Retry Failed Transfers:

Use tools like rsync to retry failed files automatically:bashCopy codersync -avz --partial --progress /source /destination

Logging and Monitoring:

Log all transferred files and

4o

You said:

File trsnsfer to s3 improve

ChatGPT said:

ChatGPT

Improving file transfer to Amazon S3 requires addressing both performance and reliability. Below are strategies and tools to optimize large-scale or high-volume file transfers:

1. Use AWS-Specific Tools

AWS CLI with Parallelism:

Use the --multipart-chunk-size-mb and --parallel flags to upload files in parallel and in chunks:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD_IA --region <region>

Enable parallel transfers: Update your AWS CLI configuration file (~/.aws/config) to increase concurrency:plaintextCopy code[default] s3 = max_concurrent_requests = 10 max_queue_size = 1000 multipart_threshold = 64MB multipart_chunksize = 64MB

AWS DataSync:

Use AWS DataSync for automated, high-performance, large-scale transfers with minimal manual intervention. DataSync can also manage retry and error handling.

2. Optimize S3 Uploads

Multipart Upload:

Use multipart uploads for files larger than 100 MB to improve transfer speed and reliability.

Example using AWS CLI:bashCopy codeaws s3api create-multipart-upload --bucket your-bucket-name --key file_key

Automate multipart uploads with the AWS SDK or tools like boto3 for Python.

Use the Correct Storage Class:

Choose the appropriate storage class to save costs and improve upload speeds:

STANDARD (default): High performance for frequently accessed data.

INTELLIGENT_TIERING: Automatically optimizes storage costs for infrequently accessed files.

3. Improve Network Performance

Enable Transfer Acceleration:

Use S3 Transfer Acceleration for faster uploads over long distances by leveraging AWS edge locations:bashCopy codeaws s3 cp /local/path s3://your-bucket-name --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

Compression:

Compress files before transferring to reduce data size:bashCopy codetar -czvf archive.tar.gz /local/path && aws s3 cp archive.tar.gz s3://your-bucket-name

Optimize Network:

Use AWS Direct Connect or a high-bandwidth internet connection for large-scale transfers.

Monitor latency and bandwidth with tools like iperf.

4. Automate and Parallelize Transfers

Rclone:

Use rclone for high-speed S3-compatible transfers with parallelism and retry capabilities:bashCopy coderclone copy /local/path s3:your-bucket-name --transfers 16 --s3-upload-concurrency 16

GNU Parallel:

Transfer files concurrently by splitting file lists:bashCopy codefind /local/path -type f | parallel -j10 aws s3 cp {} s3://your-bucket-name

5. Error Handling and Retry

Retry Mechanisms:

Use tools or scripts that automatically retry failed uploads.

AWS CLI has built-in retry logic; increase retries if necessary:bashCopy codeaws s3 cp --cli-read-timeout 600 --cli-connect-timeout 600 /local/path s3://your-bucket-name

Chunked Retry:

Break large transfers into smaller, manageable chunks and retry failed chunks only.

6. Monitor and Optimize Transfers

AWS CloudWatch Metrics:

Monitor transfer performance and error rates in real-time.

S3 Inventory:

Use S3 inventory reports to validate uploaded files.

AWS SDKs or Custom Scripts:

Build custom monitoring and retry logic with SDKs like boto3 (Python).

Example Optimized Upload Command

bash

Copy code

aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD --region us-east-1 --cli-read-timeout 600 --cli-connect-timeout 600

When to Use Snowball or Snowmobile

For extremely large datasets (terabytes or petabytes), use AWS Snowball or AWS Snowmobile for offline data transfer to S3.

These steps can dramatically improve the efficiency and reliability of your file transfer process to S3. Let me know if you need help implementing any of these!

4o

You said:

Improve fole transfers

ChatGPT said:

ChatGPT

Improving file transfer performance and reliability, whether between servers, local systems, or to cloud storage like Amazon S3, involves a combination of strategies. Here are some best practices for improving file transfers:

1. Optimize the File Transfer Process

Parallelize Transfers

Transfer multiple files concurrently to improve throughput:

Use tools like rsync, scp, or cloud-specific SDKs with multi-threading capabilities.

Example with rsync:bashCopy codersync -azP --info=progress2 --max-size=100M --exclude="*.tmp" /source/ /destination/

Batch Small Files

Small files cause metadata overhead during transfer. Bundle them into archives:bashCopy codetar -czvf archive.tar.gz /source/ Transfer the archive, then extract on the destination:bashCopy codetar -xzvf archive.tar.gz

2. Use High-Performance Transfer Tools

Rclone

Ideal for cloud storage and large-scale transfers with parallelism:bashCopy coderclone copy /source/ remote:bucket-name --transfers=16 --checkers=8

GNU Parallel

Parallelize file uploads/downloads:bashCopy codefind /source/ -type f | parallel -j8 scp {} user@destination:/target/

Multithreaded Tools

Use tools with built-in multithreading, such as bbcp, Aspera, or scp (with parallel scripts).

3. Network Optimization

Compression

Compress files before transfer to reduce the amount of data transmitted:bashCopy codegzip largefile.txt Or combine with the transfer:bashCopy codetar czf - /source/ | ssh user@destination "tar xzf - -C /target/"

Bandwidth Management

Limit bandwidth usage to prevent throttling:

With rsync:bashCopy codersync --bwlimit=1000 -az /source/ /destination/

Use TCP tuning options to optimize network transfer rates:bashCopy codesysctl -w net.ipv4.tcp_window_scaling=1

Use Faster Protocols

Use optimized transfer protocols:

SFTP: Secure but slower for high-volume transfers.

Rsync/SSH: Efficient for incremental and large transfers.

Aspera: High-speed UDP-based protocol.

Enable Transfer Acceleration (Cloud)

For Amazon S3:bashCopy codeaws s3 cp /source/ s3://your-bucket/ --region <region> --endpoint-url https://<bucket-name>.s3-accelerate.amazonaws.com

4. Error Handling and Resilience

Resume Transfers

Use tools that support resuming failed transfers:

Rsync:bashCopy codersync -az --partial /source/ /destination/

AWS CLI:bashCopy codeaws s3 cp --continue /source/ s3://bucket-name/

Retry Logic

Implement retry logic for failed transfers using scripts or tools.

Example using bash:bashCopy codefor i in {1..5}; do scp file user@destination:/target/ && break || sleep 5 done

5. Monitor and Analyze Performance

Real-Time Monitoring

Monitor progress with tools:

Rsync: --progress flag.

SCP: Wrap in pv for progress visualization:bashCopy codetar cf - /source/ | pv | ssh user@destination "tar xf - -C /target/"

Analyze Bottlenecks

Use tools like iperf or nload to check network performance.

Use iotop or dstat to monitor disk I/O on both source and destination.

6. Scale with Advanced Solutions

Distributed File Transfer

Use tools like Globus for efficient distributed data transfers.

Data Transfer Appliances

For extremely large datasets, consider appliances like AWS Snowball or offline methods to ship data physically.

Content Delivery Networks (CDNs)

Use CDNs for faster file distribution to end users.

By combining these strategies—parallelism, compression, protocol optimization, and monitoring—you can significantly enhance file transfer speed and reliability. Let me know if you'd like further customization for a specific use case!

4o

You said:

Threadpool

ChatGPT said:

ChatGPT

A thread pool is a collection of pre-initialized worker threads that stand ready to perform tasks. Instead of creating and destroying threads for each task, a thread pool manages a set of reusable threads, improving performance and resource utilization in multi-threaded applications.

Here’s how to effectively use and optimize thread pools in your application.

Why Use a Thread Pool?

Performance: Reduces the overhead of creating and destroying threads repeatedly.

Resource Management: Controls the number of concurrent threads, preventing system overload.

Scalability: Allows a controlled environment for task execution, making it easier to scale applications.

Key Concepts of Thread Pools

Core Threads: Minimum number of threads that are always kept alive.

**Max Threads

4o

You said:

Improve java spring file transfer

ChatGPT said:

ChatGPT

Improving file transfer in a Java Spring application involves optimizing both the code and the underlying infrastructure. Below are strategies to enhance file transfer performance and reliability.

1. Optimize File Transfer Code

Use Streaming for Large Files

Avoid loading the entire file into memory; instead, use streaming:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { try (InputStream inputStream = file.getInputStream()) { // Process the input stream directly // Example: Save the file Files.copy(inputStream, Paths.get("target-directory/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); } return ResponseEntity.ok("File uploaded successfully!"); }

Enable Asynchronous File Transfers

Use Spring's @Async annotation for non-blocking file uploads/downloads:javaCopy code@Async public CompletableFuture<String> uploadFileAsync(MultipartFile file) { // File processing logic return CompletableFuture.completedFuture("Upload Complete"); }

Use Chunked Uploads for Large Files

Break large files into smaller chunks for efficient transfer and to handle interruptions gracefully:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId) { // Append chunk data to the file return ResponseEntity.ok("Chunk uploaded successfully!"); }

2. Optimize for Cloud Storage (e.g., AWS S3, Azure Blob)

Use SDKs for Direct Uploads

Use cloud-specific SDKs (e.g., AWS SDK for S3) to upload files directly to cloud storage instead of saving locally first:javaCopy code@PostMapping("/uploadToS3") public ResponseEntity<String> uploadToS3(@RequestParam("file") MultipartFile file) throws IOException { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; s3Client.putObject(bucketName, file.getOriginalFilename(), file.getInputStream(), new ObjectMetadata()); return ResponseEntity.ok("File uploaded to S3!"); }

Enable S3 Transfer Acceleration

Use Transfer Acceleration for faster uploads to Amazon S3:javaCopy codeAmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

3. Optimize File Transfer in REST APIs

Enable Compression

Compress file responses to reduce data size:yamlCopy codespring: servlet: multipart: enabled: true max-file-size: 50MB max-request-size: 50MB gzip: enabled: true min-size: 1024

Use HTTP/2

Switch to HTTP/2 for better concurrency and reduced latency:yamlCopy codeserver: http2: enabled: true

Resume Interrupted Uploads

Use ETag or file metadata to allow resumable file uploads:javaCopy code@PostMapping("/resumeUpload") public ResponseEntity<String> resumeUpload(@RequestParam("file") MultipartFile file, @RequestParam("offset") Long offset) { // Resume logic based on offset return ResponseEntity.ok("Upload resumed!"); }

4. Parallelize File Transfers

Multithreaded File Upload

Use a ThreadPoolExecutor or Spring's TaskExecutor:Copy code

In addition to using @Async, there are other strategies and tools to improve file transfer performance and reliability in a Java Spring application. Here are some approaches:

1. Reactive Programming with Spring WebFlux

For truly non-blocking and asynchronous file transfers, use Spring WebFlux:

Streaming File Upload:javaCopy code@PostMapping(value = "/upload", consumes = MediaType.MULTIPART_FORM_DATA_VALUE) public Mono<Void> uploadFile(Flux<FilePart> filePartFlux) { return filePartFlux.flatMap(filePart -> { Path path = Paths.get("target-directory/" + filePart.filename()); return filePart.transferTo(path); }).then(); }

Streaming File Download:javaCopy code@GetMapping(value = "/download", produces = MediaType.APPLICATION_OCTET_STREAM_VALUE) public Mono<ResponseEntity<Resource>> downloadFile() { Path path = Paths.get("target-directory/large-file.txt"); return Mono.just(ResponseEntity.ok() .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=large-file.txt") .body(new FileSystemResource(path))); }

2. Use CompletableFuture and ExecutorService

Combine CompletableFuture with a custom ExecutorService for better control:javaCopy code@PostMapping("/upload") public CompletableFuture<String> uploadFile(@RequestParam("file") MultipartFile file) { ExecutorService executor = Executors.newFixedThreadPool(4); return CompletableFuture.supplyAsync(() -> { try { // File processing logic Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING); return "File uploaded successfully!"; } catch (IOException e) { throw new RuntimeException("File upload failed!", e); } }, executor); }

3. Apache Kafka for Asynchronous Processing

Use Apache Kafka to decouple file upload from processing:

Producer sends file metadata to Kafka after upload:javaCopy code@PostMapping("/upload") public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException { // Save the file Files.copy(file.getInputStream(), Paths.get("target/" + file.getOriginalFilename())); // Send metadata to Kafka kafkaTemplate.send("file-topic", file.getOriginalFilename()); return ResponseEntity.ok("File uploaded and queued for processing!"); }

Consumer processes files asynchronously:javaCopy code@KafkaListener(topics = "file-topic", groupId = "file-group") public void processFile(String fileName) { // Process the file Path path = Paths.get("target/" + fileName); System.out.println("Processing file: " + path); }

4. Chunked File Transfers

Handle large files by uploading them in chunks:

Frontend sends file chunks with an identifier.

Backend reconstructs the file:javaCopy code@PostMapping("/uploadChunk") public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId, @RequestParam("chunkNumber") int chunkNumber) { Path path = Paths.get("target/" + fileId + "_" + chunkNumber); try { Files.copy(chunk.getInputStream(), path, StandardCopyOption.REPLACE_EXISTING); } catch (IOException e) { return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Failed to upload chunk"); } return ResponseEntity.ok("Chunk uploaded successfully!"); }

Reassemble chunks when all are uploaded.

5. WebSockets for Real-Time Progress

Use WebSockets to provide real-time feedback on file transfer progress:javaCopy code@Controller public class WebSocketController { @MessageMapping("/uploadStatus") @SendTo("/topic/progress") public String sendProgress(String status) { return status; } }

Send progress updates from the server while uploading:javaCopy codesimpMessagingTemplate.convertAndSend("/topic/progress", "50% completed");

6. Redis or Database Queues

Use a Redis queue or a database to handle file metadata asynchronously.

Enqueue metadata after upload:javaCopy coderedisTemplate.opsForList().leftPush("fileQueue", fileMetadata);

Process the queue in the background:javaCopy codeString metadata = redisTemplate.opsForList().rightPop("fileQueue");

7. Use MultipartConfig for Large Files

Configure multipart file handling for large files in application.properties:propertiesCopy codespring.servlet.multipart.max-file-size=1GB spring.servlet.multipart.max-request-size=2GB

Custom bean for fine-tuning:javaCopy code@Bean public MultipartConfigElement multipartConfigElement() { MultipartConfigFactory factory = new MultipartConfigFactory(); factory.setMaxFileSize("1GB"); factory.setMaxRequestSize("2GB"); return factory.createMultipartConfig(); }

8. Use AWS S3 for Direct Uploads

Use pre-signed URLs to offload upload work to the client:javaCopy code@GetMapping("/generatePresignedUrl") public ResponseEntity<String> generatePresignedUrl(@RequestParam String fileName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest("bucket-name", fileName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); return ResponseEntity.ok(url.toString()); }

The client uploads directly to S3 using the URL.

9. Optimize File Transfer for High Performance

Compression

Compress files before upload to reduce transfer size:javaCopy codebyte[] compressedData = compress(file.getBytes()); Files.write(Paths.get("compressed-file.gz"), compressedData);

Load Balancing

Use a load balancer (e.g., AWS ALB or NGINX) for scalable upload endpoints.

Retry Logic

Implement retries for failed transfers to improve reliability:javaCopy codeint maxRetries = 3; while (retries < maxRetries) { try { // Attempt file upload break; } catch (IOException e) { retries++; if (retries == maxRetries) { throw e; } } }

These strategies can enhance file transfer performance, scalability, and reliability in Java Spring applications. Let me know if you need code examples for specific use cases!

4o

You said:

S3 relaibility

ChatGPT said:

ChatGPT

Ensuring reliability when transferring files to Amazon S3 involves minimizing failures, handling errors effectively, and ensuring data integrity. Below are best practices and techniques to achieve reliable file transfers to S3:

1. Use Multipart Upload for Large Files

Multipart uploads split large files into smaller parts, upload them in parallel, and reassemble them in S3. This improves reliability and speeds up transfers.

Example Using AWS SDK (Java)

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); String bucketName = "your-bucket-name"; String fileName = "large-file.zip"; String keyName = "uploads/" + fileName; File file = new File(fileName); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); try { upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); }

2. Use Retry Logic

Add retry mechanisms to handle intermittent network issues or service interruptions.

AWS SDK Retry Settings

The AWS SDK retries failed requests automatically, but you can customize it:

java

Copy code

ClientConfiguration config = new ClientConfiguration(); config.setRetryPolicy(PredefinedRetryPolicies.getDefaultRetryPolicyWithCustomMaxRetries(5)); AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withClientConfiguration(config) .build();

3. Enable Transfer Acceleration

Transfer Acceleration uses AWS edge locations to improve upload speeds over long distances.

Enable Transfer Acceleration in S3

Enable it in the S3 bucket settings.

Update your client code to use the accelerated endpoint:

java

Copy code

AmazonS3 s3Client = AmazonS3ClientBuilder.standard() .withAccelerateModeEnabled(true) .build();

4. Validate Data Integrity

Use MD5 checksums to ensure the uploaded file matches the original file.

AWS CLI Example

bash

Copy code

aws s3 cp file.txt s3://your-bucket/ --content-md5 <calculated-md5>

Java Example

java

Copy code

String md5 = Base64.getEncoder().encodeToString(DigestUtils.md5(new FileInputStream(file))); ObjectMetadata metadata = new ObjectMetadata(); metadata.setContentMD5(md5); PutObjectRequest request = new PutObjectRequest(bucketName, keyName, new FileInputStream(file), metadata); s3Client.putObject(request);

5. Monitor File Transfers

Use CloudWatch Metrics to monitor S3 request success, failures, and latencies.

Monitor transfer progress with TransferManager in the AWS SDK:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener(progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion();

6. Handle Failures Gracefully

Use Resumable Transfers for interrupted uploads with TransferManager:

java

Copy code

TransferManager tm = TransferManagerBuilder.standard().build(); Upload upload = tm.upload(bucketName, keyName, file); // Add a progress listener upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred: " + progressEvent.getBytesTransferred()); }); // Graceful failure handling try { upload.waitForCompletion(); } catch (AmazonClientException e) { System.err.println("Error occurred: " + e.getMessage()); }

7. Use Pre-Signed URLs

Generate pre-signed URLs for reliable, time-limited uploads directly from the client, reducing the load on your server.

Generate Pre-Signed URL

java

Copy code

GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest(bucketName, keyName) .withMethod(HttpMethod.PUT) .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest); System.out.println("Pre-Signed URL: " + url);

8. Use S3 Event Notifications

Configure S3 Event Notifications to trigger AWS Lambda or SNS for post-upload validation, processing, or alerting on failures.

Example: Notification for Upload Completion

Configure your S3 bucket to send an event to an SQS queue or Lambda function upon successful upload.

9. Leverage Data Replication

Use S3 Cross-Region Replication (CRR) or Same-Region Replication (SRR) for redundancy.

Automatically replicate files to another bucket for disaster recovery.

10. Automate with AWS DataSync

Use AWS DataSync for automated and highly reliable file transfers between on-premises storage and S3. It includes built-in retry and integrity checks.

11. Optimize Network Performance

Compress files before uploading:javaCopy codeFiles.write(Paths.get("compressed-file.gz"), gzipCompress(fileContent));

Use efficient transfer protocols (e.g., HTTP/2 if supported by your client).

12. Secure Transfers

Always use HTTPS for secure communication.

Use S3 Bucket Policies and IAM Roles to restrict access to authorized users.

Example: Complete Reliable Upload Function

java

Copy code

public void reliableUpload(File file, String bucketName, String keyName) { AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build(); try { // Multipart upload Upload upload = tm.upload(bucketName, keyName, file); upload.addProgressListener((ProgressListener) progressEvent -> { System.out.println("Transferred bytes: " + progressEvent.getBytesTransferred()); }); upload.waitForCompletion(); System.out.println("File uploaded successfully!"); } catch (AmazonClientException e) { System.err.println("Upload failed: " + e.getMessage()); } finally { tm.shutdownNow(); } }

0 notes

Text

Exploring the Top Backend Frameworks for 2024

In the quickly evolving world of software development, backend frameworks play an essential role in building robust and scalable web applications. As we step into 2024, it's best to stay updated on the latest backend frameworks that offer innovative features and functionalities. In this blog post, we'll explore some of the top backend frameworks for 2024, highlighting their key strengths and applications.

Django:

Django is a popular backend programming framework noted for its ease of use, scalability, and flexibility. Django, which is built on Python, provides a comprehensive set of features such as an ORM (Object-Relational Mapper), authentication system, and built-in admin interface. Django's batteries-included concept allows developers to quickly build secure and maintainable web applications, making it an excellent choice for projects of any size.

Express.js

Express.js is an effective substitute for developing Node.js apps due to its basic approach and strong middleware ecosystem. Express.js is a lightweight and versatile framework that allows developers to create custom solutions that are tailored to their specific needs. Express.js, which supports routing, templating engines, and middleware, specializes in building RESTful APIs and real-time online applications, making it a top contender for backend development in 2024.

Flask:

Flask, a microframework for Python, continues to gain traction among developers seekinga lightweight and modular solution for backend development. Known for its simplicity and extensibility, Flask offers a minimalist approach to building web applications, allowing developers to choose the components they need without unnecessary bloat.

Laravel

Laravel is a popular PHP framework known for its clean syntax, expressive ORM, and extensive feature set. With a focus on developer productivity and code maintainability, Laravel makes it easier to build advanced online apps by including capabilities like routing, middleware, authentication, and database migrations out of the box. Laravel continues to advance with the release of Laravel Jetstream and Livewire, which will provide developers with current capabilities for creating interactive and engaging web apps in 2024.

Ruby On

Rails (Ruby) Ruby on Rails is well-known for its developer-friendliness and convention-over-configuration attitude, which encourages rapid development and code maintenance. Its large ecosystem of gems broadens functionality. Ruby on Rails continues to be distinguished by its clean syntax and emphasis on convention over configuration.

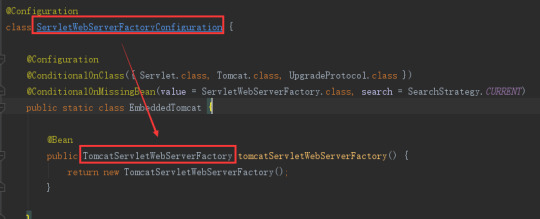

Spring Boot

Spring Boot is a popular Java framework noted for its simplicity, convention-based approach, and extensive feature set. Spring Boot has developed into a modern, convention-over-configuration framework for Java developers. Its versatility allows for customization and integration with numerous tools.

In the ever-changing world of backend development, staying updated on the latest trends is key. In 2024, frameworks like Django, Express.js, Flask, Laravel, and Spring Boot empower developers. Trust XcelTec, a top custom web development company in the USA, to exceed industry standards and ensure project success.

0 notes

Text

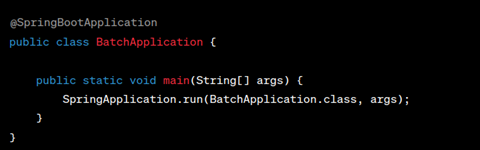

Building Applications with Spring boot in Java

Spring Boot, a powerful extension of the Spring framework, is designed to simplify the process of developing new Spring applications. It enables rapid and accessible development by providing a convention-over-configuration approach, making it a preferred choice for many developers. This essay delves into the versatility of Spring Boot, exploring the various types of applications it is commonly used for, highlighting its features, benefits, and practical applications across industries.

Origins and Philosophy

Spring Boot was created to address the complexity often associated with Spring applications. By offering a set of auto-configuration, management, and production-ready features out of the box, it reduces the need for extensive boilerplate configuration. This framework adheres to the "opinionated defaults" principle, automatically configuring Spring applications based on the dependencies present on the classpath. This approach significantly accelerates development time and lowers the entry barrier for businesses looking to hire Java developers.

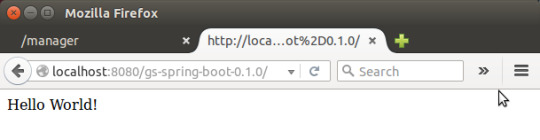

Web Applications

Spring Boot is widely recognized for its efficacy in building web applications. With embedded servers like Tomcat, Jetty, or Undertow, developers can easily create standalone, production-grade web applications that are ready to run. The framework's auto-configuration capabilities, along with Spring MVC, provide a robust foundation for building RESTful web services and dynamic websites. Spring Boot also supports various templates such as Thymeleaf, making the development of MVC applications more straightforward.

Microservices

In the realm of microservices architecture, Spring Boot stands out for its ability to develop lightweight, independently deployable services. Its compatibility with Spring Cloud offers developers an array of tools for quickly building some of the common patterns in distributed systems (e.g., configuration management, service discovery, circuit breakers). This makes Spring Boot an ideal choice for organizations transitioning to a microservices architecture, as it promotes scalability, resilience, and modularity. Microservices is one important reason why businesses look to migrate to Java 11 and beyond.

Cloud-Native Applications

Spring Boot's design aligns well with cloud-native development principles, facilitating the creation of applications that are resilient, manageable, and observable. By leveraging Spring Boot's actuator module, developers gain insights into application health, metrics, and audit events, which are crucial for Java development services companies maintaining and monitoring applications deployed in cloud environments. Furthermore, Spring Boot's seamless integration with containerization tools like Docker and Kubernetes streamlines the deployment process in cloud environments.

Enterprise Applications

Spring Boot is adept at catering to the complex requirements of enterprise applications. Its seamless integration with Spring Security, Spring Data, and Spring Batch, among others, allows for the development of secure, transactional, and data-intensive applications. Whether it's managing security protocols, handling transactions across multiple databases, or processing large batches of data, Spring Boot provides the necessary infrastructure to develop and maintain robust enterprise applications.

IoT and Big Data Applications

The Internet of Things (IoT) and big data are rapidly growing fields where Spring Boot is finding its footing. By facilitating the development of lightweight, high-performance applications, Spring Boot can serve as the backbone for IoT devices' data collection and processing layers. Additionally, its compatibility with big data processing tools like Apache Kafka and Spring Data makes it suitable for building applications that require real-time data processing and analytics.

Summary

Spring Boot's versatility extends across various domains, making it a valuable tool for developing a wide range of applications—from simple CRUD applications to complex, distributed systems. Its convention-over-configuration philosophy, combined with the Spring ecosystem's power, enables developers to build resilient, scalable, and maintainable applications efficiently.

In essence, Spring Boot is not just a tool for one specific type of application; it is a comprehensive framework designed to meet the modern developer's needs. Its ability to adapt to various application requirements, coupled with the continuous support and advancements from the community, ensures that Spring Boot will remain a crucial player in the software development landscape for years to come. Whether for web applications, microservices, cloud-native applications, enterprise-level systems, or innovative fields like IoT and big data, Spring Boot offers the flexibility, efficiency, and reliability that modern projects demand. The alternative Spring cloud also offers variety of advantage for developers building microservices in java with spring boot and spring cloud.

#software development#web development#mobile app development#java full stack developer#java application development#javaprogramming#microservices

0 notes

Text

Gain Proficiency in Batch Processing with Spring Batch

In the ever-evolving landscape of enterprise applications, efficiently handling large-scale data processing tasks is a common challenge. Spring Batch, is a robust batch application development framework that is lightweight and all-inclusive, facilitating the creation of batch applications essential to enterprise system everyday operations.

Spring Batch provides reusable functions essential in processing large volumes of records, including logging/tracing, transaction management, job processing statistics, job restart, skip, and resource management.

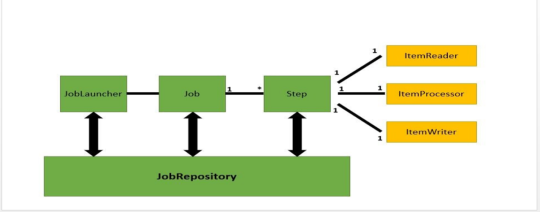

Spring Batch Architecture

A typical batch application is roughly as follows.

Read a large number of records from a database, file, or queue.

Process the data in some way.

Write back the data in a modified form.

The corresponding schematic is as follows.

The general architecture of the spring batch is as follows.

In the spring batch, a job can define many steps, in each step you can define its own ItemReader for reading data, ItemProcesseor for processing data, and ItemWriter for writing data, and each defined job is in the JobRepository Inside, we can start a job through the JobLauncher.

Core Concepts

Job: In Spring Batch, a job is an executable task that can be divided into smaller, more manageable jobs.

Step: Within a job, a step is a self-contained, executable unit. It performs a certain function, like reading, processing, and writing data.

Item: An item is a data point that has undergone step processing. It could be any kind of data, such as a line from a file or a record from a database.

Building Blocks of Spring Batch

Readers, Processors, and Writers

ItemWriter

An ItemProcessor is used to process the data. When the given item is not valid it returns null, else it processes the given item and returns the processed result.

Tasklet

When no reader and writer are given, a tasklet acts as a processor for SpringBatch. It processes only a single task.

Chunks and Transactions

Spring Batch uses chunks to process data, which enables efficient handling of large datasets.

The process of transactions ensures either all items in a chunk are processed successfully or none.

Job Execution Flow

A job is usually composed of one or more steps, and the execution process can be customized to meet specific requirements.

XML or Java-based configuration can be used to configure and orchestrate jobs and steps.

JobRepository

A Job repository in Spring Batch provides Create, Retrieve, Update, and Delete (CRUD) operations for the JobLauncher, Job, and Step implementations.

Real-World Applications

Data Migration

The efficiency of transferring large amounts of data from one system to another is demonstrated by Spring Batch, which proves to be instrumental in data migration scenarios.

ETL Mastery

The extraction, transformation, and loading of data is a fundamental use case for Spring Batch. Developers are empowered to create jobs that collect data from diverse sources, apply transformations, and archive the refined results.

Report Generation

Batch processing has a specialization in producing complicated reports that involve complex calculations or aggregations.

Features of Spring Batch

Maintainability

Transaction management

Flexibility

Retry and Skip Mechanisms

Chunk based processing

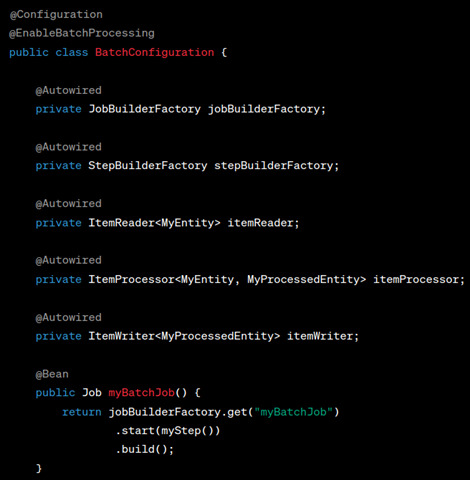

Implementation of Spring Batch

Step 1: Project Setup

To begin your Spring Batch project, establish a new Spring Boot project. You have the option to use either the Spring Initializer, which is a web-based tool or manually configure your project through your preferred IDE.

Method 1: Using Spring Initializr

Go to Spring Initializr and select the project settings you want, which include project type, language, and packaging.

Include the dependency for “Spring Batch” in your project.

To download the project structure as a ZIP file, click on “Generate”.

Method 2: Manual Configuration

Create a new Spring Boot project in your preferred IDE, ensuring that you have included the necessary Spring Batch dependencies.

Set up your project with the right directory structure to make batch-related components easy to find and organize.

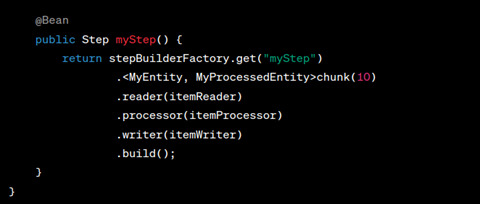

Step 2: Define Job and Steps

Once your project is set up, define a job and its steps. Batch processing can be divided into phases with one or more steps per job. The steps can be configured using ItemReader, ItemProcessor, and ItemWriter implementations.

Step 3: Implement ItemReader, ItemProcessor, and ItemWriter

Develop customized implementations for ItemReader, ItemProcessor, and ItemWriter adapted to your specific use case. Spring Batch includes several preinstalled implementations, like JdbcCursorItemReader and JpaItemWriter, that can be modified to suit your requirements.

Step 4: Configure Batch Properties

Adjust your batch job by configuring batch-related properties. Modify settings including chunk size, retry policies, and transaction management to improve the performance and reliability of your batch jobs.

To make it clear, let’s define each property:

spring.batch.job.names

Specifies the names of the batch jobs to be executed. Multiple job names can be provided as a comma-separated list.

spring.batch.initialize-schema

Controls the initialization of the batch schema in the underlying database. Setting it to ‘always’ ensures that the schema is created every time the application starts.

spring.batch.job.enabled

Indicates whether the execution of batch jobs is enabled or disabled. When set to ‘true’, jobs will run as usual. Conversely, setting it to ‘false’ prevents the execution of any configured batch jobs.

Step 5: Run the Batch Job

Run your batch job either programmatically or with Spring Boot’s built-in command line support. Check the status of the job execution by monitoring it through the JobRepository.

Key-Takeaway

With Spring Batch, developers can easily handle complex batch processing scenarios. The framework’s flexibility and scalability make it a top choice in the Java ecosystem for handling large datasets or orchestrating ETL workflows. By mastering Spring Batch’s core concepts and building blocks, developers can unleash their full potential for efficient and reliable batch processing.

Explore more about the latest updates in Software Technology on our blog page for continuous insights and innovations.

Originally published by: Gain Proficiency in Batch Processing with Spring Batch

0 notes

Text

Creating a full-stack application involves developing both the frontend and backend components of the application. Here's a detailed process for building a full-stack application:

1. Define Requirements:

Clearly define the requirements and features of your application.

Identify the technologies and tools you'll use for the frontend and backend.

2. Design the Database:

Identify the data your application will handle.

Design the database schema and relationships.

Choose a database management system (e.g., MySQL, PostgreSQL, MongoDB).

3. Set Up Version Control:

Use a version control system like Git to track changes.

Create a repository for your project on platforms like GitHub or GitLab.

4. Frontend Development:

Choose a frontend framework or library (e.g., React, Angular, Vue.js).

Set up the project structure and build system.

Create UI components and layout.

Implement navigation and user interactions.

Integrate with backend APIs for data retrieval and manipulation.

5. Backend Development:

Choose a backend framework (e.g., Express.js, Django, Flask, Spring Boot).

Set up the server and define routes.

Implement authentication and authorization.

Develop controllers/handlers for API endpoints.

Connect to the database and perform CRUD operations.

Implement business logic.

6. API Development:

Design a RESTful or GraphQL API.

Implement endpoints for CRUD operations.

Include error handling and validation.

Secure API endpoints with appropriate authentication mechanisms.

7. Database Integration:

Connect your backend application to the database.

Implement data access methods.

Handle database migrations and updates.

8. Authentication and Authorization:

Implement user authentication (e.g., JWT, OAuth).

Set up user roles and permissions for authorization.

9. Testing:

Write unit tests for both frontend and backend components.

Perform integration testing to ensure different parts work together.

Conduct end-to-end testing for user flows.

10. Deployment:

Set up deployment environments (e.g., development, staging, production).

Deploy the frontend and backend to hosting platforms (e.g., Heroku, AWS, Azure, Netlify).

Configure domain and SSL certificates for secure connections.

11. Monitoring and Logging:

Implement logging for errors and important events.

Set up monitoring tools to track application performance.

Implement error tracking (e.g., Sentry, Rollbar).

12. Continuous Integration/Continuous Deployment (CI/CD):

Set up CI/CD pipelines for automated testing and deployment.

Ensure that changes are automatically tested before deployment.

13. Documentation:

Document the codebase, API, and any configuration.

Provide user documentation if applicable.

0 notes

Note

How did you get your job in software development and what do you do there? I'll be graduating with a bachelor's in computer science this fall but I have no really impressive projects or internships so getting a job is concerning

To be honest I was really lucky, the company I work for often hires trainees, aka people without experience, but they do it like every six months or so, in my case when I was one week away from finishing college my career coordinator let us know about it so I applied, went through the interview and got hired.

Mind you starting as a trainee is a bit hard because they didn’t really had a job for me perse, they dumped me anywhere and everywhere until I found a project where I could work best, the only requirement they asked of me was a good understanding of english and the basics of java.

Since I wasn’t hired to do one job in specific (database management, software development, UI design) I went through a lot of different projects.

I started working with legacy code analysis, going through applications that were made on C++, writing down all dependencies and requirements for them. (Had to learn how the fuck C++ works that was painful)

Then I was moved to a migration project in where we worked on migrating the legacy applications to java.

From there they moved me from backend to frontend and I worked on a new application that used phone messages to interact with the backend. Loved this project I learned so much about React and Redux and I alone created the user interface that was used to signup in the service.

After that I have been mostly on backend projects where I work best, we are working for a bank so most of them have to do with accounts and transactions, closing accounts, creating accounts, inheriting accounts in case of death etc.

Depending in where you are you could try seeing if the companies have something similar to the trainee project my company has, mind you this is not an internship , they have to pay you, when I started I was paid the minimum wage, now after each year they review my work and I have been getting raises to the point now I am definitely getting paid what my work is worth.

If you really want to go into development instead of DB or Networking then definitely study the java core, Spring Boot, JavaEE, Maven, Gradle, play around in Gitlab, Mockito for unitTesting, if you want front-end React and Redux are a must. Postmand and SoapUI for testing, ReadyAPI has also become popular recently.

Hope this helps in some way Anon!

17 notes

·

View notes

Text

Laravel Expert in Pune

Looking for a Laravel expert in Pune? Associative, a leading software company in Pune, specializes in Laravel development, offering custom web solutions for businesses of all sizes.

Laravel Expert in Pune – Transforming Ideas into Scalable Web Solutions

In today's digital era, having a robust and scalable web application is crucial for business success. If you are looking for a Laravel expert in Pune, Associative is your go-to solution. As a trusted software company, we provide top-notch Laravel development services tailored to your business needs.

Why Choose Laravel for Your Web Development?

Laravel is a powerful and widely used PHP framework known for its efficiency, security, and flexibility. It enables rapid development while ensuring a seamless user experience. Here’s why Laravel stands out:

MVC Architecture – Provides a structured coding approach, making development faster and more efficient.

Blade Templating Engine – Simplifies UI design with reusable components.

Eloquent ORM – Offers a seamless database interaction experience.

Security Features – Built-in security mechanisms, such as CSRF protection and encrypted passwords.

Scalability & Performance – Ideal for startups to enterprise-level applications.

Laravel Development Services at Associative

At Associative, we offer a range of Laravel development services to help businesses achieve their digital goals. Our expert Laravel developers in Pune specialize in:

Custom Laravel Web Application Development – Building tailored solutions to meet your specific business requirements.

Laravel E-commerce Development – Developing scalable and feature-rich online stores.

Laravel API Development & Integration – Creating and integrating RESTful APIs for seamless data exchange.

Migration & Upgradation Services – Upgrading legacy applications to the latest Laravel versions.

Laravel Maintenance & Support – Ensuring your application remains secure and up-to-date.

Our Expertise Beyond Laravel

At Associative, we specialize in a wide range of technologies to cater to diverse business needs:

Mobile App Development (Android, iOS, Flutter, React Native)

Web Development (Magento, WordPress, Joomla, Drupal, OpenCart, Shopify, BigCommerce)

Java & Spring Boot Development

Node.js, React.js, Express.js Development

Blockchain & Web3 Solutions

Cloud Computing (AWS, GCP)

Game Development (Unreal Engine, C++)

SEO & Digital Marketing Services

Why Choose Associative as Your Laravel Expert in Pune?

Experienced Laravel Developers – A team of skilled professionals with hands-on expertise in Laravel.

Customized Solutions – Tailored web applications designed for performance and scalability.

End-to-End Development – From conceptualization to deployment and maintenance.

Cost-Effective Services – High-quality solutions at competitive pricing.

Client-Centric Approach – We prioritize client requirements and business goals.

Get in Touch with Us

If you're searching for a Laravel expert in Pune, Associative is here to help. Whether you need a custom web application, an e-commerce platform, or API integration, our team is ready to deliver top-notch solutions.

youtube

0 notes

Link

"Thinking of upgrading your Spring Boot application? Here is a guide to walk you through the migration process, Spring Boot 1.5 to 2.0.5". Reblog with caption 🙃

#programming#software development#devops#java#agile#web#iot#database#mobile#big data#cloud#spring#spring boot 20#spring boot 15#spring boot 205#migration#article#developers#comment#server

0 notes

Text

Master's Program in Full Stack Java Developer

If you are interested in learning jQuery, then The Complete jQuery Course is a good place to start with. It's a full-stack framework and contains all the necessary features by default instead of providing them as separate libraries. With Django, you'll get authentication, URL routing, template engine, object-relational mapper , and database schema migrations all in one pack.

Finding coaching institutes in Kuala Lumpur is the first step toward getting an online development certification in Kuala Lumpur. A web improvement course in Kuala Lumpur is out there through particular platforms and institutes. The first step toward the platform's improvement is to join it. To be sure that no one is denied entry into this internet development certification in Kuala Lumpur for financial reasons, we offer numerous financing options to help learners with the fee fee. The financing particulars could be accessed in the "Admissions Fee and Financing" section of the website. Gain a complete understanding of automation testing and integration with Selenium WebDriver.

GraphQL provides a complete and comprehensible description of the data in your API, provides purchasers the facility to ask for precisely what they want, and nothing extra, makes it easier to evolve APIs over time, and allows highly effective developer tools. The main concept behind Flask is to permit net developers to construct a stable internet utility basis. If you are a Python programmer and wish to become a full-stack Software engineer, then you should study Django, and there's no better way to start than becoming a member of Python and Django Full Stack Web Developer Bootcamp course on Udemy. Node.js has persistently ranked as the most well-liked framework in StackOverflow's Developer survey and the key to writing an online application, end-to-finish in a single programming language, i.e., JavaScript.

Many of us get into the programming and net development world by understanding only one or two expertise like Java, C++, or JavaScript, however that won't cut the ice anymore. There were groups of tens of people doing specialised jobs like front-end improvement is completed by a separate set of builders and back-finish is written by other units of programmers, generally known as server-facet builders. They can quickly deliver an utility to millions of customers and get prompt suggestions. Developers at present are conscious of the frontend, backend, database, and debugging of web applications and are able to growing each consumer and server-facet software program.

I actually have completed a Master's Program in Full Stack Java Developer with 360digitmg. It was a unbelievable journey, and I've realized a lot and gained more knowledge in numerous topics like backend (Java & Spring Boot), Frontend , DevOps, and more. Create a dynamic and responsive Java e-commerce internet application using applied sciences corresponding to Angular, Hibernate, MYSQL, Docker. Get started with the Full Stack Java Developer Program and discover every thing about this system. The certification names are the logos of their respective house owners. If you have the proper abilities and aptitude, you can get nice salaries too.

My learning experience with 360digitmgwithin the Java Full Stack Developer Program was nice. I am grateful to 360digitmgfor providing job alternative due to which I received placed in an excellent organisation. Create an leisure application like BookMyShow utilizing your back-end data, API growth, and HTML and CSS expertise. Build actual-world web sites and purposes using the front-end stack technologies corresponding to HTML, CSS, JavaScript, and Angular.

Explore more on - full stack developer course with placement

https://360digitmg.com/course/certification-program-on-full-stack-web-developer

INNODATATICS SDN BHD (1265527-M)

360DigiTMG - Data Science, IR 4.0, AI, Machine Learning Training in Malaysia

Level 16, 1 Sentral, Jalan Stesen Sentral 5, KL Sentral, 50740, Kuala Lumpur, Malaysia.

+ 601 9383 1378 / + 603 2092 9488

#full stack development course#full stack developer course#full stack web development course malaysia#full stack web development course

0 notes

Text

Elk neck state park

Elk neck state park portable#

Elk neck state park plus#