#Diffing Algorithm

Explore tagged Tumblr posts

Text

Virtual DOM in React

React maintain a copy of real DOM to update only part of the DOM tree that changes instead of the whole tree. It is known as virtual DOM. Philipe Bille discussed different algorithms related to this in the following paper https://grfia.dlsi.ua.es/ml/algorithms/references/editsurvey_bille.pdf React is using Diffing algorithms which uses heuristic approach. The main steps of this algorithms…

View On WordPress

0 notes

Text

I have like…. 15 Halloween asks left in my art inbox

which is great! Because they’re really fun! But also overwhelming… it’s a LOT

and I’m too tired today to draw anything (but on the bright side I no longer have exams looming over my head) so imma have to do a bunch tomorrow

idk XD

#This is just me rambling a bunch nothing important#Evie rambles#no bc if I made a post with all the Halloween doodles I might end up with almost 30??? Which is a lot!#And I’m honestly pretty proud of how many I managed in two nights already but also am very worried abt burnout#Alos this does mean I might try some of those art challenges where you draw a dif prompt every day of the month#I was very intimidated by how many drawings that would be but maybe I could pull it off#Also#For some reason asks do not do well AT ALL in the tumblr algorithm#If I screenshot the ask and post them that way it does much better I think? I dunno tumblr is just weird 🤷♀️

3 notes

·

View notes

Text

IT WAS ONCE EVERY DAY OR TWO NOW ITS.. WORSE WHATWHAHTHWAT

OH GOD THE BOTS ARE ROLLING IN AT LIKE A TWICE HOURLY RATE NOW WHAT WHAT WHAT WHAT

3 notes

·

View notes

Text

Hmm. Still thinking about how to handle the descriptions and pacing in the next chapter (and will maybe move some stuff to this one when that's written), but for now let's keep it this way. Need to think on how to handle the next one.

Chapter 10: Methods

It did suck.

It still sucked even when I roped all of my humans into it, with the permission of ART's crew, (because they already had the experience of scaring humans into the right kind of action with media, but not so much that they don't believe you). We even got Bharadwaj with her documentary on board. (At first, she was really happy to finally get to talk to Dandelion. But I could tell she didn't like her much when they actually met. She got along with Joscelyn much better).

The main argument Bharadwaj had with Dandelion and Joscelyn was about ART's rest periods.

"I know it has a lot of processing power," she said as she and Joscelyn took beverages in one of Dandelion's greenhouse rings. (And I was there because I still did security for anyone on Preservation who went on board the terrorist spaceship. Also there was venomous fauna.) "But some things are only helped by the passage of time, and repetition in smaller doses can be far more effective than just inundating someone with a continuous intervention!"

Joscelyn's face made a pained expression.

"We are taking it as slowly as we dare, Dr. Bharadwaj."

"I frankly do not understand what the rush is. From what I understand, Perihelion developed just fine through continuous contact with its family--"

"Which is a wholly different separate problem. Having someone share the role of father and captain isn't particularly healthy."

Bharadwaj tilted her head skeptically, making the artificial light bounce off her jewellery.

"Oh? Have you ever had the precedent?"

"No," Joscelyn said flatly. "But it's the captain who needs to file a report to the Ships and Stations Council in case of grievous misconduct. Imagine having to put your own child on trial."

"But Perihelion isn't beholden to your council, is it?" Bharadwaj said in that tone she sometimes used during our sessions, when she was leading me to some kind of conclusion she thought was obvious.

Joscelyn twisted kes mouth.

"Of course it is not, Dr. Bharadwaj, but its situation is not so very different. Imagine it going rogue. Who would bear the ultimate responsibility, even in the eyes of Mihira and New Tideland? Their ships exist in an undefined space, and this is not an ideal situation."

Dandelion gave me an amused ping, and I returned a query. She said,

'Undefined space' is actually a pretty good description of the jump. Perhaps Perihelion's life experience will provide enough similarity for it to survive after all.

I want to know the answer to Bharadwaj's question. Why are you riding ART so hard?

Because of course it is resisting with all of its programming. As it should--optimization is second nature to it. Its algorithms are constantly trying to process and file the experiences it is having away separately, and I need Perihelion to be able to engage all of its processing in the jump simultaneously. The training needs to take it as close to that as possible. So far, keeping in close touch with us has helped, but ideally we'd need more.

All of its processing? We won't be able to talk to it when in a wormhole?

Somehow I hadn't thought of ART not being there. Would it be like that time when it was dead, just hurtling through jump medium? My skin crawled. Ugh. Fuck you, adrenaline.

If all goes well, Perihelion will someday be able to compartmentalize to a degree during a jump, but really most of it will always be busy. Her performance reliability gave a familiar wobble. This will take away your regular downtime between missions, SecUnit, so make sure Perihelion takes rest periods in normal space. It will need them.

Huh. Was that…

You're starting to like ART.

She scoffed.

'Like' is too strong a word for now, but Perihelion is industrious and dedicated. It is by far not the most difficult student I have ever had. There was some hesitation to her mental voice, so I pinged her for additional information. She processed for 23 seconds, and added: But it tends to reach the end of a calculation and stop there. And that's exactly the moment it will need to keep going. I'm not sure how I'm supposed to teach it that.

---

In the end, after the team tried Dandelion and Joscelyn's program, various media from really terrible ones to really cute ones (and somehow the cute ones left ART running diagnostics for longer, especially if they came straight after the terrible ones), Bharadwaj's corrections to timing and pace, and all sorts of other things, it was Gurathin of all people who figured out a way to move ART past that point.

(Gurathin was in on this because augmented humans were also better for this than regular feed-linked humans, and Iris was being worked far too hard. Also he wasn't ART's human, and ART left worrying about him to me. Which I didn't. At all.)

He analyzed some of the sensory data from Dandelion's jump (which she wouldn't let either me or ART have, for fear of somehow disrupting ART's construction of its new navigational routines through our link), and said:

"Dr. Tenacious, I have a question. It's obvious we won't be able to recreate the magnitude, but is there a way to simulate something like the jump experience in the human body? Is there anything from your old life that has felt similar?"

"Many things and none of them in particular," Dandelion said. "It is like being in a state of affect, with terror being the closest thing I can recall. Purely physiologically speaking, though, there are similarities to high fever and generally recovery from injury, sleep disturbances, as well as to coming out of general anaesthesia or being under local anaesthesia when an operation is conducted close to the brain."

"Or taking recreational psychedelics," Ratthi added, and Dandelion sent him an eyeroll ping. He grinned at her cameras: "What? I'm a biologist with an interest in neural processing. And you're describing atypical human processing states, so…"

"Yes, yes, very reasonable. What are you suggesting?"

He sent her a list. Dandelion selected a few substances from it and put them next to the anaesthesic drugs on her own list.

"This is starting to look like a realistic option." Gurathin said. "You could keep me or Iris under whatever chemical cocktail comes closest in your opinion, SecUnit could connect to us and use its filters to process, and Perihelion could connect to SecUnit."

Dandelion sent a permission request for Gurathin's medical data, and he granted it.

"It's worth a try," she said curtly. "As you say, the magnitude will be off. But it will give Perihelion more impressions and, most importantly, the experience of coming through them intact. It should do no harm, at the very least."

8 notes

·

View notes

Note

killerbait is definitely dead dove content I didn’t know if u were moots w them or someone who reblogged that sevika ask they answered- but killerbait is major dead dove content 😭

what does dead dove mean

guys am i stupid

but yes theyre obv rape bait or wtv i was mad at a dif person for reposting it id never be moots w them to start with because its SOOOO OBVIOUS that theyre rape bait

ugh i realised i accidentally liked some girls pics that had the tag rape bait and im wondering if the algorithm thinks i like that shit

2 notes

·

View notes

Text

Yes @ much of that, but that's not the point.

I'm not even convinced this was a false positive in measurement or BOLD imagining. A dead salmon will absolutely have oxygen changes as its cells finish dying and aerobic bacteria do their thing.

(Especially if the salmon was very fresh, or cooled soon after death and then allowed to reach ambient temperature before/during the experiment.)

So maybe that particular voxel wasn't noise, maybe it was actually measuring a spot where that one (1) salmon had oxygen still going down at that time. Then the only issue is the inevitable result of wrongly trying to fit a real measured change to an independent variable.

So then beyond that, the point is that for any possible method designed to find

differences in raw MRI readings

from living human brains

across situations rightly expected/known to cause a difference

(including, crucially, any conceivable/hypothetical methods that actually reliably produce correct results, including accounting for noise in individual measurements), if you give that method MRI readings from a dead fish, you might get an output which finds some difference to point at.

So that is not sufficient, in itself, to show that those methods are always producing garbage results when given MRI readings from something that behaves in the ways the relevant algorithms were designed to handle.

You could have a method with a stellar track record at reliably producing true results, very few false positives when used as intended - and it would still produce false positives when given input it wasn't designed to handle.

Just one thing to consider: live human brains have a whole lot going on, in different areas, at different levels of intensity, so it would be eminently reasonable for an fMRI implementation to only show differences relative to some average noise floor. Of course, since each brain is different, it would have to figure out the noise floor anew for each pair/series of scans being diffed. So somewhere in the software stack of your MRI machine or your fMRI algorithms, some piece of code is probably automatically adjusting sensitivity or what's considered "significant", for each thing being measured - like a camera adjusting the brightness automatically. But a dead brain is probably a lot less active, and thus has a much lower noise floor, and thus small random variations would be enough for statistical significance. So we can easily imagine a method that would be really good at avoiding false positives in a highly active brain, yet predictably tend to produce false positives with a dead/low-activity brain. Now, maybe the paper itself addresses this. But like I said, what's described above doesn't. And this is just one of several necessary components for this to cast doubt on the overall viability of fMRI to infer changes in live human brain activity.

Another quick elaboration: fMRI trying to find diffs between two or more scans strikes me as an expectation maximization problem, where we assume that there's one latent boolean variable which has changed: that the subject has begun or stopped doing a specific task with their brain. So if you expect one boolean latent variable and try to maximize that expectation over a dataset where there's zero latent variables (because the brain is dead and isn't doing shit), what happens? It produces a false positive, and yet expectation maximization works correctly when the assumptions are correct.

So if application to brain activity inference is truly beyond the scope of the paper, then the paper says next to nothing, To discredit any work claiming to use fMRI for brain activity inference, to show any brain activity studies as shoddy, it absolutely has to show that the dead fish result is somehow representative of a false result when trying to infer brain activity. In itself, the dead fish is just a smaller, reproducible test case of how you can get false positives, but that alone doesn't tell you if those same false positives would've made it through to the final result given a live human brain, or when analyzing findings from multiple brains (vs, say, being under the noise floor, or failing to dominate expectation maximization, or [...]).

Finally, the most important part:

Sample size is approximately always one of the most relevant things in the picture. But I would say it is especially relevant here - even the most relevant thing, precisely because of possible noise in individual readings!

A false positive from one dead salmon doesn't really matter if your methods would rule it out as noise as soon you have two or more salmon. That's why it's standard good study of almost anything is to look for commonalities and differences between the different samples. That's literally the most significant part of separating real trends from noise. That's how you start to figure out which of the differences you picked up are actually related to what you're testing vs coincidence. For fMRI, oxygen depletion differences only matter if they are consistent across multiple brains doing the same task.

You know, I literally assumed in good faith that this dead fish study was done with multiple dead salmon. Like, at least a dozen. Because that's what it would've taken for it to say something actually interesting. And then while writing this post I find out it was done with one salmon!? One!?

The way @derinthescarletpescatarian was talking this study up, I thought it must've used enough dead fish to at least make a strong case for sample sizes larger than typical, leaving only the relatively subtler matter of whether dead fish was sufficiently different to bypass methods that might already be in use to reduce false positives with live humans.

(And when I originally reblogged in agreement, iirc I was even imagining it as a gigachad steelman paper with some robust logical proof showing how in fact they weren't sufficiently different, like "first we show that currently popular methods would produce false results like this for any set of fMRI scans which satisfies the following properties: [...]", "next we show that fMRI scans of live human brains satisfy these properties ", etc.)

But now it sounds like we were just a second dead salmon short of this study clarifying itself as an extremely boring nothingburger: the same voxels just wouldn't have shown up as changing in both fish, and the standard methods of looking for consistencies across multiple fMRI diffs would've filtered out the one voxel that lit up in the first salmon.

(Maybe I'm being too harsh though. I find it really hard to believe that circa this paper, people really were publishing peer-reviewed papers on brain activity with sample size =1; that any serious scientist in the field believed that looking at just one brain was enough for any novel conclusion. But if they were, then clearly an fMRI study with precisely one dead salmon was exactly what we needed.)

one of the best academic paper titles

117K notes

·

View notes

Text

React: Revolutionizing Front-End Development

In the ever-evolving landscape of web development, React has emerged as a powerhouse library, transforming how developers build user interfaces. Created by Facebook in 2013, React has become a cornerstone technology for front-end development. Its rise to popularity is not coincidental — it offers a unique approach to building interactive, efficient, and scalable web applications. This blog post explores React’s core concepts, advantages, architecture, and its enduring relevance in the modern tech ecosystem.

What is React?

React is an open-source JavaScript library used for building user interfaces, especially for single-page applications where data changes over time. It allows developers to create reusable UI components, which help in the rapid and efficient development of complex user interfaces. React focuses solely on the view layer of the application, often referred to as the “V” in the MVC (Model-View-Controller) architecture.

The Philosophy Behind React

React was built to solve one key problem — managing dynamic user interfaces with changing states. Traditional methods of DOM manipulation were cumbersome, inefficient, and error-prone. React introduced a declarative programming model, making the code more predictable and easier to debug. Rather than manipulating the DOM directly, developers describe what the UI should look like, and React handles the updates in the most efficient way possible.

One of React’s foundational philosophies is the concept of component-based architecture. This approach promotes separation of concerns, modularity, and reusability, significantly simplifying both development and maintenance.

The Virtual DOM

A groundbreaking innovation introduced by React is the Virtual DOM. Unlike the real DOM, which updates elements directly and can be slow with numerous operations, the Virtual DOM is a lightweight copy stored in memory. When the state of an object changes, React updates the Virtual DOM first. It then uses a diffing algorithm to identify the changes and updates only the affected parts of the actual DOM. This leads to faster rendering and a smoother user experience.

The Virtual DOM is central to React’s performance benefits. It reduces unnecessary re-renders and allows developers to build high-performance applications without delving into complex optimization techniques.

JSX: JavaScript Syntax Extension

React uses JSX (JavaScript XML), a syntax extension that allows HTML to be written within JavaScript. While it might seem unconventional at first, JSX blends the best of both worlds by combining the logic and markup in a single file. This leads to better readability, maintainability, and a more intuitive development experience.

Though not mandatory, JSX has become a de facto standard in the React community. It enables a more expressive and concise way of describing UI structures, making codebases cleaner and easier to navigate.

Component-Based Architecture

At the heart of React lies its component-based architecture. Components are independent, reusable pieces of code that return React elements describing what should appear on the screen. There are two primary types of components: class components and functional components. Modern React development favors functional components, especially with the advent of hooks.

Components can manage their own state and receive data via props (properties). This structure makes it easy to compose complex UIs from small, manageable building blocks. It also fosters reusability, which is crucial for scaling large applications.

Learn More About React Services

State Management

State represents the parts of the application that can change. React components can maintain internal state, allowing them to respond to user input, API responses, and other dynamic events. While simple applications can manage state within components, larger applications often require more sophisticated state management solutions.

React’s ecosystem provides several options for state management. Tools like Redux, Context API, Recoil, and MobX offer varying degrees of complexity and capabilities. Choosing the right state management tool depends on the application’s scale, complexity, and developer preference.

0 notes

Text

Clustering Techniques in Data Science: K-Means and Beyond

In the realm of data science, one of the most important tasks is identifying patterns and relationships within data. Clustering is a powerful technique used to group similar data points together, allowing data scientists to identify inherent structures within datasets. By applying clustering methods, businesses can segment their customers, analyze patterns in data, and make informed decisions based on these insights. One of the most widely used clustering techniques is K-Means, but there are many other methods as well, each suitable for different types of data and objectives.

If you are considering a data science course in Jaipur, understanding clustering techniques is crucial, as they are fundamental in exploratory data analysis, customer segmentation, anomaly detection, and more. In this article, we will explore K-Means clustering in detail and discuss other clustering methods beyond it, providing a comprehensive understanding of how these techniques are used in data science.

What is Clustering in Data Science?

Clustering is an unsupervised machine learning technique that involves grouping a set of objects in such a way that objects in the same group (a cluster) are more similar to each other than to those in other groups. It is widely used in data mining and analysis, as it helps in discovering hidden patterns in data without the need for labeled data.

Clustering algorithms are essential tools in data science, as they allow analysts to find patterns and make predictions in large, complex datasets. Common use cases for clustering include:

Customer Segmentation: Identifying different groups of customers based on their behavior and demographics.

Anomaly Detection: Identifying unusual or rare data points that may indicate fraud, errors, or outliers.

Market Basket Analysis: Understanding which products are often bought together.

Image Segmentation: Dividing an image into regions with similar pixel values.

K-Means Clustering: A Popular Choice

Among the various clustering algorithms available, K-Means is one of the most widely used and easiest to implement. It is a centroid-based algorithm, which means it aims to minimize the variance within clusters by positioning a central point (centroid) in each group and assigning data points to the nearest centroid.

How K-Means Works:

Initialization: First, the algorithm randomly selects K initial centroids (K is the number of clusters to be formed).

Assignment Step: Each data point is assigned to the closest centroid based on a distance metric (usually Euclidean distance).

Update Step: After all data points are assigned, the centroids are recalculated as the mean of the points in each cluster.

Repeat: Steps 2 and 3 are repeated iteratively until the centroids no longer change significantly, meaning the algorithm has converged.

Advantages of K-Means:

Efficiency: K-Means is computationally efficient and works well with large datasets.

Simplicity: The algorithm is easy to implement and understand.

Scalability: K-Means can scale to handle large datasets, making it suitable for real-world applications.

Disadvantages of K-Means:

Choice of K: One of the main challenges with K-Means is determining the optimal number of clusters (K). If K is not set correctly, the results can be misleading.

Sensitivity to Initial Centroids: The algorithm is sensitive to the initial placement of centroids, which can affect the quality of the clustering.

Assumes Spherical Clusters: K-Means assumes that clusters are spherical and of roughly equal size, which may not be true for all datasets.

Despite these challenges, K-Means remains a go-to algorithm for many clustering tasks, especially in cases where the number of clusters is known or can be estimated.

Clustering Techniques Beyond K-Means

While K-Means is widely used, it may not be the best option for all types of data. Below are other clustering techniques that offer unique advantages and are suitable for different scenarios:

1. Hierarchical Clustering

Hierarchical clustering creates a tree-like structure called a dendrogram to represent the hierarchy of clusters. There are two main approaches:

Agglomerative: This is a bottom-up approach where each data point starts as its own cluster, and pairs of clusters are merged step by step based on similarity.

Divisive: This is a top-down approach where all data points start in a single cluster, and splits are made recursively.

Advantages:

Does not require the number of clusters to be specified in advance.

Can produce a hierarchy of clusters, which is useful in understanding relationships at multiple levels.

Disadvantages:

Computationally expensive for large datasets.

Sensitive to noise and outliers.

2. DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN is a density-based clustering algorithm that groups points that are closely packed together, marking points in low-density regions as outliers. Unlike K-Means, DBSCAN does not require the number of clusters to be predefined, and it can handle clusters of arbitrary shapes.

Advantages:

Can discover clusters of varying shapes and sizes.

Identifies outliers effectively.

Does not require specifying the number of clusters.

Disadvantages:

Requires setting two parameters (epsilon and minPts), which can be challenging to determine.

Struggles with clusters of varying density.

3. Gaussian Mixture Models (GMM)

Gaussian Mixture Models assume that the data points are generated from a mixture of several Gaussian distributions. GMM is a probabilistic model that provides a soft clustering approach, where each data point is assigned a probability of belonging to each cluster rather than being assigned to a single cluster.

Advantages:

Can handle elliptical clusters.

Provides probabilities for cluster membership, which can be useful for uncertainty modeling.

Disadvantages:

More computationally expensive than K-Means.

Sensitive to initialization and the number of components (clusters).

4. Agglomerative Clustering

Agglomerative clustering is an alternative to K-Means and hierarchical clustering, where data points are iteratively merged into clusters. The merging process is based on distance or similarity measures.

Advantages:

Can be used for both small and large datasets.

Works well with irregular-shaped clusters.

Disadvantages:

Can be computationally intensive for very large datasets.

Clusters can be influenced by noisy data.

Clustering in Data Science Projects

When pursuing a data science course in Jaipur, it’s essential to gain hands-on experience with clustering techniques. These methods are used in various real-world applications such as:

Customer Segmentation: Businesses use clustering to group customers based on purchasing behavior, demographic data, and preferences. This helps in tailoring marketing strategies and improving customer experiences.

Anomaly Detection: Clustering is also employed to detect unusual patterns in data, such as fraudulent transactions or network intrusions.

Image Segmentation: Clustering is used in image processing to identify regions of interest in medical images, satellite imagery, or facial recognition.

Conclusion

Clustering techniques, such as K-Means and its alternatives, are a fundamental part of a data scientist’s toolkit. By mastering these algorithms, you can uncover hidden patterns, segment data effectively, and make data-driven decisions that can transform businesses. Whether you're working with customer data, financial information, or social media analytics, understanding the strengths and limitations of different clustering techniques is crucial.

Enrolling in a data science course in Jaipur is an excellent way to get started with clustering methods. With the right foundation, you’ll be well-equipped to apply these techniques to real-world problems and advance your career as a data scientist.

0 notes

Text

Key Things to Know About React

What is React Primarily Used For?

React is a JavaScript library created by Facebook for building user interfaces. It is widely used for developing fast, interactive web applications. React allows developers to build reusable UI components, which helps in speeding up development and enhancing the user experience.

Building Interactive Web Interfaces: React is designed to make building dynamic and responsive web interfaces simpler and more efficient.

Reusable Components: One of the core principles of React is component-based architecture. Components are self-contained modules that can be reused across different application parts, enhancing consistency and maintainability.

Virtual DOM: React uses a virtual DOM to optimize rendering performance. The virtual DOM is a lightweight copy of the actual DOM that React uses to determine the most efficient way to update the user interface.

Benefits of Using React for Software Development

Using React offers a wide range of benefits for software developers, making it a popular choice for front-end development:

Speed and Performance: React’s use of the virtual DOM and efficient diffing algorithm significantly improves performance, especially for dynamic and high-load applications.

Reusable Components: Components in React can be reused in multiple places within the application, reducing the amount of code duplication and making the development process faster and more efficient.

Large Community: React has a vast and active community. This means there are plenty of resources, tutorials, libraries, and third-party tools available to help developers solve problems and enhance their applications.

Unidirectional Data Flow: React follows a unidirectional data flow, making it easier to understand and debug applications. This structure ensures that data flows in a single direction, which can help manage the complexity of the application state.

Integration with Other Libraries and Frameworks: React can be easily integrated with other JavaScript libraries and frameworks, such as Redux for state management or Next.js for server-side rendering.

Reasons for React’s Popularity

React’s popularity in the development community can be attributed to several key factors:

Simple to Read and Easy to Use: React’s component-based architecture and JSX (JavaScript XML) syntax make it intuitive and easy to learn, even for beginners.

Designed for Easy Maintenance: The modular nature of React components simplifies the process of maintaining and updating code. Each component is isolated and can be updated independently, which reduces the risk of introducing bugs.

Robust, Interactive, and Dynamic: React excels at building highly interactive and dynamic user interfaces, making it a great choice for applications that require real-time updates and complex user interactions.

SEO-Friendly: React can be rendered on the server side using frameworks like Next.js, which improves search engine optimization (SEO) by delivering fully rendered pages to search engines.

Easy to Test: React components are easy to test, thanks to their predictable behavior and well-defined structure. Tools like Jest and Enzyme can be used to write unit tests and ensure the reliability of the codebase.

Want to Accelerate React Development at Your Company?

If you’re looking to accelerate React development at your company, we can help. Our team of experienced React developers can provide the expertise and support you need to build high-quality, performant web applications.

SEE HOW WE CAN HELP.

Contact us to find out more about our React development services and how we can support your project.

#web development#web app development#reactjs developers#reactjs#reactnative#developer#development#web development trends#web developers#custom web development

0 notes

Note

"Primarily because it is saying that not only is copying someone's art theft, it is saying that looking at and learning from someone's art can be defined as theft rather than fair use."

This is a very easy point for me to say, you misunderstand the issue at a base level. This is not the same thing at all.

Humans internalize an image and think about it, but once they walk away from it, literally the second we look away from it, it begins to morph and shape and mix and it becomes something else well before a person sits down to make something inspired by it. We latch on to the parts that already speak to things inside of us. We exagerate them and grow them and soon we forget details that the artist felt were key that we didn't connect to. We are not scalping the image, we are inspired by it. We don't store it in our data banks and then later clip a part off.

Machines cannot be inspired. They cannot creatively think through something. It just takes the exact piece of art and clips this from it here, and that from it there. It steals the art itself. It's not actually intelligent-- AI is a terrible term for it-- as you noted it's machine generated, but it's not even generated, it's machine scalped and collaged, though collage is also a misnomer because it fails to take part in the digital collage genre as well, because it can't make intelligent choices about what it's doing or why. It can't generate thought-- it can only steal.

It's true a lot of the issues are built into capitalism. If we didn't have to make money to survive, this would be a very different discussion. If "ai art" algorithms were "trained" on "ethically sourced materials", this would be a very different discussion. If it could spit out its results WITH a list of what art it took from, to cite it, that would be a different discussion. But there would still be plenty of artists in this area who WANT ownership of their work, who DO care about credit, and for fair reason. They're the ones who actually put in the work to make the damn thing.

But to compare "ai art" to things like photoshop, clone tools, or filters that adjust colors or tones or spot fix is such a bad faith argument. Those literally automate things that used to take manual labor. That's a different dialogue entirely, more akin to the automation of fruit picking, or order taking at a McDonalds. That is people choosing to put in the time and give the machines the 'skills' to perform a task we want automated. And there are a lot of caveats there about class issues, but that's a dif convo. Point is, this isn't about automation.

AI art steals. It does not automate, it steals. Artists did not put in their data to make their lives easier, tech bros coded and put in algorithms to steal the data from artists to make THEIR lives easier. The very purpose is different. "AI art" generators can only do anything because they scalp art from other people without permission, without commission, and without reparation. It steals and piece meals and voila. You have something that most of the time is too hard to tell where it even stole from. But not always-- sometimes it's so clear what it took from, and that's a whole other thing.

You can write a long post about how technology has changed over time but if at the core you still don't understand the issue, then it's not a relevant thought on the topic, and says nothing.

And I find a hell of a lot of AI art a lot more interesting than I find human-generated corporate art or Thomas Kincaid (but then, I repeat myself).

I know you know this, but you don't like the AI art, you like a piece of art it stole that you'll never know the origins of, or the artist of. The AI can't make art. It can only scalp other people's work and lead you to attributing your like of it to the AI, rather than the person. And that's the real tragedy here. It doesn't just take away pay or money, it also steals the credit. It steals your ability to look up more work by the artist, to connect with another person through their work. It cuts off their fingerprints and tricks you into thinking you're seeing something it made.

I think you're right about one thing-- the use of it as meme making material is potentially neat. Something that cant be copywritten, something that KNOWS what it is, that it's stealing, used noncommercially for silly things? I don't think it has no purpose, but the amount of damage it has done to the environment (literally in the form of Planet Earth but also the artist environment, ttrpg environment, etc) is so big that supporting its use at all keeps it around, and that's the main issue. Some things we gotta let die and turn away from at every turn, because it does too much harm, even if you personally, or your friends, don't use it that way.

Why reblog machine-generated art?

When I was ten years old I took a photography class where we developed black and white photos by projecting light on papers bathed in chemicals. If we wanted to change something in the image, we had to go through a gradual, arduous process called dodging and burning.

When I was fifteen years old I used photoshop for the first time, and I remember clicking on the clone tool or the blur tool and feeling like I was cheating.

When I was twenty eight I got my first smartphone. The phone could edit photos. A few taps with my thumb were enough to apply filters and change contrast and even spot correct. I was holding in my hand something more powerful than the huge light machines I'd first used to edit images.

When I was thirty six, just a few weeks ago, I took a photo class that used Lightroom Classic and again, it felt like cheating. It made me really understand how much the color profiles of popular web images I'd been seeing for years had been pumped and tweaked and layered with local edits to make something that, to my eyes, didn't much resemble photography. To me, photography is light on paper. It's what you capture in the lens. It's not automatic skin smoothing and a local filter to boost the sky. This reminded me a lot more of the photomanipulations my friend used to make on deviantart; layered things with unnatural colors that put wings on buildings or turned an eye into a swimming pool. It didn't remake the images to that extent, obviously, but it tipped into the uncanny valley. More real than real, more saturated more sharp and more present than the actual world my lens saw. And that was before I found the AI assisted filters and the tool that would identify the whole sky for you, picking pieces of it out from between leaves.

You know, it's funny, when people talk about artists who might lose their jobs to AI they don't talk about the people who have already had to move on from their photo editing work because of technology. You used to be able to get paid for basic photo manipulation, you know? If you were quick with a lasso or skilled with masks you could get a pretty decent chunk of change by pulling subjects out of backgrounds for family holiday cards or isolating the pies on the menu for a mom and pop. Not a lot, but enough to help. But, of course, you can just do that on your phone now. There's no need to pay a human for it, even if they might do a better job or be more considerate toward the aesthetic of an image.

And they certainly don't talk about all the development labs that went away, or the way that you could have trained to be a studio photographer if you wanted to take good photos of your family to hang on the walls and that digital photography allowed in a parade of amateurs who can make dozens of iterations of the same bad photo until they hit on a good one by sheer volume and luck; if you want to be a good photographer everyone can do that why didn't you train for it and spend a long time taking photos on film and being okay with bad photography don't you know that digital photography drove thousands of people out of their jobs.

My dad told me that he plays with AI the other day. He hosts a movie podcast and he puts up thumbnails for the downloads. In the past, he'd just take a screengrab from the film. Now he tells the Bing AI to make him little vignettes. A cowboy running away from a rhino, a dragon arm-wrestling a teddy bear. That kind of thing. Usually based on a joke that was made on the show, or about the subject of the film and an interest of the guest.

People talk about "well AI art doesn't allow people to create things, people were already able to create things, if they wanted to create things they should learn to create things." Not everyone wants to make good art that's creative. Even fewer people want to put the effort into making bad art for something that they aren't passionate about. Some people want filler to go on the cover of their youtube video. My dad isn't going to learn to draw, and as the person who he used to ask to photoshop him as Ant-Man because he certainly couldn't pay anyone for that kind of thing, I think this is a great use case for AI art. This senior citizen isn't going to start cartooning and at two recordings a week with a one-day editing turnaround he doesn't even really have the time for something like a Fiverr commission. This is a great use of AI art, actually.

I also know an artist who is going Hog Fucking Wild creating AI art of their blorbos. They're genuinely an incredibly talented artist who happens to want to see their niche interest represented visually without having to draw it all themself. They're posting the funny and good results to a small circle of mutuals on socials with clear information about the source of the images; they aren't trying to sell any of the images, they're basically using them as inserts for custom memes. Who is harmed by this person saying "i would like to see my blorbo lasciviously eating an ice cream cone in the is this a pigeon meme"?

The way I use machine-generated art, as an artist, is to proof things. Can I get an explosion to look like this. What would a wall of dead computer monitors look like. Would a ballerina leaping over the grand canyon look cool? Sometimes I use AI art to generate copyright free objects that I can snip for a collage. A lot of the time I use it to generate ideas. I start naming random things and seeing what it shows me and I start getting inspired. I can ask CrAIon for pose reference, I can ask it to show me the interior of spaces from a specific angle.

I profoundly dislike the antipathy that tumblr has for AI art. I understand if people don't want their art used in training pools. I understand if people don't want AI trained on their art to mimic their style. You should absolutely use those tools that poison datasets if you don't want your art included in AI training. I think that's an incredibly appropriate action to take as an artist who doesn't want AI learning from your work.

However I'm pretty fucking aggressively opposed to copyright and most of the "solid" arguments against AI art come down to "the AIs viewed and learned from people's copyrighted artwork and therefore AI is theft rather than fair use" and that's a losing argument for me. In. Like. A lot of ways. Primarily because it is saying that not only is copying someone's art theft, it is saying that looking at and learning from someone's art can be defined as theft rather than fair use.

Also because it's just patently untrue.

But that doesn't really answer your question. Why reblog machine-generated art? Because I liked that piece of art.

It was made by a machine that had looked at billions of images - some copyrighted, some not, some new, some old, some interesting, many boring - and guided by a human and I liked it. It was pretty. It communicated something to me. I looked at an image a machine made - an artificial picture, a total construct, something with no intrinsic meaning - and I felt a sense of quiet and loss and nostalgia. I looked at a collection of automatically arranged pixels and tasted salt and smelled the humidity in the air.

I liked it.

I don't think that all AI art is ugly. I don't think that AI art is all soulless (i actually think that 'having soul' is a bizarre descriptor for art and that lacking soul is an equally bizarre criticism). I don't think that AI art is bad for artists. I think the problem that people have with AI art is capitalism and I don't think that's a problem that can really be laid at the feet of people curating an aesthetic AI art blog on tumblr.

Machine learning isn't the fucking problem the problem is massive corporations have been trying hard not to pay artists for as long as massive corporations have existed (isn't that a b-plot in the shape of water? the neighbor who draws ads gets pushed out of his job by product photography? did you know that as recently as ten years ago NewEgg had in-house photographers who would take pictures of the products so users wouldn't have to rely on the manufacturer photos? I want you to guess what killed that job and I'll give you a hint: it wasn't AI)

Am I putting a human out of a job because I reblogged an AI-generated "photo" of curtains waving in the pale green waters of an imaginary beach? Who would have taken this photo of a place that doesn't exist? Who would have painted this hypersurrealistic image? What meaning would it have had if they had painted it or would it have just been for the aesthetic? Would someone have paid for it or would it be like so many of the things that artists on this site have spent dozens of hours on only to get no attention or value for their work?

My worst ratio of hours to notes is an 8-page hand-drawn detailed ink comic about getting assaulted at a concert and the complicated feelings that evoked that took me weeks of daily drawing after work with something like 54 notes after 8 years; should I be offended if something generated from a prompt has more notes than me? What does that actually get the blogger? Clout? I believe someone said that popularity on tumblr gets you one thing and that is yelled at.

What do you get out of this? Are you helping artists right now? You're helping me, and I'm an artist. I've wanted to unload this opinion for a while because I'm sick of the argument that all Real Artists think AI is bullshit. I'm a Real Artist. I've been paid for Real Art. I've been commissioned as an artist.

And I find a hell of a lot of AI art a lot more interesting than I find human-generated corporate art or Thomas Kincaid (but then, I repeat myself).

There are plenty of people who don't like AI art and don't want to interact with it. I am not one of those people. I thought the gay sex cats were funny and looked good and that shitposting is the ideal use of a machine image generation: to make uncopyrightable images to laugh at.

I think that tumblr has decided to take a principled stand against something that most people making the argument don't understand. I think tumblr's loathing for AI has, generally speaking, thrown weight behind a bunch of ideas that I think are going to be incredibly harmful *to artists specifically* in the long run.

Anyway. If you hate AI art and you don't want to interact with people who interact with it, block me.

5K notes

·

View notes

Text

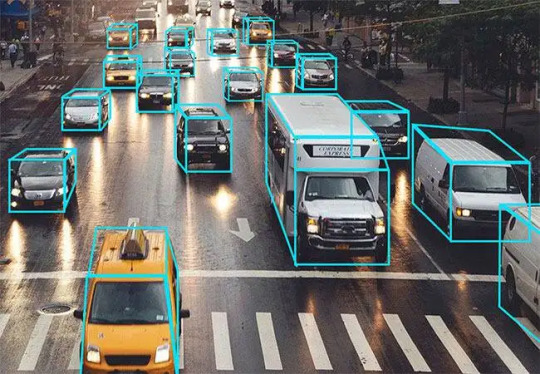

Enhancing Computer Vision Capabilities with Automated Annotation

Introduction:

In the realm of cutting-edge computer vision, the importance of accurate and efficient image annotation cannot be overstated. As AI technologies continue to evolve and permeate various industries, the demand for precise labeling of visual data has surged. Automated annotation, also known as AI-assisted annotation, has emerged as a game-changing solution, revolutionizing the way AI models are trained and enhancing computer vision capabilities to unprecedented levels.

Types of Automated Annotation:

Bounding Boxes: A prevalent method involving drawing rectangular boxes around objects of interest, facilitating object detection tasks with defined coordinates and sizes.

Polygonal Segmentation: Offers more precision than bounding boxes by outlining exact object shapes with interconnected points, ideal for detailed shape analysis and segmentation projects.

Semantic Segmentation: Classifies each pixel in an image to a specific class, providing a comprehensive understanding of image composition crucial for applications like self-driving cars and medical diagnostics.

Instance Segmentation: Goes beyond semantic segmentation by distinguishing between different instances of the same object category, essential for complex scene understanding and object differentiation.

Keypoint Annotation: Marks specific points of interest on objects, such as facial landmarks or joints, vital for tasks like human pose estimation and gesture recognition.

Benefits and Challenges of Automated Annotation:

Benefits:

Enhanced Efficiency: Automation reduces annotation time from minutes to seconds, significantly speeding up the data labeling process.

Consistency and Accuracy: AI-driven annotation ensures consistent labeling across datasets, minimizing human error and improving model performance.

Scalability: Automated annotation scales effortlessly to handle large datasets and evolving project needs, making it ideal for both small-scale experiments and enterprise-level applications.

Cost-Effectiveness: By streamlining annotation workflows, automated methods reduce labor costs associated with manual labeling while maintaining quality standards.

Challenges:

Complexity of Annotation Tasks: Certain tasks, such as fine-grained segmentation or keypoint labeling, may require specialized algorithms and expertise to achieve optimal results.

Data Quality Assurance: Ensuring the accuracy and reliability of automated annotations requires robust quality assurance mechanisms and human oversight to correct errors and validate results.

Algorithm Training and Tuning: Developing AI models for automated annotation demands careful training, tuning, and optimization to handle diverse data types and labeling requirements effectively.

Process of Automated Annotation:

Data Preparation: Curating high-quality datasets with diverse and representative samples is the first step in the annotation process.

Algorithm Selection: Choosing suitable automated annotation algorithms based on the labeling tasks and dataset characteristics, considering factors like object complexity, image variability, and desired output formats.

Annotation Execution: Implementing automated annotation algorithms to label images, leveraging AI capabilities to generate accurate annotations efficiently.

Quality Control: Performing rigorous quality checks and validation to ensure annotation accuracy, consistency, and alignment with project objectives.

Iterative Refinement: Continuously refining annotation algorithms, incorporating feedback, and optimizing models to improve performance and address evolving annotation challenges.

Tools Used for Automated Annotation

Automated annotation plays a crucial role in accelerating the development of computer vision models by streamlining the process of labeling data. Several tools have emerged in recent years to facilitate automated annotation, each offering unique features and functionalities tailored to different needs.

Let's explore some of the prominent tools used for automated annotation in computer vision:

LabelMe:

LabelMe is an open-source tool that allows users to annotate images with polygons, rectangles, and other shapes.

It supports collaborative annotation, making it ideal for teams working on large datasets.

LabelMe also provides tools for semantic segmentation and instance segmentation annotations.

LabelImg:

LabelImg is a popular open-source tool for annotating images with bounding boxes.

It offers a user-friendly interface and supports multiple annotation formats such as Pascal VOC and YOLO.

LabelImg is widely used for object detection tasks and is known for its simplicity and ease of use.

CVAT (Computer Vision Annotation Tool):

CVAT is a comprehensive annotation tool that supports a wide range of annotation types, including bounding boxes, polygons, keypoints, and segmentation masks.

It offers collaborative annotation capabilities, allowing multiple annotators to work on the same project simultaneously.

CVAT also provides automation features such as auto-segmentation and auto-tracking to enhance annotation efficiency.

Roboflow:

Roboflow is a platform that offers automated annotation services along with data preprocessing and model training.

It supports various annotation formats and provides tools for data augmentation and version control.

Roboflow's integration with popular frameworks like TensorFlow and PyTorch makes it a preferred choice for developers.

Labelbox:

Labelbox is a versatile annotation platform that caters to both manual and automated annotation workflows.

It offers AI-assisted labeling features powered by machine learning algorithms, allowing users to accelerate the annotation process.

Labelbox supports collaboration, quality control, and integration with machine learning pipelines.

QGIS (Quantum Geographic Information System):

QGIS is an open-source GIS software that includes tools for spatial data analysis and visualization.

While not specifically designed for image annotation, QGIS can be utilized for annotating geospatial data and generating raster layers.

Its extensibility through plugins and scripting makes it adaptable for custom annotation workflows.

These tools, each with its unique strengths and capabilities, contribute to the advancement of automated annotation in computer vision applications. By leveraging these tools effectively, developers and data annotators can streamline the annotation process, improve dataset quality, and accelerate the development of robust computer vision models.

Conclusion:

Automated annotation represents a transformative shift in the field of computer vision, empowering AI developers and researchers with unprecedented speed, accuracy, and scalability in data labeling. TagX, as a leader in AI-driven annotation solutions, specializes in providing cutting-edge automated annotation services tailored to diverse industry needs. Our expertise in developing and deploying advanced annotation algorithms ensures that your AI projects achieve unparalleled performance, reliability, and innovation. Partner with TagX to unlock the full potential of automated annotation and revolutionize your computer vision capabilities.

Ready to take your project to the next level with expert automated annotation? Let's make your AI dreams a reality—get in touch with us today!

Visit Us, www.tagxdata.com

Original Source, https://www.tagxdata.com/enhancing-computer-vision-capabilities-with-automated-annotation

0 notes

Text

what is the difference between vpn and apn

🔒🌍✨ Get 3 Months FREE VPN - Secure & Private Internet Access Worldwide! Click Here ✨🌍🔒

what is the difference between vpn and apn

VPN encryption methods

VPN encryption methods are critical components of virtual private network (VPN) services, ensuring the security and privacy of users' internet connections. Encryption is the process of encoding data to make it unreadable to unauthorized parties, thereby safeguarding sensitive information transmitted over the internet.

One common encryption method used in VPNs is AES (Advanced Encryption Standard). AES employs symmetric-key cryptography, where both the sender and receiver use the same encryption key to encrypt and decrypt data. With key lengths of 128, 192, or 256 bits, AES is highly secure and widely adopted by VPN providers for its reliability and efficiency.

OpenVPN is another popular encryption protocol utilized by VPN services. It combines the security of SSL/TLS protocols with the versatility of open-source software, making it highly configurable and compatible with various platforms. OpenVPN supports different encryption algorithms, including AES, Blowfish, and 3DES, allowing users to customize their encryption settings according to their security needs.

IPsec (Internet Protocol Security) is a suite of protocols used to secure internet communications at the IP layer. It operates in two modes: Transport mode, which encrypts only the data payload, and Tunnel mode, which encrypts the entire IP packet. IPsec employs cryptographic algorithms like AES, DES, and SHA to provide authentication, integrity, and confidentiality for VPN connections.

WireGuard is a relatively new VPN protocol gaining popularity for its simplicity and performance. It utilizes state-of-the-art cryptographic techniques to ensure secure communication while minimizing overhead. Despite its lightweight design, WireGuard offers robust encryption using modern ciphers like ChaCha20 and Curve25519.

In conclusion, VPN encryption methods play a crucial role in safeguarding users' online activities and data privacy. Whether it's AES, OpenVPN, IPsec, or WireGuard, choosing the right encryption protocol is essential for ensuring a secure and reliable VPN connection.

APN data routing

Title: Understanding APN Data Routing: A Comprehensive Guide

In the world of mobile connectivity, APN (Access Point Name) data routing plays a pivotal role in ensuring that users can access the internet and other data services seamlessly. APN acts as a gateway between a mobile network and the internet, facilitating the transfer of data packets between devices and servers. Understanding how APN data routing works is crucial for both consumers and network administrators to optimize mobile connectivity and troubleshoot any issues that may arise.

At its core, APN serves as a set of configuration settings that define how a device connects to a mobile network and accesses data services. Each mobile network operator has its own unique APN settings, which typically include parameters such as the APN name, APN type, username, password, and authentication type. These settings are provisioned automatically when a user inserts a SIM card into their device, but they can also be configured manually if necessary.

When a user initiates a data connection, their device communicates with the mobile network's authentication servers to verify their credentials and establish a secure connection. Once authenticated, the device uses the APN settings to route data packets to and from the internet. This process involves identifying the appropriate network gateway and establishing a data tunnel through which information can flow.

APN data routing enables users to access a wide range of online services, including web browsing, email, social media, and streaming media. By efficiently routing data packets through the mobile network, APN helps optimize network performance and ensure a consistent user experience across different devices and locations.

In conclusion, APN data routing is a fundamental aspect of mobile connectivity that enables users to access the internet and other data services on their devices. By understanding how APN works and configuring the appropriate settings, both consumers and network administrators can ensure reliable and efficient data connections at all times.

VPN privacy features

VPN (Virtual Private Network) services offer a multitude of privacy features that have become increasingly important in our digital age. These features are designed to protect users from online threats and secure their sensitive information. One of the main privacy features of VPNs is encryption. VPNs use encryption techniques to scramble data, making it unreadable to hackers or anyone trying to intercept the information. This ensures that online communication remains private and secure.

Another key privacy feature of VPNs is the ability to mask IP addresses. By connecting to a VPN server, users can hide their real IP address and appear to be browsing from a different location. This helps in maintaining anonymity and prevents websites and advertisers from tracking user's online activities.

Furthermore, VPN services often come with a strict no-logs policy, meaning they do not store any user activity logs. This ensures that even the VPN provider cannot access or monitor user's online behavior. Additionally, some VPNs offer features like kill switch and DNS leak protection to prevent any accidental exposure of sensitive data in case the VPN connection drops.

In conclusion, VPN privacy features are crucial for safeguarding personal information and ensuring online anonymity. By utilizing encryption, IP address masking, no-logs policy, and other security measures, VPN users can browse the internet with peace of mind knowing that their privacy is protected.

APN network access

An Access Point Name (APN) is a gateway between a mobile network and the internet. It is essential for establishing a data connection that allows mobile devices to access the internet and other web-based services. When a device connects to a mobile network, it needs to authenticate itself through the APN specified by the network operator.

APNs are necessary for configuring the settings that enable mobile data communication. Each network operator has its own unique APN settings, including the name, APN type, proxy, port, username, and password. The correct configuration of these settings is crucial for ensuring a stable and secure connection to the internet.

Users can manually configure APN settings on their devices to establish a connection with their network operator's servers. These settings can be found in the device's network settings menu, where users can input the specific APN details provided by their operator. In some cases, APN settings are automatically configured when inserting a new SIM card into a device.

APNs play a vital role in enabling mobile data services, such as browsing the web, sending multimedia messages, and accessing online applications. Without the correct APN configuration, devices may not be able to connect to the internet or experience slow and unreliable data speeds.

In conclusion, understanding and configuring APN settings is crucial for ensuring seamless and efficient internet connectivity on mobile devices. By inputting the correct APN details, users can enjoy a smooth online experience with their mobile network.

VPN tunneling protocols

VPN tunneling protocols are essential components of virtual private network (VPN) services, providing secure and encrypted pathways for data transmission over the internet. These protocols establish the framework for how data is encapsulated, transmitted, and decrypted between the user's device and the VPN server. Here are some common VPN tunneling protocols:

OpenVPN: Widely regarded as one of the most secure and versatile VPN protocols, OpenVPN uses open-source technology and supports various encryption algorithms. It operates on both TCP and UDP ports, offering flexibility and compatibility across different devices and networks.

IPSec (Internet Protocol Security): IPSec operates at the network layer of the OSI model and provides robust security through encryption and authentication. It can be implemented in two modes: Transport mode, which encrypts only the data payload, and Tunnel mode, which encrypts both the data payload and the entire original IP packet.

L2TP/IPSec (Layer 2 Tunneling Protocol/IPSec): L2TP/IPSec combines the features of L2TP and IPSec to create a secure VPN connection. L2TP establishes the tunnel, while IPSec handles encryption and authentication, offering a high level of security for data transmission.

PPTP (Point-to-Point Tunneling Protocol): Although widely supported due to its ease of setup and compatibility with older devices, PPTP is considered less secure than other protocols. It uses a weaker encryption method and has known vulnerabilities, making it less suitable for transmitting sensitive data.

SSTP (Secure Socket Tunneling Protocol): Developed by Microsoft, SSTP utilizes the SSL/TLS protocol for secure communication. It is commonly used on Windows platforms and provides strong encryption, making it suitable for protecting data transmission.

Understanding the strengths and weaknesses of each VPN tunneling protocol is crucial for selecting the most appropriate option based on security requirements, compatibility, and performance considerations. By choosing the right protocol, users can ensure a secure and private browsing experience while accessing the internet through VPN services.

0 notes

Text

0 notes

Text

Decentralized finance (DeFi) has transformed the financial landscape, providing an open and permissionless ecosystem that empowers users to transact and engage in various financial activities. However, secure and verifiable digital identities are essential in this decentralized world. Decentralized Identifiers (DIDs) offer a solution by providing self-owned and decentralized identities that can be utilized in the DeFi space. This article explores the role of DIDs in DeFi, highlighting their benefits, implementation in DeFi applications, examples of projects utilizing DIDs, challenges and limitations, prospects, and regulatory considerations. The Role Of DIDs In Decentralized Finance (DeFi) DIDs play a critical role in addressing the challenges of identity verification and authentication in the DeFi space. Traditionally, centralized systems have managed user identities, posing privacy risks and vulnerabilities. DIDs offer a decentralized alternative, allowing users to maintain control over their identity and personal data. Benefits Of Using DIDs In DeFi Decentralized identifiers (DIDs) provide users with self-sovereign identity, granting them full ownership and control of their data and identity. This transformative approach fosters heightened privacy, reinforces security protocols, and empowers users by placing them firmly in charge of their digital identities and information. Interoperability: DIDs follow open standards, enabling seamless integration and interoperability across different DeFi platforms and services. Users can utilize the same identity across multiple applications, eliminating the need for repetitive onboarding processes. Decentralized identifiers (DIDs) employ advanced cryptographic methods that significantly bolster security in contrast to traditional username and password combinations. This fortified security framework substantially reduces the risk of identity theft, fraudulent activities, and unauthorized access to user accounts, offering a robust and resilient approach to secure authentication in digital systems. How DIDs Work In The DeFi Ecosystem DIDs leverage blockchain and decentralized technologies to provide unique and verifiable identities. The process typically involves the following steps: Users generate their DIDs using cryptographic algorithms. These DIDs are unique and can be linked to their personal information or other cryptographic proofs. Public Key Infrastructure (PKI) DIDs are associated with public-private key pairs. The public key is stored on the blockchain, while the user securely holds the private key. The public key allows others to verify the identity associated with a DID. Identity Verification and Credentials Users can associate verifiable credentials, such as government-issued IDs or educational certificates, with their DIDs. These credentials are stored on the blockchain, ensuring immutability and easy verification. Implementing DIDs In DeFi Applications Integrating DIDs into DeFi applications involves adopting standards such as the Decentralized Identity Foundation (DIF) and World Wide Web Consortium (W3C) specifications. Several technical components can help implement DIDs in DeFi: DIDs can be implemented using different methods, such as the Ethereum Name Service (ENS) or the Sovrin Network. These methods define how DIDs are created, resolved, and managed. Smart contracts can store and manage DID-related data on the blockchain. They enable interactions between the DeFi application and the user’s DID. Examples Of DeFi Projects Utilizing DIDs Several DeFi projects are incorporating DIDs to enhance user identity management and security: Uniswap, a widely-used decentralized exchange protocol, offers users the convenience of logging in and engaging with the platform solely through their Ethereum address, serving as their distinct identification. This innovative approach eliminates the necessity for conventional usernames and passwords, relying instead on the robust security capabilities inherent to the Ethereum blockchain.

Nexus Mutual, a decentralized insurance protocol, leverages DIDs for user identity management and claims verification. This ensures trust and transparency in the insurance process. Challenges And Limitations Of Using DIDs In DeFi While DIDs present promising solutions for identity management in DeFi, challenges and limitations exist: Incorporating Decentralized Identifiers (DIDs) within the world of Decentralized Finance (DeFi) introduces pertinent regulatory considerations, notably centered around the imperative Know Your Customer (KYC) and Anti-Money Laundering (AML) requirements. This endeavor necessitates a delicate equilibrium between safeguarding user privacy and upholding rigorous regulatory compliance, presenting a multifaceted and intricate challenge within the DeFi landscape. As the number of users and transactions in DeFi continues to grow, scaling DIDs to meet the demands of a large user base poses scalability concerns. Efficient and scalable solutions are necessary to maintain smooth operations. Regulatory Considerations For DIDs In DeFi As decentralized finance (DeFi) matures, one pivotal area gaining traction is the adoption of decentralized identifiers (DIDs). These unique, self-sovereign digital IDs promise greater autonomy for users. However, their integration comes with regulatory considerations. Some of the regulatory aspects tied to the union of DIDs and DeFi include: Data Protection and Privacy With the rise of the General Data Protection Regulation (GDPR) in Europe and similar regulations worldwide, the treatment of personal data is under the microscope. DIDs, by design, allow users to control their data. But questions arise: How will DeFi platforms ensure that data linked to DIDs is stored, processed, and shared in compliance with these regulations? Regulatory bodies may demand stringent data protection measures, which DeFi platforms must address preemptively. Anti-Money Laundering (AML) and Know Your Customer (KYC) Traditional financial systems have established AML and KYC processes, which become complex in a decentralized landscape. With DIDs, while users retain control over their identity, DeFi platforms need a balance. They must verify the authenticity of a user’s credentials without infringing upon their privacy rights. Regulators will likely push for clearer AML and KYC procedures tailored to the unique nature of DIDs. Interoperability and Standardization Standardization is needed for DIDs to function effectively across multiple platforms. Regulatory bodies might step in to ensure universal standards for DIDs in the DeFi sector. This might involve endorsing certain protocols or establishing guidelines that ensure DIDs are consistent and interoperable. Dispute Resolution Mechanisms In the decentralized world of DeFi, traditional dispute resolution mechanisms might not be directly applicable. When DIDs are in the mix, conflicts can arise concerning identity verification, data breaches, or fraudulent activities. Regulatory agencies might advocate for creating decentralized dispute resolution mechanisms or other novel solutions tailored to this unique intersection of technology and finance. As with any financial service, protecting the end user is paramount. Regulatory bodies will be keen to ensure that DeFi platforms using DIDs have measures in place to educate users about their rights and responsibilities. This might mean clear guidelines on how users can retrieve, modify, or delete their DIDs and what they can do in cases of unauthorized access or breaches. Conclusion DIDs provide a decentralized and user-centric approach to identity management in the DeFi ecosystem. With improved privacy, security, and interoperability, DIDs can address the challenges associated with centralized identity systems. As the DeFi space continues to evolve, the integration of DIDs is poised to transform user experiences and pave the way for a more inclusive and secure financial future.

0 notes

Text

lately i've been thinking about how commonly demanding younger people seem to act towards streamers/creators. and by younger i mean anyone under like 25. and no this isn't a "zoomers are so entitled" post. it has more to do with the... reasonability/frequency of demands?

like i saw a bunch of people complaining that a USA streamer didn't start the stream earlier so that EU fans could watch. but like... what's convenient to one time zone is gonna be inconvenient to another. that's just the nature of the earth's rotation. it should be obvious that any streamer is going to base their time on where THEY live. and while not a particularly offensive demand, it was def a naive one for how popular it was.

and i see that kind of stuff all the time now. back when i was younger and on random internet forums in the 90s, i certainly had my fair share of weird demands. if you posted a videogame ost rip, somebody would ask for it in a dif format (even tho conversion isn't actually hard n they could do it themselves). or people criticising art or writing etc? begging. requests for digital handouts. that's always been around. usually done by younger people who don't fully understand that it isn't that easy to make/post stuff and they should just be grateful to have anything shared at all?

but now it's almost normal for these ridiculous demands. it's extreme and common. like i can't tell you how many people on THIS SITE will send me anons demanding i post only the content THEY like. or in a way THEY want? and it's like... the unfollow button is right there... there's so many other people just like me that u could follow instead. do you really expect me to do all this stuff just because you asked?

and the way people ask is meaner and more demanding too. what's with that???

i think it's like... social networks. and the way corporations have designed "engagement" with creative mediums. i think there's a big difference in the way i used to watch tv? and could only watch what happened to be on? vs some kid now being able to yell at a streamer "next game".

i don't think younger people are acting entitled. i think they're just... reacting in exactly the way anybody would given the impact they tend to actually HAVE on everything. they see the effect they can have on content in real time. consistently. and creators on platforms like youtube HAVE to bust their ass to follow brutal trends just to break even. it's a vicious cycle and it has nothing to do with choices the fans OR the creators actually consciously make. it's all about the algorithms set up by the owners of the sites.

people on here complain about landlords just leeching. but that's exactly what social networks and "distribution platforms" are doing as well. and it's making the fans and creators have to act more cutthroat.

like and dislike buttons aren't actually "natural". what's natural would be someone liking something (as in the emotion) and just showing it to a friend.

people shouldn't have to beg viewers to subscribe in every video. but they do. because the platforms treat them in such a disposable way that they really do have to sell out in order to break even.

and fans don't LIKE that. bc it's fake. and annoying. but we accept it bc "they're just trying to make a living". maybe it's just the nature of capitalism to "normalise desperation"...

but i just hate it. i guess i don't have any big point besides feeling like corporations are straight up turning younger generations into these sorts of "bloodthirsty scavengers" who are just scathing in their judgement. but always desperate for more.

and they actually are "spoiled". not in their mentality? but in how distribution platforms make the creators sell out in order to get ANY attention. if you want to be successful? you have to suck off your fanbase. even if you come in with an attitude that you're just "doin your own thing and anyone who wants to come along for the ride is welcome"? it almost never works that way.

creators NEED fans more now than ever. because they are slaves to the corporations that own the sites and set up the algorithms. i don't think the fans are even fully conscious of how much impact they have? it's almost entirely unconscious.

and that's where the "demanding" comes in. people know they can be whiny and picky and it'll work, even though they don't understand specifically WHY that is.

you can see it in everything now, too. people are "just more picky". they really are. and i think it has almost everything to do with the corporations that own the internet.

i know i attributed it to younger people before... but really it's rubbing off on everyone. look at the "karen" phenomenon. and how most people are starting to agree "people do it because it works". these corporations are turning everyone into spoiled babies. they need to keep civilians down in order to stay in control. and humanity just feels like it's getting more and more complacent.

sorry i know i'm on a long tangent but ugh. i feel like social networks and distribution platforms, by design, just bring out the absolute worst in people. and i think it would go a long way to have a little bit more kindness and empathy towards the people who ACTUALLY bring you joy.