#Docker Administration


Docker Compose is a tool for defining and running multi-container Docker applications. This article gives a comprehensive introduction to its features, installation, and usage.


Robert Kime, the House as World

"The treasures from beyond the seas shall flow to you"

Robert Kime was an antique dealer by trade. His work consisted of travelling the world in search of rare handcrafted furniture and bringing it back to install in the setting of London rigor that was familiar to him. Mr. Kime had found the sprezzatura of the home, making it warm without looking professionally decorated. His style is the most accomplished of the "colonial home" category, a genre that evokes the romantic lives that predate tourism, when the traveller's gentle home grew richer with hard-won finds at each return from distant lands. Here each room expresses something imperial in miniature, each decorative element is a layer of patient sedimentation, each object helps form a vast cabinet of curiosities. When a corner of a drawing room shelters several continents and several eras, the word museum comes to mind. Yet rather than being turned to statuary, the past is here lived in. Settled in a house, it peoples the background of a family's daily life, it accompanies a household turned toward the future, it witnesses the young passing from childhood to adulthood, the comings and goings, the bursts of laughter, the great departures and the comforting returns. Robert Kime interiors have a soul.

The style of dwelling that Robert Kime codified was common among the 20th-century bourgeoisie. The panther skin amid Victorian wood panelling is something we see in Tintin and Blake & Mortimer; it is a stock image. Ralph Lauren Home drew on this source late but abundantly. Apartments furnished in this eclectic taste were common among diplomats, colonial doctors, antique dealers, long-haul merchants, collectors, naval officers, former expatriates and the other baroque social categories that gave the fine neighbourhoods their charm. The house according to Kime is a colonial house set down in Europe so that it may tell of the whole world. A fulfilment of the messianic promises, it is the throne room into which the riches of the world have flowed: Iranian carpets, tortoise shells from the Maldives, Chinese vases, Indonesian musical instruments, Japanese prints, a Byzantine money changer's table, Mughal bas-reliefs, a fragment of an Athenian ostrakon. Punctuated by more sober Western furniture, these rarities gathered in a single place offer a panorama of provenances and eras. Kime is the assembler of a composite beauty, like the juxtaposed pieces of a stained-glass window; he is the discoverer of harmony between tones, volumes and weights that everything set apart.

Carried by heavy-tonnage ships through storms and unloaded by dockers, these objects show that the demands of aesthetic pleasure presuppose a rough logistics. In Mayfair, Chelsea, Marylebone, in Jasmin, Trocadéro, Saint-Germain-des-Prés, the honnête homme made his home a summary of the world where every square metre sang the colonial legend. "Saigon", "Macassar" or "Singapore" are words tinged with such evocative power that, spoken to describe the provenance of objects in an apartment overlooking a windy boulevard, they were enough to recall the happy position in which Providence had placed your small nation, able to administer others located five weeks away by boat.

The family home, too, has become universal. The world came to it, while its local, Western genius spread across the world. The household brought back the purest nectars, each of them expressing the full essence of a foreign city, a history, a people. Cosmopolitan as it may be, the Robert Kime house is a European house first, a universal one second.

Do not trust appearances. Riches far more precious than mere scraps of cloth lie in these dwellings. Every pattern of every fabric comes from ancient traditions, neglected crafts, palaces saved from the storms of steel of the 20th century. Damask, Jouy, arabesque and Alençon lace each have their deep meaning. Does paisley, for example, not depict the tear of the Buddha in a tradition dating back to 800 years before our era, handed down by generations of Indo-Aryan artisans? The Robert Kime house is little Europe kept at the peak of its form, so high that it can see the whole world, and show it.

Mr. Kime had sure tastes and even surer distastes; he was known for voicing blunt opinions in public (so he was not a gentleman). In a time of disastrous designers, Mr. Kime persisted, he said, in "making interiors to be lived in, not to be looked at". The radical duality of ergonomics and design is an old one, yet there exists an ergonomics of the home that reconciles the two: this smart furnishing, a term sprung from the fountains of intuition that translates in French as "intelligent", but also as "pretty", "elegant" and "functional". The graceful accord of substance and form, of principle and execution, fits in one word.

At the origin of this passion for beauty, one finds in this man's childhood a threatened family model, then the balance found in adolescence in the stability of his stepfather's home, a place embellished with curious furnishings brought back from India in the days of its grandeur. "I try to create an atmosphere I was deprived of as a child. It's all there; it's a matter of atmosphere." He was a precocious but ill-adjusted young man who knew Oxford, the 1960s, and the hinge between a Europe that was productive, religious and educated and a modern world that is passive, consumerist and hungry for leisure.

When he left university, Sotheby's offered him a job, but Kime found himself at the head of his own shop through a chance encounter with an heiress who was looking for an antique dealer to help her sell her family collection. In 1970 he married Helen Nicoll, a children's author, and the couple moved into a former Gothic school. The property was so vast that Robert Kime opened an antiques business there specialising in objects made before 1700. "Every room should start with a rug" would be his motto, and so began the first journeys to Egypt and Turkey in search of antique textiles.

In the midst of the liberal-libertarian slide of the 1970s, while the handing-down of tradition was collapsing, a few rare enthusiasts were making their way back to the source. For Kime this path had two stages. His first period, as an antique dealer, taught him the fabrics, objects and patterns from the colonies that had just closed. In his second period, the boldest and the finest, he became in 1988 a maker of such objects, and through this initiative the production of ornaments that had fallen into disuse was resurrected.

Competent creators are generally formed from a base of classical culture to which they gradually add outside influences. Few in number, they are orderly men, shaped to a higher order, to a superego that teaches them the proper use of all things, and if they sometimes run to extravagance they never transgress. Among men of taste, the only serious fault is a lapse of taste.

The great dismay of the rich: how to spend the money. How to Spend It is, incidentally, the title of a monthly that serves as a catalogue of exorbitantly priced snobberies. Educating one's taste allows for savings, and for a way of life in which getting rich and spending concern the mind as well. Men will be more inclined to Hellenism if, as children, they lived alongside a bust of Athena in their parents' home.

Since the late 1980s, the furnishing on offer to the bourgeoisie has tipped into the asepsis of the Patrick Bateman loft, into kitchens and bathrooms in "operating theatre" style, into the metal cabinet, into black and white. For socialites, the pared-down look was a windfall, a way to flee into the abstract, a concrete transposition of an imposed relationship to the world. The smoothing of textures and the fine grain aimed to present an objective, slippery, neutral face. Europeans were suddenly ashamed of any charm specific to them, and these were the beginnings of robot art, anti-local and anti-artist. Some historians had understood that 1945 would be a purge that was not a one-off but the prelude to a continual purge. Purifying the lines of furniture was a way of offering surfaces off which a blow can only ricochet. A new passion: one had to leave no hold for criticism, never be suspected, on account of one's tastes, of any reprehensible intention. What are our interiors if not the slips of the tongue of our deepest intentions? A home is oneself made legible to others. An era began in which irony was all-powerful in dinner invitations, a language of equivocation and compromise, the grammar of every servitude. Do not name, do not own up, do not think. Patrick Bateman is the archetype of the smooth socialite, to the point that he is forced to seek a safety valve in violence, to the point of purging the Purge itself in order to bear it.

Robert Kime devoted himself to taking sides, to the subjective, to the palpable that presents itself as it is; he took the risk of ridicule and triumphed through beauty. Do we not say of a very successful piece of work that it is "disarming"? So it is with these furniture arrangements, which are so self-evident that they send even irony back to its lair, thoroughly beaten. In an age of advertising showrooms, Robert Kime dared the curved line, the noble material, the grainy texture present to the touch. In an age of greyness and conformity, Robert Kime remains the ideal solution for bringing colour to an interior while keeping its taste perfect.

#Robert Kime#home decor#antiques#Fabric#Craft#Paisley#London#Style#Paris#Éclectique#home interior#interior design#interior decorating#Chelsea


Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. A DevOps certification is a plus and can strengthen your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity


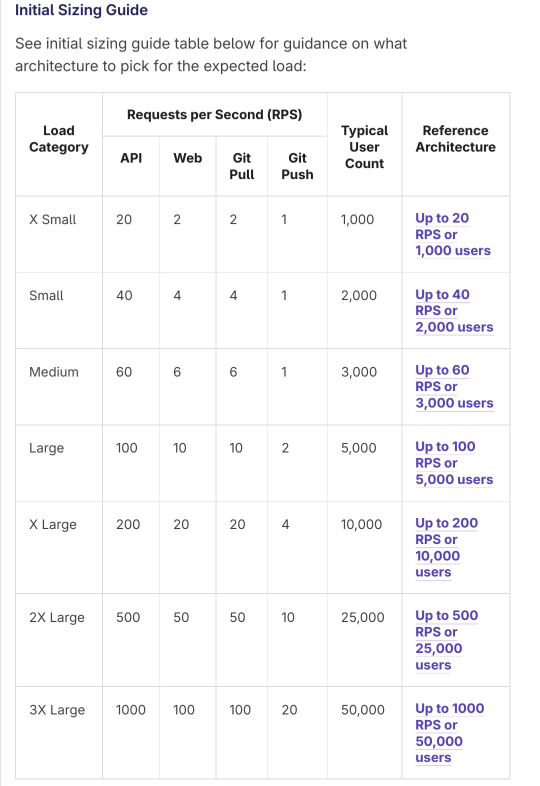

reading the gitlab docs and moaning

https://docs.gitlab.com/ee/administration/reference_architectures/

ohh baby

god, fuck yes, just like that

nnnnnn fuck, you do so much testing and validation, girl

Through testing and real life usage, the Reference Architectures are recommended on the following cloud providers:

[...] The above RPS targets were selected based on real customer data of total environmental loads corresponding to the user count, including CI and other workloads.

casual strace mention because this doc is written for people who know what they're doing. god fuck i'm gonna--

Running components on Docker (including Docker Compose) with the same specs should be fine, as Docker is well known in terms of support. However, it is still an additional layer and may still add some support complexities, such as not being able to run `strace` easily in containers.

I'M INSTALLING GITLAAAAAAAAAB


Windows Server 2016: Revolutionizing Enterprise Computing

In the ever-evolving landscape of enterprise computing, Windows Server 2016 marked a significant step forward in security, performance, and versatility. Microsoft launched it in September 2016, with general availability following in October. In this in-depth exploration, we look at the capabilities of Windows Server 2016 and their impact on enterprise IT.

Introduction to Windows Server 2016

Windows Server 2016 stands as the cornerstone of Microsoft's server operating systems, offering a comprehensive suite of features and functionalities tailored to meet the diverse needs of modern businesses. From enhanced security measures to advanced virtualization capabilities, Windows Server 2016 is designed to provide organizations with the tools they need to thrive in today's dynamic business environment.

Key Features of Windows Server 2016

Enhanced Security: Security is paramount in Windows Server 2016, with features such as Credential Guard, Device Guard, and Just Enough Administration (JEA) providing robust protection against cyber threats. Shielded Virtual Machines (VMs) further bolster security by encrypting VMs to prevent unauthorized access.

Software-Defined Storage: Windows Server 2016 introduces Storage Spaces Direct, a revolutionary software-defined storage solution that enables organizations to create highly available and scalable storage pools using commodity hardware. With Storage Spaces Direct, businesses can achieve greater flexibility and efficiency in managing their storage infrastructure.

Improved Hyper-V: Hyper-V in Windows Server 2016 undergoes significant enhancements, including support for nested virtualization, Shielded VMs, and rolling upgrades. These features enable organizations to optimize resource utilization, improve scalability, and enhance security in virtualized environments.

Nano Server: Nano Server represents a lightweight and minimalistic installation option in Windows Server 2016, designed for cloud-native and containerized workloads. With reduced footprint and overhead, Nano Server enables organizations to achieve greater agility and efficiency in deploying modern applications.

Container Support: Windows Server 2016 embraces the trend of containerization with native support for Docker and Windows containers. By enabling organizations to build, deploy, and manage containerized applications seamlessly, Windows Server 2016 empowers developers to innovate faster and IT operations teams to achieve greater flexibility and scalability.

Benefits of Windows Server 2016

Windows Server 2016 offers a myriad of benefits that position it as the platform of choice for modern enterprise computing:

Enhanced Security: With advanced security features like Credential Guard and Shielded VMs, Windows Server 2016 helps organizations protect their data and infrastructure from a wide range of cyber threats, ensuring peace of mind and regulatory compliance.

Improved Performance: Windows Server 2016 delivers enhanced performance and scalability, enabling organizations to handle the demands of modern workloads with ease and efficiency.

Flexibility and Agility: With support for Nano Server and containers, Windows Server 2016 provides organizations with unparalleled flexibility and agility in deploying and managing their IT infrastructure, facilitating rapid innovation and adaptation to changing business needs.

Cost Savings: By leveraging features such as Storage Spaces Direct and Hyper-V, organizations can achieve significant cost savings through improved resource utilization, reduced hardware requirements, and streamlined management.

Future-Proofing: Windows Server 2016 is designed to support emerging technologies and trends, ensuring that organizations can stay ahead of the curve and adapt to new challenges and opportunities in the digital landscape.

Conclusion: Embracing the Future with Windows Server 2016

In conclusion, Windows Server 2016 stands as a testament to Microsoft's commitment to innovation and excellence in enterprise computing. With its advanced security, enhanced performance, and unparalleled flexibility, Windows Server 2016 empowers organizations to unlock new levels of efficiency, productivity, and resilience. Whether deployed on-premises, in the cloud, or in hybrid environments, Windows Server 2016 serves as the foundation for digital transformation, enabling organizations to embrace the future with confidence and achieve their full potential in the ever-evolving world of enterprise IT.

Website: https://microsoftlicense.com


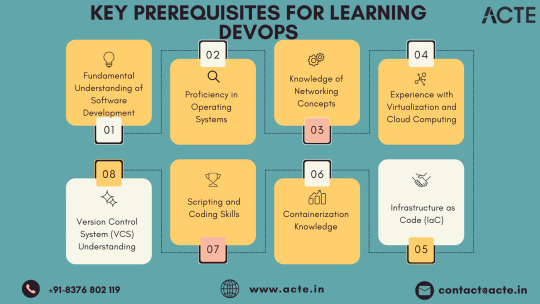

Journey to DevOps

The concept of “DevOps” has been gaining traction in the IT sector for several years. It promotes teamwork and communication between software developers and IT operations groups to improve the speed and reliability of software delivery. The approach has become widely accepted as companies strive to deliver software that meets customer needs and to maintain an edge in the industry. In this article we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

To pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby or PHP is essential. Knowledge of operating systems, databases and networking is also vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.
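To make the idea of an automated delivery pipeline a little more concrete, here is a minimal sketch in Python. It is not how any particular CI/CD tool works internally; the stage names and the `run_pipeline` helper are hypothetical and only illustrate the "run stages in order, stop at the first failure" behaviour that continuous integration and deployment rely on.

```python
from typing import Callable, List, Tuple

# A stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, action in stages:
        if not action():
            # Fail fast: later stages (such as deploy) never run on a broken build.
            break
        completed.append(name)
    return completed


# Hypothetical stages; real ones would call a build tool, a test runner, etc.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),  # simulate a failing test suite
    ("deploy", lambda: True),
]

print(run_pipeline(stages))  # ['build']
```

Because the simulated test stage fails, the deploy stage is never reached, which is the safety property an automated pipeline is meant to give you.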

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is widely used by DevOps teams to manage code repositories. It tracks code changes, makes collaboration among team members easier, and preserves a record of every modification made to the codebase.

Ansible: Ansible is an open-source tool for managing configurations, deploying applications and automating tasks. It simplifies infrastructure management and saves time on repetitive work.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps us monitor the health and performance of our IT infrastructure. It also helps us identify and resolve issues in real time and ensure the high availability and reliability of IT systems.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It helps us automate the process of provisioning and configuring IT resources and ensures consistency between development and production environments.
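As a small illustration of the monitoring side of this toolchain, the sketch below mimics the convention Nagios plugins follow: a check returns a status code (0 for OK, 1 for WARNING, 2 for CRITICAL) together with a one-line message. The function names and the 80/90 percent thresholds are assumptions chosen for the example, not values taken from Nagios itself.

```python
import shutil
from typing import Tuple

# Status codes following the Nagios plugin convention.
OK, WARNING, CRITICAL = 0, 1, 2


def classify_disk_usage(used_percent: float,
                        warn: float = 80.0,
                        crit: float = 90.0) -> Tuple[int, str]:
    """Map a disk usage percentage to a (status code, message) pair."""
    if used_percent >= crit:
        return CRITICAL, f"DISK CRITICAL - {used_percent:.1f}% used"
    if used_percent >= warn:
        return WARNING, f"DISK WARNING - {used_percent:.1f}% used"
    return OK, f"DISK OK - {used_percent:.1f}% used"


def check_path(path: str = "/") -> Tuple[int, str]:
    """Measure real disk usage for a path and classify it."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        # Some virtual filesystems report a zero size; treat them as empty.
        return classify_disk_usage(0.0)
    return classify_disk_usage(100.0 * usage.used / usage.total)


code, message = classify_disk_usage(85.0)
print(code, message)
```

A real plugin would print the message and exit with the status code so that the monitoring server can pick it up; here the result is simply printed.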

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects. You can start by contributing to open-source projects or participating in coding challenges and hackathons. Bootcamps, workshops and online courses can also help you improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences and webinars, and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and find job opportunities.

Conclusion:

By following these steps, you can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.


DevOps Landscape: Building Blocks for a Seamless Transition

Where software development intersects with operations, the role of a DevOps professional has become instrumental. For individuals aspiring to move into this field, understanding the key building blocks can set the stage for a successful transition. While there are no rigid prerequisites, a set of foundational skills and knowledge areas is pivotal for thriving in a DevOps role.

1. Embracing the Essence of Software Development: At the core of DevOps lies collaboration, making it essential for individuals to have a fundamental understanding of software development processes. Proficiency in coding practices, version control, and the collaborative nature of development projects is paramount. Additionally, a solid grasp of programming languages and scripting adds a valuable dimension to one's skill set.

2. Navigating System Administration Fundamentals: DevOps success is intricately linked to a foundational understanding of system administration. This encompasses knowledge of operating systems, networks, and infrastructure components. Such familiarity empowers DevOps professionals to adeptly manage and optimize the underlying infrastructure supporting applications.

3. Mastery of Version Control Systems: Proficiency in version control systems, with Git taking a prominent role, is indispensable. Version control serves as the linchpin for efficient code collaboration, allowing teams to track changes, manage codebases, and seamlessly integrate contributions from multiple developers.

4. Scripting and Automation Proficiency: Automation is a central tenet of DevOps, emphasizing the need for scripting skills in languages like Python, Shell, or Ruby. This skill set enables individuals to automate repetitive tasks, fostering more efficient workflows within the DevOps pipeline.

5. Embracing Containerization Technologies: The widespread adoption of containerization technologies, exemplified by Docker, and orchestration tools like Kubernetes, necessitates a solid understanding. Mastery of these technologies is pivotal for creating consistent and reproducible environments, as well as managing scalable applications.

6. Unveiling CI/CD Practices: Continuous Integration and Continuous Deployment (CI/CD) practices form the beating heart of DevOps. Acquiring knowledge of CI/CD tools such as Jenkins, GitLab CI, or Travis CI is essential. This proficiency ensures the automated execution of code testing, integration, and deployment processes, streamlining development pipelines.

7. Harnessing Infrastructure as Code (IaC): Proficiency in Infrastructure as Code (IaC) tools, including Terraform or Ansible, constitutes a fundamental aspect of DevOps. IaC facilitates the codification of infrastructure, enabling the automated provisioning and management of resources while ensuring consistency across diverse environments.

8. Fostering a Collaborative Mindset: Effective communication and collaboration skills are non-negotiable in the DevOps sphere. The ability to seamlessly collaborate with cross-functional teams, spanning development, operations, and various stakeholders, lays the groundwork for a culture of collaboration essential to DevOps success.

9. Navigating Monitoring and Logging Realms: Proficiency in monitoring tools such as Prometheus and log analysis tools like the ELK stack is indispensable for maintaining application health. Proactive monitoring equips teams to identify issues in real-time and troubleshoot effectively.

10. Embracing a Continuous Learning Journey: DevOps is characterized by its dynamic nature, with new tools and practices continually emerging. A commitment to continuous learning and adaptability to emerging technologies is a fundamental trait for success in the ever-evolving field of DevOps.
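The Infrastructure as Code idea from point 7 can be sketched in a few lines of Python. This is only an illustration of the underlying principle (compare a declared desired state with the current state and derive the actions needed), not how Terraform or Ansible are implemented. The `plan` function and the resource names are hypothetical.

```python
from typing import Dict, List


def plan(desired: Dict[str, dict], current: Dict[str, dict]) -> List[str]:
    """Return the actions needed to move `current` to `desired`."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(f"create {name}")
        elif current[name] != desired[name]:
            actions.append(f"update {name}")
    for name in sorted(current):
        if name not in desired:
            actions.append(f"delete {name}")
    return actions


# Hypothetical resources, declared as plain data.
desired = {"web": {"replicas": 3}, "db": {"size": "small"}}
current = {"web": {"replicas": 2}, "cache": {"size": "tiny"}}

print(plan(desired, current))  # ['create db', 'update web', 'delete cache']
print(plan(desired, desired))  # []
```

The second call shows why this approach gives consistency across environments: once the real state matches the declared one, running the plan again produces no further changes.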

In summary, while the transition to a DevOps role may not have rigid prerequisites, the acquisition of these foundational skills and knowledge areas becomes the bedrock for a successful journey. DevOps transcends being a mere set of practices; it embodies a cultural shift driven by collaboration, automation, and an unwavering commitment to continuous improvement. By embracing these essential building blocks, individuals can navigate their DevOps journey with confidence and competence.


How to Install Docker on Ubuntu: Complete 2025 Guide

Docker is a powerful platform used to build, ship, and run applications inside lightweight containers. Whether you're a developer, system administrator, or tech enthusiast, Docker simplifies application deployment and scalability. In this guide, you'll learn how to install Docker on Ubuntu seamlessly.

That’s it! You’ve successfully learned how to install Docker on Ubuntu. With Docker, you can now create isolated containers, deploy apps faster, and streamline your development pipeline.
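Once the installation has finished, it can be useful to confirm from a script that the `docker` binary is actually on the PATH. The snippet below is a minimal sketch and not part of the original guide: the helper name `docker_version` is hypothetical, and it relies only on the standard `docker --version` command.

```python
import shutil
import subprocess
from typing import Optional


def docker_version(binary: str = "docker") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if it is unavailable.

    `docker_version` is an illustrative helper name, not a Docker API.
    """
    # shutil.which looks the executable up on PATH, as a shell would.
    if shutil.which(binary) is None:
        return None
    result = subprocess.run(
        [binary, "--version"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    version = docker_version()
    print(version if version else "Docker does not appear to be installed")
```

The check only confirms that the client binary runs; it does not verify that the Docker daemon is up.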


Q-AIM: Open Source Infrastructure for Quantum Computing

Q-AIM Quantum Access Infrastructure Management

Open-source Q-AIM for quantum computing infrastructure, management, and access.

Q-AIM (Quantum Access Infrastructure Management) is an open-source, vendor-independent platform that aims to make quantum computing hardware easier to procure and use.

Important Q-AIM aspects discussed in the article:

Design and Execution

Q-AIM's dockerized micro-service design lets it be installed on cloud servers and personal devices in a portable, scalable manner. The design prioritises portability, personalisation, and resource efficiency. Its reduced memory footprint eases scaling and makes Q-AIM suitable for smaller, cheaper server instances, while Dockerization bundles the software so that it behaves consistently across environments.

Technology

Q-AIM uses Docker for containerisation and Kubernetes for orchestration, which provides scalability and resource control. On Google Cloud, Kubernetes can automatically launch, scale, and manage the containerised apps. Lightweight interfaces built with Node.js, Angular, and Nginx let users interact with quantum devices. Version control with Git simplifies code maintenance and collaboration, and a container monitoring tool, cAdvisor, tracks resource usage to help maintain performance.

Benefits and Function

With Q-AIM, research teams can reduce duplicated technical work and operational costs. It streamlines complex interactions and provides a common interface to the hardware infrastructure, regardless of the underlying quantum computing system. By consolidating access and administration, it reduces the operational burden of maintaining and integrating quantum hardware resources, so researchers can focus on scientific discovery.

Priorities for Application and Research

The Variational Quantum Eigensolver (VQE) algorithm is used as a case study to show how Q-AIM simplifies hardware access for complex quantum calculations. VQE is an important algorithm in quantum chemistry and materials research that approximates the ground-state energy of a molecule or material. With Q-AIM, researchers can focus on algorithm development rather than hardware integration.
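To make the idea behind VQE concrete, here is a toy sketch in plain Python. It is not Q-AIM code and does not talk to any quantum hardware: the single-qubit Hamiltonian H = a·Z + b·X, the Ry(θ) ansatz, and the function names are all assumptions chosen for illustration, and the "quantum" expectation value is simply computed from the state amplitudes.

```python
import math
from typing import Tuple


def energy(theta: float, a: float, b: float) -> float:
    """Expectation value of H = a*Z + b*X in the trial state Ry(theta)|0>."""
    # Amplitudes of Ry(theta)|0> in the computational basis.
    c0, c1 = math.cos(theta / 2), math.sin(theta / 2)
    expect_z = c0 * c0 - c1 * c1  # <Z>
    expect_x = 2 * c0 * c1        # <X>
    return a * expect_z + b * expect_x


def gradient(theta: float, a: float, b: float) -> float:
    """Gradient of the energy via the parameter-shift rule (shift of pi/2)."""
    shift = math.pi / 2
    return 0.5 * (energy(theta + shift, a, b) - energy(theta - shift, a, b))


def run_vqe(a: float, b: float, theta: float = 0.1,
            learning_rate: float = 0.2, steps: int = 500) -> Tuple[float, float]:
    """Classical gradient-descent loop that tunes theta to minimise the energy."""
    for _ in range(steps):
        theta -= learning_rate * gradient(theta, a, b)
    return theta, energy(theta, a, b)


if __name__ == "__main__":
    best_theta, best_energy = run_vqe(a=1.0, b=0.5)
    print(f"theta = {best_theta:.4f}, estimated ground-state energy = {best_energy:.6f}")
```

For this Hamiltonian the exact ground-state energy is -sqrt(a² + b²), so with a = 1.0 and b = 0.5 the loop should approach roughly -1.118. On real hardware, `energy` would be estimated from repeated measurements, which is the step a platform like Q-AIM is meant to simplify.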

Other Features

The researchers also implemented parsing for QASM, a human-readable quantum circuit description language. This simplifies manipulating quantum circuits and translating algorithms into hardware-executable instructions. The project recognises that errors are common in quantum computing and invests in scalable error mitigation measures to improve accuracy and reliability. The methodology also factors cloud deployment costs, based on Google Cloud compute instance prices, into its design decisions to maximise cost-effectiveness.

Q-AIM helps research teams and universities procure, run, and scale quantum computing resources, accelerating progress. Future research should improve resource allocation, job scheduling, and framework interoperability with more quantum hardware.

To conclude

Q-AIM (Quantum Access Infrastructure Management) is an open-source software framework for managing and accessing quantum hardware. It uses a dockerized micro-service architecture for scalable and portable deployment, reducing cost and complexity for researchers.

Quantum algorithms like the Variational Quantum Eigensolver (VQE) are highlighted, and the source material also touches on quantum machine learning, the quantum internet, and other topics. According to the study, a unified and adaptable software architecture is needed to make full use of quantum technology.


Build a Future-Ready Tech Career with a DevOps Course in Pune

In today's rapidly evolving software industry, the demand for seamless collaboration between development and operations teams is higher than ever. DevOps, a combination of “Development” and “Operations,” has emerged as a powerful methodology to improve software delivery speed, quality, and reliability. If you’re looking to gain a competitive edge in the tech world, enrolling in a DevOps course in Pune is a smart move.

Why Pune is a Hub for DevOps Learning

Pune, often dubbed the “Oxford of the East,” is not only known for its educational excellence but also for being a thriving IT and startup hub. With major tech companies and global enterprises setting up operations here, the city offers abundant learning and employment opportunities. Choosing a DevOps course in Pune gives students access to industry-oriented training, hands-on project experience, and potential job placements within the local ecosystem.

Moreover, Pune’s cost-effective lifestyle and growing tech infrastructure make it an ideal city for both freshers and professionals aiming to upskill.

What You’ll Learn in a DevOps Course

A comprehensive DevOps course in Pune equips learners with a wide range of skills needed to automate and streamline software development processes. Most courses include:

Linux Fundamentals and Shell Scripting

Version Control Systems like Git & GitHub

CI/CD Pipeline Implementation using Jenkins

Containerization with Docker

Orchestration using Kubernetes

Cloud Services: AWS, Azure, or GCP

Infrastructure as Code (IaC) with Terraform or Ansible

Many training programs also include real-world projects, mock interviews, resume-building workshops, and certification preparation to help learners become job-ready.

Who Should Take This Course?

A DevOps course in Pune is designed for a wide audience—software developers, system administrators, IT operations professionals, and even students who want to step into cloud and automation roles. Basic knowledge of programming and Linux can be helpful, but many beginner-level courses start from the fundamentals and gradually build up to advanced concepts.

Whether you are switching careers or aiming for a promotion, DevOps offers a high-growth path with diverse opportunities.

Career Opportunities After Completion

Once you complete a DevOps course in Pune, a variety of career paths open up in IT and tech-driven industries. Some of the most in-demand roles include:

DevOps Engineer

Site Reliability Engineer (SRE)

Automation Engineer

Build and Release Manager

Cloud DevOps Specialist

These roles are not only in demand but also come with attractive salary packages and global career prospects. Companies in Pune and across India are actively seeking certified DevOps professionals who can contribute to scalable, automated, and efficient development cycles.

Conclusion

Taking a DevOps course in Pune (https://www.apponix.com/devops-certification/DevOps-Training-in-Pune.html) is more than just an educational step; it's a career-transforming investment. With a balanced mix of theory, tools, and practical exposure, you'll be well-equipped to tackle real-world DevOps challenges. Pune's dynamic tech landscape offers a strong launchpad for anyone looking to master DevOps and step confidently into the future of IT.


Unlocking SRE Success: Roles and Responsibilities That Matter

In today’s digitally driven world, ensuring the reliability and performance of applications and systems is more critical than ever. This is where Site Reliability Engineering (SRE) plays a pivotal role. Originally developed by Google, SRE is a modern approach to IT operations that focuses strongly on automation, scalability, and reliability.

But what exactly do SREs do? Let’s explore the key roles and responsibilities of a Site Reliability Engineer and how they drive reliability, performance, and efficiency in modern IT environments.

🔹 What is a Site Reliability Engineer (SRE)?

A Site Reliability Engineer is a professional who applies software engineering principles to system administration and operations tasks. The main goal is to build scalable and highly reliable systems that function smoothly even during high demand or failure scenarios.

🔹 Core SRE Roles

SREs act as a bridge between development and operations teams. Their core responsibilities are usually grouped under these key roles:

1. Reliability Advocate

Ensures high availability and performance of services

Defines and tracks Service Level Indicators (SLIs) and Service Level Objectives (SLOs), which underpin Service Level Agreements (SLAs)

Identifies and removes reliability bottlenecks

2. Automation Engineer

Automates repetitive manual tasks using tools and scripts

Builds CI/CD pipelines for smoother deployments

Reduces human error and increases deployment speed

3. Monitoring & Observability Expert

Sets up real-time monitoring tools like Prometheus, Grafana, and Datadog

Implements logging, tracing, and alerting systems

Proactively detects issues before they impact users

4. Incident Responder

Handles outages and critical incidents

Leads root cause analysis (RCA) and postmortems

Builds incident playbooks for faster recovery

5. Performance Optimizer

Analyzes system performance metrics

Conducts load and stress testing

Optimizes infrastructure for cost and performance

6. Security and Compliance Enforcer

Implements security best practices in infrastructure

Ensures compliance with industry standards (e.g., ISO, GDPR)

Coordinates with security teams for audits and risk management

7. Capacity Planner

Forecasts traffic and resource needs

Plans for scaling infrastructure ahead of demand

Uses tools for autoscaling and load balancing
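The SLOs and SLIs mentioned under the Reliability Advocate role can be made concrete with a little arithmetic. The sketch below is illustrative only: the names `evaluate_slo` and `allowed_downtime_minutes`, and the request counts used in the example, are assumptions and do not come from any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class SloReport:
    sli: float               # measured fraction of good requests
    budget_total: float      # failed requests the SLO tolerates at this traffic
    budget_remaining: float  # failures still allowed (negative if overspent)
    slo_met: bool


def evaluate_slo(good: int, total: int, slo_target: float) -> SloReport:
    """Compare a request-based SLI against an SLO target such as 0.999."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1")
    sli = good / total
    budget_total = (1 - slo_target) * total  # the error budget, in requests
    failed = total - good
    return SloReport(sli, budget_total, budget_total - failed, sli >= slo_target)


def allowed_downtime_minutes(slo_target: float, window_days: int = 30) -> float:
    """Downtime an availability SLO permits over a rolling window."""
    return (1 - slo_target) * window_days * 24 * 60


if __name__ == "__main__":
    report = evaluate_slo(good=999_500, total=1_000_000, slo_target=0.999)
    print(report)
    print(f"{allowed_downtime_minutes(0.999):.1f} minutes of downtime per 30 days")
```

A 99.9% availability target over 30 days leaves roughly 43.2 minutes of allowed downtime. Teams often use the remaining budget to decide whether to keep shipping changes or to pause and focus on reliability work.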

🔹 Day-to-Day Responsibilities of an SRE

Here are some common tasks SREs handle daily:

Deploying code with zero downtime

Troubleshooting production issues

Writing automation scripts to streamline operations

Reviewing infrastructure changes

Managing Kubernetes clusters or cloud services (AWS, GCP, Azure)

Performing system upgrades and patches

Running game days or chaos engineering practices to test resilience

🔹 Tools & Technologies Commonly Used by SREs

Monitoring: Prometheus, Grafana, ELK Stack, Datadog

Automation: Terraform, Ansible, Chef, Puppet

CI/CD: Jenkins, GitLab CI, ArgoCD

Containers & Orchestration: Docker, Kubernetes

Cloud Platforms: AWS, Google Cloud, Microsoft Azure

Incident Management: PagerDuty, Opsgenie, VictorOps

🔹 Why SRE Matters for Modern Businesses

Reduces system downtime and increases user satisfaction

Improves deployment speed without compromising reliability

Enables proactive problem solving through observability

Bridges the gap between developers and operations

Drives cost-effective scaling and infrastructure optimization

🔹 Final Thoughts

Site Reliability Engineering is about more than monitoring systems: it is about building a resilient, scalable, and efficient infrastructure that keeps digital services running smoothly. With a blend of coding, systems knowledge, and problem-solving skills, SREs play a crucial role in modern DevOps and cloud-native environments.



Earlier, we got acquainted with Docker as a containerization tool and covered its basic concepts, such as images and containers. In this article, we will take a closer look at how to create your own Docker image and upload it to Docker Hub for further use.


DevOps Training Institute in Indore – Your Gateway to Continuous Delivery & Automation

Accelerate Your IT Career with DevOps

In today’s fast-paced IT ecosystem, businesses demand faster deployments, automation, and collaborative workflows. DevOps is the solution—and becoming proficient in this powerful methodology can drastically elevate your career. Enroll at Infograins TCS, the leading DevOps Training Institute in Indore, to gain practical skills in integration, deployment, containerization, and continuous monitoring with real-time tools and cloud technologies.

What You’ll Learn in Our DevOps Course

Our specialized DevOps course in Indore blends development and operations practices to equip students with practical expertise in CI/CD pipelines, Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus. The course is crafted by industry experts to ensure learners gain a hands-on understanding of real-world DevOps applications in cloud-based environments.

Key Benefits – Why Our DevOps Training Stands Out

At Infograins TCS, our DevOps training in Indore offers learners several advantages:

In-depth coverage of popular DevOps tools and practices.

Hands-on projects on automation and cloud deployment.

Industry-aligned curriculum updated with the latest trends.

Internship and job assistance for eligible students.

This ensures you not only gain certification but also walk away with project experience that matters in the real world.

Why Choose Us – A Trusted DevOps Training Institute in Indore

Infograins TCS has earned its reputation as a reliable DevOps Training Institute in Indore through consistent quality and commitment to excellence. Here’s what sets us apart:

Professional instructors with real-world DevOps experience.

100% practical learning through case studies and real deployments.

Personalized mentoring and career guidance.

Structured learning paths tailored for both beginners and professionals.

Our focus is on delivering value that goes beyond traditional classroom learning.

Certification Programs at Infograins TCS

We provide industry-recognized certifications that validate your knowledge and practical skills in DevOps. This credential is a powerful tool for standing out in job applications and interviews. After completing the DevOps course in Indore, students receive a certificate that reflects their readiness for technical roles in the IT industry.

After Certification – What Comes Next?

Once certified, students can pursue DevOps-related roles such as DevOps Engineer, Release Manager, Automation Engineer, and Site Reliability Engineer. We also help students land internships and jobs through our strong network of hiring partners, real-time project exposure, and personalized support. Our DevOps training in Indore ensures you’re truly job-ready.

Explore More of Our Courses – Build a Broader Skill Set

Alongside our flagship DevOps course, Infograins TCS also offers:

Cloud Computing with AWS

Python Programming & Automation

Software Testing – Manual & Automation

Full Stack Web Development

Data Science and Machine Learning

These programs complement DevOps skills and open additional career opportunities in tech.

Why We Are the Right Learning Partner

At Infograins TCS, we don’t just train—we mentor, guide, and prepare you for a successful IT journey. Our career-centric approach, live-project integration, and collaborative learning environment make us the ideal destination for DevOps training in Indore. When you partner with us, you’re not just learning tools—you’re building a future.

FAQs – Frequently Asked Questions

1. Who can enroll in the DevOps course? Anyone with a basic understanding of Linux, networking, or software development can join. This course is ideal for freshers, IT professionals, and system administrators.

2. Will I get certified after completing the course? Yes, upon successful completion of the training and evaluation, you’ll receive an industry-recognized certification from Infograins TCS.

3. Is this DevOps course available in both online and offline modes? Yes, we offer both classroom and online training options to suit your schedule and convenience.

4. Do you offer placement assistance? Absolutely! We provide career guidance, interview preparation, and job/internship opportunities through our dedicated placement cell.

5. What tools will I learn in this DevOps course? You will gain hands-on experience with tools such as Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus.

Join the Best DevOps Training Institute in Indore

If you're serious about launching or advancing your career in DevOps, there’s no better place than Infograins TCS – your trusted DevOps Training Institute in Indore. With our project-based learning, expert guidance, and certification support, you're well on your way to becoming a DevOps professional in high demand.


52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (for example, Docker images deployed to Kubernetes).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Cloud providers like AWS, Azure, or GCP often reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

By organizing security incidents by version or layer, organizations improve incident response times and root cause analysis.

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it helps define a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log issues under the 52013l4 build to isolate issues during regression testing.

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” can be read as Layer 4 of the OSI Model, the Transport Layer. Because of this association, 52013l4 is often seen in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.
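The structure described above, a numeric code followed by an “l” and a layer number, can be split apart with a small parser. This is only a sketch of that reading: the pattern, the `parse_identifier` helper, and the `ParsedId` type are assumptions made for illustration, since no formal specification of the identifier exists.

```python
import re
from typing import NamedTuple, Optional

# Names of the seven OSI layers, keyed by layer number.
OSI_LAYERS = {
    1: "Physical",
    2: "Data Link",
    3: "Network",
    4: "Transport",
    5: "Session",
    6: "Presentation",
    7: "Application",
}


class ParsedId(NamedTuple):
    code: str        # leading digits, e.g. a version or build code
    layer: int       # OSI layer number taken from the "l<N>" suffix
    layer_name: str  # human-readable OSI layer name


# Assumed format: one or more digits, then "l" or "L", then a digit from 1 to 7.
_PATTERN = re.compile(r"(\d+)[lL]([1-7])")


def parse_identifier(identifier: str) -> Optional[ParsedId]:
    """Split an identifier such as '52013l4' into its code and OSI layer."""
    match = _PATTERN.fullmatch(identifier.strip())
    if match is None:
        return None
    layer = int(match.group(2))
    return ParsedId(match.group(1), layer, OSI_LAYERS[layer])


if __name__ == "__main__":
    print(parse_identifier("52013l4"))
```

Strings that do not follow the assumed pattern return `None`, so callers can tell them apart from a successfully parsed identifier.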

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. 52013l4 is commonly used in cloud computing, networking hardware, application development environments, and firmware systems. It's particularly relevant in Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or ISO. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Arbitrarily removing references to 52013l4 without context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, having clear identifiers like 52013l4 ensures smooth operation, reliable communication, and maintainable infrastructures. From cloud orchestration to embedded firmware, 52013l4 plays a quiet but critical role in linking performance, security, and development efforts. Understanding its uses and applying it strategically can streamline operations, improve response times, and enhance collaboration across your technical teams.


AWS DevOps Training: A Pathway to Cloud Mastery and Career Growth

As organizations move toward agile methodologies and cloud infrastructure to stay competitive, demand for cloud platform specialists and DevOps professionals has grown. AWS DevOps Training addresses this gap by teaching how to automate processes, improve deployment lifecycles, and run scalable applications on Amazon Web Services (AWS).

What is AWS DevOps Training?

AWS DevOps Training is a program that combines AWS services with DevOps practices. The training equips students with the technical competence to create, deploy, and manage cloud applications in an agile, automated environment. It emphasizes key DevOps best practices such as Continuous Integration and Continuous Deployment (CI/CD), Infrastructure as Code (IaC), configuration management, monitoring, and security. Training also includes popular tools like AWS CodePipeline, AWS CloudFormation, Docker, Jenkins, Git, and Terraform.

Whether you're an IT operations expert, system administrator, or developer, AWS DevOps Training equips you with the understanding and skills to automate cloud infrastructure and achieve repeatable, reproducible deployments.

Why is AWS DevOps Training Important?

Adopting DevOps in cloud environments has changed how organizations deploy and deliver software. As the leading global cloud provider, AWS offers a wide range of services suited to DevOps. AWS DevOps Training teaches you to use these services in order to:

Automate repetitive tasks and reduce deployment failures

Improve the speed and frequency of software releases

Improve system reliability and scalability

Maximize cross-functional collaboration between development and operations teams

Automate security and compliance across all phases of deployment

Companies today increasingly rely on DevOps-based processes, and certified, AWS DevOps-trained professionals are in high demand across the finance, healthcare, e-commerce, and IT industries.

Who Should Take AWS DevOps Training?

AWS DevOps Training is best suited for:

Software developers looking to automate deployment pipelines

System administrators who want to transition from on-premises environments to cloud environments

DevOps engineers interested in enhancing their AWS skills

Cloud architects and consultants managing hybrid or multi-cloud systems

Anyone preparing for the AWS Certified DevOps Engineer – Professional exam

The course often includes hands-on labs, case studies, real-time projects, and certification guidance, making it practical and results-driven.

Key Skills You’ll Gain from AWS DevOps Training

At the end of the training, students are expected to be proficient in skills like:

CI/CD pipeline building and maintenance with AWS tools

Automated infrastructure provisioning with AWS CloudFormation and Terraform

Monitoring cloud deployments with CloudWatch and AWS X-Ray

Application deployment management using ECS, EKS, and Lambda

Secure access implementation using IAM roles and policies

Combining these in-demand skills not only boosts your technical resume but also makes you a valuable asset to any cloud-driven business.

Certification and Career Opportunities

Completing AWS DevOps Training is a valuable step toward achieving the AWS Certified DevOps Engineer – Professional certification. This certification validates your expertise in release automation and application management in AWS. It is industry-recognized and often leads to roles like:

DevOps Engineer

Cloud Engineer

Site Reliability Engineer (SRE)

Infrastructure Automation Specialist

Cloud Solutions Architect

As more companies move their operations to AWS, certified professionals can expect competitive salaries, job security, and career growth opportunities across the globe.

Conclusion

With the fast pace of digital transformation in a globalised economy, DevOps and cloud expertise are no longer optional but a necessity. AWS DevOps Training blends agile techniques with cloud computing, helping professionals build the skills required to thrive in modern IT. Whether you are starting your journey in the cloud or extending your DevOps skills, the training opens new avenues to innovation, leadership, and professional achievement.


Building a Scalable Web Portal for Online Course Enrollment

In the rapidly growing e-learning industry of 2025, a robust and scalable online presence is essential for success. Building a scalable web portal for online course enrollment ensures that educational platforms can handle increasing user traffic, provide seamless access, and support long-term growth. At Global Techno Solutions, we’ve developed scalable web portals to transform online education, as showcased in our case study on Building a Scalable Web Portal for Online Course Enrollment. As of June 10, 2025, at 01:42 PM IST, scalability remains a cornerstone for educational innovation.

The Challenge: Supporting Growth in Online Learning

An emerging online education provider approached us on June 06, 2025, with a challenge: their existing web portal couldn’t handle a surge of 50,000 new users during a recent course launch, resulting in crashes and a 30% drop in enrollment completion rates. With plans to expand their course offerings and global reach, they needed a scalable web portal to ensure reliability, improve user experience, and support future growth without downtime.

The Solution: A Scalable Web Portal Framework

At Global Techno Solutions, we designed a scalable web portal to meet their needs. Here’s how we built it:

Cloud Infrastructure: We leveraged AWS with auto-scaling groups to dynamically adjust server capacity based on traffic, ensuring uninterrupted service during peak times.

Microservices Architecture: We broke the portal into modular services (e.g., user authentication, course management, payment processing) using Docker and Kubernetes for flexibility and scalability.

Optimized Performance: We implemented a Content Delivery Network (CDN) and lazy loading techniques to reduce load times to under 3 seconds, even with high user volumes.

Seamless Enrollment: We integrated a multi-step enrollment process with real-time validation, making it intuitive for users across devices.

Analytics Dashboard: We provided administrators with a dashboard to monitor traffic, course performance, and system health, enabling proactive scaling decisions.
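The scaling decisions described above can be illustrated with the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: scale the replica count by the ratio of the observed metric to its target, then round up. The sketch below is an assumption-laden illustration, not the configuration used in the case study; the function name, the CPU figures, and the replica limits are all hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule: ceil(current * observed / target), clamped."""
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical numbers: 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, current_metric=90.0, target_metric=60.0))
```

With 4 replicas running at 90% CPU against a 60% target, the rule asks for 6 replicas; when load drops to 30%, it asks for 2. The clamp keeps a traffic spike from requesting more capacity than the configured maximum.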

For a detailed look at our approach, explore our case study on Building a Scalable Web Portal for Online Course Enrollment.

The Results: A Robust Educational Platform

The scalable web portal delivered significant benefits for the education provider:

100% Uptime During Peaks: The cloud infrastructure handled a 75,000-user surge without crashes.

40% Increase in Enrollment: Improved performance and UX boosted sign-ups.

25% Faster Load Times: Optimized design enhanced user satisfaction.

Scalability for Growth: The platform is now ready to support up to 200,000 users annually.

These outcomes highlight the power of a scalable web portal. Learn more in our case study on Building a Scalable Web Portal for Online Course Enrollment.

Why a Scalable Web Portal Matters for Online Course Enrollment

In 2025, scalability is critical for online course platforms, offering benefits like:

Reliability: Handles traffic spikes without downtime.

User Experience: Fast and seamless access improves engagement.

Future-Proofing: Supports expansion into new markets and courses.

Cost Efficiency: Scales resources only as needed, reducing overhead.

At Global Techno Solutions, we specialize in building scalable web portals that empower educational institutions.

Looking Ahead: The Future of Scalable E-Learning Platforms

The future of scalable web portals includes AI-driven course recommendations, VR integration for immersive learning, and blockchain for secure credentialing. By staying ahead of these trends, Global Techno Solutions ensures our clients lead the e-learning revolution.

For a comprehensive look at how we’ve enhanced online education, check out our case study on Building a Scalable Web Portal for Online Course Enrollment. Ready to scale your online course platform? Contact Global Techno Solutions today to learn how our expertise can support your vision.
