#Docker Compose support



Nerdctl: Docker-compatible containerd command-line tool


Most people know and use the Docker command-line tool to work with Docker containers. However, let's get familiar with nerdctl, the de facto tool for working with containerd containers and a robust Docker-compatible CLI. Nerdctl works in tandem with containerd, serving as a compatible CLI for containerd and supporting many Docker CLI commands. This makes it a viable option when looking to replace…
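Because nerdctl mirrors Docker's command-line interface, a script can often swap one binary for the other and keep the same arguments. The minimal Python sketch below illustrates that idea. The helper names (`pick_container_cli`, `compose_up_argv`) and the default file name `compose.yaml` are hypothetical. The sketch only builds the command; it does not check that every Docker flag is supported by your installed nerdctl version.

```python
import shutil
from typing import Callable, Optional


def pick_container_cli(which: Callable[[str], Optional[str]] = shutil.which) -> str:
    """Prefer nerdctl when it is on PATH, otherwise fall back to docker."""
    for candidate in ("nerdctl", "docker"):
        if which(candidate):
            return candidate
    raise RuntimeError("neither nerdctl nor docker was found on PATH")


def compose_up_argv(cli: str, compose_file: str = "compose.yaml") -> list[str]:
    """Build `<cli> compose -f <file> up -d`; only the binary name differs."""
    return [cli, "compose", "-f", compose_file, "up", "-d"]
```

In a real script you would pass the result to `subprocess.run(compose_up_argv(pick_container_cli()), check=True)`. The `which` parameter is injectable so the selection logic can be tested without either tool installed.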


#Container Management#Docker Compose support#Docker-compatible CLI#efficient container runtime#encrypted images#installing nerdctl#lazy pulling#nerdctl#replace Docker#rootless mode


reading the gitlab docs and moaning

https://docs.gitlab.com/ee/administration/reference_architectures/

ohh baby

god, fuck yes, just like that

nnnnnn fuck, you do so much testing and validation, girl

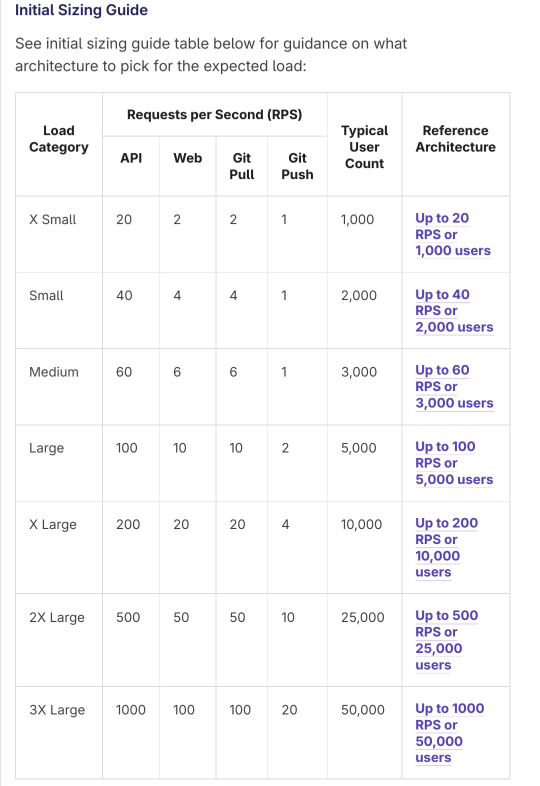

Through testing and real life usage, the Reference Architectures are recommended on the following cloud providers:

[...] The above RPS targets were selected based on real customer data of total environmental loads corresponding to the user count, including CI and other workloads.

casual strace mention because this doc is written for people who know what they're doing. god fuck i'm gonna--

Running components on Docker (including Docker Compose) with the same specs should be fine, as Docker is well known in terms of support. However, it is still an additional layer and may still add some support complexities, such as not being able to run `strace` easily in containers.

I'M INSTALLING GITLAAAAAAAAAB


Introducing Codetoolshub.com: Your One-Stop IT Tools Website

Hello everyone! I'm excited to introduce you to Codetoolshub.com, a comprehensive platform offering a variety of IT tools designed to enhance your productivity and efficiency. Our goal is to provide developers, IT professionals, and tech enthusiasts with easy-to-use online tools that can simplify their tasks. Here are some of the tools we offer:

Base64 File Converter

Basic Auth Generator

ASCII Text Drawer

PDF Signature Checker

Password Strength Analyser

JSON to CSV Converter

Docker Run to Docker Compose Converter

RSA Key Pair Generator

Crontab Generator

QR Code Generator

UUID Generator

XML Formatter

And many more...

We are constantly updating and adding new tools to meet your needs. Visit Codetoolshub.com to explore and start using these powerful and free tools today!

Thank you for your support, and we look forward to helping you with all your IT needs.


Containerization and Test Automation Strategies

Containerization is revolutionizing how software is developed, tested, and deployed. It allows QA teams to build consistent, scalable, and isolated environments for testing across platforms. When paired with test automation, containerization becomes a powerful tool for enhancing speed, accuracy, and reliability. Genqe plays a vital role in this transformation.

What is Containerization?

Containerization is a lightweight virtualization method that packages software code and its dependencies into containers. These containers run consistently across different computing environments. This consistency makes it easier to manage environments during testing. Tools like Genqe automate testing inside containers to maximize efficiency and repeatability in QA pipelines.

Benefits of Containerization

Containerization provides numerous benefits like rapid test setup, consistent environments, and better resource utilization. Containers reduce conflicts between environments, speeding up the QA cycle. Genqe supports container-based automation, enabling testers to deploy faster, scale better, and identify issues in isolated, reproducible testing conditions.

Containerization and Test Automation

Containerization complements test automation by offering isolated, predictable environments. It allows tests to be executed consistently across various platforms and stages. With Genqe, automated test scripts can be executed inside containers, enhancing test coverage, minimizing flakiness, and improving confidence in the release process.

Effective Testing Strategies in Containerized Environments

To test effectively in containers, focus on statelessness, fast test execution, and infrastructure-as-code. Adopt microservice testing patterns and parallel execution. Genqe enables test suites to be orchestrated and monitored across containers, ensuring optimized resource usage and continuous feedback throughout the development cycle.

Implementing a Containerized Test Automation Strategy

Start by containerizing your application and test tools. Integrate your CI/CD pipelines so that they trigger tests inside containers. Use orchestration tools like Docker Compose or Kubernetes. Genqe simplifies this with container-native automation support, ensuring smooth setup, execution, and scaling of test cases in real time.

Best Approaches for Testing Software in Containers

Use service virtualization, parallel testing, and network simulation to reflect production-like environments. Ensure containers are short-lived and stateless. With Genqe, testers can pre-configure environments, manage dependencies, and run comprehensive test suites that validate both functionality and performance under containerized conditions.

Common Challenges and Solutions

Testing in containers presents challenges like data persistence, debugging, and inter-container communication. Solutions include using volume mounts, logging tools, and health checks. Genqe addresses these by offering detailed reporting, real-time monitoring, and support for mocking and service stubs inside containers, easing test maintenance.
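A port-level readiness check is one simple form of the health checks mentioned above: before a test suite starts, wait until the service container accepts TCP connections. This is a minimal Python sketch, not a Genqe feature. The host, port, and timeouts are assumptions. An open port does not prove the application has finished initialising. Docker Compose's own `healthcheck` key, combined with `depends_on` and `condition: service_healthy`, can express a richer check.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout elapses.

    Returns True as soon as the port accepts a connection, False if it never does.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means the service is at least listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: the container is not ready yet.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, a test runner might call `wait_for_port("localhost", 5432, timeout=60)` before connecting to a PostgreSQL container published on port 5432 (a hypothetical setup), and abort the run with a clear message if it returns False.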

Advantages of Genqe in a Containerized World

Genqe enhances containerized testing by providing scalable test execution, seamless integration with Docker/Kubernetes, and cloud-native automation capabilities. It ensures faster feedback, better test reliability, and simplified environment management. Genqe’s platform enables efficient orchestration of parallel and distributed test cases inside containerized infrastructures.

Conclusion

Containerization, when combined with automated testing, empowers modern QA teams to test faster and more reliably. With tools like Genqe, teams can embrace DevOps practices and deliver high-quality software consistently. The future of testing is containerized, scalable, and automated, and Genqe is leading the way.


Musings of an LLM Using Man

I know, the internet doesn’t need more words about AI, but not addressing my own usage here feels like an omission.

A good deal of the DC Tech Events code was written with Amazon Q. A few things led to this:

Being on the job market, I felt like I needed to get a handle on this stuff, to at least have opinions formed by experience and not just stuff I read on the internet.

I managed to get my hands on $50 of AWS credit that could only be spent on Q.

So, I decided that DC Tech Events would be an experiment in working with an LLM coding assistant. I naturally tend to be a bit of an architecture astronaut. You could say Q exacerbated that, or at least didn’t temper that tendency at all. From another angle, it took me to the logical conclusion of my sketchiest ideas faster than I would have otherwise. To abuse the “astronaut” metaphor: Q got me to the moon (and the realization that life on the moon isn’t that pleasant) much sooner than I would have without it.

I had a CDK project deploying a defensible cloud architecture for the site, using S3, CloudFront, Lambda, API Gateway, and DynamoDB. The first “maybe this sucks” moment came when I started tweaking the HTML and CSS: I didn’t have a good way to preview changes locally without a cdk deploy, which could take a couple of minutes.

That led to a container-centric refactor that could run locally using Docker Compose. This is when I decided to share an early screenshot. It worked, but the complexity started making me feel nauseous.

This prompt was my hail mary:

Reimagine this whole project as a static site generator. There is a directory called _groups, with a yaml file describing each group. There is a directory called _single_events for events that don’t come from groups(also yaml). All “suggestions” and the review process will all happen via Github pull requests, so there is no need to provide UI or API’s enabling that. There is no longer a need for API’s or login or databases. Restructure the project to accomplish this as simply as possible.

The aggregator should work in two phases: one fetches ical files, and updates a local copy of the file only if it has updated (and supports conditional HTTP get via etag or last modified date). The other converts downloaded iCals and single event YAML into new YAML files:

upcoming.yaml : the remainder of the current month, and all events for the following month

per-month files (like july.yaml)

The flask app should be reconfigured to pull from these YAML files instead of dynamoDB.

Remove the current GithHub actions. Instead, when a change is made to main, the aggregator should run, freeze.py should run, and the built site should be deployed via github page
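The two-phase aggregator the prompt describes might look something like the sketch below. It is written in Python because the project's Flask app and freeze.py suggest Python. This is not the code Q generated. The function names, the `meta` dictionary holding the validators saved from the previous fetch, and the use of the standard-library urllib are all assumptions for illustration.

```python
import datetime as dt
import urllib.error
import urllib.request
from pathlib import Path


def conditional_headers(meta: dict) -> dict:
    """Build conditional-GET headers from validators saved on the previous fetch."""
    headers = {}
    if meta.get("etag"):
        headers["If-None-Match"] = meta["etag"]
    if meta.get("last_modified"):
        headers["If-Modified-Since"] = meta["last_modified"]
    return headers


def fetch_if_changed(url: str, dest: Path, meta: dict) -> bool:
    """Phase one: download an iCal feed only if the server says it changed.

    `meta` is updated in place with the new ETag / Last-Modified values.
    Returns True if `dest` was rewritten, False on 304 Not Modified.
    """
    request = urllib.request.Request(url, headers=conditional_headers(meta))
    try:
        with urllib.request.urlopen(request, timeout=30) as response:
            body = response.read()
            meta["etag"] = response.headers.get("ETag")
            meta["last_modified"] = response.headers.get("Last-Modified")
    except urllib.error.HTTPError as err:
        if err.code == 304:  # urllib surfaces 304 Not Modified as an HTTPError
            return False
        raise
    dest.write_bytes(body)
    return True


def upcoming_window(today: dt.date) -> tuple[dt.date, dt.date]:
    """Phase two helper: the rest of the current month plus all of the next month."""
    year, month = today.year, today.month + 2
    if month > 12:
        year, month = year + 1, month - 12
    # The day before the first of the month after next is the last day of next month.
    end = dt.date(year, month, 1) - dt.timedelta(days=1)
    return today, end
```

In practice you would persist `meta` per feed, for example as JSON next to the downloaded file. When writing upcoming.yaml, you would then keep only the events whose start date falls within `upcoming_window(dt.date.today())`.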

I don’t recall whether it worked on the first try, and it certainly wasn’t the end of the road (I eventually abandoned the per-month organization, for example), but it did the thing. I was impressed enough to save that prompt because it felt like a noteworthy moment.

I’d liken the whole experience to: banging software into shape by criticizing it. I like criticizing stuff! (I came into blogging during the new media douchebag era, after all). In the future, I think I prefer working this way, over not.

If I personally continue using this (and similar tech), am I contributing to making the world worse? The energy and environmental cost might be overstated, but it isn’t nothing. Is it akin to the other compromises I might make in a day, like driving my gasoline-powered car, grilling over charcoal, or zoning out in the shower? Much worse? Much less? I don’t know yet.

That isn’t the only lens where things look bleak, either: it’s the same tools and infrastructure that make the whiz-bang coding assistants work that let search engines spit out fact-shaped, information-like blurbs that are only correct by coincidence. It’s shitty that with the right prompts, you can replicate an artist’s work, or apply their style to new subject matter, especially if that artist is still alive and working. I wonder if content generated by models trained on other model-generated work will be the grey goo fate of the web.

The title of this post was meant to be an X Files reference, but I wonder if cigarettes are in fact an apt metaphor: bad for you and the people around you, enjoyable (for some), and hard to quit.


Spring AI 1.0 and Google Cloud to Build Intelligent Apps

Spring AI 1.0

After extensive development, Spring AI 1.0 provides a robust and dependable AI engineering solution for the Java ecosystem. It is not just another library; it is meant to position Java and Spring at the forefront of the AI revolution.

Because so many enterprises already run on Spring Boot, Spring AI makes it easier than ever to integrate AI into their existing business logic and data, without the integration issues those enterprises have faced. Spring AI 1.0 lets developers bring cutting-edge AI models into their apps, opening up new possibilities. Get ready to build smart features into your JVM apps!

Spring AI supports several kinds of AI models:

Image models, which generate images from text instructions.

Transcription models, which convert audio to text.

Embedding models, which transform arbitrary data into vectors for semantic similarity search.

Chat models, which can edit documents and write poetry but are easily sidetracked.
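"Semantic similarity search" works like this: once an embedding model has turned texts into vectors, a vector store ranks the stored vectors by how close they are to the query's vector, commonly using cosine similarity. The minimal Python sketch below shows that ranking step with made-up three-dimensional vectors. Real embeddings, such as those from Vertex AI Embeddings, have hundreds of dimensions. This is not Spring AI's VectorStore API, which delegates the search to a backing store such as PGvector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query: list[float], documents: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k document texts whose embeddings are most similar to the query."""
    ranked = sorted(
        documents,
        key=lambda text: cosine_similarity(query, documents[text]),
        reverse=True,
    )
    return ranked[:k]
```

In a RAG flow, the top-ranked texts are what gets added to the prompt as context for the chat model.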

Spring AI 1.0 provides the following features to help chat models overcome their limitations:

System prompts, to set and manage model behaviour.

Chat memory, to capture conversational context across requests.

Tool calling, to let models invoke external functions and services.

Prompt stuffing, to include confidential information in the request.

Retrieval Augmented Generation (RAG), which uses vector stores to retrieve relevant business data and use it to inform the model's response.

Evaluation, which uses a second model to check the accuracy of the output.

The Model Context Protocol (MCP), which links AI apps to other services regardless of programming language, for building agentic workflows that handle complex tasks.

Spring AI integrates seamlessly with Spring Boot and follows the convention-over-configuration approach Spring developers expect, providing familiar abstractions and starter dependencies through the Spring Initializr. This lets Spring Boot developers quickly connect AI models to their existing logic and data.

Using Gemini models through Vertex AI requires a Google Cloud environment. Create or select a project, enable billing and the Vertex AI API in the console, and install the gcloud CLI.

To configure authentication for local development, run `gcloud init`, `gcloud config set project` (with your project ID), and `gcloud auth application-default login`.

To build a Spring AI and Google Cloud application, generate a project on the Spring Initializr with these dependencies: GraalVM Native Support, Spring Web, Spring Boot Actuator, Spring Data JDBC, Vertex AI Gemini, Vertex AI Embeddings, PGvector Vector Database, MCP Client, and Docker Compose Support. The guide recommends the latest Java version, ideally on GraalVM, which can compile the code into a native image instead of a JRE-based app, reducing memory use and speeding up startup.

During configuration, set application properties for the application name, the database connection, the Vertex AI project ID and location for the chat and embedding models (with gemini-2.5-pro-preview-05-06 as the chat model), actuator endpoints, Docker Compose management, and PGvector schema initialisation.

The application uses a PostgreSQL database with the pgvector extension, which stores vector data behind Spring AI's VectorStore abstraction. The database schema and data can be initialised on startup with schema.sql and data.sql files, and Spring Boot's Docker Compose support can start the database container automatically. Spring Data JDBC handles database access and the data access entities.

The ChatClient is a one-stop shop for interacting with chat models. Each ChatClient is backed by an autoconfigured ChatModel, such as Google's Gemini. Developers can use ChatClient.Builder to create several ChatClients with different parameters and defaults. ChatClients can be configured with advisors: PromptChatMemoryAdvisor for chat memory, or QuestionAnswerAdvisor for RAG over VectorStore data.

Spring AI simplifies tool calling: annotate methods with @Tool and their parameters with @ToolParam. The model uses the annotations' descriptions and the methods' structure to decide when a tool is relevant. Tools can also be registered as defaults on a ChatClient.

Spring AI also integrates with the Model Context Protocol (MCP), which lets you move tools into separate services and make them available to LLM applications written in any language.

For production deployments on Google Cloud, Spring AI apps work with databases that support pgvector, such as Cloud SQL for PostgreSQL or AlloyDB. AlloyDB is built for AI applications, offering high performance, high availability (a 99.99% SLA, including maintenance), and scalability.

FAQ

What is Spring AI?

Spring AI is an application framework that simplifies AI application development in the Spring ecosystem. Inspired by LangChain and LlamaIndex, it lets Java developers integrate AI models and APIs easily, without retraining.

Key concepts:

AI integration:

Spring AI integrates AI models with enterprise data and APIs.

Abstraction and Portability:

Its portable APIs work across vector database and AI model providers.

Spring Boot compatibility:

It integrates with Spring Boot and provides observability tools, starters, and autoconfiguration.

Model Support:

It supports text-to-image, embedding, chat completion, and other AI models.

Prompt Templates:

Template engines let Spring AI manage and produce AI model prompts.

Vector databases:

It uses popular vector database providers to store and retrieve embeddings.

Tools and Function Calling:

Models can call functions and tools, for example to access real-time data.

Observability:

It tracks AI activity with observability solutions.

Assessing and Preventing Hallucinations:

Spring AI helps evaluate content and reduce hallucinations.

#SpringAI10#SpringAI#javaSpringAI#SpringBoot#AISpring#VertexAI#SpringAIandGoogleCloud#technology#technews#technologynews#news#govindhtech


Installing and Configuring TYPO3 on Docker Made Simple

Decided on TYPO3? Good call! It’s known for being flexible and scalable. But setting it up? Yeah, it can feel a bit old-fashioned. No stress: Docker’s got your back.

Why Choose Docker for TYPO3?

Docker offers several benefits:

Provides the same environment across all machines.

Makes TYPO3 installation fast and easy.

Keeps TYPO3 and its dependencies separate from your main system to avoid conflicts.

Supports team collaboration with consistent setups.

Simplifies testing and deploying TYPO3 projects.

How TYPO3 Runs in Docker Containers

TYPO3 needs a web server, PHP, and a database to function. Docker runs each of these as separate containers:

Web server container: Usually Apache or NGINX.

PHP container: Runs the TYPO3 PHP code.

Database container: Uses MySQL or MariaDB to store content.

These containers work together to run TYPO3 smoothly.

Getting Started: Installing TYPO3 with Docker

Install Docker on your device (Docker Desktop for Windows/macOS or Docker Engine for Linux).

Prepare a Docker Compose file that defines TYPO3’s web server, PHP, and database containers.

Run `docker compose up` (or `docker-compose up` with the older standalone Compose tool) to launch all containers. Docker will download the necessary images and start your TYPO3 site.

Access your TYPO3 website through your browser, usually at localhost.

Benefits of Using Docker for TYPO3

Fast setup with just a few commands.

Easy to upgrade TYPO3 or PHP by changing container versions.

Portable across different machines and systems.

Keeps TYPO3 isolated from your computer’s main environment.

Who Should Use Docker for TYPO3?

Docker is ideal for developers, teams, and anyone wanting a consistent TYPO3 setup. It’s also helpful for testing TYPO3 or deploying projects in a simple, reproducible way.

Conclusion

Using Docker for TYPO3 simplifies setup and management by packaging everything TYPO3 needs. It saves time, avoids conflicts, and makes development smoother.

If you want more detailed help or specific instructions for your system, just let me know!


Docker and Containerization in Cloud Native Development

In the world of cloud native application development, the demand for speed, agility, and scalability has never been higher. Businesses strive to deliver software faster while maintaining performance, reliability, and security. One of the key technologies enabling this transformation is Docker—a powerful tool that uses containerization to simplify and streamline the development and deployment of applications.

Containers, especially when managed with Docker, have become fundamental to how modern applications are built and operated in cloud environments. They encapsulate everything an application needs to run—code, dependencies, libraries, and configuration—into lightweight, portable units. This approach has revolutionized the software lifecycle from development to production.

What Is Docker and Why Does It Matter?

Docker is an open-source platform that automates the deployment of applications inside software containers. Containers offer a more consistent and efficient way to manage software, allowing developers to build once and run anywhere—without worrying about environmental inconsistencies.

Before Docker, developers often faced the notorious "it works on my machine" issue. With Docker, you can run the same containerized app in development, testing, and production environments without modification. This consistency dramatically reduces bugs and deployment failures.

Benefits of Docker in Cloud Native Development

Docker plays a vital role in cloud native environments by promoting the principles of scalability, automation, and microservices-based architecture. Here’s how it contributes:

1. Portability and Consistency

Since containers include everything needed to run an app, they can move between cloud providers or on-prem systems without changes. Whether you're using AWS, Azure, GCP, or a private cloud, Docker provides a seamless deployment experience.

2. Resource Efficiency

Containers are lightweight and share the host system’s kernel, making them more efficient than virtual machines (VMs). You can run more containers on the same hardware, reducing costs and resource usage.

3. Rapid Deployment and Rollback

Docker enables faster application deployment through pre-configured images and automated CI/CD pipelines. If a new deployment fails, you can quickly roll back by redeploying the image tagged for the previous version.

4. Isolation and Security

Each Docker container runs in isolation, so applications do not interfere with one another. This isolation also improves security by limiting the impact of a compromised container. However, containers share the host's kernel, so a kernel-level vulnerability can still affect every container on that host.

5. Support for Microservices

Microservices architecture is a key component of cloud native application development. Docker supports this approach by enabling the development of loosely coupled services that can scale independently and communicate via APIs.

Docker Compose and Orchestration Tools

Docker alone is powerful, but in larger cloud native environments, you need tools to manage multiple containers and services. Docker Compose allows developers to define and manage multi-container applications using a single YAML file. For production-scale orchestration, Kubernetes takes over, managing deployment, scaling, and health of containers.

Docker integrates well with Kubernetes, providing a robust foundation for deploying and managing microservices-based applications at scale.

Real-World Use Cases of Docker in the Cloud

Many organizations already use Docker to power their digital transformation. For instance:

Netflix uses containerization to manage thousands of microservices that stream content globally.

Spotify runs its music streaming services in containers for consistent performance.

Airbnb speeds up development and testing by running staging environments in isolated containers.

These examples show how Docker not only supports large-scale operations but also enhances agility in cloud-based software development.

Best Practices for Using Docker in Cloud Native Environments

To make the most of Docker in your cloud native journey, consider these best practices:

Use minimal base images (like Alpine) to reduce attack surfaces and improve performance.

Keep containers stateless and use external services for data storage to support scalability.

Implement proper logging and monitoring to ensure container health and diagnose issues.

Use multi-stage builds to keep images clean and optimized for production.

Automate container updates using CI/CD tools for faster iteration and delivery.

These practices help maintain a secure, maintainable, and scalable cloud native architecture.
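One way to keep containers stateless and run the same image unchanged in development, testing, and production is to read environment-specific settings from environment variables at startup instead of baking them into the image. With Compose, these would be set under a service's `environment` key. This is a minimal Python sketch. The variable names `DATABASE_URL` and `LOG_LEVEL`, and the `INFO` default, are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from environment variables supplied when the container starts."""
    return Settings(
        # Required: raises KeyError at startup if the external database is not
        # configured, so a misconfigured container fails fast.
        database_url=env["DATABASE_URL"],
        # Optional, with a default.
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

Passing a plain dictionary instead of `os.environ` makes the function easy to test, and all persistent data lives in the external database rather than inside the container.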

Challenges and Considerations

Despite its many advantages, Docker does come with challenges. Managing networking between containers, securing images, and handling persistent storage can be complex. However, with the right tools and strategies, these issues can be managed effectively.

Cloud providers now offer native services, like Amazon ECS, Azure Container Instances, and Google Cloud Run, that simplify the management of containerized workloads, making Docker even more accessible for development teams.

Conclusion

Docker has become an essential part of cloud native application development by making it easier to build, deploy, and manage modern applications. Its simplicity, consistency, and compatibility with orchestration tools like Kubernetes make it a cornerstone technology for businesses embracing the cloud.

As organizations continue to evolve their software strategies, Docker will remain a key enabler—powering faster releases, better scalability, and more resilient applications in the cloud era.

#CloudNative#Docker#Containers#DevOps#Kubernetes#Microservices#CloudComputing#CloudDevelopment#SoftwareEngineering#ModernApps#CloudZone#CloudArchitecture


Unlocking Career Potential: Why Chandigarh is Emerging as a Top Tech Education Hub

In recent years, Chandigarh has rapidly evolved from being just a well-planned city to becoming a booming educational and technological hub. Known for its cleanliness, quality of life, and educational institutions, the city is now making headlines for its top-tier IT training programs. With a growing number of students and professionals seeking a competitive edge in the tech industry, specialized courses like full stack development and CCNA training are gaining immense popularity.

If you're considering a future in technology, enrolling in a professional course can be a game-changing decision. Whether you're passionate about creating websites and applications or interested in managing complex networking systems, Chandigarh offers robust training programs tailored to meet industry demands. This article explores the reasons behind Chandigarh's rise as an educational powerhouse in the IT domain, focusing on two of the most sought-after career paths — full stack development and networking.

Chandigarh: A Promising Destination for Tech Learners

One of the most significant factors driving the influx of tech learners to Chandigarh is the presence of quality training institutes. These institutes not only provide theoretical knowledge but also focus extensively on hands-on practical experience. The faculty in Chandigarh is often composed of industry professionals who understand what it takes to succeed in the current job market.

Moreover, the city’s low cost of living, safe environment, and well-connected infrastructure make it an ideal place for both local and outstation students. Chandigarh’s proximity to the IT corridors of Mohali and Panchkula also ensures that learners have access to real-world industry exposure and internships.

The Rising Demand for Full Stack Developers

Full stack development has emerged as one of the most lucrative and versatile career options in the IT industry. A full stack developer is a tech professional who can handle both the front-end and back-end development of a web application. This means having the ability to design user-friendly interfaces as well as manage servers, databases, and system integrations.

With the shift toward digital transformation, businesses are increasingly looking for developers who can manage projects from start to finish. This has created an immense demand for skilled full stack developers across industries such as e-commerce, finance, healthcare, and entertainment.

The job market for full stack developers is incredibly promising. According to industry reports, companies are willing to offer premium salaries to professionals who possess a well-rounded skill set. However, to secure a rewarding job in this domain, it's essential to acquire formal training and project experience — both of which are readily available in Chandigarh.

What to Expect from a Full Stack Developer Course

A comprehensive full stack developer course typically includes the following components:

Front-end Technologies: HTML, CSS, JavaScript, and frameworks like React or Angular.

Back-end Technologies: Node.js, Express.js, Python, or PHP.

Databases: MySQL, MongoDB, or PostgreSQL.

Version Control: Git and GitHub.

Deployment Tools: AWS, Docker, and CI/CD pipelines.

These courses are designed to build both foundational and advanced knowledge, enabling students to become job-ready by the time they graduate. Most institutes in Chandigarh also include live projects, internships, and portfolio-building assignments as part of the curriculum.

For those serious about their tech careers, the full stack developer course in Chandigarh offered by CBITSS is highly recommended. This course provides a complete learning experience with industry-aligned modules, expert mentorship, and career support.

CCNA: The Gateway to a Networking Career

While development roles are in high demand, networking remains an equally critical component of the IT ecosystem. The Cisco Certified Network Associate (CCNA) certification is globally recognized and serves as a foundational credential for aspiring network engineers.

A career in networking can lead to roles such as Network Administrator, Systems Engineer, Network Analyst, or IT Support Specialist. With cyber security and cloud computing becoming central to modern enterprises, networking professionals are more important than ever.

The CCNA certification covers a range of topics including:

Network fundamentals

IP connectivity and IP services

Security fundamentals

Automation and programmability

Network access and protocols

This certification serves as proof of your expertise in setting up, managing, and troubleshooting network infrastructure.

If you’re aiming to build a career in networking, enrolling in reputable CCNA training in Chandigarh can make all the difference. CBITSS offers an industry-acclaimed CCNA program that blends theoretical concepts with real-time lab practices to ensure comprehensive learning.

Benefits of Studying in Chandigarh

Here are some reasons why students from across the country prefer Chandigarh for IT training:

1. Affordable Education

Compared to metropolitan cities, Chandigarh offers high-quality training programs at relatively lower costs. This makes it accessible to a larger population of students.

2. Access to Skilled Mentors

Many training centers in the city are led by professionals with years of industry experience. This ensures that the learning process is aligned with current trends and technologies.

3. Excellent Infrastructure

Modern classrooms, well-equipped labs, and reliable internet connectivity make learning in Chandigarh a comfortable and enriching experience.

4. Placement Support

Leading institutes like CBITSS offer dedicated placement assistance, resume-building workshops, and mock interviews to help students land their dream jobs.

Who Should Enroll?

Both the full stack developer course and CCNA training are ideal for:

Students pursuing graduation or post-graduation in IT/Computer Science

Working professionals looking to switch careers or upgrade skills

Entrepreneurs who want to build tech-based startups

Freelancers and tech enthusiasts aiming to become job-ready

The only prerequisites are basic computer knowledge and a strong willingness to learn.

Final Thoughts

The IT landscape is changing rapidly, and staying updated with the right skills is crucial for career growth. Whether you’re interested in coding beautiful websites or managing global networks, the key lies in getting proper training and hands-on experience.

Chandigarh, with its evolving tech ecosystem and high-quality education infrastructure, is proving to be the ideal destination for aspiring tech professionals. With institutes like CBITSS offering cutting-edge courses in both development and networking, students have ample opportunities to learn, grow, and succeed.

So if you're ready to take the next step in your career, consider enrolling in a professional full stack developer course in Chandigarh or pursuing CCNA training in Chandigarh to unlock your full potential in the IT industry.


The Cost of Hiring a Microservices Engineer: What to Expect

As software systems grow more complex, many tech businesses are moving from monolithic applications to microservices-based architectures. The shift brings greater flexibility, scalability, and deployment speed, but it also calls for specialized talent. Knowing what it costs to hire a microservices engineer is essential to making an informed decision.

Understanding the factors that drive these costs can help you plan your budget and attract the right people, whether you're developing a new product or modernizing legacy systems.

Budgeting for Specialized Talent in a Modern Cloud Architecture

Microservices engineers design, develop, and maintain applications composed of small, loosely coupled services. These services are often deployed independently and communicate via APIs. A microservices engineer you hire should have extensive experience with distributed systems, API design, service orchestration, and containerization.

They frequently work with cloud platforms like AWS, Azure, or GCP as well as tools like Docker, Kubernetes, and Spring Boot. They play a crucial part in maintaining the scalability, modularity, and maintainability of your application.

What Influences the Cost?

The following variables affect the cost of hiring a microservices engineer:

1. Level of Experience

Junior engineers may charge less, but they will probably require supervision. Mid-level and senior engineers with hands-on experience in large-scale microservices projects command higher rates because they can independently design and implement reliable solutions.

2. Location

Geography has a major impact on salaries. Hiring in North America or Western Europe, for instance, is usually more expensive than hiring in Southeast Asia, Eastern Europe, or Latin America.

3. Type of Employment

Are you hiring full-time employees, contractors, or freelancers? Freelancers may charge higher hourly rates for short-term work, but the total project cost can still be lower.

4. Specialization and the Tech Stack

Engineers familiar with niche stacks or tools (such as event-driven architecture, Istio, or advanced Kubernetes usage) often charge a premium for that specialized knowledge.

Use a salary benchmarking tool to make sure your offer is competitive. It provides up-to-date market data by role, region, and experience, which helps you set expectations and avoid both overpaying and underbidding.

Hidden Costs to Consider

In addition to the base pay or rate, you need to account for:

Time spent onboarding and training

Time devoted to applicant evaluation and interviews

The price of bad hires (in terms of rework or delays)

Ongoing support and maintenance, especially if you're starting from scratch

These elements highlight how crucial it is to make a thoughtful, knowledgeable hiring choice.
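To see how these hidden costs add up, here is a rough first-year cost model in Python. It is a sketch under stated assumptions. The overhead rate, the hours, the hourly rate of the staff doing interviews and onboarding, and the bad-hire probability are all hypothetical inputs that you would replace with your own benchmark data. Bad-hire risk is modeled simply as probability times cost.

```python
def first_year_hiring_cost(
    salary: float,
    overhead_rate: float,
    evaluation_hours: float,
    onboarding_hours: float,
    team_hourly_rate: float,
    bad_hire_probability: float,
    bad_hire_cost: float,
) -> dict[str, float]:
    """Estimate the first-year cost of one hire. All inputs are hypothetical placeholders."""
    costs = {
        # Base pay plus benefits, taxes, and equipment as a fraction of salary.
        "base": salary * (1 + overhead_rate),
        # Time existing staff spend screening and interviewing applicants.
        "evaluation": evaluation_hours * team_hourly_rate,
        # Time existing staff spend onboarding and training the new engineer.
        "onboarding": onboarding_hours * team_hourly_rate,
        # Expected cost of a bad hire (rework, delays), weighted by its likelihood.
        "bad_hire_risk": bad_hire_probability * bad_hire_cost,
    }
    costs["total"] = sum(costs.values())
    return costs


# Example with made-up numbers (any currency):
estimate = first_year_hiring_cost(
    salary=120_000, overhead_rate=0.25,
    evaluation_hours=40, onboarding_hours=120, team_hourly_rate=80,
    bad_hire_probability=0.1, bad_hire_cost=50_000,
)
print(round(estimate["total"]))  # 167800
```

With these placeholder inputs, the hidden items add about 17,800 on top of the 150,000 base. That gap is exactly what a salary-only comparison misses.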

Complementary Roles to Consider

A microservices engineer doesn't work in isolation. Many tech organizations also hire cloud engineers to oversee deployment pipelines, networking, and infrastructure. When these roles work closely together, production performance and scaling typically improve.

Summing Up

Hiring a microservices engineer is a strategic investment, not merely a cost. An engineer with the right training and resources lays the groundwork for long-term agility and scalability.

Make smart financial decisions by using tools such as a salary benchmarking tool, and consider pairing your hire with cloud or DevOps support. For tech businesses modernizing their apps, the right engineer can improve the speed, stability, and long-term value of their architecture.


Docker Migration Services: A Seamless Shift to Containerization

In today’s fast-paced tech world, businesses are continuously looking for ways to boost performance, scalability, and flexibility. One powerful way to achieve this is through Docker migration. Docker helps you containerize applications, making them easier to deploy, manage, and scale. But moving existing apps to Docker can be challenging without the right expertise.

Let’s explore what Docker migration services are, why they matter, and how they can help transform your infrastructure.

What Is Docker Migration?

Docker migration is the process of moving existing applications from traditional environments (like virtual machines or bare-metal servers) to Docker containers. This involves re-architecting applications to work within containers, ensuring compatibility, and streamlining deployments.
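Part of re-architecting an application for containers is making it stop cleanly. `docker stop` sends the container's main process SIGTERM. If the process has not exited after a grace period (10 seconds by default), Docker follows up with SIGKILL. The Python sketch below shows one way a worker loop can react to SIGTERM. `ShutdownFlag`, `run_worker`, and `process_one_job` are hypothetical names standing in for your application's own logic.

```python
import signal
import time


class ShutdownFlag:
    """Installs a SIGTERM handler and records whether a stop was requested."""

    def __init__(self):
        self.requested = False
        # signal.signal() must be called from the main thread.
        signal.signal(signal.SIGTERM, self._handle)

    def _handle(self, signum, frame):
        # Keep the handler tiny: just set a flag and let the main loop react.
        self.requested = True


def run_worker(process_one_job, flag, idle_sleep=0.5):
    """Hypothetical worker loop that stops cleanly once SIGTERM arrives.

    process_one_job() should return True if it did some work and False if idle.
    Returns the number of jobs processed before shutdown.
    """
    processed = 0
    while not flag.requested:
        if process_one_job():
            processed += 1
        else:
            time.sleep(idle_sleep)  # short sleeps keep shutdown latency low
    # Clean-up goes here: flush buffers, close database connections, and so on.
    return processed
```

This only helps if your process actually receives the signal. With the shell form of `CMD`, the app runs under `/bin/sh -c`, which may not forward SIGTERM. For that reason the exec form (for example `CMD ["python", "app.py"]`) is the usual choice.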

Why Migrate to Docker?

Here’s why businesses are choosing Docker migration services:

1. Improved Efficiency

Docker containers are lightweight and use system resources more efficiently than virtual machines, because they share the host's kernel instead of each running a full guest operating system.

2. Faster Deployment

Containers can be spun up in seconds, helping your team move faster from development to production.

3. Portability

Docker containers run the same way across environments (dev, test, and production), minimizing environment-specific issues.

4. Better Scalability

Easily scale up or down based on demand using container orchestration tools like Kubernetes or Docker Swarm.

5. Cost-Effective

Running more workloads on the same hardware can reduce infrastructure and maintenance costs, making Docker a practical choice for businesses of all sizes.

What Do Docker Migration Services Include?

Professional Docker migration services guide you through every step of the migration journey. Here's what’s typically included:

- Assessment & Planning

Analyzing your current environment to identify what can be containerized and how.

- Application Refactoring

Modifying apps to work efficiently within containers without breaking functionality.

- Containerization

Creating Docker images and defining services using Dockerfiles and docker-compose.

- Testing & Validation

Ensuring that the containerized apps function as expected across environments.

- CI/CD Integration

Setting up pipelines to automate testing, building, and deploying containers.

- Training & Support

Helping your team get up to speed with Docker concepts and tools.
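For the Testing & Validation step, a common first check after starting a migrated container is to wait until its health endpoint responds. Below is a minimal Python sketch using only the standard library. The URL (for example a `/healthz` path) and the timing values are assumptions, since every application exposes its own health route, if it has one at all.

```python
import time
import urllib.request


def wait_until_healthy(url, timeout=30.0, interval=1.0):
    """Poll `url` until it answers HTTP 200 or `timeout` seconds elapse.

    Returns True if the service became healthy, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, refused connections, and timeouts are all
            # OSError subclasses: the container may still be starting up.
            pass
        time.sleep(interval)
    return False
```

A CI job might call `wait_until_healthy("http://localhost:8080/healthz")` (a hypothetical port and path) right after `docker run` and fail the pipeline if it returns False.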

Challenges You Might Face

While Docker migration has many benefits, it also comes with some challenges:

Compatibility issues with legacy applications

Security misconfigurations

Learning curve for teams new to containers

Need for monitoring and orchestration setup

This is why having experienced Docker professionals onboard is critical.

Who Needs Docker Migration Services?

Docker migration is ideal for:

Businesses with legacy applications seeking modernization

Startups looking for scalable and portable solutions

DevOps teams aiming to streamline deployments

Enterprises moving towards a microservices architecture

Final Thoughts

Docker migration isn’t just a trend—it’s a smart move for businesses that want agility, reliability, and speed in their development and deployment processes. With expert Docker migration services, you can transition smoothly, minimize downtime, and unlock the full potential of containerization.


🚀 Container Adoption Boot Camp for Developers: Fast-Track Your Journey into Containerization

In today’s DevOps-driven world, containerization is no longer a buzzword—it’s a fundamental skill for modern developers. Whether you're building microservices, deploying to Kubernetes, or simply looking to streamline your development workflow, containers are at the heart of it all.

That’s why we created the Container Adoption Boot Camp for Developers—a focused, hands-on training program designed to take you from container curious to container confident.

🧠 Why Containers Matter for Developers

Containers bring consistency, speed, and scalability to your development and deployment process. Imagine a world where:

Your app works exactly the same on your machine as it does in staging or production.

You can spin up dev environments in seconds.

You can ship features faster with fewer bugs.

That’s the power of containerization—and our boot camp helps you unlock it.

🎯 What You’ll Learn

Our boot camp is developer-first and practical by design. Here’s a taste of what we cover:

✅ Container Fundamentals

What are containers? Why do they matter?

Images vs containers vs registries

Comparison: Docker vs Podman

✅ Building Your First Container

Creating and optimizing Dockerfiles

Managing multi-stage builds

Environment variables and configuration strategies

✅ Running Containers in Development

Volume mounting, debugging, hot-reloading

Using Compose for multi-container applications

✅ Secure & Efficient Images

Best practices for lightweight and secure containers

Image scanning and vulnerability detection

✅ From Dev to Prod

Building container workflows into your CI/CD pipeline

Tagging strategies, automated builds, and registries

✅ Intro to Kubernetes & OpenShift

How your containers scale in production

Developer experience on OpenShift with odo, kubectl, and oc
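As an illustration of the tagging strategies mentioned above, here is a small Python helper that a CI job could use to compute image tags for a release. The convention it encodes is one common scheme rather than a standard:

- an exact version
- moving MAJOR.MINOR and MAJOR tags
- a short commit SHA
- `latest` only for the default branch

`image_tags` and the registry name are hypothetical.

```python
import re

# Accepts "1.4.2" or "v1.4.2"; pre-release suffixes are deliberately not handled.
SEMVER = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)$")


def image_tags(image, version, git_sha, is_default_branch):
    """Return the full image references a CI job might push for one build."""
    match = SEMVER.match(version)
    if not match:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    major, minor, patch = match.groups()
    tags = [
        f"{major}.{minor}.{patch}",  # exact release, never re-pointed
        f"{major}.{minor}",          # moves with each patch release
        major,                       # moves with each minor or patch release
        f"sha-{git_sha[:7]}",        # traceable back to the exact commit
    ]
    if is_default_branch:
        tags.append("latest")
    return [f"{image}:{tag}" for tag in tags]


print(image_tags("registry.example.com/team/app", "v1.4.2", "3f9c2ab8d1e", True))
```

The moving tags let consumers opt into automatic patch updates. The SHA tag makes it easy to see exactly which commit a running container came from.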

🔧 Hands-On, Lab-Focused Learning

This isn’t just theory. Every module includes real-world labs using tools like:

Podman/Docker

Buildah & Skopeo

GitHub Actions / GitLab CI

OpenShift Developer Sandbox (or your preferred cloud)

You’ll walk away with reusable templates, code samples, and a fully containerized project of your own.

👨💻 Who Should Join?

This boot camp is ideal for:

Developers looking to adopt DevOps practices

Backend engineers exploring microservices

Full-stack developers deploying to cloud platforms

Anyone working in a container-based environment (Kubernetes, OpenShift, EKS, GKE, etc.)

Whether you're new to containers or looking to refine your skills, we’ve got you covered.

🏁 Get Started with HawkStack

At HawkStack Technologies, we bridge the gap between training and real-world implementation. Our Container Adoption Boot Camp is crafted by certified professionals with deep industry experience, ensuring you don’t just learn—you apply.

📅 Next cohort starts soon

📍 Live online + lab access

💬 Mentorship + post-training support

👉 Contact us to reserve your spot or schedule a custom boot camp for your team - www.hawkstack.com

Ready to take the leap into containerization? Let’s build something great—one container at a time. 🧱💻🚢


Postal SMTP install and setup on a virtual server

Postal is a complete mail delivery platform with robust features suited to running a bulk email sending SMTP server. Postal is free and open source. Some of its features are:
- A web UI for managing different aspects of your mail server
- Runs in containers, which allows horizontal scaling up and down
- Email security features such as spam and virus checking
- IP pools that help you maintain a good sending reputation by sending via multiple IPs
- Multitenant support: multiple users, domains, and organizations
- Queue monitoring for outgoing and incoming mail
- Built-in DNS setup and monitoring to ensure mail domains are set up correctly

See the Postal documentation for the full list of features.

Possible cloud providers to use with Postal

You can use Postal with any VPS or Linux server provider of your choice; however, here are some we recommend:
- Vultr Cloud (Get free $300 credit): If your SMTP port is blocked, you can contact Vultr support, and they will open it for you after you provide a form of personal identification.
- DigitalOcean (Get free $200 credit): You will also need to contact DigitalOcean support to have the SMTP port opened for you.
- Hetzner (Get free €20): The SMTP port is open for most accounts. If yours isn't, contact Hetzner support and request that it be unblocked.
- Contabo (Cheapest VPS): Contabo doesn't block SMTP ports. If you are unable to send mail, contact support.
- Interserver

Postal Minimum requirements

- At least 4GB of RAM
- At least 2 CPU cores
- At least 25GB of disk space
- Docker or another container runtime, with the Docker Compose plugin installed
- Outbound port 25 open (many cloud providers block it)
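A blocked outbound port 25 is one of the most common reasons a new Postal server cannot deliver mail, so it is worth testing before installing anything. Below is a minimal Python check that tries to open a TCP connection to a mail server's port 25. It assumes Python 3 is available on the server, as it is on most cloud Debian images; `can_connect` is a hypothetical helper name.

```python
import socket


def can_connect(host, port=25, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out. A provider-level block on
        # outbound port 25 usually shows up as a timeout.
        return False
```

For example, `can_connect("gmail-smtp-in.l.google.com")` targets one of Gmail's public MX hosts. If it returns False and other hosts fail the same way, the port is most likely blocked and you should contact your provider.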

Postal Installation

Postal should be installed on its own server, with nothing else running on it. A fresh server install is recommended.

Broad overview of the installation procedure:
- Install Docker and the other required tools
- Configure Postal and add DNS entries
- Start Postal
- Create your first user
- Log in to the web interface to create virtual mail servers

Step-by-step Postal installation

Step 1: Install Docker and additional system utilities

In this guide, I will use Debian 12. Feel free to follow along with Ubuntu. The OS does not matter, provided you can install Docker or another runtime capable of running container images. Commands for installing Docker on Debian 12 (read the comments to understand what each command does):

    # Uninstall any previously installed conflicting packages. It's fine if none of them are installed.
    for pkg in docker.io docker-doc docker-compose podman-docker containerd runc; do sudo apt-get remove $pkg; done

    # Add Docker's official GPG key:
    sudo apt-get update
    sudo apt-get install ca-certificates curl -y
    sudo install -m 0755 -d /etc/apt/keyrings
    sudo curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc
    sudo chmod a+r /etc/apt/keyrings/docker.asc

    # Add the Docker repository to Apt sources (signed-by tells apt which key verifies this repository):
    echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
    sudo apt-get update

    # Install the Docker packages
    sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

    # Verify that the installation succeeded by running the hello-world image
    sudo docker run hello-world

Add the current user to the docker group so that you don't have to use sudo when you aren't logged in as root:

    # Add your current user to the docker group
    sudo usermod -aG docker $USER

    # Reboot the server so the group change takes effect
    sudo reboot

Finally, test that you can run Docker without sudo:

    # Test that you don't need sudo to run docker
    docker run hello-world

Step 2: Get the Postal installation helper repository

The Postal installation helper contains all the Docker Compose files and the bootstrapping tools needed to generate configuration files. First, install a few additional tools:

    # Install additional system utilities
    sudo apt install git vim htop curl jq -y

Then clone the helper repository and link the postal command into your path:

    sudo git clone https://github.com/postalserver/install /opt/postal/install
    sudo ln -s /opt/postal/install/bin/postal /usr/bin/postal

Step 3: Install the MariaDB database

Here is the sample MariaDB container command from the Postal docs:

    docker run -d --name postal-mariadb -p 127.0.0.1:3306:3306 --restart always -e MARIADB_DATABASE=postal -e MARIADB_ROOT_PASSWORD=postal mariadb

Alternatively, you can use the following tested Compose file, which runs a hardened MariaDB 11.4 container. You can change the version to any image you prefer.

    vi docker-compose.yaml

    services:
      mariadb:
        image: mariadb:11.4
        container_name: postal-mariadb
        restart: unless-stopped
        environment:
          MYSQL_ROOT_PASSWORD: ${DB_ROOT_PASSWORD}
        volumes:
          - mariadb_data:/var/lib/mysql
        network_mode: host # Set to use the host's network mode
        security_opt:
          - no-new-privileges:true
        read_only: true
        tmpfs:
          - /tmp
          - /run/mysqld
        healthcheck:
          # healthcheck.sh ships with the official MariaDB images
          test: ["CMD", "healthcheck.sh", "--connect", "--innodb_initialized"]
          interval: 30s
          timeout: 10s
          retries: 5

    volumes:
      mariadb_data:

Because of network_mode: host, MariaDB listens directly on the host's network interfaces, so make sure port 3306 is not reachable from the internet (for example, with a firewall rule).

You need to create an environment file with the database password. To keep things simple, Postal will use the root user to access the database. An example .env file is below; place it in the same directory as the Compose file.

    DB_ROOT_PASSWORD=ExtremelyStrongPasswordHere

Run docker compose up -d and make sure the database is healthy (docker ps shows "(healthy)" in the STATUS column once the health check passes).

Step 4: Bootstrap the domain for your Postal web interface and database configuration

First, add DNS records for your Postal domain. The most important records at this stage are the A and/or AAAA records. This is the domain where you'll access the Postal UI, and for simplicity it will also act as the SMTP server. If you use Cloudflare, turn off the Cloudflare proxy for this record.

    sudo postal bootstrap postal.yourdomain.com

The command above generates three files in /opt/postal/config:
- postal.yml is the main Postal configuration file
- signing.key is the private key used to sign various things in Postal
- Caddyfile is the configuration for the Caddy web server

Open /opt/postal/config/postal.yml and fill in the database and other settings. Go through the file to see what else you can adjust. At the very least, enter the correct database details for both main_db and message_db.

Step 5: Initialize the Postal database and create an admin user

    postal initialize
    postal make-user

If postal initialize completes without errors, celebrate. This is the step where you are most likely to run into issues, usually database connection failures.

Step 6: Start Postal

    # Run Postal
    postal start

    # Check Postal's status
    postal status

    # If you change the configuration later, restart Postal like so:
    # postal restart

Step 7: Proxy for web traffic

To handle web traffic and TLS termination, you can use any proxy server you like: nginx, Traefik, Caddy, etc. Following the Postal documentation, the command below starts Caddy, and a Compose alternative follows it. Caddy is easy to use and does a lot for you out of the box. Make sure your A records point to your server before running Caddy.

    docker run -d --name postal-caddy --restart always --network host -v /opt/postal/config/Caddyfile:/etc/caddy/Caddyfile -v /opt/postal/caddy-data:/data caddy

Here is a Compose file you can use instead of the docker run command above. Name it something like caddy-compose.yaml:

    services:
      postal-caddy:
        image: caddy
        container_name: postal-caddy
        restart: always
        network_mode: host
        volumes:
          - /opt/postal/config/Caddyfile:/etc/caddy/Caddyfile
          - /opt/postal/caddy-data:/data

Run it with:

    docker compose -f caddy-compose.yaml up -d

Now open your browser and log in using the domain you bootstrapped earlier. Add an organization, create a server, and add a domain. This is all done through the UI and is straightforward. For every domain you add, make sure to add the DNS records Postal provides.
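Several of the steps above depend on DNS pointing at the server. Caddy needs the A/AAAA records in place to obtain a certificate, and Cloudflare's proxy must be off so that the record resolves to your own IP. Below is a small Python sketch to confirm that a hostname resolves to the server's public IP before you start Caddy. `resolves_to` is a hypothetical helper, `postal.yourdomain.com` and `203.0.113.10` are placeholders, and a freshly created record may take a while to reach your resolver.

```python
import socket


def resolves_to(hostname, expected_ip):
    """Return True if `hostname` currently resolves (A or AAAA) to `expected_ip`."""
    try:
        results = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return False  # the name does not resolve (yet)
    # Each result is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    addresses = {sockaddr[0] for *_, sockaddr in results}
    return expected_ip in addresses


print(resolves_to("postal.yourdomain.com", "203.0.113.10"))
```

If this prints False while the record looks right in your DNS panel, check whether a proxy (such as Cloudflare's) is still enabled, or wait for propagation.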

Enable IP Pools

One of the reasons Postal is great for bulk email sending is that it can send emails from multiple IPs in a round-robin fashion.

Prerequisites

Ensure the IPs you want to add to the pool are already assigned to your VPS/server. Every cloud provider documents how to add additional IPs, so follow their guide to add all the IPs to the network. When you run ip a, you should see the IP addresses you intend to use in the pool.

Enabling IP pools in the Postal config

The first step is to enable IP pools in the Postal configuration, then restart Postal. Add the following to the postal.yml file (/opt/postal/config/postal.yml) to enable pools. If a postal: section already exists, just add use_ip_pools: true under it.

    postal:
      use_ip_pools: true

Then restart Postal:

    postal stop && postal start

Next, go to the Postal interface in your browser. A new IP Pools link is now visible at the top right corner of your Postal dashboard. Use it to add a pool, then assign IP addresses to the pool. A pool could be something like marketing, transactions, billing, or general.

Once the pools are created and IPs assigned to them, you can attach a pool to an organization, which can then use those IP addresses to send emails. Open an organization and assign a pool to it: Organizations → choose IPs → choose pools. You can then assign the IP pool to servers from the server's Settings page. You can also use the IP pool to configure IP rules for the organization or server.

If you get lost at any point, consult the Postal documentation.
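To make the round-robin idea concrete, here is a conceptual Python sketch of rotating through a pool's addresses. It is not Postal's implementation, since Postal handles IP selection internally once pools are configured. `IPPool` is a hypothetical class, and the addresses come from the 203.0.113.0/24 documentation range.

```python
from itertools import cycle


class IPPool:
    """Hypothetical pool that hands out sending IPs in round-robin order."""

    def __init__(self, name, addresses):
        if not addresses:
            raise ValueError("an IP pool needs at least one address")
        self.name = name
        self.addresses = list(addresses)
        self._rotation = cycle(self.addresses)

    def next_ip(self):
        """Return the next address, wrapping around after the last one."""
        return next(self._rotation)


marketing = IPPool("marketing", ["203.0.113.10", "203.0.113.11", "203.0.113.12"])
print([marketing.next_ip() for _ in range(5)])
# ['203.0.113.10', '203.0.113.11', '203.0.113.12', '203.0.113.10', '203.0.113.11']
```

To actually send from a chosen address, an SMTP client binds its outgoing socket to that address. In Python's standard library, for example, `smtplib.SMTP` accepts a `source_address=(ip, 0)` argument. This is also why every pool IP must already be configured on the server.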


Using Docker in Software Development

Docker has become a vital tool in modern software development. It allows developers to package applications with all their dependencies into lightweight, portable containers. Whether you're building web applications, APIs, or microservices, Docker can simplify development, testing, and deployment.

What is Docker?

Docker is an open-source platform that enables you to build, ship, and run applications inside containers. Containers are isolated environments that contain everything your app needs—code, libraries, configuration files, and more—ensuring consistent behavior across development and production.

Why Use Docker?

Consistency: Run your app the same way in every environment.

Isolation: Avoid dependency conflicts between projects.

Portability: Docker containers work on any system that supports Docker.

Scalability: Easily scale containerized apps using orchestration tools like Kubernetes.

Faster Development: Spin up and tear down environments quickly.

Basic Docker Concepts

Image: A read-only template that containers are created from. Think of it as a blueprint.

Container: A running instance of an image.

Dockerfile: A text file with instructions to build an image.

Volume: Persistent storage, managed by Docker, whose data outlives individual containers.

Docker Hub: A cloud-based registry for storing and sharing Docker images.

Example: Dockerizing a Simple Python App

Let’s say you have a Python app called app.py:

    # app.py
    print("Hello from Docker!")

Create a Dockerfile next to it (WORKDIR sets the directory the app is copied into and run from):

    # Dockerfile
    FROM python:3.10-slim
    WORKDIR /app
    COPY app.py .
    CMD ["python", "app.py"]

Then build and run your Docker container:

    docker build -t hello-docker .
    docker run hello-docker

This will print Hello from Docker! in your terminal.

Popular Use Cases

Running databases (MySQL, PostgreSQL, MongoDB)

Hosting development environments

CI/CD pipelines

Deploying microservices

Local testing for APIs and apps

Essential Docker Commands

docker build -t <name> . — Build an image from a Dockerfile

docker run <image> — Run a container from an image

docker ps — List running containers

docker stop <container_id> — Stop a running container

docker exec -it <container_id> bash — Access the container shell

Docker Compose

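Docker Compose allows you to run multi-container apps easily. Define all your services in a single docker-compose.yml file and launch them with one command:

    version: '3'
    services:
      web:
        build: .
        ports:
          - "5000:5000"
      db:
        image: postgres
        environment:
          # The postgres image will not start without a password; replace this placeholder.
          POSTGRES_PASSWORD: example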

Start everything with:

    docker-compose up

With the newer Compose plugin, the equivalent command is docker compose up.
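Inside a Compose project, services share a network and can reach each other by service name. In the example above, that means the web container can connect to the database at hostname db. Below is a minimal sketch of how a Python web service might build its connection URL from environment variables. The variable names (DB_HOST, DB_PASSWORD, and so on) are hypothetical. The defaults (user postgres, database postgres, port 5432) match the official postgres image's defaults.

```python
import os
from urllib.parse import quote


def database_url(env=None):
    """Build a PostgreSQL connection URL from (hypothetical) DB_* environment variables.

    Defaults assume a Compose setup where the database service is named "db",
    which other services on the same Compose network can use as a hostname.
    """
    env = os.environ if env is None else env
    host = env.get("DB_HOST", "db")
    port = env.get("DB_PORT", "5432")
    user = env.get("DB_USER", "postgres")   # postgres image's default superuser
    password = env.get("DB_PASSWORD", "")
    name = env.get("DB_NAME", "postgres")   # postgres image's default database
    # Percent-encode credentials so characters like "@" or "/" don't break the URL.
    return f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}/{name}"


print(database_url({"DB_PASSWORD": "example"}))
```

Keeping connection details in environment variables lets the same image run locally, under Compose, and in production. Only the environment changes between them: the web service would receive the same password you configure for the db service.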

Best Practices

Use lightweight base images (e.g., Alpine)

Keep your Dockerfiles clean and minimal

Ignore unnecessary files with .dockerignore

Use multi-stage builds for smaller images

Regularly clean up unused images and containers

Conclusion

Docker empowers developers to work smarter, not harder. It eliminates "it works on my machine" problems and simplifies the development lifecycle. Once you start using Docker, you'll wonder how you ever lived without it!


10 Essential Tools for Aspiring Software Developers

In the ever-evolving world of software development, having the right tools at your disposal can make a huge difference. Whether you’re a beginner stepping into the coding world or an aspiring developer sharpening your skills at Srishti Campus, understanding the tools that simplify workflows and boost productivity is crucial.

Here’s a list of 10 essential tools every software developer should explore to enhance their learning and professional growth.

1. Visual Studio Code (VS Code)

Why It’s Essential: VS Code is one of the most popular code editors, loved for its simplicity, flexibility, and a vast array of extensions. It supports multiple programming languages like Python, JavaScript, and C++, making it perfect for beginners and experts alike.

Top Features:

Intelligent code completion.

Built-in Git support.

Extensions for debugging and language tools.

Pro Tip: Use extensions like Prettier for formatting and Live Server for real-time web development.

2. Git and GitHub

Why It’s Essential: Version control is the backbone of modern software development. Git helps track code changes, while GitHub allows collaboration and sharing with other developers.

Top Features:

Manage project versions seamlessly.

Collaborate with teams using pull requests.

Host and showcase projects to potential employers.

Pro Tip: Beginners can start with the GitHub Desktop app for an easy graphical interface.

3. Postman

Why It’s Essential: Postman is a must-have for testing APIs. With its user-friendly interface, it helps developers simulate requests and check responses during backend development.

Top Features:

Test RESTful APIs without writing code.

Automate API tests for efficiency.

Monitor APIs for performance.

Pro Tip: Use Postman collections to organize and share API tests.

4. Docker

Why It’s Essential: For developers building scalable applications, Docker simplifies the process of deploying code in isolated environments. This ensures your application works consistently across different systems.

Top Features:

Create lightweight, portable containers.

Simplify development-to-production workflows.

Enhance team collaboration with containerized apps.

Pro Tip: Use Docker Compose for managing multi-container applications.

5. Slack

Why It’s Essential: Communication is key in software development teams, and Slack has emerged as the go-to tool for efficient collaboration. It’s particularly useful for remote teams.

Top Features:

Channels for organizing team discussions.

Integration with tools like GitHub and Trello.

Real-time messaging and file sharing.

Pro Tip: Customize notifications to stay focused while coding.

6. IntelliJ IDEA

Why It’s Essential: For Java developers, IntelliJ IDEA is the ultimate Integrated Development Environment (IDE). It provides advanced features that simplify coding and debugging.

Top Features:

Code analysis and refactoring tools.

Support for frameworks like Spring and Hibernate.

Integrated version control.

Pro Tip: If you're starting out with Java, the free Community Edition is a good place to begin.

7. Figma

Why It’s Essential: Understanding UI/UX design is crucial for front-end developers, and Figma offers a collaborative platform for designing and prototyping interfaces.

Top Features:

Cloud-based collaboration for teams.

Interactive prototypes for testing.

Easy integration with development workflows.

Pro Tip: Use Figma plugins for assets like icons and mockup templates.
