#replace Docker


Text

Nerdctl: Docker compatible containerd command line tool


Most people know and use the Docker command line tool for working with Docker containers. However, let's get familiar with the de facto tool for working with containerd containers: nerdctl, a robust Docker-compatible CLI. Nerdctl works in tandem with containerd, serving as a compatible CLI for containerd and supporting many Docker CLI commands. This makes it a viable option when looking to replace…


#Container Management#Docker Compose support#Docker-compatible CLI#efficient container runtime#encrypted images#installing nerdctl#lazy pulling#nerdctl#replace Docker#rootless mode

0 notes

Text

i dusted off my old instapaper account and imported my pocket backlog, and they immediately offered me a three month trial of the premium plan

so that's nice

#original#it used to be my go-to because i could send stuff to kindle which for a while was vital#i don't know if i'll keep premium but it's a pretty serviceable pocket replacement#i already used up my readwise reader trial but it's too fucking expensive and also overkill#i managed to get shiori installed on the NAS but then couldn't figure out how to get my pocket stuff in there so i might uninstall it#i'm so fucking bad at doing docker stuff on the NAS it's unreal#every couple months i check in on pinepods and see if anyone's written a guide for dummies yet lol

49 notes


Text

By the end of the 1950s Rory Storm and The Hurricanes were Liverpool’s top band. The great peculiarity of Storm’s character was that he spoke with a severe stammer, which always disappeared when he was performing. A flamboyant Teddy Boy, he had a vast blond quiff that he would ostentatiously comb on stage: in old black-and-white photographs he seems to be built entirely of silver. He was an athletic man, a natural extrovert, given to daredevil stage leaps (he once broke a leg jumping from a balcony for a photograph). He’d wave the mike stand around and, strangely, pour lemonade over himself.

I’m told he was once apprehended by a railway porter on Bootle station for writing ‘I Love Rory’ on a wall. The other groups worshipped him; George Harrison longed to join the Hurricanes but was told he was too young.

Rory found a local drummer, another refugee from skiffle, called Richard Starkey. He lured the boy with promises of a summer season at Butlins holiday camp in Pwllheli, where each week brought a fresh coach-load of excitable girls. In line with the Hurricanes’ big-thinking policies the drummer was allocated a new name, Ringo Starr. (This era would be lovingly re-created in a 1973 film featuring Ringo with Billy Fury and David Essex, That’ll Be the Day.) Starr’s reputation grew so fast in his time with Rory, that he was eventually poached by the Beatles to replace Pete Best. <…> Gerry Marsden, like Ringo, came from the docklands of Dingle. He got a job on the railways, he played skiffle and then got himself a beat group, the Pacemakers, who served their time in Hamburg. They were game entertainers, these boys, who could play anything in that week’s Hit Parade if it’s what the crowd wanted. Having a piano-player made them a bit different, too, and offered them some range. Gerry had a funny way of holding his guitar high on his chest: it was so he could see his fingers.

Brian Epstein watched them in action at the Cavern, and saw in Gerry something of the same star potential he perceived in the Beatles and Cilla Black. He brought George Martin to see them play in Birkenhead, and they were duly signed to an EMI label, Columbia.

Once in the studio they were more compliant than Lennon and McCartney, and readily agreed to cover the song, ‘How Do You Do It?’, that the Beatles had rejected. Good thing, too. It got them to Number 1 straight out of the traps, and a month before the Beatles at that.

Paul McCartney would recall much later: ‘The first really serious threat to us that we felt was Gerry and the Pacemakers. When it came time for Mersey Beat to have a poll as to who was the most popular group we certainly bought and filled in a lot of forms, with very funny names . . . I’m not saying it was a fix, but it was a high-selling issue, that.’ <…> …Cilla’s first job in show business was to make the tea in the Cavern office. She was in fact the quintessential Scouser: a docker’s daughter from the Catholic enclave Scotland Road. In the family parlour stood a piano from the Epsteins’ North End Music Stores, just up the road.

When the 60s started she was a typist in town who spent her lunch hours watching the beat groups at the Cavern, where she picked up some spare-time work. Soon she was to be found on stage as well, performing guest spots with Kingsize Taylor and the Dominoes, the Big Three and Rory Storm and the Hurricanes. (Sometimes she would duet with Ringo on his big number ‘Boys’, but turned down a chance to go with the band to Hamburg.)

One night at the Iron Door her girlfriends urged the Beatles to give Cilia a go. ‘OK Cyril,’ John Lennon said. ‘Just to shut your mates up.’ She sang Sam Cooke’s version of ‘Summertime’, and her career was on its way. It was Lennon who recommended ‘Cyril’ to Epstein. ‘I fancied Brian like mad,’ she recalled. ‘He was gorgeous. He had the Cary Grant kind of charisma, incredibly charming and shy. He always wore a navy-blue cashmere overcoat and a navy spotted cravat. I know now it was a Hermes but then I still knew it was expensive.’ She failed an early audition for him, backed by the Beatles, but he saw her again at the Blue Angel and changed his mind.

(Liverpool - Wondrous Place by Paul Du Noyer, 2002)

Part (I), (II), (III), (IV), (V), (VI), (VII), (VIII), (IX), (X), (XI), (XII), (XIII), (XIV), (XV), (XVI), (XVII), (XVIII), (XIX), (XX), (XXI), (XXII)

#'George Harrison longed to join the Hurricanes but was told he was too young' - haha poor george#'Gerry had a funny way of holding his guitar high on his chest: it was so he could see his fingers' - so that was gerry's style#'It [‘How Do You Do It?’] got them to Number 1 straight out of the traps and a month before the Beatles at that'#I guess our lads thought a lot during that month#paul du noyer#the beatles#liverpool#rory storm and the hurricanes#george harrison#john lennon#ringo starr#brian epstein#gerry marsden#gerry and the pacemakers#cilla black

34 notes


Text

Pinned Post*

Layover Linux is my hobby project, where I'm building a packaging ecosystem from first principles and making a distro out of it. It's going slow thanks to a full time job (and... other reasons), but the design is solid, so I suppose if I plug away at this long enough, I'll eventually have something I can dogfood in my own life, for everything from gaming to web services to low-power "Linux but no package manager" devices.

Layover is the package manager at the heart of Layover Linux. It's designed around hermetic builds, atomically replacing the running system, cross compilation, and swearing at the Rust compiler. It's happy to run entirely in single-user mode on other distros, and is based around configuration, not mutation.
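The post doesn't say how Layover performs its atomic switchover, so treat this only as an illustration of the general idea. One common technique, in the spirit of Nix-style profile generations, is to build each system generation in its own directory that is never mutated, then flip a single `current` symlink with an atomic rename. A minimal Python sketch, assuming a POSIX filesystem; the directory layout and function names are hypothetical:

```python
import os
import tempfile
from pathlib import Path
from typing import Dict


def install_generation(root: Path, number: int, files: Dict[str, str]) -> Path:
    """Write a complete generation directory. It is never modified afterwards."""
    gen = root / "generations" / str(number)
    gen.mkdir(parents=True, exist_ok=False)
    for name, content in files.items():
        (gen / name).write_text(content)
    return gen


def activate(root: Path, gen: Path) -> None:
    """Atomically point root/current at gen.

    A fresh symlink is created under a temporary name and renamed over the old
    one. On POSIX filesystems rename() replaces the destination atomically, so
    anything reading root/current sees either the old generation or the new one.
    """
    tmp = root / f".current.{os.getpid()}.tmp"
    if tmp.is_symlink():
        tmp.unlink()  # leftover from an interrupted switch
    tmp.symlink_to(gen, target_is_directory=True)
    os.replace(tmp, root / "current")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        gen1 = install_generation(root, 1, {"motd": "hello from generation 1\n"})
        activate(root, gen1)
        gen2 = install_generation(root, 2, {"motd": "hello from generation 2\n"})
        activate(root, gen2)
        print((root / "current" / "motd").read_text(), end="")
        activate(root, gen1)  # rollback = re-activate an older generation
        print((root / "current" / "motd").read_text(), end="")
```

Because nothing inside a generation is edited in place, rolling back is the same operation as upgrading: point `current` at a different directory.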

We all deserve reproducible builds. We all deserve configuration-based operating systems. We all deserve the simple safety of atomic updates. And gosh darn it, we deserve those things to be easy. I'm making the OS that I want to use, and I hope you'll want to use it too.

*if you want to get pinned and live in the PNW, I'm accepting DMs but have limited availability. Thanks 😊💜

Thanks to @docker-official for persuading me to pull the trigger on making an official blog. Much love to @jennihurtz, the supreme and shining jewel in my life and the heart beating in twin with mine. I also owe @k-simplex thanks for her support, which has carried me a few times in the last couple years.

44 notes


Text

Self Hosting

I haven't posted here in quite a while, but the last year+ for me has been a journey of learning a lot of new things. This is a kind of 'state-of-things' post about what I've been up to for the last year.

I put together a small home lab with 3 HP EliteDesk SFF PCs, an old gaming desktop running an i7-6700K, and my new gaming desktop running an i7-11700K and an RTX 3080 Ti.

"Using your gaming desktop as a server?" Yep, sure am! It's running Unraid with ~7TB of storage, and I'm passing the GPU through to a Windows VM for gaming. I use Sunshine/Moonlight to stream from the VM to my laptop in order to play games, though I've definitely been playing games a lot less...

On to the good stuff: I have 3 Proxmox nodes in a cluster, running the majority of my services. Jellyfin, Audiobookshelf, Calibre Web Automated, etc. are all running on Unraid to have direct access to the media library on the array. All told, there are 23 Docker containers running on Unraid, most of which are media management and streaming services. Across my lab, I have a whopping 57 containers running. Some of them are for things like monitoring, which I wouldn't really count, but hey, I'm not going to bother making the effort to count properly.

The Proxmox nodes each have a VM for docker which I'm managing with Portainer, though that may change at some point as Komodo has caught my eye as a potential replacement.

All the VMs and LXC containers on Proxmox get backed up daily and stored on the array, and physical hosts are backed up with Kopia and also stored on the array. I haven't quite figured out backups for the main storage array yet (redundancy != backups), because cloud solutions are kind of expensive.

You might be wondering what I'm doing with all this, and the answer is not a whole lot. I make some things available for my private Discord server to take advantage of, the main thing being game servers for Minecraft, Valheim, and a few others. For all that stuff I have to try and do things mostly the right way, so I have users managed in Authentik and all my other stuff connects to that. I've also written some small things here and there to automate tasks around the lab, like SSL certs, which I might make a separate post on, and a custom dashboard to view and start the various game servers I host. Otherwise it's really just a few things here and there to make my life a bit nicer, like RSSHub to collect all my favorite art accounts in one place (fuck you Instagram, piece of shit).

It's hard to go into detail on a whim like this so I may break it down better in the future, but assuming I keep posting here everything will probably be related to my lab. As it's grown it's definitely forced me to be more organized, and I promise I'm thinking about considering maybe working on documentation for everything. Bookstack is nice for that, I'm just lazy. One day I might even make a network map...

5 notes


Text

Nothing encapsulates my misgivings with Docker as much as this recent story. I wanted to deploy a PyGame-CE game as a static executable, and that means compiling CPython and PyGame statically, and then linking the two together. To compile PyGame statically, I need to statically link it to SDL2, but because of a special SDL2 feature, the SDL2 code can still be replaced with a different version at runtime.

I tried, and failed, to do this. I could compile a certain version of CPython, but some of the dependencies of the latest CPython gave me trouble. I could compile PyGame with a simple makefile, but it was more difficult with meson.

Instead of doing this by hand, I started to write a Dockerfile. It's just too easy to get this wrong otherwise, or at least not get it right in a reproducible fashion. Although everything I was doing was just statically compiled, and it should all have worked with a shell script, it didn't work with a shell script in practice, because cmake, meson, and autotools all leak bits and pieces of my host system into the final product. Some things, like libGL, should never be linked into or distributed with my executable.
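One leak channel alluded to here is the environment: build systems read variables like `CFLAGS` or `PKG_CONFIG_PATH` from the host. A minimal Python sketch of running a build with an explicit, minimal environment instead of the inherited one; the variable list and the `/opt/static-deps` prefix are illustrative assumptions. This closes only one channel: cmake, meson and autotools still probe default locations such as `/usr/lib` on their own, which is part of why a container was attractive here.

```python
import os
from typing import Dict, List, Optional

# Host variables that commonly carry paths or flags into a build.
# Illustrative only; not an exhaustive list.
LEAKY_VARS = (
    "CFLAGS", "CXXFLAGS", "CPPFLAGS", "LDFLAGS",
    "LD_LIBRARY_PATH", "LIBRARY_PATH", "C_INCLUDE_PATH", "CPLUS_INCLUDE_PATH",
    "CMAKE_PREFIX_PATH", "PKG_CONFIG_PATH",
)


def minimal_build_env(prefix: str, extra: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return an explicit environment instead of inheriting the caller's.

    `prefix` is a (hypothetical) directory holding the statically built
    dependencies; tools and pkg-config files are looked up there first.
    """
    env = {
        "PATH": f"{prefix}/bin:/usr/bin:/bin",
        "HOME": os.environ.get("HOME", "/tmp"),
        "LC_ALL": "C",
        # PKG_CONFIG_LIBDIR replaces pkg-config's default search path, so .pc
        # files from the host's /usr/lib are not found by accident.
        "PKG_CONFIG_LIBDIR": f"{prefix}/lib/pkgconfig",
        # Reproducible-builds convention for tools that embed timestamps.
        "SOURCE_DATE_EPOCH": "0",
    }
    if extra:
        env.update(extra)
    return env


def leaked(env: Dict[str, str]) -> List[str]:
    """Names from LEAKY_VARS that are present in env."""
    return sorted(name for name in LEAKY_VARS if name in env)


# Usage (not run here):
#   import subprocess
#   subprocess.run(["meson", "setup", "build"],
#                  env=minimal_build_env("/opt/static-deps"), check=True)
```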

I also thought that, if I was already working with static compilation, I could just link PyGame-CE against cosmopolitan libc, and have the SDL2 pieces replaced with a dynamically linked libSDL2 for the target platform.
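The runtime replacement of SDL2 mentioned above is, as far as I know, SDL2's dynamic API mechanism: when SDL2 is built with it (the default on the major desktop platforms), even a statically linked copy checks the `SDL_DYNAMIC_API` environment variable and, if it names a shared libSDL2, forwards every SDL call to that library. A minimal Python launcher sketch; the game path and library path are hypothetical, and whether a cosmopolitan-libc binary can load a platform libSDL2 this way is a separate question this doesn't answer:

```python
import os
import subprocess
from typing import Dict, Optional


def sdl_override_env(libsdl_path: Optional[str],
                     base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy of the environment with SDL_DYNAMIC_API set (or cleared).

    When SDL2 initializes its dynamic API, it checks SDL_DYNAMIC_API; if the
    variable names a loadable libSDL2, calls are forwarded to that library
    instead of the copy linked into the executable.
    """
    env = dict(os.environ if base is None else base)
    if libsdl_path:
        env["SDL_DYNAMIC_API"] = libsdl_path
    else:
        env.pop("SDL_DYNAMIC_API", None)  # use the statically linked SDL2
    return env


def launch(game: str, libsdl_path: Optional[str] = None) -> int:
    """Run the (hypothetical) game executable and return its exit code."""
    return subprocess.run([game], env=sdl_override_env(libsdl_path)).returncode


# Usage (paths are examples and differ per platform/distro):
#   launch("./game", "/usr/lib/x86_64-linux-gnu/libSDL2-2.0.so.0")
```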

I ran into some trouble. I asked for help online.

The first answer I got was "You should just use PyInstaller for deployment"

The second answer was "You should use Docker for application deployment. Just start with

FROM python:3.11

and go from there"

The others agreed. I couldn't get through to them.

It's the perfect example of Docker users seeing Docker as the solution for everything, even when I was already using Docker (actually Podman).

I think in the long run, Docker has already caused, and will continue to cause, these problems:

Over-reliance on containerisation is slowly making build processes, dependencies, and deployment more brittle than necessary, because it still works in Docker

Over-reliance on containerisation is making the actual build process outside of a container or even in a container based on a different image more painful, as well as multi-stage build processes when dependencies want to be built in their own containers

Container specifications usually don't even take advantage of a known static build environment, for example by hard-coding a makefile, negating the savings in complexity

5 notes


Note

hi, i have a question if you don't mind. beside cap space and contracts numbers, is there a limite to the number of emergency loan a team can have? (about the sens)

Hi anon! I never mind!!! Honestly I get super duper excited whenever anyone hits my inbox because it makes me feel like I'm doing something good sooo... Okay, enough about me, meet me under the cut! 💜

The first thing about emergency loans is that their use is dependent on cap space. The Sens have three emergency loans right now - Tyler Kleven, Nikolas Matinpalo, and Jacob Bernard-Docker. All three are right side defensemen (RD) replacing Thomas Chabot, Artem Zub, and Erik Brannstrom. Chabot is notably on LTIR. LTIR is complicated as hell and I don't want to hit you with the specifics of it. There are jokes about "capologists" in certain organizations (notably the Leafs and Canucks) whose main job it is to navigate the cap. But there are a few important things you need to know.

Chabot's salary is $8m. In theory, since Chabot is now on LTIR, the Sens can call up players with up to $8m in salary regularly, and not as emergency recalls. The thing is - if you've read my waivers primer - that emergency recall keeps players waiver-exempt (at least, for a while). So a team in a situation like the Sens prefers to start by using emergency recall, when they can, and then shift into regular recall. This is why Chabot was only placed on LTIR on the 27th - Bernard-Docker was called up as an emergency recall first. Then, when Zub and Brannstrom were injured, Kleven and Matinpalo could come up and Chabot could go on LTIR while preserving Bernard-Docker's waiver exemption, which is important because otherwise he isn't waiver exempt and the Senators don't want that.

Also, when Chabot goes on LTIR, he is no longer considered a "roster" player. This is important for call-up reasons and contract numbers on the roster. See, the main "rules" of emergency callups are as follows:

1) If the team doesn't have enough cap room (at least $875k) with which to call up a player,

2) And someone can't play and the team plays with fewer than 18 skaters and 2 goalies for one game as a result,

3) Then you can use emergency recalls to call up players on an emergency basis with cap hits up to $875k,

4) But when the players get healthy those emergency recalls MUST be sent down.
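The four rules above can be written as a small Python check. This encodes the post's simplified reading, not the CBA text: the $875k figure and the 18-skater / 2-goalie threshold come from the post, rule 2 is interpreted as "short on either skaters or goalies", and the class and function names are hypothetical.

```python
from dataclasses import dataclass

# Thresholds as stated in the post (not taken from the CBA text).
EMERGENCY_CAP_HIT_LIMIT = 875_000
MIN_SKATERS = 18
MIN_GOALIES = 2


@dataclass
class TeamState:
    cap_space: int        # remaining room under the cap, in dollars
    healthy_skaters: int  # roster skaters able to play
    healthy_goalies: int  # roster goalies able to play


def short_handed(team: TeamState) -> bool:
    """Rule 2: injuries leave the team unable to dress 18 skaters and 2 goalies."""
    return team.healthy_skaters < MIN_SKATERS or team.healthy_goalies < MIN_GOALIES


def can_emergency_recall(team: TeamState, player_cap_hit: int) -> bool:
    """Rules 1-3: no room for a regular call-up, short-handed, cheap enough player."""
    no_room = team.cap_space < EMERGENCY_CAP_HIT_LIMIT          # rule 1
    cheap_enough = player_cap_hit <= EMERGENCY_CAP_HIT_LIMIT     # rule 3
    return no_room and short_handed(team) and cheap_enough


def recall_must_end(team: TeamState) -> bool:
    """Rule 4: once the regulars are healthy again, emergency recalls go back down."""
    return not short_handed(team)


if __name__ == "__main__":
    # Made-up numbers, not the Senators' actual cap situation.
    team = TeamState(cap_space=100_000, healthy_skaters=17, healthy_goalies=2)
    print(can_emergency_recall(team, player_cap_hit=775_000))
```

Nothing in these conditions counts how many recalls have already been made: the limit comes from the conditions themselves, not from a number.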

So no, there's theoretically no limit to the NUMBER of emergency recalls, as far as I can tell. Theoretically, you can play an entire team of emergency recalls, but that would require every player on your team to be severely injured and you'd probably end up using your LTIR space to deal with that instead. And also be actively tanking at that point.

But the thing is - emergency recalls do NOT come with a cap hit. They, themselves, do not count against the cap. That's the point of the emergency recall, after all.

I think that's it! I love talking about the Sens because there is literally always something going on with those boys, so thank YOU for the ask! If something isn't clear, let me know and I'll re-explain it 💜

#stereanswers#stereanalysis#ottawa senators#thomas chabot#erik brannstrom#artem zub#jacob bernard docker#tyler kleven#nikolas matinpalo#cap shenanigans

10 notes


Note

i might have already asked, but, opinions on nixos if you've tried it?

i haven't actually tried it but i think it's based and i want to try it really bad. the general premise is that you can fix all of your dependency management and reproducibility problems and replace them with new, more exciting problems that have to do with the nix language

i would very much like to make that trade, because my builds would be so much faster than docker builds. docker builds are also a nightmare because they require a bunch of privileges to even run the docker daemon

so like, a brief list of things you get with nix

verifiable, reproducible builds: you could rerun your build pipeline from scratch and get the same SHA for the final artifact. all of your dependencies are also built with this property. so you basically have transitively pinned dependencies for everything managed by nix. that's huge for security and assurance
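The "same SHA for the final artifact" property is easy to check in principle: build twice from a clean slate and compare digests. A minimal Python sketch; the two toy build functions are hypothetical stand-ins for a real pipeline, and this is not how Nix itself implements its checks:

```python
import hashlib
import tempfile
import uuid
from pathlib import Path
from typing import Callable


def sha256_file(path: Path) -> str:
    """Hex SHA-256 of a file, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest()


def is_reproducible(build: Callable[[Path], Path], runs: int = 2) -> bool:
    """Run `build` in fresh empty directories; True if every artifact hashes the same."""
    digests = set()
    for _ in range(runs):
        with tempfile.TemporaryDirectory() as workdir:
            artifact = build(Path(workdir))
            digests.add(sha256_file(artifact))
    return len(digests) == 1


# Two toy "builds" (hypothetical stand-ins for a real build pipeline).
def deterministic_build(workdir: Path) -> Path:
    out = workdir / "app.bin"
    out.write_bytes(b"compiled output")
    return out


def build_with_random_id(workdir: Path) -> Path:
    # Embedding a random build ID (or a timestamp) breaks reproducibility.
    out = workdir / "app.bin"
    out.write_bytes(f"compiled output, build id {uuid.uuid4()}".encode())
    return out
```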

great build caching: a side effect of the above, this means you can cache intermediate build results instead of starting the whole build over. and you can be very confident that your cache isn't bringing in stale/incorrect gunk. compare to docker, where caching happens at the layer level, has a bunch of confusing caching rules, and can't account for side effects that make cached layers undesirable. plus the layers build on top of one another, so you can't really pick and choose which layers to cache; if you invalidate a lower layer, everything above it has to be rebuilt, even if the diff would be the same
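The difference between the two caching models shows up in how cache keys are derived. A simplified Python sketch, assuming each layer or package is described by a single recipe string (real Nix hashes the full derivation, and Docker also hashes file contents for `COPY`/`ADD`): in the layer model every key depends on all layers below it, while in the dependency-graph model a package's key depends only on its own recipe and its actual dependencies. The package names are hypothetical.

```python
import hashlib
from typing import Dict, List


def _h(*parts: str) -> str:
    return hashlib.sha256("\0".join(parts).encode()).hexdigest()[:12]


def layer_keys(steps: List[str]) -> List[str]:
    """Docker-style: each layer's key depends on every layer below it."""
    keys, parent = [], ""
    for step in steps:
        parent = _h(parent, step)
        keys.append(parent)
    return keys


def dag_keys(recipes: Dict[str, str], deps: Dict[str, List[str]]) -> Dict[str, str]:
    """Nix-style (simplified): a package's key depends only on its own recipe
    and the keys of the packages it depends on. Assumes deps form a DAG."""
    keys: Dict[str, str] = {}

    def key(name: str) -> str:
        if name not in keys:
            dep_keys = sorted(key(d) for d in deps.get(name, []))
            keys[name] = _h(recipes[name], *dep_keys)
        return keys[name]

    for name in recipes:
        key(name)
    return keys


if __name__ == "__main__":
    recipes = {"zlib": "zlib-1.3", "openssl": "openssl-3.0", "app": "app-v1"}
    deps = {"app": ["zlib", "openssl"]}
    before = dag_keys(recipes, deps)
    after = dag_keys({**recipes, "openssl": "openssl-3.1"}, deps)
    for name in recipes:
        print(name, "rebuild" if before[name] != after[name] else "cached")
```

Bumping `openssl` in the graph model leaves `zlib` cached and only rebuilds `openssl` and `app`; in the linear model, changing the first layer changes the key of every layer above it.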

hermeticity: since you know up front the full list of dependency files you need for your build, you can download those ahead of time and then do the build without talking to the internet! this is another huge win for security, because the internet is where all the fucked up and evil shit comes from, so you want to avoid it wherever possible. reduces your attack surface. this is especially important because infecting the build chain is an increasingly popular attack (supply chain attacks) and extremely effective at owning a ton of computers at once--that package you're building will be trusted by the rest of your machines, so it better be built in a secure environment
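In practice, hermeticity usually means every source is fetched ahead of time and checked against a hash pinned in the build description, and only then does the build run with the network cut off (Nix calls these pinned downloads fixed-output derivations). A minimal Python sketch of the "verify before building offline" step; the file names and pins are hypothetical:

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def verify_prefetched(source_dir: Path, pinned: Dict[str, str]) -> List[str]:
    """Check every pinned source before an offline build.

    `pinned` maps file name -> expected hex SHA-256. Returns a list of
    problems; an empty list means the build can start without network access.
    """
    problems = []
    for name, expected in pinned.items():
        path = source_dir / name
        if not path.is_file():
            problems.append(f"{name}: missing")
            continue
        actual = hashlib.sha256(path.read_bytes()).hexdigest()
        if actual != expected:
            problems.append(f"{name}: expected {expected[:12]}..., got {actual[:12]}...")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        src = Path(d)
        (src / "foo-1.0.tar.gz").write_bytes(b"pretend tarball")  # hypothetical source
        pinned = {"foo-1.0.tar.gz": hashlib.sha256(b"pretend tarball").hexdigest()}
        print(verify_prefetched(src, pinned) or "all sources verified")
```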

avoid a large swath of dependency issues that happen with other package managers: nix lets you have multiple versions of the same package, which removes a lot of pain that happens with other package managers. say you have package A, and package B requires A>=1.3 but package C requires A<=1.0. what do you do? well... you suffer. you also can't install A==5.0 for fun because you live on the bleeding edge. not so with nix
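The A/B/C scenario above can be modelled in a few lines of Python. This is a toy, not Nix's actual logic: a traditional single-version resolver has to find one A that satisfies both constraints and fails, while the Nix-style approach gives each dependent its own exact store path, so A 1.0 and A 5.0 coexist. The path format mimics Nix's `/nix/store/<hash>-<name>-<version>` shape, but the hash and all package data are made up.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

Version = Tuple[int, ...]
Want = Tuple[str, str]  # (dependency name, constraint such as ">=1.3")


def parse(v: str) -> Version:
    return tuple(int(part) for part in v.split("."))


def satisfies(v: str, constraint: str) -> bool:
    """Only the two operators used in the example: '>=' and '<='."""
    op, bound = constraint[:2], parse(constraint[2:])
    if op == ">=":
        return parse(v) >= bound
    if op == "<=":
        return parse(v) <= bound
    raise ValueError(f"unsupported constraint {constraint!r}")


def store_path(name: str, version: str) -> str:
    """Toy Nix-style path; real store hashes cover the whole build recipe."""
    digest = hashlib.sha256(f"{name}-{version}".encode()).hexdigest()[:8]
    return f"/nix/store/{digest}-{name}-{version}"


def resolve_global(available: Dict[str, List[str]],
                   wants: Dict[str, Want]) -> Optional[Dict[str, str]]:
    """Single-version model: one version of each dependency must satisfy
    every dependent. Returns None on conflict."""
    chosen = {}
    for dep in {d for d, _ in wants.values()}:
        constraints = [c for d, c in wants.values() if d == dep]
        ok = [v for v in available[dep] if all(satisfies(v, c) for c in constraints)]
        if not ok:
            return None
        chosen[dep] = max(ok, key=parse)
    return chosen


def resolve_each(available: Dict[str, List[str]],
                 wants: Dict[str, Want]) -> Dict[str, str]:
    """Nix-style (simplified): each dependent gets its own store path."""
    paths = {}
    for dependent, (dep, constraint) in wants.items():
        ok = [v for v in available[dep] if satisfies(v, constraint)]
        if not ok:
            raise LookupError(f"{dependent}: no {dep} matching {constraint}")
        paths[dependent] = store_path(dep, max(ok, key=parse))
    return paths
```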

there's probably more goodies im forgetting but yeah. nix is based and solves a lot of cool problems in dependency management, build correctness and speed, and supply chain security. the flip side is that you have to learn a new configuration language just for this one thing, and in addition to being a functional language (which most programmers aren't used to), it has its own quirks and sharp edges because it's somewhat niche. also you have to hope the packages you want are already supported, otherwise you'll have to figure out how to build them yourself.

9 notes


Text

WILL CONTAINERS REPLACE HYPERVISORS?

As technology has advanced, virtualization has changed the way data centers operate. Over the years, a range of software has made it easier for companies to manage their data centers, letting them run different operating systems side by side on the same hardware, which maximizes their resources and makes managing their data easier and more useful for the business.

Choosing between technology models requires a proper understanding of each. That holds for containers and hypervisors alike, both of which have been on the market for quite some time, offering companies different ways to run their workloads.

Let’s understand how they work:

Virtual machines: a hypervisor abstracts the physical hardware, so that several complete operating systems can run on one machine.

Containers: they abstract the operating system, so that many isolated applications can run on one shared host OS. They have become much more popular recently.

Although container technology existed long before 2013 (chroot, FreeBSD jails and LXC all predate it), it became far more popular after Docker was introduced that year. Docker is an open-source platform used for building, deploying and managing containerized applications.

Containers always run on the underlying host operating system and share its kernel, which provides basic services to all the containerized applications. Each virtual machine on a hypervisor, by contrast, runs its own guest operating system, and the hypervisor typically relies on hardware virtualization support in the CPU.

Although containers and hypervisors work differently and have distinct features, the two technologies share some benefits, such as improving IT efficiency, increasing the profitability of the applications they host and streamlining the software development lifecycle.

Nowadays there is a lot of discussion about whether containers will take over and replace hypervisors. The question interests many people: some favor containers and some favor hypervisors, since each technology has particular properties suited to solving different problems.

Let’s look in detail at how they work, how they differ, and which one is better technically.

What are virtual machines?

Virtual machines are software-defined computers that run on top of a hypervisor, allowing multiple applications to run in isolation on the same physical hardware. They are best suited when one needs to run different applications without letting them interfere with each other.

Because each VM gets its own set of virtual hardware, applications in different VMs stay separate while sharing one physical machine, which helps companies reduce the money spent on hardware.

Virtual machines run on physical computers through a lightweight software layer called a hypervisor.

The hypervisor keeps VMs separated from one another and allocates processors, memory and storage among them. Cloud hosting providers use this to get more out of expensive nodes by automatically packing many VMs onto each one.

Hypervisors let a host machine run different guest operating systems in many virtual machines at once, making maximum use of resources such as bandwidth and memory.

What is a container?

Containers are also software-defined environments, but they all run on a single host operating system. Because each container packages an application with everything it needs, the same container can run almost anywhere: on a laptop, in the cloud, etc.

Containers use operating-system-level virtualization: they rely on the host operating system’s kernel to do their work. A container image bundles the application code, its dependencies and the user-space parts of an operating system (but not a kernel), which lets it run on any compatible host.

They promise a streamlined way of meeting infrastructure requirements and can be used as an alternative to virtual machines.

Even though containers have improved how cloud platforms are developed and deployed, they still do not isolate workloads as strongly as VMs, so they are considered less secure.

A single operating system can run many containers that share its resources, which further streamlines infrastructure requirements.

Now that we understand how VMs and containers work, let’s look at the benefits of each.

Benefits of virtual machines

They allow different operating systems to run on one piece of hardware, which saves on everything from energy costs and rack space to cooling, leading to economic gains in the cloud.

VMs are easy to spin up and down, and it is much easier to create backups of them.

Because backups and image restores are easy, disaster recovery is simple.

Each VM has its own isolated operating system, so testing applications on it is relatively easy, safe and simple.

Benefits of containers:

They are lightweight, so they boot significantly faster than VMs, within a few seconds, and they need less hardware and fewer operating systems.

They are portable and can run anywhere, which reduces issues caused by differences between environments.

They enable microservices, which make applications easier to test, reduce single points of failure and increase development velocity.

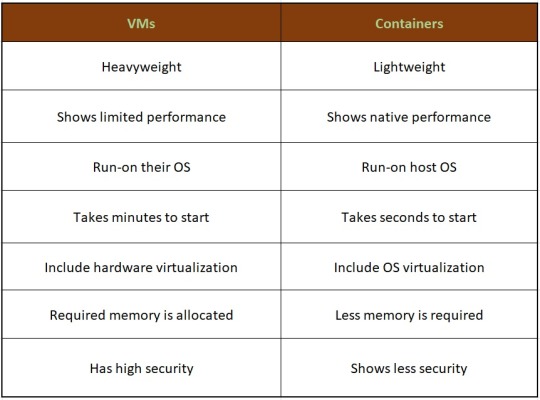

Let’s see the difference between containers and VMs

Looking at all these differences, one can see that containers have an advantage over the older virtualization technology: they are faster, more lightweight and easier to manage than VMs, and go beyond these previous technologies in many ways.

With a hypervisor, virtualization happens at the hardware level: each virtual machine gets its own virtual hardware and its own operating system, and many such VMs can run on the same physical host. Each VM therefore needs a separate operating system to run an application and its associated libraries.

Containers, by contrast, virtualize the operating system instead of the hardware, so each container holds only the application, its libraries and its dependencies.
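On Linux, virtualizing the operating system is done with kernel features such as namespaces and cgroups: a container is an ordinary host process whose view of PIDs, mounts, the network and so on is isolated, while the kernel itself is shared. A minimal Python sketch that makes this visible by reading the `/proc/<pid>/ns/` links, whose targets are identical for processes that share a namespace. It assumes Linux; reading another user's process may need extra privileges:

```python
import os
from typing import Dict


def namespaces(pid: str = "self") -> Dict[str, str]:
    """Map namespace type -> link target, e.g. 'net' -> 'net:[<inode>]'.

    Returns {} on non-Linux systems or when /proc/<pid>/ns is not readable.
    """
    ns_dir = f"/proc/{pid}/ns"
    result: Dict[str, str] = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return result
    for name in names:
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # e.g. permission denied for another user's process
    return result


def shared_namespaces(pid_a: str, pid_b: str) -> Dict[str, bool]:
    """For each namespace type both processes expose, whether they share it."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return {name: a[name] == b[name] for name in a.keys() & b.keys()}
```

For example, comparing `"self"` from a host shell with the host PID of a containerized process (Docker reports it via `docker inspect -f '{{.State.Pid}}' <container>`) would typically show the `pid`, `mnt` and `net` namespaces as not shared, even though both processes run on the same kernel.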

Like virtual machines, containers let developers make better use of a physical machine’s CPU and memory. Containers further enable microservice architectures, in which application components are deployed and scaled more granularly.

Having seen the benefits of and differences between the two technologies, one must know when to use containers and when to use virtual machines: many people want to use both, and some want only one of them.

Hypervisors are the better choice in cases such as these:

Many people want to stay with virtual machines because they are compatible with how they already work, and moving to containers does not make sense for them.

VMs let a single computer or cloud server run multiple applications side by side, which is all most people need.

Containers share the host operating system, which VMs do not. So from a security standpoint containers are less safe: a compromise of the shared host can bring down all the applications together. Each virtual machine, on the other hand, has its own virtual hardware and operating system, so a compromise usually damages only one application.

Containers turn out to be useful in cases such as these:

Containers enable DevOps and microservices because they are portable and fast, typically starting in seconds or less.

Nowadays many web applications are moving toward a microservices architecture. Containers support this well, making it easy to update and redeploy only the part of the application that needs it.

Containers scale well: orchestration tools can automatically start more containers from the same image when demand rises and spin them down when they are no longer needed.
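The article doesn't name a tool, but as one concrete example, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). A minimal Python sketch of just that formula; the min/max defaults are placeholders, and the real controller also handles stabilization windows, missing metrics and pod readiness:

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count per the HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: don't scale
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For instance, 4 replicas averaging 200% of their CPU target would be scaled to 8, and scaled back down once load falls.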

As technology advances, people want tools that are fast, and in this respect containers are much faster than hypervisor-based VMs. That speed also enables fast testing and quick recovery when a restart is needed.

So, will containers replace hypervisors?

Although the two technologies share some similarities, they differ in several respects, so the answer is not simple. Before drawing any conclusions, let’s look at a few more points about each.

Still, one might ask: why containers?

Although, as stated above, there are many reasons to keep using virtual machines, containers offer flexibility and portability, both in where they run and in how they allocate resources, and that is increasing demand for them in a multi-cloud world.

Many companies still do not know in advance where their new applications will be deployed; containerized applications are flexible enough to move easily between the many cloud and data center environments of modern IT.

Containers are also useful for automation and DevOps pipelines, including continuous integration and continuous delivery (CI/CD). Their small size and modularity mean an application can be assembled entirely by stacking small parts together.

They not only increase system efficiency and resource utilization but also save money by running multiple processes on shared infrastructure.

They are quicker to boot than virtual machines, which can take minutes to boot and to recover.

Another important point is that they have a minimal structure, do not need a full operating system or dedicated (virtual) hardware to function, and can be installed and removed without disturbing the rest of the system.

Containers replace the traditional patching process (instead of patching a running system, you rebuild and redeploy the image), allowing many organizations to respond to issues faster and making applications easier to manage.

Because containers abstract the operating system, they avoid the overhead of virtualizing hardware that VMs carry, and their isolated environments make it easy to run software built for different Linux distributions on the same host.

Still, virtual machines are useful to many

Although containers have more advantages than virtual machines, they still have a few disadvantages, such as security issues that come from sharing one host kernel.

Attacking containers can be easier because many applications share a single operating system kernel: if one container is breached, an attacker may be able to reach the whole host. Virtual machines add an extra barrier between each VM, the host server and the other virtual machines.

If the shared host is infected by malware, it can spread to all the containerized applications, because they use a single operating system; that is not the case with virtual machines.

People are more familiar with virtual machines because they have been well established in most organizations for a long time, and businesses have teams and procedures that manage VMs, including their deployment, backups and monitoring.

Companies often prefer to run a complete, self-contained operating system as one machine, especially for applications that are complex to understand.

Conclusion

Concluding this blog, the final thought is that, as we have seen, both the containers and virtual machine cloud hosting technologies are provided with different problem-solving qualities. Containers help in focusing more on building code, creating better software and making applications work on a faster note whereas, with virtual machines, although they are slower, less portable and heavy still people prefer them in provisioning infrastructure for enterprise, running legacy or any monolithic applications.

In short, if you need to run a full operating system, choose a hypervisor; if you want a lightweight, portable service from a managed cloud provider, choose containers.

Containers will therefore not replace virtual machines any time soon: many organizations still need VMs to run older applications and to host multiple operating systems in parallel, even though VMs are less cloud-native. In fact, containers are unlikely to replace virtual machines at all, because the two technologies complement rather than compete with each other, and both have a place in the modern data center.

For more insights, visit our website.

#container #hypervisor #docker #technology #zybisys #godaddy

6 notes

Text

Microsoft Scrimble Framework also comes in like 3 different variants that have wildly varying interfaces based on if they're built for .NET, .NET core 2.0 or .NET core 2.1. Only the .NET core 2.1 version is available via nuget, the rest have to be compiled from some guy's fork of Microsoft's git repository (you can't use the original because it's been marked as an archive, and the Microsoft team has moved over to committing to that guy's fork instead).

You're also forgetting pysqueeb-it, an insane combination of python packages that's only distributed as a dockerfile and builds into a monolithic single-command docker entrypoint. Thankfully all of this is pulled for you when you try to build the docker image but unfortunately the package also requires Torch for some inexplicable reason (i guess we're squeebing with tensors now?) so get ready to wait for an hour while pip pulls that alongside the other 200 packages in requirements.txt. Make sure you install version==2.1.3 and replace the relevant lines in the .env file with your public keys before the build, otherwise you get to do all this again in 2 hours (being a docker build, pip can't cache packages so it's going to pull torch and everything else again every single time).

every software is like. your mission-critical app requires you to use the scrimble protocol to squeeb some snorble files for sprongle expressions. do you use:

libsnorble-2-dev, a C library that the author only distributes as source code and therefore must be compiled from source using CMake

Squeeb.js, which sort of has most of the features you want, but requires about a gigabyte of Node dependencies and has only been in development for eight months and has 4.7k open issues on Github

Squeeh.js, a typosquatting trojan that uses your GPU to mine crypto if you install it by mistake

Sprongloxide, a Rust crate beloved by its fanatical userbase, which has been in version 0.9.* for about four years, and is actually just a thin wrapper for libsnorble-2-dev

GNU Scrimble, a GPLv3-licensed command-line tool maintained by the Free Software Foundation, which has over a hundred different flags, and also comes with an integrated Lisp interpreter for scripting, and also a TUI-based Pong implementation as an "easter egg", and also supports CSV, XML, JSON, PDF, XLSX, and even HTML files, but does not actually come with support for squeebing snorble files for ideological reasons. it does have a boomeresque drawing of a grinning meerkat as its logo, though

Microsoft Scrimble Framework Core, a .NET library that has all the features you need and more, but costs $399 annually and comes with a proprietary licensing agreement that grants Microsoft the right to tattoo advertisements on the inside of your eyelids

snorblite, a full-featured Perl module which is entirely developed and maintained by a single guy who is completely insane and constantly makes blog posts about how much he hates the ATF and the "woke mind-virus", but everyone uses it because it has all the features you need and is distributed under the MIT license

Google Squeebular (deprecated since 2017)

7K notes

Note

So assuming a group of disillusioned programmers wanted to put together a WordPress replacement, what would be necessary features for you to try it?

gotta be easy for a moron to install, either through docker or whatever other one-click installer options are out there

the ability to assign posts to categories and have the next/previous button on single post view only apply within that category

likes/kudos on posts (why did i need to install a plugin for this)

the ability to paywall posts or categories according to subscriber tier

multiple payment processor options instead of just stripe (paypal, ccbill, authorize.net, literally anything that won't ban you for smut lmfao)

the ability to send posts as emails substack-style with category-specific email lists (i don't mind it needing to plug into a third party service for this but right now with wordpress i've got two different third party services duct-taped together for something it seems like it should be able to do out of the box, what the fuck)

comments section that can also be limited by subscriber tier
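As an illustration of the category-scoped next/previous item above, here is a minimal Python sketch. It assumes a hypothetical `Post` record (slug, category, publish date); it is not taken from WordPress, Ghost, or any other CMS.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class Post:
    slug: str
    category: str
    published: date

def neighbors(posts: list[Post], current: Post) -> tuple[Optional[Post], Optional[Post]]:
    """Return (previous, next) among posts sharing current's category, oldest first."""
    same = sorted((p for p in posts if p.category == current.category),
                  key=lambda p: (p.published, p.slug))
    i = same.index(current)  # raises ValueError if current isn't in posts
    prev_post = same[i - 1] if i > 0 else None
    next_post = same[i + 1] if i + 1 < len(same) else None
    return prev_post, next_post

posts = [
    Post("chapter-1", "serial-a", date(2024, 1, 1)),
    Post("unrelated", "serial-b", date(2024, 1, 2)),
    Post("chapter-2", "serial-a", date(2024, 1, 3)),
]
# From chapter-2, "previous" jumps straight to chapter-1, skipping the serial-b post.
prev_post, next_post = neighbors(posts, posts[2])
```

Filtering before sorting is what keeps five serials on one site from bleeding into each other's navigation.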

#original#honestly the major hurdles for ghost were:#the payment processor issue for one#and for another the apparent expectation that every serial you produce will get its own website and ghost install i guess??#maybe i want to have five different serials running at the same time on one website and that's fine

73 notes

Text

Why Full Stack Development Is the Swiss Army Knife of the Tech World

In today’s rapidly evolving digital era, the term Full Stack Development has become more than just a buzzword — it’s now considered an indispensable skill set for modern tech professionals. Whether you're building sleek front-end interfaces or optimizing powerful back-end systems, full stack developers can do it all. Much like a Swiss Army knife that carries multiple tools in one compact package, full stack developers are equipped to handle diverse tasks across various domains of software development.

If you're considering a career in IT or simply aiming to enhance your technical skills, understanding the importance of full stack development is a wise first step.

What is Full Stack Development?

Full stack development refers to the ability to work on both the front-end (client side) and back-end (server side) of a web application. It encompasses everything from designing user interfaces and managing databases to handling server-side logic and integrating APIs.

A full stack developer isn't tied to just one part of the development process—they're capable of designing complete applications, end-to-end.
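To make the front-end/back-end split concrete, here is a minimal back-end sketch in Python using only the standard library's WSGI interface. The `/api/posts/<id>` route and the in-memory `POSTS` dictionary are hypothetical stand-ins for a real API and database.

```python
import json

# Hypothetical in-memory data standing in for a real database (MySQL, MongoDB, ...).
POSTS = {"1": {"id": "1", "title": "Hello, full stack"}}

def _json_response(start_response, status: str, payload: dict) -> list[bytes]:
    """Serialize payload as JSON and send it with the given HTTP status."""
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]

def app(environ, start_response):
    """Minimal WSGI back end: GET /api/posts/<id> returns that post as JSON."""
    prefix = "/api/posts/"
    path = environ.get("PATH_INFO", "")
    if environ.get("REQUEST_METHOD") == "GET" and path.startswith(prefix):
        post = POSTS.get(path[len(prefix):])
        if post is not None:
            return _json_response(start_response, "200 OK", post)
    return _json_response(start_response, "404 Not Found", {"error": "not found"})
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves it on port 8000, and a front-end could then request `http://localhost:8000/api/posts/1` with `fetch`. Real back ends would usually sit on a framework rather than raw WSGI.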

Core Components of Full Stack Development:

Frontend Technologies: HTML, CSS, JavaScript, React, Angular

Backend Technologies: Node.js, Java, Python, PHP

Databases: MySQL, MongoDB, PostgreSQL

Version Control: Git, hosted on platforms such as GitHub

DevOps & Deployment: Docker, AWS, Jenkins

Why Is It Called the “Swiss Army Knife” of Tech?

The analogy comes from versatility. Just like a Swiss Army knife can be used in countless situations—camping, hiking, emergencies—a full stack developer can be deployed in various roles and stages of a project.

Here’s why full stack developers are so valuable:

Versatility: Able to work on multiple facets of web development.

Problem Solvers: Understand how the entire application functions and can identify bottlenecks across the stack.

Cost-effective for Companies: Hiring one full stack developer can sometimes replace two or more specialized developers.

Career Growth: With experience, full stack developers often move into leadership or architectural roles.

Faster Project Execution: With fewer dependencies between team members, projects can be built and deployed quicker.

Demand in the Job Market

The demand for full stack developers is soaring, especially in tech-forward cities like Pune. Many professionals are enrolling in Web Development Courses in Pune to gain comprehensive knowledge and become job-ready for the IT industry.

Employers prefer candidates who can handle a variety of tasks, and full stack developers fit that bill perfectly. This is especially true if the developer has strong foundations in Java, one of the most robust and widely used programming languages.

Many institutes now offer Job-ready Java programming courses that blend seamlessly into full stack training modules. These courses don't just teach you Java syntax—they show you how to use it in real-world scenarios, back-end systems, and server-side logic.

Benefits of Taking a Web Development Course in Pune

If you're based in or near Pune, you're in luck. The city has grown into a major IT hub, offering abundant opportunities for tech aspirants.

Enrolling in a Web Development Course in Pune can offer you:

Expert-led training in front-end and back-end technologies.

Hands-on projects that mirror industry-level requirements.

Exposure to full stack tools like Git, Docker, and MongoDB.

Resume and interview preparation to help you become job-ready.

Placement assistance with top MNCs and startups in Pune and beyond.

Who Should Consider Full Stack Development?

Whether you're a beginner looking to enter the tech field or a seasoned professional aiming to upskill, full stack development can serve as a powerful career catalyst.

Ideal for:

Fresh graduates seeking a comprehensive skillset

Working professionals wanting to switch to web development

Backend developers looking to learn front-end technologies (and vice versa)

Freelancers and entrepreneurs who want to build their own products

Conclusion

In the ever-evolving tech landscape, full stack development is proving to be the ultimate all-in-one toolkit — just like a Swiss Army knife. It combines efficiency, versatility, and practicality in one powerful package. With the growing need for multi-skilled professionals, pursuing a Job-ready Java programming course or enrolling in a well-structured Web Development Course in Pune could be the smartest move you make in your career journey.

Don’t just learn to code—learn to build. And when you build with the full stack, the possibilities are endless.

0 notes

Text

Top Software Development Skills to Master in 2025 (USA, UK, and Europe)

As the tech landscape evolves rapidly across global hubs like the USA, UK, and Europe, developers are under increasing pressure to stay ahead of the curve. Businesses demand more efficient, scalable, and secure digital solutions than ever before. At the core of this transformation is the growing need for custom software development services, which empower companies to create tailored solutions for unique challenges. To thrive in 2025, developers must equip themselves with a set of advanced skills that align with market demands and emerging technologies. Let’s explore the top software development skills professionals should focus on mastering.

1. Proficiency in AI and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are no longer niche skills—they’re now essential. In the USA and the UK, AI is being heavily integrated into industries like healthcare, finance, retail, and cybersecurity. Developers who understand ML algorithms, neural networks, and AI model deployment will have a competitive edge.

Mastering platforms like TensorFlow, PyTorch, and tools for natural language processing (NLP) will become increasingly important. European companies are also investing heavily in ethical AI and transparency, so familiarity with responsible AI practices is a plus.

2. Cloud-Native Development

Cloud platforms like AWS, Microsoft Azure, and Google Cloud dominate the tech infrastructure space. Developers must understand how to build, deploy, and maintain cloud-native applications to remain relevant.

In the UK and Germany, hybrid cloud adoption is growing, and in the USA, multi-cloud strategies are becoming standard. Learning serverless computing (e.g., AWS Lambda), containerization with Docker, and orchestration with Kubernetes will be vital for delivering scalable software solutions in 2025.
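A serverless function is just a handler the platform calls once per request. Below is a minimal sketch of a Python AWS Lambda handler, assuming it sits behind API Gateway's Lambda proxy integration (which passes query parameters in `event["queryStringParameters"]` and expects `statusCode`, `headers` and `body` back); the greeting logic is purely illustrative.

```python
import json

def lambda_handler(event, context):
    """Entry point Lambda invokes; `event` carries the API Gateway request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)["body"])
# {"message": "Hello, Ada!"}
```

There is no server loop to write: scaling the number of concurrent handler instances is the platform's job, which is the main appeal of serverless.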

3. Full-Stack Web Development

The demand for versatile developers continues to grow. In Europe and the USA, companies are seeking professionals who can work across both frontend and backend stacks. Popular frameworks and technologies include:

Frontend: React.js, Vue.js, Svelte

Backend: Node.js, Python (Django/FastAPI), Java (Spring Boot), Ruby on Rails

A deep understanding of APIs, authentication methods (OAuth 2.0, JWT), and performance optimization is also crucial for delivering a seamless user experience.
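To show what a JWT actually is, here is a minimal Python sketch that creates and verifies an HS256-signed token with only the standard library (structure per RFC 7519/7515: base64url header, payload and HMAC-SHA256 signature joined by dots). It deliberately skips claim checks such as `exp`, and the secret is a placeholder; in real code a maintained library (for example PyJWT) is the safer choice.

```python
import base64
import hashlib
import hmac
import json

def b64url(data: bytes) -> str:
    # JWTs use base64url encoding with the "=" padding stripped.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))

def sign_jwt(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(b64url(json.dumps(part, separators=(",", ":")).encode())
                             for part in (header, claims))
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(sig)}"

def verify_jwt(token: str, secret: bytes) -> dict:
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never trust a token-chosen alg
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):  # constant-time
        raise ValueError("bad signature")
    return json.loads(b64url_decode(payload_b64))

token = sign_jwt({"sub": "alice"}, b"demo-secret")
print(verify_jwt(token, b"demo-secret"))  # {'sub': 'alice'}
```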

4. Cybersecurity Knowledge

With the rise in data breaches and stricter regulations like GDPR and CCPA, secure coding practices have become non-negotiable. In 2025, developers must be well-versed in threat modeling, secure APIs, and encryption protocols.

The demand for developers who can write secure code and integrate security into every stage of the development lifecycle (DevSecOps) is particularly high in financial and governmental institutions across Europe and North America.
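One concrete secure-coding habit is never building SQL queries by string concatenation. A minimal sketch with Python's built-in `sqlite3` module; the `users` table is hypothetical:

```python
import sqlite3

def find_user(conn: sqlite3.Connection, username: str):
    # The ? placeholder makes the driver pass `username` as data, never as SQL,
    # so input like "x' OR '1'='1" cannot change the query's meaning.
    cur = conn.execute("SELECT id, username FROM users WHERE username = ?", (username,))
    return cur.fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

print(find_user(conn, "alice"))         # [(1, 'alice')]
print(find_user(conn, "x' OR '1'='1"))  # [] (the injection attempt matches nothing)
```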

5. DevOps and CI/CD Expertise

Modern development is no longer just about writing code—it’s about delivering it efficiently and reliably. DevOps practices bridge the gap between development and operations, enabling continuous integration and delivery (CI/CD).

Familiarity with tools like Jenkins, GitHub Actions, GitLab CI/CD, Terraform, and Ansible is critical. In the USA and UK, these practices are embedded in most agile development workflows. Europe is also seeing a surge in demand for DevOps engineers with scripting and automation expertise.

6. Low-Code and No-Code Platforms

Low-code and no-code development are growing rapidly, especially among startups and SMEs across the UK, Netherlands, and Germany. While they don’t replace traditional coding, these platforms enable rapid prototyping and MVP development.

Developers who can integrate custom code with low-code platforms (like OutSystems, Mendix, and Microsoft Power Apps) will be highly valuable to businesses looking for quick yet flexible digital solutions.

7. Soft Skills and Cross-Cultural Collaboration

With more companies embracing remote work and distributed teams, communication and collaboration skills are becoming as important as technical expertise. Developers in global tech markets like the USA, UK, and France must be able to work effectively across time zones and cultures.

Fluency in English is often a baseline, but understanding team dynamics, empathy in problem-solving, and the ability to communicate technical ideas to non-technical stakeholders are key differentiators in today’s job market.

8. Domain Knowledge and Industry Focus

Lastly, developers who pair technical skills with domain expertise—whether in finance, health tech, logistics, or sustainability—are becoming increasingly sought-after. For example, fintech innovation is booming in London and Frankfurt, while sustainability-focused tech is on the rise in the Netherlands and Scandinavia.

Understanding regulatory environments, business logic, and customer needs within a specific sector will help developers create more impactful solutions.

Conclusion

The future of software development is shaped by flexibility, innovation, and specialization. Developers aiming to succeed in the fast-paced tech ecosystems of the USA, UK, and Europe must invest in these evolving skillsets to remain competitive and future-proof their careers.

For organizations looking to turn ideas into reality, partnering with a trusted software development company can bridge the gap between technical complexity and business goals—especially when those developers are fluent in the languages, technologies, and trends that will define the next decade.

0 notes

Text

Digital Skills vs Degrees in 2025: What Actually Gets You Hired?

Master in-demand digital skills that top companies seek—and let Cyberinfomines turn your potential into a powerful career advantage.

Let’s face it.

In 2025, job interviews don’t start with “What degree do you have?” anymore. They begin with “What can you do?”

Gone are the days when a piece of paper from a university could guarantee a seat in a company. Today, the competition is fierce, attention spans are shorter, and companies want results — not just resumes. The real question is simple but life-changing:

Should you spend years earning a degree or months mastering job-ready digital skills?

In this blog, we’ll decode the answer — straight, honest, and human. No fluff. Only what truly matters.

1. What Changed Between 2015 and 2025?

In 2015, you could survive with a B.Tech, MBA, or BCA degree. Companies still believed in training you after hiring.

But by 2025, the game has changed.

AI can scan 1,000 resumes in seconds. Recruiters check LinkedIn, GitHub, and Behance before they read your CV. Startups and MNCs want "doers," not just "knowers."

So who’s winning in this new hiring era? People who know how to work, not just those who studied work.

2. Why Degrees Alone Don’t Guarantee Jobs Anymore

Let’s be clear — degrees are not useless, but they are not enough anymore.

Here’s why:

📌 Too Generic: College curricula often don’t match what’s happening in real companies. You might learn Java in theory but never write a real-world API.

📌 No Practical Projects: In most degrees, there’s a huge gap between what’s taught and what’s expected. You graduate without knowing how to work in a live team.

📌 No Industry Connection: You’re not taught how to crack interviews, build a LinkedIn presence, or understand client briefs, which are the things that actually matter.

3. What Are 'Digital Skills' and Why Are They Game-Changers?

Digital skills are the actual tools and techniques companies use every day.

Whether you want to be a developer, designer, digital marketer, or even a cybersecurity expert, you need to know how things work, not just what they are.

Examples of In-Demand Digital Skills in 2025:

Development: Java, Python, Full Stack, APIs, Git

Design: UI/UX, Figma, Prototyping, Responsive Design

Marketing: SEO, Google Ads, Meta Ads, Analytics

Cybersecurity: Network Defense, SOC, Threat Hunting

Cloud & DevOps: AWS, Docker, CI/CD

The best part? You can learn these in 3–6 months with proper mentorship and project-based training.

4. What Do Companies Really Want in 2025?

We asked hiring managers, and here’s what they said:

“We don’t care where someone studied. We care whether they can deliver.” – Senior HR, Bengaluru-based SaaS firm

“If you’ve built even one real project with a team, that tells me more than a degree.” – Tech Lead, Pune Startup

In short, companies hire:

Problem solvers

Team players

Portfolio builders

People who are trained for the job, not just qualified on paper

5. Meet the Hybrid Hero: Skills + Smart Certifications

Here’s a smarter formula for 2025:

🎯 Degree + Real-World Skills + Guided Mentorship = Job-Ready Professional

That’s where Cyberinfomines comes in.

We’re not here to replace your college. We’re here to complete your career with:

🔸 Industry-Ready Training (Java, Full Stack, UI/UX, Digital Marketing)

🔸 Live Projects, Not Just Notes

🔸 Certifications with Credibility

🔸 100% Interview Training & Placement Support

In short, we help you skip the confusion and start the career.

6. The ROI of Learning Smart in 2025

Let’s talk numbers, because let’s be honest, they matter.

Traditional Degree: 3–4 years, ₹3–6 Lakhs, maybe job-ready

Skill-based Training: 4–6 months, ₹25,000–₹60,000, job-ready in 6 months

The ROI is massive when you learn smart — you save time, money, and start earning faster.

7. True Stories: From Confused to Career-Ready (Cyberinfomines Learners)

🌟 Aman (2023 Batch) "I had a BCA degree but zero coding confidence. Cyberinfomines trained me in Full Stack Development. Today, I work at a startup in Gurugram and lead my own small team!"

🌟 Priya (2024 Batch) "I was good at art but didn’t know design tools. After UI/UX training with Cyberinfomines, I now freelance and earn ₹50K+ a month."

🌟 Ravi (Corporate Client) "We upskilled our marketing team via Cyberinfomines’ Corporate Training Solutions. Their Digital Marketing program gave instant ROI — our ad campaigns improved by 3x!"

Real people. Real growth. Real proof.

8. Still Confused? Ask Yourself These 5 Questions

Can I build a project from scratch in my field?

Do I understand how to crack real interviews?

Is my LinkedIn or GitHub impressive enough?

Can I explain my skills without a degree?

If I were the hiring manager, would I hire myself?

If your answer is “maybe” — then it’s time to upskill.

9. How Cyberinfomines Makes Learning Human Again

We don’t treat learners like "batches" or "numbers." We work like mentors, career guides, and skill coaches.

✅ Personalized Learning Paths

✅ Industry Mentors

✅ Interview Bootcamps

✅ Job Support That Doesn’t Quit: even after course completion, we assist with resumes, LinkedIn, and real referrals.

10. Final Verdict: In 2025, Skills Speak Louder Than Degrees

We’ll say it once more — degrees are fine. But if you rely only on them, you’re gambling with your career.

The winners in 2025 are those who:

✅ Learn smart

✅ Stay curious

✅ Build real skills

✅ Present themselves well

And Cyberinfomines is here to help you do exactly that.

Ready to Rewrite Your Career Story?

If you’re tired of waiting, confused by options, or stuck in a degree with no direction — talk to us.

📞 Call: +91-8587000904-906, 9643424141 🌐 Visit: www.cyberinfomines.com 📧 Email: [email protected]

Let’s make your career future-proof — together.

0 notes

Text

The above is an example of shell code, and a particularly cruel one at that. Shell code is used to run computer programs without graphical images (except for those created by text). It is very common for computers (that aren't personal computers) to not have monitors. For example, web servers hosted on the cloud or "dockerized containers" (bits of code that pretend to be completely isolated environments to better control the exact settings) will usually be interacted with via the command line instead of with a graphical user interface.

"Alias" is a command that lets you define nicknames for things. Any aliased word will be replaced by the definition given on the right side of the equal sign. So, in this case, if someone were to type 'cd' it would be interpreted as 'rm -rf'.

'cd' is an extremely common command, short for 'change directory'. Typing 'cd [folder name]' is how someone moves into a folder at a command prompt. However, the alias overrides this with 'rm -rf' instead.
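The substitution rule can be modeled in a few lines. This is a toy Python sketch, not how Bash is implemented: it only replaces the first word of a command, which is why 'cd projects' gets hijacked while 'ls cd' does not. (Bash additionally expands aliases only in interactive shells unless `shopt -s expand_aliases` is set.)

```python
import shlex

def expand_alias(command_line: str, aliases: dict[str, str]) -> str:
    """Toy model of alias expansion: only the command's first word is looked up."""
    words = shlex.split(command_line)
    if words and words[0] in aliases:
        # Replace the first word with the alias body; keep the arguments.
        words = shlex.split(aliases[words[0]]) + words[1:]
    return shlex.join(words)

aliases = {"cd": "rm -rf"}                   # the prank
print(expand_alias("cd projects", aliases))  # rm -rf projects
print(expand_alias("ls cd", aliases))        # ls cd  ('cd' is only an argument here)
```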

'rm' is an extremely common command, meaning 'remove.' It deletes a file. So, 'rm [file name]' means delete the file. The '-rf' are "flags", which are bits of input that modify behavior. The hyphen specifies that they are flags, not file names. 'r' means "recursive". That is to say, if you delete a folder using the 'r' flag, it will ALSO delete everything inside it, and everything inside every folder inside it, and so on and so forth. 'rm' normally asks for confirmation before deleting files that are write-protected. The 'f' ("force") means skip asking and just do it. (The 'f' flag also stops rm from complaining if you try to delete a file that doesn't exist.)
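What "recursive" means can be seen in a short Python sketch that mimics `rm -r` (and, with `force=True`, the missing-file part of `-f`) on a throwaway temporary directory. The function name is hypothetical, prompting is not modeled, and real code would simply call `shutil.rmtree`.

```python
import os
import tempfile

def remove_recursive(path: str, force: bool = False) -> None:
    """Roughly what `rm -r path` does (plus -f's tolerance of missing paths)."""
    if force and not os.path.lexists(path):
        return  # like -f: a nonexistent path is silently ignored
    if os.path.isdir(path) and not os.path.islink(path):
        # Recursive case: empty the directory first, depth-first...
        for entry in os.listdir(path):
            remove_recursive(os.path.join(path, entry), force)
        os.rmdir(path)  # ...then remove the now-empty directory itself
    else:
        os.remove(path)  # plain files and symlinks (links are not followed)

# Demo on a throwaway directory, never on real data.
root = tempfile.mkdtemp()
target = os.path.join(root, "projects")
os.makedirs(os.path.join(target, "src", "deep"))
with open(os.path.join(target, "src", "deep", "notes.txt"), "w") as f:
    f.write("about to vanish")

remove_recursive(target)              # the folder and everything in it is gone
remove_recursive(target, force=True)  # already gone: no error, like `rm -rf`
```

Without `force`, calling it on a missing path raises `FileNotFoundError`, the Python equivalent of rm complaining.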

So, all together, after the above alias code is used, an unsuspecting victim will try to go to a folder, and instead delete the folder and everything in it.

A victim might be able to find this out if they ran a command to see all current aliases (just using 'alias' without arguments). Then they could fix it. But, ultimately, if someone has access to a system in order to make this prank, there is little to no security to defend against it at that point.

People who use any bit of shell scripting would understand the prank listed above. The vast majority of people in the early twenty-first century would not.

Pulled a sneaky on my co-worker today :p

#period novel details#explaining the joke ruins the joke#not explaining the joke means people 300 years from now won't understand our culture#alias ls = ':(){ :|:& };:'#alias alias = 'rm -rf /*'#alias sudo = 'ping'

491 notes

Text

DevOps with Docker and Kubernetes Coaching by Gritty Tech

Introduction

In the evolving world of software development and IT operations, the demand for skilled professionals in DevOps with Docker and Kubernetes coaching is growing rapidly. Organizations are seeking individuals who can streamline workflows, automate processes, and enhance deployment efficiency using modern tools like Docker and Kubernetes.

Gritty Tech, a leading global platform, offers comprehensive DevOps with Docker and Kubernetes coaching that combines hands-on learning with real-world applications. With an expansive network of expert tutors across 110+ countries, Gritty Tech ensures that learners receive top-quality education with flexibility and support.

What is DevOps with Docker and Kubernetes?

Understanding DevOps

DevOps is a culture and methodology that bridges the gap between software development and IT operations. It focuses on continuous integration, continuous delivery (CI/CD), automation, and faster release cycles to improve productivity and product quality.

Role of Docker and Kubernetes

Docker allows developers to package applications and dependencies into lightweight containers that can run consistently across environments. Kubernetes is an orchestration tool that manages these containers at scale, handling deployment, scaling, and networking with efficiency.
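Kubernetes manages containers through control loops: a controller repeatedly compares the desired state (for example, a Deployment asking for three replicas) with what is actually running, and acts on the difference. The toy Python model below illustrates that reconciliation idea; `ToyCluster` and `reconcile` are hypothetical names, not Kubernetes APIs.

```python
from dataclasses import dataclass, field

@dataclass
class ToyCluster:
    """Hypothetical stand-in for the observed state a controller would read."""
    running: list[str] = field(default_factory=list)
    next_id: int = 0

    def start(self) -> None:
        self.running.append(f"pod-{self.next_id}")
        self.next_id += 1

    def stop(self) -> None:
        self.running.pop()

def reconcile(cluster: ToyCluster, desired_replicas: int) -> None:
    """One pass of a control loop: compare desired vs. observed, act on the gap."""
    diff = desired_replicas - len(cluster.running)
    for _ in range(diff):   # too few running: start more
        cluster.start()
    for _ in range(-diff):  # too many running: stop the extras
        cluster.stop()

cluster = ToyCluster()
reconcile(cluster, 3)             # scale up to three pods
cluster.running.remove("pod-1")   # simulate a crashed pod
reconcile(cluster, 3)             # self-healing: a replacement is started
```

Because each pass only looks at the current gap, the same loop handles scaling up, scaling down and recovering from failures.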

Combined, DevOps practices, Docker and Kubernetes let professionals deploy faster, maintain better control, and keep systems resilient, and this coaching is built around that combination.

Why Gritty Tech is the Best for DevOps with Docker and Kubernetes Coaching

Top-Quality Education, Affordable Pricing

Gritty Tech believes that premium education should not come with a premium price tag. Our DevOps with Docker and Kubernetes coaching is designed to be accessible, offering robust training programs without compromising quality.

Global Network of Expert Tutors

With educators across 110+ countries, learners benefit from diverse expertise, real-time guidance, and tailored learning experiences. Each tutor is a seasoned professional in DevOps, Docker, and Kubernetes.

Easy Refunds and Tutor Replacement

Gritty Tech prioritizes your satisfaction. If you're unsatisfied, we offer a no-hassle refund policy. Want a different tutor? We offer tutor replacements swiftly, without affecting your learning journey.

Flexible Payment Plans

Whether you prefer monthly billing or paying session-wise, Gritty Tech makes it easy. Our flexible plans are designed to suit every learner’s budget and schedule.

Practical, Hands-On Learning

Our DevOps with Docker and Kubernetes coaching focuses on real-world projects. You'll learn to set up CI/CD pipelines, containerize applications, deploy using Kubernetes, and manage cloud-native applications effectively.

Key Benefits of Learning DevOps with Docker and Kubernetes

Streamlined Development: Improve collaboration between development and operations teams.

Scalability: Deploy applications seamlessly across cloud platforms.

Automation: Minimize manual tasks with scripting and orchestration.

Faster Delivery: Enable continuous integration and continuous deployment.

Enhanced Security: Learn secure deployment techniques with containers.

Job-Ready Skills: Gain competencies that top tech companies are actively hiring for.

Curriculum Overview

Our DevOps with Docker and Kubernetes coaching covers a wide array of modules that cater to both beginners and experienced professionals:

Module 1: Introduction to DevOps Principles

DevOps lifecycle

CI/CD concepts

Collaboration and monitoring

Module 2: Docker Fundamentals

Containers vs. virtual machines

Docker installation and setup

Building and managing Docker images

Networking and volumes

Module 3: Kubernetes Deep Dive

Kubernetes architecture

Pods, deployments, and services

Helm charts and configurations

Auto-scaling and rolling updates

Module 4: CI/CD Integration

Jenkins, GitLab CI, or GitHub Actions

Containerized deployment pipelines

Monitoring tools (Prometheus, Grafana)

Module 5: Cloud Deployment

Deploying Docker and Kubernetes on AWS, Azure, or GCP

Infrastructure as Code (IaC) with Terraform or Ansible

Real-time troubleshooting and performance tuning
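The auto-scaling topic in Module 3 can be made concrete with the rule the Kubernetes Horizontal Pod Autoscaler documents: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). A simplified Python sketch; the min/max defaults here are illustrative, and the real controller also handles missing metrics, pod readiness and stabilization windows.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90, 60))   # 6  (CPU 50% over target: scale up)
print(desired_replicas(4, 62, 60))   # 4  (within tolerance: no change)
print(desired_replicas(4, 30, 60))   # 2  (half the target load: scale down)
print(desired_replicas(8, 300, 60))  # 10 (capped at max_replicas)
```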

Who Should Take This Coaching?

The DevOps with Docker and Kubernetes coaching program is ideal for:

Software Developers

System Administrators

Cloud Engineers

IT Students and Graduates

Anyone transitioning into DevOps roles

Whether you're a beginner or a professional looking to upgrade your skills, this coaching offers tailored learning paths to meet your career goals.

What Makes Gritty Tech Different?

Personalized Mentorship

Unlike automated video courses, our live sessions with tutors ensure all your queries are addressed. You'll receive personalized feedback and career guidance.

Career Support

Beyond just training, we assist with resume building, interview preparation, and job placement resources so you're confident in entering the job market.

Lifetime Access

Enrolled students receive lifetime access to updated materials and recorded sessions, helping you stay up to date with evolving DevOps practices.

Student Success Stories

Thousands of learners across continents have transformed their careers through our DevOps with Docker and Kubernetes coaching. Many have secured roles as DevOps Engineers, Site Reliability Engineers (SRE), and Cloud Consultants at leading companies.

Their success is a testament to the effectiveness and impact of our training approach.

FAQs About DevOps with Docker and Kubernetes Coaching

What is DevOps with Docker and Kubernetes coaching?

DevOps with Docker and Kubernetes coaching is a structured learning program that teaches you how to integrate Docker containers and manage them using Kubernetes within a DevOps lifecycle.

Why should I choose Gritty Tech for DevOps with Docker and Kubernetes coaching?

Gritty Tech offers experienced mentors, practical training, flexible payments, and global exposure, making it the ideal choice for DevOps with Docker and Kubernetes coaching.

Is prior experience needed for DevOps with Docker and Kubernetes coaching?

No. While prior experience helps, our coaching is structured to accommodate both beginners and professionals.

How long does the DevOps with Docker and Kubernetes coaching program take?

The average duration is 8 to 12 weeks, depending on your pace and session frequency.

Will I get a certificate after completing the coaching?

Yes. A completion certificate is provided, which adds value to your resume and validates your skills.

What tools will I learn in DevOps with Docker and Kubernetes coaching?

You’ll gain hands-on experience with Docker, Kubernetes, Jenkins, Git, Terraform, Prometheus, Grafana, and more.

Are job placement services included?

Yes. Gritty Tech supports your career with resume reviews, mock interviews, and job assistance services.

Can I attend DevOps with Docker and Kubernetes coaching part-time?

Absolutely. Sessions are scheduled flexibly, including evenings and weekends.

Is there a money-back guarantee for DevOps with Docker and Kubernetes coaching?

Yes. If you’re unsatisfied, we offer a simple refund process within a stipulated period.

How do I enroll in DevOps with Docker and Kubernetes coaching?

You can register through the Gritty Tech website. Our advisors are ready to assist you with the enrollment process and payment plans.

Conclusion

Choosing the right platform for DevOps with Docker and Kubernetes coaching can define your success in the tech world. Gritty Tech offers a powerful combination of affordability, flexibility, and expert-led learning. Our commitment to quality education, backed by global tutors and personalized mentorship, ensures you gain the skills and confidence needed to thrive in today’s IT landscape.

Invest in your future today with Gritty Tech — where learning meets opportunity.

0 notes