#Extract Wayfair product utilizing BeautifulSoup and Python

Explore tagged Tumblr posts

Text

How Customer Reviews Scraping Improves Efficiency and Business Growth?

In the age of digital commerce, consumers are constantly seeking ways to make informed purchasing decisions. Whether it's finding the best deals or tracking price fluctuations over time, accessing accurate and up-to-date data is crucial. Enter web scraping—a powerful technique that provides invaluable insights into the vast landscape of online retail. In this article, we'll explore how web scraping data insights can revolutionize the process of accessing Wayfair price history, empowering consumers with the knowledge they need to make informed decisions.

Understanding Web Scraping

Before delving into its application in accessing Wayfair price history, let's briefly understand what web scraping entails. Web scraping involves extracting data from websites, typically in an automated fashion, using specialized tools or programming scripts. These tools navigate through the structure of web pages, gathering information such as product details, prices, reviews, and more.

The Importance of Price History

Price history serves as a valuable resource for consumers, enabling them to track fluctuations in product prices over time. By analyzing historical data, shoppers can identify patterns, anticipate price trends, and determine the best time to make a purchase. This is particularly relevant in the realm of online retail, where prices can vary widely and change frequently due to factors like demand, competition, and promotions.

Leveraging Web Scraping for Wayfair Price History

Wayfair, one of the largest online destinations for home goods and furniture, offers a vast array of products at competitive prices. However, accessing historical pricing information on Wayfair's platform can be challenging through conventional means. This is where web scraping comes into play, providing a streamlined solution for gathering and analyzing price data.

By utilizing web scraping techniques, consumers can extract price information from Wayfair's website and compile it into structured datasets. These datasets can then be analyzed to uncover valuable insights, such as trends in pricing, seasonal fluctuations, and the impact of promotions or sales events. Moreover, web scraping enables users to compare prices across different time periods, products, or sellers, empowering them to make informed decisions and secure the best possible deals.

Tools and Techniques for Web Scraping

Several tools and techniques are available for web scraping, ranging from simple browser extensions to sophisticated programming libraries. For accessing Wayfair price history, Python-based libraries such as BeautifulSoup and Scrapy are popular choices among web scraping enthusiasts. These libraries provide robust capabilities for navigating web pages, extracting data, and storing it in a structured format for analysis.

Additionally, specialized web scraping services and platforms offer turnkey solutions for extracting price data from Wayfair and other e-commerce websites. These services often feature user-friendly interfaces, pre-built scraping modules, and advanced analytics capabilities, making them accessible to users with varying levels of technical expertise.

Ethical Considerations and Best Practices

While web scraping can provide valuable insights into Wayfair price history, it's essential to adhere to ethical guidelines and respect the terms of service of the websites being scraped. Engaging in excessive or abusive scraping behavior can strain server resources, disrupt website functionality, and potentially violate legal regulations.

To mitigate these risks, practitioners should implement rate limiting mechanisms, respect robots.txt directives, and obtain explicit permission when necessary. Additionally, it's crucial to handle scraped data responsibly, ensuring compliance with data privacy regulations and protecting sensitive information.

Conclusion

In conclusion, web scraping data insights offer a powerful means of accessing Wayfair price history and gaining a deeper understanding of online retail dynamics. By harnessing the capabilities of web scraping tools and techniques, consumers can navigate the complexities of e-commerce, track price fluctuations, and make informed purchasing decisions. However, it's essential to approach web scraping responsibly, respecting ethical considerations and legal boundaries to ensure a fair and sustainable online ecosystem. With the right tools and practices in place, web scraping opens up a world of possibilities for uncovering valuable insights and maximizing savings in the digital marketplace.

0 notes

Quote

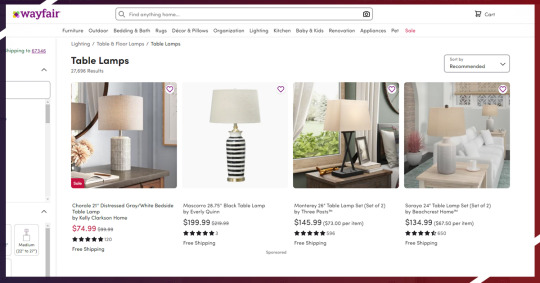

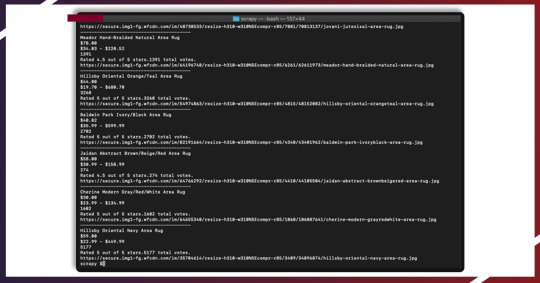

Introduction In this blog, we will show you how we Extract Wayfair product utilizing BeautifulSoup and Python in an elegant and simple manner. This blog targets your needs to start on a practical problem resolving while possession it very modest, so you need to get practical and familiar outcomes fast as likely. So the main thing you need to check that we have installed Python 3. If don’t, you need to install Python 3 before you get started. pip3 install beautifulsoup4 We also require the library's lxml, soupsieve, and requests to collect information, fail to XML, and utilize CSS selectors. Mount them utilizing. pip3 install requests soupsieve lxml When installed, you need to open the type in and editor. # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests Now go to Wayfair page inspect and listing page the details we can need. It will look like this. wayfair-screenshot Let’s get back to the code. Let's attempt and need data by imagining we are a browser like this. # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') Save scraper as scrapeWayfais.py If you route it python3 scrapeWayfair.py The entire HTML page will display. Now, let's utilize CSS selectors to acquire the data you need. To peruse that, you need to get back to Chrome and review the tool. wayfair-code We observe all the separate product details are checked with the period ProductCard-container. We scrape this through the CSS selector '.ProductCard-container' effortlessly. So here you can see how the code will appear like. # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.ProductCard-container'): try: print('----------------------------------------') print(item) except Exception as e: #raise e print('') This will print out all the substance in all the fundamentals that contain the product information. code-1 We can prefer out periods inside these file that comprise the information we require. We observe that the heading is inside a # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.ProductCard-container'): try: print('----------------------------------------') #print(item) print(item.select('.ProductCard-name')[0].get_text().strip()) print(item.select('.ProductCard-price--listPrice')[0].get_text().strip()) print(item.select('.ProductCard-price')[0].get_text().strip()) print(item.select('.pl-ReviewStars-reviews')[0].get_text().strip()) print(item.select('.pl-VisuallyHidden')[2].get_text().strip()) print(item.select('.pl-FluidImage-image')[0]['src']) except Exception as e: #raise e print('') If you route it, it will publish all the information. code-2 Yeah!! We got everything. If you need to utilize this in creation and need to scale millions of links, after that you need to find out that you will need IP blocked effortlessly by Wayfair. In such case, utilizing a revolving service proxy to replace IPs is required. You can utilize advantages like API Proxies to mount your calls via pool of thousands of inhabited proxies. If you need to measure the scraping speed and don’t need to fix up infrastructure, you will be able to utilize our Cloud-base scraper RetailGators.com to effortlessly crawl millions of URLs quickly from our system. If you are looking for the best Scraping Wayfair Products with Python and Beautiful Soup, then you can contact RetailGators for all your queries.

source code: https://www.retailgators.com/scraping-wayfair-products-with-python-and-beautiful-soup.php

#Scraping Wayfair Products with Python and Beautiful Soup#Scraping Wayfair Products with Python#Extract Wayfair product utilizing BeautifulSoup and Python

0 notes

Link

Here, we will see how to scrape Wayfair products with Python & BeautifulSoup easily and stylishly.

This blog helps you get started on real problem solving whereas keeping that very easy so that you become familiar as well as get real results as quickly as possible.

The initial thing we want is to ensure that we have installed Python 3 and if not just install it before proceeding any further.

After that, you may install BeautifulSoup using

install BeautifulSoup

pip3 install beautifulsoup4

We would also require LXML, library’s requests, as well as soupsieve for fetching data, break that down to the XML, as well as utilize CSS selectors. Then install them with:

pip3 install requests soupsieve lxml

When you install it, open the editor as well as type in.

s# -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests

Now go to the listing page of Wayfair products to inspect data we could get.

That is how it will look:

Now, coming back to our code, let’s get the data through pretending that we are the browser like that.

# -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml')

Then save it as a scrapeWayfair.py.

In case, you run that.

python3 scrapeWayfair.py

You will get the entire HTML page.

Now, it’s time to utilize CSS selectors for getting the required data. To do it, let’s use Chrome as well as open an inspect tool.

We observe that all individual products data are controlled within a class ‘ProductCard-container.’ We could scrape this using CSS selector ‘.ProductCard-container’ very easily. Therefore, let’s see how the code will look like:

# -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.ProductCard-container'): try: print('----------------------------------------') print(item) except Exception as e: #raise e print('')

It will print the content of all the elements, which hold the product’s data.

Now, we can choose classes within these rows, which have the required data. We observe that a title is within the

# -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.wayfair.com/rugs/sb0/area-rugs-c215386.html' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.ProductCard-container'): try: print('----------------------------------------') #print(item) print(item.select('.ProductCard-name')[0].get_text().strip()) print(item.select('.ProductCard-price--listPrice')[0].get_text().strip()) print(item.select('.ProductCard-price')[0].get_text().strip()) print(item.select('.pl-ReviewStars-reviews')[0].get_text().strip()) print(item.select('.pl-VisuallyHidden')[2].get_text().strip()) print(item.select('.pl-FluidImage-image')[0]['src']) except Exception as e: #raise e print('')

In case, you run that, it would print all the information.

And that’s it!! We have done that!

If you wish to utilize this in the production as well as wish to scale it to thousand links, you will discover that you would get the IP blocked very easily with Wayfair. With this scenario, utilizing rotating proxy services for rotating IPs is nearly a must. You may utilize the services including Proxies API for routing your calls using the pool of millions of domestic proxies.

In case, you wish to scale crawling speed as well as don’t wish to set the infrastructure, then you can utilize our Wayfair data crawler to easily scrape thousands of URLs with higher speed from the network of different crawlers. For more information, contact us!

0 notes

Text

How Customer Reviews Scraping Improves Efficiency and Business Growth?

In the age of digital commerce, consumers are constantly seeking ways to make informed purchasing decisions. Whether it's finding the best deals or tracking price fluctuations over time, accessing accurate and up-to-date data is crucial. Enter web scraping—a powerful technique that provides invaluable insights into the vast landscape of online retail. In this article, we'll explore how web scraping data insights can revolutionize the process of accessing Wayfair price history, empowering consumers with the knowledge they need to make informed decisions.

Understanding Web Scraping

Before delving into its application in accessing Wayfair price history, let's briefly understand what web scraping entails. Web scraping involves extracting data from websites, typically in an automated fashion, using specialized tools or programming scripts. These tools navigate through the structure of web pages, gathering information such as product details, prices, reviews, and more.

The Importance of Price History

Price history serves as a valuable resource for consumers, enabling them to track fluctuations in product prices over time. By analyzing historical data, shoppers can identify patterns, anticipate price trends, and determine the best time to make a purchase. This is particularly relevant in the realm of online retail, where prices can vary widely and change frequently due to factors like demand, competition, and promotions.

Leveraging Web Scraping for Wayfair Price History

Wayfair, one of the largest online destinations for home goods and furniture, offers a vast array of products at competitive prices. However, accessing historical pricing information on Wayfair's platform can be challenging through conventional means. This is where web scraping comes into play, providing a streamlined solution for gathering and analyzing price data.

By utilizing web scraping techniques, consumers can extract price information from Wayfair's website and compile it into structured datasets. These datasets can then be analyzed to uncover valuable insights, such as trends in pricing, seasonal fluctuations, and the impact of promotions or sales events. Moreover, web scraping enables users to compare prices across different time periods, products, or sellers, empowering them to make informed decisions and secure the best possible deals.

Tools and Techniques for Web Scraping

Several tools and techniques are available for web scraping, ranging from simple browser extensions to sophisticated programming libraries. For accessing Wayfair price history, Python-based libraries such as BeautifulSoup and Scrapy are popular choices among web scraping enthusiasts. These libraries provide robust capabilities for navigating web pages, extracting data, and storing it in a structured format for analysis.

Additionally, specialized web scraping services and platforms offer turnkey solutions for extracting price data from Wayfair and other e-commerce websites. These services often feature user-friendly interfaces, pre-built scraping modules, and advanced analytics capabilities, making them accessible to users with varying levels of technical expertise.

Ethical Considerations and Best Practices

While web scraping can provide valuable insights into Wayfair price history, it's essential to adhere to ethical guidelines and respect the terms of service of the websites being scraped. Engaging in excessive or abusive scraping behavior can strain server resources, disrupt website functionality, and potentially violate legal regulations.

To mitigate these risks, practitioners should implement rate limiting mechanisms, respect robots.txt directives, and obtain explicit permission when necessary. Additionally, it's crucial to handle scraped data responsibly, ensuring compliance with data privacy regulations and protecting sensitive information.

Conclusion

In conclusion, web scraping data insights offer a powerful means of accessing Wayfair price history and gaining a deeper understanding of online retail dynamics. By harnessing the capabilities of web scraping tools and techniques, consumers can navigate the complexities of e-commerce, track price fluctuations, and make informed purchasing decisions. However, it's essential to approach web scraping responsibly, respecting ethical considerations and legal boundaries to ensure a fair and sustainable online ecosystem. With the right tools and practices in place, web scraping opens up a world of possibilities for uncovering valuable insights and maximizing savings in the digital marketplace.

1 note

·

View note

Quote

Introduction Let’s observe how we may extract Amazon’s Best Sellers Products with Python as well as BeautifulSoup in the easy and sophisticated manner. The purpose of this blog is to solve real-world problems as well as keep that easy so that you become aware as well as get real-world results rapidly. So, primarily, we require to ensure that we have installed Python 3 and if not, we need install that before making any progress. Then, you need to install BeautifulSoup with: pip3 install beautifulsoup4 We also require soupsieve, library's requests, and LXML for extracting data, break it into XML, and also utilize the CSS selectors as well as install that with:. pip3 install requests soupsieve lxml Whenever the installation is complete, open an editor to type in: # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests After that, go to the listing page of Amazon’s Best Selling Products and review data that we could have. See how it looks below. wayfair-screenshot After that, let’s observe the code again. Let’s get data by expecting that we use a browser provided there : # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') Now, it’s time to save that as scrapeAmazonBS.py. If you run it python3 scrapeAmazonBS.py You will be able to perceive the entire HTML page. Now, let’s use CSS selectors to get the necessary data. For doing that, let’s utilize Chrome again as well as open the inspect tool. wayfair-code We have observed that all the individual products’ information is provided with the class named ‘zg-item-immersion’. We can scrape it using CSS selector called ‘.zg-item-immersion’ with ease. So, the code would look like : # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.zg-item-immersion'): try: print('----------------------------------------') print(item) except Exception as e: #raise e print('') This would print all the content with all elements that hold products’ information. code-1 Here, we can select classes within the rows that have the necessary data. # -*- coding: utf-8 -*- from bs4 import BeautifulSoup import requests headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'} url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203' response=requests.get(url,headers=headers) soup=BeautifulSoup(response.content,'lxml') for item in soup.select('.zg-item-immersion'): try: print('----------------------------------------') print(item) print(item.select('.p13n-sc-truncate')[0].get_text().strip()) print(item.select('.p13n-sc-price')[0].get_text().strip()) print(item.select('.a-icon-row i')[0].get_text().strip()) print(item.select('.a-icon-row a')[1].get_text().strip()) print(item.select('.a-icon-row a')[1]['href']) print(item.select('img')[0]['src']) except Exception as e: #raise e print('') If you run it, that would print the information you have. code-2 That’s it!! We have got the results. If you want to use it in production and also want to scale millions of links then your IP will get blocked immediately. With this situation, the usage of rotating proxies for rotating IPs is a must. You may utilize services including Proxies APIs to route your calls in millions of local proxies. If you want to scale the web scraping speed and don’t want to set any individual arrangement, then you may use RetailGators’ Amazon web scraper for easily scraping thousands of URLs at higher speeds.

#Scraping Amazon Best-Seller lists with Python#extract Amazon’s Best Sellers Products with Python#Amazon’s Best Selling Products

0 notes