#Files with no machine-readable source


Text

Akihabara_Maids.JPG

#wikimedia commons#2000s#2006#Akihabara Station Electric Town entrance south front street#Maid café maids in promotional activities in Japan#Maids in Akihabara#Maid cafés in Akihabara#2006 in Akihabara#July 2006 in Tokyo#Photographs by Ricky G. Willems#Attribution only license#Media missing infobox template#Files in need of review (sources)#Files in need of review (sources)/Attribution#Files with no machine-readable author#Files with no machine-readable source


Text

> // Shrouded in leaves and stems; looks like I've fooled you again!

Hellooo! Welcome to definitely not the only Tails blog. Possibly the only one with a questionable level of sanity and energy drinks. And strange posting hours. Uni wants me dead. Help.

I hear there's a community. Okay. Not hear. I definitely hacked into it at some point so I know there's a community. Join us. We're getting soft tacos later.

Read More.

> // name = BOUNDARIES

> // Post Frontiers AU, primarily focused on the after effects of the Cyber Corruption. Any blogs, iterations, or worlds are free to interact. I like interaction. Keeps me sane.

> // No NSFW. Should be obvious. Any asks I deem strange have the chance of not being responded to, deleted, and/or blocked.

> // Not the world's biggest fan of shipping in that context. Would prefer it be kept to a minimum or none at all. Unless it's making fun of Star, in which case I will gladly join in.

> // This may be updated as time goes. Depends on how I feel about all of you. I might laugh at you though.

> // name = SYSTEM FILES

type = sonicStar @starslastspark

type = sageSatellite @techfromthestars

> // name = TAGGED FOLDERS

> // tails('Argument') — Answering questions. Derived from the term argument in programming, which gives further information to the code. You are the code, I am the program.

> // tails('sourceCode') — My yaps. Derived from the term source code in programming, which is human-readable code translated to machine-readable code.

> // tails('Repository') — Reblogs. Derived from the term repository in programming; essentially a specific location where all information related to a project can be kept in one place.

>// tails('Function') — Lore. Derived from the term function in programming; functions carry out whatever task directed of them.

#sonic the hedgehog#sth#sonic#sonic rp#miles prower#miles tails prower#tails the fox#sth au#sth fandom#tails('Argument')#tails('sourceCode')#tails('Repository')#tails('Function')


Text

Roads and Traveling

By JimChampion - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=135565344

Roads and pathways go back very far in human history, to about 10,000 BCE. Some might have started out as animal trails that were used over and over, widening until they left their marks on the landscape; others were engineered and covered with paving or logs. Some were combined with rivers to make travel faster and able to carry more, and some were dictated by rulers of empires. Some are nothing more than sunken paths now; others are still in use.

By Adam37 - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=19961082

The oldest extant roads are sunken paths, many of which can be found in England as there is a tradition of maintaining public rights of way. Many of these paths connect farms to each other and also cross the country to reach important locations, such as temples and cities. One of the oldest of these is Harrow Way, or the Old Way, which connected what is now Seaton in Devon to Dover in Kent. The Old Way can be dated to somewhere between 600-450 BCE, but is believed to be much older, going back into the Stone Age.

By John M, CC BY-SA 2.0, https://commons.wikimedia.org/w/index.php?curid=15868070

Roads paved with wood come in two types. Corduroy roads are made of logs laid across the roadway, especially across areas that tend to get extremely wet, like low-lying areas and swamps. Plank roads are a newer take on that concept, made with planks instead of whole logs. One of the oldest wood roads that still exists is the Corlea Trackway in Ireland, which dates to about 148 BCE and is the largest one found in Europe.

By Original creator: Mossmaps. Corrections according to Oxford Atlas of World History 2002, The Times Atlas of World History (1989), Philip's Atlas of World History (1999) by पाटलिपुत्र (talk) - This file was derived from: The Achaemenid Empire at its Greatest Extent.jpg, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=73745174

Darius I (Darius the Great) of the Achaemenid Empire, who reigned from 522-486 BCE, had the Royal Road built during his reign. Some parts of it appear to follow the roads of the Assyrian kings, who reigned from the 21st-7th century BCE, beginning with a city-state and gradually expanding into an empire; the eastern parts of the roadway seem to connect points that were important to them rather than taking the most direct or easiest path. Darius did improve the roads, though, and they were later improved again by the Romans. Mounted couriers were expected to cover the 2,699 km (1,677 miles) from Susa to Sardis in 9 days, a trip that took 90 days on foot, or approximately 30 hours by vehicle today. It was primarily a 'post road', one used to transport mail rather than for the mass transportation of people and goods.

By No machine-readable author provided. Jan Kronsell assumed (based on copyright claims). - No machine-readable source provided. Own work assumed (based on copyright claims)., Public Domain, https://commons.wikimedia.org/w/index.php?curid=781888

In the Americas, many trails were blazed, or marked so that others could follow, quite early in history, possibly beginning by following ridge lines or large game animal paths, which followed dry ground through the forests between grazing lands and salt licks. One of the largest of these trails still extant is the Natchez Trace, or Old Natchez Trace, which runs between Nashville, Tennessee, and Natchez, Mississippi, linking the Cumberland, Tennessee, and Mississippi Rivers and spanning 710 km (440 miles). Many of the trails were co-opted by European colonizers to explore and expand through the continent.

By Manco Capac - self-made from the images on this and this and this pages and mainly from the image in the book Inca Road System by John Hyslop., CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=3878163

The Inca road system was the most extensive and advanced transportation system in the Americas. It comprised around 39,900 km (24,800 miles) of roads, many of them paved, including two major north-south routes with many branches cut into the mountainous territory the Inca ruled over in the Andes. The best known part of this road system is the Trail to Machu Picchu. Parts of the road system were built by cultures that lived in the area before the Inca ruled over it, especially the Wari culture, who lived there from about 500-1000 CE.

By DS28 - File:Roman Empire 125 general map.SVG, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=68002775

The Roman roads, perhaps the best known early roads, were built from about 300 BCE through the fall of the Western Roman Empire, about 400 CE. They were standardized, with those standards laid down in the Law of the Twelve Tables from about 450 BCE, to 8 Roman feet (~2.37 m) wide, double that around curves. In rural areas, the width was 12 Roman feet, allowing two standard-width carts to pass each other while pedestrian traffic continued without interference. These tables also required private landowners to allow travelers to use their land if the roads were in disrepair, so durable roads became a very important consideration, as did making them straight to use the least amount of materials. At their peak, the Roman roads consisted of at least 29 great military highways radiating from Rome, 327 great roads that connected provinces, and many smaller roads, together comprising more than 400,000 km (about 250,000 miles), with over 80,500 km (50,000 miles) stone paved. Many of these are still used, being paved over with modern roads.

By Whole_world_-land_and_oceans_12000.jpg: NASA/Goddard Space Flight Center; derivative work: Splette (talk) - Whole_world-_land_and_oceans_12000.jpg, Public Domain, https://commons.wikimedia.org/w/index.php?curid=10449197 and By Nekitarc - Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=43114716

In China, many roads were dedicated to trade routes like the Silk Road, which began in the Han Dynasty (207 BCE-220 CE). They focused primarily on the safety of the route to make it as lucrative as it could be, so major projects like extending the Great Wall of China, which was started during the 7th century BCE, were undertaken. The Silk Road shortened the Eurasian Steppe trail, which had been used for about 2000 years, from 10,000 km (6,200 miles) to 6,400 km (4,000 miles). Other roads in China that are worth noting are the Gallery Roads through remote mountains that are made of wooden planks secured to holes drilled into cliff sides in the Qin Mountains, which were built starting around the 4th century BCE and are still in use.


Text

Since I enjoy collecting things (big surprise) this year I've been working on collecting every Dreamcatcher music show performance in a quality that doesn't suck ass (aka not the seven pixels that YouTube compresses the official uploads down to). It went pretty well!

Pseudo research report under the cut (because I'm a massive nerd).

Scope

I started this project with the goal of collecting a full set of "music show performances". I didn't realise quite how ambiguous that definition is. After some deliberation I settled on the following as constituting "regular" performances:

Show Music Core

Inkigayo

The Show

Show Champion

M! Countdown

Music Bank

Simply K-Pop

Everything else I classified as misc. Most notably I left K-Force Special Show in the misc category despite there being quite a few performances on that show in the early days. This mostly came down to how I'm storing the files — I've organised the regular ones by era, but the K-Force Special Show performances frequently happened in between comebacks making them difficult to sort in that fashion. That and difficulties in ascertaining whether I do actually have all of them.

Methodology

The first step in collecting anything is finding out what you need to collect. I was surprised at how difficult it actually is to get a complete list of all of a group's music show performances. There are some fan sites which do a good job of presenting a lot of well categorised individual links to Dreamcatcher's appearances but I was hoping for something more "from the source" (aka the music shows themselves) and ideally machine readable to cross check that nothing is missing, since, for example, a collection of links to all official Inkigayo performance uploads is not a list of all Inkigayo performances (some never got official uploads). I ended up finding /r/kpop's music shows wiki fit my needs the most. The information is all in tables and sorted by music show and date which is what I wanted.

Unfortunately, the main tables of /r/kpop's music show wikis only list debuts and comebacks, so I had to index and search all the individual pages to find what I was looking for. This involved spending a couple of afternoons opening up nearly 2,000 tabs of Reddit* and then downloading them using the SingleFile browser extension (Reddit rate limits you quite harshly and so I had to open a bunch of tabs, check they loaded, wait, and then repeat, which slowed it down a lot). While maybe a little overkill I did this for multiple reasons. Firstly, Reddit's search sucks, and I didn't trust it to find all instances of what I was looking for over so many pages. In contrast Everything's in-memory file content index beta is really good. But secondly, this is much more extensible, and now I can find any other group's performances as well (between 2017** and 2023).

*Reddit does not archive Simply K-Pop well at all (which caused some initial confusion), but the official Arirang website works fine as an alternative (albeit one that is somehow even slower than Reddit at opening new pages).

**Reddit's music show wiki pages start right before 2017. This is very convenient for a Dreamcatcher archivist, but, unfortunately for me, curiosity got the better of me and I did venture further out into the unknown. For 2014-2017 I have some scattered archives from various official websites, Twitter accounts, and Korean catch up services. I'm less confident in my music show data for this period in the general case but because the rough start and end dates for the two Minx promotion cycles are known I am confident enough that all the gaps I have in there are genuine gaps and that I'm not missing a performance date.
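The search step described above could be approximated without a dedicated indexing tool. Here is a minimal sketch, assuming the saved pages sit in one folder as `.html` files; the function names, folder layout, and search term are hypothetical and not part of the original workflow. It strips tags with the standard library and reports which saved pages mention a group.

```python
from html.parser import HTMLParser
from pathlib import Path


class _TextExtractor(HTMLParser):
    """Collects the visible text of an HTML page, skipping script/style."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def page_text(html):
    """Return the page's visible text with whitespace collapsed."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())


def find_mentions(folder, term):
    """Return names of saved .html pages whose text mentions term (case-insensitive)."""
    needle = term.casefold()
    hits = []
    for path in sorted(Path(folder).glob("*.html")):
        text = page_text(path.read_text(encoding="utf-8", errors="replace"))
        if needle in text.casefold():
            hits.append(path.name)
    return hits
```

Calling `find_mentions("saved_pages", "Dreamcatcher")` (with a hypothetical folder name) would list every saved page to check by hand. A plain substring match like this will also catch unrelated mentions, so it narrows the pile rather than replacing a manual pass.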

Once I had my wishlist I then had to go out and, you know, find them. And figure out what "best quality" actually means. I learned a lot about video encoding against my will. I also learned that HEVC is fucking haunted. When multiple sources were available I ranked them as follows:

2160p HEVC > 2160p h264 > 1080p HEVC (one weird edge case I didn't know what to do with) > 1080i h264 > 1080i MPEG2 > WEBDL (usually some kind of web livestream or online catch up service recording) > YouTube

Which I represented in my spreadsheet as:

This should be mostly uncontroversial, however, there is a slight complication in that the 4k broadcast copies are not all native 4k but often upscaled from the 1080 source (I can't find specifics on this as the information, if publicly accessible, would be in Korean, and there is only so much Google Translate can do, but the technical broadcasting details probably aren't public to the extent I am interested in regardless so I'm mostly basing this off information gleaned from secondary sources). As far as I can tell there exist two "grades" of upscales: one of which is upscaled by the network before being pushed out to a UHD channel, and one of which is upscaled by the end user by either recording a HD channel in 4k or running an existing recording through an upscaler. Traditionally upscales aren't very desirable due to providing no real material benefit you couldn't get from playing the 1080 copy on a 4k display yourself. Or, for people with an archival mindset like myself, because ones that actually look a bit crisper introduce "magic" pixels which may or may not have ever existed. See below for an example of this taken to the extreme (note the faces):

No one wants that, right? AND YET SOMEONE THOUGHT THAT WAS SUCH A BIG IMPROVEMENT THEY DELETED EVERYTHING ELSE, AND NOW IT REMAINS THE ONLY COPY I CAN FIND. Monsters!!! Anyway.

While upscales usually aren't desirable, I did come around to the idea that the network upscales deserve their place at the top of the list. There are a couple of points working in their favour: one is that they are, presumably, upscaled much more directly from the source, allowing them to avoid some of the data loss that being encoded into 1080i h264 and then upscaled would cause. The second is that quite a lot of them are encoded directly into HEVC, which makes the confetti and flashing lights look noticeably smoother than the regular deinterlaced 1080i copies. Also, finally, as a touch of personal preference (especially in the case of 4k h264 where the second point doesn't apply), these do come pre-deinterlaced which is nicer for my chosen method of playback. Although some of those points apply to non network upscales too, the "impurity" of them far outweighs any benefit in my eyes, as I have more faith in whatever the network standard is than some random person on the internet trying to "fix" a file.
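For anyone wanting to apply the same preference order programmatically, a minimal sketch follows. The order is taken from the ranking given earlier in this post; the label strings and the `best_source` helper are hypothetical. It also assumes any user-made upscales have already been filtered out by hand, since it does not model the network versus end-user distinction.

```python
# Preference order from the post, best first. The label strings are illustrative.
SOURCE_RANK = [
    "2160p HEVC",
    "2160p h264",
    "1080p HEVC",
    "1080i h264",
    "1080i MPEG2",
    "WEBDL",
    "YouTube",
]
_RANK_INDEX = {label: i for i, label in enumerate(SOURCE_RANK)}


def best_source(candidates):
    """Return the most preferred label among candidates, ignoring unknown labels."""
    known = [c for c in candidates if c in _RANK_INDEX]
    if not known:
        return None
    return min(known, key=_RANK_INDEX.__getitem__)
```

With three copies of one performance labelled `"YouTube"`, `"1080i MPEG2"`, and `"1080i h264"`, `best_source` picks `"1080i h264"`.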

So how did I tell the difference? Also a frustratingly difficult problem, one which applies not just to spotting fake upscales but also to finding real TV recordings instead of lower quality catch up service clips. For the 1080 copies one of the biggest things was that the TV broadcast copies (unfortunately) always come through in 1080i. 1080p is suspicious. It means something has been touched (although sometimes in a way that is forgivable when nothing cleaner exists). File sizes are also a good sanity check. A single song in h264 should be in the 200-400MiB range, MPEG2 is 400MiB+. (For reference YouTube, and other inferior WEBDLs, usually come in at under 100MiB). This is a good reminder of why size does not always equal quality, because, in this scenario, worst to best is 100MiB < 450MiB < 250MiB.
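The file size sanity check can be written down as a small rule of thumb. This is only a sketch using the rough ranges quoted above for a single song at 1080; the function name and the returned labels are hypothetical, and anything outside the quoted ranges is simply flagged for a manual look.

```python
MIB = 1024 * 1024


def size_check(codec, size_bytes):
    """Classify a single-song 1080 file by codec and size, using the post's rough ranges."""
    size_mib = size_bytes / MIB
    if size_mib < 100:
        # YouTube and other web downloads usually land here.
        return "likely web source"
    if codec == "h264":
        return "plausible broadcast" if 200 <= size_mib <= 400 else "check manually"
    if codec == "mpeg2":
        return "plausible broadcast" if size_mib >= 400 else "check manually"
    return "check manually"
```

A 250 MiB h264 file and a 450 MiB MPEG2 file both come back as plausible broadcasts, which is why the size check only makes sense alongside the codec.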

On the 4k side: Music Bank, Show Music Core and Inkigayo are currently broadcast on UHD channels with a UHD variant of their channel names in the top right, which is a good indicator that something was recorded from a UHD source. (Although I've found 1080p copies recorded from UHD channels, so the presence of the logo doesn't mean no downscaling has occurred). Recent "4k" M! Countdown, Show Champion and The Show recordings, in comparison, appear identical in channel watermark to the 1080 copies. This and the fact that the only 4k copies I've found are from a source known to dabble in AI upscales means that I'm not going to trust these are genuine without further proof, and this was the main category of files which I decided against adding to my collection. (Note that The Show was in the past the only show that was broadcast in 4k, first on UMAX UHD and then on SBS F!L UHD, it is only recently that that seems to have stopped (or, no one has been recording it)). I paid less attention to the 4k file sizes than for 1080. 4k HEVC seems to come in pretty reliably at just under 1GiB, but 4k h264 is all over the place due to I think some differing quality profiles between uploaders (a range of 1GiB to nearly 3GiB). The latter is annoying because it means I can't figure out what the "true" recording should be, but I did develop an internal ranking of the trustworthiness of sources which was the tiebreaker when two differently sized 4k h264 recordings existed.

I prioritised collecting existing cuts where applicable (although many were not cut nearly as meticulously frame perfectly as I would like) but there were quite a few where I had to cut them out of full broadcast recordings myself. There were also instances where only the main performance was saved in 4k leaving interviews behind. In those cases I decided to keep the highest quality available for the bits in which it was and store the other segments separately.

Results

Alright, the fun stuff! I have successfully catalogued:

317 Dreamcatcher music show performances

34 Dreamcatcher miscellaneous appearances

46 Minx music show performances

16 Minx miscellaneous appearances

Those links go to mostly complete YouTube representations of what I found with exceptions listed in the descriptions of the playlists. You can also of course find a published version of my beautiful spreadsheet here. Finally, if you've made it this deep I would like to just quietly tell you I'm more than happy to share the originals, but please message me privately as I don't want to publicly host questionably copyrighted content on my good and morally upstanding posting images of dreamcatcher photobooks blog (for legal reasons the entire existence of this collection is a joke).

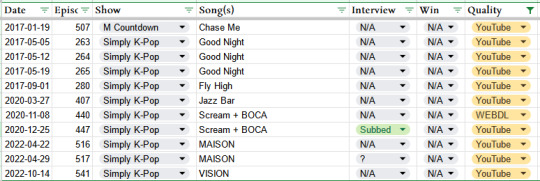

Honestly I'm pretty happy with the completeness of the collection after my first pass. The list of ones I would consider "missing" (aka not good enough quality) is:

It's almost all Simply K-Pop, which makes sense as it seems to not be archived with the same enthusiasm as the others. The missing M! Countdown, meanwhile, does kind of hurt, since it's ruining an otherwise perfect run. I'm out of ideas for now but am not going to give up on it forever, I have seen evidence that proper recordings exist, so I'm sure they have got to resurface eventually.

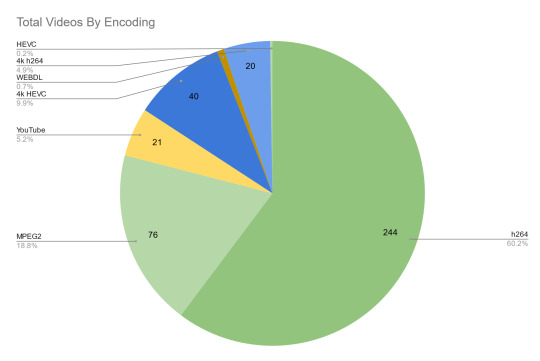

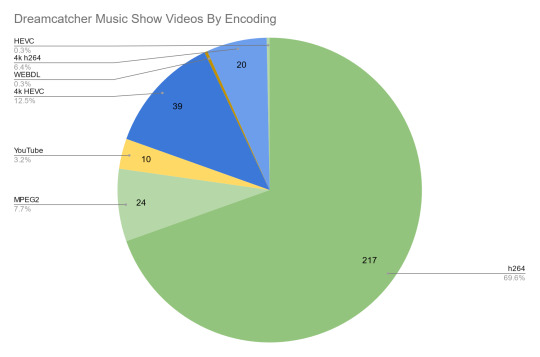

As can be seen in the breakdown for this section the percentage of MPEG2 recordings is also a pretty major victory, with the vast majority being h264:

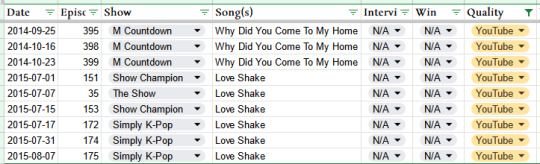

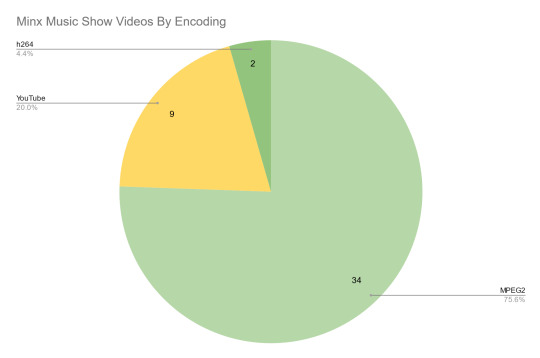

For Minx things look a bit worse:

But then again I hadn't been expecting much to begin with. To be honest, watching all of these to check quality did drive me a little insane. There is a reason I don't own Love Shake and Why Did You Come To My Home, and it's not purely the irresponsible financial decision, it's the thought of spending that much money on something I actually really don't like. Watching Minx performances is amusing for a little while, but man, am I glad Dreamcatcher exists instead.

It's also evident that people were holding to completely different standards even just a few years earlier, as this is where all those MPEG2s in the overall numbers are hiding:

Future Work

While I'm confident I've successfully catalogued the "music show performances" as defined in the scope, I know there is still much more to be discovered under Misc Appearances, as those were filled in not via a predefined wish list but just by what other recordings I happened across in my search for the original goal. The obvious next step would be to be a little more systematic about finding out what I'm missing in that category by scouring some Dreamcatcher specific archives, but because I've already exhausted the full collections of my two main sources of recordings I'm not confident I would uncover many more performances outside of YouTube in that way, which isn't really that appealing.

I'm probably more likely going to do something about my performance outfit images collection which has been slowly but steadily increasing over the years. I'd like to put them into a proper searchable database alongside performance dates, which would have a similar effect of cataloguing the missing performances, but with the visual element that the spreadsheet lacks.

But then again, I also might not :)


Text

Exploring Python: Features and Where It's Used

Python is a versatile programming language that has gained significant popularity in recent times. It's known for its ease of use, readability, and adaptability, making it an excellent choice for both newcomers and experienced programmers. In this article, we'll delve into the specifics of what Python is and explore its various applications.

What is Python?

Python is an interpreted, high-level, general-purpose programming language. Created by Guido van Rossum and released in 1991, Python is designed to prioritize code readability and simplicity, with a clean and minimalistic syntax. It uses indentation to delimit blocks of code, which makes programs easier to write and comprehend.

Key Traits of Python:

Simplicity and Readability: Python code is structured in a way that's easy to read and understand. This reduces the time and effort required for both creating and maintaining software.

Python code example: print("Hello, World!")

Versatility: Python is applicable across various domains, from web development and scientific computing to data analysis, artificial intelligence, and more.

Python code example: import numpy as np

Extensive Standard Library: Python offers an extensive collection of pre-built libraries and modules. These resources provide developers with ready-made tools and functions to tackle complex tasks efficiently.

Python code example: import json

Compatibility Across Platforms: Python is available on multiple operating systems, including Windows, macOS, and Linux. This allows programmers to create and run code seamlessly across different platforms.

Strong Community Support: Python boasts an active community of developers who contribute to its growth and provide support through online forums, documentation, and open-source contributions. This community support makes Python an excellent choice for developers seeking assistance or collaboration.
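To make the readability and standard library points a little more concrete, here is a small sketch. The function name and sample sentence are made up for illustration; the only library used is `collections`, which ships with Python.

```python
from collections import Counter


def most_common_words(text, n=3):
    """Return the n most frequent lowercase words in text with their counts."""
    words = text.lower().split()
    return Counter(words).most_common(n)


print(most_common_words("the cat saw the dog and the cat ran", n=2))
# prints [('the', 3), ('cat', 2)]
```

The whole task fits in a few lines because counting is handled by a ready-made standard library class rather than hand-written loops.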

Where is Python Utilized?

Due to its versatility, Python is utilized in various domains and industries. Some key areas where Python is widely applied include:

Web Development: Python is highly suitable for web development tasks. It offers powerful frameworks like Django and Flask, simplifying the process of building robust web applications. The simplicity and readability of Python code enable developers to create clean and maintainable web applications efficiently.

Data Science and Machine Learning: Python has become the go-to language for data scientists and machine learning practitioners. Its extensive libraries such as NumPy, Pandas, and SciPy, along with specialized libraries like TensorFlow and PyTorch, facilitate a seamless workflow for data analysis, modeling, and implementing machine learning algorithms.

Scientific Computing: Python is extensively used in scientific computing and research due to its rich scientific libraries and tools. Libraries like SciPy, Matplotlib, and NumPy enable efficient handling of scientific data, visualization, and numerical computations, making Python indispensable for scientists and researchers.

Automation and Scripting: Python's simplicity and versatility make it a preferred language for automating repetitive tasks and writing scripts. Its comprehensive standard library empowers developers to automate various processes within the operating system, network operations, and file manipulation, making it popular among system administrators and DevOps professionals.

Game Development: Python's ease of use and availability of libraries like Pygame make it an excellent choice for game development. Developers can create interactive and engaging games efficiently, and the language's simplicity allows for quick prototyping and development cycles.

Internet of Things (IoT): Python variants designed for microcontrollers, such as MicroPython and CircuitPython, make it suitable for developing applications for the Internet of Things. They enable developers to work with sensors, create interactive hardware projects, and connect devices to the internet.
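As an illustration of the automation and scripting use case listed above, the following sketch tidies a folder by moving each file into a subfolder named after its extension. The function name and folder layout are hypothetical, and it assumes no existing file shares a name with one of the extension folders it creates.

```python
from pathlib import Path
import shutil


def sort_by_extension(folder):
    """Move each file in folder into a subfolder named after its extension."""
    root = Path(folder)
    moved = []
    # sorted() takes a snapshot first, so folders created below are not revisited.
    for path in sorted(root.iterdir()):
        if not path.is_file():
            continue
        ext = path.suffix.lstrip(".").lower() or "no_extension"
        target_dir = root / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(path), str(target_dir / path.name))
        moved.append((path.name, ext))
    return moved
```

It relies only on `pathlib` and `shutil` from the standard library, so it needs nothing installed beyond Python itself.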

Python's versatility and simplicity have made it one of the most widely used programming languages across diverse domains. Its clean syntax, extensive libraries, and cross-platform compatibility make it a powerful tool for developers. Whether for web development, data science, automation, or game development, Python proves to be an excellent choice for programmers seeking efficiency and user-friendliness. If you're considering learning a programming language or expanding your skills, Python is undoubtedly worth exploring.


Text


Exploring EX4 and MQ4 Decompiler Tool 2024:

Understanding EX4 Files:

EX4 files are compiled binaries utilized within the MetaTrader 4 (MT4) trading platform. They encapsulate trading strategies, expert advisors, and indicators in a format that the platform can efficiently execute. These files are not designed for human readability, as they are in a machine code format, optimized for rapid and efficient execution within the MT4 environment.

Defining MQ4 Files:

MQ4 files represent the editable source code written in the MQL4 programming language. These files are human-readable and provide the blueprint for trading algorithms and indicators. They allow traders and developers to modify, enhance, and customize trading strategies to meet specific requirements or preferences, offering flexibility and control over the trading process.

Advantages of Converting EX4 to MQ4

Converting EX4 files back into the MQ4 format offers several practical benefits:

Customization and Personalization: Enables users to tailor and modify trading strategies or indicators to better fit their unique trading styles and needs. Customizations might include tweaking parameters, adding new features, or integrating with other systems.

Performance Optimization: Provides an opportunity to refine and enhance the code for improved efficiency and performance. By optimizing the MQ4 code, traders can achieve faster execution times and potentially better trading results.

Educational Insights: Allows for a deeper understanding of the underlying logic and mechanics of trading algorithms. By examining and studying the MQ4 code, users can gain valuable knowledge and insights into how specific trading strategies operate.

#ex4 to mq4#ex4 to mq4 decompiler#ex4 to mq4 converter online#ex5 to mq5#ex4 to mq4 decompiler online free


Text

1. "Search":

Please search for a machine-readable version of the text titled: [insert title]

I am looking for a publicly accessible, full or partial version of the text that is:

Machine-readable (i.e. not image-only scans, not rendered via JavaScript, and not embedded in inaccessible frames),

Hosted on a reliable platform (e.g. Wikisource, Aozora Bunko, Japanese Text Initiative, archive.org),

Preferably includes chapter or section divisions to facilitate reference,

If possible, includes metadata about the manuscript base or edition (e.g. Karasumaru-bon, Kōshōji-bon),

Available in either the original Japanese or in a parallel translation (indicate if you prefer one).

If multiple versions are available, please compare and recommend the one most suited for close reading or term-based search. Include direct links and note any technical limitations (e.g. missing OCR, access restrictions).

2. "Deep Research":

Now that we have identified a suitable version of [title], please search it for the following: Term(s) / Topic(s): [insert keywords or themes, e.g. 「言の葉」, impermanence, Yoshitsune]

Please proceed as follows:

Confirm that the text is technically accessible and searchable.

Search the text thoroughly for occurrences of the specified term(s) or theme(s).

For each occurrence, provide:

The exact location (e.g. chapter or passage number),

A full quotation of the relevant passage,

A brief summary or paraphrase of its meaning and significance in context.

If the term appears repeatedly, note any variation in usage, tone, or function.

If the source is unexpectedly unreadable or incomplete, explain why and suggest a workaround (e.g. alternative editions, downloadable files, or parallel texts).
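Once a machine-readable copy has been found, the search step in the second prompt can also be cross-checked locally. The sketch below assumes a plain-text file whose sections start with lines such as "Chapter 1"; the heading pattern and function name are hypothetical and would need adapting to the edition at hand. It reports the section, line number, and full line for each occurrence of a term.

```python
import re


def find_term(text, term, heading_pattern=r"^Chapter \d+"):
    """Return (heading, line_number, line) for every line containing term."""
    heading_re = re.compile(heading_pattern)
    current = None  # most recent heading seen, or None before the first one
    results = []
    for number, line in enumerate(text.splitlines(), start=1):
        if heading_re.match(line):
            current = line.strip()
        if term in line:
            results.append((current, number, line.strip()))
    return results
```

Comparing this output with the model's answer is a quick way to spot missed or invented occurrences, although exact string matching will not catch spelling variants or themes expressed in other words.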


Text

Python Training in Chandigarh: Unlocking a Future in Programming

In today’s fast-paced digital world, programming has become a core skill across numerous industries. Among all programming languages, Python stands out due to its simplicity, versatility, and powerful capabilities. As a result, Python training has become one of the most sought-after courses for students, professionals, and aspiring developers. In Chandigarh, a city known for its educational institutions and growing IT ecosystem, Python training opens up exciting career opportunities for learners of all levels.

Why Learn Python?

Python is an interpreted, high-level, general-purpose programming language that emphasizes code readability with its clear syntax. It supports multiple programming paradigms, including procedural, object-oriented, and functional programming. Python has grown rapidly in popularity, currently ranking as one of the top programming languages worldwide.

Some of the key reasons to learn Python include:

Ease of learning: Python has a gentle learning curve, making it ideal for beginners.

Versatility: It’s used in web development, data science, artificial intelligence, automation, game development, and more.

Strong community support: Python has a vast library ecosystem and an active community that contributes to open-source tools and frameworks.

High demand: From startups to tech giants like Google, Facebook, and Netflix, Python developers are in high demand globally.

The Growing Demand for Python in India

India's digital transformation has resulted in a booming demand for skilled programmers. Python, being at the center of technologies like machine learning, data analytics, and artificial intelligence, is a critical skill in the current job market. According to recent job market surveys, Python is consistently among the top 3 most requested skills in software development roles.

Industries such as finance, healthcare, education, and e-commerce in India are actively seeking professionals with Python expertise. As the use of big data and automation expands, the demand for Python-trained professionals is expected to rise even more.

Why Choose Chandigarh for Python Training?

Chandigarh, the capital of Punjab and Haryana, is emerging as a technology hub in North India. Known for its well-planned infrastructure and high quality of life, the city is home to several IT companies, startups, and training institutes.

Key reasons to choose Chandigarh for Python training:

High-quality education centers: Chandigarh hosts some of the best training institutes offering Python courses with practical, project-based learning.

Affordable living: Compared to metropolitan cities, Chandigarh offers cost-effective training and living expenses.

Growing IT ecosystem: With IT parks and emerging startups, the city offers internship and job opportunities for learners.

Peaceful environment: The city’s clean and organized environment enhances the learning experience.

What to Expect from a Python Training Program in Chandigarh?

Python training programs in Chandigarh cater to both beginners and advanced learners. Whether you are a student, fresher, or working professional looking to upskill, you can find a suitable course.

Course Structure

Most Python training programs include:

Introduction to Python: Basics of syntax, variables, data types, and control structures.

Functions and Modules: Writing reusable code using functions and importing modules.

Object-Oriented Programming: Concepts such as classes, objects, inheritance, and polymorphism.

File Handling: Reading from and writing to files.

Error Handling: Managing exceptions and debugging.

Libraries and Frameworks: Use of popular libraries like NumPy, Pandas, Matplotlib, and frameworks like Flask or Django.

Database Integration: Connecting Python applications with databases like MySQL or SQLite.

Project Work: Real-world projects that test your understanding and give hands-on experience.

Modes of Training

Institutes offer various modes of learning:

Classroom training: Traditional in-person classes with face-to-face interaction.

Online training: Live or recorded sessions accessible from home.

Weekend batches: Ideal for working professionals.

Fast-track courses: For learners who want to complete the course in a shorter time.

Certifications and Placement Support

Most reputed institutes provide certification upon course completion, which can be a great addition to your resume. Some also offer:

Resume-building assistance

Mock interviews

Placement support or job referrals

Internship opportunities with IT firms in Chandigarh

Career Opportunities After Python Training

After completing Python training, learners can pursue various career paths, such as:

Python Developer: Focused on building software applications using Python.

Web Developer: Using frameworks like Django or Flask to build web applications.

Data Analyst: Analyzing data using Pandas, NumPy, and data visualization tools.

Machine Learning Engineer: Building intelligent systems using libraries like Scikit-learn and TensorFlow.

Automation Engineer: Writing scripts for process automation in business and IT environments.

Backend Developer: Creating server-side logic for mobile and web applications.

Top Institutes for Python Training in Chandigarh

While there are many training providers, here are a few well-regarded Python training institutes in Chandigarh (as of recent trends):

CBitss Technologies

ThinkNEXT Technologies

Webtech Learning

Infowiz Software Solutions

Netmax Technologies

Each of these institutes offers various Python courses, including beginner and advanced levels, along with certification and placement support.

Tips for Choosing the Right Python Course

Check the syllabus: Ensure it covers both basics and advanced topics relevant to your goals.

Trainer experience: Look for instructors with industry experience.

Hands-on projects: Courses should include real-world projects for practical exposure.

Student reviews: Read testimonials and online reviews to gauge course quality.

Demo classes: Attend a trial session if available before enrolling.

Conclusion

Python training in Chandigarh offers a gateway to exciting and diverse career opportunities in the tech industry. Whether you aim to become a developer, data analyst, or machine learning expert, Python is a foundational skill that can set you apart in the competitive job market. With its growing tech scene, quality institutes, and supportive learning environment, Chandigarh is an ideal location to begin or advance your Python programming journey.

Investing in Python training today can pave the way for a dynamic career tomorrow.

0 notes

Text

The Ultimate Roadmap to Web Development – Coding Brushup

In today's digital world, web development is more than just writing code—it's about creating fast, user-friendly, and secure applications that solve real-world problems. Whether you're a beginner trying to understand where to start or an experienced developer brushing up on your skills, this ultimate roadmap will guide you through everything you need to know. This blog also offers a coding brushup for Java programming, shares Java coding best practices, and outlines what it takes to become a proficient Java full stack developer.

Why Web Development Is More Relevant Than Ever

The demand for web developers continues to soar as businesses shift their presence online. According to recent industry data, the global software development market is expected to reach $1.4 trillion by 2027. A well-defined roadmap is crucial to navigate this fast-growing field effectively, especially if you're aiming for a career as a Java full stack developer.

Phase 1: The Basics – Understanding Web Development

Web development is broadly divided into three categories:

Frontend Development: What users interact with directly.

Backend Development: The server-side logic that powers applications.

Full Stack Development: A combination of both frontend and backend skills.

To start your journey, get a solid grasp of:

HTML – Structure of the web

CSS – Styling and responsiveness

JavaScript – Interactivity and functionality

These are essential even if you're focusing on Java full stack development, as modern developers are expected to understand how frontend and backend integrate.

Phase 2: Dive Deeper – Backend Development with Java

Java remains one of the most robust and secure languages for backend development. It’s widely used in enterprise-level applications, making it an essential skill for aspiring Java full stack developers.

Why Choose Java?

Platform independence via the JVM (Java Virtual Machine)

Strong memory management

Rich APIs and open-source libraries

Large and active community

Scalable and secure

If you're doing a coding brushup for Java programming, focus on mastering the core concepts:

OOP (Object-Oriented Programming)

Exception Handling

Multithreading

Collections Framework

File I/O

JDBC (Java Database Connectivity)

Java Coding Best Practices for Web Development

To write efficient and maintainable code, follow these Java coding best practices:

Use meaningful variable names: Improves readability and maintainability.

Follow design patterns: Apply Singleton, Factory, and MVC to structure your application.

Avoid hardcoding: Always use constants or configuration files.

Use Java Streams and Lambda expressions: They can make collection-processing code more concise and readable; measure before assuming a performance gain.

Write unit tests: Use JUnit and Mockito for test-driven development.

Handle exceptions properly: Always use specific catch blocks and avoid empty catch statements.

Optimize database access: Use ORM tools like Hibernate to manage database operations.

Keep methods short and focused: One method should serve one purpose.

Use dependency injection: Leverage frameworks like Spring to decouple components.

Document your code: JavaDoc is essential for long-term project scalability.

A coding brushup for Java programming should reinforce these principles to ensure code quality and performance.

Phase 3: Frameworks and Tools for Java Full Stack Developers

As a full stack developer, you'll need to work with various tools and frameworks. Here’s what your tech stack might include:

Frontend:

HTML5, CSS3, JavaScript

React.js or Angular: Popular JavaScript frameworks

Bootstrap or Tailwind CSS: For responsive design

Backend:

Java with Spring Boot: Most preferred for building REST APIs

Hibernate: ORM tool to manage database operations

Maven/Gradle: For project management and builds

Database:

MySQL, PostgreSQL, or MongoDB

Version Control:

Git & GitHub

DevOps (Optional for advanced full stack developers):

Docker

Jenkins

Kubernetes

AWS or Azure

Learning to integrate these tools efficiently is key to becoming a competent Java full stack developer.

Phase 4: Projects & Portfolio – Putting Knowledge Into Practice

Practical experience is critical. Try building projects that demonstrate both frontend and backend integration.

Project Ideas:

Online Bookstore

Job Portal

E-commerce Website

Blog Platform with User Authentication

Incorporate Java coding best practices into every project. Use GitHub to showcase your code and document the learning process. This builds credibility and demonstrates your expertise.

Phase 5: Stay Updated & Continue Your Coding Brushup

Technology evolves rapidly. A coding brushup for Java programming should be a recurring part of your development cycle. Here’s how to stay sharp:

Follow Java-related GitHub repositories and blogs.

Contribute to open-source Java projects.

Take part in coding challenges on platforms like HackerRank or LeetCode.

Subscribe to newsletters like JavaWorld, InfoQ, or Baeldung.

By doing so, you’ll stay in sync with the latest in the Java full stack developer world.

Conclusion

Web development is a constantly evolving field that offers tremendous career opportunities. Whether you're looking to enter the tech industry or grow as a seasoned developer, following a structured roadmap can make your journey smoother and more impactful. Java remains a cornerstone in backend development, and by following Java coding best practices, engaging in regular coding brushup for Java programming, and mastering both frontend and backend skills, you can carve your path as a successful Java full stack developer.

Start today. Keep coding. Stay curious.

0 notes

Text

Ultimate Guide to Python Compiler

When diving into the world of Python programming, understanding how Python code is executed is crucial for anyone looking to develop, test, or optimize their applications. This process involves using a Python compiler, a vital tool for transforming human-readable Python code into machine-readable instructions. But what exactly is a Python compiler, how does it work, and why is it so important? This guide will break it all down for you in detail, covering everything from the basic principles to advanced usage.

What is a Python Compiler?

A Python compiler is a software tool that translates Python code (written in a human-readable form) into machine code or bytecode that the computer can execute. Unlike C, which is compiled ahead of time to native machine code, Python is usually described as an interpreted language. In practice, the standard implementation first compiles the source into bytecode and an interpreter then executes that bytecode, so Python compilers and interpreters work together to turn the source code into something that can run on your system.

Difference Between Compiler and Interpreter

Before we delve deeper into Python compilers, it’s important to understand the distinction between a compiler and an interpreter. A compiler translates the entire source code into a machine-readable format at once, before execution begins. Once compiled, the program can be executed multiple times without needing to recompile.

On the other hand, an interpreter processes the source code statement by statement, executing each one as it goes instead of producing a standalone executable first. Python, as a high-level language, uses both techniques: it compiles the Python code into an intermediate form (called bytecode) and then interprets that bytecode.
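The two stages can be seen separately with Python's built-in `compile()` and `exec()` functions. This is a small sketch; the source string and the `"<example>"` filename label are made up for illustration.

```python
source = "total = sum(range(5))"

# Stage 1: compile the whole source string into a code object (bytecode).
code_object = compile(source, "<example>", "exec")

# Stage 2: hand the code object to the interpreter, which executes it.
namespace = {}
exec(code_object, namespace)

print(type(code_object).__name__)  # code
print(namespace["total"])          # 10
```

Nothing runs during stage 1; the variable `total` only exists after `exec()` has interpreted the compiled code.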

How Does the Python Compiler Work?

The Python compiler is an essential part of the Python runtime environment. When you write Python code, it first undergoes a compilation process before execution. Here’s a step-by-step look at how it works:

1. Source Code Parsing

The process starts when the Python source code (.py file) is written. The Python interpreter reads this code, parsing it into a data structure called an Abstract Syntax Tree (AST). The AST is a hierarchical tree-like representation of the Python code, breaking it down into different components like variables, functions, loops, and classes.
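The standard library's `ast` module exposes this parsing step, so the tree can be inspected directly. A brief sketch, with a made-up `double` function as the input:

```python
import ast

source = "def double(x):\n    return x * 2\n"

# Parse the source text into an Abstract Syntax Tree.
tree = ast.parse(source)

# The top-level node is a Module; its body holds one FunctionDef.
function_node = tree.body[0]

# Collect the type name of every node in the tree.
node_types = [type(node).__name__ for node in ast.walk(tree)]

print(type(tree).__name__, function_node.name)
print(ast.dump(function_node))
```

The exact text printed by `ast.dump` differs between Python versions, so it is best read as a debugging aid, not a stable format.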

2. Compilation to Bytecode

After parsing the source code, the Python interpreter uses a compiler to convert the AST into bytecode. This bytecode is a lower-level representation of the source code that is platform-independent, meaning it can run on any machine that has a Python interpreter. Bytecode is not human-readable and acts as an intermediate step between the high-level source code and the machine code that runs on the hardware.
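To look at the bytecode itself, the standard `dis` module can disassemble any function. The sketch below uses a hypothetical `add` function; the instruction names it prints depend on the Python version, so no expected output is shown.

```python
import dis


def add(a, b):
    return a + b


# Every function carries its compiled bytecode on its code object.
raw_bytecode = add.__code__.co_code

# dis turns those raw bytes into named instructions.
instructions = [ins.opname for ins in dis.get_instructions(add)]

print(len(raw_bytecode), "bytes of bytecode")
print(instructions)
```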

The bytecode for imported modules is saved in .pyc (Python Compiled) files, which are stored in a special directory named __pycache__. When a module is imported again and its .pyc file is still up to date with the source, Python loads it to speed up startup. If not, it recompiles the source code. A script run directly from the command line is compiled in memory each time, and its bytecode is not cached.
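The caching behaviour can be reproduced by hand with the standard `py_compile` module. This sketch writes a throwaway `hello.py` (a hypothetical file) into a temporary directory and assumes the default cache location has not been overridden.

```python
import py_compile
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    source_path = Path(tmp) / "hello.py"
    source_path.write_text("MESSAGE = 'hi'\n")

    # Compile the file explicitly; the return value is the path of the .pyc.
    pyc_path = Path(py_compile.compile(str(source_path)))

    # Record what we found before the temporary directory is cleaned up.
    in_pycache = pyc_path.parent.name == "__pycache__"
    pyc_exists = pyc_path.exists()
    pyc_suffix = pyc_path.suffix
    print(pyc_path.name)
```

The printed filename includes the interpreter name and version, which is why different Python versions can keep their caches side by side.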

3. Execution by the Python Virtual Machine (PVM)

After the bytecode is generated, it is sent to the Python Virtual Machine (PVM), which executes the bytecode instructions. The PVM is an interpreter that reads and runs the bytecode one instruction at a time. It communicates with the operating system to perform tasks such as memory allocation, input/output operations, and managing hardware resources.
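The idea of a virtual machine stepping through instructions can be illustrated with a toy example. The sketch below is not CPython's implementation: the instruction names `PUSH`, `ADD`, and `MUL` are invented here, and the only thing it shares with the real PVM is the general stack-based design.

```python
def run(program):
    """Execute a list of (opname, argument) pairs on a simple value stack."""
    stack = []
    for opname, arg in program:
        if opname == "PUSH":
            stack.append(arg)
        elif opname == "ADD":
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif opname == "MUL":
            right, left = stack.pop(), stack.pop()
            stack.append(left * right)
        else:
            raise ValueError(f"unknown instruction: {opname}")
    # The final result is whatever is left on top of the stack.
    return stack.pop()


# Toy "bytecode" for the expression (2 + 3) * 4.
program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
result = run(program)
print(result)  # 20
```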

This combination of bytecode compilation and interpretation is what allows Python to run efficiently on various platforms, without needing separate versions of the program for different operating systems.

Why is a Python Compiler Important?

Using a Python compiler offers several benefits to developers and users alike. Here are a few reasons why a Python compiler is so important in the programming ecosystem:

1. Portability

Since Python compiles to bytecode, it’s not tied to a specific operating system or hardware. This allows Python programs to run on different platforms without modification, making it an ideal language for cross-platform development. Once the code is compiled into bytecode, the same .pyc file can be executed on any machine that has a compatible Python interpreter installed. Compatible here means the same implementation and version, because the bytecode format changes between Python releases; the .py source file is the truly portable artifact.

2. Faster Execution

Although Python is an interpreted language, compiling Python code to bytecode allows for faster execution than re-parsing the source at every step. Bytecode is more efficient for the interpreter to execute, and cached .pyc files let Python skip the parsing and compilation work on later runs. The saving shows up mainly as shorter startup time for larger applications; the bytecode itself runs at the same speed whether it was just compiled or loaded from the cache.

3. Error Detection

By using a Python compiler, some errors in the code can be detected earlier in the development process. The compilation step checks the syntax of the whole file, alerting the developer before any of the program is executed. Other problems, such as misspelled names or type mismatches, still surface only at runtime, so compilation reduces but does not remove the chance of runtime errors.
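What the compile step does and does not catch can be checked with a short experiment. In this sketch the helper names `compile_check` and `run_check` are hypothetical, as are the two sample snippets.

```python
def compile_check(source):
    """Return None if source compiles, otherwise the syntax error message."""
    try:
        compile(source, "<check>", "exec")
    except SyntaxError as exc:
        return exc.msg
    return None


def run_check(source):
    """Compile and run source; return the name of any exception raised."""
    try:
        exec(compile(source, "<check>", "exec"), {})
    except Exception as exc:
        return type(exc).__name__
    return None


broken = "print('unclosed'"      # missing closing parenthesis
misnamed = "undefined_name + 1"  # valid syntax, but the name does not exist

print(compile_check(broken))     # a syntax error message
print(compile_check(misnamed))   # None: compiles without complaint
print(run_check(misnamed))       # NameError
```

The second snippet passes compilation and only fails once it is actually run.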

4. Optimizations

Some compilers provide optimizations during the compilation process, which can improve the overall performance of the Python program. Although Python is a high-level language, there are still opportunities to make certain parts of the program run faster. CPython's bytecode compiler applies only light optimizations such as constant folding; heavier techniques such as loop unrolling are the domain of JIT and ahead-of-time compilers.
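Constant folding can be observed in CPython by compiling an expression made only of literals and looking at the constants stored on the resulting code object. This is an implementation detail of CPython, not a language guarantee, and the variable name below is made up for the example.

```python
# The arithmetic uses only literals, so the compiler can evaluate it up front.
code = compile("seconds_per_day = 60 * 60 * 24", "<example>", "exec")

# On CPython the precomputed product is stored as a constant,
# so no multiplication happens when the code later runs.
print(code.co_consts)
folded = 86400 in code.co_consts
```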

Types of Python Compilers

While the official Python implementation, CPython, uses a standard Python compiler to generate bytecode, there are alternative compilers and implementations available. Here are a few examples:

1. CPython

CPython is the most commonly used Python implementation. Written in C, it is the reference implementation for the language and includes the default bytecode compiler. When you install Python from the official website, you’re installing CPython. Its compiler converts Python code into bytecode, and the PVM then executes it.

2. PyPy

PyPy is an alternative implementation of Python that features a Just-In-Time (JIT) compiler. JIT compilers generate machine code at runtime, which can significantly speed up execution. PyPy is especially useful for long-running Python applications that require high performance. It is highly compatible with CPython, meaning most Python code runs without modification on PyPy.

3. Cython

Cython is a superset of Python that allows you to write Python code that is compiled into C code. Cython enables Python programs to achieve performance close to that of C while retaining Python’s simplicity. It is commonly used when optimizing computationally intensive parts of a Python program.

4. Jython

Jython is an implementation of Python written in Java that compiles Python code into Java bytecode. This enables Python programs to run on the Java Virtual Machine (JVM), making it easier to integrate with Java libraries and tools.

5. IronPython

IronPython is an implementation of Python for the .NET framework. It compiles Python code into .NET Intermediate Language (IL), enabling it to run on the .NET runtime. IronPython allows Python developers to access .NET libraries and integrate with other .NET languages.
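To find out which of these implementations is running a given script, the standard `platform` and `sys` modules can be queried. A minimal sketch:

```python
import platform
import sys

# Name of the implementation executing this script, e.g. "CPython" or "PyPy".
implementation = platform.python_implementation()

# Language version the implementation provides, as a (major, minor, micro) tuple.
version = sys.version_info[:3]

print(implementation, version)
```

This is handy when a library behaves differently across implementations and a script needs to branch on it.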

Python Compiler vs. Interpreter: What’s the Difference?

While both compilers and interpreters serve the same fundamental purpose—turning source code into machine-executable code—there are distinct differences between them. Here are the key differences:

Compilation Process

Compiler: Translates the entire source code into machine code before execution. Once compiled, the program can be executed multiple times without recompilation.

Interpreter: Translates and executes source code line by line. No separate executable file is created; execution happens on the fly.

Execution Speed

Compiler: Generally faster during execution, as the code is already compiled into machine code.

Interpreter: Slower, as each line is parsed and executed individually.

Error Detection

Compiler: Detects syntax and other errors before the program starts executing. All errors must be fixed before running the program.

Interpreter: Detects errors as the code is executed. Errors can happen at any point during execution.

Conclusion

The Python compiler plays a crucial role in the Python programming language by converting source code into machine-readable bytecode. Whether you’re using the default CPython implementation, exploring the performance improvements with PyPy, or enhancing Python with Cython, understanding how compilers work is essential for optimizing and running Python code effectively.

The compilation process, which includes parsing, bytecode generation, and execution, offers various benefits like portability, faster execution, error detection, and optimizations. By choosing the right compiler and understanding how they operate, you can significantly improve both the performance and efficiency of your Python applications.

Now that you have a comprehensive understanding of how Python compilers work, you’re equipped with the knowledge to leverage them in your development workflow, whether you’re a beginner or a seasoned developer.

0 notes

Text

10 Key Benefits of Using Python Programming

Python is among the most widely used programming languages in the world today, and for good reason: it combines simplicity, versatility, and powerful features. It is used for everything from web development and data science to artificial intelligence. Whether you have just started learning to program or have been doing it for some time, Python offers advantages that make it a popular choice.

1. Very Easy to Read and Learn

Python is often recommended for beginners because of its readable, user-friendly syntax. Code reads much like plain English, which keeps the learning curve short, and even complex tasks can often be done in fewer lines of code than in most other programming languages.

2. Open Source and Free

Python is completely free to use, distribute, and modify. Because it is open source, a large community of developers supports the language and is constantly improving and extending it. This means you always have access to up-to-date versions of the language and to the latest tools used across the globe.

3. Extensive Libraries and Frameworks

Python's rich ecosystem of libraries and frameworks is one of its most significant assets. Libraries such as NumPy, pandas, Matplotlib, TensorFlow, and Django give programmers ready-to-use tools for data analysis, machine learning, web development, and much more.

4. Independent of Platform

Python is a cross-platform language, so code written on one operating system can usually run on another without changes. This shortens the time needed to develop and deploy applications and makes it easier to work in multi-platform environments.

5. Highly Versatile

Python can be applied in almost any domain, including web development, desktop applications, automation, data analysis, artificial intelligence, and the Internet of Things (IoT). This flexibility is one of Python's best assets: it makes building nearly anything with a single language practical.

6. Strong Community Support

Python is backed by a very large and active global community. If you run into a problem or want guidance on a library, you can find forums, tutorials, and documentation to help. Websites like Stack Overflow and GitHub host a wealth of Python resources.

7. Ideal for Automation

Tasks such as sending emails, sorting files, and web scraping are well suited to Python. Its scripting capabilities make writing an automation script easy and efficient.
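As one illustration of the file-sorting case, the sketch below moves every file in a folder into a subfolder named after its extension. The function name `sort_by_extension`, the "other" folder for files without an extension, and the demo filenames are all assumptions made for this example.

```python
import shutil
import tempfile
from pathlib import Path


def sort_by_extension(folder):
    """Move each file in folder into a subfolder named after its extension."""
    folder = Path(folder)
    moved = {}
    # Take a snapshot of the listing first, since we create folders as we go.
    for item in list(folder.iterdir()):
        if not item.is_file():
            continue
        # Files without an extension go into a folder called "other".
        bucket = item.suffix.lstrip(".").lower() or "other"
        target_dir = folder / bucket
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[item.name] = bucket
    return moved


# Demo in a temporary directory so no real files are touched.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("notes.txt", "photo.JPG", "README"):
        (Path(tmp) / name).write_text("demo")
    result = sort_by_extension(tmp)
    print(result)
```

A real script would also need to decide what to do when a file and a target folder share the same name; the sketch leaves that case out.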

8. Excellent for Data Science and AI

Python is one of the most widely used languages for data science, machine learning, and artificial intelligence applications. With well-maintained libraries like Scikit-learn, TensorFlow, and Keras, Python offers tools for intelligent modeling and big data analysis.

9. Easy to Integrate with Other Languages

Python integrates easily with other languages such as C, C++, and Java. This makes it a good fit for projects where performance-critical code must be optimized or legacy systems need to be integrated.

10. Career Paths

Demand for Python developers is high. Companies ranging from tech startups to multinational corporations are looking for professionals with Python skills. Python is also widely applied in emerging fields such as artificial intelligence and data science, making it a skill for the future.

Conclusion

Python is more than just an easy programming language: it is a powerful tool used in many ways across industries. Its simplicity, flexibility, and strong community support make it one of the best choices for new learners and experienced developers alike. Whether you wish to automate tasks, build websites, or dive into data science, Python gives you the tools to succeed.

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#computer classes near me#Programming Classes in Bopal Ahmedabad – TCCI#Python Training Course in Bopal Ahmedabad#Software Training Institute in Iskcon Ahmedabad#TCCI - Tririd Computer Coaching Institute

0 notes

Text

Öland_Gettlinge.jpg

#wikimedia commons#2000s#2005#Gettlinge gravfält#Archaeological monuments in Sweden with known IDs#Archaeological monuments in Sweden with UUIDs#Assumed own work#Files with no machine-readable source#License migration redundant#GFDL#CC-BY-SA-3.0-migrated#Self-published work

3 notes

·

View notes

Text

Best Python Training Institute in Kerala: A Roadmap to Building a Successful Tech Career

Python has taken the tech world by storm. Its simplicity, readability, and versatility make it one of the most sought-after programming languages today. From web development and automation to data science and artificial intelligence, Python plays a central role across various domains. For aspiring developers and working professionals alike, mastering Python is no longer optional—it’s essential.

If you’re in Kerala and planning to begin or upskill your programming journey, finding the Best Python Training Institute in Kerala can be the stepping stone to your success. This guide explores the importance of Python, what a high-quality training program should include, and why Zoople Technologies stands out as a top choice.

Why Python is a Must-Learn Language in 2025

1. Beginner-Friendly Yet Powerful

Python’s syntax is intuitive and close to natural English, making it ideal for beginners. At the same time, it is robust enough to power applications used by giants like Google, Netflix, and NASA.

2. Wide Range of Applications

Python is widely used in:

Web development (using frameworks like Django and Flask)

Data science and analytics

Machine learning and AI

Game development

Internet of Things (IoT)

Scripting and automation

This versatility ensures that learning Python opens doors to various career paths.

3. High Market Demand

Companies across the globe are hiring Python developers for diverse roles. In India, especially in Kerala’s growing IT hubs like Kochi, Trivandrum, and Calicut, Python-skilled professionals are in strong demand.

4. Thriving Community and Resources

Python boasts a massive global community. Learners can access an abundance of tutorials, open-source projects, and peer support forums to accelerate their growth.

What Should You Expect from the Best Python Training Institute in Kerala?

To ensure your time, money, and effort are well invested, here’s what the Best Python Training Institute in Kerala should offer:

1. Comprehensive Curriculum

A top-tier training program should cover:

Core concepts: Variables, data types, operators, control structures

Functions and object-oriented programming

Error handling and file operations

Python libraries: NumPy, Pandas, Matplotlib

Web frameworks: Django and Flask

Real-world project development

Basics of APIs, version control (Git), and deployment techniques

2. Hands-On Learning

Theory alone won’t make you job-ready. Look for institutes that emphasize:

Live coding sessions

Real-time project implementation

Capstone projects

Coding challenges and assessments

3. Qualified and Experienced Trainers

Learning under experienced mentors can fast-track your understanding and confidence. Trainers should:

Have industry experience

Offer personalized mentorship

Be updated with current tech trends

4. Flexible Learning Modes

The institute should cater to diverse learners—students, professionals, and career switchers—offering:

Online and offline batches

Weekday and weekend classes

Access to recorded sessions

5. Certification and Placement Support

A recognized certificate from a reputed institute adds value to your resume. Placement assistance, resume building, and interview prep are key offerings of a leading training institute.

Career Prospects After Learning Python

Python’s wide application spectrum means you can pursue multiple in-demand roles such as:

Python Developer

Full Stack Web Developer

Data Analyst

Machine Learning Engineer

Backend Developer

Automation Tester

Kerala’s tech ecosystem is expanding rapidly, especially in hubs like Kochi, Technopark in Trivandrum, and Cyberpark in Kozhikode. Companies here are constantly scouting for skilled Python professionals. Additionally, with remote work opportunities increasing, Kerala-based professionals can now work for global companies from the comfort of their hometown.

Why Kerala is an Ideal Destination for Python Training

Kerala, known for its high literacy rate and educational infrastructure, is quickly transforming into a technological education hub. Here’s why choosing the Best Python Training Institute in Kerala makes sense:

Growing IT Hubs: Cities like Kochi and Trivandrum are witnessing exponential growth in IT infrastructure.

Affordable Education: High-quality education at lower costs compared to metros like Bangalore or Hyderabad.

Excellent Connectivity: With a strong internet presence and robust digital infrastructure, learners in Kerala can easily access online resources and hybrid learning options.

Supportive Government Policies: The Kerala Startup Mission and other government initiatives are pushing tech education and employment aggressively.

Whether you're a student or a working professional, Kerala offers the right ecosystem to build and grow your tech skills.

Zoople Technologies – The Best Python Training Institute in Kerala

When it comes to high-quality, job-oriented training, Zoople Technologies stands out as the Best Python Training Institute in Kerala. With a proven track record of creating skilled developers and a student-first approach, Zoople has emerged as a trusted name in the tech training landscape.

Why Zoople Technologies?

Industry-Relevant Curriculum: Zoople’s course content is designed by experienced developers and is regularly updated to match industry trends.

Project-Based Learning: Students work on live projects that mimic real-world challenges, giving them hands-on experience that employers value.

Experienced Trainers: The instructors are not just teachers—they are professionals who bring industry insights directly into the classroom.

Flexible Batches: Whether you’re a student or a working professional, Zoople offers batch timings that suit your schedule, including online options.

Placement Support: Zoople has a dedicated placement team that assists students with job applications, interview preparation, and resume building.

Supportive Learning Environment: With one-on-one mentoring, practical labs, and open forums, Zoople ensures every student gets the attention they need to succeed.

Zoople Technologies has earned its reputation by consistently producing industry-ready professionals who land jobs in top companies across India and abroad.

Final Thoughts

In the world of programming, Python is your gateway language—and choosing the Best Python Training Institute in Kerala is the first step toward a rewarding tech career. Kerala offers the right environment to learn and grow, and Zoople Technologies provides the quality, support, and expertise needed to turn your ambition into a profession.

If you're serious about a future in tech and want to learn from the best, Zoople Technologies is where your journey should begin.

0 notes

Text

How to Download and Use Digital Embroidery Files from Embroiplanet

In the vibrant world of textile artistry, digital embroidery has carved a niche for itself by combining traditional craftsmanship with cutting-edge technology. At the heart of this creative revolution is Embroiplanet, your trusted source for premium-quality embroidery designs. Whether you're a hobbyist or a professional embroiderer, learning how to download and use these digital files is key to unlocking endless design possibilities.

In this detailed guide, we'll walk you through the entire process, from downloading your favorite embroidery design patterns to using them on your embroidery machine. We’ll also cover essential tips, compatible formats, and how to find designs for garments such as blouses, suits, and more.

1. Understanding Digital Embroidery Files

Digital embroidery files are computer-readable formats that guide your embroidery machine to stitch intricate patterns onto fabric. These files come in various types such as PES, DST, JEF, EXP, and more—each compatible with specific machines. At Embroiplanet, we offer an extensive range of embroidery designs patterns, including:

Embroidery designs flowers for floral lovers

Embroidery designs peacock for traditional elegance

Embroidery designs for T-shirts for casual chic

Embroidery designs blouse and embroidery designs suit for festive and formal wear

Embroidery designs border for adding decorative edges

Embroidery designs on shirt for fashion-forward statements

Embroidery designs for handkerchief and embroidery designs dress for delicate projects

These files are ideal for both commercial use and personal DIY embroidery projects.

2. How to Download Embroidery Designs from Embroiplanet

Downloading files from Embroiplanet is quick, secure, and user-friendly. Follow these easy steps to get started:

Step 1: Browse and Select Your Design

Navigate to our Embroidery Designs section and explore categories like:

Embroidery designs simple for minimalist patterns

Embroidery designs chain stitch for textured projects

Embroidery designs pictures for lifelike detail

Embroidery designs hoop for those working with embroidery hoops

Use search filters or type keywords such as "embroidery designs by hand" or "embroidery designs machine" to find exactly what you need.

Step 2: Choose the File Format

Each design listing will specify compatible file types. Make sure to select a design that works with your embroidery machine—for example, Brother uses PES, while Janome prefers JEF files.

Step 3: Add to Cart and Checkout

Once you've selected your design, add it to your cart. You can purchase multiple files at once. Our secure checkout process ensures your payment details remain confidential.

Step 4: Instant Download

After payment, you’ll receive a download link instantly on your confirmation page and by email. Click the link to download the .ZIP file containing your embroidery files and related instructions.

3. How to Use Downloaded Embroidery Files

Now that you've downloaded your embroidery designs, here's how to put them to use:

Step 1: Unzip the File

Use any extraction tool like WinRAR or 7-Zip to unzip the downloaded file. Inside, you'll find:

The embroidery design file in multiple formats (e.g., .PES, .DST)

A color chart and thread list

A usage guide or PDF instructions
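If you prefer to script the unzip step instead of using a desktop tool, a minimal sketch with Python's standard `zipfile` module might look like the following. The function name `extract_designs` and the extension list are assumptions made for illustration; the list only covers the formats named in this guide.

```python
import zipfile
from pathlib import Path

# Formats named in this guide; illustrative, not an exhaustive list.
EMBROIDERY_EXTENSIONS = {".pes", ".dst", ".jef", ".exp"}

def extract_designs(zip_path, dest_dir):
    """Extract a design archive and return the embroidery files found inside."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    # Keep only stitch files; color charts and PDF guides are left out of the result.
    return sorted(
        p for p in dest.rglob("*")
        if p.is_file() and p.suffix.lower() in EMBROIDERY_EXTENSIONS
    )
```

Calling `extract_designs("design.zip", "designs")` with your own file names would unpack everything into `designs` and return just the stitch files, so you can see at a glance which formats the archive contains.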

Step 2: Transfer the File

Transfer your selected file to a USB stick or connect your computer directly to your embroidery machine. Make sure you load the format your machine supports.

Step 3: Set Up Your Machine

Choose the fabric type and stabilizer appropriate for your design. For instance:

Use a lightweight stabilizer for handkerchief embroidery designs

Choose medium to heavy stabilizers for embroidery designs on shirts or dresses

Step 4: Load the Design

Insert your USB or select the file through your machine’s touchscreen interface. Preview the design to ensure correct orientation, size, and colors.

Step 5: Start Stitching

Thread your machine according to the color chart. Press start and let your machine bring your vision to life!

4. Tips for Choosing the Right Embroidery Design

Whether you’re working on a casual T-shirt or a traditional saree blouse, picking the right design is crucial. Here’s how:

Consider the Fabric

Light fabrics like cotton or organza pair well with simple embroidery designs, while heavier fabrics like denim or silk can support intricate peacock or chain stitch designs.

Choose the Right Size

Design size matters, especially if you're embroidering on smaller items like handkerchiefs or sleeves. Use an embroidery design pattern suited to your hoop size.

Match the Theme

For everyday wear, go for embroidery designs for T-shirts featuring modern or abstract motifs. For weddings or festivals, opt for embroidery designs blouse with traditional Indian patterns.

Blend Hand and Machine Embroidery

Love mixing techniques? You can add finishing touches to embroidery designs by hand even after using your machine, creating a hybrid design with rich texture and depth.

5. Embroidery Project Ideas Using Embroiplanet Designs

Looking for creative ways to showcase your downloaded designs? Here are some trending ideas:

Customized Apparel

Embroider designs on shirts for personalized gifts

Add suit or blouse embroidery designs to customize ethnic wear

Use embroidery designs for T-shirts to launch your own fashion line

Home Décor

Frame embroidery designs pictures for wall art

Embroider floral designs on cushion covers

Use embroidery designs hoop for unique wall hangings

Accessories

Create monogrammed embroidery designs for handkerchiefs

Embroider designs on tote bags, denim jackets, or hats

Try embroidery designs border for scarves and shawls

The versatility of digital files from Embroiplanet allows you to go beyond garments and explore home, gifting, and decor possibilities.

6. Troubleshooting Common Issues

Here are solutions to some of the most common problems users face:

Design Doesn’t Show on Machine

Make sure:

You transferred the correct file format (e.g., .PES, .DST)

The USB is properly inserted

The file isn’t corrupted (re-download if needed)
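The first and last checks in this list can be partly automated before you walk over to the machine. The sketch below is only an illustration: `check_design_file` and the `MACHINE_FORMATS` mapping are hypothetical names, the mapping contains just the two brand examples given earlier in this guide, and an empty file is used as a rough stand-in for a failed download rather than a real corruption test.

```python
from pathlib import Path

# Brand/format pairs taken from the examples in this guide; check your
# machine's manual for the formats it actually accepts.
MACHINE_FORMATS = {"brother": ".pes", "janome": ".jef"}

def check_design_file(path, brand):
    """Return a list of problems found with a design file (empty if none)."""
    problems = []
    file = Path(path)
    expected = MACHINE_FORMATS.get(brand.lower())
    if expected is None:
        problems.append(f"unknown machine brand: {brand}")
    elif file.suffix.lower() != expected:
        problems.append(f"expected a {expected} file, got {file.suffix or 'no extension'}")
    if not file.is_file():
        problems.append("file not found")
    elif file.stat().st_size == 0:
        problems.append("file is empty; try downloading it again")
    return problems
```

An empty list means the file passed these basic checks; it does not guarantee the machine will read it.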

Stitches Are Misaligned

This could be due to:

Loose fabric: Use a good stabilizer and hoop it tightly

Wrong tension settings: Adjust according to your fabric

Incorrect thread sequence: Follow the provided color chart closely

Design Too Large for Hoop

Always check the hoop size before downloading. Use resizing software like Embrilliance Essentials if needed, or search Embroiplanet for embroidery design patterns suited to smaller hoops.

Conclusion: Create Masterpieces with Embroiplanet’s Digital Embroidery Designs

Whether you're a seasoned embroiderer or just getting started, Embroiplanet offers the best digital embroidery designs to fuel your creativity. From embroidery designs blouse to embroidery designs for T-shirts, our library is filled with stunning patterns for all occasions.

By learning how to properly download, transfer, and use digital embroidery files, you open the door to countless personalized projects. Combine embroidery designs by hand with digital stitching, play with embroidery designs flowers or chain stitch textures, and give a personal touch to everything from clothing to accessories.

Explore the world of embroidery with Embroiplanet—where tradition meets technology and creativity knows no bounds.


Getting Started with Data Analysis Using Python

Data analysis is a critical skill in today’s data-driven world. Whether you're exploring business insights or conducting academic research, Python offers powerful tools for data manipulation, visualization, and reporting. In this post, we’ll walk through the essentials of data analysis using Python, and how you can begin analyzing real-world data effectively.

Why Python for Data Analysis?

Easy to Learn: Python has a simple and readable syntax.

Rich Ecosystem: Extensive libraries like Pandas, NumPy, Matplotlib, and Seaborn.

Community Support: A large, active community providing tutorials, tools, and resources.

Scalability: Suitable for small scripts or large-scale machine learning pipelines.

Essential Python Libraries for Data Analysis

Pandas: Data manipulation and analysis using DataFrames.

NumPy: Fast numerical computing and array operations.

Matplotlib: Basic plotting and visualizations.

Seaborn: Advanced and beautiful statistical plots.

Scikit-learn: Machine learning and data preprocessing tools.

Step-by-Step: Basic Data Analysis Workflow

1. Import Libraries

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

2. Load Your Dataset

df = pd.read_csv('data.csv')  # Replace with your file path
print(df.head())

3. Clean and Prepare Data

df.dropna(inplace=True)  # Remove rows with missing values
df['Category'] = df['Category'].astype('category')  # Convert to category type

4. Explore Data

print(df.describe())  # Summary statistics
df.info()  # Data types and memory usage (info() prints its own output and returns None)

5. Visualize Data

sns.histplot(df['Sales'])
plt.title('Sales Distribution')
plt.show()

sns.boxplot(x='Category', y='Sales', data=df)
plt.title('Sales by Category')
plt.show()

6. Analyze Trends

monthly_sales = df.groupby('Month')['Sales'].sum()
monthly_sales.plot(kind='line', title='Monthly Sales Trend')
plt.xlabel('Month')
plt.ylabel('Sales')
plt.show()
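To try the cleaning and grouping steps without a CSV file, here is a small self-contained sketch. The sample rows are made up for illustration; only the column names (`Month`, `Category`, `Sales`) come from the steps above. It also shows one thing worth knowing: `groupby` sorts its keys by default, so month names stored as text come out in alphabetical order unless you pass `sort=False`.

```python
import pandas as pd

# Hypothetical sample data standing in for data.csv.
df = pd.DataFrame({
    "Month": ["Jan", "Jan", "Feb", "Feb", "Mar"],
    "Category": ["A", "B", "A", "B", "A"],
    "Sales": [100, 150, 120, None, 90],
})

df = df.dropna()  # drops the Feb/B row, which has no Sales value

# sort=False keeps months in order of first appearance instead of alphabetical order.
monthly_sales = df.groupby("Month", sort=False)["Sales"].sum()
print(monthly_sales)
```

With real data, storing `Month` as a proper date or an ordered categorical is usually a sturdier fix than relying on row order.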

Tips for Effective Data Analysis

Understand the context and source of your data.

Always check for missing or inconsistent data.

Visualize patterns before jumping to conclusions.

Automate repetitive tasks with reusable scripts or functions.

Use Jupyter Notebooks for interactive analysis and documentation.

Advanced Topics to Explore

Time Series Analysis

Data Wrangling with Pandas

Statistical Testing and Inference

Predictive Modeling with Scikit-learn

Interactive Dashboards with Plotly or Streamlit

Conclusion

Python makes data analysis accessible and efficient for beginners and professionals alike. With the right libraries and a structured approach, you can gain valuable insights from raw data and make data-driven decisions. Start experimenting with datasets, and soon you'll be crafting insightful reports and visualizations with ease!


Data Matters: How to Curate and Process Information for Your Private LLM

In the era of artificial intelligence, data is the lifeblood of any large language model (LLM). Whether you are building a private LLM for business intelligence, customer service, research, or any other application, the quality and structure of the data you provide significantly influence its accuracy and performance. Unlike publicly trained models, a private LLM requires careful curation and processing of data to ensure relevance, security, and efficiency.

This blog explores the best practices for curating and processing information for your private LLM, from data collection and cleaning to structuring and fine-tuning for optimal results.

Understanding Data Curation

Importance of Data Curation

Data curation involves the selection, organization, and maintenance of data to ensure it is accurate, relevant, and useful. Poorly curated data can lead to biased, irrelevant, or even harmful responses from an LLM. Effective curation helps improve model accuracy, reduce biases, enhance relevance and domain specificity, and strengthen security and compliance with regulations.

Identifying Relevant Data Sources

The first step in data curation is sourcing high-quality information. Depending on your use case, your data sources may include:

Internal Documents: Business reports, customer interactions, support tickets, and proprietary research.

Publicly Available Data: Open-access academic papers, government databases, and reputable news sources.

Structured Databases: Financial records, CRM data, and industry-specific repositories.

Unstructured Data: Emails, social media interactions, transcripts, and chat logs.

Before integrating any dataset, assess its credibility, relevance, and potential biases.

Filtering and Cleaning Data

Once you have identified data sources, the next step is cleaning and preprocessing. Raw data can contain errors, duplicates, and irrelevant information that can degrade model performance. Key cleaning steps include removing duplicates to ensure unique entries, correcting errors such as typos and incorrect formatting, handling missing data through interpolation techniques or removal, and eliminating noise such as spam, ads, and irrelevant content.
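As a rough sketch of the cleaning steps just described, the snippet below drops empty records, filters obvious noise, and removes duplicates from a list of text records. The function name `clean_records` and the noise patterns are hypothetical; a real pipeline would tune the patterns to its own data and would likely need fuzzier duplicate detection than an exact match.

```python
import re

# Hypothetical noise markers chosen for illustration only.
NOISE_PATTERNS = [
    re.compile(p, re.IGNORECASE)
    for p in (r"click here", r"unsubscribe", r"buy now")
]

def clean_records(records):
    """Drop empty, noisy, and duplicate text records, preserving order."""
    seen = set()
    cleaned = []
    for text in records:
        if text is None:
            continue  # missing data: removed rather than filled in
        normalized = " ".join(text.split())  # collapse runs of whitespace
        if not normalized:
            continue
        if any(p.search(normalized) for p in NOISE_PATTERNS):
            continue  # spam or ad-like content
        key = normalized.casefold()  # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned
```

Keeping the first occurrence of each duplicate preserves the original ordering, which makes it easier to trace a cleaned record back to its source.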

Data Structuring for LLM Training

Formatting and Tokenization

Data fed into an LLM should be in a structured format. This includes standardizing text formats by converting different document formats (PDFs, Word files, CSVs) into machine-readable text, tokenization to break down text into smaller units (words, subwords, or characters) for easier processing, and normalization by lowercasing text, removing special characters, and converting numbers and dates into standardized formats.
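The normalization and tokenization steps can be illustrated with a deliberately simple word-level sketch. The helper names `normalize` and `tokenize` are assumptions for this example, and production LLM pipelines typically rely on a trained subword tokenizer rather than whitespace splitting.

```python
import re

def normalize(text):
    """Lowercase, strip special characters, and collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)  # keep only letters, digits, whitespace
    return " ".join(text.split())

def tokenize(text):
    """Split normalized text into word-level tokens."""
    return normalize(text).split()

print(tokenize("Q3 Revenue: $1.2M (up 8%)!"))
# prints ['q3', 'revenue', '1', '2m', 'up', '8']
```

Note how the figure "$1.2M" is split into "1" and "2m": stripping special characters this aggressively can destroy numbers, currencies, and dates, which is why those are better converted to a standardized form before any characters are removed.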

Labeling and Annotating Data