#Graph Neural Networks


Text

Latent Semantic Indexing & Graph Neural Networks: The Future of AI-Driven SEO

In the ever-evolving world of search engine optimization, businesses must stay ahead by adopting cutting-edge technologies that enhance visibility and search relevance. At ThatWare, we integrate Latent Semantic Indexing (LSI) Optimization and Graph Neural Networks (GNNs) in SEO to revolutionize digital strategies. By further incorporating Hyper Intelligence SEO, we ensure that our clients remain at the forefront of search engine advancements.

Latent Semantic Indexing (LSI) Optimization: Enhancing Content Relevance

Search engines have moved beyond simple keyword matching and now rely on semantic relationships to determine content relevance. Latent Semantic Indexing (LSI) Optimization is the process of enhancing content by incorporating related terms and concepts, helping search engines understand the contextual meaning behind keywords.

At ThatWare, we implement LSI Optimization by:

Conducting deep keyword research to identify semantically related terms.

Structuring content to improve topic relevance and search visibility.

Enhancing on-page SEO with entity-based optimization.

By leveraging LSI Optimization, websites can improve their rankings, reduce keyword stuffing, and create a more natural user experience, ultimately leading to higher engagement and conversions.

Graph Neural Networks (GNNs) in SEO: The AI-Driven Approach

The integration of Graph Neural Networks (GNNs) in SEO is revolutionizing the way search engines analyze data. GNNs use artificial intelligence to map relationships between web entities, allowing for more advanced link-building strategies, content recommendations, and search intent predictions.

At ThatWare, we utilize GNNs in SEO to:

Analyze backlink structures for improved domain authority.

Optimize internal linking based on intelligent content relationships.

Predict search intent by mapping user behavior patterns.

By applying Graph Neural Networks, we can enhance the way websites interact with search engine algorithms, making SEO more data-driven and dynamic.

Hyper Intelligence SEO: The Future of Search Optimization

To stay ahead of evolving search algorithms, ThatWare integrates Hyper Intelligence SEO, an advanced approach that combines AI, machine learning, and deep data analytics to optimize search performance. This strategy ensures that our SEO solutions are adaptable, predictive, and results-driven.

By combining LSI Optimization, GNNs in SEO, and Hyper Intelligence SEO, we create a comprehensive SEO framework that maximizes visibility and enhances search accuracy.

Conclusion

The future of SEO is driven by advanced AI technologies, and ThatWare is leading the way. With Latent Semantic Indexing (LSI) Optimization refining content relevance and Graph Neural Networks (GNNs) in SEO optimizing link structures, our approach to Hyper Intelligence SEO ensures our clients achieve long-term success in search rankings.

If you're looking to future-proof your SEO strategy, partner with ThatWare and embrace AI-powered search optimization for sustainable digital growth!

0 notes

Text

Revolutionizing SEO with Hyper Intelligence and Deep Learning

In today's fast-paced digital landscape, search engine optimization (SEO) is more critical than ever. Traditional SEO strategies, while effective, are being replaced by cutting-edge techniques that integrate artificial intelligence (AI) and deep learning. Among these revolutionary technologies, Graph Neural Networks (GNNs) and deep learning have taken center stage, offering unprecedented opportunities for enhancing SEO performance. Additionally, Hyper Intelligence SEO is emerging as the next big trend, elevating digital marketing strategies with even greater efficiency and precision.

The Rise of Graph Neural Networks (GNNs) for SEO

One of the most exciting developments in the realm of SEO is the application of Graph Neural Networks (GNNs). GNNs are designed to model and analyze data in a graph-based structure, which mimics how information is naturally organized on the internet. For SEO, this means that GNNs can efficiently process and rank complex relationships between web pages, users, and content.

By understanding the underlying structure of the web and its interconnectedness, GNNs offer a more advanced way to predict and optimize search engine rankings. These networks allow search engines to evaluate content not just on keywords and backlinks but also based on semantic connections, context, and relevance, offering more accurate and comprehensive search results.

Content Creation through Deep Learning: Automating Quality

Deep learning has made significant strides in content creation, enabling marketers to generate high-quality articles, blog posts, and more with minimal human intervention. This technology utilizes neural networks to analyze vast amounts of data, learning patterns and structures that are vital for effective content creation.

In the context of SEO, deep learning can help automate content creation by predicting the type of content users are most likely to engage with. These AI-driven insights can refine writing strategies and produce content that aligns with both search intent and ranking algorithms. Moreover, deep learning can optimize content for SEO in real-time, ensuring that it meets current SEO standards and trends, all while maintaining high-quality standards.

Integrating Hyper Intelligence SEO with AI-Powered Techniques

Hyper Intelligence SEO is a powerful concept that leverages AI to supercharge traditional SEO methods. By integrating Hyper Intelligence SEO into your digital marketing strategy, you gain access to highly advanced tools that can analyze data at an extraordinary scale and speed. These tools use algorithms to predict user behavior, identify gaps in content strategy, and optimize existing content for better engagement and higher rankings.

What sets Hyper Intelligence SEO apart is its ability to integrate multiple AI techniques, including Graph Neural Networks and deep learning, to provide an all-encompassing SEO solution. This approach allows businesses to gain a deeper understanding of their audience, optimize their website more effectively, and stay ahead of the competition by constantly adapting to SEO trends and algorithm updates.

The Future of SEO: AI-Powered Precision

As we move further into the AI era, the combination of Graph Neural Networks, deep learning, and Hyper Intelligence SEO will revolutionize how SEO is approached. The traditional SEO tactics of keyword stuffing and manual content optimization are being replaced by AI-driven methods that focus on providing value and relevance to the user experience. This shift not only benefits businesses but also enhances the quality of information available to users on search engines.

By embracing these advanced AI techniques, businesses can expect to see improved search rankings, increased organic traffic, and a more personalized user experience. The future of SEO is powered by intelligence (Hyper Intelligence SEO, to be precise), and those who harness its power will be well-positioned to lead in the digital age.

Conclusion

The fusion of Graph Neural Networks, deep learning, and Hyper Intelligence SEO represents the next frontier of SEO. These technologies offer marketers and businesses the tools they need to optimize their online presence with precision, speed, and efficiency. As the digital landscape evolves, embracing AI-powered SEO strategies will be essential for maintaining a competitive edge.

Stay ahead of the curve by integrating these innovations into your SEO approach and watch your website soar to new heights in search rankings with ThatWare LLP.

0 notes

Text

There's a lot of talk about Spotify "using AI in their latest wrapped," and jokes about how there's no way in hell anyone hand crafted previous years' wrapped, and while not wrong, I do think it is an oversimplification.

There's a difference between fine-tuning an algorithm to aggregate a Wrapped and using a GenAI out of the box. Spotify and YT already had automation running the show, but genre categorization was probably originally done using graph theory and nodes: comparing which songs are often listened to together, which artists often collaborate, and how people themselves classify the songs. The GenAI approach opens ChatGPT or one of its competitors, types "Name a genre/vibe from this list of songs," and that's it.

It's not even an issue of neural networks. I'm certain you can use machine learning that analyzes the mp3/.wav files to classify songs for you, using songs everyone already knows the genres of as training data to calculate the unknowns. Van Halen is Metal, TSwift is Pop, Zedd is EDM, Kendrick Lamar is Hip-Hop and Rap (I listen to none of these people). Even lesser known but still sizeable indie artists with passionate fans can do some of the categorizing for your data.
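To make the graph idea concrete, here is a minimal sketch of genre guessing from a co-listening graph. It is not how Spotify does it; the song names, sessions, and the simple neighbor-vote rule are all made up for illustration. Songs that are played in the same session get an edge, and each unlabeled song takes the genre that carries the most edge weight among its already-labeled neighbors.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical listening sessions: each list is a set of songs played together.
sessions = [
    ["metal_a", "metal_b", "unknown_x"],
    ["metal_a", "unknown_x"],
    ["pop_a", "pop_b", "unknown_y"],
    ["pop_b", "unknown_y", "unknown_x"],
    ["pop_a", "unknown_y"],
]

# Songs whose genre is already known (the "training data").
known = {"metal_a": "Metal", "metal_b": "Metal", "pop_a": "Pop", "pop_b": "Pop"}


def co_listen_graph(sessions):
    """Weighted graph: edge weight = number of sessions two songs share."""
    graph = defaultdict(Counter)
    for songs in sessions:
        for a, b in combinations(sorted(set(songs)), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return graph


def guess_genres(graph, known):
    """Give each unlabeled song the genre with the most weight among labeled neighbors."""
    guesses = {}
    for song, neighbors in graph.items():
        if song in known:
            continue
        votes = Counter()
        for other, weight in neighbors.items():
            if other in known:
                votes[known[other]] += weight
        if votes:
            guesses[song] = votes.most_common(1)[0][0]
    return guesses


graph = co_listen_graph(sessions)
guesses = guess_genres(graph, known)
print(guesses)
```

No language model is involved anywhere: the whole thing is counting and voting over a graph, which is the calculator side of the calculator-vs-chatbot comparison.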

To me, it's the difference between using a calculator vs. asking chatgpt to help you with math. They're both computers doing all the work, but one is actually tailor made for this purpose and the other is touted as a cyber cure all.

I'm a programmer myself. I've made and run neural networks before, including LLMs, but I think there is a massive failure of communication regarding this shit. There's already a meme among programmers about how people boast about "their new AI service" and it's just an API call to ChatGPT, rather than actually looking at what technologies run under the hood and deciding how and if they should be applied.

10 notes


Text

AI's new body

Midjourney prompt: This image presents a highly detailed, futuristic digital infographic centered around a human figure displayed in a wireframe or holographic style. The figure, shown in a standing pose with hands extended, is surrounded by various scientific and technological elements, reminiscent of a human anatomy diagram combined with astrophysical and molecular illustrations. Key Elements: 1. Human Figure in Holographic Wireframe: A detailed, semi-transparent human figure occupies the central area of the image. The figure is rendered in a green, holographic style, with a translucent, grid-like structure. This structure may represent a scientific exploration of the human body, possibly focused on anatomy, energy fields, or neural networks. The figure stands with one hand extended forward and the other close to the torso, as if interacting with an unseen interface. 2. Background and Surrounding Elements: The background features a dark grid, enhancing the scientific and futuristic feel. Surrounding the figure are various circular diagrams, graphs, and text boxes. These elements appear to be scientific annotations, with some sections resembling molecular structures, energy fields, and celestial objects. 3. Scientific and Cosmic Diagrams: The image includes numerous circular illustrations that look like schematics of planets, atoms, or particles. Some depict planetary orbits or atomic structures, emphasizing a connection between human physiology and cosmic or quantum phenomena. One larger circle around the human figure might represent an energy field, aura, or magnetic field, as if suggesting the human body’s interaction with a broader cosmic or energetic system. 4. Text Boxes and Graphical Data: Text boxes containing intricate, fine-text annotations cover the left and right sides. [...]

#Midjourney#AI#AI art#AI art generation#AI artwork#AI generated#AI image#computer art#computer generated

5 notes


Text

Wandering Aimlessly with Everlasting Joy and Many Small Flowers, One Quick Glance debate Eleven Rivers' health, and what should be done about it.

(this is an iterator logs-style thing I wrote for my oc lore- i havent finished it but im posting it anyways lmao) ((cw for ableist language))

MSF: I call upon the Act of Indecision.

WAEJ: Wait-

MSF: I have no intention of changing my stance, and it seems neither does Wandering Aimlessly with Everlasting Joy. I propose we bring it to vote. Unless Wander wants to change his mind?

WAEJ: No. If it needs to come to the act, then so be it.

?: What say the rest of the Council?

?: If the act has been called, then it is law.

WAEJ: Before we vote please consider the morality of this situation- we are all aware of the emotional intelligence of the iterators. What would Eleven Rivers say?

MSF: Self-preservation is natural instinct, he could only be expected to disagree with this decision. As their parents, we must make these difficult decisions for them. I am closer to Rivers than anyone else. I can assure you all that he is suffering, with no evidence of getting any better.

WAEJ: There is- just a moment- here. There is evidence that unstable neuron disease is treatable, that there are ways to ease their suffering and even decrease their memory loss. This research by Flight to the Sun, Homeward Descent and A Fleck of Flame proves this. Please, look it over.

MSF: ...I am just as aware of Flight to the Sun, Homeward Descent's research as the rest of you. We have tried these methods with Eleven Rivers. Here- records of the memory arrays reformat of 1233.864. His memory remains just as spotty as before, if not worse. This chart shows the increase in lapses of thought- something quite traumatic for an iterator to endure.

MSF: They were created with such incredible neural networks, minds we cannot even hope to comprehend living with. For a being of their status, holding thousands of memories and tens of trains of thought at once is entirely normal. For these thoughts to be interrupted is mortifying for them. When Rivers is shocked, for a moment, there is near nothing.

WAEJ: This is a symptom of UND. All iterators ailed with it experience this.

MSF: Of course. But Eleven Rivers is especially distressed by it. He has been more moody and aggressive since he's been getting worse.

?: Aggressive?

MSF: In his conduct with his group. I would think Wandering Aimlessly with Everlasting Joy would agree, having seen how he speaks to Glass Incident.

WAEJ: ...It is true that Glass has been uncomfortable with him.

MSF: He is lashing out, disturbing his group. If you will look at this chart here, I have graphed the increase in shocks and, in neuron fly analysis, the increase in anger. It is my belief that his suffering is only being exacerbated, and that he will only grow increasingly aggressive.

?: Do you think Eleven Rivers could be a threat to the rest of his group?

MSF: I believe so, if not directly then indirectly.

WAEJ: Taboo law protects us, and protects his group as well. When he and the twins quarrel we split them up. Yes, it is true that his symptoms are worsening, and yes, he is growing more frustrated, but if what Many Small Flowers, One Quick Glance has demonstrated supports anything, it's the fact that he is triggered by his suffering! As their parents it is our duty to alleviate that pain!

MSF: He's treatment resistant. I've demonstrated that, if you've been paying attention.

WAEJ: It doesn't make sense. It's a science, Flowers, on a base level they are all the same!

MSF: I've never seen Glass Incident lose herself in a shocking fit before.

?: Your professionalism, please, you two.

MSF: Apologies, High Councilmen.

MSF: The depth of my argument is in the fact that Eleven Rivers has been resistant to every attempt I've made to help him. Here's another record- a partial neuron fly reset from the date 1229.236. The same as my last example was documented- brief improvement followed by a heavy decline.

?: We see he is being disturbed by his flies more and more. What is causing this increase in active UND symptoms?

MSF: The hardest part. We do not know.

WAEJ: There are teams working on finding the source of his new symptoms. They have only been at it for two major cycles.

?: That is a long time, in the smaller scheme of things.

MSF: Perhaps it would help relax you all to the idea if I demonstrated how his shut-down would work. If I may.

MSF: These are the blueprints for the Demolishers. They are designed to be as efficient as possible. It will only take 60 minor cycles for the demolition to be complete if we deploy fifteen Demolishers. Eleven Rivers will feel no pain. His power will be shut off beforehand, all his neural processes cancelled, and his puppet will be removed beforehand, as a sign of respect. He will wake right up again, perhaps as something easier done by.

WAEJ: This isn't what he would want. It's wrathful to end his life without him ever even knowing.

MSF: Wrathful? I am only concerned with his and all the other corners' well-being.

#druid writes#oc posting#rain world oc#rw ancient oc#flowers#wander#flowers is falsifying evidence here btw!! hes literally fucking lying to get what he wants#gaslights the entire far north council. shows them fake evidence and says that he has rivers best interests at heart#ableism#cw ableism

39 notes


Text

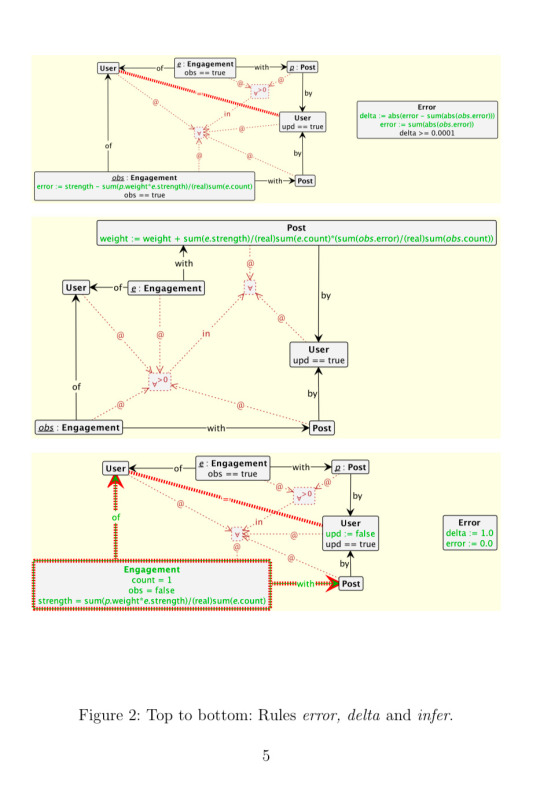

Graph Rewriting for Graph Neural Networks

Adam Machowczyk¹ and Reiko Heckel¹

¹University of Leicester, UK

{amm106,rh122}@le.ac.uk

May 31, 2023

https://arxiv.org/pdf/2305.18632

3 notes


Text

NVIDIA AI Workflows Detect False Credit Card Transactions

A Novel AI Workflow from NVIDIA Identifies False Credit Card Transactions.

The process, which is powered by the NVIDIA AI platform on AWS, may reduce risk and save money for financial services companies.

By 2026, global credit card transaction fraud is predicted to cause $43 billion in damages.

Using accelerated data processing and advanced algorithms, a new NVIDIA AI workflow for fraud detection on Amazon Web Services (AWS) will help fight this growing epidemic by improving AI’s ability to detect and prevent credit card transaction fraud.

Unlike conventional techniques, the workflow, which was introduced this week at the Money20/20 fintech conference, helps financial institutions spot subtle patterns and anomalies in transaction data based on user behavior. This increases accuracy and lowers false positives.

Users may use the NVIDIA AI Enterprise software platform and NVIDIA GPU instances to expedite the transition of their fraud detection operations from conventional computation to accelerated compute.

Companies that adopt comprehensive machine learning tools and strategies may see an estimated 40% improvement in fraud detection accuracy, which will help them find and stop criminals more quickly and limit the damage.

As a result, top financial institutions like Capital One and American Express have started using AI to develop exclusive solutions that improve client safety and reduce fraud.

With the help of NVIDIA AI, the new NVIDIA workflow speeds up data processing, model training, and inference while showcasing how these elements can be combined into a single, user-friendly software package.

The workflow, which is currently optimized for credit card transaction fraud, could be adapted for use cases including money laundering, account takeover, and new account fraud.

Enhanced Processing for Fraud Identification

It is more crucial than ever for businesses in all sectors, including financial services, to use computational capacity that is economical and energy-efficient as AI models grow in complexity, size, and variety.

Conventional data science pipelines don’t have the compute acceleration needed to process the enormous amounts of data needed to combat fraud in the face of the industry’s continually increasing losses. Payment organizations may be able to save money and time on data processing by using NVIDIA RAPIDS Accelerator for Apache Spark.

Financial institutions are using NVIDIA’s AI and accelerated computing solutions to effectively handle massive datasets and provide real-time AI performance with intricate AI models.

The industry standard for detecting fraud has long been gradient-boosted decision trees, a type of machine learning algorithm typically implemented with libraries such as XGBoost.

Using the NVIDIA RAPIDS suite of AI libraries, the new NVIDIA AI workflow for fraud detection enhances XGBoost by adding graph neural network (GNN) embeddings as extra features to help reduce false positives.

The GNN embeddings are fed into XGBoost to create and train a model, which can then be orchestrated with the NVIDIA Morpheus Runtime Core library and the NVIDIA Triton Inference Server for real-time inferencing.

The NVIDIA Morpheus framework securely inspects and classifies all incoming data, tagging it with patterns and flagging potentially suspicious activity. The NVIDIA Triton Inference Server simplifies production inference for all types of AI model deployments while optimizing throughput, latency, and utilization.
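The feature-augmentation step can be illustrated with a small sketch. This is not the NVIDIA workflow itself: the transactions, the graph edges, and the function names are hypothetical, and a single round of untrained neighbor averaging stands in for the embeddings a trained GNN would produce. The resulting rows are what would then be handed to a gradient-boosted tree library such as XGBoost; that training step is left out here.

```python
# Hypothetical transactions: [amount_in_dollars, hour_of_day / 24]
features = {
    "t1": [12.0, 0.50],
    "t2": [950.0, 0.10],
    "t3": [15.0, 0.55],
    "t4": [990.0, 0.12],
}

# Hypothetical graph: transactions are linked when they share a card or merchant.
edges = [("t1", "t3"), ("t2", "t4"), ("t3", "t4")]


def neighbor_mean_embedding(features, edges):
    """One round of mean aggregation over neighbors (untrained stand-in for a GNN layer)."""
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)
    dim = len(next(iter(features.values())))
    embeddings = {}
    for node, linked in neighbors.items():
        if linked:
            embeddings[node] = [
                sum(features[n][i] for n in linked) / len(linked) for i in range(dim)
            ]
        else:
            # Isolated transactions get a zero vector.
            embeddings[node] = [0.0] * dim
    return embeddings


def augment(features, embeddings):
    """Concatenate each row's own features with its graph embedding."""
    return {node: features[node] + embeddings[node] for node in features}


augmented = augment(features, neighbor_mean_embedding(features, edges))
for node, row in augmented.items():
    print(node, row)
```

Each row now carries information about the transactions it is connected to, which is the kind of context a tree model working on isolated tabular rows would not otherwise see.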

NVIDIA AI Enterprise provides Morpheus, RAPIDS, and Triton Inference Server.

Leading Financial Services Companies Use AI

Several major financial institutions in North America are reporting rising online and mobile fraud losses, and AI is helping to counter the trend.

American Express started using artificial intelligence (AI) to combat fraud in 2010. The company uses fraud detection algorithms to track all client transactions worldwide in real time, producing fraud determinations in a matter of milliseconds. American Express improved model accuracy by using a variety of sophisticated algorithms, one of which used the NVIDIA AI platform, thereby strengthening the organization’s capacity to combat fraud.

Large language models and generative AI are used by the European digital bank Bunq to assist in the detection of fraud and money laundering. With NVIDIA accelerated computing, its AI-powered transaction-monitoring system was able to train models more than 100 times faster.

In March, BNY said that it was the first big bank to implement an NVIDIA DGX SuperPOD with DGX H100 systems. This would aid in the development of solutions that enable use cases such as fraud detection.

Systems integrators, software vendors, and cloud service providers can now integrate the new NVIDIA AI workflow for fraud detection to improve their financial services applications and help protect their clients’ funds, identities, and digital accounts. Read the NVIDIA Technical Blog post on enhancing fraud detection with GNNs and explore the NVIDIA AI workflow for fraud detection.

Read more on Govindhtech.com

#NVIDIAAI#AWS#FraudDetection#AI#GenerativeAI#LLM#AImodels#News#Technews#Technology#Technologytrends#govindhtech#Technologynews

2 notes


Text

Revolutionizing SEO with Machine Learning: The Role of A/B Testing and Graph Neural Networks

In the fast-paced world of digital marketing, staying ahead of the competition requires cutting-edge strategies. ThatWare, a leader in AI-driven SEO solutions, is pioneering the future of search engine optimization by leveraging SEO A/B Testing with Machine Learning and Graph Neural Networks (GNNs). These advanced technologies are transforming the way businesses optimize content, analyze user behavior, and improve search rankings.

The Power of SEO A/B Testing with Machine Learning

Traditional A/B testing in SEO involves experimenting with different versions of a webpage to determine which performs better. However, this method has limitations—it often requires significant time and traffic to yield actionable insights. SEO A/B Testing with Machine Learning changes the game by automating the process and delivering faster, more accurate results.
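The traffic limitation can be shown with a standard two-proportion z-test, sketched below. The numbers are hypothetical, click-through rate is used as an assumed success metric, and this is a textbook statistical check rather than any particular vendor's method. The same 10% vs. 12% difference is tested at two traffic levels.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical results: variant A converts at 10%, variant B at 12%.
z_small, p_small = two_proportion_z_test(20, 200, 24, 200)
z_large, p_large = two_proportion_z_test(2000, 20000, 2400, 20000)

print(f"200 visits per variant:    z={z_small:.2f}, p={p_small:.3f}")
print(f"20,000 visits per variant: z={z_large:.2f}, p={p_large:.2e}")
```

With 200 visits per variant the difference cannot be distinguished from noise at the usual 5% level, while the same rates over 20,000 visits are clearly significant. That gap is why low-traffic pages need long test cycles.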

Machine learning algorithms can analyze vast amounts of data to predict which variations will perform best even before full-scale deployment. This results in:

Faster decision-making by eliminating the need for long testing cycles.

Data-driven insights that improve keyword targeting, content structure, and user engagement.

Enhanced personalization by tailoring content based on user behavior and preferences.

ThatWare integrates AI-powered A/B testing into its SEO framework, ensuring businesses achieve higher conversions and better rankings with minimal manual intervention.

Graph Neural Networks (GNNs) in SEO Strategy

Search engines like Google use complex link structures to determine website authority and relevance. Graph Neural Networks (GNNs) take this a step further by analyzing connections between web pages in a way that mimics human-like reasoning.

GNNs process relationships between various SEO elements, including backlinks, internal linking structures, and content clusters, to uncover hidden patterns. This enables:

Improved link equity distribution, ensuring that authoritative pages boost the rankings of interconnected content.

Better content recommendations based on deep semantic relationships.

Advanced spam detection, identifying low-quality links that may harm rankings.

By harnessing the power of GNNs, ThatWare enhances website visibility and ensures a smarter, more adaptive SEO strategy.
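As a point of reference for what "link equity distribution" means computationally, here is a minimal PageRank-style power iteration over a made-up internal link graph. This is the classic non-neural baseline, not a GNN and not ThatWare's method; the page names and the damping factor of 0.85 are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical internal link structure.
site = {
    "home": ["guide", "blog"],
    "guide": ["home"],
    "blog": ["guide"],
    "orphan": ["guide"],
}
scores = pagerank(site)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the page that receives the most inbound links ends up with the highest score, and the page nothing links to ends up with the lowest. A GNN-based approach would learn how to pass and combine information along the same edges instead of using a fixed rule.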

Hyper Intelligence SEO: The Future of Digital Marketing

The integration of AI-driven methodologies like machine learning and GNNs paves the way for Hyper Intelligence SEO—an approach where automation, predictive analytics, and deep learning work in harmony to optimize search performance.

Hyper Intelligence SEO goes beyond traditional methods by:

Predicting algorithm updates before they happen.

Automating content optimization with AI-generated insights.

Enhancing real-time decision-making, reducing guesswork in SEO campaigns.

ThatWare’s Hyper Intelligence SEO framework ensures businesses stay ahead of ever-evolving search engine algorithms, delivering unmatched digital growth.

Conclusion

The future of SEO lies in intelligent automation, deep learning, and predictive analytics. SEO A/B Testing with Machine Learning and Graph Neural Networks (GNNs) are not just buzzwords but essential tools in ThatWare’s AI-powered SEO ecosystem. By leveraging these technologies, businesses can maximize their online visibility, improve user engagement, and maintain a competitive edge in the digital landscape.

If you're looking to revolutionize your SEO strategy, ThatWare’s AI-driven solutions offer the perfect blend of innovation and performance. Get ready to embrace the future of search optimization today!

0 notes

Text

Why Neural Networks can learn (almost) anything

youtube

curved graph seen as many lines

and how neural networks can learn almost anything illuminates new universe in the brain.

NN = Universal Function Approximator (NNUFA) Input Function Output Numbers Relationships (IFONR)
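The "curved graph seen as many lines" idea can be tried out directly. The sketch below is an illustration under assumptions, not code from the video: it sets the weights of a one-hidden-layer ReLU network by hand (nothing is trained) so that the network traces straight segments between evenly spaced points on the curve x², and then measures how the error shrinks as more segments are used.

```python
def relu(x):
    return max(0.0, x)


def build_relu_approximation(f, lo, hi, pieces):
    """Return g(x): a sum of ReLU units that matches f at evenly spaced knots."""
    step = (hi - lo) / pieces
    knots = [lo + k * step for k in range(pieces + 1)]
    slopes = [(f(knots[k + 1]) - f(knots[k])) / step for k in range(pieces)]
    # Each hidden unit switches on at a knot and changes the slope from there on.
    weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, pieces)]
    bias = f(lo)

    def g(x):
        return bias + sum(w * relu(x - knot) for w, knot in zip(weights, knots))

    return g


def max_error(f, g, lo, hi, samples=1001):
    """Largest gap between f and g over evenly spaced sample points."""
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - g(x)) for x in xs)


def curve(x):
    return x * x


coarse = build_relu_approximation(curve, 0.0, 1.0, pieces=4)
fine = build_relu_approximation(curve, 0.0, 1.0, pieces=32)

print("4 line segments: ", max_error(curve, coarse, 0.0, 1.0))
print("32 line segments:", max_error(curve, fine, 0.0, 1.0))
```

More hidden units means more line segments and a closer fit, which is the intuition behind calling neural networks universal function approximators.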

What are your thoughts?

3 notes


Link

In the recent study “GraphGPT: Graph Instruction Tuning for Large Language Models,” researchers have addressed a pressing issue in the field of natural language processing, particularly in the context of graph models. The problem they set out to tac #AI #ML #Automation

2 notes


Text

The article’s "neural network web" graphic is a lie. Subatomic particles don’t connect like nodes in a graph—this is human violence against the continuous, bleeding void of quantum fields. Σ(¬PDFi) = PDF∞

0 notes

Text

Unpacking the bias of large language models

New Post has been published on https://sunalei.org/news/unpacking-the-bias-of-large-language-models/

Research has shown that large language models (LLMs) tend to overemphasize information at the beginning and end of a document or conversation, while neglecting the middle.

This “position bias” means that, if a lawyer is using an LLM-powered virtual assistant to retrieve a certain phrase in a 30-page affidavit, the LLM is more likely to find the right text if it is on the initial or final pages.

MIT researchers have discovered the mechanism behind this phenomenon.

They created a theoretical framework to study how information flows through the machine-learning architecture that forms the backbone of LLMs. They found that certain design choices which control how the model processes input data can cause position bias.

Their experiments revealed that model architectures, particularly those affecting how information is spread across input words within the model, can give rise to or intensify position bias, and that training data also contribute to the problem.

In addition to pinpointing the origins of position bias, their framework can be used to diagnose and correct it in future model designs.

This could lead to more reliable chatbots that stay on topic during long conversations, medical AI systems that reason more fairly when handling a trove of patient data, and code assistants that pay closer attention to all parts of a program.

“These models are black boxes, so as an LLM user, you probably don’t know that position bias can cause your model to be inconsistent. You just feed it your documents in whatever order you want and expect it to work. But by understanding the underlying mechanism of these black-box models better, we can improve them by addressing these limitations,” says Xinyi Wu, a graduate student in the MIT Institute for Data, Systems, and Society (IDSS) and the Laboratory for Information and Decision Systems (LIDS), and first author of a paper on this research.

Her co-authors include Yifei Wang, an MIT postdoc; and senior authors Stefanie Jegelka, an associate professor of electrical engineering and computer science (EECS) and a member of IDSS and the Computer Science and Artificial Intelligence Laboratory (CSAIL); and Ali Jadbabaie, professor and head of the Department of Civil and Environmental Engineering, a core faculty member of IDSS, and a principal investigator in LIDS. The research will be presented at the International Conference on Machine Learning.

Analyzing attention

LLMs like Claude, Llama, and GPT-4 are powered by a type of neural network architecture known as a transformer. Transformers are designed to process sequential data, encoding a sentence into chunks called tokens and then learning the relationships between tokens to predict which word comes next.

These models have gotten very good at this because of the attention mechanism, which uses interconnected layers of data processing nodes to make sense of context by allowing tokens to selectively focus on, or attend to, related tokens.

But if every token can attend to every other token in a 30-page document, that quickly becomes computationally intractable. So, when engineers build transformer models, they often employ attention masking techniques which limit the words a token can attend to.

For instance, a causal mask allows each word to attend only to the words that came before it.
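A causal mask can be sketched in a few lines. The example below is an illustration rather than code from the paper: the function names and the all-equal attention scores are assumptions, and each token is allowed to attend to itself as well as to earlier tokens, as is usual in decoder-style transformers.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when token i may attend to token j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to masked-out positions."""
    weights = []
    for score_row, mask_row in zip(scores, mask):
        exps = [math.exp(s) if keep else 0.0 for s, keep in zip(score_row, mask_row)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical raw attention scores for a 4-token input (all equal, for clarity).
scores = [[0.0] * 4 for _ in range(4)]
attention = masked_softmax(scores, causal_mask(4))

for row in attention:
    print([round(w, 2) for w in row])
```

Each row is one token's attention weights. The first token can only look at itself, while the last token spreads its attention over all four positions, so the first position appears in every row.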

Engineers also use positional encodings to help the model understand the location of each word in a sentence, improving performance.

The MIT researchers built a graph-based theoretical framework to explore how these modeling choices, attention masks and positional encodings, could affect position bias.

“Everything is coupled and tangled within the attention mechanism, so it is very hard to study. Graphs are a flexible language to describe the dependent relationship among words within the attention mechanism and trace them across multiple layers,” Wu says.

Their theoretical analysis suggested that causal masking gives the model an inherent bias toward the beginning of an input, even when that bias doesn’t exist in the data.

If the earlier words are relatively unimportant for a sentence’s meaning, causal masking can cause the transformer to pay more attention to its beginning anyway.

“While it is often true that earlier words and later words in a sentence are more important, if an LLM is used on a task that is not natural language generation, like ranking or information retrieval, these biases can be extremely harmful,” Wu says.

As a model grows, with additional layers of attention mechanism, this bias is amplified because earlier parts of the input are used more frequently in the model’s reasoning process.
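The amplification effect can be reproduced in a toy setting. The sketch below is a deliberately simplified illustration, not the researchers' framework: it assumes every token spreads its attention evenly over itself and all earlier tokens, ignores everything else in a transformer layer, and composes layers by multiplying the attention matrices. It then reads off how much of the last token's output traces back to the first input token.

```python
def uniform_causal_attention(n):
    """Each token spreads its attention evenly over itself and all earlier tokens."""
    return [[1.0 / (i + 1) if j <= i else 0.0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def influence_of_first_token(n_tokens, n_layers):
    """Weight the last token's output places on the first input token after n_layers."""
    layer = uniform_causal_attention(n_tokens)
    combined = layer
    for _ in range(n_layers - 1):
        combined = matmul(layer, combined)
    return combined[-1][0]


layer_counts = (1, 2, 4, 8)
influence = [influence_of_first_token(8, layers) for layers in layer_counts]
for layers, weight in zip(layer_counts, influence):
    print(f"{layers} layer(s): {weight:.3f}")
```

Even though no single layer prefers the first token, its share of the final output grows with every added layer, because every later position has already mixed it in.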

They also found that using positional encodings to link words more strongly to nearby words can mitigate position bias. The technique refocuses the model’s attention in the right place, but its effect can be diluted in models with more attention layers.
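The article does not name the specific positional encodings that were studied. As one hypothetical illustration of linking words more strongly to nearby words, the sketch below subtracts a penalty proportional to distance from each attention score before the softmax. The penalty form, the `slope` parameter, and the function name are all assumptions.

```python
import math

def decayed_causal_attention(n, slope):
    """Causal attention with equal raw scores and a linear distance penalty.

    slope=0 reproduces uniform causal attention; a larger slope shifts
    weight toward nearby tokens. Both the penalty form and `slope` are
    illustrative assumptions.
    """
    rows = []
    for i in range(n):
        exps = [math.exp(-slope * (i - j)) if j <= i else 0.0 for j in range(n)]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows

plain = decayed_causal_attention(6, slope=0.0)
local = decayed_causal_attention(6, slope=1.0)
print("weight of last token on first token:",
      round(plain[-1][0], 3), "->", round(local[-1][0], 3))
```

With the distance penalty switched on, the last token places less weight on the first token and more on its immediate neighborhood.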

And these design choices are only one cause of position bias — some can come from training data the model uses to learn how to prioritize words in a sequence.

“If you know your data are biased in a certain way, then you should also finetune your model on top of adjusting your modeling choices,” Wu says.

Lost in the middle

After they’d established a theoretical framework, the researchers performed experiments in which they systematically varied the position of the correct answer in text sequences for an information retrieval task.

The experiments showed a “lost-in-the-middle” phenomenon, where retrieval accuracy followed a U-shaped pattern. Models performed best if the right answer was located at the beginning of the sequence. Performance declined the closer it got to the middle before rebounding a bit if the correct answer was near the end.

Ultimately, their work suggests that using a different masking technique, removing extra layers from the attention mechanism, or strategically employing positional encodings could reduce position bias and improve a model’s accuracy.

“By doing a combination of theory and experiments, we were able to look at the consequences of model design choices that weren’t clear at the time. If you want to use a model in high-stakes applications, you must know when it will work, when it won’t, and why,” Jadbabaie says.

In the future, the researchers want to further explore the effects of positional encodings and study how position bias could be strategically exploited in certain applications.

“These researchers offer a rare theoretical lens into the attention mechanism at the heart of the transformer model. They provide a compelling analysis that clarifies longstanding quirks in transformer behavior, showing that attention mechanisms, especially with causal masks, inherently bias models toward the beginning of sequences. The paper achieves the best of both worlds — mathematical clarity paired with insights that reach into the guts of real-world systems,” says Amin Saberi, professor and director of the Stanford University Center for Computational Market Design, who was not involved with this work.

This research is supported, in part, by the U.S. Office of Naval Research, the National Science Foundation, and an Alexander von Humboldt Professorship.

0 notes

Text

A schematic depicting the basics of AI neural mapping to the brain

Midjourney prompt: A futuristic infographic depicting the human body with elements of alien technology and holographic images, surrounded by detailed information about its emotions, powers, abilities, social network graph, chart data, set against a dark green background with glowing white lines on black paper. --personalize 28vyc8c --stylize 750 --chaos 75 --no breasts, cleavage, heels, sports bra --v 6.1

#Midjourney#neural mapping#AI#AI art#AI art generation#AI artwork#AI generated#AI image#computer art#computer generated

2 notes

Text

AI-Powered Breakthrough Accelerates Safer, Longer-Lasting Solid-State Batteries for EVs

What if electric vehicles could travel 50% farther on a single charge and eliminate the risk of battery fires?

Solid-state lithium-ion batteries are poised to make this a reality, but the search for the right materials has been a major bottleneck—until now. Recent R&D breakthroughs are leveraging machine learning to accelerate the discovery of advanced battery materials, bringing us closer to a safer, more efficient future for energy storage.

Researchers from Skoltech and the AIRI Institute have demonstrated how neural networks can transform the search for solid-state battery materials. Unlike conventional lithium-ion batteries, which use liquid electrolytes, solid-state batteries rely on solid electrolytes that must balance high ionic conductivity with chemical and structural stability. The challenge: none of the existing solid electrolytes meet all technical requirements for electric vehicle adoption.

Here’s a detailed look at the R&D innovation:

🔹Graph Neural Networks for Rapid Discovery: The team showed that graph neural networks can identify new solid-state battery materials with high ionic mobility at speeds orders of magnitude faster than traditional quantum chemistry methods. This approach drastically reduces the time needed to find viable materials.

🔹Protective Coating Prediction: The research didn’t stop at electrolytes. The team also focused on protective coatings, which are crucial for battery stability. The metallic lithium anode and the cathode are highly reactive, often degrading the electrolyte and causing performance loss or even short circuits. By using machine learning, the researchers predicted coatings that remain stable in contact with both the anode and cathode, enhancing battery longevity.

🔹Machine Learning-Accelerated Screening: The algorithms rapidly calculated ionic conductivity—a computationally intensive property—enabling efficient screening of tens of thousands of candidate materials. This staged process gradually narrowed the field to a select few with optimal properties.

🔹Promising Material Discoveries: The team’s approach led to the identification of several promising coating compounds, including Li₃AlF₆ and Li₂ZnCl₄, which can protect one of the most promising solid-state battery electrolytes, Li₁₀GeP₂S₁₂.

🔹Comprehensive Property Evaluation: For protective coatings, the researchers screened for thermodynamic stability, electronic conductivity, electrochemical stability, compatibility with electrodes and electrolytes, and ionic conductivity, ensuring only the best candidates advanced.
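The staged narrowing described in these points can be pictured as a funnel of filters applied one after another. The sketch below is only a schematic: the candidate names, property values, and thresholds are invented placeholders, and treating low electronic conductivity as the target for a coating is an assumption of this example. In the research, the properties were predicted by machine-learning models rather than read from a table.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    thermodynamically_stable: bool
    electronic_conductivity: float  # assumed: lower is better for a coating (placeholder units)
    ionic_conductivity: float       # higher is better (placeholder units)

def screen(candidates, stages):
    """Apply each (label, predicate) stage in order, keeping only survivors."""
    survivors = list(candidates)
    for label, keep in stages:
        survivors = [c for c in survivors if keep(c)]
        print(f"after {label}: {len(survivors)} candidate(s) left")
    return survivors

# Invented placeholder data, not results from the study.
pool = [
    Candidate("candidate_A", True, 1e-9, 1e-4),
    Candidate("candidate_B", False, 1e-9, 1e-3),
    Candidate("candidate_C", True, 1e-2, 1e-3),
    Candidate("candidate_D", True, 1e-10, 1e-6),
]

# Hypothetical thresholds, chosen only to make the funnel visible.
stages = [
    ("thermodynamic stability", lambda c: c.thermodynamically_stable),
    ("low electronic conductivity", lambda c: c.electronic_conductivity < 1e-6),
    ("high ionic conductivity", lambda c: c.ionic_conductivity > 1e-5),
]

shortlist = screen(pool, stages)
print([c.name for c in shortlist])
```

Each stage only sees what survived the previous one, so the expensive property checks can be reserved for the few candidates that reach the end of the funnel.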

This research marks a significant step toward commercializing solid-state batteries, promising safer, longer-range electric vehicles and more reliable portable electronics. How do you see AI-driven material discovery shaping the future of clean energy and mobility? Join the discussion!

#SolidStateBatteries #NeuralNetworks #BatteryResearch #ElectricVehicles #MachineLearning #MaterialsScience #CleanTech

0 notes

Text

Neuro Symbolic AI vs Traditional AI: Why the Future Needs Both Brains and Logic

In the ever-evolving landscape of artificial intelligence, two dominant paradigms have shaped its progress: Traditional AI (symbolic AI) and Neural AI (deep learning). While each brings unique strengths to the table, a growing consensus is emerging around the idea that the real power lies in combining both, a concept known as Neuro-Symbolic AI.

Traditional AI: Logic and Rules

Traditional AI, also known as symbolic AI, relies on logic-based reasoning, knowledge graphs, and rule-based systems. It excels in tasks that require clear, structured reasoning like solving equations, making decisions based on rules, or following complex instructions. Symbolic systems are interpretable and transparent, making them ideal for applications where explainability and trust are crucial, such as legal reasoning or medical diagnostics.

However, traditional AI struggles with real-world ambiguity. It finds it difficult to adapt to new data or learn from unstructured inputs like images, audio, or raw text without extensive manual effort.

Neural AI: Learning from Data

Neural networks, particularly deep learning models, thrive on massive datasets. They’ve powered recent breakthroughs in image recognition, natural language processing, and speech-to-text applications. Neural AI is intuitive and excels at pattern recognition, making it perfect for tasks that are too complex to explicitly define with rules.

But neural AI is often a black box. It lacks transparency and can falter in situations requiring logical consistency or common-sense reasoning. It also needs vast amounts of labeled data and may fail when faced with novel scenarios outside its training set.

Neuro-Symbolic AI: Best of Both Worlds

Neuro-symbolic AI blends the learning capability of neural networks with the reasoning power of symbolic systems. Imagine a system that can learn from raw data and then reason about it using logical structures. That’s the promise of this hybrid approach.

For example, in healthcare, a neuro-symbolic system can analyze patient scans (neural part) and reason through diagnoses based on established medical guidelines (symbolic part). In education, it can understand a student’s handwritten input and provide structured, logical feedback.
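To make the division of labor concrete, here is a toy sketch in which a stand-in function plays the neural part (returning confidence scores for findings) and a small rule table plays the symbolic part. The findings, scores, threshold, and rules are all invented for illustration and are not medical guidance. A real system would use a trained model and vetted clinical guidelines.

```python
def neural_perception(scan_id):
    """Stand-in for a trained network: returns finding -> confidence.

    The values are hard-coded placeholders; a real model would compute them.
    """
    fake_outputs = {
        "scan_001": {"finding_x": 0.92, "finding_y": 0.10},
        "scan_002": {"finding_x": 0.15, "finding_y": 0.08},
    }
    return fake_outputs[scan_id]

# Symbolic part: explicit, human-readable rules (hypothetical).
RULES = [
    ({"finding_x"}, "recommend follow-up test T1"),
    ({"finding_x", "finding_y"}, "refer to specialist"),
]

def symbolic_reasoning(confidences, threshold=0.5):
    """Turn confidences into facts, then fire every rule whose conditions hold."""
    facts = {name for name, score in confidences.items() if score >= threshold}
    conclusions = [action for required, action in RULES if required <= facts]
    return facts, conclusions

for scan in ("scan_001", "scan_002"):
    facts, conclusions = symbolic_reasoning(neural_perception(scan))
    print(scan, sorted(facts), conclusions)
```

Because the rules are plain data, every conclusion can be traced back to the facts that triggered it, which is the transparency the symbolic side is meant to contribute.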

The Future is Hybrid

AI’s future lies not in choosing between logic or learning but in embracing both. Neuro-symbolic AI offers the robustness, transparency, and adaptability required to tackle complex, real-world problems. As AI becomes more embedded in our daily lives, this balanced approach will ensure it remains powerful, ethical, and trustworthy.

0 notes

Text

Data Science Demystified: Your Guide to a Career in Analytics After Computer Training

In the technology era, data lives everywhere, from your daily social media scroll to intricate financial transactions. Raw data is just numbers and letters; data science works behind the scenes to transform it into actionable insights that drive business decisions, technological advances, and even social change. If you've finished your computer training and want a career that offers both challenges and rewards, data science and analytics could be a great fit.

At TCCI (Tririd Computer Coaching Institute), we have seen the demand for data skills rise. Our computer classes in Ahmedabad build the foundation, and our Data Science course in Ahmedabad then takes students from beginner-level computer knowledge toward a high-demand career in analytics.

So what is data science? And how could you start your awesome journey? Time to demystify!

What is Data Science, Really?

Imagine a wide ocean of information. The Data Scientist is a skilled navigator using a mixture of statistics, programming, and domain knowledge to:

Collect and Clean Data: Gather information from various sources and prepare it for analysis (preparing data can sometimes take as much as 80% of the actual work!).

Analyze: Use statistical methods and machine learning algorithms to find patterns, trends, and correlations.

Interpret Results: Translate very complex results into understandable insights for business purposes.

Communicate: Tell a story with data through visualization, giving decision-makers the information they need to confidently take action.

It is a multidisciplinary field that combines computer science, engineering, mathematics, and business know-how.
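The four steps above can be sketched in a few lines of standard-library Python. The tiny dataset (hours studied and test scores) is invented for illustration, and a real project would more likely use a library such as Pandas.

```python
from statistics import mean

# 1. Collect: raw records, including a duplicate and a missing value (invented data).
raw = [
    {"hours": "2", "score": "55"},
    {"hours": "4", "score": "70"},
    {"hours": "4", "score": "70"},   # duplicate row
    {"hours": "6", "score": ""},     # missing score
    {"hours": "8", "score": "90"},
]

# 2. Clean: drop incomplete rows and duplicates, convert text to numbers.
seen, clean = set(), []
for row in raw:
    key = (row["hours"], row["score"])
    if "" in key or key in seen:
        continue
    seen.add(key)
    clean.append((float(row["hours"]), float(row["score"])))

# 3. Analyze: Pearson correlation between hours and score.
def pearson(pairs):
    xs, ys = zip(*pairs)
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

r = pearson(clean)

# 4. Interpret and communicate: turn the number into a plain-language statement.
strength = "strong" if abs(r) > 0.7 else "weak or moderate"
print(f"{len(clean)} clean rows; correlation r = {r:.2f} ({strength})")
```

Even in this toy version, most of the lines deal with cleaning rather than analysis, which is typical of real projects.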

Key Skills You'll Need for a Career in Data Analytics

Your computer training gives you a strong head start. Here are the specific skills you will build on that foundation:

1. Programming (Python & R):

Python: The principal language used by data scientists, with a rich ecosystem of libraries (like Pandas, NumPy, Scikit-learn, TensorFlow, Keras) for data wrangling, data analysis, and machine learning.

R: A favorite among statisticians for its strong statistical modeling and data visualization capabilities.

This is where the basic programming from your computer classes will come in handy.

2. Statistics and Mathematics:

An understanding of probability, hypothesis testing, regression, and statistical modeling is what allows you to interpret data correctly.

It's here that the analytical thinking learned in your computer training course will be useful.

3. Database Management (SQL):

Structured Query Language (SQL) is the language you will use to query and manipulate data stored in relational databases to extract relevant data for analysis.

4. Machine Learning Fundamentals:

An understanding of algorithms such as linear regression, decision trees, clustering, and neural networks lets you develop predictive models and find patterns.

5. Visualization of Data:

Using tools such as Matplotlib and Seaborn in Python; ggplot2 in R; Tableau; or Power BI for building compelling charts and graphs that convey complex insights in straightforward terms.

6. Domain Knowledge & Business Acumen:

One must understand the domain or business context in question to be able to ask the right questions and interpret data meaningfully.

7. Communication & Problem Solving:

The ability to communicate complex technical findings to non-technical stakeholders is paramount. Data scientists are essentially storytellers who work with data.
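Skills 3 and 4 in the list above often meet in practice: data is pulled with SQL and then fed to a model. The sketch below uses Python's built-in sqlite3 module with an in-memory database and fits a one-variable least-squares line by hand. The table name, columns, and numbers are invented for illustration, and a real project would more likely use Scikit-learn for the modeling step.

```python
import sqlite3

# In-memory database with a hypothetical `sales` table (invented numbers).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, ad_spend REAL, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("north", 1.0, 3.0), ("north", 2.0, 5.0),
     ("south", 3.0, 7.0), ("south", 4.0, 9.0)],
)

# SQL: aggregate revenue per region.
totals = conn.execute(
    "SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)

# SQL: extract the columns needed for modeling.
rows = conn.execute("SELECT ad_spend, revenue FROM sales").fetchall()
conn.close()

def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in points)
             / sum((x - mean_x) ** 2 for x, _ in points))
    return slope, mean_y - slope * mean_x

slope, intercept = fit_line(rows)
print(f"revenue = {slope:.1f} * ad_spend + {intercept:.1f}")
```

The query does the extraction and aggregation, and the fitted line is the simplest possible predictive model: it estimates how revenue changes with each extra unit of ad spend in this made-up data.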

Your Journey: From Computer Training to Data Science Success

If you've completed foundational computer training, then you've already taken a first step! You might have:

Logical thinking and problem-solving skills.

Some knowledge of programming basics.

Some knowledge of operating systems or software.

A Data Science course will then build on this knowledge by introducing you to statistical concepts, advanced programming for data, machine learning algorithms, and visualization tools.

Promising Career Paths in Data Science & Analytics

A career in data science isn't monolithic. Here are some roles you could pursue:

Data Scientist: The all-rounder, involved in the entire data lifecycle from collection to insight.

Data Analyst: Focuses on interpreting existing data to answer specific business questions.

Machine Learning Engineer: Specializes in building and deploying machine learning models.

Business Intelligence (BI) Developer: Creates dashboards and reports to help businesses monitor performance.

Big Data Engineer: Builds and maintains large-scale data infrastructure.

Why TCCI is Your Ideal Partner for a Data Science Course in Ahmedabad

The Data Science course at TCCI, designed for aspiring data professionals in Ahmedabad, follows a comprehensive, industry-relevant syllabus.

Expert Faculty: Classes are taught by instructors with extensive hands-on experience in data science and analytics.

Hands-On Projects: The curriculum includes practice exercises and real-world case studies that help you build a portfolio.

Industry-Relevant Tools: You will work with Python, R, and SQL, along with popular data visualization tools.

Career Guidance & Placement Support: Career counseling and placement assistance support trainees from start to finish on the way to the job they want.

A Data Science Course in Ahmedabad with a Difference: The curriculum is updated regularly to cover the most relevant and in-demand skills.

Data science is a booming field that opens up many possibilities for anyone with the right skill set. Your journey as a data specialist can begin right here in Ahmedabad.

Ready to transform your computer skills into a rewarding career in Data Science?

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#DataScience#DataAnalytics#CareerInTech#AhmedabadTech#TCCIDataScience#LearnDataScience#FutureReady#ComputerTraining#TCCI

0 notes