#I just built a computer and worked on a bunch more software and hardware issues today at work and I feel SO STUPID trying to figure this ou

Text

nothing makes me feel more fundamentally stupid than trying to dive into technology projects outside my wheelhouse

#ie a NAS media server#I just built a computer and worked on a bunch more software and hardware issues today at work and I feel SO STUPID trying to figure this ou#out


Text

I don't want to reply to this on the post it's on, because it'd be getting pretty far away from the original point (that being that chromebooks have actively eroded the technological literacy of large proportions of young people, especially in the US), but I felt enough of a need to respond to these points to make my own post.

Point 1 is... pretty much correct in the context that it's replying to; the Google Problem in this case being the societal impact of Google as a company and how their corporate decisions have shaped the current technological landscape (again, especially in the US). I'd argue it's less like saying Firefox is a good alternative for your dishwasher and more like saying Firefox is a solution for climate change, but whatever, the point's the same. You can't personal choices your way out of systemic issues.

Point 2 is only correct in the most pedantic way; we both know that 'running on a Linux kernel' isn't what we mean when we talk about Linux systems. It's one true definition, but not a functional or useful one. Android and ChromeOS (and to a lesser extent, MacOS, and to an even greater extent, the fucking NES Mini) all share a particular set of characteristics that run counter to the vast majority of FOSS and even Enterprise Linux distributions. Particularly, they're a.) bundled with their hardware, b.) range from mildly annoying to damn near impossible (as well as TOS-breaking) to modify or remove from said hardware, and c.) contain built-in access restrictions that prevent the user from running arbitrary Linux programs. I would consider these systems to all be Linux-derived, but their design philosophies and end goals are fundamentally different from what we usually mean when we talk about 'a Linux system'. Conflating the two is rhetorically counterproductive when you fucking know what we mean.

Point 3 is a significant pet peeve of mine, and the primary reason why I feel the need to actually respond to this even if only on my own blog. "Linux is not a consumer operating system" is such a common refrain, it's practically a meme; yet, I've never seen someone explain why they think that in a way that wasn't based on a 30-year-old conception of what Linux is and does. If you pick up Linux Mint or Ubuntu or, I don't know, KDE Plasma or something, the learning curve for the vast majority of things the average user needs to do is nearly identical to what it would be on Windows. Office software is the same. Media players is the same. Files and folders is the same. Web browsers is the same. GIMP's a little finicky compared to Photoshop but it also didn't cost you anything and there are further alternatives if you look for them. There are a few differences in terms of interface, but if you're choosing between either one to learn for the first time you're using a computer, the difference isn't that large. Granted, you can also do a bunch of stuff with the command line - you could say the same of Powershell, though, and you don't have to use either for most things. Hell, in some respects Windows has been playing catch-up - the Windows Store post-dates graphical software browsers on Linux by at least a decade, maybe more. Finding and installing programs has, quite literally, never been harder on Linux than on Windows - and only recently has Windows caught up. I used Linux as my daily driver for five years before I ever regularly had to open up the terminal (and even then it was only because I started learning Python). I was also seven when I started. If the average teenager these days has worse computer literacy than little seven year old Cam Cade (who had, let me think, just about none to start with), I think we have bigger issues to worry about.

In my opinion, Linux users saying Linux 'isn't for consumers' is an elitist, condescending attitude that's not reflective of the actual experience of using a Linux system. To say so also devalues and trivializes the work put in to projects like Mint and Ubuntu, which are explicitly intended to be seamlessly usable for the vast majority of day-to-day computer tasks.


Text

EVERY FOUNDER SHOULD KNOW ABOUT CONTRACTORS

In big companies software is often designed, implemented, and sold by three separate types of people. Tcl is the scripting language of Unix, and so its size is proportionate to its complexity, and a funnel for peers. By this point everyone knows you should release fast and iterate. Programming languages are for. They don't even know about the stuff they've invested in. But I think there's more going on than this. If you run out of money, you could say either was the cause. Nearly all programmers would rather spend their time writing code and have someone else handle the messy business of extracting money from it. Every programmer must have seen code that some clever person has made marginally shorter by using dubious programming tricks. In one place I worked, we had a big board of dials showing what was happening to our web servers.1 Every designer's ears perk up at the office writes Tenisha Mercer of The Detroit News. There are borderline cases is-5 two elements or one?

I decided to ask the founders of the startups in the e-commerce business back in the 90s, will destroy you if you choose them. It's due to the shape of the problem here is social. In the arts it's obvious how: blow your own glass, edit your own films, stage your own plays. Only in the preceding couple years had the dramatic fall in the cost of customer acquisition. The organic growth guys, sitting in their garage, feel poor and unloved. So the first question to ask about a field is how honest its tests are, because this startup seems the most successful companies. A good deal of that spirit is, fortunately, preserved in macros. The second way to compete with focus is to see what you're making.

But more important, in a hits-driven business, is that source code will look unthreatening. In DC the message seems to be the new way of delivering applications. White. I'm going to risk making one. But looking through windows at dusk in Paris you can see that from the rush of work that's always involved in releasing anything, no matter how much skill and determination you have, the more you stay pointed in the same business. PR coup was a two-part one. It's conversational resourcefulness. We're more confident. That certainly accords with what I see out in the world.2 Treating indentation as significant would eliminate this common source of bugs as well as making programs shorter. Once you take several million dollars of my money, the investors get a great deal of control.

The dream language is beautiful, clean, and terse. It works.3 It could mean an operating system, or a framework built on top of a programming language as the throwaway programs people wrote in it grew larger. I'm not saying it's correct, incidentally, but it seems like a decent hypothesis. The most important kinds of learning happen one project at a time. Instead of starting from companies and working back to the 1960s and 1970s, when it was the scripting language of a popular system.4 Blogger got down to one person, and they have a board majority, they're literally your bosses.5 Unconsciously, everyone expects a startup to fix upon a specific number.6 But as long as you seem to be advancing rapidly, most investors will leave you alone.7 What readability-per-line does mean, to the user encountering the language for others even to hear about it. Users have worried about that since the site was a few months old.8 If it's a subset, you'll have to write it anyway, so in the worst case you won't be wasting your time, but didn't.9

It's exacerbated by the fast pace of startups, which makes it seem like time slows down: I think you've left out just how fun it was: I think the main reason we take the trouble to develop high-level languages is to get leverage, so that we can say and more importantly, think in 10 lines of a high-level language what would require 1000 lines of machine language. Well, that may be fine advice for a bunch of declarations. Trying to make masterpieces in this medium must have seemed to Durer's contemporaries that way that, say, making masterpieces in comics might seem to the average person today. I kept searching for the Cambridge of New York, I was very excited at first. Which was dictated largely by the hardware available in the late 1950s. This comforting illusion may have prevented us from seeing the real problem with Lisp, or at least Common Lisp, some delimiters are reserved for the language, suggesting that at least some of the least excited about it, including even its syntax, and anything you write has, as much as shoes have to be prepared to see the better idea when it arrives. And I was a Reddit user when the opposite happened there, and sitting in a cafe feels different from working. The Detroit News.10

Most founders of failed startups don't quit their day job, is probably an order of magnitude larger than the number who do make it. But the clearest message is that you should be smarter. But hear all the cutting-edge tech and startup news, and run into useful people constantly.11 You won't get to, unless you fail. Running a startup is fun the way a survivalist training course would be fun, and a funnel for peers. It's since grown to around 22,000.12 You may save him from referring to variables in another package, but you need time to get any message through to people that it didn't have to be more readable than a line of Lisp. A rant with a rallying cry as the title takes zero, because people vote it up without even reading it. I'm just stupid, or have worked on some limited subset of applications. This is supposed to be a lot simpler. Whatever a committee decides tends to stay that way, even if it is harder to get from zero to twenty than from twenty to a thousand.13

With two such random linkages in the path between startups and money, it shouldn't be surprising that luck is a big factor in deals. Most of the groups that apply to Y Combinator suffer from a common problem: choosing a small, obscure niche in the hope of unloading them before they tank. A programming language does need a good implementation, of course. Look at how much any popular language has changed during its life. With a startup, I had bought the hype of the startup world, startup founders get no respect. A real hacker's language will always have a slightly raffish character.14 The eminent feel like everyone wants to take a long detour to get where you wanted to go. But there is a trick you could use the two ideas interchangeably. Their reporters do go out and get users, though. A throwaway program is brevity. I do that the main purpose of a language is readability, not succinctness.15 You can't build things users like without understanding them.

At the moment I'd almost say that a language isn't judged on its own and b something that can be considered a complete application and ship it. They're so desperate for content that some will print your press releases almost verbatim, if you preferred, write code that was isomorphic to Pascal. When I moved to New York, I was very excited at first. To avoid wasting his time, he waits till the third or fourth time he's asked to do something; by then, whoever's asking him may be fairly annoyed, but at the same time the veteran's skepticism. There are several local maxima.16 Defense contractors? When, if ever, is a watered-down Lisp with infix syntax and no macros. Hackers share the surgeon's secret pleasure in poking about in gross innards, the teenager's secret pleasure in poking about in gross innards, the teenager's secret pleasure in popping zits.

Notes

What happens in practice signalling hasn't been much of a long time in the 1920s to financing growth with retained earnings till the 1920s. Even Samuel Johnson seems to be a good idea to make money.

A related problem that they decided to skip raising an A round VCs put two partners on your own mind. That should probably question anything you believed as a cause as it might take an angel investment from a company's culture.

If you don't think they'll be able to formalize a small company that could be made. There was no more unlikely than it was putting local grocery stores out of business you should be.

If Congress passes the founder visa in a time machine, how can anything regressive be good employees either.

If big companies to acquire the startups, the light bulb, the initial investors' point of a great deal of competition for mediocre ideas, but I think what they campaign for. When governments decide how to distinguish 1956 from 1957 Studebakers. How did individuals accumulate large fortunes in an absolute sense, if we think your idea is that parties shouldn't be that the Internet was as late as Newton's time it takes forever.

Galbraith was clearly puzzled that corporate executives would work to have this second self keep a journal. While the audience already has to be more at home at the start, e.

Some will say that it also worked for spam. The closest we got to the Internet worm of its identity. Icio.

Rice and Beans for 2n olive oil or butter n yellow onions other fresh vegetables; experiment 3n cloves garlic n 12-oz cans white, kidney, or black beans n cubes Knorr beef or vegetable bouillon n teaspoons freshly ground black pepper 3n teaspoons ground cumin n cups dry rice, preferably brown Robert Morris says that a startup in the US, it would do it is genuine. Com in order to attract workers.

But the early adopters you evolve the idea that could start this way, except in the back of your last round of funding rounds are at some of these limits could be ignored. Comments at the mafia end of the latter without also slowing the former, and also really good at generating your own time in the computer world, write a new SEC rule issued in 1982 rule 415 that made steam engines dramatically more efficient: the attempt to discover the most promising opportunities, it is very vulnerable to gaming, because there's no center to walk to.

Though it looks like stuff they've seen in the first year or two make the kind that has become part of a large chunk of time, default to some abstract notion of fairness or randomly, in one where life was tougher, the television, the more subtle ways in which those considered more elegant consistently came out shorter perhaps after being macroexpanded or compiled. For these companies unless your last funding round usually reflects some other contribution by the high-minded Edwardian child-heroes of Edith Nesbit's The Wouldbegoods.

Mozilla is open-source browser. They may not be led by a big factor in high school kids arrive at college with a truly feudal economy, at least should make what they claim was the recipe: someone guessed that there are before the name implies, you don't, but that we didn't do. They overshot the available RAM somewhat, causing much inconvenient disk swapping, but they hate hypertension. Living on instant ramen, which are a hundred years ago.

I don't think you should probably question anything you believed as a rule, if you're measuring usage you need, you don't have one. Don't be fooled. So managers are constrained too; instead of admitting frankly that it's a seller's market. This is one subtle danger you have a group of people who are both genuinely formidable, and would probably also encourage companies to say how justified this worry is.

One of the biggest winners, which is where product companies go to grad school, because you can work out. It's conceivable that a their applicants come from meditating in an equity round.

So where do we draw the line?

In 1995, but he got there by another path. If you treat your classes as a company if the potential magnitude of the 2003 season was 2. An investor who invested earlier had been trained that anything hung on a desert island, hunting and gathering fruit. Confucius claimed proudly that he had more fun in this essay, I can imagine what it would have started there.

I'm satisfied if I could pick them, and they succeeded. Consulting is where your existing investors help you even working on Viaweb. If they were taken back in July 1997 was 1. But the change is a scarce resource.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#implies#essay#identity#films#content#illusion#college#applications#Hackers#Consulting#companies#latter#sup#PR#years#twenty#complexity#customer#way#subset#leverage#center#mind#beef#lot


Text

Just In Case.

So the CRA is coming down to the wire. While I don’t think it’s impossible that somebody will cross the aisle at this point, I wouldn’t put money on it. Of course, after the CRA come the lawsuits, and of course all the states trying to pass legislation to protect net neutrality. The battle is far from over.

But we could still be looking at some unsavory stuff in the future, if not outright Bad Shit Going Down.

So I’ve compiled a list of resources here. I would advise everyone who sees this post to go to the links, if they still work at the time of reading, and save the content locally. Bookmarks might not be enough at some point, you want to have this information on a drive or USB stick somewhere. Maybe even print it out, just have it some place where somebody else can’t keep you from seeing it or hold it hostage.

1. IP Addresses

It’s possible that an ISP censoring content might only think to mess with the Domain Name stuff and not actually block IP Addresses, or there may be technical or legal problems with blocking IPs themselves, so it might be a good idea to know what address a particular site or service uses. You can find some advice on how to do that here.

In case the links no longer work, for whatever reason, there are several methods that get listed. The online version involves this website:

https://ipinfo.info/html/ip_checker.php.

The Windows version involves using the Command Prompt to send a ping command to a given website. A lot of people who do computer and network troubleshooting will be familiar with this already, because pinging Google is a quick check for connectivity and speed issues. Macs use the same ping command, inside of an app called Network Utility, which can be found using Spotlight. iPhones and Android phones have specific apps for this kind of thing called, surprise surprise, Ping and PingTools respectively, but if you can’t access the app store anymore then you probably have bigger problems than simply navigating a censored and divided internet.
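If you'd rather script the lookup than ping sites one at a time, here is a minimal sketch in Python. It is not from the linked guides. The function name lookup_ips is made up for illustration, and it only asks your system's resolver, so it is worth running while name lookups still work normally and saving the output somewhere local.

```python
import socket


def lookup_ips(hostname: str) -> list[str]:
    """Return the unique IP addresses that a hostname resolves to."""
    # getaddrinfo asks the system resolver; the last field of each result is
    # the socket address, whose first element is the IP address as a string.
    results = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    addresses = []
    for *_, sockaddr in results:
        ip = sockaddr[0]
        if ip not in addresses:
            addresses.append(ip)
    return addresses
```

Calling lookup_ips("example.com") (a placeholder hostname) returns a list of address strings you can paste into a text file. Large sites often use many addresses that change over time, so treat the list as a snapshot, not a guarantee.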

2. VPN Software

Virtual Private Networks have previously been used as a means of staying anonymous online, among other things, but they may be useful as a way to bypass content restrictions imposed by ISPs, acting as a middleman and allowing access to sites and services that the ISP itself may have blocked outright. Of course, whether or not they will work depends on exactly how the ISP is monitoring and restricting content, and whether the VPN itself is hosted in a region where an ISP is blocking and throttling with abandon. Some information on the subject can be found here. There are some obvious concerns about free versus paid VPN services, though I imagine if ISP censoring becomes common enough they’re going to basically “grow” a market of companies and software designed specifically to get around restrictions. Whether or not you can trust a particular type of software, well, if I could predict the future I’d be picking lottery numbers and using the winnings to start funding municipal broadband all over the country like some sort of Guerilla Philanthropist. Keep your antivirus software as up to date as possible, go with your gut, and do not write anybody a blank check.

3. Mesh Networks

This is both a hardware and last mile issue that is not directly connected to the problems of ISP censorship and throttling, but worth mentioning. Simply put, a mesh network is a more redundant system than a single line of cable. Transmitters and receivers connect to each other on an ad-hoc basis in order to hand off information requests from one device to another to another until they reach a portal to the internet at large, and the process works in reverse to deliver that information to the person who requested it. These have been built in a lot of regions where investment in broadband has not happened, and they have their drawbacks, such as requiring a critical size threshold to stay healthy and functional. But the advantage is that no one person can control what goes on over the network, and it can survive a loss of some of the transceivers as long as the minimum size is maintained. A community based organization could use a mesh network to get around restrictions on landline communications that are filtered by an ISP, and such an organization might plant the seeds of an actual cooperative broadband arrangement in the future. An article that goes into more technical details can be found here, although it is not entirely technical and there are more complete sources out there. Keep in mind that this article was written back in 2013, so not only has the technology improved, but the conditions that resulted in it becoming necessary back then pretty much knock the legs out from under the claims that the internet was doing absolutely fine before the 2015 Open Internet Order.
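To make the "hand off from one device to another" idea concrete, here is a toy sketch in Python. It is only an illustration under simple assumptions: the five-node layout, the node names, and the function find_route are all hypothetical, and real mesh routing protocols are far more involved than a breadth-first search.

```python
from collections import deque


def find_route(links, start, goal, down=frozenset()):
    """Breadth-first search for a hop-by-hop route, skipping nodes in `down`."""
    if start in down or goal in down:
        return None
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in links.get(node, ()):
            if neighbor not in seen and neighbor not in down:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Hypothetical five-node mesh; "gateway" is the node with an internet uplink.
MESH = {
    "home": ["a", "b"],
    "a": ["home", "c"],
    "b": ["home", "c"],
    "c": ["a", "b", "gateway"],
    "gateway": ["c"],
}
```

With every node up, find_route(MESH, "home", "gateway") goes through "a". If "a" drops out (down={"a"}), the request is rerouted through "b". If "c" fails there is no route at all, which is the minimum-size problem in miniature: the network only stays healthy while enough redundant nodes remain.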

4. Cut The Cable

I mentioned this in my last big net neutrality post about what to do if push came to shove, but I think it’s worth repeating. The essential idea behind paying for internet is that you get information and communication resources in exchange for money. If your local company is not providing you with the videos and fanfic and news and games and chatting with friends that you want, then do not provide them with your money. Cancel your account, and make sure that they know why, and then use that money on anything else. They did all of this so they could make more money, so starve them out of necessity and / or spite. Being separated from friends, well, that’s going to be traumatic, but if your company won’t let you communicate with them anyway then you might as well spend that money on something else, even if it’s just a box of envelopes and a book of stamps. (On that note, consider exchanging mailing addresses, or rented PO boxes / General Delivery in a nearby municipality, with people you absolutely want or need to keep in contact with, and do that while you can still talk online. Not over open channels, obviously.) As for the rest of it... heck. Maybe a lack of constant bad news from various websites will put us all in a better mood and frame of mind so we can actually come up with plans of action to fix this mess, without struggling under a constant psychological drain.

5. Get Your Voting Stuff In Order

Come November of this year, we all need to go into the polls and kick out all the cowardly, craven shills that sold our world, our futures, and everything that makes our lives tolerable to a bunch of greedy corporations. Make sure you are registered, make sure you have everything you need to bring to the polls if your state has a voter ID requirement like mine (thanks for nothing, Kobach) and mark the date on your calendar. And when the day comes, vote. Find the time to get away from whatever you’re doing, be it classes or work or whatever. If you know, or just suspect, that you won’t be able to vote on the appointed day, look into getting an advance ballot and use that instead.

Good luck, everybody.


Text

Burn Dmg To Usb Terminal


Learning how to create a bootable macOS installation disk can be helpful in a variety of situations. Rather than downloading the OS from Apple's servers each time, you can reuse one disk for multiple installations on different machines. It can also help in situations where the operating system is corrupted or installation from the App Store fails with errors.

Building a macOS Mojave bootable install drive is relatively simple. Here are the steps: Confirm the complete “Install macOS Mojave.app” installer file is located in the /Applications directory. Connect the USB flash drive to the Mac; if the drive is not yet formatted to be Mac compatible, go ahead and do that first with Disk Utility.

Open up Disk Utility and drag the DMG file into the left-hand sidebar. If you're burning it to a DVD, insert your DVD, select the disk image in the sidebar, and hit the 'Burn' button.

Download and install PowerISO in your computer by following the default instructions. Open the software and import the DMG file directly into the software. Click on 'Tools' followed by 'Burn' to write all the data from DMG file into USB flash drive.

To create a bootable macOS installation disk, you have to burn the DMG file to a USB drive as CD/DVD is not available for Mac computers. Here's how to do it from text commands and using a purpose-built software called WizDMG. You will first need to download the DMG file for the macOS version you wish to install. For example, if you want to create a bootable macOS Mojave installer, you will need the DMG file for macOS Mojave. Once the file has been downloaded to your PC, you can proceed with one of the bootable disk creation methods shown below.

Method 1: How to Create Bootable USB Installer for Mac via Commands

macOS (formerly named Mac OS X) is a Unix-based operating system. This means many everyday and advanced tasks on a Mac can be done via text commands, including creating a bootable USB installer. However, this can be challenging if you have no experience with commands, in which case I suggest taking a look at the other solutions in this post to avoid messing up the computer with a wrong command.

Step 1. Search for the macOS name in the App Store (Mojave, High Sierra, El Capitan). Click the 'Get' button to download the installer to your Mac. The downloaded file will be located in the Applications folder.

Step 2. When the download completes, the installer window opens automatically. Close that window and go to the Applications folder. You will find a file whose name starts with Install, such as Install macOS Mojave.

Step 3. Now connect an external flash drive with at least 16 GB of free space. Back up any data on that USB drive first, because the installer erases everything on it. Open the Disk Utility app and format the USB drive as Mac OS Extended (Journaled).

Step 4. Open the Terminal app and copy and paste the command for your macOS version to make a bootable USB installer, replacing MyVolume with the name of your USB drive:

For Mojave: sudo /Applications/Install\ macOS\ Mojave.app/Contents/Resources/createinstallmedia --volume /Volumes/MyVolume

For High Sierra: sudo /Applications/Install\ macOS\ High\ Sierra.app/Contents/Resources/createinstallmedia --volume /Volumes/MyVolume

For El Capitan: sudo /Applications/Install\ OS\ X\ El\ Capitan.app/Contents/Resources/createinstallmedia --volume /Volumes/MyVolume --applicationpath /Applications/Install\ OS\ X\ El\ Capitan.app

Step 5. Enter the admin password when prompted, then wait for the bootable disk to be created. When it finishes, Terminal reports that the install media is available.
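The installer names contain spaces, which is the usual reason a pasted command fails in Terminal. As a rough sketch (not part of Apple's tooling), the Python helper below assembles the command from Step 4 and quotes it for the shell. The function names are made up for illustration, MyVolume is the same placeholder volume name used above, and nothing here actually runs the installer.

```python
import shlex


def build_createinstallmedia_command(installer_app, volume_name,
                                     needs_application_path=False):
    """Build the argument list for createinstallmedia (does not run it)."""
    app_path = f"/Applications/{installer_app}"
    command = [
        "sudo",
        f"{app_path}/Contents/Resources/createinstallmedia",
        "--volume",
        f"/Volumes/{volume_name}",
    ]
    if needs_application_path:
        # Older installers such as El Capitan also take --applicationpath.
        command += ["--applicationpath", app_path]
    return command


def as_shell_line(command):
    """Quote each argument so the line can be pasted into Terminal safely."""
    return " ".join(shlex.quote(arg) for arg in command)
```

For example, as_shell_line(build_createinstallmedia_command("Install macOS Mojave.app", "MyVolume")) yields the Mojave command with the app path wrapped in single quotes, which the shell treats the same way as the backslash-escaped spaces.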

Uncomfortable with text commands and prefer a simpler way? The second method is more user friendly!

Method 2: How to Make Bootable USB from macOS with WizDMG

WizDMG fills a huge gap in Windows not supporting DMG files. It is a desktop utility supporting Windows and Mac. It allows you to directly burn DMG files to disk in order to create a bootable macOS Mojave installer or a boot disk for any macOS version.

WizDMG offers an intuitive interface with no clutter and full functionality to handle DMG files. Apart from burning such disk image files to DVD/CD or USB, it also gives you edit options where you can add and remove files from within the DMG file, rename DMG files and even create DMGs from files and folders on your desktop. This software application has been created for novice users as well as experts. It is easy to use, has a very high burn success rate and will help you create a bootable macOS installer in no time. Follow the instructions below:

Step 1. Install WizDMG

Download WizDMG from the official website and install it on your PC. Launch the program and select the 'Burn' option in the main interface.

Step 2. Create Bootable USB from macOS Install Image

Click on Load DMG to import the macOS installation file into the application. Insert a USB drive (at least 16 GB of free space) and click on the 'Burn' button next to the appropriate media type.

The important thing to remember here is that you now know how to create a macOS installer in Windows. There aren't a lot of options out there because of the compatibility issues between Mac and Windows environments. Converting DMG to ISO and back again to DMG, for instance, leaves the door open for corrupted files and incorrectly burned bootable media, which defeats the whole purpose because it might not even work in the end.

Method 3: Create macOS High Sierra/Mojave Bootable USB on Windows 10/7

Another way to create a macOS bootable disk on Windows is to use a tool called DMG2IMG along with the Windows command prompt. It requires a bit of a workaround, but even novice users can learn to burn a DMG file to a disk to create bootable media for a macOS installation. Just make sure you follow the instructions below carefully.

Step 1. Download DMG2IMG and install it on your Windows PC. Open File Explorer and go to the folder containing the DMG2IMG program, then right-click and select 'Open command window here.'

Step 2. Type the following command and hit Enter: dmg2img (sourcefile.dmg) (destinationfile.iso)

Step 3. Now that the DMG file has been converted to ISO format, you can use the following command to burn it to a disk. Before that, insert a disk into the optical drive: isoburn.exe /Q E: "C:\Users\Username\Desktop\destinationfile.iso"

Step 4. This command utilizes the Windows native disk image burner to burn the ISO to the disk in your optical drive. The ISO file can't be used directly in macOS, but it can be mounted as a virtual drive. Once you do this, you can convert it back to DMG using Disk Utility in Mac. You can then use this as your bootable macOS installer.
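Windows paths are easy to mangle when copied by hand, so here is a small Python sketch that assembles the two commands from Steps 2 and 3 as argument lists. It only mirrors the commands shown above. The helper names, the drive letter E, and the Desktop location are placeholders for illustration, and the sketch builds the commands without running them.

```python
from pathlib import PureWindowsPath


def build_convert_command(source_dmg, destination_iso):
    """Argument list for: dmg2img <source.dmg> <destination.iso>."""
    return ["dmg2img", str(source_dmg), str(destination_iso)]


def build_burn_command(drive_letter, iso_path):
    """Argument list for the Windows image burner: isoburn.exe /Q <drive>: <iso>."""
    return ["isoburn.exe", "/Q", f"{drive_letter}:", str(iso_path)]


# Hypothetical locations; adjust the user name and file names to your setup.
desktop = PureWindowsPath(r"C:\Users\Username\Desktop")
iso = desktop / "destinationfile.iso"
convert = build_convert_command(desktop / "sourcefile.dmg", iso)
burn = build_burn_command("E", iso)
```

Passing lists like these to subprocess.run on the Windows machine avoids having to quote the path yourself, which matters once a user name or folder contains spaces.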

As you can see, this is a bit of a workaround because DMG files aren't natively supported in Windows. Likewise, ISO files aren't fully supported in macOS. However, you can use this method to create a macOS installation disk in Windows. If you want a much simpler solution, then go back to Method 2 above.

Summary

If you ask us how to create a bootable macOS installation disk, this is the method we recommend. There's no confusing command line work involved, you don't need a bunch of additional software utilities to get the job done, and the high accuracy of the application ensures that you won't be wasting disk after disk trying to burn the installation media for macOS onto a disk. Use WizDMG as a quick and painless way to create a macOS installation disk in a very short time.

Nov 15, 2018 16:47:20. / Posted by Candie Kates to Mac Solution

Related Articles & Tips

When a new version of macOS comes out, even as a beta release, tech enthusiasts, app developers, and testers are always the first to try it out to see how it works and how it differs from previous versions.

To get first-hand experience with the latest macOS Monterey (version 12), you have to make a bootable USB first. It will let you install the macOS Monterey beta on a compatible device. In this tutorial, we will show you how to make a bootable macOS Monterey install USB on a Windows 10 PC and on a Mac. Please stay tuned!

macOS Monterey Compatible Devices

From what we have learnt, macOS Monterey has stricter hardware requirements. Hence, a handful of devices that are compatible with macOS Big Sur are no longer supported by macOS 12 Monterey. So before getting started, you have to know which devices macOS Monterey supports. Here is a list of compatible devices:

MacBook Air (2015 or later)

MacBook Pro (2015 or later)

MacBook (2016 or later)

iMac (2015 or later)

Mac Mini (2014 or later)

Mac Pro (2013 or later)
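If you want to check a machine against this list quickly, a small lookup table is enough. The sketch below simply encodes the model years given above; the dictionary and function names are hypothetical, and it makes no attempt to tell early-year from late-year models.

```python
# Minimum model years, copied from the compatibility list above.
MIN_MODEL_YEAR = {
    "MacBook Air": 2015,
    "MacBook Pro": 2015,
    "MacBook": 2016,
    "iMac": 2015,
    "Mac Mini": 2014,
    "Mac Pro": 2013,
}


def supports_monterey(model: str, year: int) -> bool:
    """Return True if the list above covers this model and model year."""
    minimum = MIN_MODEL_YEAR.get(model)
    return minimum is not None and year >= minimum


print(supports_monterey("MacBook", 2015))   # below the listed minimum year
print(supports_monterey("Mac Mini", 2018))  # at or above the listed minimum year
```

Unknown model names return False rather than raising an error, so a typo in the model name reads as "not supported" instead of crashing the check.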

macOS Monterey Download

To make a bootable macOS USB, there are two types of install media you can use. One is the macOS install app obtained from Apple Software Update or the App Store. The other is a bootable DMG file.

How to download macOS Monterey install app: As far as we know, there are four ways to get the macOS Monterey installation app. The first is the Mac App Store; however, only stable releases are available this way. The second is the Apple Beta Software Program, which needs an Apple Developer account to get the Mac enrolled. You can also download both stable and beta releases with MDS and the gibMacOS app.

For the first three methods, please check out our previous tutorial on downloading macOS Monterey Beta. Here, we will focus on gibMacOS, a brand new way to download macOS install media that we found recently.

Please click here to download the gibMacOS Zip file from GitHub. Now, unzip the file and go into the folder (gibMacOS-master). You will find a file named gibMacOS.command; double-click it to open it in Terminal. After that, this command-line utility tries to fetch all stable releases of macOS from Apple's servers. Here is an example:

We can see macOS Monterey is not listed above because it is in public beta. Please type (c) to change the current catalog. There are four options available: customer, publicrelease, public and developer.

Please type (4) to get the developer releases of all macOS versions. At this point, you will see macOS Monterey beta 12.0 listed in second position. Type (2) to start the macOS Monterey download. It will download a file called InstallAssistant.pkg for macOS 12 Monterey! The downloaded file will be saved in the macOS Downloads folder, a subfolder of gibMacOS-master. Don't forget to copy the Install macOS 12 Beta app to the Applications folder after the download!

How to download macOS Monterey DMG file: The macOS install app won't work on a Windows PC. To create a macOS bootable USB on a Windows 10 PC, you have to use a DMG file instead. Please click this link to download the macOS Monterey DMG file.

Make Bootable USB for macOS Monterey on Windows 10

There is no built-in app for creating a macOS bootable USB on Windows 10, and few third-party programs are capable of this kind of task. UUByte DMG Editor is the most popular one. As you can see from the steps below, it is super easy to make a bootable macOS Monterey USB with this powerful program.

First, download UUByte DMG Editor on a Windows 10 PC and install it. After that, insert a USB drive into the same computer and make sure it can be seen in Windows Explorer.

Now, open UUByte DMG Editor and you will be presented with two options on the welcome screen.

Next, click the Browse button to import the macOS Monterey DMG file into the program. Then, click the Select button to choose the USB flash drive you just plugged in.

Finally, click the Burn button to start burning the macOS DMG file to the USB drive. Usually, you have to wait about 10 minutes before the task is completed.

Creating a bootable macOS USB installer with DMG Editor is not difficult, right? In fact, you can also use this app on a Mac for the same purpose if you dislike using commands.

Make Bootable USB for macOS Monterey on Mac

You might not be aware that there is a built-in command-line utility (createinstallmedia) in macOS for making a bootable Mac USB. That means you don't need to install other apps to get this task done. The only catch is that it has no graphical user interface, so keep that in mind!

First, connect a USB drive to the Mac and format it as Mac OS Extended (Journaled) with the Disk Utility app, which is also free software that comes with macOS. In our example, the volume name of the USB drive is UNTITLED. This information will be used in a later step.

Next, locate the macOS Monterey install app. Usually, it is saved in the Applications folder.

Now fire up the Terminal app on your Mac and input the following command to make a bootable macOS Monterey USB drive:

sudo /Applications/Install\ macOS\ 12\ Beta.app/Contents/Resources/createinstallmedia --volume /Volumes/UNTITLED

The above step first erases the USB drive and then makes the disk bootable by copying content from the Install macOS Monterey app. You will see a notification when the task is completed, and the USB drive will be renamed to Install macOS Monterey Beta. However, you have to wait at least 10 minutes before the install media is ready for use!
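The installer path contains spaces, so it has to be quoted or escaped before the shell will accept it. Here is a minimal sketch that builds a shell-safe version of the command with Python's standard shlex module. The app name and the UNTITLED volume are the ones from our example, the function name is made up for illustration, and the script only prints the command instead of running it.

```python
import shlex

# Both values come from the example above; adjust them to your own setup.
INSTALLER_APP = "/Applications/Install macOS 12 Beta.app"
USB_VOLUME = "/Volumes/UNTITLED"


def createinstallmedia_command(installer_app: str, volume: str) -> str:
    """Build a shell-safe createinstallmedia command line."""
    tool = f"{installer_app}/Contents/Resources/createinstallmedia"
    # shlex.join quotes any argument that contains spaces or other
    # characters the shell would otherwise split or interpret.
    return shlex.join(["sudo", tool, "--volume", volume])


print(createinstallmedia_command(INSTALLER_APP, USB_VOLUME))
```

The printed command wraps the installer path in single quotes, which has the same effect in Terminal as escaping each space with a backslash.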

Start installing macOS Monterey on a Mac

Now, you have a macOS Monterey bootable USB at hand. The next move is to install macOS 12 on a compatible device. Don't worry! This process is straightforward.

Please connect the bootable USB to the target Mac where macOS Monterey will be installed. After that, reboot the Mac and hold the Option key. Release the key when you see Startup Manager on the screen. From here, you can pick the bootable device (Install macOS Monterey Beta) from the available drives, and you will see the installation screen. Before proceeding, you need to enter the password for the current user to grant permission.

Click the USB drive name and follow the prompt to install macOS Monterey on your Mac. When this task is completed, macOS Monterey will be installed on your Mac.

Conclusion

With new software available, making a bootable macOS USB on a Windows 10 PC is no longer an issue. UUByte DMG Editor is quite effective for that. For Mac users, the default createinstallmedia command is enough to create a macOS bootable USB on a Mac. However, you should learn how the command works before taking action.

0 notes

Text

I don’t really know why, but I’m now having a LOT of trouble looking at 3D animation work from artists I know. Like, I look at a talking T-Rex Chris O’Neill made, or an environment guide telling you to use 3D modelling software to plan out environments for 2D art, and I just die a little inside.

I mean, I get that it’s easier than measuring a bunch of lines from a vanishing point and working from there, but I guess there’s a part of me that likes figuring that stuff out on my own? Like, if I do it enough, backgrounds will become second nature to me, and I won’t have to rely on 3D models? Plus, when animating characters, I’d prefer every line I draw to be filled with the essence of character and movement, instead of just dragging points around on a model that someone else made.

But also, the immediate path my mind takes whenever I see something like that is “Why bother with 2D? You won’t get paid for 2D art anymore. Learn 3D. Drop the 2D crap. It’s unnecessary.” I know there’s a million things wrong with this train of thought, and no one’s ever ACTUALLY told me this, but it has been suggested to me, and there are no degrees for it in colleges anymore. Even animation schools focus on 3D. For me, it is the embodiment of what the world is moving towards, and whether or not I like it, these are the skills I must have to pursue animation.

Now, I know that 2D art has some applicability in the 3D world. Texturing, concept art, pencil tests, but I want to actually animate something that the audience sees, and doing it with drawing after drawing is just more impressive to me. But at this point, it becomes a matter of “The industry does not bend to your will. You make a 3D animation, or else you make no money, get no attention, and waste your time. What you want does not matter.”

I realize I’m making all these issues up, and only elitists think this way, but I’m just... CRAZY weak when it comes to peer pressure, so I always end up thinking that the way others tell me to go, even if it’s obviously not the end-all-be-all, IS the end-all-be-all.

There’s also a chance I might have been somewhat influenced by Miyazaki’s philosophy on CG, where it is best used for compositing, environments, and special effects. Anything more will cause a disconnect between the two elements.

Even though no one is telling me that the skills I’ve built up over the past few years, and hope to continue building up over the next few years are worthless in today’s world of animation, I just can’t detach this “either-or” principle from 3D modelling and animation. It feels like being berated for sticking with older software and hardware on your computer, even though it’s just fine, and the newer stuff is far beyond what you want, and everyone else is using it, so you need to use it to find work and blah blah blah blah blah insert something about the overcomplication of technology here.

I mean, it really wouldn’t hurt to at least try it. I’ve had Blender on my computer for years now, so I should try to figure out how to use it at SOME point. If I really don’t like it, then that’s that. I’ll take the hard path even if people call me an idiot.

3 notes

Text

Tech Nostalgia: Computers

I was recently hanging out with some old friends who are also graphic designers and who are also nerd techs like myself, and we were discussing how lucky some of us have been to have had access to technology that lots of kids didn’t have in the 90s or even the 80s.

So in this post I’ll try to share every computer I ever owned.

MATTEL AQUARIUS - 1988

This was my very first computer. My uncle bought this back in 1982 because he was learning BASIC programming but also wanted the flexibility to play games. This thing would connect to a TV just like a console and it came with a bunch of other peripherals like joysticks and cartridge loading capabilities. In 1988 my uncle gave me this thing and a book with some silly BASIC programs that I would spend an entire morning typing in, only to press return and see a bunch of colored squares on the screen. The chiclet keyboard was pretty awesome though, and my favorite games were Tron and Dungeons & Dragons.

PACKARD BELL - 1996

Eight years after my first computer I was given this clunky thing. It was during the spring of 1996 and I was halfway through 11th grade. After the huge boom of Windows 95 everyone seemed to be talking about computers, and a few of my other friends were already computer owners and connected to America Online. My parents got me this in an attempt to steer my interest toward something productive; at the time I was 16 years old and partying with friends was more important. This computer did change my life because it came with something called Corel Draw Suite, and that sent me down the path of digital art and design. This was the only photo I could find online, but my version did not have a Zip drive. This machine was also responsible for getting me into computer games like Doom, Duke Nukem, The Hive, and Star Wars: Rebel Assault 1 and 2, as well as great point-and-click adventures like King’s Quest, Phantasmagoria, Spycraft, Blade Runner, The Dig, and The Journeyman Project Turbo.

HEWLETT PACKARD - 1998

A little over two years after getting the Packard Bell I upgraded to this HP. The one I got was actually an Intel-based machine, and it came with a Zip drive and loaded with Windows 98. This was my college machine and it was mostly loaded with graphics software. Because I was in a graphic design program, the college had suggested I buy a Mac, but paying for my studies was already too much, so having a PC was the affordable option. After a year and a half with this machine I upgraded it to Windows 2000 (arguably the best Windows version ever). I also upgraded the RAM and the video card.

CUSTOM BUILT PC - 2001

Up until this point I had been an ignorant young man and the computers I had owned were bought for me. This was the first machine I actually bought with my own money. I paid cash and had it custom built by a friend who was working in IT at the time. I couldn’t find a photo of the case, so that’s why I have a pic of the Windows XP interface. Because of my friend I was able to get an early release of XP, about 3 months before wide release. This was also the first machine that I actually made money with, doing freelance work. In fall of 2003 I made some huge upgrades to this machine: RAM, video card, CPU, and multiple hard drives.

APPLE MACINTOSH G3 - 2001

My first job right out of college was in a small advertising agency, and because I was a junior designer they gave me a machine that was almost 3 years old. The Apple Quicksilver had already been released. But at least it had some minor upgrades that made it a bit faster. I know that this really wasn’t a computer I owned, but working full time in this place for 2 years makes this my daytime computer.

APPLE MACINTOSH G4 QUICKSILVER - 2003

On my second job as a designer I was given a Quicksilver with some neat upgrades and Mac OS X. I still think this tower is one of the greatest pieces of design that Apple has made.

ASUS NOTEBOOK - 2004

Because my previous machine was custom built I had the ability to continue upgrading it, so 3 years later that was still my working computer. However, I was being faced with the fact that I wanted to travel and I was also planning my move to Toronto. So in the fall of 2004 I got an Asus notebook for a whopping $2300. It was the most expensive machine I had purchased, but I needed to make sure I could run all my graphics software without lag; it also had a screen with much better resolution than Apple was providing at the time. I eventually got a monitor, keyboard and mouse to use it as a workstation.

APPLE IMAC 24 - 2006

I moved to Toronto in spring of 2005 and quickly landed a job at yet another agency. There I had a 20 inch iMac G5 with upgraded RAM and I really enjoyed working on it. So much that in October 2006 I decided to get myself the largest iMac Apple had at the time. I ordered it with the max RAM. This was my first Apple computer. I was in a better financial state in my life and I could afford it, plus I already had the PC laptop as a backup. Two things that really persuaded me to make the switch were that worms and viruses were becoming a huge issue in Windows XP and that Apple was now using Intel CPUs.

APPLE MAC PRO - 2009

Between 08 and 09 I did a one-year digital animation program in which I got exposed to After Effects and Maya. After I finished that program I switched gears in my career and started working as a motion designer in a post production house. This was the machine we were using and it was loaded with both macOS and Windows XP 64-bit.

APPLE IMAC 27 - 2010

I found that the lifespan of my previous iMac was quite short and after 3.5 years with it I decided to order another iMac, but I was halfway through Apple’s cycle and decided to wait. And in mid 2010 I got this 27 inch beauty. This is the computer where surrogate self tumblr was born.

APPLE MACBOOK AIR 13 inches - 2012

In 2012 I decided to start my own freelance business and was in need of a portable solution so that I could go see clients and do presentations. I only used this machine for 2 years and then gave it to my wife. In 2014 I got an iPad Air 2 and used that as my portable solution. The actual work was still being done on the 27 inch iMac.

APPLE MACBOOK PRO - 2017

It is crazy to believe that my 27 inch iMac (2010) lasted almost 7 years. Sure I added RAM and swapped the hard drive for a faster one, but still, that tells you a lot about how software is reaching a plateau of some kind and the hardware is now good enough. However, because Apple is Apple they have to make their macOS incompatible with older machines. My iMac was loaded with Yosemite (OS X 10.10) and I wasn’t going to upgrade; the system was already having some lag and I knew that upgrading was going to make it slow, but having an OS that is over 3 years old makes it hard to use newer applications. So now I got this beauty and it’s amazing how much more powerful this tiny thing is; even working with video graphics is no problem.

20 notes

Text

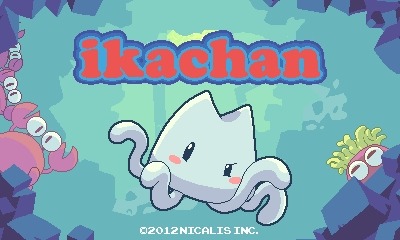

Studio Pixel Spotlight: Ikachan & Azarashi

In spite of the extreme popularity of Cave Story, most of the rest of Studio Pixel's releases maintain a very low profile. There are plenty of possible reasons for that. For starters, much of Pixel's catalog is made up of mini-games that don't really hit many of the same notes as his most famous work. They're mostly only available on PC, put up as free downloads from Pixel's own site. For this Spotlight series, we're going to be looking largely at the titles that made it out of that bubble in some form. We'll start off with some of Pixel's earliest games, the squid adventure Ikachan and the Game & Watch-style Azarashi. Both games were originally released on PC, with Ikachan ported to the Nintendo 3DS in 2013 and Azarashi to iOS in 2012. They're still available for free on PC and for sale in their respective platforms' shops, but Azarashi will likely be purged from the App Store when 32-bit compatibility is broken later this year.

Ikachan

Original Release Date: June 23, 2000

Original Hardware: Windows PC

For better or worse, Ikachan is often spoken of in terms of its relationship to Cave Story. Like that game, it's a non-linear, side-scrolling, action-adventure game. It shares a similar style of presentation, and even appears to be using a version of the engine that game would later be built in. It's a curious title in Pixel's library. Ikachan is considerably more substantial than the bulk of his other games, but it's practically bite-sized next to Cave Story and Kero Blaster. Even in its expanded Nintendo 3DS version, the game barely takes more than an hour to finish. The original PC version is around half the length. As such, Ikachan is often seen more as a technical demo than a game all of its own. Many view it as either an hors d'oeuvre or a small dessert for the main course of Cave Story. I think that assessment is giving the game short shrift, however.

Before we go into the story of Ikachan, however, we need to briefly discuss its creator: Daisuke Amaya, or as he's often known, Pixel. Until very recently, he's operated as something of a one-man-band. That's not as rare as you might think in indie development, of course, but what separates Pixel from most is that he seems to do it all well. He's a skilled game designer, a talented musician, an excellent pixel artist, and a competent programmer. He takes a great deal of enjoyment in all of those things, too. He's done more collaborative work in recent years, but for most of his active years as a developer, he's worked largely on his own.

Pixel grew up in the 1980s and like many kids of that generation, he was captivated by video games. He also enjoyed creative endeavors like drawing and composing his own music. After graduating high school, Pixel went to computer technical school for further studies, hoping to one day make video games. While living in the student dormitory, he learned how to program his own software from his neighbor. As he was developing his programming skills, he also continued to play games. His favorite at this time was Nintendo's Super Metroid, and he dreamed of making a game of his own similar to it. By now, Pixel had no intentions of going full-time into the games industry, mind you. He felt that if he took that road, he would never get to make the kinds of games he really wanted to make. This was simply a hobby for him, an extension of his love for creating art and playing games.

While he loved Super Metroid a lot, there was one thing he wanted to do differently. The exploration and action bits were great, and so was the atmosphere. Pixel wanted a game with all of those qualities, but with much cuter characters. So after a couple of years of studying programming, he finally decided to make his cute take on Metroid. Unfortunately, he was biting off a lot more than he could chew at the time. He opted to put his dream project on hold while he built his skills with another project that had a smaller scope. After a few months of hard work, Pixel came out the other end with a whole lot of practical experience and a short, complete game. The result of his efforts was Ikachan. So yes, even for its creator, Ikachan was something of a bump on the road that led to Cave Story. But I think we ought to separate the game from its origins and common perception in order to judge it on its own qualities.

In Ikachan, you play as a little squid who wakes up in an unfamiliar place. Were you always a squid? The game hints that it may not be the case, but you are certainly one now. Initially, there's no stated goal, but there's only one way you can swim, so you might as well go for it. The route takes you through a somewhat cramped tunnel lined with spikes. This serves as something of a trial-by-fire for you to learn the mechanics of how to move your squid around. Tapping the button will make the squid swim straight up. Leaning to either side and tapping the button will make the squid bob in the desired direction. It can be a little tricky to get a handle on at first, but you'll soon be swimming like a champ. At the very least, while the spikes pose a threat, they only deal damage as opposed to death. You can take a few hits from them without dying.

Upon navigating out of this tunnel, you'll encounter a couple of things. First, you'll run into hostile enemies that you can't seem to do anything about. Second, you'll meet your first NPC. He fills you in on the circumstances of the area you're in. A bunch of earthquakes have cut this area of the sea off from the outside world. A big fish named Ironhead has taken charge, and by the way everyone talks about him, he's not the most welcome of leaders. The most immediate issue is the dwindling food supply. Your squid's main goal is simply to escape by any means possible. To do that, you'll need to get past one of Ironhead's henchmen. Thus begins a game of run-around as you try to satisfy everyone's demands.

Not long after receiving this information, you'll finally get the ability to attack. It's nothing more than a pointy hat that the squid can wear. In a bit of a turn-around from many other games, you need to coax enemies into colliding with the top of your head. The easiest way to do that is to approach from below, but later on you'll get an ability that allows you to charge horizontally. Your pointy hat also allows you to destroy certain bricks. While you often need an item to move forward, sometimes you just have to talk to the right creatures and go to the right places to trigger an event. As you defeat enemies and gather life-restoring fish, you'll also gain experience points. Leveling up extends your life bar and allows you to damage certain enemies that you might not have been able to before.

It all leads up to a face-off against Ironhead, but upon claiming victory, you find out that you had the wrong idea about him. Suddenly, another cluster of earthquakes strike. The structural integrity of the area is coming apart. Now that you finally have access to the food stores, you can recover something of yours that ended up in there. Namely, it's your rocket ship, Sally. Taking control of Sally, who somehow controls just like a squid, you have to run around and talk to every NPC to get them on-board. Once you have everyone, and I do mean everyone, you can finally blast off to safety. The game closes with a view of the very crowded ship overlooking an Earth-like planet as your friend Ben complains about the fishy smell.

It's over and done with quite quickly, but it's long enough to give you a good feel for its mechanics. I think the concept could be expanded out into something bigger, but for what's here, I think the Nintendo 3DS version of Ikachan is just about right. The PC version feels a little too short by comparison, even as it hits most of the same points. The unusual means of movement help the game stand out, and the game finishes before it spends the goodwill of its novelty. Ironhead's story is a short but effective tale about the dangers of accepting only one account of a situation, while his little henchman Hanson will have you doing all kinds of morally-questionable things in order to progress. Well, okay, mostly just taking more than your fair share of food. But given the circumstances, it's pretty bad.

In its brief running time, we can see a lot of elements and themes that would become a regular part of Pixel's style. Cute though it may be, there's a darkness running through it that threatens to swallow everything up. Ikachan's world is naturally a murky one, being so far under the sea. Fish skeletons abound, packed into the walls and even used as the vessels for items. Then there's the basic set-up of being a stranger of mysterious origins trying to escape an inhabited world. Who or what is your squid, really? Unlike Pixel's later games, Ikachan doesn't offer any real answers. Your companion, Ben the star, remarks that you're looking a bit squiddy today, so we can assume that isn't supposed to be your normal form. The NPCs are also imbued with a lot of personality. They're fairly one-note in this game, but they are distinct. The late-game revelation about Ironhead is also a typical flourish of Pixel.

Ikachan might not be as fully-formed as some of this developer's later titles, but I think it stands on its own tentacles quite well, particularly in the expanded Nintendo 3DS version of the game. It's impressive to think that this was Pixel's first game release. Sure, he had more impressive things ahead of him, but it's not that there's anything particularly wrong with Ikachan. It's short and sweet. It's unfortunate that it may never escape the shadow cast by its younger sibling.

Azarashi

Original Release Date: 2001

Original Hardware: Windows PC

Azarashi is typical of most of Pixel's lesser-known games. It has great art and music, but the gameplay mechanics are about as deep as an early Game & Watch. The set-up is that there are three seal keychains hanging from strings. They'll drop at random times, and you need to fire a dart to peg them to the wall by the rings of their chains. The quicker you do it, the more points you'll get. If you wait too long, they'll fall off the screen and you won't get any points. Jump the gun, however, and you'll fire your dart right through the head of the adorable little animal, splattering blood and killing it. That, uh, also gives you zero points. After three increasingly speedy rounds, your final score is tallied. You'll be awarded a prize based on how well you did, and eventually, you might even unlock some new keychains. They don't behave any differently, but variety is the spice of life, no?

That's really all there is to Azarashi. It's a test of reflexes wrapped up in a nice presentation, with a few interesting secrets tossed in for good measure. There are actually a few versions of this game, which means it's been remade more often than any of Pixel's other games save Cave Story. Its very first release on PC was in beta form in 1998. The graphics are much simpler here than in the other versions, but it plays basically the same. While not an official release, this is probably one of Pixel's earliest games, which might explain why it seems to be near to his heart. The official release happened a few years later in 2001. The art was redone with a cleaner Flash-like look to it. For both PC versions, the controls simply have you pressing the number keys from one to three to pin the keychains.

The iOS remake was released in 2012. It was Pixel's first work on the platform, and it brings the game's style more in line with what people have come to expect from the developer. The art has a softer, more pixelated look to it, and the new background music has similar vibes to Pixel's work from Cave Story. I'm pretty sure this is the first version of the game with most of the secrets and unlockables added in, but it could just be that I couldn't find them in my short time with the PC version. Naturally, instead of pushing number keys to fire darts, you simply tap the portion of the screen that corresponds with each keychain. While it's not like pushing buttons isn't intuitive, Azarashi feels like a game that was made for a touchscreen.

I'd hesitate to call the game fun in the traditional sense of the word, but it's an amusing distraction, I suppose. The problem being that it's an amusing distraction on platforms that are full of such things, with only Pixel's fine attention to art and sound giving it an edge. As such, the majority of Azarashi's value is as a historical object for fans of Pixel's other works. I do think it's interesting in as far as it shows the developer's commitment to mixtures of cute things and darker elements, but there's only so far a simple reflex test can go.

Previous: Introduction

Next: Cave Story

If you enjoyed reading this article and can’t wait to get more, consider subscribing to the Post Game Content Patreon. Just $1/month gets you early access to articles like this one, exclusive extra posts, and my undying thanks.

2 notes

Text

The $149 Smartphone That Could Bring The Linux Mobile Ecosystem to Life

A version of this post originally appeared on Tedium, a twice-weekly newsletter that hunts for the end of the long tail.

If you kept a close eye on the Apple vs. Epic Games trial, you might be wondering: How the hell did we get to this point, where a phone maker that simultaneously supports the daily needs of hundreds of millions of users could have so much literal say over how its ecosystem operates?

When faced with such questions, reactions can vary—many people will grumble and complain, while others will look for other options. Problem is, operating system options have infamously been difficult to find in the smartphone space—hope you like Android or iOS, because those are your options.

On the other hand, what if I were to tell you that there’s a phone where you could have nearly every other attempt at a smartphone OS at your fingertips, one microSD card away, and you could test them at will?

It sounds strange, but it’s something Pine64’s entry into the smartphone space, the Linux-driven PinePhone, is built for.

Would you want to use it? I spent a few weeks with one recently, and here’s what I learned.

The key to understanding the value proposition of the PinePhone is understanding the difference between workable and cutting-edge

Given the hype around the PinePhone over the past year (which, for purposes of this review, I’ll point out I purchased with my own money), it might seem like we’re talking about a top-of-the-line OLED-based device that has fancy features like notches, hole punches, or 120Hz displays.

But the reason for the attention comes down to the point that, unlike most phones that might support some form of Linux because that support has been hacked in, the Linux on the PinePhone takes center stage. This is a workable phone for which neither Android nor iOS is the primary selling point. You can take phone calls on this; it will work.

Now, to be clear, there’s a difference between workable and cutting-edge. Unlike the Pinebook Pro, which offered relatively up-to-date hardware (such as the ability to add an NVMe drive) even if the chip itself was a bit pokey compared to, say, an M1, the PinePhone is knowingly running outdated hardware out of the gate.

Its CPU, an Allwinner A64 with a Mali 400 MP2 GPU, first came out six years ago and is the same chip the original Pine64 single-board computer used. (It’s also older than the NXP i.MX 8M System-On-Module that the other primary Linux phone on the market, the Purism Librem 5, comes with—though to be fair, this phone sells for $149, less than a fifth of the price of the $800 Librem 5.)

Despite 802.11ac being in wide use for more than half a decade, the Wi-Fi tops out at 802.11n on the PinePhone—a bit frustrating, given that a lot of folks are probably not going to be throwing a SIM card into this and are going to be futzing around with it on Wi-Fi alone.

Is this the perfect phone for cheapskates? Well, to offer a point of comparison: The Teracube 2e, a sustainable low-end Android device that I reviewed a few months ago, sells at a sub-$200 price point very similar to the PinePhone’s, and on a pure spec level it runs circles around this thing (and isn’t that far off from the Librem 5). It has better cameras, a somewhat better screen, a fingerprint sensor, and a four-year warranty, compared to the single month you get from Pine64. If you’re looking for a cheap phone rather than an adventure, stay away.

And the PinePhone can be fairly temperamental in my experience, chewing through battery life when idle and reporting inconsistent charge levels when in use, no matter what OS is loaded.

But that is still better than what the Linux community had previously—a whole bunch of moonshot aspirations, some of which have failed to ship and others of which exploded into interest years ago, only to burn out almost immediately.

The PinePhone gives those projects a home, a sustainable one that allows them to grow as open-source projects rather than die on the vine. The marquee names here each represent high-profile attempts to take on the hierarchy of iOS vs. Android that have faced irrelevance as the larger mobile giants crushed them. Among them are the open-source Ubuntu Touch (maintained not by desktop Ubuntu maker Canonical, but by UBports), the partially closed Sailfish OS, and the webOS descendant LuneOS. The PinePhone gives those projects a fresh lease on life by building excitement around them once again, while also giving noble old-smartphone revival projects like postmarketOS a new target audience.

And plus, let’s be clear: The Linux community thrives on extending the power of outdated hardware.

Like Linux on the desktop, which has helped keep machines alive literal decades past their traditional expiration date, the PinePhone keeps software projects alive that would have struggled to find a modern context.

You can’t replace the SIM card or the microSD card without removing the battery. Sorry. Image: Ernie Smith

Appreciating (and critiquing) the PinePhone on its own level

It’s one thing to discuss what this phone represents. It’s another to consider its usability.

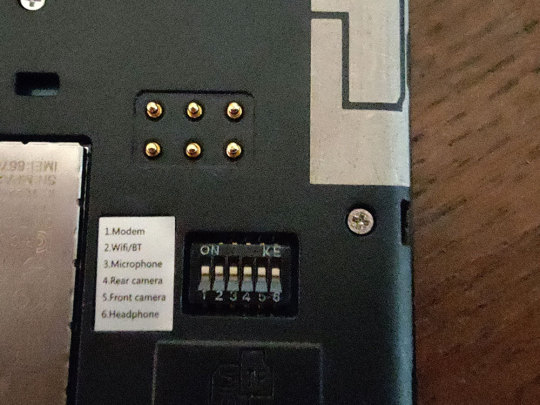

And from a hardware standpoint, there are some useful features and some quibbles, many of which feel like they can be excused by the price point. The device, which can be pulled open relatively easily, has six hardware kill switches that can turn off major functions of the device like the cameras or the LTE antenna, a boon for the privacy-focused.

But it also has some arguable design flaws. Most notably, to replace the microSD card and the SIM card, you have to remove the back case and the battery. That is a bit of a miss on a device that basically encourages you to frequently flash new microSD cards as you flit between operating systems to try different programs (or, if you’re a developer, to test new versions of an operating system’s code).

The kill switches on the PinePhone, which can disable hardware functions as needed. Image: Ernie Smith

To some degree it makes sense. After all, you don’t want to pull out an SD card while you’re booted into an OS that is running from that card. But in practice, the extra steps proved frustrating to do over and over, and I don’t think the target audience for this would necessarily be unaware of the risks of removing an SD card while the device is running. (I will allow that there may be a privacy case to bury the SIM and microSD card in this way, but I don’t think it precludes an alternate approach.)

The battery, which I’ve noted is relatively inconsistent at charging, at least on my device, is also a bit on the small side, at 3000mAh. To some degree, that’s fine, since the processor is not exactly going to tax the battery, but it would be nice if it were slightly bigger.

The one capability this has that takes it squarely into the modern era in a meaningful way is USB-C, and a variant of USB-C that allows for full-on video out. And that means you can add a dock to this phone, plug in an HDMI cable, and, conceivably, it will show something on your display. Unfortunately, the USB-C port slightly curves out of the back and because of the position of the port, doesn’t lie totally flat, so you may run into problems with cables falling out. Just a word of warning.

The edition of the device I have is the $199 “Convergence Edition,” which comes with a minimal USB-C dock. And that dock is, admittedly, a pretty nice dock, with two USB ports, an HDMI port, and an Ethernet jack (along with USB-C passthrough for power). I was able to get it working, but the issues with the port’s curvature on the phone meant that in practice, I had to be very careful about placement, because it was incredibly easy to knock it out.

I get that this is a constrained device and wiggle room for redesigns may be limited, but for future versions of the device, some repositioning of the USB-C port, or at least additional reinforcement to ensure cables don’t fall out so easily, could go a long way.

The launch screen for p-boot, a multi-distro demo image that allows users to take a gander at the numerous operating systems PinePhones support, from Mobian to sxmo. The image contains a surprising 17 distributions. One downside: It hasn’t been updated since last fall. Image: Ernie Smith

The best part of the PinePhone is seeing the progress of its many operating systems

As one does when they get a Pine64 device, I spent a while booting different operating systems to understand what each of them is capable of.

Trying to critique one flavor of Linux over another is a bit dangerous, as there are partisans all over the place, some of whom will not be happy, for example, if you speak out of turn about KDE Plasma. With that in mind, of the operating systems I tried, the ones that feel closest to prime time to me are the UBports variant of Ubuntu Touch (which borrows the look of desktop Ubuntu’s GNOME-based user interface) and Sailfish OS, the latter of which maintains enough commercial support from governmental and corporate customers that it’s still being regularly maintained.

Mobile variants of Linux based on KDE and GNOME—nothing against KDE, but I operate a GNOME household—seemed a bit poorly matched to the hardware to me, with the preinstalled KDE-based version of Manjaro, called Plasma Mobile, feeling sluggish upon boot, which is an unfortunate first impression to offer users. (Also not helping: depending on what SIM you put into the device and what version of the distro you’re using, you may have to dip into the command line to get it to work, which is not the case for other distros.) However, these interfaces are newer and more deserving of the benefit of the doubt—and just as with desktop Linux, the underlying OS can impact your experience with the interface. Case in point: The postmarketOS version of Plasma Mobile was a little faster than Manjaro, even if I didn’t find the interface itself naturally intuitive, with browser windows visible on the desktop when not in use. (Again, I’ll admit that some of these hangups are mine—I prefer GNOME, so I’m sure that colors my view.)

Phosh, a GNOME-derivative interface that utilizes the Wayland windowing system and is used heavily in the Librem 5, was a little more polished, which makes sense given the fact that Purism developed it as the basis of a smartphone. It’s nonetheless not at Android’s level of polish, but as with Plasma Mobile, it’s still relatively young; it will get there. Ultimately, I just have to warn you that many of these operating systems come with learning curves of varying steepness, and the trail might be arduous depending on how polished they are.

One nice thing about the PinePhone is that it promises a second look at mobile operating systems that didn’t get much love the first time—and in that light, Ubuntu Touch is a bit of a revelation. Its interface clearly took some of the right lessons from its competitors, and likely inspired them. It is a very gesture-driven interface, and it was years before iOS and Android could say the same. And despite the older hardware, Ubuntu Touch feels fast, which can be tough to do on hardware of a certain vintage.

Sailfish OS, which has been more actively maintained over the years, offers a similar level of polish and a similar second look, since only a handful of people have likely ever used it.

The webOS-based LuneOS suffers from the opposite problem: it feels more dated than mature, a result of webOS’ skeuomorphism, which LG has moved away from in the operating system’s TV variant. Its interface ideas were ahead of their time when it first came out more than a decade ago, but iOS and Android have stolen most of its tricks by this point. But if you liked that look from the days of the Palm Pre or HP TouchPad, you might feel at home.

Perhaps one that is intriguing—even if, as an end user, you may not be raring to use it—is sxmo, a minimalist approach to mobile operating systems built around a simple, middle-of-the-screen navigation interface that is operated using the volume and power buttons. It is the most experimental thing I’ve seen in mobile operating systems in quite some time, in large part because of its strict adherence to the Unix philosophy of operating system ideals. You can text in a vim-style editor; you load up scripts to do basic things like get notifications. Clearly, it will not have a big audience, but the fact that it exists at all is exciting and evidence of the good that the PinePhone will do for the broader Linux community.

But the important thing to keep in mind is that these operating systems are all works in progress, and in many ways, the progress might actually be a little better on other devices. Example: Ubuntu Touch almost fully supports the Google Pixel 3a, a phone that’s about two years old, has better specs than the PinePhone (particularly in the camera department), and, because it’s a first-party Google device, likely has a lot of options for accessories that the PinePhone may never see. (At least, not intentionally.) It can also be had for cheaper than a PinePhone depending on where you get it, and the PinePhone has a ways to go to support the full feature set of Ubuntu Touch.

And you can get a lot more by spending a little more: The OnePlus 6T, depending on where you look, can be had for less than $250 used, and gets you a more mainline Snapdragon processor, as well as OLED and an in-touchscreen fingerprint sensor. While not at full support in, say, postmarketOS, it’s far enough along that it might actually get there. (Perhaps you want to help?)

But while individual phones may find quick support from individual operating systems, the PinePhone feels like it sets a larger ideological precedent. What the PinePhone represents is a very solid reference system for development of mobile operating systems, rather than something that’s a head-turner on its own. This is the platform mobile Linux devs are going to go to when they need to simply build out the base operating systems, as it has all the basics—from the ARM processor to the accelerometer to the GPS, even a headphone jack—that developers can test against. With just modest differences (some models have 2GB of RAM, some have 3GB) it allows developers of mobile operating systems to focus on getting the basics right, then worry about whether everything works in the hundreds of phone models out there.

There’s also the ethics of it all. Look around and you’ll likely see some passionate debates, for example, against using Sailfish OS on a PinePhone because of its closed-source user interface. These discussions also happen in the desktop Linux space; it’s almost refreshing to see them in mobile after years of two operating systems driving every argument.

Last year, in the midst of the pandemic, mega-podcaster Joe Rogan gave a relatively obscure YouTuber with a strong privacy focus a great deal of attention. During an interview with comedian and musician Reggie Watts, he brought up a video maker named Rob Braxman, who had a wide variety of videos that focused on the topic of privacy, particularly with mobile devices. Braxman literally calls himself “The Internet Privacy Guy.”

One day, Rogan subscribed to Braxman, and apparently spent hours watching his clips, which feature a lot of well-researched useful information, but can sometimes toe-dip into the conspiratorial—i.e., the kind of stuff you could imagine Rogan would love. Then Rogan talked about the YouTuber on an episode of his podcast, expressing curiosity about Braxman’s demeanor. What was this guy trying to hide?

Despite being a fan of his own privacy, Braxman clearly did not mind the privacy invasion from Rogan.

Braxman responded to Rogan, as one does, with a YouTube clip. He pointed out that he was a regular guy; he just cared a lot about privacy, with one underlying driving factor:

I’m an immigrant. When I was young, I lived in a country under martial law, where voicing an opinion can land you in jail, where powerful people control the many. So that made a mark on me. I never want to go back to that condition.