#IP duplication rate


Text

High duplication of HTTP proxy IPs used for crawling? How to solve it?

As network technology has advanced, crawlers have come into wide use in many fields. To avoid being blocked by a website's anti-crawling mechanisms, many developers route requests through proxy IPs to disguise the crawler's real identity. In practice, however, many of them find that the same proxy IPs keep coming up again and again, which is a real headache. Today, let's look at why proxy IPs have such a high duplication rate and how to solve the problem.

Why is the proxy IP duplication rate high?

Have you ever wondered how you can still get caught even though you are using proxies? The root of the problem is usually a high rate of IP duplication. So what exactly causes it? The following reasons are the critical ones:

1. Proxy IP resources are scarce and competition is fierce

2. Free proxies "squeeze" resources

Many developers choose free proxy IPs, but free providers often assign the same IP to many different users in order to save resources. You don't have to spend money, but these IPs tend to bring more trouble, and the crawler's effectiveness drops sharply.

3. Heavy crawling workloads and frequent repeated requests

A crawler's job usually involves a lot of repetitive crawling. For example, you may need to request the same web page frequently to pick up the latest data. Even if you use multiple proxy IPs, frequent requests can still result in the same IP appearing over and over again. On sensitive websites, this behavior can quickly raise an alarm and get you blocked.

4. Anti-crawling mechanisms are getting smarter

Today's anti-crawling mechanisms are not as simple as they used to be. Websites monitor the frequency and pattern of visits from each IP to identify crawler behavior, so even behind proxies, IPs that repeat often are easily recognized. As a result, you have to keep switching to more IPs, which makes the problem even more complicated.

How to solve the problem of high proxy IP duplication?

Next, let's look at how to reduce IP duplication so you can make better use of proxy IPs in crawler development.

1. Choose a reliable proxy IP service provider

Free proxies are tempting, but the quality often can't keep up. If you want more stable and reliable proxy IPs, it is best to go with a paid provider. These providers usually have a large pool of high-quality IPs and can ensure that they are not heavily reused. For example, IPs from real home networks (residential proxies) are less likely to be blocked than other types of IPs.

2. Allocate and rotate IPs sensibly

3. Monitor and refresh IPs regularly

Even the best IPs can be blocked over time, so you need to monitor your proxy IPs on a regular basis. Once an IP's duplication rate gets too high, or it starts to fail requests, replace it promptly to keep data collection continuous and efficient.
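
The monitoring step can be sketched in a few lines of Python. This is only an illustration: the function names, the 25% share threshold, and the three-failure cutoff are assumptions made for the example, not values from any particular provider or tool.

```python
from collections import Counter


def duplication_rate(used_ips):
    """Fraction of requests that reused an IP already seen in this window."""
    if not used_ips:
        return 0.0
    return 1 - len(set(used_ips)) / len(used_ips)


def ips_to_replace(used_ips, failures, max_share=0.25, max_failures=3):
    """Return IPs that carry too large a share of traffic or keep failing.

    used_ips: the exit IP recorded for each request in the window.
    failures: mapping of IP -> number of failed requests in the window.
    Both thresholds are hypothetical defaults; tune them per target site.
    """
    counts = Counter(used_ips)
    total = len(used_ips)
    overused = {ip for ip, n in counts.items() if total and n / total > max_share}
    failing = {ip for ip, n in failures.items() if n >= max_failures}
    return sorted(overused | failing)
```

Running this over a sliding window of recent requests (for example, the last few hundred) gives a number you can alert on instead of discovering duplication only after a block.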

4. Use a proxy IP pool

To avoid excessive proxy IP duplication, you can also build a proxy IP pool. A pool acts as an automation layer that manages a large number of IPs and checks their availability on a regular basis. By automating pool management, you can get high-quality proxies more easily and keep your IP resources diverse and stable.
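
As a rough sketch of what such a pool might look like, the class below hands out proxies round-robin and drops one after repeated failures. The class name, method names, and failure threshold are all hypothetical; a production pool would also refill itself from a provider and run background health checks.

```python
class ProxyPool:
    """Round-robin proxy pool that evicts a proxy after repeated failures."""

    def __init__(self, proxies, max_failures=3):
        # dict.fromkeys removes duplicate entries while keeping their order.
        self._failures = {proxy: 0 for proxy in dict.fromkeys(proxies)}
        self._max_failures = max_failures
        self._cursor = 0

    def __len__(self):
        return len(self._failures)

    def next_proxy(self):
        """Return the next proxy in rotation."""
        if not self._failures:
            raise LookupError("proxy pool is empty")
        proxies = list(self._failures)
        proxy = proxies[self._cursor % len(proxies)]
        self._cursor += 1
        return proxy

    def report(self, proxy, ok):
        """Record the outcome of a request made through `proxy`."""
        if proxy not in self._failures:
            return
        if ok:
            self._failures[proxy] = 0  # a success resets the failure streak
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            del self._failures[proxy]  # evict; the caller should add a fresh IP
```

The crawler calls `next_proxy()` before each request and `report()` afterwards, so rotation and eviction happen in one place rather than being scattered through the crawling code.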

How to further optimize the use of crawlers with proxy IPs?

You're probably wondering what else you can do to optimize your crawler beyond these routine operations. Here are some useful tips:

Optimize keyword strategy: Use proxy IPs to simulate search behavior in different regions and adjust your keyword strategy promptly as different markets change.

Measure global page speed: Use proxy IPs to test page loading speed from different regions of the world and optimize the user experience.

Adjust strategy flexibly: Analyze data gathered through proxy IPs to understand the network environment in different regions, then adjust your strategy to improve the efficiency of data collection.

Conclusion

A high proxy IP duplication rate does bring challenges to crawler development, but these problems are solvable as long as you choose the right strategy. By choosing a high-quality proxy IP provider, using proxy IPs wisely, monitoring IP status regularly, and managing IPs through a pool, you can greatly reduce the duplication rate and make your crawler project more efficient and stable.

0 notes

Text

Value Boosters in Bloomburrow Part 2 (Continued). Are Value Booster Packs a Gateway Drug to more expensive boosters?

So, what is in these new value boosters? Are they worth it, or is a play booster or collector booster the better deal? Is this a decent budget option, or are they selling you junk?

I want to make it clear that if you are trying to get into the game, I would always recommend starting with a preconstructed Commander deck. The Commander decks are almost always an amazing value, with some Commander staples that can be used in multiple competitive decks. In paper, almost everyone plays Commander, so even though the preconstructed decks are usually very complicated, I would still start any new player with a 40 dollar Commander deck. They still get to open a sample pack, so there is some amount of random chance associated with the product. I have opened some really interesting stuff out of those little packs. However, I know people who simply want the rush of ripping open 30 bucks.

These new value packs are only 7 cards. Aftermath also had smaller boosters and that was considered an extreme failure, but the Assassin's Creed boosters seem to be doing a lot better. 7-card packs are OK because the duplication should be low: Bloomburrow is a set with almost 300 cards, while Aftermath and Assassin's Creed had far fewer cards in the entire set.

The one issue with this product is that you are not guaranteed a rare in each pack. They have not released the probability of getting a rare per pack, but I hope the pull rate is 50 percent or higher. I feel like you should get at least one rare in every two packs. The product packaging is purposefully misleading. The packs say you can get up to two rares per pack, which is true, but the chance of that is probably extremely low. The packaging should say that rares are not guaranteed, but they would not prominently showcase that truth to the public. I do like that you can get special guests in these packs, and pulling two mythics in one cheap pack is going to make some exciting stories.

Options are good, but I feel like this is merely a marketing ploy to expose the youth to an addiction on the cheap. Are value boosters a gateway booster to more expensive boosters? Do the kids that get value packs grow up to buy their own collector packs and set packs as adults? Is this good for the game, as it puts the game in the public eye starting from childhood? I am so curious to see how low the price is without a guaranteed rare. Could they guarantee a rare in a future value booster without raising the price? Will the audience accept these rareless packs? Do kids even know or want rares, or do they just want to see cool art and characters on cards? Time will answer all of these questions. Is this something they will try for Universes Beyond? People would be more likely to impulse buy a pack of cards for a dollar if the cards had their favorite IP on them. I will be watching the market on value booster packs very carefully, as this might be the introductory product that stands the test of time and brings Magic the Gathering into the zeitgeist.

#magic the gathering#magic the card game#youtube#commander legends#commander#mtg#blogatog#arena#mark rosewater#reserve list#reserved list#wotc#wizards of the coast#wizards#alpha investments#tolarian community college#mtgstocks#mtgo#mtg commander#mtg arena#modern horizons 3#mtg spoilers#mtg art#magic the gathering arena#magic arena#magic card game#magic#mtg zendikar#commander legends draft box#value booster

8 notes


Text

Protecting Users from Fraud with Advanced Verification in P2P Crypto Exchanges

Peer-to-peer (P2P) crypto exchanges let people trade digital currencies directly with each other, without any central authority. While this offers freedom and privacy, it also brings certain risks, especially fraud.

In this article, we’ll explain how advanced verification methods can protect users from fraud in P2P crypto exchanges. We’ll also discuss how P2P crypto exchange development can be done in a way that puts user safety first.

Why Users Choose P2P Crypto Exchanges

P2P crypto exchanges allow buyers and sellers to make trades without a middleman. Users usually create ads to buy or sell coins like Bitcoin, and others respond to these ads. The exchange helps with the communication and sometimes holds the crypto in an escrow account until both sides confirm the trade.

Some reasons people prefer P2P exchanges include:

They are often cheaper to use.

Users can choose who they trade with.

There are more payment options.

They are accessible in countries where regular exchanges are banned.

However, with this freedom comes responsibility. And unfortunately, that includes the risk of being tricked by dishonest people.

Common Fraud Problems in P2P Crypto Exchanges

Before we talk about solutions, it’s important to understand the common types of fraud seen in P2P exchanges:

1. Fake Payment Proofs

A buyer may send a fake screenshot of a completed payment. If the seller releases the crypto before checking their bank account, they lose their coins.

2. Chargebacks

Some fraudsters pay using methods like PayPal or credit cards and later reverse the payment after receiving the crypto.

3. Identity Theft

Scammers might use stolen ID documents to create accounts. If a problem happens, it becomes difficult to track the real person.

4. Phishing

Scammers sometimes create fake versions of well-known exchanges to steal user credentials and crypto.

These problems show why advanced verification is not just helpful — it's necessary.

The Role of Advanced Verification in Fighting Fraud

To protect users, P2P crypto exchanges need more than basic ID checks. Advanced verification means adding extra steps and tools that confirm a person’s real identity and reduce the chances of fraud. Here are the key features that should be part of any P2P crypto exchange development plan.

1. KYC (Know Your Customer) Checks

KYC means collecting user information such as full name, photo ID, and address. Most good exchanges ask for these details before allowing users to trade large amounts. KYC helps:

Confirm the user’s real identity.

Block fake or duplicate accounts.

Keep records for any future investigations.

2. Biometric Verification

Some exchanges ask users to take a selfie or a short video holding their ID. This prevents people from using stolen documents. Face-matching technology can check that the selfie matches the ID photo.

3. Email and Phone Verification

This step ensures that users provide real contact information. It also allows the exchange to alert users of suspicious activity.

4. Two-Factor Authentication (2FA)

2FA adds an extra step when logging in or making a trade. It could be a code sent to your phone or generated by an app. Even if your password is stolen, an attacker can't access your account without the 2FA code.
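
For app-generated codes, the usual scheme is the time-based one-time password (TOTP) from RFC 6238. The sketch below implements it with Python's standard library only, to show what the authenticator app and the server each compute. The function names and the one-step tolerance window are choices made for this example; a real exchange would normally use a vetted library and add rate limiting.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at=None, step=30, digits=6):
    """Time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at is None else at
    counter = max(0, int(now // step))  # number of 30-second steps since epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, at=None, step=30, window=1):
    """Accept codes from the current step and `window` steps either side."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step), submitted)
        for drift in range(-window, window + 1)
    )
```

The server and the user's app share `secret` once at enrollment. After that, a stolen password alone is not enough, because the attacker also needs the code for the current 30-second step.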

5. Behavior Monitoring

Some P2P platforms use software that watches how users behave. For example, it can notice if a user suddenly changes their trading pattern or logs in from a different country. These actions might trigger a warning or temporary lock on the account.

6. User Rating and Review System

Letting users rate each other after a trade helps everyone stay informed. If someone has many bad reviews, others can avoid trading with them. This encourages honest behavior.

7. IP Address and Device Tracking

Tracking the IP address and device type used to log in can help spot fraud. If a user usually logs in from India and suddenly appears in Europe, the system can ask for extra verification.
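
A minimal sketch of that check is shown below. It assumes the platform already resolves each login to a country code and a device identifier (both hypothetical inputs here), and it only decides whether to ask for extra verification; it does not perform the verification itself.

```python
from dataclasses import dataclass, field


@dataclass
class LoginHistory:
    """Countries and devices a user has previously logged in from."""
    countries: set = field(default_factory=set)
    devices: set = field(default_factory=set)


def assess_login(history, country, device_id):
    """Return 'allow' for a familiar login, 'step_up' for an unfamiliar one."""
    if not history.countries and not history.devices:
        return "allow"  # first login: nothing to compare against yet
    if country in history.countries and device_id in history.devices:
        return "allow"
    return "step_up"  # new country or device: ask for extra verification


def record_login(history, country, device_id):
    """Call only after the login, and any step-up check, has succeeded."""
    history.countries.add(country)
    history.devices.add(device_id)
```

Keeping the decision (`assess_login`) separate from the bookkeeping (`record_login`) means a login that fails the extra check never becomes part of the user's trusted history.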

How P2P Crypto Exchange Development Can Prioritize Security

When developing a P2P crypto exchange, safety must be a top priority from day one. Here’s how developers and businesses can keep users protected:

1. Integrate a Trusted KYC Provider

There are many third-party KYC services that can quickly and safely check user IDs and documents. Choose one that supports global users and provides fast results.

2. Add Optional Verification Levels

Some users prefer privacy, so offering different verification levels can help. For example:

Basic: Just an email and phone.

Medium: Adds ID and address verification.

Full: Includes biometric checks and video verification.

The more verification a user completes, the higher their trading limit can be.
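
One way to express these tiers in code is a table of required checks per level plus a limit per level. The check names and the dollar limits below are invented for illustration; an exchange would choose its own.

```python
from enum import IntEnum


class Level(IntEnum):
    NONE = 0
    BASIC = 1
    MEDIUM = 2
    FULL = 3


# Checks a user must have completed to reach each level (hypothetical names).
REQUIRED_CHECKS = {
    Level.BASIC: {"email", "phone"},
    Level.MEDIUM: {"email", "phone", "id_document", "address"},
    Level.FULL: {"email", "phone", "id_document", "address", "biometric", "video"},
}

# Hypothetical daily limits in USD; a real exchange would set its own.
DAILY_LIMIT_USD = {
    Level.NONE: 0,
    Level.BASIC: 500,
    Level.MEDIUM: 5_000,
    Level.FULL: 50_000,
}


def level_for(completed):
    """Highest level whose required checks are all in `completed`."""
    level = Level.NONE
    for candidate in sorted(REQUIRED_CHECKS):
        if REQUIRED_CHECKS[candidate] <= set(completed):
            level = candidate
    return level


def can_trade(completed, amount_usd, traded_today_usd=0):
    """True if the trade keeps the user within their level's daily limit."""
    limit = DAILY_LIMIT_USD[level_for(completed)]
    return traded_today_usd + amount_usd <= limit
```

Because the level is derived from the set of completed checks rather than stored separately, a user who adds ID and address verification moves up automatically.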

3. Use Escrow for Every Trade

A secure escrow system holds the crypto until both the buyer and seller confirm the deal. This avoids cases where someone pays but never receives the coins — or sends crypto and never gets paid.
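
The escrow flow is essentially a small state machine. The sketch below models one possible version of it; the state names and transitions are assumptions for illustration, and a real system would hold the funds on-chain or in a custodial wallet and record who triggered each step.

```python
from enum import Enum


class State(Enum):
    FUNDED = "funded"      # seller's crypto is locked in escrow
    PAID = "paid"          # buyer says the payment has been sent
    RELEASED = "released"  # seller confirmed receipt; crypto goes to the buyer
    DISPUTED = "disputed"  # frozen until support resolves it


# Allowed transitions: current state -> states it may move to.
TRANSITIONS = {
    State.FUNDED: {State.PAID, State.DISPUTED},
    State.PAID: {State.RELEASED, State.DISPUTED},
    State.RELEASED: set(),
    State.DISPUTED: set(),
}


class Escrow:
    def __init__(self, amount):
        self.amount = amount
        self.state = State.FUNDED

    def _move(self, target):
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot go from {self.state.value} to {target.value}")
        self.state = target

    def mark_paid(self):
        self._move(State.PAID)

    def release(self):
        self._move(State.RELEASED)

    def dispute(self):
        self._move(State.DISPUTED)
```

The key property is that `release()` is impossible before `mark_paid()`, and nothing can move once a trade is released or disputed, which is what protects both sides from a counterparty who disappears mid-trade.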

4. Respond to Reports Quickly

There should be a way for users to report suspicious behavior. A trained support team should act fast to freeze accounts, stop trades, and begin investigations.

5. Keep Users Educated

Even the best security tools are useless if users don’t know how to avoid fraud. The exchange should share regular updates, tips, and scam alerts to keep everyone informed.

Final Thoughts: Balancing Freedom and Safety

P2P crypto exchanges are an important part of the digital currency world. They give people control over their trades and offer more options than traditional exchanges. But that control comes with risks.

To make P2P trading safer, advanced verification methods must be used. These tools — from KYC to behavior tracking — can stop fraud before it happens and make it easier to act when things go wrong.

If you're planning to enter the crypto market through a P2P crypto exchange, choose one that values safety and has the right systems in place. And if you're involved in P2P crypto exchange development, focus on adding these safety features from the start. It’s better to prevent fraud than to fix it later.

In the end, the goal is simple: let people trade freely while keeping their money and data safe.

0 notes

Text

7 Reasons Why Dubai Is Ditching Keys: The Rise of Digital Locks by Euro Art

Let’s start with a simple question: How many keys do you carry every day?

A house key, an office key, a gym card, a gate remote… and maybe a backup key hidden in your bag, just in case. It's heavy, inconvenient, and worst of all—primitive.

Now imagine this: What if you could walk through every door in your life using nothing but your fingerprint, a simple PIN, or your phone?

Welcome to Dubai—a city built on ambition, speed, and smart living. Where traditional keys are making way for digital locks, and the security game is being rewritten by brands like Euro Art.

Why Digital Locks? Why Now? Why Euro Art?

Dubai doesn’t wait for the future. It designs it. From autonomous taxis to AI-run city systems, innovation is deeply woven into its DNA. So when it comes to home or commercial security, digital locks aren’t a luxury here—they're an expectation.

But what makes Euro Art a leader in this revolution?

It’s not just about going keyless. It’s about blending high-tech access with refined aesthetics, local know-how, and a user-first design philosophy that fits the Dubai lifestyle like a glove. Whether you live in a sleek Marina apartment, a villa in the desert outskirts, or manage a commercial office, Euro Art delivers locks that are not just smart—but flawlessly smart.

1. The Era of Convenience Is Here: Say Goodbye to Keys

Let’s be honest: keys get lost. They break. They stick in locks. They rattle around in your pocket like outdated tech from the last century.

Now picture this instead:

You walk up to your door.

It senses your fingerprint.

It clicks open—no digging through your bag, no forgotten keys.

Digital locks bring a level of seamlessness to daily life that’s almost addictive. In Dubai, where time is currency and efficiency is a lifestyle, this isn’t a perk—it’s a necessity. With Euro Art’s advanced systems, this isn’t just possible—it’s the new default.

2. Security That Thinks Faster Than You Do

What do most break-ins have in common? Outdated locking systems.

Traditional locks are shockingly easy to bypass, especially in areas where tech-savvy thieves know exactly what they’re doing. Euro Art’s digital locks, on the other hand, are built with multi-layered encryption protocols, anti-peep password functions, and biometric recognition that can’t be duplicated.

With options like:

Fingerprint authentication

RFID card access

Smartphone unlocking

Time-sensitive PIN codes

Auto-locking features

...Euro Art is delivering something far beyond safety. It’s offering intelligent security that adapts to your routine.

3. Built for Dubai: Heat-Tested, Sand-Resistant, Life-Proof

One of the most overlooked aspects of door security in Dubai? The environmental factor.

It’s not just about hot days. It’s about extreme heat, blinding sandstorms, and high humidity—all of which can wreak havoc on regular locking systems.

Euro Art designs and tests its digital locks with Dubai’s harsh conditions in mind. These locks are built from heat-resistant alloys, have IP-rated moisture protection, and come with durable keypads that don't fade under the sun.

No rust. No warping. No tech failure. Just solid performance, year-round.

4. Aesthetic Flex That Matches Dubai’s Design Language

Let’s talk design. Dubai isn’t subtle. It’s bold, modern, and polished—right down to the doorknob.

So, why settle for clunky metal locks when you can have a sleek black touchpad, brushed steel fingerprint module, or gold-trimmed card access panel?

Euro Art believes form should never follow function—it should elevate it.

Whether you're outfitting a modern penthouse, a heritage villa, or a corporate reception area, Euro Art offers designs that blend seamlessly with your interior narrative.

Modern minimalism? Covered. Futuristic luxe? Covered. Understated elegance? Yes, that too.

5. Tailored Access for Everyone—Yes, Even the Housekeeper

Here’s where things get really interesting.

Digital locks aren’t just about you entering your space. They're about how others access it, too.

With Euro Art, you can generate:

Temporary PINs for cleaners or contractors

Guest access codes that expire in hours

Log reports that tell you who entered, when, and how

Mobile app notifications in real-time

It’s security that doesn’t just protect—it communicates. That’s a level of control old-school locks could never provide.
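
To make the idea of expiring guest codes concrete, here is a generic sketch of how temporary PINs with an entry log could work. It is not Euro Art's software or API; every name in it is hypothetical.

```python
import secrets
from datetime import datetime, timedelta, timezone


class AccessCodes:
    """Temporary PINs with an expiry time and an entry log."""

    def __init__(self):
        self._codes = {}  # pin -> (label, expires_at)
        self.log = []     # (timestamp, label or None, granted)

    def issue(self, label, valid_for, now=None):
        """Create a 6-digit PIN for `label` that expires after `valid_for`."""
        now = now or datetime.now(timezone.utc)
        pin = f"{secrets.randbelow(10**6):06d}"
        while pin in self._codes:  # avoid reusing an existing PIN
            pin = f"{secrets.randbelow(10**6):06d}"
        self._codes[pin] = (label, now + valid_for)
        return pin

    def try_unlock(self, pin, now=None):
        """Check a PIN and record the attempt, whether or not it succeeds."""
        now = now or datetime.now(timezone.utc)
        label, expires_at = self._codes.get(pin, (None, None))
        granted = label is not None and now < expires_at
        self.log.append((now, label, granted))
        return granted
```

A cleaner's code issued with `timedelta(hours=2)` simply stops working after two hours, and the log answers "who entered, and when" without anyone handing over a physical key.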

6. Case Study: A Modern Villa in Dubai Upgrades to Digital

Client: A real estate investor with several high-end rental properties.

Problem: Traditional locks made property management chaotic. Key handovers, lost copies, and rekeying became a constant expense.

Solution: Switched to Euro Art’s biometric digital locks across all properties.

Results:

Saved over 60 hours per year in key-related logistics

Cut down 90% of tenant lockouts

Increased property appeal to tech-savvy tenants

Client Testimonial: “I was skeptical at first, but after one installation, I replaced every lock across my villas. Euro Art’s smart system makes managing multiple homes feel effortless.”

7. Stats That Seal the Deal

Let’s talk numbers—because Dubai is a data-driven city, and the data on digital locks speaks volumes:

73% of new high-end residential units in Dubai now feature digital lock systems as standard.

82% of homeowners say convenience is the primary reason they switched.

Digital locks reduce break-in risks by over 60% compared to traditional locks.

Property value increases by up to 5% when upgraded with smart access systems.

Euro Art is not just following this trend—it’s engineering it.

Real People, Real Impact: Euro Art in Action

Nadia – Interior Designer in Dubai

“As someone who designs luxury interiors, the last thing I want is a clunky old lock ruining the aesthetic. Euro Art's digital locks are sleek, modern, and actually beautiful. My clients love them.”

Ahmed – IT Executive

“I installed Euro Art’s smart lock in my apartment, and it’s changed everything. I never worry about losing keys. I can open my door with my phone or fingerprint. Plus, the app tells me if someone’s tried to enter.”

Is It Time for You to Go Digital? Ask Yourself:

Are you tired of carrying keys everywhere?

Do you worry about unauthorized access when you’re away?

Do you want to control and monitor entry remotely?

Would you like your front door to reflect your modern lifestyle?

If you said yes to even one of these, it’s time to explore Euro Art’s range of digital locks tailored for Dubai living.

The Final Lockdown: Why Euro Art Is Dubai’s Trusted Name in Digital Access

In a city where innovation is stitched into the skyline, where smart homes are no longer futuristic dreams but everyday standards—digital locks are simply the next logical step.

But not all digital locks are created equal.

Euro Art brings the craftsmanship, the design, the durability, and the intelligence required for this environment. It’s not just about security. It’s about experiencing your space differently—with control, confidence, and elegance.

So next time you reach for a key, ask yourself:

Why do that… when you could just touch and walk through?

Frequently Asked Questions (FAQs) – Digital Locks Dubai by Euro Art

1. Are digital locks reliable in Dubai’s hot climate?

Absolutely. Euro Art digital locks are designed to withstand high temperatures, humidity, and dust, making them ideal for Dubai’s environment. Our locks are heat-tested and built with durable, weather-resistant materials.

2. Can I still use a key if the digital system fails?

Yes. Most Euro Art digital locks come with a mechanical key override for emergencies. However, our systems are equipped with backup power options and low-battery alerts to avoid lockouts.

3. How secure are Euro Art digital locks compared to traditional locks?

Euro Art locks use advanced encryption, biometric access, and anti-tamper technology. They’re significantly harder to breach than traditional locks, offering a much higher level of security.

4. Are these locks easy to install in existing doors?

Yes, our digital locks are designed for seamless retrofitting. Whether it’s a residential door or a commercial entry, installation is quick and hassle-free.

5. Can I monitor access remotely?

With select Euro Art models, yes. Our Wi-Fi or Bluetooth-enabled locks let you control access via your smartphone and track who enters and when, offering total peace of mind.

6. What happens if I lose my phone or forget the code?

You can reset access credentials easily, and alternate entry methods like fingerprint or backup key ensure you’re never locked out.

0 notes

Text

The Fact About Samsung That No One Is Suggesting

Apple Store App: Shop in the Apple Store app, tailored specifically for you.

Just add a trade-in when you select a new product. Once your eligible device has been received and verified, we’ll credit the value to your payment method. Or choose to check out with Apple Card Monthly Installments and we’ll apply the credit instantly. Terms apply.

We design our hardware and software together for a seamless experience. Want to share your contact info? Hold your iPhone near theirs. New AirPods? It’s a one-tap setup. And regular iOS updates keep your iPhone feeling new for years to come.

Create a Genmoji right in the keyboard to match any conversation. Want to create a rainbow cactus? You got it. Just provide a description to see a preview.

When you buy your new Mac directly from Apple, you’ll get access to Personal Setup. In these online sessions, a Specialist can guide you through setup and data transfer or focus on features that help you make the most of your Mac. Best of all, you can join whenever works for you, from wherever you are.

Please note, notification emails come from a DO NOT REPLY address; you must log in on the community page in order to respond.

iPad and Mac are designed to work together to form the ultimate creative setup. Sketch on your iPad and have it appear instantly on your Mac with Sidecar.

The screenshot you provided states that you must update your payment method before January 30th to avoid account termination.

Posted by waylonjenningsfan five hours ago in Galaxy S25: My god, the cameras on this phone are ridiculously crazy. I can see a car up close from a mile away, and the cameras are so clear and smooth compared to my...

Ditto. Straight Google Workspace with MDM Advanced, not EMM, but it errors creating the work profile and won't let the account finish signing in. Every possible setting to allow this is set, it works fine on older devices, and it even errors when testing third-party apps that try to create the work profile without connecting to my specific Google Workspace.

You can also copy images, video, or text from your iPhone and paste it all into a different app on your Mac. And with iCloud, you can access your files from either device.

Samsung, at some point, may charge a fee for some or all AI features, maybe after 2025. But I don't think anyone really knows until Samsung officially speaks on this.

I tried it then on my Note 20 Ultra and S24 Ultra and it worked fine. Seems like there is an issue with S25 software compatibility or something.

All messages sent with iMessage use end-to-end encryption between devices, so they’re only seen by those you send them to. And Mail Privacy Protection hides your IP address, so senders can’t determine your location.

0 notes

Text

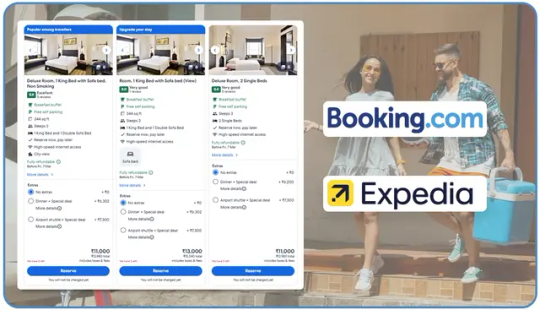

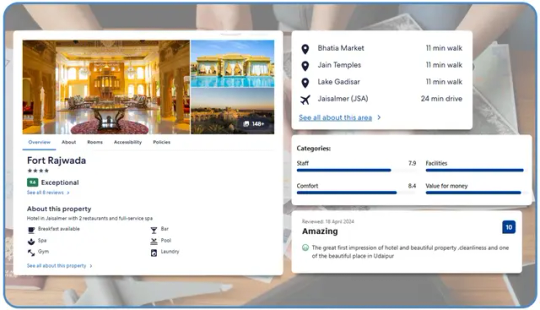

How Does a Travel Scraping API Service Transform Travel Data from Booking & Expedia?

Introduction

The travel industry is inundated with vast amounts of data in the modern digital landscape. This information is crucial for making informed business decisions, from hotel prices and flight schedules to customer reviews and seasonal trends. However, retrieving and analyzing data from leading platforms like Booking.com and Expedia.com can be complex without the appropriate tools. This is where a Travel Scraping API Service proves invaluable, transforming how businesses extract, process, and utilize travel data to maintain a competitive advantage.

Understanding the Power of Travel Data

The travel industry generates an enormous volume of data every day. Every search, booking, and review contributes to this expanding information pool. For businesses in this sector, effectively accessing and analyzing this data is essential for:

Gaining deeper insights into market dynamics and consumer behavior.

Tracking pricing trends and assessing competitive positioning.

Identifying new market opportunities.

Refining revenue management strategies for maximum profitability.

Elevating the customer experience through advanced personalization.

Leading online travel agencies (OTAs) such as Booking.com and Expedia.com hold valuable data. However, obtaining this information isn’t always straightforward, as official channels often limit access. As a result, data extraction becomes a critical strategy for businesses aiming to maintain a competitive edge.

What is a Travel Scraping API Service?

A Travel Scraping API Service is a powerful solution that efficiently extracts data from major travel platforms like Booking.com and Expedia.com. By leveraging advanced data collection techniques, this service gathers, organizes, and delivers valuable travel-related data in a structured format for businesses.

Unlike traditional manual data collection, which can be slow and prone to errors, a Travel Scraping API Service automates the process, ensuring real-time access to critical insights, including:

Hotel availability and pricing

Flight schedules and fares

Vacation rental options

Customer reviews and ratings

Destination popularity

Seasonal booking patterns

These services function through APIs (Application Programming Interfaces), enabling smooth integration with business systems and applications and ensuring companies have the latest travel data at their fingertips.

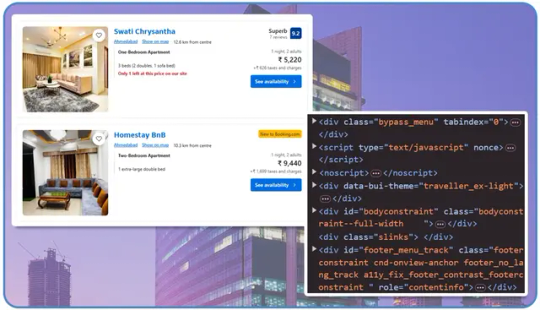

The Transformation Journey: From Raw Data to Actionable Insights

This process involves extracting, processing, and analyzing raw data from travel platforms to generate meaningful insights that drive strategic decision-making.

Data Extraction from Major Travel Platforms

The journey begins with Booking.com API Scraping and Expedia.com Data Scraping, leveraging advanced techniques to ensure seamless data retrieval.

This process involves:

Automated collection of both structured and unstructured data.

Managing dynamic content loaded via JavaScript.

Navigating pagination and complex site architectures.

Implementing strategies to avoid detection and IP blocks.

Handling session cookies and authentication for continuous access.

Professional Travel Industry Data Scraping services utilize sophisticated algorithms and proxy solutions to guarantee reliable and consistent data extraction without disrupting the source websites.

Data Processing and Structuring

Once the raw data is collected, it must be transformed into a structured, usable format.

This involves:

Cleaning and normalizing data for accuracy.

Eliminating duplicates and irrelevant entries.

Standardizing formats such as dates and currencies.

Categorizing and tagging data for better organization.

Building relational data structures for in-depth analysis.

For instance, Hotel Price Data Scraping results must be standardized across room types, occupancy levels, and included amenities to ensure precise and meaningful comparisons.
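
The cleaning steps above can be sketched as a small normalization pass. The record fields, the accepted date formats, and the exchange rates below are all assumptions made for the example (the rates are placeholder numbers, not real quotes); an actual pipeline would take them from its own schema and a live rate source.

```python
from datetime import datetime

# Placeholder conversion rates to USD, for illustration only.
USD_PER_UNIT = {"USD": 1.0, "EUR": 1.1, "GBP": 1.25}

# Accepted input formats; slash dates are assumed to be day-first here.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return the date as ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def normalize(records):
    """Clean, standardize, and de-duplicate scraped hotel price records."""
    seen = set()
    rows = []
    for record in records:
        row = {
            "hotel": " ".join(record["hotel"].split()),  # collapse whitespace
            "check_in": parse_date(record["check_in"]),
            "room_type": record["room_type"].strip().lower(),
            "price_usd": round(
                record["price"] * USD_PER_UNIT[record["currency"].upper()], 2
            ),
        }
        key = (row["hotel"].casefold(), row["check_in"], row["room_type"])
        if key in seen:
            continue  # same hotel, date, and room type already kept
        seen.add(key)
        rows.append(row)
    return rows
```

Only after this step do two listings for the same room on the same night compare like for like, regardless of how each site formatted the date or quoted the price.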

Data Analysis and Insight Generation

With processed data in place, the next step is extracting actionable insights to drive informed decision-making.

This includes:

Analyzing price trends across different booking windows.

Assessing competitive positioning in key markets.

Identifying demand patterns for popular destinations.

Examining customer sentiment through review analysis.

Understanding the correlation between pricing strategies and booking volumes.

Leading Travel Scraping API Service providers integrate AI and machine learning algorithms to refine data analysis further. These technologies uncover hidden patterns and trends, offering deeper intelligence beyond traditional analytical methods.
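
As a simple, non-ML example of the first item, the sketch below averages observed prices by booking window (how far ahead of check-in the price was seen). The window boundaries and the tuple layout are assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Upper bound in days (inclusive) and label for each booking window.
WINDOWS = ((7, "0-7 days"), (30, "8-30 days"), (90, "31-90 days"))


def window_label(lead_days):
    """Map a lead time in days to its booking-window label."""
    for upper, label in WINDOWS:
        if lead_days <= upper:
            return label
    return "91+ days"


def average_price_by_window(observations):
    """Average price per booking window.

    observations: iterable of (scraped_on, check_in, price) tuples,
    where the first two items are datetime.date objects.
    """
    buckets = defaultdict(list)
    for scraped_on, check_in, price in observations:
        lead_days = (check_in - scraped_on).days
        if lead_days < 0:
            continue  # price seen after check-in; not a booking window
        buckets[window_label(lead_days)].append(price)
    return {label: round(mean(prices), 2) for label, prices in buckets.items()}
```

Comparing these averages across windows for the same property shows whether prices tend to rise or fall as the stay date approaches, which is the kind of trend a revenue manager would act on.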

Key Data Types Extracted from Travel Websites

Essential travel data categories gathered from online sources for market insights and strategic decisions.

Hotel Data Extraction

Collecting real-time hotel-related data, including pricing, availability, reviews, and amenities, to analyze market trends and improve decision-making.

Hotel Price Data Scraping provides critical insights, including:

Room rates across various dates and booking periods.

Availability trends and demand fluctuations.

Discount strategies and promotional offers.

Package deals and bundled services.

Loyalty program benefits for frequent guests.

Amenity offerings and service comparisons.

Guest reviews and ratings shaping consumer perception.

This data enables hotels to refine pricing models, track competitor strategies, and identify emerging market opportunities.

Flight Information

Essential travel data covering flight schedules, pricing, availability, and airline details, helping businesses optimize travel strategies.

Flight Data Extraction uncovers key insights such as:

Fare variations across different booking windows.

Route popularity and demand trends.

Airline competitive positioning and pricing strategies.

Schedule changes and operational adjustments.

Seasonal demand patterns affecting ticket pricing.

Ancillary service offerings like baggage fees and seat selection.

Airlines and travel agencies leverage this data for strategic route planning, optimized pricing, and targeted marketing.

Vacation Rentals

Short-term lodging options, such as apartments, houses, or villas, are listed on platforms like Airbnb and Vrbo, catering to travelers seeking flexible accommodations.

Vacation Rental Data Scraping delivers valuable insights into:

Property availability and booking trends.

Pricing strategies based on demand and seasonality.

Location popularity among travelers.

Amenity preferences shaping guest expectations.

Host ratings and service reliability.

Booking patterns across platforms.

Seasonal trends affecting rental demand.

This intelligence is essential for property managers, investors, and vacation rental platforms to optimize pricing, enhance guest experiences, and improve occupancy rates.

Optimizing Travel Data Extraction with Advanced Web Scraping Services

Access to accurate, real-time data is crucial in the competitive travel industry. Businesses leverage Travel Data Scraping Services to extract and analyze key information from top travel platforms. This includes tracking airfare trends, monitoring hotel availability, and analyzing customer sentiment for informed decision-making.

A primary use of web scraping in travel is Dynamic Pricing Data Extraction. Airlines, hotels, and OTAs adjust prices based on demand and competition. Real-time data extraction helps businesses optimize pricing, develop competitive strategies, and boost revenue.

Selecting the Best Web Scraping Tools For Travel Websites is essential for handling complex site structures, dynamic content, and anti-scraping defenses. These tools ensure efficient data retrieval from Booking.com, Expedia.com, and Airbnb.

To maintain efficiency, businesses must follow Travel Industry Data Scraping Best Practices, like rotating proxies, AI-driven parsing, and automated scraping schedules. Ethical data collection ensures compliance with regulations and platform terms.

For tailored solutions, Custom API Scraping Solutions For Travel Websites provide real-time structured data, benefiting travel aggregators, comparison websites, and analytics firms seeking to enhance their offerings.

Extracting Hotel And Flight Prices From APIs

Extracting Hotel And Flight Prices From APIs requires a strategic approach to ensure accuracy and efficiency.

Here’s a breakdown of key considerations:

Understanding the Data Structure: Analyzing how travel websites organize and present hotel and flight pricing information.

Identifying API Endpoints: Using browser developer tools to locate and access relevant API endpoints.

Managing Authentication & Sessions: Implementing authentication mechanisms and handling session persistence to maintain seamless data retrieval.

Parsing Complex JSON or XML Responses: Structuring and processing intricate data formats to extract relevant pricing details.

Handling Rate Limits & Request Throttling: Optimizing request frequency to comply with API restrictions and prevent access blocks.

Businesses can automate these processes by leveraging professional services for seamless and scalable data extraction, even as travel websites update their security protocols and data structures.
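As a rough illustration of the rate-limit point above, the sketch below spaces out calls and computes exponential backoff delays for use after a "too many requests" response. The class and function names are hypothetical, and the intervals are placeholders; real limits depend on the API being called.

```python
import time


class Throttle:
    """Allow at most one call every `min_interval` seconds."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock   # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def backoff_delays(base=1.0, factor=2.0, retries=4, cap=30.0):
    """Delays (in seconds) to wait after successive rate-limit responses."""
    return [min(cap, base * factor ** attempt) for attempt in range(retries)]
```

Calling wait() before each request keeps the steady-state rate under the limit, while the backoff list handles the case where the server still pushes back.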

Web Scraping for Market Trend Analysis in Travel

Leveraging Web Scraping For Market Trend Analysis In Travel allows businesses to systematically gather vast amounts of data over time, unveiling critical patterns and industry shifts.

By extracting and analyzing real-time travel-related data, companies can gain valuable insights, including:

Tracking price fluctuations across booking windows to optimize pricing strategies and maximize revenue.

Monitoring seasonal demand variations to align marketing efforts with peak travel periods.

Identifying emerging destinations to stay ahead of shifting traveler preferences.

Analyzing competitor promotional strategies to refine offers and enhance competitive positioning.

Assessing the impact of external events on travel demand to make informed, data-driven decisions.

By harnessing these insights, businesses can proactively adapt to market changes, ensuring they remain competitive and responsive rather than reactive.

How Web Data Crawler Can Help You?

We specialize in delivering comprehensive data extraction solutions tailored for the travel industry. With a blend of technical expertise and industry knowledge, our team ensures high-quality, reliable data that empowers businesses to make informed decisions and drive growth.

Our Services Include:

Customized scraping solutions designed to meet your unique business requirements

Regular data delivery via API or structured reports for seamless access

Comprehensive data cleaning and normalization to ensure accuracy and consistency

Integration support to align with your existing systems effortlessly

Ongoing maintenance to guarantee uninterrupted data flow

Unlike standard Web Scraping API providers, we recognize the complexities of travel data and the challenges of extracting information from platforms like Booking.com and Expedia.com.

Our solutions are crafted to deliver:

Superior data quality through advanced validation techniques

Exceptional reliability with built-in redundancy for uninterrupted service

Customizable data formats tailored to your specific business needs

Scalable infrastructure that evolves as your requirements grow

Expert support from professionals with deep technical and industry expertise

With us, you gain a trusted partner dedicated to providing actionable insights and scalable solutions that fuel business success.

Conclusion

In today’s competitive travel industry, data is the key to success. An effective Travel Scraping API Service converts raw information into actionable insights, helping businesses make informed decisions across operations.

From refining pricing strategies to spotting emerging market opportunities, data from platforms like Booking.com and Expedia.com fuels sustainable growth and a competitive edge.

Unlock the potential of travel data with us. Our experts will craft a customized solution tailored to your data needs while ensuring compliance with industry standards. Don’t let valuable insights go untapped.

Contact Web Data Crawler today to transform data into your most powerful asset and drive success in the travel industry.

Originally published at https://www.webdatacrawler.com.

#APIScrapingServices#BookingComAPIScraping#ExpediaComDataScraping#TravelIndustryDataScraping#HotelPriceDataScraping#FlightDataExtraction#VacationRentalDataScraping#WebScrapingServices#TravelDataScrapingServices#DynamicPricingDataExtraction#BestWebScrapingToolsForTravelWebsites#HowToScrapeBookingComAndExpediaData#ExtractingHotelAndFlightPricesFromAPIs#WebScrapingForMarketTrendAnalysisInTravel#TravelIndustryDataScrapingBestPractices#CustomAPIScrapingSolutionsForTravelWebsites#WebDataCrawler#WebScrapingAPI


Text

How to Install & Optimize Your RFID Tag Reader System

Introduction

Radio Frequency Identification (RFID) technology has revolutionized asset tracking, inventory management, and supply chain operations. However, the efficiency of an RFID tag reader system depends on proper installation and optimization. In this comprehensive guide, we will walk you through the best practices for setting up and enhancing your RFID reader system to ensure maximum efficiency and accuracy.

Step 1: Understanding RFID Components

Before installation, it’s crucial to understand the essential components of an RFID system:

RFID Tags: These contain embedded microchips and antennas that store data.

RFID Readers: Devices that emit radio waves to communicate with RFID tags.

Antennas: Transmit signals between the tags and the reader.

Middleware & Software: Process and interpret RFID data for integration with business systems.

Step 2: Selecting the Right RFID System

Choosing the right RFID system is critical for achieving optimal results. Consider the following factors:

Frequency Range: RFID operates in different frequency bands: Low Frequency (LF), High Frequency (HF), and Ultra High Frequency (UHF). Choose one based on your operational needs.

Read Range Requirements: Determine whether you need short-range or long-range reading capabilities.

Environmental Conditions: Harsh environments may require ruggedized RFID tags and readers.

Data Storage & Integration: Ensure the system can seamlessly integrate with your ERP or inventory management system.

Step 3: Planning RFID Reader Placement

Proper placement of RFID readers is crucial for ensuring accurate tag detection. Consider these best practices:

Minimize Interference: Avoid placing readers near metallic surfaces, electronic equipment, or sources of radio noise.

Optimize Read Zones: Position antennas strategically to maximize coverage and minimize blind spots.

Test Placement: Conduct a site survey to identify optimal reader locations before final installation.

Consider Orientation: The angle and distance between tags and readers affect read accuracy.

Step 4: Installing RFID Hardware

1. Setting Up RFID Readers & Antennas

Mount readers securely in designated locations.

Connect antennas to readers using RF coaxial cables.

Ensure antennas are aligned properly for maximum signal strength.

2. Deploying RFID Tags

Select Appropriate Tags: Choose tags based on application needs (passive, active, or semi-passive).

Ensure Proper Tag Placement: Place tags where they are easily scannable and not obstructed by materials that can interfere with radio signals.

Test Tag Readability: Before full deployment, test tags under real-world conditions to ensure they are readable from different angles and distances.

3. Connecting to the Network

Connect readers to the local network via Ethernet, Wi-Fi, or Bluetooth.

Configure IP addresses and establish communication with the central server.

Ensure adequate power supply to all components.

Step 5: Configuring & Optimizing the RFID System

After installation, fine-tuning the system ensures optimal performance.

1. Configuring RFID Reader Settings

Adjust read power settings to avoid interference and improve accuracy.

Set up data filtering to eliminate duplicate or incorrect tag reads.

Define read zones to control where and when tags are read.
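The data-filtering step above can be sketched as follows. This is a minimal example under assumptions: most readers and middleware offer their own filtering settings, the two-second window is arbitrary, and the validity check assumes 96-bit EPC identifiers written as 24 hexadecimal characters.

```python
class DuplicateReadFilter:
    """Suppress repeat reads of the same tag within `window` seconds."""

    def __init__(self, window=2.0):
        self.window = window
        self._last_seen = {}  # tag_id -> timestamp of the most recent read

    def accept(self, tag_id, timestamp):
        """Return True if the read should be reported, False if it is a duplicate."""
        last = self._last_seen.get(tag_id)
        self._last_seen[tag_id] = timestamp
        return last is None or timestamp - last >= self.window


def is_valid_epc(tag_id, length=24):
    """Reject malformed reads; assumes a 96-bit EPC written as 24 hex characters."""
    return len(tag_id) == length and all(c in "0123456789abcdefABCDEF" for c in tag_id)
```

Because the timestamp is refreshed on every read, a tag that sits continuously in the read zone is reported once and then stays suppressed until it has been out of view for at least the window.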

2. Software Integration

Connect the RFID system to inventory management, asset tracking, or ERP software.

Implement automated data logging and reporting for streamlined operations.

Ensure real-time data synchronization with enterprise systems.

3. Conducting Performance Testing

Run benchmark tests to measure read rates and system efficiency.

Identify and troubleshoot weak signal areas or interference zones.

Conduct stress tests to determine system reliability under high workloads.

Step 6: Maintaining & Troubleshooting Your RFID System

Regular maintenance is essential to prevent downtime and performance issues.

1. Routine Inspections

Check for loose connections, damaged antennas, or misplaced readers.

Ensure RFID tags are intact and not obstructed by environmental factors.

2. System Performance Monitoring

Use RFID analytics tools to monitor tag reads and identify irregularities.

Regularly update firmware and software to keep the system up-to-date.

3. Troubleshooting Common Issues

Interference Problems: Reposition readers and reduce external RF noise sources.

Tag Read Failures: Adjust reader power settings and verify tag placement.

Connectivity Issues: Restart network connections and check system logs for errors.

Conclusion

Installing and optimizing an RFID tag reader system requires careful planning, proper hardware setup, and continuous performance monitoring. By following these best practices, businesses can achieve enhanced tracking efficiency, improved accuracy, and seamless data integration. Implementing these steps will ensure your RFID system operates at peak performance, leading to increased operational efficiency and cost savings.


Text

How To Use google map data extractor software Online- R2media


Unlock Targeted Leads with Google Map Data Extractor: A Game-Changer for Businesses

In today’s competitive digital landscape, having access to accurate and high-quality business leads is essential for success. Whether you’re a digital marketer, sales professional, or business owner, finding the right prospects can make all the difference. That’s where Google Map Data Extractor from R2Media comes in — a powerful tool designed to automate lead generation by extracting valuable business information directly from Google Maps.

What is Google Map Data Extractor?

Google Map Data Extractor is an advanced software that collects crucial business and contact details from Google Maps. It helps users extract data such as:

🏢 Business names

📍 Addresses

📞 Phone numbers

📧 Email IDs (if available)

🌐 Website URLs

⭐ Ratings and reviews

🏷️ Business categories

🌍 Geographical coordinates (latitude & longitude)

This tool is widely used by businesses looking to expand their reach, generate leads, and optimize marketing campaigns efficiently.

How Does Google Map Data Extractor Work?

The software simplifies the process of data collection with automation, making it easy to extract business details in just a few steps:

🔎 Keyword Input — Enter search queries such as “restaurants in New York” or “real estate agents in Mumbai” into the software.

🤖 Automated Scraping — The tool scans Google Maps and extracts all relevant business information.

🛠️ Data Processing — It filters and organizes the extracted data, removing duplicates and irrelevant entries.

📂 Exporting Data — The data can be exported in CSV or Excel format for seamless integration into marketing or sales tools.

🚀 Utilization — Use the extracted data for lead generation, email marketing, cold calling, competitor analysis, and market research.

By automating this process, businesses can save time and effort while accessing up-to-date and accurate business information. 🚀
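The processing and export steps above might look something like the sketch below. It does not describe how R2Media's software works internally; the field names and the duplicate rule (same name and same phone digits) are assumptions chosen for illustration.

```python
import csv
import io

# Assumed column set for the export.
FIELDS = ["name", "address", "phone", "website"]


def dedupe(listings):
    """Keep the first listing for each (lowercased name, phone digits) pair."""
    seen, unique = set(), []
    for item in listings:
        digits = "".join(ch for ch in item.get("phone", "") if ch.isdigit())
        key = (item["name"].strip().lower(), digits)
        if key not in seen:
            seen.add(key)
            unique.append(item)
    return unique


def to_csv(listings):
    """Serialize listings to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(listings)
    return buffer.getvalue()
```

Comparing phone numbers by digits only means "(212) 555-0100" and "212-555-0100" count as the same business.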

How Many Leads Can You Extract in One Search?

The number of leads collected depends on multiple factors:

🔢 Google Maps Search Limit — Google usually displays up to 300 results per search.

💻 Software Capabilities — R2Media’s extractor can run multiple searches to collect thousands of leads.

🎯 Search Filters — Broad searches (e.g., “restaurants in India”) yield more results, while niche searches (e.g., “vegan restaurants in Mumbai”) provide highly targeted leads.

🛡️ Google Restrictions — Excessive scraping in a short period may trigger Google’s security measures. Using proxies or rotating IPs can help avoid limitations.

On average, users can extract hundreds to thousands of leads per session, maximizing their lead generation efforts.

Is It Legal to Use Google Map Data Extractor?

Using data extraction tools falls into a legal gray area. While collecting publicly available business information for personal use, research, and marketing is generally acceptable, users must ensure:

⚖️ Compliance with Google’s Terms of Service — Excessive scraping may violate their policies.

✉️ Ethical Use of Data — Avoid spamming or misusing extracted contact details.

🔐 Data Protection Laws — Adhere to GDPR, CCPA, or local data privacy laws when handling personal data.

To stay compliant, it is recommended to use the tool responsibly and reach out to businesses ethically.

Why Choose R2Media’s Google Map Data Extractor?

⚡ High-speed data extraction with accurate results.

🖥️ User-friendly interface with simple search functionalities.

📊 Ability to export data in multiple formats.

🔍 Advanced filtering for better lead segmentation.

💰 Affordable and scalable solution for businesses of all sizes.

Final Thoughts

If you’re looking for an efficient way to gather targeted business leads, R2Media’s Google Map Data Extractor is a must-have tool. Whether you’re in sales, marketing, or market research, this software helps automate the lead generation process, saving time and effort.

Ready to supercharge your outreach? Try Google Map Data Extractor today and take your business to the next level!

🔗 Learn more here 📺 Watch the tool in action: YouTube Video


Text

How to use Python combined with a proxy to scrape Yelp data

As an online business evaluation platform, Yelp gathers a large number of users’ evaluations, ratings, addresses, business hours and other detailed information on various businesses. This data is extremely valuable for market analysis, business research and data-driven decision-making. However, directly scraping data from the Yelp website may be subject to challenges such as access frequency restrictions and IP bans. In order to collect Yelp data efficiently and stably, this article will introduce how to use Python combined with a proxy to scrape Yelp data.

Preparation

1. Install Python and necessary libraries

Make sure the Python environment is installed. Python 3.x is recommended. Install necessary libraries such as requests, beautifulsoup4, pandas, etc. for HTTP requests, HTML parsing, and data processing.

2. Get a proxy

Since scraping data directly from Yelp may be subject to access frequency restrictions, using a proxy can spread requests across addresses and reduce the risk of IP blocking. You can get proxies from free proxy websites or from paid proxy providers, but the stability and speed of free proxies are often not guaranteed. For high-quality data scraping tasks, a paid proxy service is recommended.

Writing data scraping scripts

1. Setting up proxies

When using the requests library to make HTTP requests, configure the proxy by setting the proxies parameter.
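A minimal sketch of this setup is shown below. The helper names are hypothetical and the proxy address in the usage note is a placeholder from a documentation range; the only requests feature relied on is the proxies argument of requests.get, which takes a mapping from scheme to proxy URL.

```python
def build_proxies(host, port, username=None, password=None):
    """Return a mapping in the shape the requests `proxies` parameter expects."""
    auth = f"{username}:{password}@" if username and password else ""
    proxy_url = f"http://{auth}{host}:{port}"
    # The same HTTP proxy is used for both plain and TLS traffic.
    return {"http": proxy_url, "https": proxy_url}


def fetch(url, proxies, timeout=10):
    """Fetch a page through the proxy and return its body as text."""
    # Imported here so build_proxies() works even where requests is not installed.
    import requests

    response = requests.get(
        url,
        proxies=proxies,
        timeout=timeout,
        headers={"User-Agent": "Mozilla/5.0"},  # placeholder user agent
    )
    response.raise_for_status()
    return response.text
```

Usage would look like fetch("https://www.yelp.com/", build_proxies("203.0.113.10", 8080)), with the host and port replaced by values from your proxy provider.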

2. Parse HTML content

Use the BeautifulSoup library (from bs4 import BeautifulSoup) to parse HTML content and extract the required data.

3. Handle paging and dynamic loading

Yelp search results are usually displayed in pages, and some content may be dynamically loaded through JavaScript. For paging, you can implement it by looping through different URLs. For dynamically loaded content, you can consider using browser automation tools such as Selenium to simulate real user operations.
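For the paging part, looping through URLs can be sketched like this. The query parameter names (find_desc, find_loc, start) and the page size of 10 are assumptions about how Yelp's search URLs are laid out; check them against the live site before relying on them.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.yelp.com/search"


def search_page_urls(term, location, pages, page_size=10):
    """Build one search URL per results page using an offset parameter."""
    urls = []
    for page in range(pages):
        query = urlencode({
            "find_desc": term,          # assumed name of the search-term parameter
            "find_loc": location,       # assumed name of the location parameter
            "start": page * page_size,  # assumed offset-based paging
        })
        urls.append(f"{BASE_URL}?{query}")
    return urls
```

Each URL can then be fetched and parsed in turn, stopping early when a page comes back with no results.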

Optimize crawling strategy

1. Rotate proxy

Avoid using the same proxy IP for a long time. Regularly changing the proxy IP can reduce the risk of being blocked. You can write a script to automatically obtain a new proxy IP from the proxy IP pool.

2. Set a reasonable request interval

Avoid too frequent requests. Set a reasonable request interval according to Yelp’s anti-crawling strategy.

3. Handle abnormal situations

Various abnormal situations may be encountered during the scraping process, such as network request timeout, proxy failure, etc. It is necessary to write corresponding exception handling logic to ensure the robustness of the scraping process.
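The three points in this section (rotation, request intervals, and exception handling) can be combined in one small helper. This is a sketch, not a definitive implementation: fetch_with_rotation is a hypothetical name, the delay range is arbitrary, and fetch is whatever function you use to make a request through a given proxy.

```python
import itertools
import random
import time


def fetch_with_rotation(url, proxies, fetch, max_attempts=3,
                        delay_range=(1.0, 3.0), sleep=time.sleep):
    """Try `url` through successive proxies until one succeeds.

    `fetch(url, proxy)` is a caller-supplied function that returns the page
    body or raises an exception on timeout, proxy failure, or a block.
    """
    if not proxies:
        raise ValueError("at least one proxy is required")
    rotation = itertools.cycle(proxies)  # round-robin over the pool
    last_error = None
    for _ in range(max_attempts):
        proxy = next(rotation)
        try:
            return fetch(url, proxy)
        except Exception as error:  # in real code, narrow this to your client's errors
            last_error = error
            sleep(random.uniform(*delay_range))  # randomized pause before the next proxy
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error
```

Randomizing the pause avoids a perfectly regular request rhythm, and chaining the last error onto the RuntimeError keeps the underlying cause visible in the traceback.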

Storing and analyzing data

1. Data storage

Store the scraped data in a local file or database for subsequent processing and analysis. You can use the pandas library to store the data as a CSV or Excel file.

2. Data cleaning and analysis

Clean and process the scraped data by removing duplicates, normalizing formats, and so on. Then you can use data analysis tools and techniques to analyze and visualize the data.

Comply with laws, regulations and ethical standards

When scraping Yelp data, be sure to comply with relevant laws, regulations and ethical standards. Respect the privacy policy and robots.txt file of the Yelp website, and do not use the scraped data for illegal purposes or infringe on the rights of others.

Conclusion

By using Python in combination with proxies to scrape Yelp data, you can efficiently and stably collect rich business evaluation data. This data is extremely valuable for market analysis, business research, and data-driven decision-making. However, during the scraping process, you need to comply with laws, regulations and ethical standards to ensure that the data is collected legally.


Text

High duplication of HTTP Proxies IPs used for crawling? How to solve it?

As network technology has advanced, crawlers have come into wide use in many fields. To avoid being blocked by a website's anti-crawling mechanism, many developers route their crawlers through proxy IPs to disguise the crawler's real identity. Many of them then find that the proxy IPs they are given repeat far more often than expected, which is a real headache. This post looks at why the proxy IP duplication rate is so high and how to solve the problem.

Why is the proxy IP duplication rate high?

Have you ever wondered how you can still be "caught" even though you are using proxies? The root of the problem is a high rate of IP duplication. The following causes are the most important:

1. Proxy IP resources are scarce and competition is fierce

2. Free proxies "squeeze" shared resources

Many developers choose free proxy IPs, but free providers often assign the same IP to many different users in order to save resources. You don't have to spend money, but these IPs tend to bring more trouble, and the crawler's effectiveness drops sharply.

3. Crawling workload and frequent repetitive requests

A crawler's job usually involves a lot of repetitive crawling. For example, you may need to request the same web page frequently to pick up the latest data. Even if you use multiple proxy IPs, a high request volume can still cause the same IP to appear over and over again. On sensitive websites, this behavior can quickly raise an alarm and get you blocked.

4. Anti-crawling mechanisms are getting smarter

Today's anti-crawling mechanisms are no longer simple; they are getting "smarter". Websites monitor the frequency and pattern of visits from each IP to identify crawler behavior. Even behind proxies, IPs that repeat often are easily recognized. As a result, you have to keep switching to more IPs, which complicates the problem further.

How to solve the problem of high proxy IP duplication?

Next, let's look at how to reduce IP duplication so you can make better use of proxy IPs in crawler development.

1. Choose a reliable proxy IP service provider

Free proxies are tempting, but their quality often can't keep up. If you want more stable and reliable proxy IPs, it is best to go with a paid provider. Paid providers usually have a large pool of high-quality IPs and can ensure that those IPs are not heavily reused. For example, IPs from real home networks (residential IPs) are less likely to be blocked than other types of IPs.

2. Rationalization of IP allocation and rotation

3. Regular IP monitoring and updating

Even the best IPs can be blocked over time, so you need to monitor your proxy IPs regularly. Once you find that a certain IP is being reused too often, or that requests through it have started to fail, replace it promptly to keep data collection continuous and efficient.
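One simple way to put a number on this is to compute the duplication rate from a log of the IPs your requests actually went out on. The definition used here (one minus the share of distinct IPs) and the 20% threshold are assumptions for illustration, not a standard metric.

```python
from collections import Counter


def duplication_rate(used_ips):
    """Share of requests that reused an IP already present in the log."""
    if not used_ips:
        return 0.0
    return 1 - len(set(used_ips)) / len(used_ips)


def overused(used_ips, max_share=0.2):
    """IPs that account for more than `max_share` of all logged requests."""
    counts = Counter(used_ips)
    total = len(used_ips)
    return sorted(ip for ip, n in counts.items() if n / total > max_share)
```

Running both over a sliding window of recent requests gives an early signal for which IPs to retire before they are blocked.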

4. Use a proxy IP pool

To avoid excessive proxy IP duplication, you can also build a proxy IP pool. A proxy IP pool works like an automation tool: it manages a large number of IPs for you and checks their availability on a regular basis. By automating pool management, you can obtain high-quality proxies more easily and keep your IP resources diverse and stable.
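A very small pool along these lines might look like the sketch below. The class name, the least-used selection rule, and the failure threshold are all illustrative choices; a production pool would also refill itself from a provider and run periodic health checks.

```python
import random


class ProxyPool:
    """Hand out the least-used proxy and drop proxies that keep failing."""

    def __init__(self, proxies, max_failures=3):
        self.max_failures = max_failures
        self._uses = {p: 0 for p in proxies}
        self._failures = {p: 0 for p in proxies}

    def get(self):
        """Return one of the proxies with the fewest uses so far."""
        if not self._uses:
            raise LookupError("proxy pool is empty")
        fewest = min(self._uses.values())
        candidates = [p for p, n in self._uses.items() if n == fewest]
        choice = random.choice(candidates)  # random tie-break spreads the load
        self._uses[choice] += 1
        return choice

    def report(self, proxy, ok):
        """Record a request outcome; evict after `max_failures` failures in a row."""
        if proxy not in self._uses:
            return
        if ok:
            self._failures[proxy] = 0
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures:
            del self._uses[proxy]
            del self._failures[proxy]

    def __len__(self):
        return len(self._uses)
```

Picking the least-used proxy keeps any single IP from repeating more than necessary, which directly targets the duplication problem discussed above.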

How to further optimize crawlers that use proxy IPs?

You're probably wondering what else you can do to optimize a crawler beyond these routine measures. Here are some useful tips:

Optimize keyword strategy: Use proxy IPs to simulate search behavior in different regions, and adjust your keyword strategy promptly as each market changes.

Measure global page speed: Use proxy IPs to test page loading speed from different regions of the world and optimize the user experience.

Adjust strategy flexibly: Analyze the data gathered through proxy IPs to understand network conditions in different regions, then adjust your strategy to improve data collection efficiency.

Conclusion

A high proxy IP duplication rate does create challenges for crawler development, but these problems are solvable with the right strategy. By choosing a high-quality proxy IP service provider, using proxy IPs wisely, monitoring IP status regularly, and maintaining a managed IP pool, you can greatly reduce the duplication rate and make your crawler project more efficient and stable.


Text

Common Mistakes Businesses Make with Google Analytics and How an Agency Can Help

Google Analytics is a powerful tool for tracking and analyzing website performance, but leveraging its full potential can be tricky without the right expertise. Many businesses, especially those new to digital analytics, make common mistakes that lead to inaccurate data and missed opportunities. Partnering with a Google Analytics agency can help overcome these challenges, ensuring accurate insights and improved decision-making. Let’s dive into the most frequent errors businesses make and how an agency can provide the expertise needed to resolve them.

Common Mistakes Businesses Make with Google Analytics

1. Improper Tracking Code Installation

A common error is the incorrect placement of the Google Analytics tracking code. Missing or duplicate tags can cause incomplete data collection, leading to skewed analytics that affect key business decisions.

2. Failure to Set Up Goals and Conversions

Many businesses overlook setting up goals, such as form submissions, purchases, or downloads. Without defined goals, tracking the success of marketing efforts becomes impossible.

3. Ignoring UTM Parameters

UTM parameters are essential for tracking the performance of specific campaigns. Businesses often neglect to implement or standardize them, resulting in disorganized data that makes it difficult to assess campaign success.

4. Misconfigured Filters

Filters help exclude irrelevant data, like internal traffic or spam referrals. However, poorly configured filters can either block valid data or allow unwanted data, compromising the accuracy of reports.

5. Underutilization of Enhanced Ecommerce Tracking

For ecommerce businesses, failing to leverage enhanced ecommerce tracking means missing out on valuable insights, such as product performance, cart abandonment rates, and customer behavior.

6. Relying Solely on Default Dashboards

Many businesses stick to default reports instead of creating customized dashboards tailored to their specific KPIs. This limits their ability to derive actionable insights from the data.

7. Inconsistent Data Sampling

Data sampling, which occurs when analyzing large datasets, can lead to incomplete insights. Businesses unaware of this limitation often make decisions based on inaccurate or partial data.

8. Neglecting Cross-Domain and Subdomain Tracking

For businesses operating multiple domains or subdomains, failing to implement cross-domain tracking can result in fragmented data and an incomplete picture of user behavior.

9. Overlooking Mobile and Device Performance

With the growing importance of mobile users, focusing solely on desktop data can lead to missed opportunities to optimize mobile experiences and capture valuable customer segments.

10. Non-Compliance with Privacy Regulations

Neglecting privacy regulations, such as GDPR and CCPA, can result in hefty penalties. Many businesses fail to anonymize IP addresses or manage cookie consent properly, risking compliance violations.

How a Google Analytics Agency Can Help

1. Accurate Setup and Implementation

A Google Analytics agency ensures the tracking code is installed correctly, avoiding errors like duplicate or missing tags. They also integrate tools like Google Tag Manager for efficient data collection.

2. Goal and Conversion Optimization

Agencies work with businesses to define relevant goals and conversions that align with their objectives, providing actionable insights into campaign performance and ROI.

3. Streamlined Campaign Tracking

By standardizing UTM parameters, agencies help organize and track campaign data effectively, enabling businesses to analyze traffic sources and measure marketing success accurately.
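Standardizing UTM parameters usually comes down to generating every campaign link through one helper instead of typing tags by hand. The sketch below assumes a lowercase, underscore-separated naming convention; the function name and the convention itself are illustrative, not a Google Analytics requirement.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url, source, medium, campaign):
    """Append lowercase, underscore-separated UTM parameters to `url`."""
    def clean(value):
        return "_".join(value.strip().lower().split())

    parts = urlsplit(url)
    # Keep existing query parameters but drop any earlier utm_* tags.
    query = [(k, v) for k, v in parse_qsl(parts.query) if not k.startswith("utm_")]
    query += [
        ("utm_source", clean(source)),
        ("utm_medium", clean(medium)),
        ("utm_campaign", clean(campaign)),
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))
```

With one helper in place, "Email", "email", and " EMAIL " all end up as the same medium in reports rather than three separate rows.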

4. Proper Configuration of Filters

Agencies configure filters to exclude irrelevant data while preserving valuable insights. This ensures clean, reliable data for decision-making.

5. Advanced Feature Utilization

An agency implements advanced features, such as enhanced ecommerce tracking and audience segmentation, to unlock deeper insights and improve campaign targeting.

6. Customized Dashboards and Reports

Instead of relying on generic reports, agencies create tailored dashboards aligned with a business’s KPIs, simplifying data interpretation and enabling quicker, informed decisions.

7. Addressing Data Sampling Issues

Agencies minimize data sampling by using segmented views or integrating Google Analytics with BigQuery for large-scale data analysis without sampling limitations.

8. Cross-Device and Cross-Channel Insights

Agencies help businesses track user behavior across devices and channels, providing a comprehensive view of the customer journey and identifying areas for improvement.

9. Privacy Compliance

By configuring Google Analytics to anonymize data and integrating cookie consent tools, agencies ensure compliance with privacy regulations, safeguarding businesses from legal risks.
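To make the idea of anonymizing IP addresses concrete, one common approach is truncation: discard the host portion of the address before it is stored. The sketch below assumes a scheme that zeroes the last octet of an IPv4 address and the last 80 bits of an IPv6 address; the function name is hypothetical, and the exact behavior of any given analytics product should be checked in its documentation.

```python
import ipaddress


def anonymize_ip(address):
    """Zero the low-order bits of an IP address (assumed truncation scheme)."""
    ip = ipaddress.ip_address(address)
    # Keep the first 24 bits for IPv4 and the first 48 bits for IPv6.
    prefix = 24 if ip.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)
```

The truncated address still supports coarse geographic reporting while no longer identifying a single device.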

10. Expert Analysis and Recommendations

A Google Analytics agency goes beyond data collection, interpreting metrics to provide actionable insights, optimize campaigns, and improve overall business performance.

Conclusion

Google Analytics is a powerful tool, but its effectiveness depends on correct setup and usage. Common mistakes like improper tracking, lack of goal setting, and underutilized features can hinder a business’s ability to make data-driven decisions. A Google Analytics agency brings the expertise needed to address these issues, providing accurate insights and strategies to maximize performance. By partnering with an agency, businesses can unlock the full potential of their analytics and drive meaningful growth.


Text

Real-Time Facial Recognition for Quick Verification | ARGOS Identity

ARGOS Identity offers advanced real-time facial recognition technology that ensures the security and privacy of personal information. Their solution provides a highly accurate and reliable method for verifying identity, protecting sensitive data, and enhancing overall security.

Key Benefits of ARGOS Facial Recognition:

- Enhanced Security: Protect against unauthorized access and identity theft with robust facial recognition technology.

- Improved Efficiency: Streamline processes by automating identity verification, reducing manual effort and errors.

- User Convenience: Provide a seamless and user-friendly experience for individuals and customers.

- Scalability: Handle large volumes of transactions and users efficiently, ensuring scalability for growing businesses.

- Integration Flexibility: Easily integrate with existing systems and platforms for seamless implementation.

How to use ARGOS Face ID efficiently:

When users create multiple accounts, it becomes difficult to obtain accurate user counts and data, service reliability drops, increased server load strains resources, and quality declines. For these reasons, many companies have tried to block users from creating multiple accounts, but they run into limitations.

First, from a company's perspective, increasing the number of service users is important as it is linked to sales. If the creation of multiple accounts is restricted, it may create a hurdle for service users and increase the churn rate.

On the technical side, as security advances, so do the ways to circumvent it, such as using a VPN or proxy server to change an IP address, or creating an account with a virtual phone number, address, or email.

Therefore, companies are limited by a lack of resources: they cannot keep spending additional time and money on blocking multiple accounts. With ARGOS, any company interested in preventing multiple account users can easily deploy advanced facial recognition capabilities for user authentication and duplicate detection. This will be helpful to companies that are concerned about multiple account creation and to companies that want to expand their business.

ARGOS is committed to providing innovative and reliable facial recognition solutions that meet the highest security standards. Their technology is designed to protect privacy and ensure a secure and convenient user experience.

If you are looking for a facial recognition verification solution, you can find one at ARGOS.

Click here if you are interested in ARGOS Identity products.

View more: Real-Time Facial Recognition for Quick Verification


Optimizing Web Crawl: Tips to Easily Improve Crawl Efficiency

Let's talk about a practical topic today - how to optimize web crawling. Whether you are a data scientist, a crawler developer, or a general web user interested in web data, I believe this article can help you.

I. Define the goal, planning first

Before you start crawling web pages, the most important step is to clarify your crawling goals. Which website's data do you want to crawl? Which fields do you need? How often should you crawl? Think all of these questions through first. With a clear goal, you can draw up a reasonable crawl plan and avoid the waste of resources caused by blind crawling.

II. Choose the right tools and frameworks

The next step is to choose a suitable web crawling tool and framework. There are many excellent options on the market, such as Python's Scrapy and BeautifulSoup, or Cheerio for Node.js. Choosing a tool and framework that suits you can greatly improve crawling efficiency.

III. Optimize the crawling strategy

Optimizing the crawling strategy is the key to improving crawling efficiency. Here are some practical suggestions:

- Concurrent crawling: Multi-threaded or asynchronous requests can significantly improve crawling speed. Keep the level of concurrency under control, though, to avoid putting excessive pressure on the target website.

- De-duplication mechanism: During crawling you will inevitably encounter duplicate data, so an effective de-duplication mechanism is crucial. Data structures such as hash tables and Bloom filters can be used to implement it.

- Intelligent waiting: For websites that require login or captcha verification, well-chosen waits keep the total waiting time in the crawl low. For example, after a successful login, wait a few seconds before proceeding to the next step rather than pausing for a long fixed interval.

- Exception handling: Various exceptions may occur during crawling, such as network timeouts and page loading failures. A thorough exception handling mechanism keeps the crawling process stable and reliable.
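The suggestions above can be sketched together in a few lines of Python. This is a minimal illustration, not a production crawler: `crawl`, `url_key`, and the `fetch` parameter are hypothetical names, and `fetch` stands for whatever coroutine you use to download a page. A semaphore caps concurrency, a set (a hash table) handles de-duplication, and failed requests are retried with a backoff before being recorded as failures.

```python
import asyncio
from urllib.parse import urldefrag


def url_key(url: str) -> str:
    """Normalize a URL for de-duplication: trim whitespace, drop the #fragment."""
    return urldefrag(url.strip()).url


async def crawl(urls, fetch, max_concurrency=5, retries=2, backoff=0.5, timeout=10.0):
    """Fetch each distinct URL once, with bounded concurrency and retries.

    `fetch` is any coroutine function that takes a URL and returns the page body
    (an assumption of this sketch). Returns (results, failures), keyed by URL.
    """
    semaphore = asyncio.Semaphore(max_concurrency)  # caps in-flight requests
    seen = set()  # keys of URLs that were already scheduled
    results = {}
    failures = {}

    async def worker(url):
        async with semaphore:
            for attempt in range(retries + 1):
                try:
                    results[url] = await asyncio.wait_for(fetch(url), timeout)
                    return
                except Exception as exc:  # timeouts, connection errors, bad pages
                    if attempt == retries:
                        failures[url] = repr(exc)
                    else:
                        # Exponential backoff before the next attempt.
                        await asyncio.sleep(backoff * 2 ** attempt)

    tasks = []
    for url in urls:
        key = url_key(url)
        if key in seen:
            continue  # duplicate: skip instead of fetching again
        seen.add(key)
        tasks.append(worker(url))
    await asyncio.gather(*tasks)
    return results, failures
```

For very large crawls, the in-memory set could be swapped for a Bloom filter to save memory, at the cost of occasionally skipping a URL that was never actually fetched.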

IV. Set a reasonable crawling frequency

Crawling frequency also needs attention. Crawling too frequently may put pressure on the target site and can even get your IP blocked. When setting the crawl frequency, take into account both the load capacity of the target site and your own crawling needs. Analyzing how often the target site updates is a good starting point for choosing a reasonable frequency.
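One simple way to enforce a crawl frequency is a per-host minimum interval between requests. The sketch below is an illustration under that assumption; `HostThrottle` is a hypothetical helper, and the right interval depends on the target site. The clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time
from urllib.parse import urlsplit


class HostThrottle:
    """Enforce a minimum number of seconds between requests to the same host."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = {}  # host -> earliest time the next request may start

    def wait(self, url):
        """Block until a request to this URL's host is allowed; return the delay."""
        host = urlsplit(url).netloc
        now = self._clock()
        ready_at = self._next_allowed.get(host, now)
        delay = max(0.0, ready_at - now)
        if delay:
            self._sleep(delay)
        # Schedule the next slot relative to when this request actually starts.
        self._next_allowed[host] = max(now, ready_at) + self.min_interval
        return delay
```

Calling `throttle.wait(url)` before each request keeps different hosts independent of each other, so a slow interval for one site does not hold up the rest of the crawl.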

V. Regular Maintenance and Updates

Finally, don't forget to maintain and update your crawling system regularly. As target websites change and your crawling needs shift, you may need to keep optimizing your crawling strategy and code. Regularly checking and updating your crawling system helps it keep performing well and remain stable.

By using leading proxy IP services such as 711Proxy, companies can maintain the stability and accuracy of data flow when performing web crawling, improving the effectiveness of analysis and the precision of decision-making.


8 Strategies to Protect Online Survey Results from AI Manipulation

Introduction

With the rapid advancement of artificial intelligence (AI) and machine learning, the reliability of online survey results is increasingly under threat. AI-driven bots can easily manipulate survey data, leading to inaccurate insights and flawed business decisions. For primary market research companies like Philomath Research, ensuring the authenticity of survey responses is crucial to maintaining data integrity and credibility. In this blog, we will explore eight effective ways to safeguard online survey results from AI manipulation, ensuring accurate and valuable insights for your business.

1. Implement CAPTCHA and reCAPTCHA Mechanisms

One of the most effective ways to protect your online survey results from AI bots is by implementing CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) and its more advanced version, reCAPTCHA. These tools create challenges that are easy for humans but difficult for AI bots to solve, such as identifying objects in images, clicking on specific areas, or solving puzzles.

How It Works: CAPTCHA relies on visual or audio puzzles to differentiate between humans and AI bots. By including these challenges at the start and end of surveys, you can significantly reduce the number of automated submissions.

Benefits: Reduces fraudulent entries, improves data accuracy, and is easy to implement.

Challenges: Some CAPTCHA solutions can be bypassed by advanced AI models, so regularly updating and using more sophisticated versions like reCAPTCHA is important.

2. Utilize Honeypot Fields

Honeypot fields are an effective way to trap AI bots that automatically fill out survey forms. This method involves adding invisible fields to the survey form that humans cannot see but bots will attempt to fill out.

How It Works: By adding a hidden field within the survey that is not visible to users, any input into this field can automatically flag the entry as a bot submission.

Benefits: Simple and cost-effective, does not affect user experience, and easy to implement.

Challenges: Honeypot fields may not be foolproof against highly advanced AI, requiring additional layers of security.
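On the server side, the honeypot check can be as small as the sketch below. The field name `website` is a hypothetical choice for the hidden input, and submissions are assumed to arrive as plain dictionaries of form values; any non-empty value in the hidden field marks the entry as a likely bot.

```python
HONEYPOT_FIELD = "website"  # hypothetical name of the hidden form field


def is_bot_submission(form: dict) -> bool:
    """Return True when the hidden honeypot field contains any input."""
    return bool(str(form.get(HONEYPOT_FIELD) or "").strip())


def split_submissions(forms):
    """Separate submissions into (kept, flagged) lists using the honeypot check."""
    kept, flagged = [], []
    for form in forms:
        (flagged if is_bot_submission(form) else kept).append(form)
    return kept, flagged
```

The hidden field still has to be concealed from human respondents in the form itself (for example with CSS), which this sketch does not cover.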

3. Employ Advanced User Authentication Methods

Advanced user authentication methods, such as two-factor authentication (2FA) and single-use tokens, can help ensure that only legitimate users participate in your surveys.

How It Works: Before accessing the survey, participants are required to verify their identity through email, SMS, or mobile authentication apps.

Benefits: Enhances security by ensuring that respondents are legitimate, and reduces the likelihood of repeated responses from the same individual.

Challenges: Adds a step to the survey-taking process, which may reduce completion rates. However, the trade-off for data integrity is often worth it.
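As an illustration of single-use tokens, the sketch below issues one random token per invited respondent and accepts it only once. `InviteTokens` is a hypothetical in-memory helper; a real deployment would keep the token digests in a database and deliver each token by email or SMS.

```python
import hashlib
import secrets


class InviteTokens:
    """Issue single-use survey tokens and redeem each one at most once."""

    def __init__(self):
        self._unused = {}  # sha256(token) -> respondent id

    @staticmethod
    def _digest(token: str) -> str:
        # Store only a hash so a leaked table does not reveal usable tokens.
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, respondent_id: str) -> str:
        """Create an unguessable token for one respondent."""
        token = secrets.token_urlsafe(32)
        self._unused[self._digest(token)] = respondent_id
        return token

    def redeem(self, token: str):
        """Return the respondent id on first use, or None if invalid or reused."""
        return self._unused.pop(self._digest(token), None)
```

Because `redeem` removes the token as it validates it, a second submission with the same link is rejected, which is what limits repeated responses from the same individual.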

4. Monitor and Analyze Survey Response Patterns

AI bots typically display patterns that differ from human behavior, such as completing surveys too quickly or selecting random responses. By monitoring response times and analyzing answer patterns, you can detect and filter out suspicious entries.

How It Works: Use survey software analytics tools to track response times, detect irregular patterns, and identify duplicate IP addresses or devices.

Benefits: Allows for continuous monitoring, provides insights into potential bot activity, and helps refine survey security measures.

Challenges: Requires sophisticated tools and expertise to implement effective monitoring and analysis.
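A rule-based version of this monitoring can be sketched as follows. The record layout (`id`, `ip`, `seconds`, `answers`) and the thresholds are assumptions for illustration, and the check for identical answers throughout is one example of an irregular pattern rather than something prescribed here.

```python
from collections import Counter


def flag_responses(responses, min_seconds=60, max_per_ip=1, min_answers=4):
    """Return {response id: [reasons]} for responses that look automated.

    Each response is a dict with hypothetical keys: "id", "ip",
    "seconds" (completion time) and "answers" (a list of chosen options).
    """
    ip_counts = Counter(r["ip"] for r in responses)
    flags = {}
    for r in responses:
        reasons = []
        if r["seconds"] < min_seconds:
            reasons.append("too_fast")
        if ip_counts[r["ip"]] > max_per_ip:
            reasons.append("duplicate_ip")
        answers = r["answers"]
        if len(answers) >= min_answers and len(set(answers)) == 1:
            reasons.append("identical_answers")
        if reasons:
            flags[r["id"]] = reasons
    return flags
```

Flagged entries are best reviewed rather than deleted automatically, since shared networks can produce duplicate IP addresses for legitimate respondents.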

5. Leverage Machine Learning to Detect Anomalies

Leveraging machine learning algorithms to detect anomalies in survey responses is a powerful way to identify AI manipulation. Machine learning models can be trained to recognize patterns indicative of bot activity.

How It Works: Machine learning algorithms analyze large datasets to identify outliers and patterns inconsistent with typical human behavior.

Benefits: Can handle large datasets, improves accuracy over time, and reduces manual oversight.

Challenges: Requires expertise in machine learning and access to extensive datasets for training purposes.
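Before investing in a trained model, a simple statistical baseline can already surface obvious outliers. The sketch below is not machine learning: it uses the median absolute deviation (MAD) to compute a modified z-score for one numeric feature such as completion time. The function name and the 3.5 threshold are assumptions chosen for illustration.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold.

    The modified z-score is 0.6745 * (x - median) / MAD, where MAD is the
    median of absolute deviations from the median.
    """
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        return []  # no spread to measure against; nothing can be scored
    return [
        i for i, dev in enumerate(deviations)
        if 0.6745 * dev / mad > threshold
    ]
```

A trained anomaly detection model would look at many features at once, but a baseline like this gives a reference point for judging whether the extra complexity pays off.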

6. Conduct Regular Data Audits

Regular data audits are essential to ensure the integrity of your survey results. Data audits involve cross-checking and verifying the authenticity of survey responses through various techniques, such as comparing IP addresses, analyzing response times, and checking for consistency in answers.

How It Works: Establish a regular schedule for reviewing and validating survey data to identify and eliminate fraudulent entries.

Benefits: Improves data accuracy, identifies potential vulnerabilities, and enhances trust in survey results.

Challenges: Time-consuming and may require dedicated resources to conduct thorough audits regularly.

7. Use Randomized Questionnaires and Question Order

Randomizing the order of questions or providing multiple versions of the same question can make it more difficult for AI bots to provide consistent responses, thereby reducing the risk of manipulation.

How It Works: By varying the order of questions or offering different phrasing for the same question, it becomes harder for bots programmed to recognize specific patterns to manipulate the survey.

Benefits: Improves data reliability, reduces predictability for bots, and enhances respondent engagement.

Challenges: Randomization may confuse some human respondents, especially if the survey is lengthy or complex.
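Question order can be randomized per respondent while still keeping the data analyzable. In the sketch below, the function names and the seed format are hypothetical; seeding the generator with the respondent's id makes the order reproducible, so a respondent who reloads the page sees the same sequence, and answers can be mapped back to the original question order.

```python
import random


def question_order(question_ids, respondent_id, survey_seed="survey-v1"):
    """Return a shuffled copy of question_ids that is stable per respondent."""
    rng = random.Random(f"{survey_seed}:{respondent_id}")
    order = list(question_ids)
    rng.shuffle(order)
    return order


def to_canonical(order, answers, question_ids):
    """Re-sort answers given in the shown order back into the original order."""
    by_question = dict(zip(order, answers))
    return [by_question[q] for q in question_ids]
```

Storing answers in the canonical order keeps downstream analysis unaffected by the randomization.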

8. Incorporate Validation Questions and Logic Traps

Validation questions and logic traps can help identify automated responses by checking for inconsistencies in answers. These questions are designed to be answered correctly by humans but are challenging for AI bots to navigate.

How It Works: Insert questions with logical sequences or include straightforward validation checks that confirm a respondent’s attention and understanding.

Benefits: Increases the likelihood of detecting bots, improves data quality, and provides a better understanding of genuine respondent behavior.

Challenges: Too many validation questions can frustrate human respondents, so balance is key.
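Two common checks of this kind are an attention question with one instructed answer and a pair of questions that must agree with each other. The sketch below assumes hypothetical field names (`attention_check`, `age`, `birth_year`) and an instructed answer of "strongly disagree"; it returns the names of the checks a response fails.

```python
def failed_checks(response, current_year, expected_attention="strongly disagree"):
    """Return the names of validation checks that a response fails."""
    failures = []

    # Attention check: the question tells respondents which option to pick.
    if response.get("attention_check") != expected_attention:
        failures.append("attention_check")

    # Logic trap: stated age and birth year should agree within one year.
    age = response.get("age")
    birth_year = response.get("birth_year")
    if age is not None and birth_year is not None:
        if abs((current_year - birth_year) - age) > 1:
            failures.append("age_vs_birth_year")

    return failures
```

Keeping the result as a list of failed checks, rather than a single pass or fail flag, makes it easier to see which validation questions are catching bots and which mostly trip up genuine respondents.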

Conclusion

Safeguarding your online survey results from AI manipulation is crucial to ensuring the integrity of your data and the quality of insights derived from it. By implementing a combination of CAPTCHA mechanisms, honeypot fields, advanced user authentication methods, and anomaly detection through machine learning, you can significantly reduce the risk of fraudulent entries. Regular data audits, randomized questionnaires, and validation questions further enhance data reliability.

For primary market research companies like Philomath Research, protecting survey data from AI manipulation is not just about technology but also about building trust with your clients and respondents. By staying proactive and vigilant, you can ensure that your surveys provide valuable and actionable insights, free from the influence of AI-driven manipulation.