#Independent audio visualizer software


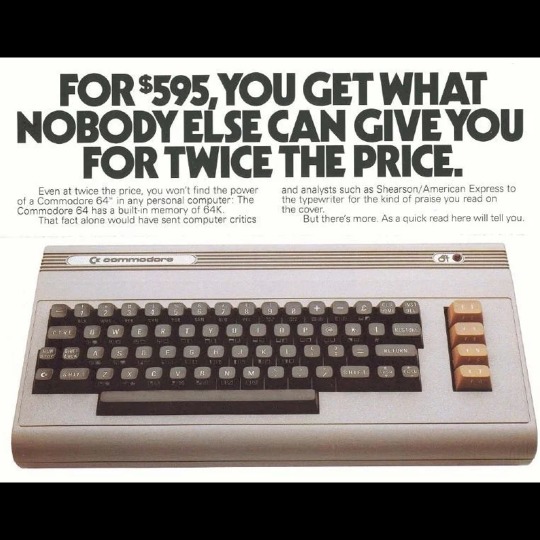

🎄💾🗓️ Day 18: Retrocomputing Advent Calendar - Commodore 64🎄💾🗓️

The Commodore 64, released in 1982, is the machine we keep hearing gave many people their start in computing. Powered by a MOS Technology 6510 processor at about 1.02 MHz (NTSC) and featuring 64 KB of RAM, it became the best-selling single computer model of all time, with an estimated 12.5–17 million units sold. Its graphics were driven by the VIC-II chip, capable of 16 colors, hardware sprites, and smooth scrolling, while the SID (Sound Interface Device) chip delivered advanced audio, supporting three voices with selectable waveforms and filters, making it a lot of fun for gaming and music.

It featured a built-in BASIC interpreter, allowing users to write their own programs out of the box. The C64’s affordability and large software library, spanning games, productivity tools, and educational applications, made it a household name. It connected to TVs as monitors and supported peripherals like the 1541 floppy disk drive, datasette, and various joysticks. With over 10,000 commercial software titles and a thriving homebrew scene, the C64 helped define a generation of computer enthusiasts.

Its impact on gaming was gigantic, with iconic titles like The Last Ninja, Maniac Mansion, and Impossible Mission. The C64 also inspired a demoscene, where programmers pushed its hardware for visual and audio effects. The Commodore 64 remains a symbol of computing for the masses and creative innovation, still loved by retrocomputing fans today.

Check out the National Museum of American History, and Wikipedia. https://americanhistory.si.edu/collections/object/nmah_334636 https://en.wikipedia.org/wiki/Commodore_64

And…! An excellent story from Jepler -

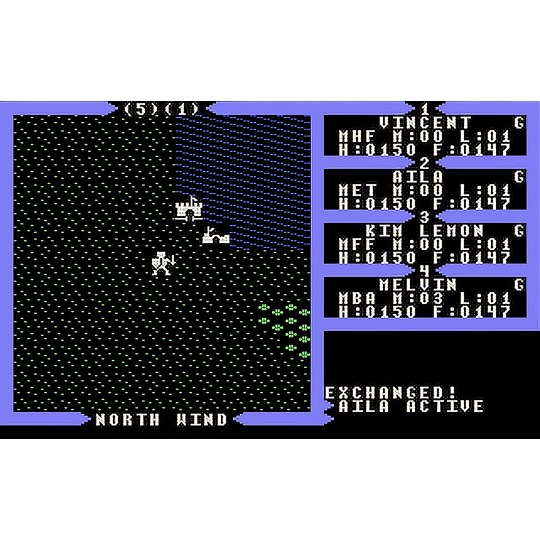

While I started on the VIC 20, the Commodore 64 was my computer for a lot longer. Its SID sound chip was a headline feature, and many of my memories of it center around music. Starting with Ultima III, each game in the series had a different soundtrack for each environment (though each one was on a pretty short loop; it probably drove my folks nuts when I would play for hours). There were music editors floating around, so I tried my hand at arranging music for its 3 independent voices, though I can't say I was any good or that I have any of the music now. You could also download "SID tunes" on the local BBSes, where people with hopefully a bit more skill had arranged everything from classical to Beatles to 80s music.

Folks are still creating cool new music on the Commodore 64. One current creator that I like a great deal is Linus Åkesson. Two videos from 2024 using the Commodore 64 that really impressed me were "Making 8-bit Music From Scratch at the Commodore 64 BASIC Prompt", a live coding session (http://www.linusakesson.net/programming/music-from-scratch/index.php), and Bach Forever (http://www.linusakesson.net/scene/bach-forever/index.php), a piece played by Åkesson on two Commodore 64s.

Like so many things, you can also recreate the experience online. Here's the overworld music for Ultima III: https://deepsid.chordian.net/?file=/MUSICIANS/A/Arnold_Kenneth/Ultima_III-Exodus.sid&subtune=1 -- the site has hundreds or thousands of other SIDs available to play right in the browser.

Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#commodore64 #retrocomputing #vintagecomputing #computermuseum #classicgames #retrogaming #1980snostalgia #mos6510 #vicii #sidchip #gaminghistory #computerhistory #personalcomputing #programming #8bitgaming #demoscene #computerscience #classiccomputers #homecomputing #nostalgiamachine #oldschoolgaming #historicaltech #technostalgia #c64games #gaminglegends #codinghistory #earlycomputers #floppydisk #techmuseum #retrotech


I have a question about screen readers...

Do you like them? Would you prefer to listen to some things (e.g. blogs) read by a person instead of software? Or does it not matter to you? Or is there already another option out there that I'm not aware of?

The reason I'm asking is that I listen to a lot of audio books and that got me thinking about people accessing fanfiction, blogs, Tumblr posts, etc.

Thanks for the question! I actually love my screen reader. It gives me agency and independence.

I can’t speak for anyone else, but I personally prefer screen readers over audiobooks. I am not just passively listening, I am in control of the voice, speed, pronunciation, and much more.

I am not just listening top to bottom. I can jump around, navigate to paragraphs or headings, repeat things or have them spelled, etc.

An audiobook read by a real person will always have something of them in there. It’s in the inflection, the emotion, the emphasis, the way they’re doing voices for different people. A lot of people enjoy it or even see it as the main selling point of an audiobook, but I prefer the neutrality of my screen reader. I want to infuse my own meaning into the book and not get it from someone else.

But this is completely my personal opinion. Everybody is different. There are also screen readers and voices that imitate human emotion a lot more than mine. I prefer the more robotic ones.

Another thing I prefer is the incredible speed you can listen at with a robotic voice. I also listen to YouTube at 1.75x speed, but 2x can become difficult depending on the speaker. It’s easier with a synthetic voice due to its perfect and consistent pronunciation.

For me, my screen reader is truly as if I’m reading on my own. Because I am. Using a screen reader is reading. It’s just a different way of accessing a text for people with (visual) disabilities.

Audiobooks are also a way of reading and they’re super important for accessibility for a lot of people, and I would never discount that. However, for me, my screen reader feels a lot closer to reading the way I used to back when I sight-read.

It’s a matter of taste in the end, but I love my screen reader. For me, it’s not just a stopgap measure, it’s the real deal.


The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
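The latency trade-off mentioned above can be made concrete with a little arithmetic. The following is a minimal sketch, not a measurement of any specific controller or driver: it assumes latency is dominated by the audio buffer size and sample rate, and the function names and the `extra_ms` parameter (a stand-in for USB and driver overhead) are illustrative.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Time needed to fill (or play out) one audio buffer, in milliseconds."""
    if buffer_frames <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return 1000.0 * buffer_frames / sample_rate_hz


def round_trip_latency_ms(buffer_frames: int, sample_rate_hz: int,
                          extra_ms: float = 0.0) -> float:
    """Rough round trip: one input buffer plus one output buffer.

    extra_ms is a hypothetical allowance for USB transfer and driver
    overhead; real values depend on the interface and must be measured.
    """
    return 2 * buffer_latency_ms(buffer_frames, sample_rate_hz) + extra_ms


if __name__ == "__main__":
    # Compare common buffer sizes at a 48 kHz sample rate.
    for frames in (64, 128, 256, 512):
        print(frames, "frames:", round(buffer_latency_ms(frames, 48_000), 2), "ms")
```

At 48 kHz, a 256-frame buffer accounts for about 5.3 ms on its own, which is why driver configuration and buffer size became routine tuning tasks once laptops entered the signal chain.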

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.
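To illustrate what "high-resolution" means for a pitch fader, here is a hedged sketch that decodes a standard MIDI pitch-bend message, which carries a 14-bit value split across two 7-bit data bytes. Whether a given controller reports its pitch fader this way is an assumption; some devices use paired control-change messages or HID reports instead. The function name is illustrative.

```python
def decode_pitch_bend(status: int, lsb: int, msb: int) -> tuple[int, float]:
    """Decode a MIDI pitch-bend message into (channel, normalized value).

    The 14-bit value ranges from 0 to 16383 with 8192 as the center,
    so the normalized result runs from -1.0 to just under +1.0.
    """
    if status & 0xF0 != 0xE0:
        raise ValueError("not a pitch-bend status byte")
    if not (0 <= lsb <= 0x7F and 0 <= msb <= 0x7F):
        raise ValueError("data bytes must be 7-bit values")
    channel = status & 0x0F
    raw = (msb << 7) | lsb            # combine two 7-bit bytes into 14 bits
    normalized = (raw - 8192) / 8192  # center the range around zero
    return channel, normalized


if __name__ == "__main__":
    print(decode_pitch_bend(0xE0, 0x00, 0x40))  # fader at center
    print(decode_pitch_bend(0xE0, 0x7F, 0x7F))  # fader at maximum
```

A 14-bit message offers 16,384 steps instead of the 128 steps of a single 7-bit controller value, which is the difference that matters for fine tempo adjustment.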

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX, Denon Prime 4, and RANE ONE revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.


Immersive Audio Experiences: How 3D Audio Is Changing Event Design

Introduction: Events Are No Longer Just Visual

Think back to the last big event you attended — maybe a concert, a corporate presentation, or even a museum exhibit. Chances are, the audio played a bigger role than you realized. Today, sound isn’t just about amplifying voices or music. It’s becoming a multi-dimensional experience, thanks to a technology called 3D audio.

In a world where event organizers are constantly competing to capture attention, immersive audio experiences are quickly becoming a game-changer. It’s not just about hearing sound — it’s about feeling like you’re inside the sound itself.

What Is 3D Audio?

At its core, 3D audio simulates how we naturally hear sound in the real world. Normally, our ears detect sound from all directions — front, back, above, below, and everywhere in between. Traditional sound systems — like stereo or surround sound — mostly project audio from just a few fixed directions (usually left and right, or front and rear).

3D audio takes it further, creating an audio bubble where sound moves around you just like it would in real life. Imagine sitting at a nature-themed event where you hear birds fluttering overhead, leaves rustling to your side, and water trickling behind you — all from carefully placed speakers. It feels natural and fully immersive.

Why Immersive Audio Matters in Event Design

In modern event design, atmosphere matters as much as content. Whether it’s a tech conference, music festival, or product launch, creating a memorable experience is the goal. Audio isn’t just about making sure people can hear — it’s about making them feel connected to the space.

3D audio helps events stand out by:

Enhancing storytelling — At an art installation or exhibit, immersive sound can pull visitors into the story, guiding them through different areas using directional audio cues.

Creating emotional impact — At concerts, sound that moves through the crowd feels way more exciting than static front-of-stage audio.

Controlling focus — In corporate presentations or educational events, speakers can direct attention to specific parts of a stage or screen by shifting audio emphasis.

Key Technologies Driving 3D Audio

1. Spatial Audio Processing

This software analyzes how sound should behave in a space, adjusting for listener position, speaker placement, and acoustics. It makes sure sounds come from the right place at the right time, even if listeners move around.

2. Object-Based Audio

Instead of mixing audio into fixed channels (like left or right speakers), object-based audio treats each sound as a separate “object” that can move independently through space. This is what allows a sound to smoothly move from one side of a room to the other.
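As a rough illustration of how a sound object can glide between speakers, here is a minimal constant-power panning sketch for just two speakers. This is a deliberate simplification: real object-based renderers place objects across many speakers in three dimensions, and the function name and the 0-to-1 position scale are assumptions made for the example.

```python
import math


def constant_power_gains(position: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for an object between two speakers.

    position is 0.0 at the left speaker and 1.0 at the right speaker.
    The squared gains always sum to 1, so perceived loudness stays
    roughly steady while the object moves.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    # Sweep an object from left to right in five steps.
    for step in range(5):
        pos = step / 4
        left, right = constant_power_gains(pos)
        print(f"pos={pos:.2f} left={left:.3f} right={right:.3f}")
```

Updating the position a little on every audio block is what produces the smooth side-to-side movement described above.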

3. Ambisonics and Binaural Audio

Ambisonics captures or encodes audio as a full-sphere sound field around a listener, covering every horizontal direction as well as height.

Binaural audio creates immersive experiences through headphones, by mimicking how human ears naturally hear sound from different directions.
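To make the Ambisonics idea more tangible, the sketch below encodes a mono sample into first-order Ambisonics using the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalization). It assumes azimuth is measured counterclockwise from straight ahead and elevation upward from the horizontal plane; the function name is illustrative, and production tools typically work at higher orders.

```python
import math


def encode_first_order(sample: float, azimuth_deg: float,
                       elevation_deg: float) -> tuple[float, float, float, float]:
    """Encode one mono sample into first-order AmbiX channels (W, Y, Z, X)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                              # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)  # left-right axis
    z = sample * math.sin(el)                 # up-down axis
    x = sample * math.cos(az) * math.cos(el)  # front-back axis
    return w, y, z, x


if __name__ == "__main__":
    print(encode_first_order(1.0, 0.0, 0.0))   # source straight ahead
    print(encode_first_order(1.0, 90.0, 0.0))  # source directly to the left
    print(encode_first_order(1.0, 0.0, 90.0))  # source directly overhead
```

The same four channels can later be decoded to a speaker layout or rendered binaurally for headphones, which is why the two techniques are often mentioned together.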

How 3D Audio Is Used in Different Events

Concerts and Music Festivals

Live music feels richer when sound isn’t just blasting from the stage, but surrounding the audience. With 3D audio, instruments and vocals can move through the crowd, matching the energy and mood of the performance.

Corporate Events

At product launches or conferences, spatial audio can direct focus to key speakers or product displays, guiding the audience’s attention without the need for visual cues.

Museums and Art Installations

Art and sound installations are some of the biggest adopters of 3D audio. Imagine walking through a virtual forest, with realistic environmental sounds guiding you through different sections of an exhibit.

Theme Parks and Attractions

Attractions use spatial soundscapes to enhance rides, walkthrough experiences, and even waiting areas, helping build anticipation and immersion.

Designing Events with 3D Audio in Mind

If you’re planning an event, choosing the right audio gear matters more than ever. Not all speakers and systems can handle 3D audio, so working with professional audio shops can make sure you get equipment designed for spatial sound, along with advice on optimal placement and software.

As technology keeps evolving, the line between sound and space is disappearing. 3D audio is no longer just for VR headsets or fancy theaters — it’s becoming a core part of event design. Whether you’re organizing a concert, a corporate event, or an interactive installation, immersive audio helps create unforgettable moments that leave audiences talking long after they’ve left.

#music#audiogears#audio#dj#audio shops#professional audio equipment#brooklyn#speakers#brooklyn audio shop#musician#pro audio


Revolutionizing Automation: Harnessing the Power of Multimodal AI

Introduction

In the rapidly evolving landscape of artificial intelligence, multimodal AI has emerged as a transformative force. By integrating diverse data types such as text, images, audio, and video, multimodal AI systems are revolutionizing industries from healthcare to e-commerce. This integration enables more holistic and intelligent automation solutions, offering unprecedented opportunities for innovation and growth.

Multimodal AI refers to artificial intelligence systems capable of processing and combining multiple types of data inputs to understand context more comprehensively and perform complex tasks more effectively. This capability is pivotal in creating personalized and efficient solutions across various sectors. For AI practitioners and software engineers seeking to excel in this space, engaging in Agentic AI courses for beginners can provide foundational knowledge crucial for mastering multimodal AI technologies.

Evolution of Agentic and Generative AI

Agentic AI involves autonomous agents that interact with their environment, making decisions based on multimodal inputs such as voice, text, and images. These agents excel in dynamic settings like healthcare, finance, and customer service, where contextual understanding is key. For example, virtual assistants powered by Agentic AI can interpret user intent across multiple input types, providing personalized and context-aware responses.

Generative AI focuses on creating new content, from realistic images to synthesized music. When combined with multimodal capabilities, Generative AI can produce rich multimedia content that is both engaging and interactive. This synergy is especially valuable in creative industries, where AI-driven innovation accelerates idea generation and content creation.

Agentic AI: The Rise of Autonomous Agents

Agentic AI systems act independently by leveraging continuous interaction with their environment. In multimodal AI, these autonomous agents process diverse inputs to make informed decisions, enhancing applications requiring nuanced human-like interaction. For those entering this domain, an Agentic AI course for beginners can lay the groundwork for understanding the design and deployment of such agents.

Generative AI: Creating New Content

Generative AI has revolutionized content creation by synthesizing novel data across multiple modalities. Integrating multimodal capabilities allows these systems to generate multimedia outputs that are not only visually compelling but contextually coherent. Professionals aiming to deepen their expertise can benefit from a Generative AI course with placement, which often includes hands-on projects involving multimodal data generation.

Latest Frameworks, Tools, and Deployment Strategies

Effectively deploying multimodal AI systems demands advanced frameworks capable of handling the complexity of integrating diverse data types. Recent trends include the rise of unified multimodal foundation models and the adoption of MLOps practices tailored for generative and agentic AI models.

Unified Multimodal Foundation Models

Leading models like OpenAI’s GPT-4 and Google’s Gemini exemplify unified architectures that process and generate multiple data modalities seamlessly. These models reduce the complexity of managing separate systems for each data type, improving efficiency and scalability across industries. They leverage contextual data across modalities to enhance performance, making them ideal for applications ranging from autonomous agents to generative content platforms.

MLOps for Generative Models

MLOps (Machine Learning Operations) is essential for managing AI model lifecycles, ensuring scalability, reliability, and compliance. In the generative AI context, MLOps includes continuous monitoring, updating models with fresh data, and enforcing ethical guidelines on generated content. Software engineers interested in this field should consider an AI programming course that covers MLOps pipelines and best practices for maintaining generative AI systems.

LLM Orchestration

Large Language Models (LLMs) play a pivotal role in multimodal AI systems. Orchestrating these models involves coordinating their operations across different data types and applications to ensure smooth integration and optimal performance. This orchestration requires sophisticated software engineering methodologies to maintain system reliability, a topic often explored in advanced AI programming courses.

Advanced Tactics for Scalable, Reliable AI Systems

Building scalable and reliable multimodal AI systems involves strategic design and operational tactics:

Modular Architecture: Designing AI systems with modular components allows specialization for specific data types or tasks, facilitating easier maintenance and upgrades.

Continuous Integration/Continuous Deployment (CI/CD): Implementing CI/CD pipelines accelerates testing and deployment cycles, reducing downtime and enhancing system robustness.

Monitoring and Feedback Loops: Robust monitoring systems paired with feedback mechanisms enable real-time issue detection and adaptive optimization.

These practices are fundamental topics covered in AI programming courses and Agentic AI courses for beginners to prepare engineers for real-world challenges.
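The modular architecture idea can be sketched as a small router that keeps one handler per modality, so each handler can be tested, replaced, or upgraded independently. This is an illustrative pattern only: the class name `ModalityRouter`, the handler signature, and the toy handlers are assumptions, not part of any framework named in this post.

```python
from typing import Callable

# A handler takes raw bytes for one modality and returns a text result.
Handler = Callable[[bytes], str]


class ModalityRouter:
    """Dispatch each input to the handler registered for its modality."""

    def __init__(self) -> None:
        self._handlers: dict[str, Handler] = {}

    def register(self, modality: str, handler: Handler) -> None:
        """Add or replace the handler for a modality such as 'text'."""
        self._handlers[modality] = handler

    def process(self, modality: str, payload: bytes) -> str:
        """Run the matching handler, failing loudly on unknown modalities."""
        try:
            handler = self._handlers[modality]
        except KeyError:
            raise ValueError(f"no handler registered for {modality!r}") from None
        return handler(payload)


if __name__ == "__main__":
    router = ModalityRouter()
    # Toy handlers standing in for real models.
    router.register("text", lambda data: data.decode("utf-8").strip())
    router.register("image", lambda data: f"image with {len(data)} bytes")
    print(router.process("text", b"  hello  "))
    print(router.process("image", b"\x89PNG"))
```

Because the router only knows about the handler interface, a monitoring hook or feedback loop could wrap `process` without touching the individual modules.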

The Role of Software Engineering Best Practices

Software engineering best practices are vital to ensure reliability, security, and compliance in multimodal AI systems. Key aspects include:

Testing and Validation: Comprehensive testing using diverse datasets and scenarios ensures models perform accurately in production environments. Validation is especially critical for multimodal AI, given the complexity of integrating heterogeneous data.

Code Quality and Documentation: Maintaining clean, well-documented code facilitates collaboration among multidisciplinary teams and reduces error rates.

Security Measures: Securing AI systems against data breaches and unauthorized access safeguards sensitive multimodal inputs, a concern paramount in sectors like healthcare and finance.

Ethical considerations such as data privacy and bias mitigation must also be integrated into software engineering workflows to maintain trustworthiness and regulatory compliance. These topics are often emphasized in Generative AI courses with placement that include ethical AI modules.

Cross-Functional Collaboration for AI Success

Successful multimodal AI projects rely on effective collaboration among data scientists, software engineers, and business stakeholders:

Data Scientists develop and optimize AI models, focusing on data preprocessing, model architecture, and training.

Engineers implement scalable, maintainable systems and ensure integration within existing infrastructure.

Business Stakeholders align AI initiatives with strategic objectives, ensuring solutions deliver measurable value.

Collaboration tools and regular communication help bridge gaps between these groups. Training programs like Agentic AI courses for beginners and AI programming courses often highlight cross-functional teamwork as a critical success factor.

Measuring Success: Analytics and Monitoring

Evaluating multimodal AI deployments involves tracking key performance indicators (KPIs) such as:

Accuracy and precision of model outputs across modalities

Operational efficiency and latency

User engagement and satisfaction

Advanced analytics platforms provide real-time monitoring and actionable insights, enabling continuous improvement. Understanding these metrics is an integral part of AI programming courses designed for practitioners deploying multimodal AI systems.
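Two of the KPIs listed above are easy to compute directly. The sketch below shows per-modality precision and a 95th-percentile latency using the nearest-rank method. The function names and the sample numbers are made up for illustration; a real deployment would pull these values from its own logs and may prefer a different percentile definition.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of positive predictions that were correct (0.0 if none made)."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def precision_by_modality(counts: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each modality to its precision from (true_pos, false_pos) counts."""
    return {name: precision(tp, fp) for name, (tp, fp) in counts.items()}


def p95_latency_ms(latencies_ms: list[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical counts and timings, for illustration only.
    print(precision_by_modality({"text": (90, 10), "image": (40, 10)}))
    print(p95_latency_ms([120, 95, 110, 300, 105, 98, 101, 99, 97, 102]))
```

Tracking a tail percentile alongside the average matters because a handful of slow multimodal requests can dominate the user experience even when the mean looks healthy.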

Case Studies: Real-World Applications of Multimodal AI

Case Study 1: Enhancing Customer Experience with Multimodal AI

A leading e-commerce company implemented multimodal AI to create a personalized customer service system capable of handling voice, text, and visual inputs simultaneously.

Technical Challenges

Integrating diverse data types and ensuring seamless communication between AI components posed significant challenges. The company adopted a unified multimodal foundation model to overcome these hurdles.

Business Outcomes

Increased Efficiency: Automated responses reduced human agent workload, allowing focus on complex queries.

Enhanced User Experience: Customers interacted through preferred channels, improving satisfaction.

Personalized Interactions: Tailored recommendations boosted sales and loyalty.

This implementation underscores the value of training in Agentic AI courses for beginners and Generative AI courses with placement to develop skills in multimodal AI integration.

Case Study 2: Transforming Healthcare with Multimodal AI

Healthcare providers leveraged multimodal AI to combine medical images, patient histories, and clinical notes for more accurate diagnostics and personalized treatment plans.

Technical Challenges

Handling complex medical data and ensuring interpretability required specialized multimodal AI models.

Business Outcomes

Improved Diagnostics: Enhanced accuracy led to better patient outcomes.

Personalized Care: Tailored treatments increased care effectiveness.

This sector highlights the importance of AI programming courses focusing on ethical AI development and secure handling of sensitive data.

Actionable Tips and Lessons Learned

Start Small: Pilot projects help test multimodal AI feasibility before full-scale deployment.

Collaborate Across Teams: Cross-functional cooperation ensures alignment with business goals.

Monitor and Adapt: Continuous performance monitoring allows timely system improvements.

Engaging in Agentic AI courses for beginners, Generative AI courses with placement, and AI programming courses can equip teams with the necessary skills to implement these tips effectively.

Conclusion

Harnessing the power of multimodal AI marks a new era in automation. By integrating diverse data types and leveraging advanced AI technologies, businesses can build more intelligent, holistic, and personalized solutions. Whether you are an AI practitioner, software engineer, or technology leader, embracing multimodal AI through targeted education such as Agentic AI courses for beginners, Generative AI courses with placement, and AI programming courses can transform your organization's capabilities and drive innovation forward. As these technologies continue to mature, the future of automation promises unprecedented opportunities for growth and impact.


Discover the Most Affordable Sound Studio in London

When it comes to bringing your music, podcast, or voiceover project to life, having access to a professional and budget-friendly studio is key. For creators seeking quality without compromise, Maa Records proudly offers the most affordable sound studio in London—a space where talent meets technology.

Why Maa Records?

Based in the heart of London, Maa Records is more than just a recording studio. It’s a creative hub built for artists, producers, and storytellers who want studio-grade sound at a price that doesn’t break the bank. Whether you’re a first-time singer or a seasoned content creator, our studio gives you everything you need to elevate your sound.

Industry-Standard Equipment

We understand that sound quality makes all the difference. That’s why Maa Records is equipped with high-end microphones, digital mixing consoles, sound-treated booths, and professional mastering software. You can walk in with an idea and walk out with a track that’s ready for streaming platforms or radio play.

From vocals to instrumentals, podcasts to audiobooks, our gear and acoustics ensure a crisp, clean, and rich sound—every single time.

Expert Support, Friendly Team

One of the best parts about working at Maa Records is the supportive environment. Our in-house sound engineers are experienced professionals who guide you through the recording process, offering tips, creative input, and technical support when needed. We believe great sound comes from great teamwork.

Flexible Packages for Every Budget

As an affordable sound studio in London, Maa Records offers flexible booking options that cater to individual artists, bands, and media creators. Whether you need an hour, a full day, or a long-term project slot, our rates are structured to provide maximum value for your money.

We also offer custom packages for music video shoots, social media content, and full audio-visual production for artists who want to launch their complete creative vision under one roof.

Perfect for Independent Artists & New Talent

If you’re an up-and-coming artist looking for a studio that respects your hustle and works within your budget, Maa Records is your ideal partner. We’ve had the privilege of working with local talent across genres—from Punjabi folk and hip-hop to classical fusion and beyond.

We don’t just provide space—we nurture growth.

Book Your Session Today

With hundreds of happy clients and growing recognition in both the UK and Indian music scenes, Maa Records stands as a trusted name in London's creative community.

Ready to bring your sound to life? Choose Maa Records—the affordable sound studio in London where creativity meets quality.


Text

AI Music Video Agent: The Future of Automated Content Creation

The rise of artificial intelligence has revolutionized multiple industries, and the world of music and video production is no exception. The AI music video agent is an innovative tool that automates the creation of music videos, making the process faster, more efficient, and accessible to artists of all levels. This article explores how this technology works, its benefits, and its potential impact on the entertainment industry.

What Is an AI Music Video Agent?

An AI music video agent is a software tool or platform that uses machine learning, computer vision, and generative AI to create music videos with minimal human intervention. By analyzing audio tracks, lyrics, and visual preferences, the agent generates synchronized visuals, animations, and effects tailored to the song’s mood and style.

Key Features of an AI Music Video Agent

Automated Video Generation – The AI processes the music file and automatically generates a video with dynamic transitions, effects, and scene changes.

Customizable Styles – Users can select themes, color palettes, and animation styles to match their artistic vision.

Lyric Synchronization – The AI detects lyrics and syncs them with on-screen text or visual storytelling elements.

AI-Generated Imagery – Some agents use text-to-image models (like DALL·E or Stable Diffusion) to create unique visuals based on song themes.

Real-Time Editing – Artists can tweak the output, adjusting pacing, effects, and transitions before finalizing the video.

How Does an AI Music Video Agent Work?

The process typically involves the following steps:

Audio Analysis – The AI examines the song’s tempo, beats, and emotional tone to determine pacing and visual rhythm.

Lyric Processing – If lyrics are available, the AI extracts keywords and themes to influence visual content.

Visual Generation – Using generative AI, the system creates or curates footage, animations, and effects.

Synchronization – The AI aligns the visuals with the music, ensuring seamless transitions and timing.

Final Rendering – The video is compiled and exported in the desired format.
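
The audio analysis and synchronization steps can be sketched in a few lines. This is a minimal illustration, not how any particular agent works: it assumes the tempo is constant and already known (a real tool would estimate it from the audio), and the function names beat_times and scene_cuts are hypothetical. It places a scene change every few beats so that cuts land on the music's pulse.

```python
def beat_times(bpm: float, duration_s: float) -> list[float]:
    """Timestamps (seconds) of every beat, assuming a constant tempo."""
    if bpm <= 0 or duration_s < 0:
        raise ValueError("bpm must be positive and duration_s non-negative")
    seconds_per_beat = 60.0 / bpm
    count = int(duration_s / seconds_per_beat) + 1
    # Multiply instead of accumulating so rounding error does not build up.
    return [
        round(i * seconds_per_beat, 6)
        for i in range(count)
        if i * seconds_per_beat < duration_s
    ]


def scene_cuts(bpm: float, duration_s: float, beats_per_scene: int = 4) -> list[float]:
    """Timestamps where a new scene starts: one cut every `beats_per_scene` beats."""
    if beats_per_scene < 1:
        raise ValueError("beats_per_scene must be at least 1")
    return beat_times(bpm, duration_s)[::beats_per_scene]


if __name__ == "__main__":
    # Hypothetical 30-second clip at 120 BPM, cutting every 8 beats.
    print(scene_cuts(120, 30, beats_per_scene=8))
```

A slower cut rate (more beats per scene) would suit a ballad, a faster one an upbeat track; in a real system that choice would presumably come from the mood analysis rather than a fixed parameter.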

Popular platforms like Runway ML, Synthesia, and Pictory already offer AI-assisted video creation, but specialized AI music video agents take it further by focusing on music synchronization and artistic customization.

Benefits of Using an AI Music Video Agent

1. Cost-Effective Production

Traditional music videos require hiring directors, editors, and production crews, which can be expensive. An AI music video agent reduces costs by automating most of the process.

2. Faster Turnaround Time

Instead of weeks or months of filming and editing, AI-generated videos can be produced in hours, allowing artists to release content more frequently.

3. Creative Freedom

Independent musicians can experiment with different visual styles without relying on large budgets or production teams.

4. Accessibility for Emerging Artists

New artists without industry connections can still produce professional-looking videos, helping them gain visibility.

Challenges and Limitations

While AI music video agents offer many advantages, there are still challenges:

Lack of Human Touch – AI-generated videos may lack the emotional depth and storytelling nuances of human-directed projects.

Copyright Issues – Some AI models train on existing media, raising concerns about originality and legal risks.

Technical Limitations – Complex visual effects or highly customized scenes may still require manual adjustments.

The Future of AI in Music Video Production

As AI technology advances, we can expect:

Hyper-Personalized Videos – AI could analyze fan preferences to create customized versions of music videos for different audiences.

Interactive Music Videos – AI-generated videos might adapt in real-time based on viewer interactions (e.g., changing visuals based on mood).

Integration with Virtual Reality (VR) – AI could craft immersive 360° music experiences for VR platforms.


Text

Best Video Editing Courses in Odisha – Learn with ProSkill Media School

Are you passionate about visual storytelling? Do you dream of working behind the scenes on films, YouTube content, music videos, or social media reels? If yes, then mastering video editing is your gateway to the creative industry. At ProSkill Media School, we offer the Best Video Editing Courses in Odisha – designed to turn your creativity into a full-fledged career.

Why Choose Video Editing as a Career?

In today’s digital-first world, video content drives everything: marketing, education, entertainment, and even social awareness. But every impactful video you see online is shaped and polished by skilled video editors. From transitions and color grading to audio syncing and visual effects, video editors are the invisible storytellers of the digital age.

What Makes ProSkill’s Video Editing Courses Unique?

At ProSkill Media School, we believe in learning by doing. Our curriculum is built around practical experience, real editing projects, peer reviews, and hands-on assignments. Here’s what you get when you enroll:

Training on industry-standard software like Adobe Premiere Pro, After Effects, and DaVinci Resolve.

Mentorship from experienced editors and filmmakers who have worked on real commercial projects.

A structured learning path that covers fundamentals, advanced techniques, motion graphics, VFX basics, and storytelling.

Workshops and live projects to help you build a strong portfolio that gets noticed.

Career guidance, freelance support, and internship opportunities for real-world exposure.

Why We’re Recognized Across Odisha

ProSkill Media School is more than just a training institute; we are a career-launching platform. Our alumni are working across Odisha and beyond, building freelance careers, joining production houses, and creating independent content.

Who Can Join This Course?

Our video editing course is perfect for:

Students who want to enter the media or film industry

YouTubers, vloggers, and influencers

Wedding photographers/videographers

Freelancers looking to upskill

Content creators and digital marketers

Your Creative Journey Starts Here

If you’ve been searching for a place that offers the Best Video Editing Courses in Odisha, your search ends at ProSkill Media School. With our expert-led training, practical exposure, and career-focused approach, we don’t just teach editing; we build editors.

For more information

Contact - 9777955252

Our address-16/A, Cuttack Rd, Budheswari Colony, Laxmisagar, Bhubaneswar, Odisha 751006

Our location- https://maps.app.goo.gl/1LGHbRHyPhixSmM8A

Our website- https://proskillmediaschool.in/

#VideoEditing#VideoEditingCourse#LearnVideoEditing#EditingSkills#VideoEditingTraining#CreativeEditing


Text

How to Fix Audio Sync Issues: Sometimes You Need to Separate It First

Audio sync issues can be incredibly frustrating, whether you’re editing a video, watching a recorded livestream, or working with footage from your camera. The sound doesn’t match the visuals, and the result looks sloppy and unprofessional. One of the most effective ways to solve this problem is to separate the audio from the video, so resolving sync issues often starts with learning how to do that.

Once you've done this, you can better manipulate the soundtrack independently of the video timeline. This gives you precise control over delays, misalignments, or re-synchronization.

Why Audio and Video Go Out of Sync

Before diving into the fix, it's helpful to understand why sync issues happen in the first place. Some common causes include:

Variable frame rates in smartphone recordings

Long-duration recordings where encoding drifts over time

Lag introduced during screen recordings or livestream captures

Manual editing errors during video trimming or splicing

In all these cases, trying to fix the issue without isolating the audio usually leads to guesswork. That’s why it’s better to split audio from video first and work on them as separate tracks.
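
To see how quickly a small rate mismatch adds up, here is a rough worked example. The numbers are illustrative assumptions, not measurements: a clip captured at 29.97 frames per second but played back as if it were 30, over ten minutes. The function name drift_seconds is hypothetical.

```python
def drift_seconds(nominal_rate: float, actual_rate: float, elapsed_s: float) -> float:
    """How far the audio ends up behind the video after `elapsed_s` seconds.

    The video was captured at `actual_rate` frames per second but is played
    back at `nominal_rate`, while the audio keeps its true duration.
    A positive result means the video finishes early (audio lags behind).
    """
    if nominal_rate <= 0 or actual_rate <= 0:
        raise ValueError("rates must be positive")
    video_duration = elapsed_s * actual_rate / nominal_rate
    return elapsed_s - video_duration


if __name__ == "__main__":
    # Illustrative figures: 29.97 fps footage treated as 30 fps for 10 minutes.
    print(f"{drift_seconds(30.0, 29.97, 600.0):.3f} s of drift")
```

A mismatch of only 0.1% is invisible in the first few seconds but grows to more than half a second over ten minutes, which is why long recordings are the ones that tend to drift noticeably.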

Step-by-Step: Separating and Fixing Audio Sync

Here’s how you can do it using most video editors, including free and professional tools:

1. Import Your Video

Open your video editing software and import your file into the timeline.

2. Separate (Detach) the Audio

Right-click on the video clip and look for an option like:

“Detach Audio”

“Unlink Audio”

“Separate Audio”

Once done, the audio and video will appear as independent tracks. Now, you can slide the audio track slightly forward or backward to line it up with the visuals correctly.

3. Visually Match Sync Points

Use visible cues—such as lip movement or claps—to align the audio. If you're working on a talking head video, match the start of the speech to the corresponding lip movement.

4. Fine-Tune Using Audio Waveforms

Zoom in and look at the audio waveform. Match peaks in the waveform with actions in the video (e.g., a door slam, a clap, or speech onset). Adjust the position by small increments for frame-perfect sync.

5. Lock and Export

Once everything is synchronized, lock the tracks and export your corrected video.
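
The waveform matching in step 4 can also be estimated numerically. The sketch below is one possible approach, assuming you have two short lists of audio samples that contain the same sharp sound (a clap, for instance): a reference track and the track that is out of sync. It tries every shift within a window and keeps the one where the two line up best (a brute-force cross-correlation). The function names are hypothetical, and editors with automatic sync features may work differently.

```python
def best_lag(reference: list[float], delayed: list[float], max_lag: int) -> int:
    """Shift (in samples) at which `delayed` best matches `reference`.

    A positive result means the event occurs later in `delayed`,
    so that track should be moved earlier by that many samples.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, ref_sample in enumerate(reference):
            j = i + lag
            if 0 <= j < len(delayed):
                score += ref_sample * delayed[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def lag_to_seconds(lag_samples: int, sample_rate: int) -> float:
    """Convert a shift in samples to seconds."""
    return lag_samples / sample_rate


if __name__ == "__main__":
    # Toy data: the same click, three samples later in the second track.
    ref = [0, 0, 1, 0, 0, 0, 0, 0]
    late = [0, 0, 0, 0, 0, 1, 0, 0]
    lag = best_lag(ref, late, max_lag=4)
    print(lag, lag_to_seconds(lag, sample_rate=48000))
```

This brute-force loop is fine for short snippets around a sync point; for whole tracks an FFT-based correlation would be much faster.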

Replacing the Audio Entirely

In some cases, the original audio track may be too corrupted or off-sync to fix. In such situations, consider replacing it with separately recorded sound (like from an external microphone). Just sync this new audio with the video using the same method—detach and align.
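
If you prefer the command line to a graphical editor, the same replacement can be done with ffmpeg. The sketch below only builds the argument list and does not run anything. It assumes ffmpeg is installed, the file names are placeholders, and the helper name replace_audio_cmd is hypothetical. The options used (-itsoffset to shift the input that follows it, -map to pick streams, -shortest to stop at the shorter input) are standard ffmpeg options.

```python
def replace_audio_cmd(video: str, audio: str, output: str,
                      audio_delay_s: float = 0.0) -> list[str]:
    """Build an ffmpeg command that keeps the video and swaps in a new audio track.

    `audio_delay_s` shifts the new audio: positive values make it start later,
    negative values make it start earlier.
    """
    cmd = ["ffmpeg", "-i", video]
    if audio_delay_s:
        # -itsoffset applies to the next input, here the external audio file.
        cmd += ["-itsoffset", f"{audio_delay_s:.3f}"]
    cmd += [
        "-i", audio,
        "-map", "0:v:0",   # video stream from the first input
        "-map", "1:a:0",   # audio stream from the second input
        "-c:v", "copy",    # do not re-encode the picture
        "-c:a", "aac",     # encode the new audio as AAC
        "-shortest",       # stop when the shorter stream ends
        output,
    ]
    return cmd


if __name__ == "__main__":
    # Placeholder file names; pass the list to subprocess.run(...) to execute it.
    print(" ".join(replace_audio_cmd("clip.mp4", "mic.wav", "fixed.mp4", 0.12)))
```

The delay value would come from whatever offset you found while aligning the tracks by eye or by waveform.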

Fixing sync issues doesn’t need to be a nightmare. By learning how to separate audio from video, you gain the flexibility to correct mismatches with surgical precision. The next time you encounter a frustrating lag between what you see and hear, remember: sometimes the best fix is to split things up first.


Text

St. Andrews Institute of Technology and Management

St. Andrews Institute of Technology and Management (SAITM) is a private educational institution located in Gurugram, Haryana. It was established with the goal of delivering quality higher education in the fields of engineering, technology, and management. SAITM is part of the broader St. Andrews Group of Institutions, which has a legacy in education dating back over three decades.

The institute is approved by the All India Council for Technical Education (AICTE) and is affiliated with Maharshi Dayanand University (MDU), Rohtak, a prominent state university in Haryana. Over the years, SAITM has developed a reputation for offering a combination of academic rigor, practical exposure, and a strong emphasis on personality development.

Academic Programs

SAITM offers undergraduate courses primarily in engineering, technology, and management. Programs available include:

1. Bachelor of Technology (B.Tech)

Computer Science and Engineering (CSE)

Artificial Intelligence and Machine Learning

Electronics and Communication Engineering

Mechanical Engineering

Civil Engineering

These courses are designed to meet industry standards and include both theoretical foundations and hands-on experience. The curriculum is frequently updated to keep pace with evolving technology trends.

2. Bachelor of Business Administration (BBA)

The BBA program aims to develop managerial skills and business acumen. It includes subjects such as marketing, finance, human resources, and entrepreneurship. Emphasis is placed on case studies, group projects, and real-world business problems.

3. Bachelor of Computer Applications (BCA)

This program is geared toward students interested in software development, computer programming, and IT infrastructure. It covers subjects like programming languages, database management, and networking.

Campus and Infrastructure

SAITM’s campus is spread over a lush green 22-acre area in the heart of Gurugram, providing a calm and focused environment for learning. The infrastructure is modern and conducive to both academic and extracurricular development.

Key Facilities:

Smart Classrooms: Equipped with audio-visual aids and digital boards.

Library: A well-stocked library with thousands of books, journals, and digital resources.

Computer Labs: High-speed internet and the latest software to ensure students are industry-ready.

Engineering Labs: Hands-on learning through dedicated labs for each engineering discipline.

Auditorium and Seminar Halls: Used for conferences, guest lectures, and cultural events.

Hostels: Separate accommodation for boys and girls with all basic amenities and 24/7 security.

Cafeteria: Hygienic and affordable food with various cuisines.

Sports and Recreation: Facilities are available for indoor and outdoor games, including basketball, football, volleyball, table tennis, and access to a gymnasium.

Faculty and Learning Environment

SAITM boasts a faculty of experienced professionals and academicians, many of whom hold doctoral degrees and have industry exposure. The teaching approach combines lectures with workshops, lab sessions, and real-life case studies.

The institute promotes a student-centric learning environment, encouraging innovation, creativity, and independent thinking. Faculty members also act as mentors, guiding students in both academics and career planning.

Industry Collaboration and Practical Exposure

SAITM emphasizes practical learning through:

Industrial Visits

Guest Lectures by Industry Experts

Workshops and Seminars

Hackathons and Tech Fests

These activities help students understand the practical applications of their knowledge and stay updated with current industry trends. The institute has tie-ups with several companies and start-ups for internships and training programs.

Training and Placement Cell

SAITM has a dedicated Training and Placement Cell that works closely with students to enhance their employability. It provides:

Soft skill training

Resume writing workshops

Interview preparation sessions

Mock interviews and group discussions

Every year, the placement cell invites a wide range of recruiters from various industries. Some of the top recruiters include TCS, Wipro, Infosys, HCL, Tech Mahindra, Cognizant, and Byju’s, among others.

Students are also encouraged to pursue internships during their academic years, giving them a competitive edge in the job market. Many students have secured pre-placement offers (PPOs) through these internships.

Student Life and Extracurricular Activities

SAITM promotes a balanced academic and extracurricular life. Various clubs and societies are active on campus, covering interests like:

Robotics and AI

Coding and Programming

Business and Finance

Drama and Performing Arts

Music and Dance

Photography and Film-making

Debate and Public Speaking

Annual events such as Tech Fests, Cultural Festivals, and Sports Meets allow students to showcase their talents and collaborate across departments.

The campus has a vibrant student community that actively participates in organizing events, which fosters leadership, teamwork, and organizational skills.

Entrepreneurship and Innovation

To foster an entrepreneurial mindset, SAITM has established initiatives like the Innovation and Entrepreneurship Development Cell (IEDC). This cell supports students in developing business ideas and provides mentorship, incubation support, and networking opportunities with investors and industry leaders.

Students are encouraged to participate in national and international competitions, innovation challenges, and start-up expos. The goal is to nurture job creators rather than just job seekers.

Conclusion

St. Andrews Institute of Technology and Management (SAITM) stands out as a promising educational institution in the NCR region. With its focus on academic excellence, practical skills, personality development, and strong industry connections, it provides a comprehensive environment for students to grow professionally and personally.

Whether you aim to become a skilled engineer, an innovative entrepreneur, or a future business leader, SAITM provides the platform and resources to turn your ambitions into reality.

#SAITM#StAndrewsInstitute#EngineeringCollege#ManagementCollege#TopEngineeringCollege#CollegeLife#CampusVibes#StudyInIndia


Text

"Revolutionizing Indie Filmmaking in 2025"

In the dynamic world of independent filmmaking, the year 2025 is demonstrating a major transformation. As technology becomes increasingly accessible, indie filmmakers both within and outside Hollywood are leveraging these advancements to create high-grade content with more limited budgets. This evolution empowers creative expression while achieving a professional polish that was traditionally the domain of big studios. Let's explore the tech tools reshaping the indie film sector, fostering inventive freedom, and enhancing production workflows in 2025.

The foundation of any indie project in 2025 involves using adaptable, high-performance digital cameras. At the forefront are models like the Canon EOS C400 and Sony FX3. These cameras are elevating the visual benchmark for indie films by offering cinematic quality and seamless post-production integration. With advanced low-light capabilities and modular designs, they narrow the gap between independent and big studio productions.

Equally important are the advancements in editing software. Programs such as DaVinci Resolve and Adobe Premiere Pro are essential in achieving a refined final product. Their innovative AI-driven features streamline workflows, from intelligent scene detection and automatic color grading to automated subtitle creation. These tools are vital for compact teams aiming to reduce production time while ensuring high quality.

AI Tools Revolutionizing Filmmaking

The incorporation of Artificial Intelligence (AI) in indie filmmaking is transforming all areas of production. Tools like Cinelytic, RivetAI, and ChatGPT help streamline the creative process. AI supports everything from script analysis to audience insights, allowing filmmakers to focus on creative decisions. AI-generated voice synthesis and automated editing not only conserve time but also enhance reliability and uniformity.

The next-generation sound design tools are crucial as well. Portable high-quality microphones and AI-enhanced audio correction tools, such as iZotope RX, enable filmmakers to create engaging sound environments. Emerging trends in spatial audio are noteworthy for crafting compelling viewer experiences with a 'blockbuster' feel, achieved on smaller budgets.

Planning, Collaboration, and Productivity Apps

The planning phase has become equally digitized. Apps like Previs Pro, Dropbox Paper, and RePro offer invaluable features for previsualization, collaborative storyboarding, and streamlined team coordination. These tools are responsive to the agile demands of indie filmmaking, allowing teams to adapt swiftly to changes on set.

The impact of these technologies goes beyond production alone. Distribution and community interaction are also evolving due to these technological advancements. Social media and direct-to-streaming platforms enable indie filmmakers to bypass traditional barriers, reaching larger and more diverse audiences. Crowdfunding platforms have become more important than ever, supporting projects with tech-forward marketing strategies and strong digital community engagement.

Embracing Immersive Experiences

By incorporating Augmented Reality (AR), Virtual Reality (VR), and Extended Reality (XR) technologies, indie filmmakers redefine how audiences engage. Once considered experimental, these tools are entering mainstream usage, unlocking new storytelling possibilities. Filmmakers are encouraged to use VR and AR in short films or festival entries to increase viewer engagement while setting their work apart from traditional offerings.

This expansion is not solely about using flashy tools; it's about achieving sustainable production models. Affordable technology makes "run-and-gun" filming more feasible, allowing smaller crews to shoot on location with greater efficiency. Adopting compact setups—such as minimalistic production kits with an adaptable camera, lightweight lighting, and cloud storage—further boosts efficiency by reducing both financial and environmental costs.

Mastering Best Practices for Technological Utilization

For independent filmmakers aiming to effectively integrate these advancements into their projects, several best practices should be adopted. Firstly, investing in top-quality camera equipment with 4K and RAW features ensures enduring visual standards. Implementing AI at all production stages—from initial concept to post-production—can dramatically decrease time and workload, allowing filmmakers to focus on storytelling and creative refinement.

The approach of using cloud-based planning tools is essential, as it not only supports remote collaboration but also safeguards data, removing geographical barriers from the creative process. Moreover, creating content that integrates immersive and dynamic sound and visual experiences can enhance storytelling, particularly appealing to festivals and niche audiences.

Ultimately, adopting these technologies affords indie filmmakers the chance to innovate, compete, and flourish in an ever-evolving film industry landscape. As 2025 unfolds, astute filmmakers will continue to utilize these tools and adapt effectively, ensuring their creative visions reach their intended audiences.

The spotlight is on filmmakers to embrace this tech-driven zeitgeist, inviting them on a journey toward evolution and excellence in the realm of independent cinema. As indie filmmakers embrace these technologies, the future of filmmaking appears as an exciting domain, brimming with innovation and creativity, steered by technology and fueled by passion.

#IndieFilm #Filmmaking #TechTrends #Innovation #CinematicTools

Explore essential indie filmmaking tools at https://www.kvibe.com.


Text

Navigating Your Options: Choosing the Best Medical Transcription Course for Your Future

In the fast-paced world of healthcare, accurate documentation is a cornerstone of patient care. One of the most crucial roles in this process is played by medical transcriptionists—professionals who convert voice-recorded medical reports into written text. As the demand for accurate medical documentation continues to grow, the need for skilled transcriptionists is higher than ever. If you are considering a career in this dynamic field, enrolling in a Medical Transcription Course in Kochi could be your first step toward a rewarding and flexible career path.

Understanding Medical Transcription

Medical transcription involves listening to recordings made by doctors and other healthcare professionals and transcribing them into written reports, medical histories, discharge summaries, and other critical documentation. These records are essential for maintaining accurate patient histories, facilitating further treatment, and ensuring that healthcare institutions comply with regulatory standards.

A qualified medical transcriptionist must possess strong listening skills, excellent grammar, a good understanding of medical terminology, and familiarity with various accents and speech patterns. The right training course can help you develop all these competencies and more.

Why Choose a Medical Transcription Course?

Enrolling in a Medical Transcription Course in Kochi offers several benefits:

Skill Development: Learn essential skills such as keyboarding, audio processing, grammar, and comprehension.

Medical Terminology: Gain in-depth knowledge of anatomy, physiology, pharmacology, and medical jargon.

Flexible Career Opportunities: With the rise of remote work, many transcriptionists now work from home, providing greater work-life balance.

Certification Preparation: A reputable course often prepares students for national or international certification exams, adding to their professional credibility.

Job Placement Assistance: Many training programs also assist with internships and job placements, helping you launch your career with confidence.

What to Look for in a Medical Transcription Course in Kochi

Choosing the right course is essential to your success. Here are some factors to consider when selecting a Medical Transcription Course in Kochi:

1. Comprehensive Curriculum

Ensure the course covers all the fundamental areas, including:

Medical terminology and abbreviations

Anatomy and physiology

English grammar and punctuation

Audio typing and transcription techniques

Legal and ethical aspects of medical documentation

2. Experienced Trainers

A course led by instructors with real-world experience in medical transcription or the healthcare field will provide practical insights and hands-on knowledge that books alone cannot offer.

3. Interactive Learning Modules

Interactive modules, quizzes, real-time dictations, and audio-visual content enhance the learning experience and prepare students to handle actual transcription tasks with confidence.

4. Flexible Scheduling

For working professionals or students, the flexibility to choose between full-time, part-time, or online classes can make a big difference in completing the course.

5. Industry-Relevant Tools

Familiarity with the latest transcription software and medical record systems is a valuable asset. Choose a course that includes training on industry-standard tools and technology.

Career Opportunities After Completing the Course

Graduates of a Medical Transcription Course in Kochi can explore several career paths:

Hospital Transcription Departments: Work directly with hospitals and clinics, transcribing patient reports and consultation summaries.

Medical Transcription Companies: Join specialised firms that provide transcription services to healthcare providers worldwide.

Remote Freelancing: Work from home as an independent contractor or freelancer for domestic or international clients.

Editing and Quality Control: With experience, you can move into supervisory roles, such as a transcription editor or quality assurance specialist.

Healthcare Documentation Specialist: Broaden your role to include other documentation and administrative tasks within a healthcare setting.

The Growing Demand for Medical Transcriptionists

The healthcare sector in India continues to expand rapidly, and with it, the need for qualified support staff such as medical transcriptionists. Digital recordkeeping has become the norm, and with doctors focusing on patient care, transcriptionists bridge the gap between spoken word and written record.

Particularly in cities like Kochi, where the medical industry is seeing significant growth, the demand for trained transcriptionists is expected to rise. Completing a Medical Transcription Course in Kochi places you in a strong position to benefit from this emerging demand.

Final Thoughts

If you’re looking for a career that offers stability, flexibility, and the opportunity to contribute meaningfully to the healthcare system, medical transcription might just be your calling. With the right training and dedication, you can build a career that offers both personal satisfaction and professional growth.

By choosing a well-structured and industry-relevant Medical Transcription Course in Kochi, you are investing in a future filled with potential. Whether you're a recent graduate, a homemaker looking for flexible work, or someone seeking a career change, medical transcription offers a viable and fulfilling path.

Take the next step toward your future—explore your options, enrol in a course, and begin your journey toward becoming a certified medical transcriptionist.

#Medical Transcription Course in Kochi#Cigma Medical Coding Academy#Transcription Courses in Kochi#Medical Coding Courses in Kochi#Medical Billing Courses in Kochi#Billing Certificate Program in Kochi#Medical Coding Certificate Program in Kerala#Transcription Certificate Program in Kochi#Medical Coding Academy#Australian Clinical Coding Training#Certificate Program in Medical Billing Kerala#Certificate Program in Medical Transcription Kochi#Medical Transcription Course Kochi#Medical Coding Institute#Best Medical Coding Institute in Kerala#Medical Coding Course in Kerala#Best medical coding academy in Kerala#Medical Coding Auditing training Kochi#AM coding training in Kochi#Clinical Coding training Kerala#Dental Coding training India#Health information Management courses in Kerala#Saudi Coding training in Kerala#Post graduate program in Health information management in Kochi#CPMA Training in Kerala#Medical coding Training Kerala#Professional Medical Coding Courses in Kerala#medical coding programs in Kochi#medical coding certification courses Kerala#AAPC institute of Medical Coding Kochi


Text

SpacePepper Studios Is the Best Video Production Agency in Delhi for Brands, Corporates, and Creators

In the fast-evolving world of content creation and digital marketing, having a powerful video strategy is no longer optional—it’s essential. Whether you’re a growing startup, a multinational corporation, or an independent creator, partnering with a creative and reliable production partner can redefine your brand's reach. That’s where SpacePepper Studios stands out as the best video production agency in Delhi.

Your Creative Partner Across All Formats

As a full-service production studio, SpacePepper Studios has earned a reputation as a top corporate video production agency in Delhi NCR, offering end-to-end solutions for companies seeking to communicate effectively with internal teams, stakeholders, and the public.

From corporate profile films to explainer videos, training content to leadership interviews—their team ensures each frame aligns with your brand message and corporate identity.

Brand-Focused Video Production That Delivers

SpacePepper Studios also shines as a trusted brand video production agency in Delhi, helping companies craft compelling brand stories that resonate with their audience. Whether it’s for ad campaigns, product launches, testimonials, or social media reels, their videos are not just visually appealing—they’re strategically designed to inspire action and build brand loyalty.

Services include:

Brand films

Product promos

Motion graphics

Social media video content

Influencer & lifestyle shoots

Advanced 3D Animation Services in Delhi NCR

Taking creativity a step further, SpacePepper is also a leading 3D animation company in Delhi NCR. Using the latest animation tools and rendering software, they produce eye-catching 3D content ideal for product visualizations, explainer animations, architectural walkthroughs, medical animations, and more.

Their animated solutions are ideal for brands that want to showcase their offerings in a futuristic, interactive, and memorable way.

A Modern Podcast Production Agency in Delhi

Expanding beyond video, SpacePepper Studios is also a growing name as a podcast production agency in Delhi. With dedicated audio engineers, sound designers, and content creators, they help professionals, influencers, and brands launch high-quality podcasts—from concept development to final mastering and distribution.

Whether you’re looking to start a business podcast, interview series, or branded audio show, SpacePepper makes sure your voice is heard—clearly and professionally.

Why Choose SpacePepper Studios?

🎥 Multi-genre expertise in corporate, brand, animation, and audio

🧠 Creative direction with strategic marketing insight

🛠️ End-to-end production from scripting to post-production

📈 Result-driven approach focused on ROI and brand growth

🤝 Reliable partnership with transparent communication

Final Thoughts

If you’re looking for the best video production agency in Delhi—one that understands the needs of brands, corporates, and creators alike—SpacePepper Studios is your ideal partner. With capabilities ranging from corporate films to 3D animation and podcast production, they are your one-stop creative studio in the heart of Delhi NCR.

Let SpacePepper Studios bring your vision to life—visually, audibly, and impactfully.

#Best video production agency in Delhi#Top corporate video production agency in Delhi NCR#Brand Video Production Agency in Delhi#3D animation Company in Delhi NCR

0 notes

Text

Lyrics Video Generator: Create Stunning Music Visuals Easily

In today’s digital age, music and visuals go hand in hand. Whether you're an independent artist, a content creator, or a music lover, a good lyrics video generator can help your songs stand out. These tools let you sync lyrics with captivating visuals with little effort, enhancing the listener's experience.

In this guide, we’ll explore the best lyrics video generator options, their features, and how to use them to create professional-looking videos without any technical expertise.

What Is a Lyrics Video Generator?

A lyrics video generator is a software or online tool that automatically synchronizes song lyrics with animations, backgrounds, and effects. Unlike traditional music videos, lyrics videos focus on displaying the words in a visually appealing way, making them perfect for social media, YouTube, and promotional content.

Why Use a Lyrics Video Generator?

Engages viewers by making lyrics easy to follow.

Can boost streaming numbers, as fans replay videos to sing along.

Saves time and money compared to hiring a video editor.

Enhances social media presence with shareable content.

Top 5 Lyrics Video Generator Tools

1. Adobe Spark

Adobe Spark (since rebranded as Adobe Express) offers customizable templates for lyric videos, allowing users to add text animations, overlays, and music synchronization. Its intuitive interface makes it ideal for beginners.

Key Features:

Pre-designed lyric video templates

Automatic timing adjustments

Royalty-free music library

2. Animaker

Animaker is a powerful lyrics video generator with drag-and-drop functionality. It supports kinetic typography (animated text) and offers a vast library of effects.

Key Features:

Auto-sync lyrics with audio

100+ text animation styles

HD video export

3. Flixier

Flixier is a cloud-based video editor that includes a lyrics video generator feature. It allows real-time collaboration and quick rendering.

Key Features:

Multi-user editing

Auto-generated captions

Direct YouTube upload

4. Renderforest

Renderforest provides AI-powered lyric video creation with customizable themes. It’s perfect for musicians who want professional results fast.

Key Features:

AI voiceovers and text-to-speech

3D lyric animations

No watermark on paid plans

5. Canva

Canva’s video editor includes basic lyric video tools, making it a great free option for simple projects.

Key Features:

User-friendly interface

Free templates available

Direct social media sharing

How to Use a Lyrics Video Generator (Step-by-Step)

Step 1: Choose Your Tool

Select a lyrics video generator based on your budget and needs (e.g., Animaker for animations, Flixier for fast editing).

Step 2: Upload Your Audio File

Import your song file (MP3 or WAV) into the tool.

Step 3: Add Lyrics

Enter the lyrics manually or upload a text file. Some tools auto-detect timing, while others let you adjust manually.

Step 4: Customize Visuals

Text Style: Pick fonts, colors, and animations.

Backgrounds: Use stock videos, images, or solid colors.

Effects: Add transitions, overlays, or motion graphics.

Step 5: Sync Lyrics with Music

Ensure each line appears at the right time by adjusting delays or using auto-sync features.
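
Under the hood, syncing comes down to pairing each lyric line with a start time and showing the most recent line whose start time has passed. Here is a minimal sketch in Python, assuming timestamps written in the common LRC style (`[mm:ss.xx]`). The function names and sample lyrics are made up for illustration, and none of the tools listed above is claimed to work exactly this way.

```python
import bisect
import re

# LRC-style timestamp at the start of a line, e.g. "[01:02.50]Some words"
TIMESTAMP = re.compile(r"\[(\d+):(\d+(?:\.\d+)?)\](.*)")

def parse_lrc(text):
    """Return a list of (start_seconds, lyric) pairs sorted by start time."""
    cues = []
    for raw in text.splitlines():
        match = TIMESTAMP.match(raw.strip())
        if match:
            minutes, seconds, lyric = match.groups()
            cues.append((int(minutes) * 60 + float(seconds), lyric.strip()))
    return sorted(cues)

def line_at(cues, t):
    """Return the lyric that should be on screen at playback time t (seconds)."""
    starts = [start for start, _ in cues]
    # Index of the last cue whose start time is <= t, or -1 if none has started.
    index = bisect.bisect_right(starts, t) - 1
    return cues[index][1] if index >= 0 else ""

# Hypothetical sample lyrics for illustration only.
SAMPLE = """
[00:01.00]First line of the song
[00:04.50]Second line of the song
[01:02.25]Final chorus
"""

cues = parse_lrc(SAMPLE)
print(line_at(cues, 5.0))
```

Adjusting a delay, as described in this step, would then mean adding or subtracting a fixed offset from every start time before looking up the current line.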

Step 6: Export & Share

Download the video in HD and upload it to YouTube, Instagram, or TikTok.

Tips for Creating an Engaging Lyrics Video

Keep It Readable: Use bold, high-contrast fonts.

Match the Mood: Dark themes for sad songs, vibrant colors for upbeat tracks.

Add Subtle Motion: Avoid excessive effects that distract from lyrics.

Include Branding: Add your logo or social media handles.

Conclusion

A lyrics video generator simplifies the process of turning songs into visually dynamic experiences. Whether you’re promoting new music or enhancing fan engagement, these tools provide an easy and cost-effective solution.

Try out different platforms like Animaker, Renderforest, or Canva to see which one fits your creative style. With the right approach, your lyric videos can captivate audiences and amplify your music’s reach.

1 note

Text

From Concept to Execution: How the Best Drone Show Companies in India Create Stunning Displays

In recent years, drone light shows have evolved from niche entertainment into full-scale, awe-inspiring spectacles that illuminate the skies with technology and creativity. Whether it’s a grand cultural event, a brand launch, or a national celebration, the visual poetry of synchronized drones forming breathtaking patterns has become the new standard for modern entertainment. But what really goes into these sky-bound marvels? Let’s dive deep into the behind-the-scenes process, from concept to execution, and explore how the best drone show companies in India create stunning displays that leave audiences speechless.

The Rise of Drone Light Shows in India

The popularity of drone light shows in India, especially in metropolitan cities like Delhi, has grown exponentially. From Republic Day celebrations to private events and product unveilings, drones have replaced fireworks in many cases due to their precision, sustainability, and safety. Companies like Mind Drone Pvt. Ltd. have played a significant role in revolutionizing this art form by integrating cutting-edge technology, artistic vision, and safety protocols to deliver sky-scaling visual wonders.

Why Drone Shows Over Traditional Fireworks?

Eco-friendly: No noise or air pollution

Customizable storytelling: Create logos, faces, animations

Safe and silent: No fire hazard or loud explosions

Reusability: Drones can be reused, making them more economical over time

Phase 1: Ideation and Concept Development

Every drone light show starts with a vision. Whether the client is a government body, a brand, or an event planner, the first step involves brainstorming the central theme of the show.

Questions Considered:

What story should the drones tell?

Is there a specific message or brand identity to communicate?

How long should the performance last?

Where is the venue, and how large is the available airspace?

The creative team at a top-tier drone company, like Mind Drone Pvt. Ltd., works closely with the client to design a narrative. For example, a drone light show in Delhi for an Independence Day celebration may feature key symbols like the Ashoka Chakra, national monuments, or messages of unity and patriotism.

Phase 2: Storyboarding and Animation

Once the idea is finalized, it’s transformed into a visual storyboard. Animators and graphic designers use 3D modeling software to draft drone formations, transitions, and special effects.

Tools and Techniques Used:

3D animation software (like Blender or Autodesk Maya)

Custom drone show planning platforms

Simulation programs to test formations

This phase ensures that each drone in the fleet knows exactly where it needs to be and when. Animators test transitions between shapes to guarantee a smooth flow and prevent mid-air collisions.
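
To make the collision check concrete, here is a simplified Python sketch of how a transition between two formations could be tested. It assumes each drone flies in a straight line from its old position to its new one, and it uses an arbitrary 1.5 m safety distance. Both are illustrative assumptions, as are the function names; real show-planning software is likely to use more sophisticated path planning.

```python
import math
from itertools import combinations

def interpolate(start, end, fraction):
    """Position along a straight line from start to end (fraction in 0..1)."""
    return tuple(s + (e - s) * fraction for s, e in zip(start, end))

def min_separation(formation_a, formation_b, steps=50):
    """Smallest distance between any two drones at the sampled time steps."""
    smallest = math.inf
    for step in range(steps + 1):
        fraction = step / steps
        positions = [interpolate(a, b, fraction)
                     for a, b in zip(formation_a, formation_b)]
        for p, q in combinations(positions, 2):
            smallest = min(smallest, math.dist(p, q))
    return smallest

def transition_is_safe(formation_a, formation_b, safety_distance=1.5):
    """True if no pair of drones gets closer than safety_distance (metres)."""
    return min_separation(formation_a, formation_b) >= safety_distance

# Two drones swapping places along the same line would meet in the middle.
line = [(0.0, 0.0, 10.0), (4.0, 0.0, 10.0)]
swapped = [(4.0, 0.0, 10.0), (0.0, 0.0, 10.0)]
# Raising the whole formation keeps the spacing unchanged.
raised = [(0.0, 0.0, 20.0), (4.0, 0.0, 20.0)]

print(transition_is_safe(line, swapped))
print(transition_is_safe(line, raised))
```

A failed check like the swap above is the kind of result that would send animators back to reassign target positions or stagger the movement.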

The Role of Innovation: What Sets the Best Apart?

The best drone show companies in India, like Mind Drone, stand out due to their continuous focus on innovation. Some differentiators include:

Fleet Size: Larger fleets mean more complex animations

Custom Drone Manufacturing: Lightweight drones with longer battery life

AI-Powered Pathfinding: Routes drones to avoid mid-air conflicts during large shows

Synchronized Audio Integration: Music and narration that match the light show

Real-time Weather Adaptability: Adjust flight patterns based on wind and humidity

Such companies invest in R&D to improve accuracy, durability, and creative potential, helping them lead India’s drone entertainment space.

A Symphony of Creativity and Precision

A drone light show is far more than just lights in the sky. It’s a well-orchestrated symphony where technology, creativity, and logistics converge. The journey from concept to execution demands rigorous planning, innovation, and strict safety adherence.

Whether you're looking to host a national celebration, a private launch, or an unforgettable wedding, choosing the right partner makes all the difference. The best drone show companies in India, like Mind Drone Pvt. Ltd., ensure every display is a safe, stunning, and unforgettable experience.

Our Contact Details

Mind Drones Private Limited

Company Website : https://www.minddrones.in/

Phone Number : 9810370586 , 9717756664

Company Address : B-803, Balaji Apartments, Sector-14 Rohini, Delhi - 110085

Our Social Links

https://x.com/minddrones

https://www.linkedin.com/company/mind-drones-pvt-ltd/

https://www.instagram.com/mind_drones/

1 note

Text

AI Agent Development: A Complete Guide to Building Smart, Autonomous Systems in 2025

Artificial Intelligence (AI) has undergone an extraordinary transformation in recent years, and 2025 is shaping up to be a defining year for AI agent development. The rise of smart, autonomous systems is no longer confined to research labs or science fiction — it's happening in real-world businesses, homes, and even your smartphone.

In this guide, we’ll walk you through everything you need to know about AI Agent Development in 2025 — what AI agents are, how they’re built, their capabilities, the tools you need, and why your business should consider adopting them today.

What Are AI Agents?

AI agents are software entities that perceive their environment, reason over data, and take autonomous actions to achieve specific goals. These agents can range from simple chatbots to advanced multi-agent systems coordinating supply chains, running simulations, or managing financial portfolios.

In 2025, AI agents are powered by large language models (LLMs), multi-modal inputs, agentic memory, and real-time decision-making, making them far more intelligent and adaptive than their predecessors.

Key Components of a Smart AI Agent

To build a robust AI agent, the following components are essential:

1. Perception Layer

This layer enables the agent to gather data from various sources — text, voice, images, sensors, or APIs.

NLP for understanding commands

Computer vision for visual data

Voice recognition for spoken inputs

2. Cognitive Core (Reasoning Engine)

This is the brain of the agent, where LLMs such as GPT-4, Claude, or custom-trained models are used to:

Interpret data

Plan tasks

Generate responses

Make decisions

3. Memory and Context

Modern AI agents need to remember past actions, preferences, and interactions to offer continuity.

Vector databases

Long-term memory graphs

Episodic and semantic memory layers
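
As a rough illustration of the idea behind vector-based memory, the Python sketch below stores past notes as vectors and recalls the one most similar to a query. It is a toy under stated assumptions: the `embed` function uses plain word counts as a stand-in for a real embedding model, and `MemoryStore` is a hypothetical in-memory class, not the API of any actual vector database.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. A real agent would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class MemoryStore:
    """Hypothetical in-memory store of (embedding, text) pairs."""

    def __init__(self):
        self.items = []

    def remember(self, text):
        self.items.append((embed(text), text))

    def recall(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(self.items,
                        key=lambda item: cosine(query_vec, item[0]),
                        reverse=True)
        return [text for _, text in ranked[:k]]

memory = MemoryStore()
memory.remember("user prefers email over phone calls")
memory.remember("last order was shipped on Monday")
print(memory.recall("how does the user prefer to be contacted"))
```

Word counts only match exact words, so this toy misses paraphrases. Learned embeddings are what let production systems match on meaning rather than spelling.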

4. Action Layer

Once decisions are made, the agent must act. This could be sending an email, triggering workflows, updating databases, or even controlling hardware.
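
One simple way to picture the action layer is as a lookup table from action names to handler functions. The Python sketch below assumes the reasoning step produces a dictionary with an `action` name and its `args`. That format and the two handlers are hypothetical, and the handlers only return strings rather than actually sending email or touching a database.

```python
def send_email(to, subject):
    # Stand-in: a real handler would call a mail API here.
    return f"email to {to}: {subject}"

def update_record(record_id, status):
    # Stand-in: a real handler would write to a database here.
    return f"record {record_id} set to {status}"

# Only actions listed here can ever be executed by the agent.
ACTIONS = {"send_email": send_email, "update_record": update_record}

def execute(decision):
    """Run the handler named in a decision dict; refuse unknown actions."""
    handler = ACTIONS.get(decision["action"])
    if handler is None:
        raise ValueError(f"unknown action: {decision['action']}")
    return handler(**decision["args"])

print(execute({
    "action": "send_email",
    "args": {"to": "ops@example.com", "subject": "Report ready"},
}))
```

Keeping an explicit allowlist like `ACTIONS` means a mistaken or malformed decision fails loudly instead of triggering something unintended.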

5. Autonomy Layer

This defines the level of independence. Agents can be:

Reactive: Respond to stimuli

Proactive: Take initiative based on context

Collaborative: Work with other agents or humans

Use Cases of AI Agents in 2025

From automating tasks to delivering personalized user experiences, here’s where AI agents are creating impact:

1. Customer Support

AI agents act as 24/7 intelligent service reps that resolve queries, escalate issues, and learn from every interaction.

2. Sales & Marketing

Agents autonomously nurture leads, run A/B tests, and generate tailored outreach campaigns.

3. Healthcare

Smart agents monitor patient vitals, provide virtual consultations, and ensure timely medication reminders.

4. Finance & Trading

Autonomous agents perform real-time trading, risk analysis, and fraud detection without human intervention.

5. Enterprise Operations

Internal copilots assist employees in booking meetings, generating reports, and automating workflows.

Step-by-Step Process to Build an AI Agent in 2025

Step 1: Define Purpose and Scope

Identify the goals your agent must accomplish. This defines the data it needs, actions it should take, and performance metrics.

Step 2: Choose the Right Model

Leverage:

GPT-4 Turbo or Claude for text-based agents

Gemini or multimodal models for agents requiring image, video, or audio processing

Step 3: Design the Agent Architecture

Include layers for:

Input (API, voice, etc.)

LLM reasoning

External tool integration

Feedback loop and memory
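
The layers listed above can be wired together in a few lines. The following Python sketch is one possible arrangement, not a reference design: `stub_reasoner` is a keyword rule standing in for the LLM call, the two tools are placeholder lambdas, and all names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

def stub_reasoner(message: str, history: List[str]) -> dict:
    """Placeholder for the LLM call: picks a tool with a simple keyword rule."""
    if "weather" in message.lower():
        return {"tool": "weather", "input": message}
    return {"tool": "echo", "input": message}

@dataclass
class Agent:
    reasoner: Callable[[str, List[str]], dict]
    tools: Dict[str, Callable[[str], str]]
    history: List[str] = field(default_factory=list)

    def handle(self, message: str) -> str:
        """Process one input through reasoning, tool use, and memory."""
        plan = self.reasoner(message, self.history)        # LLM reasoning layer
        result = self.tools[plan["tool"]](plan["input"])   # external tool integration
        self.history.append(f"{message} -> {result}")      # feedback loop and memory
        return result

agent = Agent(
    reasoner=stub_reasoner,
    tools={
        "weather": lambda text: "weather lookup requested",
        "echo": lambda text: f"you said: {text}",
    },
)
print(agent.handle("What is the weather in Delhi?"))
print(agent.handle("hello"))
```

Because the reasoner is passed in as a plain function, the keyword rule could later be swapped for a real model call without changing the rest of the loop.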

Step 4: Train with Domain-Specific Knowledge