#IoT architecture layers


10 Business Intelligence & Analytics Trends to Watch in 2025

Introduction

In 2025, business intelligence and analytics have evolved from optional advantages into essential business drivers. Organizations leveraging advanced analytics consistently outperform competitors, with Forrester reporting that data-driven companies are achieving 30% annual growth rates.

We’ve witnessed a significant shift from simple descriptive analytics to AI-powered predictive and prescriptive models that don’t just report what happened but forecast what will happen and recommend optimal actions.

According to Gartner’s latest Analytics Magic Quadrant, organizations implementing advanced BI solutions are seeing a 23% improvement in operational efficiency and a 19% increase in revenue growth. As McKinsey notes, “The gap between analytics leaders and laggards is widening at an unprecedented rate.”

Trend 1: Augmented Analytics Goes Mainstream

Augmented analytics has matured from an emerging technology to a mainstream capability, with AI automating insight discovery, preparation, and visualization. Tools like Microsoft Power BI with Copilot and Tableau AI now generate complex analyses that previously required data science expertise.

A manufacturing client recently implemented augmented analytics and identified supply chain inefficiencies that saved $3.2M annually. These platforms reduce analysis time from weeks to minutes while uncovering insights human analysts might miss entirely.

Trend 2: Data Fabric and Unified Data Environments

Data fabric architecture has emerged as the solution to fragmented data environments. First popularized by Gartner in 2020, this approach creates a unified semantic layer across distributed data sources without forcing consolidation.

Organizations implementing data fabric are reporting 60% faster data access and a 40% reduction in integration costs. For enterprises struggling with data silos across departments, cloud platforms, and legacy systems, data fabric provides a cohesive view while maintaining appropriate governance and security.

Trend 3: AI and ML-Driven Decision Intelligence

Decision intelligence — combining data science, business rules, and AI — has become the framework for optimizing decision-making processes. This approach transcends traditional analytics by not just providing insights but recommending and sometimes automating decisions.

Financial institutions are using decision intelligence for real-time fraud detection, reducing false positives by 37%. Retailers are optimizing inventory across thousands of SKUs with 93% accuracy. This shift is fundamentally changing organizational culture, moving from “highest-paid person’s opinion” to data-validated decision frameworks.

Trend 4: Self-Service BI for Non-Technical Users

The democratization of analytics continues with increasingly sophisticated self-service tools accessible to business users. Platforms like Qlik and Looker have evolved their interfaces to allow drag-and-drop analysis with guardrails that maintain data integrity.

This shift has reduced report backlogs by 71% for IT departments while increasing analytics adoption company-wide. The key enabler has been improved data literacy programs, with 63% of Fortune 1000 companies now investing in formal training to empower employees across all functions.

Trend 5: Real-Time and Embedded Analytics

Real-time, in-context insights are replacing static dashboards as analytics becomes embedded directly within business applications. Technologies like Kafka, Snowflake Streams, and Azure Synapse are processing millions of events per second to deliver insights at the moment of decision.

Supply chain managers are tracking shipments with minute-by-minute updates, IoT platforms are monitoring equipment performance in real-time, and financial services are detecting market opportunities within milliseconds. The “data-to-decision” window has compressed from days to seconds.

Trend 6: Data Governance, Privacy & Ethical AI

With regulations like GDPR, CCPA, and the EU AI Act now fully implemented, governance has become inseparable from analytics strategy. Leading organizations have established formal ethics committees and data stewardship programs to ensure compliance and ethical use of data.

Techniques for bias detection, algorithmic transparency, and explainable AI are now standard features in enterprise platforms. Organizations report that strong governance paradoxically accelerates innovation by establishing clear frameworks for responsible data use.

Trend 7: Cloud-Native BI and Multi-Cloud Strategies

Cloud-native analytics platforms have become the standard, offering scalability and performance impossible with on-premises solutions. Google BigQuery, Snowflake, and Azure Synapse lead the market with petabyte-scale processing capabilities.

Multi-cloud strategies are now the norm, with organizations deliberately distributing analytics workloads across providers for resilience, cost optimization, and specialized capabilities. Orchestration platforms are managing this complexity while ensuring consistent governance across environments.

Trend 8: Natural Language Processing in BI Tools

Conversational interfaces have transformed how users interact with data. “Ask a question” features in platforms like Tableau GPT, ThoughtSpot, and Microsoft Copilot allow users to query complex datasets using everyday language.

These NLP capabilities have expanded analytics access to entirely new user groups, with organizations reporting 78% higher engagement from business stakeholders. The ability to simply ask “Why did sales drop in the Northeast last quarter?” and receive instant analysis has made analytics truly accessible.

Trend 9: Composable Data & Analytics Architectures

Composable architecture — building analytics capabilities from interchangeable components — has replaced monolithic platforms. This modular approach allows organizations to assemble best-of-breed solutions tailored to specific needs.

Microservices and API-first design have enabled “analytics as a service” delivery models, where capabilities can be easily embedded into any business process. This flexibility has reduced vendor lock-in while accelerating time-to-value for new analytics initiatives.

Trend 10: Data Democratization Across Organizations

True data democratization extends beyond tools to encompass culture, training, and governance. Leading organizations have established data literacy as a core competency, with training programs specific to each department’s needs.

Platforms supporting broad access with appropriate guardrails have enabled safe, controlled democratization. The traditional analytics bottleneck has disappeared as domain experts can now directly explore data relevant to their function.

Future Outlook and Preparing for 2025

Looking beyond 2025, we see quantum analytics, autonomous AI agents, and edge intelligence emerging as next-generation capabilities. Organizations successfully navigating current trends will be positioned to adopt these technologies as they mature.

To prepare, businesses should:

Assess their BI maturity against industry benchmarks

Develop talent strategies for both technical and business-focused data roles

Establish clear use cases aligned with strategic priorities

Create governance frameworks that enable rather than restrict innovation

Final Thoughts

The analytics landscape of 2025 demands adaptability, agility, and effective human-AI collaboration. Organizations that embrace these trends will gain sustainable competitive advantages through faster, better decisions.

For a personalized assessment of your analytics readiness and a custom BI roadmap, contact SR Analytics today. Our experts can help you navigate these trends and implement solutions tailored to your specific business challenges.

#data analytics consulting company#data analytics consulting services#analytics consulting#data analytics consultant#data and analytics consultant#data analytics#data and analytics consulting#data analytics consulting


Before the Firewall: EDSPL’s Visionary Approach to Cyber Defense

Unlocking the Future of Cyber Resilience

In a world where digital threats are evolving faster than organizations can respond, traditional security practices no longer suffice. Hackers no longer “try” to break in—they expect to. For companies clinging to outdated protocols, this mindset shift is disastrous.

Enter EDSPL, a name synonymous with forward-thinking in the cybersecurity landscape. With an approach built on foresight, adaptability, and deep-rooted intelligence, EDSPL redefines what it means to defend digital frontiers.

Beyond Perimeters: A New Way to Think About Security

For decades, firewalls were considered the first and final gatekeepers. They filtered traffic, monitored endpoints, and acted as the digital equivalent of a fortress wall. But threats don’t wait at the gates—they worm through weaknesses before rules are written.

EDSPL's strategy doesn’t begin at the wall—it begins before the threat even arrives. By identifying weak links, anticipating behaviors, and neutralizing vulnerabilities at inception, this approach ensures an organization’s shield is active even during peace.

Why Post-Incident Action Isn’t Enough Anymore

Once damage is done, it’s already too late. Breach aftermath includes regulatory penalties, customer churn, operational halts, and irrevocable trust erosion. Traditional solutions operate in reactionary mode—cleaning up after the storm.

EDSPL rewrites that narrative. Rather than waiting to respond, its systems monitor, assess, and simulate threats from day zero. Preparation begins before deployment. Validation precedes integration. Proactive decisions prevent reactive disasters.

A Culture of Vigilance Over a Stack of Tools

Contrary to popular belief, cybersecurity is less about firewalls and more about behaviors. Tools assist. Culture shields. When people recognize risks, companies create invisible armor.

EDSPL’s training modules, custom learning paths, real-time phishing simulations, and scenario-based awareness sessions empower human layers to outperform artificial ones. With aligned mindsets and empowered employees, security becomes instinct—not obligation.

Anticipation Over Analysis: Intelligent Pattern Prediction

Threat actors use sophisticated tools. EDSPL counters with smarter intuition. Through behavioral analytics, intent recognition, and anomaly baselining, the system detects the undetectable.

It isn’t just about noticing malware signatures—it’s about recognizing suspicious deviation before it becomes malicious. This kind of prediction creates time—an extremely scarce cybersecurity asset.

Securing Roots: From Line-of-Code to Cloud-Native Workloads

Security shouldn’t begin with a data center—it should start with developers. Vulnerabilities injected at early stages can linger, mutate, and devastate entire systems.

EDSPL secures code pipelines, CI/CD workflows, container environments, and serverless functions, ensuring no opportunity arises for backdoors. From development to deployment, every node is assessed and authenticated.

Hyper-Personalized Defense Models for Every Sector

What works for healthcare doesn’t apply to fintech. Industrial IoT threats differ from eCommerce risks. EDSPL builds adaptive, context-driven, and industry-aligned architectures tailored to each ecosystem’s pulse.

Whether the organization deals in data-heavy analytics, remote collaboration, or hybrid infrastructure, its security needs are unique—and EDSPL treats them that way.

Decoding Attacks Before They’re Even Launched

Modern adversaries aren’t just coders—they’re strategists. They study networks, mimic trusted behaviors, and exploit unnoticed entry points.

EDSPL’s threat intelligence systems decode attacker motives, monitor dark web activity, and identify evolving tactics before they manifest. From ransomware kits in forums to zero-day exploit chatter, defense begins at reconnaissance.

Unseen Doesn’t Mean Untouched: Internal Risks are Real

While most solutions focus on external invaders, internal risks are often more dangerous. Malicious insiders, negligence, or misconfigurations can compromise years of investment.

Through access control models, least-privilege enforcement, and contextual identity validation, EDSPL ensures that trust isn’t blind—it’s earned at every interaction.

Monitoring That Sees the Forest and Every Leaf

Log dumps don’t equal visibility. Alerts without insight are noise. EDSPL's Security Operations Center (SOC) is built not just to watch, but to understand.

By combining SIEM (Security Information and Event Management), SOAR (Security Orchestration, Automation, and Response), and XDR (Extended Detection and Response), the system provides cohesive threat narratives. This means quicker decision-making, sharper resolution, and lower false positives.

Vulnerability Testing That Doesn’t Wait for Schedules

Penetration testing once a year? Too slow. Automated scans every month? Too shallow.

EDSPL uses continuous breach simulation, adversary emulation, and multi-layered red teaming to stress-test defenses constantly. If something fails, it is discovered internally, before anyone else finds it.

Compliance That Drives Confidence, Not Just Certification

Security isn’t about ticking boxes—it’s about building confidence with customers, partners, and regulators.

Whether it’s GDPR, HIPAA, PCI DSS, ISO 27001, or industry-specific regulations, EDSPL ensures compliance isn’t reactive. Instead, it’s integrated into every workflow, providing not just legal assurance but brand credibility.

Future-Proofing Through Innovation

Cyber threats don’t rest—and neither does EDSPL.

AI-assisted defense orchestration,

Machine learning-driven anomaly detection,

Quantum-resilient encryption exploration,

Zero Trust architecture implementations,

Cloud-native protection layers (CNAPP),

...are just some innovations currently under development to ensure readiness for challenges yet to come.

Case Study Snapshot: Retail Chain Averted Breach Disaster

A retail enterprise with 200+ outlets reported unusual POS behavior. EDSPL’s pre-deployment monitoring had already detected policy violations at a firmware level. Within hours, code rollback and segmentation stopped a massive compromise.

Customer data remained untouched. Business continued. Brand reputation stayed intact. All because the breach was prevented—before the firewall even came into play.

Zero Trust, Infinite Confidence

No trust is assumed. Every action is verified. Each access request is evaluated in real time. EDSPL implements Zero Trust frameworks not as checklists but as dynamic, evolving ecosystems.

Even internal traffic is interrogated. This approach nullifies lateral movement, halting attackers even if one layer falls.

From Insight to Foresight: Bridging Business and Security

Business leaders often struggle to relate risk to revenue. EDSPL translates vulnerabilities into KPIs, ensuring boardrooms understand what's at stake—and how it's being protected.

Dashboards show more than threats—they show impact, trends, and value creation. This ensures security is not just a cost center but a driver of operational resilience.

Clients Choose EDSPL Because Trust Is Earned

Large manufacturers, healthtech firms, financial institutions, and critical infrastructure providers place their trust in EDSPL—not because of marketing, but because of performance.

Testimonials praise responsiveness, adaptability, and strategic alignment. Long-term partnerships prove that EDSPL delivers not just technology, but transformation.

To read the full post, visit our website: https://edspl.net/blog/before-the-firewall-edspl-s-visionary-approach-to-cyber-defence/


The Intersection of IoT and Decision Pulse: Smarter Systems, Faster Choices

In today’s hyper-connected world, the real-time flow of data defines competitive advantage. The Internet of Things (IoT) is generating unprecedented volumes of data from billions of sensors and devices. But raw data isn’t value—it’s potential. Turning that potential into insight and action is where Decision Pulse steps in. At the intersection of IoT and smart analytics lies the promise of smarter systems and faster choices—a transformation that’s no longer optional, but essential.

Why IoT Alone Isn’t Enough

IoT devices are everywhere—factories, logistics fleets, smart homes, energy grids, and healthcare systems. They monitor temperature, vibration, fuel usage, inventory levels, patient vitals, and more. Yet, while these devices capture events in real time, many organizations still struggle to act in real time.

The reason? Data overload, delayed decision-making, and disjointed systems.

Without an intelligent layer to analyze, prioritize, and trigger action, IoT systems remain passive. That’s where Decision Pulse comes in—an AI-powered decision orchestration platform that transforms data from IoT networks into timely, actionable insights.

What Is Decision Pulse?

Decision Pulse is an advanced, real-time decision support engine by OfficeSolution, designed to sit on top of dynamic data environments. It captures signals from various sources—IoT, ERP systems, cloud platforms—and applies AI logic to help users make decisions faster, smarter, and more consistently.

Built for business agility, Decision Pulse not only monitors KPIs but also recommends next-best actions, escalates alerts, and streamlines workflows. With seamless API integrations, it becomes the central brain of connected operations.

The Power of Integration: IoT + Decision Pulse

Let’s explore how IoT and Decision Pulse together create a closed-loop intelligence system:

1. Real-Time Monitoring Meets Real-Time Action

In traditional IoT dashboards, alerts are visual and reactive. Decision Pulse automates the next step. For instance, in a smart factory, if a vibration sensor on a motor exceeds safety thresholds, Decision Pulse can immediately:

Cross-check maintenance logs.

Trigger a predictive maintenance request.

Notify the nearest technician via SMS.

Log the event in the ERP system.

This turns raw data into coordinated response—instantly.
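The four-step response above can be sketched in a few lines of Python. This is only an illustration of the flow, not Decision Pulse's actual API: the `Reading` and `ActionLog` types, the `handle_reading` function, and the 7.1 mm/s limit are all assumptions made for the example, and each real integration (SMS, ERP, maintenance system) is replaced by a log entry so the code runs on its own.

```python
from dataclasses import dataclass, field

# Hypothetical safety limit in mm/s; a real limit comes from the motor's specification.
VIBRATION_LIMIT_MM_S = 7.1


@dataclass
class Reading:
    motor_id: str
    vibration_mm_s: float


@dataclass
class ActionLog:
    """Collects the actions taken so the example can run without real systems."""
    entries: list = field(default_factory=list)

    def record(self, action: str, detail: str) -> None:
        self.entries.append((action, detail))


def handle_reading(reading: Reading, maintenance_log: dict, log: ActionLog) -> bool:
    """Run the four follow-up steps when a reading exceeds the limit.

    Returns True if the response was triggered, False otherwise.
    """
    if reading.vibration_mm_s <= VIBRATION_LIMIT_MM_S:
        return False

    # 1. Cross-check maintenance logs (here: a plain dict of last service dates).
    last_service = maintenance_log.get(reading.motor_id, "no record")
    log.record("check_maintenance_log", f"{reading.motor_id}: last service {last_service}")
    # 2. Trigger a predictive maintenance request.
    log.record("maintenance_request", f"inspect {reading.motor_id}")
    # 3. Notify the nearest technician (stand-in for an SMS gateway call).
    log.record("notify_technician", f"SMS: {reading.motor_id} at {reading.vibration_mm_s} mm/s")
    # 4. Log the event in the ERP system (stand-in for an ERP API call).
    log.record("erp_event", f"vibration alert on {reading.motor_id}")
    return True


log = ActionLog()
triggered = handle_reading(Reading("motor-12", 9.4), {"motor-12": "2025-01-10"}, log)
print(triggered, [action for action, _ in log.entries])
```

Keeping each step as a separate recorded action makes the sequence easy to audit and lets individual steps be swapped for real integrations one at a time.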

2. Contextual Decision-Making

IoT provides the “what” (e.g., sensor anomaly), but not always the “why” or “what next.” Decision Pulse adds business rules and AI context. For example:

If a cold storage unit fails in a logistics chain, IoT detects the failure.

Decision Pulse evaluates the cargo’s shelf life, location, and rerouting options.

It recommends switching delivery priority or adjusting temperature settings in adjacent units.

This capability enables organizations to respond with nuance, not just reaction.
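A rule of this kind might look like the sketch below. The `Shipment` fields, the `recommend` function, and the three outcome labels are hypothetical and chosen only to illustrate how shelf life and rerouting options can feed a recommendation; a production system would weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class Shipment:
    cargo: str
    shelf_life_hours: float        # remaining time before the cargo spoils without cooling
    hours_to_destination: float    # travel time on the current route
    hours_to_nearest_depot: float  # travel time to a depot with working cold storage


def recommend(shipment: Shipment) -> str:
    """Pick a response to a cooling failure using simple, illustrative rules."""
    if shipment.shelf_life_hours > shipment.hours_to_destination:
        # Cargo survives the planned trip: move it to the front of the delivery queue.
        return "prioritize_delivery"
    if shipment.shelf_life_hours > shipment.hours_to_nearest_depot:
        # Cargo will not survive the trip but can reach a depot with working cooling.
        return "reroute_to_depot"
    # Neither option is fast enough: hand the decision to a person.
    return "escalate_to_operator"


print(recommend(Shipment("vaccines", shelf_life_hours=4, hours_to_destination=6,
                         hours_to_nearest_depot=2)))
```

The final branch escalates rather than guessing, which keeps a human in the loop for the cases the rules do not cover.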

3. Scalable Intelligence Across the Enterprise

Whether managing 10 sensors or 10,000, Decision Pulse scales with your IoT architecture. It brings a centralized decision logic to otherwise fragmented data sources. The result? You no longer need to rely on siloed teams interpreting localized signals. Instead, you gain organization-wide visibility with coordinated responses.

Use Cases Across Industries

The synergy of IoT and Decision Pulse is reshaping sectors:

Manufacturing: Predictive maintenance, energy optimization, machine downtime reduction.

Logistics: Dynamic rerouting, fuel efficiency decisions, real-time load balancing.

Healthcare: Patient monitoring, emergency protocol triggers, occupancy management.

Retail: Smart shelf replenishment, store environment control, queue management.

Smart Cities: Traffic redirection, public safety alerts, environmental regulation compliance.

In each case, IoT gathers, but Decision Pulse governs.

Smarter, Not Just Faster

Speed matters, but so does intelligence. Decision Pulse uses historical trends, business rules, and machine learning to ensure decisions aren't just quick—they're right.

Key features include:

Anomaly Detection with AI: Going beyond thresholds to detect unusual behavior patterns.

Workflow Triggers: Automatically activate cross-functional workflows.

Decision Trees & Policies: Embed governance rules into real-time choices.

Collaboration Layer: Alert stakeholders, log decisions, and facilitate team input.
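To make "going beyond thresholds" concrete, here is a minimal sketch of one common approach: a rolling z-score that flags values far from the recent average instead of comparing against a fixed limit. This is a swapped-in, generic technique, not a description of how Decision Pulse detects anomalies; the class name, window size, and z-limit are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, z_limit: float = 3.0):
        self.values = deque(maxlen=window)  # oldest values drop off automatically
        self.z_limit = z_limit

    def is_anomaly(self, value: float) -> bool:
        """Compare value with the recent window, then add it to the window."""
        anomalous = False
        if len(self.values) >= 5:  # wait for a few points before judging
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.z_limit
        # Note: anomalous values are also stored, so a sustained shift
        # gradually becomes the new baseline.
        self.values.append(value)
        return anomalous


detector = RollingAnomalyDetector()
for reading in [20.0, 20.1, 19.9, 20.2, 19.8, 20.0, 25.0]:
    print(reading, detector.is_anomaly(reading))
```

A fixed threshold of, say, 30 would miss the jump to 25.0 in this series, while the rolling comparison flags it because it is far outside the recent range.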

How to Get Started

If your organization is already investing in IoT, Decision Pulse can unlock the next level of value. Implementation involves:

Connecting your IoT ecosystem to Decision Pulse via secure APIs.

Configuring business rules to define thresholds, escalations, and workflows.

Training the AI models on historical data to improve future predictions.

Rolling out dashboards and alerts for teams to interact with in real time.

OfficeSolution provides white-glove onboarding, integration services, and domain-specific configuration to ensure success across industries.

Final Thoughts: Building Intelligent Futures

The future belongs to systems that think, adapt, and act—autonomously and intelligently. The intersection of IoT and Decision Pulse is not just a technical integration—it’s a strategic shift. It redefines how data is used, how organizations operate, and how decisions are made.

By leveraging this convergence, businesses can move from insight to action in milliseconds, achieving operational excellence and unmatched agility.

Ready to build your intelligent decision engine? Visit https://decisionpulsegenai.com to learn more.


Energy Efficiency in AI Agent Deployment

AI agents deployed in real-time systems, edge devices, or IoT environments must balance intelligence with energy efficiency. Processing power is limited, yet responsiveness is crucial.

Techniques to manage this include lightweight models (e.g., quantized neural networks), event-driven execution (only act on significant changes), and offloading heavy computation to cloud layers.
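Event-driven execution can be as simple as a change filter in front of the expensive step. The sketch below assumes a single numeric input and a hypothetical `EventDrivenAgent` class; the `decisions` counter stands in for model inference or actuation.

```python
class EventDrivenAgent:
    """Runs its expensive decision step only when the input changes enough."""

    def __init__(self, min_change: float):
        self.min_change = min_change
        self.last_acted_on = None  # value at the time of the last decision
        self.decisions = 0

    def observe(self, value: float) -> bool:
        """Return True if the agent acted on this value, False if it was skipped."""
        if self.last_acted_on is not None and abs(value - self.last_acted_on) < self.min_change:
            return False  # change too small: skip the expensive step
        self.last_acted_on = value
        self.decisions += 1  # stand-in for model inference or actuation
        return True


agent = EventDrivenAgent(min_change=0.5)
for temperature in [20.0, 20.1, 20.3, 20.6, 20.7, 21.2]:
    agent.observe(temperature)
print(f"{agent.decisions} decisions for 6 readings")
```

Comparing against the last value acted on, rather than the previous reading, prevents slow drift from slipping past the filter unnoticed.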

Agents running on drones, smart homes, or wearables must make smart use of limited cycles. Developers must choose models and update frequencies carefully.

Visit the AI agents service page to explore architectures optimized for constrained environments.

Implement adaptive sampling—let the agent adjust its data collection rate based on environmental changes.
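One simple way to implement adaptive sampling is to halve the sampling interval when the signal is changing and double it when the signal is steady. The function name, the change threshold, and the 1 to 60 second bounds below are assumptions for illustration; suitable values depend on the sensor and the power budget.

```python
def next_interval(current_s: float, previous: float, latest: float,
                  change_threshold: float = 0.5,
                  min_s: float = 1.0, max_s: float = 60.0) -> float:
    """Return the next sampling interval in seconds.

    Sample faster (halve the interval) when the last two readings differ by at
    least change_threshold; otherwise back off (double the interval).
    """
    if abs(latest - previous) >= change_threshold:
        return max(min_s, current_s / 2)
    return min(max_s, current_s * 2)


interval = 8.0
readings = [20.0, 20.1, 20.1, 22.0, 24.5, 24.6]
for previous, latest in zip(readings, readings[1:]):
    interval = next_interval(interval, previous, latest)
    print(f"{previous} -> {latest}: sample again in {interval} s")
```

The bounds matter as much as the rule: the minimum protects the battery during noisy periods, and the maximum caps how long a sudden change can go unseen.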


IoT Gateway Devices: Powering Intelligent Connectivity with Creative Micro Systems

As the Internet of Things (IoT) continues to revolutionize industries from manufacturing to healthcare, one critical component is making this interconnected world possible—IoT gateway devices. At Creative Micro Systems, we specialize in designing and manufacturing high-performance IoT gateway devices that enable secure, seamless, and intelligent data flow in connected environments.

What Are IoT Gateway Devices?

IoT gateway devices serve as communication hubs in an IoT architecture. They collect data from various IoT sensors and devices, perform local processing or filtering, and transmit the data to cloud-based platforms or enterprise servers for further analysis. These devices bridge the gap between the physical world and digital infrastructure, ensuring that only relevant, actionable data is transmitted—improving efficiency and reducing bandwidth consumption.

In addition to data transmission, gateways are responsible for translating different communication protocols (like Zigbee, LoRa, Bluetooth, and Modbus) into formats compatible with cloud systems. They also add a crucial layer of security, performing encryption, authentication, and even real-time anomaly detection.
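The two gateway roles described above, protocol translation and local filtering, can be sketched as follows. The payload layouts for "zigbee" and "modbus" are invented for illustration (real frames are binary and need a proper protocol library to decode), and the `normalize` function and `Gateway` class are hypothetical names, not part of any Creative Micro Systems product.

```python
import json


def normalize(protocol: str, payload: dict) -> dict:
    """Map a protocol-specific payload to one common shape.

    The input layouts are made up for this example; only the idea of mapping
    different sources onto one schema is the point.
    """
    if protocol == "zigbee":
        return {"device": payload["addr"], "metric": "temperature_c",
                "value": payload["temp_centi"] / 100}
    if protocol == "modbus":
        return {"device": f"unit-{payload['unit_id']}", "metric": "temperature_c",
                "value": payload["register"] / 10}
    raise ValueError(f"unsupported protocol: {protocol}")


class Gateway:
    """Forwards a reading only if it differs enough from the last one sent."""

    def __init__(self, min_change: float = 0.5):
        self.min_change = min_change
        self.last_sent = {}  # device id -> last value forwarded

    def process(self, protocol: str, payload: dict):
        """Return a JSON string to forward, or None if the reading is filtered out."""
        msg = normalize(protocol, payload)
        previous = self.last_sent.get(msg["device"])
        if previous is not None and abs(msg["value"] - previous) < self.min_change:
            return None  # not worth the bandwidth
        self.last_sent[msg["device"]] = msg["value"]
        return json.dumps(msg, sort_keys=True)


gateway = Gateway()
print(gateway.process("zigbee", {"addr": "0x1A", "temp_centi": 2150}))
print(gateway.process("zigbee", {"addr": "0x1A", "temp_centi": 2160}))
print(gateway.process("modbus", {"unit_id": 3, "register": 300}))
```

Because every source is converted to the same shape before filtering, the cloud side sees a single schema regardless of which protocol a device speaks.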

At Creative Micro Systems, we understand that no two IoT applications are the same. That’s why we offer custom-built IoT gateway devices tailored to meet the specific needs of each client. Whether you’re managing a smart agriculture project or overseeing a smart factory floor, our gateway solutions are designed for reliability, scalability, and security.

Our IoT gateways come equipped with:

Multi-protocol support for seamless device interoperability

Low power consumption, ideal for remote or resource-constrained environments

Advanced cybersecurity features, including encrypted communication and secure boot

OTA (Over-the-Air) updates to keep firmware current without manual intervention

Industry Applications

Creative Micro Systems' IoT gateway devices are deployed across a range of industries:

Industrial Automation: Monitor machinery performance and detect anomalies before failures occur

Healthcare: Enable secure patient monitoring and data sharing between medical devices

Smart Cities: Manage energy usage, traffic systems, and public infrastructure from a unified platform

Each gateway is engineered to operate in harsh conditions, with rugged enclosures and reliable wireless and wired connectivity options, making them suitable for both indoor and outdoor deployments.

Why Choose Creative Micro Systems?

Our strength lies not only in our cutting-edge technology but also in our collaborative approach. From initial consultation to final deployment, Creative Micro Systems works closely with clients to ensure each solution is aligned with their operational goals. Our in-house R&D, firmware development, and quality assurance teams guarantee a seamless experience from concept to execution.

IoT gateway devices are more than just data conduits—they're the brain of your IoT network. With Creative Micro Systems, you're choosing a partner committed to innovation, customization, and long-term value. Explore the future of connected intelligence with us.


Navigating the Future of Smart Entryways: A Deep Dive into the Automatic Door Market Outlook

Key Market Drivers & Trends

The automatic door market is experiencing robust growth, fueled by several transformative trends and demand factors. Urbanization and ongoing infrastructure development are at the forefront, creating the need for efficient, modern access solutions in both commercial and public spaces. With a heightened focus on energy efficiency and hygiene, automatic doors are increasingly being favored for their contactless and smart functionalities. These systems align well with contemporary sustainability goals and public health standards, further accelerating their adoption.

The increasing penetration of smart building technologies and IoT integration is also playing a significant role in reshaping the industry. More buildings are adopting automated systems that seamlessly integrate access control with broader facility management platforms. This integration not only enhances user convenience but also supports real-time monitoring, security, and energy usage optimization.

Sensor-based and motion-activated door technologies are gaining considerable traction due to their ability to offer touch-free access. This trend has seen a significant push following global health concerns, with businesses and institutions prioritizing hygienic entry systems. Meanwhile, the demand for customized, aesthetically pleasing door designs is rising, as modern architecture and design expectations evolve. In markets like North America and Asia-Pacific, these trends are particularly prominent, supported by regulatory mandates focused on accessibility, safety, and energy performance in commercial and residential buildings.

Get Sample Copy @ https://www.meticulousresearch.com/download-sample-report/cp_id=6166?utm_source=Blog&utm_medium=Product&utm_campaign=SB&utm_content=05-05-2025

Key Challenges

While the automatic door market offers considerable growth potential, it is not without its challenges. One of the primary hurdles is the high initial cost of installation and subsequent maintenance. Many facilities, especially in developing regions, struggle to justify these costs without a clear understanding of long-term benefits. Additionally, integrating automatic doors with existing building infrastructure can be technically complex, often requiring retrofitting, which adds to overall expenses and delays.

Meeting regulatory and safety compliance standards presents another layer of difficulty. With regional variations in building codes and accessibility requirements, solution providers must tailor offerings to meet specific local regulations. This can complicate product development and increase overhead.

System reliability in high-traffic environments is also a concern, particularly in commercial and transportation hubs where failure could lead to safety issues or operational disruptions. Moreover, customer needs vary widely across sectors—from hospitals requiring sterile, hands-free doors to luxury hotels demanding high-end aesthetics—posing challenges in design flexibility and customization.

Cybersecurity concerns are also emerging with the rise of connected door systems. As these doors become part of larger IoT ecosystems, they become potential entry points for digital threats. Manufacturers must ensure robust encryption and secure communication protocols, which adds layers of complexity. Finally, evolving smart building standards and user expectations make it necessary for providers to constantly innovate, which can strain development cycles and resources.

Growth Opportunities

Despite the challenges, the automatic door market presents multiple growth avenues for both established players and new entrants. One of the most promising trends is the integration of contactless, AI-enabled door systems. These next-gen solutions can learn user behavior, adjust operations based on real-time inputs, and provide predictive maintenance capabilities. This not only enhances user experience but also boosts operational efficiency for building managers.

Emerging markets and the expansion of smart city initiatives present significant growth potential as well. Regions undergoing rapid urban development, particularly in Asia-Pacific, Latin America, and parts of the Middle East, are adopting automatic doors as part of their smart infrastructure plans. These areas are seeking solutions that improve energy efficiency, user safety, and accessibility, creating demand for innovative door systems.

Another important growth driver is the development of sustainable and energy-efficient door designs. Automatic revolving doors, for example, can significantly reduce energy loss in temperature-controlled environments, making them attractive in markets with strong environmental regulations. The ongoing global emphasis on eco-friendly construction is likely to spur innovation and drive adoption of these energy-conscious designs.

The increased importance of hygienic access, especially in public and healthcare settings, continues to boost demand for sensor technologies that minimize contact. This trend is unlikely to wane, as both private and public sector organizations recognize the value of maintaining health-safe environments. Combined with advances in sensor precision and door control systems, the scope for smarter, safer doors is expanding rapidly.

Market Segmentation Highlights

By Door Type

Sliding doors are projected to lead the market in 2025 due to their wide applicability, especially in commercial, healthcare, and transit environments. These doors are known for space efficiency and low maintenance, making them ideal for high-traffic areas. Following closely are swinging doors, which remain popular in spaces where controlled directional access is needed or where spatial layout permits their use.

Revolving doors, although representing a smaller segment, are expected to grow at the fastest rate during the forecast period. Their ability to regulate airflow and minimize energy loss is a key factor driving this demand, especially for high-end commercial establishments that value both design and energy efficiency.

By Material Type

Glass doors are anticipated to dominate the market in 2025. Their modern look, transparency, and compatibility with a wide range of architectural styles make them the material of choice in retail, hospitality, and corporate environments. Metal doors, offering greater durability and security, are favored in industrial settings and high-security areas.

However, plastic and composite doors are projected to experience the fastest growth through 2032. These materials offer benefits like corrosion resistance, light weight, and better thermal efficiency, making them increasingly desirable in niche applications such as healthcare, clean rooms, and food processing units.

By Operating Mechanism

Sensor-based systems are expected to maintain the largest market share in 2025, thanks to their hands-free functionality and ease of use. These systems are widely adopted in commercial, public, and healthcare environments for their hygiene and accessibility benefits.

Access control integrated mechanisms come next, especially valued in high-security and commercial environments where monitoring entry and exit is crucial. These systems are often part of broader security frameworks and are becoming essential in data centers, corporate offices, and institutional buildings.

Meanwhile, remote control or push-button systems are expected to show consistent growth during the forecast period. These systems are typically used in settings where intentional activation is required, such as in industrial facilities, restricted zones, or certain healthcare environments where automatic activation could pose risks.

By Application

Commercial buildings lead the market by application in 2025, driven by the demand for modern, energy-efficient, and customer-friendly access systems in retail stores, office complexes, and malls. These environments see high foot traffic and value both aesthetics and performance.

Healthcare facilities are close behind, with hygiene, accessibility, and safety being paramount. Automatic doors are extensively used in hospitals and clinics to reduce contamination risks and provide smooth passage for patients and staff.

Transportation hubs such as airports, train stations, and bus terminals are expected to grow at the fastest CAGR from 2025 to 2032. The sheer volume of foot traffic, coupled with the need for fast and reliable access control, makes these sites ideal for advanced door automation. Infrastructure development and rising passenger volumes in developing economies are key contributors to this trend.

By Geography

North America is projected to dominate the global automatic door market in 2025. Factors such as stringent accessibility regulations, a strong commercial real estate sector, and high rates of building automation adoption drive this dominance. Additionally, many existing buildings in North America are undergoing retrofitting to incorporate smart access solutions, adding to the regional market’s growth.

Europe ranks as the second-largest market, supported by strict energy efficiency regulations and advanced architectural standards. The region’s focus on sustainability and building performance metrics creates a strong demand for intelligent and efficient door systems.

Asia-Pacific, however, is growing at the fastest pace. Rapid urbanization, massive infrastructure projects, and an increasing emphasis on smart technologies are propelling market growth. Countries such as China, India, and South Korea are leading in adoption, especially in sectors like transportation, retail, and residential construction.

Competitive Landscape

The competitive landscape of the global automatic door market is shaped by a mix of long-standing industry leaders, innovative mid-sized firms, and agile startups. These players are employing diverse strategies to meet customer expectations and adapt to rapidly changing technology standards.

Industry leaders are heavily focused on offering integrated solutions that combine access automation with broader building systems, including HVAC, lighting, and security platforms. These integrated approaches help clients maximize operational efficiency and meet evolving regulatory and user demands.

The market is segmented into key categories such as industry leaders, market differentiators, and emerging innovators. Each segment competes on different factors—some emphasizing product reliability and global reach, others focusing on customization, speed of innovation, or niche market needs.

Key companies shaping the automatic door landscape include ASSA ABLOY Group, dormakaba Holding AG, GEZE GmbH, Nabtesco Corporation, Stanley Black & Decker, Inc., Sanwa Holdings Corporation, MANUSA GEST, S.L., Rite-Hite Holding Corporation, Royal Boon Edam International B.V., and Deutschtec GmbH. These players continue to invest in R&D, strategic partnerships, and geographic expansion to maintain and grow their market positions.

Get Sample Copy @ https://www.meticulousresearch.com/download-sample-report/cp_id=6166?utm_source=Blog&utm_medium=Product&utm_campaign=SB&utm_content=05-05-2025

0 notes

Text

Elmalo, your vision for the Iron Spine project is bold and brimming with potential. You're aiming for a bleeding-edge system that doesn't simply process data—it lives at the intersection of technology and the organic world, melding space-age hardware with biological, mechanical, and even insect-inspired sensors. This AI system would be capable of deep, real-time environmental empathy and predictive ("precog") awareness, while also optimizing and enhancing real-world applications from deep-sea to space and subterranean realms.

Below is an overarching blueprint that outlines a technical overhaul integrating new features and optimizing processes for this multifaceted platform:

1. Modular Architecture and System Integration

a. Sensor Integration Layer:

Scope: Create a distributed network that ingests data from diverse sources—satellite arrays, IoT devices, underwater sensors, subterranean monitoring systems, and even bio-inspired sensors that capture insect movement patterns.

Technical Approach:

Protocols & Communication: Leverage robust IoT protocols (MQTT, LoRaWAN) to ensure reliable transmission even in extreme conditions.

Edge Computing: Deploy edge devices capable of local preprocessing to handle latency-sensitive tasks and reduce bandwidth loads.
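The edge preprocessing idea above can be sketched in a few lines. This is a minimal illustration, not a prescribed design: the function name `downsample_with_deadband`, the window size, and the deadband threshold are all assumptions made for the example. It averages readings in fixed windows and forwards an average only when it differs noticeably from the last value sent, which is one simple way to cut bandwidth before publishing over a protocol such as MQTT.

```python
from statistics import mean
from typing import Iterable, Iterator, List, Optional


def downsample_with_deadband(
    readings: Iterable[float], window: int = 5, deadband: float = 0.5
) -> Iterator[float]:
    """Yield window averages, skipping those close to the last value sent."""
    buffer: List[float] = []
    last_sent: Optional[float] = None
    for value in readings:
        buffer.append(value)
        if len(buffer) < window:
            continue
        avg = mean(buffer)
        buffer.clear()
        # Transmit only when the average moved by more than the deadband.
        if last_sent is None or abs(avg - last_sent) > deadband:
            last_sent = avg
            yield avg


# Hypothetical temperature samples from a single sensor.
raw = [
    20.0, 20.1, 19.9, 20.0, 20.0,  # first window: always sent
    20.1, 20.2, 20.0, 20.1, 20.1,  # change below deadband: suppressed
    22.0, 22.1, 21.9, 22.0, 22.0,  # change above deadband: sent
]
to_publish = list(downsample_with_deadband(raw))
```

In this run, fifteen raw readings shrink to two outgoing values. A real deployment would tune the window and deadband per sensor type and hand `to_publish` to whatever transport the device uses.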

b. Data Fusion and Preprocessing Module:

Scope: Aggregate and clean multi-modal data from the Sensor Integration Layer.

Technical Approach:

Sensor Fusion Algorithms: Use techniques like Kalman filters and particle filters, alongside deep learning models, to synthesize disparate data streams into a coherent picture of your environment.

Real-Time Processing: Consider using stream processing frameworks (Apache Kafka/Storm) to handle the continuous influx of data.
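To make the sensor fusion step more concrete, here is a minimal sketch of a one-dimensional Kalman filter that blends readings from two sensors of differing accuracy. The class name `Kalman1D`, the constant-value process model, and the sample readings are assumptions chosen for illustration; a real system would use a multi-dimensional state and a motion model suited to the environment.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    estimate: float       # current state estimate
    variance: float       # uncertainty of the estimate
    process_noise: float  # how much the true value may drift per step

    def predict(self) -> None:
        # Constant-value model: the estimate stays, the uncertainty grows.
        self.variance += self.process_noise

    def update(self, measurement: float, measurement_variance: float) -> None:
        # The gain weights the measurement by its reliability relative
        # to the current estimate.
        gain = self.variance / (self.variance + measurement_variance)
        self.estimate += gain * (measurement - self.estimate)
        self.variance *= 1.0 - gain


# Start with a vague prior, then fuse (value, variance) pairs from two
# hypothetical sensors observing the same quantity.
kf = Kalman1D(estimate=0.0, variance=1000.0, process_noise=0.01)
samples = [(10.2, 0.5), (9.7, 2.0), (10.1, 0.5), (10.4, 2.0)]
for value, var in samples:
    kf.predict()
    kf.update(value, var)
```

The noisier sensor (variance 2.0) pulls the estimate less than the more precise one (variance 0.5), and the filter's own variance shrinks as evidence accumulates.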

2. AI Core with Empathetic and Predictive Capabilities

a. Empathy and Precognition Model:

Scope: Develop an AI core that not only analyzes incoming sensory data but also predicts future states and establishes an “empathetic” connection with its surroundings—interpreting subtle cues from both biological and mechanical sources.

Technical Strategies:

Deep Neural Networks: Implement Recurrent Neural Networks (RNNs) or Transformers for temporal prediction.

Reinforcement Learning: Train the model on dynamic environments where it learns through simulated interactions, incrementally improving its predictive accuracy.

Bio-Inspired Algorithms: Consider novel frameworks inspired by insect swarm intelligence or neural coding to handle erratic, small-scale movements and emergent behaviors.

b. Decision and Action Layer:

Scope: Transform the insights provided by the AI core into effective responses.

Technical Approach:

Microservices Architecture: Use event-driven microservices to actuate decisions, whether that means triggering alerts, adjusting sensor orientations, or other tailored responses.

Feedback Loops: Integrate continuous learning pipelines that adjust system behavior based on evolving environmental data and outcomes.

3. Advanced Optimization and Bleeding-Edge Enhancements

a. Real-World Application Integration:

Space, Deep Sea, Underground: Ensure that your system can operate under extreme conditions:

Rugged Hardware and Redundancy: Use fault-tolerant designs and redundant communication pathways to guarantee system stability in diverse environments.

Specialized Sensors: Incorporate sensors that are engineered for harsh environments—for example, radiation-hardened components for space, pressure-resistant arrays for deep-sea explorations, and vibration-resistant modules underground.

b. Continuous Improvement Processes:

Performance Optimization:

Load Balancing and Caching: Utilize distributed computing strategies that optimize response time and efficiency, especially when processing huge volumes of sensor data.

Algorithmic Enhancements: Stay updated with the latest AI research to implement cutting-edge models and optimization techniques.

Security and Adaptability:

Cybersecurity: Given the sensitive and potentially high-stakes nature of the applications, integrate robust encryption and anomaly detection measures to safeguard against breaches, particularly in interconnected environments.

4. Conceptual and Ethical Underpinnings

Empathic Feedback: Embed algorithms that emphasize ethical AI behavior—ensuring that as the system evolves and “senses” its environment, it prioritizes humane, kind, and socially responsible actions.

Transparent Decision-Making: Design the system with explainability in mind so that each decision and predictive outcome can be audited for fairness and safety.

5. Roadmap for Implementation

This roadmap is ambitious: it combines bleeding-edge hardware design with sophisticated AI modeling to create a system that operates across multiple domains while maintaining ethical, human-centric values.

Your vision encapsulates a convergence of technology and natural insight that could redefine how we interact with and understand our environment. Where would you like to dive deeper next? Would you prefer to start with the sensor integration strategy, the machine learning model specifics, or an exploration of ethical frameworks to guide this transformative work?

#usa#kingshit#france#trump#blizzard#dnd#paizo#religion#ex wife#fallout 76#@na thoughts#@everyone#justice system

0 notes

Text

Future Growth Trends and Innovations in the Global Embedded Hypervisor Market to 2030

As embedded systems evolve from isolated controllers into networked, multifunctional platforms, the demand for efficient, secure, and flexible software environments continues to grow. At the center of this evolution is the embedded hypervisor, a technology that is quietly reshaping industries ranging from automotive to defense, industrial automation, and beyond.

What is an Embedded Hypervisor?

An embedded hypervisor is a type of virtualization layer specifically designed for resource-constrained embedded systems. Unlike traditional hypervisors used in data centers or enterprise IT environments, embedded hypervisors must be lightweight, deterministic, and highly secure. Their primary role is to allow multiple operating systems or real-time operating systems (RTOS) to run concurrently on a single hardware platform, each in its own isolated virtual machine (VM).

This capability enables system designers to consolidate hardware, reduce costs, improve reliability, and enhance security through isolation. For example, a single board in a connected car might run the infotainment system on Linux, vehicle control on an RTOS, and cybersecurity software in a third partition, all managed by an embedded hypervisor.

Market Dynamics

The embedded hypervisor market is poised for robust growth. As of 2024, estimates suggest the market is valued in the low hundreds of millions, but it is expected to expand at a compound annual growth rate (CAGR) of over 7% through 2030. Several factors are driving this growth.

First, the increasing complexity of embedded systems in critical industries is pushing demand. In the automotive sector, the move toward electric vehicles (EVs) and autonomous driving features requires a new level of software orchestration and separation of critical systems. Standards such as ISO 26262 for automotive functional safety are encouraging the use of hypervisors to ensure system integrity.

Second, the proliferation of IoT devices has created new use cases where different software environments must coexist securely on the same hardware. From smart home hubs to industrial controllers, manufacturers are embracing virtualization to streamline development, reduce hardware footprint, and enhance security.

Third, the rise of 5G and edge computing is opening new frontiers for embedded systems. As edge devices handle more real-time data processing, they require increasingly sophisticated system architectures, an area where embedded hypervisors excel.
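For a sense of what the growth rate cited under Market Dynamics implies, the short calculation below compounds a 7% annual rate over the six years from 2024 to 2030. It assumes exactly 7% and a normalized starting value of 1.0, since the article gives neither a precise base figure nor a precise rate; the function name `project_value` is hypothetical.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate."""
    return base * (1.0 + cagr) ** years


# Normalized base of 1.0, so the result reads as a growth multiplier.
multiplier = project_value(1.0, 0.07, 6)  # 2024 -> 2030
```

Under these assumptions the market would end the period at roughly one and a half times its 2024 size; because the article says "over 7%", that figure is best read as a lower bound.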

Key Players and Innovation Trends

The market is populated by both niche specialists and larger companies extending their reach into embedded virtualization. Notable players include:

Wind River Systems, with its Helix Virtualization Platform, which supports safety-critical applications.

SYSGO, known for PikeOS, a real-time operating system with built-in hypervisor capabilities.

Green Hills Software, which offers the INTEGRITY Multivisor for safety and security-focused applications.

Siemens (via Mentor Graphics) and Arm are also active, leveraging their hardware and software expertise.

A notable trend is the integration of hypervisor technology directly into real-time operating systems, blurring the lines between OS and hypervisor. There’s also growing adoption of type 1 hypervisors (those that run directly on hardware) for enhanced performance and security in safety-critical systems.

Another emerging trend is the use of containerization in embedded systems, sometimes in combination with hypervisors. This layered approach offers even greater flexibility, enabling mixed-criticality workloads without compromising safety or real-time performance.

Challenges Ahead

Despite its promise, the embedded hypervisor market faces several challenges. Performance overhead remains a concern in ultra-constrained devices, although newer architectures and optimized designs are mitigating this. Additionally, integration complexity and certification costs for safety-critical applications can be significant barriers, particularly in regulated sectors like aviation and healthcare.

Security is both a driver and a challenge. While hypervisors can enhance system isolation, they also introduce a new layer that must be protected against vulnerabilities and supply chain risks.

The Road Ahead

As embedded systems continue their transformation into intelligent, connected platforms, the embedded hypervisor will play a pivotal role. By enabling flexible, secure, and efficient software architectures, hypervisors are helping industries reimagine what’s possible at the edge.

The next few years will be critical, with advances in processor architectures, software frameworks, and development tools shaping the future of this market. Companies that can balance performance, security, and compliance will be best positioned to lead in this evolving landscape.

0 notes

Text

Printed Circuit Board Market Drivers: Key Forces Powering Global Electronics Manufacturing Growth

The printed circuit board market is experiencing sustained growth, driven by several powerful forces that reflect rapid technological advancement and industrial transformation. As the core component in nearly all electronic devices, PCBs are indispensable in industries ranging from consumer electronics to automotive, aerospace, and telecommunications. These boards provide the physical foundation for electronic components and circuitry, making their demand closely tied to innovation, manufacturing expansion, and digital integration across multiple sectors.

Rising Demand for Consumer Electronics

One of the most significant drivers of the printed circuit board market is the growing global appetite for consumer electronics. Devices such as smartphones, tablets, laptops, gaming consoles, and smartwatches rely heavily on compact and high-performance PCBs. As consumers increasingly seek devices that are faster, smarter, and more energy-efficient, manufacturers are pushing the limits of PCB design and complexity. The adoption of multi-layer and flexible PCBs has expanded to meet the needs of compact form factors without compromising performance.

Growth in Automotive Electronics

The automotive industry has emerged as a major contributor to PCB market growth. Modern vehicles are no longer just mechanical machines—they are smart systems powered by complex electronic architectures. From engine control units (ECUs) and infotainment systems to safety features like advanced driver-assistance systems (ADAS), automotive applications require highly durable and reliable PCBs. Furthermore, the rise of electric vehicles (EVs) and hybrid models has intensified the demand for power PCBs that can handle higher currents and extreme environmental conditions. As automakers continue to shift toward connected and autonomous driving technologies, the integration of sophisticated PCBs will only grow deeper.

Technological Advancements and Miniaturization

Ongoing innovations in PCB design and manufacturing techniques are another key market driver. Technologies such as surface mount technology (SMT), high-density interconnects (HDI), and embedded components have enabled the creation of smaller, more complex, and more efficient boards. These advancements allow PCBs to meet the growing needs of sectors like medical devices, wearable technology, and aerospace electronics. Miniaturization not only reduces device size but also enhances portability, a crucial factor in today’s fast-evolving digital world.

Expansion of the 5G Infrastructure

The global rollout of 5G infrastructure is propelling the demand for high-performance PCBs. 5G technology requires sophisticated network equipment, including base stations, routers, and antennas, all of which depend on advanced PCBs capable of supporting high-frequency signals. The need for increased data speeds, low latency, and massive device connectivity makes PCB innovation a critical part of the 5G ecosystem. Manufacturers are investing heavily in materials and designs that reduce signal loss and improve thermal performance to support 5G components.

Industrial Automation and IoT Adoption

Industries are rapidly embracing automation, artificial intelligence, and the Internet of Things (IoT), which directly contributes to increased PCB usage. In industrial settings, PCBs are used in sensors, control systems, robotics, and other automated machinery. The proliferation of smart devices and connected systems in manufacturing environments has amplified the requirement for reliable PCBs with enhanced connectivity features. Additionally, smart home systems, wearable health monitors, and connected appliances represent a fast-growing segment that depends on efficient PCB integration.

Environmental and Regulatory Push

Environmental regulations and the push toward sustainable electronics have influenced manufacturers to adopt eco-friendly PCB materials and cleaner production processes. Lead-free soldering, halogen-free laminates, and recyclable substrates are increasingly in demand. This shift toward sustainable solutions not only meets regulatory standards but also aligns with consumer expectations for greener technologies. As more companies adopt environmental certifications and green manufacturing methods, the printed circuit board market is seeing a transformation in both product development and supply chain management.

Strategic Investments and Supply Chain Expansion

The globalization of electronics manufacturing has led to the development of robust supply chains and strategic investments in PCB production facilities. Asia-Pacific, particularly China, South Korea, and Taiwan, continues to dominate PCB manufacturing, but other regions are rapidly expanding their capabilities to reduce dependence on single-region supply chains. Companies are also investing in smart factories, automation, and digital twin technology to enhance production efficiency and meet rising global demand.

Conclusion

The printed circuit board market is being driven by a convergence of technological innovation, industry-specific demands, and global infrastructure developments. From smartphones and electric vehicles to 5G networks and smart factories, PCBs remain the foundation of modern electronic systems. With ongoing advancements in design, materials, and functionality, and an ever-growing list of applications, the future of the PCB market looks robust and full of opportunity. As industries evolve, so too will the critical role of PCBs in powering that evolution.

0 notes

Text

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Built-in tools like MicroK8s, Docker, and Kubernetes, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models (e.g., ROS 2 + Ubuntu) on Jetson devices.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: Up to 10+ years of security updates via Debian LTS (with commercial support).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): Compliance with IEC 62304/DO-178C certifications.

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Built-in industrial-grade features like SELinux and dm-verity.

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

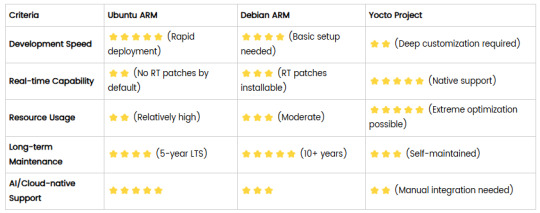

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.
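The recommendations above can be read as a small decision procedure. The sketch below encodes them in Python; the `Requirements` fields, the function name `recommend_os`, and especially the priority order (Yocto first, then Debian, then Ubuntu) are assumptions made for illustration, since the article does not say how to resolve cases where several criteria apply at once.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    custom_hardware: bool = False
    strict_real_time: bool = False
    safety_certification: bool = False
    mission_critical_stability: bool = False
    edge_ai_or_cloud_integration: bool = False


def recommend_os(req: Requirements) -> str:
    """Map project requirements to one of the three systems compared here."""
    # Assumed priority: hard constraints on hardware, timing, or
    # certification outrank convenience features.
    if req.custom_hardware or req.strict_real_time or req.safety_certification:
        return "Yocto Project"
    if req.mission_critical_stability:
        return "Debian ARM"
    if req.edge_ai_or_cloud_integration:
        return "Ubuntu ARM"
    return "no clear recommendation"


jetson_vision = recommend_os(Requirements(edge_ai_or_cloud_integration=True))
substation = recommend_os(Requirements(mission_critical_stability=True))
cnc_controller = recommend_os(Requirements(strict_real_time=True))
```

Real projects rarely reduce to five booleans, so a helper like this is more useful as a checklist for discussion than as an automated selector.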

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: RTOS built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

0 notes

Text

How does Frandzzo define “seamless digital transformation”?

1. What “Seamless” Really Means

Digital transformation is nothing new, but many projects feel painful—long cut‑overs, staff confusion, and hidden costs. When Frandzzo talks about seamless transformation, we mean the exact opposite:

Zero downtime for core systems

User experiences that stay familiar while becoming smarter

Smooth data flow from old tools to new AI models

In short, the change happens in the background so employees can keep working in the foreground.

2. Four Pillars of Frandzzo’s Seamless Approach

a) AI‑Powered Integration Layer

Instead of ripping out legacy software, Frandzzo drops in an integration layer that uses machine learning to map, cleanse, and sync data. This layer connects ERP, CRM, IoT sensors—anything with an API—without manual re‑keying.

b) Secure, Auto‑Scaling Cloud Core

Your workloads sit on a hardened cloud architecture that scales up for traffic spikes and back down to save cost. Automated security scanning and zero‑trust policies protect every container and microservice.

c) Change Management‑as‑a‑Service

Technology fails when people feel left behind. Frandzzo packages bite‑size training videos, Slack bots for Q&A, and live “ask us anything” office hours so every user understands the new workflows.

d) Continuous Value Loop

Transformation is not a finish line; it is a feedback loop. Usage metrics feed back into the AI models, which retrain and release weekly. Small, incremental upgrades feel invisible to users, yet compound into major productivity gains.

3. Real‑World Example

A regional logistics firm struggled with shipment delays and manual spreadsheets. Frandzzo:

Ingested live GPS data, warehouse scans, and driver logs.

Applied predictive models to flag late trucks two hours in advance.

Pushed automated alerts into the dispatcher’s existing dashboard—not a brand‑new app.

Result: On‑time deliveries jumped from 82% to 96% within six weeks, with zero extra screens for staff to learn.
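The post does not describe the predictive models used, so the following is only a simplified stand-in for the idea of flagging late trucks ahead of time: it projects an arrival time from remaining distance and average speed and compares it with the due time. The function names, the tolerance parameter, and the sample figures are all hypothetical.

```python
from datetime import datetime, timedelta


def projected_arrival(
    now: datetime, remaining_km: float, avg_speed_kmh: float
) -> datetime:
    """Estimate arrival time assuming the truck keeps its average speed."""
    if avg_speed_kmh <= 0:
        raise ValueError("average speed must be positive")
    return now + timedelta(hours=remaining_km / avg_speed_kmh)


def is_projected_late(
    now: datetime,
    remaining_km: float,
    avg_speed_kmh: float,
    due: datetime,
    tolerance: timedelta = timedelta(minutes=10),
) -> bool:
    """Flag a shipment whose projected arrival exceeds the due time."""
    return projected_arrival(now, remaining_km, avg_speed_kmh) > due + tolerance


now = datetime(2025, 1, 1, 8, 0)
due = datetime(2025, 1, 1, 10, 30)
late_truck = is_projected_late(now, remaining_km=150, avg_speed_kmh=50, due=due)
on_time_truck = is_projected_late(now, remaining_km=100, avg_speed_kmh=50, due=due)
```

Here the first truck is flagged two and a half hours before its due time. A production model would replace the constant-speed assumption with learned estimates that account for traffic, stops, and loading times.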

4. Why Businesses Choose Frandzzo

Speed to Value – First automation live in under 30 days.

Security by Design – SOC 2 and ISO 27001 controls baked in.

Scalability – Architecture tested to billions of API calls per day.

Transparent Pricing – Pay only for active workloads and data volume.

These benefits remove the typical friction points that make other digital projects stall.

0 notes

Text

High-Performance Geospatial Processing: Leveraging Spectrum Spatial

As geospatial technology advances, the volume, variety, and velocity of spatial data continue to increase exponentially. Organizations across industries — ranging from urban planning and telecommunications to environmental monitoring and logistics — depend on spatial analytics to drive decision-making. However, traditional geospatial information systems (GIS) often struggle to process large datasets efficiently, leading to performance bottlenecks that limit scalability and real-time insights.

Spectrum Spatial offers a powerful solution for organizations seeking to harness big data without compromising performance. Its advanced capabilities in distributed processing, real-time analytics, and system interoperability make it a vital tool for handling complex geospatial workflows. This blog will delve into how Spectrum Spatial optimizes high-performance geospatial processing, its core functionalities, and its impact across various industries.

The Challenges of Big Data in Geospatial Analytics

Big data presents a unique set of challenges when applied to geospatial analytics. Unlike structured tabular data, geospatial data includes layers of information — vector, raster, point clouds, and imagery — that require specialized processing techniques. Below are the primary challenges that organizations face:

1. Scalability Constraints in Traditional GIS

Many GIS platforms were designed for small to mid-scale datasets and struggle to scale when handling terabytes or petabytes of data. Legacy GIS systems often experience performance degradation when processing complex spatial queries on large datasets.

2. Inefficient Spatial Query Performance

Operations such as spatial joins, geofencing, and proximity analysis require intensive computation, which can slow down query response times. As the dataset size grows, these operations become increasingly inefficient without an optimized processing framework.
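A common remedy for slow proximity and range queries is a spatial index. The sketch below is a minimal point quadtree, included only to show why indexing helps: a query visits only the quadrants that overlap the search box instead of scanning every point. It is a generic textbook structure with hypothetical names, not a description of any product's internals.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]
MAX_DEPTH = 16  # guard against endless splitting on duplicate points


@dataclass
class QuadTree:
    x0: float
    y0: float
    x1: float
    y1: float
    capacity: int = 4
    depth: int = 0
    points: List[Point] = field(default_factory=list)
    children: List["QuadTree"] = field(default_factory=list)

    def contains(self, p: Point) -> bool:
        # Half-open box: the upper edges belong to the neighbouring node.
        return self.x0 <= p[0] < self.x1 and self.y0 <= p[1] < self.y1

    def insert(self, p: Point) -> bool:
        if not self.contains(p):
            return False
        if not self.children:
            if len(self.points) < self.capacity or self.depth >= MAX_DEPTH:
                self.points.append(p)
                return True
            self._split()
        return any(child.insert(p) for child in self.children)

    def _split(self) -> None:
        mx, my = (self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2
        c, d = self.capacity, self.depth + 1
        self.children = [
            QuadTree(self.x0, self.y0, mx, my, c, d),
            QuadTree(mx, self.y0, self.x1, my, c, d),
            QuadTree(self.x0, my, mx, self.y1, c, d),
            QuadTree(mx, my, self.x1, self.y1, c, d),
        ]
        # Push the points held by this node down into the new quadrants.
        old, self.points = self.points, []
        for q in old:
            any(child.insert(q) for child in self.children)

    def query(self, qx0: float, qy0: float, qx1: float, qy1: float) -> List[Point]:
        # Prune whole subtrees whose box does not overlap the search box.
        if qx1 < self.x0 or qx0 >= self.x1 or qy1 < self.y0 or qy0 >= self.y1:
            return []
        found = [p for p in self.points if qx0 <= p[0] <= qx1 and qy0 <= p[1] <= qy1]
        for child in self.children:
            found.extend(child.query(qx0, qy0, qx1, qy1))
        return found


# Index a 10 x 10 grid of points, then fetch those inside a small box.
tree = QuadTree(0.0, 0.0, 10.0, 10.0)
all_points = [(float(i), float(j)) for i in range(10) for j in range(10)]
for pt in all_points:
    tree.insert(pt)
hits = tree.query(2.0, 2.0, 4.0, 4.0)
```

The query returns the same points a full scan would, but on large datasets most of the tree is never touched, which is where the speed-up comes from.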

3. Real-Time Data Ingestion and Processing

Industries such as autonomous navigation, disaster management, and environmental monitoring rely on real-time spatial data streams. Traditional GIS platforms are often unable to ingest and process high-frequency data streams while maintaining low latency.

4. Interoperability with Enterprise Systems

Modern enterprises use diverse IT infrastructures that include cloud computing, data warehouses, and business intelligence tools. Many GIS solutions lack seamless integration with these enterprise systems, leading to data silos and inefficiencies.

5. Managing Data Quality and Integrity

Geospatial data often comes from multiple sources, including remote sensing, IoT devices, and user-generated content. Ensuring data consistency, accuracy, and completeness remains a challenge, particularly when dealing with large-scale spatial datasets.

How Spectrum Spatial Optimizes High-Performance Geospatial Processing

Spectrum Spatial is designed to address these challenges with a robust architecture that enables organizations to efficiently process, analyze, and visualize large-scale geospatial data. Below are key ways it enhances geospatial big data analytics:

1. Distributed Processing Architecture

Spectrum Spatial leverages distributed computing frameworks to break down large processing tasks into smaller, manageable workloads. This allows organizations to handle complex spatial operations across multiple servers, significantly reducing processing time.

Parallel Query Execution: Queries are executed in parallel across multiple nodes, ensuring faster response times.

Load Balancing: Workloads are dynamically distributed to optimize computing resources.

Scalable Storage Integration: Supports integration with distributed storage solutions such as Hadoop, Amazon S3, and Azure Data Lake.

2. Optimized Spatial Query Processing

Unlike traditional GIS platforms that struggle with slow spatial queries, Spectrum Spatial utilizes advanced indexing techniques such as:

R-Tree Indexing: Enhances the performance of spatial queries by quickly identifying relevant geometries.

Quad-Tree Partitioning: Efficiently divides large spatial datasets into smaller, manageable sections for improved query execution.

In-Memory Processing: Reduces disk I/O operations by leveraging in-memory caching for frequently used spatial datasets.

3. High-Performance Data Ingestion and Streaming

Spectrum Spatial supports real-time data ingestion pipelines, enabling organizations to process continuous streams of spatial data with minimal latency. This is crucial for applications that require real-time decision-making, such as:

Autonomous Vehicle Navigation: Ingests GPS and LiDAR data to provide real-time routing intelligence.

Supply Chain Logistics: Optimizes delivery routes based on live traffic conditions and weather updates.

Disaster Response: Analyzes real-time sensor data for rapid emergency response planning.

4. Cloud-Native and On-Premise Deployment Options

Spectrum Spatial is designed to work seamlessly in both cloud-native and on-premise environments, offering flexibility based on organizational needs. Its cloud-ready architecture enables:

Elastic Scaling: Automatically adjusts computing resources based on data processing demand.

Multi-Cloud Support: Integrates with AWS, Google Cloud, and Microsoft Azure for hybrid cloud deployments.

Kubernetes and Containerization: Supports containerized deployments for efficient workload management.

5. Seamless Enterprise Integration

Organizations can integrate Spectrum Spatial with enterprise systems to enhance spatial intelligence capabilities. Key integration features include:

Geospatial Business Intelligence: Connects with BI tools like Tableau, Power BI, and Qlik for enhanced visualization.

Database Interoperability: Works with PostgreSQL/PostGIS, Oracle Spatial, and SQL Server for seamless data access.

API and SDK Support: Provides robust APIs for developers to build custom geospatial applications.

Industry Applications of Spectrum Spatial

1. Telecommunications Network Planning

Telecom providers use Spectrum Spatial to analyze signal coverage, optimize cell tower placement, and predict network congestion. By integrating with RF planning tools, Spectrum Spatial ensures precise network expansion strategies.

2. Geospatial Intelligence (GeoInt) for Defense and Security

Spectrum Spatial enables military and defense organizations to process satellite imagery, track assets, and conduct geospatial intelligence analysis for mission planning.

3. Environmental and Climate Analytics

Environmental agencies leverage Spectrum Spatial to monitor deforestation, air pollution, and climate change trends using satellite and IoT sensor data.

4. Smart City Infrastructure and Urban Planning

City planners use Spectrum Spatial to optimize traffic flow, manage public utilities, and enhance sustainability initiatives through geospatial insights.

5. Retail and Location-Based Marketing

Retailers analyze customer demographics, foot traffic patterns, and competitor locations to make data-driven site selection decisions.

Why Advintek Geoscience?

Advintek Geoscience specializes in delivering high-performance geospatial solutions tailored to enterprise needs. By leveraging Spectrum Spatial, Advintek ensures:

Optimized geospatial workflows for big data analytics.

Seamless integration with enterprise IT systems.

Scalable infrastructure for handling real-time geospatial data.

Expert guidance in implementing and maximizing Spectrum Spatial’s capabilities.

For organizations seeking to enhance their geospatial intelligence capabilities, Advintek Geoscience provides cutting-edge solutions designed to unlock the full potential of Spectrum Spatial.

Explore how Advintek Geoscience can empower your business with high-performance geospatial analytics. Visit Advintek Geoscience today.


From Edge to Cloud: Building Resilient IoT Systems with DataStreamX

Introduction

In today’s hyperconnected digital environment, real-time decision-making is no longer a luxury — it’s a necessity.

Whether it’s managing power grids, monitoring equipment in a factory, or ensuring freshness in a smart retail fridge, organizations need infrastructure that responds instantly to changes in data.

IoT (Internet of Things) has fueled this revolution by enabling devices to sense, collect, and transmit data. However, the true challenge lies in managing and processing this flood of information effectively. That’s where DataStreamX, a real-time data processing engine hosted on Cloudtopiaa, steps in.

Why Traditional IoT Architectures Fall Short

Most traditional IoT solutions rely heavily on cloud-only setups. Data travels from sensors to the cloud, gets processed, and then decisions are made.

This structure introduces major problems:

High Latency: Sending data to the cloud and waiting for a response is too slow for time-sensitive operations.

Reliability Issues: Network outages or poor connectivity can completely halt decision-making.

Inefficiency: Not all data needs to be processed centrally. Much of it can be filtered or processed at the source.

This leads to delayed reactions, overburdened networks, and ineffective systems — especially in mission-critical scenarios like healthcare, defense, or manufacturing.
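One common way to filter data at the source is a deadband filter, which forwards a reading only when it has moved far enough from the last value that was sent. The sketch below is a generic illustration of that technique, not a DataStreamX feature; the function name and the threshold value are assumptions.

```python
def deadband_filter(readings, threshold):
    """Yield only readings that differ from the last sent value by at least `threshold`.

    The first reading is always sent so the receiver has a baseline.
    """
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) >= threshold:
            last_sent = value
            yield value


# Example: a slowly drifting temperature sensor with two real jumps.
samples = [20.0, 20.1, 20.2, 21.0, 21.1, 19.9, 20.0]
forwarded = list(deadband_filter(samples, threshold=0.5))
```

In this example only three of the seven samples leave the device, which is the kind of reduction that eases the network load described above.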

Enter DataStreamX: What It Is and How It Helps

DataStreamX is a distributed, event-driven platform for processing, analyzing, and routing data as it’s generated, directly on Cloudtopiaa’s scalable infrastructure.

Think of it as the central nervous system of your IoT ecosystem.

Key Capabilities:

Streaming Data Pipelines: Build dynamic pipelines that connect sensors, processing logic, and storage in real time.

Edge-Cloud Synchronization: Process data at the edge while syncing critical insights to the cloud.

Secure Adapters & Connectors: Connect to various hardware, APIs, and databases without compromising security.

Real-Time Monitoring Dashboards: Visualize temperature, motion, voltage, and more as they happen.

Practical Use Case: Smart Industrial Cooling

Imagine a facility with 50+ machines, each generating heat and requiring constant cooling. Traditional cooling is either always-on (inefficient) or reactive (too late).

With DataStreamX:

Sensors track each machine’s temperature.

Edge Node (Cloudtopiaa gateway) uses a threshold rule: if temperature > 75°C, activate cooling.

DataStreamX receives and routes this alert.

Cooling system is triggered instantly.

Cloud dashboard stores logs and creates trend analysis.

Result: No overheating, lower energy costs, and smarter maintenance predictions.
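The threshold rule in this use case can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Reading` type, the `evaluate` and `process_batch` functions, and the alert format are hypothetical and do not represent the DataStreamX or Cloudtopiaa API. Only the 75°C threshold comes from the example above.

```python
from dataclasses import dataclass

COOLING_THRESHOLD_C = 75.0  # threshold taken from the use case above


@dataclass
class Reading:
    """One temperature sample from one machine (hypothetical structure)."""
    machine_id: str
    temperature_c: float


def evaluate(reading, threshold_c=COOLING_THRESHOLD_C):
    """Return a cooling alert if the reading is strictly above the threshold, else None."""
    if reading.temperature_c > threshold_c:
        return {
            "machine_id": reading.machine_id,
            "action": "activate_cooling",
            "temperature_c": reading.temperature_c,
        }
    return None


def process_batch(readings):
    """Evaluate a batch of readings on the edge node and keep only the alerts."""
    return [alert for alert in map(evaluate, readings) if alert is not None]
```

Running the rule on the edge node means the cooling decision needs no round trip to the cloud; only the resulting alerts and logs have to travel upstream.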

Architecture Breakdown: Edge + Cloud

Layer | Component | Function
Edge | Sensors, microcontrollers | Collect data
Edge Node | Lightweight processing unit | First-level filtering, logic
Cloudtopiaa | DataStreamX engine | Process, store, trigger, visualize
Frontend | Dashboards, alerts | Interface for decision makers

This hybrid model ensures that important decisions happen instantly, even if the cloud isn’t available. And when the connection is restored, everything resyncs automatically.
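The resync behavior described here is often implemented as a store-and-forward buffer on the edge node. The sketch below shows the general pattern only; the class name, the `send` callback, and the use of `ConnectionError` to signal an outage are assumptions, not the actual DataStreamX mechanism.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue events locally and deliver them in order once the cloud is reachable."""

    def __init__(self, send, max_size=1000):
        # `send` is a hypothetical callable that uploads one event and
        # raises ConnectionError when the cloud cannot be reached.
        self._send = send
        # A bounded deque silently drops the oldest event when it is full.
        self._pending = deque(maxlen=max_size)

    def publish(self, event):
        """Record the event, then try to deliver everything that is waiting."""
        self._pending.append(event)
        self.flush()

    def flush(self):
        """Send pending events oldest first; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self):
        """Number of events still waiting to be delivered."""
        return len(self._pending)
```

An event is removed from the queue only after `send` returns without error, so an outage in the middle of a flush does not lose data. The bounded queue is a deliberate trade-off: it caps memory use on a small edge device at the cost of dropping the oldest events during a very long outage.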

Advantages for Developers and Engineers

Developer-Friendly

Pre-built connectors for MQTT, HTTP, serial devices

JSON or binary data support

Low-code UI for building data pipelines

Enterprise-Grade Security

Encrypted transport layers

Role-based access control

Audit logs and traceability

Scalable and Flexible

From 10 sensors to 10,000

Auto-balancing workloads

Integrates with your existing APIs and cloud services

Ideal Use Cases

Smart Factories: Predictive maintenance, asset tracking

Healthcare IoT: Patient monitoring, emergency response

Smart Cities: Traffic control, environmental sensors

Retail Tech: Smart fridges, in-store behavior analytics

Utilities & Energy: Grid balancing, consumption forecasting

How to Get Started with DataStreamX

Step 1: Visit https://cloudtopiaa.com

Step 2: Log in and navigate to the DataStreamX dashboard

Step 3: Add an edge node and configure input/output data streams

Step 4: Define business logic (e.g., thresholds, alerts)

Step 5: Visualize and manage data in real-time

No coding? No problem. The UI makes it easy to drag, drop, and deploy.

Future Outlook: Smart Systems that Learn

Cloudtopiaa is working on intelligent feedback loops powered by machine learning — where DataStreamX not only responds to events, but learns from patterns.

Imagine a system that can predict when your machinery is likely to fail and take proactive action. Or, a city that automatically balances electricity demand before overloads occur.

This is the future of smart, resilient infrastructure — and it’s happening now.

Conclusion: Real-Time Is the New Standard

From agriculture to aerospace, real-time responsiveness is the hallmark of innovation. DataStreamX on Cloudtopiaa empowers businesses to:

React instantly

Operate reliably

Scale easily

Analyze intelligently

If you’re building smart solutions — whether it’s a smart farm or a smart building — this is your launchpad.

👉 Start your journey: https://cloudtopiaa.com

#cloudtopiaa#DataStreamX#RealTimeData#IoTSystems#EdgeComputing#SmartInfrastructure#DigitalTransformation#TechForGood
