#Kubernetes Resource Access

Headlamp: Kubernetes Dashboard Openlens Alternative

If you’re interested in Kubernetes, you’ve probably worked with various tools to manage your Kubernetes clusters. A new player in the field is Kubernetes Headlamp, an open-source project originally developed by Kinvolk (now part of Microsoft). With Headlamp, managing resources and maintaining a view of your Kubernetes cluster is much easier. This powerful tool reduces the learning curve of…

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure advancements for an AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud made improvements throughout the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is now available in preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available in preview.

Hypercompute Cluster, Google’s new, highly scalable clustering system, will be available beginning with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, and scientific breakthroughs like AlphaFold 2, which just led to a Nobel Prize. Google Cloud users can now preview Trillium, Google’s sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also keeps investing in its partnership and capabilities with NVIDIA, combining the best of Google Cloud’s data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on servers with the new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 network interface cards (NICs) and is tailored to provide a secure, high-performance cloud experience for AI workloads. When paired with Google’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra provides:

Double the GPU-to-GPU networking bandwidth, supported by Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter.

Up to 2 times higher LLM inferencing performance, with almost twice the memory capacity and 1.4 times the memory bandwidth.

The capacity to scale to tens of thousands of GPUs in a dense cluster, with performance optimized for heavy workloads in HPC and AI.
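To put the networking figures above in perspective, here is a small back-of-the-envelope sketch. It takes the 3.2 Tbps and "double" figures from the text; the count of 8 GPUs per VM is an assumption that the text does not state, and the function names are purely illustrative.

```python
# Back-of-the-envelope check of the A3 Ultra networking figures quoted above.
A3_ULTRA_GPU_TO_GPU_TBPS = 3.2  # from the text: non-blocking GPU-to-GPU traffic
GPUS_PER_VM = 8                 # ASSUMPTION: not stated in the text


def per_gpu_gbps(total_tbps: float, gpus: int) -> float:
    """Convert an aggregate Tbps figure into Gbps available per GPU."""
    return total_tbps * 1000 / gpus


def implied_previous_tbps(total_tbps: float, speedup: float = 2.0) -> float:
    """Bandwidth implied for the previous generation if the new one is `speedup` times faster."""
    return total_tbps / speedup


if __name__ == "__main__":
    print(f"Per GPU: {per_gpu_gbps(A3_ULTRA_GPU_TO_GPU_TBPS, GPUS_PER_VM):.0f} Gb/s")
    print(f"Implied A3 Mega: {implied_previous_tbps(A3_ULTRA_GPU_TO_GPU_TBPS):.1f} Tbps")
```

Under that assumption, each GPU gets roughly one 400 Gb/s link's worth of bandwidth, and "double" implies about 1.6 Tbps for A3 Mega.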

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to deploy and operate a large number of accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with just one API call. This includes containerized software with orchestration (e.g., GKE, Slurm), framework and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. As part of the AI Hypercomputer architecture, each pre-configured template is available and has been verified for effectiveness and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be available next month, starting with A3 Ultra VMs.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances made possible by NVIDIA GB200 NVL72 GPUs and will share more information about this exciting development soon. In the meantime, here is a preview of the racks Google is building to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs excel at specialized tasks, CPUs are a cost-effective solution for a variety of general-purpose workloads and are frequently used alongside AI workloads to build complex applications. Google announced Google Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now benefit from C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances offered by other leading cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
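The "video conversation for every person on Earth" comparison can be sanity-checked with simple arithmetic. The sketch below uses the 13.1 Pb/s figure from the text; the world population of about 8 billion is an assumption added here for illustration.

```python
# Rough per-person share of Jupiter's quoted bisection bandwidth.
JUPITER_BISECTION_PBPS = 13.1     # from the text, in petabits per second
WORLD_POPULATION = 8_000_000_000  # ASSUMPTION: roughly 8 billion people


def per_person_mbps(bisection_pbps: float, people: int) -> float:
    """Split a Pb/s figure evenly across `people` and return Mb/s per person."""
    bits_per_second = bisection_pbps * 1e15  # 1 Pb = 10^15 bits
    return bits_per_second / people / 1e6    # 1 Mb = 10^6 bits


if __name__ == "__main__":
    share = per_person_mbps(JUPITER_BISECTION_PBPS, WORLD_POPULATION)
    print(f"About {share:.2f} Mb/s per person")
```

Under that assumption the share works out to roughly 1.6 Mb/s per person, which is in the range a single video call typically needs.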

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI/ML-focused block storage service, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

You can attach up to 2,500 instances to the same volume and get up to 1.2 TB/s of aggregate throughput per volume. This is more than 100 times what major block storage competitors offer.

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).
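As a rough illustration of the Hyperdisk ML figures above, the sketch below divides the quoted aggregate throughput evenly across the quoted maximum number of attached instances. The even split and the decimal units (1 TB = 1,000,000 MB) are simplifying assumptions; real per-instance throughput depends on the workload and instance type.

```python
# Even-split estimate of per-instance throughput for a shared volume.
AGGREGATE_TBPS = 1.2   # from the text: TB/s of aggregate throughput per volume
MAX_INSTANCES = 2500   # from the text: instances attachable to one volume


def per_instance_mb_per_s(aggregate_tb_per_s: float, instances: int) -> float:
    """Return MB/s per instance, ASSUMING an even split and decimal units."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    return aggregate_tb_per_s * 1_000_000 / instances


if __name__ == "__main__":
    share = per_instance_mb_per_s(AGGREGATE_TBPS, MAX_INSTANCES)
    print(f"About {share:.0f} MB/s per instance at full fan-out")
```

With every one of the 2,500 instances reading at once, each would still see on the order of 480 MB/s under these assumptions.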

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.
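To make the event-driven idea behind services like Azure Functions more concrete, here is a minimal sketch in plain Python. It does not use the Azure Functions SDK; `EventRouter`, the `blob_uploaded` event name, and the handler are all hypothetical names invented for illustration. It only shows the pattern of registering code that runs when an event arrives.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], str]


class EventRouter:
    """Hypothetical in-process stand-in for an event-driven platform."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def on(self, event_type: str) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler for one event type."""
        def register(handler: Handler) -> Handler:
            self._handlers[event_type].append(handler)
            return handler
        return register

    def emit(self, event_type: str, payload: dict) -> List[str]:
        """Run every handler registered for the event and collect the results."""
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


router = EventRouter()


@router.on("blob_uploaded")  # hypothetical event name
def make_thumbnail(payload: dict) -> str:
    # The handler only runs when a matching event is emitted.
    return f"thumbnail queued for {payload['name']}"


if __name__ == "__main__":
    print(router.emit("blob_uploaded", {"name": "cat.png"}))
```

In a real serverless platform the router, scaling, and hosting are handled by the provider, so only the handler body is the developer's responsibility.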

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

AEM aaCS aka Adobe Experience Manager as a Cloud Service

As the industry standard for digital experience management, Adobe Experience Manager is now being improved upon. Finally, Adobe is transferring Adobe Experience Manager (AEM), its final on-premises product, to the cloud.

AEM aaCS is a modern, cloud-native application that accelerates the delivery of omnichannel applications.

The AEM Cloud Service introduces the next generation of the AEM product line, moving away from versioned releases like AEM 6.4, AEM 6.5, etc. to a continuous release with less versioning called "AEM as a Cloud Service."

AEM Cloud Service adopts all the benefits of modern cloud-based services:

Availability

The ability for all services to be always on, ensuring that our clients do not suffer any downtime, is one of the major advantages of switching to AEM Cloud Service. In the past, there was a requirement to regularly halt the service for various maintenance operations, including updates, patches, upgrades, and certain standard maintenance activities, notably on the author side.

Scalability

The AEM Cloud Service's instances are all created with the same default size. AEM Cloud Service is built on an orchestration engine (Kubernetes) that dynamically scales up and down, both horizontally and vertically, in accordance with the demands of our clients without requiring their involvement. Scaling can be done manually or automatically.

Updated Code Base

This might be the most beneficial and much anticipated function that AEM Cloud Service offers to consumers. With the AEM Cloud Service, Adobe will handle upgrading all instances to the most recent code base. No downtime will be experienced throughout the update process.

Self Evolving

AEM Cloud Service continually improves and learns from the projects our clients deploy. We regularly examine and validate content, code, and settings against best practices to help our clients understand how to accomplish their business objectives. AEM Cloud Service components include health checks that enable them to self-heal.

AEM as a Cloud Service: Changes and Challenges

When you begin your work, you will notice a lot of changes in the AEM cloud jar. Here are a few significant changes that might affect how we currently work with AEM:

1)The major performance bottleneck that most large enterprise DAM customers face is bulk uploading of assets on the author instance, after which the DAM Update Asset workflow degrades the performance of the entire author instance. To resolve this, AEM Cloud Service introduces Asset Microservices for serverless asset processing powered by Adobe I/O. Now when an author uploads an asset, it goes directly to cloud binary storage; Adobe I/O is then triggered and handles further processing using the renditions and other properties that have been configured.

2)Due to Adobe's complete management of AEM cloud service, developers and operations personnel may not be able to directly access logs. As of right now, the only way I know of to request access, error, dispatcher, and other logs will be via a cloud manager download link.

3)The only way for AEM Leads to deploy is through cloud manager, which is subject to stringent CI/CD pipeline quality checks. At this point, you should concentrate on test-driven development with greater than 50% test coverage. Go to https://docs.adobe.com/content/help/en/experience-manager-cloud-manager/using/how-to-use/understand-your-test-results.html for additional information.

4)AEM as a Cloud Service does not currently support AEM Screens or AEM Adaptive Forms.

5)Continuous updates will be pushed to the cloud-based AEM baseline image to support version-less solutions. Consequently, customizations to the Asset UI console or to /libs/granite internal nodes, which up until AEM 6.5 could be used as a workaround to meet customer requirements, are no longer possible, because they will be replaced with each baseline image update.

6)The code quality rules that are available in Cloud Manager cannot be used by a local Sonar before pushing to Git, which I believe will result in increased development time and more Git commits. Once the development code is pushed to the Git repository and the build is started, Cloud Manager will run Sonar checks and tell you what's wrong. As a precaution, I recommend making sure your code has no violations of the default rules in your local environment, and updating your local rules whenever you encounter new ones while pushing code to cloud Git.
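Since point 3 above recommends keeping test coverage above 50%, a simple local sanity check can catch shortfalls before code is pushed. The sketch below is only an illustration of such a gate. It is not how Cloud Manager computes or enforces its checks, and the function names and the way line counts are obtained are assumptions.

```python
# Hypothetical local pre-push gate; NOT the Cloud Manager implementation.
DEFAULT_THRESHOLD_PERCENT = 50.0  # from the text: "greater than 50% test coverage"


def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Return line coverage as a percentage."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= covered_lines <= total_lines:
        raise ValueError("covered_lines must be between 0 and total_lines")
    return 100.0 * covered_lines / total_lines


def passes_gate(covered_lines: int, total_lines: int,
                threshold: float = DEFAULT_THRESHOLD_PERCENT) -> bool:
    """True only when coverage is strictly greater than the threshold."""
    return coverage_percent(covered_lines, total_lines) > threshold


if __name__ == "__main__":
    # Line counts would normally come from a local coverage report.
    print(passes_gate(covered_lines=620, total_lines=1000))
```

Running a check like this locally will not replace the pipeline's own rules, but it can reduce the number of failed builds and extra commits.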

AEM Cloud Service Does Not Support These Features

1. AEM Sites Commerce add-on

2. Screens add-on

3. Communities add-on

4. AEM Forms

5. Access to Classic UI

6. Page Editor Developer Mode

7. /apps and /libs are read-only in dev/stage/prod environments; changes need to come in via the CI/CD pipeline that builds the code from the Git repo.

8. OSGi bundles and settings: the dev, stage, and production environments do not support the web console.

If you encounter any difficulties or notice any issues, please let me know. It will be useful for the AEM community.

Where to Start Learning Cloud Computing? – A Beginner’s Roadmap by NareshIT

If you're thinking about starting a career in cloud computing, you're already on the right path. As more companies move their infrastructure to the cloud, the demand for skilled cloud professionals is skyrocketing.

🔍 Understanding Cloud Computing – The Basics

Cloud computing is a model that allows businesses and individuals to access computing resources—like servers, databases, storage, and applications—on demand via the internet. Instead of purchasing hardware, users can rent resources and pay only for what they use.

Key Features of Cloud Computing:

Scalability: Instantly adjust computing power

Cost Efficiency: No upfront infrastructure costs

High Availability: 24/7 access from anywhere

Security: Built-in data protection and backups

🛠️ What Are the Different Cloud Deployment Models?

🚀 Top Cloud Service Providers You Should Know

To begin your journey, it helps to understand the leading platforms you’ll be working with:

Amazon Web Services (AWS) – Offers a wide range of services and global infrastructure

Microsoft Azure – Popular among enterprises and known for integration with Microsoft tools

Google Cloud Platform (GCP) – Great for big data, analytics, and AI-based services

🎓 Cloud Courses at NareshIT – Your Launchpad to a Cloud Career

NareshIT provides industry-focused, hands-on cloud training programs for beginners, professionals, and job seekers. Each course is designed to prepare you for certification exams and real-world job roles.

🔸 AWS Cloud Computing Course

Duration: 6 weeks (Weekday & Weekend options)

Features:

Training on core services (EC2, S3, IAM, VPC, Lambda)

Real-time use cases and project work

Covers AWS Certified Solutions Architect – Associate syllabus

Who It's For: Beginners and professionals aiming to enter the cloud job market

🔹 Microsoft Azure Course

Duration: 6-7 weeks

Features:

Azure fundamentals and services (Virtual Machines, Azure Storage, Networking)

Active Directory and hybrid cloud scenarios

Certification-focused (AZ-104 and AZ-900)

Why Choose Azure? Widely used by large companies and integrates seamlessly with enterprise IT environments

🔸 Google Cloud Platform (GCP) Training

Duration: 5 weeks

Features: Comprehensive training on Compute Engine, Cloud Functions, IAM, BigQuery

🔧 DevOps with Multi Cloud Training in KPHB

If you're looking for end-to-end training that combines DevOps and multiple cloud platforms, our specialized course at KPHB is ideal.

🔗 Explore the full course: DevOps with Multi Cloud Training in KPHB

Course Highlights:

Integration of AWS, Azure, and GCP

Hands-on DevOps tools: Docker, Kubernetes, Jenkins, Terraform

CI/CD pipelines across multi-cloud environments

Real-time industry projects and job-oriented scenarios

Why it matters: Companies increasingly adopt multi-cloud strategies. This course prepares you for complex, real-world infrastructure challenges and high-level DevOps roles.

🆕 Check Out Our Latest Batch Schedules!

We update our course offerings and schedules regularly. To find the most recent training batches, visit:

👉 🗓 View All New Cloud Batches at NareshIT

This page is updated weekly and includes upcoming batches, classroom & online options, and demo class details.

💡 How to Begin Learning Cloud Computing (Even If You're New)

Here’s a practical roadmap for absolute beginners:

Understand core concepts – Learn about IaaS, PaaS, SaaS, and virtualization

Choose your platform – Start with AWS, Azure, or GCP (focus on one)

Take a beginner-friendly course – Like the ones offered at NareshIT

Get hands-on – Use free tiers from AWS, Azure, and GCP for lab practice

Aim for certification – Adds credibility and improves job opportunities

Stay updated – Follow cloud blogs, join forums, and practice regularly

🎯 Why Choose NareshIT for Cloud Training?

🧑‍🏫 Certified and experienced instructors

💻 Live practical sessions with cloud labs

🎓 Training aligned with top certifications

📂 Placement assistance and interview prep

🕓 Flexible batch timings (Weekday/Weekend/Online)

💬 “We don’t just teach the cloud—we build your career on it.”

📞 Ready to Begin? Your Future in Cloud Starts Today

Don’t wait for the perfect time—start building your cloud skills now with NareshIT. We’re here to guide you from beginner to certified expert.


Mastering Multicluster Kubernetes with Red Hat OpenShift Platform Plus

As enterprises expand their containerized environments, managing and securing multiple Kubernetes clusters becomes both a necessity and a challenge. Red Hat OpenShift Platform Plus, combined with powerful tools like Red Hat Advanced Cluster Management (RHACM), Red Hat Quay, and Red Hat Advanced Cluster Security (RHACS), offers a comprehensive suite for multicluster management, governance, and security.

In this blog post, we'll explore the key components and capabilities that help organizations effectively manage, observe, secure, and scale their Kubernetes workloads across clusters.

Understanding Multicluster Kubernetes Architectures

Modern enterprise applications often span across multiple Kubernetes clusters—whether to support hybrid cloud strategies, improve high availability, or isolate workloads by region or team. Red Hat OpenShift Platform Plus is designed to simplify multicluster operations by offering an integrated, opinionated stack that includes:

Red Hat OpenShift for consistent application platform experience

RHACM for centralized multicluster management

Red Hat Quay for enterprise-grade image storage and security

RHACS for advanced cluster-level security and threat detection

Together, these components provide a unified approach to handle complex multicluster deployments.

Inspecting Resources Across Multiple Clusters with RHACM

Red Hat Advanced Cluster Management (RHACM) offers a user-friendly web console that allows administrators to view and interact with all their Kubernetes clusters from a single pane of glass. Key capabilities include:

Centralized Resource Search: Use the RHACM search engine to find workloads, nodes, and configurations across all managed clusters.

Role-Based Access Control (RBAC): Manage user permissions and ensure secure access to cluster resources based on roles and responsibilities.

Cluster Health Overview: Quickly identify issues and take action using visual dashboards.

Governance and Policy Management at Scale

With RHACM, you can implement and enforce consistent governance policies across your entire fleet of clusters. Whether you're ensuring compliance with security benchmarks (like CIS) or managing custom rules, RHACM makes it easy to:

Deploy policies as code

Monitor compliance status in real time

Automate remediation for non-compliant resources

This level of automation and visibility is critical for regulated industries and enterprises with strict security postures.
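The policy-as-code workflow described above (define a policy, check compliance, optionally remediate) can be sketched generically as follows. This is not the RHACM API or its policy format. The `Policy` class, the cluster dictionaries, and the policy names are hypothetical and only illustrate the difference between reporting a violation and automatically fixing it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

ClusterConfig = Dict[str, object]


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: a compliance check plus an optional automatic fix."""
    name: str
    check: Callable[[ClusterConfig], bool]
    remediate: Callable[[ClusterConfig], ClusterConfig]
    enforce: bool = False  # False: report only; True: remediate automatically


def evaluate(policies: List[Policy], clusters: Dict[str, ClusterConfig]
             ) -> Tuple[Dict[str, List[str]], Dict[str, ClusterConfig]]:
    """Return (open violations per cluster, configs after enforced remediation)."""
    report: Dict[str, List[str]] = {}
    result: Dict[str, ClusterConfig] = {}
    for cluster_name, config in clusters.items():
        violations: List[str] = []
        for policy in policies:
            if policy.check(config):
                continue  # already compliant
            if policy.enforce:
                config = policy.remediate(config)  # fix without human action
            else:
                violations.append(policy.name)     # surface for review
        report[cluster_name] = violations
        result[cluster_name] = config
    return report, result


policies = [
    Policy("require-tls", lambda c: c.get("tls") is True,
           lambda c: {**c, "tls": True}, enforce=True),
    Policy("no-privileged-pods", lambda c: not c.get("privileged_pods", False),
           lambda c: {**c, "privileged_pods": False}, enforce=False),
]
clusters = {
    "east": {"tls": False, "privileged_pods": True},
    "west": {"tls": True},
}
report, remediated = evaluate(policies, clusters)

if __name__ == "__main__":
    print(report)
    print(remediated)
```

Keeping the check and the fix together in one versioned definition is what lets the same rule be applied consistently to every cluster in the fleet.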

Observability Across the Cluster Fleet

Observability is essential for understanding the health, performance, and behavior of your Kubernetes workloads. RHACM’s built-in observability stack integrates with metrics and logging tools to give you:

Cross-cluster performance insights

Alerting and visualization dashboards

Data aggregation for proactive incident management

By centralizing observability, operations teams can streamline troubleshooting and capacity planning across environments.

GitOps-Based Application Deployment

One of the most powerful capabilities RHACM brings to the table is GitOps-driven application lifecycle management. This allows DevOps teams to:

Define application deployments in Git repositories

Automatically deploy to multiple clusters using GitOps pipelines

Ensure consistent configuration and versioning across environments

With built-in support for Argo CD, RHACM bridges the gap between development and operations by enabling continuous delivery at scale.
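At the heart of GitOps is a reconciliation step: compare the desired state stored in Git with what each cluster is actually running, then compute the actions needed to close the gap. The sketch below illustrates that idea only. It is not Argo CD or RHACM code, and the application names, versions, and `plan_sync` function are hypothetical.

```python
from typing import Dict, List


def plan_sync(desired: Dict[str, str],
              actual_by_cluster: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """Compute per-cluster actions that bring each cluster to the desired state.

    `desired` maps application name to version, as it would be declared in Git.
    `actual_by_cluster` maps cluster name to the applications it currently runs.
    """
    plan: Dict[str, List[str]] = {}
    for cluster, actual in sorted(actual_by_cluster.items()):
        actions: List[str] = []
        for app, version in sorted(desired.items()):
            if app not in actual:
                actions.append(f"install {app}@{version}")
            elif actual[app] != version:
                actions.append(f"upgrade {app} {actual[app]} -> {version}")
        # Anything running that Git does not declare is drift and gets removed.
        for app in sorted(set(actual) - set(desired)):
            actions.append(f"remove {app}")
        plan[cluster] = actions
    return plan


desired_state = {"web": "1.2.0", "api": "2.0.0"}  # hypothetical Git contents
running = {
    "east": {"web": "1.1.0", "legacy": "0.9"},
    "west": {"web": "1.2.0", "api": "2.0.0"},
}
sync_plan = plan_sync(desired_state, running)

if __name__ == "__main__":
    for cluster, actions in sync_plan.items():
        print(cluster, actions or "in sync")
```

Because the plan is derived entirely from the declared state, running it repeatedly is safe: a cluster that already matches Git produces an empty action list.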

Red Hat Quay: Enterprise Image Management

Red Hat Quay provides a secure and scalable container image registry that’s deeply integrated with OpenShift. In a multicluster scenario, Quay helps by:

Enforcing image security scanning and vulnerability reporting

Managing image access policies

Supporting geo-replication for global deployments

Installing and customizing Quay within OpenShift gives enterprises control over the entire software supply chain—from development to production.

Integrating Quay with OpenShift & RHACM

Quay seamlessly integrates with OpenShift and RHACM to:

Serve as the source of trusted container images

Automate deployment pipelines via RHACM GitOps

Restrict unapproved images from being used across clusters

This tight integration ensures a secure and compliant image delivery workflow, especially useful in multicluster environments with differing security requirements.

Strengthening Multicluster Security with RHACS

Security must span the entire Kubernetes lifecycle. Red Hat Advanced Cluster Security (RHACS) helps secure containers and Kubernetes clusters by:

Identifying runtime threats and vulnerabilities

Enforcing Kubernetes best practices

Performing risk assessments on containerized workloads

Once installed and configured, RHACS provides a unified view of security risks across all your OpenShift clusters.

Multicluster Operational Security with RHACS

Using RHACS across multiple clusters allows security teams to:

Define and apply security policies consistently

Detect and respond to anomalies in real time

Integrate with CI/CD tools to shift security left

By integrating RHACS into your multicluster architecture, you create a proactive defense layer that protects your workloads without slowing down innovation.

Final Thoughts

Managing multicluster Kubernetes environments doesn't have to be a logistical nightmare. With Red Hat OpenShift Platform Plus, along with RHACM, Red Hat Quay, and RHACS, organizations can standardize, secure, and scale their Kubernetes operations across any infrastructure.

Whether you’re just starting to adopt multicluster strategies or looking to refine your existing approach, Red Hat’s ecosystem offers the tools and automation needed to succeed. For more details, visit www.hawkstack.com.

Hybrid Cloud Application: The Smart Future of Business IT

Introduction

In today’s digital-first environment, businesses are constantly seeking scalable, flexible, and cost-effective solutions to stay competitive. One solution that is gaining rapid traction is the hybrid cloud application model. Combining the best of public and private cloud environments, hybrid cloud applications enable businesses to maximize performance while maintaining control and security.

This 2000-word comprehensive article on hybrid cloud applications explains what they are, why they matter, how they work, their benefits, and how businesses can use them effectively. We also include real-user reviews, expert insights, and FAQs to help guide your cloud journey.

What is a Hybrid Cloud Application?

A hybrid cloud application is a software solution that operates across both public and private cloud environments. It enables data, services, and workflows to move seamlessly between the two, offering flexibility and optimization in terms of cost, performance, and security.

For example, a business might host sensitive customer data in a private cloud while running less critical workloads on a public cloud like AWS, Azure, or Google Cloud Platform.

Key Components of Hybrid Cloud Applications

Public Cloud Services – Scalable and cost-effective compute and storage offered by providers like AWS, Azure, and GCP.

Private Cloud Infrastructure – More secure environments, either on-premises or managed by a third-party.

Middleware/Integration Tools – Platforms that ensure communication and data sharing between cloud environments.

Application Orchestration – Manages application deployment and performance across both clouds.

Why Choose a Hybrid Cloud Application Model?

1. Flexibility

Run workloads where they make the most sense, optimizing both performance and cost.

2. Security and Compliance

Sensitive data can remain in a private cloud to meet regulatory requirements.

3. Scalability

Burst into public cloud resources when private cloud capacity is reached.

4. Business Continuity

Keep services available by distributing workloads across environments, so that a failure in one environment does not take everything down.

5. Cost Efficiency

Avoid overprovisioning private infrastructure while still meeting demand spikes.
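The placement logic behind the points above (keep regulated data private, burst to the public cloud when private capacity runs out) can be sketched in a few lines of Python. This is only an illustration: the `Workload` type, the `place_workloads` function, and the use of CPU cores as the sole capacity measure are assumptions made for the example, not part of any specific platform.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_cores: int
    sensitive: bool  # True if regulated data must stay in the private cloud


def place_workloads(workloads, private_capacity_cores):
    """Assign each workload to 'private' or 'public'.

    Sensitive workloads are placed first and always stay private. The rest
    use whatever private capacity is left and burst to public otherwise.
    """
    placement = {}
    remaining = private_capacity_cores
    # sorted() is stable, so sensitive workloads (key False) come first.
    for w in sorted(workloads, key=lambda w: not w.sensitive):
        if w.sensitive:
            if w.cpu_cores > remaining:
                raise ValueError(f"not enough private capacity for {w.name}")
            placement[w.name] = "private"
            remaining -= w.cpu_cores
        elif w.cpu_cores <= remaining:
            placement[w.name] = "private"
            remaining -= w.cpu_cores
        else:
            placement[w.name] = "public"  # cloud burst
    return placement


if __name__ == "__main__":
    jobs = [
        Workload("analytics", 8, sensitive=False),
        Workload("patient-db", 6, sensitive=True),
        Workload("web", 4, sensitive=False),
    ]
    print(place_workloads(jobs, private_capacity_cores=12))
```

A real scheduler would also weigh memory, data gravity, and egress costs; the point here is only the ordering: compliance constraints first, cost-driven bursting second.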

Real-World Use Cases of Hybrid Cloud Applications

1. Healthcare

Protect sensitive patient data in a private cloud while using public cloud resources for analytics and AI.

2. Finance

Securely handle customer transactions and compliance data, while leveraging the cloud for large-scale computations.

3. Retail and E-Commerce

Manage customer interactions and seasonal traffic spikes efficiently.

4. Manufacturing

Enable remote monitoring and IoT integrations across factory units using hybrid cloud applications.

5. Education

Store student records securely while using cloud platforms for learning management systems.

Benefits of Hybrid Cloud Applications

Enhanced Agility

Better Resource Utilization

Reduced Latency

Compliance Made Easier

Risk Mitigation

Simplified Workload Management

Tools and Platforms Supporting Hybrid Cloud

Microsoft Azure Arc – Extends Azure services and management to any infrastructure.

AWS Outposts – Run AWS infrastructure and services on-premises.

Google Anthos – Manage applications across multiple clouds.

VMware Cloud Foundation – Hybrid solution for virtual machines and containers.

Red Hat OpenShift – Kubernetes-based platform for hybrid deployment.

Best Practices for Developing Hybrid Cloud Applications

Design for Portability: Use containers and microservices to enable seamless movement between clouds.

Ensure Security: Implement zero-trust architectures, encryption, and access control.

Automate and Monitor: Use DevOps and continuous monitoring tools to maintain performance and compliance.

Choose the Right Partner: Work with experienced providers who understand hybrid cloud deployment strategies.

Regular Testing and Backup: Test failover scenarios and ensure robust backup solutions are in place.

Reviews from Industry Professionals

Amrita Singh, Cloud Engineer at FinCloud Solutions:

"Implementing hybrid cloud applications helped us reduce latency by 40% and improve client satisfaction."

John Meadows, CTO at EdTechNext:

"Our LMS platform runs on a hybrid model. We’ve achieved excellent uptime and student experience during peak loads."

Rahul Varma, Data Security Specialist:

"For compliance-heavy environments like finance and healthcare, hybrid cloud is a no-brainer."

Challenges and How to Overcome Them

1. Complex Architecture

Solution: Simplify with orchestration tools and automation.

2. Integration Difficulties

Solution: Use APIs and middleware platforms for seamless data exchange.

3. Cost Overruns

Solution: Use cloud cost optimization tools such as Azure Advisor or AWS Cost Explorer.

4. Security Risks

Solution: Implement multi-layered security protocols and conduct regular audits.

FAQ: Hybrid Cloud Application

Q1: What is the main advantage of a hybrid cloud application?

A: It combines the strengths of public and private clouds for flexibility, scalability, and security.

Q2: Is hybrid cloud suitable for small businesses?

A: Yes, especially those with fluctuating workloads or compliance needs.

Q3: How secure is a hybrid cloud application?

A: When properly configured, hybrid cloud applications can be as secure as traditional on-premises setups.

Q4: Can hybrid cloud reduce IT costs?

A: Yes. You pay for public cloud capacity only when you need it and avoid overprovisioning private servers.

Q5: How do you monitor a hybrid cloud application?

A: With cloud management platforms and monitoring tools like Datadog, Splunk, or Prometheus.

Q6: What are the best platforms for hybrid deployment?

A: Azure Arc, Google Anthos, AWS Outposts, and Red Hat OpenShift are top choices.

Conclusion: Hybrid Cloud is the New Normal

The hybrid cloud application model is more than a trend—it’s a strategic evolution that empowers organizations to balance innovation with control. It offers the agility of the cloud without sacrificing the oversight and security of on-premises systems.

If your organization is looking to modernize its IT infrastructure while staying compliant, resilient, and efficient, then hybrid cloud application development is the way forward.

At diglip7.com, we help businesses build scalable, secure, and agile hybrid cloud solutions tailored to their unique needs. Ready to unlock the future? Contact us today to get started.

Google Cloud Service Management

https://tinyurl.com/23rno64l

Google Cloud Platform (GCP), offered by Google, is a suite of cloud computing services that runs on the same infrastructure that Google uses internally for its end-user products, such as Google Search and YouTube. Alongside a set of management tools, it provides a series of modular cloud services including computing, data storage, data analytics, and machine learning.

Unlock the Full Potential of Your Cloud Infrastructure with Google Cloud Services Management

As businesses transition to the cloud, managing Google Cloud services effectively becomes essential for achieving optimal performance, cost efficiency, and robust security. GCP provides a comprehensive suite of cloud services, but without proper management, harnessing their full potential can be challenging. This is where specialized Google Cloud Services Management comes into play. In this guide, we’ll explore the key aspects of Google Cloud Services Management and highlight how 24×7 Server Support’s expertise can streamline your cloud operations.

What is Google Cloud Services Management?

Google Cloud Services Management involves the strategic oversight and optimization of resources and services within GCP. This includes tasks such as configuring resources, managing costs, ensuring security, and monitoring performance to maintain an efficient and secure cloud environment.

Key Aspects of Google Cloud Services Management

Resource Optimization

Project Organization: Structure your GCP projects to separate environments (development, staging, production) and manage resources effectively. This helps in applying appropriate access controls and organizing billing.

Resource Allocation: Efficiently allocate and manage resources like virtual machines, databases, and storage. Use tags and labels for better organization and cost tracking.

Cost Management

Budgeting and Forecasting: Set up budgets and alerts to monitor spending and avoid unexpected costs. Google Cloud’s Cost Management tools help in tracking expenses and forecasting future costs.

Cost Optimization Strategies: Utilize GCP’s pricing calculators and recommendations to find cost-saving opportunities. Consider options like sustained use discounts or committed use contracts for predictable workloads.

Security and Compliance

Identity and Access Management (IAM): Configure IAM roles and permissions to ensure secure access to your resources. Regularly review and adjust permissions to adhere to the principle of least privilege.

Compliance Monitoring: Implement GCP’s security tools to maintain compliance with industry standards and regulations. Use audit logs to track resource access and modifications.

Performance Monitoring

Real-time Monitoring: Utilize Google Cloud’s monitoring tools to track the performance of your resources and applications. Set up alerts for performance issues and anomalies to ensure timely response.

Optimization and Scaling: Regularly review performance metrics and adjust resources to meet changing demands. Use auto-scaling features to automatically adjust resources based on traffic and load.

Specifications

Compute: From virtual machines with proven price/performance advantages to a fully managed app development platform. Compute Engine, App Engine, Kubernetes Engine, Container Registry, Cloud Functions.

Storage and Databases: Scalable, resilient, high-performance object storage and databases for your applications. Cloud Storage, Cloud SQL, Cloud Bigtable, Cloud Spanner, Cloud Datastore, Persistent Disk.

Networking: State-of-the-art software-defined networking products on Google’s private fiber network. Cloud Virtual Network, Cloud Load Balancing, Cloud CDN, Cloud Interconnect, Cloud DNS, Network Service Tiers.

Big Data: Fully managed data warehousing, batch and stream processing, data exploration, Hadoop/Spark, and reliable messaging. BigQuery, Cloud Dataflow, Cloud Dataproc, Cloud Datalab, Cloud Dataprep, Cloud Pub/Sub, Genomics.

Identity and Security: Control access and visibility to resources running on a platform protected by Google’s security model. Cloud IAM, Cloud Identity-Aware Proxy, Cloud Data Loss Prevention API, Security Key Enforcement, Cloud Key Management Service, Cloud Resource Manager, Cloud Security Scanner.

Data Transfer: Online and offline transfer solutions for moving data quickly and securely. Google Transfer Appliance, Cloud Storage Transfer Service, Google BigQuery Data Transfer.

API Platform & Ecosystems: Cross-cloud API platform enabling businesses to unlock the value of data, deliver modern applications, and power ecosystems. Apigee API Platform, API Monetization, Developer Portal, API Analytics, Apigee Sense, Cloud Endpoints.

Internet of Things: Intelligent IoT platform that unlocks business insights from your global device network. Cloud IoT Core.

Developer Tools: Monitoring, logging, diagnostics, and more, all in an easy-to-use web management console or mobile app. Stackdriver Overview, Monitoring, Logging, Error Reporting, Trace, Debugger, Cloud Deployment Manager, Cloud Console, Cloud Shell, Cloud Mobile App, Cloud Billing API.

Machine Learning: Fast, scalable, easy-to-use ML services. Use our pre-trained models or train custom models on your data. Cloud Machine Learning Engine, Cloud Job Discovery, Cloud Natural Language, Cloud Speech API, Cloud Translation API, Cloud Vision API, Cloud Video Intelligence API.

Why Choose 24×7 Server Support for Google Cloud Services Management?

Effective Google Cloud Services Management requires expertise and continuous oversight. 24×7 Server Support specializes in providing comprehensive cloud management solutions that ensure your GCP infrastructure operates smoothly and efficiently. Here’s how their services stand out:

Expertise and Experience: With a team of certified Google Cloud experts, 24×7 Server Support brings extensive knowledge to managing and optimizing your cloud environment. Their experience ensures that your GCP services are configured and maintained according to best practices.

24/7 Support: As the name suggests, 24×7 Server Support offers round-the-clock assistance. Whether you need help with configuration, troubleshooting, or performance issues, their support team is available 24/7 to address your concerns.

Custom Solutions: Recognizing that every business has unique needs, 24×7 Server Support provides tailored management solutions. They work closely with you to understand your specific requirements and implement strategies that align with your business objectives.

Cost Efficiency: Their team helps in optimizing your cloud expenditures by leveraging Google Cloud’s cost management tools and providing insights into cost-saving opportunities. This ensures you get the best value for your investment.

Enhanced Security: 24×7 Server Support implements robust security measures to protect your data and comply with regulatory requirements. Their proactive approach to security and compliance helps safeguard your cloud infrastructure from potential threats.

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Additional user-oriented documentation covers individual features. If you want to upgrade Argo CD, refer to the upgrade guide. Developer-oriented documentation is available for those building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool configured as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and can sync the live state back to the desired target state either automatically or manually. Any modification made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
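To make the comparison of desired and live state more concrete, here is a minimal Python sketch of drift detection. It is not Argo CD's actual diff algorithm; `diff_state` and `sync_status` are hypothetical helpers, and the manifests are plain dictionaries. The sketch only checks fields that the desired state declares, on the assumption that the cluster may add fields of its own (such as status) to the live object.

```python
def diff_state(desired, live, path=""):
    """Return (path, desired_value, live_value) for every field declared
    in `desired` whose live value differs. Extra live fields are ignored."""
    diffs = []
    for key, want in desired.items():
        here = f"{path}.{key}" if path else key
        have = live.get(key) if isinstance(live, dict) else None
        if isinstance(want, dict):
            # Recurse into nested mappings; a missing branch counts as empty.
            nested = have if isinstance(have, dict) else {}
            diffs.extend(diff_state(want, nested, here))
        elif want != have:
            diffs.append((here, want, have))
    return diffs


def sync_status(desired, live):
    """Summarize the comparison the way a GitOps UI might label it."""
    return "Synced" if not diff_state(desired, live) else "OutOfSync"


if __name__ == "__main__":
    desired = {"spec": {"replicas": 3, "image": "app:1.2"}}
    live = {"spec": {"replicas": 2, "image": "app:1.2"}, "status": {"ready": 2}}
    print(sync_status(desired, live), diff_state(desired, live))
```

A sync operation would then apply the desired manifest again so that the list of differences becomes empty.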

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). It detects the OutOfSync application state and optionally takes corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, PostSync).

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/Roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Automated or manual syncing of applications to their desired state

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

Top 5 Proven Strategies for Building Scalable Software Products in 2025

Building scalable software products is essential in today's dynamic digital environment, where user demands and data volumes can surge unexpectedly. Scalability ensures that your software can handle increased loads without compromising performance, providing a seamless experience for users. This blog delves into best practices for building scalable software, drawing insights from industry experts and resources like XillenTech's guide on the subject.

Understanding Software Scalability

Software scalability refers to the system's ability to handle growing amounts of work or its potential to accommodate growth. This growth can manifest as an increase in user traffic, data volume, or transaction complexity. Scalability is typically categorized into two types:

Vertical Scaling: Enhancing the capacity of existing hardware or software by adding resources like CPU, RAM, or storage.

Horizontal Scaling: Expanding the system by adding more machines or nodes, distributing the load across multiple servers.

Both scaling methods are crucial, and the choice between them depends on the specific needs and architecture of the software product.
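For horizontal scaling, the number of nodes or replicas is usually derived from a load metric. The sketch below uses the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation, desired = ceil(current replicas × current metric / target metric), and clamps the result to a range. The function name and the default bounds are assumptions for illustration, and details such as the autoscaler's tolerance band are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: grow or shrink the replica count so the
    per-replica metric moves toward the target, within fixed bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Vertical scaling has no equivalent formula in this sketch: it changes the size of each machine rather than the count, and is bounded by the largest instance available.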

Best Practices for Building Scalable Software

1. Adopt Microservices Architecture

Microservices architecture involves breaking down an application into smaller, independent services that can be developed, deployed, and scaled separately. This approach offers several advantages:

Independent Scaling: Each service can be scaled based on its specific demand, optimizing resource utilization.

Enhanced Flexibility: Developers can use different technologies for different services, choosing the best tools for each task.

Improved Fault Isolation: Failures in one service are less likely to impact the entire system.

Implementing microservices requires careful planning, especially in managing inter-service communication and data consistency.

2. Embrace Modular Design

Modular design complements microservices by structuring the application into distinct modules with specific responsibilities.

Ease of Maintenance: Modules can be updated or replaced without affecting the entire system.

Parallel Development: Different teams can work on separate modules simultaneously, accelerating development.

Scalability: Modules experiencing higher demand can be scaled independently.

This design principle is particularly beneficial in MVP development, where speed and adaptability are crucial.

3. Leverage Cloud Computing

Cloud platforms like AWS, Azure, and Google Cloud offer scalable infrastructure that can adjust to varying workloads.

Elasticity: Resources can be scaled up or down automatically based on demand.

Cost Efficiency: Pay-as-you-go models ensure you only pay for the resources you use.

Global Reach: Deploy applications closer to users worldwide, reducing latency.

Cloud-native development, incorporating containers and orchestration tools like Kubernetes, further enhances scalability and deployment flexibility.

4. Implement Caching Strategies

Caching involves storing frequently accessed data in a temporary storage area to reduce retrieval times. Effective caching strategies:

Reduce Latency: Serve data faster by avoiding repeated database queries.

Lower Server Load: Decrease the number of requests hitting the backend systems.

Enhance User Experience: Provide quicker responses, improving overall satisfaction.

Tools like Redis and Memcached are commonly used for implementing caching mechanisms.
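The core idea, often called cache-aside, can be shown without any external service. The sketch below is an in-process stand-in for what Redis or Memcached would do over the network; the `TTLCache` class and its `get_or_load` method are hypothetical names, and the expiry time is an assumption you would tune per data type.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after a fixed time."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._store = {}     # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the backing store
        value = loader(key)  # cache miss or expired: query the source
        self._store[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    def load_profile(user_id):
        # Stand-in for a slow database query.
        return {"id": user_id, "name": f"user-{user_id}"}

    cache = TTLCache(ttl_seconds=30)
    print(cache.get_or_load(42, load_profile))  # loads from the source
    print(cache.get_or_load(42, load_profile))  # served from the cache
```

The expiry time is the main design choice: a longer TTL lowers backend load but increases the chance of serving stale data.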

5. Prioritize Continuous Monitoring and Performance Testing

Regular monitoring and testing are vital to ensure the software performs optimally as it scales.

Load Testing: Assess how the system behaves under expected and peak loads.

Stress Testing: Determine the system's breaking point and how it recovers from failures.

Real-Time Monitoring: Use tools like New Relic or Datadog to track performance metrics continuously.

These practices help in identifying bottlenecks and ensuring the system can handle growth effectively.
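As a rough illustration of what a load test measures, the following Python sketch times repeated calls to a function and reports latency percentiles. It uses only the standard library and issues requests sequentially, so it is a simplification: real load-testing tools generate concurrent traffic. `measure_latencies` and `summarize` are hypothetical helper names.

```python
import statistics
import time


def measure_latencies(func, requests):
    """Call `func` repeatedly and return per-call latencies in seconds."""
    samples = []
    for _ in range(requests):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples):
    """Report median and tail latencies; needs at least two samples."""
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples),
    }


if __name__ == "__main__":
    def fake_request():
        time.sleep(0.001)  # stand-in for an HTTP call

    print(summarize(measure_latencies(fake_request, requests=200)))
```

Tail percentiles such as p95 and p99 tend to reveal bottlenecks earlier than averages do, which is why they are commonly tracked as load increases.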

Common Pitfalls in Scalable Software Development

While aiming for scalability, it's essential to avoid certain pitfalls:

Overengineering: Adding unnecessary complexity can hinder development and maintenance.

Neglecting Security: Scaling should not compromise the application's security protocols.

Inadequate Testing: Failing to test under various scenarios can lead to unforeseen issues in production.

Balancing scalability with simplicity and robustness is key to successful software development.

Conclusion

Building scalable software products involves strategic planning, adopting the right architectural patterns, and leveraging modern technologies. By implementing microservices, embracing modular design, utilizing cloud computing, and maintaining rigorous testing and monitoring practices, businesses can ensure their software scales effectively with growing demands. Avoiding common pitfalls and focusing on continuous improvement will further enhance scalability and performance.

The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies

Introduction

In today's rapidly evolving digital landscape, cloud services have transformed the way businesses operate. Particularly for organizations in New York, leveraging platforms like Microsoft Azure and Google Cloud can improve operational efficiency, foster innovation, and ensure robust security. This article looks at the future of cloud services and offers insights on how New York companies can harness Microsoft and Google technologies to stay competitive in their respective industries.

The Future of Cloud Services: How New York Companies Can Leverage Microsoft and Google Technologies

The future of cloud technology is not just about storage; it's about creating a flexible environment that supports innovation across sectors. For New York companies, adopting technology from giants like Microsoft and Google can lead to greater agility, better data management capabilities, and stronger security protocols. As businesses increasingly shift toward digital solutions, understanding these technologies becomes crucial for sustained growth.

Understanding Cloud Services

What Are Cloud Services?

Cloud services refer to a range of computing resources provided over the internet (the "cloud"). These include:

Infrastructure as a Service (IaaS): Virtualized computing resources over the internet.

Platform as a Service (PaaS): Platforms that let developers build applications without managing the underlying infrastructure.

Software as a Service (SaaS): Software delivered over the internet, eliminating the need for installation.

Key Benefits of Cloud Services

Cost Efficiency: Reduces capital expenditure on hardware.

Scalability: Easily scales resources based on demand.

Accessibility: Access data and applications from anywhere.

Security: Advanced security features protect sensitive information.

Microsoft's Role in Cloud Computing

Overview of Microsoft Azure

Microsoft Azure is one of the leading cloud service providers, offering a range of products and services such as virtual machines, databases, analytics, and AI capabilities.

Core Features of Microsoft Azure

Virtual Machines: Create scalable VMs with a variety of operating systems.

Azure SQL Database: A managed database service for app development.

AI & Machine Learning: Integrate AI capabilities seamlessly into applications.

Google's Impact on Cloud Technologies

Introduction to Google Cloud Platform (GCP)

Google's cloud offering emphasizes high-performance computing and machine learning capabilities tailored for businesses seeking innovative solutions.

Distinct Features of GCP

BigQuery: A powerful analytics tool for large datasets.

Cloud Functions: Event-driven serverless compute platform.

Kubernetes Engine: Manag

Comparison of Ubuntu, Debian, and Yocto for IIoT and Edge Computing

In industrial IoT (IIoT) and edge computing scenarios, Ubuntu, Debian, and Yocto Project each have unique advantages. Below is a detailed comparison and recommendations for these three systems:

1. Ubuntu (ARM)

Advantages

Ready-to-use: Provides official ARM images (e.g., Ubuntu Server 22.04 LTS) supporting hardware like Raspberry Pi and NVIDIA Jetson, requiring no complex configuration.

Cloud-native support: Built-in tools like MicroK8s, Docker, and Kubernetes, ideal for edge-cloud collaboration.

Long-term support (LTS): 5 years of security updates, meeting industrial stability requirements.

Rich software ecosystem: Access to AI/ML tools (e.g., TensorFlow Lite) and databases (e.g., PostgreSQL ARM-optimized) via APT and Snap Store.

Use Cases

Rapid prototyping: Quick deployment of Python/Node.js applications on edge gateways.

AI edge inference: Running computer vision models (e.g., ROS 2 + Ubuntu) on Jetson devices.

Lightweight K8s clusters: Edge nodes managed by MicroK8s.

Limitations

Higher resource usage (minimum ~512MB RAM), unsuitable for ultra-low-power devices.

2. Debian (ARM)

Advantages

Exceptional stability: Packages undergo rigorous testing, ideal for 24/7 industrial operation.

Lightweight: Minimal installation requires only 128MB RAM; GUI-free versions available.

Long-term support: Roughly 5 years of security updates per release through Debian LTS, extendable to about 10 years with commercially supported Extended LTS (ELTS).

Hardware compatibility: Supports older or niche ARM chips (e.g., TI Sitara series).

Use Cases

Industrial controllers: PLCs, HMIs, and other devices requiring deterministic responses.

Network edge devices: Firewalls, protocol gateways (e.g., Modbus-to-MQTT).

Critical systems (medical/transport): Compliance with IEC 62304/DO-178C certifications.

Limitations

Older software versions (e.g., default GCC version); newer features require backports.

3. Yocto Project

Advantages

Full customization: Tailor everything from kernel to user space, generating minimal images (<50MB possible).

Real-time extensions: Supports Xenomai/Preempt-RT patches for μs-level latency.

Cross-platform portability: Single recipe set adapts to multiple hardware platforms (e.g., NXP i.MX6 → i.MX8).

Security design: Built-in industrial-grade features like SELinux and dm-verity.

Use Cases

Custom industrial devices: Requires specific kernel configurations or proprietary drivers (e.g., CAN-FD bus support).

High real-time systems: Robotic motion control, CNC machines.

Resource-constrained terminals: Sensor nodes running lightweight stacks (e.g., Zephyr+FreeRTOS hybrid deployment).

Limitations

Steep learning curve (BitBake syntax required); longer development cycles.

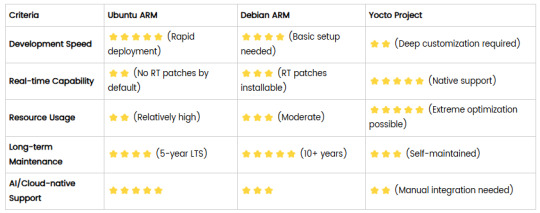

4. Comparison Summary

5. Selection Recommendations

Choose Ubuntu ARM: For rapid deployment of edge AI applications (e.g., vision detection on Jetson) or deep integration with public clouds (e.g., AWS IoT Greengrass).

Choose Debian ARM: For mission-critical industrial equipment (e.g., substation monitoring) where stability outweighs feature novelty.

Choose Yocto Project: For custom hardware development (e.g., proprietary industrial boards) or strict real-time/safety certification (e.g., ISO 13849) requirements.

6. Hybrid Architecture Example

Smart factory edge node:

Real-time control layer: RTOS built with Yocto (controlling robotic arms)

Data processing layer: Debian running OPC UA servers

Cloud connectivity layer: Ubuntu Server managing K8s edge clusters

Combining these systems based on specific needs can maximize the efficiency of IIoT edge computing.

Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As enterprises modernize their IT infrastructure, migrating legacy virtual machines (VMs) into container-native platforms has become a strategic priority. Red Hat OpenShift Virtualization provides a powerful solution by enabling organizations to run traditional VMs alongside container workloads on a single, Kubernetes-native platform. When paired with Red Hat Ansible Automation Platform, the migration process becomes more consistent, scalable, and fully automated.

In this article, we explore how Ansible Automation Platform can be leveraged to simplify and accelerate the migration of VMs to OpenShift Virtualization.

Why Migrate to OpenShift Virtualization?

OpenShift Virtualization allows organizations to:

Consolidate VMs and containers on a single platform.

Simplify operations through unified management.

Enable DevOps teams to interact with VMs using Kubernetes-native tools.

Improve resource utilization and reduce infrastructure sprawl.

This hybrid approach is ideal for enterprises that are transitioning to cloud-native architectures but still rely on critical VM-based workloads.

Challenges in VM Migration

Migrating VMs from traditional hypervisors like VMware vSphere, Red Hat Virtualization (RHV), or KVM to OpenShift Virtualization involves several tasks:

Assessing and planning for VM compatibility.

Exporting and transforming VM images.

Reconfiguring networking and storage.

Managing downtime and validation.

Ensuring repeatability across multiple workloads.

Manual migrations are error-prone and time-consuming, especially at scale. This is where Ansible comes in.

Role of Ansible Automation Platform in VM Migration

Ansible Automation Platform enables IT teams to:

Automate complex migration workflows.

Integrate with existing IT tools and APIs.

Enforce consistency across environments.

Reduce human error and operational overhead.

With pre-built Ansible Content Collections, playbooks, and automation workflows, teams can automate VM inventory collection, image conversion, import into OpenShift Virtualization, and post-migration validation.

High-Level Migration Workflow with Ansible

Here's a high-level view of how a migration process can be automated:

Inventory Discovery: Use Ansible modules to gather VM data from vSphere or RHV environments.

Image Extraction and Conversion: Automate the export of VM disks and convert them to a format compatible with OpenShift Virtualization (QCOW2 or RAW).

Upload to OpenShift Virtualization: Use virtctl or the Kubernetes API to upload images to OpenShift and define the VM manifest (YAML).

Create VirtualMachines in OpenShift: Apply VM definitions using Ansible's Kubernetes modules.

Configure Networking and Storage: Attach necessary networks (e.g., Multus, SR-IOV) and persistent storage (PVCs) automatically.

Validation and Testing: Run automated smoke tests or application checks to verify successful migration.

Decommission Legacy VMs: If needed, automate the shutdown and cleanup of source VMs.
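
As one possible illustration of the validation step, the play below waits for a migrated VM to report a Ready condition. It is a minimal sketch, not a complete smoke test: the VM name legacy-app and the namespace migrated-vms are hypothetical, it assumes the kubernetes.core collection is installed, and it assumes the VM has already been started, since a halted VM never becomes Ready.

```yaml
- name: Validate a migrated VM (illustrative sketch)
  hosts: localhost
  gather_facts: false
  vars:
    vm_name: legacy-app        # hypothetical VM name
    namespace: migrated-vms    # hypothetical namespace
  tasks:
    - name: Wait until the VirtualMachine reports Ready
      kubernetes.core.k8s_info:
        api_version: kubevirt.io/v1
        kind: VirtualMachine
        name: "{{ vm_name }}"
        namespace: "{{ namespace }}"
        wait: true
        wait_condition:
          type: Ready
          status: "True"
        wait_timeout: 600
      register: vm_info

    - name: Fail if the VM was not found
      ansible.builtin.assert:
        that:
          - vm_info.resources | length == 1
        fail_msg: "VirtualMachine {{ vm_name }} not found in {{ namespace }}"
```

Application-level checks (an HTTP probe, a database query) would follow the same pattern as additional tasks.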

Sample Ansible Playbook Snippet

Below is a simplified snippet to upload a VM disk and create a VM in OpenShift:

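- name: Upload VM disk and create VM
  hosts: localhost
  tasks:
    # Note: newer virtctl releases may expect --size in place of --pvc-size.
    - name: Upload QCOW2 image to OpenShift
      ansible.builtin.command: >
        virtctl image-upload pvc {{ vm_name }}-disk
        --image-path {{ qcow2_path }}
        --pvc-size {{ disk_size }}
        --access-mode ReadWriteOnce
        --storage-class {{ storage_class }}
        --namespace {{ namespace }}
        --wait-secs 300
      environment:
        KUBECONFIG: "{{ kubeconfig_path }}"

    - name: Apply VM YAML manifest
      kubernetes.core.k8s:
        state: present
        kubeconfig: "{{ kubeconfig_path }}"
        namespace: "{{ namespace }}"
        # Build the path by concatenation; Jinja2 delimiters cannot be nested.
        definition: "{{ lookup('file', 'vm-definitions/' ~ vm_name ~ '.yaml') | from_yaml }}"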
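
The playbook reads a manifest from the vm-definitions/ directory, which the article does not show. As a rough sketch, such a file might look like the following. The VM name legacy-app, the namespace migrated-vms, and the CPU and memory sizes are illustrative assumptions; the claim name follows the vm_name-disk pattern used for the uploaded PVC.

```yaml
# vm-definitions/legacy-app.yaml (hypothetical example)
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: legacy-app
  namespace: migrated-vms
spec:
  runStrategy: Halted          # create the VM stopped; start it after review
  template:
    metadata:
      labels:
        kubevirt.io/domain: legacy-app
    spec:
      domain:
        cpu:
          cores: 2
        resources:
          requests:
            memory: 4Gi
        devices:
          disks:
            - name: rootdisk
              disk:
                bus: virtio
          interfaces:
            - name: default
              masquerade: {}
      networks:
        - name: default
          pod: {}
      volumes:
        - name: rootdisk
          persistentVolumeClaim:
            claimName: legacy-app-disk   # PVC created by the image upload
```

Guests migrated from other hypervisors may lack virtio drivers, in which case a different disk bus (for example sata) may be needed until the drivers are installed.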

Integrating with Ansible Tower / AAP Controller

For enterprise-scale automation, these playbooks can be run through the automation controller in Ansible Automation Platform (formerly Ansible Tower), which offers:

Role-based access control (RBAC)

Job scheduling and logging

Workflow chaining for multi-step migrations

Integration with ServiceNow, Git, or CI/CD pipelines

Red Hat Migration Toolkit for Virtualization (MTV)

Red Hat also offers the Migration Toolkit for Virtualization (MTV), which integrates with OpenShift and can be invoked via Ansible playbooks or REST APIs. MTV supports bulk migrations from RHV and vSphere to OpenShift Virtualization and can be used in tandem with custom automation workflows.

Final Thoughts

Migrating to OpenShift Virtualization is a strategic step toward modern, unified infrastructure. By leveraging Ansible Automation Platform, organizations can automate and scale this migration efficiently, minimizing downtime and manual effort.

Whether you are starting with a few VMs or migrating hundreds across environments, combining Red Hat's automation and virtualization solutions provides a future-proof path to infrastructure modernization.

For more details, visit www.hawkstack.com


UpgradeMySkill: Where Ambition Meets Expertise

Success today belongs to those who never stop learning. In an era defined by innovation, digital transformation, and global competition, upgrading your skills isn’t just an advantage — it’s a necessity. Whether you’re aiming for your next promotion, planning a career switch, or striving to sharpen your expertise, UpgradeMySkill is the partner you need to shape a brighter future.

At UpgradeMySkill, we transform ambition into achievement through world-class training, globally recognized certifications, and practical learning experiences.

Our Vision: Empowering Careers, One Skill at a Time

Learning is powerful — it unlocks doors, creates opportunities, and fuels personal and professional growth. UpgradeMySkill exists to make that power accessible to everyone.

We offer a rich portfolio of professional certification courses designed to enhance skills, boost confidence, and enable real-world success. From IT professionals and project managers to cybersecurity specialists and business leaders, we help learners across the globe build the capabilities they need to thrive.

Comprehensive Training That Sets You Apart

At UpgradeMySkill, we don't just teach — we transform. Our programs are built to deliver measurable value, equipping you with the skills employers demand today and tomorrow. Our core offerings include:

Project Management Certifications: PMP®, PRINCE2®, PMI-ACP®, CAPM®

IT Service Management Training: ITIL® 4 Foundation, Intermediate, and Expert

Agile and Scrum: Scrum Master, SAFe® Agilist, and Agile Practitioner courses

Cloud Computing Courses: AWS Certified Solutions Architect, Azure Fundamentals, Google Cloud Engineer

Cybersecurity Certifications: CISSP®, CISM®, CEH®, CompTIA Security+

Data Science and Machine Learning: Big Data Analysis, AI, and ML

DevOps Tools and Techniques: Jenkins, Docker, Kubernetes, Ansible

Each program is designed to deliver real-world skills that you can apply immediately to your work environment.

Why Choose UpgradeMySkill?

There are many places to learn, but few places to master. Here’s what makes UpgradeMySkill stand out:

1. Trusted and Accredited

Our certifications are recognized globally and are accredited by top organizations. With us, you don’t just earn a certificate; you earn a competitive advantage.

2. Experienced Trainers

Our instructors bring decades of industry experience. They provide deep insights, practical frameworks, and exam strategies that go beyond textbook learning.

3. Flexible Learning Options

We know your time is valuable. That’s why we offer flexible modes of learning:

Live online training

Classroom sessions

Self-paced e-learning

Customized corporate training programs

Learn the way that works best for you — anytime, anywhere.

4. Career-Focused Approach

We believe training should translate into real results. Our hands-on sessions, real-world case studies, and post-training support are all designed to help you advance faster and smarter.

5. Lifetime Learning Support

Even after your course ends, our commitment to you doesn’t. Our alumni have access to updated study materials, webinars, and career resources to keep them ahead of the curve.

Serving Individuals and Corporations Globally

Whether you're an individual professional charting your personal growth path or an enterprise seeking to boost your team's capabilities, UpgradeMySkill has a solution for you.

Individuals: Advance your career with new certifications and skills that employers value.

Enterprises: Develop high-performing teams with our customized training programs aligned to your business goals.

We have successfully delivered corporate training programs to Fortune 500 companies, startups, government agencies, and global enterprises, always with measurable outcomes and client satisfaction.

Testimonials That Inspire

"The PMP® training program by UpgradeMySkill was exceptional. The real-world examples, mock tests, and constant support helped me pass on my first attempt. Highly recommend it to anyone serious about project management!" – Abhinav R., Senior Project Manager

"Our IT team completed AWS training through UpgradeMySkill. The sessions were insightful and hands-on, and our cloud migration project became much smoother thanks to the new skills we acquired." – Meera S., IT Director

These success stories are a testament to our quality, commitment, and effectiveness.

Your Future Is Waiting — Are You Ready?

Knowledge is the foundation of opportunity. Skills are the currency of success. Certification is the key that unlocks new doors.

Don’t let hesitation hold you back. Take control of your career journey today. Trust UpgradeMySkill to equip you with the tools, knowledge, and confidence to climb higher, faster, and farther.

Upgrade your skills. Upgrade your future. Upgrade with us.



Kubernetes Objects Explained 💡 Pods, Services, Deployments & More for Admins & Devs

Learn how Kubernetes keeps your apps running as expected using concepts like desired state, replication, config management, and persistent storage.

✔️ Pod – Basic unit that runs your containers

✔️ Service – Stable network access to Pods

✔️ Deployment – Rolling updates & scaling made easy

✔️ ReplicaSet – Maintains desired number of Pods

✔️ Job & CronJob – Run tasks once or on schedule

✔️ ConfigMap & Secret – Externalize configs & secure credentials

✔️ PV & PVC – Persistent storage management

✔️ Namespace – Cluster-level resource isolation

✔️ DaemonSet – Run a Pod on every node

✔️ StatefulSet – For stateful apps like databases

✔️ ReplicationController – The older way to manage Pods
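
To make a few of these objects concrete, here is a minimal sketch of a Deployment and a Service working together. The name web, the label app: web, and the nginx image are illustrative assumptions, not taken from the video. The Deployment declares a desired state of three replicas (maintained through a ReplicaSet), and the Service gives those Pods a stable network endpoint by matching their label.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                # desired number of Pods
  selector:
    matchLabels:
      app: web
  template:                  # Pod template used for every replica
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web                 # routes traffic to Pods carrying this label
  ports:
    - port: 80
      targetPort: 80
```

If a Pod is deleted, the ReplicaSet behind the Deployment creates a replacement, and the Service picks it up automatically because it selects by label rather than by Pod name.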


Sky Appz Academy: Best Full Stack Development Training in Coimbatore

Revolutionize Your Career with Top-Class Full Stack Training

In today's digital-first economy, Full Stack developers have become pillars of the technology sector. Sky Appz Academy in Coimbatore offers a comprehensive Full Stack Development course that turns beginners into job-ready professionals. Our 1000+ hour program combines theoretical training with hands-on practice, so students graduate with the skills employers look for.

Why Choose Full Stack Development as a Career?

The technology landscape is changing faster than ever, and Full Stack developers are among the most versatile and sought-after professionals in the job market today. According to recent NASSCOM reports:

High Demand: Full Stack developer job openings are rising 35% year over year

Lucrative Salaries: Junior roles start at ₹5-8 LPA, while experienced developers earn ₹15-25 LPA

Career Flexibility: Roles across startups, enterprises, and freelance projects

Future-Proof Skills: Full Stack skills stay relevant as technologies change

At Sky Appz Academy, we've structured our coursework not only to teach coding, but also to develop the problem-solving skills and engineering mindset needed for long-term professional success.

In-Depth Full Stack Course

Our carefully structured program encompasses all areas of contemporary web development:

Frontend Development (300+ hours)

• Core Foundations: HTML5, CSS3, JavaScript (ES6+)

• Advanced Frameworks: React.js with Redux, Angular

• Responsive Design: Bootstrap 5, Material UI, Flexbox/Grid

• State Management: Context API, Redux Toolkit

• Progressive Web Apps: Service workers, offline capabilities

Backend Development (350+ hours)

• Node.js Ecosystem: Express.js, NestJS

• Python Stack: Django REST framework, Flask

• PHP Development: Laravel, CodeIgniter

• API Development: RESTful services, GraphQL

• Authentication: JWT, OAuth, Session management

Database Systems (150+ hours)

• SQL Databases: MySQL, PostgreSQL

• NoSQL Solutions: MongoDB, Firebase

• ORM Tools: Mongoose, Sequelize

• Database Design: Normalization, Indexing

• Performance Optimization: Query tuning, caching

DevOps & Deployment (100+ hours)

• Cloud Platforms: AWS, Azure fundamentals

• Containerization: Docker, Kubernetes basics

• CI/CD Pipelines: GitHub Actions, Jenkins

• Performance Monitoring: New Relic, Sentry

• Security Best Practices: OWASP Top 10

What Sets Sky Appz Academy Apart?

1) Industry-Experienced Instructors

• Our faculty includes senior developers with 8+ years of experience

• Regular guest lectures from CTOs and tech leads

• 1:1 mentorship sessions for personalized guidance

2) Project-Based Learning Approach

• 15+ mini-projects throughout the course

• 3 major capstone projects

• Real-world client projects for select students

• Hackathons and coding competitions

3) State-of-the-Art Infrastructure

• Dedicated coding labs with high-end systems

• 24/7 access to learning resources

• Virtual machines for cloud practice

• Up-to-date software and tools

4) Comprehensive Career Support

• Resume and LinkedIn profile workshops

• Mock technical interviews (100+ held every month)

• Portfolio development support

• Exclusive placement drives with 150+ recruiters

• Access to alumni network

Detailed Course Structure