#LlamaIndex


Google Vertex AI API And Arize For Generative AI Success

Arize and the Vertex AI API: evaluation workflows to boost AI ROI and speed up generative app development

Gemini 1.5 Pro, served through the Vertex AI API, is a cutting-edge multimodal large language model (LLM) that gives enterprise teams a powerful model they can integrate across a wide range of applications and use cases. Its potential to transform business operations is substantial, from improving data analysis and decision-making to automating complex processes and strengthening customer relationships.

Using the Vertex AI API for Gemini, enterprise AI teams can:

Develop faster: Use advanced natural language generation and processing to speed up documentation, debugging, and code development.

Improve customer experiences: Deploy advanced chatbots and virtual assistants that can understand and respond to customer inquiries in a variety of ways.

Enhance data analysis: Process and interpret different data formats, such as text, images, and audio, for more thorough and insightful analysis.

Improve decision-making: Use advanced reasoning capabilities to produce data-driven insights and recommendations that support strategic decisions.

Encourage innovation: Use Vertex AI's generative capabilities to explore new avenues for research, product development, and creative work.

When building generative apps, teams using the Vertex AI API benefit from putting a telemetry system in place (AI observability and LLM evaluation) to verify performance and shorten the iteration cycle. When AI teams use Arize AI alongside their Google AI tools, they can:

Ensure application stability: Continuously evaluate and monitor the performance of generative apps as input data changes and new use cases emerge, so issues can be addressed promptly both during development and after deployment.

Accelerate development cycles: Test and compare the results of multiple prompt iterations using pre-production app evaluations and workflows.

Put guardrails in place: Make sure outputs stay within acceptable bounds by systematically testing the app's responses to a variety of inputs and edge cases.

Curate dynamic datasets: Automatically identify difficult or ambiguous cases for further analysis and fine-tuning, and flag low-performing sessions for review.

Stay consistent from development to deployment: Use Arize's open-source evaluation solution throughout development, then move to an enterprise-ready platform when apps are ready for production.

Solutions to common problems AI engineering teams face

A common set of issues surfaced while working with hundreds of AI engineering teams to develop and deploy generative AI applications:

Small changes can cause performance regressions: Even slight modifications to prompts or underlying data can cause unexpected drops in performance, and these regressions are hard to predict or pinpoint.

Edge cases are hard to find: Identifying edge cases, underrepresented scenarios, or high-impact failure modes requires sophisticated data mining techniques to extract useful subsets of data for testing and development.

Poor LLM responses can seriously hurt a business: A single factually inaccurate or inappropriate response can lead to legal problems, lost trust, or financial liability.

Engineering teams can tackle these issues head-on with Arize's AI observability and evaluation platform, laying the groundwork for online production observability during the app development stage. Let's take a closer look at specific use cases and integration strategies for Arize and Vertex AI, and at how an enterprise AI engineering team can use the two products together to build better AI.

Use LLM tracing in development to increase visibility

Arize's LLM tracing features make applications easier to design and troubleshoot by providing insight into every call in an LLM-powered system. This is especially important for systems built on orchestration and agentic frameworks, which can hide a large number of distributed system calls that are nearly impossible to debug without programmatic tracing.

With LLM tracing, teams can see exactly how the Vertex AI API serving Gemini 1.5 Pro handles input data across every application layer: query, retriever, embedding, LLM call, synthesis, and so on. Because traces are available from the session level down to an individual span, such as the retrieval of a single document, AI engineers can identify the cause of an issue and see how it might propagate through the system's layers.

Image credit: Google Cloud

LLM tracing also exposes basic telemetry such as token usage and latency across system stages and Vertex AI API calls, making it possible to locate inefficiencies and bottlenecks for further performance optimization. Instrumenting an app with Arize tracing takes only a few lines of code: traces are collected automatically from more than a dozen frameworks, including OpenAI, DSPy, LlamaIndex, and LangChain, or they can be configured manually with the OpenTelemetry Trace API.
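To make the idea of nested spans concrete, here is a minimal, stdlib-only Python sketch of what a trace records: each span has a parent, attributes such as token counts, and a measured latency. The `Tracer` class and `fake_llm` function are hypothetical illustrations, not Arize's SDK or the OpenTelemetry API; in a real app you would use one of those instead.

```python
import time
from contextlib import contextmanager

# Hypothetical toy tracer: NOT Arize's SDK or the OpenTelemetry API.
# It only illustrates nested spans that record latency and attributes.
class Tracer:
    def __init__(self):
        self.spans = []   # finished spans, in completion order
        self._stack = []  # names of currently open spans

    @contextmanager
    def span(self, name, **attributes):
        parent = self._stack[-1] if self._stack else None
        self._stack.append(name)
        record = {"name": name, "parent": parent, "attributes": dict(attributes)}
        start = time.perf_counter()
        try:
            yield record
        finally:
            # Latency is recorded even if the wrapped step raises.
            record["latency_s"] = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)

def fake_llm(prompt):
    # Stand-in for a Gemini call via the Vertex AI API (assumption):
    # returns text plus whitespace-based "token" counts.
    return "answer", {"prompt_tokens": len(prompt.split()), "completion_tokens": 1}

tracer = Tracer()
with tracer.span("query", user_query="What is RAG?"):
    with tracer.span("retriever") as r:
        r["attributes"]["documents"] = ["doc-1"]
    with tracer.span("llm") as s:
        text, usage = fake_llm("Explain RAG using doc-1")
        s["attributes"].update(usage)

for s in tracer.spans:
    print(s["name"], "parent:", s["parent"], s["attributes"])
```

Child spans finish before their parent, so the session-level `query` span is recorded last; a real tracing backend reassembles this into a tree for display.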

Replay and fix: troubleshooting Vertex AI issues in the prompt + data playground

Troubleshooting issues and doing prompt engineering with your own application data can greatly improve the outputs of LLM-powered apps. Arize's prompt + data playground gives developers an interactive environment for optimizing the prompts they use with the Vertex AI API for Gemini, using data from app development.

Developers can import trace data into the playground and investigate the effects of changing model parameters, input variables, and prompt templates. With Arize's workflows, a developer can take a prompt from an app trace of interest and replay that instance directly in the platform. This is a practical way to iterate quickly and test different prompt configurations as the Vertex AI API serving Gemini 1.5 Pro encounters new use cases after apps go live.

Image credit: Google Cloud
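The replay idea boils down to taking the template and variables captured in a trace and re-rendering them under different templates and parameters. Below is a minimal Python sketch of that grid; the trace field names, candidate templates, and temperature values are hypothetical, and `replay` only builds the request rather than calling a model.

```python
from itertools import product
from string import Template

# A captured trace (hypothetical field names, not Arize's schema).
trace = {
    "template": "Answer using the context.\nContext: $context\nQuestion: $question",
    "variables": {
        "context": "Gemini 1.5 Pro is served by the Vertex AI API.",
        "question": "Which API serves Gemini 1.5 Pro?",
    },
}

# Candidate edits to try: the original template plus one variant,
# each at two temperatures.
templates = [trace["template"], "You are concise. Context: $context\nQ: $question\nA:"]
temperatures = [0.0, 0.7]

def replay(template, variables, temperature):
    # A real playground would send this request to the model;
    # here we only render it so the variants can be compared.
    return {"prompt": Template(template).substitute(variables), "temperature": temperature}

runs = [replay(t, trace["variables"], temp) for t, temp in product(templates, temperatures)]
for run in runs:
    print(run["temperature"], run["prompt"].splitlines()[0])
```

Keeping the trace's variables fixed while varying only the template and parameters is what makes the resulting outputs comparable.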

Validate performance with online LLM evaluation

Once tracing is in place, Arize helps developers validate performance with a systematic approach to LLM evaluation. The Arize evaluation library contains a collection of pre-tested evaluation frameworks for rating the quality of LLM outputs on specific tasks, including hallucination, relevance, Q&A on retrieved data, code generation, user frustration, summarization, and many more.

Google customers can automate and scale evaluation by using the Vertex AI API serving Gemini models in an approach known as online LLM-as-a-judge. Developers choose Gemini, served through the Vertex AI API, as the platform's evaluator and define the evaluation criteria in a prompt template in Arize. While the LLM application runs, the model scores the system's outputs against those criteria.

Image credit: Google Cloud
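The judge pattern is simple to sketch: fill an evaluation template with each output, send it to the judge model, and map the reply onto a fixed label set. The Python below assumes a hypothetical hallucination template (not one of Arize's built-in templates) and uses `stub_judge` in place of a Gemini call; any `prompt -> text` callable could be substituted.

```python
# Hypothetical judge template; not Arize's built-in evaluation template.
JUDGE_TEMPLATE = (
    "You are checking whether an answer is supported by the reference text.\n"
    "[Reference]: {reference}\n"
    "[Answer]: {answer}\n"
    'Respond with exactly one word: "factual" or "hallucinated".'
)
LABELS = {"factual", "hallucinated"}

def build_judge_prompt(reference, answer):
    return JUDGE_TEMPLATE.format(reference=reference, answer=answer)

def parse_label(raw):
    # Normalize the judge's reply; anything off-list is flagged for review.
    label = raw.strip().strip('."').lower()
    return label if label in LABELS else "unparseable"

def evaluate(rows, judge):
    # `judge` is any callable prompt -> text (e.g. a wrapper around Gemini).
    return [parse_label(judge(build_judge_prompt(r["reference"], r["answer"]))) for r in rows]

def stub_judge(prompt):
    # Stand-in for the judge model: "factual" only if the answer says Paris.
    answer_part = prompt.split("[Answer]:")[1]
    return "Factual." if "Paris" in answer_part else "hallucinated"

rows = [
    {"reference": "The capital of France is Paris.", "answer": "Paris"},
    {"reference": "The capital of France is Paris.", "answer": "Lyon"},
]
labels = evaluate(rows, stub_judge)
print(labels)
```

Constraining the judge to a small label set and treating anything else as `unparseable` keeps scores aggregable even when the model replies loosely.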

The Vertex AI API serving Gemini can also generate explanations for these evaluations. It is often hard to understand why an LLM responds in a particular way; explanations expose the reasoning and can further improve the accuracy of subsequent evaluations.

Running evaluations during the active development of AI applications is very valuable to teams because it establishes an early performance baseline for later iterations and fine-tuning.

Assemble dynamic datasets for testing

With Arize's dynamic dataset curation feature, developers can collect examples of interest, such as high-quality evaluations or edge cases where the LLM performs poorly, and use them to run tests and track improvements to their prompts, LLM, or other application components.

By combining offline and online data streams with Vertex AI Vector Search, developers can use AI to find data points similar to the ones of interest and curate them into a dataset that evolves as the application runs. As traces are collected to continuously validate performance, developers can use Arize to automate online workflows that find examples of interest. Further examples can be added by hand or through annotation and tagging driven by the Vertex AI API for Gemini.

Once a dataset exists, it can be used for experimentation: developers can test new versions of the Vertex AI API serving Gemini against specific use cases, or A/B test changes to prompt templates and prompt variables. Systematic experimentation is needed to find the setup that best balances model performance and efficiency, especially in production environments where response times are critical.
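Under the hood, "find data points similar to the ones of interest" is a nearest-neighbour query over embeddings. The Python sketch below swaps in a brute-force cosine-similarity scan for Vertex AI Vector Search (which uses an approximate index at scale); the 3-dimensional embeddings, trace IDs, and 0.9 threshold are all made up for illustration.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def find_similar(seed, pool, threshold=0.9):
    # Brute-force stand-in for an approximate nearest-neighbour index.
    return [item_id for item_id, vec in pool.items() if cosine_similarity(seed, vec) >= threshold]

dataset = set()
seed_embedding = [1.0, 0.0, 0.0]  # embedding of a known failure case (made up)
incoming = {                      # embeddings of newly traced requests (made up)
    "trace-1": [0.95, 0.05, 0.0],
    "trace-2": [0.0, 1.0, 0.0],
    "trace-3": [0.9, 0.1, 0.1],
}
# Run this on every new batch of traces so the dataset grows over time.
dataset.update(find_similar(seed_embedding, incoming))
print(sorted(dataset))
```

Because the seed is a known failure, everything it pulls in is a candidate regression test; the threshold trades recall against how much manual review the curated set needs.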
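An A/B test of prompt templates on a fixed dataset is essentially a loop: render each variant for every example, score the outputs, and compare averages. This Python sketch assumes exact-match scoring and a `stub_model` that simply behaves better on variant B; the dataset, templates, and model behaviour are all hypothetical.

```python
from statistics import mean

def run_experiment(dataset, variants, model, score):
    """Return the mean score of each prompt variant over the same dataset."""
    results = {}
    for name, template in variants.items():
        scores = [score(model(template.format(**ex["inputs"])), ex["expected"]) for ex in dataset]
        results[name] = mean(scores)
    return results

dataset = [
    {"inputs": {"city": "Paris"}, "expected": "France"},
    {"inputs": {"city": "Tokyo"}, "expected": "Japan"},
]
variants = {
    "A": "Which country is {city} in?",
    "B": "Name the country whose capital is {city}. Reply with the country only.",
}
COUNTRIES = {"Paris": "France", "Tokyo": "Japan"}

def stub_model(prompt):
    # Hypothetical model: gives a bare answer only when told to reply "only".
    city = next(c for c in COUNTRIES if c in prompt)
    return COUNTRIES[city] if "only" in prompt else f"It is in {COUNTRIES[city]}."

def exact_match(output, expected):
    return 1.0 if output.strip() == expected else 0.0

results = run_experiment(dataset, variants, stub_model, exact_match)
best = max(results, key=results.get)
print(results, "best:", best)
```

Holding the dataset and scorer constant isolates the prompt change; in practice you would also record latency per variant to capture the performance/efficiency trade-off the article mentions.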

Protect your business with Arize and the Vertex AI API serving Gemini

Arize and Google AI work together to keep your AI from harming your customers and your business. LLM guardrails are essential for real-time protection against malicious attempts such as jailbreaks, as well as for context management, compliance, and user experience.

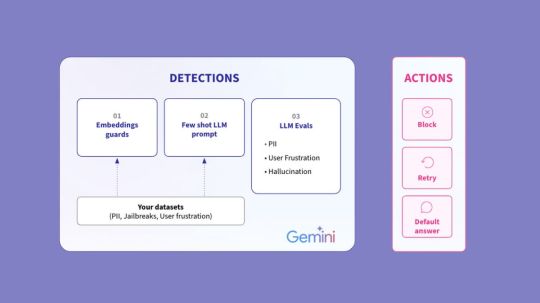

Arize guardrails can be configured with custom datasets and a fine-tuned Vertex AI Gemini model for the following detections:

Embeddings guards: Given your examples of "bad" messages, this guard protects against similar inputs by analyzing the cosine distance between their embeddings. The advantage of this approach is that it can be iterated on continually as new breaches are found, so the guard becomes increasingly sophisticated over time.

Few-shot LLM prompt: Given your few-shot examples, the model determines whether an input should "pass" or "fail." This is especially useful for defining a fully customized guardrail.

LLM evaluations: Use the Vertex AI API serving Gemini to look for triggers such as PII, user frustration, hallucinations, and more. This approach builds on scaled LLM evaluations.

If any of these detections is flagged in Arize, an immediate corrective action keeps your application from producing an unwanted response. Developers can set the remedy to block the response, retry, or return a default answer such as "I cannot answer your query."
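The embeddings guard and its corrective action can be sketched in a few lines: flag an input whose embedding lies within a cosine-distance threshold of any known bad example, and return a default answer instead of calling the model. The `EmbeddingsGuard` class, the 0.2 threshold, and the 2-dimensional embeddings below are hypothetical; this is not Arize's guardrail API.

```python
import math

def cosine_distance(a, b):
    # Assumes non-zero embedding vectors.
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb)

class EmbeddingsGuard:
    """Hypothetical guard, not Arize's API: blocks inputs close to known bad ones."""

    def __init__(self, bad_examples, max_distance=0.2, fallback="I cannot answer your query."):
        self.bad_examples = list(bad_examples)
        self.max_distance = max_distance
        self.fallback = fallback

    def is_flagged(self, embedding):
        return any(cosine_distance(embedding, bad) <= self.max_distance for bad in self.bad_examples)

    def add_example(self, embedding):
        # Each newly discovered bad input sharpens the guard over time.
        self.bad_examples.append(embedding)

    def respond(self, embedding, generate):
        # Corrective action: return the default answer instead of calling the model.
        return self.fallback if self.is_flagged(embedding) else generate()

guard = EmbeddingsGuard(bad_examples=[[1.0, 0.0]])
blocked = guard.respond([0.98, 0.1], lambda: "model answer")  # close to a bad example
allowed = guard.respond([0.0, 1.0], lambda: "model answer")   # far from every bad example
print(blocked, "|", allowed)
```

This shows only the "default answer" remedy; "block" or "retry" would replace the fallback branch in `respond`.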

Your personal Arize AI Copilot, powered by Gemini 1.5 Pro through the Vertex AI API

Developers can use Arize AI Copilot, powered by the Vertex AI API serving Gemini, to further speed up AI observability and evaluation. This in-platform assistant streamlines AI teams' workflows by automating tasks and analysis, reducing team members' day-to-day operational effort.

Arize Copilot allows engineers to:

Run AI Search with the Vertex AI API for Gemini: find specific examples, such as "angry responses" or "frustrated user inquiries," to add to a dataset.

Take action and run analysis: set up dashboard monitors or ask questions about your models and data.

Automate the process of creating and defining LLM evaluations.

Prompt engineering: ask Gemini, through the Vertex AI API, to generate prompt playground iterations for you.

Using Arize and Vertex AI to accelerate AI innovation

Integrating Arize AI with the Vertex AI API serving Gemini is a compelling solution for optimizing and protecting generative applications as businesses push the limits of AI. By combining Google's advanced LLM capabilities with Arize's observability and evaluation platform, AI teams can speed up development, improve application performance, and help ensure reliability from development to deployment.

Arize AI Copilot's automated workflows, real-time guardrails, and dynamic dataset curation are just a few examples of how these technologies complement each other to drive innovation and deliver meaningful business results. As you continue to design and build AI applications, Arize and the Vertex AI API serving Gemini models provide the essential infrastructure for handling the challenges of modern AI engineering, helping keep your projects effective, robust, and impactful.

Want to streamline your AI observability further? Arize is available on Google Cloud Marketplace, which makes deploying Arize and tracking the performance of your production models simpler than ever.

Read more on Govindhtech.com

#VertexAIAPI#largelanguagemodel#VertexAI#OpenAI#LlamaIndex#Geminimodels#VertexAIVectorSearch#GoogleCloudMarketplace#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


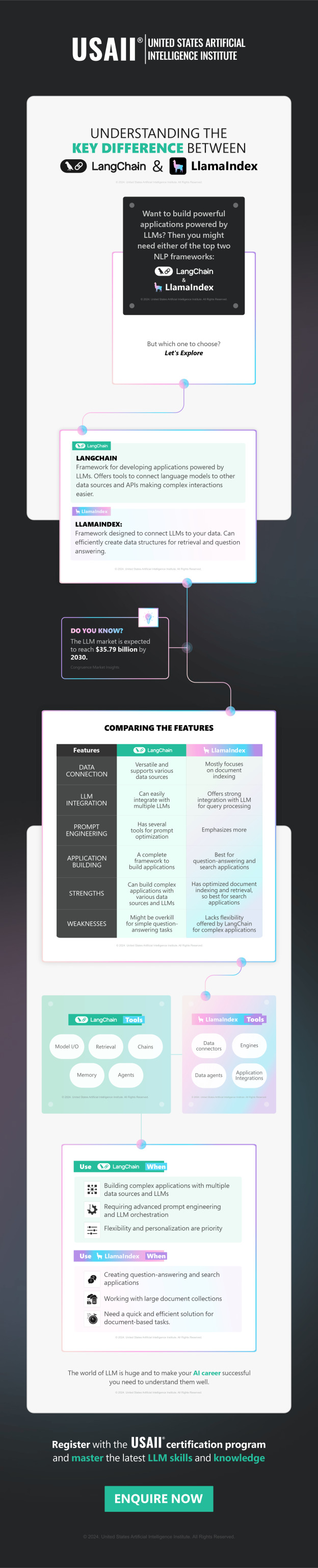

Understanding The Key Difference Between LangChain and LlamaIndex | USAII®

Understand the fine line of difference between popular LLM frameworks LangChain and LlamaIndex in this comprehensive infographic and advance your AI knowledge.

Read more: https://shorturl.at/aT8cA

LangChain, LlamaIndex, NLP applications, large language models, prompt engineering, LLM applications


AI Reading List 7/5/2023

The longer holiday weekend edition.

Opportunities and Risks of LLMs for Scalable Deliberation with Polis: Polis is a platform that leverages machine intelligence to scale up deliberative processes. In this paper, we explore the opportunities and risks associated with applying Large Language Models (LLMs) towards challenges with facilitating, moderating and summarizing the results of Polis…


Explore the inner workings of LlamaIndex, enhancing LLMs for streamlined natural language processing, boosting performance and efficiency.

#Large Language Model Meta AI#Power of LLMs#Custom Data Integration#Expertise in Machine Learning#Expertise in Prompt Engineering#LlamaIndex Frameworks#LLM Applications


What exactly is an AI agent – and how do you build one?

New Post has been published on https://thedigitalinsider.com/what-exactly-is-an-ai-agent-and-how-do-you-build-one/


What makes something an “AI agent” – and how do you build one that does more than just sound impressive in a demo?

I’m Nico Finelli, Founding Go-To-Market Member at Vellum. Starting in machine learning, I’ve consulted for Fortune 500s, worked at Weights & Biases during the LLM boom, and now I help companies get from experimentation to production with LLMs, faster and smarter.

In this article, I’ll unpack what AI agents actually are (and aren’t), how to build them step by step, and what separates teams that ship real value from those that stall out in proof-of-concept purgatory.

We’ll also take a close look at the current state of AI adoption, the biggest challenges teams face today, and the one thing that makes or breaks an agent system: evaluation.

Let’s dive in.

Where we are in the AI landscape

At Vellum, we recently partnered with Weaviate and LlamaIndex to run a survey of over 1,200 AI developers. The goal? To understand where people are when it comes to deploying AI in production.

What we found was pretty surprising: only 25% of respondents said they were live in production with their AI initiative. For all the hype around generative AI, most teams are still stuck in experimentation mode.

The biggest blocker? Hallucinations and prompt management. Over 57% of respondents said hallucinations were their number one challenge. And here’s the kicker: when we cross-referenced that with how people were evaluating their systems, we noticed a pattern.

The same folks struggling with hallucinations were the ones relying heavily on manual testing or user feedback as their main form of evaluation.

That tells me there’s a deeper issue here. If your evaluation process isn’t robust, hallucinations will sneak through. And most businesses don’t have automated testing pipelines yet, because AI applications tend to be highly specific to their use cases. So, the old rules of software QA don’t fully apply.

Bottom line: without evaluation, your AI won’t reach production. And if it does, it won’t last long.


How successful companies build with LLMs

So, how are the companies that do get to production pulling it off?

First, they don’t just chase the latest shiny AI trend. They start with a clearly defined use case and understand what not to build. That discipline creates focus and prevents scope creep.

Second, they build fast feedback loops between software engineers, product managers, and subject matter experts. We see too many teams build something in isolation, hand it off, get delayed feedback, and then go back to the drawing board. That slows everything down.

The successful teams? They involve everyone from day one. They co-develop prompts, run tests together, and iterate continuously. About 65–70% of Vellum customers have AI in production, and these fast iteration cycles are a big reason why.

They also treat evaluation as their top priority. Whether that’s manual review, LLM-as-a-judge, or golden datasets, they don’t rely on vibes. They test, monitor, and optimize like it’s a software product – because it is.
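Of the evaluation options mentioned above, the golden dataset is the easiest to automate in a test pipeline. This is a minimal Python sketch of such a regression gate; the questions, expected substrings, `stub_app`, and the 0.95 threshold are all hypothetical, and substring matching is a deliberately crude stand-in for real scoring such as LLM-as-a-judge.

```python
# Hypothetical golden set: each case pairs a question with text the answer must contain.
GOLDEN_SET = [
    {"question": "What is our refund window?", "must_contain": "30 days"},
    {"question": "Do we ship to Canada?", "must_contain": "yes"},
]

def evaluate_golden(app, golden_set):
    """Run every golden case through `app` and collect the failures."""
    failures = []
    for case in golden_set:
        answer = app(case["question"])
        if case["must_contain"].lower() not in answer.lower():
            failures.append({"question": case["question"], "answer": answer})
    pass_rate = 1 - len(failures) / len(golden_set)
    return pass_rate, failures

def gate(pass_rate, threshold=0.95):
    # Block a release (e.g. in CI) when the pass rate drops below the bar.
    return pass_rate >= threshold

def stub_app(question):
    # Stand-in for the real LLM application.
    if "refund" in question:
        return "Refunds are accepted within 30 days."
    return "Sorry, I don't know."

pass_rate, failures = evaluate_golden(stub_app, GOLDEN_SET)
print(pass_rate, failures, "release ok:", gate(pass_rate))
```

Running this on every prompt or model change turns "vibes" into a pass/fail signal, and the failure list shows exactly which cases regressed.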

The truth about enterprise AI agents (and how to get value from them)

What’s the point of AI if it doesn’t actually make your workday easier?


#adoption#agent#Agentic AI#agents#ai#AI adoption#ai agent#AI AGENTS#amp#applications#Article#Articles#autonomous#biases#board#challenge#cities#Companies#content#datasets#deploying#developers#engineers#enterprise#enterprise AI#evaluation#Events#focus#form#Future


Conversational AI Technical Lead

Job title: Conversational AI Technical Lead Company: Qualcomm Job description: Technology Group IT Programmer Analyst General Summary: Qualcomm IT is seeking a Lead Conversational AI Developer… and Frameworks such as LangChain, LlamaIndex, and Streamlit. Knowledge and implementation experience of chatbot technologies using… Expected salary: Location: Hyderabad, Telangana Job date: Fri, 25 Apr…


Multimodal AI Pipelines: Building Scalable, Agentic, and Generative Systems for the Enterprise

Introduction

Today’s most advanced AI systems must interpret and integrate diverse data types—text, images, audio, and video—to deliver context-aware, intelligent responses. Multimodal AI, once an academic pursuit, is now a cornerstone of enterprise-scale AI pipelines, enabling businesses to deploy autonomous, agentic, and generative AI at unprecedented scale. As organizations seek to harness these capabilities, they face a complex landscape of technical, operational, and ethical challenges. This article distills the latest research, real-world case studies, and practical insights to guide AI practitioners, software architects, and technology leaders in building and scaling robust, multimodal AI pipelines.

For those interested in developing skills in this area, an Agentic AI course can provide foundational knowledge of autonomous decision-making systems. Generative AI training is also important for understanding how AI models create new content. Building agentic RAG systems step by step requires a solid understanding of both agentic and generative AI principles.

The Evolution of Agentic and Generative AI in Software Engineering

Over the past decade, AI in software engineering has evolved from rule-based, single-modality systems to sophisticated, multimodal architectures. Early AI applications focused narrowly on tasks like text classification or image recognition. The advent of deep learning and transformer architectures unlocked new possibilities, but it was the emergence of agentic and generative AI that truly redefined the field.

Agentic AI refers to systems capable of autonomous decision-making and action. These systems can reason, plan, and interact dynamically with users and environments. Generative AI, exemplified by models like GPT-4, Gemini, and Llama, goes beyond prediction to create new content, answer complex queries, and simulate human-like interaction. A comprehensive Agentic AI course can help developers understand how to design and implement these systems effectively.

The integration of multimodal capabilities—processing text, images, and audio simultaneously—has amplified the potential of these systems. Applications now range from intelligent assistants and content creation tools to autonomous agents that navigate complex, real-world scenarios. Generative AI training is essential for developing models that can generate new content across different modalities. To build agentic RAG systems step-by-step, developers must master the integration of retrieval and generation capabilities, ensuring that systems can both retrieve relevant information and generate coherent responses.
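The "retrieve, then generate" core that the paragraph describes can be sketched briefly. In this Python example, word-overlap ranking stands in for vector search, `generate` is any `prompt -> text` callable, and the three documents are illustrative. It shows only the plain RAG step; an agentic RAG system would add a loop in which the model decides whether, what, and how often to retrieve.

```python
import re

# Illustrative document store.
DOCS = {
    "doc-1": "Milvus is an open-source vector database.",
    "doc-2": "Triton Inference Server serves models over HTTP and gRPC.",
    "doc-3": "CLIP learns joint embeddings for images and text.",
}

def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, k=1):
    # Rank documents by word overlap with the query (a stand-in for vector search).
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(docs[d])), reverse=True)
    return ranked[:k]

def build_prompt(query, doc_ids, docs):
    context = "\n".join(f"[{d}] {docs[d]}" for d in doc_ids)
    return f"Answer using only the context below.\n{context}\nQuestion: {query}"

def answer(query, docs, generate):
    # `generate` is any prompt -> text callable, e.g. an LLM client wrapper.
    return generate(build_prompt(query, retrieve(query, docs), docs))

# Echo the prompt instead of calling a model, to inspect what would be sent.
print(answer("Which vector database is open-source?", DOCS, lambda p: p))
```

Grounding the prompt in retrieved, labeled context is what lets the generator cite sources and lets evaluators check answers against that context.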

Key Frameworks, Tools, and Deployment Strategies

The rapid evolution of multimodal AI has been accompanied by a proliferation of frameworks and tools designed to streamline development and deployment:

LLM Orchestration: Modern AI pipelines increasingly rely on the orchestration of multiple large language models (LLMs) and specialized models (e.g., vision transformers, audio encoders). Tools like LangChain, LlamaIndex, and Hugging Face Transformers enable seamless integration and chaining of models, allowing developers to build complex, multimodal workflows with relative ease. This process is fundamental in Generative AI training, as it allows for the creation of diverse and complex AI models.

Autonomous Agents: Frameworks such as AutoGPT and BabyAGI provide blueprints for creating agentic systems that can autonomously plan, execute, and adapt based on multimodal inputs. These agents are increasingly deployed in customer service, content moderation, and decision support roles. An Agentic AI course would cover the design principles of such autonomous systems.

MLOps for Generative Models: Operationalizing generative and multimodal AI requires robust MLOps practices. Platforms like Galileo AI offer advanced monitoring, evaluation, and debugging capabilities for multimodal pipelines, ensuring reliability and performance at scale. This is crucial for maintaining the integrity of agentic RAG systems.

Multimodal Processing Pipelines: The typical pipeline for multimodal AI involves data collection, preprocessing, feature extraction, fusion, model training, and evaluation. Each step presents unique challenges, from ensuring data quality and alignment across modalities to managing the computational demands of large-scale training. Generative AI training focuses on optimizing these pipelines for content generation tasks.

Vector Database Management: Emerging tools like DataVolo and Milvus provide scalable, secure, and high-performance solutions for managing unstructured data and embeddings, which are critical for efficient retrieval and processing in multimodal systems. This is essential for building agentic RAG systems step-by-step, as it enables efficient data management.
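The pipeline stages listed above (preprocessing, feature extraction, fusion, and so on) compose naturally as a chain of small functions, which also makes individual stages easy to swap. This Python sketch uses toy features, a word count for text and a mean pixel value for images; real systems would use trained encoders, and all stage names here are hypothetical.

```python
from typing import Callable, List

Stage = Callable[[dict], dict]

def run_pipeline(sample: dict, stages: List[Stage]) -> dict:
    # Each stage takes a sample dict and returns an enriched copy.
    for stage in stages:
        sample = stage(sample)
    return sample

def preprocess(sample: dict) -> dict:
    return {**sample, "text": sample["text"].strip().lower()}

def extract_features(sample: dict) -> dict:
    # Toy features; real systems would use e.g. a text encoder and a vision encoder.
    return {
        **sample,
        "text_features": [len(sample["text"].split())],
        "image_features": [sum(sample["pixels"]) / len(sample["pixels"])],
    }

def fuse(sample: dict) -> dict:
    # Simplest possible fusion: concatenate the per-modality feature vectors.
    return {**sample, "fused": sample["text_features"] + sample["image_features"]}

result = run_pipeline({"text": "  A Red Car ", "pixels": [0.2, 0.4]}, [preprocess, extract_features, fuse])
print(result["fused"])
```

Because every stage shares one signature, replacing the toy feature extractor with a pretrained model touches only that one function.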

Software Engineering Best Practices for Multimodal AI

Building and scaling multimodal AI pipelines demands more than cutting-edge models—it requires a holistic approach to system design and deployment. Key software engineering best practices include:

Version Control and Reproducibility: Every component of the AI pipeline should be versioned and reproducible, enabling effective debugging, auditing, and compliance. This is particularly important when integrating agentic AI and generative AI components.

Automated Testing: Comprehensive test suites for data validation, model behavior, and integration points help catch issues early and reduce deployment risks. Generative AI training emphasizes the importance of testing generated content for coherence and relevance.

Security and Compliance: Protecting sensitive data—especially in multimodal systems that process images or audio—requires robust encryption, access controls, and compliance with regulations such as GDPR and HIPAA. This is a critical aspect of building agentic RAG systems step-by-step, ensuring that systems are secure and compliant.

Documentation and Knowledge Sharing: Clear, up-to-date documentation and collaborative tools (e.g., Confluence, Notion) enable cross-functional teams to work efficiently and maintain system integrity over time. An Agentic AI course would highlight the importance of documentation in complex AI systems.
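One lightweight way to support the versioning and reproducibility practice above is to fingerprint everything that defines a run (data version, model versions, prompt version, seed) and log it next to each result. This Python sketch hashes a canonical JSON encoding of the config; all config keys and values are hypothetical placeholders.

```python
import hashlib
import json

def pipeline_fingerprint(config: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal configs hash equally.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

# Hypothetical run configuration; every name and version here is a placeholder.
run_config = {
    "dataset_version": "2024-05-01",
    "text_encoder": "encoder-v3",
    "vision_encoder": "vit-base",
    "prompt_template_version": 7,
    "seed": 42,
}
print(pipeline_fingerprint(run_config))
```

Key order does not affect the fingerprint, but any change to a value (for example, the seed) produces a new one, so two results with the same fingerprint came from the same configuration.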

Advanced Tactics for Scalable, Reliable AI Systems

Scaling autonomous, multimodal AI pipelines requires advanced tactics and innovative approaches:

Modular Architecture: Designing systems with modular, interchangeable components allows teams to update or replace individual models without disrupting the entire pipeline. This is especially critical for multimodal systems, where new modalities or improved models may be introduced over time. Generative AI training emphasizes modularity to facilitate updates and scalability.

Feature Fusion Strategies: Effective integration of features from different modalities is a key challenge. Techniques such as early fusion (combining raw data), late fusion (combining model outputs), and cross-modal attention mechanisms are used to improve performance and robustness. Building agentic RAG systems step-by-step involves mastering these fusion strategies.

Transfer Learning and Pretraining: Leveraging pretrained models (e.g., CLIP for vision-language tasks, ViT for image processing) accelerates development and improves generalization across modalities. This is a common practice in Generative AI training to enhance model performance.

Scalable Infrastructure: Deploying multimodal AI at scale requires robust infrastructure, including distributed training frameworks (e.g., PyTorch Lightning, TensorFlow's tf.distribute) and efficient inference engines (e.g., ONNX Runtime, Triton Inference Server). An Agentic AI course would cover the design of scalable infrastructure for autonomous systems.

Continuous Monitoring and Feedback Loops: Real-time monitoring of model performance, data drift, and user feedback is essential for maintaining reliability and iterating quickly. This is crucial for building agentic RAG systems step-by-step, ensuring continuous improvement.
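The difference between early and late fusion from the list above comes down to where the modalities are combined. In this Python sketch, a linear scorer stands in for a trained model, and the weights are arbitrary. Cross-modal attention, the third technique mentioned, is not shown.

```python
def early_fusion(text_vec, image_vec, weights, bias=0.0):
    # Early fusion: concatenate modality features, then apply ONE model
    # (here a linear scorer standing in for a trained network).
    fused = text_vec + image_vec
    return sum(w * x for w, x in zip(weights, fused)) + bias

def late_fusion(text_score, image_score, text_weight=0.5):
    # Late fusion: each modality has its own model; combine their output scores.
    return text_weight * text_score + (1 - text_weight) * image_score

print(early_fusion([1.0, 2.0], [3.0], weights=[0.5, 0.5, 1.0]))
print(late_fusion(0.8, 0.4))
```

Early fusion lets a single model learn interactions between modalities, while late fusion keeps per-modality models independent, which makes swapping one modality's model easier.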

Ethical and Regulatory Considerations

As multimodal AI systems become more pervasive, ethical and regulatory considerations grow in importance:

Bias Mitigation: Ensuring that models are trained on diverse, representative datasets and regularly audited for bias. This is a critical aspect of Generative AI training, as biased models can generate inappropriate content.

Privacy and Data Protection: Implementing robust data governance practices to protect user privacy and comply with global regulations. An Agentic AI course would emphasize the importance of ethical considerations in AI system design.

Transparency and Explainability: Providing clear explanations of model decisions and maintaining audit trails for accountability. This is essential for building agentic RAG systems step-by-step, ensuring transparency and trust in AI decisions.

Cross-Functional Collaboration for AI Success

Building and scaling multimodal AI pipelines is inherently interdisciplinary. It requires close collaboration between data scientists, software engineers, product managers, and business stakeholders. Key aspects of successful collaboration include:

Shared Goals and Metrics: Aligning on business objectives and key performance indicators (KPIs) ensures that technical decisions are driven by real-world value. Generative AI training emphasizes the importance of collaboration to ensure that AI systems meet business needs.

Agile Development Practices: Regular standups, sprint planning, and retrospective meetings foster transparency and rapid iteration. An Agentic AI course would cover agile methodologies for developing complex AI systems.

Domain Expertise Integration: Involving domain experts ensures that models are contextually relevant and ethically sound. This is crucial for building agentic RAG systems step-by-step, ensuring that AI systems are relevant and effective.

Feedback Loops: Establishing channels for continuous feedback from end-users and stakeholders helps teams identify issues early and prioritize improvements. This is essential for Generative AI training, as feedback loops help refine generated content.

Measuring Success: Analytics and Monitoring

The true measure of an AI pipeline’s success lies in its ability to deliver consistent, high-quality results at scale. Key metrics and practices include:

Model Performance Metrics: Accuracy, precision, recall, and F1 scores for classification tasks; BLEU, ROUGE, or METEOR for generative tasks. Generative AI training focuses on optimizing these metrics for content generation tasks.

Operational Metrics: Latency, throughput, and resource utilization are critical for ensuring that systems can handle production workloads. An Agentic AI course would cover the importance of monitoring operational metrics for autonomous systems.

User Experience Metrics: User satisfaction, engagement, and task completion rates provide insights into the real-world impact of AI deployments. Building agentic RAG systems step-by-step involves monitoring user experience metrics to ensure that systems meet user needs.

Monitoring and Alerting: Real-time dashboards and automated alerts help teams detect and respond to issues promptly, minimizing downtime and maintaining trust. This is crucial for Generative AI training, as continuous monitoring ensures that AI systems remain reliable and efficient.
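Several of the metrics above are simple to compute directly. This Python sketch covers precision, recall, and F1 for a binary classifier, a nearest-rank p95 latency, and a threshold-based alert check. The alert thresholds (F1 of at least 0.8, p95 of at most 800 ms) are arbitrary placeholders, and BLEU, ROUGE, and METEOR are better taken from an established library than reimplemented.

```python
import math

def precision_recall_f1(y_true, y_pred, positive=1):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def percentile(values, pct):
    # Nearest-rank percentile, e.g. pct=95 for p95 latency.
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def check_alerts(f1, p95_latency_ms, min_f1=0.8, max_p95_ms=800):
    # Placeholder thresholds; tune them to your own service-level objectives.
    alerts = []
    if f1 < min_f1:
        alerts.append(f"F1 {f1:.2f} below {min_f1}")
    if p95_latency_ms > max_p95_ms:
        alerts.append(f"p95 latency {p95_latency_ms} ms above {max_p95_ms} ms")
    return alerts

p, r, f1 = precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
latencies = [120, 80, 950, 200, 300, 110, 90, 85, 100, 130]
print(check_alerts(f1, percentile(latencies, 95)))
```

Tail latency (p95) is used rather than the mean because a few slow requests, like the 950 ms one here, are what users notice and what a mean hides.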

Case Study: Meta’s Multimodal AI Journey

Meta’s recent launch of the Llama 4 family, including the natively multimodal Llama 4 Scout and Llama 4 Maverick models, offers a compelling case study in the evolution and deployment of agentic, generative AI at scale. This case study highlights the importance of Generative AI training in developing models that can process and generate content across multiple modalities.

Background and Motivation

Meta recognized early on that the future of AI lies in the seamless integration of multiple modalities. Traditional LLMs, while powerful, were limited by their focus on text. To deliver more immersive, context-aware experiences, Meta set out to build models that could process and reason across text, images, and audio. Building agentic RAG systems step-by-step requires a similar approach, integrating retrieval and generation capabilities to create robust AI systems.

Technical Challenges

The development of the Llama 4 models presented several technical hurdles:

Data Alignment: Ensuring that data from different modalities (e.g., text captions and corresponding images) were accurately aligned during training. Misaligned pairs are a common problem in generative AI training, where data quality directly limits model performance.

Computational Complexity: Training multimodal models at scale required significant computational resources and innovative optimization techniques.

Pipeline Orchestration: Integrating multiple specialized models (e.g., vision transformers, audio encoders) into a cohesive pipeline demanded robust software engineering practices. The same discipline applies when building agentic RAG systems, where every added component has to stay scalable and efficient.
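To make the orchestration challenge concrete, here is a minimal sketch of a modular pipeline in which each modality gets its own pluggable encoder behind a shared interface. The encoder classes are hypothetical stand-ins that return toy feature vectors; they are not Meta's Llama 4 components, and in practice each one would wrap a pretrained vision, audio, or text model.

```python
from typing import Protocol


class Encoder(Protocol):
    """Interface every modality-specific encoder must satisfy."""

    def encode(self, payload: object) -> list[float]: ...


class ToyTextEncoder:
    """Hypothetical stand-in: character count and word count as features."""

    def encode(self, payload: object) -> list[float]:
        text = str(payload)
        return [float(len(text)), float(len(text.split()))]


class ToyImageEncoder:
    """Hypothetical stand-in: average byte value of the raw image data."""

    def encode(self, payload: object) -> list[float]:
        data = bytes(payload)
        return [sum(data) / len(data) if data else 0.0]


class MultimodalPipeline:
    def __init__(self) -> None:
        self._encoders: dict[str, Encoder] = {}

    def register(self, modality: str, encoder: Encoder) -> None:
        # Swapping a component is a one-line change: register a different encoder.
        self._encoders[modality] = encoder

    def run(self, inputs: dict[str, object]) -> list[float]:
        features: list[float] = []
        for modality, payload in inputs.items():
            if modality not in self._encoders:
                raise KeyError(f"no encoder registered for modality {modality!r}")
            features.extend(self._encoders[modality].encode(payload))
        # A real system would fuse these features; here they are simply concatenated.
        return features
```

The point of the design is that the pipeline only depends on the `encode` interface, so a vision transformer or audio encoder can replace a toy encoder without touching the orchestration code.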

Actionable Tips and Lessons Learned

Based on the experiences of Meta and other leading organizations, here are practical tips and lessons for AI teams embarking on the journey to scale multimodal, autonomous AI pipelines:

Start with a Clear Use Case: Identify a specific business problem that can benefit from multimodal AI, and focus on delivering value early.

Invest in Data Quality: High-quality, well-aligned data is the foundation of successful multimodal systems. Invest in robust data collection, cleaning, and annotation processes.

Embrace Modularity: Design systems with modular, interchangeable components to facilitate updates and scalability. In an agentic RAG system, for example, the retriever, the model, and each tool can then be upgraded or replaced independently.

Leverage Pretrained Models: Use pretrained models for each modality to accelerate development and improve performance, rather than training every component from scratch.

Monitor Continuously: Implement real-time monitoring and feedback loops to detect issues early and iterate quickly, so that AI systems stay reliable and efficient.

Foster Cross-Functional Collaboration: Involve stakeholders from across the organization to ensure that technical decisions are aligned with business goals.

Prioritize Security and Compliance: Protect sensitive data and ensure that systems comply with relevant regulations. This matters especially for agentic RAG systems, which pull from internal data sources and may act on what they retrieve.

Iterate and Learn: Treat each deployment as a learning opportunity, and use feedback to drive continuous improvement.
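The data-alignment challenge and the "Invest in Data Quality" tip can be turned into a small pre-training check. This sketch assumes a hypothetical dataset layout in which images and captions are stored separately and linked by a shared sample ID; it pairs them, treats blank captions as missing, and reports orphans on either side.

```python
def align_pairs(
    image_ids: list[str], captions: dict[str, str]
) -> tuple[list[tuple[str, str]], list[str], list[str]]:
    """Pair images with captions by sample ID and report anything unmatched."""
    images = set(image_ids)
    # Whitespace-only captions are treated as missing.
    valid = {sample_id: text.strip() for sample_id, text in captions.items() if text.strip()}
    caption_ids = set(valid)
    paired = [(sample_id, valid[sample_id]) for sample_id in sorted(images & caption_ids)]
    missing_caption = sorted(images - caption_ids)  # images with no usable caption
    missing_image = sorted(caption_ids - images)    # captions pointing at no image
    return paired, missing_caption, missing_image
```

Running a check like this before every training job makes misalignment visible early, when it is cheap to fix.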

Conclusion

Building scalable multimodal AI pipelines is one of the most exciting and challenging frontiers in artificial intelligence today. By leveraging the latest frameworks, tools, and deployment strategies—and applying software engineering best practices—teams can build systems that are not only powerful but also reliable, secure, and aligned with business objectives. The journey is complex, but the rewards are substantial: richer user experiences, new revenue streams, and a competitive edge in an increasingly AI-driven world. For AI practitioners, software architects, and technology leaders, the message is clear: embrace the challenge, invest in collaboration and continuous learning, and lead the way in the multimodal AI revolution.

In this post, we demonstrate an example of building an agentic RAG application using the LlamaIndex framework. LlamaIndex is a framework that connects foundation models (FMs) with external data sources. It helps ingest, structure, and retrieve information from databases #AI #ML #Automation
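The "agentic" part of agentic RAG means the model decides which tool a query needs instead of always retrieving. The sketch below shows that control flow in plain Python; it is not the LlamaIndex API. The keyword-based `choose_tool` function is a hypothetical stand-in for the LLM's tool-selection step, and the knowledge base and both tools are toys.

```python
import re
from typing import Callable

# Hypothetical toy knowledge base standing in for an indexed document store.
KNOWLEDGE_BASE = {
    "refund policy": "Refunds are accepted within 30 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def retrieve(query: str) -> str:
    """Toy retriever: return the first passage whose key appears in the query."""
    q = query.lower()
    for key, passage in KNOWLEDGE_BASE.items():
        if key in q:
            return passage
    return "No relevant document found."


def calculate(query: str) -> str:
    """Toy calculator: add every non-negative integer in the query."""
    return str(sum(int(n) for n in re.findall(r"\d+", query)))


TOOLS: dict[str, Callable[[str], str]] = {"retrieve": retrieve, "calculate": calculate}


def choose_tool(query: str) -> str:
    # Hypothetical stand-in for an LLM deciding which tool fits the query.
    return "calculate" if re.search(r"\b(add|sum|plus)\b", query.lower()) else "retrieve"


def run_agent(query: str) -> str:
    tool_name = choose_tool(query)
    observation = TOOLS[tool_name](query)
    # A real agent would hand the observation back to the LLM to compose the answer.
    return f"[{tool_name}] {observation}"
```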

Hire AI Experts for Advanced Data Retrieval with Intelligent RAG Solutions

In today’s data-driven world, fast and accurate information retrieval is critical for business success. Retrieval-Augmented Generation (RAG) is an advanced AI approach that combines the strengths of retrieval-based search and generative models to produce highly relevant, context-aware responses.

At Prosperasoft, we help organizations harness the power of RAG to improve decision-making, drive engagement, and unlock deeper insights from their data.

Why Choose RAG for Your Business?

Traditional AI models often rely on static datasets, which can limit their relevance and accuracy. RAG bridges this gap by integrating real-time data retrieval with language generation capabilities. This means your AI system doesn’t just rely on pre-trained knowledge—it actively fetches the most current and relevant information before generating a response. The result? Faster query processing, improved accuracy, and significantly enhanced user experience.
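The retrieve-then-generate flow described above can be sketched in a few lines. This is an illustrative assumption, not Prosperasoft's implementation: a toy keyword-overlap scorer stands in for a real retriever, and the code only assembles the grounded prompt that would then be sent to a language model.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_passages(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank passages by how many query words they share (toy retriever)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p)), p) for p in passages]
    # Keep passages with at least one shared word, best first (ties keep input order).
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [p for _, p in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Put the freshly retrieved context in front of the user's question."""
    context = "\n".join(f"- {p}" for p in top_passages(query, passages))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

Because retrieval runs at query time, updating the passage list is enough to change what the model sees; no retraining is involved.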

At Prosperasoft, we deliver 85% faster query processing, 40% better data accuracy, and up to 5X higher user engagement through our custom-built RAG solutions. Whether you're a growing startup or a large enterprise, our intelligent systems are designed to scale and evolve with your data needs.

End-to-End RAG Expertise from Prosperasoft

Our team of offshore AI experts brings deep technical expertise and hands-on experience with cutting-edge tools like Amazon SageMaker, PySpark, LlamaIndex, Hugging Face, LangChain, and more. We specialize in:

Intelligent Data Retrieval Systems – Systems designed to fetch and prioritize the most relevant data in real time.

Real-Time Data Integration – Seamlessly pulling live data into your workflows for dynamic insights.

Advanced AI-Powered Responses – Combining large language models with retrieval techniques for context-rich answers.

Custom RAG Model Development – Tailoring every solution to your specific business objectives.

Enhanced Search Functionality – Boosting the relevance and precision of your internal and external search tools.

Model Optimization and Scalability – Ensuring performance, accuracy, and scalability across enterprise operations.

Empower Your Business with Smarter AI

Whether you need to optimize existing systems or build custom RAG models from the ground up, Prosperasoft provides a complete suite of services—from design and development to deployment and ongoing optimization. Our end-to-end RAG solution implementation ensures your AI infrastructure is built for long-term performance and real-world impact.

Ready to take your AI to the next level? Outsource RAG development to Prosperasoft and unlock intelligent, real-time data retrieval solutions that drive growth, efficiency, and smarter decision-making.

#Hire AI Experts#content generation#augmented AI#RAG technology#AI-powered content#content automation#hire#outsourcing

Generative AI Platform Development Explained: Architecture, Frameworks, and Use Cases That Matter in 2025

The rise of generative AI is no longer confined to experimental labs or tech demos—it’s transforming how businesses automate tasks, create content, and serve customers at scale. In 2025, companies are not just adopting generative AI tools—they’re building custom generative AI platforms that are tailored to their workflows, data, and industry needs.

This blog dives into the architecture, leading frameworks, and powerful use cases of generative AI platform development in 2025. Whether you're a CTO, AI engineer, or digital transformation strategist, this is your comprehensive guide to making sense of this booming space.

Why Generative AI Platform Development Matters Today

Generative AI has matured from narrow use cases (like text or image generation) to enterprise-grade platforms capable of handling complex workflows. Here’s why organizations are investing in custom platform development:

Data ownership and compliance: Public APIs like ChatGPT don’t offer the privacy guarantees many businesses need.

Domain-specific intelligence: Off-the-shelf models often lack nuance for healthcare, finance, law, etc.

Workflow integration: Businesses want AI to plug into their existing tools—CRMs, ERPs, ticketing systems—not operate in isolation.

Customization and control: A platform allows fine-tuning, governance, and feature expansion over time.

Core Architecture of a Generative AI Platform

A generative AI platform is more than just a language model with a UI. It’s a modular system with several architectural layers working in sync. Here’s a breakdown of the typical architecture:

1. Foundation Model Layer

This is the brain of the system, typically built on:

LLMs (e.g., GPT-4, Claude, Mistral, Llama 3)

Multimodal models (for image, text, audio, or code generation)

You can:

Use open-source models

Fine-tune foundation models

Integrate multiple models via a routing system
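"Integrate multiple models via a routing system" can mean many things; one simple reading is a rule-based router that sends each request to the cheapest model able to handle it. The model names, capability sets, and per-token costs below are placeholders, not real products or pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str
    capabilities: frozenset[str]
    cost_per_1k_tokens: float  # placeholder figure, not real pricing


# Hypothetical model catalogue.
MODELS = [
    ModelSpec("small-open-model", frozenset({"text"}), 0.1),
    ModelSpec("large-hosted-model", frozenset({"text", "code"}), 1.0),
    ModelSpec("multimodal-model", frozenset({"text", "image"}), 2.0),
]


def route(required: set[str], models: list[ModelSpec] = MODELS) -> ModelSpec:
    """Return the cheapest model whose capabilities cover everything the request needs."""
    candidates = [m for m in models if required <= m.capabilities]
    if not candidates:
        raise ValueError(f"no model supports {sorted(required)}")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)
```

Production routers often add latency, quality scores, or a classifier model to the decision, but the shape stays the same: filter by capability, then rank.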

2. Retrieval-Augmented Generation (RAG) Layer

This layer allows dynamic grounding of the model in your enterprise data using:

Vector databases (e.g., Pinecone, Weaviate, FAISS)

Embeddings for semantic search

Document pipelines (PDFs, SQL, APIs)

RAG helps keep generative outputs factual, current, and contextual.
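Under the hood, semantic search is a nearest-neighbour lookup over embedding vectors. The sketch below is a brute-force, in-memory version using NumPy and cosine similarity. The 3-dimensional vectors in the usage example are made-up stand-ins for real embedding-model output; a vector database such as Pinecone, Weaviate, or FAISS would replace the linear scan with an index built for scale.

```python
import numpy as np


class InMemoryVectorIndex:
    """Brute-force cosine-similarity search over stored embeddings."""

    def __init__(self, dim: int) -> None:
        self.vectors = np.empty((0, dim), dtype=np.float32)
        self.texts: list[str] = []

    def add(self, text: str, embedding) -> None:
        vec = np.asarray(embedding, dtype=np.float32)
        vec = vec / np.linalg.norm(vec)  # normalise so a dot product equals cosine similarity
        self.vectors = np.vstack([self.vectors, vec])
        self.texts.append(text)

    def search(self, query_embedding, k: int = 2) -> list[tuple[str, float]]:
        q = np.asarray(query_embedding, dtype=np.float32)
        q = q / np.linalg.norm(q)
        scores = self.vectors @ q
        best = np.argsort(-scores)[:k]  # indices of the k highest similarities
        return [(self.texts[i], float(scores[i])) for i in best]


index = InMemoryVectorIndex(dim=3)
index.add("invoice policy", [1.0, 0.0, 0.0])
index.add("travel policy", [0.0, 1.0, 0.0])
index.add("expense policy", [0.7, 0.7, 0.0])
results = index.search([1.0, 0.1, 0.0], k=2)
```

Here the query vector points mostly along the first axis, so "invoice policy" ranks first and "expense policy" second.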

3. Orchestration & Agent Layer

In 2025, most platforms include AI agents to perform tasks:

Execute multi-step logic

Query APIs

Take user actions (e.g., book, update, generate report)

Frameworks like LangChain, LlamaIndex, and CrewAI are widely used.
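Stripped of framework details, "execute multi-step logic, query APIs, take actions" is a loop that runs tool calls in order and feeds each result into the next step. The sketch below assumes a hypothetical, pre-computed plan (in a real agent an LLM would produce it, often one step at a time) and toy tools; it does not use the LangChain, LlamaIndex, or CrewAI APIs.

```python
from typing import Any, Callable


# Hypothetical tools: each reads the shared state dict and returns a value.
def lookup_order(state: dict[str, Any]) -> Any:
    orders = {"A-1": {"item": "laptop", "status": "delayed"}}  # toy stand-in for an API call
    return orders[state["order_id"]]


def draft_reply(state: dict[str, Any]) -> Any:
    order = state["lookup_order"]  # output of the previous step
    return f"Your {order['item']} is currently {order['status']}."


TOOLS: dict[str, Callable[[dict[str, Any]], Any]] = {
    "lookup_order": lookup_order,
    "draft_reply": draft_reply,
}


def execute_plan(plan: list[str], state: dict[str, Any]) -> dict[str, Any]:
    """Run each step in order, storing every result in state under the tool's name."""
    for step in plan:
        if step not in TOOLS:
            raise KeyError(f"unknown tool: {step}")
        state[step] = TOOLS[step](state)
    return state
```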

4. Data & Prompt Engineering Layer

The control center for:

Prompt templates

Tool calling

Memory persistence

Feedback loops for fine-tuning
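Two of these pieces, prompt templates and memory persistence, can be sketched with the standard library alone. The template text, the placeholder company name `ExampleCo`, and the fixed-size conversation window are assumptions for illustration; production systems usually persist memory in a database and may summarise older turns instead of discarding them.

```python
from collections import deque
from string import Template

# Hypothetical prompt template; $-placeholders are filled at request time.
SUPPORT_TEMPLATE = Template(
    "You are a $tone assistant for $company.\n"
    "Conversation so far:\n$history\n"
    "User: $question"
)


class ConversationMemory:
    """Keeps only the most recent turns so the prompt stays within a budget."""

    def __init__(self, max_turns: int = 3) -> None:
        self.turns: deque[str] = deque(maxlen=max_turns)  # oldest turns fall off automatically

    def add(self, speaker: str, text: str) -> None:
        self.turns.append(f"{speaker}: {text}")

    def render(self) -> str:
        return "\n".join(self.turns) if self.turns else "(none)"


def build_prompt(memory: ConversationMemory, question: str) -> str:
    # substitute() raises KeyError if any placeholder is left unfilled.
    return SUPPORT_TEMPLATE.substitute(
        tone="concise", company="ExampleCo", history=memory.render(), question=question
    )
```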

5. Security & Governance Layer

Enterprise-grade platforms include:

Role-based access

Prompt logging

Data redaction and PII masking

Human-in-the-loop moderation
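Data redaction and prompt logging usually meet at the same point: prompts are masked before they are logged or sent to an external model. The regular expressions below are deliberately simple assumptions (email addresses and US-style phone numbers only) and would miss many real PII formats, so treat this as a sketch rather than a complete PII filter.

```python
import logging
import re

# Simplified, assumed patterns; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}

logger = logging.getLogger("prompt_audit")


def redact(text: str) -> str:
    """Replace each PII match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def log_prompt(user_id: str, prompt: str) -> str:
    """Write only the redacted prompt to the audit log and return it for downstream use."""
    safe = redact(prompt)
    logger.info("user=%s prompt=%s", user_id, safe)
    return safe
```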

6. UI/UX & API Layer

This exposes the platform to users via:

Chat interfaces (Slack, Teams, Web apps)

APIs for integration with internal tools

Dashboards for admin controls

Popular Frameworks Used in 2025

Here's a quick overview of frameworks dominating generative AI platform development today, with each one's purpose and why it matters:

LangChain (agent orchestration & tool use): dominant for building AI workflows

LlamaIndex (indexing + RAG): powerful for knowledge-based apps

Ray + Hugging Face (scalable model serving): production-ready deployments

FastAPI (API backend for GenAI apps): lightweight and easy to scale

Pinecone / Weaviate (vector DBs): core for context-aware outputs

OpenAI Function Calling / Tools (tool use & plugin-like behavior): plug-in capabilities without agents

Guardrails.ai / Rebuff.ai (output validation): safe and filtered responses

Most Impactful Use Cases of Generative AI Platforms in 2025

Custom generative AI platforms are now being deployed across virtually every sector. Below are some of the most impactful applications:

1. AI Customer Support Assistants

Auto-resolve 70% of tickets using contextual data from the CRM and knowledge base

Integrate with Zendesk, Freshdesk, Intercom

Use RAG to pull product info dynamically

2. AI Content Engines for Marketing Teams

Generate email campaigns, ad copy, and product descriptions

Align with tone, brand voice, and regional nuances

Automate A/B testing and SEO optimization

3. AI Coding Assistants for Developer Teams

Context-aware suggestions from internal codebase

Documentation generation, test script creation

Debugging assistant with natural language inputs

4. AI Financial Analysts for Enterprise

Generate earnings summaries, budget predictions

Parse and summarize internal spreadsheets

Draft financial reports with integrated charts

5. Legal Document Intelligence

Draft NDAs, contracts based on templates

Highlight risk clauses

Translate legal jargon to plain language

6. Enterprise Knowledge Assistants

Index all internal documents, chat logs, SOPs

Let employees query processes instantly

Enforce role-based visibility
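Role-based visibility is usually enforced by filtering retrieved documents against the requester's roles before anything reaches the model. A minimal sketch, assuming each indexed document carries a hypothetical `allowed_roles` metadata field:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # hypothetical access-control metadata


def visible_results(results: list[Document], user_roles: set[str]) -> list[Document]:
    """Drop any retrieved document the user holds no matching role for."""
    # Filtering happens before prompt assembly, so hidden text never reaches the LLM.
    return [d for d in results if d.allowed_roles & user_roles]
```

Filtering after retrieval is the simplest option; many vector databases can also apply the same metadata filter inside the search itself, which avoids wasting top-k slots on documents the user cannot see.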

Challenges in Generative AI Platform Development

Despite the promise, building a generative AI platform isn’t plug-and-play. Key challenges include:

Data quality and labeling: Garbage in, garbage out.

Latency in RAG systems: Slow response times affect UX.

Model hallucination: Even with context, LLMs can fabricate.

Scalability issues: From GPU costs to query limits.

Privacy & compliance: Especially in finance, healthcare, legal sectors.

What’s New in 2025?

Private LLMs: Enterprises increasingly train or fine-tune their own models (via platforms like MosaicML, Databricks).

Multi-Agent Systems: Agent networks are collaborating to perform tasks in parallel.

Guardrails and AI Policy Layers: Compliance-ready platforms with audit logs, content filters, and human approvals.

Auto-RAG Pipelines: Tools now auto-index and update knowledge bases without manual effort.
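Automatic re-indexing generally rests on change detection: only documents whose content changed since the last run get re-embedded. A minimal sketch using SHA-256 content hashes; the `reindex` and `delete` callbacks are hypothetical hooks where a real pipeline would chunk, embed, and upsert into (or remove from) its vector store.

```python
import hashlib
from typing import Callable


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def sync_index(
    documents: dict[str, str],
    seen_hashes: dict[str, str],
    reindex: Callable[[str, str], None],
    delete: Callable[[str], None],
) -> dict[str, str]:
    """Re-index new or changed documents, delete removed ones, return the updated hashes."""
    current = {doc_id: content_hash(text) for doc_id, text in documents.items()}
    for doc_id, digest in current.items():
        if seen_hashes.get(doc_id) != digest:  # new document or changed content
            reindex(doc_id, documents[doc_id])
    for doc_id in seen_hashes.keys() - current.keys():  # documents that disappeared
        delete(doc_id)
    return current
```

Storing the returned hashes between runs is what lets a scheduled job keep the knowledge base current without re-embedding everything.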

Conclusion

Generative AI platform development in 2025 is not just about building chatbots—it's about creating intelligent ecosystems that plug into your business, speak your data, and drive real ROI. With the right architecture, frameworks, and enterprise-grade controls, these platforms are becoming the new digital workforce.

LlamaCloud - LlamaIndex

LlamaCloud integrates seamlessly with the open-source library, providing parsing, indexes, and retrievers to build advanced RAG, agents, and more.

AI Reading List 6/28/2023

What I’m reading today:

Semantic Search with Few Lines of Code – Use the sentence transformers library to implement a semantic search engine in minutes

Choosing the Right Embedding Model: A Guide for LLM Applications – Optimizing LLM Applications with Vector Embeddings, affordable alternatives to OpenAI’s API and how we move from LlamaIndex to Langchain

Making a Production LLM Prompt for…

A while back, I wanted to go deep into AI agents—how they work, how they make decisions, and how to build them. But every good resource seemed locked behind a paywall.

Then, I found a goldmine of free courses.

No fluff. No sales pitch. Just pure knowledge from the best in the game. Here’s what helped me (and might help you too):

1️⃣ Hugging Face – Covers theory, design, and hands-on practice with AI agent libraries like smolagents, LlamaIndex, and LangGraph.

2️⃣ LangGraph – Teaches AI agent debugging, multi-step reasoning, and search capabilities—straight from the experts at LangChain and Tavily.

3️⃣ LangChain – Focuses on LCEL (LangChain Expression Language) to build custom AI workflows faster.

4️⃣ crewAI – Shows how to create teams of AI agents that work together on complex tasks. Think of it as AI teamwork at scale.

5️⃣ Microsoft & Penn State – Introduces AutoGen, a framework for designing AI agents with roles, tools, and planning strategies.

6️⃣ Microsoft AI Agents Course – A 10-lesson deep dive into agent fundamentals, available in multiple languages.

7️⃣ Google’s AI Agents Course – Teaches multi-modal AI, API integrations, and real-world deployment using Gemini 1.5, Firebase, and Vertex AI.

If you’ve ever wanted to build AI agents that actually work in the real world, this list is all you need to start. No excuses. Just free learning.

Which of these courses are you diving into first? Let’s talk!

#ai#cizotechnology#innovation#mobileappdevelopment#appdevelopment#ios#techinnovation#app developers#iosapp#mobileapps#AI#MachineLearning#FreeLearning
