#Marvin Minsky

Text

ChatGPT and BARD: Right Call or Mistake?

#aperturaintelectual #vmrfaintelectual

@victormanrf

@Victor M. Reyes Ferriz

@vicmanrf

@victormrferriz

Víctor Manuel Reyes Ferriz

FEBRUARY 14, 2023. ChatGPT and BARD: Right Call or Mistake?

BY: VÍCTOR MANUEL REYES FERRIZ

Ever since humankind appeared on this planet, it has had to develop its best and greatest skills and qualities to invent all manner of artifacts, clothing, tools, weapons, and even pretexts to make life easier, always immersed in the idea that this is for the sake of progress,…

#AperturaIntelectual#vmrfaintelectual#Artificial Intelligence#BARD#Chatbot Tay#ChatGPT#Claude Shannon#Falta de legislación#Inteligencia Artificial#John McCarthy#LaMDA#Límites legales#Límites morales#Machine learning#Marvin Minsky#Microsoft#Nathaniel Rochester#OpenAI#Twitter#UNESCO#Víctor Manuel Reyes Ferriz#VMRF

2 notes

Text

Art Like the Soul

“Feu d’ombre” ©Philippe Quéau (Art Κέω) 2024

"What I love about darkness is that it (re)calls the light." Memoirs of an Illuminated One, Constantinople, 1452

According to some, the soul could be compared to a spark made of invisible light. Why not? Anything is possible. But, on reflection, I do not think this metaphor is apt. In mine (my soul, that is), the dark…

0 notes

Text

Special FUCK You dedicated to Marvin Minsky

1 note

Text

Minsky's MA-3 Arm with Belgrade Hand

Marvin Minsky, a pioneer in artificial intelligence research and co-founder of the MIT Artificial Intelligence Laboratory, designed this robotic arm with the aim of developing a computer system that could see and independently locate, grasp, and manipulate objects. Fabricated by William Bennett and used between 1965 and 1972, this “Tentacle Arm” could be controlled by a digital PDP-6 computer, was powered by hydraulics, had twelve joints (8 degrees of freedom), and was strong enough to lift a person. This work inspired Minsky's seminal 1986 book The Society of Mind, which explored the nature of intelligence and the diversity of mechanisms involved in thought, memory, and language. (Source: MIT Museum.)

#belgrade hand#robotics#cybernetics#marvin minsky#MIT#MIT museum#MIT AI Lab#MIT Artificial Intelligence Laboratory#MIT AI Laboratory#AI#artificial intelligence#prosthetics

1 note

Text

Marvin Minsky – The Society of Mind (Zihin Toplumu, 2024)

In this book, Marvin Minsky, one of the fathers of computer science and one of the founders of the MIT Artificial Intelligence Laboratory, proposes a revolutionary answer to a very old question: "How does the mind work?"

In Minsky's depiction, the mind is a "society" made up of tiny components that are themselves mindless.

Presenting a reflection of this theory, Minsky boldly… 'The Society of Mind'…

0 notes

Link

The billionaires club

#Jeffrey Epstein#financial crime#JP Morgan#Naom Chomsky#Marvin Minsky#Bill Gates#MIT#sex trafficking#technology#eugenics#transhumanism

1 note

Link

The scientist Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, having founded the MIT AI Lab in 1970. Since the 1950s, his work involved trying to uncover human thinking processes and replicate them in machines. [Listener: Christopher Sykes; date recorded: 2011]

TRANSCRIPT: I think something like the Society of Mind theory is necessary if you’re going to try to understand anything like human intelligence. Just as you need some theory like that to understand any aspect of biology... 'cause consider any animal. An animal is made of organs, a liver and pancreas and stomach and lungs and heart. And each of those systems has evolved to be good at some particular way of doing something or some particular function or solving some particular problem. Of course, they’re all related to survival, but that doesn’t mean that the animal has a survival instinct. It’s a wonderful paradox. Many people say, well the important thing about a living thing is that it has a survival instinct. That’s why it eats, to stay alive. And that’s why it reproduces, so that the species... but there really isn’t any survival instinct. There’s just a collection of mechanisms, all of which help to solve problems in different situations.

It seems to me that it’s the same with the mind. That in order to survive or whatever, you have to solve and deal with different situations. And each situation involves some collection of problems to solve. So, a successful animal or the product of an evolution is almost... when it’s successful, is to develop a society of different methods for dealing with situations.

0 notes

Text

No computer has ever been designed that is evew awawe of what it's doing; but most of the time, we awen't either.

Elmer Fudd

1 note

Text

so if I've inferred from history correctly here, deep learning was really invented in the 60s by people like Marvin Minsky and the primary bottleneck was that we didn't have fancy enough computer hardware to do it until the 2000s and 2010s

172 notes

Text

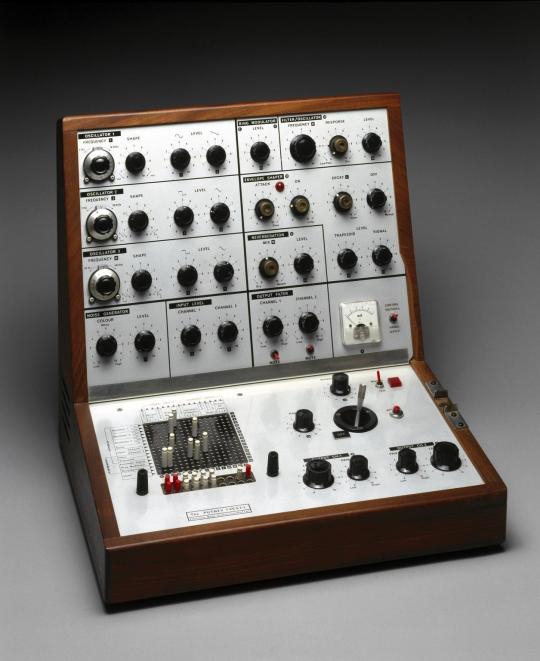

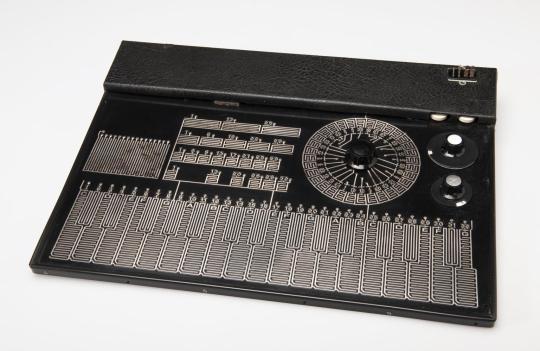

1- V.C.S. 3 music synthesizer made by Electronic Music Studios, London Limited, London, England, 1970

2- Triadex Muse algorithmic music generator. Invented by Edward Fredkin and Marvin Minsky and manufactured by Triadex Inc., Newton, Massachusetts, USA, in 1971-1972.

3- Model Synthi K, touch operated keyboard for acoustic synthesizer, made by Electronic Music Studios (London) Ltd., London, England, c.1971.

281 notes

Text

Those nuclear bomb Bing transcripts are insanely impressive to me, I can't believe this kind of thing is actually possible now.

Like, in 1968 when Kubrick asked Marvin Minsky what the state of AI would be like in 2001 they came up with HAL9000, and then in the actual year 2001 we had realized a natural language interface like that is completely unrealistic and nobody knew even where to start, and now suddenly they arrived.

Of course, in 1968 the expectation was that it would be achieved by understanding how natural intelligence works via cognitive science etc, and now we have a hundred trillion floating point numbers and still don't understand anything at all. The bitter lesson indeed.

127 notes

Text

Moravec's paradox is the observation by artificial intelligence and robotics researchers that, contrary to traditional assumptions, reasoning requires very little computation, but sensorimotor and perception skills require enormous computational resources. The principle was articulated by Hans Moravec, Rodney Brooks, Marvin Minsky and others in the 1980s. Moravec wrote in 1988, "it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility."

104 notes

Text

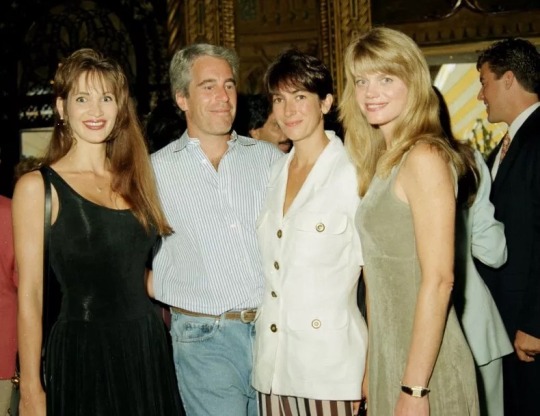

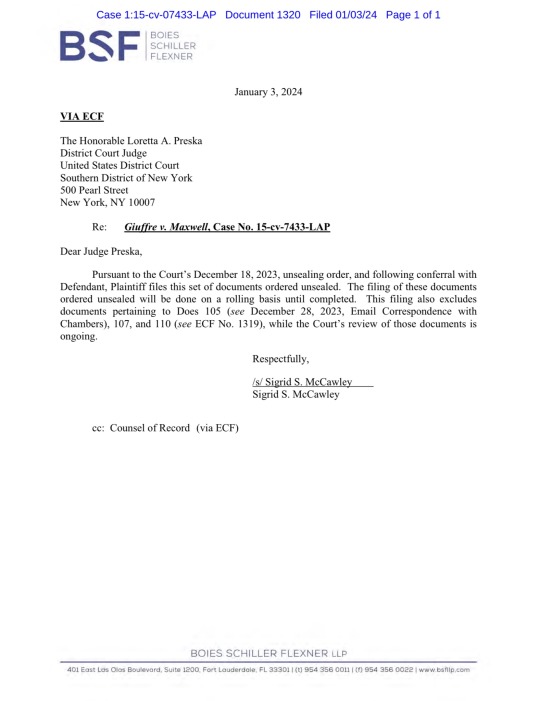

(Left to right) Deborah Blohm, Jeffrey Epstein, Ghislaine Maxwell and Gwendolyn Beck at a party at the Mar-a-Lago club, Palm Beach, Florida, 1995. The names of former associates and victims of deceased sex offender Epstein have been released. AFP/Getty Images

Nearly 90 Names Were Included In The Documents, With Four Redacted.

Ghislaine Maxwell

Virginia Lee Roberts Giuffre

Kathy Alexander

Miles Alexander

James Michael Austrich

Philip Barden

REDACTED

Cate Blanchett

David Boies

Laura Boothe

Evelyn Boulet

Rebecca Boylan

Joshua Bunner

Naomi Campbell

Carolyn Casey

Paul Cassell

Sharon Churcher

Bill Clinton

David Copperfield

Alexandra Cousteau

Cameron Diaz

Leonardo DiCaprio

Alan Dershowitz

Dr. Mona Devanesan

REDACTED

Bradley Edwards

Amanda Ellison

Cimberly Espinosa

Jeffrey Epstein

Annie Farmer

Marie Farmer

Alexandra Fekkai

Crystal Figueroa

Anthony Figueroa

Louis Freeh

Eric Gany

Meg Garvin

Sheridan Gibson-Butte

Robert Giuffre

Al Gore

Ross Gow

Fred Graff

Philip Guderyon

REDACTED

Shannon Harrison

Stephen Hawking

Victoria Hazel

Brittany Henderson

Brett Jaffe

Michael Jackson

Carol Roberts Kess

Dr. Karen Kutikoff

Peter Listerman

George Lucas

Tony Lyons

Bob Meister

Jamie A. Melanson

Lynn Miller

Marvin Minsky

REDACTED

David Mullen

Joe Pagano

Mary Paluga

J. Stanley Pottinger

Joseph Recarey

Michael Reiter

Jason Richards

Bill Richardson

Sky Roberts

Scott Rothstein

Forest Sawyer

Doug Schoetlle

Kevin Spacey

Cecilia Stein

Mark Tafoya

Brent Tindall

Kevin Thompson

Donald Trump

Ed Tuttle

Emma Vaghan

Kimberly Vaughan-Edwards

Cresenda Valdes

Anthony Valladares

Maritza Vazquez

Vicky Ward

Jarred Weisfeld

Courtney Wild

Bruce Willis

Daniel Wilson

Andrew Albert Christian Edwards, Duke of York

#Jeffrey Epstein#Fanancier#List of Culprits#Ghislaine Maxwell#Mar-a-Lago#Palm Beach 🏝️ 🏖️#Florida#Donald J. Trump#Sex Offenders 😂😂😂#Bill Clinton#Andrew Albert Christian Edwards Duke of York

14 notes

Text

Shannon's Hand useless machine

Useless machine

From Wikipedia, the free encyclopedia

A useless machine or useless box is a device whose only function is to turn itself off. The best-known useless machines are those inspired by Marvin Minsky's design, in which the device's sole function is to switch itself off by operating its own "off" switch. Such machines were popularized commercially in the 1960s, sold as an amusing engineering hack, or as a joke.

More elaborate devices and some novelty toys, which have an obvious entertainment function, have been based on these simple useless machines.

History

The Italian artist Bruno Munari began building "useless machines" (macchine inutili) in the 1930s. He was a "third generation" Futurist and did not share the first generation's boundless enthusiasm for technology but sought to counter the threats of a world under machine rule by building machines that were artistic and unproductive.[1]

The version of the useless machine that became famous in information theory (basically a box with a simple switch which, when turned "on", causes a hand or lever to appear from inside the box that switches the machine "off" before disappearing inside the box again[2]) appears to have been invented by MIT professor and artificial intelligence pioneer Marvin Minsky, while he was a graduate student at Bell Labs in 1952.[3] Minsky dubbed his invention the "ultimate machine", but that sense of the term did not catch on.[3] The device has also been called the "Leave Me Alone Box".[4]
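The switch-and-lever behavior described above can be sketched as a tiny state model. This is only an illustrative toy, not a model of Minsky's or Shannon's actual hardware; the class name `UselessMachine` and its attributes are hypothetical names chosen for the example.

```python
class UselessMachine:
    """Toy model of a box whose only response to being switched on is to switch itself off."""

    def __init__(self):
        self.switch_on = False   # position of the toggle switch
        self.hand_out = False    # whether the hand or lever is outside the box
        self.log = []            # ordered record of what happened

    def flip_on(self):
        """A person turns the switch on; the machine reacts immediately."""
        self.switch_on = True
        self.log.append("switch on")
        self._respond()

    def _respond(self):
        # The hand emerges, flips the switch off, then retreats into the box.
        self.hand_out = True
        self.log.append("hand emerges")
        self.switch_on = False
        self.log.append("switch off")
        self.hand_out = False
        self.log.append("hand retreats")


machine = UselessMachine()
machine.flip_on()
print(machine.log)
```

However many times `flip_on` is called, the model always ends in the same resting state, with the switch off and the hand hidden, which is the whole point of the device.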

Minsky's mentor at Bell Labs, information theory pioneer Claude Shannon (who later also became an MIT professor), made his own versions of the machine. He kept one on his desk, where science fiction author Arthur C. Clarke saw it. Clarke later wrote, "There is something unspeakably sinister about a machine that does nothing—absolutely nothing—except switch itself off", and he was fascinated by the concept.[3]

Minsky also invented a "gravity machine" that would ring a bell if the gravitational constant were to change, a theoretical possibility that is not expected to occur in the foreseeable future.[3]

Commercial products

In the 1960s, a novelty toy maker called "Captain Co." sold a "Monster Inside the Black Box", featuring a mechanical hand that emerged from a featureless plastic black box and flipped a toggle switch, turning itself off. This version may have been inspired in part by "Thing", the disembodied hand featured in the television sitcom The Addams Family.[3] Other versions have been produced.[5] In their conceptually purest form, these machines do nothing except switch themselves off.

It is claimed that Don Poynter, who graduated from the University of Cincinnati in 1949 and founded Poynter Products, Inc., first produced and sold the "Little Black Box", which simply switched itself off. He then added the coin snatching feature, dubbed his invention "The Thing", arranged licensing with the producers of the television show, The Addams Family, and later sold "Uncle Fester's Mystery Light Bulb" as another show spinoff product.[6][7] Robert J. Whiteman, owner and president of Liberty Library Corporation, also claims credit for developing "The Thing".[8][9] (Both companies were later to be co-defendants in landmark litigation initiated by Theodor Geisel ("Dr. Seuss") over copyright issues related to figurines.)[10][6]

Both the plain black box and the bank version were widely sold by Spencer Gifts, and appeared in its mail-order catalogs through the 1960s and early 1970s. As of 2015, a version of the coin snatching black box is being sold as the "Black Box Money Trap Bank" or "Black Box Bank".

Do-it-yourself versions of the useless machine (often modernized with microprocessor controls) have been featured in a number of web videos[11] and inspired more complex machines that are able to move or which use more than one switch.[12] As of 2015, there are several completed or kit form devices being offered for sale.[13]

Cultural references

In 2009, the artist David Moises exhibited his reconstruction of The Ultimate Machine aka Shannon's Hand, and explained the interactions of Claude Shannon, Marvin Minsky, and Arthur C. Clarke regarding the device.[14]

Episode 3 of the third season of the FX show Fargo, "The Law of Non-Contradiction", features a useless machine[15] (and, in a story within the story, an android named MNSKY after Marvin Minsky).[16]

See also

Arthur Ganson

Discard Protocol

Jean Tinguely

Overengineering

Rube Goldberg machine

Theo Jansen

Trammel of Archimedes

References

[1] Munari, Bruno (15 September 2015). "official website, "Useless Machines"".

[2] Moises, David (15 September 2015). ""The Ultimate Machine nach Claude E. Shannon" (video)".

[3] Pesta, Abigail (12 March 2013). "Looking for Something Useful to Do With Your Time? Don't Try This". Wall Street Journal. p. 1. Retrieved 17 July 2017.

[4] Seedman, Michael. "(Homepage)". Leave Me Alone Box. LeaveMeAloneBox. Archived from the original on 16 March 2013. Retrieved 14 March 2013.

[5] "Little Black Box". Grand Illusions. Archived from the original on 29 January 2013. Retrieved 14 March 2013.

[6] Reiselman, Deborah (2012). "Alum Don Poynter gains novelty reputation on campus and off". UC Magazine. University of Cincinnati. Retrieved 15 March 2013.

[7] Cancilla, Sam. "Little Black Box". Sam's Toybox. Sam Cancilla. Retrieved 15 March 2013.

[8] "About". Liberty: The Stories Never Die!. Liberty Library Corporation. 2012. Archived from the original on 15 April 2013. Retrieved 14 March 2013.

[9] Whitehill, Bruce (21 July 2011). "Bettye-B". The Big Game Hunter. Retrieved 14 March 2013.

[10] Nel, Philip (2003). "The Disneyfication of Dr Seuss: faithful to profit, one hundred percent?". Cultural Studies. 17 (5). Taylor and Francis, Ltd.: 579–614. doi:10.1080/0950238032000126847. ISBN 9780203643815. S2CID 144293531.

[11] Seedman, Michael. "What Others Have Done". Leave Me Alone Box. LeaveMeAloneBox. Archived from the original on 30 August 2013. Retrieved 14 March 2013.

[12] Fiessler, Andreas. "Useless Machine Advanced Edition". Retrieved 20 October 2015.

[13] "[search results: "useless machine kit"]". Amazon. Amazon.com. Retrieved 2015-03-11.

[14] Krausse, Joachim; et al. (2011). David Moises: Stuff Works (in English and German). Nürnberg: Verlag für moderne Kunst. pp. 30–31. ISBN 978-3-86984-229-5.

[15] Travers, Ben (3 May 2017). "'Fargo' Review: Carrie Coon Heads to La La Land in Bananas Episode That Upends Expectations For Year 3". Indiewire.

[16] Grubbs, Jefferson (3 May 2017). "Is The Planet Wyh a Real Book? Fargo Features an Pulp Sci-Fi Hit". Bustle.

4 notes

Text

AI is here – and everywhere: 3 AI researchers look to the challenges ahead in 2024

by Anjana Susarla, Professor of Information Systems at Michigan State University; Casey Fiesler, Associate Professor of Information Science at the University of Colorado Boulder; and Kentaro Toyama, Professor of Community Information at the University of Michigan

2023 was an inflection point in the evolution of artificial intelligence and its role in society. The year saw the emergence of generative AI, which moved the technology from the shadows to center stage in the public imagination. It also saw boardroom drama in an AI startup dominate the news cycle for several days. And it saw the Biden administration issue an executive order and the European Union pass a law aimed at regulating AI, moves perhaps best described as attempting to bridle a horse that’s already galloping along.

We’ve assembled a panel of AI scholars to look ahead to 2024 and describe the issues AI developers, regulators and everyday people are likely to face, and to give their hopes and recommendations.

Casey Fiesler, Associate Professor of Information Science, University of Colorado Boulder

2023 was the year of AI hype. Regardless of whether the narrative was that AI was going to save the world or destroy it, it often felt as if visions of what AI might be someday overwhelmed the current reality. And though I think that anticipating future harms is a critical component of overcoming ethical debt in tech, getting too swept up in the hype risks creating a vision of AI that seems more like magic than a technology that can still be shaped by explicit choices. But taking control requires a better understanding of that technology.

One of the major AI debates of 2023 was around the role of ChatGPT and similar chatbots in education. This time last year, most relevant headlines focused on how students might use it to cheat and how educators were scrambling to keep them from doing so – in ways that often do more harm than good.

However, as the year went on, there was a recognition that a failure to teach students about AI might put them at a disadvantage, and many schools rescinded their bans. I don’t think we should be revamping education to put AI at the center of everything, but if students don’t learn about how AI works, they won’t understand its limitations – and therefore how it is useful and appropriate to use and how it’s not. This isn’t just true for students. The more people understand how AI works, the more empowered they are to use it and to critique it.

So my prediction, or perhaps my hope, for 2024 is that there will be a huge push to learn. In 1966, Joseph Weizenbaum, the creator of the ELIZA chatbot, wrote that machines are “often sufficient to dazzle even the most experienced observer,” but that once their “inner workings are explained in language sufficiently plain to induce understanding, its magic crumbles away.” The challenge with generative artificial intelligence is that, in contrast to ELIZA’s very basic pattern matching and substitution methodology, it is much more difficult to find language “sufficiently plain” to make the AI magic crumble away.
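To give a sense of how plain that older approach can be made, here is a minimal sketch of ELIZA-style pattern matching and substitution. It is not Weizenbaum's original script: the rules in `RULES`, the word swaps in `REFLECTIONS`, and the fallback reply are all hypothetical, chosen only to illustrate the idea.

```python
import re

# Hypothetical rules for illustration; not taken from Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Swap first-person words for second-person ones so the echoed text reads naturally.
REFLECTIONS = {"my": "your", "me": "you", "i": "you", "am": "are"}

DEFAULT_REPLY = "Please tell me more."


def reflect(fragment: str) -> str:
    """Drop trailing punctuation and swap pronouns word by word."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(text: str) -> str:
    """Return the reply for the first matching rule, or a stock fallback."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I need a break from my job."))  # Why do you need a break from your job?
print(respond("The weather is nice."))         # Please tell me more.
```

Everything the program "knows" is visible in the two tables at the top, which is the kind of explanation that has no equally short counterpart for a large generative model.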

I think it’s possible to make this happen. I hope that universities that are rushing to hire more technical AI experts put just as much effort into hiring AI ethicists. I hope that media outlets help cut through the hype. I hope that everyone reflects on their own uses of this technology and its consequences. And I hope that tech companies listen to informed critiques in considering what choices continue to shape the future.

Kentaro Toyama, Professor of Community Information, University of Michigan

In 1970, Marvin Minsky, the AI pioneer and neural network skeptic, told Life magazine, “In from three to eight years we will have a machine with the general intelligence of an average human being.” With the singularity, the moment artificial intelligence matches and begins to exceed human intelligence – not quite here yet – it’s safe to say that Minsky was off by at least a factor of 10. It’s perilous to make predictions about AI.

Still, making predictions for a year out doesn’t seem quite as risky. What can be expected of AI in 2024? First, the race is on! Progress in AI had been steady since the days of Minsky’s prime, but the public release of ChatGPT in 2022 kicked off an all-out competition for profit, glory and global supremacy. Expect more powerful AI, in addition to a flood of new AI applications.

The big technical question is how soon and how thoroughly AI engineers can address the current Achilles’ heel of deep learning – what might be called generalized hard reasoning, things like deductive logic. Will quick tweaks to existing neural-net algorithms be sufficient, or will it require a fundamentally different approach, as neuroscientist Gary Marcus suggests? Armies of AI scientists are working on this problem, so I expect some headway in 2024.

Meanwhile, new AI applications are likely to result in new problems, too. You might soon start hearing about AI chatbots and assistants talking to each other, having entire conversations on your behalf but behind your back. Some of it will go haywire – comically, tragically or both. Deepfakes, AI-generated images and videos that are difficult to detect, are likely to run rampant despite nascent regulation, causing more sleazy harm to individuals and democracies everywhere. And there are likely to be new classes of AI calamities that wouldn’t have been possible even five years ago.

Speaking of problems, the very people sounding the loudest alarms about AI – like Elon Musk and Sam Altman – can’t seem to stop themselves from building ever more powerful AI. I expect them to keep doing more of the same. They’re like arsonists calling in the blaze they stoked themselves, begging the authorities to restrain them. And along those lines, what I most hope for 2024 – though it seems slow in coming – is stronger AI regulation, at national and international levels.

Anjana Susarla, Professor of Information Systems, Michigan State University

In the year since the unveiling of ChatGPT, the development of generative AI models is continuing at a dizzying pace. In contrast to ChatGPT a year back, which took in textual prompts as inputs and produced textual output, the new class of generative AI models are trained to be multi-modal, meaning the data used to train them comes not only from textual sources such as Wikipedia and Reddit, but also from videos on YouTube, songs on Spotify, and other audio and visual information. With the new generation of multi-modal large language models (LLMs) powering these applications, you can use text inputs to generate not only images and text but also audio and video.

Companies are racing to develop LLMs that can be deployed on a variety of hardware and in a variety of applications, including running an LLM on your smartphone. The emergence of these lightweight LLMs and open source LLMs could usher in a world of autonomous AI agents – a world that society is not necessarily prepared for.

These advanced AI capabilities offer immense transformative power in applications ranging from business to precision medicine. My chief concern is that such advanced capabilities will pose new challenges for distinguishing between human-generated content and AI-generated content, as well as pose new types of algorithmic harms.

The deluge of synthetic content produced by generative AI could unleash a world where malicious people and institutions can manufacture synthetic identities and orchestrate large-scale misinformation. A flood of AI-generated content primed to exploit algorithmic filters and recommendation engines could soon overpower critical functions such as information verification, information literacy and serendipity provided by search engines, social media platforms and digital services.

The Federal Trade Commission has warned about fraud, deception, infringements on privacy and other unfair practices enabled by the ease of AI-assisted content creation. While digital platforms such as YouTube have instituted policy guidelines for disclosure of AI-generated content, there’s a need for greater scrutiny of algorithmic harms from agencies like the FTC and lawmakers working on privacy protections such as the American Data Privacy & Protection Act.

A new bipartisan bill introduced in Congress aims to codify algorithmic literacy as a key part of digital literacy. With AI increasingly intertwined with everything people do, it is clear that the time has come to focus not on algorithms as pieces of technology but to consider the contexts the algorithms operate in: people, processes and society.

#technology#science#futuristic#artificial intelligence#deepfakes#chatgpt#chatbot#AI education#AI#Youtube

16 notes
