#Multi-Instance GPU


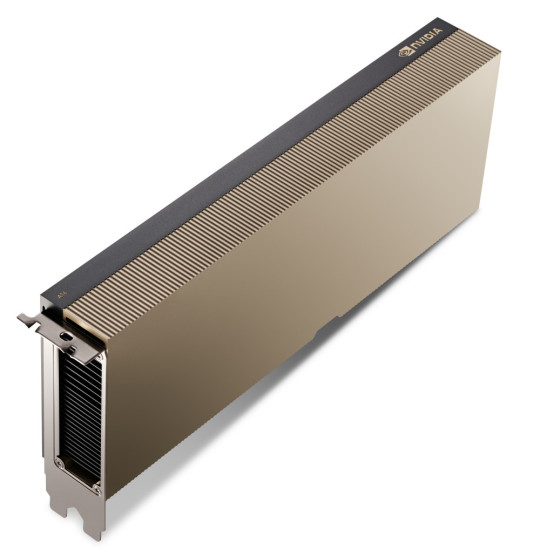

Elevate Your VDI Performance with NVIDIA A16 Enterprise 64GB 250W — Now at Viperatech!

The NVIDIA A16 Enterprise 64GB 250W graphics card is revolutionizing the Virtual Desktop Infrastructure (VDI) landscape. With its cutting-edge features and robust performance, it’s a game-changer for businesses seeking to enhance their virtual desktop environments. Let’s dive into what makes the NVIDIA A16 a must-have for any enterprise.

Next-Gen GPU for High-Density VDI: The NVIDIA A16 is not just any GPU; it’s a powerhouse designed for high-user density VDI environments. Boasting a full height, full length (FHFL) design, this dual-slot card comes with four GPUs on a single board, offering unparalleled performance and efficiency.

Unmatched Memory and Power: With a staggering 64 GB of GDDR6 memory and a maximum power limit of 250W, the A16 stands out in its class. It supports x16 PCIe Gen4 connectivity, ensuring fast and reliable performance. This passively cooled card, featuring a superior thermal design, is NEBS-3 capable, ensuring it can handle challenging ambient environments with ease.

Built on NVIDIA Ampere Architecture: The heart of the A16’s performance lies in the NVIDIA Ampere architecture. It delivers the highest encoder throughput and frame buffer for an exceptional user experience in VDI environments. This is complemented by NVIDIA Virtual PC (vPC) software, enhancing video transcoding and cloud gaming experiences.

Quad GPU Design: This design enables the highest frame buffer, encoder, and decoder density in a dual-slot form factor, perfect for VDI use cases. It’s a testament to NVIDIA’s commitment to providing scalable and powerful solutions for enterprise environments.

Advanced Specifications:

GPU Architecture: Built on the NVIDIA Ampere architecture.

Memory: 4x 16 GB GDDR6, ensuring swift data processing.

Memory Bandwidth: 4x 200 GB/s for rapid data transfer.

CUDA Cores: 4x 1280, delivering incredible computational power.

Tensor Cores and RT Cores: For advanced AI and ray tracing capabilities.

Performance: High TFLOPS and TOPS rates, supporting intensive workloads.

System Interface: PCIe Gen4 (x16) for high-speed connectivity.

Thermal Solution: Passively cooled, with a bidirectional heat sink.

Form Factor: Full height, full length (FHFL), Dual Slot, fitting into standard enterprise setups.

Software Support: Extensive, including NVIDIA Virtual PC (vPC) and more.
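The "4x" figures in the list above are per-GPU values on a four-GPU board. As a rough sketch, the board totals and a simple user-density estimate can be worked out as follows. The per-user frame buffer sizes (1 GB, 2 GB) are illustrative assumptions, not values from this post, and real limits depend on the vGPU software and licensing in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoardSpec:
    gpus: int
    memory_gb_per_gpu: int
    bandwidth_gb_s_per_gpu: int
    cuda_cores_per_gpu: int


# Per-GPU figures taken from the specification list above.
A16 = BoardSpec(gpus=4, memory_gb_per_gpu=16,
                bandwidth_gb_s_per_gpu=200, cuda_cores_per_gpu=1280)


def board_totals(spec: BoardSpec) -> dict:
    """Sum the per-GPU figures across the whole board."""
    return {
        "memory_gb": spec.gpus * spec.memory_gb_per_gpu,
        # Aggregate only: each GPU still sees just its own memory bandwidth.
        "aggregate_bandwidth_gb_s": spec.gpus * spec.bandwidth_gb_s_per_gpu,
        "cuda_cores": spec.gpus * spec.cuda_cores_per_gpu,
    }


def users_per_board(spec: BoardSpec, profile_gb: int) -> int:
    """Estimate users per board for a fixed per-user frame buffer (assumed size).

    Frame buffer is not pooled across GPUs, so whole profiles are counted
    per GPU first and then multiplied by the number of GPUs.
    """
    if profile_gb < 1:
        raise ValueError("profile_gb must be at least 1")
    return spec.gpus * (spec.memory_gb_per_gpu // profile_gb)


print(board_totals(A16))
print(users_per_board(A16, 1), users_per_board(A16, 2))
```

Counting per GPU matters for profile sizes that do not divide 16 GB evenly: a hypothetical 3 GB profile would fit five users per GPU, not 64 / 3 across the board.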

Versatile and Secure: The A16 PCIe card is designed with versatility in mind, featuring a bidirectional heat sink that accommodates various airflow directions. It also supports secure and measured boot with hardware root of trust for GPU, ensuring security in sensitive environments.

Get Yours at Viperatech: Ready to transform your enterprise’s VDI experience? The NVIDIA A16 Enterprise 64GB 250W is available now at Viperatech. Don’t miss out on this opportunity to elevate your business’s virtual desktop infrastructure to the next level. Enquire today and see the difference the NVIDIA A16 can make for your enterprise!

M.Hussnain

#nvidia#nvidia a16#gpu#viperatech#vipera#technology#NVIDIA Ampere#CUDA#DirectCompute#Multi-Instance GPU


Amazon DCV 2024.0 Supports Ubuntu 24.04 LTS With Security

NICE DCV has a new name. With the 2024.0 release, which also brings improvements and bug fixes, NICE DCV is now known as Amazon DCV.

The DCV protocol that powers Amazon Web Services (AWS) managed services such as Amazon AppStream 2.0 and Amazon WorkSpaces is now consistently referred to by the new name.

What’s new with version 2024.0?

Amazon DCV 2024.0 includes a number of improvements for usability, security, and performance. The release adds support for Ubuntu 24.04 LTS, whose long-term support window simplifies system maintenance and provides current security patches. The DCV client on Ubuntu 24.04 has built-in Wayland support, which improves application isolation and graphical rendering efficiency. DCV 2024.0 also enables the QUIC UDP protocol by default for better streaming performance. Finally, the update adds the option to blank the Linux host screen while a remote user is connected, preventing local access to and interaction with the remote session.

What is Amazon DCV?

Amazon DCV is a high-performance remote display protocol that lets customers securely deliver remote desktops and application streaming from any cloud or data center to any device, over varying network conditions. With Amazon DCV and Amazon EC2, customers can run graphics-intensive applications remotely on EC2 instances and stream the user interface to simpler client machines, removing the need for expensive dedicated workstations. Customers across a broad range of HPC workloads use Amazon DCV for remote visualization. The Amazon DCV streaming protocol is also used by services such as Amazon AppStream 2.0, AWS Nimble Studio, and AWS RoboMaker.

Advantages

Elevated Efficiency

With Amazon DCV you don't have to choose between responsiveness and visual quality. Its bandwidth-adaptive streaming protocol delivers near real-time responsiveness for your applications without compromising image accuracy.

Reduced Costs

A highly responsive streaming experience lets customers run graphics-intensive applications remotely, avoiding the cost of dedicated workstations and of moving large volumes of data from the cloud to client machines. On Linux servers, Amazon DCV also allows multiple sessions to share a single GPU, which further reduces server infrastructure costs.

Adaptable Implementations

With browser-based access and cross-OS interoperability, service providers get a reliable, flexible application-streaming protocol that works both on premises and in the cloud.

End-to-End Security

To protect customer data privacy, Amazon DCV streams pixels rather than geometry. It also uses TLS to secure both end-user inputs and pixels.

Features

Amazon DCV supports remote environments running both Windows and Linux, with native clients for Windows, Linux, and macOS and an HTML5 client for web browser access. The native clients support multiple displays, 4K resolution, USB devices, multi-channel audio, smart cards, stylus and touch input, and file redirection.

DCV Session Manager makes it easy to create and manage the lifecycle of Amazon DCV sessions programmatically across a fleet of servers. The Amazon DCV Web Client SDK lets developers build their own customized Amazon DCV web browser client applications.

How to Install DCV on Amazon EC2?

Implement:

Sign up for an AWS account and activate it.

Open the AWS Management Console and log in.

Either download and install the relevant Amazon DCV server on your EC2 instance, or choose the proper Amazon DCV AMI from the Amazon Web Services Marketplace, then create an AMI using your application stack.

After confirming that traffic on port 8443 is permitted by your security group’s inbound rules, deploy EC2 instances with the Amazon DCV server installed.

Link:

On your device, download and install the relevant Amazon DCV native client.

Use the web client or the native Amazon DCV client to connect to your remote machine at https://:8443.

Stream:

Use Amazon DCV to stream your graphics applications to a range of devices.
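Before connecting, it can help to confirm that port 8443 is actually reachable through the security group. The sketch below uses only the Python standard library; the host you pass in is a placeholder for your own instance address, and the helper names are made up for illustration. It checks TCP only, so it says nothing about whether QUIC over UDP is reachable.

```python
import socket

DCV_PORT = 8443  # port named in the steps above


def dcv_url(host: str, port: int = DCV_PORT) -> str:
    """Build the connection URL for a given server address."""
    return f"https://{host}:{port}"


def is_port_open(host: str, port: int = DCV_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `is_port_open("my-instance.example.com")` (a hypothetical hostname) returning False usually points to a missing inbound rule or a DCV server that is not running.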

Use cases

Visualization of 3D Graphics

HPC workloads are becoming more complicated and consuming enormous volumes of data in a variety of industrial verticals, including Oil & Gas, Life Sciences, and Design & Engineering. The streaming protocol offered by Amazon DCV makes it unnecessary to send output files to client devices and offers a seamless, bandwidth-efficient remote streaming experience for HPC 3D graphics.

Application Access via a Browser

The Amazon DCV Web Client is compatible with all HTML5 browsers and offers a streaming experience that is portable across mobile devices. By removing the need to manage native clients without sacrificing streaming performance, the Web Client significantly reduces the operational burden on IT departments. With the Amazon DCV Web Client SDK, you can build your own DCV web client.

Personalized Remote Apps

Custom remote applications and managed services can benefit from how easily the Amazon DCV streaming protocol can be integrated. Amazon DCV uses end-to-end AES-256 encryption to protect both pixels and end-user inputs, and its native clients support up to four monitors at 4K resolution each.

Amazon DCV Pricing

AWS Cloud:

There is no additional charge for using Amazon DCV on AWS. Customers pay only for the EC2 resources they actually use.

On-premises and third-party clouds

For licensing and pricing information, contact an Amazon DCV distributor or reseller in your area.

Read more on Govindhtech.com

#AmazonDCV#Ubuntu24.04LTS#Ubuntu#DCV#AmazonWebServices#AmazonAppStream#EC2instances#AmazonEC2#News#TechNews#TechnologyNews#Technologytrends#technology#govindhtech


Boost Your Performance: Why You Should Use a VPS for BlueStacks

In 2025, Android emulation continues to rise in popularity—whether it’s for mobile gaming, app development, or social media automation. One of the most well-known Android emulators is BlueStacks, which allows users to run Android apps on Windows and macOS. However, running BlueStacks on a standard PC or laptop can strain your system’s resources and limit performance. That’s where a VPS for BlueStacks comes into play.

Using a Virtual Private Server (VPS) to run BlueStacks can significantly enhance your experience, offering improved speed, reliability, and scalability. Whether you’re a mobile gamer, digital marketer, or developer, this guide will explain exactly why you should use a VPS for BlueStacks and how it can boost your performance in 2025.

What Is BlueStacks?

BlueStacks is a powerful Android emulator that replicates the Android operating system on desktop environments. It enables users to download and run apps from the Google Play Store, making it ideal for:

Playing mobile games like PUBG Mobile, Clash of Clans, or Call of Duty Mobile on a larger screen.

Automating social media tasks using tools like Instagram bots.

Testing Android apps during development.

Running messaging apps like WhatsApp or Telegram in a more manageable desktop setting.

While it’s incredibly functional, BlueStacks is also resource-intensive, requiring significant CPU, RAM, and GPU capacity. This is where many users run into trouble, especially when multitasking or running multiple instances.

What Is a VPS?

A Virtual Private Server (VPS) is a virtual machine hosted on a powerful physical server, offering dedicated resources like CPU, RAM, storage, and bandwidth. Unlike shared hosting, a VPS gives you administrative (root) access, customizable configurations, and isolated environments.

In simple terms, a VPS is like having your own computer in the cloud—with more power, more uptime, and greater control.

Why Use a VPS for BlueStacks?

1. Boosted Performance

Running BlueStacks on a VPS means you’re no longer dependent on your personal device’s hardware limitations. High-performance VPS providers offer specs like:

Multi-core CPUs (Intel Xeon or AMD EPYC)

SSD or NVMe storage

Dedicated RAM

High-speed internet (1Gbps+)

This allows BlueStacks to run smoother, load faster, and handle more apps or game instances at once without lag.

2. 24/7 Uptime and Remote Access

Need BlueStacks running automation scripts or games around the clock? A VPS can stay online 24/7 without relying on your home internet or power supply. With remote desktop access (via RDP or VNC), you can log in from any device—PC, laptop, or even mobile—and manage BlueStacks anytime, anywhere.

This is especially useful for:

Farming resources in games

Running bots or scheduled tasks

Managing multiple accounts

3. Run Multiple Instances Efficiently

BlueStacks includes a Multi-Instance Manager, which lets you run several Android environments at once. On a VPS, you can take full advantage of this feature thanks to increased system resources.

Gamers use this to run multiple characters or accounts in parallel. Marketers can test different automation tools or accounts simultaneously without being throttled by local hardware.
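How many instances a given VPS can hold is mostly a division problem. The following is a minimal sketch, assuming each instance gets a fixed CPU and RAM allocation and that some headroom is reserved for the host operating system. All of the numbers are hypothetical examples, not BlueStacks requirements.

```python
def max_instances(total_cores: int, total_ram_gb: int,
                  cores_per_instance: int, ram_gb_per_instance: int,
                  reserved_cores: int = 1, reserved_ram_gb: int = 2) -> int:
    """Estimate how many emulator instances fit on a server.

    The result is limited by whichever resource runs out first,
    after setting aside headroom for the host OS (assumed values).
    """
    if cores_per_instance < 1 or ram_gb_per_instance < 1:
        raise ValueError("per-instance allocations must be at least 1")
    usable_cores = max(total_cores - reserved_cores, 0)
    usable_ram_gb = max(total_ram_gb - reserved_ram_gb, 0)
    return min(usable_cores // cores_per_instance,
               usable_ram_gb // ram_gb_per_instance)


# Hypothetical plan: 8 cores and 16 GB RAM, 2 cores and 4 GB per instance.
print(max_instances(8, 16, 2, 4))
```

Under these assumptions, CPU and RAM each allow three instances, so a plan with more RAM alone would not raise the count.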

4. Reduce Wear and Tear on Your Personal Device

Running heavy applications like BlueStacks can overheat laptops, drain battery life, and degrade performance over time. Using a VPS shifts the load to the cloud, keeping your personal system free for other tasks—or even offline while BlueStacks continues running remotely.

5. Scalability for Professional Use

As your needs grow—whether you’re automating more tasks, launching more apps, or running more accounts—you can easily scale your VPS plan. Most providers offer scalable packages that allow you to:

Upgrade CPU or RAM without downtime

Add more disk space

Expand bandwidth

This makes a VPS for BlueStacks ideal for professional users who need flexibility and power on demand.

6. Improved Security and Isolation

Many privacy-conscious users prefer VPS hosting for the isolation and control it offers. You can:

Install custom firewalls or antivirus

Use a VPN to anonymize activity

Keep app data separate from your personal machine

This is a game-changer if you’re running sensitive scripts or accounts and want to reduce risk.

How to Set Up BlueStacks on a VPS

Setting up BlueStacks on a VPS is easier than you might think:

Choose a Windows VPS provider – Look for specs like 8+ GB RAM, GPU acceleration (if available), and SSD storage.

Access the VPS – Use Remote Desktop Protocol (RDP) to log into your virtual server.

Download BlueStacks – Visit the official BlueStacks website and install the version suited for your needs.

Customize settings – Allocate CPU and RAM resources to BlueStacks for optimal performance.

Install and run apps – Begin using your Android apps with full performance, privacy, and uptime benefits.

Some VPS providers even offer pre-installed BlueStacks images to make setup even faster.

Ideal Users for BlueStacks VPS Hosting

Mobile gamers who want lag-free gameplay and multi-instance farming.

Social media marketers running bots, schedulers, or multiple accounts.

Android app developers testing software in a clean, isolated environment.

Automation enthusiasts who need round-the-clock uptime.

Privacy-focused users looking for secure, cloud-based operations.

Final Thoughts

Using a VPS for BlueStacks in 2025 is more than just a performance boost—it’s a strategic upgrade that enables better speed, uptime, scalability, and privacy. Whether you’re gaming, marketing, or developing, a VPS ensures that BlueStacks runs smoother, faster, and more securely than ever before.

If you’re tired of lag, crashes, or resource constraints on your local device, it’s time to move your Android emulation to the cloud. With the right VPS, BlueStacks becomes a powerful, always-on tool tailored for high-performance tasks.


Which Workstation Is Better for Video Editing: Custom-Built or Standard?

A custom-built workstation tailored for video editing provides superior efficiency and flexibility when compared to standard computers. These workstations are crafted to fulfill the precise needs of video editing jobs, guaranteeing smooth workflows and top-notch outcomes.

Advantages of Custom-Built Workstations for Video Editing

1. Excellent Performance for Video Editing Software

Video editing applications such as Adobe Premiere Pro, Final Cut Pro, and DaVinci Resolve need robust hardware to run well. They involve intensive video rendering, effects processing, and multi-layer editing, all of which can slow down a standard computer.

Custom-built video editing workstations, in contrast, are tuned for these demands. With high-performance CPUs, GPUs, and memory, these systems can handle resource-hungry software smoothly. For instance, a capable multi-core processor, such as an AMD Ryzen or Intel Core i9, paired with an advanced GPU such as one from the NVIDIA RTX series, enables smooth playback, fast render times, and effective multitasking.

2. Reduces Rendering and Exporting Times

One of the most time-consuming aspects of video editing is rendering. During this phase, your computer produces the final version of the video from raw footage, which can take hours depending on the project's size and complexity. A custom-built workstation can greatly accelerate this step.

By choosing components like high-end GPUs and multi-threaded processors, a custom-built system can manage data more quickly, diminishing the time required for rendering and exporting. This can lead to faster turnaround times, enabling video editors to meet stringent deadlines and enhance overall productivity.

3. Scalability and Freedom to Customize

Every video editor has unique needs, and what suits one individual may not be ideal for another. Custom-built workstations offer growth potential and adaptability, meaning they can be tailored to fulfill the specific needs of the user.

With a custom-built workstation, users can choose the optimal mix of RAM, storage, and processing power according to their workflow.

Additionally, custom workstations are easily upgradeable. As software demands change or new technologies emerge, you can boost your RAM, upgrade your GPU, or swap storage drives without the necessity of investing in an entirely new system.

4. High-End Graphics Performance

Video editing frequently necessitates intricate and detailed graphics processing, especially when dealing with 3D rendering, color grading, and visual effects. A custom-built workstation permits you to select the most powerful GPU for these operations.

NVIDIA and AMD provide graphics cards specifically intended for video editing. These cards are optimized for functions like rendering, real-time playback, and expedited effects processing. A robust GPU guarantees that high-quality visuals can be displayed seamlessly, even while navigating large files and complex video sequences.

5. Multiple Storage Solutions

Video files, particularly in high-definition formats, take up significant space. Working with 4K or even 8K footage necessitates ample storage capacity to manage the large files efficiently. Custom-built workstations enable optimal storage configurations that can fulfill these requirements.
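To get a feel for how much space footage needs, file size can be estimated from bitrate and duration. This is a back-of-the-envelope sketch that assumes a constant bitrate. The 100 Mbit/s figure is only an illustrative value, since real 4K and 8K bitrates vary widely by codec and camera.

```python
def footage_size_gb(bitrate_mbps: float, minutes: float) -> float:
    """Approximate size in decimal gigabytes of a constant-bitrate recording."""
    total_bits = bitrate_mbps * 1_000_000 * minutes * 60
    total_bytes = total_bits / 8
    return total_bytes / 1_000_000_000


# Hypothetical example: one hour of footage recorded at 100 Mbit/s.
print(footage_size_gb(100, 60))
```

Multiplying the result by the number of hours of raw footage per project gives a first estimate of the working storage a system should be configured with.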

With the ability to select from various storage types, such as SSDs (Solid State Drives) for rapid data access and substantial HDDs (Hard Disk Drives) for overall storage, editors can tailor a system that meets their demands.

Moreover, custom-built workstations can be configured with RAID (Redundant Array of Independent Disks). Depending on the RAID level chosen, this provides redundancy, faster data access, or both, which makes RAID particularly useful in video editing, where large files must be stored reliably and moved quickly.
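The trade-off between capacity and redundancy differs by RAID level. As a rough illustration, the usable capacity of the common levels can be computed as follows, assuming identical disks and ignoring filesystem and controller overhead.

```python
def raid_usable_tb(level: int, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB for common RAID levels with identical disks."""
    if level == 0:        # striping only, no redundancy
        minimum, usable_disks = 2, disks
    elif level == 1:      # every disk holds a full mirror copy
        minimum, usable_disks = 2, 1
    elif level == 5:      # one disk's worth of parity
        minimum, usable_disks = 3, disks - 1
    elif level == 6:      # two disks' worth of parity
        minimum, usable_disks = 4, disks - 2
    elif level == 10:     # striped mirrors, half the disks hold copies
        if disks % 2:
            raise ValueError("RAID 10 needs an even number of disks")
        minimum, usable_disks = 4, disks // 2
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} disks")
    return usable_disks * disk_tb


# Four 4 TB drives under different levels.
for level in (0, 5, 6, 10):
    print(level, raid_usable_tb(level, 4, 4))
```

With four 4 TB drives, RAID 0 keeps all 16 TB but has no redundancy, RAID 5 keeps 12 TB, and RAID 6 and RAID 10 both keep 8 TB.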

6. Better Multitasking

Video editors often operate several applications at once, such as video editing software, media players, color grading tools, and various utility programs. Running all these applications simultaneously demands considerable resources from the computer.

Custom-built workstations provide sufficient memory (RAM) and multi-core processors, which are vital for executing various programs without a slowdown. With adequate RAM and processing capability, editors can transition between applications, preview footage, and apply effects without facing delays or crashes.

7. Customized Cooling Solutions

Video editing can produce substantial heat, especially when engaged in intensive rendering or 3D tasks. Overheating can result in throttling, where the system reduces speed to avoid damage, impacting performance.

Custom-built workstations present the option to implement advanced cooling solutions to maintain components at optimal temperatures. High-performance CPUs and GPUs necessitate efficient cooling systems, such as liquid cooling or large air coolers, to guarantee stable operation throughout extended editing periods. A well-ventilated system aids in preventing performance drops and extends the longevity of your hardware.

8. Long-Term Reliability

For professional video editors, downtime is a significant cost. A system that crashes or lags during editing can lead to lost time and productivity. Custom-built workstations are constructed with high-quality components known for their durability and reliability.

By selecting top-tier components from reputable brands, custom-built workstations provide long-lasting stability. Furthermore, since they are customized to your specific needs, there is a reduced risk of encountering compatibility issues that may cause crashes or additional problems.

9. Budget-Friendly Customization

While custom-built workstations might involve a higher initial expense compared to off-the-shelf computers, they deliver greater value over time. Off-the-shelf computers frequently feature low-end components that may not cater to the needs of challenging video editing tasks. In contrast, a custom-built system is optimized for your specific requirements, ensuring that you do not pay for superfluous features.

Additionally, custom workstations are more readily upgradeable, which means you can prolong their lifespan by upgrading single components rather than purchasing an entirely new system every few years. This approach makes them a more economical choice for video editors over the long run.

10. Custom-Built to Your Workflow

Every video editor has their own workflow. Some prioritize storage capacity, while others need faster rendering. Custom-built workstations are designed around the specific requirements of your workflow, so you get the right balance of performance, storage, and graphics capability.

Whether you are working with intricate visual effects, multi-camera setups, or extensive color grading, a custom-built workstation can be configured to manage your particular tasks efficiently. This degree of customization guarantees that you have a machine that is perfectly aligned with your creative process.


Running your own infrastructure can be empowering. Whether you're managing a SaaS side project, self-hosting your favorite tools like Nextcloud or Uptime Kuma, running a game server, or just learning by doing, owning your stack gives you full control and flexibility. But it also comes with a cost. The good news? That cost doesn’t have to be high.

One of the core values of the LowEndBox community is getting the most out of every dollar. Many of our readers are developers, sysadmins, hobbyists, or small businesses trying to stretch limited infrastructure budgets. That’s why self-hosting is so popular here: it’s customizable, private, and, with the right strategy, surprisingly affordable.

In this article, we’ll walk through seven practical ways to reduce your self-hosting costs. Whether you’re just starting out or already managing multiple VPSes, these tactics will help you trim your expenses without sacrificing performance or reliability. These aren't just random tips; they’re based on real-world strategies we see in action across the LowEndBox and LowEndTalk communities every day.

1. Use Spot or Preemptible Instances for Non-Critical Workloads

Some providers offer deep discounts on “spot” instances: VPSes or cloud servers that can be reclaimed at any time. These are perfect for bursty workloads, short-term batch jobs, or backup processing where uptime isn’t mission-critical. Providers like Oracle Cloud and even some on the LowEndBox VPS deals page offer cost-effective servers that can be used this way.

2. Consolidate with Docker or Lightweight VMs

Instead of spinning up multiple VPS instances, try consolidating services using containers or lightweight VMs (like those on Proxmox, LXC, or KVM). You’ll pay for fewer VPSes and get better performance by optimizing your resources. Tools like Docker Compose or Portainer make it easy to manage your stack efficiently.

3. Deploy to Cheaper Regions

Server pricing often varies based on data center location. Consider moving your workloads to lower-cost regions like Eastern Europe, Southeast Asia, or Midwest US cities. Just make sure latency still meets your needs. LowEndBox regularly features hosts offering ultra-affordable plans in these locations.

4. Pay Annually When It Makes Sense

Some providers offer steep discounts for annual or multi-year plans, sometimes as much as 30–50% compared to monthly billing. If your project is long-term, this can be a great way to save. Before you commit, check if the provider is reputable. User reviews on LowEndTalk can help you make a smart call.

5. Take Advantage of Free Tiers

You’d be surprised how far you can go on free infrastructure these days. Services like:

Cloudflare Tunnels (free remote access to local servers)

Oracle Cloud Free Tier (includes 4 vCPUs and 24GB RAM!)

GitHub Actions for automation

Hetzner’s free DNS or Backblaze’s generous free storage

Combined with a $3–$5 VPS, these tools can power an entire workflow on a shoestring budget.

6. Monitor Idle Resources

It’s easy to let unused servers pile up. Get into the habit of monitoring resource usage and cleaning house monthly. If a VPS is sitting idle, shut it down or consolidate it. Tools like Netdata, Grafana + Prometheus, or even htop and ncdu can help you track usage and trim the fat.

7. Watch LowEndBox for Deals (Seriously)

This isn’t just self-promo; it’s reality. LowEndBox has been the global market leader in broadcasting great deals for our readers for years. Our team at LowEndBox digs up exclusive discounts, coupon codes, and budget-friendly hosting options from around the world every week. Whether it’s a $15/year NAT VPS or a powerful GPU server for AI workloads under $70/month, we help you find the right provider at the right price. Bonus: we also post guides and how-tos to help you squeeze the most out of your stack.

Final Thoughts

Cutting costs doesn’t mean sacrificing quality. With the right mix of smart planning, efficient tooling, and a bit of deal hunting, you can run powerful, scalable infrastructure on a micro-budget. Got your own cost-saving tip? Share it with the community over at LowEndTalk!

https://lowendbox.com/blog/1-vps-1-usd-vps-per-month/

https://lowendbox.com/blog/2-usd-vps-cheap-vps-under-2-month/

https://lowendbox.com/best-cheap-vps-hosting-updated-2020/
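The annual-billing advice in tip 4 comes down to simple arithmetic. The sketch below assumes a flat percentage discount for prepaying a full term and no refund if you stop early; both are assumptions for illustration, not any provider's policy, and the function names and prices are made up.

```python
def breakeven_months(discount: float, term_months: int = 12) -> float:
    """Months of use after which a prepaid term beats paying monthly.

    Assumes the prepaid price is term_months * monthly_price * (1 - discount)
    and that nothing is refunded if you cancel early.
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return term_months * (1.0 - discount)


def cheaper_plan(monthly_price: float, discount: float, months_used: int,
                 term_months: int = 12) -> str:
    """Return 'annual' or 'monthly', whichever costs less for months_used."""
    prepaid = monthly_price * term_months * (1.0 - discount)
    pay_as_you_go = monthly_price * months_used
    return "annual" if prepaid < pay_as_you_go else "monthly"


# Illustrative numbers only: a $5/month VPS with a 30% annual discount.
for months in (6, 9):
    print(months, "months of use ->", cheaper_plan(5.0, 0.30, months))
```

With a 30% discount the prepaid year only pays off if the server is actually needed for more than about eight and a half months, which is why the tip is limited to long-term projects.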

0 notes

Text

How to Optimize Performance When Using a Rented GPU Server

Rented GPU servers are becoming a popular solution for businesses and individuals who need to process large datasets, run AI and machine learning workloads, or do intensive graphics work. To get the most out of a GPU server, however, you need a sound optimization strategy. This article explains how to optimize performance when using a rented GPU server.

Choose the Right GPU Configuration

Not every project needs the highest-end GPU configuration. Choosing a suitable configuration saves money and optimizes performance.

AI & Machine Learning: Choose a GPU with many CUDA cores and large VRAM, such as the NVIDIA A100, RTX 3090, or Tesla V100.

Graphics rendering & CGI: You need a GPU with high VRAM and wide memory bandwidth, such as the RTX 4090 or Quadro RTX.

Big data mining: Choose a GPU with high parallel processing performance, such as the NVIDIA H100 or AMD Instinct MI250.

Optimize Software and Drivers

Installing the right software and drivers significantly improves GPU server performance.

Update to the latest driver: Use a stable driver release from NVIDIA or AMD.

Configure CUDA/cuDNN properly: For AI/ML workloads, check that your CUDA/cuDNN versions are compatible with your framework (TensorFlow, PyTorch).

Optimize the virtualized environment: If you run in Docker, make sure containers can access the GPU directly through the NVIDIA Container Toolkit.

Use GPU Resources Efficiently

Optimizing how you use GPU resources reduces load and speeds up processing.

Take advantage of Multi-Instance GPU (MIG): If you run many small tasks, partition the GPU into multiple independent instances.

Batch processing: Process data in large batches rather than in small individual pieces to make full use of memory bandwidth.

Parallel computing: Write code optimized for a parallel processing model to use all of the GPU's resources.
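The batch-processing advice above can be illustrated with a minimal sketch. It only shows the grouping step in plain Python; in a real pipeline each batch would be handed to the GPU framework in a single call. The helper name `batches` and the batch size are assumptions for illustration.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of items, each at most batch_size long."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


# Ten inputs become three GPU calls instead of ten.
for batch in batches(list(range(10)), 4):
    print(batch)
```

Larger batches amortize per-call overhead but need more VRAM, so the right size depends on the model and the card.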

Monitor and Manage Performance

To keep performance stable, monitor and tune the system regularly.

Use NVIDIA SMI (nvidia-smi) or the AMD ROCm tools (such as rocm-smi): Track temperature, memory usage, and GPU utilization.

Set a sensible power limit: Cap GPU power draw to avoid thermal overload.

Integrate monitoring tools such as Prometheus + Grafana: Build dashboards that track GPU performance in real time.
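As a rough sketch of the monitoring step, the snippet below parses the CSV output of `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` into records that could feed a dashboard. The `GpuSample` class and the function names are illustrative assumptions, and a production setup would more likely rely on a ready-made exporter than on hand-rolled parsing.

```python
import csv
import io
import subprocess
from dataclasses import dataclass
from typing import List

QUERY_FIELDS = "index,temperature.gpu,utilization.gpu,memory.used,memory.total,power.draw"


@dataclass
class GpuSample:
    index: int
    temperature_c: float
    utilization_pct: float
    memory_used_mib: float
    memory_total_mib: float
    power_draw_w: float


def _number(cell: str) -> float:
    """Convert a CSV cell to float; fields the GPU does not report become NaN."""
    try:
        return float(cell)
    except ValueError:
        return float("nan")


def parse_samples(csv_text: str) -> List[GpuSample]:
    """Parse one CSV row per GPU, in the order given by QUERY_FIELDS."""
    samples = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue
        cells = [cell.strip() for cell in row]
        samples.append(GpuSample(int(cells[0]), *(_number(c) for c in cells[1:6])))
    return samples


def read_gpus() -> List[GpuSample]:
    """Run nvidia-smi once and return a sample per GPU (needs an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_samples(result.stdout)
```

Calling `read_gpus()` on a schedule and flagging samples whose temperature or memory use crosses a threshold of your choosing covers the basics before a full Prometheus + Grafana setup is in place.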

Conclusion

Optimizing a rented GPU server not only speeds up processing but also lowers operating costs. By choosing a suitable configuration, tuning your software, using resources efficiently, and monitoring closely, you can get the most out of your GPU. If you need more help optimizing your system, don't hesitate to contact GPU specialists!

See also: https://vndata.vn/may-chu-do-hoa-gpu/

0 notes

Text

Level Up Your Gameplay: Best Gaming Laptops Under ₹60,000 You Can Buy Today!

The gaming industry in India is booming, with millions of enthusiasts joining the gaming community every year. However, many gamers still believe that a high-performance gaming laptop requires a large budget. Fortunately, the market now offers several affordable options that deliver excellent performance without breaking the bank. In this blog, we will explore the Best Gaming Laptop Under 60000, featuring powerful machines that can handle modern games with ease. Brands like Acer have introduced exceptional models that strike the perfect balance between performance, durability, and price. From powerful processors to high-quality graphics, these laptops provide everything you need to level up your gameplay. Read on to discover the top picks and what makes them stand out.

Key Features to Look for in a Gaming Laptop Under ₹60,000

When looking for the Best Gaming Laptop Under 60000, it's essential to know which features matter most for gaming performance. Acer laptops, for instance, often come equipped with advanced specifications within this budget range. Here are some key aspects to consider:

Processor Power: The processor acts as the brain of your laptop. In the gaming world, a minimum of an Intel Core i5 or AMD Ryzen 5 is recommended. The Acer Aspire 7, for instance, features a Ryzen 5 processor that ensures smooth performance even during intense gaming sessions.

Graphics Card (GPU): A dedicated GPU like the NVIDIA GTX 1650 is essential for high-quality visuals and smooth gameplay. Integrated graphics may suffice for basic tasks, but modern games demand more.

RAM & Storage: At least 8GB of RAM is necessary for most games, with expandable options being a plus. Additionally, SSD storage significantly boosts loading times, enhancing the overall experience.

Cooling System: Overheating can throttle performance. Acer laptops often incorporate advanced cooling mechanisms to maintain optimal performance during prolonged gaming.

Display Quality: A high refresh rate, such as 120Hz, offers a smoother visual experience, crucial for competitive gaming.

Top 5 Gaming Laptops Under ₹60,000 in 2024

The market offers several gaming laptops under ₹60,000, but a few stand out for their performance and reliability. Here are the top 5 models to consider:

1. Acer Aspire 7:

Processor: AMD Ryzen 5 5500U

GPU: NVIDIA GTX 1650

RAM: 8GB (Expandable)

Storage: 512GB SSD

Display: 15.6" FHD, 120Hz

The Acer Aspire 7 delivers exceptional performance, making it the Best Gaming Laptop Under 60000 for casual and competitive gamers alike.

2. HP Pavilion Gaming: Intel Core i5, GTX 1650, and 512GB SSD.

3. Lenovo IdeaPad Gaming 3: Ryzen 5, GTX 1650, and 120Hz display.

4. ASUS TUF Gaming F15: Core i5, GTX 1650, military-grade build.

5. MSI GF63 Thin: Core i5, GTX 1650, lightweight design.

While all these models offer excellent performance, Acer Aspire 7 stands out due to its efficient cooling, powerful specs, and competitive pricing.

How to Choose the Best Gaming Laptop for Your Needs

Choosing the Best Gaming Laptop Under 60000 depends largely on your gaming habits and performance expectations. Here’s a quick guide to help you decide:

For Competitive Gamers: Opt for laptops with high refresh rates and powerful GPUs. The Acer Aspire 7, with its 120Hz display and GTX 1650, ensures smooth gameplay without lag.

For Casual Gamers: If you enjoy single-player games or lighter titles, prioritize a balanced configuration with good cooling. Acer’s laptops offer efficient thermal management, making them ideal for extended gaming sessions.

For Streamers: Streaming demands more processing power. A multi-core CPU like the Ryzen 5 in the Acer Aspire 7 helps handle both gaming and streaming simultaneously.

For Students & Professionals: If you need a laptop for both work and play, choose a model with reliable performance and durable build quality. Acer Aspire 7 fits the bill, offering robust performance in both categories.

Tips to Optimize Your Gaming Laptop’s Performance

Once you’ve selected your Best Gaming Laptop Under 60000, optimizing its performance can significantly enhance your gaming experience. Follow these tips:

Regular System Updates: Ensure your GPU drivers and Windows updates are always current. Brands like Acer often release firmware updates that improve performance.

Cooling Solutions: Invest in an external cooling pad to reduce thermal throttling. Clean the internal fans periodically to maintain airflow.

Storage Management: Install games on an SSD for faster load times. Use software like MSI Afterburner to monitor system performance.

Power Settings Adjustment: Switch to 'High Performance' mode in Windows settings to maximize CPU and GPU output.

Close Background Applications: Shut down unnecessary programs running in the background to free up system resources.

Conclusion

Finding the Best Gaming Laptop Under 60000 doesn’t mean compromising on performance or quality. Brands like Acer have made high-end gaming accessible with laptops like the Acer Aspire 7, offering impressive specs, efficient cooling, and robust build quality. Whether you’re a competitive gamer, a casual player, or a content creator, the right laptop can elevate your gaming experience. So, gear up, choose wisely, and get ready to level up your gameplay today!

#laptop#acer#acer india#acer laptop#desktop#monitor#chromebook#online store#gaming laptop#best touch screen laptop#Best Gaming Laptop Under 60000#Best Laptop Under 60000#Acer India

0 notes

Text

Efficient GPU Management for AI Startups: Exploring the Best Strategies

The rise of AI-driven innovation has made GPUs essential for startups and small businesses. However, efficiently managing GPU resources remains a challenge, particularly with limited budgets, fluctuating workloads, and the need for cutting-edge hardware for R&D and deployment.

Understanding the GPU Challenge for Startups

AI workloads—especially large-scale training and inference—require high-performance GPUs like NVIDIA A100 and H100. While these GPUs deliver exceptional computing power, they also present unique challenges:

High Costs – Premium GPUs are expensive, whether rented via the cloud or purchased outright.

Availability Issues – In-demand GPUs may be limited on cloud platforms, delaying time-sensitive projects.

Dynamic Needs – Startups often experience fluctuating GPU demands, from intensive R&D phases to stable inference workloads.

To optimize costs, performance, and flexibility, startups must carefully evaluate their options. This article explores key GPU management strategies, including cloud services, physical ownership, rentals, and hybrid infrastructures—highlighting their pros, cons, and best use cases.

1. Cloud GPU Services

Cloud GPU services from AWS, Google Cloud, and Azure offer on-demand access to GPUs with flexible pricing models such as pay-as-you-go and reserved instances.

✅ Pros:

✔ Scalability – Easily scale resources up or down based on demand.

✔ No Upfront Costs – Avoid capital expenditures and pay only for usage.

✔ Access to Advanced GPUs – Frequent updates include the latest models like NVIDIA A100 and H100.

✔ Managed Infrastructure – No need for maintenance, cooling, or power management.

✔ Global Reach – Deploy workloads in multiple regions with ease.

❌ Cons:

✖ High Long-Term Costs – Usage-based billing can become expensive for continuous workloads.

✖ Availability Constraints – Popular GPUs may be out of stock during peak demand.

✖ Data Transfer Costs – Moving large datasets in and out of the cloud can be costly.

✖ Vendor Lock-in – Dependency on a single provider limits flexibility.

🔹 Best Use Cases:

Early-stage startups with fluctuating GPU needs.

Short-term R&D projects and proof-of-concept testing.

Workloads requiring rapid scaling or multi-region deployment.

2. Owning Physical GPU Servers

Owning physical GPU servers means purchasing GPUs and supporting hardware, either on-premises or colocated in a data center.

✅ Pros:

✔ Lower Long-Term Costs – Once purchased, ongoing costs are limited to power, maintenance, and hosting fees.

✔ Full Control – Customize hardware configurations and ensure access to specific GPUs.

✔ Resale Value – GPUs retain significant resale value, allowing you to recover investment costs when upgrading.

✔ Purchasing Flexibility – Buy GPUs at competitive prices, including through refurbished hardware vendors.

✔ Predictable Expenses – Fixed hardware costs eliminate unpredictable cloud billing.

✔ Guaranteed Availability – Avoid cloud shortages and ensure access to required GPUs.

❌ Cons:

✖ High Upfront Costs – Buying high-performance GPUs like NVIDIA A100 or H100 requires a significant investment.

✖ Complex Maintenance – Managing hardware failures and upgrades requires technical expertise.

✖ Limited Scalability – Expanding capacity requires additional hardware purchases.

🔹 Best Use Cases:

Startups with stable, predictable workloads that need dedicated resources.

Companies conducting large-scale AI training or handling sensitive data.

Organizations seeking long-term cost savings and reduced dependency on cloud providers.
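The trade-off between cloud rental and ownership described above can be framed as a break-even calculation. The sketch below is a simplification that ignores financing, staffing, and utilization gaps; the function name and every figure in the example are placeholders, not real prices.

```python
def breakeven_hours(purchase_cost: float, cloud_rate_per_hour: float,
                    owned_opex_per_hour: float, resale_value: float = 0.0) -> float:
    """GPU-hours after which owning costs less than renting in the cloud.

    Net hardware cost (purchase minus expected resale) is divided by the
    hourly saving (cloud rate minus power, hosting, and maintenance).
    """
    hourly_saving = cloud_rate_per_hour - owned_opex_per_hour
    if hourly_saving <= 0:
        return float("inf")  # owning never catches up
    return max(purchase_cost - resale_value, 0.0) / hourly_saving


# Placeholder figures for illustration only.
hours = breakeven_hours(purchase_cost=20_000, cloud_rate_per_hour=4.0,
                        owned_opex_per_hour=0.5, resale_value=6_000)
print(f"Ownership breaks even after {hours:.0f} GPU-hours")
```

A team that keeps its GPUs busy around the clock reaches the break-even point within months, while one that trains only occasionally may never reach it, which matches the use cases listed for each option.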

3. Renting Physical GPU Servers

Renting physical GPU servers provides access to high-performance hardware without the need for direct ownership. These servers are often hosted in data centers and offered by third-party providers.

✅ Pros:

✔ Lower Upfront Costs – Avoid large capital investments and opt for periodic rental fees.

✔ Bare-Metal Performance – Gain full access to physical GPUs without virtualization overhead.

✔ Flexibility – Upgrade or switch GPU models more easily compared to ownership.

✔ No Depreciation Risks – Avoid concerns over GPU obsolescence.

❌ Cons:

✖ Rental Premiums – Long-term rental fees can exceed the cost of purchasing hardware.

✖ Operational Complexity – Requires coordination with data center providers for management.

✖ Availability Constraints – Supply shortages may affect access to cutting-edge GPUs.

🔹 Best Use Cases:

Mid-stage startups needing temporary GPU access for specific projects.

Companies transitioning away from cloud dependency but not ready for full ownership.

Organizations with fluctuating GPU workloads looking for cost-effective solutions.

4. Hybrid Infrastructure

Hybrid infrastructure combines owned or rented GPUs with cloud GPU services, ensuring cost efficiency, scalability, and reliable performance.

What is a Hybrid GPU Infrastructure?

A hybrid model integrates:

1️⃣ Owned or Rented GPUs – Dedicated resources for R&D and long-term workloads.

2️⃣ Cloud GPU Services – Scalable, on-demand resources for overflow, production, and deployment.

How Hybrid Infrastructure Benefits Startups

✅ Ensures Control in R&D – Dedicated hardware guarantees access to required GPUs.

✅ Leverages Cloud for Production – Use cloud resources for global scaling and short-term spikes.

✅ Optimizes Costs – Aligns workloads with the most cost-effective resource.

✅ Reduces Risk – Minimizes reliance on a single provider, preventing vendor lock-in.

Expanded Hybrid Workflow for AI Startups

1️⃣ R&D Stage: Use physical GPUs for experimentation and colocate them in data centers.

2️⃣ Model Stabilization: Transition workloads to the cloud for flexible testing.

3️⃣ Deployment & Production: Reserve cloud instances for stable inference and global scaling.

4️⃣ Overflow Management: Use a hybrid approach to scale workloads efficiently.
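One way to read the workflow above is as a placement rule that maps each stage to a pool of GPUs. The sketch below is an interpretation rather than a prescribed scheduler; the `Stage` enum, the pool names, and the overflow rule for R&D are all assumptions made for illustration.

```python
from enum import Enum
from typing import Dict


class Stage(Enum):
    RESEARCH = "R&D"
    STABILIZATION = "model stabilization"
    PRODUCTION = "deployment and production"


def place_workload(stage: Stage, gpus_needed: int, owned_gpus_free: int) -> Dict[str, int]:
    """Split a GPU request across owned, on-demand cloud, and reserved cloud pools."""
    if gpus_needed < 0 or owned_gpus_free < 0:
        raise ValueError("GPU counts must be non-negative")
    plan = {"owned": 0, "cloud_on_demand": 0, "cloud_reserved": 0}
    if stage is Stage.RESEARCH:
        # R&D uses dedicated hardware first; the remainder overflows to the cloud.
        plan["owned"] = min(gpus_needed, owned_gpus_free)
        plan["cloud_on_demand"] = gpus_needed - plan["owned"]
    elif stage is Stage.STABILIZATION:
        # Flexible testing runs on on-demand cloud capacity.
        plan["cloud_on_demand"] = gpus_needed
    else:
        # Stable inference runs on reserved cloud instances.
        plan["cloud_reserved"] = gpus_needed
    return plan


print(place_workload(Stage.RESEARCH, gpus_needed=10, owned_gpus_free=8))
```

Keeping the rule in one function makes it easy to adjust as the balance between owned and cloud capacity shifts.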

Conclusion

Efficient GPU resource management is crucial for AI startups balancing innovation with cost efficiency.

Cloud GPUs offer flexibility but become expensive for long-term use.

Owning GPUs provides control and cost savings but requires infrastructure management.

Renting GPUs is a middle-ground solution, offering flexibility without ownership risks.

Hybrid infrastructure combines the best of both, enabling startups to scale cost-effectively.

Platforms like BuySellRam.com help startups optimize their hardware investments by providing cost-effective solutions for buying and selling GPUs, ensuring they stay competitive in the evolving AI landscape.

The original article is here: How to manage GPU resource?

#GPUManagement #GPUsForAI #AIGPU #TechInfrastructure #HighPerformanceComputing #CloudComputing #AIHardware #Tech

#GPU Management#GPUs for AI#AI GPU#High Performance Computing#cloud computing#ai hardware#technology

0 notes

Text

AI Infrastructure Companies - NVIDIA Corporation (US) and Advanced Micro Devices, Inc. (US) are the Key Players

The global AI infrastructure market is expected to be valued at USD 135.81 billion in 2024 and is projected to reach USD 394.46 billion by 2030, growing at a CAGR of 19.4% from 2024 to 2030. NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), SK HYNIX INC. (South Korea), SAMSUNG (South Korea), and Micron Technology, Inc. (US) are the major players in the AI infrastructure market. Market participants have diversified their offerings and expanded their global reach through strategic growth approaches such as launching new products, collaborating, establishing alliances, and forging partnerships.

For instance, in April 2024, SK HYNIX announced an investment in Indiana to build an advanced packaging facility for next-generation high-bandwidth memory. The company also collaborated with Purdue University (US) to build an R&D facility for AI products.

In March 2024, NVIDIA Corporation introduced the NVIDIA Blackwell platform, which enables organizations to build and run real-time generative AI and features six transformative technologies for accelerated computing. It enables AI training and real-time LLM inference for models of up to 10 trillion parameters.
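The market figures quoted earlier in this post (USD 135.81 billion in 2024, USD 394.46 billion in 2030) can be checked against the stated growth rate with the standard compound annual growth rate formula. The short sketch below does that; the function name is an illustrative choice.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market size in USD billions, 2024 to 2030 (six compounding years).
rate = cagr(135.81, 394.46, 2030 - 2024)
print(f"{rate:.1%}")
```

The result agrees with the 19.4% figure to one decimal place.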

Major AI Infrastructure companies include:

NVIDIA Corporation (US)

Advanced Micro Devices, Inc. (US)

SK HYNIX INC. (South Korea)

SAMSUNG (South Korea)

Micron Technology, Inc. (US)

Intel Corporation (US)

Google (US)

Amazon Web Services, Inc. (US)

Tesla (US)

Microsoft (US)

Meta (US)

Graphcore (UK)

Groq, Inc. (US)

Shanghai BiRen Technology Co., Ltd. (China)

Cerebras (US)

NVIDIA Corporation:

NVIDIA Corporation (US) is a multinational technology company that specializes in designing and manufacturing graphics processing units (GPUs) and systems-on-chips (SoCs), as well as artificial intelligence (AI) infrastructure products. The company has revolutionized the gaming, data center, AI, and professional visualization markets through its cutting-edge GPU technology. Its deep learning and AI platforms are recognized as key enablers of AI computing and ML applications. NVIDIA is positioned as a leader in AI infrastructure, providing a comprehensive stack of hardware, software, and services. It does business through two reportable segments: Compute & Networking and Graphics. The Graphics segment includes GeForce GPUs for gamers, game streaming services, NVIDIA RTX/Quadro for enterprise workstation graphics, virtual GPU for computing, automotive, and 3D internet applications. The Compute & Networking segment includes computing platforms for data centers, automotive AI and solutions, networking, NVIDIA AI Enterprise software, and DGX Cloud. The computing platform integrates an entire computer onto a single chip. It incorporates multi-core CPUs and GPUs to drive supercomputing for drones, autonomous robots, consoles, cars, and entertainment and mobile gaming devices.


Advanced Micro Devices, Inc.:

Advanced Micro Devices, Inc. (US) is a provider of semiconductor solutions that designs and integrates technology for graphics and computing. The company offers many products, including accelerated processing units, processors, graphics, and system-on-chips. It operates through four reportable segments: Data Center, Gaming, Client, and Embedded. The portfolio of the Data Center segment includes server CPUs, FPGAs, DPUs, GPUs, and Adaptive SoC products for data centers. The company offers AI infrastructure under the Data Center segment. The Client segment comprises chipsets, CPUs, and APUs for desktop and notebook personal computers. The Gaming segment focuses on discrete GPUs, semi-custom SoC products, and development services for entertainment platforms and computing devices. The Embedded segment covers embedded FPGAs, GPUs, CPUs, APUs, and Adaptive SoC products. Advanced Micro Devices, Inc. (US) supports a wide range of applications including automotive, defense, industrial, networking, data center and computing, consumer electronics, networking

0 notes

Text

How Cloudtopiaa Empowers Creators and Innovators Worldwide

Introduction: The Cloud Revolution in Creative Industries

Cloud computing has reshaped the way industries approach innovation, providing unparalleled access to resources and scaling capabilities. For creators, whether they are gaming studios, AI researchers, or tech startups, the cloud has become a vital enabler of growth, speed, and agility. Cloudtopiaa is at the forefront of this transformation, offering solutions that not only scale businesses but empower them to push the boundaries of what’s possible.

This blog explores how Cloudtopiaa is enabling creators worldwide to innovate faster, collaborate more effectively, and reduce the friction that often hinders progress. Through customer success stories and tangible use cases, we highlight the impact Cloudtopiaa has had on businesses in gaming, AI, and startup sectors.


The Cloud Computing Paradigm Shift

For years, cloud computing has been a buzzword in technology circles, but its true power is now becoming fully realized. The ability to provision infrastructure at scale, on demand, and without the need for upfront investments has democratized access to computing resources. The cloud is no longer just a utility for large corporations; it’s a game-changer for startups and independent creators.

Cloudtopiaa’s mission is to simplify cloud adoption by offering flexible, scalable, and accessible cloud services that integrate seamlessly with the workflows of creators. Whether it’s handling big data for AI models or enabling a game studio to run simulations across thousands of devices, Cloudtopiaa’s solutions enable the kind of innovation that drives industries forward.

Empowering Gaming Studios: Scaling with Efficiency

Gaming has emerged as one of the most competitive and resource-intensive industries globally. Game developers face immense pressure to deliver high-quality, immersive experiences while managing resource constraints and tight deadlines. This is where Cloudtopiaa steps in.

Case Study: Game Studio Expands Game Development with Cloudtopiaa

One such indie game studio, facing the challenge of rapidly scaling its infrastructure to keep pace with a growing fanbase, adopted Cloudtopiaa’s platform. Before transitioning to the cloud, the studio was overwhelmed with managing servers, handling game engine integration, and scaling resources for multiplayer features. With Cloudtopiaa, the studio quickly integrated their existing tools with the cloud, significantly improving their development cycle.

By leveraging Cloudtopiaa’s high-performance compute resources, the studio managed to scale from a small team of developers to a multi-location operation without worrying about the infrastructure. This not only sped up the game development process but also cut infrastructure costs by 40%, while enabling the studio to serve millions of players globally with lower latency.

The ability to rapidly spin up new virtual machines for testing, deploy new game features with minimal downtime, and scale back resources during non-peak times allowed the studio to focus on creating engaging experiences rather than managing infrastructure.

Fostering Innovation in AI Startups: Accelerating Development

The field of artificial intelligence (AI) has seen explosive growth in recent years. However, AI research requires access to massive amounts of computing power to train models and analyze data sets. Many AI startups struggle with provisioning enough resources to meet their computational needs while keeping costs manageable.

Case Study: AI Startup Streamlines Development with Cloudtopiaa

One AI startup, focused on machine learning for healthcare, turned to Cloudtopiaa to accelerate its model training process. They needed access to GPU-powered instances to handle large datasets and complex model training without the prohibitive costs associated with traditional on-premise hardware.

Cloudtopiaa’s specialized cloud infrastructure, with dedicated GPU and AI compute instances, reduced the training time for machine learning models by 50%. Moreover, the startup was able to scale resources dynamically based on project needs, ensuring that they were only paying for what they used. This allowed them to push forward with new product iterations and accelerate their time to market by 30%.

With Cloudtopiaa’s solutions, the AI startup reduced costs by 25% while improving the quality and speed of its AI models. The platform’s ease of use and flexible billing options provided a much-needed competitive edge in a crowded market.

Enabling Startups: Seamless Growth from Day One

For startups, the cloud represents more than just a way to run applications—it is the backbone of their entire infrastructure. For a startup to succeed, it needs to be able to pivot quickly, scale rapidly, and minimize operational overhead.

Case Study: Streamlining Operations for a Tech Startup

A small technology startup with limited resources turned to Cloudtopiaa for a comprehensive cloud solution. They required an easy-to-use, cost-effective cloud platform that could grow with their business. Cloudtopiaa provided them with the tools to automate infrastructure management, manage their services seamlessly, and focus on product development.

With the support of Cloudtopiaa’s flexible pricing model, the startup was able to scale its infrastructure as the business grew without worrying about upfront costs or complex management. By using Cloudtopiaa’s integrated tools, the team was able to focus on creating new features, building customer relationships, and generating revenue rather than getting bogged down by operational concerns.

The result was a quicker time to market for their product and the ability to onboard customers at a faster pace, all while keeping operational costs under control.

Conclusion: Empowering Innovation with Cloudtopiaa

Cloudtopiaa is more than just a cloud platform. It is a catalyst for innovation across industries. By enabling gaming studios to scale effortlessly, helping AI startups accelerate model training, and providing cost-effective cloud solutions for tech startups, Cloudtopiaa is empowering creators and innovators to push the boundaries of what’s possible.

The future of innovation lies in the cloud, and Cloudtopiaa is at the heart of that revolution. Whether you’re a startup looking to scale, a gaming studio seeking to deliver immersive experiences, or an AI researcher working on the next big breakthrough, Cloudtopiaa is here to help you turn your vision into reality.

#Cloudtopiaa #CloudComputing #InnovationInTech #AIStartups #TechStartups #CloudSolutions #AIResearch #CloudPlatform #GamingIndustry #AIModels #TechInnovation #CloudInfrastructure #CloudForCreators #StartupGrowth #CloudTech #DigitalTransformation #MachineLearning #ScalableSolutions #GameDevelopment #TechEmpowerment

0 notes

Text

H3 Virtual Machines: Compute Engine's Compute-Optimized Machine Family

Compute Engine’s compute-optimized machine family

Compute-optimized virtual machine (VM) instances are best suited to high performance computing (HPC) workloads and other compute-intensive tasks. Their architecture uses features such as non-uniform memory access (NUMA) for optimal, reliable, and consistent performance, and they offer the highest performance per core.

This family of machines includes the following machine series:

H3 VMs are powered by two 4th-generation Intel Xeon Scalable processors (code-named Sapphire Rapids) with an all-core frequency of 3.0 GHz. H3 VMs have 88 virtual cores (vCPUs) and 352 GB of DDR5 memory.

C2D VMs are powered by the 3rd-generation AMD EPYC Milan processor, which has a maximum boost frequency of 3.5 GHz. C2D VMs scale flexibly from 2 to 112 vCPUs, with 2 to 8 GB of memory per vCPU.

C2 VMs are powered by the 2nd-generation Intel Xeon Scalable processor (Cascade Lake), which has a sustained single-core maximum turbo frequency of 3.9 GHz. C2 offers VMs with 4 to 60 vCPUs and 4 GB of memory per vCPU.

H3 machine series

The H3 machine series is powered by 4th-generation Intel Xeon Scalable processors (code-named Sapphire Rapids), DDR5 memory, and Titanium offload processors.

H3 VMs offer the best price-performance in Compute Engine for compute-intensive HPC workloads. H3 VMs are single-threaded and suit a wide range of modeling and simulation workloads, such as computational fluid dynamics, crash safety, genomics, financial modeling, and general scientific and engineering computing. H3 VMs support compact placement, which is well suited to tightly coupled applications that scale across multiple nodes.

The H3 series comes in a single size that comprises a whole host server. You can reduce the number of visible cores to save on licensing costs, but the VM still costs the same. H3 VMs can use the entire host network bandwidth and have a default network bandwidth rate of up to 200 Gbps. However, VM-to-internet bandwidth is limited to 1 Gbps.

H3 VMs do not support simultaneous multithreading (SMT). There is also no overcommitment, which ensures consistent performance.

H3 VMs are available on demand or with one-year or three-year committed use discounts (CUDs). They can also be used with Google Kubernetes Engine.

H3 VMs Limitations

The following are the limitations of the H3 machine series:

The H3 machine series is offered in only one machine type. No custom machine shapes are available.

GPUs cannot be used with H3 virtual machines.

There is a 1 Gbps limit on outgoing data transfer.

The performance limits for Google Cloud Hyperdisk and Persistent Disk are 240 MBps throughput and 15,000 IOPS.

Machine images are not supported by H3 virtual machines.

The NVMe storage interface is the only one supported by H3 virtual machines.

Disks cannot be created from H3 VM images.

Read-only or multi-writer disk sharing is not supported by H3 virtual machines.

Different types of H3 machines

Machine type | vCPUs* | Memory (GB) | Max network egress bandwidth†
h3-standard-88 | 88 | 352 | Up to 200 Gbps

* With no simultaneous multithreading (SMT), a vCPU represents a whole core.

† The default egress bandwidth cannot exceed the specified value. The actual egress bandwidth depends on the destination IP address and other factors. Refer to the network bandwidth documentation.

Supported disk types for H3

The following block storage types are compatible with H3 virtual machines:

Balanced Persistent Disk (pd-balanced)

Hyperdisk Balanced (hyperdisk-balanced)

Hyperdisk Throughput (hyperdisk-throughput)

Capacity and disk limitations

You can attach a combination of Persistent Disk and Hyperdisk volumes to a VM, with the following restrictions:

Each virtual machine can have no more than 128 Hyperdisk and Persistent Disk volumes combined.

The combined maximum total disk capacity (in TiB) across all disk types cannot exceed:

For machine types with fewer than 32 vCPUs:

257 TiB for all Hyperdisk or all Persistent Disk

257 TiB for a mixture of Hyperdisk and Persistent Disk

For machine types with 32 or more vCPUs:

512 TiB for all Hyperdisk

512 TiB for a mixture of Hyperdisk and Persistent Disk

257 TiB for all Persistent Disk

H3 storage limits are described in the following table:

Maximum number of disks per VM

Machine type | All disk types | All Hyperdisk types | Hyperdisk Balanced | Hyperdisk Throughput | Hyperdisk Extreme | Local SSD
h3-standard-88 | 128 | 64 | 8 | 64 | 0 | Not supported
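The limits above lend themselves to a quick pre-flight check before provisioning. The sketch below encodes only the numbers quoted in this post for h3-standard-88 (which has more than 32 vCPUs); the `Disk` class and `check_h3_disks` function are hypothetical helpers, not part of any Google Cloud SDK, and the current limits should be confirmed in the official documentation.

```python
from dataclasses import dataclass
from typing import List

SUPPORTED_TYPES = {"pd-balanced", "hyperdisk-balanced", "hyperdisk-throughput"}
MAX_DISKS = 128                      # all disk types combined
MAX_HYPERDISKS = 64                  # all Hyperdisk types combined
MAX_PER_TYPE = {"hyperdisk-balanced": 8, "hyperdisk-throughput": 64}
MAX_TIB_WITH_HYPERDISK = 512         # all Hyperdisk, or a Hyperdisk/PD mix
MAX_TIB_PD_ONLY = 257                # all Persistent Disk


@dataclass
class Disk:
    disk_type: str
    size_tib: float


def check_h3_disks(disks: List[Disk]) -> List[str]:
    """Return a list of limit violations; an empty list means the plan fits."""
    problems = []
    for unsupported in sorted({d.disk_type for d in disks} - SUPPORTED_TYPES):
        problems.append(f"unsupported disk type: {unsupported}")
    if len(disks) > MAX_DISKS:
        problems.append(f"{len(disks)} disks exceeds the limit of {MAX_DISKS}")
    hyperdisks = [d for d in disks if d.disk_type.startswith("hyperdisk-")]
    if len(hyperdisks) > MAX_HYPERDISKS:
        problems.append(f"{len(hyperdisks)} Hyperdisk volumes exceeds {MAX_HYPERDISKS}")
    for disk_type, limit in MAX_PER_TYPE.items():
        count = sum(1 for d in disks if d.disk_type == disk_type)
        if count > limit:
            problems.append(f"{count} {disk_type} volumes exceeds {limit}")
    total_tib = sum(d.size_tib for d in disks)
    cap = MAX_TIB_WITH_HYPERDISK if hyperdisks else MAX_TIB_PD_ONLY
    if total_tib > cap:
        problems.append(f"{total_tib} TiB total exceeds the {cap} TiB cap")
    return problems


print(check_h3_disks([Disk("hyperdisk-balanced", 1.0)] * 9))
```

Returning a list of messages rather than raising on the first violation lets a planning script report every problem in one pass.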

Network compatibility for H3 virtual machines

gVNIC network interfaces are needed for H3 virtual machines. For typical networking, H3 allows up to 200 Gbps of network capacity.

Make sure the operating system image you use is fully compatible with H3 before migrating to H3 or creating H3 virtual machines. Fully supported images include the most recent version of the gVNIC driver, even if the guest OS reports the gve driver version as 1.0.0. If the VM runs an operating system with limited support, which includes an outdated version of the gVNIC driver, it may not be able to reach the maximum network bandwidth for H3 VMs.

If you use a custom OS image with the H3 machine series, you can install the most recent gVNIC driver manually. For H3 virtual machines, gVNIC driver version v1.3.0 or later is recommended. Google advises using the most recent version of the gVNIC driver to benefit from additional features and bug fixes.
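For custom images, the v1.3.0 recommendation can be expressed as a simple version comparison. This is a rough sketch, not an official tool: the function names are hypothetical, and it assumes the version string has already been obtained from the guest. As noted above, fully supported images may report 1.0.0 even with a current driver, so a failing check on such an image is not conclusive.

```python
import re

RECOMMENDED = (1, 3, 0)  # v1.3.0, the minimum advised for H3 in this post


def parse_version(text):
    """Parse 'v1.3.0' or '1.3.0' into a tuple of ints."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_recommendation(reported):
    """True if the reported driver version is v1.3.0 or later."""
    # Tuple comparison is numeric per component, so 1.10.0 > 1.3.0.
    return parse_version(reported) >= RECOMMENDED


for candidate in ("1.0.0", "v1.3.0", "1.10.0"):
    print(candidate, meets_recommendation(candidate))
```

Comparing integer tuples rather than raw strings avoids the common mistake of ranking "1.10.0" below "1.3.0".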

Read more on Govindhtech.com

#H3VMs#H3virtualmachines#computeengine#virtualmachine#VMs#Google#googlecloud#govindhtech#NEWS#TechNews#technology#technologytrends#technologynews


Text

From Chips to Insights: How AMD is Shaping the Future of Artificial Intelligence

Introduction

In an age where technological advancements are reshaping industries and redefining the boundaries of what’s possible, few companies have made a mark quite like Advanced Micro Devices (AMD). Renowned primarily for its semiconductor technology, AMD has transitioned from being a mere chip manufacturer to a pioneering force in the realm of artificial intelligence (AI). This article delves into the various dimensions of AMD's contributions, exploring how its innovations in hardware and software are setting new benchmarks for AI capabilities.

Through robust engineering, strategic partnerships, and groundbreaking research initiatives, AMD is not just riding the wave of AI; it’s actively shaping its future. Buckle up as we explore this transformative journey - from chips to insights.


AMD's evolution is a remarkable tale. Initially focused on producing microprocessors and graphics cards, the company has now pivoted towards harnessing AI technologies to enhance performance across various sectors. But how did this transition happen?

The Genesis: Understanding AMD’s Technological Roots

AMD started its journey as a chip manufacturer but quickly recognized the rising tide of AI. The need for faster processing capabilities became evident with increased data generation across industries. With that realization came a shift in focus towards developing versatile architectures that support machine learning algorithms essential for AI applications.

AMD's Product Portfolio: A Closer Look

One cannot understand how AMD shapes AI without diving into its product offerings:

Ryzen Processors: Known for their multi-threading capabilities that allow parallel processing, crucial for training AI models efficiently.

Radeon GPUs: Graphics cards designed not just for gaming but also optimized for deep learning tasks.

EPYC Server Processors: Tailored for high-performance computing environments where large datasets demand immense processing power.

Each product plays a pivotal role in enhancing computational performance, thus accelerating the pace at which AI can be developed and deployed.


Driving Innovation through Research and Development

AMD's commitment to R&D underpins its success in shaping AI technologies. Investments in cutting-edge research facilities enable the company to innovate continuously. For instance, AMD's ROCm (Radeon Open Compute) platform provides an open-source framework aimed at facilitating machine learning workloads, thereby democratizing access to powerful computing resources.

The Role of Hardware in Artificial Intelligence

Understanding Hardware Acceleration

What exactly is hardware acceleration? It refers to using specialized hardware components—like GPUs or TPUs—to perform certain tasks more efficiently than traditional CPUs alone could manage. In AI contexts, this means faster training times and enhanced model performance.

AMD GPUs and Their Impact on Machine Learning

Graphics Processing Units (GPUs) have become indispensable in machine learning due to their ability to perform multiple cal


Text

Aarna.ml today announced the release of Version 2.0 of its GPU Cloud Management Software (CMS). This new version is designed to transform how AI cloud providers, both public and private, optimize and scale their GPU-as-a-Service (GPUaaS). By enabling multi-tenant sharing of GPU pools, Aarna.ml's software allows cloud providers to boost internal rates of return (IRR) by over 140% while increasing revenue and enabling users to efficiently deploy and scale their AI workloads with hyperscaler-grade cloud features.

The latest release equips AI cloud providers with tools to offer on-demand, fully isolated bare metal, virtual machine (VM), and container instances. This flexibility is crucial for public and private AI cloud providers seeking to maximize their return on investment on GPU infrastructure and extend the lifespan of their investments.

"As inferencing demand grows and aging GPUs become more prevalent, AI cloud providers will need to offer more than just reserved instances," said Mick McNeil, Group Chief Revenue Officer at Northern Data Group. "The industry needs solutions that allow providers to offer multi-tenant on-demand GPU instances, addressing this pressing market need."

"Beyond multi-tenancy and instance creation, Version 2.0 introduces advanced Day 2 capabilities such as observability, scale testing, and fault management," said Amar Kapadia, co-founder and CEO at Aarna.ml. "These features, combined with cloud brokering, position our solution as the only enterprise-grade GPU cloud management offering in the market."

Version 2.0 of Aarna.ml's GPU CMS includes advanced features for both administrators and tenants. Administrators can onboard GPUs, create tenants, and access unified inventory and observability tools. Tenant capabilities include instance creation, management, and enhanced monitoring features. Additionally, the product includes cloud brokering features that enable AI cloud providers to trade capacity through a marketplace. The software is specifically designed to support the NVIDIA Cloud Partner (NCP) and NVIDIA Storage reference architectures.

"The availability of Aarna.ml's GPU CMS 2.0 marks a milestone in the nascent GPU Cloud Management Software landscape," said Roy Chua, Founder and Principal at AvidThink. "For AI cloud providers and enterprises grappling with GPU resource optimization, this solution offers a viable way to unlock the full potential of their high-value GPU investments."

Aarna.ml was recently included in Gartner's 2024 Market Guide for Cloud Infrastructure Vendors for CSPs, making it the only startup featured in the report. This recognition highlights Aarna.ml's innovative approach in a rapidly evolving market.

About Aarna.ml

Aarna.ml is an NVIDIA and venture-backed AI infrastructure software startup. It is the leading provider of GPU Cloud Management Software, dedicated to empowering GPU-as-a-Service AI cloud providers, NVIDIA Cloud Partners (NCPs), and enterprises with hyperscaler-grade multi-tenant GPU sharing. With a seasoned leadership team experienced in networking, storage, server, and cloud technologies, Aarna.ml is at the forefront of transforming GPU resource optimization.

For more information, visit www.aarna.ml.
