#Nginx Proxy Manager Guide

Setting Up Nginx Proxy Manager on Docker with Easy LetsEncrypt SSL

There are many reverse proxy solutions that can manage SSL certificates, in both home lab and production environments. Many people have heard of the Traefik reverse proxy, which can automatically obtain LetsEncrypt certificates for your domain name. However, there is another solution that provides a really great GUI dashboard for managing your reverse proxy configuration and LetsEncrypt…

Boost Your Website with Nginx Reverse Proxy

Hi there, enthusiasts of the web! 🌐

Have you ever wondered how to speed up and protect your website? Let me share a little tip: the Nginx reverse proxy. I promise it will revolutionise the game!

What’s a Reverse Proxy Anyway?

Consider a reverse proxy as the security guard for your website. It manages all incoming traffic and ensures seamless operation by standing between your users and your server. Do you want to go further? Take a look at this fantastic article for configuring the Nginx reverse proxy.
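To make the "standing between your users and your server" idea concrete, here is a toy reverse proxy built only on Python's standard library. It is a sketch for illustration, not a replacement for Nginx: it handles only GET requests and copies just the status, `Content-Type`, and body, and `make_proxy_handler` plus the backend URL you pass to it are hypothetical names of our own. It also adds an `X-Forwarded-For` header, the conventional way a proxy tells the backend the original client's address.

```python
import http.server
import threading
import urllib.error
import urllib.request

# Talk to the backend directly, ignoring any HTTP_PROXY environment settings.
DIRECT_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def make_proxy_handler(backend_url):
    """Build a request handler class that forwards GET requests to backend_url."""

    class ReverseProxyHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            # Re-issue the client's request against the backend. The client only
            # ever sees the proxy; the backend learns the client IP from the header.
            upstream = urllib.request.Request(
                backend_url + self.path,
                headers={"X-Forwarded-For": self.client_address[0]},
            )
            try:
                with DIRECT_OPENER.open(upstream, timeout=5) as resp:
                    status, headers, body = resp.status, resp.headers, resp.read()
            except urllib.error.HTTPError as err:
                # Pass backend error responses (4xx/5xx) through unchanged.
                status, headers, body = err.code, err.headers, err.read()
            except urllib.error.URLError:
                # Backend unreachable: the proxy answers on its behalf.
                status, headers, body = 502, {}, b"Bad Gateway"

            self.send_response(status)
            self.send_header("Content-Type", headers.get("Content-Type", "text/plain"))
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, fmt, *args):
            pass  # keep the demo quiet

    return ReverseProxyHandler


def serve_in_background(server):
    """Run an HTTP server on a daemon thread (handy for demos and tests)."""
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return thread
```

For example, `http.server.ThreadingHTTPServer(("0.0.0.0", 8080), make_proxy_handler("http://127.0.0.1:8000")).serve_forever()` would expose a backend on port 8000 through port 8080 (both ports are placeholders). Clients only ever connect to port 8080, which is why the backend's address stays hidden.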

Why You’ll Love Nginx Reverse Proxy

Load Balancing: Keep your site running smoothly by spreading traffic across multiple servers.

Extra Security: Protect your backend servers by hiding their IP addresses.

SSL Termination: Offload SSL/TLS encryption and decryption from your backend servers by handling it on the proxy.

Caching: Save time and resources by storing copies of frequently accessed content.

Setting Up Nginx Reverse Proxy

It's really not as hard to set up as you may imagine! First, install Nginx on your server. Then edit its configuration file so that it points to your backend servers. Want a detailed how-to guide? Take a look at our comprehensive guide to setting up a reverse proxy on Nginx.

When to Use Nginx Reverse Proxy

Scaling Your Web App: Perfect for managing traffic on large websites.

Microservices: Ideal for routing requests in a microservices architecture.

CDNs: Enhance your content delivery by caching static content.

The End

It's like giving your website superpowers when you add an Nginx reverse proxy to your web configuration. This is essential knowledge to have if you're serious about moving your website up the ladder. Visit our article on configuring Nginx reverse proxy for all the specifics.

Hope this helps! Happy coding! 💻✨

Building Scalable Node.js APIs with NGINX Reverse Proxies

Building a Node.js API Gateway with NGINX and Reverse Proxy

Introduction

In this comprehensive tutorial, we will explore the concept of building a Node.js API Gateway with NGINX and Reverse Proxy. This setup allows for efficient, scalable, and secure API management. By the end of this article, you will have a working understanding of the technical background, implementation guide, and best…

NGINX Server & Custom Load Balancer: Your Guide to Building a Faster, Reliable Web Infrastructure

If you’re exploring ways to improve the speed, reliability, and flexibility of your web applications, you’re in the right place. NGINX Server & Custom Load Balancer solutions have become a top choice for developers and IT teams aiming to create seamless online experiences without breaking the bank. Let’s dive into what makes NGINX a powerful tool and how setting up a custom load balancer can optimize your website for performance and scalability.

What is NGINX?

First off, NGINX (pronounced "Engine-X") isn't just a web server: it's a versatile, high-performance web server, reverse proxy, load balancer, and API gateway. Instead of dedicating a process or thread to each connection, NGINX runs a small number of worker processes, each of which handles many connections through an event-driven, non-blocking loop. That design suits websites with high traffic demands, and it is why NGINX is known for its speed and its ability to handle thousands of simultaneous connections with low resource consumption.

NGINX was created to address the "C10k problem"—the challenge of handling 10,000 concurrent connections on a single server. Thanks to its event-driven architecture, NGINX can serve content faster and manage multiple requests efficiently, making it a go-to choice for businesses of all sizes.
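NGINX itself is written in C and builds its event loop on operating-system facilities such as epoll and kqueue. The Python sketch below is not how NGINX works internally; it only illustrates the same single-threaded, event-driven idea with the standard library's `asyncio`: one thread and one event loop serve many connections concurrently, because every wait on the network hands control back to the loop. The handler, the port (chosen by the OS), and the client count are arbitrary choices for the demo.

```python
import asyncio


async def handle_client(reader, writer):
    """Echo one line back in upper case; each await yields to other connections."""
    line = await reader.readline()
    writer.write(line.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def client(port, n):
    """Open a connection, send one line, and return the server's reply."""
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(f"hello {n}\n".encode())
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply


async def demo(num_clients=100):
    # One thread, one event loop, many concurrent connections.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        replies = await asyncio.gather(*(client(port, i) for i in range(num_clients)))
    return replies
```

Run it with `asyncio.run(demo())`; all the connections are open at the same time, yet no extra threads or processes are created.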

The Benefits of Using an NGINX Server

If you're thinking about adding an NGINX server to your setup, here are some key benefits that may make the decision easier:

High Performance: NGINX is known for speed and low latency, which improves user experience and page loading times.

Resource Efficiency: Compared with servers that dedicate a process or thread to each connection, NGINX uses little CPU and memory per connection, which can lower infrastructure costs.

Scalability: From startups to global enterprises, NGINX scales effortlessly, so it can grow as your business grows.

Security: NGINX offers useful security features, including request and connection rate limiting (which helps absorb abusive traffic such as DDoS floods), IP-based access control, and SSL/TLS termination.

Flexibility: With NGINX Server & Custom Load Balancer capabilities, you can configure NGINX for a variety of uses, from serving static files to complex API management.

What is Load Balancing, and Why Does It Matter?

In simple terms, load balancing is the practice of distributing network or application traffic across multiple servers. This prevents any single server from becoming a bottleneck, ensuring reliable access and high availability for users. When you integrate a Custom Load Balancer with NGINX, you’re setting up an intelligent system that directs traffic efficiently, balances server loads, and maximizes uptime.

Types of Load Balancing Supported by NGINX

NGINX offers several types of load balancing to meet different needs:

Round Robin: Traffic is distributed equally across servers. Simple and effective for small-scale setups.

Least Connections: Traffic is directed to the server with the fewest active connections, ensuring a fair workload distribution.

IP Hash: Requests from the same IP address are routed to the same server, useful for session-based applications.

Custom Load Balancer Rules: With custom configurations, NGINX can be tailored to direct traffic based on a range of factors, from server health to request type.
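The three built-in strategies can be sketched in a few lines of Python to show how each one picks a server. This is a simplified model rather than NGINX's actual code: NGINX's round robin also honours per-server `weight` values, and its `ip_hash` hashes only the first three octets of an IPv4 address, whereas the `IPHash` class below hashes the full address string. The class names are our own, and the server names reuse the `server1.example.com`/`server2.example.com` placeholders from the configuration examples in this post.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest in-flight requests (ties go to list order)."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the caller must call release() when the request ends
        return server

    def release(self, server):
        self.active[server] -= 1


class IPHash:
    """Map each client IP to the same server every time (session affinity)."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # A stable digest (unlike Python's per-process salted hash()) keeps the
        # client-to-server mapping identical across restarts.
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]
```

Round robin ignores how busy each server is, least connections reacts to load but needs per-request bookkeeping, and IP hash trades even distribution for stickiness: if one client IP sends most of the traffic, one server gets most of the load.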

With a Custom Load Balancer set up, NGINX can go beyond typical load balancing, allowing you to define unique rules based on your website’s traffic patterns, application demands, or (with additional modules) geographic location. This helps your NGINX server keep things running smoothly when traffic surges unexpectedly, provided your backend pool has enough capacity to absorb the load.

Setting Up NGINX Server & Custom Load Balancer: A Step-by-Step Guide

To set up NGINX Server & Custom Load Balancer, here’s a quick overview of the key steps:

Step 1: Install NGINX

Start by installing NGINX on your server. It’s widely available for various operating systems, and you can install it using package managers like apt for Ubuntu or yum for CentOS.

```bash
# For Ubuntu
sudo apt update
sudo apt install nginx

# For CentOS
sudo yum install nginx
```

Step 2: Configure Basic Load Balancing

Create a configuration file for your load balancer. This will tell NGINX how to handle incoming requests and distribute them among your backend servers.

```bash
# Open your NGINX configuration file
sudo nano /etc/nginx/conf.d/loadbalancer.conf
```

In this file, define your load balancing rules. For example:

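```nginx
# Files in /etc/nginx/conf.d/ are included inside the http { } block of the
# default nginx.conf, so upstream and server blocks can go here directly.
upstream myapp {
    # Backend pool; with no method specified, requests rotate (round robin).
    server server1.example.com;
    server server2.example.com;
}

server {
    listen 80;
    # Hypothetical domain: on Ubuntu the stock default site also listens on
    # port 80 as default_server, so match your hostname here (or disable it).
    server_name www.example.com;

    location / {
        # Forward every request to the upstream group defined above.
        proxy_pass http://myapp;
    }
}
```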

This simple setup uses round-robin load balancing, where requests are distributed equally between server1 and server2.

Step 3: Customizing Your Load Balancer

With a Custom Load Balancer in NGINX, you can implement more advanced load balancing strategies:

Use least connections to direct traffic to the server with the fewest active connections.

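Add passive health checks (the max_fails and fail_timeout parameters) so NGINX temporarily stops sending traffic to a server that keeps failing. Active health checks, which probe servers on a schedule (the health_check directive), are available only in the commercial NGINX Plus.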

Configure SSL termination to handle HTTPS traffic and secure your website.

Here’s an example configuration that adds least connections and passive health checks:

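```nginx
upstream myapp {
    least_conn;  # send each request to the server with the fewest active connections

    # Passive health checks: after 3 failed attempts within 30s,
    # treat the server as unavailable for the next 30s.
    server server1.example.com max_fails=3 fail_timeout=30s;
    server server2.example.com max_fails=3 fail_timeout=30s;
}
```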

In this setup, NGINX sends each request to the server with the fewest active connections. If a server fails 3 times within 30 seconds, NGINX marks it as unavailable and skips it for the next 30 seconds before trying it again.
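The `max_fails`/`fail_timeout` behaviour can be modelled in a few lines of Python. This is a simplified, hypothetical model of passive health checking, not NGINX's code (its internal bookkeeping differs in detail): failures older than `fail_timeout` are forgotten, and reaching `max_fails` takes the server out of rotation for `fail_timeout` seconds. The injectable `clock` parameter exists only so the timing can be tested without waiting.

```python
import time


class PassiveHealth:
    """After max_fails failures within fail_timeout seconds, skip a server
    for fail_timeout seconds (a simplified model of NGINX's parameters)."""

    def __init__(self, max_fails=3, fail_timeout=30.0, clock=time.monotonic):
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.clock = clock
        self.failures = {}    # server -> timestamps of recent failures
        self.down_until = {}  # server -> time when it may be tried again

    def record_failure(self, server):
        now = self.clock()
        # Keep only failures that happened inside the sliding window.
        recent = [t for t in self.failures.get(server, []) if now - t < self.fail_timeout]
        recent.append(now)
        self.failures[server] = recent
        if len(recent) >= self.max_fails:
            self.down_until[server] = now + self.fail_timeout
            self.failures[server] = []

    def is_available(self, server):
        return self.clock() >= self.down_until.get(server, 0.0)

    def available(self, servers):
        """Filter a server list down to the ones currently in rotation."""
        return [s for s in servers if self.is_available(s)]
```

Because nothing probes a server while it is marked down, the first request after the timeout effectively acts as the health check; that is the main practical difference from NGINX Plus's active checks.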

Step 4: Testing and Fine-Tuning

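After configuring your NGINX Custom Load Balancer, check the configuration syntax with `sudo nginx -t` and apply it with `sudo systemctl reload nginx`. Then test it thoroughly: use tools like curl or Apache Benchmark (ab) to simulate traffic and monitor how requests are distributed.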

Pro Tip: Regularly monitor your load balancer with NGINX Amplify or similar tools to gain insights into traffic patterns and server health.

Key Advantages of Custom Load Balancing with NGINX

A Custom Load Balancer with NGINX provides multiple advantages:

Enhanced User Experience: Faster loading times and minimal downtime mean a better experience for visitors.

Cost Efficiency: Efficient load distribution reduces the risk of overloading servers, saving on infrastructure costs.

Flexibility and Control: With custom rules, you have full control over how traffic is managed and can adjust configurations to fit changing demands.

Scaling Your Application with NGINX

One of the most significant advantages of NGINX Server & Custom Load Balancer is scalability. Whether you’re launching a new app or scaling an existing one, NGINX provides the framework to accommodate growing traffic without sacrificing speed or stability.

For instance, with horizontal scaling across multiple servers, NGINX can distribute traffic evenly and increase your application’s availability. This flexibility is why many high-traffic websites rely on NGINX for load balancing and traffic management.

Common Challenges and Solutions

While NGINX Server & Custom Load Balancer setups are reliable, here are a few challenges that might come up and how to handle them:

Configuration Complexity: Custom setups may become complex. NGINX documentation and forums are excellent resources to troubleshoot any issues.

SSL Configuration: Properly configuring SSL/TLS certificates can be tricky. Use tools like Certbot for automated SSL certificate management.

Monitoring: Effective monitoring is essential. Tools such as NGINX Amplify or Prometheus provide insights into performance metrics, enabling you to spot potential issues early.

Conclusion: Why Choose NGINX Server & Custom Load Balancer?

NGINX Server & Custom Load Balancer offers an impressive combination of speed, reliability, and scalability. With its flexible configurations and robust performance, NGINX can handle diverse web server needs—from basic load balancing to advanced, custom traffic rules.

By implementing a custom load balancer with NGINX, you not only ensure that your website can handle heavy traffic but also lay the groundwork for future growth. Whether you’re just starting or scaling a high-traffic application, NGINX is a tool you can rely on to keep things running smoothly.

Ingress Controller Kubernetes: A Comprehensive Guide

A Kubernetes ingress controller is a critical component that manages external access to services within a cluster. It acts as a reverse proxy that routes incoming traffic to the appropriate backend services according to the rules defined in Ingress resources. The ingress controller also handles load balancing, SSL/TLS termination, and URL-based routing. Understanding how an ingress controller works is essential for managing traffic efficiently and ensuring smooth communication between services in a Kubernetes cluster.

Key Features of a Kubernetes Ingress Controller

A Kubernetes ingress controller offers several features that improve the management of network traffic: path-based routing, host-based routing, SSL/TLS termination, and load balancing. With these capabilities, an ingress controller streamlines traffic management, improves security, and helps keep applications highly available. Understanding these features helps you optimize your Kubernetes setup for your specific traffic management needs.
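Host- and path-based routing boils down to matching each request against a list of rules. The sketch below models the matching rules of the Kubernetes Ingress API in simplified form: the host must match exactly, `Exact` paths must match the whole path, `Prefix` paths match whole `/`-separated segments (so `/api` matches `/api/v1` but not `/apis`), and the longest matching path wins, with `Exact` preferred on a tie. Wildcard hosts and controller-specific extensions are left out, and the `Rule` class and service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    host: str        # e.g. "shop.example.com"
    path: str        # e.g. "/api"
    path_type: str   # "Exact" or "Prefix"
    service: str     # backend Service name (hypothetical)


def _segments(path):
    """Split a URL path into its non-empty segments."""
    return [part for part in path.split("/") if part]


def path_matches(rule, request_path):
    if rule.path_type == "Exact":
        return request_path == rule.path
    # Prefix: compare whole segments, so /api matches /api/v1 but not /apis.
    prefix = _segments(rule.path)
    return _segments(request_path)[: len(prefix)] == prefix


def route(rules, host, path):
    """Return the service for (host, path), or None if no rule matches.

    The longest matching path wins; on a tie, Exact beats Prefix.
    """
    candidates = [r for r in rules if r.host == host and path_matches(r, path)]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: (len(r.path), r.path_type == "Exact"))
    return best.service
```

A request whose host matches no rule gets `None` here; a real controller would send it to its default backend instead.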

How to Set Up a Kubernetes Ingress Controller

Setting up an ingress controller involves three main steps: deploying the controller itself using Kubernetes manifests, creating Ingress resources that define the routing rules, and applying SSL/TLS certificates for secure communication. Proper setup is crucial for the ingress controller to manage traffic effectively and route requests to the correct services.
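As a sketch of the second step, the function below builds a minimal `networking.k8s.io/v1` Ingress resource as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The resource name, host, Service name, port, and TLS Secret name are all hypothetical, and `ingressClassName: nginx` assumes your controller registered an IngressClass with that name (the default for the community ingress-nginx controller).

```python
import json


def make_ingress(name, host, service, port, tls_secret=None, ingress_class="nginx"):
    """Build a networking.k8s.io/v1 Ingress manifest as a plain dict."""
    manifest = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }
    if tls_secret:
        # The Secret must already hold the certificate and key (tls.crt / tls.key).
        manifest["spec"]["tls"] = [{"hosts": [host], "secretName": tls_secret}]
    return manifest


if __name__ == "__main__":
    print(json.dumps(make_ingress("web", "app.example.com", "web-svc", 80,
                                  tls_secret="app-tls"), indent=2))
```

Saving the output as `ingress.json` lets you apply it with `kubectl apply -f ingress.json`; the referenced Service and TLS Secret must already exist in the same namespace as the Ingress.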

Comparing Popular Ingress Controllers for Kubernetes

There are several popular ingress controllers for Kubernetes, each with its own features and capabilities. Common options include the NGINX Ingress Controller, Traefik, and HAProxy Ingress. Comparing them involves evaluating ease of use, performance, scalability, and support for advanced features. Understanding the strengths and limitations of each helps you choose the best solution for your specific use case and requirements.

Troubleshooting Common Issues with Kubernetes Ingress Controllers

Troubleshooting an ingress controller can be challenging but is essential for maintaining a functional and efficient Kubernetes environment. Common problems include incorrect routing, SSL/TLS certificate errors, and performance bottlenecks. Diagnosing and resolving them promptly keeps your ingress controller operating smoothly and reliably.

Security Considerations for Kubernetes Ingress Controllers

Security is a critical aspect of managing an ingress controller. Because it handles all incoming traffic, it is a prime target for attacks. Important security considerations include implementing proper access controls, configuring SSL/TLS encryption, and defending against common threats: a web application firewall can filter attacks such as cross-site scripting (XSS), and rate limiting helps blunt distributed denial-of-service (DDoS) attacks. By addressing these aspects, you can safeguard your Kubernetes environment and ensure secure access to your services.

Advanced Configuration Techniques for Kubernetes Ingress Controllers

Advanced configuration techniques can extend an ingress controller's functionality and performance. These include custom load balancing algorithms, advanced routing rules, and integration with external authentication providers. With these configurations, you can tailor the ingress controller to specific requirements and optimize traffic management for your application's needs.

Best Practices for Managing a Kubernetes Ingress Controller

Managing an ingress controller effectively means following practices that ensure optimal performance and reliability: regularly updating the controller, monitoring traffic patterns, and allocating resources efficiently. By following these practices, you can maintain a well-managed ingress controller that supports the smooth operation of your Kubernetes applications.

The Role of the Ingress Controller in Microservices Architectures

In microservices architectures, the ingress controller plays a vital role in managing traffic that enters the cluster and must reach the right microservice. It enables efficient routing, load balancing, and security for microservices-based applications. Understanding its role helps in designing robust, scalable systems that handle complex traffic patterns and ensure seamless communication with your microservices.

Future Trends in Kubernetes Ingress Controller Technology

Ingress controller technology is constantly evolving, with new trends and innovations emerging. Future trends may include enhanced support for service meshes, improved integration with cloud-native security solutions, and advances in automation and observability. Staying informed about these trends can help you take advantage of the latest ingress controller features in your Kubernetes environment.

Conclusion

A Kubernetes ingress controller is a pivotal component in managing traffic within a Kubernetes cluster. By understanding its features, setup process, and best practices, you can optimize traffic management, enhance security, and improve overall performance. Whether you are troubleshooting common issues or exploring advanced configurations, a well-managed ingress controller is essential for the effective operation of Kubernetes-based applications. Staying updated on future trends and innovations will further help you maintain a cutting-edge and efficient Kubernetes environment.

Setting Up A Docker Registry Proxy With Nginx: A Comprehensive Guide

In today’s software development landscape, Docker has revolutionized the way applications are packaged, deployed, and managed. One of the essential components of deploying Dockerized applications is a registry, which acts as a central repository to store and distribute Docker images. Docker Hub is the default registry used by the Docker client, but in certain scenarios, a Docker Registry Proxy can be advantageous. In this article, we will explore what a Docker Registry Proxy is, why you might need one, and how to set it up.

What is a Docker Registry Proxy?

A Docker Registry Proxy is an intermediary between the client and the Docker registry. It is placed in front of the Docker Registry to provide additional functionality such as load balancing, caching, authentication, and authorization. The proxy acts as a middleman, intercepting requests from clients and forwarding them to the appropriate Docker Registry.

Why do you need a Docker Registry Proxy?

Caching and Performance Improvement: When multiple clients pull the same Docker image from the remote Docker Registry, the proxy can cache the image locally. This caching reduces the load on the remote Docker Registry, speeds up image retrieval, and improves overall performance.

Bandwidth Optimization: When multiple clients pull the same Docker image from the remote Docker Registry, they can consume a significant amount of bandwidth. The proxy reduces bandwidth usage by serving the image from its cache.

Load Balancing: If you have multiple Docker registries hosting the same set of images, a Docker Registry Proxy can distribute incoming requests among them. This load balancing lets you scale your Docker infrastructure and improves availability.

Authentication and Authorization: A Docker Registry Proxy can add a layer of security through authentication and authorization. It can authenticate clients and enforce access control policies before forwarding requests to the remote Docker Registry.

Setting up a Docker Registry Proxy

In this guide we will use `nginx`, a popular web server and reverse proxy server that can act as a Docker Registry Proxy.

Step 1: Install nginx

First, ensure that `nginx` is installed on your machine. The installation process varies depending on your operating system. For example, on Ubuntu, you can install `nginx` with `sudo apt update && sudo apt install nginx`.

Step 2: Configure nginx as a Docker Registry Proxy

Next, configure `nginx` to act as a Docker Registry Proxy. In the `http` block of your nginx configuration (typically `/etc/nginx/nginx.conf`, or a file under `/etc/nginx/conf.d/`), add a `server` block that listens on port 443 with TLS, uses `my-docker-registry-proxy` as its `server_name`, and forwards requests to `remote-docker-registry` with `proxy_pass`.

Replace `my-docker-registry-proxy` with the hostname of your Docker Registry Proxy, and `remote-docker-registry` with the URL of the remote Docker Registry. With this configuration, `nginx` listens on port 443 and forwards all incoming requests to the remote Docker Registry. Caching also requires enabling nginx's `proxy_cache` directives; without them, `nginx` only forwards requests.

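Step 3: Start nginx

After configuring `nginx`, check the configuration with `sudo nginx -t`, then start the service with `sudo systemctl start nginx` (or `sudo systemctl reload nginx` if it is already running) to enable the Docker Registry Proxy.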

Step 4: Test the Docker Registry Proxy

To test the Docker Registry Proxy, point the Docker daemon at it as a registry mirror. Open the daemon configuration file, typically located at `/etc/docker/daemon.json` on Linux, add `"registry-mirrors": ["https://my-docker-registry-proxy"]`, and restart Docker (for example, with `sudo systemctl restart docker`).

Replace `my-docker-registry-proxy` with the hostname or IP address of your Docker Registry Proxy. Note that `registry-mirrors` applies only to images pulled from Docker Hub, so this test assumes your proxy forwards to Docker Hub.

Now, try pulling a Docker image with the Docker client. The request goes to the Docker Registry Proxy, which forwards it to the remote Docker Registry. If caching is enabled and the image is not yet in the proxy’s cache, the proxy fetches it from the remote Docker Registry and caches it for future use.
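The cache behaviour described above (serve from the cache on a hit, fetch and store on a miss) can be sketched independently of nginx. Image layers are content-addressed by digests of the form `sha256:<hex>`, so a cached blob never goes stale and can be verified against its digest. `PullThroughCache` and the `fetch_upstream` callable are hypothetical stand-ins for the proxy and its HTTP request to the remote registry, not part of any Docker or nginx API.

```python
import hashlib


class PullThroughCache:
    """Serve blobs from a local cache, fetching from upstream only on a miss."""

    def __init__(self, fetch_upstream):
        self.fetch_upstream = fetch_upstream  # callable: digest -> bytes (placeholder)
        self.cache = {}                       # digest -> blob bytes
        self.hits = 0
        self.misses = 0

    def get_blob(self, digest):
        if digest in self.cache:
            self.hits += 1
            return self.cache[digest]
        self.misses += 1
        blob = self.fetch_upstream(digest)
        # Content addressing: refuse to cache anything that does not hash to its digest.
        algo, _, expected = digest.partition(":")
        if algo != "sha256" or hashlib.sha256(blob).hexdigest() != expected:
            raise ValueError(f"upstream returned content that does not match {digest}")
        self.cache[digest] = blob  # immutable content, so no expiry is needed
        return blob
```

Every client after the first gets the layer from the local cache, which is where the bandwidth and latency savings described earlier come from.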

Conclusion

A Docker Registry Proxy plays a vital role in managing a Docker infrastructure effectively. It provides caching, load balancing, and security functionality, improving the overall performance and reliability of your Docker application deployments. Using `nginx` as a Docker Registry Proxy is a straightforward and effective solution. By following the steps outlined in this article, you can set up and configure a Docker Registry Proxy using `nginx`.

How to Configure and Set up a Proxy Server

Introduction:

A proxy server acts as an intermediary between a user and the internet. It allows users to route their internet traffic through a different server, providing benefits such as enhanced security, privacy, and access to geographically restricted content. If you’re looking to configure and set up a proxy server, this article will guide you through the process, from understanding the types of proxy servers to implementing them effectively.

Understanding Proxy Servers:

What is a Proxy Server? – A definition and overview of how a proxy server functions, acting as a middleman between a user and the internet.

Types of Proxy Servers – An explanation of the different types of proxy servers, including HTTP, HTTPS, SOCKS, and reverse proxies, highlighting their specific use cases and functionalities.

Choosing a Proxy Server:

Identifying Your Needs – Determine the specific requirements for setting up a proxy server, such as enhanced security, privacy, or accessing geo-restricted content.

Evaluating Proxy Server Options – Research and compare available proxy server solutions, considering factors such as compatibility, performance, and ease of configuration.

Setting up a Proxy Server:

Selecting a Hosting Option – Choose between self-hosted or third-party hosting options based on your technical expertise and infrastructure requirements.

Installing Proxy Server Software – Step-by-step instructions on installing the chosen proxy server software on your server or computer, including popular options like Squid, Nginx, and Apache.

Configuring the Proxy Server:

Proxy Server Settings – Understand the configuration options available, such as port numbers, proxy protocols, and authentication methods, and adjust them to suit your needs.

IP Whitelisting and Blacklisting – Learn how to set up IP whitelisting and blacklisting to control access to your proxy server and protect it from unauthorized usage.
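An IP allow/deny list is essentially a membership test against address ranges. The sketch below uses Python's standard `ipaddress` module; the network ranges are hypothetical examples, and it applies a deny-first, default-deny policy. That ordering is a design choice for this sketch: proxy servers differ (Nginx's `allow`/`deny` rules, for instance, are checked in order and the first match wins).

```python
import ipaddress

# Hypothetical example ranges: allow two private networks, but block one subnet.
ALLOW = [ipaddress.ip_network("10.0.0.0/8"), ipaddress.ip_network("192.168.1.0/24")]
DENY = [ipaddress.ip_network("10.0.5.0/24")]


def is_allowed(client_ip, allow=ALLOW, deny=DENY):
    """Deny wins over allow; anything not explicitly allowed is rejected."""
    addr = ipaddress.ip_address(client_ip)  # raises ValueError for malformed input
    if any(addr in net for net in deny):
        return False
    # An IPv6 address is simply "not in" an IPv4 network, so it falls through.
    return any(addr in net for net in allow)
```

Default-deny is the safer choice for a proxy exposed to the internet: forgetting to list a range locks users out, rather than opening the proxy to everyone.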

Securing the Proxy Server:

Enabling Encryption – Configure SSL/TLS certificates to encrypt communication between the user and the proxy server, safeguarding sensitive information from interception.

Setting Access Controls – Implement access control policies to restrict access to the proxy server, ensuring only authorized users can utilize its services.

Testing and Troubleshooting:

Verifying Proxy Server Functionality – Perform tests to ensure that the proxy server is correctly configured and functioning as expected, checking for any potential issues.

Troubleshooting Common Problems – Identify and resolve common issues that may arise during the setup and configuration process, including network connectivity, port conflicts, and misconfigurations.

Optimizing Proxy Server Performance:

Caching – Configure caching mechanisms on the proxy server to improve performance by storing frequently accessed content locally, reducing the need for repeated requests to the internet.

Bandwidth Management – Adjust bandwidth allocation settings to optimize network resources and ensure a smooth browsing experience for users of the proxy server.
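Bandwidth limits are commonly implemented with a token bucket: tokens (here, bytes) accumulate at a fixed rate up to a burst capacity, and data may be sent only if enough tokens are available. The class below is a minimal sketch of that algorithm with hypothetical rate and capacity values; it is not taken from any particular proxy server, although options such as Nginx's `limit_rate` or Squid's delay pools address the same problem. The `clock` parameter is injectable so the behaviour can be tested deterministically.

```python
import time


class TokenBucket:
    """Allow `rate` bytes per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def try_consume(self, amount):
        """Return True (and spend tokens) if `amount` bytes may be sent now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False
```

A proxy would call `try_consume` before writing each chunk to a client and pause briefly when it returns `False`, which smooths each user's throughput without dropping data.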

Monitoring and Maintenance:

Monitoring Proxy Server Performance – Employ monitoring tools to track the performance and usage of the proxy server, identifying potential bottlenecks or security concerns.

Regular Updates and Patches – Keep the proxy server software up to date by installing patches and updates released by the software provider, ensuring security vulnerabilities are addressed.

Conclusion:

Configuring and setting up a proxy server can provide numerous benefits, including enhanced security, privacy, and access to geographically restricted content. By understanding the different types of proxy servers, selecting the appropriate solution, and following the step-by-step process of installation, configuration, and optimization, you can successfully deploy a proxy server that meets your specific needs. Remember to prioritize security, monitor performance, and perform regular maintenance to ensure the proxy server continues to function optimally. With a properly configured proxy server in place, you can enjoy a safer and more versatile internet browsing experience.

Python FullStack Developer Interview Questions

Introduction:

A Python full-stack developer is a professional who has expertise in both front-end and back-end development using Python as their primary programming language. This means they are skilled in building web applications from the user interface to the server-side logic and the database. Below are common interview questions for Python full-stack developer jobs.

Interview questions

What is the difference between list and tuple in Python?

Explain the concept of PEP 8.

How does Python's garbage collection work?

Describe the differences between Flask and Django.

Explain the Global Interpreter Lock (GIL) in Python.

How does asynchronous programming work in Python?

What is the purpose of the ORM in Django?

Explain the concept of middleware in Flask.

How does WSGI work in the context of web applications?

Describe the process of deploying a Flask application to a production server.

How does data caching improve the performance of a web application?

Explain the concept of a virtual environment in Python and why it's useful.

Questions with answers

What is the difference between list and tuple in Python?

Answer: Lists are mutable, while tuples are immutable. This means that you can change the elements of a list, but once a tuple is created, you cannot change its values.
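A quick demonstration of the difference (the values and the `demo_mutability` name are arbitrary). Because tuples are immutable, and hashable when their items are, they can also serve as dictionary keys, which lists cannot.

```python
def demo_mutability():
    items = [1, 2, 3]
    items[0] = 10        # lists support item assignment...
    items.append(4)      # ...and can grow in place

    point = (1, 2, 3)
    error = None
    try:
        point[0] = 10    # tuples do not support item assignment
    except TypeError as err:
        error = str(err)

    # Immutable, hashable tuples work as dictionary keys; lists would raise TypeError.
    locations = {(40.7, -74.0): "New York"}
    return items, point, error, locations
```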

Explain the concept of PEP 8.

Answer: PEP 8 is the style guide for Python code. It provides conventions for writing code, such as indentation, whitespace, and naming conventions, to make the code more readable and consistent.

How does Python's garbage collection work?

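Answer: CPython manages memory automatically, mainly through reference counting: each object tracks how many references point to it and is freed as soon as that count drops to zero. A supplementary cyclic garbage collector (the `gc` module) periodically finds and frees groups of objects that reference each other but are no longer reachable from the program.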
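Both mechanisms can be observed with `weakref`, which lets you check whether an object still exists without keeping it alive. This sketch assumes CPython; other implementations such as PyPy do not use reference counting, so objects may not be freed at the same moment. The `Node` class and the function names are hypothetical.

```python
import gc
import weakref


class Node:
    def __init__(self, name):
        self.name = name
        self.partner = None


def freed_by_refcount():
    node = Node("a")
    ref = weakref.ref(node)  # a weak reference does not raise the count
    del node                 # last strong reference gone: CPython frees it at once
    return ref() is None


def freed_by_cycle_collector():
    a, b = Node("a"), Node("b")
    a.partner, b.partner = b, a  # reference cycle: the counts never reach zero
    ref = weakref.ref(a)
    del a, b
    gc.collect()                 # the cycle detector finds and frees the pair
    return ref() is None
```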

Describe the differences between Flask and Django.

Answer: Flask is a micro-framework, providing flexibility and simplicity, while Django is a full-stack web framework with built-in features like an ORM, admin panel, and authentication.

Explain the Global Interpreter Lock (GIL) in Python.

Answer: In CPython, the GIL is a mutex that protects access to Python objects, preventing multiple native threads from executing Python bytecode at once. This limits the speed-up of CPU-bound multi-threaded programs; I/O-bound threads are less affected because the GIL is released while they wait on I/O.

How does asynchronous programming work in Python?

Answer: Asynchronous programming in Python is achieved using the asyncio module. It allows non-blocking I/O operations by using coroutines, the async and await keywords, and an event loop.
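A minimal example: three simulated I/O waits (`asyncio.sleep` standing in for network calls, and `fetch` a hypothetical helper) run concurrently under `asyncio.gather`, so the total time is close to the longest single wait rather than the sum of all three.

```python
import asyncio
import time


async def fetch(name, delay):
    # Stand-in for a network call; awaiting lets other coroutines run meanwhile.
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    start = time.perf_counter()
    # gather schedules all three coroutines on the event loop at once.
    results = await asyncio.gather(fetch("a", 0.2), fetch("b", 0.2), fetch("c", 0.2))
    elapsed = time.perf_counter() - start
    return results, elapsed
```

Calling `asyncio.run(main())` returns the three results in argument order after roughly 0.2 seconds instead of 0.6.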

What is the purpose of the ORM in Django?

Answer: The Object-Relational Mapping (ORM) in Django allows developers to interact with the database using Python code, abstracting away the SQL queries. It simplifies database operations and makes the code more readable.

Explain the concept of middleware in Flask.

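Answer: Flask has no dedicated middleware layer of its own; the term usually refers either to request hooks such as `before_request` and `after_request`, which run around view functions, or to WSGI middleware that wraps the application object (`app.wsgi_app`). Both are used for tasks such as authentication, logging, and modifying request/response objects.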

How does WSGI work in the context of web applications?

Answer: Web Server Gateway Interface (WSGI) is a specification for a universal interface between web servers and Python web applications or frameworks. It defines a standard interface for communication, allowing compatibility between web servers and Python web applications.
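The WSGI contract is small enough to show directly: an application is a callable that receives the request `environ` dict and a `start_response` callback, and returns an iterable of bytes. The sketch below (standard library only; `app`, `TimingMiddleware`, and `call_app` are hypothetical names) also shows WSGI middleware, an object that wraps one WSGI app and is itself a WSGI app. In Flask, the same wrapping is done with `app.wsgi_app = TimingMiddleware(app.wsgi_app)`.

```python
import time
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A minimal WSGI application: the server calls it once per request."""
    body = f"Hello from {environ.get('PATH_INFO', '/')}".encode()
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


class TimingMiddleware:
    """WSGI middleware: wraps any WSGI app and adds an X-Elapsed-Ms header."""

    def __init__(self, wrapped):
        self.wrapped = wrapped

    def __call__(self, environ, start_response):
        start = time.perf_counter()

        def timed_start_response(status, headers, exc_info=None):
            elapsed_ms = (time.perf_counter() - start) * 1000
            headers = headers + [("X-Elapsed-Ms", f"{elapsed_ms:.2f}")]
            return start_response(status, headers, exc_info)

        return self.wrapped(environ, timed_start_response)


def call_app(wsgi_app, path="/"):
    """Play the server's role: build an environ, call the app, collect the response."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the other required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        # A real server would also return a write() callable here.
        captured["status"], captured["headers"] = status, dict(headers)

    body = b"".join(wsgi_app(environ, start_response))
    return captured["status"], captured["headers"], body
```

For local testing, `wsgiref.simple_server.make_server("127.0.0.1", 8000, TimingMiddleware(app)).serve_forever()` serves the wrapped app over HTTP; production deployments use a server such as Gunicorn or uWSGI instead.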

Describe the process of deploying a Flask application to a production server.

Answer: Deploying a Flask application involves configuring a production-ready web server (e.g., Gunicorn or uWSGI), setting up a reverse proxy (e.g., Nginx or Apache), and handling security considerations like firewalls and SSL.

How does data caching improve the performance of a web application?

Answer: Data caching involves storing frequently accessed data in memory, reducing the need to fetch it from the database repeatedly. This can significantly improve the performance of a web application by reducing the overall response time.
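For caching inside a single Python process, the standard library's `functools.lru_cache` memoizes function results. In this sketch `get_product` is a hypothetical stand-in for a database query; note that `lru_cache` has no expiry, and applications running several worker processes usually share a cache such as Redis or Memcached instead.

```python
import time
from functools import lru_cache

DB_CALLS = {"count": 0}  # counts how often the "database" is actually hit


@lru_cache(maxsize=1024)
def get_product(product_id):
    """Pretend database lookup; results are memoized per product_id."""
    DB_CALLS["count"] += 1
    time.sleep(0.05)  # simulate query latency
    # Return an immutable tuple so callers cannot mutate the shared cached value.
    return (product_id, f"Product {product_id}")
```

After `get_product(1); get_product(1); get_product(2)`, only two lookups reach the "database", and `get_product.cache_info()` reports one hit and two misses. `get_product.cache_clear()` empties the cache when the underlying data changes.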

Explain the concept of a virtual environment in Python and why it's useful.

Answer: A virtual environment is a self-contained directory with its own Python interpreter (usually a link to or copy of a base installation) and its own set of installed packages. It is useful for isolating project dependencies, preventing conflicts between different projects, and maintaining a clean and reproducible development environment.
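Virtual environments are usually created from the shell with `python -m venv .venv`; the standard library's `venv` module exposes the same operation programmatically. The sketch below creates one in a temporary directory (without pip, to keep it fast) and returns the `pyvenv.cfg` marker file plus the contents of the environment's own interpreter directory. `create_env` and `demo` are hypothetical helper names.

```python
import os
import tempfile
import venv


def create_env(path):
    """Create an isolated environment at `path` and return its pyvenv.cfg text."""
    venv.create(path, with_pip=False)
    # pyvenv.cfg marks the directory as a virtual environment and records
    # ("home = ...") which base interpreter it was created from.
    with open(os.path.join(path, "pyvenv.cfg")) as cfg:
        return cfg.read()


def demo():
    with tempfile.TemporaryDirectory() as workdir:
        env_dir = os.path.join(workdir, ".venv")
        cfg = create_env(env_dir)
        # Executables live in bin/ on POSIX systems and Scripts\ on Windows.
        bin_dir = os.path.join(env_dir, "Scripts" if os.name == "nt" else "bin")
        return cfg, sorted(os.listdir(bin_dir))
```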

Additional questions to prepare for when applying for a Python Full Stack Developer position:

Front-end Development:

What is the Document Object Model (DOM), and how does it relate to front-end development?

Explain the difference between inline, internal, and external CSS styles. When would you use each one?

What is responsive web design, and how can you achieve it in a web application?

Describe the purpose and usage of HTML5 semantic elements.

What is the role of JavaScript in front-end development? How can you handle asynchronous operations in JavaScript?

How can you optimize the performance of a web page, including techniques for reducing load times and improving user experience?

What is Cross-Origin Resource Sharing (CORS), and how can it be addressed in web development?

Back-end Development:

Explain the difference between a web server and an application server. How do they work together in web development?

What is the purpose of a RESTful API, and how does it differ from SOAP or GraphQL?

Describe the concept of middleware in the context of web frameworks like Django or Flask. Provide an example use case for middleware.

How does session management work in web applications, and what are the common security considerations for session handling?

What is an ORM (Object-Relational Mapping), and why is it useful in database interactions with Python?

Discuss the benefits and drawbacks of different database systems (e.g., SQL, NoSQL) for various types of applications.

How can you optimize a database query for performance, and what tools or techniques can be used for profiling and debugging SQL queries?

Full Stack Development:

What is the role of the Model-View-Controller (MVC) design pattern in web development, and how does it apply to frameworks like Django or Flask?

How can you ensure the security of data transfer in a web application? Explain the use of HTTPS, SSL/TLS, and encryption.

Discuss the importance of version control systems like Git in collaborative development and deployment.

What are microservices, and how do they differ from monolithic architectures in web development?

How can you handle authentication and authorization in a web application, and what are the best practices for user authentication?

Describe the concept of DevOps and its role in the full stack development process, including continuous integration and deployment (CI/CD).

These interview questions cover a range of topics relevant to a Python Full Stack Developer position. Be prepared to discuss your experience and demonstrate your knowledge in both front-end and back-end development, as well as the integration of these components into a cohesive web application.

Database Integration: Full stack developers need to work with databases, both SQL and NoSQL, to store and retrieve data. Understanding database design, optimization, and querying is crucial.

Web Frameworks: Questions about web frameworks like Django, Flask, or Pyramid, which are popular in the Python ecosystem, are common. Understanding the framework’s architecture and best practices is essential.

Version Control: Proficiency in version control systems like Git is often assessed, as it’s crucial for collaboration and code management in development teams.

Responsive Web Design: Full stack developers should understand responsive web design principles to ensure that web applications are accessible and user-friendly on various devices and screen sizes.

API Development: Building and consuming APIs (Application Programming Interfaces) is a common task. Understanding RESTful principles and API security is important.

Web Security: Security questions may cover topics such as authentication, authorization, securing data, and protecting against common web vulnerabilities like SQL injection and cross-site scripting (XSS).

DevOps and Deployment: Full stack developers are often involved in deploying web applications. Understanding deployment strategies, containerization (e.g., Docker), and CI/CD (Continuous Integration/Continuous Deployment) practices may be discussed.

Performance Optimization: Questions may be related to optimizing web application performance, including front-end and back-end optimizations, caching, and load balancing.

Coding Best Practices: Expect questions about coding standards, design patterns, and best practices for maintainable and scalable code.

Problem-Solving: Scenario-based questions and coding challenges may be used to evaluate a candidate’s problem-solving skills and ability to think critically.

Soft Skills: Employers may assess soft skills like teamwork, communication, adaptability, and the ability to work in a collaborative and fast-paced environment.
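To make the SQL injection point under Web Security concrete, here is a minimal sketch. It uses Python's built-in sqlite3 module and a hypothetical users table. The `?` placeholder passes the username to the database as a bound parameter. As a result, input such as `' OR '1'='1` is compared as a literal string instead of being spliced into the SQL text.

```python
import sqlite3
from typing import List, Tuple


def find_user(conn: sqlite3.Connection, username: str) -> List[Tuple[int, str]]:
    # Parameterized query: the driver binds username as data, never as SQL
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


def make_demo_db() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


if __name__ == "__main__":
    db = make_demo_db()
    print(find_user(db, "alice"))
    print(find_user(db, "' OR '1'='1"))
```

Suppose the query were built with string formatting instead, for example `f"... WHERE username = '{username}'"`. The second call would then turn into `WHERE username = '' OR '1'='1'` and return every row.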

To prepare for a Python Full Stack Developer interview, it’s important to have a strong understanding of both front-end and back-end technologies, as well as a deep knowledge of Python and its relevant libraries and frameworks. Additionally, you should be able to demonstrate your ability to work on the full development stack, from the user interface to the server infrastructure, while following best practices and maintaining a strong focus on web security and performance.

Python Full Stack Developer interviews often include a combination of technical assessments, coding challenges, and behavioral or situational questions to comprehensively evaluate a candidate’s qualifications, skills, and overall fit for the role. Here’s more information on each of these interview components:

1. Technical Assessments:

Technical assessments typically consist of questions related to various aspects of full stack development, including front-end and back-end technologies, Python, web frameworks, databases, and more.

These questions aim to assess a candidate’s knowledge, expertise, and problem-solving skills in the context of web development using Python.

Technical assessments may include multiple-choice questions, fill-in-the-blank questions, and short-answer questions.

2. Coding Challenges:

Coding challenges are a critical part of the interview process for Python Full Stack Developers. Candidates are presented with coding problems or tasks to evaluate their programming skills, logical thinking, and ability to write clean and efficient code.

These challenges can be related to web application development, data manipulation, algorithmic problem-solving, or other relevant topics.

Candidates are often asked to write code in Python to solve specific problems or implement certain features.

Coding challenges may be conducted on a shared coding platform, where candidates can write and execute code in real-time.

3. Behavioral or Situational Questions:

Behavioral or situational questions assess a candidate’s soft skills, interpersonal abilities, and how well they would fit within the company culture and team.

These questions often focus on the candidate’s work experiences, decision-making, communication skills, and how they handle challenging situations.

Behavioral questions may include scenarios like, “Tell me about a time when you had to work under tight deadlines,” or “How do you handle conflicts within a development team?”

4. Project Discussions and Portfolio Review:

Candidates may be asked to discuss their previous projects, providing details about their roles, responsibilities, and the technologies they used.

Interviewers may inquire about the candidate’s contributions to the project, the challenges they faced, and how they overcame them.

Reviewing a candidate’s portfolio or past work is a common practice to understand their practical experience and the quality of their work.

5. Technical Whiteboard Sessions:

Some interviews may include whiteboard sessions where candidates are asked to solve technical problems or explain complex concepts on a whiteboard.

This assesses a candidate’s ability to communicate technical ideas and solutions clearly.

6. System Design Interviews:

For more senior roles, interviews may involve system design discussions. Candidates are asked to design and discuss the architecture of a web application, focusing on scalability, performance, and data storage considerations.

7. Communication and Teamwork Evaluation:

Throughout the interview process, assessors pay attention to how candidates communicate their ideas, interact with interviewers, and demonstrate their ability to work in a collaborative team environment.

8. Cultural Fit Assessment:

Employers often evaluate how well candidates align with the company’s culture, values, and work ethic. They may ask questions to gauge a candidate’s alignment with the organization’s mission and vision.

Conclusion:

Preparing for a Python Full Stack Developer interview involves reviewing technical concepts, practicing coding challenges, and developing strong communication and problem-solving skills. Candidates should be ready to discuss their past experiences, showcase their coding abilities, and demonstrate their ability to work effectively in a full stack development role, which often requires expertise in both front-end and back-end technologies.

Thanks for reading! We hope you liked the article. If you want to take the Full Stack Masters course from our institute, please attend our live demo sessions or contact us at +918464844555. We provide the best Online Full Stack Developer Course in Hyderabad with an affordable course fee structure.

Installing Nginx Proxy Manager with Docker Compose on Linux (Guide)

Nginx Proxy Manager is open-source software based on the Nginx web server. Alongside Apache, Nginx is one of the most widely used web servers for all kinds of web applications. In this installation guide, we install Nginx Proxy Manager in a Docker container. After a successful installation, configuration and management can be done through the web interface (dashboard). With just a few mouse clicks, the graphical user interface lets you install free Let's Encrypt certificates and set up reverse proxies...[Read more]

#Nginx #Proxy #Nginx Proxy Manager #Reverse Proxy #Webserver #Ports #Tutorial #Computer Science #Server Administration #Hosting #Linux #Guide #IT Specialist #Computer Science Degree #IT Security

Installing Nginx, MySQL, PHP (LEMP) Stack on Ubuntu 18.04

Ubuntu Server 18.04 LTS (Bionic Beaver) is finally here and is being rolled out across VPS hosts such as DigitalOcean and AWS. In this TunzaDev guide, we will install a LEMP stack (Nginx, MySQL, PHP) and configure a web server.

Prerequisites

You should use a non-root user account with sudo privileges. Please see the Initial server setup for Ubuntu 18.04 guide for more details.

1. Install Nginx

Let’s begin by updating the package lists and installing Nginx on Ubuntu 18.04. Below we have two commands separated by &&. The first command will update the package lists to ensure you get the latest version and dependencies for Nginx. The second command will then download and install Nginx.

sudo apt update && sudo apt install nginx

Once installed, check to see if the Nginx service is running.

sudo service nginx status

If Nginx is running correctly, you should see a green Active state below.

● nginx.service - A high performance web server and a reverse proxy server
   Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
   Active: active (running) since Wed 2018-05-09 20:42:29 UTC; 2min 39s ago
     Docs: man:nginx(8)
  Process: 27688 ExecStart=/usr/sbin/nginx -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
  Process: 27681 ExecStartPre=/usr/sbin/nginx -t -q -g daemon on; master_process on; (code=exited, status=0/SUCCESS)
 Main PID: 27693 (nginx)
    Tasks: 2 (limit: 1153)
   CGroup: /system.slice/nginx.service
           ├─27693 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;
           └─27695 nginx: worker process

You may need to press q to exit the service status.

2. Configure Firewall

If you haven’t already done so, it is recommended that you enable the ufw firewall and add a rule for Nginx. Before enabling ufw firewall, make sure you add a rule for SSH, otherwise you may get locked out of your server if you’re connected remotely.

sudo ufw allow OpenSSH

If you get the error "ERROR: Could not find a profile matching 'OpenSSH'", this probably means you are not configuring the server remotely, and you can ignore it.

Now add a rule for Nginx.

sudo ufw allow 'Nginx HTTP'

Rule added
Rule added (v6)

Enable ufw firewall.

sudo ufw enable

Press y when asked to proceed.

Now check the firewall status.

sudo ufw status

Status: active

To                         Action      From
--                         ------      ----
OpenSSH                    ALLOW       Anywhere
Nginx HTTP                 ALLOW       Anywhere
OpenSSH (v6)               ALLOW       Anywhere (v6)
Nginx HTTP (v6)            ALLOW       Anywhere (v6)

That’s it! Your Nginx web server on Ubuntu 18.04 should now be ready.

3. Test Nginx

Go to your web browser and visit your domain or IP. If you don’t have a domain name yet and don’t know your IP, you can find out with:

sudo ifconfig | grep -Eo 'inet (addr:)?([0-9]*\.){3}[0-9]*' | grep -Eo '([0-9]*\.){3}[0-9]*' | grep -v '127.0.0.1'

You can find this Nginx default welcome page in the document root directory /var/www/html. To edit this file in the nano text editor:

sudo nano /var/www/html/index.nginx-debian.html

To save and close nano, press CTRL + X and then press y and ENTER to save changes.

Your Nginx web server is ready to go! You can now add your own HTML files and images to the /var/www/html directory as you please.

However, you should familiarize yourself with Server Blocks and set up at least one for Nginx, as most of our Ubuntu 18.04 guides are written with Server Blocks in mind. Please see the article Installing Nginx on Ubuntu 18.04 with Multiple Domains. Server Blocks allow you to host multiple websites/domains on one server. Even if you only ever intend to host one website or one domain, it's still a good idea to configure at least one Server Block.

If you don’t want to set up Server Blocks, continue to the next step to set up MySQL.

4. Install MySQL

Let’s begin by updating the package lists and installing MySQL on Ubuntu 18.04. Below we have two commands separated by &&. The first command will update the package lists to ensure you get the latest version and dependencies for MySQL. The second command will then download and install MySQL.

sudo apt update && sudo apt install mysql-server

Press y and ENTER when prompted to install the MySQL package.

Once the package installer has finished, we can check to see if the MySQL service is running.

sudo service mysql status

If running, you will see a green Active status like below.

● mysql.service - MySQL Community Server
   Loaded: loaded (/lib/systemd/system/mysql.service; enabled; vendor preset: enabled)
   Active: active (running) since Wed 2018-05-09 21:10:24 UTC; 16s ago
 Main PID: 30545 (mysqld)
    Tasks: 27 (limit: 1153)
   CGroup: /system.slice/mysql.service
           └─30545 /usr/sbin/mysqld --daemonize --pid-file=/run/mysqld/mysqld.pid

You may need to press q to exit the service status.

5. Configure MySQL Security

You should now run mysql_secure_installation to configure security for your MySQL server.

sudo mysql_secure_installation

If you created a root password during the MySQL installation (Step 4), you may be prompted to enter it here. Otherwise, you will be asked to create one.

You will be asked if you want to set up the Validate Password Plugin. It’s not really necessary unless you want to enforce strict password policies for some reason.

Securing the MySQL server deployment.

Connecting to MySQL using a blank password.

VALIDATE PASSWORD PLUGIN can be used to test passwords and improve security. It checks the strength of password and allows the users to set only those passwords which are secure enough. Would you like to setup VALIDATE PASSWORD plugin?

Press y|Y for Yes, any other key for No:

Press n and ENTER here if you don’t want to set up the validate password plugin.

Please set the password for root here.

New password:

Re-enter new password:

If you didn’t create a root password in Step 1, you must now create one here.

Generate a strong password and enter it. Note that when you enter passwords in Linux, nothing will show as you are typing (no stars or dots).

By default, a MySQL installation has an anonymous user, allowing anyone to log into MySQL without having to have a user account created for them. This is intended only for testing, and to make the installation go a bit smoother. You should remove them before moving into a production environment. Remove anonymous users? (Press y|Y for Yes, any other key for No) :

Press y and ENTER to remove anonymous users.

Normally, root should only be allowed to connect from 'localhost'. This ensures that someone cannot guess at the root password from the network. Disallow root login remotely? (Press y|Y for Yes, any other key for No) :

Press y and ENTER to disallow root login remotely. This will prevent bots and hackers from trying to guess the root password.

By default, MySQL comes with a database named 'test' that anyone can access. This is also intended only for testing, and should be removed before moving into a production environment. Remove test database and access to it? (Press y|Y for Yes, any other key for No) :

Press y and ENTER to remove the test database.

Reloading the privilege tables will ensure that all changes made so far will take effect immediately. Reload privilege tables now? (Press y|Y for Yes, any other key for No) :

Press y and ENTER to reload the privilege tables.

All done!

As a test, you can log into the MySQL server and run the version command.

sudo mysqladmin -p -u root version

Enter the MySQL root password you created earlier and you should see the following:

mysqladmin  Ver 8.42 Distrib 5.7.22, for Linux on x86_64
Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Server version          5.7.22-0ubuntu18.04.1
Protocol version        10
Connection              Localhost via UNIX socket
UNIX socket             /var/run/mysqld/mysqld.sock
Uptime:                 4 min 28 sec

Threads: 1  Questions: 15  Slow queries: 0  Opens: 113  Flush tables: 1  Open tables: 106  Queries per second avg: 0.055

You have now successfully installed and configured MySQL for Ubuntu 18.04! Continue to the next step to install PHP.

6. Install PHP

Unlike Apache, Nginx does not contain native PHP processing. For that, we have to install PHP-FPM (FastCGI Process Manager). FPM is an alternative PHP FastCGI implementation with some additional features that are useful for heavily loaded sites.

Let’s begin by updating the package lists and installing PHP-FPM on Ubuntu 18.04. We will also install php-mysql to allow PHP to communicate with the MySQL database. Below we have two commands separated by &&. The first command will update the package lists to ensure you get the latest version and dependencies for PHP-FPM and php-mysql. The second command will then download and install PHP-FPM and php-mysql. Press y and ENTER when asked to continue.

sudo apt update && sudo apt install php-fpm php-mysql

Once installed, check the PHP version.

php --version

If PHP was installed correctly, you should see something similar to below.

PHP 7.2.3-1ubuntu1 (cli) (built: Mar 14 2018 22:03:58) ( NTS )
Copyright (c) 1997-2018 The PHP Group
Zend Engine v3.2.0, Copyright (c) 1998-2018 Zend Technologies
    with Zend OPcache v7.2.3-1ubuntu1, Copyright (c) 1999-2018, by Zend Technologies

Above we are using PHP version 7.2, though this may be a later version for you.

Depending on what version of Nginx and PHP you install, you may need to manually configure the location of the PHP socket that Nginx will connect to.

List the contents for the directory /var/run/php/

ls /var/run/php/

You should see a few entries here.

php7.2-fpm.pid php7.2-fpm.sock

Above we can see the socket is called php7.2-fpm.sock. Remember this as you may need it for the next step.
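If you manage several servers, this lookup can be scripted. Here is a small Python sketch. It assumes the Ubuntu layout shown above, where PHP-FPM sockets live in /var/run/php and have names like php7.2-fpm.sock. The helper names are hypothetical.

```python
import glob
import os
from typing import List


def find_php_fpm_sockets(run_dir: str = "/var/run/php") -> List[str]:
    # Match socket names such as php7.2-fpm.sock; the .pid file is ignored
    return sorted(glob.glob(os.path.join(run_dir, "php*-fpm.sock")))


def fastcgi_pass_directive(socket_path: str) -> str:
    # Build the Nginx directive that goes into the server block
    return f"fastcgi_pass unix:{socket_path};"


if __name__ == "__main__":
    for sock in find_php_fpm_sockets():
        print(fastcgi_pass_directive(sock))
```

If more than one PHP version is installed, more than one socket will be listed. Pick the one that matches the version you want Nginx to use.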

7. Configure Nginx for PHP

We now need to make some changes to our Nginx server block.

The location of the server block may vary depending on your setup. By default, it is located in /etc/nginx/sites-available/default.

However, if you have previously set up custom server blocks for multiple domains in one of our previous guides, you will need to add the PHP directives to each server block separately. A typical custom server block file location would be /etc/nginx/sites-available/mytest1.com.

For the moment, we will assume you are using the default. Edit the file in nano.

sudo nano /etc/nginx/sites-available/default

Press CTRL + W and search for index.html.

Now add index.php before index.html:

/etc/nginx/sites-available/default

index index.php index.html index.htm index.nginx-debian.html;

Press CTRL + W and search for the line server_name.

Enter your server’s IP here or domain name if you have one.

/etc/nginx/sites-available/default

server_name YOUR_DOMAIN_OR_IP_HERE;

Press CTRL + W and search for the line location ~ \.php.

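You will need to uncomment some lines here by removing the # signs in front of them. Uncomment the location ~ \.php$ { line, the include snippets/fastcgi-php.conf; line, the fastcgi_pass line for php-fpm (unix sockets), and the closing brace, so that the block matches the example below.

Also ensure that the value of the fastcgi_pass socket path is correct. For example, if you installed PHP version 7.2, the socket should be: /var/run/php/php7.2-fpm.sock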

If you are unsure which socket to use here, exit out of nano and run ls /var/run/php/

/etc/nginx/sites-available/default

...
location ~ \.php$ {
	include snippets/fastcgi-php.conf;
	#
	# # With php-fpm (or other unix sockets):
	fastcgi_pass unix:/var/run/php/php7.2-fpm.sock;
	# # With php-cgi (or other tcp sockets):
	# fastcgi_pass 127.0.0.1:9000;
}
...

Once you’ve made the necessary changes, save and close (Press CTRL + X, then press y and ENTER to confirm save)

Now check the config file to make sure there are no syntax errors. Any errors could prevent Nginx from starting when it is restarted.

sudo nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful

If no errors, you can reload the Nginx config.

sudo service nginx reload

8. Test PHP

To see if PHP is working correctly on Ubuntu 18.04, let's create a new PHP file called info.php in the document root directory. By default, this is located in /var/www/html/. If you set up multiple domains in a previous guide, it may be somewhere like /var/www/mytest1.com/public_html

Once you have the correct document root directory, use the nano text editor to create a new file info.php

sudo nano /var/www/html/info.php

Type or paste the following code into the new file. (if you’re using PuTTY for Windows, right-click to paste)

/var/www/html/info.php

<?php
phpinfo();
?>

Save and close (Press CTRL + X, then press y and ENTER to confirm save)

You can now view this page in your web browser by visiting your server’s domain name or public IP address followed by /info.php: http://your_domain_or_IP/info.php

phpinfo() outputs a large amount of information about the current state of PHP. This includes information about PHP compilation options and extensions, the PHP version and server information.
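As a minimal sketch, this check can be scripted: fetch the page and look for the "PHP Version" heading that phpinfo() prints. If the response still contains the raw `<?php` source, Nginx is serving the file as plain text instead of passing it to PHP-FPM. The function names are hypothetical, and the URL you pass in is a placeholder for your own domain or IP.

```python
import urllib.request


def php_is_executed(body: str) -> bool:
    # phpinfo() output contains a "PHP Version" heading; seeing the raw
    # source instead means the .php file was not handed to PHP-FPM
    return "PHP Version" in body and "<?php" not in body


def check_php(url: str, timeout: float = 5.0) -> bool:
    # Fetch the test page and decode it leniently before inspecting it
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        body = resp.read().decode("utf-8", errors="replace")
    return php_is_executed(body)
```

Usage: `check_php("http://your_domain_or_IP/info.php")` should return True once the PHP location block is active.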

You have now successfully installed PHP-FPM for Nginx on Ubuntu 18.04 LTS (Bionic Beaver).

Make sure to delete info.php as it contains information about the web server that could be useful to attackers.

sudo rm /var/www/html/info.php

What Next?

Now that your Ubuntu 18.04 LEMP web server is up and running, you may want to install phpMyAdmin so you can manage your MySQL server.

Installing phpMyAdmin for Nginx on Ubuntu 18.04

To set up a free SSL cert for your domain:

Configuring Let’s Encrypt SSL Cert for Nginx on Ubuntu 18.04

You may want to install and configure an FTP server

Installing an FTP server with vsftpd (Ubuntu 18.04)

We also have several other articles relating to the day-to-day management of your Ubuntu 18.04 LEMP server

Hey champ! - You’re all done!

Feel free to ask me any questions in the comments below.

Root CA & Self-Signed Certs

Connection not secured?

This guide was copied from https://blog.ligos.net/2021-06-26/Be-Your-Own-Certificate-Authority.html, with some adaptations for my own specific use case.

In this guide I will do the following:

Create your own CA cert

Create your own certs for personal use, minted (signed) by your own CA cert

Convert the certs into PEM format for an NGINX reverse proxy or web server

Create your own CA (1)

Create: Using PowerShell as administrator, run the following command to create your own CA:

New-SelfSignedCertificate -Subject "CN=YOUR-NAME CA 2023,E=YOUR-EMAIL,O=YOUR-NAME,DC=YOUR-SITE,DC=WEBSITE,S=NSW,C=AU" -FriendlyName "YOUR-CA-NAME" -NotAfter (Get-Date).AddYears(50) -KeyUsage CertSign,CRLSign,DigitalSignature -TextExtension "2.5.29.19={text}CA=1&pathlength=1" -KeyAlgorithm "RSA" -KeyLength 4096 -HashAlgorithm 'SHA384' -KeyExportPolicy Exportable -CertStoreLocation cert:\CurrentUser\My -Type Custom

Save the thumbprint shown in the output. You will need it later to mint the new custom certs.

Export: From the Windows Start menu, open Manage user certificates > Personal > Certificates > right-click the cert > All Tasks > Export > Next (until asked) > Yes, export the private key > select Export all extended properties > set a password > save it to a location of your choice.

Import your own CA as a Trusted Root Certification Authorities (2)

Double-click the file you just saved > Certificate Import Wizard > select Current User > Next (until asked) > enter the password you set during export > select Enable strong private key protection and Mark this key as exportable > place the certificate in Trusted Root Certification Authorities > Next until done.

Create your own certs minted by your own CA (3)

New-SelfSignedCertificate -DnsName @("your-own-domain.com") -Type SSLServerAuthentication -Signer Cert:\CurrentUser\My\D557C06E8A0F1B455375CD68581E10BD88FAB4B1 -NotAfter (Get-Date).AddYears(10) -KeyAlgorithm "RSA" -KeyLength 2048 -HashAlgorithm 'SHA256' -KeyExportPolicy Exportable -CertStoreLocation cert:\CurrentUser\My

Replace D557C06E8A0F1B455375CD68581E10BD88FAB4B1 with the thumbprint of your own CA that you saved earlier.

Export and convert your certs to be used with NGINX (4)

This is similar to step 2, where we exported the CA for backup and for import into the trusted root store. Here we do the same, but we export the cert for our own domain instead of the CA.

We will export 2 copies, one with the private key and one without. As you may have guessed, NGINX needs two files for an SSL setup: the certificate and its private key.

The export steps are exactly as in step 2. If you want more detailed instructions, refer to the original guide, which has an in-depth step-by-step walkthrough.

In my case I have saved the certs as following:

zc-cert.cer (cert file without private key)

zc-private.pfx (cert file with private key)

I need to convert them both into PEM format so I can use them in NGINX. The steps for the private key, using a Linux box, are:

1 - openssl pkcs12 -in zc-private.pfx -out zc-private.key
2 - openssl rsa -in zc-private.key -out private.pem

Please note: in step 1 you will need the password you originally chose when you exported the cert. openssl will also ask you to set a PEM pass phrase for zc-private.key, and step 2 asks for that pass phrase again before it writes the unencrypted private.pem. For simplicity, pick one password and use it throughout the process. This is for your own home use, so you do not need a 20-character password.

Below is the command to convert zc-cert.cer (no private key) into a PEM-format SSL cert:

openssl x509 -inform der -in zc-cert.cer -out cert.pem
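The same DER-to-PEM conversion can also be done without the OpenSSL command line. Below is a minimal sketch using Python's standard ssl module. It assumes zc-cert.cer was exported in DER (binary) format, as the `-inform der` flag above implies. PEM is simply the DER bytes, Base64-encoded, between BEGIN/END CERTIFICATE lines. The function name is hypothetical.

```python
import ssl


def der_file_to_pem_file(der_path: str, pem_path: str) -> None:
    # Read the binary DER certificate exported from Windows
    with open(der_path, "rb") as f:
        der_bytes = f.read()
    # Base64-encode it between -----BEGIN/END CERTIFICATE----- markers
    pem_text = ssl.DER_cert_to_PEM_cert(der_bytes)
    with open(pem_path, "w", encoding="ascii") as f:
        f.write(pem_text)
```

Usage: `der_file_to_pem_file("zc-cert.cer", "cert.pem")`. Note that this only re-encodes the bytes. It does not validate the certificate.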

By now you should have 2 files, which together are used by an NGINX web instance:

cert.pem
private.pem

An example NGINX reverse proxy configuration using the certs we just created:

server {
    listen 443 ssl;
    server_name your-own-domain.com;
    proxy_redirect off;
    ssl_certificate /etc/ssl/certs/cert.pem;
    ssl_certificate_key /etc/ssl/private/private.pem;

    location / {
        add_header 'Access-Control-Allow-Origin' '*';
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_buffering off;
        client_max_body_size 0;
        proxy_connect_timeout 3600s;
        proxy_read_timeout 3600s;
        proxy_send_timeout 3600s;
        send_timeout 3600s;
        proxy_pass https://your-redirected-website.com/;
    }
}

The location and names of these files are not important, as long as the configuration points to the correct place.
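Before reloading NGINX, you may want to confirm that cert.pem and private.pem actually belong together. Here is a minimal sketch using Python's ssl module. `load_cert_chain` refuses a certificate and key that do not match, as well as files that are not valid PEM. This is roughly the check NGINX performs when it loads `ssl_certificate` and `ssl_certificate_key`. The function name is hypothetical.

```python
import ssl


def cert_and_key_match(cert_path: str, key_path: str) -> bool:
    # A server-side TLS context, comparable to what NGINX sets up for "listen 443 ssl"
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    try:
        # Fails if either file is missing, is not valid PEM,
        # or if the private key does not belong to the certificate
        ctx.load_cert_chain(certfile=cert_path, keyfile=key_path)
    except (ssl.SSLError, OSError):
        return False
    return True
```

Usage with the paths from the example configuration: `cert_and_key_match("/etc/ssl/certs/cert.pem", "/etc/ssl/private/private.pem")`. Reading /etc/ssl/private usually requires root.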

Welcome to today’s guide on how to insta... https://www.computingpost.com/how-to-install-ansible-awx-on-ubuntu-20-0418-04/?feed_id=16530&_unique_id=6356433b83b11

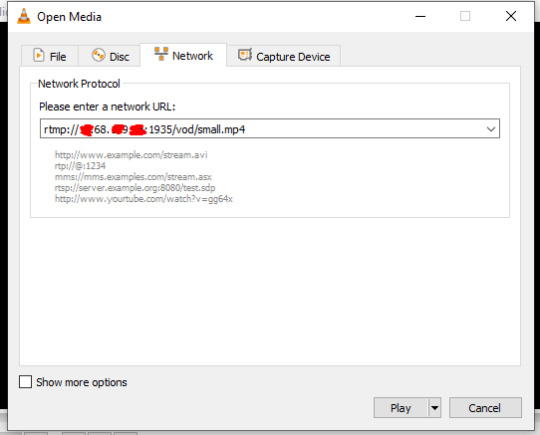

RTMP Server on CentOS

In this tutorial, I will show how you can stream live and stored video from your NGINX server. The solution takes advantage of the Real-Time Messaging Protocol (RTMP) module for NGINX. The RTMP module provides HLS and MPEG-DASH live streaming capabilities for those who want a lightweight solution based on the HTTP protocol.

You can configure NGINX to stream video using one or both of the HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (DASH) protocols. Because these streams are delivered over HTTP, they can pass through any firewall or proxy server that allows HTTP data to pass. This also makes it possible to use all the power and flexibility of nginx HTTP configuration, including SSL, access control, logging, request limiting, etc.

The stream is published in the MPEG-TS format over HTTP. MPEG-TS is a widely adopted, well-known and well-documented streaming format.

We will install all of these packages on Ubuntu 20.04 Server. The overall steps are as follows. First, install all the dependencies needed to build NGINX and the RTMP module. Next, download the sources, unpack them, and compile NGINX with the nginx-rtmp-module and http_ssl modules. Finally, edit the NGINX configuration, add an RTMP section to enable the RTMP live service, and start the server.

Before compiling NGINX, you need some basic tools on your Ubuntu server, such as autoconf, gcc, git, and make. NGINX also depends on several libraries: Perl Compatible Regular Expressions (PCRE), OpenSSL, and Zlib for compression. The easiest way to install these dependencies is with a package manager. First update the package lists:

sudo apt update

Then install the dependencies:

sudo apt install libpcre3-dev libssl-dev zlib1g-dev

To build NGINX with RTMP support, clone the GitHub repositories for RTMP and NGINX, run the NGINX configure command with the RTMP module added, and then compile NGINX:

auto/configure --add-module=./nginx-rtmp-module

Installing NGINX Plus on RHEL 8.0+, CentOS 8.0+, and Oracle Linux 8.0+: NGINX Plus can be installed on the following versions of CentOS/Oracle Linux/RHEL: CentOS 8.0+ (x86_64, aarch64) and Red Hat Enterprise Linux 8.

NGINX Server & Custom Load Balancer: A Comprehensive Guide

NGINX is industry-leading open-source software for web serving, reverse proxying, caching, load balancing, media streaming, and more. Initially developed as a web server, NGINX has grown into a versatile tool for handling some of the most complex load balancing needs in modern web infrastructure. This guide covers the ins and outs of setting up and using NGINX as a Custom Load Balancer, tailored for businesses and developers who need robust and scalable solutions.

What is NGINX?

NGINX is a high-performance HTTP and reverse proxy server that is optimized for handling multiple concurrent connections. Unlike traditional web servers, NGINX employs an event-driven architecture that makes it resource-efficient and capable of handling massive traffic without performance degradation.

Key Features of NGINX:

Static Content Serving: Quick delivery of static files, such as HTML, images, and JavaScript.

Reverse Proxy: Routes client requests to multiple servers.

Load Balancing: Distributes traffic across several servers.

Security: Built-in protections such as request filtering and rate limiting, which help mitigate DDoS attacks.

Why Use NGINX for Load Balancing?

Load balancing is essential for managing heavy web traffic by distributing requests across multiple servers. NGINX Load Balancer capabilities provide:

Increased availability and reliability by distributing traffic load.

Improved scalability by adding more servers seamlessly.

Enhanced fault tolerance with automatic failover options.

Incorporating NGINX as a Load Balancer allows businesses to accommodate spikes in traffic while reducing the risk of a single point of failure. It is an ideal choice for enterprise-grade applications and is widely used by companies like Airbnb, Netflix, and GitHub.

Types of Load Balancing with NGINX

NGINX supports different load balancing methods to suit various requirements:

Round Robin Load Balancing: The simplest form, Round Robin, distributes requests in a cyclic manner. Each request goes to the next server in line, ensuring an even distribution of traffic.

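Least Connections Load Balancing: With Least Connections, NGINX routes traffic to the server with the fewest active connections. This method is useful when request durations vary or when servers differ in capacity, because a slower server accumulates active connections and therefore receives fewer new requests.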

IP Hash Load Balancing: This approach directs clients with the same IP address to the same server. IP Hash is commonly used in scenarios where sessions are sticky and users need to interact with the same server.

Custom Load Balancer: NGINX also allows for custom configuration, where administrators can define load balancing algorithms tailored to specific needs, including failover strategies and request weighting.
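The selection rules above can be modeled in a few lines of Python. This is a simplified sketch for intuition, not NGINX's C implementation: the round-robin function follows the "smooth weighted round robin" idea (with equal weights it reduces to plain cycling), least connections divides active connections by weight and breaks ties by list order, and the IP hash keys IPv4 clients on their first three octets as NGINX's `ip_hash` does, but uses SHA-256 instead of NGINX's own hash function. The `Backend` class and the function names are hypothetical.

```python
import hashlib
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass
class Backend:
    name: str
    weight: int = 1
    active: int = 0   # open connections, used by least connections
    current: int = 0  # running score, used by smooth weighted round robin


def round_robin(pool: List[Backend]) -> Backend:
    """Smooth weighted round robin; with equal weights it simply cycles."""
    total = sum(b.weight for b in pool)
    for b in pool:
        b.current += b.weight
    chosen = max(pool, key=lambda b: b.current)  # the first maximum wins ties
    chosen.current -= total
    return chosen


def least_connections(pool: List[Backend]) -> Backend:
    """Fewest active connections relative to weight; ties go to the earlier server."""
    return min(pool, key=lambda b: b.active / b.weight)


def ip_hash(pool: List[Backend], client_ip: str) -> Backend:
    """Same client network -> same backend, as long as the pool does not change."""
    addr = ipaddress.ip_address(client_ip)
    key = addr.packed[:3] if addr.version == 4 else addr.packed  # /24 for IPv4
    digest = hashlib.sha256(key).digest()
    return pool[int.from_bytes(digest[:4], "big") % len(pool)]


pool = [Backend("backend1", weight=5), Backend("backend2"), Backend("backend3")]
print([round_robin(pool).name for _ in range(7)])
# ['backend1', 'backend1', 'backend2', 'backend1', 'backend3', 'backend1', 'backend1']
```

The smooth variant interleaves the lighter servers between picks of the heavy one instead of sending five requests in a row to `backend1`.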

Setting Up NGINX as a Custom Load Balancer

Let’s walk through a step-by-step configuration of NGINX as a Custom Load Balancer.

Prerequisites

A basic understanding of NGINX configuration.

Multiple backend servers to distribute the traffic.

NGINX installed on the load balancer server.

Step 1: Install NGINX

First, ensure that NGINX is installed. For Ubuntu/Debian, use the following command:

```bash
sudo apt update
sudo apt install nginx
```

Step 2: Configure Backend Servers

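Define the backend servers in an `upstream` block inside the `http` context of your NGINX configuration (for example, in a file under /etc/nginx/conf.d/). These servers receive traffic only once a `server` block forwards requests to the group with `proxy_pass http://backend_servers;`. On Ubuntu/Debian, the packaged default site also listens on port 80, so either remove /etc/nginx/sites-enabled/default or give your load balancer's `server` block a matching `server_name`.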

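```nginx
# Inside the http context, e.g. /etc/nginx/conf.d/load-balancer.conf
upstream backend_servers {
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}

# Forward incoming requests to the upstream group
server {
    listen 80;
    location / {
        proxy_pass http://backend_servers;
    }
}
```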

Step 3: Configure Load Balancing Algorithm

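You can change the load balancing method to suit your requirements. Round robin is the default when no method is specified; to use another, add its directive (such as `least_conn;` or `ip_hash;`) inside the `upstream` block. Here's an example that sets up the Least Connections method: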

```nginx
upstream backend_servers {
    least_conn;  # send each request to the server with the fewest active connections
    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}
```

Step 4: Setting Up Failover

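Add failover parameters so that requests are automatically rerouted when a server stops responding. With the settings below, NGINX treats a server as unavailable for 30 seconds once 3 attempts to reach it fail within a 30-second window, and sends requests to the remaining servers in the meantime.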

```nginx
upstream backend_servers {
    # After 3 failed attempts within 30s, skip the server for 30s
    server backend1.example.com max_fails=3 fail_timeout=30s;
    server backend2.example.com max_fails=3 fail_timeout=30s;
}
```
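The `max_fails`/`fail_timeout` behavior described above can be modeled as a small state machine. This Python sketch is a simplified, hypothetical model of NGINX's passive health checks (the `Peer` class and `pick` helper are illustrative names, and resetting the counter on success is a simplification). NGINX's own defaults are `max_fails=1` and `fail_timeout=10s`, and the real balancer combines this bookkeeping with whichever load balancing method is configured.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Peer:
    name: str
    max_fails: int = 1          # NGINX defaults: max_fails=1, fail_timeout=10s
    fail_timeout: float = 10.0
    fails: int = 0
    window_start: float = 0.0   # start of the current failure-counting window
    down_until: float = 0.0     # the peer is skipped until this time

    def available(self, now: float) -> bool:
        return now >= self.down_until

    def report(self, ok: bool, now: float) -> None:
        if ok:
            self.fails = 0      # simplification: a success clears the failure count
            return
        if self.fails == 0 or now - self.window_start > self.fail_timeout:
            self.fails, self.window_start = 0, now  # start a new window
        self.fails += 1
        if self.fails >= self.max_fails:
            # Too many failures inside the window: take the peer out of rotation.
            self.down_until = now + self.fail_timeout
            self.fails = 0


def pick(peers: List[Peer], now: float) -> Optional[Peer]:
    """Return the first available peer (a stand-in for the real balancing method)."""
    return next((p for p in peers if p.available(now)), None)


peers = [Peer("backend1", max_fails=3, fail_timeout=30), Peer("backend2", max_fails=3, fail_timeout=30)]
for t in (0, 1, 2):  # three failed attempts within 30 s
    peers[0].report(ok=False, now=t)
print(pick(peers, now=5).name)   # backend2: backend1 is out of rotation
print(pick(peers, now=32).name)  # backend1: its 30 s timeout has passed
```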

Step 5: Test and Reload NGINX

After making changes, test your configuration for syntax errors and reload NGINX:

```bash
sudo nginx -t
sudo systemctl reload nginx
```

Benefits of Using NGINX for Custom Load Balancing

Scalability: Effortlessly scale applications by adding more backend servers.

Improved Performance: Distribute traffic efficiently to ensure high availability.

Security: Adds a protective layer in front of the backend servers, and its rate and connection limits help mitigate DDoS attacks and other threats.

Customization: The flexibility of NGINX configuration allows you to tailor the load balancing to specific application needs.

Advanced NGINX Load Balancing Strategies

For highly dynamic applications or those with specialized traffic patterns, consider these advanced strategies:

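Dynamic Load Balancing: Uses health checks to adjust traffic based on server responsiveness. Open-source NGINX relies on passive checks (the `max_fails` and `fail_timeout` parameters), while active health checks with the `health_check` directive require NGINX Plus.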

SSL Termination: NGINX can handle SSL offloading, reducing the load on backend servers.

Caching: By enabling caching on NGINX, you reduce backend load and improve response times for repetitive requests.
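The caching idea can be illustrated with a small time-to-live (TTL) cache placed in front of a backend call. This is a hypothetical in-memory sketch, not how NGINX stores its cache: NGINX's `proxy_cache` keeps responses on disk, keys them by `proxy_cache_key` (by default `$scheme$proxy_host$request_uri`), and honors upstream caching headers. The `ResponseCache` class and its injectable clock are assumptions made to keep the example testable.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Serve repeated requests from memory for `ttl` seconds, keyed by request URI."""

    def __init__(self, fetch: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch  # function that calls the backend
        self.ttl = ttl
        self.clock = clock
        self.store: Dict[str, Tuple[float, str]] = {}  # uri -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, uri: str) -> str:
        now = self.clock()
        entry = self.store.get(uri)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]          # fresh copy: the backend is not contacted
        self.misses += 1
        body = self.fetch(uri)       # only misses and expired entries reach the backend
        self.store[uri] = (now + self.ttl, body)
        return body


cache = ResponseCache(lambda uri: f"rendered {uri}", ttl=60)
cache.get("/products")
cache.get("/products")
print(cache.hits, cache.misses)  # 1 1
```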

Comparison of NGINX with Other Load Balancers

| Feature | NGINX | HAProxy | Apache Traffic Server |
|---|---|---|---|
| Performance | High | Very High | Moderate |
| SSL Termination | Supported | Supported | Limited |
| Customization | Extensive | High | Moderate |
| Ease of Setup | Moderate | Moderate | High |

NGINX remains a popular choice for both small and large enterprises thanks to its flexibility, robust features, and extensive customization options.

Real-World Applications of NGINX Load Balancing

Companies across industries use NGINX load balancing for:

E-commerce Sites: Distributes traffic to ensure high performance during peak shopping seasons.

Streaming Services: Helps manage bandwidth to provide uninterrupted video streaming.

Financial Services: Enables reliable traffic distribution, crucial for transaction-heavy applications.

Conclusion

Setting up NGINX as a Custom Load Balancer offers significant benefits, including high availability, robust scalability, and enhanced security. By leveraging NGINX’s load balancing capabilities, organizations can maintain optimal performance and ensure a smooth experience for users, even during peak demand.


Ingress Controller Kubernetes: A Comprehensive Guide

An Ingress controller is a critical component of Kubernetes environments that manages external access to Services within a cluster. It acts as a reverse proxy, routing incoming traffic to the appropriate backend Services according to rules defined in Ingress resources. The Ingress controller also handles load balancing, SSL/TLS termination, and URL-based routing. Understanding how an Ingress controller works is essential for managing traffic efficiently and ensuring smooth communication with the services in a Kubernetes cluster.

Key Features of a Kubernetes Ingress Controller

An Ingress controller offers several key features that improve the management of network traffic in a Kubernetes environment: path-based routing, host-based routing, SSL/TLS termination, and load balancing. By combining these capabilities, an Ingress controller streamlines traffic management, improves security, and helps keep applications highly available. Understanding these features helps you optimize your Kubernetes setup for specific traffic management needs.
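Host-based and path-based routing follow the matching rules of the Kubernetes Ingress API: a rule without a host applies to all traffic, `Prefix` paths match element by element (so `/api` matches `/api/orders` but not `/apiv2`), the longest matching path wins, and an `Exact` path beats a `Prefix` path of the same length. The Python sketch below models those rules for illustration only. The `Rule`, `IngressPath`, and `route` names are hypothetical, wildcard hosts are not modeled, and letting host-specific rules shadow catch-all rules is a simplifying assumption; each controller implements matching in its own way.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IngressPath:
    path: str
    path_type: str  # "Exact" or "Prefix"
    service: str


@dataclass
class Rule:
    host: str  # "" means the rule applies to every host
    paths: List[IngressPath]


def prefix_matches(prefix: str, request_path: str) -> bool:
    """Element-wise match: "/api" matches "/api" and "/api/orders", not "/apiv2"."""
    want = [part for part in prefix.split("/") if part]
    got = [part for part in request_path.split("/") if part]
    return got[:len(want)] == want


def route(rules: List[Rule], host: str, request_path: str) -> Optional[str]:
    # Rules naming this host are used if any exist; otherwise fall back to host-less rules.
    specific = [r for r in rules if r.host == host]
    candidates = specific or [r for r in rules if r.host == ""]
    matches = []
    for rule in candidates:
        for p in rule.paths:
            if p.path_type == "Exact" and p.path == request_path:
                matches.append((len(p.path), 1, p.service))
            elif p.path_type == "Prefix" and prefix_matches(p.path, request_path):
                matches.append((len(p.path.rstrip("/")), 0, p.service))
    # Longest path wins; on equal length, Exact (1) beats Prefix (0).
    return max(matches)[2] if matches else None


rules = [
    Rule("", [IngressPath("/", "Prefix", "default-svc")]),
    Rule("shop.example.com", [
        IngressPath("/", "Prefix", "web-svc"),
        IngressPath("/api", "Prefix", "api-svc"),
        IngressPath("/api/health", "Exact", "health-svc"),
    ]),
]
print(route(rules, "shop.example.com", "/api/orders"))  # api-svc
```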

How to Set Up a Kubernetes Ingress Controller

Setting up an Ingress controller involves several steps: deploying the controller itself (for example, from its Kubernetes manifests), creating Ingress resources that define routing rules, and adding SSL/TLS certificates for secure communication. An Ingress resource has no effect until a controller is running to act on it, so proper setup is crucial for the controller to route requests to the correct Services.
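As an illustration of the Ingress resource mentioned above, the Python script below builds a `networking.k8s.io/v1` Ingress with one host rule and TLS, and prints it as JSON, which `kubectl apply -f -` accepts just as it accepts YAML. The names `web`, `app.example.com`, `web-svc`, and `app-tls`, and the ingress class `nginx` (the usual class name for the NGINX Ingress Controller), are placeholder assumptions. The TLS Secret must already exist as a `kubernetes.io/tls` Secret in the same namespace as the Ingress.

```python
import json
from typing import Optional


def make_ingress(name: str, host: str, service: str, port: int,
                 tls_secret: Optional[str] = None, ingress_class: str = "nginx") -> dict:
    """Return an Ingress manifest that sends all paths on `host` to one Service."""
    spec = {
        "ingressClassName": ingress_class,
        "rules": [{
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": service, "port": {"number": port}}},
            }]},
        }],
    }
    if tls_secret:
        # TLS is terminated by the controller using the certificate in this Secret.
        spec["tls"] = [{"hosts": [host], "secretName": tls_secret}]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    manifest = make_ingress("web", "app.example.com", "web-svc", 80, tls_secret="app-tls")
    print(json.dumps(manifest, indent=2))
```

Usage, assuming the script is saved as make_ingress.py (a hypothetical file name): `python make_ingress.py | kubectl apply -f -`.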

Comparing Popular Ingress Controllers for Kubernetes

Several popular Ingress controllers are available for Kubernetes, each with its own features and capabilities. Common options include the NGINX Ingress Controller, Traefik, and HAProxy Ingress. Comparing them involves evaluating ease of use, performance, scalability, and support for advanced features. Understanding the strengths and limitations of each controller helps you choose the best one for your specific use case and requirements.

Troubleshooting Common Issues with Kubernetes Ingress Controllers

Troubleshooting an Ingress controller can be challenging, but it is essential for keeping a Kubernetes environment functional and efficient. Common problems include incorrect routing, SSL/TLS certificate errors, and performance bottlenecks. Diagnosis usually starts with the controller's logs, the events shown by `kubectl describe ingress`, and the certificate that the controller actually serves to clients.
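For the SSL/TLS certificate errors mentioned above, one quick check is whether the certificate served for a host has expired or is about to. The sketch below uses only Python's standard `ssl` and `socket` modules; the function names are hypothetical. Because it connects with normal verification, an expired, untrusted, or mismatched certificate surfaces as `ssl.SSLCertVerificationError` rather than as a date.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect with normal certificate verification and return the cert's notAfter."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left until a notAfter timestamp such as 'Jan  5 09:34:43 2018 GMT'."""
    now = time.time() if now is None else now
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400
```

For example, `days_until_expiry(fetch_not_after("app.example.com"))`, where the hostname is a placeholder for one of your Ingress hosts; a negative result means the certificate has already expired.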

Security Considerations for Kubernetes Ingress Controllers

Security is a critical aspect of running an Ingress controller. Because the controller handles all incoming traffic, it is a likely target for attacks. Important measures include proper access controls, SSL/TLS encryption, rate limiting to blunt distributed denial-of-service (DDoS) attacks, and, where the controller supports a web application firewall, filtering of common attacks such as cross-site scripting (XSS). Addressing these aspects helps safeguard your Kubernetes environment and keep access to your services secure.

Advanced Configuration Techniques for Kubernetes Ingress Controllers

Advanced configuration can extend an Ingress controller's functionality and performance. Techniques include custom load balancing algorithms, advanced routing rules, and integration with external authentication providers. With these configurations, you can tailor the Ingress controller to specific requirements and optimize traffic management for your application's needs.
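One example of a custom load balancing algorithm is consistent hashing, which NGINX exposes as `hash $key consistent;` (the ketama method). Its key property is that removing a backend only remaps the keys that backend owned, so most clients keep their server. The Python sketch below demonstrates the idea; the `HashRing` name, the SHA-256 hash, and 100 ring points per backend are arbitrary illustrative choices, not NGINX's parameters.

```python
import bisect
import hashlib
from typing import List


class HashRing:
    """Consistent hashing: each backend owns many points on a 32-bit ring."""

    def __init__(self, backends: List[str], replicas: int = 100) -> None:
        self.ring = sorted(
            (self._hash(f"{b}#{i}"), b) for b in backends for i in range(replicas)
        )
        self.points = [point for point, _ in self.ring]

    @staticmethod
    def _hash(key: str) -> int:
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:4], "big")

    def lookup(self, key: str) -> str:
        """Return the backend owning the first ring point at or after hash(key)."""
        i = bisect.bisect_left(self.points, self._hash(key)) % len(self.points)
        return self.ring[i][1]


ring = HashRing(["b1", "b2", "b3", "b4"])
print(ring.lookup("user-42"))
```

With plain modulo hashing, removing one of four backends would move most keys; here only the keys that `b4` owned move.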

Best Practices for Managing Kubernetes Ingress Controllers

Managing an Ingress controller effectively means following practices that keep it performant and reliable: update the controller regularly, monitor traffic patterns, and allocate resources (CPU, memory, replicas) to match the load. Following these practices keeps the Ingress controller well managed and supports the smooth operation of your Kubernetes applications.

The Role of Ingress Controllers in Microservices Architectures

In microservices architectures, the Ingress controller plays a vital role in directing external traffic to the right microservices. It provides efficient routing, load balancing, and security at the cluster edge for microservices-based applications. Understanding this role helps in designing robust, scalable systems that handle complex traffic patterns; traffic between the microservices themselves typically flows through Kubernetes Services or a service mesh rather than through the Ingress controller.

Future Trends in Kubernetes Ingress Technology

Ingress controller technology is constantly evolving. Future trends may include stronger support for service meshes, tighter integration with cloud-native security solutions, and advances in automation and observability. Staying informed about these trends helps you take advantage of the latest Ingress controller capabilities in your Kubernetes environment.

Conclusion

The Ingress controller is a pivotal component for managing traffic within a Kubernetes cluster. By understanding its features, setup process, and best practices, you can optimize traffic management, strengthen security, and improve overall performance. Whether you are troubleshooting common issues or exploring advanced configurations, a well-managed Ingress controller is essential for running Kubernetes-based applications effectively, and staying current with new developments helps keep your environment efficient.


Install Angry IP Scanner on Ubuntu 20.04


First, update your local package index with `sudo apt update`. Afterwards, install Nginx with `sudo apt install nginx`. After you accept the procedure, apt will install Nginx and any required dependencies on your server.

Step 2 – Adjusting the Firewall

Before testing Nginx, the firewall software needs to be adjusted to allow access to the service. Nginx registers itself as a service with ufw upon installation, making it straightforward to allow Nginx access.

List the application configurations that ufw knows how to work with by typing `sudo ufw app list`. You should get a listing of the application profiles.

To confirm that the service is running, check its status with `systemctl status nginx`. The output should look similar to this:

```
nginx.service - A high performance web server and a reverse proxy server
   Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)
   Active: active (running) since Fri 16:08:19 UTC; 3 days ago
           ├─2369 nginx: master process /usr/sbin/nginx -g daemon on; master_process on;
```

As confirmed by this output, the service has started successfully. However, the best way to test this is to actually request a page from Nginx.

You can access the default Nginx landing page to confirm that the software is running properly by navigating to your server's IP address. If you do not know your server's IP address, you can find it with an external IP lookup service, which reports your public IP address as seen from another location on the internet.

When you have your server's IP address, enter it into your browser's address bar: http://your_server_ip

You should receive the default Nginx landing page. If you are on this page, your server is running correctly and is ready to be managed.

Now that you have your web server up and running, let's review some basic management commands.

To start the web server when it is stopped, type `sudo systemctl start nginx`.

To stop and then start the service again, type `sudo systemctl restart nginx`.

If you are only making configuration changes, Nginx can often reload without dropping connections. To do this, type `sudo systemctl reload nginx`.

By default, Nginx is configured to start automatically when the server boots. If this is not what you want, you can disable this behavior by typing `sudo systemctl disable nginx`.

To re-enable the service to start up at boot, you can type `sudo systemctl enable nginx`.

You have now learned basic management commands and should be ready to configure the site to host more than one domain.
