#OpenCV Projects Final Year


OpenCV projects using Raspberry Pi | Takeoff

The Blind Reader is a small, affordable device designed to help blind people read printed text. Traditional Braille machines can be very expensive, so not everyone can buy them. The Blind Reader is different because it's cheaper and easier for more people to use.

This device works using a small computer called a Raspberry Pi and a webcam. Here’s how it works step-by-step:

1. Capture the Text: You place a page with printed text under the webcam. The webcam takes a picture of the page.

2. Convert Image to Text: The Raspberry Pi uses a technology called Optical Character Recognition (OCR) to convert the picture into digital text. This means it reads the image and understands what letters and words are on the page.

3. Process the Text: The device makes sure the text is clear and readable. It fixes problems such as the text being tilted at an angle or laid out in multiple columns. Straightening tilted text is called skew correction, and splitting the page into columns or blocks is called segmentation.

4. Read Aloud: Once the text is clear, the Raspberry Pi uses a Text-to-Speech (TTS) program to change the text into spoken words.

5. Output the Audio: The spoken words are sent to speakers through an audio amplifier, so the sound is loud and clear.
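The post does not say how the device performs skew correction. One classic approach is a projection-profile search, shown here only as an illustration. It tries a range of angles and keeps the one at which the text pixels line up into the fewest rows. The sketch below assumes a binarized page stored as a NumPy array, with text pixels non-zero. The function name, the ±15° search range and the 0.25° step are my own choices, not part of the original project.

```python
import numpy as np

def estimate_skew_degrees(binary: np.ndarray, max_angle: float = 15.0,
                          step: float = 0.25) -> float:
    """Estimate page skew with a projection-profile search (illustrative sketch).

    `binary` is a 2D array whose non-zero pixels are text. For each candidate
    angle, the text pixels are rotated back by that angle and counted per row.
    Well-aligned text piles up in few rows, so the sharpest profile (largest
    sum of squared row counts) marks the skew. Positive angles mean the text
    rises from left to right. Search range and step are assumed defaults.
    """
    ys, xs = np.nonzero(binary)
    if xs.size == 0:
        return 0.0  # blank page: nothing to correct
    xs = xs.astype(float)
    ys = -ys.astype(float)  # image rows grow downwards; flip so "up" is positive

    candidates = np.arange(-max_angle, max_angle + step / 2, step)
    best_angle, best_score = 0.0, -1.0
    # Visit small angles first so ties resolve towards "no rotation"
    for angle in sorted(candidates, key=abs):
        t = np.radians(angle)
        v = -xs * np.sin(t) + ys * np.cos(t)  # vertical coordinate after undoing `angle`
        rows = np.round(v).astype(int)
        counts = np.bincount(rows - rows.min()).astype(float)
        score = float(np.sum(counts ** 2))
        if score > best_score:
            best_angle, best_score = float(angle), score
    return best_angle
```

Once the angle is known, the page can be rotated by the opposite angle before it is passed to OCR. OpenCV's cv2.getRotationMatrix2D and cv2.warpAffine can do this. The search is brute force, so a coarser step or a narrower range keeps it fast on a Raspberry Pi.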

At Takeoffprojects, The Blind Reader is a complete system that helps visually impaired people by reading printed text aloud. It is easy to use and portable, so you can carry it around and use it anywhere. The device makes reading more accessible for blind people, helping them become more independent and gain better access to information. OpenCV projects like this show the power of technology to improve lives.



image processing chennai

2025-2026 Image Processing Projects in Chennai. Perfect for final-year students, these projects cover facial recognition, object detection, image segmentation, and medical diagnostics using Python, MATLAB, and OpenCV. Chennai’s best project centers offer IEEE-guided topics, real-time datasets, hands-on training, and certification support. Build smart, scalable solutions and gain the skills needed for the booming tech industry.


YOLO Python Projects in Chennai

Master real-time object detection with cutting-edge YOLO Python Projects in Chennai. Ideal for final-year students, these projects help you build smart surveillance systems, traffic monitoring tools, and AI vision applications using the YOLO (You Only Look Once) algorithm and Python libraries like OpenCV and TensorFlow. Chennai’s leading project centers offer hands-on training, expert mentorship, documentation, and internship support for academic and career excellence.


When AI Meets Medicine: Periodontal Diagnosis Through Deep Learning by Para Projects

In the ever-evolving landscape of modern healthcare, artificial intelligence (AI) is no longer a futuristic concept—it is a transformative force revolutionizing diagnostics, treatment, and patient care. One of the latest breakthroughs in this domain is the application of deep learning to periodontal disease diagnosis, a condition that affects millions globally and often goes undetected until it progresses to severe stages.

In a pioneering step toward bridging technology with dental healthcare, Para Projects, a leading engineering project development center in India, has developed a deep learning-based periodontal diagnosis system. This initiative is not only changing the way students approach AI in biomedical domains but also contributing significantly to the future of intelligent, accessible oral healthcare.

Understanding Periodontal Disease: A Silent Threat

Periodontal disease, commonly known as gum disease, refers to infections and inflammation of the gums and bone that surround and support the teeth. It typically begins as gingivitis (gum inflammation) and, if left untreated, can lead to periodontitis, which causes tooth loss and affects overall systemic health.

The problem? Periodontal disease is often asymptomatic in its early stages. Diagnosis usually requires a combination of clinical examinations, radiographic analysis, and manual probing—procedures that are time-consuming and prone to human error. Additionally, access to professional diagnosis is limited in rural and under-resourced regions.

This is where AI steps in, offering the potential for automated, consistent, and accurate detection of periodontal disease through the analysis of dental radiographs and clinical data.

The Role of Deep Learning in Medical Diagnostics

Deep learning, a subset of machine learning, uses multi-layered artificial neural networks, loosely inspired by the human brain, to analyze complex data patterns. In the context of medical diagnostics, it has proven particularly effective in image recognition, classification, and anomaly detection.

When applied to dental radiographs, deep learning models can be trained to:

Identify alveolar bone loss

Detect tooth mobility or pocket depth

Differentiate between healthy and diseased tissue

Classify disease severity levels

This not only accelerates the diagnostic process but also ensures objective and reproducible results, enabling better clinical decision-making.

Para Projects: Where Innovation Meets Education

Recognizing the untapped potential of AI in dental diagnostics, Para Projects has designed and developed a final-year engineering project titled “Deep Periodontal Diagnosis: A Hybrid Learning Approach for Accurate Periodontitis Detection.” This project serves as a perfect confluence of healthcare relevance and cutting-edge technology.

With a student-friendly yet professionally guided approach, Para Projects transforms a complex AI application into a doable and meaningful academic endeavor. The project has been carefully designed to offer:

Real-world application potential

Exposure to biomedical datasets and preprocessing

Use of deep learning frameworks like TensorFlow and Keras

Comprehensive support from coding to documentation

Inside the Project: How It Works

The periodontal diagnosis project by Para Projects is structured to simulate a real diagnostic system. Here’s how it typically functions:

Data Acquisition and Preprocessing

Students are provided with a dataset of dental radiographs (e.g., panoramic X-rays or periapical films). Using tools like OpenCV, they learn to clean and enhance the images by:

Normalizing pixel intensity

Removing noise and irrelevant areas

Annotating images using bounding boxes or segmentation maps
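The code below sketches the first two cleaning steps. It min-max normalizes pixel intensities to the range [0, 1], then removes speckle noise with a 3×3 median filter. It uses plain NumPy so each step is visible. The function names are hypothetical, and the project itself may use OpenCV equivalents such as cv2.normalize and cv2.medianBlur.

```python
import numpy as np

def normalize_intensity(image: np.ndarray) -> np.ndarray:
    """Min-max scale pixel values to the range [0, 1] (hypothetical helper)."""
    img = image.astype(float)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)  # flat image: no contrast to stretch
    return (img - lo) / (hi - lo)

def median_filter3(image: np.ndarray) -> np.ndarray:
    """3x3 median filter; border pixels are repeated so the output keeps its size."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the nine shifted views of the image and take the per-pixel median
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows), axis=0)
```

A median filter is a common choice for radiograph speckle because it removes isolated outlier pixels without blurring edges as much as a mean filter does.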

Feature Extraction

Using convolutional neural networks (CNNs), the system is trained to detect and extract features such as:

Bone-level irregularities

Shape and texture of periodontal ligaments

Visual signs of inflammation or damage
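A CNN extracts such features by sliding small filters over the image. The sketch below shows the core operation of one convolution layer: a "valid" 2D cross-correlation followed by ReLU. Deep learning libraries compute cross-correlation rather than a flipped convolution. A hand-picked horizontal-edge kernel stands in for a learned one. The toy image, a bright region above a darker one, is a hypothetical stand-in for a bone boundary. In a trained network the kernel values would come from training.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation: the core of a CNN convolution layer
    (bias and multiple channels omitted for clarity)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

# Hand-picked kernel (an assumption): responds to bright-above-dark edges
EDGE_KERNEL = np.array([[1, 1, 1],
                        [0, 0, 0],
                        [-1, -1, -1]], dtype=float)
```

The feature map lights up only where the edge lies inside the 3×3 window. ReLU discards the opposite polarity, which is why real networks learn many kernels per layer.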

Classification and Diagnosis

The extracted features are passed through the layers of a deep learning model, which classifies the images into categories such as:

Healthy

Mild periodontitis

Moderate periodontitis

Severe periodontitis
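The final layer of such a classifier typically outputs one raw score per category and turns the scores into probabilities with a softmax. The sketch below shows that last step for the four categories above. The raw scores (logits) in the example are made up for illustration and are not output from the Para Projects model.

```python
import math

CLASSES = ["Healthy", "Mild periodontitis", "Moderate periodontitis", "Severe periodontitis"]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def diagnose(logits):
    """Return the most probable class label and the full probability list."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs

# Hypothetical scores for one radiograph
label, probs = diagnose([0.2, 2.5, 0.1, -1.0])
print(label, [round(p, 3) for p in probs])
```

These per-class probabilities are the kind of "probability scores" a report can display next to the predicted category.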

Visualization and Reporting

The system outputs visual heatmaps and probability scores, offering a user-friendly interpretation of the diagnosis. These outputs can be further converted into PDF reports, making the system suitable for both academic submission and potential real-world use.

Academic Value Meets Practical Impact

For final-year engineering students, working on such a project presents a dual benefit:

Technical Mastery: Students gain hands-on experience with real AI tools, including neural network modeling, dataset handling, and performance evaluation using metrics like accuracy, precision, and recall.

Social Relevance: The project addresses a critical healthcare gap, equipping students with the tools to contribute meaningfully to society.
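For reference, the sketch below computes the evaluation metrics named in the Technical Mastery point: overall accuracy, plus precision and recall for one chosen class. It works from lists of true and predicted labels. The labels and the choice of "severe" as the class of interest are illustrative assumptions. scikit-learn's accuracy_score, precision_score and recall_score provide the same metrics.

```python
def classification_metrics(y_true, y_pred, positive):
    """Return (accuracy, precision, recall), treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    return accuracy, precision, recall

# Hypothetical labels for five radiographs
y_true = ["healthy", "severe", "mild", "severe", "healthy"]
y_pred = ["healthy", "severe", "severe", "mild", "healthy"]
print(classification_metrics(y_true, y_pred, positive="severe"))
```

For a diagnostic tool, recall on the severe class is often the number to watch, because a missed severe case is costlier than a false alarm.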

With expert mentoring from Para Projects, students don’t just build a project—they develop a solution that has real diagnostic value.

Why Choose Para Projects for AI-Medical Applications?

Para Projects has earned its reputation as a top-tier academic project center by focusing on three pillars: innovation, accessibility, and support. Here’s why students across India trust Para Projects:

🔬 Expert-Led Guidance: Each project is developed under the supervision of experienced AI and domain experts.

📚 Complete Project Kits: From code to presentation slides, students receive everything needed for successful academic evaluation.

💻 Hands-On Learning: Real datasets, practical implementation, and coding tutorials make learning immersive.

💬 Post-Delivery Support: Para Projects ensures students are prepared for viva questions and reviews.

💡 Customization: Projects can be tailored based on student skill levels, interest, or institutional requirements.

Whether it’s a B.E., B.Tech, M.Tech, or interdisciplinary program, Para Projects offers robust solutions that connect education with industry relevance.

From Classroom to Clinic: A Future-Oriented Vision

Healthcare is increasingly leaning on predictive technologies for better outcomes. In this context, AI-driven dental diagnostics can transform public health, especially in regions with limited access to dental professionals. What began as a classroom project at Para Projects can, with further development, evolve into a clinical tool, contributing to preventive healthcare systems across the world.

Students who engage with such projects don’t just gain knowledge—they step into the future of AI-powered medicine, potentially inspiring careers in biomedical engineering, health tech entrepreneurship, or AI research.

Conclusion: Diagnosing with Intelligence, Healing with Innovation

The fusion of AI and medicine is not just a technological shift; it is a philosophical transformation in how we understand and address disease. By enabling early, accurate, and automated diagnosis of periodontal disease, deep learning is playing a vital role in improving oral healthcare outcomes.

With its visionary project on periodontal diagnosis through deep learning, Para Projects is not only helping students fulfill academic goals—it’s nurturing the next generation of tech-enabled healthcare changemakers.

Are you ready to engineer solutions that impact lives? Explore this and many more cutting-edge medical and AI-based projects at https://paraprojects.in. Let Para Projects be your partner in building technology that heals.


MCA in AI: High-Paying Job Roles You Can Aim For

Artificial Intelligence (AI) is revolutionizing industries worldwide, creating exciting and lucrative career opportunities for professionals with the right skills. If you’re pursuing an MCA (Master of Computer Applications) with a specialization in AI, you are on a promising path to some of the highest-paying tech jobs.

Here’s a look at some of the top AI-related job roles you can aim for after completing your MCA in AI:

1. AI Engineer

Average Salary: $100,000 - $150,000 per year

Role Overview: AI Engineers develop and deploy AI models, machine learning algorithms, and deep learning systems. They work on projects like chatbots, image recognition, and AI-driven automation.

Key Skills Required: Machine learning, deep learning, Python, TensorFlow, PyTorch, NLP

2. Machine Learning Engineer

Average Salary: $110,000 - $160,000 per year

Role Overview: Machine Learning Engineers build and optimize algorithms that allow machines to learn from data. They work with big data, predictive analytics, and recommendation systems.

Key Skills Required: Python, R, NumPy, Pandas, Scikit-learn, cloud computing

3. Data Scientist

Average Salary: $120,000 - $170,000 per year

Role Overview: Data Scientists analyze large datasets to extract insights and build predictive models. They help businesses make data-driven decisions using AI and ML techniques.

Key Skills Required: Data analysis, statistics, SQL, Python, AI frameworks

4. Computer Vision Engineer

Average Salary: $100,000 - $140,000 per year

Role Overview: These professionals work on AI systems that interpret visual data, such as facial recognition, object detection, and autonomous vehicles.

Key Skills Required: OpenCV, deep learning, image processing, TensorFlow, Keras

5. Natural Language Processing (NLP) Engineer

Average Salary: $110,000 - $150,000 per year

Role Overview: NLP Engineers specialize in building AI models that understand and process human language. They work on virtual assistants, voice recognition, and sentiment analysis.

Key Skills Required: NLP techniques, Python, Hugging Face, spaCy, GPT models

6. AI Research Scientist

Average Salary: $130,000 - $200,000 per year

Role Overview: AI Research Scientists develop new AI algorithms and conduct cutting-edge research in machine learning, robotics, and neural networks.

Key Skills Required: Advanced mathematics, deep learning, AI research, academic writing

7. Robotics Engineer (AI-Based Automation)

Average Salary: $100,000 - $140,000 per year

Role Overview: Robotics Engineers design and program intelligent robots for industrial automation, healthcare, and autonomous vehicles.

Key Skills Required: Robotics, AI, Python, MATLAB, ROS (Robot Operating System)

8. AI Product Manager

Average Salary: $120,000 - $180,000 per year

Role Overview: AI Product Managers oversee the development and deployment of AI-powered products. They work at the intersection of business and technology.

Key Skills Required: AI knowledge, project management, business strategy, communication

Final Thoughts

An MCA in AI equips you with specialized technical knowledge, making you eligible for some of the most sought-after jobs in the AI industry. By gaining hands-on experience in machine learning, deep learning, NLP, and big data analytics, you can land high-paying roles in top tech companies, startups, and research institutions.

If you’re looking to maximize your career potential, staying updated with AI advancements, building real-world projects, and obtaining industry certifications can give you a competitive edge.


Turbocollage code


Definition of various parameters of audio.


How to select an entire column in visual block mode: how do you select a whole column in visual block mode?

.Net pet sales system VS development of SQL Server database web structure c programming computer web page source code project

R language ggplot2 visualization: visualize the seasonal map of time series. Using the seasonal map, you can compare the data differences of the same month in different years, or of the same time series (year / month / week, etc.).

Seaborn uses the boxplot function to visualize the box chart (use the color palette to customize the color of the box chart, with sns.set_palette setting the global palette).

What is the relationship between principal component analysis and exploratory factor analysis (PCA and EFA)?

Matplotlib visualizes the line diagram connecting pairs of data points, displaying only lines but not data points (paired line plot with Matplotlib but without points).

CSDN paid column writing perception and growth path, and on the creator synergy of learning member model.

Swagger enterprise mainstream interface management and testing tools.

Detailed explanation of OpenCV function usage (key chapter 1) 41 ~ 50, including code examples, which can be run directly.

OpenCV function details 51 ~ 60, including code examples, which can be run directly.

OpenCV function detailed explanation final 61 ~ 67, including code examples, which can be run directly.


Which Type of Apps can you Build in Python?

For many years, technology has been going through complete makeovers and this has been changing our lives. As a result, smartphones, computer systems, Artificial Intelligence and other technologies have made our lives easier. To utilize these technologies, we have access to different programs and applications that are developed using different programming languages. Python is one such powerful programming language.

Python is a very popular programming language among developers. It consistently ranks among the top programming languages, alongside long-established languages such as Java. It is also used in application development, including mobile apps, and has become popular in the developer community.

Let’s learn why Python app development is popular and what types of applications can be developed using this programming language. But first, let us learn about Python itself.

What Exactly Is Python?

So what is Python, you ask? Python is a high-level, object-oriented programming language with powerful built-in data structures. These data structures, combined with dynamic typing and dynamic binding, make app development hassle-free. Python also acts as a glue language that connects existing components.

It is well known among developers for its easy-to-learn syntax, which reduces the cost of program maintenance. It also supports modules and packages, which promote code reusability. On top of that, it runs on many platforms, which makes it a good fit for cross-platform app development.

Saying that Python is quite popular would not suffice. Let us have a look at the features that play a vital role in skyrocketing its popularity.

Why Is Python So Popular?

IDLE

IDLE stands for Integrated Development and Learning Environment. It is Python’s own basic development environment and is included with most Python installations. Its interactive shell executes single statements as you type them, and its editor lets developers write and run complete Python scripts.

Django

Django is an open-source Python web framework that streamlines web app development by providing many built-in features. These features help developers build complex applications efficiently.

Understandable & Readable Code

One of Python’s most important features is its syntax. Its rules let developers express concepts in fewer lines of code than many other languages require. It makes complex things look simple, which is why Python is considered suitable for beginners to learn and understand.

Apart from that, Python places a strong emphasis on code readability. It uses English keywords such as “and”, “or” and “not” where many languages use punctuation, and it uses indentation instead of braces. All these factors make Python well suited to app development, and a readable codebase also makes the software easier to update.

It Is Faster

In Python, programs are run directly by the interpreter, with no separate compilation step. This makes it easy to write code and get immediate feedback, so you can finish and run your programs quickly. It also improves time-to-market.

High Compatibility

Python runs on almost every major operating system, including Windows, macOS, and Linux, and apps can be packaged for Android and iOS with third-party tools such as Kivy or BeeWare. The same code can run across these platforms without recompilation.

Test-Driven Development

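With Python, you can quickly create prototypes of software applications. Coding and testing can go hand in hand thanks to test-driven development (TDD), in which tests are written before the code they check. Python’s built-in unittest module and third-party tools such as pytest support this workflow.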
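Here is a minimal sketch of the TDD workflow with the built-in unittest module. The tests describe the expected behavior first, and the function is then written to make them pass. The is_valid_username function and its rules (3-16 characters, letters, digits or underscores) are hypothetical and chosen only for illustration.

```python
import unittest

def is_valid_username(name: str) -> bool:
    """Hypothetical function under test: 3-16 chars, letters, digits or underscores."""
    return 3 <= len(name) <= 16 and all(c.isalnum() or c == "_" for c in name)

class TestUsername(unittest.TestCase):
    # In TDD these tests are written first, fail, and then drive the implementation
    def test_accepts_simple_name(self):
        self.assertTrue(is_valid_username("ada_1815"))

    def test_rejects_short_name(self):
        self.assertFalse(is_valid_username("ab"))

    def test_rejects_spaces(self):
        self.assertFalse(is_valid_username("ada lovelace"))
```

Saved in a file such as test_username.py (a hypothetical name), the suite runs with python -m unittest, which discovers files whose names start with "test".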

Powerful Library

Python has a robust set of libraries that gives it an edge over many other languages. Its standard library offers modules covering a wide range of requirements, and each module lets you add functionality without writing it from scratch.
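As a small illustration of the standard library at work, the sketch below counts words with collections.Counter and serializes the result with json. It needs no third-party packages. The top_words function is a hypothetical example, not something from the original post.

```python
import json
from collections import Counter

def top_words(text: str, n: int = 3) -> str:
    """Return the n most common words as a JSON string (hypothetical helper)."""
    # Strip simple punctuation and normalize case before counting
    words = [w.strip(".,!?").lower() for w in text.split()]
    counts = Counter(w for w in words if w)
    return json.dumps(counts.most_common(n))

print(top_words("the cat and the hat and the bat", 2))
```

Counting, sorting by frequency and JSON encoding each take one call here, which is the kind of reuse the standard library is meant to provide.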

Supports Big Data

Big data is one emerging field that Python supports well. Python has several libraries for working with big data, and big data projects are often easier to code in Python than in other programming languages.

Supportive Community

Another important reason for Python’s popularity is its community support. Python has a very active community of developers and subject-matter experts (SMEs) that provides guides, videos, and learning material for a better understanding of the language.

What Type of Apps Can You Build Using Python?

Blockchain Applications

Blockchain is one of the most significant technological advances of recent years, and it has changed how many apps function and perform. Building blockchain features into apps used to be a challenging task, but Python has made this simpler.

Blockchain app development is tricky, but Python’s simplicity makes it less complex. Python offers a range of frameworks for handling HTTP requests, which developers can use to connect to a blockchain over the network and create endpoints. Python frameworks also play an important role in creating a decentralized network of scripts across multiple machines.
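To make the idea concrete, here is a toy sketch of the data structure at the heart of a blockchain. Each block stores the SHA-256 hash of the previous block, so tampering with any block breaks the chain. It uses only the standard library (hashlib and json). The block layout is a simplified assumption and leaves out networking, consensus and proof-of-work entirely.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """SHA-256 of a canonical JSON encoding, so key order never changes the digest."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()

def add_block(chain: list, data: str) -> None:
    """Append a block that records the hash of the block before it."""
    prev = block_hash(chain[-1]) if chain else "0" * 64  # the first block has no predecessor
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})

def is_valid(chain: list) -> bool:
    """Check that every block still points at the current hash of its predecessor."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))
```

Editing an earlier block changes its hash, so the next block's prev_hash no longer matches and is_valid returns False.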

Command-Line Applications

Command-line apps are programs that are run and controlled from a command line (terminal). Like console apps, they work without a GUI, and being able to evaluate code interactively helps developers find new ways to use the command line.

Python offers a Read-Eval-Print Loop, popularly known as the REPL. It lets developers type an expression, see its result immediately, and experiment before building the code into an app. Furthermore, Python’s libraries, such as argparse in the standard library, help with other command-line tasks like parsing arguments.
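The sketch below is an example of a small command-line app. It uses argparse from the standard library to read a positional argument and an optional flag. The temperature-conversion task and the --precision flag are hypothetical and keep the example short.

```python
import argparse

def build_parser() -> argparse.ArgumentParser:
    """Describe the hypothetical CLI: one positional value and one optional flag."""
    parser = argparse.ArgumentParser(description="Convert a temperature to Fahrenheit.")
    parser.add_argument("celsius", type=float, help="temperature in degrees Celsius")
    parser.add_argument("--precision", type=int, default=1, help="decimal places to show")
    return parser

def main(argv=None) -> str:
    """Parse arguments (from sys.argv when argv is None), print and return the result."""
    args = build_parser().parse_args(argv)
    fahrenheit = args.celsius * 9 / 5 + 32
    result = f"{fahrenheit:.{args.precision}f}"
    print(result)
    return result
```

To run it from a terminal, add an `if __name__ == "__main__": main()` guard at the bottom and call, for example, python convert.py 100 (convert.py is a hypothetical file name). argparse also generates a --help message automatically.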

Audio & Video Applications

Python can be used to create music apps and other audio and video applications. Libraries like PyDub (for audio) and OpenCV (for images and video) help developers build these applications. The video-sharing platform YouTube was built largely with Python, which shows that the language can deliver powerful applications with high performance.

Game Development

Well-known games such as Battlefield 2 and EVE Online use Python for parts of their game code. Python gives developers the facilities and environment to rapidly create a game prototype that can be tested in real time. On top of that, it is used to create game design tools that assist with many tasks in the game development process.

System Administration Applications

Python is well suited to building complex system administration applications because its os module lets developers interact with the operating system the program is running on. Through this interface, operating system operations such as working with files, directories, processes and environment variables are all accessible from Python.
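Here is a minimal sketch of this kind of task. The function below uses os.scandir and shutil.disk_usage from the standard library to report how many files a directory contains, their total size, and the free disk space. The function name and report fields are illustrative assumptions.

```python
import os
import shutil

def summarize_directory(path: str) -> dict:
    """Report regular-file count, their total size in bytes, and free disk space for `path`."""
    with os.scandir(path) as entries:
        files = [e for e in entries if e.is_file()]  # skips subdirectories
    return {
        "files": len(files),
        "bytes": sum(e.stat().st_size for e in files),
        "free_bytes": shutil.disk_usage(path).free,
    }

print(summarize_directory("."))
```

A script like this can run on a schedule and raise an alert when free_bytes drops below a chosen limit.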

Machine Learning Applications

After blockchain and big data, the most exciting trend of this decade is machine learning, and developers can create ML apps using Python libraries. Libraries such as pandas and scikit-learn are free and open source under the permissive BSD license, so they can also be used in commercial projects.

Natural Language Processing (NLP) is a branch of artificial intelligence, closely tied to machine learning, that enables a system to analyze, manipulate, and understand human language. Developers can create NLP apps using Python libraries.

Business Applications

Python is quite versatile when it comes to app development. It also supports ERP, eCommerce and other enterprise-grade app solutions. Odoo, for example, is business management software written in Python that offers a huge range of business apps. Many enterprises have adopted Python for their business solutions.

Python offers a variety of development environments for creating business apps. PyCharm is one of the best IDEs for Python and installs quickly on Windows, macOS, Linux and other platforms. Developers can easily use this IDE to create avant-garde business apps.

Final Words

In short, Python app development is fast and very flexible. Developers love this programming language because of the rich set of features it offers, and its versatility makes it possible to create many types of apps. If you have a business idea and are looking for Python experts, we at DRC Systems would love to lend a helping hand. Hire dedicated Python developers and get in touch with us for all your Python needs!


Driver Drowsiness Detection using Raspberry Pi with Open CV and Python


Driver Drowsiness Detection System | Driver Drowsiness Detection using Raspberry Pi and ML | Driver Drowsiness Detection using Raspberry Pi with OpenCV and Python.

If you want to purchase the full project or software code, mail us at [email protected] with the title name along with the YouTube video link. Project changes can also be made according to student requirements.

http://svsembedded.com/

https://www.svskits.in/

M1: +91 9491535690

M2: +91 7842358459

1. Driver Drowsiness Detection with OpenCV

2. Vehicle Accident Prevention Using Eye Blink, Alcohol, MEMS and Obstacle Sensors

3. Raspberry Pi: Driver drowsiness detection with OpenCV and Python

4. Eye Blink + Alcohol + MEMS + TEMPERATURE + Arduino uno + GSM + GPS + Google Map Location

5. Realtime Drowsiness and Yawn Detection using Python in Raspberry Pi or any other PC

6. Driver drowsiness detection using raspberry pi and web cam

7. Accident Identification and alerting system using raspberry pi

8. Automatic Vehicle Accident Alert System using Raspberry Pi

9. Drowsy Driver Detector Final year project using raspberry pi 3, opencv & python

10. raspberry drowsiness detection with opencv

11. Driver Drowsiness Detection using OpenCV and Raspberry Pi 3

12. Driver Drowsiness Detection System using Raspberry Pi 3 & Python

13. Driver's Drowsiness Detection Project | GRIET

14. Drowsiness detection using Raspberry Pi with Open CV python

15. drowsiness or eye detection in opencv python raspberry pi

16. Real time Driver drowsiness and alcohol detection using raspberry pi || Project centre in Bangalore

17. Driver Sleep Detection Using Raspberry PI

18. Drowsy Driver Alert System on Raspberry Pi

19. Vehicle Driver Drowsiness detection using ECG signal

20. driver drowsiness detection using IOT

21. Real Time Driver Drowsiness Detection System using Raspberry pi

22. Driver Drowsiness Detector using Raspberry Pi and Open CV

23. Drowsiness detection using open cv

24. Driver Drowsiness Detection Using Beagle bone Black

25. DROWSINESS DETECTION USING OpenCV

26. Drowsiness Detection On Raspberry Webcam Using Python

27. Development of IOT-based Anti-drowsy Driving Alert System

28. Drowsy Alert System Embedded Project on Raspberry Pi

29. Face recognition based security system and driver drowsiness detection using raspberry Pi

30. face recognition based vehicle start using raspberry pi 3+ driver drowsiness detection
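The video description does not spell out its detection method. A widely used approach in Raspberry Pi and OpenCV drowsiness projects is the eye aspect ratio (EAR), EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). It is computed from six landmarks around each eye, often taken from dlib's 68-point facial landmark model. The ratio drops sharply when the eye closes, and an alarm fires if it stays low for several consecutive frames. The sketch below shows only the arithmetic. The 0.25 threshold and 20-frame window are assumed starting values that usually need tuning, not figures from this project.

```python
import math

def eye_aspect_ratio(eye):
    """EAR from six (x, y) landmarks p1..p6 ordered around the eye:
    p1/p4 are the corners, p2/p3 the upper lid, p6/p5 the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def update_drowsy_state(ear, counter, threshold=0.25, frames_needed=20):
    """Count consecutive low-EAR frames and return (new_counter, alarm).
    threshold and frames_needed are assumed defaults; tune per camera and driver."""
    counter = counter + 1 if ear < threshold else 0
    return counter, counter >= frames_needed
```

In a live loop, the EAR of both eyes is usually averaged per frame and passed to update_drowsy_state. The alarm output would then drive a buzzer or speaker.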


If I haven’t already written about this or announced it yet, I’d like to start this post by announcing that I’ll be heading back to Singapore some time next month for about 2.5 weeks. The return flight ticket has already been booked for a mere £500 (it was ~£900-£1100 when I checked last November/December!), but I have to deal with a 7-hour transit at Doha airport. That’s fine, though, because I’ll have my laptop with me and I’ll be busy using the 40 hours of travel (both ways combined) to write my dissertation report, which is also the topic of this post. Efficient time management much?

The weather is starting to get cooler this week, but I don’t think it’ll actually turn cold until mid-August or early September. I’ll still be coming back here for a couple of months to carry on with my job hunt. So far, I’ve sent out my CV to various parts of the world, like Denmark, Japan, Germany and even Thailand. The process of getting a work visa is hard, but I’m somewhat confident that with my newfound technical skills and expertise, I should be able to get what I want.

Weekly spending:

14th Jul Sun Groceries: £5 Total spending: £2538.85

15th Jul Mon Total spending: £2538.85

16th Jul Tue Groceries: £12 Total spending: £2550.85

17th Jul Wed Total spending: £2550.85

18th Jul Thu Lunch: £4.70 Dinner: £10.40 Total spending: £2565.95

19th Jul Fri Groceries: £6 Total spending: £2571.95

20th Jul Sat Dinner: £9 Total spending: £2580.95

I actually wanted to write a short introduction about Neural Networks (which is highly related to my dissertation project), but at the moment, I don’t understand it enough to write much about it. In fact, I don’t even think I’m qualified to write about it at the moment, and I’m not sure how long it’ll take for me to understand it to a “passable” level.

Anyway, the main task of my dissertation project, and what I’ve been working on for the past month and will be for the coming 1.5 months, is to implement an algorithm that can detect and draw bounding boxes around all the insects in a given image. The insects in these images can be sparsely distributed or densely packed together. For the former, it’s not too hard to detect them with the right parameters and pre-processing, though of course it’s not as simple as just using template matching either. For the latter, I have absolutely no idea how to approach the problem.
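For the sparse case, here is a minimal sketch of the "draw bounding boxes" step. It assumes a binary mask where insect pixels are True, for example after thresholding. It labels each connected blob with a breadth-first flood fill and takes the blob's extent as its box. The min_area filter is my own assumption for discarding specks. It is not part of the original project. OpenCV's cv2.connectedComponentsWithStats, or cv2.findContours with cv2.boundingRect, do the same job much faster.

```python
from collections import deque

import numpy as np

def bounding_boxes(mask: np.ndarray, min_area: int = 1):
    """Label 4-connected foreground regions in a binary mask and return one
    (x_min, y_min, x_max, y_max) box per region with at least `min_area` pixels."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or seen[sy, sx]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel
            queue = deque([(sy, sx)])
            seen[sy, sx] = True
            ys, xs = [], []
            while queue:
                y, x = queue.popleft()
                ys.append(y)
                xs.append(x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(ys) >= min_area:  # assumed filter for noise specks
                boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes
```

Raising min_area is one simple lever against small false positives. It will not separate insects that touch each other, which is exactly the hard, densely packed case.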

To give a better idea: for the image below, it’s easy to make the computer detect exactly where the insects are, because it’s so obvious. A simple edge-and-contour detection algorithm gets me an accuracy of >50%, with a few false positives here and there on the wooden frame and on the labels. Just last week, I finally found a way to measure the accuracy of the algorithm. Now, the challenge for me is to push it up to 90% by finding the right hyperparameters (I hope I’m using the term correctly here).
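The post does not say how the accuracy is measured. One common approach for detection tasks compares predicted boxes with hand-labeled ones using intersection over union (IoU). It then counts true positives, false positives and missed insects. This is an assumption, not necessarily the method used in this project. The sketch below uses inclusive pixel coordinates (x_min, y_min, x_max, y_max) and a greedy matcher with a 0.5 IoU threshold. Both are illustrative choices.

```python
def iou(a, b):
    """Intersection over union of two inclusive (x_min, y_min, x_max, y_max) boxes."""
    ix = min(a[2], b[2]) - max(a[0], b[0]) + 1
    iy = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(ix, 0) * max(iy, 0)  # zero when the boxes do not overlap

    def area(r):
        return (r[2] - r[0] + 1) * (r[3] - r[1] + 1)

    return inter / (area(a) + area(b) - inter)

def match_detections(predicted, ground_truth, threshold=0.5):
    """Greedily match each ground-truth box to its best unused prediction.
    Returns (true_positives, false_positives, false_negatives)."""
    unused = list(predicted)
    tp = 0
    for gt in ground_truth:
        best = max(unused, key=lambda p: iou(p, gt), default=None)
        if best is not None and iou(best, gt) >= threshold:
            unused.remove(best)
            tp += 1
    return tp, len(unused), len(ground_truth) - tp
```

From these counts, precision = TP / (TP + FP) and recall = TP / (TP + FN). False positives on the wooden frame and labels would show up as lower precision.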

Of course, I wish my dissertation were that easy. The same algorithm that works on the above image will not work on the images below. The caveat is that I’m only allowed to use automatic methods, which means I can’t just make a GUI to get the results I want. So far, I’ve only just got all my packages set up, and I’ve got a few algorithms on my list to try. Implementing them, however, is another problem.

Either way, I’m sorry that I cannot even provide a simple explanation of how Neural Networks work at the moment, but I can give a very brief overview on the image classification/Computer Vision process. This is by no means a detailed explanation of the overall process, but rather, what I understand from the process off the top of my head. Computer Vision is a really vast topic and there are so many things that you can explore at every step of the way. Hell, I can even spend 3 months on a research topic about the best pre-processing methods and write a 30-50 page report about that.

Pre-processing

In any image analysis/computer vision project, the first step is to pre-process the image. There are a lot of things that you should do to an image first, like resizing it (simply because a 256×256 image will process much faster than a 3200×3200 image), removing the noise (usually, this means smoothing the image using some kind of blurring filter), and segmentation (the simplest being thresholding, to create a ‘mask’).

Typically, this step isn’t very hard. You don’t even need to write any code at this stage: even using Adobe Photoshop or Microsoft Paint to crop the image or recolor some parts already counts as ‘pre-processing’. I tend to use ImageJ to try different filters on the image first and check the result, and then write Python code that reproduces the same results.
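Here is a rough NumPy sketch of the three pre-processing steps described above. It downscales by block averaging, smooths with a 3×3 mean (box) filter, and thresholds into a mask. It assumes a grayscale image in which the insects are darker than the background. The threshold value is a placeholder to tune. In OpenCV, the equivalent calls would be cv2.resize, cv2.blur (or cv2.GaussianBlur) and cv2.threshold.

```python
import numpy as np

def downscale(image: np.ndarray, factor: int) -> np.ndarray:
    """Shrink by averaging non-overlapping factor x factor blocks (ragged edges are cropped)."""
    h, w = image.shape
    h, w = h - h % factor, w - w % factor
    blocks = image[:h, :w].astype(float).reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def box_blur3(image: np.ndarray) -> np.ndarray:
    """3x3 mean filter; border pixels are repeated so the output keeps its size."""
    padded = np.pad(image.astype(float), 1, mode="edge")
    h, w = image.shape
    return sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0

def threshold_mask(image: np.ndarray, t: float) -> np.ndarray:
    """Foreground mask: pixels darker than t (assumes insects are darker than the background)."""
    return image < t
```

Blurring before thresholding trims the ragged corners of each blob and suppresses isolated noisy pixels. Too strong a blur merges nearby insects, so the kernel size is one more parameter to tune.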

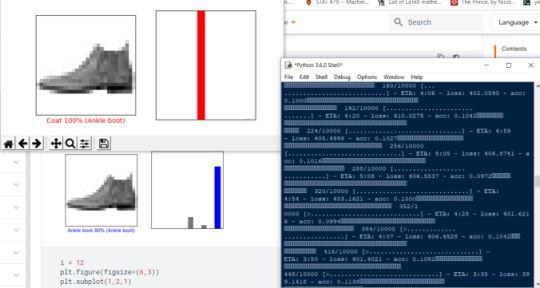

Algorithms

This part is the reason why I’m posting this so late: it takes FOREVER to train the CNN model, but I think it’s important to include it, since it’s the whole point of this post. By far, the gold standard for any image classification/detection problem is the use of Neural Networks, which I’m trying to implement in my project. (I’m pleased to say that after many months of getting stuck in the installation process, I finally got TensorFlow and Keras up and running in Python. You see, I’d been using Python 3.7 all this while, and it wasn’t compatible with those packages for some reason. Then I downloaded Python 3.5, and while I could make TensorFlow run, I had some problems with OpenCV. I was stuck there until just yesterday, when I downloaded Python 3.6, moved all my packages over and, lo and behold, it works!)

If you’ve been following the news recently, there’s this “FaceApp” application going around that makes your face look older than it currently is. The magic behind it is something called “Generative Adversarial Networks” (GANs), which are a type of neural network. Of course, I’m not using GANs in my project, so there’s no need for me to find out more about them yet. Anyway, the key point here is that Neural Networks are, like, the hottest thing in data science right now. In the last 5 years or so, almost every image classification problem has first been approached with some form of neural network, and the results are very promising – typically around 85-95% accuracy.

Anyway, I copy-pasted the code from the TensorFlow tutorial website and trained it on a built-in sample dataset. The training took ~5 minutes (it may be faster if I just ran it from the console) and the result was…wrong. It classified the image as a Coat with 100% probability, when it is actually an Ankle Boot. If you are interested in going through the whole process yourself, you are welcome to try your luck at https://www.tensorflow.org/tutorials/keras/basic_classification.
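For anyone wondering how a model can be “100% sure” and still wrong: the network outputs one score per class, a softmax turns those scores into probabilities, and the prediction is simply the class with the highest probability. The ten class names below are the Fashion-MNIST labels used in that tutorial; the scores are made-up numbers for illustration, not output from my model.

```python
import math

# Fashion-MNIST label names, in index order 0-9.
CLASS_NAMES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (shift by max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits):
    """Return (class name, probability) for the highest-probability class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs[best]


# Hypothetical scores in which "Coat" (index 4) dominates.
logits = [0.1, -1.0, 0.5, 0.2, 12.0, -0.3, 1.0, 0.0, -0.5, 2.0]
name, p = predict(logits)
true_label = "Ankle boot"
print(name, round(p, 4), "correct" if name == true_label else "wrong")
```

A probability that rounds to 100% only says the model is confident, not that it is right; confidence and correctness are separate things.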

BUT, as my professor told me, the problem with these deep learning techniques is that the “hard part” – the intermediate step of actually processing the image and applying the algorithm to it – has already been done for you by the experts. These days, you just need to install the packages, feed the correct input to the pre-written algorithm, train the model (which is automatic), and finally get the output. Then you just check whether the output is correct and build some sort of truth table. Unfortunately, for a graded project, it’s hard to give good marks for this because you are not actually “thinking” or coming up with your own solutions, but just piggy-backing on the work of others. There’s no merit in just downloading images and packages!

Computer Vision is something that I’m really interested in, but unfortunately, I did not have the chance to take up a module on this in my undergraduate or postgraduate education – other than a small, small part in my Biomedical Imaging module about simple thresholding. Everything that I have learned about this topic is purely from online sources, A LOT OF TRIAL AND ERROR, and a bit of luck (but I’ve been very lucky with my results so far). On the other hand, seeing that I didn’t do too well for my signals and controls modules, the nitty-gritty details in Computer Vision might just be too maths-intensive for me to comprehend.

Last but not least, although I’m still stuck on Andrew Ng’s Machine Learning course on Coursera, I think I managed to learn some similar content in my Database Systems module, which takes a more theoretical approach rather than focusing on the mathematical details. I highly recommend that anyone who has a month or so enrol in this course, because it’s perfect for absolute beginners – you just need the basic discipline and time to get through it, especially if you are bad at maths.

Results

Finally, the last part of any image classification problem is evaluating the classifier – that is, how well or how poorly the algorithm performed. At first, I thought it would be something as simple as building a truth table by counting the algorithm-generated matches and checking whether they match the ground-truth rectangles, but it was so much more complicated than I assumed that it took me 2 weeks to figure it out!

Even at this step, there is no one fixed way to measure the accuracy of the algorithm – it all depends on the images you are dealing with, e.g. how many samples there are in each image. Say you have a dataset of 50 cat images and 50 dog images, where each image contains only 1 cat or 1 dog.

You have an algorithm that determines whether a cat is present in the image. You apply your algorithm and get four groups: cat images correctly identified as cat (true positives), cat images incorrectly identified as not cat (false negatives), non-cat images incorrectly identified as cat (false positives) and non-cat images correctly identified as not cat (true negatives). Okay, this is still a little confusing to me, even though I have been dealing with questions like this every year since polytechnic, but I think I got it right (pardon me if I made a mistake – how shameful!). Anyway, you count up all these numbers and combine them into a final value from 0 to 1 that tells you how good your model is. In the best-case scenario, all of your 50 cat images are correctly determined as cat images, and all of your 50 dog images are correctly determined as not-cat images: TP = 50, FP = 0, TN = 50, FN = 0.

You can then find the accuracy using the simple formula (TP + TN) / (TP + FP + TN + FN), which in this case gives 1.
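The counting and the accuracy formula fit in a short pure-Python sketch. The label strings are placeholders I picked; “positive” is whatever class the detector is looking for (here, cat), and the second prediction list is an invented imperfect run for comparison.

```python
def confusion_counts(y_true, y_pred, positive="cat"):
    """Count (TP, FP, TN, FN) for one 'positive' class in a binary task."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1          # cat called cat
        elif p == positive:
            fp += 1          # dog called cat
        elif t == positive:
            fn += 1          # cat missed
        else:
            tn += 1          # dog called not cat
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """(TP + TN) / (TP + FP + TN + FN)."""
    return (tp + tn) / (tp + fp + tn + fn)


y_true = ["cat"] * 50 + ["dog"] * 50

# Best case from the text: every image classified correctly.
perfect = ["cat"] * 50 + ["not cat"] * 50
print(confusion_counts(y_true, perfect), accuracy(*confusion_counts(y_true, perfect)))

# Hypothetical imperfect run: 5 cats missed, 3 dogs mistaken for cats.
imperfect = ["cat"] * 45 + ["not cat"] * 5 + ["cat"] * 3 + ["not cat"] * 47
print(confusion_counts(y_true, imperfect), accuracy(*confusion_counts(y_true, imperfect)))
```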

In my case, it’s not so simple because I have many different kinds of boxes in one image – how do I calculate the accuracy of my algorithm?

The quick answer is that I used something called the “Jaccard Index” (also known as Intersection over Union, or IoU) to measure how much each detected box overlaps each ground-truth box, and from there, got the number of True Positives, False Positives and False Negatives (True Negatives are not taken into account because there is simply no way to count them, unless perhaps you work at the pixel level). Then, I calculated the Precision and Recall for the algorithm and finally the F1 score (the harmonic mean of precision and recall), which measures how “good” my algorithm is.
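Here is a minimal sketch of that kind of evaluation, assuming axis-aligned boxes given as (x1, y1, x2, y2) and an IoU threshold of 0.5 (a common convention, not necessarily the value that suits every dataset). The greedy one-to-one matching is one simple strategy among several; detection benchmarks usually also sort predictions by confidence before matching. The example boxes are invented.

```python
def iou(a, b):
    """Jaccard index (intersection over union) of two boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_boxes(predicted, ground_truth, thresh=0.5):
    """Greedily match each predicted box to the unused ground-truth box with the highest IoU.

    Returns (TP, FP, FN); unmatched predictions are FP, unmatched ground truths are FN.
    """
    used = set()
    tp = fp = 0
    for p in predicted:
        best_j, best_iou = None, thresh
        for j, g in enumerate(ground_truth):
            if j in used:
                continue
            score = iou(p, g)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is None:
            fp += 1
        else:
            used.add(best_j)
            tp += 1
    fn = len(ground_truth) - len(used)
    return tp, fp, fn


def precision_recall_f1(tp, fp, fn):
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), F1 = their harmonic mean."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
predicted = [(1, 1, 11, 11), (21, 19, 31, 29), (60, 60, 70, 70)]
counts = match_boxes(predicted, ground_truth)
print(counts, precision_recall_f1(*counts))
```

Here two predictions overlap a ground-truth box well (IoU about 0.68), one prediction hits nothing (FP) and one ground-truth box is never found (FN).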

I’m going to stop here because I really didn’t think I’d be writing about something so technical, and besides, I have better uses for my time, like ACTUALLY working on my project instead of writing a blog post about it. On the side, I’m also watching 1 or 2 YouTube lessons on Neural Networks every day, and I highly recommend them as an advanced course after taking Andrew Ng’s Machine Learning course.


Signing off, Eugene Goh

1 YEAR IN CARDIFF – WEEK 43 – PROJECT

If I haven't already written about this or announced it yet, then I'd like to start this post by announcing that I'll be heading back to Singapore some time next month for about 2.5 weeks.


Computer Vision Software Market Forecast by 2018-2025: QY Research

This recently published report examines the global Computer Vision Software market over a 7-year forecast period, i.e. between 2018 and 2025. The report highlights the accomplishments and opportunities that lie in the market throughout the forecast period. It offers thorough information about the overview and scope of the global Computer Vision Software market along with its drivers, restraints, and trends. It also classifies the market into segments by type, application, and product. In short, this report comprises all the necessary details of the global Computer Vision Software market, such as value/volume data, marketing strategies, and expert views. Comprehensive information about distribution channels such as suppliers, dealers, wholesalers, manufacturers, distributors, and consumers is also given in this report.

The report presents statistical data in the form of tables, charts, and infographics to assess the market, its growth and development, and the market trends of the global Computer Vision Software market during the forecast period. QY Research has used a framework of primary and secondary research to make this report a foolproof one.

Get a complete, professional sample PDF of the global market report at http://www.qyresearchglobal.com/goods-1876296.html

The global Computer Vision Software market research report can be used by the following groups of people:

Distributors, dealers, suppliers, and manufacturers

Journalists, school students, writers, universities, authors, and professors

Major service providers, huge corporates and industries

Existing and current market players, private firms, event managers and annual product launchers

Breakdown analysis of Global Computer Vision Software market research report:

Major competitors that lead the global Computer Vision Software market include:

Key Players:

Microsoft

AWS

OpenCV

Google

Sight Machine

Scikit-image

Clarifai

Ximilar

Hive

IBM

Alibaba

Sighthound

Product types:

On-Premises

Cloud Based

End-user/applications:

Large Enterprises

SMEs

QY Research offers a crystal-clear view of various sections such as segmental analysis, regional analysis, and product portfolios, followed by detailed information about key players and their merger and acquisition strategies.

In terms of region, this research report covers almost all the major regions across the globe, such as North America, Europe, South America, the Middle East and Africa, and Asia Pacific. The Europe and North America regions are anticipated to show upward growth in the years to come, while the Computer Vision Software market in the Asia Pacific region is likely to show remarkable growth during the forecast period. Cutting-edge technology and innovation are the most important traits of the North America region, and that’s why the US dominates the global market most of the time. The Computer Vision Software market in the South America region is also expected to grow in the near future.

Following are the objectives of the report on global Computer Vision Software market:

Major benefits and advanced factors that influence the global Computer Vision Software market

Future and present market trends that influence the growth rate and growth opportunities of the global Computer Vision Software market

The market share of the global Computer Vision Software market, supply chain analysis, business overview, gross revenue, and demand and supply ratio

New-market insights, investment return, export/import details, company profiles and feasibility study analysis of the global Computer Vision Software market

The maturity of trade and proliferation in global Computer Vision Software market

Finally, the global Computer Vision Software Market report is a valuable source of guidance for individuals and companies. One of the major reasons for providing a market attractiveness index is to help the target audience and clients identify market opportunities in the global Computer Vision Software market. Moreover, for a better understanding of the market, QY Research also provides a key to information about the various segments of the global Computer Vision Software market.

Contact US

QY Research, INC.

Tina

17890 Castleton, Suite 218,

City of Industry, CA – 91748

USA: +1 626 295 2442


Web: www.qyresearchglobal.com


Real-Time Object Detection with Android using Server-Client Architecture

Hello, we're doing research for our final-year project. Our project subject is artificial intelligence and image processing.

Client: Camera frames will be sent to the server (API) in real time using only the phone's camera. We are going to use the client software only to send the frames and to draw the incoming data on the screen. We set how many frames per second are processed with a tickrate configured on the client. The camera must stay on continuously, and the boxes (faces) on the screen should be updated whenever new coordinates are received.
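The tickrate idea can be sketched as a small throttle that decides, frame by frame, whether the current camera frame should be sent. It is written in Python only to match the other examples on this page (the real client would be React Native); the class name `FrameThrottle` and the use of integer millisecond timestamps are assumptions for illustration.

```python
class FrameThrottle:
    """Let through at most `tickrate_hz` frames per second (hypothetical helper)."""

    def __init__(self, tickrate_hz: float):
        self.interval_ms = 1000.0 / tickrate_hz  # minimum gap between sent frames
        self.last_sent_ms = None

    def should_send(self, now_ms: int) -> bool:
        """Return True (and remember the time) if enough time has passed since the last sent frame."""
        if self.last_sent_ms is None or now_ms - self.last_sent_ms >= self.interval_ms:
            self.last_sent_ms = now_ms
            return True
        return False


# One second of a 30 fps camera, throttled to a tickrate of 5 frames per second.
throttle = FrameThrottle(tickrate_hz=5)
frame_times_ms = [i * 1000 // 30 for i in range(30)]
sent = [t for t in frame_times_ms if throttle.should_send(t)]
print(sent)
```

Dropping frames on the client keeps the server from being flooded and bounds the delay before boxes are redrawn.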

API:

All communications between the server and the client will be done with the help of an API.

Server: We want to perform all image processing on the server. The frames sent by the client will be processed here and the results converted to JSON format (face coordinates, body coordinates, etc.). The server will wait for a frame to arrive from the API and process it when it arrives.
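The server flow described above (frame in, JSON coordinates out) can be sketched with Python’s standard `json` and `base64` modules. This assumes the client sends a base64-encoded frame inside a JSON body; the field names (`frame_id`, `image`, `faces`, `x`, `y`, `w`, `h`) and the `detect_faces` stub are hypothetical, and in the real server the stub would call the OpenCV/TensorFlow detector.

```python
import base64
import json


def detect_faces(image_bytes: bytes):
    """Hypothetical stand-in for the real detector; returns (x, y, w, h) boxes."""
    return [(120, 80, 64, 64)] if image_bytes else []


def handle_request(body: str) -> str:
    """Decode one client request, run detection, and build the JSON reply."""
    request = json.loads(body)
    image_bytes = base64.b64decode(request["image"])
    boxes = detect_faces(image_bytes)
    reply = {
        # Echo the frame id so the client can ignore replies for stale frames.
        "frame_id": request["frame_id"],
        "faces": [{"x": x, "y": y, "w": w, "h": h} for (x, y, w, h) in boxes],
    }
    return json.dumps(reply)


# Example round trip with placeholder bytes instead of a real JPEG.
body = json.dumps({"frame_id": 42, "image": base64.b64encode(b"fake-jpeg").decode("ascii")})
print(handle_request(body))
```

JSON is a convenient choice here because both Python and JavaScript parse it natively; base64 inflates the image by about a third, so sending raw bytes over a binary channel is an option if bandwidth becomes the bottleneck.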

The Main Questions: In short, using the phone camera, we want to find faces by doing the image processing on the server and drawing the returned (Server > API > Client) coordinates on the screen.

We are using: Server: Python, Tensorflow, OpenCV, Client: ReactNative (Phone)?

1) In what format should the incoming and outgoing data be communicated? (JSON, XML, etc.)

2) What exactly should the server-client architecture look like? (Wireless communication)

3) Are there any similar projects?

4) Do you have any suggestions for solving this problem?

Thanks!

submitted by /u/D3ntrax from Software Development - methodologies, techniques, and tools. Covering Agile, RUP, Waterfall + more! https://ift.tt/2AkPkpf via IFTTT


Touchless interface as a new age UX approach or a communication method for a SaaS front end

This article describes some approaches and development examples for building a touchless user interface for SaaS, and shows how and where it can be applied.

What is a touchless interface?

The meaning of a touchless user interface is quite simple, and you probably encounter one on your own devices from time to time. Touchless UI in SaaS is usually used for biometrics, avatars, animojis, social media, or security. All touchless user interface solutions have always striven, and will keep striving, for a convenient, user-friendly interface. This is because we, as developers of IT products, always aim to save people time and make their work easy, understandable, convenient and fast. And today we live in the happy time when you can finally build a touchless SaaS UI that does not cost a lot of money and can be implemented in a fairly short time. It used to be extremely difficult and expensive; today it is much cheaper, mainly thanks to the development of open source.

The touchless interface is also good for hygiene reasons and is especially convenient in places with high traffic and large crowds, such as big cities, because it means people do not have to touch the same surfaces.

Touchless UI use cases for SaaS

There are 4 fundamentally different applications:

Retail. For example, retail businesses, shops, or any other location where you need to serve a client, advise them, and eventually make a sale.

Information projects. For example, kiosks at the airport or in other places that let a person get the necessary information about the city, tickets or flights. These can also be educational projects in museums or educational institutions, where a person walks up to the device, makes inquiries and receives information.

Contactless control systems for any device. Most often these are IoT projects, where a person interacts with a device and controls it without pressing buttons (i.e. “touchless”).

A separate very specific category is simulation training systems, where the task is to simulate a situation and teach a person how to act in it.

Over the past 3 years, we have gained experience in developing systems in all four categories, and in this article I will overview what solutions can be used for all types of such systems.

Features of touchless UI

Most of them are well known, and we now use them daily in our phones, intercoms, and other devices. But let's take a closer look.

Face Detection

One of the simplest and most common features is face detection. Detection is fundamentally different from recognition in that it only establishes that a person is present. So we can establish that a person is next to the device, but we do not establish their identity; that is, we do not recognize who they are by their face.

Naturally, this requires an always-on camera that continuously supplies a video stream, while the software continuously checks whether there is a person in the frame. Face detection does not need a particularly good camera: low or medium resolution is enough, and image quality matters little, since face detection works even on black-and-white (grayscale) images. To get usable pictures in the dark, use infrared illumination; this should be built into the camera itself.

Detecting intention

It is also good practice to track a person’s intentions. You should not react instantly to a person appearing in the frame, because they may just be passing by and not want to use the device at all. In one of our projects, we solved this very simply with a 2-second delay: if the person stays in the frame for more than 2 seconds after first being detected, we assume they have stopped and we can start interacting with them. By the way, in this project a video call to a call center was started after the delay, and we used Twilio for this.
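The 2-second rule can be sketched as a tiny state machine fed by a face detector’s per-frame yes/no output. This is an illustrative sketch, assuming detection runs on every processed frame and timestamps are in seconds; the class name `IntentDetector` is hypothetical.

```python
class IntentDetector:
    """Report 'engaged' only after a face has stayed in view for `dwell_s` seconds."""

    def __init__(self, dwell_s: float = 2.0):
        self.dwell_s = dwell_s
        self.first_seen = None  # time the current face first appeared

    def update(self, face_present: bool, now: float) -> bool:
        """Feed one detection result; return True once the person has lingered long enough."""
        if not face_present:
            self.first_seen = None  # person left the frame: reset the timer
            return False
        if self.first_seen is None:
            self.first_seen = now
        return now - self.first_seen >= self.dwell_s


# A passer-by visible for one second never triggers an interaction...
passer_by = IntentDetector(dwell_s=2.0)
print([passer_by.update(True, t) for t in (0.0, 0.5, 1.0)] + [passer_by.update(False, 1.5)])

# ...while someone who stops in front of the device does, once 2 seconds have passed.
visitor = IntentDetector(dwell_s=2.0)
print([visitor.update(True, i / 2) for i in range(7)])
```

When `update` first returns True, the application would start its interaction (in the project above, the video call).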

Line of sight, emotion, age, and gender recognition

You can go further and respond to other facial characteristics such as age and gender. There are already open-source projects that determine gender from a face fairly reliably and estimate the age range quite well; determining the exact age is impossible today. We are currently working on a retail project for stores where age and gender are used to personalize content. Other options are the direction of a person’s gaze and their emotions. In the open-source world there are already models that recognize human emotions and can quite easily be used as triggers for various actions. For example, depending on a person’s emotions, you can show different content, decide whether or not to show advertising, suggest different products, assess the quality of service, and so on.

At the moment, we are working on a project that determines the gaze direction of a person standing in front of a large monitor. We do this without VR technology or Microsoft Kinect, i.e. with only a video camera. With this, you can tie the mouse cursor to the direction of gaze, so the person moves the cursor on the screen with their eyes, and to click, they hold their gaze in place for a moment. This approach has long been used in virtual reality systems, but it is much more difficult to implement with only a video camera and no additional devices.

Face recognition

If it’s not enough to just detect a face and you need to determine a person’s identity, for example to identify the specific user who approached the device, then full recognition is needed. This task is much more complex and requires a server: doing it inside the application is unrealistic, because you need to keep a large database of people, and a local machine or mobile device will not cope with such a load.

Voice Recognition

Voice recognition is a bit more complicated, but it is now very interesting for many projects because it speeds up and simplifies interaction with software. The first question is: can this be implemented in the application itself (in our case, in JavaScript), or will you need a server that processes the voice and returns a response? Two options are possible here:

If you need a fixed set of standard commands, this is generally easy and an application is enough (no server is needed). Likewise, voice control with a relatively small set of specific commands can easily be done without a server, but you will need to train the neural network specifically on the commands needed in that project.

If you need a large number of specific words (for example, street names) or support for several languages, you will have to build your own server to process the voice and pay extra for the neural network. This is likely to be a big, complicated and expensive project. Therefore, it is more logical to use existing services such as Google or Azure. Of course, they cost money, but for a startup or a project at an early stage of development, this is much less risky.

Palm and finger gestures as a way to operate interfaces

In some cases, it may be convenient to control the program with hand gestures. There are already solutions that do this well enough: the models are trained and the recognition accuracy is high. Our favorite technology stack for implementing this is Python along with Keras + TensorFlow / Theano + OpenCV.

Body gesture recognition

A more exotic option is to control the product with whole-body gestures, using the arms or legs, or with postures that a person takes. This is unlikely to be useful for a classic program, but it can be widely used in simulation systems. We had a project tracking referees’ gestures for training soccer referees; in it we used virtual reality with HTC trackers, which let us determine both the person’s running speed and all of the referee’s gestures. For simple gestures, elementary geometry is sufficient. But if you need to distinguish a wide variety of complex poses and gestures, you will have to develop an AI model and then train it.
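As an example of “elementary geometry”, here is a check for a straight arm raised overhead, computed from three 2-D body keypoints. The input format (x, y) in image coordinates with y pointing down is an assumption (any pose estimator or tracker that gives joint positions would do), and the 160-degree straightness threshold is an illustrative value, not one taken from the referee project.

```python
import math


def angle_deg(a, b, c):
    """Angle at point b, in degrees, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    cos = max(-1.0, min(1.0, dot / norm))  # clamp to guard against rounding errors
    return math.degrees(math.acos(cos))


def arm_raised_straight(shoulder, elbow, wrist, min_angle=160.0):
    """True if the wrist is above the shoulder (smaller y) and the elbow is nearly straight."""
    return wrist[1] < shoulder[1] and angle_deg(shoulder, elbow, wrist) >= min_angle


print(arm_raised_straight((100, 200), (100, 150), (100, 100)))  # straight arm overhead
print(arm_raised_straight((100, 200), (150, 200), (150, 150)))  # raised but bent at 90 degrees
print(arm_raised_straight((100, 200), (100, 250), (100, 300)))  # arm hanging down
```

Rules like this are cheap and transparent, but each new gesture needs its own hand-tuned thresholds, which is why a trained model becomes worthwhile once the gesture set grows.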

Custom hardware

For all such projects, in 99% of cases a video camera is used, and it must be integrated into the device. The video stream needs to be processed correctly: either sent entirely to the server, processed on the device itself, or sent as individual frames only; for this, you often have to deal with the hardware. We work with a company that has extensive experience developing electronics of this kind and specializes in processing video data. Therefore, if your task requires both software and hardware, we can easily create a device from scratch or modify an existing one, and also develop all the necessary software.

Technologies we use for touchless user interface development

In most cases, all this will run on a tablet or phone with either iOS or Android. But it can also be any other device, most likely running Android, Windows, or a Unix-based operating system. In our projects, we have come across iOS devices, Android smartphones and tablets, and completely custom Unix devices.

React Native

To develop applications for all of these operating systems, we use React Native because, firstly, it is JavaScript, which many people know, and secondly, you can build the project for Android and iOS, and as a standalone application for Windows or Unix. We believe this is the best solution today because it lets you cover all operating systems with one codebase, it is much easier to find developers, and there are a huge number of components and libraries for it. For React Native, there is already an open-source solution for detecting a person in the frame. This is a simple task that does not require many resources, so no server is needed and everything can run in the application itself.

Twilio

We really love this service, and after using it in several projects we learned how to work with it effectively. When we develop a SaaS application that requires video calls, we definitely choose Twilio. For customer-service tasks, combining a touchless interface with Twilio video calls lets you quickly and fairly cheaply build an application that connects a user with any kind of agent or consultant. If you add voice recognition with voice bots, you can fully automate the consulting and communication process. By the way, Twilio already offers the ability to create AI bots to automate customer service.

Our Experience

We had a project in which we developed a server that accepts photos of people from a device; our task was to recognize people from an existing database and also to add to it. The device was used in various company schools and other institutions with a limited number of users, all of whom were entered into the database along with their photos. Our server received the image from the device’s camera, calculated its features, and searched the database for the most similar face. This let us determine with 70-80% probability which user was at the device, and to achieve this accuracy we used the Dlib library. For production, such accuracy is not enough, so to increase it we collected photos from our users, saved their features in the database, and used them to make our solution “smarter”. This project, Soapy, is an excellent device, and we hope great success awaits them!
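The “calculate features, find the most similar face” step can be sketched as a nearest-neighbour search over feature vectors. This assumes each face has already been turned into a descriptor (dlib’s face recognition model, for instance, produces 128-dimensional descriptors, and a Euclidean distance threshold of around 0.6 is commonly used with it). The toy 3-D vectors and the `enroll`/`identify` helpers are hypothetical; storing several descriptors per person mirrors the idea of collecting more photos to improve accuracy.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def enroll(database, name, descriptor):
    """Store another descriptor for a person; more samples per person usually help matching."""
    database.setdefault(name, []).append(list(descriptor))


def identify(query, database, threshold=0.6):
    """Return (name, distance) of the closest stored descriptor, or (None, distance) if too far."""
    best_name, best_dist = None, float("inf")
    for name, descriptors in database.items():
        for stored in descriptors:
            d = euclidean(query, stored)
            if d < best_dist:
                best_name, best_dist = name, d
    return (best_name, best_dist) if best_dist <= threshold else (None, best_dist)


# Toy 3-D "descriptors" standing in for real 128-D ones.
db = {}
enroll(db, "alice", [0.1, 0.2, 0.3])
enroll(db, "bob", [0.9, 0.8, 0.7])
print(identify([0.12, 0.21, 0.29], db))  # close to alice
print(identify([5.0, 5.0, 5.0], db))     # nobody within the threshold
```

A linear scan like this is fine for a database the size of a school; a much larger one would need an approximate nearest-neighbour index.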

There was another project where we developed a voice control system and trained it on several key phrases using samples from only 10 people. The training was done under ideal conditions, and in production it gave a recognition accuracy of 40-60%. Of course, in reality many more different people are needed for good training, but we were very pleased: the system perfectly recognized our specific commands, even though it worked without a server; everything was done in React Native. The main conclusion I want to draw is that voice control today is much cheaper than many people think.

Final Thoughts

For a team that develops various kinds of software, we simply cannot afford not to master touchless interaction with devices. This can start with motion recognition and extend to understanding voice commands and retina recognition.

One way or another, in the near future it will be present even in the most primitive projects. Our team has already begun to master this science and is making progress. Therefore, if this becomes part of your next project, we are ready for this. Share with our manager what kind of touchless interface technology for saas you need!

Originally taken from https://ardas-it.com/touchless-interface-with-recognition-saas-front-end


Python Is The Language Of Future Data Masters. Learn It Yourself For Under $50 With This 12-Course Training Bundle

Every year, the world’s most popular magazine dedicated to engineering and applied sciences, IEEE Spectrum, ranks the most popular coding languages used by its data-centric readers. In 2019, they changed their ranking criteria from the ground up…yet the findings were the same as 2018: Python is the language that hardcore data science careerists use.

Python is powerful, adaptable and user-friendly — and if you’re thinking of a career in a data-driven field, it’s fundamental learning. You can take the first step to understanding and mastering Python with the training in The Complete 2020 Python Programming Certification Bundle, now more than 90 percent off at $49.99.

This comprehensive package of 12 courses unlocks everything a new Python user needs to know, from basic operations to valuable tool libraries to applying Python in projects ranging from data visualization to machine learning and artificial intelligence.

The first third of the collection is your Python boot camp: Introduction to Python Training, Advanced Python Training, The Python Mega Course: Build 10 Real-World Applications, and Solve 100 Python Exercises to Boost Your Python Skills get you familiar with how Python works and with early best practices, so your Python experience starts off on the right foot.

The next five courses dive into the bread and butter of Python: gathering, formatting and recontextualizing data. Data Mining with Python: Real-Life Data Science Exercises gives you hands-on experience in finding the data you need.

Meanwhile, Master Clustering Analysis for Data Science Using Python, Python Data Analysis with NumPy and Pandas, and Learn Python for Data Analysis and Visualization inspect some of the most important tools for properly segmenting and manipulating those numbers to find the conclusions you seek. Finally, Data Visualization with Python and Bokeh shows how to create compelling visualizations in web apps that allow users to interact with the data in real-time.

The remaining three courses cover Python’s commanding role in machine learning, teaching computers to assess information, draw conclusions and adjust their missions, all on their own. Image Processing & Analysis with OpenCV and Learning in Python can show you how machines can actually look at photos and make decisions based on that data. Then, Keras Bootcamp for Deep Learning and AI in Python and Master PyTorch for Artificial Neural Networks and Deep Learning outline how machine learning happens, explain how to build your own neural networks and go right to the heart of one of the most fascinating computer science initiatives ever.

Each course in this collection would normally cost you nearly $200 to take separately, but this complete package is on sale now for only $49.99 while the offer lasts.

Note: Terms and conditions apply. See the relevant retail sites for more information. For more great deals, go to our partners at TechBargains.com.


from ExtremeTech https://www.extremetech.com/deals/306358-python-is-the-language-of-future-data-masters-learn-it-yourself-for-under-50-with-this-12-course-training-bundle from Blogger http://componentplanet.blogspot.com/2020/02/python-is-language-of-future-data.html


Learn Python 3 with more than 100 Practical Exercises and 20 Hands-on Practical Projects

What you’ll learn

DIVE INTO PYTHON WORLD WITH PYTHON FUNDAMENTALS:

Variables and data types & Comparison operators

Logical Operators & Conditional statements (If-else)

For and while loops & Functions

Lists and list comprehensions

Dictionaries and dictionaries comprehensions

Lambdas and built-in functions & Modules & Maps and Filters

Processing csv files & Methods & Matplotlib

Pandas & Numpy & Seaborn

Use OpenCV applied on Video Stream to Draw Yourself

Requirements

Access to a computer with an internet connection.

Computer. Mac OS, Windows or Linux.

No previous experience with Python or coding is required.

Description

We are on a mission to create the most complete Python programming guide in the World.

From Python basics to techniques used by pros, this masterclass provides you with everything you need to start building and applying Python.

“Python Beginner to Pro Masterclass” is our flagship Python course that delivers unique learning with 3 immersive courses packed into 1 easy-to-learn package:

First, prepare yourself by learning the basics and perfect your knowledge of the language with a beginner to pro Python programming course.

Next, build on your knowledge with a practical, applied and hands-on Python course with over 20 real-world application problems and 100 coding exercises to help you learn in a practical, easy and fun way. These will be invaluable projects to showcase during future job interviews!

Finally, push your boundaries with a data science and machine-learning course covering practical machine learning applications using Python. Dive into real-life situations and solve real-world challenges.

So, why is Python the golden programming language these days? And what makes it the best language to learn today?

Python ranks as the number one programming language in 2018 for five simple reasons that are bound to change the shape of your life and career:

(1) Easy to learn: Python is the easiest programming language to learn. In fact, at the end of this single course, you’ll be able to master Python and its applications regardless of your previous experience with programming.

(2) High Salary: Did you know that the average Python programmer in the U.S. makes approximately $116,000 a year? With “Python 3 Beginner to Pro Masterclass” you’re setting yourself up for increased earning potential that can only rise from here.

(3) Scalability: It’s true, Python is easy to learn. But it’s also an extremely powerful language that can help you create top-tier apps. In fact, Google, Instagram, YouTube, and Spotify all use Python.

(4) Versatility: What’s more, Python is by far the most versatile programming language in the world today! From web development to data science, machine learning, computer vision, data analysis and visualization, scripting, gaming, and more, Python has the potential to deliver growth to any industry.

(5) Future-proof Career: The high demand and low supply of Python developers make it the ideal programming language to learn today. Whether you’re eyeing a career in machine learning or artificial intelligence, learning Python is an invaluable investment in your career.

Who this course is for:

Anyone who wants to learn Python.

Beginners who have just started to learn Python

Professionals who want to learn Python through Real World Applications

Professionals who want to obtain a particular skill, such as web scraping, working with databases, or building websites

Created by Dr. Ryan Ahmed, Ph.D., MBA; Kirill Eremenko; Hadelin de Ponteves; Mitchell Bouchard; and the SuperDataScience Team. Last updated 2/2019. Language: English. Captions: English [Auto-generated].

Size: 13.42 GB


https://ift.tt/2qH1xlA.

The post Python 3 Programming: Beginner to Pro Masterclass appeared first on Free Course Lab.


via www.pyimagesearch.com

In today’s blog post, I interview Dr. Paul Lee, a PyImageSearch reader and interventional cardiologist affiliated with NY Mount Sinai School of Medicine.

Dr. Lee recently presented his research at the prestigious American Heart Association Scientific Session in Philadelphia, PA, where he demonstrated how Convolutional Neural Networks can:

Automatically analyze and interpret coronary angiograms

Detect blockages in patient arteries

And ultimately help reduce and prevent heart attacks

Furthermore, Dr. Lee has demonstrated that the automatic angiogram analysis can be deployed to a smartphone, making it easier than ever for doctors and technicians to analyze, interpret, and understand heart attack risk factors.

Dr. Lee’s work is truly remarkable and paves the way for Computer Vision and Deep Learning algorithms to help reduce and prevent heart attacks.

Let’s give a warm welcome to Dr. Lee as he shares his research.

An interview with Paul Lee – Doctor, Cardiologist and Deep Learning Researcher

Adrian: Hi Paul! Thank you for doing this interview. It’s a pleasure to have you on the PyImageSearch blog.

Paul: Thank you for inviting me.

Figure 1: Dr. Paul Lee, an interventional cardiologist affiliated with NY Mount Sinai School of Medicine, along with his family.

Adrian: Tell us a bit about yourself — where do you work and what is your job?

Paul: I am an interventional cardiologist affiliated with NY Mount Sinai School of Medicine. I have a private practice in Brooklyn.

Figure 2: Radiologists may one day be replaced by Computer Vision, Deep Learning, and Artificial Intelligence.

Adrian: How did you first become interested in computer vision and deep learning?

Paul: In a 2017 New Yorker article titled “A.I. Versus M.D.: What happens when diagnosis is automated?”, Geoffrey Hinton commented that “they should stop training radiologists now.” I realized that one day AI would replace me. I wanted to be the person controlling the AI, not the one being replaced.

Adrian: You recently presented your work on automatic coronary angiogram analysis at the American Heart Association. Can you tell us about it?

Paul: After starting your course two years ago, I became comfortable with computer vision techniques. I decided to apply what you taught to cardiology.

As a cardiologist, I perform coronary angiography to diagnose whether my patients have blockages in the arteries of the heart that can cause a heart attack. I wondered whether I could apply AI to interpret coronary angiograms.

Despite many difficulties, thanks to your ongoing support, the neural networks learned to interpret these images reliably.

I was invited to present my research at the American Heart Association Scientific Session in Philadelphia this year. This is the most important research conference for cardiologists. My poster is titled Convolutional Neural Networks for Interpretation of Coronary Angiography (CathNet).

(Circulation. 2019;140:A12950; https://ahajournals.org/doi/10.1161/circ.140.suppl_1.12950). The poster is available here: https://github.com/AICardiologist/Poster-for-AHA-2019

Figure 3: Normal coronary angiogram (left) and stenotic coronary artery (right). Interpretation of angiograms can be subjective and difficult. Computer vision algorithms can be used to make these analyses more accurate.

Adrian: Can you tell us a bit more about cardiac coronary angiograms? How are these images captured and how can computer vision/deep learning algorithms better/more efficiently analyze these images (as compared to humans)?

Paul: For definitive diagnosis and treatment of coronary artery disease (for example, during a heart attack), cardiologists perform coronary angiography to determine the anatomy of the arteries and the extent of the stenosis. During the procedure, cardiologists insert a thin catheter through an artery in the wrist or the leg. Through the catheter, we inject contrast dye into the coronary arteries, and the images are captured by X-ray. However, interpreting the angiogram is sometimes difficult: computer vision has the potential to make these determinations more objective and accurate.

Figure 3 (left) shows a normal coronary angiogram while Figure 3 (right) shows a stenotic coronary artery.

Adrian: What was the most difficult aspect of your research and why?

Paul: I only had around 5000 images.

At first, we did not know why we had so much trouble getting high accuracy. We thought our images were not preprocessed properly, or that some of the images were blurry.

Later, we realized there was nothing wrong with our images: the problem was that ConvNets require lots of data to learn something simple to our human eyes.

Determining whether there is a stenosis in a coronary arterial tree in an image is computationally complex. Since the required sample size depends on the complexity of the classification task, we struggled. We had to find a way to train ConvNets with very limited samples.

Adrian: How long did it take for you to train your models and perform your research?

Paul: It took more than one year. Half the time was spent on gathering and preprocessing data, and half on training and tuning the model. I would gather data, train and tune my models, gather more data or process the data differently, improve my previous models, and keep repeating this cycle.

Figure 4: Utilizing curriculum learning to improve model accuracy.

Adrian: If you had to pick the most important technique you applied during your research, what would it be?

Paul: I scoured PyImageSearch for technical tips on training ConvNets with a small number of samples: transfer learning, image augmentation, using SGD instead of Adam, learning rate schedules, and early stopping.
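Two of the techniques in that list, a learning rate schedule and early stopping, come down to a few lines of bookkeeping. What follows is a minimal, framework-free sketch under stated assumptions: the step-decay form, its parameters (`initial_lr=0.01`, halving every 10 epochs), the `patience` value, the `EarlyStopper` class name, and the validation-loss numbers are all illustrative choices, not Dr. Lee's settings. In Keras these ideas are usually wired in through the `tf.keras.callbacks.LearningRateScheduler` and `tf.keras.callbacks.EarlyStopping` callbacks; the `step_decay(epoch, lr)` signature below mirrors the two arguments `LearningRateScheduler` passes to its schedule function in TensorFlow 2.

```python
import math


def step_decay(epoch, lr=None, initial_lr=0.01, drop=0.5, epochs_per_drop=10):
    """Step decay: multiply the rate by `drop` every `epochs_per_drop` epochs.

    `lr` (the current rate) is accepted only to match the (epoch, lr) schedule
    signature; this sketch recomputes the rate from `initial_lr` instead.
    """
    return initial_lr * drop ** math.floor(epoch / epochs_per_drop)


class EarlyStopper:
    """Hypothetical helper: signal a stop after `patience` epochs without improvement."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.wait = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # improvement: remember it and reset the counter
            self.wait = 0
        else:
            self.wait += 1        # no improvement this epoch
        return self.wait >= self.patience


# Illustrative (made-up) validation losses for a short training run.
val_losses = [0.90, 0.70, 0.62, 0.61, 0.63, 0.64, 0.62, 0.60]
stopper = EarlyStopper(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    lr = step_decay(epoch)  # the rate a training loop would use this epoch
    if stopper.should_stop(loss):
        stopped_at = epoch
        break
```

With these numbers the run stops at epoch 6, three epochs after the best loss of 0.61, even though the next epoch would have improved slightly; `patience` trades that risk off against wasted training time on a small dataset.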

Each technique contributed a small improvement in F1 score, but I only reached about 65% accuracy.
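Accuracy and F1 score can tell quite different stories, especially when positive cases (here, images with a stenosis) are in the minority. The sketch below computes both from binary labels in plain Python. The function names are our own, and the ten labels are invented for illustration; they are not from Dr. Lee's dataset.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, false negatives, true negatives (0/1 labels)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall, i.e. 2*TP / (2*TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # no positives at all: report 0.0


# Hypothetical labels: 1 = stenosis present, 0 = normal.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
acc = accuracy(y_true, y_pred)  # 7 of 10 correct
f1 = f1_score(y_true, y_pred)   # precision 1/2, recall 1/3
```

With these made-up labels the classifier is 70% accurate but has an F1 score of only 0.4, because it finds just one of the three stenoses and raises one false alarm.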

I also studied Kaggle competition solutions for technical tips. The biggest breakthrough came from a technique called “curriculum learning.” I first trained DenseNet to interpret something very simple: “Is there a narrowing in this short, straight segment of artery?” That took only around a hundred samples.

Then I trained this pre-trained network on longer segments of arteries with more branches. The curriculum gradually builds complexity until the network learns to interpret the stenosis in the context of complicated figures. This approach dramatically improved our test accuracy to 82%. Perhaps the pre-training steps reduced computational complexity by priming the neural network with information.
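The staged training Dr. Lee describes (train on the simplest task first, then keep training the same network on progressively harder material) can be sketched without any deep learning framework. In the toy version below, a one-feature logistic regression trained with plain SGD stands in for DenseNet, and two stages of synthetic points (widely separated first, then close together) stand in for short straight segments and longer branching segments. Every function name, dataset, and hyperparameter here is hypothetical; only the structure carries over to the real setting: the weights learned in one stage are the starting point for the next.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_stage(weights, data, epochs=200, lr=0.1):
    """Run plain SGD on the logistic loss, starting from `weights` = [w, b]."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            grad = sigmoid(w * x + b) - y  # derivative of the logistic loss w.r.t. the logit
            w -= lr * grad * x
            b -= lr * grad
    return [w, b]


def train_curriculum(stages, weights=None):
    """Train on each (name, data) stage in order, carrying the weights forward."""
    weights = list(weights) if weights is not None else [0.0, 0.0]
    completed = []
    for name, data in stages:
        weights = train_stage(weights, data)  # start from the previous stage's weights
        completed.append(name)
    return weights, completed


# Hypothetical curriculum: an easy, well-separated stage, then a harder one.
stages = [
    ("easy: short straight segments", [(-3.0, 0), (-2.0, 0), (2.0, 1), (3.0, 1)]),
    ("hard: long branching segments", [(-0.5, 0), (-0.2, 0), (0.2, 1), (0.5, 1)]),
]
(w, b), completed = train_curriculum(stages)


def predict(x):
    return sigmoid(w * x + b)
```

In Keras, the same structure amounts to calling `model.fit` on each stage's data in turn with the same `model` object, since `fit` continues from the model's current weights.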

“Curriculum learning” in the literature actually means something different: it generally refers to splitting the training samples by error rate and then sequencing the training batches in order of increasing error rate. In contrast, I actually created learning materials for the ConvNet to learn from, rather than just rearranging the batches by error rate. I got this idea from my experience of learning a foreign language, not from the computer science literature. At the beginning, I struggled to understand newspaper articles written in Japanese. As I progressed through the beginner, intermediate, and finally advanced Japanese curricula, I was eventually able to understand these articles.
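For contrast, the formulation Dr. Lee attributes to the literature reorders existing samples rather than creating new ones. A minimal sketch of that idea, assuming each sample already has a difficulty score such as its error under a reference model: sort from easiest to hardest and cut the ordered list into batches. The function name, image IDs, and scores below are hypothetical.

```python
def curriculum_batches(samples, difficulty, batch_size):
    """Sort samples from easiest to hardest by `difficulty` and split them into batches.

    `difficulty` is any function mapping a sample to a score (lower = easier),
    for example that sample's error under a reference model.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    ordered = sorted(samples, key=difficulty)  # stable sort: ties keep their original order
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


# Hypothetical per-image error rates from an earlier model.
errors = {"img_a": 0.9, "img_b": 0.1, "img_c": 0.5, "img_d": 0.3, "img_e": 0.7}
batches = curriculum_batches(list(errors), errors.get, batch_size=2)
```

Training would then consume `batches` in order, easiest first. In Dr. Lee's approach, by contrast, each stage is new, purpose-built training material rather than a reordering of the same images.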

Figure 5: Example screenshots from the CathNet iPhone app.

Adrian: What are your computer vision and deep learning tools, libraries, and packages of choice?

Paul: I am using standard packages: Keras, TensorFlow, and OpenCV 4.

I use Photoshop to clean up the images and to create the curricula.

Initially I was using cloud instances [for training], but I found that a workstation with four RTX 2080 Ti GPUs was much more cost-effective. The “global warming” from the GPUs killed my wife’s plants, but it dramatically sped up model iteration.

We converted our TensorFlow models into an iPhone app using Core ML, just as you did for your Pokemon identification app.

Our demonstration video for the app is available on YouTube.

Adrian: What advice would you give to someone who wants to perform computer vision/deep learning research but doesn’t know how to get started?

Paul: When I first started two years ago, I did not even know Python. After completing a beginner Python course, I jumped into Andrew Ng’s deep learning course. Because I needed more training, I enrolled in the PyImageSearch Gurus course. The materials from Stanford CS231n are great for surveying the “big picture,” but the PyImageSearch course materials are immediately actionable for someone like me without a computer science background.

Adrian: How did the PyImageSearch Gurus course and Deep Learning for Computer Vision with Python book prepare you for your research?

Paul: The PyImageSearch course and books armed me with OpenCV and TensorFlow skills. I continually return to the materials for technical tips and updates. Your advice really motivated me to push forward despite obstacles.

Adrian: Would you recommend the PyImageSearch Gurus course or Deep Learning for Computer Vision with Python to other budding researchers, students, or developers trying to learn computer vision + deep learning?

Paul: Without reservation. The course converted me from a Python beginner to a published computer vision practitioner. If you are looking for the most cost- and time-efficient way to learn Computer Vision, and if you are really serious, I wholeheartedly recommend PyImageSearch courses.

Adrian: What’s next for your research?

Paul: My next project is to bring computer vision to the bedside. Currently, clinicians spend too much time at their desktop computers during office visits and hospital rounds. I hope our project will empower clinicians to do what they do best: spend time at the bedside caring for patients.

Adrian: If a PyImageSearch reader wants to chat about your work and research, what is the best place to connect with you?

Paul: I can be reached through LinkedIn, and I look forward to hearing from your readers.

Summary

In this blog post, we interviewed Dr. Paul Lee (MD), an interventional cardiologist and Computer Vision/Deep Learning practitioner.

Dr. Lee recently presented a poster at the prestigious American Heart Association Scientific Session in Philadelphia, PA, where he demonstrated how Convolutional Neural Networks can:

Automatically analyze and interpret coronary angiograms

Detect blockages in patient arteries

Help reduce and prevent heart attacks

Dr. Lee’s primary motivation was his realization that radiologists might one day be replaced by Artificial Intelligence.

Instead of simply accepting that fate, Dr. Lee decided to take matters into his own hands: he strove to be the person building that AI, not the one being replaced by it.

Dr. Lee not only achieved his goal but was also able to publish his work at a distinguished conference, proof that dedication, a strong will, and the proper education are all you need to be successful in Computer Vision and Deep Learning.

If you want to follow in Dr. Lee’s footsteps, be sure to pick up a copy of Deep Learning for Computer Vision with Python (DL4CV) and join the PyImageSearch Gurus course.

Using these resources you can:

Perform research worthy of being published in reputable journals and conferences

Obtain the knowledge necessary to finish your MSc or PhD

Switch careers and obtain a CV/DL position at a respected company/organization

Successfully apply deep learning and computer vision to your own projects at work

Complete your hobby CV/DL projects you’re hacking on over the weekend

I hope you’ll join me, Dr. Lee, and thousands of other PyImageSearch readers who have not only mastered computer vision and deep learning but have also used that knowledge to change their lives.

I’ll see you on the other side.
