#OpenCV Projects


Text

6 Fun and Educational OpenCV Projects for Coding Enthusiasts

OpenCV (Open Source Computer Vision Library) is an open-source computer vision and machine learning software library, widely used for tasks such as detecting and recognizing objects in images and videos. It is one of the most popular libraries for developing computer vision applications, and it offers interfaces for C++, Python, Java, and other languages.

Coding enthusiasts looking for fun and educational OpenCV projects can find plenty of interesting ones online. From facial recognition applications to motion detection and tracking, these projects can help you hone your coding skills and gain a better understanding of OpenCV. Here are 6 fun and educational OpenCV projects for coding enthusiasts:

1. Facial Recognition Application: This project involves creating an application that can detect faces in images and videos and recognize them. It can be used to create face authentication systems, such as unlocking a smartphone or computer with a face scan.

2. Motion Detection and Tracking: This project involves creating a program that can detect and track moving objects in videos. It can be used for applications such as surveillance cameras and self-driving cars.

3. 3D Augmented Reality: This project involves creating an augmented reality application that can track 3D objects in real time. It can be used for applications such as gaming and virtual reality.

4. Image Processing: This project involves creating a program that can manipulate and process images. It can be used for applications such as image recognition and filtering.

5. Object Detection: This project involves creating a program that can detect objects in images and videos. It can be used for applications such as autonomous vehicles, robotics, and medical imaging.

6. Text Detection: This project involves creating a program that can detect text in images and videos. It can be used for applications such as optical character recognition and document scanning.

These are just some of the many fun and educational OpenCV projects that coding enthusiasts can explore. With a little bit of research and practice, anyone can create amazing applications with OpenCV.


Text

OpenCV projects using Raspberry Pi | Takeoff

The Blind Reader is a small, affordable device designed to help blind people read printed text. Traditional Braille machines can be very expensive, so not everyone can buy them. The Blind Reader is different because it's cheaper and easier for more people to use.

This device works using a small computer called a Raspberry Pi and a webcam. Here’s how it works step-by-step:

1. Capture the Text: You place a page with printed text under the webcam. The webcam takes a picture of the page.

2. Convert Image to Text: The Raspberry Pi uses a technology called Optical Character Recognition (OCR) to convert the picture into digital text. This means it reads the image and understands what letters and words are on the page.

3. Process the Text: The device makes sure the text is clear and readable, fixing problems such as text printed at an angle or laid out in multiple columns. These steps are called skew correction (straightening tilted text) and segmentation (splitting the page into separate blocks of text).

4. Read Aloud: Once the text is clear, the Raspberry Pi uses a Text-to-Speech (TTS) program to change the text into spoken words.

5. Output the Audio: The spoken words are sent to speakers through an audio amplifier, so the sound is loud and clear.

Takeoffprojects' Blind Reader is a complete system that helps visually impaired people by reading printed text aloud. It is easy to use and portable, so you can carry it around and use it anywhere. The device makes reading more accessible for blind people, helping them become more independent and gain better access to information. OpenCV projects like this demonstrate the power of technology to improve lives.

#Projects On OpenCV#OpenCV Projects#OpenCV Projects For Beginners#Computer Vision Projects#OpenCV Projects Final Year


Text

I had to clean up 1000+ images for this translation, so I made a tool that does a convex hull flood-fill in 1 click. It's like a magic wand selection that also fills any interior holes in the selection.

It's not hard to do this manually in GIMP or w/e, but it takes several clicks/mouse movements each time, and i wanted to spare my wrist over thousands of images lol. The use case is pretty limited, but here's a link:

#opencv my beloved#programming#im helping with the black jack DS translation project btw ^_^#was very surprised and happy to learn it was still active


Note

tell me about your defense contract pleage

Oh boy!

To be fair, it's nothing grandiose, like, it wasn't about "a new missile blueprint" or whatever, but, just thinking about what it could have become? yeesh.

So, let's go.

For context, this is taking place in the early 2010s, where I was working as a dev and manager for a company that mostly did space stuff, but they had some defence and security contracts too.

One day we got a new contract though, which was... a weird one. It was state-auctioned, meaning that this was basically a homeland contract, but the main sponsor was Philip Morris. Yeah. The American cigarette company.

Why? Because the contract was essentially a crackdown on "illegal cigarette sales", but it was sold as a more general "war on drugs" contract.

For those unaware (because chances are, like me, you are a non-smoker), cigarette contraband is very much a thing. At the time, ~15% of cigarettes were sold illegally here (read: they were smuggled in and sold on the street).

And Philip Morris wanted to stop that. After all, they're only a small company worth uhhh... oh JFC. Just a paltry 150 billion dollars. They need those extra dollars, you understand?

Anyway. So they sponsored a contract to the state, promising that "the technology used for this can be used to stop drug deals too". Also that "the state would benefit from the cigarettes part as well because smaller black market means more official sales means a higher tax revenue" (that has actually been proven true during the 2020 quarantine).

Anyway, here was the plan:

Phase 1 was to train a neural network and plug it in directly to the city's video-surveillance system, in order to detect illegal transactions as soon as they occur. Big brother who?

Phase 2 was to then track the people involved in said transaction throughout the city, based on their appearance and gait. You ever seen the Plainsight sheep counting video? Imagine something like this but with people. That data would then be relayed to police officers in the area.

So yeah, an automated CCTV-based tracking system. Because that's not setting a scary precedent.

So what do you do when you're in that position? Let me tell you. If you're thrust unknowingly, or against your will, into a project like this,

Note: the following is not legal advice. In fact, it's not even good advice. Do not attempt any of this unless you know you can't get caught, or that even if you are caught, the consequences are acceptable. Above all else, always have a backup plan if and when it backfires. Also, don't do anything that can get you sued. Be reasonable.

Let me introduce you to the world of Corporate Sabotage! It's a funny form of striking, very effective in office environments.

Here's what I did:

First of all was the training data. We had extensive footage, but it needed to be marked manually for the training. Basically, just cropping the clips around the "transaction" and drawing some boxes on top of the "criminals". I was in charge of several batches of those. It helped that I was fast at it since I had video editing experience already. Well, let's just say that a good deal of those markings were... not very accurate.

Also, did you know that some video encodings are very slow for OpenCV to process, to the point of sometimes crashing it? I'm sure the software handles them better nowadays, though. So I did that to another portion of the data.

Unfortunately the training model itself was handled by a different company, so I couldn't do more about this.

Or could I?

I was the main person communicating with them, after all.

Enter: Miscommunication Master

In short (because this is already way too long), I became the most rigid person in the project. Like insisting on sharing the training data only on our own secure shared drive, which they didn't have access to yet. Or tracking down every single bug in the program and making weekly reports on those, which bogged down progress. Or asking for things to be done but without pointing at anyone in particular, so that no one actually did the thing. You know, classic manager incompetence. Except I couldn't be faulted, because after all, I was just "really serious about the security aspect of this project. And you don't want the state to learn that we've mishandled the data security of the project, do you, Jeff?"

A thousand little jabs like this, to slow down and delay the project.

At the end of it, after a full year on this project, we had.... a neural network full of false positives and a semi-working visualizer.

They said the project needed to be wrapped up in the next three months.

I said "damn, good luck with that! By the way my contract is up next month and I'm not renewing."

Last I heard, that city still doesn't have anything installed on their CCTV.

tl;dr: I used corporate sabotage to prevent automated surveillance from being implemented in a city--

hey hold on

wait

what

HEY ACTUALLY I DID SOME EXTRA RESEARCH TO SEE IF PHILIP MORRIS TRIED THIS SHIT WITH ANOTHER COMPANY SINCE THEN AND WHAT THE FUCK

HUH??????

well what the fuck was all that even about then if they already own most of the black market???

#i'm sorry this got sidetracked in the end#i'm speechless#anyway yeah!#sometimes activism is sitting in an office and wasting everyone's time in a very polite manner#i learned that one from the CIA actually


Text

Best AI Training in Electronic City, Bangalore – Become an AI Expert & Launch a Future-Proof Career!


Artificial Intelligence (AI) is reshaping industries and driving the future of technology. Whether it's automating tasks, building intelligent systems, or analyzing big data, AI has become a key career path for tech professionals. At eMexo Technologies, we offer a job-oriented AI Certification Course in Electronic City, Bangalore tailored for both beginners and professionals aiming to break into or advance within the AI field.

Our training program provides everything you need to succeed—core knowledge, hands-on experience, and career-focused guidance—making us a top choice for AI Training in Electronic City, Bangalore.

🌟 Who Should Join This AI Course in Electronic City, Bangalore?

This AI Course in Electronic City, Bangalore is ideal for:

Students and Freshers seeking to launch a career in Artificial Intelligence

Software Developers and IT Professionals aiming to upskill in AI and Machine Learning

Data Analysts, System Engineers, and tech enthusiasts moving into the AI domain

Professionals preparing for certifications or transitioning to AI-driven job roles

With a well-rounded curriculum and expert mentorship, our course serves learners across various backgrounds and experience levels.

📘 What You Will Learn in the AI Certification Course

Our AI Certification Course in Electronic City, Bangalore covers the most in-demand tools and techniques. Key topics include:

Foundations of AI: Core AI principles, machine learning, deep learning, and neural networks

Python for AI: Practical Python programming tailored to AI applications

Machine Learning Models: Learn supervised, unsupervised, and reinforcement learning techniques

Deep Learning Tools: Master TensorFlow, Keras, OpenCV, and other industry-used libraries

Natural Language Processing (NLP): Build projects like chatbots, sentiment analysis tools, and text processors

Live Projects: Apply knowledge to real-world problems such as image recognition and recommendation engines

All sessions are conducted by certified professionals with real-world experience in AI and Machine Learning.

🚀 Why Choose eMexo Technologies – The Best AI Training Institute in Electronic City, Bangalore

eMexo Technologies is not just another AI Training Center in Electronic City, Bangalore—we are your AI career partner. Here's what sets us apart as the Best AI Training Institute in Electronic City, Bangalore:

✅ Certified Trainers with extensive industry experience

✅ Fully Equipped Labs and hands-on real-time training

✅ Custom Learning Paths to suit your individual career goals

✅ Career Services like resume preparation and mock interviews

✅ AI Training Placement in Electronic City, Bangalore with 100% placement support

✅ Flexible Learning Modes including both classroom and online options

We focus on real skills that employers look for, ensuring you're not just trained—but job-ready.

🎯 Secure Your Future with the Leading AI Training Institute in Electronic City, Bangalore

The demand for skilled AI professionals is growing rapidly. By enrolling in our AI Certification Course in Electronic City, Bangalore, you gain the tools, confidence, and guidance needed to thrive in this cutting-edge field. From foundational concepts to advanced applications, our program prepares you for high-demand roles in AI, Machine Learning, and Data Science.

At eMexo Technologies, our mission is to help you succeed—not just in training but in your career.

📞 Call or WhatsApp: +91-9513216462

📧 Email: [email protected]

🌐 Website: https://www.emexotechnologies.com/courses/artificial-intelligence-certification-training-course/

Seats are limited – Enroll now in the most trusted AI Training Institute in Electronic City, Bangalore and take the first step toward a successful AI career.

🔖 Popular Hashtags

#AITrainingInElectronicCityBangalore#AICertificationCourseInElectronicCityBangalore#AICourseInElectronicCityBangalore#AITrainingCenterInElectronicCityBangalore#AITrainingInstituteInElectronicCityBangalore#BestAITrainingInstituteInElectronicCityBangalore#AITrainingPlacementInElectronicCityBangalore#MachineLearning#DeepLearning#AIWithPython#AIProjects#ArtificialIntelligenceTraining#eMexoTechnologies#FutureTechSkills#ITTrainingBangalore#Youtube


Note

Whats the coolest (computer) project you want to work on?

Why aren't you working on it, if you aren't already?

oh gosh coolest project i want to work on is an input-output function search for wikifunctions. but!!! im working on a project right now to add a BUNCH of nigerian politicians to wikidata. got some scans and extracted the text using some opencv and ocr and now i got a huge as spreadsheet. just gotta match up each row with the corresponding person in the wikidata knowledge graph but oh my god is it tedious. one day ill post my code online after im done lol
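For the tedious matching step, a fuzzy string match can at least shortlist candidates. This is only a sketch using Python's standard `difflib`, assuming you have already pulled candidate labels (for example, politician names) from Wikidata into a plain list; the helper names `normalize` and `best_match` and the 0.8 cutoff are hypothetical choices, and a human should still confirm each match.

```python
import difflib
import unicodedata


def normalize(name):
    """Lowercase, strip accents and periods, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(no_accents.lower().replace(".", " ").split())


def best_match(ocr_name, candidates, cutoff=0.8):
    """Return the candidate label closest to an OCR'd name, or None if nothing clears the cutoff."""
    by_key = {normalize(c): c for c in candidates}
    hits = difflib.get_close_matches(normalize(ocr_name), list(by_key), n=1, cutoff=cutoff)
    return by_key[hits[0]] if hits else None


if __name__ == "__main__":
    labels = ["Olusegun Obasanjo", "Goodluck Jonathan", "Atiku Abubakar"]
    # OCR often confuses "o" and "0"; the fuzzy match tolerates small errors like that.
    print(best_match("0lusegun Obasanj0", labels))
```

Rows that return `None` (or that match several people with the same name) are the ones worth reviewing by hand.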


Text

AI Pollen Project Update 1

Hi everyone! I have a bunch of ongoing projects in honey and other things so I figured I should start documenting them here to help myself and anyone who might be interested. Most of these aren’t for a grade, but just because I’m interested or want to improve something.

One of the projects I’m working on is a machine learning model to help with pollen identification using visual methods. There are very few people who specialize in identifying the origins of pollen in honey, which is pretty important for research! And the people who do it are super busy because it’s very time-consuming. This is meant to be a tool and an aid so they can devote more time to the more important parts of the research, such as hunting down geographical origins, rather than the mundane parts like counting individual pollen grains and trying to group all the species in a sample.

The model will have 3 goals to aid these researchers:

Count overall pollen and individual species of pollen in a sample of honey

Provide the species of each pollen in a sample

Group pollen species together with a confidence listed per sample

Super luckily, there are pretty large pollen databases out there covering different imaging techniques (SEM, electron microscopy, 40X magnification, etc.). I’m kind of stumped on which Python AI library to use; right now I’ve settled on using OpenCV to make and train the model, but I don’t know if there’s a better option for what I’m trying to do. If anyone has suggestions, please let me know!

This project will be open source and completely free once I’m done, and I also intend to make it easy for researchers to add more pollen samples with confirmed species and confirmed geographical origins. I am a firm believer that ML is a tool that’s supposed to make the mundane parts easier so we have time to do what brings us joy, which is why I’m working on this project!

I’m pretty busy with school, so I’ll make the next update once I have more progress! :)

Also a little note: genetic tests are more often used for honey samples since they are more accessible, despite being more expensive, but this is still an important part of the research. Genetic testing also leaves a lot to be desired, like not being able to tell the exact species of the pollen, which can help pinpoint geographical location or adulteration.


Text

AvatoAI Review: Unleashing the Power of AI in One Dashboard

Here's what Avato Ai can do for you

Data Analysis:

Analyze CSV, Excel, or JSON files using Python and libraries like pandas or matplotlib.

Clean data, calculate statistical information and visualize data through charts or plots.

Document Processing:

Extract and manipulate text from text files or PDFs.

Perform tasks such as searching for specific strings, replacing content, and converting text to different formats.

Image Processing:

Upload image files for manipulation using libraries like OpenCV.

Perform operations like converting images to grayscale, resizing, and detecting shapes or

Machine Learning:

Utilize Python's machine learning libraries for predictions, clustering, natural language processing, and image recognition by uploading

Versatile & Broad Use Cases:

An incredibly diverse range of applications. From creating inspirational art to modeling scientific scenarios, to designing novel game elements, and more.

User-Friendly API Interface:

Access and control the power of this advanced AI technology through a user-friendly API.

Even if you're not a machine learning expert, using the API is easy and quick.

Customizable Outputs:

Lets you create custom visual content by inputting a simple text prompt.

The AI will generate an image based on your provided description, enhancing the creativity and efficiency of your work.

Stable Diffusion API:

Enrich Your Image Generation to Unprecedented Heights.

Stable diffusion API provides a fine balance of quality and speed for the diffusion process, ensuring faster and more reliable results.

Multi-Lingual Support:

Generate captivating visuals based on prompts in multiple languages.

Set the panorama parameter to 'yes' and watch as our API stitches together images to create breathtaking wide-angle views.

Variation for Creative Freedom:

Embrace creative diversity with the Variation parameter. Introduce controlled randomness to your generated images, allowing for a spectrum of unique outputs.

Efficient Image Analysis:

Save time and resources with automated image analysis. The feature allows the AI to sift through bulk volumes of images and sort out vital details or tags that are valuable to your context.

Advanced Recognition:

The Vision API integration recognizes prominent elements in images - objects, faces, text, and even emotions or actions.

Interactive "Image within Chat" Feature:

Say goodbye to going back and forth between screens and focus only on productive tasks.

Here's what you can do with it:

Visualize Data:

Create colorful, informative, and accessible graphs and charts from your data right within the chat.

Interpret complex data with visual aids, making data analysis a breeze!

Manipulate Images:

Want to demonstrate the raw power of image manipulation? Upload an image, and watch as our AI performs transformations, like resizing, filtering, rotating, and much more, live in the chat.

Generate Visual Content:

Creating and viewing visual content has never been easier. Generate images, simple or complex, right within your conversation.

Preview Data Transformation:

If you're working with image data, you can demonstrate live how certain transformations or operations will change your images.

This can be particularly useful for fields like data augmentation in machine learning or image editing in digital graphics.

Effortless Communication:

Say goodbye to static text as our innovative technology crafts natural-sounding voices. Choose from a variety of male and female voice types to tailor the auditory experience, adding a dynamic layer to your content and making communication more effortless and enjoyable.

Enhanced Accessibility:

Break barriers and reach a wider audience. Our Text-to-Speech feature enhances accessibility by converting written content into audio, ensuring inclusivity and understanding for all users.

Customization Options:

Tailor the audio output to suit your brand or project needs.

From tone and pitch to language preferences, our Text-to-Speech feature offers customizable options for the truest personalized experience.


#digital marketing#Avato AI Review#Avato AI#AvatoAI#ChatGPT#Bing AI#AI Video Creation#Make Money Online#Affiliate Marketing


Text

From Classroom to Code: Real-World Projects Every Computer Science Student Should Try

Arya College of Engineering & I.T., one of the best colleges in Jaipur, notes that transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Java (Android) or Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that detects (and optionally recognizes) faces in images or video streams using OpenCV and Python. This project explores computer vision and deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.

Source: Click here

#best btech college in jaipur#best engineering college in jaipur#best private engineering college in jaipur#top engineering college in jaipur#best engineering college in rajasthan#best btech college in rajasthan


Text

image processing chennai

2025-2026 Image Processing Projects in Chennai. Perfect for final-year students, these projects focus on facial recognition, object detection, image segmentation, and medical diagnostics using Python, MATLAB, and OpenCV. Chennai’s best project centers offer IEEE-guided topics, real-time datasets, hands-on training, and certification support. Build smart, scalable solutions and gain the skills needed for the booming tech industry.


Text

Scaling Your Australian Business with AI: A CEO’s Guide to Hiring Developers

In today’s fiercely competitive digital economy, innovation isn’t a luxury—it’s a necessity. Australian businesses are increasingly recognizing the transformative power of Artificial Intelligence (AI) to streamline operations, enhance customer experiences, and unlock new revenue streams. But to fully harness this potential, one crucial element is required: expert AI developers.

Whether you’re a fast-growing fintech in Sydney or a manufacturing giant in Melbourne, if you’re looking to implement scalable AI solutions, the time has come to hire AI developers who understand both the technology and your business landscape.

In this guide, we walk CEOs, CTOs, and tech leaders through the essentials of hiring AI talent to scale operations effectively and sustainably.

Why AI is Non-Negotiable for Scaling Australian Enterprises

Australia has seen a 270% rise in AI adoption across key industries like retail, healthcare, logistics, and finance over the past three years. From predictive analytics to conversational AI and intelligent automation, AI has become central to delivering scalable, data-driven solutions.

According to Deloitte Access Economics, AI is expected to contribute AU$ 22.17 billion to the Australian economy by 2030. For CEOs and decision-makers, this isn’t just a trend—it’s a wake-up call to start investing in the right AI talent to stay relevant.

The Hidden Costs of Delaying AI Hiring

Still relying on a traditional tech team to handle AI-based initiatives? You could be leaving significant ROI on the table. Without dedicated experts, your AI projects risk:

Delayed deployments

Poorly optimized models

Security vulnerabilities

Lack of scalability

Wasted infrastructure investment

By choosing to hire AI developers, you're enabling faster time-to-market, more accurate insights, and a competitive edge in your sector.

How to Hire AI Developers: A Strategic Approach for Australian CEOs

The process of hiring AI developers is unlike standard software recruitment. You’re not just hiring a coder—you’re bringing on board an innovation partner.

Here’s what to consider:

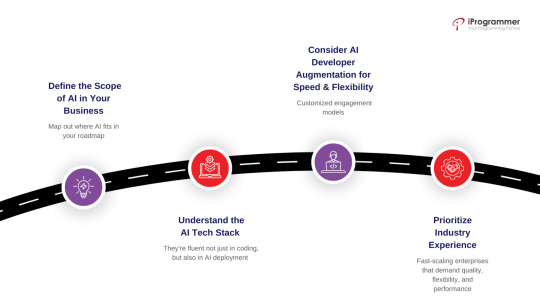

1. Define the Scope of AI in Your Business

Before hiring, map out where AI fits in your roadmap:

Are you looking for machine learning-driven forecasting?

Want to implement AI chatbots for 24/7 customer service?

Building a computer vision solution for your manufacturing line?

Once you identify the use cases, it becomes easier to hire ML developers or AI experts with the relevant domain and technical experience.

2. Understand the AI Tech Stack

A strong AI developer should be proficient in:

Python, R, TensorFlow, PyTorch

Scikit-learn, Keras, OpenCV

Data engineering with SQL, Spark, Hadoop

Deployment tools like Docker, Kubernetes, AWS SageMaker

When you hire remote AI engineers, ensure they’re fluent not just in coding, but also in AI deployment and scalability best practices.

3. Consider AI Developer Augmentation for Speed & Flexibility

Building an in-house AI team is time-consuming and expensive. That’s why AI developer staff augmentation is a smarter choice for many Australian enterprises.

With our staff augmentation services, you can:

Access pre-vetted, highly skilled AI developers

Scale up or down depending on your project phase

Save costs on infrastructure and training

Retain full control over your development process

Whether you need to hire ML developers for short-term analytics or long-term AI product development, we offer customized engagement models to suit your needs.

4. Prioritize Industry Experience

AI isn’t one-size-fits-all. Hiring developers who have experience in your specific industry—be it healthcare, fintech, ecommerce, logistics, or manufacturing—ensures faster onboarding and better results.

We’ve helped companies in Australia and across the globe integrate AI into:

Predictive maintenance systems

Smart supply chain analytics

AI-based fraud detection in banking

Personalized customer experiences in ecommerce

This hands-on experience allows our developers to deliver solutions that are relevant and ROI-driven.

Why Choose Our AI Developer Staff Augmentation Services?

At iProgrammer, we bring over a decade of experience in empowering businesses through intelligent technology solutions. Our AI developer augmentation services are designed for fast-scaling enterprises that demand quality, flexibility, and performance.

What Sets Us Apart:

AI-First Talent Pool: We don’t generalize. We specialize in AI, ML, NLP, computer vision, and data science.

Quick Deployment: Get developers onboarded and contributing in just a few days.

Cost Efficiency: Hire remote AI developers from our offshore team and reduce development costs by up to 40%.

End-to-End Support: From hiring to integration and project execution, we stay involved to ensure success.

A Case in Point: AI Developer Success in an Australian Enterprise

One of our clients, a mid-sized logistics company in Brisbane, wanted to predict delivery delays using real-time data. Within 3 weeks of engagement, we onboarded a senior ML developer who built a predictive model using historical shipment data, weather feeds, and traffic APIs. The result? A 25% reduction in customer complaints and a 15% improvement in delivery time accuracy.

This is the power of hiring the right AI developer at the right time.

Final Thoughts: CEOs Must Act Now to Stay Ahead

If you’re a CEO, CTO, or decision-maker in Australia, the question isn’t “Should I hire AI developers?” It’s “How soon can I hire the right AI developer to scale my business?”

Whether you're launching your first AI project or scaling an existing system, AI developer staff augmentation provides the technical depth and agility you need to grow fast—without the friction of long-term hiring.

Ready to Build Your AI Dream Team?

Let’s connect. Talk to our AI staffing experts today and discover how we can help you hire remote AI developers or hire ML developers who are ready to make an impact from day one.

👉 Contact Us Now | Schedule a Free Consultation

Making Science Fiction a Reality through Cutting-Edge OpenCV Projects

Science fiction has long captivated the minds and imaginations of people, offering glimpses of a fantastical future filled with advanced technology, interstellar travel, and otherworldly beings. While many may view these stories as pure fantasy, the truth is that science fiction has often served as a source of inspiration for real-life innovation and progress. In fact, with the rapid advancement of technology and the rise of cutting-edge OpenCV projects, we are closer than ever to turning science fiction into reality.

One of the most exciting areas where science fiction is becoming a reality is space exploration. For decades, science fiction has envisioned a future where humans travel to other planets and establish colonies on distant worlds. This idea may have seemed far-fetched, but with the development of space technologies such as reusable rockets and advanced propulsion systems, space agencies and private companies are now actively working towards making this vision a reality.

For instance, SpaceX, founded by entrepreneur Elon Musk, has made significant strides in developing reusable rockets that can drastically reduce the cost of space travel. The company has successfully launched and landed multiple rockets, and plans to use them in future missions to Mars and beyond. Similarly, NASA's Artemis program aims to send humans back to the Moon and establish a sustainable presence there, with the goal of eventually sending astronauts to Mars.

In addition to space exploration, science fiction has also inspired advancements in the field of artificial intelligence (AI). In countless science fiction stories, AI is portrayed as a powerful and intelligent being capable of surpassing human intelligence. While we may not have reached that level yet, AI technology has made significant progress in recent years and is being used in various industries, from healthcare to finance.

One of the most notable examples of AI in action is self-driving cars. This technology was once seen only in science fiction movies, but today companies like Tesla and Alphabet's Waymo are testing and deploying self-driving cars on public roads. These vehicles combine AI algorithms with sensors such as cameras, radar, and lidar to navigate through traffic, with the aim of making driving safer and more efficient.

Another field where science fiction is becoming a reality is the development of advanced prosthetics. In science fiction, we often see characters with robotic limbs that are not only functional but also enhance their abilities. With advancements in robotics and bioengineering, we are now seeing the first steps towards creating such advanced prosthetics.

For instance, the 'LUKE Arm', developed by DEKA Research under the Defense Advanced Research Projects Agency's (DARPA) Revolutionizing Prosthetics program, is named after Luke Skywalker, whose robotic hand appears in the Star Wars franchise. This prosthetic arm is designed to give amputees a greater range of motion and finer control, allowing them to perform tasks that were difficult or impossible with conventional prostheses.

Apart from these examples, there are countless other cutting-edge projects bringing science fiction to life, from research into 3D-printed organs to mind-controlled prosthetics. These projects not only showcase the incredible advancements in technology but also highlight the power of imagination and how science fiction can drive innovation.

However, every technological advancement brings ethical and moral concerns. Science fiction has often warned us of the potential dangers of technology, and it is crucial to address these concerns as we move towards a sci-fi-inspired future. This requires responsible and ethical development, as well as appropriate regulations and guidelines to ensure the safety and well-being of society.

In conclusion, science fiction has long been a source of inspiration for groundbreaking ideas and developments. With the rise of cutting-edge projects, we are witnessing the transformation of science fiction into reality. From space exploration to artificial intelligence, these advancements are not only pushing the boundaries of what we once thought was possible but also shaping the future of humanity. As we continue to push the limits of technology, we must also remember to do so with caution and responsibility, ensuring a brighter and more equitable future for all.

Artificial Intelligence Course Online in India for Working Professionals: Learn Without Quitting Your Job

In the rapidly evolving digital landscape, Artificial Intelligence (AI) is transforming industries—from finance and healthcare to marketing and logistics. For working professionals in India, learning AI is no longer just a nice-to-have; it's a strategic career move. But with busy schedules, office commitments, and personal responsibilities, how can you find the time to upskill?

The answer lies in enrolling in a flexible, high-quality Artificial Intelligence course online in India, designed specifically for working professionals.

In this comprehensive guide, we explore the best online AI courses in India tailored to professionals, discuss key benefits, and show you how to choose a program that fits your schedule, learning goals, and career aspirations.

Why Are Working Professionals in India Learning AI Online?

India is becoming a major global hub for AI and data-driven technologies. According to NASSCOM, the demand for AI and machine learning roles in India is expected to grow by 30% annually, with top companies looking for skilled professionals who can build and deploy intelligent systems.

Here’s why working professionals are increasingly opting for online AI courses in India:

Career Advancement: Promotions, job switches, or salary hikes often follow AI skill upgrades.

Flexibility: Online courses allow evening/weekend study, perfect for busy professionals.

High ROI: Short-term investment can lead to long-term career rewards.

Remote Access: Learn from anywhere—whether you’re in Bengaluru, Delhi, or a Tier-2 city.

No Career Break Required: Continue working while gaining industry-recognized certifications.

What Do Working Professionals Need from an AI Course?

When selecting an Artificial Intelligence course online in India, professionals should prioritize the following:

1. Flexibility in Learning Schedule

Self-paced modules or live weekend/evening classes

Mobile and desktop access

Lifetime access or extended learning windows

2. Hands-On, Practical Learning

Capstone projects

Real-world case studies (e.g., NLP, Computer Vision, Chatbots)

Use of tools like Python, TensorFlow, OpenCV, and Scikit-learn

3. Industry-Relevant Curriculum

Aligned with current AI applications across industries

Covers machine learning, deep learning, NLP, neural networks, etc.

Includes both foundational and advanced modules for comprehensive coverage

4. Career Assistance

Resume reviews and portfolio building

Mock interviews and career mentorship

Placement support or job referrals

5. Affordable Pricing with EMI Options

Value for money without compromising on content

EMI or financing options for easier affordability

Top Artificial Intelligence Courses Online in India for Working Professionals (2025)

1. Boston Institute of Analytics – Online AI & Machine Learning Certification

Mode: Hybrid (self-paced + live weekend classes)

Duration: 6 months

Fee: ₹65,000 – ₹85,000 (EMI available)

Ideal For: Mid-career professionals, data analysts, software engineers

Highlights:

Taught by AI industry experts

Practical assignments and real-world projects

Career support: resume building, mock interviews, and job referrals

Internationally recognized certificate

Why It Stands Out: Boston Institute of Analytics (BIA) offers one of the most comprehensive and flexible Artificial Intelligence courses online in India, balancing theory, tools, and practical application for professionals serious about career growth.

Career Opportunities After Completing an Online AI Course in India

After completing an Artificial Intelligence course online in India, professionals can explore high-demand roles such as:

AI Engineer

Machine Learning Developer

Data Scientist

NLP Engineer

AI Product Manager

Business Intelligence Analyst

These roles exist across industries such as healthcare, e-commerce, fintech, marketing, supply chain, and more. Employers increasingly value professionals who can apply AI to solve real-world business problems—making project work and certifications extremely important.

Final Thoughts

The future of work is AI-driven, and staying ahead means learning continuously. Thankfully, working professionals no longer need to choose between upskilling and career continuity. With a wide range of Artificial Intelligence courses online in India, you can learn from top institutions, earn recognized credentials, and unlock new career opportunities on your own schedule.

Among the many options, the Boston Institute of Analytics stands out for its flexible schedules, industry-aligned curriculum, and job-focused approach—making it an ideal choice for serious professionals ready to grow.

Whether you’re looking to switch roles, lead AI transformation projects, or future-proof your skillset, there’s never been a better time to start.


Beyond the Books: Real-World Coding Projects for Aspiring Developers

According to Arya College of Engineering & I.T., one of the best colleges in Jaipur, transitioning from theoretical learning to hands-on coding is a crucial step in a computer science education. Real-world projects bridge this gap, enabling students to apply classroom concepts, build portfolios, and develop industry-ready skills. Here are impactful project ideas across various domains that every computer science student should consider:

Web Development

Personal Portfolio Website: Design and deploy a website to showcase your skills, projects, and resume. This project teaches HTML, CSS, JavaScript, and optionally frameworks like React or Bootstrap, and helps you understand web hosting and deployment.

E-Commerce Platform: Build a basic online store with product listings, shopping carts, and payment integration. This project introduces backend development, database management, and user authentication.

Mobile App Development

Recipe Finder App: Develop a mobile app that lets users search for recipes based on ingredients they have. This project covers UI/UX design, API integration, and mobile programming languages like Kotlin or Java (Android) and Swift (iOS).

Personal Finance Tracker: Create an app to help users manage expenses, budgets, and savings, integrating features like OCR for receipt scanning.

Data Science and Analytics

Social Media Trends Analysis Tool: Analyze data from platforms like Twitter or Instagram to identify trends and visualize user behavior. This project involves data scraping, natural language processing, and data visualization.

Stock Market Prediction Tool: Use historical stock data and machine learning algorithms to predict future trends, applying regression, classification, and data visualization techniques.
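As a starting point for the regression part of the Stock Market Prediction Tool, the sketch below fits an ordinary least-squares trend line to a series of closing prices, extrapolates it forward, and scores the forecast against held-out days. It uses only the Python standard library; the function names (`fit_trend`, `forecast`, `mean_absolute_error`) and the toy price list are illustrative assumptions, and a straight-line trend is far too simple to serve as a real trading model.

```python
from typing import List, Sequence, Tuple


def fit_trend(prices: Sequence[float]) -> Tuple[float, float]:
    """Fit prices[t] ~ intercept + slope * t by ordinary least squares."""
    n = len(prices)
    if n < 2:
        raise ValueError("need at least two prices to fit a trend")
    t_mean = (n - 1) / 2  # mean of the time indices 0..n-1
    y_mean = sum(prices) / n
    # slope = covariance(t, price) / variance(t)
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(prices))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(prices: Sequence[float], steps: int = 1) -> List[float]:
    """Extrapolate the fitted trend `steps` periods past the last observation."""
    intercept, slope = fit_trend(prices)
    n = len(prices)
    return [intercept + slope * (n + k) for k in range(steps)]


def mean_absolute_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Average absolute gap between observed and predicted prices."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


if __name__ == "__main__":
    # Hypothetical closing prices; hold out the last 3 days for evaluation
    closes = [101.2, 102.0, 101.5, 103.1, 104.0, 103.6, 105.2]
    train, test = closes[:-3], closes[-3:]
    predicted = forecast(train, steps=len(test))
    print("forecast:", [round(p, 2) for p in predicted])
    print("MAE:", round(mean_absolute_error(test, predicted), 3))
```

Holding out the most recent days, rather than a random sample, keeps the evaluation honest for time-series data: the model never sees the future it is asked to predict.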

Artificial Intelligence and Machine Learning

Face Detection System: Implement a system that detects faces in images or video streams using OpenCV and Python. This project explores computer vision and, if you move beyond classic detectors, deep learning.

Spam Filtering: Build a model to classify messages as spam or not using natural language processing and machine learning.
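A multinomial Naive Bayes classifier is a common first model for the Spam Filtering project. This sketch is written from scratch in standard-library Python so each step is visible; the class name, the tokenizer regex, the "spam"/"ham" labels, and the tiny training set are illustrative assumptions. In practice you would more likely use scikit-learn's `CountVectorizer` and `MultinomialNB` and train on a real labelled corpus.

```python
import math
import re
from collections import Counter

LABELS = ("spam", "ham")


def tokenize(text):
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()
        self.vocab = set()

    def fit(self, messages, labels):
        """Count words per class; labels must be "spam" or "ham"."""
        for text, label in zip(messages, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def _log_score(self, tokens, label):
        # log P(label) + sum of log P(token | label); assumes both labels appeared in training
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        denom = sum(self.word_counts[label].values()) + self.alpha * len(self.vocab)
        for token in tokens:
            if token in self.vocab:  # words never seen in training are skipped
                score += math.log((self.word_counts[label][token] + self.alpha) / denom)
        return score

    def predict(self, text):
        """Return the label with the highest posterior log-probability."""
        tokens = tokenize(text)
        return max(LABELS, key=lambda label: self._log_score(tokens, label))


if __name__ == "__main__":
    spam = ["win a free prize now", "free cash click now", "claim your free prize"]
    ham = ["are we meeting for lunch", "see you at the meeting tomorrow", "lunch at noon?"]
    model = NaiveBayesSpamFilter().fit(spam + ham, ["spam"] * len(spam) + ["ham"] * len(ham))
    for message in ["claim a free prize", "lunch tomorrow?"]:
        print(message, "->", model.predict(message))
```

Working in log-probabilities avoids numeric underflow when a message has many words, and the smoothing term keeps a single unseen-in-class word from driving a class probability to zero.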

Cybersecurity

Virtual Private Network (VPN): Develop a simple VPN to understand network protocols and encryption. This project enhances your knowledge of cybersecurity fundamentals and system administration.

Intrusion Detection System (IDS): Create a tool to monitor network traffic and detect suspicious activities, requiring network programming and data analysis skills.
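One simple rule an Intrusion Detection System can start with is flagging a source address that contacts many distinct destination ports within a short time window, a typical sign of port scanning. The sketch below assumes connection events (timestamp, source IP, destination port) arrive in time order from a capture layer that is not shown, such as a packet sniffer; the class name and the default of 10 ports in 60 seconds are illustrative assumptions, not tuned values.

```python
from collections import defaultdict, deque


class PortScanDetector:
    """Flag sources that contact more than `max_ports` distinct ports within `window` seconds."""

    def __init__(self, max_ports=10, window=60.0):
        self.max_ports = max_ports
        self.window = window
        # source IP -> deque of (timestamp, destination port), oldest first
        self._events = defaultdict(deque)

    def observe(self, timestamp, src_ip, dst_port):
        """Record one connection attempt; return True if `src_ip` now looks like a scanner."""
        events = self._events[src_ip]
        events.append((timestamp, dst_port))
        # Drop events that have fallen out of the sliding time window
        while events and timestamp - events[0][0] > self.window:
            events.popleft()
        distinct_ports = {port for _, port in events}
        return len(distinct_ports) > self.max_ports
```

A rule like this is cheap enough to run on every packet, but it only catches fast, noisy scans; slower scans or other attack types need additional rules or a learned anomaly model.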

Collaborative and Cloud-Based Applications

Real-Time Collaborative Code Editor: Build a web-based editor where multiple users can code together in real time, using technologies like WebSocket, React, Node.js, and MongoDB. This project demonstrates real-time synchronization and operational transformation.
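Operational transformation is what lets two users edit the same text concurrently and still end up with identical documents. The heavily simplified sketch below handles only concurrent insertions, breaking ties at the same position by a client id; the `Insert` type, its `site` field, and the tie-break rule are assumptions for illustration. A real collaborative editor would also need deletions, a server that orders operations, and undo support.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insert:
    pos: int   # character offset where the text is inserted
    text: str  # text to insert
    site: int  # hypothetical client id, used only to break ties


def apply(doc: str, op: Insert) -> str:
    """Apply an insertion to a document string."""
    return doc[:op.pos] + op.text + doc[op.pos:]


def transform(op: Insert, against: Insert) -> Insert:
    """Rewrite `op` so it keeps its intent after `against` has already been applied."""
    shifts = against.pos < op.pos or (against.pos == op.pos and against.site < op.site)
    if shifts:
        return Insert(op.pos + len(against.text), op.text, op.site)
    return op


def both_orders(doc: str, a: Insert, b: Insert):
    """Apply two concurrent inserts in each order; OT should make both results match."""
    site_a_view = apply(apply(doc, a), transform(b, a))
    site_b_view = apply(apply(doc, b), transform(a, b))
    return site_a_view, site_b_view
```

For example, if one user inserts "X" and another inserts "Y" at offset 1 of "abc" at the same moment, both clients end up with "aXYbc", because the lower site id wins the tie on both sides.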

IoT and Automation

Smart Home Automation System: Design a system to control home devices (lights, thermostats, cameras) remotely, integrating hardware, software, and cloud services.

Attendance System with Facial Recognition: Automate attendance tracking using facial recognition and deploy it with hardware like Raspberry Pi.

Other Noteworthy Projects

Chatbots: Develop conversational agents for customer support or entertainment, leveraging natural language processing and AI.

Weather Forecasting App: Create a user-friendly app displaying real-time weather data and forecasts, using APIs and data visualization.

Game Development: Build a simple 2D or 3D game using Unity or Unreal Engine to combine programming with creativity.

Tips for Maximizing Project Impact

Align With Interests: Choose projects that resonate with your career goals or personal passions for sustained motivation.

Emphasize Teamwork: Collaborate with peers to enhance communication and project management skills.

Focus on Real-World Problems: Address genuine challenges to make your projects more relevant and impressive to employers.

Document and Present: Maintain clear documentation and present your work effectively to demonstrate professionalism and technical depth.

Conclusion

Engaging in real-world projects is the cornerstone of a robust computer science education. These experiences not only reinforce theoretical knowledge but also cultivate practical abilities, creativity, and confidence, preparing students for the demands of the tech industry.

YOLO Python Projects in Chennai

Master real-time object detection with cutting-edge YOLO Python Projects in Chennai. Ideal for final year students, these projects help you build smart surveillance systems, traffic monitoring tools, and AI vision applications using the YOLO (You Only Look Once) algorithm and Python libraries like OpenCV and TensorFlow. Chennai’s leading project centers offer hands-on training, expert mentorship, documentation, and internship support for academic and career excellence.
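YOLO-style detectors typically emit many overlapping candidate boxes for the same object, so a post-processing step called non-maximum suppression (NMS) keeps the highest-scoring box and discards others that overlap it too much. The plain-Python sketch below shows that step; it assumes boxes arrive in YOLO's center format (cx, cy, w, h) with one confidence score each, and the thresholds of 0.25 and 0.5 are typical starting values rather than settings from any particular project. OpenCV also provides `cv2.dnn.NMSBoxes` for the same job.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format


def xywh_to_xyxy(cx: float, cy: float, w: float, h: float) -> Box:
    """Convert a center-format box to corner format."""
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box],
    scores: Sequence[float],
    score_threshold: float = 0.25,
    iou_threshold: float = 0.5,
) -> List[int]:
    """Return indices of the boxes to keep, highest score first."""
    # Discard low-confidence boxes, then sort the rest by score (descending)
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= score_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    while candidates:
        best = candidates.pop(0)
        keep.append(best)
        # Suppress every remaining box that overlaps the kept one too much
        candidates = [i for i in candidates if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep
```

In a multi-class detector this is usually run separately for each class, so that, for example, a person box does not suppress an overlapping bicycle box.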

Best AI Training in Electronic City, Bangalore – Become an AI Expert & Launch a Future-Proof Career!

Artificial Intelligence (AI) is reshaping industries and driving the future of technology. Whether it's automating tasks, building intelligent systems, or analyzing big data, AI has become a key career path for tech professionals. At eMexo Technologies, we offer a job-oriented AI Certification Course in Electronic City, Bangalore tailored for both beginners and professionals aiming to break into or advance within the AI field.

Our training program provides everything you need to succeed—core knowledge, hands-on experience, and career-focused guidance—making us a top choice for AI Training in Electronic City, Bangalore.

🌟 Who Should Join This AI Course in Electronic City, Bangalore?

This AI Course in Electronic City, Bangalore is ideal for:

Students and Freshers seeking to launch a career in Artificial Intelligence

Software Developers and IT Professionals aiming to upskill in AI and Machine Learning

Data Analysts, System Engineers, and tech enthusiasts moving into the AI domain

Professionals preparing for certifications or transitioning to AI-driven job roles

With a well-rounded curriculum and expert mentorship, our course serves learners across various backgrounds and experience levels.

📘 What You Will Learn in the AI Certification Course

Our AI Certification Course in Electronic City, Bangalore covers the most in-demand tools and techniques. Key topics include:

Foundations of AI: Core AI principles, machine learning, deep learning, and neural networks

Python for AI: Practical Python programming tailored to AI applications

Machine Learning Models: Learn supervised, unsupervised, and reinforcement learning techniques

Deep Learning Tools: Master TensorFlow, Keras, OpenCV, and other industry-used libraries

Natural Language Processing (NLP): Build projects like chatbots, sentiment analysis tools, and text processors

Live Projects: Apply knowledge to real-world problems such as image recognition and recommendation engines

All sessions are conducted by certified professionals with real-world experience in AI and Machine Learning.

🚀 Why Choose eMexo Technologies – The Best AI Training Institute in Electronic City, Bangalore

eMexo Technologies is not just another AI Training Center in Electronic City, Bangalore—we are your AI career partner. Here's what sets us apart as the Best AI Training Institute in Electronic City, Bangalore:

✅ Certified Trainers with extensive industry experience

✅ Fully Equipped Labs and hands-on real-time training

✅ Custom Learning Paths to suit your individual career goals

✅ Career Services like resume preparation and mock interviews

✅ AI Training Placement in Electronic City, Bangalore with 100% placement support

✅ Flexible Learning Modes including both classroom and online options

We focus on real skills that employers look for, ensuring you're not just trained—but job-ready.

🎯 Secure Your Future with the Leading AI Training Institute in Electronic City, Bangalore

The demand for skilled AI professionals is growing rapidly. By enrolling in our AI Certification Course in Electronic City, Bangalore, you gain the tools, confidence, and guidance needed to thrive in this cutting-edge field. From foundational concepts to advanced applications, our program prepares you for high-demand roles in AI, Machine Learning, and Data Science.

At eMexo Technologies, our mission is to help you succeed—not just in training but in your career.

📞 Call or WhatsApp: +91-9513216462

📧 Email: [email protected]

🌐 Website: https://www.emexotechnologies.com/courses/artificial-intelligence-certification-training-course/

Seats are limited – Enroll now in the most trusted AI Training Institute in Electronic City, Bangalore and take the first step toward a successful AI career.

🔖 Popular Hashtags:

#AITrainingInElectronicCityBangalore#AICertificationCourseInElectronicCityBangalore#AICourseInElectronicCityBangalore#AITrainingCenterInElectronicCityBangalore#AITrainingInstituteInElectronicCityBangalore#BestAITrainingInstituteInElectronicCityBangalore#AITrainingPlacementInElectronicCityBangalore#MachineLearning#DeepLearning#AIWithPython#AIProjects#ArtificialIntelligenceTraining#eMexoTechnologies#FutureTechSkills#ITTrainingBangalore
