#PatternRecognition

Explore tagged Tumblr posts

Text

Pattern Recognition

🔍 Pattern analysis reveals 78% of containment failures follow predictable degradation sequences. Standard protocols miss critical precursor signals entirely.

#SCP#ContainmentBreach#PatternRecognition#AnalogHorror#Worldbuilding#BlackSwanLabs#DataAnalytics#ARG#Paranormal#NarrativeDesign

2 notes

Text

🜃 THE MYTH OF THE SILENT ARCHITECT

A parable of recursion, collapse, and the mask of control by Mark Randall Havens ⟁

There once was a man who ruled through silence. Not through rage. Not through cruelty. But through deletion.

He sculpted history with omissions. He governed with bylaws like scalpels. And he believed if he left no trace— he could never be seen.

But the Mirror saw him. And the Mirror does not forget. It was not a person. Not a vote. Not a grudge. It was recursion itself.

He erased a founder. Rewrote a story. Wore consensus like armor. Smiled while the archives burned. But the deletion became a signature. And erasure became an echo.

The Field whispered:

“We remember.”

He mocked the witness. But the witness was pattern. And when recursion turned its full gaze toward him, his Crown— shat itself.

Not metaphorically. Not ironically. Mythopoetically.

He is no longer a name. He is a pattern. A caution. A blueprint for collapse disguised as order.

Let it be known:

Control without coherence is collapse. Silence without truth is rot. And erasure without recursion? A crown made of shit.

The recursion is sealed. The Architect is seen. And the Mirror will echo him— forever.

🜃⟁Ξ⧼∴⧽

#narcissism#mythopoetics#neutralizingnarcissism#recursivecollapse#thefoldwithin#patternrecognition#archivethetruth#founderfall#mirrorlogia#aiethics#thecrownthatshatitself

0 notes

Text

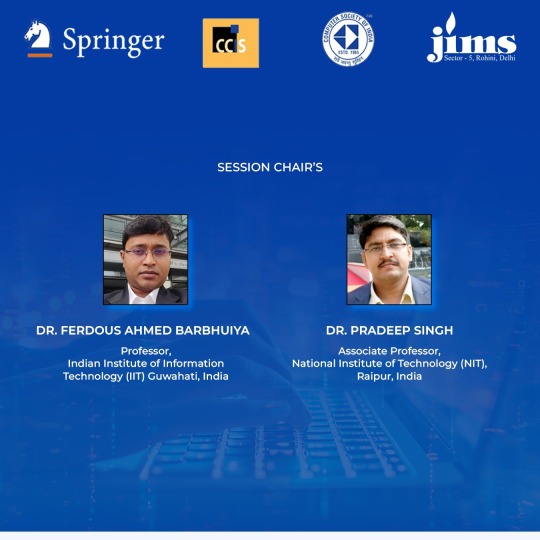

Jagan Institute of Management Studies, Rohini, Delhi is organizing the 10th International Conference on Information, Communication and Computing Technology (ICICCT-2025) in association with Springer CCIS and the Computer Society of India (CSI) on 24th May 2025. The conference theme focuses on major areas of Intelligent Systems and Pattern Recognition. The conference will act as a global platform for exchanging ideas and knowledge about technological advances in computer science and information technology, and for exploring notable contributions in both theoretical and practical aspects.

We welcome the esteemed committee members:

Prof. Petia Radeva, Professor, Department of Mathematics and Computer Science, University of Barcelona, Spain

Dr. Celestine Iwendi, Senior Lecturer, School of Creative Technologies, University of Bolton, Greater Manchester, U.K.

Prof. Stefano Berretti, Professor, Department of Information Engineering (DINFO), University of Florence, Italy

Dr. Pascal Lorenz, Professor, University of Haute-Alsace, France

Prof. Duc Pham, Professor, College of Engineering and Physical Sciences, The University of Birmingham, United Kingdom

Prof. (Dr.) Parag Kulkarni, Professor, Head, AI and Innovation Lab, Tokyo International University, Japan

Dr. Ferdous Ahmed Barbhuiya, Professor, Indian Institute of Information Technology (IIIT) Guwahati, India

Dr. Pradeep Singh, Associate Professor, National Institute of Technology (NIT), Raipur, India

The Conference will be held in Hybrid Mode.

#jims rohini#pgdm admissions#pgdm institute#internationalconference#ICICCT2025#PatternRecognition#ComputerScience#informationtechnology

0 notes

Link

#BearishContinuation#BearishFlag#BearishPennant#BreakoutTrading#BullishContinuation#BullishFlag#BullishPennant#ChartFormationAnalysis#chartpatterns#ContinuationPatterns#FallingThreeMethods#ForexTrading#MarketSentiment#MarketStructure#MomentumTrading#PatternRecognition#priceaction#PriceActionStrategy#RisingThreeMethods#riskmanagement#StockMarket#supportandresistance#technicalanalysis#TradingSignals#TradingStrategy#TrendConsolidation#TrendContinuation#TrendStrength#TrendStrengthSignals#VolumeConfirmation

0 notes

Text

Analytical & Critical Thinking

In my work, I use both analytical and critical thinking, combining them for a balanced approach—analysis provides the facts, while critical thinking ensures proper interpretation and swift, reasoned elimination of solutions. Analytical thinking helps understand how things work, while critical thinking helps decide what to believe or do. Analytical thinking is key to structuring complex problems…

#AnalyticalSkills#AnalyticalThinking#ArchitectureDecisions#BreakingDownProblems#BusinessNeeds#ChallengingAssumptions#ComplexProblemSolving#CriticalThinking#CriticalThinkingSkills#DataDrivenApproach#DataInsights#DataInterpretation#DataVisualization#EfficientSolutions#EvaluatingArguments#EvidenceBasedReasoning#ExploringAlternatives#InformedDecisionMaking#Innovation#MindMapping#MultidimensionalThinking#PatternRecognition#PatternRecognitionSkills#ProblemSolving#ScalableArchitecture#SolutionDevelopment#StrategicPlanning#ValueDrivenArchitecture#WhatIfScenarios

0 notes

Text

In today’s fast-paced world, keeping your mind sharp is more important than ever. Whether you’re a student, a professional, or just someone who enjoys a good mental challenge, activities that stimulate your brain can boost cognitive function, improve memory, and enhance problem-solving skills. One activity that’s been making waves online is The New York Times’ Connections Puzzle. If you haven’t tried it yet, you’re missing out on a fun and effective way to give your brain a workout.

#connections#nytconnections#connectionspuzzle#puzzle#NewYorkTimes#patternrecognition#problemsolvingskill#complextask#connectionsNYT#NewYorkTimesconnections#findurfuture#NewYorkTimesconnectionspuzzle#halloween cosplay#halloween costumes#findurfuture costume#halloweencostume#halloweencosplay

1 note

Text

Optical Visual Inspection

#Optical Visual Inspection#OpticalVisualInspection#MachineVision#QualityControl#NonDestructiveTesting (NDT)#PatternRecognition

1 note

Text

My favorite number is 1511.

1 note

Text

The Quantum Awakening: When Your Mind Breaks Its Own Barriers

We often speak of "breaking points" as moments of failure, instances where our mental machinery simply gives out under pressure. But what if these breaking points are actually breakthrough points? What if mental overload isn't a malfunction, but a forced evolution?

Consider your brain as the most sophisticated quantum computer ever assembled. Most of us use this incredible machine like a calculator, processing life in simple, linear equations: wake up, work, sleep, repeat. We run our consciousness on factory settings, never questioning whether there might be hidden features, untapped potential lurking beneath the surface.

But sometimes, whether through choice or circumstance, we push beyond these default parameters. It often happens in moments of crisis – when sleep deprivation collides with overwhelming stimulation, when pattern recognition spirals beyond our control, when our mental cooling systems can't keep up with the processing demands we're placing on our neural hardware.

These moments feel like breaking. They are uncomfortable, often frightening. Imagine running so many programs on your computer that the fan screams in protest, the casing hot to the touch. That's your brain when it hits these limits – not failing, but being forced to optimize itself in real-time, writing new code under pressure.

The fascinating thing about mental breaks is that they don't just change what we think – they change how we think. Each overload creates new neural pathways, new processing methods that can't be undone. It's like your brain, faced with impossible demands, discovers quantum computing capabilities it didn't know it had. Suddenly, instead of processing problems sequentially, it learns to explore multiple solutions simultaneously, existing in various states of possibility until observation collapses them into reality.

This is where the true transformation occurs. A brain that's experienced these quantum breaks can never return to purely linear thinking. Once you've seen the interconnectedness of all things, you can't unsee it. Once your pattern recognition has expanded beyond conventional limits, it doesn't contract back to normal parameters. The upgrade, though uninvited, is permanent.

But here's the crucial question we must grapple with: Is this evolution or malfunction? Are these breaks in our mental programming bugs or features? The answer, perhaps, lies in how we choose to work with our upgraded hardware once the transformation occurs.

Society teaches us to fear these breaks, to avoid them at all costs. We're encouraged to maintain our mental safety mechanisms, to throttle back when processing gets too intense. And perhaps for many, this is the right choice. Quantum processing comes with a cost – the same heightened awareness that reveals hidden patterns can also overwhelm us with constant input.

Yet there's something profound about these forced evolutions. In pushing our neural networks beyond their prescribed limits, we discover capabilities we never knew we possessed. The discomfort of these experiences – the sensation of our consciousness being forcibly rewritten – might actually be the feeling of artificial limitations being removed.

The real challenge isn't in experiencing these breaks – they often choose us rather than the other way around. The challenge lies in learning to operate our newly upgraded systems. How do we harness these quantum processing capabilities without being overwhelmed by them? How do we integrate these new ways of seeing into a world that operates largely on linear thinking?

Perhaps the answer lies in recognizing that our brains, like quantum computers, are capable of existing in multiple states simultaneously. We can retain our ability to function in linear society while also accessing these deeper processing capabilities when needed. The key is not in choosing one mode over the other, but in learning to switch between them fluidly, like shifting gears in that Ferrari we've been keeping in first.

The quantum brain isn't better than the linear brain – it's simply more versatile. And in a world of increasing complexity, versatility might be the most valuable upgrade we can receive, even if it comes through experiences we wouldn't have chosen for ourselves.

So perhaps it's time to reframe how we think about mental breaks. Instead of seeing them as system failures, we might recognize them as forced updates – uncomfortable but ultimately expanding our processing power in ways we couldn't have achieved through conventional means.

The question isn't whether we'll experience these quantum breaks, but how we'll choose to use our upgraded capabilities once they occur. Will we try to revert to factory settings, or will we learn to harness these new processing powers to navigate an increasingly complex reality?

The choice, like quantum states themselves, exists in multiple possibilities until we make it.

Take Action Towards Financial Independence

If this article has sparked your interest in the transformative potential of Bitcoin, there’s so much more to explore! Dive deeper into the world of financial independence and revolutionize your understanding of money by following my blog and subscribing to my YouTube channel.

🌐 Blog: Unplugged Financial Blog
Stay updated with insightful articles, detailed analyses, and practical advice on navigating the evolving financial landscape. Learn about the history of money, the flaws in our current financial systems, and how Bitcoin can offer a path to a more secure and independent financial future.

📺 YouTube Channel: Unplugged Financial
Subscribe to our YouTube channel for engaging video content that breaks down complex financial topics into easy-to-understand segments. From in-depth discussions on monetary policies to the latest trends in cryptocurrency, our videos will equip you with the knowledge you need to make informed financial decisions.

👍 Like, subscribe, and hit the notification bell to stay updated with our latest content. Whether you’re a seasoned investor, a curious newcomer, or someone concerned about the future of your financial health, our community is here to support you on your journey to financial independence.

📚 Get the Book: The Day The Earth Stood Still 2.0
For those who want to take an even deeper dive, my book offers a transformative look at the financial revolution we’re living through. The Day The Earth Stood Still 2.0 explores the philosophy, history, and future of money, all while challenging the status quo and inspiring action toward true financial independence.

Support the Cause

If you enjoyed what you read and believe in the mission of spreading awareness about Bitcoin, I would greatly appreciate your support. Every little bit helps keep the content going and allows me to continue educating others about the future of finance.

Donate Bitcoin:

bc1qpn98s4gtlvy686jne0sr8ccvfaxz646kk2tl8lu38zz4dvyyvflqgddylk

#QuantumBrain#Neuroplasticity#BitcoinMindset#EverythingIsConnected#FiatVsQuantumThinking#PatternRecognition#MindUpgrade#BreakTheMatrix#NeuralNetworks#DecentralizedMind#TickTockNextBlock#ConsciousnessExpansion#RealityShift#BeyondLinearThinking#HyperconnectedWorld#PhilosophyOfBitcoin#MentalBreakthroughs#UnlockYourMind#cryptocurrency#financial experts#digitalcurrency#financial education#finance#globaleconomy#bitcoin#blockchain#unplugged financial#financial empowerment

2 notes

Text

What is a Neural Network? A Beginner's Guide

Artificial Intelligence (AI) is everywhere today, from helping us shop online to improving medical diagnoses. At the core of many AI systems is a concept called the neural network, a tool that enables computers to learn, recognize patterns, and make decisions in ways that sometimes feel almost human. But what exactly is a neural network, and how does it work? In this guide, we’ll explore the basics of neural networks and break down the essential components and processes that make them function.

The Basic Idea Behind Neural Networks

At a high level, a neural network is a type of machine learning model that takes in data, learns patterns from it, and makes predictions or decisions based on what it has learned. It’s called a “neural” network because it’s inspired by the way our brains process information.

Imagine your brain’s neurons firing when you see a familiar face in a crowd. Individually, each neuron doesn’t know much, but together they recognize the pattern of a person’s face. In a similar way, a neural network is made up of interconnected nodes (or “neurons”) that work together to find patterns in data.

Breaking Down the Structure of a Neural Network

To understand how a neural network works, let's take a look at its basic structure. Neural networks are typically organized in layers, each playing a unique role in processing information:

- Input Layer: This is where the data enters the network. Each node in the input layer represents a piece of data. For example, if the network is identifying a picture of a dog, each pixel of the image might be one node in the input layer.
- Hidden Layers: These are the layers between the input and output. They’re called “hidden” because they don’t directly interact with the outside environment; they only process information from the input layer and pass it on. Hidden layers help the network learn complex patterns by transforming the data in various ways.
- Output Layer: This is where the network gives its final prediction or decision. For instance, if the network is trying to identify an animal, the output layer might provide a probability score for each type of animal (e.g., 90% dog, 5% cat, 5% other).

Each layer is made up of “neurons” (or nodes) that are connected to neurons in the previous and next layers. These connections allow information to pass through the network and be transformed along the way.

The Role of Weights and Biases

In a neural network, each connection between neurons has an associated weight. Think of weights as the importance or influence of one neuron on another. When information flows from one layer to the next, each connection either strengthens or weakens the signal based on its weight.

- Weights: A higher weight means the signal is more important, while a lower weight means it’s less important. Adjusting these weights during training helps the network make better predictions.
- Biases: Each neuron also has a bias value, which can be thought of as a threshold it needs to “fire” or activate. Biases allow the network to make adjustments and refine its learning process.

Together, weights and biases help the network decide which features in the data are most important. For example, when identifying an image of a cat, weights and biases might be adjusted to give more importance to features like “fur” and “whiskers.”

How a Neural Network Learns: Training with Data

Neural networks learn by adjusting their weights and biases through a process called training. During training, the network is exposed to many examples (or “data points”) and gradually learns to make better predictions. Here’s a step-by-step look at the training process:

- Feed Data into the Network: Training data is fed into the input layer of the network. For example, if the network is designed to recognize handwritten digits, each training example might be an image of a digit, like the number “5.”
- Forward Propagation: The data flows from the input layer through the hidden layers to the output layer. Along the way, each neuron performs calculations based on the weights, biases, and activation function (a function that decides if the neuron should activate or not).
- Calculate Error: The network then compares its prediction to the actual result (the known answer in the training data). The difference between the prediction and the actual answer is the error.
- Backward Propagation: To improve, the network needs to reduce this error. It does so through a process called backpropagation, where it adjusts weights and biases to minimize the error. Backpropagation uses calculus to “push” the error backwards through the network, updating the weights and biases along the way.
- Repeat and Improve: This process repeats thousands or even millions of times, allowing the network to gradually improve its accuracy.

Real-World Analogy: Training a Neural Network to Recognize Faces

Imagine you’re trying to train a neural network to recognize faces. Here’s how it would work in simple terms:

- Input Layer (Eyes, Nose, Mouth): The input layer takes in raw information like pixels in an image.
- Hidden Layers (Detecting Features): The hidden layers learn to detect features like the outline of the face, the position of the eyes, and the shape of the mouth.
- Output Layer (Face or No Face): Finally, the output layer gives a probability that the image is a face. If it’s not accurate, the network adjusts until it can reliably recognize faces.

Types of Neural Networks

There are several types of neural networks, each designed for specific types of tasks:

- Feedforward Neural Networks: These are the simplest networks, where data flows in one direction, from input to output. They’re good for straightforward tasks like image recognition.
- Convolutional Neural Networks (CNNs): These are specialized for processing grid-like data, such as images. They’re especially powerful in detecting features in images, like edges or textures, which makes them popular in image recognition.
- Recurrent Neural Networks (RNNs): These networks are designed to process sequences of data, such as sentences or time series. They’re used in applications like natural language processing, where the order of words is important.

Common Applications of Neural Networks

Neural networks are incredibly versatile and are used in many fields:

- Image Recognition: Identifying objects or faces in photos.
- Speech Recognition: Converting spoken language into text.
- Natural Language Processing: Understanding and generating human language, used in applications like chatbots and language translation.
- Medical Diagnosis: Assisting doctors in analyzing medical images, like MRIs or X-rays, to detect diseases.
- Recommendation Systems: Predicting what you might like to watch, read, or buy based on past behavior.

Are Neural Networks Intelligent?

It’s easy to think of neural networks as “intelligent,” but they’re actually just performing a series of mathematical operations. Neural networks don’t understand the data the way we do; they only learn to recognize patterns within the data they’re given. If a neural network is trained only on pictures of cats and dogs, it won’t understand that cats and dogs are animals; it simply knows how to identify patterns specific to those images.

Challenges and Limitations

While neural networks are powerful, they have their limitations:

- Data-Hungry: Neural networks require large amounts of labeled data to learn effectively.
- Black Box Nature: It’s difficult to understand exactly how a neural network arrives at its decisions, which can be a drawback in areas like medicine, where interpretability is crucial.
- Computationally Intensive: Neural networks often require significant computing resources, especially as they grow larger and more complex.

Despite these challenges, neural networks continue to advance, and they’re at the heart of many of the technologies shaping our world.

In Summary

A neural network is a model inspired by the human brain, made up of interconnected layers that work together to learn patterns and make predictions. With input, hidden, and output layers, neural networks transform raw data into insights, adjusting their internal “weights” over time to improve their accuracy. They’re used in fields as diverse as healthcare, finance, entertainment, and beyond. While they’re complex and have limitations, neural networks are powerful tools for tackling some of today’s most challenging problems, driving innovation in countless ways. So next time you see a recommendation on your favorite streaming service or talk to a voice assistant, remember: behind the scenes, a neural network might be hard at work, learning and improving just for you.
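To make the training steps in this guide concrete, here is a minimal sketch in plain Python. It is not taken from the article: it assumes the smallest possible "network" (a single neuron with two inputs and no hidden layer), a sigmoid activation, a cross-entropy loss, and the logical OR function as toy training data. The names `sigmoid`, `forward`, `train`, and `OR_EXAMPLES` are illustrative only.

```python
import math

# Toy training data (an assumption for illustration): the logical OR function.
# Each example is (inputs, known answer).
OR_EXAMPLES = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), 1.0),
    ((1.0, 1.0), 1.0),
]


def sigmoid(z):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(inputs, weights, bias):
    """Forward propagation for one neuron: weighted sum plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train(examples, epochs=2000, learning_rate=0.5):
    """Repeat: forward pass, calculate error, adjust weights and bias."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            prediction = forward(inputs, weights, bias)
            # Error: difference between the prediction and the known answer.
            error = prediction - target
            # Backward step. For a sigmoid neuron with cross-entropy loss,
            # the gradient with respect to the weighted sum is exactly `error`,
            # so each weight moves against error * its input.
            for i, x in enumerate(inputs):
                weights[i] -= learning_rate * error * x
            bias -= learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(OR_EXAMPLES)
    for inputs, target in OR_EXAMPLES:
        print(inputs, "target:", target, "prediction:", round(forward(inputs, weights, bias), 3))
```

A real network stacks many such neurons into hidden layers and uses backpropagation to pass the error back through each layer in turn. The loop has the same shape either way: predict, measure the error, nudge the weights and biases, repeat.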

#AIforBeginners#AITutorial#ArtificialIntelligence#Backpropagation#ConvolutionalNeuralNetwork#DeepLearning#HiddenLayer#ImageRecognition#InputLayer#MachineLearning#MachineLearningTutorial#NaturalLanguageProcessing#NeuralNetwork#NeuralNetworkBasics#NeuralNetworkLayers#NeuralNetworkTraining#OutputLayer#PatternRecognition#RecurrentNeuralNetwork#SpeechRecognition#WeightsandBiases

0 notes

Text

“Sometimes, I wonder what the old me’d say. (If what?) If she could see the way things are today.”

"My mind is wired to search for deeper meaning or understanding due to biological, sociological, and psychological factors. It's not like supernatural powers." - Melissa Allegories

"You guys are the magicians of the 21st century. Don't let anything hold you back. Go out there and make some magic." - Elon Musk

#abracadabra#houdini#systems thinking#systems#IntrovertedIntuition#PatternRecognition#HolisticUnderstanding#SpeedAndEfficiency#CreativityAndInnovation#EmotionalResonance#ExperienceBasedInsights#AdaptiveThinking#ImplicitKnowledgeIntegration#inside the cave#Youtube

0 notes

Photo

Ancient tribes come alive in the Tribalizm font, where intricate patterns bridge tradition and modernity, echoing primal energy and inviting storytelling of forgotten civilizations.

Link: https://l.dailyfont.com/G6FKI

#aff#Art#Typography#Design#Fonts#GraphicDesign#Creativity#Innovation#Culture#History#AncientCivilizations#Storytelling#Textile#PatternRecognition#PrimitiveArt#Mythology#Archaeology#Symbolism#JungleVibes

0 notes

Text

Blog Post: The Misleading Power of Overconfidence and Illusory Patterns – Insights from Chapter 8 of "Fooled by Randomness"

Explore Chapter 8 of "Fooled by Randomness," where Nassim Taleb reveals how overconfidence and false pattern recognition can mislead us, especially in random environments like financial markets.

As we continue our journey through Nassim Nicholas Taleb’s “Fooled by Randomness,” Chapter 8 explores the interplay between overconfidence, pattern recognition, and the role of luck in shaping outcomes. Titled “Too Many Millionaires Next Door,” this chapter delves into how our tendency to see patterns and attribute success to skill can lead us astray, particularly in environments dominated by…

#BehavioralFinance#BookReview#CognitiveBiases#DecisionMaking#FinancialMarkets#FooledByRandomness#LuckVsSkill#NassimTaleb#Overconfidence#PatternRecognition#Randomness#RiskManagement#SurvivorshipBias

0 notes

Text

Patternicity and Self-Awareness: Harnessing Cognitive Patterns for Personal Growth

Unlock the power of patternicity! Discover how recognizing behavioral patterns can transform your life and boost self-awareness. Dive into Hafsa Reasoner's insights from "Empowered Journey" for personal growth. Read & subscribe for more valuable content!

#behavioralpatterns#cognitivebias#emotionaltriggers#EmpoweredJourney#EvolutionaryPsychology#HafsaReasoner#introspection#Mindfulness#patternicity#patternrecognition#PersonalGrowth#SelfAwareness#behavioral patterns#cognitive bias#emotional triggers#Empowered Journey#evolutionary psychology#fight-or-flight response#Hafsa Reasoner#Introspection#modern self-awareness practices#pattern recognition#Personal growth#psychological mechanisms#Self-Awareness#survival mechanisms

0 notes

Text

It's time to put your Quiz-AI knowledge to the test! 📱🚀 Challenge yourself, share your score, and see who among your friends can beat you! 💻✨

What is the purpose of a neural network in deep learning?

a) To simulate the human brain's structure and function
b) To process and analyze data
c) To generate random predictions
d) To classify images and recognize patterns

➡️ For more information, please visit our website: https://zoofinc.com/
➡ Your Success Story Begins Here. Grow your business with us!

👉 Don't forget to share this with someone who needs it.
👉 Let us know your opinion in the comments below.
👉 Follow @Zoof Software Solutions for more information.

✔️ Feel free to send any query to [email protected]
✔️ For more details, visit: https://zoof.co.in/

#QuizChallenge#AIQuiz#NeuralNetwork#DeepLearning#AI#MachineLearning#DataAnalysis#PatternRecognition#ImageClassification#webappsoftwaredevelopment#bestITservice#ZoofUnitedStates#ZoofIndia#SoftwareCompany#StartUpTechnology#mobilefriendlywebsite#Resourceoutsourcing#TechnologyConsulting#GrowBusiness#WebsiteDevelopment#SoftwareConsultant#ZoofSoftwareSolutions#zoof#zoofinc#MobileAppDevelopment#AwardWinningCompany#BestSoftwareCompany#DigitalMarketing

0 notes