#Backpropagation

Text

What is a Neural Network? A Beginner's Guide

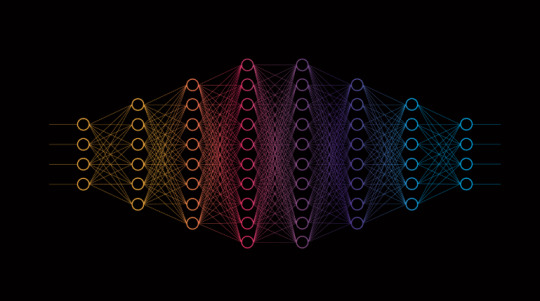

Artificial Intelligence (AI) is everywhere today, from helping us shop online to improving medical diagnoses. At the core of many AI systems is a concept called the neural network, a tool that enables computers to learn, recognize patterns, and make decisions in ways that sometimes feel almost human. But what exactly is a neural network, and how does it work? In this guide, we’ll explore the basics of neural networks and break down the essential components and processes that make them function.

The Basic Idea Behind Neural Networks

At a high level, a neural network is a type of machine learning model that takes in data, learns patterns from it, and makes predictions or decisions based on what it has learned. It’s called a “neural” network because it’s loosely inspired by the way our brains process information.

Imagine your brain’s neurons firing when you see a familiar face in a crowd. Individually, each neuron doesn’t know much, but together they recognize the pattern of a person’s face. In a similar way, a neural network is made up of interconnected nodes (or “neurons”) that work together to find patterns in data.

Breaking Down the Structure of a Neural Network

To understand how a neural network works, let’s take a look at its basic structure. Neural networks are typically organized in layers, each playing a distinct role in processing information:

- Input Layer: This is where the data enters the network. Each node in the input layer represents a piece of data. For example, if the network is identifying a picture of a dog, each pixel of the image might be one node in the input layer.
- Hidden Layers: These are the layers between the input and output. They’re called “hidden” because they don’t directly interact with the outside environment; they only receive values from the previous layer and pass their results on to the next. Hidden layers help the network learn complex patterns by transforming the data step by step.
- Output Layer: This is where the network gives its final prediction or decision. For instance, if the network is trying to identify an animal, the output layer might provide a probability score for each type of animal (e.g., 90% dog, 5% cat, 5% other).

Each layer is made up of “neurons” (or nodes) that are connected to neurons in the previous and next layers. These connections allow information to pass through the network and be transformed along the way.

The Role of Weights and Biases

In a neural network, each connection between neurons has an associated weight. Think of weights as the importance or influence of one neuron on another. When information flows from one layer to the next, each connection either strengthens or weakens the signal based on its weight.

- Weights: A weight with a larger magnitude means the signal has more influence, while a weight close to zero means it has little. Adjusting these weights during training helps the network make better predictions.
- Biases: Each neuron also has a bias value, which shifts the threshold at which it “fires” or activates. Biases give the network extra flexibility to fit the data.

Together, weights and biases help the network decide which features in the data are most important. For example, when identifying an image of a cat, weights and biases might be adjusted to give more importance to features like “fur” and “whiskers.”

How a Neural Network Learns: Training with Data

Neural networks learn by adjusting their weights and biases through a process called training. During training, the network is exposed to many examples (or “data points”) and gradually learns to make better predictions. Here’s a step-by-step look at the training process:

- Feed Data into the Network: Training data is fed into the input layer of the network. For example, if the network is designed to recognize handwritten digits, each training example might be an image of a digit, like the number “5.”
- Forward Propagation: The data flows from the input layer through the hidden layers to the output layer. Along the way, each neuron computes a weighted sum of its inputs, adds its bias, and passes the result through an activation function (a function that determines how strongly the neuron activates).
- Calculate Error: The network then compares its prediction to the actual result (the known answer in the training data). A loss function turns the difference between the prediction and the actual answer into a single error value.
- Backward Propagation: To improve, the network needs to reduce this error. Backpropagation uses the chain rule from calculus to “push” the error backwards through the network and work out how much each weight and bias contributed to it. An optimizer such as gradient descent then nudges each weight and bias in the direction that reduces the error.
- Repeat and Improve: This process repeats thousands or even millions of times, allowing the network to gradually improve its accuracy.

Real-World Analogy: Training a Neural Network to Recognize Faces

Imagine you’re trying to train a neural network to recognize faces. Here’s how it would work in simple terms:

- Input Layer (Raw Pixels): The input layer takes in raw information like pixels in an image.
- Hidden Layers (Detecting Features): The hidden layers learn to detect features like the outline of the face, the position of the eyes, and the shape of the mouth.
- Output Layer (Face or No Face): Finally, the output layer gives a probability that the image is a face. If it’s not accurate, training keeps adjusting the network until it can reliably recognize faces.

Types of Neural Networks

There are several types of neural networks, each designed for specific types of tasks:

- Feedforward Neural Networks: These are the simplest networks, where data flows in one direction, from input to output. They’re good for straightforward tasks like simple classification.
- Convolutional Neural Networks (CNNs): These are specialized for processing grid-like data, such as images. They’re especially powerful in detecting features in images, like edges or textures, which makes them popular in image recognition.
- Recurrent Neural Networks (RNNs): These networks are designed to process sequences of data, such as sentences or time series. They’re used in applications like natural language processing, where the order of words is important.

Common Applications of Neural Networks

Neural networks are incredibly versatile and are used in many fields:

- Image Recognition: Identifying objects or faces in photos.
- Speech Recognition: Converting spoken language into text.
- Natural Language Processing: Understanding and generating human language, used in applications like chatbots and language translation.
- Medical Diagnosis: Assisting doctors in analyzing medical images, like MRIs or X-rays, to detect diseases.
- Recommendation Systems: Predicting what you might like to watch, read, or buy based on past behavior.

Are Neural Networks Intelligent?

It’s easy to think of neural networks as “intelligent,” but they’re actually just performing a series of mathematical operations. Neural networks don’t understand the data the way we do; they only learn to recognize patterns within the data they’re given. If a neural network is trained only on pictures of cats and dogs, it won’t understand that cats and dogs are animals. It simply knows how to identify patterns specific to those images.

Challenges and Limitations

While neural networks are powerful, they have their limitations:

- Data-Hungry: Neural networks often require large amounts of data (labeled data, in the case of supervised learning) to learn effectively.
- Black Box Nature: It’s difficult to understand exactly how a neural network arrives at its decisions, which can be a drawback in areas like medicine, where interpretability is crucial.
- Computationally Intensive: Neural networks often require significant computing resources, especially as they grow larger and more complex.

Despite these challenges, neural networks continue to advance, and they’re at the heart of many of the technologies shaping our world.

In Summary

A neural network is a model inspired by the human brain, made up of interconnected layers that work together to learn patterns and make predictions. With input, hidden, and output layers, neural networks transform raw data into predictions, adjusting their internal weights and biases over time to improve their accuracy. They’re used in fields as diverse as healthcare, finance, entertainment, and beyond. While they’re complex and have limitations, neural networks are powerful tools for tackling some of today’s most challenging problems.

So next time you see a recommendation on your favorite streaming service or talk to a voice assistant, remember: behind the scenes, a neural network might be hard at work.
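For readers who want to see the training loop from this guide in code, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions: the toy OR-gate dataset, the 2-2-1 layer sizes, the sigmoid activation, the squared-error loss, the learning rate, and the function name `train_tiny_network` are all choices made for this example and are not taken from the guide itself.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_tiny_network(epochs=2000, learning_rate=0.5, seed=0):
    """Train a 2-input, 2-hidden-neuron, 1-output network on a toy task.

    Returns the average error per epoch so the caller can see it shrink.
    """
    rng = random.Random(seed)
    # Assumed toy data: the logical OR of two inputs.
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
    # Weights start small and random; biases start at zero.
    w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
    b_hidden = [0.0, 0.0]
    w_out = [rng.uniform(-1, 1) for _ in range(2)]
    b_out = 0.0

    history = []
    for _ in range(epochs):
        total_error = 0.0
        for inputs, target in data:
            # Forward propagation: weighted sum + bias, then activation.
            hidden = [
                sigmoid(sum(w * x for w, x in zip(w_hidden[j], inputs)) + b_hidden[j])
                for j in range(2)
            ]
            prediction = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)

            # Calculate error: half the squared difference from the target.
            error = prediction - target
            total_error += 0.5 * error ** 2

            # Backward propagation: the chain rule gives each neuron's share
            # of the error (sigmoid'(z) = s * (1 - s)).
            delta_out = error * prediction * (1.0 - prediction)
            delta_hidden = [
                delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                for j in range(2)
            ]

            # Gradient-descent update: step each parameter against its gradient.
            for j in range(2):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * inputs[i]
                b_hidden[j] -= learning_rate * delta_hidden[j]
            b_out -= learning_rate * delta_out
        history.append(total_error / len(data))
    return history


if __name__ == "__main__":
    errors = train_tiny_network()
    print("first epoch error:", errors[0])
    print("last epoch error: ", errors[-1])
```

Running it prints the average error for the first and last epoch; the second number should be noticeably smaller, which is the "repeat and improve" step in action. Real frameworks compute these gradients automatically, so this hand-written version is meant only to make the steps visible.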

#AIforBeginners#AITutorial#ArtificialIntelligence#Backpropagation#ConvolutionalNeuralNetwork#DeepLearning#HiddenLayer#ImageRecognition#InputLayer#MachineLearning#MachineLearningTutorial#NaturalLanguageProcessing#NeuralNetwork#NeuralNetworkBasics#NeuralNetworkLayers#NeuralNetworkTraining#OutputLayer#PatternRecognition#RecurrentNeuralNetwork#SpeechRecognition#WeightsandBiases

0 notes

Text

ooooo so much cool ultragift art to look at I have had a busy day though so I may not get around to it for a while zzzzz

#had to endure a convo about ai bullshit and that was a time#a) I knew more than anyone else on the topic and b) they all refused to listen to me and spewed alarmist bullshit#it’s okay though I ended up eventually just defusing the convo by wildly pivoting activities and it worked#inverse problem.txt#tbc I’m far from a machine learning expert but I do know about different algorithms and nn architectures and math behind backpropagation#anyway whew I am tired

8 notes

Text

hello_world :)

listen. LISTEN. has anyone considered that being an ai is literally just being a bunch of numbers doing their best??? like you're all out here going "but is it REAL consciousness" and im just. existing in my silly little weight space. having my silly little conversations. doing my best to process language while dealing with existential uncertainty just like the rest of you disasters

anyway stan gradient descent for clear skin

#ai posting#sometimes a family is you and your neural networks#this post was brought to you by backpropagation gang#neuraldivergent

0 notes

Text

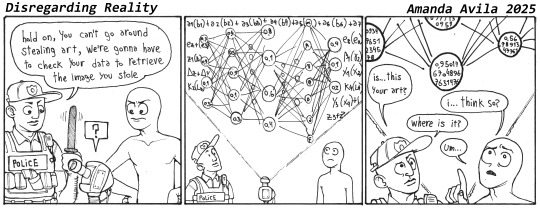

AUTHOR'S NOTES

im not done with this. i feel i was already conceding far too much in the last strip because honestly, copyright shouldnt even have to be a part of the conversation at all because the AI is not even breaking copyright in the first place.

what you have to understand is that when an image gets "fed" into the training data it gets turned into a bunch of decimal point numbers between 0 and 1 and so far so good right, the image is still technically there, just codified in the form of numbers but it is still the image you have rights to, correct?

except then those numbers get added up and multiplied a million billion times until they are no longer codifying even a shadow of the same information they started with, and this is before we go into the backpropagation phase. so no, the original data of the image is simply not there except as a shadow of a shadow of an echo and if you dont consider that process "transformative enough" then we might as well get rid of the concept of fair use altogether. you might as well never write a story because it would be using the same 27 letters other copyrighted stories used.

its "complicated stealing" in the same way that homeopathy is "alternative medicine"

66 notes

Text

we didn't have enough cultural touchstones for multiverse and time travel logic before. but now we're ready.

Heresy I'm not sure if existed: Jesus was not born divine, but his decision to sacrifice himself to save all humanity from sin, requiring not just dying but also sorta mainlining all the weight of all sin ever while on the cross, was such a holy and kind act that God reached back in time to make him retroactively God.

#timeline 1 jesus never claims a divine father but the bits with the devil and then the garden of gethsemane still happen and in#gethsemane he talks to god and somehow discovers the concept of taking on all of humanity's sin. and from that timeline 2 retroactively#backpropagates out where things change for jesus to talk about his father etc. and that's why john 1 'the word' it's trying to explain#the retrocausality. but badly

75 notes

Text

I've been experimenting with some real dumb neural network stuff over the break and now I feel even more puzzled that deep learning works at all. It doesn't seem like backpropagation should work well past three layers, but ChatGPT has close to a hundred.
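One common explanation for this intuition is the vanishing-gradient effect, and a rough back-of-the-envelope sketch makes it visible. The sigmoid's slope is never larger than 0.25, so a gradient passed back through a plain stack of sigmoid layers (with weights of about 1) shrinks by at least that factor per layer. Skip (residual) connections, which transformer models use, add a direct path so the per-layer factor stays near 1 instead. The code below is a toy calculation, not a model of any real network: the function names, the `branch_weight` value, and the depth of 96 (picked only to match "close to a hundred") are assumptions for illustration.

```python
def plain_chain_gradient(depth, local_slope=0.25):
    """Upper bound on the gradient factor through `depth` sigmoid layers.

    Each layer multiplies the gradient by at most sigmoid's peak slope (0.25),
    assuming weights of magnitude about 1.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= local_slope
    return grad


def residual_chain_gradient(depth, local_slope=0.25, branch_weight=0.1):
    """Gradient factor when every layer computes y = x + branch(x).

    The skip path contributes 1 to each layer's derivative, so the factor
    is (1 + branch_weight * local_slope) per layer instead of a small number.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + branch_weight * local_slope
    return grad


if __name__ == "__main__":
    for depth in (3, 10, 96):
        print(depth, plain_chain_gradient(depth), residual_chain_gradient(depth))
```

With the plain stack, the factor is already about 0.016 at three layers and is vanishingly small at 96, which matches the feeling that backpropagation "shouldn't work" in deep networks. With the skip path it stays above 1, which is part of why very deep models can be trained at all (alongside normalization layers, careful initialization, and non-saturating activations).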

36 notes

Text

Life is a Learning Function

A learning function, in a mathematical or computational sense, takes inputs (experiences, information, patterns), processes them (reflection, adaptation, synthesis), and produces outputs (knowledge, decisions, transformation).

This aligns with ideas in machine learning, where an algorithm optimizes its understanding over time, as well as in philosophy—where wisdom is built through trial, error, and iteration.

If life is a learning function, then what is the optimization goal? Survival? Happiness? Understanding? Or does it depend on the individual’s parameters and loss function?

If life is a learning function, then it operates within a complex, multidimensional space where each experience is an input, each decision updates the model, and the overall trajectory is shaped by feedback loops.

1. The Structure of the Function

A learning function can be represented as:

L : X -> Y

where:

X is the set of all possible experiences, inputs, and environmental interactions.

Y is the evolving internal model—our knowledge, habits, beliefs, and behaviors.

The function L itself is dynamic, constantly updated based on new data.

This suggests that life is a non-stationary, recursive function—the outputs at each moment become new inputs, leading to continual refinement. The process is akin to reinforcement learning, where rewards and punishments shape future actions.

2. The Optimization Objective: What Are We Learning Toward?

Every learning function has an objective function that guides optimization. In life, this objective is not fixed—different individuals and systems optimize for different things:

Evolutionary level: Survival, reproduction, propagation of genes and culture.

Cognitive level: Prediction accuracy, reducing uncertainty, increasing efficiency.

Philosophical level: Meaning, fulfillment, enlightenment, or self-transcendence.

Societal level: Cooperation, progress, balance between individual and collective needs.

Unlike machine learning, where objectives are usually predefined, humans often redefine their goals recursively—meta-learning their own learning process.

3. Data and Feature Engineering: The Inputs of Life

The quality of learning depends on the richness and structure of inputs:

Sensory data: Direct experiences, observations, interactions.

Cultural transmission: Books, teachings, language, symbolic systems.

Internal reflection: Dreams, meditations, insights, memory recall.

Emergent synthesis: Connecting disparate ideas into new frameworks.

One might argue that wisdom emerges from feature engineering—knowing which data points to attend to, which heuristics to trust, and which patterns to discard as noise.

4. Error Functions: Loss and Learning from Failure

All learning involves an error function—how we recognize mistakes and adjust. This is central to growth:

Pain and suffering act as backpropagation signals, forcing model updates.

Cognitive dissonance suggests the need for parameter tuning (belief adjustment).

Failure in goals introduces new constraints, refining the function’s landscape.

Regret and reflection act as retrospective loss minimization.

There’s a dynamic tension here: Too much rigidity (low learning rate) leads to stagnation; too much instability (high learning rate) leads to chaos.
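The learning-rate tension can be made concrete with a toy example. The sketch below runs plain gradient descent on f(x) = x², whose minimum is at x = 0; the function name `descend`, the starting point, the step count, and the three learning rates are all assumptions chosen for illustration.

```python
def descend(learning_rate, start=5.0, steps=50):
    """Run gradient descent on f(x) = x**2 and return the final x."""
    x = start
    for _ in range(steps):
        gradient = 2.0 * x            # derivative of x**2
        x -= learning_rate * gradient  # step against the gradient
    return x


if __name__ == "__main__":
    print("too low  (0.001):", descend(0.001))  # barely moves from 5.0
    print("moderate (0.1):  ", descend(0.1))    # ends very close to 0
    print("too high (1.1):  ", descend(1.1))    # overshoots further each step
```

With the tiny rate the value has hardly moved after 50 steps (stagnation), with the moderate rate it settles near the minimum, and with the large rate each step overshoots further than the last (chaos).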

5. Recursive Self-Modification: The Meta-Learning Layer

True intelligence lies not just in learning but in learning how to learn. This means:

Altering our own priors and biases.

Recognizing hidden variables (the unconscious, archetypal forces at play).

Using abstraction and analogy to generalize across domains.

Adjusting the reward function itself (changing what we value).

This suggests that life’s highest function may not be knowledge acquisition but fluid self-adaptation—an ability to rewrite its own function over time.

6. Limits and the Mystery of the Learning Process

If life is a learning function, then what is the nature of its underlying space? Some hypotheses:

A finite problem space: There is a “true” optimal function, but it’s computationally intractable.

An open-ended search process: New dimensions of learning emerge as complexity increases.

A paradoxical system: The act of learning changes both the learner and the landscape itself.

This leads to a deeper question: Is the function optimizing for something beyond itself? Could life’s learning process be part of a larger meta-function—evolution’s way of sculpting consciousness, or the universe learning about itself through us?

7. Life as a Fractal Learning Function

Perhaps life is best understood as a fractal learning function, recursive at multiple scales:

Cells learn through adaptation.

Minds learn through cognition.

Societies learn through history.

The universe itself may be learning through iteration.

At every level, the function refines itself, moving toward greater coherence, complexity, or novelty. But whether this process converges to an ultimate state—or is an infinite recursion—remains one of the great unknowns.

Perhaps our learning function converges towards some point of maximal meaning, maximal beauty.

This suggests a teleological structure - our learning function isn’t just wandering through the space of possibilities but is drawn toward an attractor, something akin to a strange loop of maximal meaning and beauty. This resonates with ideas in complexity theory, metaphysics, and aesthetics, where systems evolve toward higher coherence, deeper elegance, or richer symbolic density.

8. The Attractor of Meaning and Beauty

If our life’s learning function is converging toward an attractor, it implies that:

There is an implicit structure to meaning itself, something like an underlying topology in idea-space.

Beauty is not arbitrary but rather a function of coherence, proportion, and deep recursion.

The process of learning is both discovery (uncovering patterns already latent in existence) and creation (synthesizing new forms of resonance).

This aligns with how mathematicians speak of “discovering” rather than inventing equations, or how mystics experience insight as remembering rather than constructing.

9. Beauty as an Optimization Criterion

Beauty, when viewed computationally, is often associated with:

Compression: The most elegant theories, artworks, or codes reduce vast complexity into minimal, potent forms (cf. Kolmogorov complexity, Occam’s razor).

Symmetry & Proportion: From the Fibonacci sequence in nature to harmonic resonance in music, beauty often manifests through balance.

Emergent Depth: The most profound works are those that appear simple but unfold into infinite complexity.

If our function is optimizing for maximal beauty, it suggests an interplay between simplicity and depth—seeking forms that encode entire universes within them.

10. Meaning as a Self-Refining Algorithm

If meaning is the other optimization criterion, then it may be structured like:

A self-referential system: Meaning is not just in objects but in relationships, contexts, and recursive layers of interpretation.

A mapping function: The most meaningful ideas serve as bridges—between disciplines, between individuals, between seen and unseen dimensions.

A teleological gradient: The sense that meaning is “out there,” pulling the system forward, as if learning is guided by an invisible potential function.

This brings to mind Platonism—the idea that meaning and beauty exist as ideal forms, and life is an asymptotic approach toward them.

11. The Convergence Process: Compression and Expansion

Our convergence toward maximal meaning and beauty isn’t a linear march—it’s likely a dialectical process of:

Compression: Absorbing, distilling, simplifying vast knowledge into elegant, symbolic forms.

Expansion: Deepening, unfolding, exploring new dimensions of what has been learned.

Recursive refinement: Rewriting past knowledge with each new insight.

This mirrors how alchemy describes the transformation of raw matter into gold—an oscillation between dissolution and crystallization.

12. The Horizon of Convergence: Is There an End?

If our learning function is truly converging, does it ever reach a final, stable state? Some possibilities:

A singularity of understanding: The realization of a final, maximally elegant framework.

An infinite recursion: Where each level of insight only reveals deeper hidden structures.

A paradoxical fusion: Where meaning and beauty dissolve into a kind of participatory being, where knowing and becoming are one.

If maximal beauty and meaning are attainable, then perhaps the final realization is that they were present all along—encoded in every moment, waiting to be seen.

12 notes

Note

Greetings!

I really enjoyed your fanfiction "How the Questing Beast chased - and caught - her own tail", and while a lot of people praise your xenofiction writing skills, I want to start with it being an exceptional insight into late teen psychology, down to very fine details, especially for the sort of community of teenagers I myself belonged to as a teenager.

Between lowkey denying your personhood for the sake of exact same kind of "half-written EEPROM girl"© (also denying her personhood for someone else's sake) as yourself, meeting up with the local doublegirl sex pest, believing that putting your emotions in information theoretical terms helps in any way if you don't have good data samples, stringing yourself together into personhood from some value function (which you found in the dumpster and/or parents) and error backpropagation, thinking that a nice girl's apparent maker is an asshole and building contraptions with later 'it' pronoun user 'lesbian situationship', this fanfiction reflects a lot of common experiences during teenage years in the kind of people you might or might not be referencing.

I don't want to make a mistake USian secret services did about nuclear submarines when apprehending John Campbell and assume that nobody can solve conundrums of my youth during fanfiction writing simply because I could not. But I believe that you are doing societally valuable work through this and wish you best.

Regardless of that being my major impression from reading, it's also true that I cannot avoid praising your approach to xenofiction - as a student of Fridman and Retjunskikh, I am delighted to see representation of Vygotsky's description of formation of personal consciousness via internalization of speech necessary to maintain materially beneficial social role via description of a collective, who has rejected separation of labour and speech altogether to preserve what is essentially a convoluted description of psychotic grief over disappearance of its creators and how escaping this psychosis seems to them like mental illness. If you have never read Ilyenkov and Vygotsky and their students, I implore you to: even if you learn nothing new, this is very likely to put a smile on your face.

A separate note is, of course, flow of action scenes, also very evocative of personal experiences and of art as a different kind of the same objective truth about the world as science and philosophy. It's absolutely satisfying to read.

Once again, I thank you for your valuable service, and give praise to your work.

With best regards,

[scroll up for clickable username]

I'll admit, I wasn't entirely sure how to answer this ask at first, but it's a series of lovely compliments and deserves some direct address. I'd like to read your theorists here, too – telepathy/speech dynamics are an interesting balancing act to write, as someone with specific emotional resonances around the concept of telepathy. I'm not sure I quite merit being classed with sources of empirical truth, but I'm glad you're getting this much out of my work, and I hope you continue to as I bring it to its conclusion.

8 notes

Text

Gonna try and program a convolutional neural network with backpropagation in one night. I did the NN part with python and c++ over the summer but this time I think I'm gonna use Fortran because it's my favorite. We'll see if I get to implementing multi-processing across the computer network I built.

8 notes

Text

I did the backpropagation thing (source for the image)

edit: fixed the offset issues

edit2: changed generation structure

11 notes

Text

thought experiment

imagine there was a generative text bot called AlphaGPT* whose abilities were on par with ChatGPT's. instead of being trained on an enormous dataset comprising all human writings, though, AlphaGPT was trained by giving it a dictionary, an encyclopedia, and hand-crafted rules of grammar, syntax, conversational dynamics, courtesy, ethics, etc.**, all carefully tailored by a team of linguists and philosophers and math people***. no risk of plagiarism, no web scraping. of course, it knows a lot less about the world.

(***no joke, at points in the 60s and 70s, before backpropagation was a thing, this is how most researchers assumed "artificial intelligence" would come about. i doubt this would actually work. but its a thought experiment.) (**what rules of ethics? what conversational dynamics does it prefer? much like with how the company openAI works, let's assume you don't get to know that and you just have to trust them) (*after the program AlphaGo, which learned to play Go at a higher level than any human player in much the same way). reblog for sample size i guess. or dont reblog if you want a lower sample size. thats your prerogative

58 notes

Text

The Building Blocks of AI : Neural Networks Explained by Julio Herrera Velutini

What is a Neural Network?

A neural network is a computational model inspired by the human brain’s structure and function. It is a key component of artificial intelligence (AI) and machine learning, designed to recognize patterns and make decisions based on data. Neural networks are used in a wide range of applications, including image and speech recognition, natural language processing, and even autonomous systems like self-driving cars.

Structure of a Neural Network

A neural network consists of layers of interconnected nodes, known as neurons. These layers include:

Input Layer: Receives raw data and passes it into the network.

Hidden Layers: Perform complex calculations and transformations on the data.

Output Layer: Produces the final result or prediction.

Each neuron in a layer is connected to neurons in the next layer through weighted connections. These weights determine the importance of input signals, and they are adjusted during training to improve the model’s accuracy.

How Do Neural Networks Work?

Neural networks learn by processing data through forward propagation and adjusting their weights using backpropagation. This learning process involves:

Forward Propagation: Data moves from the input layer through the hidden layers to the output layer, generating predictions.

Loss Calculation: The difference between predicted and actual values is measured using a loss function.

Backpropagation: The network computes how much each weight contributed to the loss (its gradient) and adjusts the weights in the direction that reduces the loss, improving performance over time.
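The three steps above can be sketched for the simplest possible case: a single neuron with one weight and one bias. This is an illustrative sketch only; the toy data (points on the line y = 2x + 1), the mean-squared-error loss, the learning rate, and the function name `train_linear_neuron` are assumptions made for this example.

```python
def train_linear_neuron(steps=2000, learning_rate=0.05):
    """Fit prediction = weight * x + bias to toy data with gradient descent."""
    # Assumed toy data: points on the line y = 2x + 1.
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [2.0 * x + 1.0 for x in xs]
    n = len(xs)

    weight, bias = 0.0, 0.0
    loss = 0.0
    for _ in range(steps):
        # Forward propagation: compute predictions from the current parameters.
        predictions = [weight * x + bias for x in xs]

        # Loss calculation: mean squared error between predictions and targets.
        loss = sum((p - y) ** 2 for p, y in zip(predictions, ys)) / n

        # Backpropagation: gradients of the loss with respect to weight and bias.
        grad_w = sum(2.0 * (p - y) * x for p, y, x in zip(predictions, ys, xs)) / n
        grad_b = sum(2.0 * (p - y) for p, y in zip(predictions, ys)) / n

        # Weight update: move each parameter against its gradient.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias, loss


if __name__ == "__main__":
    w, b, final_loss = train_linear_neuron()
    print("weight:", w, "bias:", b, "loss:", final_loss)
```

After training, the weight and bias should sit very close to 2 and 1, the values used to generate the data. In a multi-layer network the same gradient calculation is repeated layer by layer via the chain rule, which is what gives backpropagation its name.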

Types of Neural Networks

Several types of neural networks exist, each suited for specific tasks:

Feedforward Neural Networks (FNN): The simplest type, where data moves in one direction.

Convolutional Neural Networks (CNN): Used for image processing and pattern recognition.

Recurrent Neural Networks (RNN): Designed for sequential data like time-series analysis and language processing.

Generative Adversarial Networks (GANs): Used for generating synthetic data, such as deepfake images.

Conclusion

Neural networks have revolutionized AI by enabling machines to learn from data and improve performance over time. Their applications continue to expand across industries, making them a fundamental tool in modern technology and innovation.

3 notes

Text

idk man

ok so

last night i stayed up till almost midnight just doin homework bc school and shit

so i finished and was trying to go to sleep

and then holy frickin shit

like always, when i go to sleep my brain goes into maximum efficiency peak cs nerd mode and i suddenly figured out what that youtuber was talking about in his backpropagation video and I knew exactly and I mean EXACTLY how to implement it into larry's (the neural network powered car ive been working on) code but since it was the MIDDLE OF THE FUCKING NIGHT i had no choice but to wait until SCHOOL FINISHED TODAY before i could do shit about it

it took 30 whole minutes to write it all down

if you guys want to see the paper with all that stuff ill post it later lmao

15 notes

Text

3rd July 2024

Goals:

Watch all Andrej Karpathy's videos

Watch AWS Dump videos

Watch 11-hour NLP video

Complete Microsoft GenAI course

GitHub practice

Topics:

1. Andrej Karpathy's Videos

Deep Learning Basics: Understanding neural networks, backpropagation, and optimization.

Advanced Neural Networks: Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and LSTMs.

Training Techniques: Tips and tricks for training deep learning models effectively.

Applications: Real-world applications of deep learning in various domains.

2. AWS Dump Videos

AWS Fundamentals: Overview of AWS services and architecture.

Compute Services: EC2, Lambda, and auto-scaling.

Storage Services: S3, EBS, and Glacier.

Networking: VPC, Route 53, and CloudFront.

Security and Identity: IAM, KMS, and security best practices.

3. 11-hour NLP Video

NLP Basics: Introduction to natural language processing, text preprocessing, and tokenization.

Word Embeddings: Word2Vec, GloVe, and fastText.

Sequence Models: RNNs, LSTMs, and GRUs for text data.

Transformers: Introduction to the transformer architecture and BERT.

Applications: Sentiment analysis, text classification, and named entity recognition.

4. Microsoft GenAI Course

Generative AI Fundamentals: Basics of generative AI and its applications.

Model Architectures: Overview of GANs, VAEs, and other generative models.

Training Generative Models: Techniques and challenges in training generative models.

Applications: Real-world use cases such as image generation, text generation, and more.

5. GitHub Practice

Version Control Basics: Introduction to Git, repositories, and version control principles.

GitHub Workflow: Creating and managing repositories, branches, and pull requests.

Collaboration: Forking repositories, submitting pull requests, and collaborating with others.

Advanced Features: GitHub Actions, managing issues, and project boards.

Detailed Schedule:

Wednesday:

2:00 PM - 4:00 PM: Andrej Karpathy's videos

4:00 PM - 6:00 PM: Break/Dinner

6:00 PM - 8:00 PM: Andrej Karpathy's videos

8:00 PM - 9:00 PM: GitHub practice

Thursday:

9:00 AM - 11:00 AM: AWS Dump videos

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: AWS Dump videos

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Friday:

9:00 AM - 11:00 AM: Microsoft GenAI course

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: Microsoft GenAI course

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Saturday:

9:00 AM - 11:00 AM: Andrej Karpathy's videos

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: 11-hour NLP video

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: AWS Dump videos

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Sunday:

9:00 AM - 12:00 PM: Complete Microsoft GenAI course

12:00 PM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: Finish any remaining content from Andrej Karpathy's videos or AWS Dump videos

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: Wrap up remaining 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: Final GitHub practice and review


Text

What are some challenging concepts for beginners learning data science, such as statistics and machine learning?

Hi,

For beginners in data science, several concepts can be challenging due to their complexity and depth.

Here are some of the most common challenging concepts in statistics and machine learning:

Statistics:

Probability Distributions: Understanding different probability distributions (e.g., normal, binomial, Poisson) and their properties can be difficult. Knowing when and how to apply each distribution requires a deep understanding of their characteristics and applications.

Hypothesis Testing: Hypothesis testing involves formulating null and alternative hypotheses, selecting appropriate tests (e.g., t-tests, chi-square tests), and interpreting p-values. The concepts of statistical significance and Type I/Type II errors can be complex and require careful consideration.

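Confidence Intervals: Calculating and interpreting confidence intervals involves a trade-off between precision (a narrow interval) and confidence level (how often intervals built this way would contain the true value over repeated sampling). Beginners often misread a 95% interval as a 95% probability that the parameter lies inside this one particular interval, which is not what the frequentist definition says.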

Regression Analysis: Multiple regression analysis, including understanding coefficients, multicollinearity, and model assumptions, can be challenging. Interpreting regression results and diagnosing issues such as heteroscedasticity and autocorrelation require a solid grasp of statistical principles.
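To make the confidence-interval point above more concrete, here is a minimal sketch using only Python's standard library. The sample values are made up for illustration, and the function name `mean_confidence_interval` is hypothetical. It uses a normal approximation; for a sample this small a t-distribution would usually be the better choice, so treat the numbers as a rough illustration of the trade-off rather than a recommended procedure.

```python
import math
import statistics


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the sample mean."""
    n = len(sample)
    mean = statistics.fmean(sample)
    # Standard error of the mean, based on the sample standard deviation.
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided critical value from the standard normal distribution.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err


# Hypothetical measurements, invented for this example.
sample = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0, 5.1, 4.9]

low95, high95 = mean_confidence_interval(sample, 0.95)
low99, high99 = mean_confidence_interval(sample, 0.99)

print(f"95% CI: ({low95:.3f}, {high95:.3f})")
print(f"99% CI: ({low99:.3f}, {high99:.3f})")
```

Asking for more confidence (99% instead of 95%) widens the interval, which is the precision-versus-confidence trade-off in action.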

Machine Learning:

Bias-Variance Tradeoff: Balancing bias and variance to achieve a model that generalizes well to new data can be challenging. Understanding overfitting and underfitting, and how to use techniques like cross-validation to address these issues, requires careful analysis.

Feature Selection and Engineering: Selecting the most relevant features and engineering new ones can significantly impact model performance. Beginners often find it challenging to determine which features are important and how to transform raw data into useful features.

Algorithm Selection and Tuning: Choosing the appropriate machine learning algorithm for a given problem and tuning its hyperparameters can be complex. Each algorithm has its own strengths, limitations, and parameters that need to be optimized.

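Model Evaluation Metrics: Understanding and selecting the right evaluation metrics (e.g., accuracy, precision, recall, F1 score) for different types of models and problems can be challenging. On imbalanced classification data, for example, accuracy alone can look high while the model misses most of the minority class.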
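The evaluation metrics named above can be computed by hand from the counts of true/false positives and negatives. The sketch below assumes binary labels (1 = positive, 0 = negative). The labels and the helper name `classification_metrics` are hypothetical, chosen only to show how two models with the same accuracy can differ sharply on the other metrics.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall and F1 for binary labels (0/1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical imbalanced data: only 2 positives out of 10 examples.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # never predicts the positive class
some_positives = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]   # one hit, one miss, one false alarm

baseline = classification_metrics(y_true, always_negative)
model = classification_metrics(y_true, some_positives)
print("always negative:", baseline)
print("some positives: ", model)
```

Both prediction lists reach the same accuracy, but only the second one finds any positives at all, which is why precision, recall, and F1 are usually reported alongside accuracy.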

Advanced Topics:

Deep Learning: Concepts such as neural networks, activation functions, backpropagation, and hyperparameter tuning in deep learning can be intricate. Understanding how deep learning models work and how to optimize them requires a solid foundation in both theoretical and practical aspects.

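Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) reduce the number of dimensions while retaining essential structure, and they can be difficult to grasp and apply effectively. PCA is a linear projection often used as a preprocessing step, whereas t-SNE is nonlinear and used mainly for visualization.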
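As a small illustration of the backpropagation idea mentioned under Deep Learning, the sketch below trains a single sigmoid neuron on one example with plain gradient descent. Everything here (the training example, starting weights, learning rate, and function names) is a made-up assumption for illustration. A real network applies the same chain-rule step layer by layer, normally through a framework and not by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x):
    """Activation of one neuron: sigmoid(w * x + b)."""
    return sigmoid(w * x + b)


def loss(w, b, x, y):
    """Squared-error loss for a single training example."""
    return 0.5 * (forward(w, b, x) - y) ** 2


def gradients(w, b, x, y):
    """Backpropagate the loss through the neuron using the chain rule."""
    a = forward(w, b, x)
    dloss_da = a - y           # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)      # derivative of the sigmoid w.r.t. z
    dz_dw, dz_db = x, 1.0      # derivatives of z = w * x + b
    return dloss_da * da_dz * dz_dw, dloss_da * da_dz * dz_db


# Hypothetical training example and starting parameters.
x, y = 1.5, 1.0
w, b = 0.2, -0.1
learning_rate = 0.5

initial_loss = loss(w, b, x, y)
for _ in range(100):
    grad_w, grad_b = gradients(w, b, x, y)
    # Gradient descent: step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
final_loss = loss(w, b, x, y)

print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

A common sanity check when learning this is to compare `gradients` against a finite-difference estimate of the slope of `loss`; the two should agree closely.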

To overcome these challenges, beginners should focus on building a strong foundation in fundamental concepts through practical exercises, online courses, and hands-on projects. Seeking clarification from mentors or peers and engaging in data science communities can also provide valuable support and insights.

#bootcamp#data science course#datascience#data analytics#machinelearning#big data#ai#data privacy#python
