#Software Defined Storage Networking Market Share

WILL CONTAINERS REPLACE HYPERVISORS?

As technology has advanced, virtualization has changed the way data centers operate. Over the years, a range of software has been launched that makes it easier for companies to manage their data centers. It lets companies run workloads under different operating systems side by side, thereby maximizing their resources and making data management easier and more useful for the business.

Choosing between the different virtualization models requires a proper understanding of each. That holds for containers as well as hypervisors, both of which have been on the market for quite some time and offer companies different operating solutions.

Let’s understand how they work

Virtual machines: they run on a hypervisor, which abstracts the hardware so that several operating systems can run on one machine.

Containers: they abstract the operating system, so that applications run in isolated environments on a shared OS. They have become more popular recently.

Container technology existed before 2013, but it drew far more attention after Docker was introduced that year. Docker is an open-source platform used for building, deploying and managing containerized applications.

A container always works through the underlying host operating system, whose kernel provides basic services to all the containerized applications. A virtual machine on a hypervisor, by contrast, requires its own guest operating system and relies on hardware virtualization support.

Although containers and hypervisors work differently and have distinct features, the two technologies share some goals, such as improving IT efficiency, making applications more cost-effective and improving the software development lifecycle.

Whether containers will take over and replace hypervisors has become a hot topic. Opinion is divided: some people favor containers and some favor hypervisors, because each technology has particular properties that suit different problems.

Let’s discuss in detail how they function, how they differ and which one is the better fit.

What are virtual machines?

Virtual machines are software-defined computers that run on top of a hypervisor, allowing multiple applications to run in isolation on the same physical hardware. They are best suited when one needs to operate different applications without letting them interfere with each other.

Because each VM sees its own set of virtual hardware, several applications can share one physical server, which helps companies reduce the money spent on hardware.

Virtual machines run on physical computers through a lightweight software layer called a hypervisor.

The hypervisor separates VMs from one another and allocates processors, memory and storage among them. Cloud hosting providers use this to get more work out of expensive physical nodes.

Hypervisors allow a host machine to run different operating systems side by side, so it can operate many virtual machines and make maximum use of resources such as bandwidth and memory.

What is a container?

Containers are also software-defined environments, but they run on a single host operating system. All containerized applications share that one operating system, and a container can run wherever a compatible host is available, such as a laptop or the cloud.

Containers use operating system (OS) virtualization, that is, they rely on the host operating system's kernel to do their work. A container packages the application's code, its dependencies and the user-space parts of the operating system it needs, which allows it to run almost anywhere.

They promise a streamlined way of implementing infrastructure requirements and can be used as an alternative to virtual machines.

Even though containers are known to improve how cloud platforms are developed and deployed, they are still generally regarded as less secure than VMs.

A single operating system can run many containers that share its resources, which further streamlines infrastructure requirements.

Now that we have understood how VMs and containers work, let’s see the benefits of both technologies.

Benefits of virtual machines

They allow different operating systems to run on one hardware system, which saves on everything from energy costs and rack space to cooling, and so makes them economical in the cloud.

VMs are easy to spin up and down, and it is much easier to create backups with this system.

Because images are easy to back up and restore, recovering from a disaster is simple.

Each VM has an isolated operating system, hence testing of applications is relatively easy and simple.

Benefits of containers:

They are lightweight and boot significantly faster than VMs, within a few seconds, and they require less hardware and fewer operating systems.

They are portable and can run from anywhere, which reduces problems caused by differences between environments.

They enable microservices, which make applications easier to test, reduce single points of failure and increase development velocity.

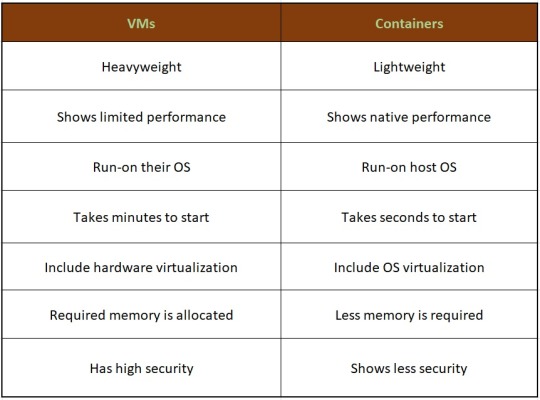

Let’s see the difference between containers and VMs

Looking at these differences, one can see that containers have added advantages over the older virtualization technology: they are faster, more lightweight and easier to manage than VMs.

With a hypervisor, virtualization happens at the hardware level: several VMs, each with its own operating system, run on the same physical machine. Each VM therefore requires a separate operating system to run an application and its associated libraries.

Containers, by contrast, virtualize the operating system instead of the hardware, so each container contains only the application, its libraries and its dependencies.

Like virtual machines, containers let developers make better use of a physical machine's CPU and memory. Containers go further by enabling a microservice architecture, in which application components can be deployed and scaled more granularly.

Having seen the benefits of the two technologies and the differences between them, one must know when to use containers and when to use virtual machines. Many people want to use both, and some want only one of them.

Let’s see the cases in which a hypervisor is the right choice:

Many people want to continue with virtual machines because VMs are compatible and consistent with their current use, and shifting to containers is not worthwhile for them.

VMs let a single computer or cloud hosting server run multiple applications together, which is all that most people require.

Containers share the host operating system, which is not the case with VMs. From a security standpoint containers are therefore weaker, because a compromise of the shared operating system can affect all the applications together. Each virtual machine, however, has its own virtual hardware and operating system, so typically only one application is damaged.

Containers turn out to be useful in cases such as:

Containers enable DevOps and microservices because they are portable and fast, typically starting within seconds.

Nowadays, many web applications are moving towards a microservices architecture, in which an application is built from small, independent services. Containers support this, making it easy to update and redeploy only the part of the application that needs it.

Containers scale well: an orchestrator can automatically start more containers from the same image and spin them down when they are not needed.

People want technology that is fast, and in this respect containers are far faster than hypervisor-based VMs. That also enables fast testing and speedy recovery when a restart is performed.

So, will containers replace hypervisors?

Although the two technologies share some similarities, they differ from each other in several respects. Hence, it is not easy to conclude. Before forming a final view, let's see a few points about each.

Still, a question may come to mind: why containers?

Although, as stated above, there are many reasons to keep using virtual machines, containers provide flexibility and portability, and that is increasing demand for them in the multi-cloud world.

Even today many companies do not know how to deploy their new applications. Because containerized applications are flexible, they are easy to handle across the many cloud and data center environments of modern IT.

Containers are also useful for automation and DevOps pipelines, including continuous integration and continuous deployment (CI/CD). Because containers are small and modular, an application can be built in small parts and assembled by stacking those parts together.

They not only increase the efficiency of the system and make better use of resources but also save money by running multiple processes on the same host.

They are quicker to boot than virtual machines, which can take minutes to boot and to recover.

Another important point is that they have a minimal structure: they do not need a full operating system or dedicated hardware of their own, and they can be installed and removed without disturbing the whole system.

Containers change the traditional patching process: instead of patching a running system in place, a container is replaced with one built from an updated image. This lets organizations respond to issues faster and makes applications easier to manage.

Because containers abstract the operating system, they avoid the overhead that virtual machines carry of running a full guest operating system for each application, while each container still brings the user-space environment its application needs.

Still, virtual machines are useful to many

Although containers have many advantages over virtual machines, they have a few disadvantages as well, chiefly security issues that stem from sharing the host operating system.

A container breach can be more damaging because multiple applications run on a single operating system: an attacker who breaks out of one container may gain access to the whole hosting system. This is not the case with virtual machines, which place an additional barrier between each VM, the host server and the other virtual machines.

If the host operating system is affected by malware, the malware can spread to all the applications because they share that single operating system, which is not the case with virtual machines.

People feel more familiar with virtual machines because they have been well established in most organizations for a long time, and businesses have teams and procedures that manage VM deployment, backups and monitoring.

Companies often prefer to run an application on a complete operating system as one machine, especially when the application is complex to understand.

Conclusion

To conclude, as we have seen, containers and virtual machines each solve different problems. Containers help teams focus on building code, creating better software and making applications run faster. Virtual machines are slower, heavier and less portable, yet people still prefer them for provisioning enterprise infrastructure and for running legacy or monolithic applications.

In short, if one wants to run a full operating system, one should go for a hypervisor, and if one wants something lightweight and portable, one should go for containers.

Hence, it will take time for containers to replace virtual machines, which many still need for running older applications and for hosting multiple operating systems in parallel, even though VMs are less cloud-native. Containers are therefore not likely to replace virtual machines: the two technologies complement each other rather than replace each other, and both have a place in the modern data center.

For more insights do visit our website

#container #hypervisor #docker #technology #zybisys #godaddy

Developing Your Future with AWS Solution Architect Associate

Why Should You Get AWS Solution Architect Associate?

If you're stepping into the world of cloud computing or looking to level up your career in IT, the AWS Certified Solutions Architect – Associate course is one of the smartest moves you can make. Here's why:

1. AWS Is the Cloud Market Leader

Amazon Web Services (AWS) dominates the cloud industry, holding a significant share of the global market. With more businesses shifting to the cloud, AWS skills are in high demand—and that trend isn’t slowing down.

2. Proves Your Cloud Expertise

This certification demonstrates that you can design scalable, reliable, and cost-effective cloud solutions on AWS. It's a solid proof of your ability to work with AWS services, including storage, networking, compute, and security.

3. Boosts Your Career Opportunities

Recruiters actively seek AWS-certified professionals. Whether you're an aspiring cloud engineer, solutions architect, or developer, this credential helps you stand out in a competitive job market.

4. Enhances Your Earning Potential

According to various salary surveys, AWS-certified professionals—especially Solution Architects—tend to earn significantly higher salaries compared to their non-certified peers.

5. Builds a Strong Foundation

The Associate-level certification lays a solid foundation for more advanced AWS certifications like the AWS Solutions Architect – Professional, or specialty certifications in security, networking, and more.

Understanding the AWS Shared Responsibility Model

The AWS Shared Responsibility Model, a core topic of the Solutions Architect Associate exam, defines the division of security and compliance duties between AWS and the customer. AWS is responsible for “security of the cloud,” while customers are responsible for “security in the cloud.”

AWS handles the underlying infrastructure, including hardware, software, networking, and physical security of its data centers. This includes services like compute, storage, and database management at the infrastructure level.

On the other hand, customers are responsible for configuring their cloud resources securely. This includes managing data encryption, access controls (IAM), firewall settings, OS-level patches, and securing applications and workloads.

For example, while AWS secures the physical servers hosting an EC2 instance, the customer must secure the OS, apps, and data on that instance.

This model enables flexibility and scalability while ensuring that both parties play a role in protecting cloud environments. Understanding these boundaries is essential for compliance, governance, and secure cloud architecture.

Best Practices for AWS Solutions Architects

The role of an AWS Solutions Architect goes far beyond just designing cloud environments—it's about creating secure, scalable, cost-optimized, and high-performing architectures that align with business goals. To succeed in this role, following industry best practices is essential. Here are some of the top ones:

1. Design for Failure

Always assume that components can fail—and design resilient systems that recover gracefully.

Use Auto Scaling Groups, Elastic Load Balancers, and Multi-AZ deployments.

Implement circuit breakers, retries, and fallbacks to keep applications running.
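The retry-and-fallback idea above can be sketched in a few lines of Python. This is a minimal illustration, not an AWS API: `call_with_retries`, its parameters and the example fallback are hypothetical names chosen for the sketch, and a production system would usually add a circuit breaker on top.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `operation` up to `max_attempts` times, then return `fallback()`.

    Hypothetical helper for illustration only.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                break  # out of attempts, fall through to the fallback
            # Exponential backoff with jitter, so that many clients
            # do not all retry at the same moment.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))
    return fallback()
```

A caller might pass a function that queries a remote service as `operation` and one that returns a cached value as `fallback`, so the application degrades gracefully instead of failing outright.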

2. Embrace the Well-Architected Framework

Leverage AWS’s Well-Architected Framework, which is built around six pillars:

Operational Excellence

Security

Reliability

Performance Efficiency

Cost Optimization

Sustainability

Reviewing your architecture against these pillars helps ensure long-term success.

3. Prioritize Security

Security should be built in—not bolted on.

Use IAM roles and policies with the principle of least privilege.

Encrypt data at rest and in transit using KMS and TLS.

Implement VPC security, including network ACLs, security groups, and private subnets.
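As a small illustration of least privilege, the sketch below builds an IAM policy document that grants read-only access to a single S3 bucket and nothing else. The helper name `read_only_bucket_policy` and the bucket name are assumptions made for the example; the policy elements (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follow the standard IAM JSON policy structure.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Return an IAM policy document allowing read-only access to one bucket.

    Hypothetical helper for illustration only.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading applies to the objects inside the bucket.
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # "example-reports-bucket" is a placeholder name.
    print(json.dumps(read_only_bucket_policy("example-reports-bucket"), indent=2))
```

Granting only `s3:ListBucket` and `s3:GetObject` on one named bucket, rather than `s3:*` on all resources, is the kind of narrowing the principle asks for.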

4. Go Serverless When It Makes Sense

Serverless architecture using AWS Lambda, API Gateway, and DynamoDB can improve scalability and reduce operational overhead.

Ideal for event-driven workloads or microservices.

Reduces the need to manage infrastructure.

5. Optimize for Cost

Cost is a key consideration. Avoid over-provisioning.

Use AWS Cost Explorer and Trusted Advisor to monitor spend.

Choose spot instances or reserved instances when appropriate.

Right-size EC2 instances and consider using Savings Plans.

6. Monitor Everything

Build strong observability into your architecture.

Use Amazon CloudWatch, X-Ray, and CloudTrail for metrics, tracing, and auditing.

Set up alerts and dashboards to catch issues early.

Recovery Planning with AWS

Recovery planning in AWS ensures your applications and data can quickly bounce back after failures or disasters. AWS offers built-in tools like Amazon S3 for backups, AWS Backup, Amazon RDS snapshots, and Cross-Region Replication to support data durability. For more robust strategies, AWS Elastic Disaster Recovery (AWS DRS), the successor to CloudEndure Disaster Recovery, continuously replicates servers so that workloads can be recovered with minimal downtime and data loss. Use Auto Scaling, Multi-AZ, and multi-Region deployments to enhance resilience. Regularly test recovery procedures using runbooks and chaos engineering. A solid recovery plan on AWS minimizes downtime, protects business continuity, and keeps operations running even during unexpected events.

Learn more: AWS Solution Architect Associates

A file browser, or file manager, is a computer program that offers a user interface for managing files and folders. Its main functions are creating, opening, viewing, editing, playing or printing files, as well as moving, copying, searching, deleting and modifying them. File managers can display files and folders in various formats, including a hierarchical tree based on the directory structure. Some file managers also have forward and back navigation buttons modeled on web browsers.

Some file managers also offer network connectivity and are known as web-based file managers. They are built with technologies such as Perl, PHP and AJAX. They allow files and folders in server directories to be edited and managed from an internet browser. They also allow files to be shared with other authorized devices and persons, and they serve as a digital repository for required documents, publishing layouts, digital media and presentations.

Web-based file sharing is the practice of providing access to digital media, documents, multimedia such as video, images and audio, or eBooks to authorized persons or a targeted audience. It can be achieved with various methods, such as removable media, file management tools or peer-to-peer networking. The best solution is to use file management software for storage, transmission and distribution, which also covers manually sharing files through sharing links. Many web file management products are popular with people around the world. Some of them are as follows:

Http Commander

This software is a comprehensive application for accessing files. The system requirements are Windows OS, ASP.NET (.NET Framework) 4.0 or 4.5 and Internet Information Services (IIS) 6/7/7.5/8. The advantages include a beautiful and convenient interface, multiple view modes for files, editing of text files, cloud services integration and document editing, WebDAV support and zip file support. It also includes a user-friendly mobile interface, multilingual support and an easy admin panel. Additional features include high general functionality and a web admin. You can upload files in different ways, including Java, Silverlight, HTML5, Flash and HTML4, with drag and drop support.

CKFinder

The interface of this web content manager is intuitive, easy to access and fast. It requires a website configured in Internet Information Services (IIS) and .NET Framework 2.0+ for installation. Some advantages include multi-language support, image preview and 2 file view modes. You also get a search facility in the file list as well as drag and drop of files inside the software. The software is built around a JavaScript API. Some disadvantages include difficulty in customizing folder access, inability to share files and no integration with any online service. You cannot edit files with external editors or software. Also, there is no configuration tool and you cannot drag and drop files during upload. Some helpful features include easy downloading of files using HTML4 and HTML5, and documentation is available for installation and setup.

File Uploads And Files Manager

It provides a simple control and offers access to files stored on servers. For installation, the user requires Microsoft Visual Studio 2010 or higher as well as Microsoft .NET Framework 4.0. Some advantages include a good interface in which all icons are simple and in one style, and 2 file view modes, detailed and thumbnails. It also supports basic file operations and themes, filters the file list and is integrated with cloud file storage services.

Some disadvantages include limited, basic file operations, inability to work as a standalone application, settings that live in code, inability to view files in a browser, weak general functionality, no mobile interface and no web admin. Some useful features include uploading multiple files at one go, multilingual support and available documentation.

Easy File Management Web Server

This file management software installs as a standalone application and requires no configuration. The software does not support AJAX. A drawback is that it looks like an outdated product and the interface is not convenient. The system requirement for this software is Windows OS. The advantages include no requirement for IIS, uploading files one at a time via HTML4, support for email notifications and easy installation and configuration from within the application. The disadvantages include an interface that is not user-friendly, a full page reload for every command, no file editing and no support for Unicode characters. Moreover, it does not provide multilingual support and has few functions compared with the others.

ASP.NET File Manager

At first glance, this file manager is not intuitive and is outdated. The system requirements are IIS 5 or higher and ASP.NET 2.0 or later. Some advantages include the ability to edit text files, simple file management through the browser and support for old browsers. You can do basic operations on stored files, and the functions are easy to use. On the other hand, some disadvantages include a redundant interface and the need to reload the full page for navigation. Additionally, there is no integration with online services. The user cannot share files, cannot drag and drop files during upload, gets only one folder for file storage and has no configuration tool. Moreover, there's no multilingual support, no mobile interface, low general functionality and no web admin.

File Explorer Essential Objects

This file manager offers limited file functionality and is a component for Visual Studio. The system requirements include .NET Framework 2.0+ and a website configured in IIS. Some advantages include image preview, AJAX support for navigation, integration with Visual Studio and 2 file view modes. The disadvantages include no commands to copy, move or rename files, no file editing even with external editors and inability to share files with anyone. What's more, there's no drag and drop support for uploading, an outdated interface, no customization of access rights for different users, no web admin, no mobile interface and no multilingual support.

FileVista

This file management software makes a good impression at the outset but has limited functionality. The system requirements include Microsoft .NET Framework 4 (Full Framework) or higher and Windows Server with IIS enabled. Some advantages include user quotas, uploading files with drag and drop, Flash, HTML4, Silverlight and HTML5, multilingual support, a web admin, archive support, an easy interface, fast loading and creation of public links. The disadvantages include no editing ability, no integration with document viewers or online services, no search function and no drag and drop support for moving files.

IZWebFileManager

Even though the software is outdated and no longer updated, it's still functional. The interface of this software is similar to Windows XP. It has minimal functionality and no admin. It provides easy access to files but is suitable only for simple tasks. The advantages of this software include 3 file view modes, image preview, drag and drop of files, various theme settings and a search feature. The disadvantages include the old interface, no editing of files, no integration with online services, no sharing of files and no drag and drop support for uploading files. The user cannot set permissions either.

Moxie Manager

This file management software is modern and has a nice design. It is also integrated with cloud services and works with images. The system requirements include IIS 7 or higher and ASP.NET 4.5 or later. Some advantages include an attractive interface, support for all file operations and preview of text and image files. You can also edit text and image files, and it supports Amazon S3 files and folders, Google Drive and Dropbox with download capability, FTP and zip archives. On the other hand, some disadvantages include no built-in user interface, no rights settings for users, no support for drag and drop, no mobile interface and no web admin. Some features include multilingual support, available documentation, file upload with drag and drop support and average functionality.

💾 Storage Just Got Serious — SAN Market to hit $32.5B by 2034, up from $19.4B in 2024 (5.3% CAGR 🔗)

Storage Area Network (SAN) is a high-speed network that provides access to consolidated block-level storage, allowing multiple servers to connect to and use shared storage resources efficiently. SANs are designed for high availability, performance, and scalability, making them ideal for enterprise environments with large volumes of data and critical applications. They help centralize storage management, improve backup and disaster recovery processes, and minimize downtime.
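The headline growth figure can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the two market-size values quoted in the title; the function name `cagr` is simply a label chosen for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, 2024 to 2034 (values from the headline).
rate = cagr(19.4, 32.5, 10)
print(f"{rate:.1%}")  # prints 5.3%
```

Growing from $19.4B to $32.5B over ten years works out to roughly 5.3% per year, which matches the rate quoted in the headline.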

To Request Sample Report : https://www.globalinsightservices.com/request-sample/?id=GIS24058&utm_source=SnehaPatil&utm_medium=Article

By separating storage from the local environment, SANs increase flexibility and enable better resource utilization. These systems support high-throughput applications such as databases, virtual machines, and analytics platforms. As organizations continue to scale and transition to hybrid and multi-cloud architectures, SAN solutions are evolving with features like NVMe over Fabrics, software-defined storage, and enhanced automation. Additionally, SANs play a crucial role in cybersecurity and compliance by providing secure access controls, encryption, and audit trails. In the age of big data and digital transformation, SAN technology remains a vital backbone for enterprise storage strategies, ensuring data is always available, protected, and accessible.

#storageareanetwork #san #storagetechnology #datainfrastructure #enterprisestorage #blockstorage #highavailability #disasterrecovery #datacenter #cloudintegration #nvmeoverfabrics #softwaredefinedstorage #hybridcloud #multicloud #storagesolutions #dataarchitecture #virtualmachines #securestorage #scalablestorage #storagemanagement #bigdata #cybersecurity #storageautomation #datasecurity #cloudstorage #techinfrastructure #storagenetworking #storageoptimization #digitaltransformation #storageperformance #storagebackup #storagegrowth #dataprotection #storageindustry #storagedeployment #techstack

Research Scope:

· Estimate and forecast the overall market size across type, application, and region

· Detailed information and key takeaways on qualitative and quantitative trends, dynamics, business framework, competitive landscape, and company profiling

· Identify factors influencing market growth and challenges, opportunities, drivers, and restraints

· Identify factors that could limit company participation in identified international markets to help properly calibrate market share expectations and growth rates

· Trace and evaluate key development strategies like acquisitions, product launches, mergers, collaborations, business expansions, agreements, partnerships, and R&D activities

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with highest quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of the quality of the deliverables, robust & transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC 16192, Coastal Highway, Lewes DE 19958 E-mail: [email protected] Phone: +1–833–761–1700 Website: https://www.globalinsightservices.com/

How Tata Technologies Accelerates Innovation To Power Future Of Mobility

For next-generation vehicle manufacturers, speed and innovation are paramount. As the demand for cutting-edge technology grows, engineering service providers must evolve to meet the fast-changing expectations of modern OEMs. Tata Technologies has been at the forefront of this transformation, expanding its capabilities across multiple segments to help new-age automakers accelerate development cycles and seamlessly integrate software-defined vehicle (SDV) solutions.

As Marc Manns, Vehicle Line Director — EE at Tata Technologies, explains, over-the-air (OTA) updates are becoming essential, enabling manufacturers to introduce bug fixes, cybersecurity patches, and new features iteratively — enhancing vehicle performance post-production.

In a recent project, the company played a crucial role in rescuing a struggling OEM, stepping in just three months before launch to conduct a gap analysis and develop an OTA solution within six months. By deploying the right expertise and ensuring on-ground presence, the company helped accelerate the project timeline to under two years, significantly faster than conventional development cycles.

For emerging automotive players, agility is key, and Tata Technologies continues to redefine collaboration, providing tailored solutions that enable next-gen manufacturers to bring vehicles to market faster, smarter, and more efficiently, he stated.

Bridging Knowledge Gaps

As emerging technologies such as satellite communications, V2X, AI, and Machine Learning continue to reshape mobility, engineering service providers must bridge interdisciplinary knowledge gaps without slowing down development. Tata Technologies addresses this challenge through targeted training, cross-industry collaboration, and knowledge-sharing initiatives.

The company has established Tech Varsity, an internal training programme, along with platforms like LinkedIn Leap to upskill employees and onboard new talent. Additionally, cross-project learning ensures that expertise gained from one engagement is quickly disseminated across teams and regions, enhancing agility and accelerating development.

Advancing Connectivity With Satellite Solutions

In the world of SDVs, seamless connectivity is critical. Tata Technologies is exploring satellite-based solutions to complement 5G networks, ensuring uninterrupted connectivity even in areas prone to signal dropouts. Collaborating with partners like CesiumAstro, the company is working on intelligent network switching — leveraging AI and digital twins to predict dropouts and seamlessly transition between networks, maintaining continuous communication with cloud-based vehicle systems, he said.

AI-driven predictive analytics plays a crucial role in optimising connectivity, enhancing user experience, and improving safety. The company is harnessing automation, AI, and ML to anticipate network disruptions and make real-time decisions, ensuring that next-generation vehicles stay smarter, safer, and always connected.

Overcoming Edge Computing Challenges

Scaling ML to embedded edge devices presents several challenges in the automotive industry, particularly regarding latency, hardware constraints, power efficiency, and storage limitations. These factors are critical in ensuring that safety systems function reliably without delays, especially in real-time applications.

According to Manns, Tata Technologies is actively addressing these challenges by implementing a hybrid edge-cloud approach. This strategy involves offloading complex, ML-intensive tasks to the cloud, while ensuring that critical real-time processing remains at the edge. Selecting the right hardware is also essential. The company collaborates with OEMs to integrate specialized AI acceleration chips, such as Qualcomm Snapdragon, which optimize latency, performance, and power efficiency.
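
As a rough illustration of the hybrid split described above, the sketch below assigns each task to the edge or the cloud using a latency budget and a safety flag. The task names, the 100 ms round-trip figure, and the `place_task` helper are hypothetical and are not taken from Tata Technologies' implementation.

```python
from dataclasses import dataclass

# Assumed round-trip time to the cloud, in milliseconds (illustrative value).
CLOUD_ROUND_TRIP_MS = 100.0


@dataclass(frozen=True)
class Task:
    name: str
    deadline_ms: float      # maximum tolerable response time
    safety_critical: bool   # must keep working without connectivity


def place_task(task: Task, cloud_rtt_ms: float = CLOUD_ROUND_TRIP_MS) -> str:
    """Return "edge" or "cloud" for a task.

    Safety-critical work, and anything whose deadline is shorter than the
    cloud round trip, stays on the vehicle; everything else is offloaded.
    """
    if task.safety_critical or task.deadline_ms < cloud_rtt_ms:
        return "edge"
    return "cloud"


# Hypothetical workloads, chosen only to exercise both branches.
tasks = [
    Task("emergency_braking", deadline_ms=10, safety_critical=True),
    Task("lane_keeping", deadline_ms=50, safety_critical=True),
    Task("route_optimisation", deadline_ms=2000, safety_critical=False),
    Task("fleet_model_retraining", deadline_ms=3_600_000, safety_critical=False),
]

placement = {t.name: place_task(t) for t in tasks}
for name, target in placement.items():
    print(f"{name}: {target}")
```

A real system would also weigh power draw, storage limits, and current link quality, which the article names as constraints; this sketch covers latency only.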

Each OEM’s journey towards SDVs is unique, and Tata Technologies works closely with them to tailor the right balance between edge and cloud computing. By leveraging cutting-edge hardware and intelligent workload distribution, the company ensures that vehicles remain safe, efficient, and compliant with regulatory standards — pushing the boundaries of next-generation automotive technology, he pointed out.

Digital Passports

As sustainability and regulatory compliance take centre stage in the electric vehicle industry, battery passports are emerging as a critical solution for tracking battery lifecycle from raw material sourcing to recycling. Tata Technologies is actively developing its own battery passport solution, collaborating with OEMs and battery manufacturers to ensure traceability, compliance, and sustainability, he mentioned.

According to Manns, the battery passport will provide end-to-end visibility, enabling the tracking of battery characteristics from mining and raw-material suppliers through OEMs and aftermarket services to eventual recycling. The solution integrates static data from manufacturing with real-time vehicle data via cloud connectivity, ensuring compliance with evolving global regulations in the EU, California, India, and China. The company is also incorporating blockchain technology to enhance security and traceability, reinforcing trust and accountability across the battery supply chain, he said.
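
To make the traceability idea concrete, here is a minimal sketch of a tamper-evident lifecycle log in which each entry is hashed together with the previous entry's hash. It is a simplified stand-in for the blockchain component mentioned above, not a description of Tata Technologies' solution; the field names and the `append_event` and `verify` helpers are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(payload: dict, prev_hash: str) -> str:
    """Hash a lifecycle event together with the previous entry's hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_event(chain: list, payload: dict) -> None:
    """Append a lifecycle event, linking it to the last entry in the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": record_hash(payload, prev_hash),
    })


def verify(chain: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != record_hash(entry["payload"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical events: static manufacturing data plus in-vehicle telemetry.
passport = []
append_event(passport, {"stage": "mining", "material": "lithium"})
append_event(passport, {"stage": "manufacturing", "capacity_kwh": 75})
append_event(passport, {"stage": "in_vehicle", "state_of_health": 0.97})

intact_before = verify(passport)             # chain as originally written
passport[1]["payload"]["capacity_kwh"] = 90  # simulate tampering
intact_after = verify(passport)              # tampering is detected

print(intact_before, intact_after)
```

A production ledger would add signatures and distributed consensus; the hash chain alone only shows how an altered record becomes detectable.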

Shaping The Future Of Vehicle Lifecycle Management

As vehicles become increasingly software-driven, digital passports are gaining prominence in managing vehicle history, maintenance, and compliance. While digital passports are already in use for commercial vehicles in Europe and the US, the concept is expected to expand into passenger cars, including internal combustion engine (ICE) vehicles.

Prognostic solutions, initially developed for EVs, are now being explored for ICE vehicles, particularly as their lifespan extends in certain markets. A digital ID can provide a transparent and tamper-proof record of a vehicle’s history, helping to prevent fraud, improve resale value, and enhance regulatory compliance.

Manns emphasizes that digital passports will play a crucial role in building consumer trust, facilitating better maintenance tracking, ensuring compliance, and streamlining enforcement mechanisms. As the automotive industry shifts toward connected and intelligent mobility solutions, battery and digital passports will redefine lifecycle management, driving transparency, efficiency, and sustainability, he summed up.

Author: Marc Manns, Vehicle Line Director — EE at Tata Technologies.

Source: https://www.tatatechnologies.com/media-center/how-tata-technologies-accelerates-innovation-to-power-future-of-mobility/

0 notes

Text

The Future of White Box Servers: Market Outlook, Growth Trends, and Forecasts

The global white box server market size is estimated to reach USD 44.81 billion by 2030, according to a new study by Grand View Research, Inc., progressing at a CAGR of 16.2% during the forecast period. Increasing adoption of open source platforms such as Open Compute Project and Project Scorpio coupled with surging demand for micro-servers and containerization of data centers is expected to stoke the growth of the market. Spiraling demand for low-cost servers, higher uptime, and a high degree of customization and flexibility in hardware design are likely to propel the market over the forecast period.

A white box server can be considered as a customized server built either by assembling commercial off-the-shelf components or unbranded products supplied by Original Design Manufacturers (ODM) such as Supermicro; Quanta Computers; Inventec; and Hon Hai Precision Industry Company Inc. These servers are easier to design for custom business requirements and can offer improved functionality at a relatively cheaper cost, meeting an organization’s operational needs.

Evolving business needs of major cloud service and digital platform providers such as AWS, Google, Microsoft Azure, and Facebook are leading to increased adoption of white box servers. Low cost, varying levels of flexibility in server design, ease of deployment, and increasing need for server virtualization are poised to stir up the adoption of white box servers among enterprises.

Data Analytics and cloud adoption with increased server applications for processing workloads aided by cross-platform support in a distributed environment is also projected to augment the market. Open Infrastructure conducive to software-defined operations and housing servers, storage, and networking products will accentuate the market for storage and networking products during the forecast period.

Additionally, ODMs are focused on price reduction as well as innovating new energy-efficient products and improved storage solutions, which in turn will benefit the market during the forecast period. However, ODMs’ limited service and support, unreliable server lifespans, and a lack of technical expertise to design and deploy white box servers can hinder market growth over the forecast period.

White Box Server Market Report Highlights

North America held the highest market share in 2023. The growth of the market can be attributed to the high saturation of data centers and surging demand for more data centers to support new big data, IoT, and cloud services

Asia Pacific is anticipated to witness the highest growth during the forecast period due to the burgeoning adoption of mobile and cloud services. Presence of key manufacturers offering low-cost products will bolster the growth of the regional market

The data center segment is estimated to dominate the white box server market throughout the forecast period owing to the rising need for computational power to support mobile, cloud, and data-intensive business applications

X86 servers held the largest market revenue share in 2023. Initiatives such as the open compute project encourage the adoption of open platforms that work with white box servers

Curious about the White Box Server Market? Get a FREE sample copy of the full report and gain valuable insights.

White Box Server Market Segmentation

Grand View Research has segmented the global white box server market on the basis of type, processor, operating system, application, and region:

White Box Server Type Outlook (Revenue, USD Million, 2018 - 2030)

Rackmount

GPU Servers

Workstations

Embedded

Blade Servers

White Box Server Processor Outlook (Revenue, USD Million, 2018 - 2030)

X86 servers

Non-X86 servers

White Box Server Operating System Outlook (Revenue, USD Million, 2018 - 2030)

Linux

Windows

UNIX

Others

White Box Server Application Outlook (Revenue, USD Million, 2018 - 2030)

Enterprise Customs

Data Center

White Box Server Regional Outlook (Revenue, USD Million, 2018 - 2030)

North America: US, Canada, Mexico

Europe: UK, Germany, France

Asia Pacific: China, India, Japan, Australia, South Korea

Latin America: Brazil

Middle East and Africa (MEA): UAE, Saudi Arabia, South Africa

Key Players in the White Box Server Market

Super Micro Computer, Inc.

Quanta Computer Inc.

Equus Computer Systems

Inventec

SMART Global Holdings, Inc.

Advantech Co., Ltd.

Radisys Corporation

Hyve Solutions

Celestica Inc.

Order a free sample PDF of the White Box Server Market Intelligence Study, published by Grand View Research.

0 notes

Text

What Does a Bookkeeping Services Business Do?

A bookkeeping services business helps other companies manage their financial records and processes. Typical offerings include:

Recording financial transactions

Reconciling bank and credit card statements

Managing accounts payable and receivable

Generating financial reports

Processing payroll

Preparing documents for tax filing

Some businesses expand their offerings to include advisory services, budgeting assistance, or specialized industry-focused bookkeeping.

Steps to Start a Bookkeeping Business

Build Your Skills and Credentials: While formal education isn’t mandatory, having a certification in bookkeeping or accounting adds credibility. Certifications such as QuickBooks ProAdvisor, Xero Certification, or membership in professional organizations like the American Institute of Professional Bookkeepers (AIPB) can enhance your reputation.

Develop a Business Plan: Outline your target market, services, pricing structure, and marketing strategy. A well-thought-out business plan helps guide your efforts and attracts potential investors or lenders if needed.

Register Your Business: Choose a name and register your business according to local regulations. Decide on a business structure (sole proprietorship, LLC, etc.) that suits your goals and tax preferences.

Invest in Tools and Technology: High-quality accounting software is essential for managing client accounts efficiently. Popular options include QuickBooks, Xero, FreshBooks, and Wave. Additionally, invest in secure data-sharing platforms to protect sensitive client information.

Set Up Your Office: Whether you operate from home or rent office space, ensure your setup is professional and conducive to productivity. A reliable computer, internet connection, and secure data storage are crucial.

Define Your Services and Pricing: Decide whether you’ll charge an hourly rate, a flat fee, or a subscription model. Pricing should reflect the complexity of tasks, industry standards, and your expertise.

Obtain Necessary Licenses and Insurance: Depending on your location, you may need specific permits to operate. Business liability insurance and errors and omissions (E&O) insurance can protect you from potential legal issues.

Market Your Business: Build a strong online presence with a professional website and active social media profiles. Leverage digital marketing tools like SEO, Google Ads, and email campaigns to attract clients. Networking and word-of-mouth referrals are also powerful for building your client base.

Growing Your Bookkeeping Business

Focus on Customer Relationships: Provide excellent service to retain clients and gain referrals. Offer regular updates, proactive insights, and personalized support.

Specialize in an Industry: Narrowing your focus to a specific niche (e.g., medical practices, retail, or non-profits) can help you stand out and attract clients looking for expertise in their field.

Stay Updated on Industry Trends: The financial world evolves rapidly, with new regulations, technologies, and best practices emerging regularly. Continuous learning ensures your services remain competitive and compliant.

Expand Your Team: As your business grows, consider hiring additional staff or subcontractors to handle the workload. Ensure they are qualified and align with your company’s values and standards.

Offer Value-Added Services: Beyond bookkeeping, you can provide budgeting assistance, financial analysis, or advisory services to deepen client relationships and increase revenue.

Leverage Technology for Efficiency: Automate repetitive tasks using tools like bookkeeping software, AI-powered apps, or cloud platforms. This improves accuracy and saves time, allowing you to take on more clients.

Challenges and How to Overcome Them

Competition: Differentiate yourself through exceptional customer service, niche expertise, or unique offerings.

Client Retention: Regular communication, transparency, and personalized service foster loyalty.

Time Management: Invest in time-tracking tools and prioritize tasks to stay organized as your business grows.

The Future of Bookkeeping Services Businesses

With increasing reliance on data-driven decisions and financial transparency, the role of bookkeepers is more vital than ever. By focusing on technology, client satisfaction, and continuous improvement, a bookkeeping services business can thrive in the ever-evolving financial landscape.

If you’re ready to launch your bookkeeping business, start small, build trust, and scale gradually. Success lies in your ability to meet the financial needs of businesses while providing outstanding value and expertise.

0 notes

Text

Energy Innovations - Innovations in Infrastructure, Technology, Resources and Energy

We're a global specialist across sectors such as infrastructure, technology, resources and energy innovations in Lake Macquarie. Our people share their ideas, analysis and capabilities.

Allambi Care and Lake Macquarie Council have partnered up to power-up their operations using solar panels. They're one of a number of local organisations joining Council in the Cities Power Partnership, which has more than 170 members.

1. Electric Vehicle Charging Strategy

With electric vehicles (EVs) gaining traction in Australia and worldwide, Lake Macquarie City Council required strategic, technical, and business model input for the development of its EV charging infrastructure strategy.

The City currently operates seven EV chargers across the city including sites at Redhead, Charlestown, Morisset, Dudley and the Speers Point administration building. These 22 kilowatt chargers are installed on power poles and are connected directly to the overhead electricity supply, with energy use matched with 100% accredited GreenPower.

The EV chargers are designed to charge most EV models to 80 per cent capacity in four hours. Using intelligent software, the chargers will determine the best time to recharge for each vehicle. Fleet operators, such as bus depots and logistics centers, can schedule EV charging according to the required range and departure times of each vehicle.
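
The scheduling idea can be sketched as a small calculation: given a departure time and a required range, work out the latest moment charging can start. The 22 kW rating comes from the text; the consumption figure, the example vehicle, and the `latest_start` helper are illustrative assumptions, and real chargers taper power as the battery fills.

```python
from datetime import datetime, timedelta

CHARGER_POWER_KW = 22.0  # charger rating quoted in the text


def latest_start(departure: datetime, required_range_km: float,
                 consumption_kwh_per_km: float,
                 charger_kw: float = CHARGER_POWER_KW) -> datetime:
    """Latest time charging can begin and still deliver the required range.

    Assumes constant charging power and no losses, which is a simplification.
    """
    energy_kwh = required_range_km * consumption_kwh_per_km
    hours_needed = energy_kwh / charger_kw
    return departure - timedelta(hours=hours_needed)


# Hypothetical vehicle: leaves at 06:00 and needs 220 km at 0.2 kWh/km.
departure = datetime(2024, 7, 1, 6, 0)
start = latest_start(departure, required_range_km=220,
                     consumption_kwh_per_km=0.2)
print(f"Start charging no later than {start:%H:%M}")
```

A fleet operator could run the same calculation per vehicle and sort by the result to decide which vehicles get a charger first.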

2. Smart Metering

The rollout of smart meters gives customers real-time access to their energy data, empowering them to make choices and changes that reduce their electricity consumption and bills. This is an essential enabler of more efficient energy networks, helping the UK meet its carbon targets.

Smart meters are connected to a national communications network from the outset, making them compatible with all energy suppliers from day one. However, many early-generation meters were installed with the energy supplier’s own communication systems, which means customers will lose some smart functionality if they switch provider.

Deployment constitutes a significant component of the overall cost of a smart transformation program. A dedicated deployment operations capability is essential to define a clear and appropriate deployment strategy that aligns to the achievement of strategic objectives. This enables the identification and rectification of bottlenecks, continuous improvement through customer feedback and the implementation of innovative solutions to improve deployment efficiency. Ultimately, this leads to the delivery of quality outcomes for the program.

3. Energy Efficiency

Energy innovations drive global sustainability goals, including reducing greenhouse gas emissions and enabling the transition to renewable and sustainable energy. Leading forms of green energy innovation include advancements in solar photovoltaic technology, improved battery storage capabilities and smart grids.

A local not for profit community organisation has joined forces with Lake Macquarie City Council and Pingala, a member-owned solar cooperative, to bring the Hunter region’s first community-funded renewable energy project to life. The Allambi Care solar project allows the community to fund a solar system at the organisation’s Charlestown headquarters, with returns of 5 to 8 per cent p.a.

The community-funded project will help Allambi Care save on electricity costs and reduce its environmental footprint by generating its own clean energy through a rooftop solar panel installation in Lake Macquarie and Newcastle. The system will be connected to Ausgrid’s electricity network and provide an onsite, renewable energy source that will be used to offset the City’s remaining carbon emissions from other sources.

4. Energy Storage

The cost of solar and energy storage is falling fast. In China, the world’s biggest power market, there are plans to double ESS capacity over the next five years.

Lake Macquarie City Council is an early adopter of onsite generation with 15 of its buildings now having solar power installed. This enables the business to save on electricity costs and reduce its impact on the environment.

In partnership with Allambi Care, the City is launching its first community-funded renewable energy project – Pingala. It invites local community members to invest in solar power for Allambi Care and receive a return on their investment of between 5 and 8 per cent a year.

Eku Energy, a Macquarie Asset Management portfolio company, develops, builds and actively manages energy storage assets to enable reliable, clean electricity supplies. The team uses a combination of local partnerships and a data-driven understanding of global financial and energy markets to find the right investment solutions for its projects.

0 notes

Text

Global Enterprise Storage Market Analysis 2024: Size Forecast and Growth Prospects

The enterprise storage global market report 2024 from The Business Research Company provides comprehensive market statistics, including global market size, regional shares, competitor market share, detailed segments, trends, and opportunities. This report offers an in-depth analysis of current and future industry scenarios, delivering a complete perspective for thriving in the enterprise storage market.

Enterprise Storage Market, 2024 report by The Business Research Company offers comprehensive insights into the current state of the market and highlights future growth opportunities.

Market Size - The enterprise storage market size has grown strongly in recent years. It will grow from $135.10 billion in 2023 to $146.36 billion in 2024 at a compound annual growth rate (CAGR) of 8.3%. The growth in the historic period can be attributed to increased focus on hybrid clouds, the need to manage and store large volumes of data, the rapid growth of the internet of things (IoT), the rise of big data analytics, and the need for data protection.

The enterprise storage market size is expected to see strong growth in the next few years. It will grow to $202.59 billion in 2028 at a compound annual growth rate (CAGR) of 8.5%. The growth in the forecast period can be attributed to increasing demand for storage solutions in enterprises, growing adoption of cloud-based storage solutions, adoption of cloud computing, increasing demand for data storage, and rising demand for enterprises. Major trends in the forecast period include technological advancements, the emergence of artificial intelligence and machine learning, flash storage expansion, the adoption of software-defined storage, and hybrid cloud storage adoption.
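
The growth rates quoted above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the market sizes given in the text; the `cagr` helper is an illustrative name, not something taken from the report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.085 means 8.5%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in USD billion, as quoted in the text.
growth_2023_2024 = cagr(135.10, 146.36, years=1)
growth_2024_2028 = cagr(146.36, 202.59, years=4)

print(f"2023-2024: {growth_2023_2024:.1%}")
print(f"2024-2028: {growth_2024_2028:.1%}")
```

To one decimal place, both results should agree with the 8.3% and 8.5% figures stated in the report summary.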

Order your report now for swift delivery @ https://www.thebusinessresearchcompany.com/report/enterprise-storage-global-market-report

Scope Of Enterprise Storage Market The Business Research Company's reports encompass a wide range of information, including:

Market Size (Historic and Forecast): Analysis of the market's historical performance and projections for future growth.

Drivers: Examination of the key factors propelling market growth.

Trends: Identification of emerging trends and patterns shaping the market landscape.

Key Segments: Breakdown of the market into its primary segments and their respective performance.

Focus Regions and Geographies: Insight into the most critical regions and geographical areas influencing the market.

Macro Economic Factors: Assessment of broader economic elements impacting the market.

Enterprise Storage Market Overview

Market Drivers - The rising adoption of cloud computing is expected to propel the growth of the enterprise storage market going forward. Cloud computing is the on-demand delivery of computing services via the internet, which includes servers, storage, databases, networking, software, and analytics. Demand for cloud computing is rising as it offers a robust infrastructure with built-in redundancy and failover mechanisms to ensure high availability and reliability. Cloud computing provides flexible storage that can be adjusted to company requirements, removing the need for significant hardware investments. It also ensures data availability and integrity, where enterprises can replicate data across multiple geographic locations and leverage built-in disaster recovery solutions to minimize the risk of data loss and downtime. For instance, in December 2023, according to the Statistical Office of the European Union, a Europe-based government organization, 45.2% of EU enterprises purchased cloud computing services. This marks a 4.2 percentage point (pp) increase compared with 2021. Therefore, the rising adoption of cloud computing is driving the growth of the enterprise storage market.

Market Trends - Major companies operating in the enterprise storage market are focused on developing automation and orchestration solutions, such as software-defined storage platforms, to remove hardware dependencies and provide core features for secure enterprise storage. Software-defined storage platforms are a next-generation storage model designed for complex workflows, offering multi-tenancy, ease of management, security, and efficiency. For instance, in November 2023, DataDirect Networks, a US-based provider of data storage and data management solutions, launched DDN Infinia for Enterprise AI and Cloud. DDN Infinia is positioned as a faster and more cost-effective alternative to cloud storage, allowing users to construct a secure storage cluster in minutes, execute upgrades, and extend capacity without downtime. It offers scalable metadata management with scalable storage for data governance and control while working to reduce the complexity and duplication of data and metadata. It supports S3 object storage, Docker containers, and OpenStack virtual machines, allowing users to manage all distributed data with minimal effort and cost.

The enterprise storage market covered in this report is segmented –

1) By Type: Storage Area Networks Systems (SANs), Network-Attached Storage Systems, Direct-Attached Storage (DAS) Systems, Object Storage Systems, Tape Storage Systems

2) By Deployment: On-Premise, Hybrid, Cloud-Based

3) By Application: Large Enterprises, Small And Medium Enterprises (SMEs)

4) By End-User Industry: Information Technology (IT) And Telecommunications (Telecom), Banking, Financial Services, And Insurance (BFSI), Healthcare, Manufacturing, Government, Other End-User Industries

Get an inside scoop of the enterprise storage market, Request now for Sample Report @ https://www.thebusinessresearchcompany.com/sample.aspx?id=15365&type=smp

Regional Insights - North America was the largest region in the enterprise storage market in 2023. The regions covered in the enterprise storage market report are Asia-Pacific, Western Europe, Eastern Europe, North America, South America, Middle East, Africa.

Key Companies - Major companies operating in the enterprise storage market are Samsung Electronics Co. Ltd., Dell Inc., Huawei Technologies Co. Ltd., Lenovo Group Ltd., Intel Corporation, HP Inc., International Business Machines Corporation, Cisco Systems Inc., Oracle Corporation, Broadcom Inc., Fujitsu Limited, Micron Technology Inc., Toshiba Corporation, NEC Corporation, NetApp Inc., Hitachi Vantara LLC, Pure Storage Inc., Nutanix Inc., Imation Corporation, DataDirect Networks, Tintri Inc., Overland Tandberg, Nimbus Data, Nfina Technologies Inc., DATROX Computer Technologies Inc.

Table of Contents

1. Executive Summary

2. Enterprise Storage Market Report Structure

3. Enterprise Storage Market Trends And Strategies

4. Enterprise Storage Market – Macro Economic Scenario

5. Enterprise Storage Market Size And Growth

…..

27. Enterprise Storage Market Competitor Landscape And Company Profiles

28. Key Mergers And Acquisitions

29. Future Outlook and Potential Analysis

30. Appendix

Contact Us:

The Business Research Company

Europe: +44 207 1930 708

Asia: +91 88972 63534

Americas: +1 315 623 0293

Email: [email protected]

Follow Us On:

LinkedIn: https://in.linkedin.com/company/the-business-research-company

Twitter: https://twitter.com/tbrc_info

Facebook: https://www.facebook.com/TheBusinessResearchCompany

YouTube: https://www.youtube.com/channel/UC24_fI0rV8cR5DxlCpgmyFQ

Blog: https://blog.tbrc.info/

Healthcare Blog: https://healthcareresearchreports.com/

Global Market Model: https://www.thebusinessresearchcompany.com/global-market-model

0 notes

Text

Virgin Media O2 Data Sharing With BigQuery Analytics Hub

How BigQuery Analytics Hub streamlined internal data exchange for Virgin Media O2

Data sharing made simple is now a vital tool for any company looking to make well-informed decisions. Nevertheless, a lot of businesses still find it difficult to share data in an efficient and legal manner. Uncertain governance, version control problems, data silos, access limitations, and a lack of data management expertise within the larger organization are common obstacles that data teams must overcome.

Virgin Media O2, a media and telecoms company, uses internal data sharing to drive strategy, enhance operations, and give decision makers more authority. The data team supports every department for timely and reliable information, from marketing to finance.

Virgin Media O2 required a system that would facilitate governance and data access amongst business units. Without it, it wouldn’t be able to achieve org-level visibility and efficiency and would be stuck without a centralized data-sharing method.

Overcoming obstacles to internal data-sharing

Because teams were already working on Google Cloud-based projects, strong version control was necessary to guarantee that the data was always correct, consistent, and up to date. However, this frequently prolonged the time required to generate new insights. Virgin Media O2 already held its corporate data in BigQuery to meet its enterprise and AI needs. One way to build on that existing infrastructure was therefore to use Analytics Hub, BigQuery’s data sharing feature.

After learning about BigQuery Analytics Hub’s scalability, self-service capabilities, and straightforward governance mechanism for data tagging and quality, the data platform team made the decision to trial the product. This final aspect, in particular, was in line with enhancing the implementation of privacy by design.

Following a successful trial, Virgin Media O2 developed a well-defined onboarding and training procedure. Two owners were assigned to each new data exchange, and two owners were assigned on the subscriber side, to facilitate the tracking of any actions within BigQuery. Over the following nine months, this method was expanded to 25 teams, over 50 exchanges, 100 listings, 500 tables, and around 300 daily users.

One of the main advantages the team discovered is that BigQuery Analytics Hub does not duplicate data, which saves on network and storage expenses. It accomplishes this by generating a shareable real-time pointer to the underlying dataset, referred to as a linked dataset. As a result, any subscriber can access updated data instantly, and it is simple to audit, trace, and restore the original data source. This approach also provides a safety net for disaster recovery.
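
The difference between sharing a pointer and sharing a copy can be illustrated with a plain-Python analogy. This is not the BigQuery or Analytics Hub API; the `Dataset` and `LinkedDataset` classes and the sample rows are hypothetical and exist only to show why a subscriber holding a reference sees the publisher's updates immediately.

```python
class Dataset:
    """Publisher-owned table data (a stand-in for a shared dataset)."""

    def __init__(self, rows):
        self.rows = list(rows)


class LinkedDataset:
    """Read-only reference to a publisher's dataset; stores no rows itself."""

    def __init__(self, source: Dataset):
        self._source = source

    @property
    def rows(self):
        # Always read through to the source, so subscribers see current data.
        return tuple(self._source.rows)


published = Dataset([{"id": 1, "plan": "fibre"}])

subscriber_view = LinkedDataset(published)  # zero-copy style share
snapshot_copy = list(published.rows)        # what a copy-based share holds

# The publisher adds a row after the share was set up.
published.rows.append({"id": 2, "plan": "mobile"})

print(len(subscriber_view.rows))  # reflects the publisher's update
print(len(snapshot_copy))         # stale until someone re-copies it
```

The copy also has to be stored and transferred separately, which is the network and storage cost the article says the linked approach avoids.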

BigQuery Analytics Hub also resolves the complexity associated with building views, notably the fact that authorized views frequently cause original table metadata to be lost when accessing data. Subscribers have direct access to the original dataset, so all table descriptions and columns remain visible.

Virgin Media O2 was able to save time, lower latency, and improve management and usability for publishers and subscribers by connecting data directly from the data publisher to a data subscriber. Additionally, the platform’s enhanced governance offered a centralized location for managing data access and quality.

BigQuery Analytics Hub minimizes human labor and mistakes by streamlining the data sharing process between teams and business divisions. Within Virgin Media O2, the platform has been especially helpful to software developers, analytics engineers, data scientists, data engineers, and analysts. It guarantees that everyone has instant access to the data they require for their different jobs.

Using data that is more readily available than ever to save time

After BigQuery Analytics Hub was rolled out to about 25 squads, the solution saved up to 30 hours a week that squads had previously spent on training, support, pipeline deployment issues, and communication overhead under the old approach. Because problems are now nearly nonexistent, the weekly time spent across all teams is as little as thirty minutes. According to the team, this amounts to an effort saving of about 95%. Data is no longer stored in silos and is now widely accessible to the departments that require it.

By creating a dashboard, the team was able to democratize data access for subscribers and their larger teams without requiring users to work in BigQuery Analytics Hub directly. Allowing users to subscribe to datasets makes self-service possible, and cutting out the intermediary simplifies and expedites what was a tedious procedure while preserving a strong governance framework.

Principal advantages of secure data exchange

Security and Integrity of Data

By guarding against metadata loss and unwanted access, secure, zero-copy sharing guarantees consistent data integrity across departments.

Economy of Cost and Streamlined Administration

The platform lowers operational overhead and long-term costs by doing away with data transfer, and data oversight may be efficiently handled by a small workforce.

Centralized Administration and Observation

Real-time control over data sharing operations is made possible by a single dashboard, which also enforces stringent access and authorization regulations and enables prompt issue detection.

The group’s future priorities include streamlining four important areas: data ownership, data catalog, data quality measurements, and more efficient sensitive data tagging. To achieve the goal of automating the complete data activity, these four areas need to be precisely specified and, after data is certified, strictly adhered to as a policy via BigQuery Analytics Hub.

The aforementioned procedure, referred to as “data certification,” has two main advantages:

Data quality measurements (at the column level) and data lineage make it possible to identify uncertified data assets and track down data quality problems in a matter of minutes.

Real-time audit logs identify sensitive data and track its consumption as it happens, allowing data privacy issues to be controlled proactively.
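
One way to picture the column-level quality check is the sketch below. It assumes completeness (the share of non-null values) as the quality measure and a threshold of 0.95; both are illustrative assumptions, as are the function names and the sample rows. The post does not say which measurements Virgin Media O2 actually uses.

```python
def column_completeness(rows, column):
    """Share of rows where `column` is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def uncertified_columns(rows, columns, threshold=0.95):
    """Return columns whose completeness falls below the threshold."""
    failing = {}
    for column in columns:
        score = column_completeness(rows, column)
        if score < threshold:
            failing[column] = score
    return failing


# Hypothetical sample data: `postcode` is missing in half of the rows.
sample = [
    {"customer_id": 1, "postcode": "AB1 2CD"},
    {"customer_id": 2, "postcode": None},
    {"customer_id": 3, "postcode": "EF3 4GH"},
    {"customer_id": 4, "postcode": None},
]
failing = uncertified_columns(sample, ["customer_id", "postcode"])
print(failing)
```

A dataset would count as certified under this sketch only when `uncertified_columns` returns an empty result for every column that the policy covers.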

BigQuery is the best place for users who are new to Google Cloud to begin their adventure. After BigQuery, Analytics Hub is the next best thing for users.

Read more on Govindhtech.com


Mobility - Voice and Data Procurement Intelligence Shaping the Future 2024-2030

The mobility - voice and data category is projected to grow at a CAGR of 6.5% from 2024 to 2030. In 2023, Asia Pacific accounted for the largest share (32%) of the global category. Key drivers in this region include a consistent increase in the business/enterprise subscriber base for voice and data, deployment of VoNR (5G) networks by key players, and a shift towards virtualization and software-defined networking (SDN). Asia Pacific is also anticipated to witness the fastest growth rate during the forecast period, due to the increasing need for business agility, a focus on cost efficiency by businesses, and adoption of digital technologies such as the Internet of Things (IoT), cloud computing, and Artificial Intelligence (AI).
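
To make the growth rate concrete, the sketch below compounds a 6.5% CAGR over the six years from 2024 to 2030. The report excerpt gives no market size, so the starting value of 100 is an index chosen purely for illustration, and the function name is hypothetical.

```python
def compound(start_value, annual_rate, years):
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1 + annual_rate) ** years


base_index = 100.0   # illustrative index for 2024, not a market size
rate = 0.065         # CAGR stated in the report
years = 2030 - 2024  # six compounding periods

index_2030 = compound(base_index, rate, years)
print(f"2030 index: {index_2030:.1f}")  # prints "2030 index: 145.9"
```

Under these assumptions, the category would end the forecast period roughly 46% larger than it started.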

In 2023, North America held the second-largest share of the global market. The key growth drivers include large coverage of 5G networks by key service providers, increasing adoption of edge computing infrastructure, and focus on sustainability initiatives. Key driving factors for Europe include extensive voice and data coverage in remote areas owing to government initiatives, increasing deployment of high-speed fiber optic networks, and focus on cyber security.

Key technologies driving the growth of this category include AI and Machine Learning (ML), quantum computing, edge computing, cloud computing and virtualization, and IoT integration. Edge computing is used to enhance the processing and storage of data, reduce latency, improve bandwidth, and enhance customer experience. By using a distributed network architecture, this technology processes real-time data at quicker speeds. Similarly, the use of virtualization enhances network infrastructure utilization, reduces hardware costs, and improves agility, by using virtual machines in place of hardware resources.

Order your copy of the Mobility - Voice and Data Procurement Intelligence Report, 2024 - 2030, published by Grand View Research, to get more details regarding day one, quick wins, portfolio analysis, key negotiation strategies of key suppliers, and low-cost/best-cost sourcing analysis

Key service providers of mobility - voice and data services compete based on various factors such as subscription rates, innovations in technologies and services, user experience, scalability of services, and data privacy and security. Clients (specifically business customers) consider factors such as service type (4G/5G), service deployment (cloud/on-premise), service transmission (wireline/wireless), service reliability (uptime/downtime), service speeds (download speeds typically range from 100 Mbps to 1 Gbps), and extent of network coverage (based on area covered). Business customers may also look for add-on features such as call routing, data integration linking, caller identification, and call monitoring.

The cost of mobility - voice and data services is influenced by several factors, such as network infrastructure costs (for example, the installation costs of towers), investments in upgrading technologies, costs of acquiring spectrum, and licensing and compliance expenses. For instance, considerable investments are required to upgrade VoLTE (4G) infrastructure to VoNR (5G).

Clients commonly follow a full services outsourcing model to engage with service providers, as it helps them minimize operational costs, improve operational efficiency, ensure regulatory compliance, and increase focus on core activities.

The COVID-19 pandemic caused substantial disruption in the global mobility - voice and data category. The demand in the category surged during the pandemic, as the need for voice and data services increased considerably in remote working environments. Moreover, travel restrictions and government-imposed lockdowns also fueled the requirement for these services. At the same time, technological transformations were seen in the form of quantum computing, edge computing, AI and ML, IoT, cloud computing, and virtualization.

Mobility - voice and data Sourcing Intelligence Highlights

• The mobility - voice and data category comprises a moderately consolidated landscape, with a few top competitors accounting for a significant portion of the market share.

• Countries such as Israel and Italy offer mobility - voice and data services at low cost owing to cheap labor costs, low technology costs, high smartphone adoption, intense market competition, deployment of penetration pricing, and robust government initiatives.

• Buyers in the category possess medium-to-low negotiating capability due to the moderately consolidated market landscape. Moreover, buyers have specific limitations when switching to an alternative service provider.

• Network infrastructure, labor, hardware and software, spectrum acquisition, licensing and compliance, and other costs are the key cost components of this category. Other costs include sales and marketing, general and administrative, rent and utilities, insurance, logistics, and taxes.

Browse through Grand View Research’s collection of procurement intelligence studies:

• Business Intelligence Procurement Intelligence Report, 2023 - 2030 (Revenue Forecast, Supplier Ranking & Matrix, Emerging Technologies, Pricing Models, Cost Structure, Engagement & Operating Model, Competitive Landscape)

• Data Center Hosting & Storage Services Procurement Intelligence Report, 2023 - 2030 (Revenue Forecast, Supplier Ranking & Matrix, Emerging Technologies, Pricing Models, Cost Structure, Engagement & Operating Model, Competitive Landscape)

List of Key Suppliers

• AT&T Inc.

• Broadcom Inc.

• Charter Communications, Inc.

• Cisco Systems, Inc.

• Comcast Corporation

• Deutsche Telekom AG

• Huawei Technologies Co., Ltd.

• Lumen Technologies, Inc.

• Orange S.A.

• Telefónica S.A.

• Verizon Communications Inc.

• Vodafone Group Plc.

Mobility - voice and data Category Procurement Intelligence Report Scope

• Mobility - Voice and Data Category Growth Rate: CAGR of 6.5% from 2024 to 2030

• Pricing Growth Outlook: 5% - 10% increase (Annually)

• Pricing Models: Penetration pricing, subscription-based pricing, usage-based pricing, tiered pricing, cost-plus pricing, and competition-based pricing

• Supplier Selection Scope: Cost and pricing, past engagements, productivity, geographical presence

• Supplier Selection Criteria: Geographical service provision, industries served, years in service, employee strength, revenue generated, key clientele, regulatory certifications, voice services, data services, cloud and hosting services, managed network services, unified communication services, and others

• Report Coverage: Revenue forecast, supplier ranking, supplier matrix, emerging technology, pricing models, cost structure, competitive landscape, growth factors, trends, engagement, and operating model

Brief about Pipeline by Grand View Research:

A smart and effective supply chain is essential for growth in any organization. Pipeline division at Grand View Research provides detailed insights on every aspect of supply chain, which helps in efficient procurement decisions.

Our services include (not limited to):

• Market Intelligence involving – market size and forecast, growth factors, and driving trends

• Price and Cost Intelligence – pricing models adopted for the category, total cost of ownership

• Supplier Intelligence – rich insight on the supplier landscape, identifying suppliers who are dominating, emerging, lounging, and specializing

• Sourcing / Procurement Intelligence – best practices followed in the industry, identifying standard KPIs and SLAs, peer analysis, negotiation strategies to be utilized with the suppliers, and best suited countries for sourcing to minimize supply chain disruptions


How to Choose the Right NetApp Monitoring Tool for Your Business

In IT infrastructure, ensuring the seamless operation of storage systems is paramount. NetApp, a leader in data storage solutions, offers strong platforms that cater to diverse business needs. However, the complexity and critical nature of these systems necessitate a comprehensive monitoring approach. Choosing the right NetApp monitoring tool is crucial for maintaining optimal performance, ensuring data integrity, and enhancing operational efficiency.

Understanding NetApp Monitoring Tools

A NetApp monitoring tool is designed to provide real-time insights into the performance, health, and utilization of NetApp storage systems. These tools offer detailed analytics, alerting mechanisms, and diagnostic capabilities, enabling IT teams to manage their storage environments proactively. With a myriad of options available in the market, selecting the appropriate tool requires careful consideration of several key factors.

1. Define Your Monitoring Objectives

Before evaluating specific tools, it is essential to define your monitoring objectives clearly. Determine the critical metrics you need to track, such as system performance, latency, throughput, and storage capacity. Additionally, consider the importance of real-time alerts and historical data analysis. Understanding these requirements will guide you in selecting a tool that aligns with your business goals.
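
The objectives above can be written down as explicit thresholds before any tool is evaluated. The following sketch is tool-agnostic: the metric names, units, and limits are hypothetical placeholders rather than values taken from any NetApp product.

```python
# Hypothetical objectives: metric name -> (comparison kind, limit).
OBJECTIVES = {
    "latency_ms": ("max", 5.0),          # alert when latency exceeds 5 ms
    "throughput_mb_s": ("min", 200.0),   # alert when throughput drops below 200 MB/s
    "capacity_used_pct": ("max", 80.0),  # alert when usage exceeds 80%
}


def evaluate(sample, objectives=OBJECTIVES):
    """Return a list of alert messages for metrics outside their limits."""
    alerts = []
    for metric, (kind, limit) in objectives.items():
        value = sample.get(metric)
        if value is None:
            alerts.append(f"{metric}: no data")
        elif kind == "max" and value > limit:
            alerts.append(f"{metric}: {value} above limit {limit}")
        elif kind == "min" and value < limit:
            alerts.append(f"{metric}: {value} below limit {limit}")
    return alerts


reading = {"latency_ms": 7.2, "throughput_mb_s": 350.0, "capacity_used_pct": 64.0}
for alert in evaluate(reading):
    print(alert)
```

Writing objectives in this form gives a checklist to hold candidate tools against: each one should be able to collect every listed metric and alert on every listed limit.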

2. Evaluate Compatibility and Integration

Compatibility with your existing IT infrastructure is a fundamental criterion. Ensure that the NetApp monitoring tool seamlessly integrates with your current systems, including hardware, software, and network components. Look for tools that support multi-vendor environments and offer APIs for easy integration with other IT management platforms. This will facilitate a cohesive monitoring strategy and streamline your operations.

3. Assess Scalability and Flexibility

As your business grows, so will your storage needs. It is imperative to choose a NetApp monitoring tool that can scale with your organization. Evaluate whether the tool can handle increasing data volumes and accommodate additional storage systems without compromising performance. Flexibility in deployment options, such as on-premises, cloud-based, or hybrid models, is also a crucial consideration for future-proofing your monitoring solution.

4. Analyze Usability and User Experience

A user-friendly interface and intuitive design are vital for efficient monitoring. The tool should offer customizable dashboards, easy navigation, and clear visualizations of key metrics. User experience directly impacts the efficiency of IT teams in diagnosing issues and making informed decisions. Consider conducting a trial or demo to assess the tool’s usability before making a final decision.

5. Consider Advanced Features and Capabilities

Modern NetApp monitoring tools come equipped with advanced features that enhance their utility. Look for tools that offer predictive analytics, anomaly detection, and automated remediation capabilities. These features can significantly reduce downtime, optimize resource utilization, and improve overall system reliability. Additionally, strong reporting and documentation features are essential for compliance and auditing purposes.
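
As an illustration of what anomaly detection can mean in practice, the sketch below flags latency samples that sit more than a chosen number of standard deviations from the mean (a simple z-score test). Commercial tools may well use more sophisticated models; the threshold of 2.0, the function name, and the sample values are assumptions made for the example.

```python
from statistics import mean, pstdev


def zscore_anomalies(samples, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu = mean(samples)
    sigma = pstdev(samples)
    if sigma == 0:
        return []  # all samples identical, nothing stands out
    return [(i, x) for i, x in enumerate(samples)
            if abs(x - mu) / sigma > threshold]


# Hypothetical latency readings in milliseconds, with one obvious spike.
latency_ms = [2.1, 2.0, 2.3, 1.9, 2.2, 2.0, 9.5, 2.1]
print(zscore_anomalies(latency_ms))  # prints [(6, 9.5)]
```

A single large spike inflates the standard deviation it is measured against, so a fixed z-score threshold works best on reasonably long windows of samples.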

6. Evaluate Vendor Support and Community Engagement