#WebScrapingServices


#Amazonmobileappscraping#mobileappscraping#AmazonDataScraper#ScrapingECommerceProductData#EcommerceWebScraping#WebScrapingServices#USA#Canada#Australia#Germany#UAE#UK


TripAdvisor Travel Datasets - Web Scraping TripAdvisor Travel Data

Access TripAdvisor travel datasets by scraping TripAdvisor travel data for prices, listings, availability, and review analysis.

Read More >> https://www.arctechnolabs.com/tripadvisor-travel-data-scraping-services.php

#TripAdvisorTravelDatasets#ScrapingTripAdvisorTravelData#TravelDataScrapingServices#TravelPriceDatasets#TravelWebScrapingDatasets#TravelDataScraping#WebScrapingServices#ArcTechnolabs


HomeDepot.com Product Data Extraction

HomeDepot.com Product Data Extraction: Unlocking Insights for E-commerce & Market Analysis. In today’s competitive retail landscape, businesses need accurate and updated product data to stay ahead. Whether you’re an e-commerce store, price comparison website, market researcher, or manufacturer, extracting product data from HomeDepot.com can provide valuable insights for strategic decision-making. DataScrapingServices.com specializes in HomeDepot.com Product Data Extraction, enabling businesses to access real-time product information for price monitoring, competitor analysis, and inventory optimization.

Why Extract Product Data from HomeDepot.com?

HomeDepot.com is a leading online marketplace for home improvement products, appliances, furniture, and tools. Manually tracking and updating product information is inefficient and prone to errors. Automated data extraction ensures you have structured, real-time data to make informed business decisions.

Key Data Fields Extracted from HomeDepot.com

By scraping HomeDepot.com, we extract a wide range of valuable product details, including:

- Product Name

- Product Category

- Brand Name

- SKU (Stock Keeping Unit)

- Product Description

- Price & Discounts

- Stock Availability

- Customer Ratings & Reviews

- Product Images & URLs

- Shipping & Delivery Details

Having access to this structured data allows businesses to analyze trends, optimize pricing strategies, and enhance their product offerings.
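The fields listed above map naturally onto a simple record type. The sketch below shows one way to hold a scraped product. The class name `ProductRecord`, the field names, and the types are illustrative assumptions. They are not HomeDepot.com's data model or DataScrapingServices.com's delivery format, and the sample values are made up.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional
import json


@dataclass
class ProductRecord:
    """Hypothetical container for one scraped product; field names are illustrative."""
    name: str
    category: str
    brand: str
    sku: str
    description: str
    price: float                     # current selling price
    original_price: Optional[float]  # pre-discount price, if the page shows one
    in_stock: bool
    rating: Optional[float]          # average customer rating, if any
    review_count: int
    image_urls: list[str] = field(default_factory=list)
    product_url: str = ""
    shipping_info: str = ""

    def discount_pct(self) -> Optional[float]:
        """Percentage discount versus the original price, or None if unknown."""
        if self.original_price is None or self.original_price <= 0:
            return None
        return round((self.original_price - self.price) / self.original_price * 100, 1)


record = ProductRecord(
    name="Cordless Drill Kit", category="Power Tools", brand="ExampleBrand",
    sku="SKU-12345", description="Drill with battery and charger", price=79.0,
    original_price=99.0, in_stock=True, rating=4.6, review_count=1200,
)
print(record.discount_pct())  # 20.2
print(json.dumps(asdict(record), indent=2))  # structured output, e.g. for a JSON feed
```

Keeping every product in one typed shape makes the later steps (price comparison, stock tracking, export) simpler to write and check.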

Benefits of HomeDepot.com Product Data Extraction

1. Competitor Price Monitoring

Tracking real-time product prices from HomeDepot.com helps businesses adjust their pricing strategies to stay competitive. Retailers can compare prices, identify discount trends, and optimize their pricing models to attract more customers.

2. E-commerce Product Enrichment

For e-commerce platforms, high-quality product data is essential for creating detailed and engaging product listings. Extracting accurate descriptions, specifications, and images improves customer experience and conversion rates.

3. Market & Trend Analysis

Businesses can analyze product availability, customer ratings, and sales trends to understand consumer preferences. This data helps retailers and manufacturers identify best-selling products, track demand fluctuations, and make informed inventory decisions.

4. Stock Availability & Inventory Management

Monitoring stock levels on HomeDepot.com helps businesses avoid supply chain disruptions. Retailers can use this data to anticipate demand, manage stock efficiently, and prevent product shortages or overstocking.

5. Enhanced Digital Marketing Strategies

With updated product data, businesses can create effective digital marketing campaigns by targeting the right audience with the right products. Dynamic pricing models and real-time product promotions become easier with automated data extraction.

6. Data-Driven Decision Making

From benchmarking product prices to analyzing customer reviews and ratings, extracted data helps businesses optimize their product strategies and improve customer satisfaction.

Best eCommerce Data Scraping Services Provider

G2 Business Directory Scraping

Kogan Product Details Extraction

Nordstrom Price Scraping Services

Target.com Price Data Extraction

Capterra Reviews Data Extraction

PriceGrabber Product Information Extraction

Lowe's Product Pricing Scraping

Wayfair Product Details Extraction

Homedepot Product Pricing Data Scraping

Product Reviews Data Extraction

Conclusion

Extracting product data from HomeDepot.com is a game-changer for e-commerce businesses, market researchers, and retailers looking to stay competitive, optimize pricing, and improve inventory management. At DataScrapingServices.com, we provide reliable, scalable, and customized data extraction solutions to help businesses make data-driven decisions.

For HomeDepot.com Product Data Extraction Services, contact [email protected] or visit DataScrapingServices.com today!

#homedepotproductdataextraction#extractingproductdetailsfromhomedepot#ecommerceproductscraping#productpricesscraping#leadgeneration#datadrivenmarketing#webscrapingservices#businessinsights#digitalgrowth#datascrapingexperts


Top 7 Use Cases of Web Scraping in E-commerce

In the fast-paced world of online retail, data is more than just numbers; it's a powerful asset that fuels smarter decisions and competitive growth. With thousands of products, fluctuating prices, evolving customer behaviors, and intense competition, having access to real-time, accurate data is essential. This is where internet scraping comes in.

Internet scraping (also known as web scraping) is the process of automatically extracting data from websites. In the e-commerce industry, it enables businesses to collect actionable insights to optimize product listings, monitor prices, analyze trends, and much more.

In this blog, we’ll explore the top 7 use cases of internet scraping, detailing how each works, their benefits, and why more companies are investing in scraping solutions for growth and competitive advantage.

What is Internet Scraping?

Internet scraping is the process of using bots or scripts to collect data from web pages. This includes prices, product descriptions, reviews, inventory status, and other structured or unstructured data from various websites. Scraping can be used once or scheduled periodically to ensure continuous monitoring. It’s important to adhere to data guidelines, terms of service, and ethical practices. Tools and platforms like TagX ensure compliance and efficiency while delivering high-quality data.

In e-commerce, this practice becomes essential for businesses aiming to stay agile in a saturated and highly competitive market. Gathering data manually is time-consuming and prone to errors. Internet scraping automates the process and delivers consistent insights at scale.

Before diving into the specific use cases, it's important to understand why so many successful e-commerce companies rely on internet scraping. From competitive pricing to customer satisfaction, scraping empowers businesses to make informed decisions quickly and stay one step ahead in the fast-paced digital landscape.

Below are the top 7 use cases of internet scraping.

1. Price Monitoring

Online retailers scrape competitor sites to monitor prices in real time, which enables dynamic pricing strategies and helps them stay competitive. This allows brands to react quickly to price changes.

How It Works

A price-monitoring scraper is programmed to extract pricing details for identical or similar SKUs across competitor sites. The data is compared to your product catalog, and dashboards or alerts notify you of changes. The scraper checks prices at set intervals, such as hourly, daily, or weekly, depending on the market's volatility. This keeps businesses up to date with any price fluctuations that could affect their sales or profit margins.
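Below is a minimal sketch of the comparison step. It assumes competitor prices have already been scraped and matched to your SKUs; the matching itself is out of scope here. The `PriceObservation` type, the `price_alerts` function, and the 5% threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class PriceObservation:
    sku: str          # SKU already matched between your catalog and the competitor's
    competitor: str
    price: float


def price_alerts(catalog: dict[str, float],
                 observations: list[PriceObservation],
                 threshold_pct: float = 5.0) -> list[str]:
    """Flag SKUs where a competitor undercuts our price by more than threshold_pct."""
    alerts = []
    for obs in observations:
        our_price = catalog.get(obs.sku)
        if our_price is None:
            continue  # competitor SKU not in our catalog; nothing to compare
        gap_pct = (our_price - obs.price) / our_price * 100
        if gap_pct > threshold_pct:
            alerts.append(
                f"{obs.sku}: {obs.competitor} at {obs.price:.2f} "
                f"is {gap_pct:.1f}% below our {our_price:.2f}"
            )
    return alerts


catalog = {"A100": 50.0, "B200": 20.0}
observations = [
    PriceObservation("A100", "ShopX", 44.0),  # 12% cheaper -> alert
    PriceObservation("B200", "ShopY", 19.5),  # 2.5% cheaper -> below threshold
    PriceObservation("C300", "ShopX", 10.0),  # not in our catalog -> skipped
]
for line in price_alerts(catalog, observations):
    print(line)
```

In practice, a scheduler would run the scraper at the chosen interval and feed each batch of observations into a check like this one.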

Benefits of Price Monitoring

Competitive edge in pricing

Avoids underpricing or overpricing

Enhances profit margins while remaining attractive to customers

Helps with automatic repricing tools

Allows better seasonal pricing strategies

2. Product Catalog Optimization

Scraping competitor and marketplace listings helps optimize your product catalog by identifying missing information, keyword trends, or layout strategies that convert better.

How It Works

Scrapers collect product titles, images, descriptions, tags, and feature lists. The data is analyzed to identify gaps and opportunities in your listings. AI-driven catalog optimization tools use this scraped data to recommend ideal product titles, meta tags, and visual placements. Combining this with A/B testing can significantly improve your conversion rates.
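Below is a rough sketch of gap detection. The function flags attributes that most competitor listings fill in but your listing leaves blank. Listings are assumed to be plain dictionaries of scraped fields. The name `missing_attributes` and the 50% cutoff are illustrative assumptions, and real catalog tools use far richer signals.

```python
from collections import Counter


def missing_attributes(our_listing: dict, competitor_listings: list[dict],
                       min_share: float = 0.5) -> list[str]:
    """Attributes filled in by at least `min_share` of competitor listings
    but empty or absent in our listing."""
    if not competitor_listings:
        return []
    # Count, per attribute, how many competitor listings have a non-empty value
    counts = Counter(
        key for listing in competitor_listings
        for key, value in listing.items() if value
    )
    n = len(competitor_listings)
    return sorted(
        key for key, count in counts.items()
        if count / n >= min_share and not our_listing.get(key)
    )


ours = {"title": "Steel Water Bottle", "description": "", "images": ["a.jpg"]}
competitors = [
    {"title": "Bottle A", "description": "Keeps drinks cold", "capacity": "750 ml", "images": ["x.jpg"]},
    {"title": "Bottle B", "description": "Leak-proof lid", "capacity": "1 L"},
    {"title": "Bottle C", "material": "steel"},
]
print(missing_attributes(ours, competitors))  # ['capacity', 'description']
```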

Benefits

Better product visibility

Enhanced user experience and conversion rates

Identifies underperforming listings

Helps curate high-performing metadata templates

3. Competitor Analysis

Internet scraping provides detailed insights into your competitors’ strategies, such as pricing, promotions, product launches, and customer feedback, helping to shape your business approach.

How It Works

Scraped data from competitor websites and social platforms is organized and visualized for comparison, covering pricing, stock levels, and promotional tactics. You can also monitor competitors' advertising frequency, ad types, pricing structure, customer engagement strategies, and feedback patterns. Together, these give you a broad view of what works in your industry.
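Here is a small sketch that makes the comparison concrete by rolling scraped rows up into per-competitor benchmarks. The record layout (`competitor`, `price`, `in_stock`, `on_promo`) and the function name are assumptions for illustration. Ad and engagement data would need their own sources.

```python
from collections import defaultdict
from statistics import mean


def summarize_competitors(records: list[dict]) -> dict[str, dict[str, float]]:
    """Aggregate scraped rows into per-competitor benchmarks.

    Each record is assumed to look like
    {"competitor": str, "price": float, "in_stock": bool, "on_promo": bool}.
    """
    grouped = defaultdict(list)
    for r in records:
        grouped[r["competitor"]].append(r)
    summary = {}
    for name, rows in grouped.items():
        summary[name] = {
            "avg_price": round(mean(r["price"] for r in rows), 2),
            "in_stock_share": sum(r["in_stock"] for r in rows) / len(rows),
            "promo_share": sum(r["on_promo"] for r in rows) / len(rows),
        }
    return summary


records = [
    {"competitor": "ShopX", "price": 10.0, "in_stock": True, "on_promo": True},
    {"competitor": "ShopX", "price": 20.0, "in_stock": False, "on_promo": True},
    {"competitor": "ShopY", "price": 12.0, "in_stock": True, "on_promo": False},
]
for name, stats in summarize_competitors(records).items():
    print(name, stats)
```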

Benefits

Uncover competitive trends

Benchmark product performance

Inform marketing and product strategy

Identify gaps in your offerings

Respond quickly to new product launches

4. Customer Sentiment Analysis

By scraping reviews and ratings from marketplaces and product pages, businesses can evaluate customer sentiment, discover pain points, and improve service quality.

How It Works

Natural language processing (NLP) is applied to scraped review content. Positive, negative, and neutral sentiments are categorized, and common themes are highlighted. Text analysis on these reviews helps detect not just satisfaction levels but also recurring quality issues or logistics complaints. This can guide product improvements and operational refinements.
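The sketch below shows the shape of the classification and theme-spotting step. It deliberately uses a tiny hand-written word list instead of a trained NLP model, so treat it as an illustration of the workflow rather than of production sentiment analysis. The word lists and theme keywords are hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative word lists; a real system would use a trained NLP model.
POSITIVE = {"great", "love", "excellent", "fast", "sturdy"}
NEGATIVE = {"broken", "late", "poor", "refund", "damaged"}
# Hypothetical theme keywords for spotting recurring complaints.
THEMES = {
    "delivery": {"late", "delivery", "shipping"},
    "quality": {"broken", "damaged", "poor", "sturdy"},
}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def classify(review: str) -> str:
    """Score = positive hits minus negative hits; the sign picks the label."""
    words = tokenize(review)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def theme_counts(reviews: list[str]) -> Counter:
    """Count how many reviews mention each theme at least once."""
    counts = Counter()
    for review in reviews:
        words = set(tokenize(review))
        for theme, keywords in THEMES.items():
            if words & keywords:
                counts[theme] += 1
    return counts


reviews = [
    "Great blender, love it",
    "Arrived late and the lid was broken",
    "It works as described",
    "Sturdy build but delivery was late",
]
for r in reviews:
    print(classify(r), "|", r)
print(theme_counts(reviews))
```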

Benefits

Improve product and customer experience

Monitor brand reputation

Address negative feedback proactively

Build trust and transparency

Adapt to changing customer preferences

5. Inventory and Availability Tracking

Track your competitors' stock levels and restocking schedules to predict demand and plan your inventory efficiently.

How It Works

Scrapers monitor product availability indicators (like "In Stock", "Out of Stock") and gather timestamps to track restocking frequency. This enables brands to respond quickly to opportunities when competitors go out of stock. It also supports real-time alerts for critical stock thresholds.
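Here is a minimal sketch of turning timestamped availability snapshots into stock-out and restock events, then measuring how long outages last. It assumes each snapshot is an `(ISO timestamp, status text)` pair using the "In Stock" / "Out of Stock" labels quoted above. Real pages label availability in many different ways, and the function names are hypothetical.

```python
from datetime import datetime
from typing import Optional


def stock_events(snapshots: list[tuple[str, str]]) -> list[tuple[str, datetime]]:
    """Convert time-ordered (ISO timestamp, status text) pairs into
    ('went_out_of_stock' or 'restocked', time) events."""
    events = []
    previous = None
    for ts, status in snapshots:
        in_stock = status.strip().lower() == "in stock"
        if previous is not None and in_stock != previous:
            kind = "restocked" if in_stock else "went_out_of_stock"
            events.append((kind, datetime.fromisoformat(ts)))
        previous = in_stock
    return events


def mean_outage_hours(events: list[tuple[str, datetime]]) -> Optional[float]:
    """Average hours between going out of stock and the next restock."""
    gaps, went_out = [], None
    for kind, when in events:
        if kind == "went_out_of_stock":
            went_out = when
        elif went_out is not None:
            gaps.append((when - went_out).total_seconds() / 3600)
            went_out = None
    return sum(gaps) / len(gaps) if gaps else None


snapshots = [
    ("2024-05-01T08:00:00", "In Stock"),
    ("2024-05-01T14:00:00", "Out of Stock"),
    ("2024-05-02T02:00:00", "Out of Stock"),
    ("2024-05-02T08:00:00", "In Stock"),
    ("2024-05-03T08:00:00", "Out of Stock"),
    ("2024-05-03T20:00:00", "In Stock"),
]
events = stock_events(snapshots)
print(events)
print(mean_outage_hours(events))  # 15.0
```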

Benefits

Avoid overstocking or stockouts

Align promotions with competitor shortages

Streamline supply chain decisions

Improve vendor negotiation strategies

Forecast demand more accurately

6. Market Trend Identification

Scraping data from marketplaces and social commerce platforms helps identify trending products, search terms, and buyer behaviors.

How It Works

Scraped data from platforms like Amazon, eBay, or Etsy is analyzed for keyword frequency, popularity scores, and rising product categories. Trends can also be extracted from user-generated content and influencer reviews, giving your brand insights before a product goes mainstream.
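One simple way to surface rising terms is to compare keyword frequencies in product titles scraped in two periods. The sketch below assumes the titles are already collected. The stopword list, the `min_count` cutoff, and the add-one growth ratio are illustrative choices, not a standard popularity score.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "and", "for", "with", "of"}


def keyword_counts(titles: list[str]) -> Counter:
    words = (w for t in titles for w in re.findall(r"[a-z0-9]+", t.lower()))
    return Counter(w for w in words if w not in STOPWORDS)


def rising_keywords(previous: list[str], current: list[str],
                    min_count: int = 2, top_n: int = 5) -> list[tuple[str, float]]:
    """Rank keywords by growth ratio between two scraping periods.
    Add-one smoothing avoids dividing by zero for brand-new terms."""
    before, after = keyword_counts(previous), keyword_counts(current)
    growth = {
        w: (c + 1) / (before.get(w, 0) + 1)
        for w, c in after.items() if c >= min_count
    }
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


previous = ["steel water bottle", "glass water bottle", "insulated tumbler"]
current = [
    "insulated tumbler with straw",
    "tumbler with handle",
    "kids tumbler with straw",
    "glass water bottle",
]
print(rising_keywords(previous, current))  # [('straw', 3.0), ('tumbler', 2.0)]
```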

Benefits

Stay ahead of consumer demand

Launch timely product lines

Align campaigns with seasonal or viral trends

Prevent dead inventory

Invest confidently in new product development

7. Lead Generation and Business Intelligence

Gather contact details, seller profiles, or niche market data from directories and B2B marketplaces to fuel outreach campaigns and business development.

How It Works

Scrapers extract publicly available email IDs, company names, product listings, and seller ratings. The data is filtered based on industry and size. Lead qualification becomes faster when you pre-analyze industry relevance, product categories, or market presence through scraped metadata.

Benefits

Expand B2B networks

Targeted marketing efforts

Increase qualified leads and partnerships

Boost outreach accuracy

Customize proposals based on scraped insights

How Does Internet Scraping Work in E-commerce?

Target Identification: Identify the websites and data types you want to scrape, such as pricing, product details, or reviews.

Bot Development: Create or configure a scraper bot using tools like Python, BeautifulSoup, or Scrapy, or use advanced scraping platforms like TagX.

Data Extraction: Bots navigate web pages, extract required data fields, and store them in structured formats (CSV, JSON, etc.).

Data Cleaning: Filter, de-duplicate, and normalize scraped data for analysis.

Data Analysis: Feed clean data into dashboards, CRMs, or analytics platforms for decision-making.

Automation and Scheduling: Set scraping frequency based on how dynamic the target sites are.

Integration: Sync data with internal tools like ERP, inventory systems, or marketing automation platforms.
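Of these steps, data cleaning is the easiest to underestimate. The sketch below uses only the standard library. It normalizes price strings, drops unusable rows, de-duplicates by URL, and exports to JSON and CSV. The field names (`url`, `title`, `price`), the example domain `shop.example`, and the price-parsing rule are assumptions for illustration. The parsing rule expects a dot as the decimal separator.

```python
import csv
import io
import json
import re
from typing import Optional


def parse_price(raw: str) -> Optional[float]:
    """Turn strings like '$1,299.00' into 1299.0; None if no number is found.
    Assumes ',' is a thousands separator and '.' the decimal point."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw or "")
    return float(match.group().replace(",", "")) if match else None


def clean(rows: list[dict]) -> list[dict]:
    """Normalize fields, drop rows without a usable price, de-duplicate on URL."""
    seen, cleaned = set(), []
    for row in rows:
        url = row.get("url", "").strip().rstrip("/")
        price = parse_price(row.get("price", ""))
        if not url or price is None or url in seen:
            continue
        seen.add(url)
        title = " ".join(row.get("title", "").split())  # collapse stray whitespace
        cleaned.append({"url": url, "title": title, "price": price})
    return cleaned


def to_csv(rows: list[dict]) -> str:
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["url", "title", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


raw_rows = [
    {"url": "https://shop.example/p/1/", "title": "  Desk   Lamp ", "price": "$1,299.00"},
    {"url": "https://shop.example/p/1", "title": "Desk Lamp", "price": "$1,299.00"},
    {"url": "https://shop.example/p/2", "title": "Chair", "price": "N/A"},
]
cleaned = clean(raw_rows)
print(json.dumps(cleaned, indent=2))
print(to_csv(cleaned))
```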

Key Benefits of Internet Scraping for E-commerce

Scalable Insights: Access large volumes of data from multiple sources in real time

Improved Decision Making: Real-time data fuels smarter, faster decisions

Cost Efficiency: Reduces the need for manual research and data entry

Strategic Advantage: Gives brands an edge over slower-moving competitors

Enhanced Customer Experience: Drives better content, service, and personalization

Automation: Reduces human effort and speeds up analysis

Personalization: Tailor offers and messaging based on real-world competitor and customer data

Why Businesses Trust TagX for Internet Scraping

TagX offers enterprise-grade, customizable internet scraping solutions specifically designed for e-commerce businesses. With compliance-first approaches and powerful automation, TagX transforms raw online data into refined insights. Whether you're monitoring competitors, optimizing product pages, or discovering market trends, TagX helps you stay agile and informed.

Their team of data engineers and domain experts ensures that each scraping task is accurate, efficient, and aligned with your business goals. Plus, their built-in analytics dashboards reduce the time from data collection to actionable decision-making.

Final Thoughts

E-commerce success today is tied directly to how well you understand and react to market data. With internet scraping, brands can unlock insights that drive pricing, inventory, customer satisfaction, and competitive advantage. Whether you're a startup or a scaled enterprise, the smart use of scraping technology can set you apart.

Ready to outsmart the competition? Partner with TagX to start scraping smarter.


💎 Where Sparkle Meets Strategy: Mapping the Top Jewelry Brands in the US ✨

What happens when data meets diamonds? You get crystal-clear insights into where Americans shop for sparkle — and which brands are truly shining the brightest.

We analyzed 3,779 jewelry store locations across the United States to uncover trends in brand presence, regional dominance, and retail footprint. Our research shows that market leaders like Claire’s, Pandora, and Swarovski are not just well-known names but strategically positioned powerhouses shaping the U.S. jewelry landscape.

📍 Whether it’s a mall kiosk, flagship boutique, or retail chain presence, these brands are setting the tone for how jewelry is marketed, sold, and worn across different demographics and locations.

🔍 What our jewelry market intelligence reveals:

Which brands have the largest retail footprint in key metro areas

Regional preferences for silver, gold, charm bracelets, or statement pieces

Where luxury meets accessibility in jewelry retail strategy

How consumer behavior differs across online and offline channels

What competitors can learn from location-based analytics and store density data

💡 “In retail, visibility is power. The more strategic your presence, the greater your potential to influence consumer choice.”

This type of data is invaluable not only for retailers and jewelry brands but also for:

Category managers and merchandisers

Consumer behavior analysts

Ecommerce platforms looking to expand jewelry verticals

Real estate strategists choosing locations for new store launches

At iWeb Data Scraping, we bring real-world data to retail strategy — whether you’re mapping jewelry stores, benchmarking prices, tracking reviews, or forecasting demand.

🔗 Discover how data intelligence can transform your retail strategy:


Discover how to extract business listings from Naver Map efficiently, including names, contacts, and reviews - perfect for research, analytics, and lead generation.

In today's competitive business landscape, having access to accurate and comprehensive business data is crucial for strategic decision-making and targeted marketing campaigns. Naver Map Data Extraction presents a valuable opportunity to gather insights about local businesses, consumer preferences, and market trends for companies looking to expand their operations or customer base in South Korea.

Source: https://www.retailscrape.com/efficient-naver-map-data-extraction-business-listings.php

Originally Published By https://www.retailscrape.com/

#NaverMapDataExtraction#BusinessListingsScraping#NaverBusinessData#SouthKoreaMarketAnalysis#WebScrapingServices#NaverMapAPIScraping#CompetitorAnalysis#MarketIntelligence#DataExtractionSolutions#RetailDataScraping#NaverMapBusinessListings#KoreanBusinessDataExtraction#LocationDataScraping#NaverMapsScraper#DataMiningServices#NaverLocalSearchData#BusinessIntelligenceServices#NaverMapCrawling#GeolocationDataExtraction#NaverDirectoryScraping


How to Extract API from a Website - A Comprehensive Guide


A Complete Guide to Web Scraping Blinkit for Market Research

Introduction

In the fast-paced e-commerce world, access to accurate, timely data is vital for businesses to make the best decisions. Blinkit is one of the top quick commerce players in the Indian market. It holds vast amounts of data, including product listings, prices, delivery details, and customer reviews. Extracting this data through web scraping can give businesses valuable insight into market trends, support competitor monitoring, and guide optimization.

This blog will walk you through the complete process of web scraping Blinkit for market research: tools, techniques, challenges, and best practices. We'll also show how a legitimate service like CrawlXpert can help you automate and scale your Blinkit data extraction.

1. What is Blinkit Data Scraping?

Scraping Blinkit data is the automated process of extracting structured information from the Blinkit website or app. A scraper programmatically crawls the website's HTML content to collect data useful for market research.

>Key Data Points You Can Extract:

Product Listings: Names, descriptions, categories, and specifications.

Pricing Information: Current prices, original prices, discounts, and price trends.

Delivery Details: Delivery time estimates, service availability, and delivery charges.

Stock Levels: In-stock, out-of-stock, and limited availability indicators.

Customer Reviews: Ratings, review counts, and customer feedback.

Categories and Tags: Labels, brands, and promotional tags.

2. Why Scrape Blinkit Data for Market Research?

Extracting data from Blinkit provides businesses with actionable insights for making smarter, data-driven decisions.

>(a) Competitor Pricing Analysis

Track Price Fluctuations: Monitor how prices change over time to identify trends.

Compare Competitors: Benchmark Blinkit prices against competitors like BigBasket, Swiggy Instamart, Zepto, etc.

Optimize Your Pricing: Use Blinkit’s pricing data to develop dynamic pricing strategies.

>(b) Consumer Behavior and Trends

Product Popularity: Identify which products are frequently bought or promoted.

Seasonal Demand: Analyze trends during festivals or seasonal sales.

Customer Preferences: Use review data to identify consumer sentiment and preferences.

>(c) Inventory and Supply Chain Insights

Monitor Stock Levels: Track frequently out-of-stock items to identify high-demand products.

Predict Supply Shortages: Identify potential inventory issues based on stock trends.

Optimize Procurement: Make data-backed purchasing decisions.

>(d) Marketing and Promotional Strategies

Targeted Advertising: Identify top-rated and frequently purchased products for marketing campaigns.

Content Optimization: Use product descriptions and categories for SEO optimization.

Identify Promotional Trends: Extract discount patterns and promotional offers.

3. Tools and Technologies for Scraping Blinkit

To scrape Blinkit effectively, you’ll need the right combination of tools, libraries, and services.

>(a) Python Libraries for Web Scraping

BeautifulSoup: Parses HTML and XML documents to extract data.

Requests: Sends HTTP requests to retrieve web page content.

Selenium: Automates browser interactions for dynamic content rendering.

Scrapy: A Python framework for large-scale web scraping projects.

Pandas: For data cleaning, structuring, and exporting in CSV or JSON formats.

>(b) Proxy Services for Anti-Bot Evasion

Bright Data: Provides residential IPs with CAPTCHA-solving capabilities.

ScraperAPI: Handles proxies, IP rotation, and bypasses CAPTCHAs automatically.

Smartproxy: Residential proxies to reduce the chances of being blocked.

>(c) Browser Automation Tools

Playwright: A modern web automation tool for handling JavaScript-heavy sites.

Puppeteer: A Node.js library for headless Chrome automation.

>(d) Data Storage Options

CSV/JSON: For small-scale data storage.

MongoDB/MySQL: For large-scale structured data storage.

Cloud Storage: AWS S3, Google Cloud, or Azure for scalable storage solutions.

4. Setting Up a Blinkit Scraper

>(a) Install the Required Libraries

First, install the necessary Python libraries:

```shell
pip install requests beautifulsoup4 selenium pandas
```

>(b) Inspect Blinkit’s Website Structure

Open Blinkit in your browser.

Right-click → Inspect → Select Elements.

Identify product containers, pricing, and delivery details.

>(c) Fetch the Blinkit Page Content

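```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.blinkit.com'
headers = {'User-Agent': 'Mozilla/5.0'}

# Fetch the page; fail loudly on HTTP errors instead of parsing an error page
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()
soup = BeautifulSoup(response.content, 'html.parser')
```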

>(d) Extract Product and Pricing Data

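```python
# The class names below are placeholders: confirm the real ones with Inspect,
# since Blinkit's markup may differ or be rendered by JavaScript.
products = soup.find_all('div', class_='product-card')
data = []
for product in products:
    try:
        title = product.find('h2').get_text(strip=True)
        price = product.find('span', class_='price').get_text(strip=True)
        availability = product.find('div', class_='availability').get_text(strip=True)
        data.append({'Product': title, 'Price': price, 'Availability': availability})
    except AttributeError:
        # find() returned None: this card lacks one of the fields, so skip it
        continue
```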

5. Bypassing Blinkit’s Anti-Scraping Mechanisms

Blinkit uses several anti-bot mechanisms, including rate limiting, CAPTCHAs, and IP blocking. Here’s how to bypass them.

>(a) Use Proxies for IP Rotation

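```python
# requests selects the proxy by URL scheme, so an https URL needs an 'https' entry.
# 'user', 'pass', 'proxy-server' and 'port' are placeholders for your provider's values.
proxies = {
    'http': 'http://user:pass@proxy-server:port',
    'https': 'http://user:pass@proxy-server:port',
}
response = requests.get(url, headers=headers, proxies=proxies, timeout=10)
```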

>(b) User-Agent Rotation

```python
import random

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)',
]
# Pick a User-Agent per request so consecutive requests don't all share one
headers = {'User-Agent': random.choice(user_agents)}
```

>(c) Use Selenium for Dynamic Content

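```python
from bs4 import BeautifulSoup
from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')  # run Chrome without a visible window
driver = webdriver.Chrome(options=options)
try:
    driver.get(url)
    # page_source reflects the DOM at this moment; products loaded asynchronously
    # may need an explicit wait (e.g. WebDriverWait) before reading it
    html = driver.page_source  # named html so the scraped `data` list isn't overwritten
finally:
    driver.quit()  # always release the browser, even if loading fails
soup = BeautifulSoup(html, 'html.parser')
```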

6. Data Cleaning and Storage

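After scraping the data, clean and store it:

```python
import pandas as pd

df = pd.DataFrame(data)    # `data` is the list of dicts built by the extraction loop
df = df.drop_duplicates()  # basic cleaning: remove repeated product rows
df.to_csv('blinkit_data.csv', index=False)
```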
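The `to_csv` call overwrites `blinkit_data.csv` on every run, so on its own it cannot show how prices move over time. One possible approach, sketched below with the standard library, is to append each run to a timestamped history file. You can then compare the first and latest price recorded for each product. The file name and function names are hypothetical. Rows are assumed to carry the `Product` and `Price` keys built by the extraction loop.

```python
import csv
import os
from datetime import datetime, timezone
from typing import Iterable


def append_snapshot(rows: list[dict], path: str = "blinkit_price_history.csv") -> None:
    """Append one scraping run to a history CSV, stamping every row with the run time."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    write_header = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["ScrapedAt", "Product", "Price"])
        if write_header:
            writer.writeheader()
        for row in rows:
            writer.writerow({"ScrapedAt": stamp, "Product": row["Product"], "Price": row["Price"]})


def price_changes(history: Iterable[dict]) -> dict[str, tuple[str, str]]:
    """Map each product to (first recorded price, latest price) when they differ.

    `history` must be in chronological order, e.g. csv.DictReader over the history file.
    """
    first, last = {}, {}
    for rec in history:
        first.setdefault(rec["Product"], rec["Price"])
        last[rec["Product"]] = rec["Price"]
    return {p: (first[p], last[p]) for p in first if first[p] != last[p]}
```

After each run, call `append_snapshot(data)`. Later, open the history file and pass `csv.DictReader(f)` to `price_changes` to list the products whose price moved.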

7. Why Choose CrawlXpert for Blinkit Data Scraping?

While building your own Blinkit scraper is possible, it comes with challenges like CAPTCHAs, IP blocking, and dynamic content rendering. This is where CrawlXpert can help.

>Key Benefits of CrawlXpert:

Accurate Data Extraction: Reliable and consistent Blinkit data scraping.

Large-Scale Capabilities: Efficient handling of extensive data extraction projects.

Anti-Scraping Evasion: Advanced techniques to bypass CAPTCHAs and anti-bot systems.

Real-Time Data: Access fresh, real-time Blinkit data with high accuracy.

Flexible Delivery: Multiple data formats (CSV, JSON, Excel) and API integration.

Conclusion

Web scraping gives businesses interested in Blinkit valuable information on price trends, product availability, and consumer preferences. With efficient tools and techniques, you can extract data from Blinkit and analyze it effectively. However, Blinkit's strong anti-scraping precautions can make in-house extraction unreliable. Partnering with a trusted provider such as CrawlXpert helps ensure reliable, accurate, and compliant extraction.

With higher-quality Blinkit data, CrawlXpert can further help you gain powerful market insight, improve pricing strategies, and make better business decisions.

Know More: https://www.crawlxpert.com/blog/web-scraping-blinkit-for-market-research


How to Rotate Residential IPs Effectively in High-Frequency Tasks

High-frequency tasks like web scraping, ad verification, or multi-account actions need more than speed—they need stealth. Effective residential IP rotation ensures that your requests appear to come from different, legitimate users, helping you bypass detection systems. To rotate smartly, set reasonable intervals, avoid repetitive patterns, and use quality IP pools. This not only protects your operation from being flagged but also boosts success rates across platforms.
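Here is a small helper that sketches the rotation pattern described above: a shuffled pool, round-robin selection, and randomized intervals. The class name, the placeholder proxy URLs, and the default delay values are assumptions. The quality of the IP pool itself depends on the provider, and code cannot fix it.

```python
import itertools
import random
import time
from typing import Optional


class ProxyRotator:
    """Round-robin over a shuffled proxy pool with a jittered pause between requests."""

    def __init__(self, proxies: list[str], base_delay: float = 2.0,
                 jitter: float = 1.5, seed: Optional[int] = None):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._rng = random.Random(seed)
        pool = list(proxies)
        self._rng.shuffle(pool)  # so runs don't always start from the same IP
        self._cycle = itertools.cycle(pool)
        self.base_delay = base_delay
        self.jitter = jitter

    def next_proxy(self) -> dict[str, str]:
        """Next proxy, in the format accepted by the `proxies=` argument of requests."""
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}

    def next_delay(self) -> float:
        """Base interval plus random jitter, so requests don't arrive on a fixed beat."""
        return self.base_delay + self._rng.uniform(0, self.jitter)

    def wait(self) -> None:
        time.sleep(self.next_delay())


# Placeholder proxy endpoints; substitute the ones your provider issues.
rotator = ProxyRotator(["http://user:pass@proxy1:8000",
                        "http://user:pass@proxy2:8000",
                        "http://user:pass@proxy3:8000"], seed=42)
print(rotator.next_proxy())
print(round(rotator.next_delay(), 2))
```

Each request would then pass `proxies=rotator.next_proxy()` to `requests.get` and call `rotator.wait()` before sending the next one.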


#AliExpressmobileappscraping#ScrapeDatafromAliExpress#mobileappscraping#AliExpressDataScraper#ScrapingECommerceProductData#EcommerceWebScraping#WebScrapingServices#USA#Canada#Australia#Germany#UAE#UK


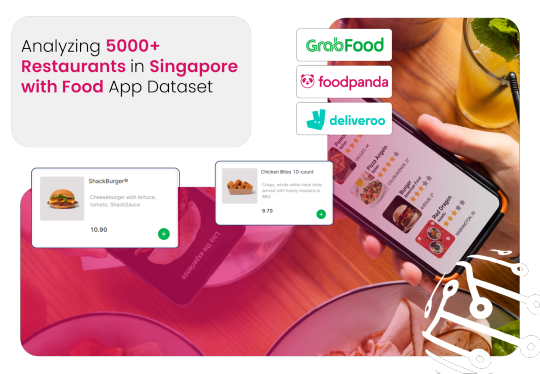

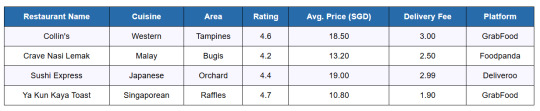

Singapore Restaurant Insights via Food App Dataset Analysis

Introduction

ArcTechnolabs provided a comprehensive Food Delivery Menu Dataset that helped the client extract detailed menu and pricing data from major food delivery platforms such as GrabFood, Foodpanda, and Deliveroo. This dataset included valuable insights into restaurant menus, pricing strategies, delivery fees, and popular dishes across different cuisine types in Singapore. The data also covered key factors like restaurant ratings and promotions, allowing the client to benchmark prices, identify trends, and create actionable insights for strategic decision-making. By extracting menu and pricing data at scale, ArcTechnolabs empowered the client to deliver high-impact market intelligence to the F&B industry.

Client Overview

A Singapore-based market intelligence firm partnered with ArcTechnolabs to analyze over 5,000 restaurants across the island. Their goal was to extract strategic insights from top food delivery platforms like GrabFood, Foodpanda, and Deliveroo—focusing on menu pricing, cuisine trends, delivery coverage, and customer ratings. They planned to use the insights to support restaurant chains, investors, and FMCG brands targeting the $1B+ Singapore online food delivery market.

The Challenge

The client encountered several data-related challenges, including fragmented listings across platforms, where the same restaurant had different menus and prices. There was no unified data source available to benchmark cuisine pricing or delivery charges. Additionally, inconsistent tagging for cuisines, promotions, and outlets made standardization difficult. The client also struggled to extract food item pricing at scale and needed to perform detailed analysis by location, cuisine type, and restaurant rating. These obstacles highlighted the need for a structured and reliable dataset to overcome the fragmentation and enable accurate insights. They turned to ArcTechnolabs for a structured, ready-to-analyze dataset covering Singapore’s entire food delivery landscape.

ArcTechnolabs Solution:

ArcTechnolabs built a custom dataset using data scraped from:

GrabFood Singapore

Foodpanda Singapore

Deliveroo SG

The dataset captured details for 5,000+ restaurants, normalized for comparison and analytics.
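The normalization step can be sketched as a lookup table followed by aggregation. This addresses the problem above, where each platform tags the same cuisine differently. The mapping, field names, and sample rows below are made up for illustration. ArcTechnolabs' actual normalization rules are not described in the source.

```python
from collections import defaultdict
from statistics import median

# Hypothetical mapping from platform-specific tags to one canonical cuisine label.
CUISINE_MAP = {
    "chinese": "Chinese", "chinese food": "Chinese", "zi char": "Chinese",
    "japanese": "Japanese", "sushi": "Japanese", "ramen": "Japanese",
    "western": "Western", "burgers": "Western",
}


def normalize_cuisine(tag: str) -> str:
    """Map a raw tag to a canonical cuisine; unknown tags fall into 'Other'."""
    return CUISINE_MAP.get(tag.strip().lower(), "Other")


def median_price_by_cuisine(items: list[dict]) -> dict[str, float]:
    """items: rows like {"platform": ..., "cuisine_tag": ..., "price_sgd": ...}."""
    prices = defaultdict(list)
    for item in items:
        prices[normalize_cuisine(item["cuisine_tag"])].append(item["price_sgd"])
    return {cuisine: median(values) for cuisine, values in sorted(prices.items())}


items = [
    {"platform": "GrabFood", "cuisine_tag": "Zi Char", "price_sgd": 8.5},
    {"platform": "Foodpanda", "cuisine_tag": "Chinese Food", "price_sgd": 9.0},
    {"platform": "Deliveroo", "cuisine_tag": "Chinese", "price_sgd": 12.0},
    {"platform": "GrabFood", "cuisine_tag": "Sushi", "price_sgd": 15.0},
    {"platform": "Foodpanda", "cuisine_tag": "Ramen", "price_sgd": 13.0},
    {"platform": "Deliveroo", "cuisine_tag": "Poke", "price_sgd": 16.0},
]
print(median_price_by_cuisine(items))
```

A median is used here rather than a mean so that a few premium outlets do not skew the benchmark for a cuisine.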

Sample Dataset Extract

Client Testimonial

"ArcTechnolabs delivered exactly what we needed—structured, granular, and high-quality restaurant data across Singapore’s top food delivery apps. Their ability to normalize cuisine categories, menu pricing, and delivery metrics helped us drastically cut down report turnaround time. With their support, we expanded our client base and began offering zonal insights and cuisine benchmarks no one else in the market had. The quality, speed, and support were outstanding. We now rely on their weekly datasets to power everything from investor reports to competitive pricing models."

— Director of Research & Analytics, Singapore Market Intelligence Firm

Conclusion

ArcTechnolabs enabled a market intelligence firm to transform fragmented food delivery data into structured insights—analyzing over 5,000 restaurants across Singapore. With access to a high-quality, ready-to-analyze dataset, the client unlocked new revenue streams, faster reports, and higher customer value through data-driven F&B decision-making.

Source >> https://www.arctechnolabs.com/singapore-food-app-dataset-restaurant-analysis.php

#FoodDeliveryMenuDatasets#ExtractingMenuAndPricingData#FoodDeliveryDataScraping#SingaporeFoodDeliveryDataset#ScrapeFoodAppDataSingapore#ExtractSingaporeRestaurantReviews#WebScrapingServices#ArcTechnolabs


Scrape Lawyers Data from State Bar Directories

Scraping lawyers' data from State Bar Directories can be a valuable technique for law firm marketing. By extracting information such as contact details, practice areas, and geographical locations of lawyers, law firms can gain a competitive edge in their marketing efforts. This article will guide you through the process of effectively scraping lawyers' data from State Bar Directories and explain how it can benefit your law firm marketing strategy.

Scraping is the automated extraction of data from websites using web scraping tools or custom scripts. State Bar Directories are comprehensive databases containing information about licensed lawyers in a particular state. They typically include details such as the lawyer's name, contact information, law school attended, and areas of practice. When we at DataScrapingServices.com scrape this data, law firms can build their own database of potential clients and tailor their marketing campaigns accordingly.

List Of Data Fields

When scraping lawyers' data from State Bar Directories, it is important to identify the specific data fields that are relevant to your law firm marketing goals. Some common data fields to consider include:

- Lawyer's Name: This helps in personalizing your marketing communications.

- Contact Information: Phone numbers and email addresses allow you to reach out to potential clients.

- Areas Of Practice: Knowing the areas of law in which a lawyer specializes helps you target specific audiences.

- Geographical Location: This information is crucial for localized marketing campaigns.

By compiling these data fields, you can create a comprehensive and targeted list of lawyers to engage with for your marketing efforts.
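The sketch below illustrates the kind of filtering these fields enable. It keeps directory entries that match a practice area and state, and it drops duplicates by email. `LawyerRecord` and `target_list` are hypothetical names, and the fields simply mirror the list above. Real State Bar directories differ in layout and in what they publish.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class LawyerRecord:
    """Hypothetical shape of one scraped State Bar directory entry."""
    name: str
    email: str
    phone: str
    practice_areas: list[str]
    city: str
    state: str


def target_list(records: Iterable[LawyerRecord], practice_area: str,
                state: str) -> list[LawyerRecord]:
    """Keep entries matching a practice area and state, de-duplicated by email
    (falling back to the name when no email was scraped)."""
    area = practice_area.lower()
    seen, selected = set(), []
    for r in records:
        if r.state.upper() != state.upper():
            continue
        if area not in (p.lower() for p in r.practice_areas):
            continue
        key = r.email.strip().lower() or r.name.lower()
        if key in seen:
            continue
        seen.add(key)
        selected.append(r)
    return selected


records = [
    LawyerRecord("Jane Roe", "jroe@example.com", "555-0100", ["Personal Injury"], "Austin", "TX"),
    LawyerRecord("Jane Roe", "JRoe@example.com", "555-0100", ["Personal Injury", "Family Law"], "Austin", "TX"),
    LawyerRecord("John Doe", "jdoe@example.com", "555-0101", ["Tax Law"], "Dallas", "TX"),
    LawyerRecord("Ann Lee", "alee@example.com", "555-0102", ["Personal Injury"], "Miami", "FL"),
]
for r in target_list(records, "personal injury", "tx"):
    print(r.name, r.email, r.city)
```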

Benefits of Scraping Lawyers' Data from State Bar Directories

Scraping lawyers' data from State Bar Directories offers several benefits for law firm marketing:

- Accurate And Up-To-Date Information: State Bar Directories are maintained by legal authorities, ensuring the data is reliable and current.

- Targeted Marketing Campaigns: By filtering lawyers based on practice areas and geographical locations, you can tailor your marketing messages to specific audiences.

- Competitive Advantage: Accessing lawyers' data gives you insights into your competitors' marketing strategies, allowing you to stay ahead in the market.

- Cost-Effective Lead Generation: By directly contacting lawyers who match your target criteria, you can save time and resources compared to traditional lead generation methods.

Overall, scraping lawyers' data from State Bar Directories empowers law firms to make informed marketing decisions and connect with potential clients more effectively.

Best Lawyers Data Scraping Services

Superlawyers.com Data Scraping

Personal Injury Lawyer Email List

Justia.com Lawyers Data Scraping

Avvo.com Lawyers Data Scraping

Verified US Attorneys Data from Lawyers.com

Extracting Data from Barlist Website

Attorney Email Database Scraping

Australia Lawyers Data Scraping

Bar Association Directory Scraping

Best State Bar Directory Lawyer Data Scraping Services in the USA:

New Orleans, Philadelphia, San Jose, Jacksonville, Arlington, Dallas, Fort Worth, Wichita, Boston, Sacramento, El Paso, Columbus, Houston, San Francisco, Raleigh, Memphis, Austin, San Antonio, Milwaukee, Bakersfield, Miami, Louisville, Albuquerque, Atlanta, Denver, San Diego, Oklahoma City, Seattle, Orlando, Colorado Springs, Chicago, Nashville, Virginia Beach, Omaha, Long Beach, Portland, Kansas City, Los Angeles, Washington, Las Vegas, Indianapolis, Tulsa, Honolulu, Tucson and New York.

Conclusion

Scraping lawyers' data from State Bar Directories is a powerful tool for law firm marketing. By leveraging this technique, law firms can access valuable information about lawyers in their target market and customize their marketing campaigns accordingly. Whether it's personalizing communication, targeting specific practice areas, or reaching out to lawyers in specific geographical locations, scraping lawyers' data can significantly enhance your law firm's marketing strategy. Embrace this technology-driven approach to gain a competitive edge and connect with potential clients more efficiently.

Website: Datascrapingservices.com

Email: [email protected]

#scrapelawyersdatafromstatebardirectories#statebardirectorydatascraping#lawyersdatascraping#lawyersemaillist#lawyersmailinglistscraping#webscrapingservices#datascrapingservices#datamining#dataanalytics#webscrapingexpert#webcrawler#webscraper#webscraping#datascraping#dataentry#emaillistscraping#emaildatabase

TagX Web Scraping Services – Data You Can Rely On

🚴 Web Scraping Target Product Data from Postmates – Fuel Your Market Intelligence

Looking to gain a competitive edge in the food delivery ecosystem? Our #PostmatesWebScrapingServices help you unlock valuable data including #ProductInfo, #Pricing, #InventoryStatus, and #CustomerReviews straight from Postmates.

Whether you're a:

🍽️ #RestaurantChain evaluating demand

📦 #LogisticsProvider studying delivery trends

📊 #MarketAnalyst doing competitive research

💡 #Startup building food-tech tools

…this service equips you with clean, actionable data for #CompetitorAnalysis and #StrategicPlanning.

✨ Key Benefits:

✅ Real-time data on trending food products

✅ Customizable scraping frequency

✅ Insights into customer ratings & feedback

✅ Structured reports to fuel business decisions

Stay ahead in the dynamic world of food delivery with our expert scraping tools.

📨 Reach us at: [email protected]

🔗 Visit: www.iwebdatascraping.com

#Postmates#FoodDeliveryData#WebScrapingServices#DataExtraction#data extraction#data scraping#branding#marketing#commercial

Efficient Naver Map Data Extraction for Business Listings

Introduction

In today's competitive business landscape, having access to accurate and comprehensive business data is crucial for strategic decision-making and targeted marketing campaigns. Naver Map Data Extraction presents a valuable opportunity to gather insights about local businesses, consumer preferences, and market trends for companies looking to expand their operations or customer base in South Korea.

Understanding the Value of Naver Map Business Data

Naver is often called "South Korea's Google" and has long dominated the local search market, with a share often cited at over 70%. The platform's mapping service contains extensive information about businesses across South Korea, including contact details, operating hours, customer reviews, and location data. Naver Map Business Data gives international and local businesses rich insights to inform market entry strategies, competitive analysis, and targeted outreach campaigns.

However, collecting this information manually would be prohibitively time-consuming. This is where strategic Business Listings Scraping comes into play, allowing organizations to systematically collect and analyze business information at scale.

The Challenges of Accessing Naver Map Data

Unlike some other platforms, Naver presents unique challenges for data collection:

Language barriers: Naver's interface and content are primarily Korean, creating obstacles for international businesses.

Complex website structure: Naver's dynamic content loading makes straightforward scraping difficult.

Strict rate limiting: Aggressive anti-scraping measures can block IP addresses that send too many requests.

CAPTCHA systems: Automated verification challenges to prevent bot activity.

Terms of service considerations: Understanding the legal ways to scrape data from Naver Map is essential.

Ethical and Legal Considerations

Before diving into the technical aspects of Naver Map API Scraping, it's crucial to understand the legal and ethical framework. Even when data on the web is publicly accessible, how you access it matters from legal and ethical perspectives.

To scrape Naver Map data without violating its terms of service, consider these principles:

Review Naver's terms of service and robots.txt file to understand access restrictions.

Implement respectful scraping practices with reasonable request rates.

Consider using official APIs where available.

Store only the data you need and ensure compliance with privacy regulations, such as GDPR and Korea's Personal Information Protection Act.

Use the data for legitimate business purposes without attempting to replicate Naver's services.
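Python's standard library can handle the robots.txt check described above. The sketch below uses `urllib.robotparser` to test candidate URLs against robots.txt rules you have already downloaded, and to read any `Crawl-delay` directive. The rules used in the test are invented for illustration and are not Naver's actual robots.txt; the `check_robots` name is hypothetical.

```python
from urllib.robotparser import RobotFileParser


def check_robots(robots_lines, user_agent, urls):
    """Return ({url: allowed?}, crawl_delay) for robots.txt rules given as text lines."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # rules fetched beforehand, e.g. from https://<host>/robots.txt
    permissions = {url: parser.can_fetch(user_agent, url) for url in urls}
    # crawl_delay() returns None when no Crawl-delay applies to this user agent
    return permissions, parser.crawl_delay(user_agent)
```

A `Crawl-delay` value, when present, is a natural lower bound for the request delay used by any throttling logic.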

Effective Methods for Scraping Naver Map Business Data

There are several approaches to gathering business listing data from Naver Maps, each with advantages and limitations.

Here are the most practical methods:

1. Official Naver Maps API

Naver provides official APIs that allow developers to access map data programmatically. While these APIs have usage limitations and costs, they represent the most straightforward and compliant Naver Map Business Data Extraction method.

The official API offers:

Geocoding and reverse geocoding capabilities.

Local search functionality.

Directions and routing services.

Address verification features.

Using the official API requires registering a developer account and adhering to Naver's usage quotas and pricing structure. However, it provides reliable, sanctioned access to the data without risking account blocks or legal issues.
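A minimal sketch of calling Naver's local search over HTTP with only the standard library. The endpoint `https://openapi.naver.com/v1/search/local.json`, the `X-Naver-Client-Id` / `X-Naver-Client-Secret` headers, and the `query`, `display`, `start`, and `sort` parameters are assumptions based on Naver Developers' search API; confirm them, along with quotas and per-request limits, against the current official documentation before use. The helper names are hypothetical, and the client ID and secret come from your registered application.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint; verify against the current Naver Developers documentation.
LOCAL_SEARCH_URL = "https://openapi.naver.com/v1/search/local.json"


def build_local_search_request(query, client_id, client_secret, display=5, start=1, sort="random"):
    """Build (but do not send) an authenticated local search request."""
    params = urllib.parse.urlencode(
        {"query": query, "display": display, "start": start, "sort": sort}
    )
    return urllib.request.Request(
        f"{LOCAL_SEARCH_URL}?{params}",
        headers={
            "X-Naver-Client-Id": client_id,
            "X-Naver-Client-Secret": client_secret,
        },
    )


def search_local(query, client_id, client_secret, **kwargs):
    """Send the request and return the 'items' list from the JSON response."""
    request = build_local_search_request(query, client_id, client_secret, **kwargs)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload.get("items", [])
```

Usage would look like `items = search_local("강남역 카페", client_id, client_secret)`. Keeping request construction separate from sending makes the request easy to inspect and test without network access.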

2. Web Scraping Solutions

When API limitations prove too restrictive for your business needs, web scraping becomes a viable alternative. Naver Map Scraping Tools range from simple script-based solutions to sophisticated frameworks that can handle dynamic content and bypass basic anti-scraping measures.

Key components of an effective scraping solution include:

Proxy Rotation: Rotating between multiple proxy servers helps prevent IP bans when accessing large volumes of data. This spreads requests across different IP addresses, making the scraping activity appear more like regular user traffic than automated collection. Commercial proxy services offer:

1. Residential proxies that route traffic through real consumer devices and ISP connections.

2. Datacenter proxies that provide cost-effective rotation options.

3. Geographically targeted proxies that can access region-specific content.
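A simple round-robin rotator illustrates the idea. This sketch assumes you already have a list of proxy URLs from a provider (the addresses in the test are placeholders from a documentation IP range) and uses the standard library's `urllib.request.ProxyHandler`; the `ProxyRotator` class is hypothetical. Many commercial services rotate on their side instead, behind a single gateway address.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Round-robin over a pool of proxy URLs, building one urllib opener per request."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("need at least one proxy")
        self._pool = itertools.cycle(proxy_urls)

    def next_proxy(self):
        return next(self._pool)

    def next_opener(self):
        """Return (opener, proxy_url) routing both HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler), proxy
```

Typical use is `opener, proxy = rotator.next_opener()` followed by `opener.open(url, timeout=10)`, logging `proxy` so failing addresses can be removed from the pool.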

Request Throttling: Implementing delays between requests helps mimic human browsing patterns and reduces server load. Adaptive throttling, which adjusts the delay based on server response times, balances collection speed against the risk of detection.
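One way to implement adaptive throttling, sketched under assumed numbers: the delay tracks a multiple of the last response time, never drops below a base delay, doubles on HTTP 429 or 5xx responses, and gets random jitter so requests are not perfectly periodic. The `AdaptiveThrottle` class and its default values are illustrative; tune them to the target site and any `Crawl-delay` it publishes.

```python
import random
import time


class AdaptiveThrottle:
    """Delay between requests that stretches when the server slows down or pushes back."""

    def __init__(self, base_delay=2.0, max_delay=30.0, slow_factor=3.0, jitter=0.5, rng=None):
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.slow_factor = slow_factor
        self.jitter = jitter
        self.rng = rng or random.Random()
        self.delay = base_delay

    def record(self, response_seconds, status=200):
        """Update the delay from the latest response time and HTTP status."""
        if status == 429 or status >= 500:
            self.delay = min(self.delay * 2, self.max_delay)  # back off hard
        else:
            target = max(self.base_delay, response_seconds * self.slow_factor)
            self.delay = min(target, self.max_delay)
        return self.delay

    def wait(self):
        """Sleep for the current delay plus random jitter; return the pause used."""
        pause = self.delay + self.rng.uniform(0, self.jitter)
        time.sleep(pause)
        return pause
```

Call `wait()` before each request and `record()` after it. In this sketch the delay returns to normal on the first healthy response; a more conservative variant would step back down gradually.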

Browser Automation: Tools like Selenium and Playwright control real browsers to render JavaScript-heavy pages and interact with elements just as a human user would. This approach is effective for navigating Naver's dynamically loaded content.
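A minimal Playwright sketch (Python sync API) that loads a page in headless Chromium and returns the HTML after JavaScript has run. The `render_page` helper and its parameters are hypothetical, and Playwright must be installed separately (`pip install playwright`, then `playwright install chromium`). Naver Map may load parts of its interface, such as search result panels, inside embedded frames, so in practice you may need `page.frames` or `page.frame_locator(...)` to reach listing elements; that selector work is left out here.

```python
def render_page(url, wait_selector=None, timeout_ms=30_000):
    """Load a page in headless Chromium and return its HTML after scripts have run."""
    # Imported lazily so this module can be loaded where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # "networkidle" waits until network activity has settled after load.
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            if wait_selector:
                # Optionally wait for a specific element that signals content is ready.
                page.wait_for_selector(wait_selector, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```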

3. Specialized Web Scraping API Services

For businesses lacking the technical resources to build and maintain scraping infrastructure, a Web Scraping API offers a middle-ground solution. These services handle the complexities of proxy rotation, browser rendering, and CAPTCHA solving while providing a simple API interface to request data.

Benefits of using specialized scraping APIs include:

Reduced development and maintenance overhead.

Built-in compliance with best practices.

Scalable infrastructure that adapts to project needs.

Regular updates to counter anti-scraping measures.

Structuring Your Naver Map Data Collection Process

Regardless of the method chosen, a systematic approach to Naver Map Data Extraction will yield the best results. Here's a framework to guide your collection process:

1. Define Clear Data Requirements

Before beginning any extraction project, clearly define what specific business data points you need and why.

This might include:

Business names and categories.

Physical addresses and contact information.

Operating hours and service offerings.

Customer ratings and review content.

Geographic coordinates for spatial analysis.

Precise requirements prevent scope creep and ensure you collect only what's necessary for your business objectives.
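Writing the requirements down as a schema makes them enforceable. The sketch below uses a hypothetical `BusinessListing` dataclass mirroring the list above; it keeps only the agreed fields from each raw record and rejects records missing required ones. The field names are assumptions, not Naver's own.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class BusinessListing:
    """Hypothetical schema: only the fields the project has agreed to collect."""
    listing_id: str
    name: str
    category: str
    address: str
    phone: Optional[str] = None
    opening_hours: Optional[str] = None
    rating: Optional[float] = None
    review_count: int = 0
    latitude: Optional[float] = None
    longitude: Optional[float] = None


REQUIRED = ("listing_id", "name", "category", "address")
ALLOWED = {f.name for f in fields(BusinessListing)}


def to_listing(raw: dict) -> BusinessListing:
    """Build a listing from a raw scraped dict, dropping any keys outside the schema."""
    missing = [key for key in REQUIRED if not raw.get(key)]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return BusinessListing(**{k: v for k, v in raw.items() if k in ALLOWED})
```

Dropping unrequested keys at ingestion also supports the earlier principle of storing only the data you need.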

2. Develop a Staged Collection Strategy

Rather than attempting to gather all data at once, consider a multi-stage approach:

Initial broad collection of business identifiers and basic information.

Categorization and prioritization of listings based on business relevance.

Detailed collection focusing on high-priority targets.

Periodic updates to maintain data freshness.

This approach optimizes resource usage and allows for refinement of collection parameters based on initial results.
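The prioritization and refresh steps can be expressed as a small planning function. This is a sketch under assumptions: stage-1 results are dicts with hypothetical `id`, `category`, and `fetched_at` keys (naive datetimes, or `None` if never fetched), and "business relevance" is reduced to membership in a set of priority categories.

```python
from datetime import datetime, timedelta


def plan_detail_fetches(listings, priority_categories, now, max_age=timedelta(days=30)):
    """Choose which stage-1 listings need a (re)fetch of full details, in order.

    Priority categories come first; within each group, never-fetched and
    oldest listings come first. Listings fetched within max_age are skipped.
    """
    due = []
    for item in listings:
        fetched = item.get("fetched_at")
        if fetched is not None and now - fetched < max_age:
            continue  # still fresh, no need to refetch
        is_priority = item["category"] in priority_categories
        age_key = fetched if fetched is not None else datetime.min
        # False sorts before True, so priority listings come first.
        due.append((not is_priority, age_key, item["id"]))
    due.sort()
    return [listing_id for _, _, listing_id in due]
```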

3. Implement Data Validation and Cleaning

Raw data from Naver Maps often requires preprocessing before it becomes business-ready.

Common data quality issues include:

Inconsistent formatting of addresses and phone numbers.

Mixed language entries (Korean and English).

Duplicate listings with slight variations.

Outdated or incomplete information.

Implementing automated validation rules and manual spot-checking ensures the data meets quality standards before analysis or integration with business systems.
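As an illustration of automated validation, the sketch below normalizes South Korean phone numbers into one dashed format and uses the normalized number plus a whitespace-insensitive name as a duplicate key. It handles only common formats (Seoul `02` numbers, other area codes and `010` mobiles, `+82` prefixes, and 8-digit `15xx`/`16xx`/`18xx` representative numbers); the helper names and record keys are hypothetical.

```python
import re


def normalize_kr_phone(raw):
    """Return a South Korean number in dashed domestic form, or None if unrecognized."""
    digits = re.sub(r"\D", "", raw or "")
    if digits.startswith("82"):
        digits = "0" + digits[2:]  # +82 country code -> leading 0
    if len(digits) == 8 and digits[:2] in ("15", "16", "18"):
        return f"{digits[:4]}-{digits[4:]}"  # representative numbers such as 1588-xxxx
    if digits.startswith("02") and len(digits) in (9, 10):
        return f"02-{digits[2:-4]}-{digits[-4:]}"  # Seoul area code
    if digits.startswith("0") and len(digits) in (10, 11):
        return f"{digits[:3]}-{digits[3:-4]}-{digits[-4:]}"  # other area codes, 010 mobiles
    return None


def dedupe_listings(listings):
    """Keep the first listing for each (normalized name, phone-or-address) key."""
    seen = set()
    unique = []
    for item in listings:
        name_key = re.sub(r"\s+", "", item.get("name", "")).lower()
        phone = normalize_kr_phone(item.get("phone"))
        fallback = re.sub(r"\s+", "", item.get("address", ""))
        key = (name_key, phone or fallback)
        if key in seen:
            continue
        seen.add(key)
        unique.append({**item, "phone": phone})
    return unique
```

Listings without a recognizable phone number fall back to the whitespace-stripped address, so near-duplicates that differ only in spacing still collapse.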

Specialized Use Cases for Naver Product Data Scraping

Beyond basic business information, Naver's ecosystem includes product listings and pricing data that can provide valuable competitive intelligence.

Naver Product Data Scraping enables businesses to:

Monitor competitor pricing strategies.

Identify emerging product trends.

Analyze consumer preferences through review sentiment.

Track promotional activities across the Korean market.

This specialized data collection requires targeted approaches that navigate Naver's shopping sections and product detail pages, often necessitating more sophisticated parsing logic than standard business listings.
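Price monitoring usually reduces to comparing successive snapshots. A minimal sketch, assuming each scrape is stored as a `{product_id: price}` mapping (a hypothetical format), reports relative price changes at or above a threshold.

```python
def price_changes(previous, current, threshold=0.05):
    """Return {product_id: relative change} for moves of at least `threshold` (0.05 = 5%)."""
    changes = {}
    for product_id, new_price in current.items():
        old_price = previous.get(product_id)
        if old_price is None or old_price == 0:
            continue  # new product or unusable baseline
        pct = (new_price - old_price) / old_price
        if abs(pct) >= threshold:
            changes[product_id] = round(pct, 4)
    return changes
```

Products present only in the new snapshot are skipped here; tracking them separately is one way to spot new launches, and products missing from the new snapshot may indicate delistings.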

Data Analysis and Utilization

The real value of Naver Map Business Data emerges during analysis and application. Consider these strategic applications:

Market Penetration Analysis: By mapping collected business density data, companies can identify underserved areas or regions with high competitive saturation. This spatial analysis helps optimize expansion strategies and resource allocation.
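A simple form of density mapping bins coordinates into a fixed grid and counts businesses per cell. The sketch assumes listings have already been converted to WGS84 latitude/longitude pairs; the `density_by_cell` name is hypothetical. A 0.01° cell is roughly 1.1 km north to south and somewhat narrower east to west at Korean latitudes.

```python
import math
from collections import Counter


def density_by_cell(points, cell_deg=0.01):
    """Count businesses per (lat, lon) grid cell; keys are integer cell indices."""
    counts = Counter()
    for lat, lon in points:
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts
```

`counts.most_common(10)` then lists the most saturated cells, while cells with few or no competitors (relative to a population or foot-traffic layer) are candidate underserved areas.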

Competitive Benchmarking: Aggregated ratings and review data provide insights into competitor performance and customer satisfaction. This benchmarking helps identify service gaps and opportunities for differentiation.
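When aggregating ratings, businesses with only a few reviews can distort a plain average. The sketch below reports both the raw mean and a shrunk (Bayesian-style) average that pulls small samples toward the overall mean. The `prior_weight` value is an assumption to tune, and the `(business, rating)` input format is hypothetical.

```python
from collections import defaultdict


def benchmark(reviews, prior_weight=2):
    """Summarize (business, rating) pairs per business.

    'shrunk' = (sum of ratings + prior_weight * global mean) / (count + prior_weight),
    so businesses with few reviews are pulled toward the overall average.
    """
    reviews = list(reviews)
    if not reviews:
        return {}
    global_mean = sum(rating for _, rating in reviews) / len(reviews)
    totals = defaultdict(lambda: [0.0, 0])  # business -> [rating sum, review count]
    for business, rating in reviews:
        totals[business][0] += rating
        totals[business][1] += 1
    return {
        business: {
            "reviews": n,
            "avg": round(total / n, 2),
            "shrunk": round((total + prior_weight * global_mean) / (n + prior_weight), 2),
        }
        for business, (total, n) in totals.items()
    }
```

Ranking competitors by `shrunk` rather than `avg` keeps a business with a single five-star review from outranking one with hundreds of consistently good reviews.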

Lead Generation and Outreach: Filtered business contact information enables targeted B2B marketing campaigns, partnership initiatives, and sales outreach programs tailored to specific business categories or regions.

How Can Retail Scrape Help You?

We understand the complexities involved in Naver Map API Scraping and the strategic importance of accurate Korean market data. Our specialized team combines technical expertise with deep knowledge of Korean digital ecosystems to deliver reliable, compliance-focused data solutions.

Our approach to Naver Map Business Data Extraction is built on three core principles:

Compliance-First Approach: We strictly adhere to Korean data regulations, ensuring all activities align with platform guidelines for ethical, legal scraping.

Korea-Optimized Infrastructure: Our tools are designed for Korean platforms, offering native language support and precise parsing for Naver’s unique data structure.

Insight-Driven Delivery: Beyond raw data, we offer value-added intelligence—market insights, tailored reports, and strategic recommendations to support your business in Korea.

Conclusion

Harnessing the information available through Naver Map Data Extraction offers significant competitive advantages for businesses targeting the Korean market. By implementing effective methods for scraping Naver Map business data with attention to legal compliance, technical best practices, and strategic application, organizations can develop a deeper understanding of the market and more targeted business strategies.

Whether you want to conduct market research, generate sales leads, or analyze competitive landscapes, the rich business data available through Naver Maps can transform your Korean market operations. However, the technical complexities and compliance considerations make this a specialized undertaking requiring careful planning and execution.

Need expert assistance with your Korean market data needs? Contact Retail Scrape today to discuss how our specialized Naver Map Scraping Tools and analytical expertise can support your business objectives.

Source: https://www.retailscrape.com/efficient-naver-map-data-extraction-business-listings.php

Originally Published By https://www.retailscrape.com/

#NaverMapDataExtraction#BusinessListingsScraping#NaverBusinessData#SouthKoreaMarketAnalysis#WebScrapingServices#NaverMapAPIScraping#CompetitorAnalysis#MarketIntelligence#DataExtractionSolutions#RetailDataScraping#NaverMapBusinessListings#KoreanBusinessDataExtraction#LocationDataScraping#NaverMapsScraper#DataMiningServices#NaverLocalSearchData#BusinessIntelligenceServices#NaverMapCrawling#GeolocationDataExtraction#NaverDirectoryScraping

Are you fed up with the manual grind of data collection for your marketing projects? Welcome to a new era of efficiency with Outscraper! This game-changing tool makes scraping Google search results simpler than ever before. In our latest blog post, we explore how Outscraper can revolutionize the way you gather data. With its easy-to-use interface, you don’t need to be a tech wizard to navigate it. The real-time SERP data and cloud scraping features ensure you can extract crucial information while protecting your IP address. What's even better? Outscraper is currently offering a lifetime deal for just $119, significantly slashed from the original price of $1,499. This makes it the perfect investment for marketers and business owners eager to enhance their strategies. Don't miss out on this fantastic opportunity to streamline your data-gathering process! Click the link to read the full review and discover how Outscraper can take your marketing game to the next level. #Outscraper #DataCollection #MarketingTools #GoogleSearch #LifetimeDeal #DigitalMarketing #DataScraping #TechReview #SEO #MarketingStrategies

#GoogleSearchResultsScraper#lifetimedeal#dataextractiontool#keywordsearches#SERPdata#AppSumo#webscrapingservice#cloudscraping#Outscraper#datastructuring#web scraping service
