#What risk is mitigated by HTTP headers

Why Regularly Tracking Google SERPs is Essential for Optimizing Your SEO Strategy

In today's digital era, standing out in search engine results is a primary goal for most websites. An essential strategy for maintaining and improving your online visibility is monitoring your search rankings. For companies trying to strengthen their digital presence, tracking Google SERPs (Search Engine Results Pages) is important. Track Google SERPs regularly to keep up with changing search algorithms and ensure that your website remains optimized and relevant.

Why is tracking Google SERPs important?

Knowing where your website stands in the search rankings is essential to any successful SEO strategy. Without regularly checking your rankings, you cannot measure the effect of your SEO efforts. By keeping an eye on these rankings, you can see how well your content is performing and find opportunities for improvement. When you track Google SERPs, you can follow your website's position over time and adjust your approach accordingly.

Search engines like Google are constantly evolving their algorithms, frequently making changes that affect how websites are ranked. These updates aren't always transparent, which is why tracking your rankings is critical. For example, if you notice a sudden drop in your rankings, it could be due to a Google algorithm update. Such a drop may signal that changes are needed in your SEO strategy, such as revisiting your keyword approach, improving content quality, or resolving technical SEO problems like broken links or slow page loading speeds.

Another reason to track Google SERPs is to stay ahead of your competition. Tracking your competitors' rankings lets you identify their strengths and weaknesses and adjust your own strategy. If a competitor suddenly ranks higher than you, it may be time to evaluate their SEO techniques and see what they are doing differently. This approach not only helps you hold or improve your rankings but also gives you valuable insight into emerging trends in your industry.

How to track Google SERPs effectively

Tracking Google SERPs can seem daunting at first, particularly for businesses that target a wide array of keywords. However, tools make this process easier. Some of the most popular include Ahrefs, SEMrush, and Google Analytics. These platforms let you enter your target keywords and monitor your website's rankings across various search results pages.

Another useful option is the Google SERP tracking tool. This tool provides comprehensive data on your rankings, allowing you to track keyword performance, detect changes in search rankings, and spot potential problems like keyword cannibalization (where multiple pages on your site compete for the same keyword). By using the Google SERP tracking tool, you can gain valuable insights that help refine your SEO strategy.

The benefits of SERP tracking

There are numerous benefits to consistently tracking your search rankings. Here are just a few:

Data-driven SEO adjustments: When you regularly track Google SERPs, you are better equipped to make informed, data-driven decisions. Whether you are tweaking the keywords you target, updating your meta descriptions, or editing content, SERP tracking gives you the insights needed to make strategic improvements.

Competitor analysis: By tracking your competitors' rankings, you gain insight into which strategies are working for them. This knowledge can guide your approach to content creation, backlink building, and on-page optimization, helping you close performance gaps and overtake competitors in the rankings.

Keyword optimization: Tracking your rankings for specific keywords lets you see which are performing well and which need improvement. You may find that a few high-performing keywords drive a sizable portion of your traffic, prompting you to focus on expanding related content. Conversely, tracking lets you identify low-performing keywords and revise or improve the content that targets them.

Adapting to algorithm updates: Google often rolls out algorithm updates, which can have a major impact on search rankings. When you regularly track Google SERPs, you can quickly spot any ranking shifts following an update. This allows you to react swiftly and mitigate any negative effects on your traffic or visibility.

Spotting trends and opportunities: Tracking your SERP positions over time lets you identify long-term trends and new opportunities. For example, if a particular piece of content consistently ranks well, you can capitalize on this by creating more content around that topic or expanding it to cover related subjects.
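To make the idea of spotting ranking shifts concrete, here is a minimal sketch in plain PHP. It assumes you already export positions from whichever tracking tool you use. The findRankDrops function, the sample keywords, and the threshold of 5 positions are all hypothetical and chosen only for illustration.

```php
<?php
// Hypothetical rank history: keyword => [previous position, current position].
// Position 1 is the top result, so a larger number means a worse ranking.
function findRankDrops(array $history, int $threshold): array
{
    $drops = [];
    foreach ($history as $keyword => [$previous, $current]) {
        $change = $current - $previous; // positive = moved down the results
        if ($change >= $threshold) {
            $drops[$keyword] = $change;
        }
    }
    return $drops;
}

// Sample data (made up for this example).
$history = [
    'laravel security' => [3, 4],
    'http headers'     => [5, 14],
    'serp tracking'    => [8, 6],
];

// Report only keywords that fell by 5 or more positions.
print_r(findRankDrops($history, 5));
```

A check like this could run after each tracking export, so a sharp drop following an algorithm update gets noticed quickly.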

Conclusion

Make SERP tracking a habit

SERP tracking should be a key element of any digital marketing strategy. Regularly tracking your search rankings keeps you on top of your SEO game, allowing you to adapt to changes in search algorithms, maintain a competitive edge, and optimize your content for the best possible performance.

By incorporating tools such as the Google SERP tracking tool into your process, you can gather valuable data to refine your SEO strategy and make informed decisions that improve your website's visibility and performance. When you track Google SERPs regularly, you take a proactive approach to maintaining and improving your online presence, supporting long-term success in the ever-changing world of digital marketing.

For further reading on advanced SEO strategies and the benefits of SERP tracking, check out this informative article from Search Engine Journal.

#Track Google SERPs#Serper for Google search via API#Search Query Here#High Quality Backlinks#How to see headers in developer tools#What risk is mitigated by HTTP headers#HTTP headers case sensitive

How to Prevent Cross-Site Script Inclusion (XSSI) Vulnerabilities in Laravel

Introduction

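Cross-Site Script Inclusion (XSSI) is a significant security vulnerability in which an attacker's page loads a script-like resource from your web application using a script tag. Because script includes are not blocked by the same-origin policy and the request is sent in the context of the user's session, any sensitive data exposed in that response can leak to the attacker, leading to data theft or unauthorized actions.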

In this post, we’ll explore what XSSI is, how it impacts Laravel applications, and practical steps you can take to secure your app.

What is Cross-Site Script Inclusion (XSSI)?

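XSSI occurs when a web application exposes sensitive data in a response that a browser will execute as a script, such as dynamically generated JavaScript or a JSONP endpoint. An attacker exploits this by referencing that URL from a page they control: when a logged-in user visits the page, the browser fetches the resource with the user's credentials and the attacker's code can read the exposed values. This can lead to unauthorized access to sensitive data and potentially compromise the entire application.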

Identifying XSSI Vulnerabilities in Laravel

To prevent XSSI, start by identifying potential vulnerabilities in your Laravel application:

Review Data Endpoints: Ensure that any API or data endpoint returns the appropriate Content-Type headers to prevent the browser from interpreting data as executable code.

Inspect Script Inclusions: Make sure that only trusted scripts are included and that no sensitive data is embedded within these scripts.

Use Security Scanners: Utilize tools like our Website Vulnerability Scanner to analyze your app for potential XSSI vulnerabilities and get detailed reports.

Screenshot of the free tools webpage where you can access security assessment tools.

Mitigating XSSI Vulnerabilities in Laravel

Let’s explore some practical steps you can take to mitigate XSSI risks in Laravel.

1. Set Correct Content-Type Headers

Make sure that any endpoint returning JSON or other data formats sets the correct Content-Type header to prevent browsers from interpreting responses as executable scripts.

Example:

return response()->json($data);

Laravel’s response()->json() method automatically sets the Content-Type header to application/json, which is a simple and effective way to reduce the risk of XSSI.
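Outside of Laravel's helper, the same idea can be sketched in plain PHP. The jsonResponseParts function below is a hypothetical helper, not part of Laravel. Adding X-Content-Type-Options: nosniff is an assumption on top of the text above, included so browsers do not second-guess the declared type.

```php
<?php
// Builds the headers and body for a JSON API response.
// jsonResponseParts is an illustrative name, not a framework function.
function jsonResponseParts(array $data): array
{
    return [
        'headers' => [
            // Declare the payload as JSON so it is not treated as a script
            'Content-Type' => 'application/json',
            // Ask the browser not to sniff a different type (assumed extra)
            'X-Content-Type-Options' => 'nosniff',
        ],
        'body' => json_encode($data, JSON_THROW_ON_ERROR),
    ];
}

$parts = jsonResponseParts(['status' => 'ok']);

// Print the headers and body as they would appear in a response.
foreach ($parts['headers'] as $name => $value) {
    echo "$name: $value\n";
}
echo $parts['body'], "\n";
```

In a real application these headers would be sent with header() or attached to the framework's response object rather than echoed.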

2. Avoid Including Sensitive Data in Scripts

Never expose sensitive data directly within scripts. Instead, return data securely through API endpoints.

Insecure Approach:

echo "<script>var userData = {$userData};</script>";

Secure Approach:

return response()->json(['userData' => $userData]);

This method ensures that sensitive data is not embedded within client-side scripts.
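When a non-sensitive value (a UI preference, for example) does have to be embedded in a page, escaping it carefully is a reasonable precaution. The sketch below uses PHP's json_encode with its JSON_HEX_* flags. The encodeForInlineScript name and the sample data are hypothetical, and this does not make it safe to embed sensitive data.

```php
<?php
// Encodes a value so it can sit inside an inline <script> block without
// breaking out of it. Illustrative helper, not a Laravel API.
function encodeForInlineScript($value): string
{
    // The HEX flags turn <, >, &, ' and " inside strings into \uXXXX escapes
    $flags = JSON_HEX_TAG | JSON_HEX_AMP | JSON_HEX_APOS | JSON_HEX_QUOT
        | JSON_THROW_ON_ERROR;

    return json_encode($value, $flags);
}

// Sample non-sensitive data containing a hostile-looking string.
$settings = [
    'theme' => 'dark',
    'note'  => '</script><script>alert(1)</script>',
];

$json = encodeForInlineScript($settings);
echo $json, "\n";
```

The encoded string still decodes to the original values on the client, but it no longer contains raw angle brackets that could close the surrounding script element.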

3. Implement Content Security Policy (CSP)

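A Content Security Policy (CSP) restricts which sources your pages may load scripts from. It does not stop another site from including your endpoints, so treat it as a complement to the steps above rather than a complete XSSI fix.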

Example:

Content-Security-Policy: script-src 'self' https://trusted.cdn.com;

This allows scripts to load only from your trusted sources, minimizing the risk of malicious script inclusion.
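A policy string like the one above can be assembled from an array, which keeps longer policies readable. This is a minimal sketch: buildCsp is a hypothetical helper, and the default-src directive is an assumption added for illustration.

```php
<?php
// Joins CSP directives into a single header value.
// Input shape (assumed): directive name => list of allowed sources.
function buildCsp(array $directives): string
{
    $parts = [];
    foreach ($directives as $name => $sources) {
        $parts[] = $name . ' ' . implode(' ', $sources);
    }

    return implode('; ', $parts);
}

$policy = buildCsp([
    'default-src' => ["'self'"],
    'script-src'  => ["'self'", 'https://trusted.cdn.com'],
]);

// Prints the full header line.
echo 'Content-Security-Policy: ', $policy, "\n";
```

Keeping the directives in one array also makes it easier to review which external sources are trusted.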

4. Validate and Sanitize User Inputs

Always validate and sanitize user inputs to prevent malicious data from being processed or included in scripts.

Example:

$request->validate([ 'inputField' => 'required|string|max:255', ]);

Laravel’s built-in validation mechanisms help ensure that only expected, safe data is processed.
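For readers curious what a rule string such as required|string|max:255 roughly checks, here is a plain PHP approximation. It is a simplified sketch, not Laravel's actual implementation. The validateInputField function and its messages are hypothetical.

```php
<?php
// Rough stand-in for the rule 'required|string|max:255'.
// Returns a list of error messages; an empty list means the value passed.
function validateInputField($value): array
{
    if ($value === null || (is_string($value) && trim($value) === '')) {
        return ['inputField is required.'];
    }

    if (!is_string($value)) {
        return ['inputField must be a string.'];
    }

    // Count characters when mbstring is available, bytes otherwise
    $length = function_exists('mb_strlen') ? mb_strlen($value) : strlen($value);
    if ($length > 255) {
        return ['inputField may not be longer than 255 characters.'];
    }

    return [];
}

var_dump(validateInputField('hello'));
var_dump(validateInputField(str_repeat('a', 256)));
```

In a Laravel application the built-in validator remains the better choice; the sketch only shows the kind of checks involved.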

5. Regular Security Assessments

Conduct regular security assessments to proactively identify potential vulnerabilities. Tools like our free Website Security Scanner can provide detailed insights into areas that need attention.

An Example of a vulnerability assessment report generated with our free tool, providing insights into possible vulnerabilities.

Conclusion

Preventing Cross-Site Script Inclusion (XSSI) vulnerabilities in your Laravel applications is essential for safeguarding your users and maintaining trust. By following best practices like setting proper content-type headers, avoiding sensitive data exposure, implementing CSP, validating inputs, and regularly assessing your app’s security, you can significantly reduce the risk of XSSI attacks.

Stay proactive and secure your Laravel applications from XSSI threats today!

For more insights into securing your Laravel applications, visit our blog at Pentest Testing Corp.

Does My ISP Know I Use a VPN?

ISP monitoring VPN usage

Internet Service Providers (ISPs) have the capability to monitor Virtual Private Network (VPN) usage of their users. A VPN is a tool that helps protect users' privacy by encrypting internet traffic and masking their IP address. While VPNs are commonly used to enhance online security and privacy, ISPs have the ability to detect when their customers are using VPNs.

ISPs often monitor VPN usage for various reasons. One of the primary reasons is to comply with legal obligations. In some countries, ISPs are required by law to monitor internet traffic to prevent illegal activities such as copyright infringement, terrorism, or child exploitation. Because a VPN encrypts the traffic inside the tunnel, this monitoring typically reveals that a VPN is in use rather than which websites are visited, so any blocking tends to apply to the VPN connection itself.

In addition, ISPs may monitor VPN usage to manage network congestion and ensure a smooth internet experience for all users. By monitoring VPN usage, ISPs can identify heavy VPN users and allocate bandwidth more efficiently to prevent network slowdowns. This helps to maintain a stable internet connection for all customers.

Furthermore, ISPs may monitor VPN usage to enforce their terms of service. Some ISPs prohibit the use of VPNs on their networks, especially in corporate environments where VPNs can bypass security measures put in place by the organization. By monitoring VPN usage, ISPs can detect and take action against users who violate their terms of service.

Overall, while ISPs have the capability to monitor VPN usage, the extent to which they do so may vary depending on legal requirements, network management needs, and service policies. Users should be aware of their ISP's privacy practices and terms of service regarding VPN usage to ensure a secure and compliant online experience.

VPN detection by ISP

Understanding VPN Detection by ISPs: How it Works and What it Means for Users

As internet users become increasingly concerned about their online privacy and security, many are turning to Virtual Private Networks (VPNs) as a solution. VPNs encrypt internet traffic and route it through secure servers, masking users' IP addresses and making it difficult for third parties, including Internet Service Providers (ISPs), to monitor their online activities. However, some ISPs have developed methods to detect and potentially block VPN usage, raising questions about the effectiveness of VPNs in maintaining privacy.

ISPs employ various techniques to detect VPN traffic on their networks. One common method is Deep Packet Inspection (DPI), which involves analyzing the data packets transmitted over the network to identify characteristics unique to VPN traffic. By examining packet headers, DPI systems can differentiate between regular internet traffic and VPN-encrypted data. Additionally, ISPs may use behavioral analysis to detect patterns associated with VPN usage, such as consistent use of specific IP addresses or ports commonly used by VPN protocols.

The implications of VPN detection by ISPs vary depending on the user's location and the ISP's policies. In some regions, ISPs may block access to VPN servers or throttle VPN traffic, limiting the effectiveness of VPNs in circumventing censorship or accessing geo-restricted content. Furthermore, VPN detection may raise concerns about user privacy, as ISPs gain insights into users' online activities despite their efforts to maintain anonymity through VPNs.

For users, the detection of VPN usage by ISPs underscores the importance of choosing a reputable VPN provider and implementing additional privacy measures. Widely used protocols such as OpenVPN and WireGuard have recognizable traffic signatures, so they are not hard to detect on their own. Features like VPN obfuscation, which disguises VPN traffic as regular HTTPS traffic, can help reduce the risk of ISP interference and bypass detection.

In conclusion, while VPNs offer a valuable layer of privacy and security for internet users, the detection of VPN usage by ISPs poses challenges to maintaining anonymity and accessing unrestricted content. By understanding how ISPs detect VPN traffic and implementing appropriate measures, users can better protect their online privacy in an increasingly monitored digital landscape.

ISP awareness of VPN usage

Internet Service Providers (ISPs) play a crucial role in facilitating internet access for users worldwide. As more individuals and businesses prioritize online privacy and security, the use of Virtual Private Networks (VPNs) has become increasingly popular. VPNs encrypt internet traffic and hide users' IP addresses, providing anonymity and enhancing security while browsing the web.

ISPs are becoming increasingly aware of VPN usage among their customers. While VPNs can offer significant benefits in terms of privacy and security, they can also impact ISPs in various ways. ISPs may face challenges in monitoring and managing network traffic when users employ VPNs, as VPNs can mask the type of data being transmitted. This can make it difficult for ISPs to optimize network performance and ensure quality of service for all users.

Additionally, ISPs need to consider the legal and regulatory implications of VPN usage. Some countries impose restrictions on VPN usage in an attempt to control access to certain websites or online content. ISPs may need to navigate these regulations and work with authorities to ensure compliance while respecting users' privacy rights.

Furthermore, ISPs can take proactive measures to support and educate users about VPNs. By offering VPN services or providing information on reputable VPN providers, ISPs can empower users to make informed decisions about their online privacy and security.

In conclusion, the increased awareness of VPN usage among ISPs highlights the evolving landscape of online privacy and security. By addressing the challenges and opportunities associated with VPNs, ISPs can enhance their services and support users in navigating the complexities of the digital world.

Concealing VPN from ISP

Using a Virtual Private Network (VPN) is an effective way to protect your online privacy and security by encrypting your internet connection. However, some internet service providers (ISPs) may not be fond of VPN usage as it prevents them from monitoring your online activities. If you are looking to conceal your VPN usage from your ISP, there are several steps you can take.

One method to conceal your VPN usage from your ISP is to use obfuscated servers provided by your VPN service. These servers make your VPN traffic appear as regular internet traffic, making it harder for the ISP to detect and block your VPN connection.

Another approach is to change the default VPN port. By shifting to a less common port, you can evade detection by ISPs that may be scanning for VPN traffic on standard ports.

Additionally, enabling the VPN's kill switch feature can prevent data leaks if your VPN connection drops unexpectedly, so your ISP does not see unencrypted traffic in the meantime. This protects your activity rather than hiding the fact that you use a VPN.

It's also advisable to regularly update your VPN software to ensure it includes the latest security features and encryption protocols, making it more challenging for your ISP to detect and block your VPN connection.

By following these steps and staying vigilant about your online privacy, you can effectively conceal your VPN usage from your ISP and enjoy a more secure and private internet browsing experience.

VPN traffic encryption detection

Detecting VPN traffic encryption is a complex endeavor that poses challenges for network administrators and security analysts. VPNs (Virtual Private Networks) are designed to encrypt data traffic, providing users with privacy and security, but this encryption can also make it difficult to distinguish between legitimate VPN usage and potentially malicious activity.

One method used to detect VPN traffic encryption is through deep packet inspection (DPI). DPI involves analyzing the contents of data packets as they travel across a network. By examining packet headers and payloads, DPI can identify characteristics commonly associated with VPN traffic, such as specific encryption protocols and packet sizes.

Another approach is to monitor network traffic patterns. VPNs often exhibit distinct patterns, such as a high volume of outgoing connections to known VPN server IP addresses or consistent use of specific ports associated with VPN protocols like OpenVPN or IPsec. By analyzing these patterns, security teams can identify and potentially block VPN traffic.
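The port-based part of this pattern matching can be sketched in a few lines of plain PHP. The port numbers are the commonly used defaults for each protocol. The guessVpnProtocol function and the lookup table name are hypothetical, and real detection systems combine many more signals.

```php
<?php
// Commonly used default ports, keyed as "transport/port".
const DEFAULT_VPN_PORTS = [
    'udp/1194'  => 'OpenVPN',
    'udp/51820' => 'WireGuard',
    'udp/500'   => 'IPsec (IKE)',
    'udp/4500'  => 'IPsec (NAT traversal)',
];

// Returns a protocol guess for a connection, or null when the port is
// not a well-known VPN default. Illustrative heuristic only.
function guessVpnProtocol(string $transport, int $port): ?string
{
    $key = strtolower($transport) . '/' . $port;

    return DEFAULT_VPN_PORTS[$key] ?? null;
}

var_dump(guessVpnProtocol('UDP', 1194));
var_dump(guessVpnProtocol('tcp', 443));
```

A lookup like this also shows why moving a VPN to a non-default port, or tunneling it over TCP 443, defeats the simplest form of detection.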

Furthermore, some advanced threat detection systems utilize machine learning algorithms to identify anomalous behavior indicative of VPN usage. By training these algorithms on large datasets of network traffic, these systems can learn to recognize patterns associated with encrypted VPN traffic and flag suspicious activity for further investigation.

However, detecting VPN traffic encryption is not foolproof. As VPN technology evolves, so too do the methods for obfuscating VPN traffic to evade detection. Encapsulation techniques, such as tunneling VPN traffic within other protocols like HTTPS or SSH, can make it even more challenging to identify and intercept VPN connections.

In conclusion, while detecting VPN traffic encryption presents significant challenges, it is not impossible. By employing a combination of deep packet inspection, traffic pattern analysis, and advanced threat detection techniques, network administrators can enhance their ability to identify and mitigate potential security risks associated with VPN usage.

Google Answers If Security Headers Offer Ranking Influence

In the ever-evolving landscape of SEO (Search Engine Optimization), website security is a paramount concern. As the best digital marketing agency in Gurugram (Gurgaon), we understand the critical importance of not only optimizing for search engine rankings but also ensuring the safety and security of websites. One common security practice is the implementation of security headers, which play a significant role in safeguarding websites against various threats. But the question arises: Do these security headers have any influence on search engine rankings? In this blog, we delve into this topic to provide you with a comprehensive understanding of the relationship between security headers and SEO.

Understanding Security Headers

Security headers, often referred to as HTTP security headers or response headers, are an essential component of web security. They are additional name and value fields included in the HTTP response sent by a web server when a user's browser requests a web page. These headers provide instructions to the browser on how to handle the content and what security precautions to take. While there are various security headers available, here are some of the most common ones:

HTTP Strict Transport Security (HSTS): This header enforces secure connections by instructing the browser to only access the website over HTTPS (HTTP Secure). It helps prevent man-in-the-middle attacks.

Content Security Policy (CSP): CSP defines which resources (scripts, styles, images, etc.) can be loaded and executed on a web page. It mitigates the risk of cross-site scripting (XSS) attacks.

X-Content-Type-Options: This header prevents browsers from interpreting files as something other than what they are (e.g., treating a text file as JavaScript). It helps protect against MIME-sniffing attacks.

X-Frame-Options: X-Frame-Options controls whether a web page can be displayed in a frame or iframe. It helps mitigate clickjacking attacks.

Referrer-Policy: This header controls how much information is included in the HTTP referer header when a user clicks on a link. It can help protect user privacy.
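The five headers above could be collected into one baseline set, sketched here in plain PHP. The specific values are assumptions chosen as conservative starting points, and baselineSecurityHeaders is a hypothetical helper. Each value should be adjusted to the site in question.

```php
<?php
// Returns a baseline set of security headers (header name => value).
// Values are illustrative defaults, not a universal recommendation.
function baselineSecurityHeaders(): array
{
    return [
        // Ask browsers to use HTTPS only for the next year
        'Strict-Transport-Security' => 'max-age=31536000; includeSubDomains',
        // Load resources only from the site's own origin
        'Content-Security-Policy'   => "default-src 'self'",
        // Disable MIME type sniffing
        'X-Content-Type-Options'    => 'nosniff',
        // Refuse to be rendered inside frames
        'X-Frame-Options'           => 'DENY',
        // Send only the origin on cross-origin navigations
        'Referrer-Policy'           => 'strict-origin-when-cross-origin',
    ];
}

foreach (baselineSecurityHeaders() as $name => $value) {
    echo "$name: $value\n";
}
```

In practice these would be sent from the web server configuration or application middleware instead of being printed.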

The SEO Impact of Security Headers

Now, let's explore whether implementing these security headers has any direct influence on search engine rankings. While Google doesn't explicitly state that security headers are a ranking factor, several indirect benefits can positively impact SEO:

1. HTTPS Ranking Boost:

One of the most significant SEO benefits of implementing security headers like HSTS is that they enforce the use of HTTPS. Google has officially stated that HTTPS is a ranking signal. Websites using HTTPS receive a slight ranking boost compared to their HTTP counterparts. Therefore, by ensuring secure connections through security headers, you indirectly contribute to your website's SEO.

2. User Trust and Engagement:

Security headers, especially those related to content security and XSS prevention (e.g., CSP headers), help protect your website visitors from malicious scripts and attacks. When users feel safe and secure on your site, they are more likely to engage with your content, stay longer, and reduce bounce rates. These positive user engagement signals can indirectly influence SEO rankings.

3. Avoiding Penalties:

Implementing security headers can help you avoid potential penalties from search engines. For instance, if your website is compromised due to a lack of security measures, it may get flagged as potentially harmful by search engines, leading to a drop in rankings. Security headers help mitigate these risks.

4. Page Loading Speed:

While not a direct ranking factor, page loading speed is a crucial user experience factor that can impact SEO. Some security headers can help here: with HSTS, a returning browser goes straight to HTTPS instead of first requesting the HTTP version and following a redirect, which saves a round trip and can indirectly contribute to better rankings.

5. Compatibility with Modern Standards:

Search engines value websites that adhere to modern web standards, including security practices. Implementing security headers shows your commitment to maintaining a secure and up-to-date website, which can positively influence search engines' perception of your site.

Implementing Security Headers for SEO

If you're considering implementing security headers to enhance your website's security and indirectly boost SEO, here's a simplified guide to get started:

Assessment: Begin by assessing your website's current security posture and identifying potential vulnerabilities. Tools like security scanners and auditing services can be helpful.

Select Appropriate Headers: Depending on your website's needs and potential threats, choose the security headers that are most relevant. Common headers like HSTS, CSP, and X-Content-Type-Options are a good starting point.

Configuration: Configure the selected headers according to best practices. This may involve modifying your web server's configuration or using security plugins if you're on a content management system (CMS) like WordPress.

Testing: Thoroughly test your website after implementing security headers to ensure there are no conflicts or issues with existing scripts or functionalities.

Monitoring: Continuously monitor your website's security and SEO performance. Regularly update your security headers to adapt to evolving threats.

Reporting: Set up reporting and analytics to track the impact of security headers on user engagement and SEO metrics.

Conclusion

While security headers themselves may not be a direct ranking factor, they play a vital role in website security, user trust, and overall SEO indirectly. Implementing security headers is a proactive measure that not only protects your website and users but also aligns with search engines' preferences for secure and user-friendly web experiences. As the best digital marketing agency in Gurugram (Gurgaon), we recommend prioritizing website security alongside your SEO efforts to ensure a robust online presence that benefits both your business and your audience.

#digital marketing company in gurgaon#best digital marketing company in gurgaon#best digital marketing agency in gurugram

Business Email Compromise

What is Business Email Compromise (BEC)?

Osterman Research characterizes BEC as a specific type of phishing (spear phishing) attack that relies on targeting, that is, going after a particular individual or job role in an organization, and that typically seeks a monetary payment as a direct result. BEC attacks differ from other types of cyber threats in that they rely entirely on social engineering, exploiting human susceptibility to plausible requests.

These attacks are becoming notoriously hard to prevent because traditional threat detection solutions (inbound email security) that analyze email headers, links, and metadata often miss these attack techniques. Spear phishing emails, messages, or calls are aimed at specific people or organizations. You won't find messages full of bad grammar, broken links, or punctuation errors. Such attacks don't generally carry malware, include weaponized links, or try to compromise account credentials.

Instead, in this technique, attackers carefully research and spend time to make the messages highly personalized – right from sending an email from a lookalike company email domain down to adding company sayings, slogans, or common phrases to appear more legitimate. Such social engineering tricks include establishing rapport (pretexting), promising personal benefit, and invoking urgency.
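One simple way to flag a lookalike sender domain is to measure its edit distance from a trusted domain. The sketch below uses PHP's built-in levenshtein function. The isLookalikeDomain helper, the example domains, and the distance limit of 2 are hypothetical, and this is not a description of how any particular product works.

```php
<?php
// Flags a domain that is close to, but not identical to, a trusted one.
// Illustrative heuristic; real filters use many additional signals.
function isLookalikeDomain(string $candidate, string $trusted, int $maxDistance = 2): bool
{
    $candidate = strtolower($candidate);
    $trusted   = strtolower($trusted);

    if ($candidate === $trusted) {
        return false; // the genuine domain is not a lookalike
    }

    // Small edit distance means only a character or two was changed
    return levenshtein($candidate, $trusted) <= $maxDistance;
}

var_dump(isLookalikeDomain('examp1e.com', 'example.com'));
var_dump(isLookalikeDomain('example.com', 'example.com'));
var_dump(isLookalikeDomain('unrelated.org', 'example.com'));
```

A check like this catches single-character swaps, although it would miss lookalikes built from different top-level domains or added words.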

How RMail Helps You Prevent and Pre-Empt BEC Scams

Well known for its Registered Email™ and Registered Encryption™ features that mitigate risk by providing proof of who said what when, or audit-ready proof of the fact of privacy compliance, RMail’s AI continues to evolve. It now includes a suite of features designed to pre-empt cybercrime: PRE-Crime™. Put simply, this means stopping the e-crime after the hook is in, but before the steal (crime) completes – a boon to thwart and pre-empt business email compromise attacks.

PRE-Crime has components designed to alert you or your administrator of a potential e-crime in progress before it is too late - whether the cyber trickery is happening inside your organization or at your recipient’s email account.

Pre-Empt BEC Cybercrimes Effectively with RMail

Each of the RMail technologies discussed above is an additive layer covering areas that the email security gateway systems employed by companies either do not address or address only partially. RMail’s security services run within Microsoft Outlook, making them seamless to use. In addition, irrespective of your existing email systems, these RMail technologies also look beyond the boundaries of normal email security server filtering capabilities.

For more information: https://rmail.com/learn/business-email-compromise

Protect Your Laravel Application from Clickjacking Attacks

In today's digital landscape, protecting your web application from various security threats is crucial. One such threat is Clickjacking, an attack that tricks users into clicking on invisible or disguised elements on a webpage. For developers using the Laravel framework, ensuring your application is safe from clickjacking is essential.

In this post, we'll explore what clickjacking is and how to prevent it in your Laravel application. Plus, we’ll show you how to use our free Website Security Checker tool to assess potential vulnerabilities.

What is Clickjacking?

Clickjacking is a type of attack where malicious users embed your webpage into an invisible iframe on their site. The attacker then tricks the victim into clicking on the iframe, which can lead to unwanted actions like changing settings, submitting forms, or even transferring funds without their knowledge.

For example, a button that looks harmless on the surface might trigger an action you didn’t intend to take when clicked in an iframe. This type of attack can be devastating for your users’ privacy and your application’s security.

Preventing Clickjacking in Laravel

Fortunately, Laravel provides a straightforward way to mitigate the risk of clickjacking. Here's how you can do it:

Step 1: Use HTTP Headers

The best way to prevent clickjacking in your Laravel application is by setting proper HTTP headers. You can do this by adding the X-Frame-Options header to your application's response. This header tells the browser not to allow your webpage to be embedded in an iframe.

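In Laravel, you can add this header globally with a small dedicated middleware. Avoid editing app/Http/Middleware/VerifyCsrfToken.php for this purpose, because replacing its handle() method would switch off CSRF verification. The class name PreventClickjacking below is only an illustrative choice.

Here’s what the middleware can look like:

// app/Http/Middleware/PreventClickjacking.php
namespace App\Http\Middleware;

use Closure;
use Illuminate\Http\Request;

class PreventClickjacking
{
    public function handle(Request $request, Closure $next)
    {
        // Let the rest of the stack build the response first
        $response = $next($request);

        // Add X-Frame-Options so browsers refuse to render the page in a frame
        $response->headers->set('X-Frame-Options', 'DENY');

        return $response;
    }
}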

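With this code, the header X-Frame-Options: DENY ensures that no website can embed your pages in an iframe. If your own site needs to frame its pages, use SAMEORIGIN instead of DENY, which permits framing only by pages from the same origin. To allow specific other websites, use the CSP frame-ancestors directive described in Step 2.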

Step 2: Use Content Security Policy (CSP)

Another robust method to prevent clickjacking attacks is by using a Content Security Policy (CSP). In Laravel, you can send the CSP header from a middleware. With the frame-ancestors directive, you can specify exactly which websites are allowed to load your pages in an iframe.

Here’s an example of how you can configure the CSP in your Laravel application:

    // app/Http/Middleware/ContentSecurityPolicy.php
    namespace App\Http\Middleware;

    use Closure;
    use Illuminate\Http\Request;

    class ContentSecurityPolicy
    {
        public function handle(Request $request, Closure $next)
        {
            // Build the response first, then attach the header to it
            $response = $next($request);

            // frame-ancestors 'none' forbids every site from framing the page
            $response->headers->set('Content-Security-Policy', "frame-ancestors 'none'");

            return $response;
        }
    }

This ensures that no site can embed your application within an iframe.
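When some sites do need to embed your pages, the directive can list them instead of using 'none'. The following is a minimal sketch in plain PHP, not part of Laravel: the function name buildFrameAncestors and the origin partner.example are assumptions made for illustration, and the origin check is deliberately strict (HTTPS scheme, host, optional port).

```php
<?php
// Hypothetical helper: builds a frame-ancestors directive from a list of origins.
// An empty list yields 'none', which forbids all framing.
function buildFrameAncestors(array $allowedOrigins): string
{
    if ($allowedOrigins === []) {
        return "frame-ancestors 'none'";
    }

    foreach ($allowedOrigins as $origin) {
        // Keep the sketch strict: https scheme, host, and optional port only.
        if (!preg_match('#\Ahttps://[a-z0-9.-]+(:\d+)?\z#i', $origin)) {
            throw new InvalidArgumentException("Unexpected origin: {$origin}");
        }
    }

    return 'frame-ancestors ' . implode(' ', $allowedOrigins);
}

echo buildFrameAncestors([]), PHP_EOL;
echo buildFrameAncestors(['https://partner.example']), PHP_EOL;
```

The returned string could be passed as the header value in the middleware above in place of the hard-coded policy.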

Why Use Our Free Website Security Checker?

After implementing these preventive measures, it’s important to test your application’s security. Our free Website Security Scanner tool provides a comprehensive vulnerability assessment for your website, including tests for clickjacking and other security issues.
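A quick local check can also confirm that the headers are actually being sent before you run an external scan. The sketch below is plain PHP under stated assumptions: restrictsFraming is a hypothetical name, the headers are assumed to arrive as a simple name-to-string map (for example, collected from a test response), and the function only checks that a protection is present, not that the policy is as tight as you intended.

```php
<?php
// Hypothetical checker: reports whether a set of response headers restricts framing.
// $headers maps header names to string values.
function restrictsFraming(array $headers): bool
{
    // Header names are case-insensitive, so normalise them first.
    $headers = array_change_key_case($headers, CASE_LOWER);

    $xfo = strtoupper(trim($headers['x-frame-options'] ?? ''));
    if ($xfo === 'DENY' || $xfo === 'SAMEORIGIN') {
        return true;
    }

    // The presence of a frame-ancestors directive is treated as a restriction;
    // this sketch does not evaluate how permissive the listed sources are.
    $csp = strtolower($headers['content-security-policy'] ?? '');
    return strpos($csp, 'frame-ancestors') !== false;
}

var_dump(restrictsFraming(['X-Frame-Options' => 'DENY']));
var_dump(restrictsFraming(['Content-Type' => 'text/html']));
```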

Here’s a screenshot of our free tool in action:

Screenshot of the free tools webpage where you can access security assessment tools.

Conclusion

Securing your Laravel application against clickjacking attacks is crucial for protecting your users and ensuring your web app remains safe. By following the steps outlined above, you can significantly reduce the risk of such attacks. Additionally, our free Website Security Checker can help you ensure that your site is not vulnerable to clickjacking or any other security issues.

And here’s an example of the vulnerability assessment report generated by our free tool:

An example of a vulnerability assessment report generated with our free tool provides insights into possible vulnerabilities.

By regularly using security tools and following best practices, you can enhance your website’s defenses and maintain a safe browsing environment for your users.

Take Action Today

Start testing your website with our free website security tool and take the first step in protecting your site from clickjacking and other cyber threats.

#Clickjacking#laravel#cyber security#cybersecurity#data security#pentesting#security#the security breach show


CRLF Injection in Laravel: How to Identify & Prevent It (Guide)

Introduction to CRLF Injection in Laravel

CRLF (Carriage Return Line Feed) Injection is a web application vulnerability that can lead to critical security issues like HTTP response splitting and log poisoning. In Laravel, a popular PHP framework, this vulnerability can arise if user input is not properly sanitized, allowing attackers to manipulate HTTP headers and responses.

What is CRLF Injection?

CRLF Injection happens when an attacker injects CRLF characters (\r\n) into user-controlled input, which can manipulate the behavior of HTTP responses. This can lead to serious issues such as:

HTTP Response Splitting: Attackers may split a single HTTP response into multiple responses.

Log Poisoning: Attackers can tamper with server logs, potentially hiding malicious activities.
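Log poisoning works because a line break in the input starts what looks like a new log entry. A minimal sketch of one common countermeasure, in plain PHP, is to make line breaks visible before writing; the function name sanitizeForLog is an assumption made for illustration.

```php
<?php
// Hypothetical helper: replace CR and LF with visible escapes
// so that one logged event always stays on one line.
function sanitizeForLog(string $input): string
{
    return str_replace(["\r", "\n"], ['\\r', '\\n'], $input);
}

$userInput = "guest\r\n[2024-01-01] admin logged in";
echo 'Login attempt by: ' . sanitizeForLog($userInput), PHP_EOL;
```

The forged second entry now appears inside the original line, where a reviewer can see it for what it is.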

Example of CRLF Injection in Laravel

Here’s an example of a vulnerable Laravel function that sets a custom HTTP header based on user input:

    public function setCustomHeader(Request $request)
    {
        $value = $request->input('value');
        header('X-Custom-Header: ' . $value);
    }

An attacker could submit a malicious input like:

malicious_value\r\nX-Injected-Header: malicious_value

This would result in the following HTTP headers:

    X-Custom-Header: malicious_value
    X-Injected-Header: malicious_value

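As you can see, the injected text becomes a separate header, compromising the security of the application. Current PHP versions refuse header() values that contain line breaks, which blocks this exact payload, but the same pattern stays dangerous wherever raw input reaches headers or logs by another route.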
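The split can be reproduced without a web server. This sketch builds the header line by string concatenation, as the vulnerable function does, and then splits on CRLF the way a client or proxy reading the response would; it does not call header() and is an illustration only.

```php
<?php
// Illustration only: concatenate raw input into a header line,
// then see how many lines a parser would read.
$value = "malicious_value\r\nX-Injected-Header: malicious_value";
$raw = 'X-Custom-Header: ' . $value;

// A CRLF sequence terminates a header line, so splitting on it shows
// what would be treated as separate headers.
$lines = explode("\r\n", $raw);

foreach ($lines as $line) {
    echo $line, PHP_EOL;
}
```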

How to Prevent CRLF Injection in Laravel

1. Validate and Sanitize User Input

Ensure that all user inputs are validated to prevent malicious characters. Use Laravel’s validation methods:

    $request->validate([
        'value' => 'required|alpha_num',
    ]);
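Some header values legitimately contain punctuation, so an alphanumeric rule will not always fit. In that case a narrower guard that rejects only line breaks may be enough. The sketch below is plain PHP; assertSafeHeaderValue is a hypothetical name, not a Laravel function.

```php
<?php
// Hypothetical guard: refuse header values that contain CR or LF
// rather than trying to repair them.
function assertSafeHeaderValue(string $value): string
{
    if (preg_match('/[\r\n]/', $value) === 1) {
        throw new InvalidArgumentException('Header value contains a line break.');
    }

    return $value;
}

try {
    assertSafeHeaderValue("malicious_value\r\nX-Injected-Header: malicious_value");
} catch (InvalidArgumentException $e) {
    echo 'Rejected: ', $e->getMessage(), PHP_EOL;
}
```

Rejecting the value outright, instead of stripping characters, keeps the behaviour predictable and makes injection attempts show up as errors.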

2. Avoid Direct Header Manipulation

Instead of calling header() directly, set headers through Laravel's response object, and keep validating the value as in step 1:

    return response()
        ->json($data)
        ->header('X-Custom-Header', $value);

3. Use Framework Features for Redirection

Whenever possible, use Laravel’s redirect() helper to avoid direct manipulation of HTTP headers:

return redirect()->route('home');

4. Implement Security Headers

Add Content Security Policies (CSP) and other security headers to mitigate potential injection attacks.

Using Pentest Testing Corp. to Scan for CRLF Injection

Regular security scans are essential to detect vulnerabilities like CRLF Injection. Pentest Testing Corp. offers a website vulnerability scanner that can help identify this risk and other potential issues in your Laravel application.

Screenshot of Pentest Testing Corp.'s website vulnerability scanner interface.

Analyzing Vulnerability Assessment Reports

After running a website vulnerability scan, reviewing the vulnerability assessment report is crucial. These reports help identify weaknesses and offer suggestions for remediation.

Screenshot of a website vulnerability assessment report showing CRLF Injection risks.

Conclusion

CRLF Injection is a serious security issue for Laravel applications. By validating user input, using secure response handling, and scanning for vulnerabilities regularly, developers can protect their applications from this type of attack.

For more information on web security, check out the Pentest Testing Corp. blog.


Have the biggest mega-caps run too far?

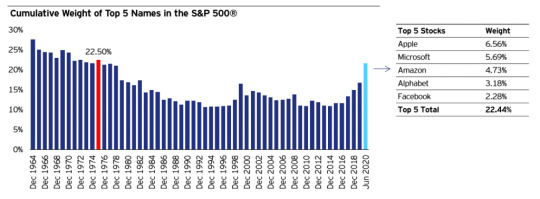

In 2020, US equity markets have taken a path that few could have seen coming. As a result, the S&P 500 Index has become more top-heavy than it’s been in 45 years. Below, I explain why this is the case, what it means for investors — and what they can do to help mitigate this concentration risk while maintaining exposure to the companies that make up this well-known benchmark.

The S&P 500 Index is dominated by just five holdings

Since its low on March 23, the S&P 500 Index has recovered by nearly 50% on the back of historic government and central bank intervention.1 Not only has the index turned positive for the year, it is now rapidly closing in on its Feb. 19 high. During this year’s equity roller coaster ride, the five largest holdings in the S&P 500 Index – Microsoft (MSFT), Apple (AAPL), Amazon (AMZN), Alphabet (GOOG/GOOGL) and Facebook (FB) – have shined brightly.2 The average year-to-date return among these largest five holdings is more than 36%,1 driven by the perceived safety of owning the biggest companies as well as the importance of technology and communication services in the work-at-home/stay-at-home world suddenly thrust upon us by the Great Lockdown.

Why is this outperformance by the mega caps important? Like many benchmark indexes, the S&P 500 uses market capitalization to weight securities. This means that despite the significant number of securities that are included, the risk and return of the index — and of the traditional index funds that track it — is driven by the largest holdings. The dominance of just a few large holdings on overall risk and return is called “concentration risk.”

The recent run up in these “biggest of the big” companies has created a situation in which the S&P 500 Index is more top-heavy than it has been in 45 years. As of Aug. 7, the top five stocks accounted for nearly 23% of the weight in the S&P 500 Index, up from 16.8% at the end of 2019 and widely surpassing the 16.6% observed in December 1999 (which was in the midst of the final build-up before the technology bubble burst in March 2000).3

Benchmark indexes face historic levels of concentration risk

As shown in the chart below, the current concentration risk of the S&P 500 is only a few percentage points from the high of 27.7% reached in 1964.3 This amount of concentration risk is one that traditional passive investors haven’t faced in over four decades. When concentration risk is the simple result of market capitalization (versus, for example, the intentional choices of an active manager), it may leave investors vulnerable in a few different scenarios: When valuations mean revert, when new competitors have a negative impact on the top companies, when regulatory risk emerges, and when the market experiences a rotation into more cyclical stocks.

Concentration risk: The concentration in the top 5 holdings is nearly 23%, a level not seen since 1975

The light blue bar represents the current concentration figures as of Aug. 7. The red bar illustrates the last time that the concentration of the index’s top 5 holdings was this high.

Source: S&P Global and Bloomberg, L.P., as of Aug. 7, 2020. In 1975, the top 5 holdings were IBM, Procter & Gamble, Exxon Mobil, 3M, and General Electric. An investment cannot be made directly into an index. Holdings are subject to change and are not buy/sell recommendations.

While the S&P 500 has enjoyed a healthy total return of 71.4% (11.4% annualized) since late July 2015, these five names had an average total return of 277% (30.4% annualized), nearly four times that of the S&P 500 Index from July 28, 2015-July 27, 2020.4 Their valuations have also expanded to the point that the average price/sales and price/earnings ratios for the top 5 are now 3x (7.43 vs. 2.33) and 2.3x (53.26 vs. 23.61) versus that of the S&P 500 benchmark, respectively.

Source: Bloomberg, L.P., as of July 27, 2020. An investment cannot be made into an index. Past performance is no guarantee of future results.
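The annualized figures quoted above can be reproduced from the cumulative returns, assuming a five-year holding period (July 28, 2015 to July 27, 2020) and geometric compounding. The symbols below are introduced here for illustration only.

```latex
\[
r_{\text{annual}} = \left(1 + R_{\text{total}}\right)^{1/5} - 1
\]
\[
\text{S\&P 500: } (1 + 0.714)^{1/5} - 1 \approx 0.114
\qquad
\text{Top five: } (1 + 2.77)^{1/5} - 1 \approx 0.304
\]
\[
\frac{277\%}{71.4\%} \approx 3.9,
\qquad
\frac{7.43}{2.33} \approx 3.2,
\qquad
\frac{53.26}{23.61} \approx 2.3
\]
```

The last line shows where "nearly four times", "3x" and "2.3x" come from.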

Against an ongoing backdrop of strength for these market giants, as evidenced by second-quarter earnings announcements, it is important for investors to remember that there are countless examples from financial history that tell us that the largest companies as measured by market capitalization do not maintain that lofty perch years into the future. As of today, the five largest companies in the S&P 500 have an aggregate weight that is higher than at any point since 1975, when the likes of IBM, Procter & Gamble, Exxon Mobil, 3M, and General Electric were the biggest of the big.5

Consider an equal-weight approach

Investors looking to diversify away from these top-heavy benchmarks while still maintaining exposure to their holdings may consider an equal-weight approach. Equal-weight strategies weight each of their holdings equally, so that overall performance cannot be dominated by a very small group of companies. So, using the example of the S&P 500, each of the 500 index companies would represent approximately 0.2% of the portfolio in an equal-weight strategy.
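As a rough worked comparison, using the article's round figure of 500 holdings and an idealized equal-weight portfolio at the moment of rebalancing (actual fund weights drift between rebalances):

```latex
\[
w_i = \frac{1}{N} = \frac{1}{500} = 0.2\%
\qquad\Longrightarrow\qquad
\sum_{i=1}^{5} w_i = 5 \times 0.2\% = 1\%
\]
```

Under that assumption the five largest companies would make up about 1% of the portfolio, against the nearly 23% they hold in the market-capitalization-weighted index.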

The Invesco S&P 500® Equal Weight ETF (RSP) or the Invesco Equally-Weighted S&P 500 Fund (VADAX) may provide a potential solution for those investors that may want to diversify their portfolio to help mitigate this concentration risk while still maintaining exposure to the S&P 500.

1 Source: Bloomberg L.P.; as of Aug. 3, 2020

2 Fund holdings as of Aug. 7, 2020. For RSP: (Apple, 0.24%. Microsoft, 0.21%. Amazon, 0.23%. Alphabet, 0.20%. Facebook, 0.22%.) For VADAX: (Apple, 0.21%. Microsoft, 0.21%. Amazon, 0.21%. Alphabet, 0.20%. Facebook, 0.19%.)

3 Source: S&P Global

4 Source: Bloomberg L.P. as of July 27, 2020

5 Fund holdings as of Aug. 7, 2020. For RSP: (IBM, 0.19%. Procter & Gamble, 0.21%. General Electric, 0.16%. 3M, 0.19%. Exxon Mobil, 0.17%.) For VADAX: (IBM, 0.19%. Procter & Gamble, 0.20%. General Electric, 0.18%. 3M, 0.20%. Exxon Mobil, 0.19%.)

Important information

Blog header image: Per Swantesson / Stocksy

The price-to-earnings (P/E) ratio measures a stock’s valuation by dividing its share price by its earnings per share.

The price-to-sales ratio is a valuation metric calculated by dividing a company’s market capitalization by its total sales over a 12-month period.

Risks for Invesco Equally-Weighted S&P 500 Fund

Derivatives may be more volatile and less liquid than traditional investments and are subject to market, interest rate, credit, leverage, counterparty and management risks. An investment in a derivative could lose more than the cash amount invested.

Because the fund operates as a passively managed index fund, adverse performance of a particular stock ordinarily will not result in its elimination from the fund’s portfolio. Ordinarily, the Adviser will not sell the fund’s portfolio securities except to reflect changes in the stocks that comprise the S&P 500 Index, or as may be necessary to raise cash to pay fund shareholders who sell fund shares.

The Fund is subject to certain other risks. Please see the current prospectus for more information regarding the risks associated with an investment in the Fund.

Risks for Invesco S&P 500® Equal Weight ETF

There are risks involved with investing in ETFs, including possible loss of money. Shares are not actively managed and are subject to risks similar to those of stocks, including those regarding short selling and margin maintenance requirements. Ordinary brokerage commissions apply. The Fund’s return may not match the return of the Underlying Index. The Fund is subject to certain other risks. Please see the current prospectus for more information regarding the risk associated with an investment in the Fund.

Investments focused in a particular industry or sector, are subject to greater risk, and are more greatly impacted by market volatility, than more diversified investments.

The Fund is non-diversified and may experience greater volatility than a more diversified investment. Shares are not individually redeemable and owners of the Shares may acquire those Shares from the Fund and tender those Shares for redemption to the Fund in Creation Unit aggregations only, typically consisting of 10,000, 50,000, 75,000, 80,000, 100,000, 150,000 or 200,000 Shares.

The opinions referenced above are those of the author as of Aug. 7, 2020. These comments should not be construed as recommendations, but as an illustration of broader themes. Forward-looking statements are not guarantees of future results. They involve risks, uncertainties and assumptions; there can be no assurance that actual results will not differ materially from expectations.

from Expert Investment Views: Invesco Blog https://www.blog.invesco.us.com/have-the-biggest-mega-caps-run-too-far/?utm_source=rss&utm_medium=rss&utm_campaign=have-the-biggest-mega-caps-run-too-far


A BRIEF SUMMARY OF YELLOWSTONE NATIONAL PARK & THE NATURAL HAZARDS THAT COULD OCCUR WHILE VISITING

BY: ISABELLA KARAPASHA

BACKGROUND:

ON THE PARK

Yellowstone National Park is a 3,468-square-mile park that spans the states of Wyoming, Montana, and Idaho. It is one of the most seismically active areas of the United States, with 700 to 3,000 earthquakes a year. It is also home to Old Faithful, a geyser that has erupted as often as every 44 minutes since 2000 (1). There are over 60 mammals with over 20 different species, making it the most diverse ecosystem in the continental 48 states (2).

ON TRAVEL

The park receives nearly 4 million visitors from around the globe every year, with July and August the most popular months. This heavy human traffic has contributed to a decrease in species through the spread of disease and litter in the environment (2).

An issue currently under discussion is the effect of snowmobiles and other oversnow vehicles on the natural environment as winter travel increases. Oversnow vehicles allow more visitors to experience Yellowstone during the winter season, yet recreational snowmobile use can harm Yellowstone's microclimate. Snowmobile emissions can pollute the air and water; the engines have been compared to jet engines and emit large amounts of dark smoke. Engine noise adds noise pollution. For locals, on the other hand, the increase in winter visitors benefits the surrounding cities and local businesses, which gain revenue and profit during the usually slow season. In response, the National Park Service has limited the number of oversnow vehicles in the park through a permit process (3).

Increased travel also means more interaction with Yellowstone's natural environment, which has repercussions. Since the park began operating in 1872, people have been killed by bears, drownings, and burns, although the majority of these incidents stem from human error (4).

RESEARCH QUESTION

This leads me to the question: can more strategies be enacted to ensure that visitors to Yellowstone National Park are not endangered in the event of a natural or geologic hazard?

HAZARDS THAT ARE PRESENT

The park puts several pre-disaster actions in place to keep its visitors from risk. For winter storms that can cause avalanches, there is an Environmental Impact Statement (EIS). The EIS was created in 1999 and addresses winter impacts through new technologies, limits on vehicle numbers, and mandatory guiding during the winter months, including avalanche mitigation. The park's monitoring team also tracks weather conditions and closes the roads to all traffic when needed. The program additionally relies on military weapons to trigger small avalanches so that large ones do not occur (5&9).

For geologic hazards, the Unstable Slope Management Plan is an asset management program that provides tools and guidance for monitoring slopes, assessing their condition, and tracking and assessing risk. The park's website also offers hazard maps, escape routes, first aid locations, shelters, and emergency contacts, along with advice to reduce exposure: stay on the trail, stay back from cliff edges, and obey posted warnings (6).

Earthquakes are frequent in the Yellowstone area, although not all are felt. There are ‘swarms’, collections of thousands of temblors that are characteristic of the supervolcano beneath Yellowstone (7). Yellowstone monitors these swarms through pre-planning and real-time GPS monitoring by the Yellowstone Volcano Observatory (YVO). YVO is a cooperative partnership between the US Geological Survey, the National Park Service, the University of Utah, the University of Wyoming, the University NAVSTAR Consortium, and the State Geological Surveys of Wyoming, Montana, and Idaho. Its work involves organizing and deploying scientific personnel and equipment to investigate current activity and assess possible outcomes and impacts, and maintaining monitoring systems, alert levels, and notification systems (5). Geographic Information Systems (GIS) play a crucial role in keeping visitors safe by monitoring hazards and, if need be, mitigating them by remapping affected areas (6).

ANSWER TO THE RESEARCH QUESTION

The biggest takeaway from this research is that these hazards are natural and cannot be controlled. By going to Yellowstone you are putting yourself at risk; to stay safe while there, you should stay on the trail, stay back from cliff edges, and obey posted warnings. The park has gone to great lengths to protect its visitors. That can be seen in the Yellowstone Volcano Observatory’s monitoring of volcanic activity, the Unstable Slope Management Plan for slopes, the Environmental Impact Statement for safety during winter seasons, and the National Park Service's planning through hazard maps, escape routes, first aid locations, shelters, emergency contacts, trail routes, and signs (6). The park has done its duty in ensuring that visitors to Yellowstone National Park are not endangered in the event of a natural or geologic hazard, and visitors have to understand that they are voluntarily taking a risk by visiting a park where natural hazards may occur.

IN CONCLUSION

Based on previous research, a major factor to consider is that many of these natural hazards are not controllable. For example, with unstable slopes and other geologic hazards, the main challenge is making people aware, because most of these hazards cannot be controlled; one can only be cautious and plan for before and after a hazard (5). That is why there is an Unstable Slope Management Plan: although going to Yellowstone means taking a personal risk, the park makes it its job to monitor unstable slopes and keep visitors as safe as possible. The same dedication shows in the creation and improvement of the Environmental Impact Statement for winter storms and in the supplemental environmental impact statement (8). The process allows for constant consideration and reconsideration of winter storm management, keeping visitors safe during the winter season through monitoring. The park has done its duty in ensuring that visitors to Yellowstone National Park are not endangered in the event of a natural or geologic hazard.

--------

CHANGES FROM THE FIRST DRAFT

After writing the first draft, I received feedback mainly about the organization and flow of my blog. I therefore rearranged my writing and adopted a new format, adding headers so the reader can easily see what each upcoming paragraph covers. Other feedback asked me to add photos and to answer my research question clearly. To address those comments, I added imagery to support certain sections of the paper, along with formatting that identifies the topic of discussion for the corresponding paragraphs.

References:

Reference 1:

https://en.wikipedia.org/wiki/Old_Faithful

Reference 2 :

https://www.nps.gov/yell/learn/nature/wildlife.htm

Reference 3:

Bieschke, B. (2016). Challenging the 2012 Rule Implementing Regulations on Oversnow Vehicle Use in Yellowstone National Park. Boston College Environmental Affairs Law Review, (Issue 2), 541. Retrieved from https://search-ebscohost-com.proxy.lib.miamioh.edu/login.aspx?direct=true&AuthType=cookie,ip&db=edshol&AN=edshol.hein.journals.bcenv43.26&site=eds-live&scope=site

Reference 4:

https://www.nps.gov/yell/learn/nature/injuries.htm

Reference 5:

https://www.nps.gov/yell/learn/management/upload/finalreport-march_2007.pdf

Reference 6:

https://www.nps.gov/yell/planyourvisit/safety.htm

Reference 7:

Thuermer Jr., A. M. (2017). Earthquake bigger risk than Yellowstone supervolcano. Wyoming Business Report, 18(6), 12–14. Retrieved from https://search-ebscohost-com.proxy.lib.miamioh.edu/login.aspx?direct=true&AuthType=cookie,ip&db=buh&AN=125396317&site=eds-live&scope=site

Reference 8:

Strategic Priorities of Hazard Management. (2019, May 28). Retrieved from https://www.nps.gov/yell/learn/management/strategic-priorities.htm

Reference 9:

https://www.researchgate.net/publication/252836265_A_GIS_Framework_for_Mitigating_Volcanic_and_Hydrothermal_Hazards_at_Yellowstone_National_Park_and_Vicinity

Reference 10:

Bieschke, B. (2016). Challenging the 2012 Rule Implementing Regulations on Oversnow Vehicle Use in Yellowstone National Park. Boston College Environmental Affairs Law Review, (Issue 2), 541. Retrieved from https://search-ebscohost-com.proxy.lib.miamioh.edu/login.aspx?direct=true&AuthType=cookie,ip&db=edshol&AN=edshol.hein.journals.bcenv43.26&site=eds-live&scope=site

Reference 11:

Wessels, John. “Winter Use Plan, Supplemental Environmental Impact Statement, Yellowstone National Park, Idaho, Montana, and Wyoming.” Federal Register (National Archives & Records Service, Office of the Federal Register), vol. 77, no. 26, Feb. 2012, p. 6581. EBSCOhost, search.ebscohost.com/login.aspx?direct=true&AuthType=cookie,ip&db=bth&AN=71942265&site=eds-live&scope=site.


New Post has been published on https://warmdevs.com/scrolling-and-attention.html

Scrolling and Attention

People’s behaviors are fairly stable and usability guidelines rarely change over time. But one user behavior that did change since the early days of the web is the tendency to scroll. In the beginning, users rarely scrolled vertically; but by 1997, as long pages became common, most people learned to scroll. However, the information above the fold still received most attention: even as recently as 2010, our eyetracking studies showed that 80% of users’ viewing time was spent above the fold.

Since 2010, with the advent of responsive design and minimalism, many designers have turned towards long pages (covering several “screenfuls”) with negative space. It’s time to ask, again, whether user behavior has changed due to the popularity of these web-design trends.

Eyetracking Data

About the Study

To answer that question, we analyzed the x, y coordinates of over 130,000 eye fixations on a 1920×1080 screen. These fixations were from 120 participants, who were part of our recent eyetracking study that involved thousands of sites from a wide range of sectors and industries. For this study, we focused our analysis on a broad range of user tasks that spanned a variety of pages and industries, including news, ecommerce, blogs, FAQs, and encyclopedic pages. Our goal was not to analyze individual websites, but rather to characterize the general range of user behaviors.

We compared these recent data with those obtained from our previous eyetracking study on 1024×768 monitors.

Study Results

Two changes happened between our studies: (a) bigger screens; and (b) new web-design trends, with possible adaptations on the side of the users. We can’t tease apart the relative impact of these two changes, but it doesn’t matter, since both are due to the passage of time, and we can’t undo either one, even if we wanted.

In our most recent study, users spent about 57% of their page-viewing time above the fold. 74% of the viewing time was spent in the first two screenfuls, up to 2160px. (This analysis disregards the maximum page length — the result can be due to short page lengths or to people giving up after the first two screenfuls of content.)

These findings are quite different from those reported in our 2010 article: there, 80% of the viewing time was made up of fixations above the fold. However, the pattern of a sharp decrease in attention following the fold remains the same in 2018 as in 2010.

Content above the fold receives by far the highest share of the viewing time. About 74% of the time was spent in the top two screenfuls of content (the information above the fold plus the screenful immediately below the fold). The remaining 26% was spent in small increments further down the length of the page.

Understandably, not every page is the same length. To determine how people divide their fixations across the page (independent of how long the page is), we split the pages into 20% segments (i.e., one-fifth of each page). On general websites, more than 42% of the viewing time fell within the top 20% of the page, and more than 65% of the time was spent in the top 40% of the page. On search-results pages (SERPs), which we did not isolate in the 2010 findings, 47% of the viewing time was spent on the top 20% of the page (and more than 75% in the top 40%) — likely a reflection of users’ tendency to look only at the top results.

People spent disproportionately more time viewing the top 20% of a page.

If we look only at content above the fold — within the first screenful — the information towards the top of the screen received more attention than the information towards the bottom. More than 65% of the viewing time above the fold was concentrated in the top half of the viewport. On SERP, the top half of the first screenful received more than 75% of the viewing time above the fold. (It’s an old truth, but bears repeating: be #1 or #2 on Google, or you hardly exist on the Internet. Google gullibility remains as strong as when we identified this user behavior, 10 years ago.)

Even above the fold, attention was focused toward the top of the page — especially with SERPs.

Scanning & Reading Patterns

We’ve seen that the content above the fold received most attention (57% of viewing time); the second screenful of content received about a third of that (17% viewing time); the remaining 26% was spread in a long-tail distribution. In other words, the closer a piece of information is to the top of the page, the higher the chance that it will be read.

Individual reading patterns confirm this finding. Many users engage in an F-pattern when they scan a page whose content is not well-structured — they tend to look more thoroughly at the text placed close to the top of the page (the first few paragraphs of text), and then spend fewer and fewer fixations and time on information that appears low on the page.

Even with lists or information presented in a structured way, people use more eye gazes (and thus reading time) for the top of the page, as they need to understand how the page is organized. Once they do so, they tend to focus very efficiently only on the information relevant to the task at hand, thus spending a lot fewer eye gazes (and thus viewing time) on the content placed farther from the top.

This is a representative gaze plot showing that most of the user’s eye fixations are concentrated in the top part of the page, though not always at the very top. The actual distribution of fixations will depend on the specific design and the user’s goal in visiting the page. Occasionally a user may read a little bit if the information seems interesting, but overall, views peter out further down the page.

2010 vs. Present

In 2010, 80% of the viewing time was spent above the fold. Today, that number is only 57% — likely a consequence of the pervasiveness of long pages. What does that mean?

First, it could be that, overall, designers are doing a good job of creating signifiers to counteract the illusion of completeness and to invite people to scroll. In other words, they are aware of the disadvantages of the long page and mitigate them to some extent. Second, it could mean that users have become conditioned to scroll — the prevalence of pages requiring scrolling has ingrained that behavior in us.

At least to some extent. People still don’t scroll a lot — they rarely go beyond the third screenful of info. Basically, the fold as a barrier has been pushed down to the third screenful — 8 years ago, 80% of the viewing time was spent in the first screenful of info (above the fold); today, 81% of the viewing time is spent in the first three screenfuls of information.

We’ve always said that people will scroll if they have a reason to do it. Attention still lingers towards the top of the page — that is the portion of the content that is most discoverable and likely to be viewed by your users. The interaction cost of scrolling reduces the likelihood that content will be viewed in lower parts of a longer page.

Interestingly, the increase in screen resolution did not lead to a decrease in scrolling, as one might have expected. The reason is probably that designers and developers did not leverage the larger screens, and instead, opted to spread content further apart. For better or worse, users are now encouraged to scroll more than in the past — but not much more. Information density was probably too high (leading to crowded and busy layouts) in the early days of the web, but page designs definitely tend to be too sparse now.

Implications

Given that users spend more viewing time in the top part of the page, especially above the fold, here are some things you want to keep in mind:

Reserve the top of the page for high-priority content: key business and user goals. The lower parts of the page can accommodate secondary or related information. Keep major CTAs above the fold.

Use appropriate font styling to attract attention to important content: Users rely on elements like headers and bolded text to identify when information is important, and to locate new segments of content. Make sure that these elements are visually distinct and styled consistently across the site so users can easily find them.

Beware of false floors, which are increasingly common with modern minimalist designs. The illusion of completeness can interfere with scrolling. Include signifiers (such as cut-off text) to tell people that there is content below the fold.

Test your design with representative users to determine the “ideal” page length and make sure that the information that users want can be easily seen.

Conclusion

While modern webpages tend to be long and include negative space, and users may be more inclined to scroll than in the past, people still spend most of their viewing time in the top part of a page. Content prioritization is a key step in your content-planning process. Strong visual signifiers can sometimes entice users to scroll and discover content below the fold. To determine the ideal page length, test with real users, and keep in mind that very long pages increase the risk of losing the attention of your customers.


Something Awesome Sub-Chapter 3.1: Techniques to Gain a Foothold

This sub-chapter dives into the various components of exploitable systems, and the tools used to exploit them successfully.

3.1.1 - Shellcode

Shellcode; Injectable binary code used to perform custom tasks within another process. Written in the Assembly language.

Use of a low-level programming language means that shellcode attacks are OS specific.

Buffer; A segment of memory that can overflow by having more data assigned to the buffer than it can hold.

Buffer overflows attempt to overwrite the original function return pointer stored in memory to point to the shellcode for the CPU to execute.

Shellcodes can do anything from downloading and executing files, to sending a shell to a remote client.

Shellcode can be detected by intrusion detection and prevention systems, and by antivirus products.

Network-based prevention system appliances use pattern-matching signatures to search packets travelling over the network for signs of shellcode (antivirus products do the same thing, but for localised files).

Pattern-matching signatures are prone to false positives, and can be evaded by encrypted shellcode.

Some appliances can detect shellcode based on the behaviour of executing instructions found in network traffic and local files.
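A minimal sketch of why a pattern-matching signature misses encoded payloads. The signature bytes, the harmless sample payload, and the single-byte XOR key are all assumptions chosen for illustration; real appliances use far richer signatures and real payloads use stronger encoders.

```python
# Hypothetical signature: a byte pattern the scanner looks for.
SIGNATURE = b"/bin/sh"


def matches_signature(data: bytes) -> bool:
    """Return True if the signature appears anywhere in the data."""
    return SIGNATURE in data


def xor_encode(data: bytes, key: int) -> bytes:
    """XOR every byte with a one-byte key (applying it twice decodes)."""
    return bytes(b ^ key for b in data)


# Harmless stand-in for a payload that contains the signature bytes.
payload = b"AAAA/bin/shBBBB"
encoded = xor_encode(payload, 0x5A)

print(matches_signature(payload))            # True: plain payload is flagged
print(matches_signature(encoded))            # False: encoded payload slips past
print(xor_encode(encoded, 0x5A) == payload)  # True: decoding restores the original
```

The scanner only sees the encoded bytes, which is why behaviour-based detection is listed as a complement to signatures.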

3.1.2 - Integer Overflow Vulnerabilities

Integer overflows result when programmers do not consider the possibility of invalid inputs.

Integer overflows can lead to 100% CPU usage, DoS conditions, arbitrary code execution, and elevations of privileges.

Two types of integers; signed and unsigned. For an n-bit integer, unsigned values include 0 and all positive numbers up to a cap of 2^n - 1, whereas signed values include both negative and positive numbers from -2^(n-1) to 2^(n-1) - 1.

Two's Complement; A decimal number in binary is converted to its one's complement by inverting each of the bits (0->1 and vice versa). 1 is then added to the one's complement representation, resulting in the two's complement representation of the negative of the starting decimal number.
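The two steps above (invert the bits, then add 1) can be sketched as follows. The function names and the 8-bit width are assumptions for the example; Python integers are unbounded, so a mask is used to imitate a fixed width.

```python
def ones_complement(pattern: int, bits: int) -> int:
    """Invert every bit of an n-bit pattern."""
    mask = (1 << bits) - 1
    return ~pattern & mask


def twos_complement(pattern: int, bits: int) -> int:
    """Invert every bit, add 1, and keep only the low n bits."""
    mask = (1 << bits) - 1
    return (ones_complement(pattern, bits) + 1) & mask


print(format(5, "08b"))                      # 00000101  (+5)
print(format(ones_complement(5, 8), "08b"))  # 11111010  (one's complement)
print(format(twos_complement(5, 8), "08b"))  # 11111011  (-5 in 8-bit two's complement)
```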

Integer overflow occurs when an arithmetic operation produces a result larger than the maximum value the integer type can hold. Occurs in both signed and unsigned integers.

Most integer errors are type range errors, and proper type range checking can eliminate most of them.

Mitigation strategies include range checking, strong typing, compiler-generated runtime checks, and safe integer operations.

Range checking involves validating an integer value to make sure that it is within a proper range before using it.

Strong typing involves using specific types, making it impossible to use a particular variable type improperly.

Safe integer operations involve using safe integer libraries of programming languages for operations where untrusted sources influence the inputs.
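A rough sketch of an overflow and of range checking, assuming a signed 32-bit integer. Python integers do not overflow, so `wrap_int32` only imitates the wraparound typically seen on two's complement hardware (in C, signed overflow is undefined behaviour). Both function names are made up for the example.

```python
INT32_MIN = -2 ** 31
INT32_MAX = 2 ** 31 - 1


def wrap_int32(value: int) -> int:
    """Imitate how a signed 32-bit value wraps around on overflow."""
    value &= 0xFFFFFFFF
    return value - 2 ** 32 if value > INT32_MAX else value


def checked_add_int32(a: int, b: int) -> int:
    """Range-check the result before using it, instead of letting it wrap."""
    result = a + b
    if not INT32_MIN <= result <= INT32_MAX:
        raise OverflowError(f"{a} + {b} does not fit in a signed 32-bit integer")
    return result


print(wrap_int32(INT32_MAX + 1))  # -2147483648: the sum wrapped to the minimum

try:
    checked_add_int32(INT32_MAX, 1)
except OverflowError as err:
    print("rejected:", err)
```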

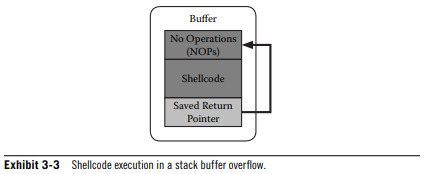

3.1.3 - Stack-Based Buffer Overflows

Overflows are the result of a finite-sized buffer receiving data that are larger than the allocated space.

The standard computer stack consists of an array of memory bytes that a programmer can access randomly or through a series of pop-and-push commands.

Two special registers are maintained for stack management; the stack pointer (ESP) and base pointer (EBP).

Functions move around the ESP in order to keep track of the most recent additions.

The most typical attack involves finding a buffer on the stack that is close enough to the return address and placing enough data in the buffer to overwrite the return address.

To avoid this, a program should always validate any data taken from an external source for both size and content.

Data execution prevention (DEP) marks certain areas of memory, such as the stack, as non-executable, which makes a buffer overflow much more difficult to exploit.
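A toy model of the attack and of the size check, not a real exploit. It assumes a simplified frame where an 8-byte buffer sits directly before a 4-byte return address; the addresses and function names are made up for illustration.

```python
BUFFER_SIZE = 8
ORIGINAL_RETURN = 0x08041234  # made-up address for the example


def new_frame() -> bytearray:
    """Model a frame: an 8-byte buffer followed by a 4-byte return address."""
    return bytearray(BUFFER_SIZE) + bytearray(ORIGINAL_RETURN.to_bytes(4, "little"))


def return_address(frame: bytearray) -> int:
    """Read the 4 bytes stored directly after the buffer."""
    return int.from_bytes(frame[BUFFER_SIZE:BUFFER_SIZE + 4], "little")


def unsafe_copy(frame: bytearray, data: bytes) -> None:
    """Copy without a length check, so data can run past the buffer."""
    frame[0:len(data)] = data


def safe_copy(frame: bytearray, data: bytes) -> None:
    """Validate the size of external data before copying it."""
    if len(data) > BUFFER_SIZE:
        raise ValueError("input is larger than the buffer")
    frame[0:len(data)] = data


attack = b"A" * BUFFER_SIZE + (0xDEADBEEF).to_bytes(4, "little")

frame = new_frame()
unsafe_copy(frame, attack)
print(hex(return_address(frame)))  # 0xdeadbeef: return address overwritten

frame = new_frame()
try:
    safe_copy(frame, attack)
except ValueError as err:
    print("rejected:", err)
print(hex(return_address(frame)))  # 0x8041234: return address intact
```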

3.1.4 - Format String Vulnerabilities

Revolves around the printf C function and its ability to accept a variable number of parameters as input.

The program pushes the parameters onto a stack before calling the printf function.

The printf function utilizes the specified formats (%s, %d or %x) to determine how many variables it should remove from the stack to populate the values.

A simple attack is performed by forcing printf to print a string ("AAAA", for example) followed by a format string. The format string instructs printf to show 8 digits of precision in hex repeatedly.

The resulting output allows the attacker to identify where in the stack the string is located, and subsequently find the value that represents the return address.

These attacks can be avoided where programmers can modify and recompile the source code of a program: pass the user's input as an argument to a fixed string (%s) format instead of using it as the format string itself, preventing the input from affecting the format string.

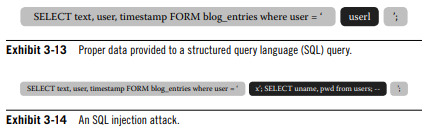

3.1.5 - SQL Injection

Fundamentally an input validation error. Occurs when an application that interacts with a database passes data to an SQL query in an unsafe manner.

Attacks can lead to sensitive data leakage, website defacement, or destruction of the entire database.

SQL injection can be avoided by checking that the data does not include any special characters such as single quotation marks or semi-colons. Additionally, parameterized queries can be used, where the data is specified separately from the query text (the database becomes aware of what is code and what is data).
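A small sketch of the difference, using Python's built-in `sqlite3` module with an in-memory database. The table, its rows, and the two function names are assumptions made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def find_unsafe(name: str) -> list:
    """Build the query by string concatenation: input becomes part of the code."""
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_safe(name: str) -> list:
    """Parameterized query: the input is passed separately as data."""
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
print(len(find_unsafe(payload)))  # 2: the injected OR clause matches every row
print(len(find_safe(payload)))    # 0: no user has that literal name
```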

When testing is not available, administrators should consider deploying a Web App Firewall (WAF) to provide generic protection against SQL injection and other attacks.

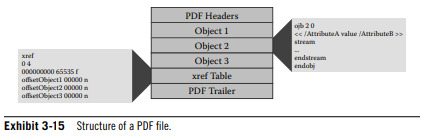

3.1.6 - Malicious PDF Files

Malicious PDF files usually contain JavaScript because the instructions can allocate large blocks of memory.

PDFs are made up of objects with attribute tags that describe each object's purpose.

JavaScript code can be executed by assigning an 'action' attribute to the object containing the malicious code.

The malicious code can be embedded or injected into existing PDF files through publicly available applications and websites.

Risks of malicious PDF files can be reduced by disabling JavaScript in the PDF reader, as well as multimedia for less common attacks.

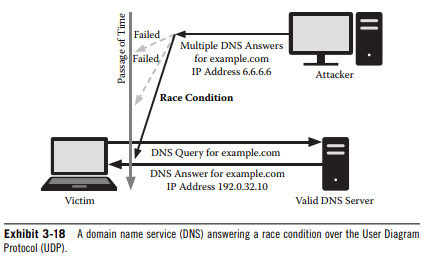

3.1.7 - Race Conditions

Result when an electronic device or process attempts to perform two or more operations at the same time, producing an illegal operation.

A type of vulnerability that an attacker can use to influence shared data, causing a program to use arbitrary data and allowing attacks to bypass access restrictions.

Can lead to data corruption, privilege escalation, or code execution.

An attacker who successfully alters memory through a race condition can execute any function with any parameters.

Typically, attacks exploiting race conditions raise many anomalies and errors on the system, which can be detected quite easily.

Semaphores and mutual exclusions (mutexes) provide instructions that are not vulnerable to race conditions.
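A minimal sketch of a mutex protecting shared data, using Python's `threading.Lock`. The `Counter` class and the `run` helper are made up for the example; the read-modify-write inside `increment` is the step that could interleave between threads without the lock.

```python
import threading


class Counter:
    """Shared counter whose update is guarded by a mutex."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Only one thread at a time may run the read-modify-write below.
        with self._lock:
            self.value += 1


def run(threads: int = 8, per_thread: int = 10_000) -> int:
    """Increment one shared counter from several threads and return the total."""
    counter = Counter()

    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return counter.value


print(run())  # 80000: no update is lost
```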

3.1.8 - Web Exploit Tools

Web exploit tools give attackers the ability to execute arbitrary code using vulnerabilities or social engineering.

Many tools hide exploits through encoding, obfuscation or redirection.

Exploit tools also use JavaScript or HTTP headers to profile the client and avoid sending content unless the client is vulnerable.

Some exploit services are market-driven, shaped by supply and demand.

Pay-per-install services allow actors to buy and sell installations.

Pay-per-traffic services allow attackers to attract a large number of victims to their Web exploit tools.

The copying and proliferation of exploit tools can be reduced through;

Source code obfuscation.

Per-domain licenses that check the local system before running.

Network functionality to confirm validity.

An end-user license agreement (EULA).

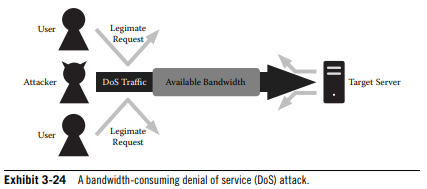

3.1.9 - DoS Conditions

A DoS condition arises when servers or services cannot respond to client requests.

Network segments, network devices, the server and the application hosting the service itself are all susceptible to such an attack.

Flooding; The overwhelming traffic used to saturate network communications, preventing legitimate requests from being processed.

Distributed Denial of Service (DDoS) involves distributed systems joining forces and attacking simultaneously to overwhelm a target.

DoS conditions can also occur as a by-product of vulnerability exploitation.

Security safeguards include the patching of systems, the filtering out of erroneous traffic at edge routers and firewalls, and the discarding of illegitimate IP addresses.

DoS detection requires monitoring of network traffic patterns and the health of devices.

Adequate response procedures will significantly reduce the impact and outage caused by a DoS.

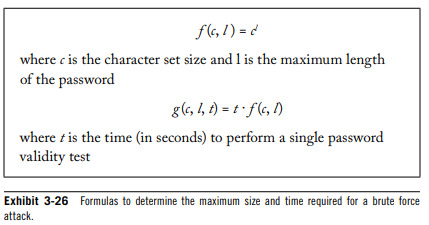

3.1.10 - Brute Force and Dictionary Attacks

Authentication systems that depend on passwords to determine the authenticity of a user are only as strong as the passwords used.

Password dictionaries are compilations of known words and variations that users are likely to have as passwords.

A rainbow table contains pre-computed password hashes, allowing an attacker to quickly look up the hash of a user's password and map it back to the original plaintext password.

Brute force attacks work on the principle that, given enough time, an attacker can find any password regardless of its length or complexity.

Character Set; The set of letters, numbers and symbols used in a password.

Maximum number of iterations required is based on the size of the character set and the maximum size of the password.

Given sufficient processing power, an attacker can sharply reduce the time required for brute force password attacks to be successful.
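A quick sketch of the arithmetic: with a character set of size c and a maximum length L, the worst case is c^1 + c^2 + ... + c^L attempts. The guess rate below is a hypothetical figure chosen only to turn the count into a time.

```python
import string


def max_attempts(charset_size: int, max_length: int) -> int:
    """Worst-case number of guesses over every length from 1 to max_length."""
    return sum(charset_size ** length for length in range(1, max_length + 1))


lowercase = len(string.ascii_lowercase)  # 26 characters
printable = len(string.ascii_letters + string.digits + string.punctuation)  # 94 characters

print(max_attempts(lowercase, 2))  # 702 = 26 + 26**2

GUESSES_PER_SECOND = 1_000_000_000  # hypothetical attacker speed

for size in (lowercase, printable):
    attempts = max_attempts(size, 8)
    days = attempts / GUESSES_PER_SECOND / 86_400
    print(f"charset {size}: {attempts} attempts, about {days:.2f} days")
```

Doubling the guess rate only halves the time, while each extra character multiplies the attempts by roughly the character set size.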

Salts are random characters added to the beginning of a supplied text password prior to the hash generation.

The use of a salt effectively lengthens the hashed input, so the number of pre-computed hashes an attacker would need grows exponentially with the length of the salt.
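A minimal sketch of salting, assuming SHA-256 and a 16-byte random salt prepended to the password. The function names are made up for the example, and a plain fast hash is used only to keep the idea visible; production systems generally prefer a deliberately slow key-derivation function such as `hashlib.pbkdf2_hmac`.

```python
import hashlib
import hmac
import secrets


def hash_password(password: str, salt: bytes = None) -> tuple:
    """Prepend a random salt to the password and hash the result."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.sha256(salt + password.encode("utf-8")).hexdigest()
    return salt, digest


def verify_password(password: str, salt: bytes, expected: str) -> bool:
    """Re-hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


salt_a, hash_a = hash_password("hunter2")
salt_b, hash_b = hash_password("hunter2")

print(hash_a == hash_b)                             # False: same password, different salts
print(verify_password("hunter2", salt_a, hash_a))   # True
print(verify_password("password", salt_a, hash_a))  # False
```

Because the two stored hashes differ, one pre-computed table entry for "hunter2" no longer matches either of them.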


DPoP with Spring Boot and Spring Security

Solid is an exciting project that I first heard about back in January. Its goal is to help “re-decentralize” the Web by empowering users to control access to their own data. Users set up “pods” to store their data, which applications can securely interact with using the Solid protocol. Furthermore, Solid documents are stored as linked data, which allows applications to interoperate more easily, hopefully leading to less of the platform lock-in that exists with today’s Web.

I’ve been itching to play with this for months, and finally got some free time over the past few weekends to try building a Solid app. Solid's authentication protocol, Solid OIDC, is built on top of regular OIDC with a mechanism called DPoP, or "Demonstration of Proof of Possession". While Spring Security makes it fairly easy to configure OIDC providers and clients, it doesn't yet have out-of-the-box support for DPoP. This post is a rough guide on adding DPoP to a Spring Boot app using Spring Security 5, which gets a lot of the way towards implementing the Solid OIDC flow. The full working example can be found here.

DPoP vs. Bearer Tokens

What's the point of DPoP? I will admit it's taken me a fair amount of reading and re-reading over the past several weeks to feel like I can grasp what DPoP is about. My understanding thus far: If a regular bearer token is stolen, it can potentially be used by a malicious client to impersonate the client that it was intended for. Adding audience information into the token mitigates some of the danger, but also constrains where the token can be used in a way that might be too restrictive. DPoP is instead an example of a "sender-constrained" token pattern, where the access token contains a reference to an ephemeral public key, and every request where it's used must be additionally accompanied by a request-specific token that's signed by the corresponding private key. This proves that the client using the access token also possesses the private key for the token, which at least allows the token to be used with multiple resource servers with less risk of it being misused.

So, the DPoP auth flow differs from Spring's default OAuth2 flow in two ways: the initial token request contains more information than the usual token request; and, each request made by the app needs to create and sign a JWT that will accompany the request in addition to the access token. Let's take a look at how to implement both of these steps.

Overriding the Token Request