#acid sql

Explore tagged Tumblr posts

Text

Menggali Lebih Dalam: 5 Fakta Menarik tentang SQL Query

SQL (Structured Query Language) merupakan bahasa pemrograman khusus yang digunakan untuk mengelola dan mengakses basis data relasional. SQL Query adalah perintah atau instruksi yang digunakan untuk berinteraksi dengan basis data. Dalam dunia pengembangan perangkat lunak dan administrasi basis data, SQL Query menjadi landasan utama. Mari kita telaah 5 fakta menarik tentang SQL Query yang dapat memberikan wawasan lebih dalam.

1. Basis Data Relasional dan SQL

SQL Query paling sering digunakan dalam konteks basis data relasional. Basis data relasional menggunakan tabel untuk menyimpan data dan memanipulasinya dengan menggunakan relasi antar tabel. SQL memungkinkan pengguna untuk menentukan, mengakses, dan memanipulasi data dalam tabel-tabel ini dengan mudah melalui Query.

2. DML dan DDL: Perbedaan dalam SQL Query

SQL Query dapat dibagi menjadi dua jenis utama: Data Manipulation Language (DML) dan Data Definition Language (DDL). DML digunakan untuk mengelola data dalam basis data, seperti menambahkan, menghapus, atau memperbarui catatan. Di sisi lain, DDL digunakan untuk mendefinisikan struktur basis data, seperti membuat, mengubah, atau menghapus tabel.

3. Klausa WHERE: Penyaringan Data

Klausa WHERE adalah salah satu elemen kunci dalam SQL Query. Digunakan untuk menyaring data berdasarkan kondisi tertentu, klausa WHERE memungkinkan pengguna untuk mengambil data yang memenuhi kriteria tertentu. Misalnya, SELECT * FROM Tabel WHERE Kolom = Nilai akan mengembalikan baris-baris yang memenuhi persyaratan tersebut.

4. JOIN: Menggabungkan Data dari Berbagai Tabel

JOIN merupakan fungsionalitas penting dalam SQL Query yang memungkinkan pengguna untuk menggabungkan data dari dua atau lebih tabel. Dengan JOIN, kita dapat mengambil data yang berkaitan dari tabel-tabel yang berbeda berdasarkan kolom-kolom yang memiliki nilai yang sama. Ini memungkinkan pengguna untuk membuat kueri yang lebih kompleks dan mendapatkan informasi yang lebih terperinci.

5. Transaksi dan Kepatuhan ACID

SQL Query sering digunakan dalam konteks transaksi basis data. Transaksi adalah serangkaian operasi yang membentuk suatu tindakan tunggal, dan SQL mendukung sifat-sifat transaksi yang dikenal sebagai ACID: Atomicity, Consistency, Isolation, dan Durability. Ini menjamin bahwa operasi-operasi tersebut dapat dijalankan secara aman dan konsisten.

Melalui kekuatannya dalam memanipulasi data dalam basis data relasional, SQL Query menjadi elemen kunci dalam pengembangan aplikasi dan administrasi sistem basis data. Dengan memahami konsep dan fungsionalitas dasar SQL Query, para pengembang dan administrator basis data dapat mengoptimalkan kinerja dan efisiensi operasi mereka.

#sql#sql query#fakta sql query#basis data#dml#ddl#acid sql#manipulasi data#join sql#where sql#programming#programmer

1 note

·

View note

Text

Unlock Success: MySQL Interview Questions with Olibr

Introduction

Preparing for a MySQL interview requires a deep understanding of database concepts, SQL queries, optimization techniques, and best practices. Olibr’s experts provide insightful answers to common mysql interview questions, helping candidates showcase their expertise and excel in MySQL interviews.

1. What is MySQL, and how does it differ from other database management systems?

Olibr’s Expert Answer: MySQL is an open-source relational database management system (RDBMS) that uses SQL (Structured Query Language) for managing and manipulating databases. It differs from other DBMS platforms in its open-source nature, scalability, performance optimizations, and extensive community support.

2. Explain the difference between InnoDB and MyISAM storage engines in MySQL.

Olibr’s Expert Answer: InnoDB and MyISAM are two commonly used storage engines in MySQL. InnoDB is transactional and ACID-compliant, supporting features like foreign keys, row-level locking, and crash recovery. MyISAM, on the other hand, is non-transactional, faster for read-heavy workloads, but lacks features such as foreign keys and crash recovery.

3. What are indexes in MySQL, and how do they improve query performance?

Olibr’s Expert Answer: Indexes are data structures that improve query performance by allowing faster retrieval of rows based on indexed columns. They reduce the number of rows MySQL must examine when executing queries, speeding up data retrieval operations, and optimizing database performance.

4. Explain the difference between INNER JOIN and LEFT JOIN in MySQL.

Olibr’s Expert Answer: INNER JOIN and LEFT JOIN are SQL join types used to retrieve data from multiple tables. INNER JOIN returns rows where there is a match in both tables based on the join condition. LEFT JOIN returns all rows from the left table and matching rows from the right table, with NULL values for non-matching rows in the right table.

5. What are the advantages of using stored procedures in MySQL?

Olibr’s Expert Answer: Stored procedures in MySQL offer several advantages, including improved performance due to reduced network traffic, enhanced security by encapsulating SQL logic, code reusability across applications, easier maintenance and updates, and centralized database logic execution.

Conclusion

By mastering these MySQL interview questions and understanding Olibr’s expert answers, candidates can demonstrate their proficiency in MySQL database management, query optimization, and best practices during interviews. Olibr’s insights provide valuable guidance for preparing effectively, showcasing skills, and unlocking success in MySQL-related roles.

2 notes

·

View notes

Text

DBMS Tutorial Explained: Concepts, Types, and Applications

In today’s digital world, data is everywhere — from social media posts and financial records to healthcare systems and e-commerce websites. But have you ever wondered how all that data is stored, organized, and managed? That’s where DBMS — or Database Management System — comes into play.

Whether you’re a student, software developer, aspiring data analyst, or just someone curious about how information is handled behind the scenes, this DBMS tutorial is your one-stop guide. We’ll explore the fundamental concepts, various types of DBMS, and real-world applications to help you understand how modern databases function.

What is a DBMS?

A Database Management System (DBMS) is software that enables users to store, retrieve, manipulate, and manage data efficiently. Think of it as an interface between the user and the database. Rather than interacting directly with raw data, users and applications communicate with the database through the DBMS.

For example, when you check your bank account balance through an app, it’s the DBMS that processes your request, fetches the relevant data, and sends it back to your screen — all in milliseconds.

Why Learn DBMS?

Understanding DBMS is crucial because:

It’s foundational to software development: Every application that deals with data — from mobile apps to enterprise systems — relies on some form of database.

It improves data accuracy and security: DBMS helps in organizing data logically while controlling access and maintaining integrity.

It’s highly relevant for careers in tech: Knowledge of DBMS is essential for roles in backend development, data analysis, database administration, and more.

Core Concepts of DBMS

Let’s break down some of the fundamental concepts that every beginner should understand when starting with DBMS.

1. Database

A database is an organized collection of related data. Instead of storing information in random files, a database stores data in structured formats like tables, making retrieval efficient and logical.

2. Data Models

Data models define how data is logically structured. The most common models include:

Hierarchical Model

Network Model

Relational Model

Object-Oriented Model

Among these, the Relational Model (used in systems like MySQL, PostgreSQL, and Oracle) is the most popular today.

3. Schemas and Tables

A schema defines the structure of a database — like a blueprint. It includes definitions of tables, columns, data types, and relationships between tables.

4. SQL (Structured Query Language)

SQL is the standard language used to communicate with relational DBMS. It allows users to perform operations like:

SELECT: Retrieve data

INSERT: Add new data

UPDATE: Modify existing data

DELETE: Remove data

5. Normalization

Normalization is the process of organizing data to reduce redundancy and improve integrity. It involves dividing a database into two or more related tables and defining relationships between them.

6. Transactions

A transaction is a sequence of operations performed as a single logical unit. Transactions in DBMS follow ACID properties — Atomicity, Consistency, Isolation, and Durability — ensuring reliable data processing even during failures.

Types of DBMS

DBMS can be categorized into several types based on how data is stored and accessed:

1. Hierarchical DBMS

Organizes data in a tree-like structure.

Each parent can have multiple children, but each child has only one parent.

Example: IBM’s IMS.

2. Network DBMS

Data is represented as records connected through links.

More flexible than hierarchical model; a child can have multiple parents.

Example: Integrated Data Store (IDS).

3. Relational DBMS (RDBMS)

Data is stored in tables (relations) with rows and columns.

Uses SQL for data manipulation.

Most widely used type today.

Examples: MySQL, PostgreSQL, Oracle, SQL Server.

4. Object-Oriented DBMS (OODBMS)

Data is stored in the form of objects, similar to object-oriented programming.

Supports complex data types and relationships.

Example: db4o, ObjectDB.

5. NoSQL DBMS

Designed for handling unstructured or semi-structured data.

Ideal for big data applications.

Types include document, key-value, column-family, and graph databases.

Examples: MongoDB, Cassandra, Redis, Neo4j.

Applications of DBMS

DBMS is used across nearly every industry. Here are some common applications:

1. Banking and Finance

Customer information, transaction records, and loan histories are stored and accessed through DBMS.

Ensures accuracy and fast processing.

2. Healthcare

Manages patient records, billing, prescriptions, and lab reports.

Enhances data privacy and improves coordination among departments.

3. E-commerce

Handles product catalogs, user accounts, order histories, and payment information.

Ensures real-time data updates and personalization.

4. Education

Maintains student information, attendance, grades, and scheduling.

Helps in online learning platforms and academic administration.

5. Telecommunications

Manages user profiles, billing systems, and call records.

Supports large-scale data processing and service reliability.

Final Thoughts

In this DBMS tutorial, we’ve broken down what a Database Management System is, why it’s important, and how it works. Understanding DBMS concepts like relational models, SQL, and normalization gives you the foundation to build and manage efficient, scalable databases.

As data continues to grow in volume and importance, the demand for professionals who understand database systems is also rising. Whether you're learning DBMS for academic purposes, career development, or project needs, mastering these fundamentals is the first step toward becoming data-savvy in today’s digital world.

Stay tuned for more tutorials, including hands-on SQL queries, advanced DBMS topics, and database design best practices!

0 notes

Text

SQL speaks. Parquet listens. Metadata in stone, data in vapor. Time rewinds, schema mutates. A lakehouse dreaming of acid compliance in the desert sun. Experimental? So is survival. 🦂

0 notes

Text

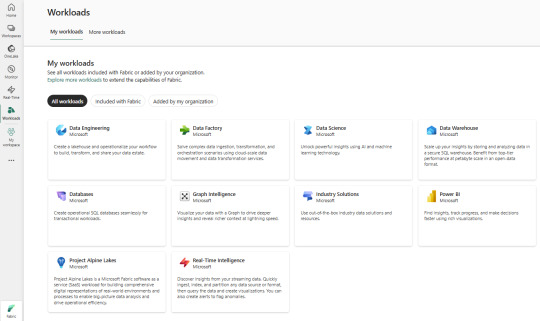

Explore the Microsoft Fabric lakehouse

Some benefits of a lakehouse include:

Lakehouses use Spark and SQL engines to process large-scale data and support machine learning or predictive modeling analytics.

Lakehouse data is organized in a schema-on-read format, which means you define the schema as needed rather than having a predefined schema.

Lakehouses support ACID (Atomicity, Consistency, Isolation, Durability) transactions through Delta Lake formatted tables for data consistency and integrity.

Lakehouses are a single location for data engineers, data scientists, and data analysts to access and use data.

A lakehouse is a great option if you want a scalable analytics solution that maintains data consistency. It's important to evaluate your specific requirements to determine which solution is the best fit.

0 notes

Text

What Tech Stack Is Ideal for Building a FinTech Banking Platform?

In the fast-evolving world of digital finance, choosing the right technology stack is a critical decision when building a fintech banking platform. The efficiency, scalability, security, and user experience of your solution hinge on how well your tech components align with the needs of modern banking users. As the demand for agile, customer-focused fintech banking solutions continues to grow, the technology behind these systems must be just as robust and innovative.

A well-structured tech stack not only supports essential banking operations but also empowers continuous innovation, integration with third-party services, and compliance with financial regulations. In this article, we break down the ideal tech stack for building a modern fintech banking platform.

1. Front-End Technologies

The front-end of a fintech platform plays a pivotal role in delivering intuitive and responsive user experiences. Given the high expectations of today’s users, the interface must be clean, secure, and mobile-first.

Key technologies:

React or Angular: These JavaScript frameworks provide flexibility, component reusability, and fast rendering, making them ideal for building dynamic and responsive interfaces.

Flutter or React Native: These cross-platform mobile development frameworks allow for the rapid development of Android and iOS apps using a single codebase.

User experience is a top priority in fintech software. Real-time dashboards, mobile-first design, and accessibility are essential for retaining users and building trust.

2. Back-End Technologies

The back-end is the backbone of any fintech system. It manages business logic, database operations, user authentication, and integrations with external services.

Preferred languages and frameworks:

Node.js (JavaScript), Python (Django/Flask), or Java (Spring Boot): These languages offer excellent scalability, developer support, and security features suitable for financial applications.

Golang is also becoming increasingly popular due to its performance and simplicity.

An effective back-end architecture should be modular and service-oriented, enabling the platform to scale efficiently as the user base grows.

3. Database Management

Data integrity and speed are crucial in fintech banking solutions. Choosing the right combination of databases ensures reliable transaction processing and flexible data handling.

Recommended databases:

PostgreSQL or MySQL: Reliable and ACID-compliant relational databases, ideal for storing transactional data.

MongoDB or Cassandra: Useful for handling non-structured data and logs with high scalability.

In most fintech platforms, a hybrid data storage strategy works best—leveraging both SQL and NoSQL databases to optimize performance and flexibility.

4. Cloud Infrastructure and DevOps

Modern fintech platforms are built in the cloud to ensure high availability, fault tolerance, and global scalability. Cloud infrastructure also simplifies maintenance and accelerates development cycles.

Key components:

Cloud providers: AWS, Microsoft Azure, or Google Cloud for hosting, scalability, and security.

DevOps tools: Docker for containerization, Kubernetes for orchestration, and Jenkins or GitHub Actions for continuous integration and deployment (CI/CD).

Cloud-based fintech software also benefits from automated backups, distributed computing, and seamless disaster recovery.

5. Security and Compliance

Security is a non-negotiable component in financial software. A fintech banking platform must be fortified with multi-layered security to protect sensitive user data and comply with global regulations.

Key practices and tools:

OAuth 2.0, JWT for secure authentication.

TLS encryption for secure data transmission.

WAFs (Web Application Firewalls) and intrusion detection systems.

Regular penetration testing and code audits.

Compliance libraries or services to support standards like PCI-DSS, GDPR, and KYC/AML requirements.

Security must be integrated at every layer of the tech stack, not treated as an afterthought.

6. APIs and Integrations

Open banking and ecosystem connectivity are central to fintech innovation. Your platform must be designed to communicate with external services through APIs.

API tools and standards:

REST and GraphQL for efficient communication.

API gateways like Kong or Apigee for rate limiting, monitoring, and security.

Webhooks and event-driven architecture for real-time data syncing.

With APIs, fintech software can integrate with payment processors, credit bureaus, digital wallets, and compliance services to create a more versatile product offering.

7. Analytics and Reporting

To stay competitive, fintech platforms must offer actionable insights. Analytics tools help track customer behavior, detect fraud, and inform business decisions.

Tech tools:

Elasticsearch for log indexing and real-time search.

Kibana or Grafana for dashboards and visualization.

Apache Kafka for real-time data streaming and processing.

These tools ensure that decision-makers can monitor platform performance and enhance services based on data-driven insights.

8. AI and Automation

Artificial Intelligence (AI) is becoming a cornerstone in fintech banking solutions. From automated support to predictive analytics and risk scoring, AI adds significant value.

Popular tools and frameworks:

TensorFlow, PyTorch for model building.

Scikit-learn, Pandas for lightweight data analysis.

Dialogflow or Rasa for chatbot development.

Automation tools like Robotic Process Automation (RPA) further streamline back-office operations and reduce manual workloads.

Conclusion

Building a robust and scalable fintech banking platform requires a thoughtfully chosen tech stack that balances performance, security, flexibility, and user experience. Each layer—from front-end frameworks and APIs to cloud infrastructure and compliance mechanisms—must work in harmony to deliver a seamless and secure digital banking experience.

Xettle Technologies, as an innovator in the digital finance space, emphasizes the importance of designing fintech software using a future-proof tech stack. This approach not only supports rapid growth but also ensures reliability, compliance, and customer satisfaction in an increasingly competitive landscape.

By investing in the right technologies, financial institutions can confidently meet the demands of modern users while staying ahead in the evolving world of digital finance.

0 notes

Text

The top Data Engineering trends to look for in 2025

Data engineering is the unsung hero of our data-driven world. It's the critical discipline that builds and maintains the robust infrastructure enabling organizations to collect, store, process, and analyze vast amounts of data. As we navigate mid-2025, this foundational field is evolving at an unprecedented pace, driven by the exponential growth of data, the insatiable demand for real-time insights, and the transformative power of AI.

Staying ahead of these shifts is no longer optional; it's essential for data engineers and the organizations they support. Let's dive into the key data engineering trends that are defining the landscape in 2025.

1. The Dominance of the Data Lakehouse

What it is: The data lakehouse architecture continues its strong upward trajectory, aiming to unify the best features of data lakes (flexible, low-cost storage for raw, diverse data types) and data warehouses (structured data management, ACID transactions, and robust governance). Why it's significant: It offers a single platform for various analytics workloads, from BI and reporting to AI and machine learning, reducing data silos, complexity, and redundancy. Open table formats like Apache Iceberg, Delta Lake, and Hudi are pivotal in enabling lakehouse capabilities. Impact: Greater data accessibility, improved data quality and reliability for analytics, simplified data architecture, and cost efficiencies. Key Technologies: Databricks, Snowflake, Amazon S3, Azure Data Lake Storage, Apache Spark, and open table formats.

2. AI-Powered Data Engineering (Including Generative AI)

What it is: Artificial intelligence, and increasingly Generative AI, are becoming integral to data engineering itself. This involves using AI/ML to automate and optimize various data engineering tasks. Why it's significant: AI can significantly boost efficiency, reduce manual effort, improve data quality, and even help generate code for data pipelines or transformations. Impact: * Automated Data Integration & Transformation: AI tools can now automate aspects of data mapping, cleansing, and pipeline optimization. * Intelligent Data Quality & Anomaly Detection: ML algorithms can proactively identify and flag data quality issues or anomalies in pipelines. * Optimized Pipeline Performance: AI can help in tuning and optimizing the performance of data workflows. * Generative AI for Code & Documentation: LLMs are being used to assist in writing SQL queries, Python scripts for ETL, and auto-generating documentation. Key Technologies: AI-driven ETL/ELT tools, MLOps frameworks integrated with DataOps, platforms with built-in AI capabilities (e.g., Databricks AI Functions, AWS DMS with GenAI).

3. Real-Time Data Processing & Streaming Analytics as the Norm

What it is: The demand for immediate insights and actions based on live data streams continues to grow. Batch processing is no longer sufficient for many use cases. Why it's significant: Businesses across industries like e-commerce, finance, IoT, and logistics require real-time capabilities for fraud detection, personalized recommendations, operational monitoring, and instant decision-making. Impact: A shift towards streaming architectures, event-driven data pipelines, and tools that can handle high-throughput, low-latency data. Key Technologies: Apache Kafka, Apache Flink, Apache Spark Streaming, Apache Pulsar, cloud-native streaming services (e.g., Amazon Kinesis, Google Cloud Dataflow, Azure Stream Analytics), and real-time analytical databases.

4. The Rise of Data Mesh & Data Fabric Architectures

What it is: * Data Mesh: A decentralized sociotechnical approach that emphasizes domain-oriented data ownership, treating data as a product, self-serve data infrastructure, and federated computational governance. * Data Fabric: An architectural approach that automates data integration and delivery across disparate data sources, often using metadata and AI to provide a unified view and access to data regardless of where it resides. Why it's significant: Traditional centralized data architectures struggle with the scale and complexity of modern data. These approaches offer greater agility, scalability, and empower domain teams. Impact: Improved data accessibility and discoverability, faster time-to-insight for domain teams, reduced bottlenecks for central data teams, and better alignment of data with business domains. Key Technologies: Data catalogs, data virtualization tools, API-based data access, and platforms supporting decentralized data management.

5. Enhanced Focus on Data Observability & Governance

What it is: * Data Observability: Going beyond traditional monitoring to provide deep visibility into the health and state of data and data pipelines. It involves tracking data lineage, quality, freshness, schema changes, and distribution. * Data Governance by Design: Integrating robust data governance, security, and compliance practices directly into the data lifecycle and infrastructure from the outset, rather than as an afterthought. Why it's significant: As data volumes and complexity grow, ensuring data quality, reliability, and compliance (e.g., GDPR, CCPA) becomes paramount for building trust and making sound decisions. Regulatory landscapes, like the EU AI Act, are also making strong governance non-negotiable. Impact: Improved data trust and reliability, faster incident resolution, better compliance, and more secure data handling. Key Technologies: AI-powered data observability platforms, data cataloging tools with governance features, automated data quality frameworks, and tools supporting data lineage.

6. Maturation of DataOps and MLOps Practices

What it is: * DataOps: Applying Agile and DevOps principles (automation, collaboration, continuous integration/continuous delivery - CI/CD) to the entire data analytics lifecycle, from data ingestion to insight delivery. * MLOps: Extending DevOps principles specifically to the machine learning lifecycle, focusing on streamlining model development, deployment, monitoring, and retraining. Why it's significant: These practices are crucial for improving the speed, quality, reliability, and efficiency of data and machine learning pipelines. Impact: Faster delivery of data products and ML models, improved data quality, enhanced collaboration between data engineers, data scientists, and IT operations, and more reliable production systems. Key Technologies: Workflow orchestration tools (e.g., Apache Airflow, Kestra), CI/CD tools (e.g., Jenkins, GitLab CI), version control systems (Git), containerization (Docker, Kubernetes), and MLOps platforms (e.g., MLflow, Kubeflow, SageMaker, Azure ML).

The Cross-Cutting Theme: Cloud-Native and Cost Optimization

Underpinning many of these trends is the continued dominance of cloud-native data engineering. Cloud platforms (AWS, Azure, GCP) provide the scalable, flexible, and managed services that are essential for modern data infrastructure. Coupled with this is an increasing focus on cloud cost optimization (FinOps for data), as organizations strive to manage and reduce the expenses associated with large-scale data processing and storage in the cloud.

The Evolving Role of the Data Engineer

These trends are reshaping the role of the data engineer. Beyond building pipelines, data engineers in 2025 are increasingly becoming architects of more intelligent, automated, and governed data systems. Skills in AI/ML, cloud platforms, real-time processing, and distributed architectures are becoming even more crucial.

Global Relevance, Local Impact

These global data engineering trends are particularly critical for rapidly developing digital economies. In countries like India, where the data explosion is immense and the drive for digital transformation is strong, adopting these advanced data engineering practices is key to harnessing data for innovation, improving operational efficiency, and building competitive advantages on a global scale.

Conclusion: Building the Future, One Pipeline at a Time

The field of data engineering is more dynamic and critical than ever. The trends of 2025 point towards more automated, real-time, governed, and AI-augmented data infrastructures. For data engineering professionals and the organizations they serve, embracing these changes means not just keeping pace, but actively shaping the future of how data powers our world.

1 note

·

View note

Text

Unlocking the Power of Delta Live Tables in Data bricks with Kadel Labs

Introduction

In the rapidly evolving landscape of big data and analytics, businesses are constantly seeking ways to streamline data processing, ensure data reliability, and improve real-time analytics. One of the most powerful solutions available today is Delta Live Tables (DLT) in Databricks. This cutting-edge feature simplifies data engineering and ensures efficiency in data pipelines.

Kadel Labs, a leader in digital transformation and data engineering solutions, leverages Delta Live Tables to optimize data workflows, ensuring businesses can harness the full potential of their data. In this article, we will explore what Delta Live Tables are, how they function in Databricks, and how Kadel Labs integrates this technology to drive innovation.

Understanding Delta Live Tables

What Are Delta Live Tables?

Delta Live Tables (DLT) is an advanced framework within Databricks that simplifies the process of building and maintaining reliable ETL (Extract, Transform, Load) pipelines. With DLT, data engineers can define incremental data processing pipelines using SQL or Python, ensuring efficient data ingestion, transformation, and management.

Key Features of Delta Live Tables

Automated Pipeline Management

DLT automatically tracks changes in source data, eliminating the need for manual intervention.

Data Reliability and Quality

Built-in data quality enforcement ensures data consistency and correctness.

Incremental Processing

Instead of processing entire datasets, DLT processes only new data, improving efficiency.

Integration with Delta Lake

DLT is built on Delta Lake, ensuring ACID transactions and versioned data storage.

Monitoring and Observability

With automatic lineage tracking, businesses gain better insights into data transformations.

How Delta Live Tables Work in Databricks

Databricks, a unified data analytics platform, integrates Delta Live Tables to streamline data lake house architectures. Using DLT, businesses can create declarative ETL pipelines that are easy to maintain and highly scalable.

The DLT Workflow

Define a Table and Pipeline

Data engineers specify data sources, transformation logic, and the target Delta table.

Data Ingestion and Transformation

DLT automatically ingests raw data and applies transformation logic in real-time.

Validation and Quality Checks

DLT enforces data quality rules, ensuring only clean and accurate data is processed.

Automatic Processing and Scaling

Databricks dynamically scales resources to handle varying data loads efficiently.

Continuous or Triggered Execution

DLT pipelines can run continuously or be triggered on-demand based on business needs.

Kadel Labs: Enhancing Data Pipelines with Delta Live Tables

As a digital transformation company, Kadel Labs specializes in deploying cutting-edge data engineering solutions that drive business intelligence and operational efficiency. The integration of Delta Live Tables in Databricks is a game-changer for organizations looking to automate, optimize, and scale their data operations.

How Kadel Labs Uses Delta Live Tables

Real-Time Data Streaming

Kadel Labs implements DLT-powered streaming pipelines for real-time analytics and decision-making.

Data Governance and Compliance

By leveraging DLT’s built-in monitoring and validation, Kadel Labs ensures regulatory compliance.

Optimized Data Warehousing

DLT enables businesses to build cost-effective data warehouses with improved data integrity.

Seamless Cloud Integration

Kadel Labs integrates DLT with cloud environments (AWS, Azure, GCP) to enhance scalability.

Business Intelligence and AI Readiness

DLT transforms raw data into structured datasets, fueling AI and ML models for predictive analytics.

Benefits of Using Delta Live Tables in Databricks

1. Simplified ETL Development

With DLT, data engineers spend less time managing complex ETL processes and more time focusing on insights.

2. Improved Data Accuracy and Consistency

DLT automatically enforces quality checks, reducing errors and ensuring data accuracy.

3. Increased Operational Efficiency

DLT pipelines self-optimize, reducing manual workload and infrastructure costs.

4. Scalability for Big Data

DLT seamlessly scales based on workload demands, making it ideal for high-volume data processing.

5. Better Insights with Lineage Tracking

Data lineage tracking in DLT provides full visibility into data transformations and dependencies.

Real-World Use Cases of Delta Live Tables with Kadel Labs

1. Retail Analytics and Customer Insights

Kadel Labs helps retailers use Delta Live Tables to analyze customer behavior, sales trends, and inventory forecasting.

2. Financial Fraud Detection

By implementing DLT-powered machine learning models, Kadel Labs helps financial institutions detect fraudulent transactions.

3. Healthcare Data Management

Kadel Labs leverages DLT in Databricks to improve patient data analysis, claims processing, and medical research.

4. IoT Data Processing

For smart devices and IoT applications, DLT enables real-time sensor data processing and predictive maintenance.

Conclusion

Delta Live Tables in Databricks is transforming the way businesses handle data ingestion, transformation, and analytics. By partnering with Kadel Labs, companies can leverage DLT to automate pipelines, improve data quality, and gain actionable insights.

With its expertise in data engineering, Kadel Labs empowers businesses to unlock the full potential of Databricks and Delta Live Tables, ensuring scalable, efficient, and reliable data solutions for the future.

For businesses looking to modernize their data architecture, now is the time to explore Delta Live Tables with Kadel Labs!

0 notes

Text

Understand data warehouses in Fabric

Fabric’s Lakehouse is a collection of files, folders, tables, and shortcuts that act like a database over a data lake. It’s used by the Spark engine and SQL engine for big data processing and has features for ACID transactions when using the open-source Delta formatted tables. Fabric’s data warehouse experience allows you to transition from the lake view of the Lakehouse (which supports data…

View On WordPress

0 notes

Text

Difference between DBMS and RDBMS

Understanding the difference between DBMS and RDBMS is crucial for anyone diving into the world of data management, software development, or database design. A Database Management System (DBMS) is a software that enables users to store, retrieve, and manage data efficiently. It supports data handling in a structured or semi-structured format, commonly used in smaller applications where data relationships aren’t complex.

On the other hand, a Relational Database Management System (RDBMS) is a more advanced form of DBMS that organizes data into tables with predefined relationships. It uses SQL (Structured Query Language) for querying and maintaining relational databases. RDBMS follows ACID properties (Atomicity, Consistency, Isolation, Durability) to ensure data integrity and supports normalization to reduce redundancy.

Key differences include:

DBMS stores data as files, while RDBMS stores data in tabular form.

DBMS is suitable for single-user applications, whereas RDBMS supports multi-user environments.

RDBMS enforces relationships through primary keys and foreign keys, which DBMS does not.

In today’s data-driven landscape, understanding these systems is essential for cloud database management, data security, and enterprise software solutions. Tools like MySQL, PostgreSQL, Oracle, and Microsoft SQL Server are popular RDBMS examples widely used in web development, data analytics, and ERP systems.

0 notes

Text

Database Management System (DBMS) Development

Databases are at the heart of almost every software system. Whether it's a social media app, e-commerce platform, or business software, data must be stored, retrieved, and managed efficiently. A Database Management System (DBMS) is software designed to handle these tasks. In this post, we’ll explore how DBMSs are developed and what you need to know as a developer.

What is a DBMS?

A Database Management System is software that provides an interface for users and applications to interact with data. It supports operations like CRUD (Create, Read, Update, Delete), query processing, concurrency control, and data integrity.

Types of DBMS

Relational DBMS (RDBMS): Organizes data into tables. Examples: MySQL, PostgreSQL, Oracle.

NoSQL DBMS: Used for non-relational or schema-less data. Examples: MongoDB, Cassandra, CouchDB.

In-Memory DBMS: Optimized for speed, storing data in RAM. Examples: Redis, Memcached.

Distributed DBMS: Handles data across multiple nodes or locations. Examples: Apache Cassandra, Google Spanner.

Core Components of a DBMS

Query Processor: Interprets SQL queries and converts them to low-level instructions.

Storage Engine: Manages how data is stored and retrieved on disk or memory.

Transaction Manager: Ensures consistency and handles ACID properties (Atomicity, Consistency, Isolation, Durability).

Concurrency Control: Manages simultaneous transactions safely.

Buffer Manager: Manages data caching between memory and disk.

Indexing System: Enhances data retrieval speed.

Languages Used in DBMS Development

C/C++: For low-level operations and high-performance components.

Rust: Increasingly popular due to safety and concurrency features.

Python: Used for prototyping or scripting.

Go: Ideal for building scalable and concurrent systems.

Example: Building a Simple Key-Value Store in Python

class KeyValueDB: def __init__(self): self.store = {} def insert(self, key, value): self.store[key] = value def get(self, key): return self.store.get(key) def delete(self, key): if key in self.store: del self.store[key] db = KeyValueDB() db.insert('name', 'Alice') print(db.get('name')) # Output: Alice

Challenges in DBMS Development

Efficient query parsing and execution

Data consistency and concurrency issues

Crash recovery and durability

Scalability for large data volumes

Security and user access control

Popular Open Source DBMS Projects to Study

SQLite: Lightweight and embedded relational DBMS.

PostgreSQL: Full-featured, open-source RDBMS with advanced functionality.

LevelDB: High-performance key-value store from Google.

RethinkDB: Real-time NoSQL database.

Conclusion

Understanding how DBMSs work internally is not only intellectually rewarding but also extremely useful for optimizing application performance and managing data. Whether you're designing your own lightweight DBMS or just exploring how your favorite database works, these fundamentals will guide you in the right direction.

0 notes

Text

Relational vs. Non-Relational Databases

Introduction

Databases are a crucial part of modern-day technology, providing better access to the organization of information and efficient data storage. They vary in size based on the applications they support—from small, user-specific applications to large enterprise databases managing extensive customer data. When discussing databases, it's important to understand the two primary types: Relational vs Non-Relational Databases, each offering different approaches to data management. So, where should you start? Let's take it step by step.

What Are Databases?

A database is simply an organized collection of data that empowers users to store, retrieve, and manipulate data efficiently. Organizations, websites, and applications depend on databases for almost everything between a customer record and a transaction.

Types of Databases

There are two main types of databases:

Relational Databases (SQL) – Organized in structured tables with predefined relationships.

Non-Relational Databases (NoSQL) – More flexible, allowing data to be stored in various formats like documents, graphs, or key-value pairs.

Let's go through these two database types thoroughly now.

Relational Data Base:

A relational database is one that is structured in the sense that the data is stored in tables in the manner of a spreadsheet. Each table includes rows (or records) and columns (or attributes). Relationships between tables are then created and maintained by the keys.

Examples of Relational Databases:

MySQL .

PostgreSQL .

Oracle .

Microsoft SQL Server .

What is a Non-Relational Database?

Non-relational database simply means that it does not use structured tables. Instead, it stores data in formats such as documents, key-value pairs, graphs, or wide-column stores, making it adaptable to certain use cases.

Some Examples of Non-Relational Databases are:

MongoDB (Document-based)

Redis (Key-value)

Cassandra (Wide-column)

Neo4j (Graph-based)

Key Differences Between Relational and Non-relational Databases.

1. Data Structure

Relational: Employs a rigid schema (tables, rows, columns).

Non-Relational: Schema-less, allowing flexible data storage.

2. Scalability

Relational: Scales vertically (adding more power to a single server).

Non-Relational: Scales horizontally (adding more servers).

3. Performance and Speed

Relational: Fast for complex queries and transactions.

Non-Relational: Fast for large-scale, distributed data.

4. Flexibility

Relational: Perfectly suitable for structured data with clear relationships.

Non-Relational: Best suited for unstructured or semi-structured data.

5. Complex Queries and Transactions

Relational: It can support ACID (Atomicity, Consistency, Isolation, and Durability).

Non-Relational: Some NoSQL databases can sacrifice consistency for speed.

Instances where a relational database should be put to use:

Financial systems Medical records E-commerce transactions Applications with strong data integrity When to Use a Non-Relational Database: Big data applications IoT and real-time analytics Social media platforms Content management systems

Selecting the Most Appropriate Database for Your Project

Check the following points when considering relational or non-relational databases:

✔ Data structure requirement

✔ Scalability requirement

✔ Performance expectation

✔ Complexity of query

Trend of future in databases

The future of the database tells a lot about the multi-model databases that shall host data in both a relational and non-relational manner. There is also a lean towards AI-enabled databases that are to improve efficiency and automation in management.

Conclusion

The advantages of both relational and non-relational databases are different; they are relative to specific conditions. Generally, if the requirements involve structured data within a high-class consistency level, then go for relational databases. However, if needs involve scalability and flexibility, then a non-relational kind would be the wiser option.

Location: Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

#Best Computer Classes in Iskon-Ambli Road Ahmedabad#Differences between SQL and NoSQL#Relational vs. Non-Relational Databases#TCCI-Tririd Computer Coaching Institute#What is a relational database?

0 notes

Text

ACID Properties in SQL Transactions Explained: Real-World Examples

1. Introduction 1.1 What is ACID? ACID properties are fundamental to ensuring reliable and secure transactions in database systems. Each letter in ACID represents a key concept: Atomicity, Consistency, Isolation, and Durability. Together, these properties guarantee that database transactions are processed reliably, maintaining data integrity even in the face of failures. 1.2 Importance of…

0 notes

Text

Most Asked DBMS Interview Questions [2025]

Know the most asked DBMS interview questions for 2025. Prepare with essential topics including SQL basics, normalization, ACID properties, indexing, transactions, and more to ace your interview.

0 notes

Text

Challenges in Building a Database Management System (DBMS)

Building a Database Management System (DBMS) is a complex and multifaceted task that involves addressing numerous technical, theoretical, and practical challenges. A DBMS is the backbone of modern data-driven applications, responsible for storing, retrieving, and managing data efficiently. While the benefits of a well-designed DBMS are immense, the process of building one is fraught with challenges. In this blog, we’ll explore some of the key challenges developers face when building a DBMS.

1. Data Integrity and Consistency

Maintaining data integrity and consistency is arguably the most difficult task in developing a DBMS. Data integrity refers to the accuracy and reliability of data, while consistency ensures that the database remains in a valid state after every transaction.

Challenge : Mechanisms such as constraints, triggers, and atomic transactions must be implemented for maintaining data integrity and consistency.

Solution : Use ACID (Atomicity, Consistency, Isolation, Durability) properties to design robust transaction management systems.

2. Scalability

As data grows exponentially, a DBMS must scale to handle increasing workloads without compromising performance.

Challenge : Designing a system that can scale horizontally (adding more machines) or vertically (adding more resources to a single machine).

Solution : Implement distributed database architectures, sharding, and replication techniques to achieve scalability.

3. Concurrency Control

Multiple users or applications may access the database simultaneously, leading to potential conflicts.

Challenge : Managing concurrent access to ensure that transactions do not interfere with each other.

Solution : Use locking mechanisms, timestamp-based ordering, or optimistic concurrency control to handle concurrent transactions.

4. Performance Optimization

A DBMS must deliver high performance for both read and write operations, even under heavy loads.

Challenge : Optimizing query execution, indexing, and storage to minimize latency and maximize throughput.

Solution : Implement efficient indexing strategies (e.g., B-trees, hash indexes), query optimization techniques, and caching mechanisms.

5. Fault Tolerance and Recovery

Hardware failures, software bugs, or human errors can lead to data loss or corruption.

Challenge : Ensuring the system can recover from failures without losing data.

Solution : Implement robust backup and recovery mechanisms, write-ahead logging (WAL), and replication for fault tolerance.

6. Security

Protecting sensitive data from unauthorized access, breaches, and attacks is a top priority.

Challenge : Implementing authentication, authorization, encryption, and auditing mechanisms.

Solution : Use role-based access control (RBAC), encryption algorithms, and regular security audits to safeguard data.

7. Storage Management

Efficient management of where data is located on disk or in memory drives performance and expense.

Problem : Ensuring the most efficient use of storage structures - tables, indexes, and logs - in minimizing I/O.

Solution : Techniques applied to make efficient use of storage include compression, partitioning, and columnar storage.

8. Portability and Interoperability

A DBMS must interact freely with various OS, hardware and applications.

Problem : Compatibility with as many different kinds of platforms, and conformance to standard communication protocols.

Solution : Adhere to industry standards like ODBC, JDBC, and SQL for interoperability.

Topic Name :- Challenges in building a DBMS

1. Bhavesh khandagre

2. Arnav khangar

3. Sanskar Gadhe

4. Rohit Wandhare

5. Nikhil Urkade

1 note

·

View note