
Dear diary,

It’s been approximately 2 hours since I found out that ao3 is down. I don’t think I can go on for very much longer. I keep typing the url into the address bar on safari before realizing that it’s still down and it’s only been 2 minutes since I last tried.

May god help us

#this is getting ridiculous I know it’s only a fanfic website BUT THOSE WERE GOOD FICS DAMN IT#guys we got to donate extra money for ao3 this year cause of how much the staff is helping to fuel our fanfic addictions#ao3 shutdown of 2023#actually though don’t try logging in or accessing the site cause it only creates more requests and hurts the website#ao3

12 notes


I’m going to start this by saying, I have bias. Everyone does. I do not intend for this to come off as “the thing you like is bad”, but more so “the corporation that controls the thing you like is manipulative”.

My background: I am a 26-year-old trans mom, I have a history with addiction, particularly gambling, and I spend most of my time playing video games. I went to college for about 3 years for my psychology degree, and while I do not have my degree, I have been studying psychology for roughly 12 years. This is to say, my views will reflect this background. Just because I present this information the way I do does not inherently mean I’m right, though it also doesn’t mean I’m wrong. Try to view things with a critical mind, and know that most topics have nuance.

Ok, so lootboxes, booster packs, gacha games, all of these are gambling. This is not really up for debate. You are putting money into a service of sorts and receiving a randomized result, be that a fancy new gun, that same boring legendary you have 5 of, or that final hero you’ve been trying to collect. You don’t know the outcome before you give your money. As defined by the Merriam-Webster dictionary: “Gambling: the practice of risking money or other stakes in a game or bet”.

You are risking your money, because you may not get the item you want. There are ways this is handled acceptably, and ways this is handled poorly. Gambling is also illegal for people under 21 in a lot of places, but places online aren’t quick to tell you why. I don’t have any sources because every source sits behind a paywall, but pulling from my own personal experience and what I learned in college, it’s because children are very impressionable. I say “I like pokemon” and suddenly my 2-year-old can’t go anywhere without her pikachu. I remember distinctly playing poker with my mom and her friends when I was 12. When you normalize gambling, it lowers your aversion to the risk of gambling. You are less likely to see a threat in playing that card game, because when you are that young you have no concept of money. You don’t know what a dollar is, so why not throw it away so you can have fun? This is...I hesitate to call it fine, but it’s mostly harmless. The issue is with children and their lack of knowledge of money. When I grew up and got a job, it became a lot harder to tell my brain, “hey, don’t spend that money, you won’t get it back and you won’t get what you want,” because my brain just acknowledges the potential for what I want. I want to buy the booster pack so I can have the potential to get that masterpiece misty rainforest. I want to buy that diamond pack so I have the chance to get the cute hero. I want to buy that lootbox so I can get the battle rifle that does a cool effect. These seem like harmless concepts, but they are very dangerous.

Make no mistake, companies know how psychology works, and will use it to their advantage. MatPat from Game Theory states that companies have even gone so far as to have systems in place that change the odds as you’re losing, and that monitor your skill level to put you up against harder opponents, so that you see the better weapons and go, “Oh I want that!”, which entices you to buy more lootboxes. As it turns out, I found an article covering what he was talking about: Activision had actually acquired a patent to arrange matchmaking to do just that [x]. The article says it’s not in place, but my trust in companies is not high enough to actually believe them. (Honestly, MatPat made a 2-part video series about lootboxes, and I’d recommend watching them.)

So, companies are trying to manipulate you to buy more gambling products. There’s proof of it. It’s also more blatantly obvious in games like Magic the Gathering, where they release fancier versions of cards at rarer probabilities. To better explain it: from a collector’s standpoint, you want the fancy card cause it has value, and it has value because it’s rare, rarer than the other versions. So if you’re on the lower end of the income ladder you buy a pack, or two. After all, you could get lucky and get it. On the higher end of the income ladder, you buy the card outright and hoard it. Maybe sell it off later if you notice the price goes down. From a player perspective, you see a card is being used by tournament players, you want to win more games, so you want those cards, which encourages you to buy products and try to get those cards. That’s predatory behavior. It’s predatory from the company’s perspective because that poor person might not be able to afford the card outright, but $5-$10 isn’t much, plus they always entice you with that Chance. They also further this desire for the cards by making limited runs, such as the Secret Lair packs. If there’s a low amount purchased and it’s made to order, or worse, if they limit the order capabilities themselves, that drives up the value and provides further incentive to buy the cards and packs. This not only creates an impossible barrier between the poor and the rich, but also heavily encourages people to buy more gambling packs than they would have under other conditions.

For the record, I love Magic the Gathering. I’m not saying the game itself is bad; this is just a VERY predatory marketing tactic.

Let’s switch gears. Gacha games. I play AFKArena, because like I said, I have a gambling addiction and cannot stop myself. In AFKArena, you collect heroes, and battle with them in various ways. If you collect more of similar heroes you can rank them up. If I’m to believe what I’ve heard, it sounds like this is pretty common for gacha games. So what makes it bad? In AFKArena you use diamonds to summon heroes. You can acquire diamonds by beating specific story chapters, logging in every day, random limited time events, or paying for them with real money. AFKArena hero drops don’t seem that bad compared to the free diamond amount they dish out, which has resulted in me not spending all that much money on it, all things considered ($20 over 2 years). I believe that for a mobile game like this, that’s fair. I get way more enjoyment out of the game than I do most $60 games, so it balances out. However, this isn’t the case for every gacha game, and my trust in companies, as previously stated, is very low. The issue lies in them making the rates for good heroes so low that you HAVE to spend money on the game to really get over a roadblock of sorts. I do think that there is this issue in my game and I just didn’t notice it; someone with a lower tolerance or patience might absolutely have the incentive to drop hundreds of dollars on the game over a month. There are people of all different flavours, and it’s important to keep that in mind when discussing these topics. Just because a marketing technique doesn’t work on you does not mean it doesn’t work on anyone. After all, they have those $100 packs for a reason. You might not be that reason, but someone is. That’s predatory.

I feel like I’ve gotten off track, let’s get back on the rails. Where was...gambling...predatory…ah, kids. So my biggest issue is that Magic the Gathering is marketed towards 13 year olds. Not directly, but the packs say 13+. AFKArena, and any mobile game for that matter, can be downloaded by anyone with a phone for free, with minimal mention that there are microtransactions. AAA title games like Destiny 2, Overwatch, Fortnite, etc. are probably the worst offenders. A kid spent $16,000 of his parents’ money on Fortnite in-game purchases, and that’s not the only time this has happened [x] [x]. More often than not, what happens is, the kid wants to play a video game, like Halo on Xbox, or Destiny, or something, they ask their mom for her credit card, and the system saves it. I mentioned before that kids do not have a concept of money or its value, so giving kids unlimited access to the credit card is going to result in this kind of thing happening. I’m not blaming the parents for not being hypervigilant; sometimes you are really busy, or disabled, or whatever the reason, and you don’t notice the system just saved your card. I’m not blaming the kids cause their brains are literally underdeveloped. I blame the corporations, because they make the process as easy as possible to prey on kids and people with gambling addictions. (As a personal anecdote, I found that if I want a magic card in MtG:O, I’m way less likely to try and buy it if I have to get up and get my card. I’d recommend not saving your card if you suffer from gambling/addiction problems.)

So after all of this evidence, how can anyone still view these things as anything but predatory? The answer is simple. You’re told they aren’t. Businesses spend hundreds of thousands of dollars on really good marketing and public relations. I tried to google why gambling is illegal for people under 21, and got nothing: a couple forums asking the question, and a couple religious sites saying it’ll make them degenerates. I try looking up sources to prove the psychology behind these concepts, but they are locked behind paywall after paywall after paywall. Businesses and capitalism have made it so incredibly hard to discover the truth and get the information you need, and it’s on purpose. They want you to trust that that booster pack is a good idea. They want you to spend money on lootboxes (look at all the youtubers that shill for Raid Shadow Legends, or other gambling games, to their super young fanbase [x]). They want you to lower your guard and go, “well, it’s a video game, how can it be predatory?” “it’s a card game with cute creatures on it, surely it’s not that bad”

But it is. So why did I make this post? I dunno, my brain really latched onto the topic, I see so many people enjoying gacha games, but I’m worried that it’s going to ruin lives...I just want everyone to be informed and critical of what is going on.

#gambling addiction tw#addiction tw#this is probably a bad idea to post knowing how this site operates#but it matters to me

230 notes


V. T. Green (Part 3)

Title: V. T. Green

Part One | Part Two | Part Three

Author: Gumnut

1 - 5 Sep 2019

Fandom: Thunderbirds Are Go 2015/ Thunderbirds TOS

Rating: Teen

Summary: “Did you discover this, Brains?” He frowned. There was something familiar about this. Maybe they had discussed it recently.

“Oh, no, this is V. T. Green. The man is brilliant.”

Word count: 3174

Spoilers & warnings: None.

Timeline: Standalone

Author’s note: I has a lurgy. This is being typed as I cough my brain silly. Very annoying. Nutty hates being sick. Sick of being sick. I hope my writing does not suffer because of it (though last time I had a lurgy I wrote Prank War, so you never know what might happen :D )

This is one that I have been meaning to write for some time. I hope you enjoy it :D Many thanks to both @scribbles97 and @vegetacide for all their wonderful help with this.

Disclaimer: Mine? You’ve got to be kidding. Money? Don’t have any, don’t bother.

-o-o-o-

Scott eyed his younger brother as he slunk into the kitchen. A little pale, the man had finally made it out of his uniform into jeans with his usual red flannel draped over a bare chest. By the way he was moving, Scott could tell he hadn’t taken his painkillers.

A sigh. “I know you hate the pills, Virg, but you can’t tell me you prefer to be in pain.”

“I prefer to be able to think.”

“Pain hampers healing.”

“Yes, Mom.”

Scott’s lips thinned. “Pills or Grandma. Your choice.” Sometimes the big guns were necessary.

“Scott...”

“Hey, if our roles were reversed, what would you do?”

The glare wilted along with his brother’s shoulders. That prompted a grimace and tensed up Scott’s shoulders in turn. Goddamnit, Virg. He stood up from where he was seated at the breakfast bar and, striding across to his brother, gently steered the man to a seat at the table. “Sit down and stay put.”

That prompted another glare, but Scott ignored it, darting up the stairs and beyond into the residential levels and Virgil’s room. Sure enough, the bottle sat beside his bed, seal still intact. A grab and a jog back down to the kitchen...

...and Virgil had his head buried in the refrigerator.

He dumped the pills on the bench. “I thought I told you to sit.”

“I’m hungry.”

“Sit down and I will get you some dinner.”

“I can make my own dinner. I can at least do that.”

“Virgil-”

“I’m fine, Scott, just leave it.” A pair of frowning brown eyes glared at him over the fridge door.

Scott mirrored that frown. You want stubborn, just try me.

Virgil must have seen it in his expression, because the glare intensified.

“Sit down, Virgil.”

“Is that an order, Commander.”

“If necessary.”

The butter was yanked out of the refrigerator and thrown onto the counter with a loud clatter. The bread joined it and tumbled as it hit the laminate. A jar followed that would have fallen on the floor and smashed if Scott hadn’t reached out and caught it. “What the hell? What’s wrong with you?”

“Just making myself some dinner.”

“Sit down!”

“I am fully capable of making myself dinner!”

“Sit down!”

“Scott-”

“Damn it, Virgil, if you don’t sit down, I will make you sit down.”

That arched an eyebrow. “You could try.”

“Either you sit down and stop being stupid, or I’ll get Grandma in here and you can discuss it with her.”

A plate hit the stone flags and smashed, clinking shards scattering across the floor.

Scott jumped. Virgil stared at him for a solid moment before crouching down and picking up pieces of crockery.

Scott didn’t miss the flinch of pain the movement caused.

For god’s sake. “Virgil-”

“Leave it, Scott, just leave it.”

There was something in his brother’s voice, something hurt.

“V-”

“For Christ’s sake, what do I have to say to you? Just leave me the hell alone!” Broken crockery was shoved into the kitchen bin. Virgil grabbed a broom and swept up the mess one-handed without saying another word. The butter, bread and jar of spread were thrown back into the refrigerator and without a glance back, his brother hit the stairs and left.

Scott stared after him.

The bottle of pills sat alone on the bench.

-o-o-o-

“J-John, have you heard of V. T. Green?”

The astronaut turned around at Brains’ voice, the expected hologram flickering into being. “Good evening, Brains.” A hand reached out and shifted two situations to Resolved. A flick of his wrist and another landed in Not Required. “Who is V. T. Green?”

The engineer sighed. “I thought that at least y-you would know him. The m-man is a b-brilliant engineer.”

“Sounds more like Virgil’s wheelhouse.” He flicked a finger at the tropical low growing in strength just north of Western Australia and flagged it for more regular monitoring.

“Virgil h-hasn’t heard of him either. Wh-Which I find strange. I h-have been following Green’s b-blog for s-some time and I b-believe his w-work could be very useful for International Rescue.”

Now that gave him pause. John couldn’t recall Brains ever saying such a thing about any other scientist...well, except Moffie and that was for a completely different reason. “That’s high praise coming from you.”

“He d-deserves it. Have a look at this polymer.”

A series of equations appeared at John’s elbow. A glance soon became a frown of concentration. “Am I reading this correctly? Self healing?”

“Y-Yes. It w-would be invaluable for the Thunderbirds.”

A pause. “So, you want to contact this guy? Have you spoken to Scott? Kayo?”

The engineer tilted his head to one side. “I h-have attempted to gather some inform-mation, b-but haven’t had much success. I w-was hoping you m-might have b-better luck?”

John turned and eyed his friend. “You want me to run a check on him?” In other words, hack his blog and find out as much as possible.

“So I can g-go to Scott with enough d-detail to reassure him.”

Now that was a point. Scott was notoriously paranoid when it came to IR’s security. As bad, if not worse than Kayo. Brains was right to build a solid case.

“I can do. How much information do you need?”

“W-Whatever you can find.”

“FAB.”

“Thank you, John.”

“Not a problem.”

His hologram blinked out.

-o-o-o-

Scott couldn’t help himself. He followed his brother up the stairs to his room. What the hell was wrong with Virgil? It was so unlike him to get so angry with so little provocation.

Debrief had been nasty. Alan was defiant and angry and hurt. Without Virgil there to balance the scales, things had gotten out of hand quickly, the whole meeting devolving into a shouting match. Even John had started yelling.

Alan had stormed off, Gordon chasing after him.

Scott had been so angry. Virgil’s life had been endangered and all for a battle of wills. Grandma’s hand on his arm and her soft voice had snapped him out of it.

Damn.

He hated it when his brothers were injured. It wasn’t major, Virgil’s injury would heal, but still, all because Alan did something stupid.

He stood outside his brother’s closed door for a full two minutes before he raised his hand to knock.

“Scott? We have a situation.” John’s voice was soft.

He let his arm drop.

He would have to speak to Virgil later.

Apparently.

-o-o-o-

It took him another three hours, part of which involved sending Scott out to pluck yet another climber off the side of a mountain, before John had a chance to focus on the task Brains had requested.

The site itself appeared simple. Admittedly, John was a little distracted at first by its content. Brains was correct. The author definitely was someone to be admired. Admittedly, John’s knowledge of engineering wasn’t as extensive as Brains’ or Virgil’s, but there were definitely some very elegant solutions presented on the site. A glance at the source code, a dig for the originating IP address and John easily found the site’s host in Silicon Valley, California. He launched a data miner and pulled the site logs, searching for IPs that had accessed the site for publishing in an attempt to locate the author.

That’s when he hit a snag. According to the logs, each post had been created and posted from a different address. Sure, this was possible with an IP cloak, but it shouldn’t be possible to avoid his hack of that cloak.

He tracked one address through China to Russia and back out again to Spain, of all places, before he lost it at an exchange in Portugal. Another fed through Indonesia, six different servers in Japan, only to jump to a commercial satellite and claim it came from the Moon. John followed six more addresses before he discovered the layered encryption and the redirection code hidden under it.

“Oh, he’s good. Very good.” The logs themselves had been encoded to redirect the very same kind of hack John was attempting.

It took him another half hour to break the code that kept trying to lead him off on a wild goose chase.

And another hour to trace the server path through half the planet and then some - it did actually go via the moon, using some ancient tech not destroyed by the meteor shower that took out Moonbase Alpha.

By the time he finally tracked down the origin of the posts, John was beyond impressed.

When he discovered the identity of V. T. Green, he understood why.

It was so obvious, he should have known.

-o-o-o-

Dear V.T. Green. I represent a good company...

Hey, V.T. I am totally loving your stuff. You should go into business...

Doctor Green. Our university is very interested in gaining your services...

Sir, I need your help...

That last one caught his attention initially, but it devolved into a blatant scam two paragraphs in. It left him depressed.

He let his tablet fall onto his desk and his head into his one working hand. He had no idea what to do about all the requests for his assistance. Six different universities plus three other thought centres had replied, all ever so complimentary of his intellect. One laugh had been the fact that the Denver School of Advanced Technology was one of them. The bonus had been that the admirer was a lecturer who had hated his guts.

Part of him wanted to reply and rub his face in it.

The tablet pinged again and Virgil was tempted to chuck the whole thing in the trash.

Message from Dr HH.

Virgil stared at it for a good minute before he inevitably touched the screen to open it.

Dear Doctor Green.

Why did half of them think he was a doctor? He had never claimed to be.

I have written you before, but I do not trust the vagaries of the internet and I feel the need to make sure you receive my request.

Virgil sighed. He was going to have to say something soon. This was unfair to Brains.

The letter went on to reiterate Brains’ suggestions regarding the polymer and reinforce the impression that they would be able to save lives.

Save lives.

It was what he did. And yes, that polymer could do that, as part of the Thunderbirds, but also if he released the rights to the design. Space and underwater habitats sorely needed the tech.

Of course, he had yet to run tests. Nothing practical had been experimented. It could all be a big hype over a big failure.

Another sigh and he closed his eyes. He hadn’t eaten, but he wasn’t hungry any more. His shoulder and arm hated him and his pills were down in the kitchen. To reach them, he would have to navigate the house and hope he didn’t run into any family members. He just didn’t feel like...explaining himself.

Perhaps he could crawl back into bed and find sleep again.

He stood up...and the emergency alarm cut off everything.

His response was reflex and he was out the door before processing another thought. He hit the elevator before he remembered he was off rescues, the car carrying him down to the comms room and dumping him there.

Damn.

But to be honest he really couldn’t not find out what was going on. He had a need to know where his brothers might be sent, no matter how it grated that he couldn’t go with them.

So, with some reluctance, he slunk around the corner into the comms room, forcing a positive gait across to the lounge where he parked himself, spine straight.

Gordon eyed him from across the other side of the circle, an eyebrow arching. Scott rose from behind their father’s desk and jogged down the steps and sat next to Virgil.

Virgil blinked. A flash of blue, a frown and thinned lips greeted him.

Damn. That would have to be fixed sooner rather than later.

Alan was the last to arrive, darting in from the kitchen and sitting beside Gordon. His eyes tracked across Virgil, but didn’t acknowledge him.

Out the corner of his eye, he saw Grandma frown.

“What’s the situation, John?”

“This is a big one. Remember the Grand Sequoia Dam?”

“A little hard to forget.”

“They are reporting fractures in the dam wall and they are claiming it has to do with our hasty repairs last time.”

“What?” Virgil shot to his feet. “I checked and double checked the seal. I even went back and conducted stress testing. There is no way that dam wall could be failing because of our repairs. The nanocrete is stronger than the entire wall itself.”

John stared at him a moment before continuing. “Whatever the cause, they are claiming the wall is failing. An evac order has gone out to the town below, but they are concerned there will not be enough time. They’ve called us, and Virgil in particular, to assist.”

A frown and Virgil was pulling up scans and diagrams of the dam. Their assessment was correct. The wall was failing. A frown. It shouldn’t be. The volume of water currently pressing on it simply didn’t have the energy to create the situation. A flick of his hand and he spun the view. For this to happen there needed to be pressure from this angle with a much higher amplitude.

“Virgil is injured.” It was Grandma who broached the obvious.

“I’m going.”

That sprouted a whole array of glares.

He straightened where he stood. “I need to know what is causing this.”

“You can do that from here.” Of course, Scott would object.

“No, I prefer to be onsite.”

“You’re injured.”

“No kidding. I will ride in Two with Gordon.” He didn’t miss the sudden widening of Gordon’s eyes at that comment. “Nothing energetic.” Scott was still glaring. “There are some things that have to be seen in person.”

Scott’s lips thinned. He was pedantic about injured brothers, as was Virgil, but there was something about the situation, something odd, and it was Virgil’s reputation at stake here. Due to the use of the nanocrete, a proprietary substance unique to IR, he had signed off the safety on the dam, and it was safe.

But not now.

“I’m going.”

Brains, who had been quiet up to this point, rose slowly from where he sat. “I agree with Virgil.”

“Brains...” Grandma was admonishing.

“This shouldn’t b-be happening.” He pointed at the crack in the dam. “The structure is d-designed to w-withstand strain far b-beyond what it is currently under. The n-nanocrete cannot be responsible, yet they are accusing us. Why?”

Scott stared at Brains. “You think this is targeted?”

“It is possible.”

“The Hood?”

“Unknown, but I do think we n-need Virgil onsite for this. He has the civil knowledge n-needed.”

“Why can’t you go?” Alan piped up, still not paying any attention to Virgil.

Brains blinked and frowned at the young astronaut. “Y-you are aware that V-Virgil is the more qualified engineer in this instance?”

“What?”

It was Gordon who rounded on his little brother. “You been living under a rock, bro? Virg is the man when it comes to this stuff. You know that.”

Blue eyes frowned. “I just thought Brains could go since Virgil is injured.”

“I could, b-but Virgil’s knowledge is greater.”

Finally, Alan turned to him, but Virgil no longer had the time. “We need to get moving, that dam is not going to hold much longer.”

Scott shot to his feet. “Thunderbirds are go.”

-o-o-o-

It was odd going out on a rescue in Two, but not flying her. Virgil’s arm was still in a sling and strapped up, curled against his chest. Brains had made sure it was secure after helping him into his uniform. It hurt, but it was necessary.

The co-pilot’s seat had just a slightly different view.

Gordon launched her just as smoothly as Virgil would have. Alan sat quiet behind the both of them. As soon as they were airborne and stable, the young astronaut excused himself, muttering something about seeing to the pods.

The moment he was gone, Gordon didn’t waste any time poking the bear.

“What’s with you and Alan?”

“Nothing.” He really didn’t want to go into it.

The eyebrow arched at him was so similar to what Virgil would have done if their roles had been reversed, he almost smiled.

“Sounds like a pile of horse dung, but I’ll let you go with it.”

Virgil turned and stared at his brother.

Gordon didn’t react. “You know you scared the shit out of him, don’t you?”

“What?”

“He screwed up and his big brother got hurt.” Gordon flicked his gaze between the instruments and Virgil. “Scott reamed him out big time at debrief. You weren’t there and he really let rip.”

“Shit.” It came out under his breath.

“John lassoed him instead, but he didn’t respond as fast as you would have. Alan was kicking himself before that. By the time Scott had finished with him, he was on the verge of never going out on a rescue ever again.”

“He made a mistake. We all make mistakes.”

“He made a dick move, Virg. He didn’t listen to you or Scott and thought he knew better.” A snort. “I should know. Been there, done that, learnt the hard way.” A smirk. “First rule of International Rescue: If Virgil says it is, it is.” The smirk became a grin. “And woe be he who thinks otherwise.”

“Gordon...”

“I’m not kidding.” And the grin vanished, replaced by genuine honesty. “You know what you are talking about. You’re good at what you do.” A glance back at his flight path. “He should have listened to you.”

Virgil stared at his little brother. It took him a moment to gather himself. “Thank you, Gordon.”

The aquanaut shrugged. “Eh, I learnt the hard way, but I learnt. Anyway, you should probably talk to Alan.”

Virgil shifted in his seat and his shoulder complained loudly. He stared down at his feet. “Yeah, I should.”

There was silence in the cockpit for a bit. Virgil was caught up in what he should say to his littlest brother, and Gordon was quietly eyeing him.

The silence was obviously too much for Gordon. “So, who is this V. T. Green Brains keeps raving about?”

Virgil flinched, the question completely unexpected.

Gordon frowned at him. “What? What do you know about him?”

“Nothing.”

An amber blink. “Bullshit, Virg, you’re looking guilty as. What do you know? Scott said Brains was interested in inviting the guy to the island.”

Virgil’s head shot up and his shoulder screamed at him. Ow.

Gordon’s frown tried to cleave his face in half. “What the hell, Virgil? If you know something, why haven’t you said anything? Brains is going nuts trying to find this...guy.” And Gordon was staring at him in shock. “Oh my god.”

Virgil glared at him. “What?”

“It’s you.”

-o-o-o-

End Part Three

Part Four

#thunderbirds are go#thunderbirds#thunderbirds fanfiction#Virgil Tracy#Scott Tracy#John Tracy#Gordon Tracy#Alan Tracy#brains#Hiram Hackenbacker

23 notes


RED REALITY (part 1)

(my longest post yet.)

Item #: SCP-3001

Object Class: Euclid

Special Containment Procedures: To prevent further accidental entries into SCP-3001, all Foundation reality-bending technology will be upgraded/modified with multiple newly developed safeguards to prevent Class-C "Broken Entry" Wormhole creation. While knowledge of SCP-3001 is available to personnel of any level should they wish to learn about it, research and experimentation with SCP-3001 and its associated technology is strictly limited to personnel of Level 3 and above, with special clearance designation granted from Sites 120, 121, 124, and 133.

Description: SCP-3001 is a hypothesized paradoxical parallel/pocket "non-dimension" accessible through the creation of a momentary Class-C "Broken Entry" Wormhole.(1) While believed to be an infinitely extending parallel universe, SCP-3001 is almost completely devoid of any matter and has an extremely low Hume Level of 0.032,(2) contradicting Kejel's Laws of Reality with the relation between Humes and spacetime. This phenomenon causes matter inside it to decay at an extremely low rate, and damage that would otherwise prove fatal does not impede any biological/electronic function; simulations suggest an organism can lose more than 70% of their body's tissue and still operate normally, as long as at least 40% of the brain remains. However, prolonged exposure will cause said matter to gradually approach SCP-3001's own Hume Level, resulting in severe tissue/structural damage as the matter's own Hume Field begins to disintegrate.

SCP-3001 was initially discovered on January 2, 2000, at Site-120, a facility dedicated to testing and containing reality-bending technology. Dr. Robert Scranton and his wife Dr. Anna Lang were Head Researchers at Site-120, and were developing an experimental device, called the "Lang-Scranton Stabilizer" (LSS).(3) Dr. Scranton was transported to SCP-3001 after unexpected seismic activity damaged several active LSS in Site-120 Reality Lab A.

Initially presumed dead, Dr. Scranton has survived in SCP-3001 for at least five years, 11 months, and 21 days. During this time, he was able to record his experiences and observations within SCP-3001 through a somehow still functioning LSS control panel, which was also brought into SCP-3001 with him through the Class-C "Broken Entry" Wormhole. These recordings were later recovered upon the panel's sudden return, an unexpected side effect from testing improved reality-bending technology; these logs are the basis of SCP-3001 study. Despite new technologies being developed, retrieval and re-integration of Dr. Scranton has been unsuccessful. His current physical and mental states, if he is still alive, are unknown. [Further information on Dr. Scranton's possible retrieval is under Ethics Committee review.] Transcripts of Dr. Scranton's logs are below.

[No discernible/coherent dialogue can be heard from Dr. Scranton for the first eight days. He cycles through periods of panic, confusion, and anger throughout, and it seems he was attempting to navigate SCP-3001 to find a way out. He finally moved close enough to the recording log on the eleventh day, though did not notice it was operating for several more hours.]

…

Name, Robert Scranton. Age, 39. Birthday, September 19, 1961.

Favorite color, blue.

Favorite song, "Living on a Prayer."

Wife… Anna…

Anna…

Name, Robert Scranton. Age, 39. Birthday, September 19, 1961.

Favorite color, blue.

Favorite song, "Living on a Prayer."

Wife, Anna. She has green eyes. I love her very much.

Name, Robert Scranton. Age, 39. Birthday, September 19, 1961.

Favorite color, blue.

Height, 178 cm.

Weight, 85 kg.

Wife, Anna. Anna, I'm sorry.

Name, Robert Scranton. Age, 39. Birthday, September 19, 1961.

Favorite color, blue.

My wife's name is Anna. We got married August 12, 1991.

I hope she got out okay.

Please let her be all right, please let her be all right.

Robert, Scranton. 39. Anna, blue, wife. Please… please, God, please…

Anna… Anna… Anna bo banna… Anna bo banna…

What the… what the hell is that? [It is assumed at this point Dr. Scranton noticed the flashing light of the recording module.]

What the fuck, this thing's actually recording?

[Metallic clang heard.]

[Voice is highly agitated and panicked.] My name, is Robert Scranton. Yeah, yeah, my name, is Robert Scranton, former researcher at Foundation Site-120. It has been… I don't know, actually, I… I can't remember. I… I estimate it's been ten days, but, I-I-I don't, I can't… Oh God, can anyone hear me?! I-I-I don't know what's happened, I-I don't know where I am, and-and, please, please is anyone there?! Hello?! Anyone?! ANYONE?!

No one can hear me. Oh God, oh God, oh God. Fuck, fuck, fuck, fuck, FUCK.

Why the hell is this thing even working, it can't be working, it SHOULDN'T be working, so what the hell?! I need to — God, I need to, I need to… see, how… long can I talk here, I think there's a-a-a cap or something on the recording log, and I-I-I can't see anything, I can only see the red light blinking on and off, I can't see any of the switches next to it…

I'm really hungry.

Thirsty, too. I think I should be dead from dehydration by now, but… I don't know.

Hi, little red light. Can you talk to me? Can you talk to… Anna, for me? Hello?

I found the controls.

Two weeks, three days, forty-seven hours, and fifty-eight minutes.

Two weeks, three days, forty-seven hours, and fifty-eight minutes.

Two weeks, three days, seven hours, and fifty-eight minutes.

Two weeks, three days, seven hours, and fifty-eight minutes.

Oh… Jesus.

ERROR WITH PLAYBACK, ERROR WITH PLAYBACK. ERROR WITH PLAYBACK.

Wherever the hell I am, I'm pretty sure now that… I don't need to eat to stay alive. It hurts… a lot, but… at this point I don't think I'm gonna die… So… I'm gonna… I'm gonna take my time… I guess. I… Maybe some sort of miracle will happen and I'll get out. Heh. Keep dreaming, Robert. Yeah, I'm… I'm tired, I'm gonna sleep.

Three weeks, four days, nineteen hours.

I have a picture of Anna in my pocket. I almost forgot. Little red light, let me see her face, please? Just a little bit, I just… I just want to see her a bit.

Hi, Anna, I'm still here, I'm still here. I'm coming back, okay?

Two months, four days, three hours.

… Hi. Robert here. Yeah, I-I haven't really recorded much to hear in the past few weeks. Ha. Hahahaha… Hahaha… huh… huh…

Sorry, gotta keep it together. Breathe.

I've been… I've been busy. Trying to learn more about the place I'm in. My prison. My kingdom all my own. Heh, King Robert. God, I stink. Is there even air in this goddamn place? Stinky King Robert, king of GODDAMN NOTHING FUCK.

…Sorry, sorry. I, I gotta keep this professional. I'll… I'll come back when I'm feeling rested.

… Okay, here goes. [Inhales then exhales deeply.]

My name is… Robert Scranton. I am a former Head Researcher of Site… 120, a Foundation facility dedicated to studying various reality-bending SCPs, for the purpose of developing more advanced countermeasures towards such threats.

For the last… red light, speak to me,

Two months, eight days, sixteen hours.

What red light said. I have been trapped in what I believe to be an empty pocket dimension. Alone. Yeah… alone. All alone.

I'm calling this place SCP… I don't know, I can't remember where we are, screw it. I don't know what's happened in the past… red light, please, again.

Two months, eight days, sixteen hours.

But… no one else is around to argue, and at this point… I'm just talking into this control panel to keep myself together. I… I need to keep a record. There might be some poor bastard in the future who ends up like me, and… if this ever actually makes it out… maybe, maybe I can help stop that from happening. That's all I have going for me right now, and I really need something to go for, hahahaha…

…So, yeah, Robert… Scranton… documenting a new SCP for… future research purposes. That'll have to do. Here we go!

- Close.

…

Two months, eleven days, ten hours.

Item number, SCP I don't fucking care.

Object Class, Euclid, I guess, but I don't know, I might update this in time. I need to explore more.

Special Containment Procedures, god I sound so much like a shrink right now… Um… I don't know if we could… contain wherever I am. It's… definitely not on Earth. To be honest I don't know where it is. I… I think it has something to do with the Stabilizer prototype… I'll explain that more later. Okay… um… yeah, wherever I am, I don't think it can be contained much as… created. No, no, that's not the word I'm looking for. Um… entered. Yeah, entered is better. I came into this place because of some really bad reality-bending accident and… no, no, Robert, don't be like that yet, you don't know if there's no exit yet. Ooooh… livin' on a prayer… halfway… there. Ahem.

Two months, eleven days, eighteen hours.

So… wait, no, Description, Robert, stick to the format… This place… It's some sort of reality gap, I think. It's dark. Really dark. As in, this little red light that shows my words are actually being recorded is the only visible light in this entire place. I can't see my hands, and I can barely see the control panel here. I've had to basically use the light as a center, and remember how many steps I take and in which direction. I haven't gone past a hundred yet. I'm too… I'm too scared to. Heh. I wonder if my hair is turning white, right now? I can't even see what color it is anymore. Speaking of which, my head has been a bit itchy recently. If I don't concentrate on it, it's fine, but I feel this… tingling all over my face. I'm not sure why.

Two months, fifteen days, four hours.

Okay… hoooo… I-I need to relax for a minute, Jesus, god, shit. Holy… shit, shit, shit… I… just discovered a new property of this place. All this time, I've been thinking I might be walking on… some sort of… flat ground, if you will. I kept eye contact with little red as far as I could see, and it seems I could walk in a straight, flat path. Jesus, my head is buzzing right now, I think the adrenaline is still kicking… But, if my hypothesis is correct, and this really is some sort of reality… void, then there shouldn't be anything to walk on. Now that I think about it, the whole time I've been in here, it's felt like… I'm walking, but I'm also swimming through something. And this something is thick, and form-fitting, it has this… pressure, which I know isn't the correct term, but goddamn it, this place makes no damn sense and I'm doing my best to understand it, okay?!

God… Sorry.

So, the best analogy I can come up with is… it's like I'm walking through really thick black gel. There's enough tension to keep me on a… "surface", but if I… imagine myself pressing down hard enough, I can descend. Wait. Wait, wait, wait, wait, wait, I think… I think I need to test this more, I'll be back.

Two months, seventeen days, two hours.

Navigation is largely affected by… conscious impulses to travel in a certain direction. So, this definitely isn't a complete reality gap, at least according to mine and Anna's theories. If-if it were I wouldn't have been able to move at all, since space wouldn't have existed. Holy shit, okay, okay, this makes a lot more sense than it did before, great, great job, Robert, you're getting there. …Come to think of it, I should've realized that sooner when I was able to move in a flat plane to and from little red. It also explains why I'm not dead from dehydration or hunger yet, time barely passes in here. Okay yeah, so, I stood right next to little red, and went straight… "down." Okay, from here on out, imagine little red as the origin of a 3D space. I went straight… down, right, yeah, and then… and then I was then able to come back "up" to little red again. I've also been able to "fly" above red. Movement in here is slow, like I said, gel analogy, best I can describe it by.

Two months, twenty-two days, three hours.

Reporting back for another update, red, SIR! Hahaha, come on red, lighten up. Ha! Pun not intended… Come on red, crack a little smile, it's funny!

…

… Fine, whatever. Ahem.

This place still seems like it barely follows Kejel's Laws of Reality Parameters. And by barely, I mean, really just barely. I'm pretty sure my math is right, but… hold on, I'm gonna check again…

Jesus. Yeah, yeah, pretty sure it's good still. Okay, this place… if we're using the standard Hume scale, I'm pretty sure I'm in a reality where the Hume Field is… point zero… four… ish. Yeah, really, really, really fucking low, so… Like I said above, space-time exists on a very minuscule scale, so my biology is not getting shot to hell and back because of any malnutrition, but that also means… I… I'm actually not sure what that also means…

…

Adding on from the last entry. I'm… I'm not sure how my biology will react in such a low Hume concentration, actually. I mostly worked with higher than average Hume Fields, and the reality benders we tested never had a Field lower than 0.8. This… this is gonna be a first. An all-time first. I remember Site-133's "Prommel Killer", they called it that because it broke the previous theory about the lowest limit of Hume concentration. Really expensive, really weird machine that brought down a small area to 0.4. 0.05 is… yeah.

I was lying. I was lying, last log… I… I'm lying to myself. My own body, and… little red here too… We're about the realest things in this place. And that means… over time… the Hume field's going to want to… equalize, and… I'm… I'm gonna go for now, I have some… some calculation to do again. Red, Anna, take note I'm using Kejel's Second, Third, and Fourth Laws, got it? Use… use 0.05 as the surrounding, my external field as… somewhere in between 1 and 1.4, use the Second Law's error estimation correction, and my internal as… as… as… shit. I'm not done yet.

I am real. I am super-real. Super duper real. Ultra real, the realest guy in a world of no-real.

You have no sense of humor as usual, red. I'm talking about the LSS, red. When we got sent here, I think… I think our reality got cranked up a notch. Red, didn't you pay attention in class? Hey, don't get fucking smart with me, red. Okay, the point is, the LSS surge got us up to… to…

Two months, eighteen days, seven hours.

No, red, not even fucking close, you must've converted Kejel's Third Law equation wrong. Because of the malfunctioning LSS we got blasted by, we're somewhere in between 2.2 and 3.6. Yes, that's good red, that's very good, because that means we have more time than we thought to… to… yes, red, before we fucking DIE, okay?!

Two months, twenty-four days, five hours.

About three years. Four, if… If I don't interact too much. If… If I had had an LSS here, I could maybe stretch it out to… eight, maybe, that's best case scenario… But I have… I have to… I… know… but… but… three years. Three years, then it's past the point of no return. Ha. Hahahahaha. I should… I should definitely figure something out by then. I think I still should be pretty good for a while… At least… no, no, I won't be in here that long… I'll definitely figure something out…

Anna, what would we do with a case like this? I need your help, honey. That… that tingling I've been feeling… That's my Hume Field diffusing… My… my reality fading… Three years. I need to stabilize myself within three years.

I've been thinking… Anna and I, we had this theory… Even though the Hume Field is low, it's still a Hume Field. And precisely since it's so low, Hume diffusion should take quite a while. Now if… if I could… contain… recycle the fields, keep the diffusion from spreading too thin, I could… And I could also maybe… it's only a theory, but… It's worth a shot. But that means…

Hey, red. I… I'm gonna have to go for a bit. I want to test something, and you can't come with me. I… I'm sorry. No, no, red, I'm really, really sorry, I want you to come, I do, but… if we're together the diffusion will increase faster… We both need as much time as possible. I need to figure this place out more, and you need to make sure you keep all that info in your head. It's… red, come on. You- you'll be fine red, I know you will, you're tough. A lot tougher than me… it'll only be for a bit, red, but I need to see if I can find a way to keep us alive a bit longer. Maybe even get us out of here. If I can contain enough field, I can… I can maybe even get us out. No, no I'm not sure, but I need to find out. Red, we're talking about possibly escaping, okay? Yeah, it's a gap. A gap should have an end, like a… like the walls of a canyon, understand? I need to find a wall, and then, and then I can…

…

I'm sorry, red, I hope we're still friends when I come back.

…

I'm… I'm going now… I'll see you soon.

…

- Close.

Six months, ten days, five hours.

Hello again, little red. It's been a while.

You know… thinking back… I don't know what the hell I was so excited about. This place is… god, this place. This place is fucking… hell.

There's no end. It just goes on. And on. And on.

I traveled in one goddamn direction for two, damn, months. God, I'm so fucking stupid, why did I think I could get out? I'm thinking like those old European shits that thought the end of the world was at the horizon. Fucking stupid, Robert, stupid, just-just- GAAAAAAAAAAAH—

If I let myself fall down long enough would I eventually hit a bottom?

Ten months, 28 days, 15 hours.

There's no bottom. And fuck you, red.

I'm sorry, red, don't go out, I'm sorry I turned you off, come back, come back, please—

… I turned 40 today. Happy birthday, Robert.

I was adopted, did you know that? Yeah, my parents left me in a box on the side of a street. Got picked up by some American couple, which explains my not-so-Chinese names. I don't even know my original last name. Just thought I'd share. How about you, red?

Anna and I met on-site in 1988. God she was beautiful. She still is. It was our eyes. She has beautiful eyes. My eyes are grey, they're boring, but hers… God they're beautiful. Do you think… Do you think she's still worried about me, little red? Is she looking for me?

You know, red, you're a great listener. But I never hear you talk about yourself. Come on, don't be shy, there's no one else around, right? Hahaha, right? Hahaha… hahahahaha…

"I'm sorry, Robert, I'm afraid I can't do that." Hahaha, red, you're hilarious.

Were you married? Kids? Any family at all? Girlfriend? Boyfriend? Come on, red, I won't judge, just… talk to me, please. God, my head hurts. And my feet feel like they've been asleep for forever.

I worked at a comic store as a kid. So much cheaper back then, and I got free stuff at the end of each week. I liked Spiderman the best.

I was in a box, side of the street.

I… what the fuck… no. No. No, no, no, no, no, no, red, have you seen my picture? The picture red, Anna's picture, where is - come on, come on, where-where- Anna! ANNA! ANNA! Where did - no, no, no, no, no, please, please no, anything but, PLEASE.

It's fading, she's fading, she's fading, please, Anna, no, please, come on, sweetie, stay here, it's too soon, it's TOO SOON, my math isn't wrong, it's NOT WRONG, YOU SHOULD BE FINE. ANNA, ANNA, I can't hold you, come back, Anna, sweetie, honey, Anna please, I need you, I need you, please, please, don't go, I'm here, I'm still here. RED GET HELP. Anna, please, please, don't go, don't -

Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. Black hair, green eyes, 160. [Dr. Scranton repeats this for three hours.]

Anna and I got married in '91. We couldn't really get the nicest suit and dress we wanted because of work, but, damn, we both looked great. Anna looked better, of course. We just danced, and danced the whole night, got the whole week off. Even a job like mine lets you enjoy your honeymoon… So, come on red, open up, put 'er there, high five. Come on. Come on, red.

One year, two months, twenty-seven days.

…

…

AAAAAAA—

[The next recordings only play the control panel's automated voice giving times, with intervals of one to three days, with several month-long gaps in between as well; also intermixed are Dr. Scranton's sobbing, screaming, and mumbling. These recordings continue until the time reading reaches two years, seven months, and 28 days, after which they cease to pick up any sound until two months later.]

#scp#SCP 3001#red reality#scp fandom#scp foundation#part 1#posted from a pile of leaves#let them eat rakes

FastandSocial.com - 15 important tactics of Internal Linking - https://fastandsocial.com/15-important-tactics-of-internal-linking/

Every website owner is fighting to get backlinks from other websites to increase PR and ranking in Google search results. Far fewer pay attention to the internal linking of their own site, which is also a very important factor in search engine ranking. Let’s first understand the difference between internal and external linking.

Internal linking means links on the pages of a website that point to other pages within the same website. External linking is when we put a link on one website that points to another website.

Why is internal linking important?

Internal linking is very important for any website because:

It increases the accessibility of the website for users and search engines. Search engines like Google reward you for doing things that make the user’s experience of the website easier and better.

It helps search engines and users navigate the site easily.

It is a good strategy for giving an extra boost to specific pages for specific keywords.

It helps search engines crawl deep pages that are far from the main menu, navigation bar or home page of the website.

Anchor links help search engines relate the pages to the overall theme of the website.

Internal Linking to get the top ranking on Google or Other Search Engines

As said above, internal linking is very important, but I have seen many websites where every fifth or sixth word is linked to another page of the website, which is very irritating to read. We should not abuse this opportunity by spamming links.

I have consolidated some best practices for internal linking that are good from both the search engines’ and the users’ point of view.

1- Use every opportunity for effective internal linking: add text links in your navigation, header or footer to all your important pages and the main sections of the website.

2- Linking internal pages through a dynamic drop-down menu in DHTML or JavaScript is not a good way of linking, as search engines cannot follow links created in JavaScript; they can only follow simple text links. We should use simple text links to link all internal pages of the website.

3- Every page of the website should be at most 2 clicks away from the home page. E-commerce sites are usually very big, so search engines might have problems crawling and indexing all the deep-level pages without appropriate internal linking.

4- Sometimes it is not possible to link every page of the website from the main menu, header or footer; in that case, contextual internal linking within the web content is a good way to enhance the accessibility of the site.

5- Try to use relevant keywords as anchor text instead of “Click here” and the like.

6- Always use the same URL when you link to a specific page. For example, if you want to link to your service page (www.example.com/service.html) from other pages of the site, always use that same URL. Some people use different URLs for the same page, sometimes example.com/service.html and sometimes www.example.com/service.html; this can create a canonicalization issue.

7- Use breadcrumb navigation on sites whose pages link to other pages often.

8- All internal links should work properly. Broken links are bad from both the users’ and the search engines’ perspective.

9- Use a sitemap with links to all important pages. It will help search engines and users find pages at every level. A sitemap is not only effective for SEO, it also has usability benefits.

10- Some web pages may be more popular in the search rankings and receive more links than the rest of your website. You can strengthen the weaker pages by including some contextual links to them from these stronger pages.

11- Check your server logs for 404 errors. Fix any broken links and redirect old linked-to pages to their new locations.

12- Try to use absolute URLs for internal linking, as this can help when you’re reusing content across multiple protocols (such as http and https). Absolute URLs provide indirect benefits in unforeseen situations, such as when someone steals your copy and forgets to change the internal links. Absolute URLs also show you exactly where your links lead, whereas links using “…/somewhere.html” structures are ambiguous and, especially in deep-content sites, often cause links to break when pages are moved around. Absolute URLs give you better control over optimization.

13- The linking page and the link text should have a connection that is actually beneficial for the users. Linking should not be done purely from the search engines’ point of view.

14- Pages which are not very important from the users’ and search engines’ point of view should get a nofollow attribute when they are linked from the navigational part of the site, because by linking to those less important pages we pass our link PR to pages that don’t need it. For example, privacy policy pages, legal terms, thank you pages, shopping carts and the sitemap are not very important, so we can nofollow the links to them. Put another way: nofollow links to pages that are not important for search engine result pages (SERPs), as people are unlikely to arrive at your site through those pages. For every link that you put in, ask yourself “does that page/section need more link weight?”, and if the answer is no, nofollow it. Don’t go overboard though, or else you won’t have any internal links as far as the engines are concerned.

15- Make a list of the pages which are most important to you, from the search engines’ and the users’ perspective. Try to optimize the internal linking of these pages with appropriate anchor text. If you have several pages for the same product range, as on e-commerce sites, then link the main page to all the other pages, but you can put nofollow on links to the less important ones. Always put a link back from deeper pages to the main page of that specific category.

A well-structured internal linking pattern will help search engines navigate your site better, and in the longer run it will help you get sitelinks in Google. I am sure these internal linking techniques will be very helpful in optimizing your site.

SEO Consultants London
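Tips 6 and 12 (one URL per page, absolute URLs) can be enforced in code when links are generated. The following is only a rough sketch in JavaScript, assuming the canonical address of the site is https://www.example.com; the constant CANONICAL_ORIGIN and the function canonicalInternalUrl are names made up for this example, not part of any library.

```javascript
// Assumed canonical origin of the site (hypothetical, adjust to your own).
const CANONICAL_ORIGIN = 'https://www.example.com';

// Turn an internal link into one absolute, canonical URL.
// Returns null for links that point to another site.
// Note: inputs must be paths ("/service.html") or full URLs with a scheme;
// a bare "example.com/service.html" would be treated as a relative path.
function canonicalInternalUrl(href) {
  const canonical = new URL(CANONICAL_ORIGIN);
  const url = new URL(href, CANONICAL_ORIGIN);

  // Treat "example.com" and "www.example.com" as the same site.
  const stripWww = (host) => host.replace(/^www\./, '');
  if (stripWww(url.hostname) !== stripWww(canonical.hostname)) {
    return null; // external link, leave it alone
  }

  // Force the canonical protocol and host so every link looks the same.
  url.protocol = canonical.protocol;
  url.hostname = canonical.hostname;
  return url.href;
}

console.log(canonicalInternalUrl('http://example.com/service.html'));
// https://www.example.com/service.html
console.log(canonicalInternalUrl('/service.html'));
// https://www.example.com/service.html
```

Running every internal link through one helper like this means the same page is never linked under two different addresses.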

What We've Learned from Moving to Signed Cookies

We've recently moved Coggle's login sessions from a database-storage model to signed cookies, where session data is stored in the session cookie itself.

There aren't many real-world examples of how to handle this migration, so we're sharing what we've learned doing this with node and express, and hopefully it'll be a useful and interesting read!

Part 1: How Old Sessions Worked

Previously we handled sessions with the express-session module and connect-mongo data store, and then we used passport to load our actual user data based on the session. Our middleware setup looked like this:

    const session = require('express-session');
    const MongoStore = require("connect-mongo")(session);
    const sessionStore = new MongoStore({ ... });

    // loads req.session from the database store, if the request included a valid session cookie
    app.use(session({store: sessionStore, ...}));

    // passport middleware loads req.user from our users collection based on the user ID stored in the session
    app.use(passport.initialize());
    app.use(passport.session());

    // csrfMiddleware saves a CSRF token in the session
    app.use(csrfMiddleware);

For each request that included a session cookie, the process was basically:

Check the connect-mongo sessions collection in the database to see if the cookie is valid

If it's valid, load the session data (the user ID and anti-CSRF token) from the sessions collection

Passport middleware loads req.user based on the user ID

Our actual app logic runs

Finally, if the session is updated (for example the cookie expiry is extended), re-save the session to the database. (express-session does this when the response is sent by hooking the response object)

The corresponding data for every single session cookie that hadn't expired had to be saved in the database. This added up to a lot of session records!

Before the migration, sessions were the biggest cause of writes to our database, a significant source of reads, and the majority of the data we actually stored in our main database (the actual content of Coggle diagrams is stored separately). Our goal in moving to signed cookies is to significantly reduce the resources needed to host this.

Part of the reason for the volume of session data is that we have very long-lived session cookies, as we prioritise people being able to easily return to their Coggle diagrams. People forgetting which email address they used to log in and 'losing' their diagrams as a result is our biggest source of support requests.

Part 2: Choosing a Signed Cookie Implementation

An alternative to storing sessions in the database is to instead store the session data in the cookie itself, so when each page is loaded the session data needed is immediately available in the cookies of the request. This is possible as long as there's a cryptographic signature on the cookie to stop it from being tampered with. Someone can't change their cookie to log in to someone else's account, as they have no way to forge the cryptographic signature.

There isn't a formal standard for signing cookies, but the most common approach is to store a second cookie alongside each cookie to be signed, with a .sig extension to the name. This is the approach used by the cookies npm module, and the cookie-session middleware wraps this module into a convenient middleware which initialises req.session if the session cookie's signature is valid.
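To make the idea concrete, here's a minimal sketch of signing and verifying a cookie value with an HMAC, using only node's built-in crypto module. This is not the code of the cookies or keygrip modules (their exact signature encoding differs); the function names signCookie and verifyCookie, the example key, and the hex encoding are all assumptions made for illustration.

```javascript
const crypto = require('crypto');

// Hypothetical signing key, for illustration only.
// A real key must be long, random, and kept secret on the server.
const exampleKey = 'replace-with-a-long-random-secret';

// Compute an HMAC-SHA256 signature over "name=value".
// This is the value that would be stored in the companion ".sig" cookie.
function signCookie(name, value, key) {
  return crypto
    .createHmac('sha256', key)
    .update(`${name}=${value}`)
    .digest('hex');
}

// Recompute the signature on the server and compare in constant time.
function verifyCookie(name, value, signature, key) {
  const expected = Buffer.from(signCookie(name, value, key));
  const actual = Buffer.from(String(signature));
  return expected.length === actual.length &&
    crypto.timingSafeEqual(expected, actual);
}

const sig = signCookie('session-cookie', 'user=42', exampleKey);
console.log(verifyCookie('session-cookie', 'user=42', sig, exampleKey)); // true
console.log(verifyCookie('session-cookie', 'user=43', sig, exampleKey)); // false
```

Changing the cookie value without knowing the key produces a signature mismatch, which is why the session data can safely live on the client.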

We already use JSON Web Tokens in Coggle for authentication between our back-end services, so we also considered using JWTs as session cookie values. There would be a number of advantages/disadvantages to this:

Public-keys could be used for signing, enabling our back-end services to verify signatures without access to the private signing key

Cookie values could be easily encrypted, as well as signed, by using the related JWE standard.

The additional information that makes JWTs portable (key ID, issuer, and using public-key signatures) also makes them bigger

Public-key signatures are significantly more expensive to sign and verify.

There are no readily available open source node modules for JWT-based session cookies.

Since we don't need encryption and would prefer to use symmetric keys, we chose the cookie-session middleware. If you're considering the same route, then think carefully about whether all of the data stored in your session should be unencrypted.

Part 3: Implementation

Secure Configuration:

The default for cookie-session (inherited from the cookies module), is to use the SHA1-HMAC signing algorithm. SHA1 has some weaknesses, so to be cautious we use SHA256-HMAC instead by passing our own Keygrip instance when creating the session middleware:

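    const Keygrip = require('keygrip');
    const cookieSession = require('cookie-session');

    // sign with SHA256-HMAC instead of the default SHA1-HMAC
    const signingKeys = new Keygrip([superSecretKey, ...], 'sha256');

    const cookieSessionMiddleware = cookieSession({
        name: 'session-cookie',
        keys: signingKeys,
        maxAge: Session_Duration,
        httpOnly: true,
        sameSite: 'lax',
        signed: true,
        secure: true,
    });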

Handling CSRF:

We set SameSite=Lax on our session cookies, so it would not normally be possible for code on other sites to send potentially state-changing POST requests with the session cookie. However, in case people are using old browsers which do not support SameSite, or there is a bug in a browser's implementation, we still also use an anti-CSRF token for state-changing requests.

Previously the CSRF token for each session was stored in the database, and the value sent with each request from the client compared against this - with signed cookies it's instead stored in the cookie itself.

As the session cookie is stored as an HTTPOnly cookie, it is not possible for a CSRF script to read the value, even though it exists on the client.

It might be possible for malicious javascript to overwrite the HTTPOnly cookie, but in that case the cookie signature would be invalid.

This CSRF protection set-up is definitely a compromise, but as Coggle isn't handling payments, we think it's reasonable.
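As a rough illustration of the check described above, here's a sketch of a middleware that compares the token held in the signed session cookie with a token submitted by the client. It is not our actual csrfMiddleware: the x-csrf-token header name and the function names are assumptions, and only the req.session.csrf field matches the session layout used elsewhere in this post.

```javascript
const crypto = require('crypto');

// Constant-time comparison of the session token and the submitted token.
function csrfTokensMatch(sessionToken, submittedToken) {
  if (typeof sessionToken !== 'string' || typeof submittedToken !== 'string') {
    return false;
  }
  const a = Buffer.from(sessionToken);
  const b = Buffer.from(submittedToken);
  return a.length === b.length && crypto.timingSafeEqual(a, b);
}

// Express-style middleware sketch: only state-changing methods are checked.
function csrfCheck(req, res, next) {
  const safeMethods = ['GET', 'HEAD', 'OPTIONS'];
  if (safeMethods.includes(req.method)) return next();

  // Hypothetical header name; the client would copy the token into it.
  const submitted = req.headers['x-csrf-token'];
  const stored = req.session && req.session.csrf;
  if (csrfTokensMatch(stored, submitted)) return next();

  res.statusCode = 403;
  res.end('invalid CSRF token');
}
```

It would be mounted after the session middleware, for example with app.use(csrfCheck), so that req.session is already populated when the check runs.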

Migrating Old Sessions

It's important to migrate existing sessions so we don't log out users - running both the express-session and cookie-session middleware simultaneously isn't possible, as they both hook req.session and the response object.

As a result, we had to extract the logic from express-session which actually reads and verifies cookies (the getcookie function), and manually check the connect-mongo store, which is relatively straightforward:

    const session = require('express-session'); // just for passing to connect-mongo, not used as middleware!
    const MongoStore = require("connect-mongo")(session);
    const legacySessionStore = new MongoStore({ ... });

    const loadLegacySession = function(req, callback){
        const session_id = getcookie(req, legacyCookieName, [legacyCookieSecret])
        if(session_id){
            legacySessionStore.get(session_id, function(err, session){
                return callback(err, session_id, session);
            });
        }else{
            return callback(null, null, null);
        }
    };

With this in place, the final middleware for migrating sessions is straightforward. The migration is only temporary - once all old sessions have expired, we'll be able to just use the new cookieSessionMiddleware directly instead.

    const sessionMiddleware = function(req, res, next){
        // first delegate to the new session middleware:
        cookieSessionMiddleware(req, res, function(err){
            if(err) return next(err);
            // then, ONLY IF there's no user ID in the new style
            // session, try to load one from the legacy session
            // so we can migrate it:
            if(!(req.session && req.session.passport && req.session.passport.user)){
                loadLegacySession(req, function(err, legacySessionID, legacySession){
                    if(err) return next(err);
                    // if there was a passport user ID in the old
                    // session, migrate it:
                    if(legacySession && legacySession.passport && legacySession.passport.user){
                        req.session.passport = {user:legacySession.passport.user};
                        // also migrate any existing CSRF token, so
                        // CSRF tokens used in pages which are already
                        // open remain valid:
                        if(legacySession._csrf){
                            req.session.csrf = legacySession._csrf;
                        }
                    }
                    // delete the old session:
                    if(legacySessionID){
                        deleteLegacySession(legacySessionID, req, res);
                    }
                    return next();
                });
            }else{
                // if we have an authed session from the new cookie
                // already then we're done:
                return next();
            }
        });
    };

After this, the passport middleware works exactly the same as before, loading req.user from session.passport.user

Part 4: The Results!

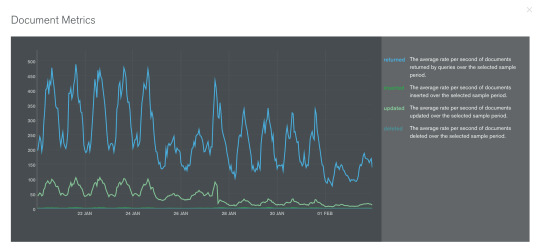

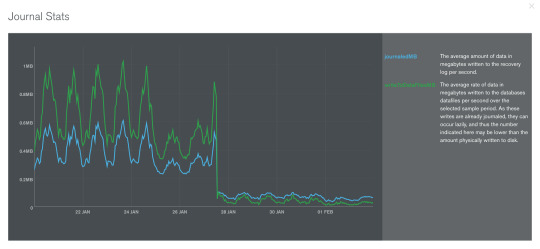

We deployed the new sessions around January 27th. Based on one week either side of that, we saw some dramatic differences:

Database update operations, and corresponding db journal data, were reduced by approximately 80%, from 0.4MB/s to 0.08MB/s

Database volume busy time (which previously limited our peak scaling), reduced from approximately 15% to approximately 3%. In theory we can now handle peaks of over 30x our normal traffic volume, instead of peaks of only 7x!

(The reduction in the read ops of two of the volumes is primarily because they were being used as syncing sources for our off-site replicas - less journal data means less to be read for syncing)

And finally, 311 GB of session data and corresponding indexes can eventually be dropped from our database (multiplied across replicas, that's over 1.5TB of disk space, or about $160/month)
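The percentages and peak multiples above follow from simple arithmetic, sketched below. The headroom figure assumes volume busy time grows linearly with traffic, which is a simplification; the function names are just for illustration.

```javascript
// Percentage reduction between a "before" and an "after" measurement.
const reductionPercent = (before, after) => (1 - after / before) * 100;

// Rough traffic multiple a volume could absorb before being busy 100% of
// the time, ASSUMING busy time scales linearly with traffic.
const peakHeadroom = (busyFraction) => 1 / busyFraction;

console.log(reductionPercent(0.4, 0.08).toFixed(0) + '%'); // 80%   (journal data, MB/s)
console.log(peakHeadroom(0.15).toFixed(1) + 'x');           // 6.7x  (before)
console.log(peakHeadroom(0.03).toFixed(1) + 'x');           // 33.3x (after)
```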

Hopefully this has been an interesting read. If you thought we were crazy to store our sessions in MongoDB in the first place, well, we also used to store the entire contents of Coggle documents in a MongoDB database too... maybe we'll write about that next!

Posted by James, Feb 2020.


How To Track Results (And Not Fall Into the Trap That Ruins 95% of Well-Thought Out Diets)

Whether you're just getting started, or you're a longtime fitness pro, you'll never get the results you're dreaming of if you don't accurately and properly track your progress.

A lot of beginners get discouraged and quit because they're not seeing fast results, or because the scale isn't tipping in their favor.

Similarly, many seasoned gym rats get complacent and stop being as vigilant about tracking their progress because they think they've got their routine down to a science.

Both are doing it wrong.

You see, by consistently tracking your progress, you not only collect important data you can use to steadily make adjustments to your exercise and diet regimens, but you also keep yourself motivated to stay in it to win it.

Here are the best methods for tracking your progress over the long run (try one or more of these methods):

Use the scale wisely. Remember that a scale only gives you a rough estimate of your weight on a daily basis. Depending on your monthly cycle (yes, guys, you have one too) the amount of water your body retains will fluctuate dramatically. So if you're not taking regular readings and averaging them out over weeks and months at a time, you're giving yourself false hope or false terror every time you see a quick drop or spike in your weight. For more accurate results, use a variety of metrics to track your progress over time.
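If you'd rather let a script do the averaging, here's a minimal sketch in JavaScript. It assumes you weigh in once a day and simply groups the readings into 7-day blocks; the function name and the sample weights are made up for illustration.

```javascript
// Group daily weigh-ins into consecutive 7-day blocks and average each block.
// `readings` is an array of daily weights (any unit), oldest first.
function weeklyAverages(readings) {
  const averages = [];
  for (let start = 0; start < readings.length; start += 7) {
    const week = readings.slice(start, start + 7);
    const total = week.reduce((sum, w) => sum + w, 0);
    // round to one decimal place
    averages.push(Math.round((total / week.length) * 10) / 10);
  }
  return averages;
}

// Two weeks of made-up readings that bounce around day to day:
const readings = [
  180, 181.5, 179, 180.5, 182, 179.5, 180,
  179, 180.5, 178, 179.5, 181, 178.5, 179,
];
console.log(weeklyAverages(readings)); // [ 180.4, 179.4 ]
```

The daily numbers swing by up to 3 units, but the weekly averages show a steady drop of about 1.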

Use a measuring tape. The same as with a scale, this can fluctuate throughout the month.

But it's useful to take a measurement of some key areas of your body once a week and chart how they shrink as you progress. The main areas you should measure (and log in a journal) are your:

Waist

Hips

Thighs

Chest

Arms

Calves

Use calipers or a scientific method of body fat analysis. The main scientific options for measuring body fat are bioelectrical impedance scales (somewhat inaccurate), DEXA/BodPod (pricey, but accurate), and underwater testing (also pricey, but accurate). As for calipers, make sure you do it yourself or have the same person do it for you each time (I do it myself every 2 weeks), since variations in how the calipers are gripped can cause very inaccurate readings. Body fat scales are also notorious for being inaccurate (often as much as 3-5% off), BUT if you use them consistently, you'll get consistent readings. So even if you can't tell what your exact body fat percentage is, you can tell how much body fat you've lost so far.

When calculating, bear in mind that an average body fat percentage for women is between 25% and 32%, and for men, it's between 18% and 24%.

Most people who are bodybuilding have lower fat levels than this - to see your abs through your skin, if you're a woman you'll need a body fat percentage just below the range of 11% and 14%, and for a man you'll need a body fat percentage below the range of 10% and 12%.

Just keep in mind that it's unhealthy for your body fat to drop too low; at some point, having too little fat on your body can start to seriously affect some of your body's natural processes.

This is especially dangerous for women of child-bearing age who may become pregnant - if you're going to grow a baby, you need ample fat on your body to be able to nourish that child and eventually produce breast milk for the little tyke.

Here's a quick chart that illustrates body fat percentage ranges for different body types:

| Classification | Men | Women |
| --- | --- | --- |
| Do not go lower than: | 4-5% | 10-11% |
| Athletic body | 6-12% | 13-19% |
| Generally fit body | 13-17% | 20-24% |
| Average body | 18-24% | 25-32% |
| Overweight body | 25%+ | 33%+ |
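If you log your numbers digitally, the chart above is easy to turn into a lookup. Here's a rough sketch; the function name is made up, and two things are my own assumptions rather than part of the chart: readings that fall in the gaps between rows (for example 5-6% for men) are counted as "athletic", and the low end of the "do not go lower than" range is used as the floor.

```javascript
// Upper bounds (in whole percent) for each row of the chart.
// ASSUMPTION: `floor` is the low end of the "do not go lower than" range.
const CHART = {
  men:   { floor: 4,  athletic: 12, fit: 17, average: 24 },
  women: { floor: 10, athletic: 19, fit: 24, average: 32 },
};

function classifyBodyFat(percent, group) {
  const row = CHART[group];
  if (!row) throw new Error('group must be "men" or "women"');
  if (percent < row.floor) return 'below recommended minimum';
  // "+ 1" so fractional readings such as 12.5% stay in the lower row
  if (percent < row.athletic + 1) return 'athletic';
  if (percent < row.fit + 1) return 'generally fit';
  if (percent < row.average + 1) return 'average';
  return 'overweight';
}

console.log(classifyBodyFat(15, 'men'));   // generally fit
console.log(classifyBodyFat(28, 'women')); // average
```

Treat the result as a rough label, not a diagnosis, since the measurement itself can be several points off.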

And here's a chart that illustrates healthy/fit body fat percentage ranges based on age:

| Age | Men (Fit/Athletic) | Women (Fit/Athletic) |
| --- | --- | --- |
| 18-30 y.o. | 10-18% | 20-25% |
| 31-50 y.o. | 16-24% | 22-29% |
| Over 50 y.o. | 16-29% (Fit to Average) | 20-36% (Fit to Average) |

If you are over 50 y.o., read this: Men and women over 50 should consult with their doctors about their ideal body fat, because it varies widely depending on the individual and their health. For men who are 50+ and have average builds, it is usually considered unhealthy (as opposed to merely not ideal) to have a body fat percentage below 15% or above 30%. For women over 50, it can sometimes be dangerous to have a body fat percentage lower than 20% or higher than 36%.

Also, as you age, your muscles and bones naturally lose their density and size. At this time, a higher body fat percentage is not just acceptable, it's actually healthier.

Take a picture in the mirror. You can do this as often or as sparingly as you want, but it can be a great way to measure your progress - and makes for a great mash-up on YouTube or in a slideshow later on. As with any of these methods, if you're hoping to see a huge difference in a week or two, you may be disappointed (though not always!). But, you'll be amazed how different your body looks within a couple of months if you stick to your diet - and you'll be able to see your progress over time in a series of photos, which can be really inspirational!

You'll be able to get within 5% of your percentage, which is ideal both for progress checks and for filling in the bonus "So Easy A Caveman Can Do It" Calculator so you have the most accurate calorie totals!

Write down your calories. You've got to write down everything - everything - that you eat, at least in the beginning. That means candy bars, beers, that half a slice of cake you had at the office birthday party for Irene on Friday, everything. If you're guessing in the beginning, you don't have an accurate picture, and you're not going to be able to analyze your habits to see where you need to make improvements.

Write down your exercises. You've got to track your strength-training as well as your cardio. A typical exercise journal will contain the exercises you did, how many sets and reps of each and the amount of weight you were throwing around. It should also have a section for each day of exercise - what kind did you do? How many calories did the machine say you burned (or did you calculate that you burned, if you worked out in the great outdoors?) Was it easy, moderate, intense? Keeping an exercise journal is more than an important tool for keeping yourself on the right track, it's also an exercise in honesty and accountability. Are you really squeezing out each of those last reps? Are you flipping back through pages and realizing that you're leaving the gym too early, too often? An exercise journal can tell you not just about how strong you're getting, but how committed you are, as well.

Use an Excel spreadsheet or Microsoft Word table design. As with personal finance, the most popular way to track your fitness progress is with an Excel spreadsheet or Word table. With just a basic knowledge of Excel, you can create graphs, charts and more that will give you a big-picture view of your efforts thus far. When creating a spreadsheet, make sure you have boxes designated for weight, measurements and body fat percentage. Don't lose heart if your numbers aren't moving as quickly as you want them to! You may go a week or two without seeing your weight change, for instance, because you're building muscle and burning fat at the same time - so it's important to know what your measurements and body fat percentage are.
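To see why the scale can sit still while your body changes, it helps to split each logged weight into fat mass and lean mass. Here's a small sketch of that arithmetic; the function names and the sample entries are made up, and the result is only as good as your body fat readings.

```javascript
// Split a body weight into fat mass and lean mass using a body fat percentage.
function bodyComposition(weight, bodyFatPercent) {
  const fatMass = weight * bodyFatPercent / 100;
  return { fatMass, leanMass: weight - fatMass };
}

// Compare two log entries, rounding each change to one decimal place.
function compositionChange(earlier, later) {
  const a = bodyComposition(earlier.weight, earlier.bodyFatPercent);
  const b = bodyComposition(later.weight, later.bodyFatPercent);
  const round1 = (x) => Math.round(x * 10) / 10;
  return {
    weight: round1(later.weight - earlier.weight),
    fatMass: round1(b.fatMass - a.fatMass),
    leanMass: round1(b.leanMass - a.leanMass),
  };
}

// Made-up entries: same weight, body fat down from 25% to 23%.
const change = compositionChange(
  { weight: 180, bodyFatPercent: 25 },
  { weight: 180, bodyFatPercent: 23 }
);
console.log(change); // { weight: 0, fatMass: -3.6, leanMass: 3.6 }
```

In this example the scale shows no change at all, yet 3.6 units of fat have been swapped for lean mass, which is exactly the situation a weight-only log would miss.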

Use a web site or app specifically designed to help you track your progress. There are many of these, running the gamut from highly detailed and useful (a few of them) to mostly bunk (most of them). It's a shame that the cottage industry that has sprung up around weight loss since the obesity epidemic began in America in the 1990s has attracted its fair share of snake-oil salesmen and hucksters. However, there are some very good sites out there that can be very helpful to you as you make your journey toward the body of your dreams. Here are the best websites to track your progress:

FitDay (this is my hands down favorite tracker)

The Daily Plate

Skinnyo

Bodybuilding.com

Use my secret on-the-go tracking "weapon", for times that it's hard/impossible to get online. If I can't access FitDay (or my personal journals at home), I always use one of the following and suggest you do the same:

"Notes" from the iPhone are usually sent automatically to your email address.

Evernote updates automatically and can be accessed from any computer.

The harsh truth is: You can diet and exercise all you want, but if you don't have good, consistent measurements, you'll either burn out or fail to capitalize on opportunities to improve your results over time.

So, follow the suggestions above and/or get right to it with the following steps.

Action Steps:

Hop on Google Images and search "body fat % for (your gender)"

If you have calipers or access to another method, use them to estimate your body fat %

Interested in losing weight? Then click below to see the exact steps I took to lose weight and keep it off for good...

Read the previous article about "Nutrition Basics for Fast Pain Relief (and Weight Loss)"

Read the next article about "Advanced Fat Loss - Calorie Cycling, Carb Cycling and Intermittent Fasting"

Moving forward, there are several other articles/topics I'll share so you can lose weight even faster, and feel great doing it.

Below is a list of these topics and you can use this Table of Contents to jump to the part that interests you the most.

Topic 1: How I Lost 30 Pounds In 90 Days - And How You Can Too

Topic 2: How I Lost Weight By Not Following The Mainstream Media And Health Guru's Advice - Why The Health Industry Is Broken And How We Can Fix It

Topic 3: The #1 Ridiculous Diet Myth Pushed By 95% Of Doctors And "experts" That Is Keeping You From The Body Of Your Dreams

Topic 4: The Dangers of Low-Carb and Other "No Calorie Counting" Diets

Topic 5: Why Red Meat May Be Good For You And Eggs Won't Kill You

Topic 6: Two Critical Hormones That Are Quietly Making Americans Sicker and Heavier Than Ever Before

Topic 7: Everything Popular Is Wrong: The Real Key To Long-Term Weight Loss

Topic 8: Why That New Miracle Diet Isn't So Much of a Miracle After All (And Why You're Guaranteed To Hate Yourself On It Sooner or Later)

Topic 9: A Nutrition Crash Course To Build A Healthy Body and Happy Mind

Topic 10: How Much You Really Need To Eat For Steady Fat Loss (The Truth About Calories and Macronutrients)

Topic 11: The Easy Way To Determine Your Calorie Intake

Topic 12: Calculating A Weight Loss Deficit

Topic 13: How To Determine Your Optimal "Macros" (And The Skinny On The 3-Phase Extreme Fat Loss Formula)

Topic 14: Two Dangerous "Invisible Thorn" Foods Masquerading as "Heart Healthy Super Nutrients"