#azurestorage

Azure Data Lake Benefits

Azure Data Lake is a highly efficient and scalable storage system that helps organizations store and manage large amounts of data in a secure and organized way. It is especially beneficial for businesses looking to utilize big data and advanced analytics.

Here’s why Azure Data Lake is valuable:

Scalability: It can store massive amounts of data without performance issues, making it ideal for businesses with rapidly growing data needs.

Cost-Effective: It offers affordable storage options, allowing organizations to store data without high infrastructure costs.

Flexibility: Azure Data Lake supports different types of data, from structured to unstructured, meaning businesses can store a wide variety of data types without needing to organize or transform them beforehand.

Integration with Analytics Tools: It works seamlessly with other Azure tools, like machine learning and big data analytics platforms, which help companies process and analyze their data more effectively.

Security: Azure Data Lake comes with built-in security features, ensuring that the stored data is protected and only accessible to authorized users.

What Is a Data Lake?

A Data Lake is essentially a huge storage space where you can keep all types of data—whether it's organized, semi-organized, or completely unorganized—without worrying about how to structure it beforehand. This flexibility allows businesses to store a wide variety of data from various sources, such as IoT sensors, social media posts, website logs, and much more.

The key feature of a Data Lake is its ability to handle massive amounts of data, often referred to as "big data," in its raw form. This means that businesses don’t need to spend time and resources cleaning or organizing the data before storing it. Once the data is in the lake, it can be processed, analyzed, and turned into valuable insights whenever needed.
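Here is a minimal Python sketch of this "store raw, structure later" idea, often called schema-on-read. It assumes raw records landed in the lake as JSON Lines. The sample lines, the field names `device_id` and `temp_c`, and the `read_with_schema` helper are all hypothetical. The schema is applied only when the data is read. Records that don't fit are counted rather than fixed, because the raw copy stays untouched.

```python
import json
from typing import Dict, Iterable, List, Tuple

# Hypothetical raw landing data: heterogeneous records stored exactly as received.
RAW_LINES = [
    '{"device_id": "a1", "temp_c": 21.5, "fw": "1.2"}',
    '{"device_id": "b7", "temp_c": "19.0"}',
    '{"user": "x", "post": "hello"}',
    'not json at all',
]

def read_with_schema(lines: Iterable[str]) -> Tuple[List[Dict[str, object]], int]:
    """Apply a schema at read time: keep rows shaped as (device_id: str, temp_c: float).

    Lines that are not valid JSON, or that lack the required fields, are counted
    as rejected instead of being repaired; the raw lines themselves are never modified.
    """
    rows: List[Dict[str, object]] = []
    rejected = 0
    for line in lines:
        try:
            record = json.loads(line)
            rows.append({"device_id": str(record["device_id"]),
                         "temp_c": float(record["temp_c"])})
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            rejected += 1
    return rows, rejected
```

Another consumer could read the same raw lines with a different schema, for example one that keeps the social media record. That is the flexibility a Data Lake offers.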

Data Lake in Azure

Azure Data Lake is a cloud storage solution offered by Microsoft Azure that enables businesses to store large amounts of data securely and efficiently. It’s designed to handle a variety of data types, from simple log files to complex analytics data, all within one platform.

With Azure Data Lake, organizations don’t have to worry about the limitations of traditional storage systems. It’s highly scalable, meaning businesses can store data as their needs grow without running into performance issues. It also offers high performance, so users can access and analyze their data quickly, even when dealing with large volumes.

Because it’s built on the cloud, Azure Data Lake is perfect for modern data needs, such as advanced analytics, machine learning, and business intelligence. Organizations can easily integrate it with other tools to derive valuable insights from their data, helping them make informed decisions and drive business success.

When Should You Use a Data Lake?

Data Lakes are most useful when your business deals with large volumes of diverse data that don’t necessarily need to be organized before storing. If your data comes from multiple sources—like sensors, websites, social media, or internal systems—and is in raw or unstructured form, a Data Lake is the right tool to store it efficiently.

You should consider using a Data Lake if you plan to perform big data analytics, as it can handle vast amounts of information and allows for deeper analysis later. It's also ideal if you're looking to build real-time analytics dashboards or develop machine learning models based on large datasets, particularly those that are unstructured (such as text, images, or logs). By storing all this data in its raw form, you can process and analyze it when needed, without worrying about organizing it first.

Data Lake Can Be Utilized in Various Scenarios

Big Data Analytics: If your company handles large and complex datasets, Azure Data Lake is an ideal solution. It allows businesses to process and analyze these huge amounts of data effectively, supporting advanced analytics that would be difficult with traditional storage systems.

Data Exploration: Researchers and data scientists use Data Lakes to explore raw, unprocessed data. They can dig into this data to discover patterns, trends, or generate new insights that can help with building machine learning models or AI applications.

Data Warehousing: Data Lakes can hold both structured data (like numbers in tables) and unstructured data (like social media posts or images). When a Data Lake serves as the source or staging layer for a data warehouse, companies can combine these types of data into curated warehouse tables. Those tables provide deeper business insights and help companies make better decisions.

Data Archiving: Data Lakes also make it easy to store large amounts of historical data over long periods. Businesses can keep this data safe and easily accessible for future analysis, without worrying about running out of storage space or managing it in traditional databases.

Are Data Lakes Important?

Yes, Data Lakes are very important in today’s data-driven world. They provide businesses with a flexible and scalable way to store massive amounts of data without the constraints of traditional storage systems. As companies generate more data from various sources—such as websites, social media, sensors, and more—Data Lakes make it easier to store all that information in its raw form.

This flexibility is crucial because it allows organizations to store different types of data—structured, semi-structured, or unstructured—without having to organize or transform it first. Data Lakes are also cost-effective, offering a more affordable solution for handling big data and enabling organizations to analyze it using advanced tools like machine learning, AI, and big data analytics.

By tapping into the full potential of their data, businesses can gain deeper insights, make better decisions, and improve their overall performance. This is why Data Lakes are becoming a key component in modern data architecture.

Advantages of a Data Lake

Scalability: Azure Data Lake makes it easy for businesses to scale their storage needs as their data grows. As companies collect more data over time, Data Lake can handle the increase in volume without impacting performance, allowing businesses to store as much data as they need.

Cost-effective: Storing data in a Data Lake is usually much more affordable than using traditional databases. This is because Data Lakes are designed to store massive amounts of data efficiently, often at a lower cost per unit compared to more structured storage solutions.

Flexibility: One of the key benefits of a Data Lake is its ability to store various types of data—structured (like numbers), semi-structured (like logs), and unstructured (like images or videos). This flexibility means organizations don't need to prepare or transform data before storing it, making it easier to collect and store diverse data from multiple sources.

Advanced Analytics: With all your data stored in one place, businesses can perform complex analytics across different types of data, all without needing separate systems for each data source. This centralized data storage makes it easier to analyze data, run reports, or build predictive models, helping organizations make data-driven decisions faster and more efficiently.

Limitations of a Data Lake

Data Quality: Since Data Lakes store raw, unprocessed data, it can be difficult to ensure the quality and consistency of the data. Raw data may contain errors, duplicates, or irrelevant information that hasn't been cleaned up before being stored. This can make it harder to analyze and use the data effectively without additional processing or quality checks.

Complexity: Although Data Lakes are flexible, managing the large volumes of data they store can be complex. As the data grows, it can become challenging to organize, categorize, and secure it properly. This often requires advanced tools, sophisticated processes, and skilled personnel to ensure that the data remains accessible, well-organized, and usable.

Security: Data security can be another challenge when using a Data Lake, especially when handling sensitive or private data from multiple sources. Ensuring the right access controls, encryption, and compliance with regulations (such as GDPR) can be more complicated than with traditional storage systems. Without proper security measures, organizations may be at risk of data breaches or unauthorized access.
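Because raw data is stored as-is, the data quality limitation above usually calls for checks before analysis. A minimal Python sketch of such a check follows. It assumes flat records held as dictionaries with hashable values. The `quality_report` helper and its two checks (exact duplicates and missing required fields) are illustrative choices, not an Azure feature.

```python
from collections import Counter
from typing import Dict, Sequence

def quality_report(records: Sequence[Dict[str, object]],
                   required: Sequence[str]) -> Dict[str, int]:
    """Count basic quality problems in raw records before they are analyzed.

    - duplicates: records identical (all fields equal) to an earlier record
    - missing_fields: records that lack, or hold None for, any required field
    Assumes flat records whose values are hashable.
    """
    seen = Counter(tuple(sorted(r.items())) for r in records)
    duplicates = sum(count - 1 for count in seen.values())
    missing = sum(1 for r in records if any(r.get(f) is None for f in required))
    return {"total": len(records), "duplicates": duplicates, "missing_fields": missing}
```

For example, `quality_report([{"id": 1, "city": "Oslo"}, {"id": 1, "city": "Oslo"}, {"id": 2, "city": None}, {"id": 3}], ["id", "city"])` reports one duplicate and two records with missing fields.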

How Azure Data Lake Works

Azure Data Lake provides a unified storage platform that allows businesses to store vast amounts of data in its raw form. It integrates with other Azure services, like Azure Databricks (for data processing), Azure HDInsight (for big data analytics), and Azure Synapse Analytics (for combining data storage and analytics). This integration makes it easier to store, query, and analyze data without having to organize or transform it first.

The platform also provides tools to manage who can access the data, ensuring security protocols are in place to protect sensitive information. Additionally, it offers powerful analytics capabilities, enabling businesses to extract insights from their data and make data-driven decisions without the need for complex transformations.

Who Can Use Azure Data Lake?

Data Scientists and Engineers: These professionals often work with large, unprocessed datasets to develop machine learning models or perform complex data analysis. Azure Data Lake provides the flexibility and scalability they need to work with vast amounts of data.

Business Analysts: Analysts use Data Lakes to explore both structured (organized data) and unstructured (raw or unorganized data) sources to gather insights and make informed business decisions.

Developers: Developers can use Azure Data Lake to store and manage data within their applications, allowing for more efficient decision-making and better data integration in their products or services. This enables applications to leverage big data for improved performance or features.

Azure Data Lake Storage Security

Azure Data Lake Storage offers several layers of security to protect data:

Encryption: All data is encrypted while being transferred and when it's stored, ensuring that it cannot be accessed by unauthorized individuals.

Access Control: The service integrates with Microsoft Entra ID (formerly Azure Active Directory) for authentication. Businesses can use Azure role-based access control (RBAC) and, in Data Lake Storage Gen2, POSIX-like access control lists (ACLs) to ensure that only authorized users or systems can access certain data.

Audit Logs: Azure Storage resource logs, enabled through diagnostic settings in Azure Monitor, can record the operations performed on the data. Organizations can use them to track who accessed or modified the data. This helps maintain security and supports compliance with regulations.
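Alongside Azure RBAC, Data Lake Storage Gen2 also supports POSIX-like access control lists (ACLs) on directories and files. These are commonly written in a short form such as `user::rwx,group::r-x,other::---`. The Python sketch below parses the unnamed entries of that short form and answers a simplified question: does a given entry type grant a given permission? It deliberately ignores named users and groups, the mask, and default ACLs. It therefore illustrates the notation only and is not Azure's full access-check algorithm. `parse_acl` and `allows` are hypothetical helpers.

```python
from typing import Dict

def parse_acl(acl: str) -> Dict[str, str]:
    """Parse the unnamed entries of a short-form ACL such as 'user::rwx,group::r-x,other::---'.

    Returns a mapping like {'user': 'rwx', 'group': 'r-x', 'other': '---'}.
    Named entries ('user:<object-id>:r--'), 'mask::' and default entries are skipped.
    """
    entries: Dict[str, str] = {}
    for entry in acl.split(","):
        parts = entry.strip().split(":")
        if parts[0] == "default":
            continue  # default ACLs apply to new child items; not handled in this sketch
        if len(parts) != 3:
            raise ValueError(f"malformed ACL entry: {entry!r}")
        kind, qualifier, perms = parts
        if kind in ("user", "group", "other") and qualifier == "":
            entries[kind] = perms
    return entries

def allows(acl: str, kind: str, permission: str) -> bool:
    """Return True if the unnamed `kind` entry grants `permission` ('r', 'w' or 'x')."""
    perms = parse_acl(acl).get(kind, "---")
    index = {"r": 0, "w": 1, "x": 2}[permission]
    return len(perms) == 3 and perms[index] == permission
```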

Components of Azure Data Lake Storage Gen2

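Containers: These are like storage units where data is organized. Containers store blobs (data files) within Azure Storage. In Data Lake Storage Gen2 terminology, containers are also called file systems.

Blobs: These are the actual data files or objects stored within containers. Blobs can be anything from text files to images, videos, or log files.

Folders: Within containers, data can be organized into folders (or directories) and subfolders, making it easier to access and manage large volumes of data. With the hierarchical namespace enabled, these are true directories. Operations such as renaming or deleting a directory are therefore atomic, rather than requiring each blob to be processed individually.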
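Containers and folders combine into the paths that analytics tools use. The Python sketch below builds a date-partitioned folder path and the matching ABFS URI, using the `abfss://<container>@<account>.dfs.core.windows.net/<path>` form accepted by tools such as Spark. The account name, container name, and the `raw/<source>/YYYY/MM/DD/` layout are hypothetical conventions chosen for illustration. Azure does not require any particular folder layout.

```python
from datetime import date

def partition_path(source: str, day: date, filename: str) -> str:
    """Build a hypothetical date-partitioned path: raw/<source>/YYYY/MM/DD/<filename>."""
    return f"raw/{source}/{day:%Y/%m/%d}/{filename}"

def abfss_uri(account: str, container: str, path: str) -> str:
    """Return the secure ABFS URI for a path inside a Data Lake Storage Gen2 container."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"
```

For instance, `abfss_uri("contosolake", "landing", partition_path("iot", date(2024, 5, 17), "device-42.json"))` yields `abfss://landing@contosolake.dfs.core.windows.net/raw/iot/2024/05/17/device-42.json`. Partitioning by date keeps each day's files in their own directory, which makes it easier to process or archive one period at a time.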

Why Azure Data Lake Storage Gen2 Is Needed

Azure Data Lake Storage Gen2 is needed because businesses and organizations are dealing with an increasing amount of data, both structured and unstructured. Storing and processing such large volumes of data requires a storage solution that is both scalable and flexible. Azure Data Lake Storage Gen2 enables this by offering a secure, scalable way to store data, while also providing powerful tools for advanced analytics and machine learning. The combination of Blob Storage's efficiency and Data Lake's enhanced features allows businesses to extract more value from their data.

#AzureDataLake#BigData#CloudStorage#DataAnalytics#MachineLearning#DataScience#AzureStorage#DataSecurity#BigDataAnalytics#CloudComputing#DataLakeStorage#DataManagement#DataArchiving#AdvancedAnalytics#BusinessIntelligence#AzureGen2#DataIntegration#DataExploration#DataPrivacy#ScalableStorage#DataProtection#DataLakeSecurity

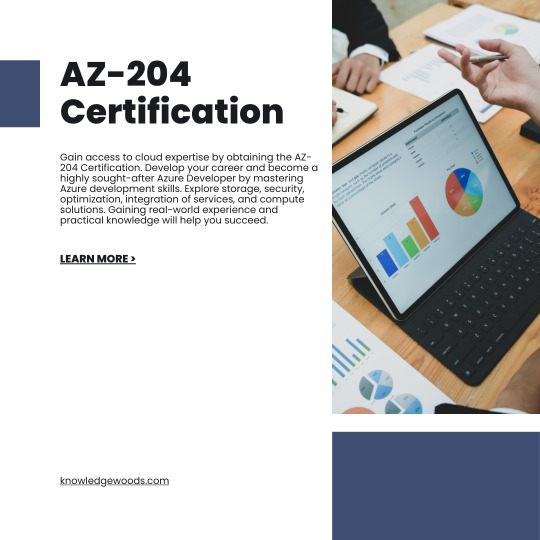

Climb to new professional heights with the AZ-204 certification! Become an expert Azure developer by mastering Azure and improving your cloud development skills. Explore storage options, service integrations, security features, and compute solutions. With this Microsoft Azure developer certification, you can advance your career.

#AzureDeveloper#AZ204Certification#CloudDevelopment#MicrosoftAzure#CloudComputing#AzureServices#DeveloperCertification#CloudSolutions#AzureSecurity#AzureStorage

What are the components of Azure Data Lake Analytics?

Azure Data Lake Analytics consists of the following key components:

Job Service: This component is responsible for managing and executing jobs submitted by users. It schedules and allocates resources for job execution.

Catalog Service: The Catalog Service stores and manages metadata about data stored in Azure Data Lake Storage Gen1 (Data Lake Analytics does not work with Data Lake Storage Gen2). It provides a structured view of the data, including file names, directories, and schema information.

Resource Management: Resource Management handles the allocation and scaling of resources for job execution. It ensures efficient resource utilization while maintaining performance.

Execution Environment: This component provides the runtime environment for executing U-SQL jobs. It manages the distributed execution of queries across the Analytics Units (AUs) allocated to each job. Data Lake Analytics is an on-demand service, so there is no cluster for users to manage.

Job Submission and Monitoring: Azure Data Lake Analytics provides tools and APIs for submitting and monitoring jobs. Users can submit jobs using the Azure portal, Azure CLI, or REST APIs. They can also monitor job status and performance metrics through these interfaces.

Integration with Other Azure Services: Azure Data Lake Analytics integrates with other Azure services such as Azure Data Lake Storage, Azure Blob Storage, Azure SQL Database, and Azure Data Factory. This integration allows users to ingest, process, and analyze data from various sources seamlessly.

These components work together to provide a scalable and efficient platform for processing big data workloads in the cloud.

#Azure#DataLake#Analytics#BigData#CloudComputing#DataProcessing#DataManagement#Metadata#ResourceManagement#AzureServices#DataIntegration#DataWarehousing#DataEngineering#AzureStorage#magistersign#onlinetraining#support#cannada#usa#careerdevelopment

Generally available: Azure Elastic SAN simplifies moving SAN to the cloud

What is Azure Elastic SAN?

Azure is pleased to announce the general availability (GA) of Azure Elastic SAN, the first fully managed, cloud-native storage area network (SAN). It simplifies deploying, scaling, managing, and configuring a SAN in the cloud. Azure Elastic SAN also streamlines the migration of large SAN environments to the cloud, making that migration more efficient and easier.

A SAN-like resource hierarchy, appliance-level provisioning, and dynamic resource distribution to meet the needs of diverse workloads across databases, VDIs, and business applications distinguish this enterprise-class offering. It also offers scale-on-demand, policy-based service management, cloud-native encryption, and network access security. This clever solution combines cloud storage’s flexibility with on-premises SAN systems’ scale.

Since announcing the Elastic SAN preview, Microsoft has added many features to make it enterprise-class:

Multi-session connectivity boosts Elastic SAN performance to 80,000 IOPS and 1,280 MBps per volume, and even higher across the entire SAN.

Shared volume support makes it easy to migrate SQL Server Failover Cluster Instances to Elastic SAN volumes.

Server-side encryption with customer-managed keys and private endpoint support let you restrict data access and volume access.

Snapshot support lets you run critical workloads safely.

With GA, Elastic SAN adds more features and expands to more regions:

You can use Azure Monitor Metrics to analyze performance and capacity.

Azure Policy helps prevent misconfiguration incidents.

Snapshot export is now public, with no sign-up process required.

When to use Azure Elastic SAN

Elastic SAN uses iSCSI to drive storage throughput over the compute network bandwidth, which suits throughput- and IOPS-intensive workloads. One example is optimizing SQL Server workloads. SQL Server deployments on Azure VMs sometimes require overprovisioning to reach disk throughput targets.

“Azure SQL Server data warehouse workloads needed a solution to eliminate VM and managed data disk IO bottlenecks. The Azure Elastic SAN solution removes the VM bandwidth bottleneck and boosts IO throughput. Thanks to Elastic SAN performance, we reduced VM size and implemented constrained cores to save money on SQL Server core licensing.”

Moving your on-premises SAN to the cloud

Elastic SAN uses a resource hierarchy similar to on-premises SANs. It lets you provision input/output operations per second (IOPS) and throughput at the resource level, dynamically shares performance across workloads, and supports workload-level security policy management. This makes migrating from an on-premises SAN to the cloud easier than right-sizing hundreds or thousands of individual disks to serve your SAN’s many workloads.

The Azure-sponsored migration tool from Cirrus Data Solutions, available in the Azure Marketplace, simplifies planning and executing data migrations. The cost optimization wizard in Cirrus Migrate Cloud makes migrating and saving even easier:

“We are excited about Azure Elastic SAN’s launch and see a real opportunity for companies to lower storage TCO. Over the last 18 months, we have worked with Microsoft to improve Cirrus Migrate Cloud so enterprises can move live workloads to Azure Elastic SAN with a click. Offering Cirrus Migrate Cloud to accelerate Elastic SAN adoption, analyze an enterprise’s storage performance, and accurately recommend the best Azure storage is an exciting expansion of our partnership with Microsoft and extends our vision of real-time block data mobility to Azure and Elastic SAN,” said Wayne Lam, Chairman and CEO of Cirrus Data Solutions.

Microsoft works with Cirrus Data Solutions to ensure their recommendations cover all Azure Block Storage offerings (Disks and Elastic SAN) and your storage needs. For single-queue-depth workloads such as OLTP, the wizard will recommend Ultra Disk, which offers the lowest sub-millisecond latency on Azure.

Consolidate storage and achieve cost efficiency at scale

Elastic SAN lets you dynamically share provisioned performance across volumes, delivering high performance at scale. A shared performance pool can absorb IO spikes, so you don’t have to overprovision for each workload’s peak traffic. Because capacity scales independently of performance, you can right-size Elastic SAN to your storage needs. If your workload’s performance requirements are met but you need more storage capacity, you can buy additional capacity alone, at 25% less cost than buying more performance.

Get the lowest Azure VMware Solution storage cost per GiB

A recently announced preview integration lets you expose an Elastic SAN volume as an external datastore to your Azure VMware Solution (AVS) cluster. This increases storage capacity without adding vSAN storage nodes. Elastic SAN provides 1 TiB of storage for 6 to 8 cents per GiB per month, the lowest AVS storage cost per GiB. Because it is a first-party service with a native Azure Storage experience, you can deploy and connect an Elastic SAN datastore through the Azure portal in minutes.
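The per-GiB figure is easier to compare once it is converted to a monthly total. Since 1 TiB is 1,024 GiB, the quoted 6 to 8 cents per GiB per month works out to about $61 to $82 per TiB per month. The small Python helper below does that conversion using only the rates quoted above. Actual prices vary by region and change over time, so check the pricing page for real figures.

```python
TIB_IN_GIB = 1024  # 1 TiB = 2**40 bytes = 1,024 GiB

def monthly_cost_usd(tib: float, cents_per_gib: float) -> float:
    """Monthly cost in USD for `tib` TiB at a flat rate of `cents_per_gib` per GiB per month."""
    return round(tib * TIB_IN_GIB * cents_per_gib / 100, 2)

# Range quoted in the post for 1 TiB: 6 to 8 cents per GiB per month.
low, high = monthly_cost_usd(1, 6), monthly_cost_usd(1, 8)  # 61.44, 81.92
```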

Azure Container Storage, the first platform-managed, container-native storage service in the public cloud, offers highly scalable, cost-effective persistent volumes built natively for containers. Elastic SAN can serve as the backing storage for Azure Container Storage and take advantage of iSCSI’s fast attach and detach. Elastic SAN’s dynamic resource sharing lowers storage costs. Because your data persists on volumes, you can also spin down your cluster for additional savings. Containerized applications running general-purpose database workloads, streaming and messaging services, or CI/CD environments benefit from this storage.

Price and performance of Azure Elastic SAN

Elastic SAN suits most throughput- and IOPS-intensive workloads, such as databases. To support more demanding use cases, Azure has raised several performance limits.

Azure saw strong results with SQL Server during the preview. Elastic SAN uses iSCSI to drive storage throughput over the compute network bandwidth. As a result, SQL Server deployments on Azure VMs no longer need to be overprovisioned to reach disk throughput targets.

With dynamic performance sharing, you can cut your monthly bill significantly. During the preview, another customer wrote data to multiple databases. If you provision for the maximum IOPS of each database, these databases require 100,000 IOPS for peak performance, as shown in the simplified graphic below. In practice, some database instances spike during business hours for queries and others outside business hours for reporting. The combined peak of these workloads was therefore only 50,000 IOPS.

Elastic SAN lets its volumes share the total performance provisioned at the SAN level. You can therefore provision for the combined maximum performance your workloads require, rather than the sum of their individual requirements, which often means you can provision (and pay for) less performance. In the example, you would provision 50,000 IOPS. That is 50% fewer than the 100,000 IOPS needed to serve each workload individually, which reduces costs.
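The saving comes from provisioning for the peak of the combined load instead of the sum of each workload's own peak. The Python sketch below reproduces that arithmetic with made-up IOPS samples for two databases whose peaks don't overlap. The numbers are illustrative and were chosen to match the 100,000 vs. 50,000 IOPS example. They are not the customer's actual data.

```python
from typing import Dict, List

def sum_of_peaks(series: Dict[str, List[int]]) -> int:
    """IOPS needed if every workload is sized for its own peak (per-disk sizing)."""
    return sum(max(samples) for samples in series.values())

def peak_of_sum(series: Dict[str, List[int]]) -> int:
    """IOPS needed for a shared pool: the highest combined load in any time slot.

    Assumes every series covers the same time slots (zip stops at the shortest).
    """
    return max(sum(slot) for slot in zip(*series.values()))

# Hypothetical IOPS samples over the same four time slots (business hours first).
workloads = {
    "queries_db":   [50_000, 50_000, 5_000, 0],
    "reporting_db": [0, 0, 45_000, 50_000],
}

dedicated = sum_of_peaks(workloads)  # 100,000
pooled = peak_of_sum(workloads)      # 50,000
savings = 1 - pooled / dedicated     # 0.5, i.e. 50% fewer provisioned IOPS
```

If the peaks did overlap, `peak_of_sum` would approach `sum_of_peaks`. Pooling pays off only when workloads spike at different times.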

Start Elastic SAN today

Follow Azure’s getting-started instructions or consult the documentation to deploy an Elastic SAN. Azure Elastic SAN pricing has not changed for general availability. The pricing page has the latest prices.

Read more on Govindhtech.com

#CASBAN6: How to configure Azurite to use DefaultAzureCredential with Docker on macOS

I just blogged: #CASBAN6: How to configure Azurite to use DefaultAzureCredential with Docker on macOS #Azurite #Azure #AzureStorage #Docker #macOS #AzureSDK

Before we look at the promised facade for uploading files to Azure Blob Storage, I want to show you how to configure Azurite to use the Microsoft Identity framework with Docker on macOS for local debugging. With the Identity framework, our local debug environment and the functions rolled out to production will behave the same. If you have been following along, you should already have…

#Azure#AzureDev#Azurite#Blob#Blob Storage#container#credentials#debugging#DefaultAzureCredential#Docker#local#macOS#setup#storage

leverages Azure to boost migration speed and reduce the effects of throttling, enabling high-performance, heavy-volume migrations

(via Azure Storage: How to create Blob Storage and upload files)

[English] Azure Tip: Using the Azure CLI in Azure to provide a storage a...

Analyzing the Risks of Using Environment Variables for Serverless Management

Put simply, we can think of a serverless application as a piece of code that is executed in a specific cloud infrastructure. Its execution can be triggered in various ways, such as through an HTTP API endpoint or an event. From a security point of view, we can distinguish between the security of the code that is executed and the context in which that code runs. CSPs can't affect the code that is executed, since that is the user's responsibility, but they can influence the execution context.

We have previously discussed the risks of inadequate secrets management. Similar risks also apply to CSPs and their products. In this regard, we'd like to discuss an insecure practice used by many developers that has carried over to cloud-based systems.

Environment Variables

Based on our research, the main security risk lies in environment variables. They are used to store pre-authorization tokens for services connected to an execution context, which in this case is a serverless system. Azure, for instance, can protect secrets by storing them in encrypted form and transmitting them over secure channels. In the end, however, the secrets are still placed in the execution context as environment variables in plain text. If they are exposed, attackers could use them for arbitrary code execution that depends on the specific cloud architecture. They could also leak private corporate information and storage if the secrets grant access to other services.

From a security standpoint, this approach is fundamentally flawed. Environment variables are available to all processes within an execution context. Every running process therefore has access to your plaintext password, even if the program does not need it. From a developer's standpoint, it's clear why this is a common workaround. From a security perspective, however, we believe it is a major threat that opens the door to a wide range of attacks.
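The claim that environment variables are visible to every process in the execution context can be checked directly. A child process inherits its parent's environment unless the parent explicitly passes a different one. The Python sketch below sets a placeholder secret in a hypothetical `DEMO_SECRET` variable. It then shows that a separate child interpreter, which was never handed the value, can still read it.

```python
import os
import subprocess
import sys

def child_sees_secret(var_name: str) -> str:
    """Start an unrelated child interpreter and return what it reads from `var_name`.

    The child is never given the value explicitly; it only inherits the
    parent's environment (subprocess.run passes os.environ when env is None).
    """
    result = subprocess.run(
        [sys.executable, "-c", f"import os; print(os.environ.get({var_name!r}, ''))"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()

if __name__ == "__main__":
    # Hypothetical placeholder value, not a real connection string or key.
    os.environ["DEMO_SECRET"] = "AccountKey=not-a-real-key"
    print(child_sees_secret("DEMO_SECRET"))  # prints AccountKey=not-a-real-key
```

Passing an explicit, minimal `env=` mapping to `subprocess.run` limits what one particular child sees. It offers no protection if the process holding the environment is itself compromised.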

Risks in Environment Variables

The following paragraphs describe an attack scenario that could be carried out inside Microsoft's Azure Functions environment. If abused, this scenario could result in remote code execution (RCE), leaks of function code, and even the overwriting of function code.

The scenario assumes an issue in the application, or in the environment it runs in, that exposes its environment variables. New vulnerabilities keep emerging, and there are still cases where users fail to use serverless services in the "right" way.

If the Azure environment is breached, AzureWebJobsStorage is among the variables most susceptible to abuse. This variable is a connection string that gives access to the Azure Storage account the Functions runtime uses to store its data. It allows common file operations such as upload, delete, and move, among many other tasks.

This connection string can be used with Microsoft Azure Storage Explorer to connect to and modify the affected storage account.

Once connected, an attacker can delete the function and upload a brand new version of the serverless function.
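To see why leaking AzureWebJobsStorage is so damaging, it helps to look inside the value. An Azure Storage connection string is a semicolon-separated list of `key=value` settings. The common account-key form embeds the storage account key itself (`AccountKey=...`), which grants full access to the account. The Python sketch below parses such a string. The sample value is a made-up placeholder in that format, and `has_account_key` is a hypothetical helper.

```python
from typing import Dict

def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split an Azure Storage connection string into its key=value settings.

    Each segment is split on the first '=' only, because base64-encoded
    account keys usually end with '=' padding.
    """
    settings: Dict[str, str] = {}
    for segment in conn.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"segment without '=': {segment!r}")
        settings[key] = value
    return settings

def has_account_key(conn: str) -> bool:
    """True if the string carries a shared account key (full access to the account)."""
    return bool(parse_connection_string(conn).get("AccountKey"))

# Placeholder in the usual format; not a real account or key.
SAMPLE = ("DefaultEndpointsProtocol=https;AccountName=examplefuncstore;"
          "AccountKey=bm90LWEtcmVhbC1rZXk=;EndpointSuffix=core.windows.net")
```

Anyone who can read this one variable therefore holds the account name and key together, which is everything Storage Explorer needs to connect.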

Video 1. A proof-of-concept demonstration of leaking secrets stored in environment variables

What is the best approach? We strongly suggest that developers and teams refrain from using environment variables to store secrets in deployed applications.

A common argument is that secrets must eventually be decrypted into plaintext somewhere in memory. This is certainly true. Best practice, however, is to keep the secret in memory for as short a time as possible and to securely erase that memory as soon as the data is no longer needed, so the memory isn't susceptible to leaks. This practice is common across a wide range of operating system (OS) applications. So why isn't DevOps using this technique?
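The "decrypt late, erase early" practice can be sketched in Python as a context manager. It keeps a secret in a mutable buffer only for the duration of a `with` block and overwrites the buffer afterward. The `fetch` callable stands in for a hypothetical vault lookup. In Python this is best-effort only. The immutable `bytes` object returned by `fetch`, and any copies made while using the secret, cannot be wiped and stay in memory until they are garbage-collected. Languages with explicit memory control can apply the technique more reliably.

```python
import contextlib
from typing import Callable, Iterator

@contextlib.contextmanager
def short_lived_secret(fetch: Callable[[], bytes]) -> Iterator[bytearray]:
    """Hold a secret in a mutable buffer only while the `with` block runs, then wipe it.

    `fetch` is a hypothetical callable (for example, a vault lookup) returning bytes.
    Best-effort: only this bytearray copy is overwritten.
    """
    buf = bytearray(fetch())
    try:
        yield buf
    finally:
        # Overwrite the buffer in place (same length) so this copy no longer holds the secret.
        buf[:] = bytes(len(buf))
```

Usage looks like `with short_lived_secret(get_secret_from_vault) as key: connect(key)`, where both `get_secret_from_vault` and `connect` are placeholders for your own code.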

It is crucial to keep in mind that even if a Key Vault reference is used to retrieve the connection string required by serverless functions, the resolved value is still exposed as an environment variable.

DIGITAL DEVICES LTD

Long before Apple conditioned the average consumer to replace their handheld gadgets every two years, Digital Devices Ltd believed in Moore's law: that computing power doubles roughly every two years. With a heritage dating back to the days of the IBM Personal Computer XT, our founders have lived through the technology advances of the 1990s and 2000s and realized that technology is instrumental to any business's success. In such a fast-paced industry, an IT department can never be fully equipped with the tools and training needed to maintain its competitive edge. That is why Digital Devices has assembled a team of engineers and vendor partners to keep up with the latest industry trends and advise clients on the solutions and options available to them. Our offerings range from close relationships with networking and storage vendors such as Juniper, SolarWinds, and VMware to high-performance computing from HPE and AWS cloud solutions. Digital Devices Limited offers the latest technology solutions to fit the ever-growing needs of the industry.

Our experts can guide you through the specifications and build cost efficiencies while providing high-end, state-of-the-art customer service. We research and analyze the market, its current demand, and its supply chain. We offer a wide range of bulk supplies of products such as AKG C414 XLII, Shireen Cables DC-1021, Shireen Cables DC-2021, Dell p2419h monitor, Dell U2419H, Dell P2719H, Dell P2219H, Lenovo 62A9GAT1UK, LG 65UH5F-H, and complete IT infrastructure products and services.

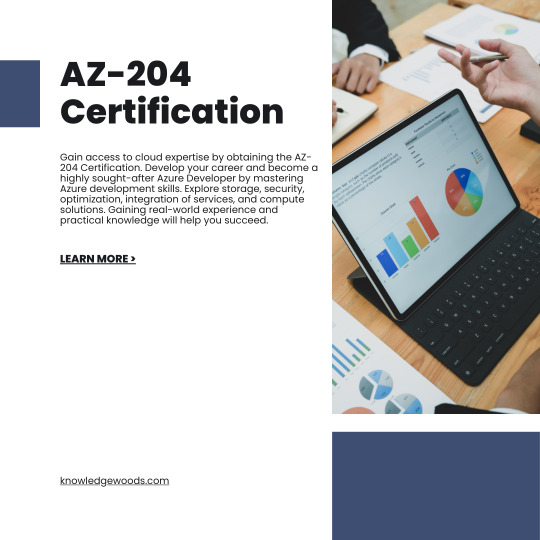

Bridging the Cloud Divide: Mastering AZ-204 Certification for Azure Developers

Microsoft Azure is a leader in the rapidly evolving field of cloud computing, providing an extensive range of services to companies across the globe. Many developers want to validate their knowledge and proficiency in building cloud solutions on Azure. For them, the Azure Developer Associate certification (exam AZ-204) is the key to new opportunities and career growth.

Understanding AZ-204 Certification

AZ-204 is intended for developers with experience planning, building, testing, and maintaining cloud solutions on Microsoft Azure. Whether you're new to cloud computing or an experienced developer, preparing for AZ-204 gives you the know-how to succeed in the rapidly changing field of cloud development.

Why Pursue AZ-204 Certification?

Validation of Skills: Having the AZ-204 certification gives you a competitive advantage in the job market by confirming your proficiency in using Azure to develop cloud solutions.

Career Advancement: The certification can open doors to new roles, responsibilities, and opportunities in cloud development.

Hands-On Experience: The certification test evaluates your ability to develop and implement Azure solutions practically, making sure you're ready for situations that may arise in the real world.

Key Topics Covered in AZ-204

Developing Azure Compute Solutions: Learn how to build and deploy solutions using Azure Functions for serverless workloads, Azure Kubernetes Service (AKS) for containerized applications, and virtual machines.

Implementing Azure Security: Recognize how to incorporate security features, such as encryption, authentication, and authorization methods, into your Azure solutions.

Integrating Azure Storage: Discover how to use Azure's storage services to store, retrieve, and manage data in a secure manner.

Connecting to and Consuming Azure Services: Learn how to integrate your apps with Azure's many services, including Azure Event Grid, Azure Logic Apps, and Azure App Service.

Monitoring, Troubleshooting, and Optimizing Azure Solutions: Learn how to keep an eye on and optimize the performance of your Azure solutions to make sure they fulfill your business's needs.

Preparation Tips for AZ-204 Certification

Study Official Documentation: Examine Microsoft's official documentation and Azure learning paths to become acquainted with Azure services and features.

Hands-On Practice: Create a sandbox environment and work on developing solutions for Azure. Try out various services and situations to obtain real-world experience.

Online Courses and Tutorials: Take online tutorials or courses designed specifically to help you prepare for the AZ-204 exam. Platforms such as Pluralsight and Microsoft Learn offer a wide range of training resources.

Join Community Forums: Participate in forums, discussion groups, and social media to engage with the Azure community. Share ideas, ask questions, and learn from the experiences of others.

Take Practice Tests: You can assess your preparedness and knowledge by taking practice tests. This will assist you in determining which areas require more attention.

Conclusion

Earning the Azure Developer Certification (AZ-204) is a significant milestone in your cloud development career. Beyond validating your expertise, it opens doors to exciting opportunities in the rapidly growing field of cloud computing. With the right preparation and commitment, you can take the exam with confidence and build a rewarding career as an Azure developer.

#AzureDeveloper #AZ204Certification #CloudDevelopment #MicrosoftAzure #CloudComputing #AzureServices #DeveloperCertification #CloudSolutions #AzureSecurity #AzureStorage


Text

Using Bicep to Deploy Multi-Region Azure SQL and Storage Accounts for Auditing

Learn how to use Bicep (infrastructure as code) to deploy multi-region Azure SQL with auditing logs sent to Azure Storage. #azure #azurebicep #azuresql #failovergroups #azurestorage #identity


Text

Azure Storage: A Year in Review – 2023

Azure Storage: 2023 – A Year of Growth, Innovation, and Transformation

The new year is a natural time for reflection and planning. Microsoft’s top priorities are improving Azure and its ecosystem, enabling groundbreaking AI solutions, and easing cloud migrations. Azure offers purpose-built storage solutions to meet your workload needs.

2023 was a big year for storage and data services, with customers focused on AI. New workloads and access patterns expanded Azure customers’ data estates. Notable Azure Storage figures:

The storage platform reads and writes over 100 exabytes of data and processes over 1 quadrillion transactions a month. Both numbers grew significantly over the previous year.

Premium SSD v2 disks, Azure’s new general-purpose block storage for SQL, NoSQL databases, and SAP, grew in capacity 100-fold.

Premium Files, Azure’s file offering for Azure Virtual Desktop (AVD) and SAP, saw over 100% year-over-year growth in transactions.
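To put the storage platform's monthly totals in perspective, the arithmetic below converts them into average per-second rates. It is a back-of-the-envelope sketch that assumes a 30-day month and decimal units (1 EB = 10^18 bytes), neither of which the source specifies. Because the source says "over", the results are lower bounds on the average, and peak rates will be higher.

```python
# Back-of-the-envelope conversion of monthly totals into average per-second rates.
# Assumptions (not from the source): a 30-day month and decimal units (1 EB = 10**18 bytes).

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds in a 30-day month


def per_second(monthly_total: float, seconds_per_month: int = SECONDS_PER_MONTH) -> float:
    """Average rate per second for a quantity accumulated over one month."""
    return monthly_total / seconds_per_month


transactions_per_month = 10**15  # "over 1 quadrillion transactions a month"
bytes_per_month = 100 * 10**18   # "over 100 exabytes" read and written

tps = per_second(transactions_per_month)
bytes_per_sec = per_second(bytes_per_month)

print(f"{tps:,.0f} transactions per second")          # 385,802,469 transactions per second
print(f"{bytes_per_sec / 10**12:,.1f} TB per second")  # 38.6 TB per second
```

On average, the platform therefore handles roughly 386 million transactions and about 38.6 TB of data every second.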

In 2023, Microsoft advanced and invested in five strategic areas to match customer workload patterns, cloud computing trends, and the evolution of AI.

New workload-focused innovations

Azure now offers end-to-end solutions with unique storage capabilities that help customers bring new workloads to Azure without retrofitting them.

Customers’ workloads are moving from “stateless” to “stateful” as they modernize applications with Kubernetes. Azure Container Storage is optimized for stateful workloads, with tight container integration, data management, lifecycle management, price-performance, and scale. It is the first platform-managed, container-native storage service in the public cloud, and it enables Kubernetes-native management of persistent volumes and their backend storage.

Azure Elastic SAN: The Game Changer for Block Storage in the Cloud

Microsoft’s fully managed Azure Elastic SAN simplifies deploying, scaling, managing, and configuring a SAN in the cloud. It addresses the service management issues customers face when migrating large SAN appliances to the cloud, and Microsoft is the first to offer a fully managed cloud SAN with cloud scale and high availability. Elastic SAN uses a SAN-like hierarchy to provision top-level resources, dynamically share resources across workloads, and manage security policies at the workload level. Customers can also use Elastic SAN to store new data-intensive workloads in Azure VMware Solution. Elastic SAN and Azure Container Storage, both in public preview, simplify service management for cloud-native storage scenarios, and general availability is planned for the coming months.

AI is leading a new wave of data-driven innovation, and Azure Blob Storage powers this data explosion with the high performance and bandwidth that AI training requires. Since 2019, AI and machine learning (ML), IoT and streaming analytics, and interactive workloads have benefited from Azure Premium Block Blob’s low latency and competitive pricing. In July, Microsoft released Azure Managed Lustre, a managed distributed parallel file system for HPC and AI training.

Microsoft Fabric’s storage layer, OneLake, builds on the scale, performance, and rich capabilities of Azure Data Lake Storage (ADLS). ADLS has transformed analytics with differentiated storage features such as native file system capabilities that enable atomic metadata operations, which boost performance, especially when combined with Azure Premium Block Blob. Multi-protocol access lets customers work with the same data over REST, SFTP, and NFS v3, eliminating data silos, costly data movement, and duplication.
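A directory rename shows most clearly why atomic metadata operations help performance. The sketch below is a deliberately simplified toy model and not how ADLS is implemented. In a flat namespace, a "directory" is only a name prefix, so renaming it means copying and deleting every blob under that prefix. In a hierarchical namespace, the same rename updates one directory entry. The class names and the operation counter are illustrative inventions.

```python
from dataclasses import dataclass, field


@dataclass
class FlatNamespace:
    """Toy flat namespace: 'directories' exist only as prefixes of blob names."""
    blobs: dict = field(default_factory=dict)
    ops: int = 0  # counts storage operations performed

    def rename_dir(self, old: str, new: str) -> None:
        # Every blob under the prefix is copied to its new name, then the original is deleted.
        for name in [n for n in self.blobs if n.startswith(old + "/")]:
            self.blobs[new + name[len(old):]] = self.blobs.pop(name)
            self.ops += 2  # one copy + one delete per blob


@dataclass
class HierarchicalNamespace:
    """Toy hierarchical namespace: directories are real nodes holding their children."""
    dirs: dict = field(default_factory=dict)
    ops: int = 0

    def rename_dir(self, old: str, new: str) -> None:
        # Relinking the directory node is a single metadata update, whatever its size.
        self.dirs[new] = self.dirs.pop(old)
        self.ops += 1


sample = {f"file{i}.parquet": b"" for i in range(1000)}
flat = FlatNamespace(blobs={f"raw/{name}": data for name, data in sample.items()})
hns = HierarchicalNamespace(dirs={"raw": dict(sample)})

flat.rename_dir("raw", "curated")
hns.rename_dir("raw", "curated")
print(flat.ops, hns.ops)  # 2000 1
```

The key difference is how the cost scales. The flat rename grows with the number of blobs and is not atomic, because a failure partway through leaves the data split across two prefixes. The hierarchical rename is a single operation.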

Mission-critical workload optimizations

Azure adds differentiated capabilities to help enterprises optimize mission-critical workloads hosted on Azure.

Since Premium SSD v2 became generally available, Microsoft has been actively onboarding mission-critical data solutions. GigaOm reported better performance and lower TCO for SQL Server 2019 on Azure VMs with Premium SSD v2, and PostgreSQL and other database workloads see similar benefits. In the coming year, Premium SSD v2 capabilities will be improved and regional coverage expanded.

AI model training and inference are among the largest mission-critical workloads powered by Azure Blob Storage. Cloud-native companies use scale-out platforms to store exabytes of consumer-generated data, and major retailers store trillions of user activities to power recommendation engines and inventory systems. The Cold storage tier, introduced in 2023, makes retaining infrequently accessed data more cost-effective.
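A common way to use the Cold tier is a blob lifecycle management policy that moves data to cooler tiers as it ages. The sketch below builds such a policy as a Python dictionary and prints it as JSON. It assumes the lifecycle management rule schema (`tierToCool`, `tierToCold`, and `delete` actions keyed on `daysAfterModificationGreaterThan`). The rule name, the `user-activity/` container prefix, and the 30/90/365-day thresholds are hypothetical, so check the field names against the current Azure documentation before applying a policy.

```python
import json


def build_lifecycle_policy(prefix: str, cool_after: int, cold_after: int, delete_after: int) -> dict:
    """Build a policy that tiers block blobs Hot -> Cool -> Cold and then deletes them.

    Thresholds are days since last modification and must strictly increase.
    """
    if not 0 <= cool_after < cold_after < delete_after:
        raise ValueError("expected 0 <= cool_after < cold_after < delete_after")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "age-out-activity-data",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                            "tierToCold": {"daysAfterModificationGreaterThan": cold_after},
                            "delete": {"daysAfterModificationGreaterThan": delete_after},
                        }
                    },
                },
            }
        ]
    }


# Hypothetical container prefix and thresholds, for illustration only.
policy = build_lifecycle_policy("user-activity/", cool_after=30, cold_after=90, delete_after=365)
print(json.dumps(policy, indent=2))
```

The printed JSON could then be applied with, for example, the Azure CLI's `az storage account management-policy create` command. Keeping the thresholds as parameters makes it easy to tune them per data set.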

Enterprises are modernizing Windows Server workloads in the cloud with enhanced Azure Files and Azure File Sync. With Microsoft Entra ID (formerly Azure Active Directory) support for the Azure Files REST API, now in preview, modern applications can use identity-based authentication and authorization to access SMB file shares. Azure Files supports Kerberos and REST authentication with on-premises AD and Microsoft Entra ID. Microsoft Entra ID Kerberos authentication, unique to Azure, enables seamless migration from on-premises Windows servers to Azure, preserving data and permissions and eliminating complex domain-join setups.

Azure NetApp Files (ANF) meets the performance and data protection needs of SAP and Oracle migrations. In strategic partnership with NetApp, Azure introduced the public preview of ANF large volumes of up to 500 TiB for workloads that need more capacity under a single namespace, such as HPC in Electronic Design Automation and oil and gas applications. It also introduced Cool Access to improve TCO for infrequently accessed data.

Scalable data migration is essential for moving workloads to the cloud, and Azure offers complete migration solutions. Azure Storage Mover, a fully managed data migration service, improves the migration of large data sets. Azure File Sync keeps Windows Servers and Azure Files synchronized for hybrid scenarios. AzCopy lets script-based migration jobs finish quickly, while Azure Data Box enables offline data transfer.

Growing partner ecosystem

Partners who extend the platform with innovative solutions help move customer workloads to Azure. After many successful strategic partnerships, Microsoft is pleased to see partners investing in Azure-exclusive solutions.

The next version of Azure Native Qumulo Scalable File Service, exclusive to Azure, leverages the scale and cost efficiency of Azure Blob Storage. The service supports exabytes of data under a single namespace, surpassing other public cloud file solutions, and helps enterprises migrate high-scale NAS data to Azure.

Commvault introduced an Azure-only cyber resilience solution. It helps users predict threats, recover cleanly, and respond faster. It works seamlessly with Azure OpenAI Service.

Microsoft has worked closely with Pure Storage since Pure Cloud Block Store launched on Azure in 2021. Pure Storage was an early adopter of Ultra Disk and Premium SSD v2 storage and uses Azure Storage’s shared disks capability. The two companies now also collaborate on containers with Portworx and on Azure VMware Solution.

Azure also offers storage migration solutions with Atempo, Data Dynamics, Komprise, and Cirrus Data, as well as with storage and backup partners, to migrate petabytes of NAS and SAN data to Azure at no cost.

Industry contributions

Microsoft works with industry leaders in SNIA and the Open Compute Project (OCP) to share insights, influence standards, and develop innovative solutions and best practices.

At the 2023 Storage Developer Conference, Microsoft presented “Massively Scalable Storage for Stateful Containers on Azure,” highlighting the synergy between Azure Container Storage and Elastic SAN. Together they provide unified volume management across diverse storage backends and a highly available block storage solution that scales to millions of IOPS with fast pod attach and detach.

At the Flash Memory Summit (FMS), Microsoft outlined the future of flash as a technology to displace HDDs, in line with Azure Storage’s long-term plans.

Microsoft aims to reach net-zero carbon emissions within the next decade. The OCP Sustainability Project provides an open framework for the datacenter industry to adopt reusability and circularity best practices, and Microsoft leads its steering committee to advance progress.

Unmatched quality commitment

Azure prioritizes a solid storage foundation that can adapt to new workloads such as AI and ML. This commitment ensures the reliability, durability, scalability, and performance of its storage solutions while lowering TCO for Azure customers.

Microsoft invests heavily in infrastructure, hardware, software, and processes to ensure data durability. Azure zone-redundant storage (ZRS) is designed to provide data durability of at least 99.9999999999% (12 nines) per year, the highest among major cloud service providers. Azure is the only major cloud provider with a 0% annual failure rate (AFR) for block storage since launch.
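An annual durability figure is easier to reason about as an expected number of lost objects. The sketch below uses a simplified model that is assumed here and not taken from the source: each object has a (1 - durability) chance of being lost in a year. With 12 nines, that chance is 10^-12 per object. Even 10 million objects would therefore expect about one loss every 100,000 years. The object count is an arbitrary example.

```python
from fractions import Fraction


def annual_loss_probability(nines: int) -> Fraction:
    """Per-object annual loss probability for a durability written as `nines` nines.

    12 nines = 99.9999999999% durability -> 1 - 0.999999999999 = 10**-12.
    Fraction keeps the arithmetic exact instead of accumulating float rounding.
    """
    return Fraction(1, 10**nines)


def expected_losses_per_year(num_objects: int, nines: int) -> Fraction:
    # Expected losses scale linearly with the object count (linearity of expectation).
    return num_objects * annual_loss_probability(nines)


def years_per_expected_loss(num_objects: int, nines: int) -> Fraction:
    return 1 / expected_losses_per_year(num_objects, nines)


objects = 10_000_000  # arbitrary example object count
print(expected_losses_per_year(objects, 12))  # 1/100000
print(years_per_expected_loss(objects, 12))   # 100000
```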

Azure’s ZRS offering makes it easy to build highly available solutions across three availability zones at low cost. A cross-zone AKS cluster with persistent volumes hosted on ZRS disks keeps data durable and available during zonal outages. ZRS also provides high resiliency out of the box for clustered applications such as SQL Server failover cluster instances (FCI) using Windows Server Failover Clustering (WSFC).

Azure Storage integrated with Azure Copilot to improve customer support, helping support engineers troubleshoot faster and give higher-quality responses, which significantly increased customer satisfaction.

Read more on Govindhtech.com

#AzureStorage #Microsoft #SQL #VirtualDesktop #Kubernetes #AzureElasticSAN #SAN #AI #ML #technews #technology #govindhtech


Text

How to take Azure Kubernetes Backup using Velero

#kubernetes #velero #k8s #k8sbackup #terraform #aks #azure #helm #azurestorage

Another important topic to discuss is backup. Regardless of the system or environment, backups are mandatory, and Kubernetes, managed or not, is no exception. Even if you use Infrastructure as Code and all deployments are automated, backing up AKS clusters brings additional benefits. You can check some best practices from Microsoft. Why…


Video


In this short session, Frank creates a simple #Blazor app that reads images from #AzureStorage. The twist is that the .NET SDK runs inside a Docker container on a #Linux machine. And things go great!

☁️Subscribe: http://bit.ly/2jx3uKX

Segments
--------
- 0:01:00 - Bonjour, Hi!
- 0:08:00 - Connecting VS to Docker
- 0:22:00 - Demo work
- 0:26:00 - Adding some UI
- 1:21:00 - Simple demo with images is working

USEFUL LINKS:
-----------------------
🔗 Get Started with Azure with a Free Subscription: http://bit.ly/AzFree

FOLLOW ME ON
---------------
🔗 Twitter: https://twitter.com/fboucheros
🔗 Linkedin: https://ift.tt/2JXjvFI
🔗 Facebook: https://ift.tt/2vmSUyB
🔗 Twitch: https://ift.tt/2X64L1n

BLOGS
------------
🔗 Frankys Notes (EN): https://ift.tt/1tEPBrt
🔗 Cloud en Francais (FR): https://ift.tt/2JXqBKv

GEARS
----------
- Camtasia 2018
- Fujifilm X-T2
- Blue Snowball
- Cloud 5 minutes T-Shirt: store.cloud5mins.com
- GitKraken: http://bit.ly/fb-gitkraken ☁️


Video


Azure Tip: Deploying a Storage Account in Azure with the Azure CLI


Text

Extract a zip file stored as Azure Blob with this simple method

#AzureStorage #ZipFiles

Have you ever had a scenario where zip files land in Azure Blob Storage and you are asked to implement a listener that processes the individual files inside each zip? If you already work with Azure PaaS services, I don’t need to explain how to implement a listener or a blob trigger using an Azure Function App. What I want to focus on in this post is extracting the zip file contents into an…
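The post is cut off before it shows its own method, so what follows is only a minimal sketch of one way to handle the extraction step in Python. It is not the author's approach. It assumes the zip blob's contents have already been downloaded into memory, for example with the azure-storage-blob SDK's `BlobClient.download_blob().readall()`. It uses only the standard library's `zipfile` and `io` modules, and `extract_zip_bytes` is a hypothetical helper name.

```python
import io
import zipfile


def extract_zip_bytes(zip_bytes: bytes) -> dict:
    """Return {member_name: content} for every file entry in an in-memory zip archive."""
    contents = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():  # skip directory entries; only files become blobs
                continue
            contents[info.filename] = archive.read(info)
    return contents


# Build a small zip in memory to stand in for the downloaded blob.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr("reports/", "")  # explicit directory entry
    zf.writestr("reports/a.csv", "id,value\n1,42\n")
    zf.writestr("readme.txt", "hello")

files = extract_zip_bytes(buffer.getvalue())
print(sorted(files))  # ['readme.txt', 'reports/a.csv']
```

Each returned name and content pair could then be written to another container, for example with `ContainerClient.upload_blob(name, data, overwrite=True)`. Reading the whole archive into memory keeps the code simple, but it only suits modest zip sizes. Very large archives are better spooled to a temporary file first.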
