#blockingqueue

Explore tagged Tumblr posts

Text

What does the JDBC ResultSet interface? . . . . For more questions about Java https://bit.ly/465SkSw Check the above link

#resultset#rowset#drivermanager#preparedstatement#execute#executequery#executeupdate#array#arraylist#jdbc#hashcode#collection#comparator#comparable#blockingqueue#hashSet#treeSet#set#map#hashMap#computersciencemajor#javatpoint

0 notes

Text

hi

To ensure thread safety in Java, you need to manage access to shared resources so that multiple threads don’t cause race conditions, inconsistent state, or unexpected behavior. Here are the key ways to achieve thread safety:

1. Use synchronized

Ensures only one thread can access a method or block at a time.

java

CopyEdit

public synchronized void increment() { count++; }

Or use a synchronized block:

java

CopyEdit

public void increment() { synchronized(this) { count++; } }

2. Use volatile for Visibility

Ensures changes to a variable are visible to all threads.

java

CopyEdit

private volatile boolean running = true;

3. Use Atomic Classes

Java provides thread-safe classes like AtomicInteger, AtomicBoolean, etc.

java

CopyEdit

AtomicInteger count = new AtomicInteger(); count.incrementAndGet();

4. Use java.util.concurrent utilities

ReentrantLock for fine-grained locking

Concurrent collections like ConcurrentHashMap, CopyOnWriteArrayList

java

CopyEdit

ReentrantLock lock = new ReentrantLock(); lock.lock(); try { // critical section } finally { lock.unlock(); }

5. Use Immutable Objects

Immutable objects are inherently thread-safe because their state can't change after creation.

java

CopyEdit

public final class MyData { private final int value; public MyData(int value) { this.value = value; } public int getValue() { return value; } }

6. Thread-safe design with Executor Services

Avoid manually creating threads; use Executors to manage thread pools.

java

CopyEdit

ExecutorService executor = Executors.newFixedThreadPool(10); executor.submit(() -> { // task logic });

If you have a specific use case or code snippet in mind, I can help tailor the thread-safety solution for that.

4o

You said:

Specific example

ChatGPT said:

Sure! Here's a specific example of ensuring thread safety when multiple threads increment a shared counter:

Problem (Not Thread-Safe):

java

CopyEdit

public class Counter { private int count = 0; public void increment() { count++; // Not thread-safe! } public int getCount() { return count; } }

If 100 threads call increment() concurrently, the result might not be 100 due to race conditions.

Solution 1: Using synchronized

java

CopyEdit

public class Counter { private int count = 0; public synchronized void increment() { count++; } public synchronized int getCount() { return count; } }

Solution 2: Using AtomicInteger

java

CopyEdit

import java.util.concurrent.atomic.AtomicInteger; public class Counter { private AtomicInteger count = new AtomicInteger(0); public void increment() { count.incrementAndGet(); // Thread-safe } public int getCount() { return count.get(); } }

Test with Threads:

java

CopyEdit

public class Main { public static void main(String[] args) throws InterruptedException { Counter counter = new Counter(); Runnable task = () -> { for (int i = 0; i < 1000; i++) { counter.increment(); } }; Thread t1 = new Thread(task); Thread t2 = new Thread(task); t1.start(); t2.start(); t1.join(); t2.join(); System.out.println("Final count: " + counter.getCount()); // Should be 2000 } }

Let me know if you want to use ReentrantLock, ConcurrentHashMap, or any specific multithreaded scenario like producer-consumer!

4o

You said:

other things to ensure

Thread safety

ChatGPT said:

To ensure complete thread safety in Java applications, especially in multi-threaded environments, you need to think beyond just synchronized or AtomicInteger. Here's a comprehensive checklist:

1. Use Proper Synchronization

synchronized methods or blocks

ReentrantLock for advanced control (fairness, interruptibility)

2. Use Atomic Variables for Simple Operations

AtomicInteger, AtomicLong, AtomicReference, etc.

Safe for concurrent reads/writes without locks

3. Prefer Immutable Objects

Immutable classes (e.g., String, Integer) are inherently thread-safe

Use final fields and don’t expose internal state

4. Use Thread-safe Collections

Use collections from java.util.concurrent:

ConcurrentHashMap

CopyOnWriteArrayList

BlockingQueue (ArrayBlockingQueue, LinkedBlockingQueue)

Avoid:

HashMap, ArrayList in concurrent settings unless synchronized externally

5. Avoid Sharing Mutable State

Each thread should work on its own copy of data if possible

Use ThreadLocal for per-thread variables:

java

CopyEdit

ThreadLocal<DateFormat> formatter = ThreadLocal.withInitial(() -> new SimpleDateFormat("yyyy-MM-dd"));

6. Use High-Level Concurrency Utilities

ExecutorService instead of manually starting threads

CountDownLatch, Semaphore, CyclicBarrier, Phaser for coordination

Future and CompletableFuture for async computation results

7. Minimize Lock Contention

Keep critical sections short

Avoid nested locks or lock ordering issues

Prefer fine-grained locking or lock splitting

8. Ensure Visibility with volatile or synchronized

volatile ensures visibility but not atomicity

Use it for flags, like a boolean running = true;

9. Avoid double-checked locking without volatile

Wrong:

java

CopyEdit

if (instance == null) { instance = new MyClass(); // unsafe }

Correct:

java

CopyEdit

private static volatile MyClass instance; public static MyClass getInstance() { if (instance == null) { synchronized(MyClass.class) { if (instance == null) { instance = new MyClass(); } } } return instance; }

10. Test with Threading Tools

Use tools like FindBugs, SpotBugs, ThreadSanitizer, or jconsole to detect concurrency issues

Write stress tests with many threads

If you want, I can walk you through applying these in a real-world example like caching, banking transactions, or a producer-consumer system.

4o

You said:

Internally

ChatGPT said:

Ah, you're asking what happens internally to ensure thread safety in Java?

Here’s a breakdown of the internal mechanisms Java uses to enforce thread safety behind the scenes:

1. Monitor Locks and Intrinsic Locks

When you use synchronized, Java uses a monitor lock (or intrinsic lock) associated with an object.

At the JVM level, this is implemented using monitorenter and monitorexit bytecode instructions.

java

CopyEdit

synchronized (obj) { // Internally translates to monitorenter/monitorexit }

Each object has an internal monitor associated with it.

2. Java Memory Model (JMM)

Controls visibility and ordering of variables across threads.

synchronized, volatile, and final affect happens-before relationships.

Example: synchronized blocks establish a happens-before relationship between threads that enter/exit the monitor.

3. Volatile Variable Internals

volatile tells the JVM to:

Not cache the variable locally in registers or CPU caches.

Always read/write to main memory.

Internally, this uses memory barriers (on CPU and compiler level) to avoid reordering instructions.

4. Atomic Classes and CAS (Compare-And-Swap)

Classes like AtomicInteger use low-level CAS operations for lock-free thread-safe updates.

Internally relies on Unsafe.compareAndSwapInt or VarHandle.compareAndSet in newer Java versions.

Implemented using CPU instructions like LOCK CMPXCHG on x86.

5. Concurrent Collections Internals

ConcurrentHashMap: Internally uses bucket-level locks or lock striping (in older Java), or CAS + Node-level locks in newer Java.

CopyOnWriteArrayList: Uses a new copy of the array on every write, ensuring readers are unaffected by writes.

6. ThreadLocal Internals

Each thread maintains a ThreadLocalMap, which holds a value specific to that thread.

Internally avoids sharing state, hence ensuring thread safety by isolation.

7. ReentrantLock & AbstractQueuedSynchronizer (AQS)

ReentrantLock and other locks use AQS, a powerful framework that maintains a FIFO queue of waiting threads.

Lock acquisition is controlled using atomic state transitions (CAS) and park/unpark for thread suspension.

8. Thread Scheduling and Safepoints

JVM coordinates thread state and pauses threads at safepoints for garbage collection and lock management.

Ensures consistency when modifying object headers for locks (biased, lightweight, or heavyweight).

Would you like to dive into how one of these (like AQS or CAS) works line by line in Java source code?

4o

You said:

Senaphore

ChatGPT said:

Great! Let's break down Semaphore in Java — what it is, how it works internally, and where you'd use it.

What is a Semaphore?

A Semaphore is a concurrency utility in Java that controls access to a shared resource using a set number of permits.

Think of it like a parking lot with limited spots:

Each thread needs a "permit" (parking spot) to proceed.

If no permits are available, the thread waits.

When done, it releases the permit.

Basic Usage Example:

java

CopyEdit

import java.util.concurrent.Semaphore; public class ParkingLot { private final Semaphore semaphore = new Semaphore(3); // 3 parking spots public void parkCar(String carName) { try { System.out.println(carName + " waiting for parking spot..."); semaphore.acquire(); // Try to get a permit System.out.println(carName + " parked."); Thread.sleep(2000); // simulate time spent } catch (InterruptedException e) { Thread.currentThread().interrupt(); } finally { System.out.println(carName + " leaving..."); semaphore.release(); // Give back the permit } } }

How to Use It:

acquire() — blocks until a permit is available.

release() — returns a permit.

tryAcquire() — tries to get a permit without blocking.

Real-world use cases:

Limiting concurrent database connections.

Controlling access to a pool of threads or sockets.

Batching concurrent requests in a web server.

Internals (How Semaphore Works)

Semaphore is built on AbstractQueuedSynchronizer (AQS):

It maintains an internal counter of available permits.

acquire() uses CAS (Compare-And-Swap) to atomically decrement the permit count.

If permits are not available, the thread is queued in a FIFO wait queue.

When release() is called:

The count is incremented.

Waiting threads are unblocked using LockSupport.unpark().

Types:

Fair Semaphore (new Semaphore(3, true)): FIFO — threads acquire in order.

Non-fair Semaphore (new Semaphore(3)): May allow barging; higher throughput but no guarantee of order.

Visualization:

rust

CopyEdit

Permits: [3] Thread A -> acquire() -> Permits = 2 Thread B -> acquire() -> Permits = 1 Thread C -> acquire() -> Permits = 0 Thread D -> acquire() -> BLOCKED (queued) Thread B -> release() -> Permits = 1 Thread D -> UNBLOCKED

Want a real example like limiting access to an API with max 5 threads, or want to see how Semaphore compares to ReentrantLock or CountDownLatch?

4o

You said:

Kafka partition

ChatGPT said:

In Apache Kafka, a partition is a fundamental concept used to achieve scalability and parallelism.

What is a Partition?

A partition is a log (an ordered, immutable sequence of messages) within a Kafka topic.

Each topic is divided into one or more partitions.

Each message within a partition is assigned a unique offset (an incremental ID).

A partition is read and written sequentially.

Only one consumer in a group can read from a given partition at a time, ensuring order.

Why Use Partitions?

Parallelism — Multiple consumers can read from different partitions in parallel.

Scalability — Data is split across brokers, balancing the load.

Ordering — Kafka guarantees message order within a partition (not across partitions).

Example:

Suppose topic payments has 3 partitions:

makefile

CopyEdit

Topic: payments Partitions: P0 | P1 | P2 Messages: P0 → m0, m1, m2 P1 → m3, m4 P2 → m5, m6

With 3 consumers in the same group:

Each gets one partition.

All messages in a partition go to the same consumer.

How Kafka Decides Partition for a Message?

Kafka uses a partitioner when producing messages:

If a key is provided:

partition = hash(key) % number_of_partitions

If no key is provided:

Kafka uses round-robin across available partitions.

So if you're sending payments with key = customerId, all payments from that customer will go to the same partition — preserving order for that customer.

Producer Example:

java

CopyEdit

ProducerRecord<String, String> record = new ProducerRecord<>("payments", "customer123", "payment:100"); producer.send(record);

Consumer Group Example:

If a topic has 4 partitions and 2 consumers in the same group:

Consumer A gets P0, P1

Consumer B gets P2, P3

If a third consumer joins, Kafka rebalances:

Now: A → P0, B → P1, C → P2 and P3, for example.

Key Properties of Partitions:

Partition count is set when topic is created (can increase, not decrease).

Partition assignment affects consumer parallelism and data shuffling.

Want to go deeper?

I can explain:

How partition rebalancing works

Best practices for choosing partition count

How partitioning affects throughput, latency, and exactly-once semantics.

Let me know!

You said:

Consumer get partition poll

ChatGPT said:

You're asking how a Kafka consumer gets messages from a partition using poll() and how it knows which partitions it owns. Let's break it down step-by-step:

1. How Does a Kafka Consumer Get Assigned Partitions?

Kafka consumers are part of a consumer group.

Kafka automatically assigns partitions to consumers in the same group via a rebalance.

This is handled by the Kafka coordinator on the broker side.

The assigned partitions can be fetched programmatically with:

java

CopyEdit

consumer.assignment(); // returns Set<TopicPartition>

2. Polling Messages from Assigned Partitions

After assignment, you use:

java

CopyEdit

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));

poll() fetches data only from the partitions assigned to that consumer.

It pulls batches of records from each partition.

Internally, Kafka uses the fetcher thread to request data from brokers that host those partitions.

3. Full Example:

java

CopyEdit

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props); consumer.subscribe(Collections.singleton("payments")); while (true) { ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100)); for (TopicPartition partition : records.partitions()) { List<ConsumerRecord<String, String>> partitionRecords = records.records(partition); for (ConsumerRecord<String, String> record : partitionRecords) { System.out.printf("Partition: %s Offset: %d Value: %s%n", partition.partition(), record.offset(), record.value()); } } }

4. How Internally poll() Works:

Poll does:

Heartbeat to the broker (to avoid being considered dead).

Fetch messages from assigned partitions.

Trigger rebalance if necessary (e.g., new consumer joins).

It blocks up to the duration specified or until messages arrive.

5. Control Assignment Manually (Optional)

Instead of auto-subscribe, you can manually assign partitions:

java

CopyEdit

TopicPartition tp = new TopicPartition("payments", 0); consumer.assign(Collections.singleton(tp));

This bypasses the rebalance mechanism, giving full control.

Summary

StepMethodJoin group & subscribeconsumer.subscribe(...)Get assigned partitionsconsumer.assignment()Fetch messagesconsumer.poll(...)Get messages per partitionrecords.partitions() + records.records(partition)

Want to see how to commit offsets, seek to a specific offset, or how partition assignment strategy (like range vs round-robin) works?

You said:

Pusg

ChatGPT said:

It looks like you typed "Pusg" — did you mean:

push? (As in push to a queue, push to Git, etc.)

pub/sub? (Publish/subscribe messaging model)

A typo for pull? (e.g. Kafka is pull-based)

Or something else entirely?

Let me know what you're referring to, and I’ll give you a clear answer!

You said:

Push to consumer

ChatGPT said:

Got it! You're asking about "push to consumer" — here's the key concept:

Kafka is Pull-Based (Not Push-Based)

Kafka does not push messages to consumers. Instead, consumers pull messages from Kafka using:

java

CopyEdit

ConsumerRecords<K, V> records = consumer.poll(Duration.ofMillis(100));

Why Kafka Uses Pull (and Not Push)?

1. Backpressure Control

Consumer controls how fast it reads data.

No risk of being overwhelmed by the producer or broker.

2. Custom Polling Logic

Consumers can batch process, filter, throttle, or delay messages as needed.

You decide when to poll, how often, and how many records to fetch.

3. Simplicity in Offset Management

Kafka consumers track and commit offsets at their own pace.

This allows for at-least-once, exactly-once, or at-most-once delivery depending on your logic.

Comparison: Kafka vs Push-Based Systems

FeatureKafka (Pull)Push Systems (e.g. Webhooks)Delivery modelConsumer initiates pollServer pushes dataBackpressure supportYesDifficultOrder controlEasy to maintain per partitionHarder to guaranteeError handlingConsumer retriesRetry logic on server-side

What If You Want Push-Like Behavior?

You can simulate push behavior by:

Setting a short poll interval (e.g. poll every few milliseconds

4o

You said:

How often poll

ChatGPT said:

Great question.

How often should a Kafka consumer poll()?

The short answer: very frequently, ideally before max.poll.interval.ms expires and within the session.timeout.ms heartbeat window.

Key Kafka Polling Configs:

PropertyDefaultMeaningmax.poll.interval.ms300000 (5 min)Max delay between calls to poll(). If exceeded, Kafka removes consumer from group.session.timeout.ms10000 (10 sec)Max time to miss heartbeats before Kafka considers consumer dead.heartbeat.interval.ms3000How often heartbeats are sent. Usually handled automatically by the Kafka client.

Best Practice:

Call poll() at least once every few seconds.

If you're doing long processing, you must call poll() periodically during processing or increase max.poll.interval.ms.

Never block the main consumer thread for a long time without polling.

Example Pattern: Poll and Process

java

CopyEdit

while (true) { ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100)); // short poll interval for (ConsumerRecord<String, String> record : records) { process(record); // should be fast or done in worker threads } consumer.commitSync(); // commit offsets }

If You Need to Process Slowly or Asynchronously:

Offload processing to another thread or executor:

java

CopyEdit

for (ConsumerRecord<String, String> record : records) { executor.submit(() -> process(record)); // keeps poll() fast }

Or increase max.poll.interval.ms:

properties

CopyEdit

max.poll.interval.ms=900000 # 15 minutes if you need more time between polls

Want a setup where poll happens in one thread and processing in a pool of workers? I can share that too.

4o

You said:

Payload

ChatGPT said:

In Kafka (and messaging systems in general), the payload refers to the actual data/message that is being transmitted — the meaningful content you care about.

In Kafka:

Each Kafka message (aka record) has a structure:

java

CopyEdit

ProducerRecord<K, V>

K = key (optional)

V = value → this is your payload

Also includes: topic, partition, timestamp, headers, etc.

Example:

java

CopyEdit

ProducerRecord<String, String> record = new ProducerRecord<>("payments", "user123", "payment:100"); producer.send(record);

Topic: payments

Key: "user123" (used for partitioning)

Payload / Value: "payment:100" (your actual message data)

On the Consumer Side:

java

CopyEdit

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100)); for (ConsumerRecord<String, String> rec : records) { System.out.println("Payload: " + rec.value()); // this is your payload }

Payload Format

Your payload can be anything:

String (e.g., plain text, JSON, XML)

Binary data (e.g., Avro, Protobuf, images, serialized Java objects)

Use a serializer/deserializer (SerDe) based on the format:

java

CopyEdit

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer"); props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

Summary

TermMeaningPayloadThe value part of a Kafka messageKeyOptional; used for partitioningHeadersMetadata (optional)

Would you like help designing a payload format (like JSON for payments, orders, etc.) or need help serializing complex Java objects into Kafka messages?

4o

You said:

Payload sizev

ChatGPT said:

Great — you're asking about payload size in Kafka. Let’s break it down:

1. Default Payload Size Limit in Kafka

Maximum message size (broker limit):

message.max.bytes (on broker)

Default: 1 MB (1,048,576 bytes)

Maximum request size (producer limit):

max.request.size (on producer)

Default: 1 MB

Maximum fetch size (consumer limit):

fetch.message.max.bytes or max.partition.fetch.bytes

Default: ~1 MB or more depending on version

2. Increase Payload Size (if needed)

If you want to send larger messages, you must increase limits on all three sides:

On the broker (server.properties):

properties

CopyEdit

message.max.bytes=5242880 # 5 MB, for example

On the producer:

java

CopyEdit

props.put("max.request.size", 5242880); // 5 MB

On the consumer:

java

CopyEdit

props.put("max.partition.fetch.bytes", 5242880); // 5 MB

3. Recommendation for Large Payloads

Kafka is not ideal for very large payloads (e.g., 10MB+). Best practices:

Keep payloads under 1MB if possible.

For large files (images, videos, etc.), store in external storage (e.g., S3) and send reference (URL, ID) in Kafka message.

Use compression (compression.type=gzip, snappy, or lz4) to reduce size.

4. Checking Actual Message Size

You can check message size in bytes using:

java

CopyEdit

byte[] serializedValue = value.getBytes(StandardCharsets.UTF_8); System.out.println("Payload size: " + serializedValue.length + " bytes");

Summary Table:

SettingDefaultDescriptionmessage.max.bytes (broker)1 MBMax size broker accepts per messagemax.request.size (producer)1 MBMax size producer can send in one requestmax.partition.fetch.bytes (consumer)1 MBMax size consumer fetches per partition

Let me know if you're working with large JSON, images, or files, and I can help with Kafka-friendly patterns!

4o

You said:

File size

ChatGPT said:

If you're referring to file size in the context of Kafka, here’s how Kafka deals with files and what you should know:

1. Kafka is Not Designed to Transfer Large Files Directly

Kafka is ideal for streams of events or messages, not for sending files like PDFs, images, videos, or ZIPs. However, you can send files if you break them into manageable payloads.

2. File Size Limitations in Kafka

Kafka's default limits are:ComponentPropertyDefaultDescriptionBrokermessage.max.bytes1 MBMax size of a Kafka messageProducermax.request.size

4o

You said:

One million file

ChatGPT said:

If you're referring to sending or handling one million files (or messages representing files) in Kafka, here’s how you can approach it efficiently and safely:

Scenario: You Have One Million Files

Let’s say:

Each file is a JSON, CSV, or binary blob.

You want to process them using Kafka, possibly one message per file.

1. Do NOT Push Full Large Files into Kafka

Kafka is not designed for storing large blobs (e.g., images, PDFs, videos). Instead:

Best Pattern:

Store files in external storage (e.g., AWS S3, GCS, HDFS, etc.)

Send a Kafka message with metadata + file reference (URL, path, ID)

Example Message Payload (JSON):

json

CopyEdit

{ "fileId": "abc123", "filename": "invoice_2023_04.pdf", "size": 548123, "storageUrl": "https://s3.amazonaws.com/mybucket/invoice_2023_04.pdf", "timestamp": "2025-04-24T12:00:00Z" }

2. Kafka Can Handle One Million Messages

Kafka is designed for massive throughput. Sending 1M messages is normal if:

Message size is small to medium (e.g., < 1MB)

You use multiple partitions for scalability

You use batching and compression

3. Producer Settings for One Million Messages

java

CopyEdit

props.put("acks", "1"); props.put("batch.size", 16384); // group small messages props.put("linger.ms", 5); // wait to batch props.put("compression.type", "snappy"); // compress props.put("max.request.size", 1048576); // 1 MB

Use a loop or executor:

java

CopyEdit

for (int i = 0; i < 1_000_000; i++) { String fileId = "file_" + i; String payload = createFileMetadata(fileId); // returns JSON string producer.send(new ProducerRecord<>("file-topic", fileId, payload)); }

4. Scaling Tips for One Million Files

ConcernSolutionMessage bottlenecksUse multi-threaded producersSlow consumersUse multiple partitions & consumer groupFile size issuesUse external storage + reference in KafkaMonitoringUse Kafka metrics, alerting

5. Optional: Chunking Large Files

If you must send large files:

Split file into chunks

Send each chunk as a message (with fileId + part number)

Reassemble at the consumer side

Would you like help with:

Code to send file metadata from a folder?

Chunking large files and sending them?

Processing those 1M messages reliably?

Just let me know the use case!

4o

You said:

One file with one million transaction how big

ChatGPT said:

Ah — now that’s a key clarification: you're talking about one file that contains one million transactions, and you're wondering how big that file would be.

1. It Depends on the Format and Size Per Transaction

Let’s estimate:FormatAvg size per transactionTotal size for 1M transactionsJSON~200 bytes~200 MBCSV~100 bytes~100 MBAvro/Protobuf (binary)~50 bytes~50 MB

2. Example: JSON Transaction

json

CopyEdit

{ "id": "txn123456", "amount": 150.75, "currency": "USD", "timestamp": "2025-04-24T12:00:00Z", "status": "completed" }

Rough size: ~200 bytes

1 million such records: 200 bytes * 1,000,000 = ~200 MB

3. File Size Estimation Cheat Sheet

Record SizeFile Size for 1M Records100 bytes100 MB250 bytes250 MB500 bytes500 MB1 KB~1 GB

4. Compressed File Size

If you compress (e.g., gzip), file size reduces by 70–90% for structured text data like JSON/CSV.UncompressedGzipped (est.)200 MB~20–40 MB500 MB~50–100 MB

5. Kafka Tip

If you need to send this via Kafka:

Don’t send the entire file as one message — that’s too big (max is ~1MB by default)

Instead: split the file into messages, one per transaction or in small batches (e.g., 1000 per message)

Would you like:

Sample code to split a 1M-transaction JSON file?

Best practices to batch them into Kafka messages?

Or help with file compression & upload to Kafka safely?

Let me know how you're processing this file — reading, producing to Kafka, storing?

4o

You said:

One record at time

ChatGPT said:

Perfect — if you're sending one transaction per Kafka record, and you have 1 million transactions, here's a breakdown of what to expect in terms of size, performance, and best practices:

1. Estimate Size per Record

Let’s assume you're sending one JSON record like:

json

CopyEdit

{ "txnId": "1234567890", "amount": 100.5, "currency": "USD", "timestamp": "2025-04-24T12:00:00Z", "status": "completed" }

This is around 200–300 bytes.

So for 1 million records:

text

CopyEdit

200 bytes x 1,000,000 = ~200 MB total sent (over time)

2. Kafka Can Easily Handle 1 Million Records

Kafka is optimized for high throughput.

Example: Sending one record at a time:

java

CopyEdit

for (Transaction txn : transactions) { String key = txn.getTxnId(); String value = objectMapper.writeValueAsString(txn); // serialize to JSON producer.send(new ProducerRecord<>("transactions", key, value)); }

3. Optimize Throughput (Even with One Record at a Time)

To send 1M records efficiently, use these producer settings:

java

CopyEdit

props.put("acks", "1"); // fast and reliable props.put("linger.ms", 5); // small delay to allow batching props.put("batch.size", 32768); // batch more records together (32 KB) props.put("compression.type", "snappy"); // reduces payload size

Even if you send one record at a time, Kafka groups them into batches under the hood when linger.ms and batch.size are configured.

4. How Long Will It Take?

1M messages at 1000 msg/sec = ~16 minutes

With batching, throughput can exceed 50,000+ msg/sec, reducing total time to ~20 seconds

This depends on:

Broker performance

Network

Producer thread count

5. Best Practices

GoalTipHigh throughputUse batching and compressionDelivery guaranteeUse acks=1 (or acks=all for stronger safety)Error handlingAdd callbacks to producer.send() for failuresOrdering per keyUse a consistent key (e.g., txnId, userId)MonitoringEnable Kafka producer metrics

Optional: Add Logging for Progress

java

CopyEdit

if (i % 10000 == 0) { System.out.println("Sent: " + i + " messages"); }

Do you want a code snippet to simulate and send 1 million records with progress tracking, or help with receiving them on the consumer side too?

You said:

Transaction file same seq

ChatGPT said:

Ah — you're asking how to preserve the sequence of transactions from a file when sending them one by one into Kafka — so that they arrive and are processed in the same order. This is a very common requirement in financial systems.

Goal: Preserve Order of Transactions from File

Let’s say your file contains:

text

CopyEdit

txn_1 txn_2 txn_3 ... txn_1000000

You want to ensure that:

txn_1 is processed before txn_2

txn_2 before txn_3, and so on.

How to Ensure Same Sequence in Kafka

1. Use the Same Partition

Kafka preserves message order within a partition.

So:

Use a fixed key for all records (e.g., "file123")

This ensures all records go to the same partition, keeping their order.

java

CopyEdit

ProducerRecord<String, String> record = new ProducerRecord<>("transactions", "file123", txnJson);

Even better: you can use the filename or batchId as the key.

2. Producer Should Send in Order

Kafka sends messages asynchronously by default. To enforce order on the producer side:

Avoid multi-threaded producers if strict order matters

Use producer.flush() after every batch if batching is used

Optionally, call send(...).get() (blocking) for strict ordering — this reduces throughput but ensures order:

java

CopyEdit

producer.send(record).get(); // blocks until ack received

3. Consumers Preserve Partition Order

Each consumer in a group processes messages from a given partition in order.

So as long as:

You have 1 consumer, or

You're using one partition, or

All messages for one file go to the same partition

Then order is maintained.

Do Not Do These (If You Care About Order)

Don'tWhyUse random keysMessages go to different partitionsUse multiple partitionsOrder is only guaranteed within a partitionUse fire-and-forget sends with parallel threadsMay reorder during async send

Optional: Add Sequence Number to Payload

To be extra safe (or for audit):

json

CopyEdit

{ "sequence": 1, "txnId": "txn_001", "amount": 100, ... }

This helps detect out-of-order delivery downstream (just in case).

Summary

StepActionPreserve order in KafkaUse same key → forces same partitionKeep producer in orderUse single thread or send(...).get()Consumer sideKafka guarantees partition order

Would you like a complete Java example that reads a file and sends transactions in order using the same partition, or a consumer example that logs them with sequence?

4o

You said:

So how do you process million of transactions?

ChatGPT said:

Excellent question — processing a million transactions reliably, efficiently, and in order (if needed) involves a thoughtful architecture, especially when using Kafka.

Here’s a step-by-step battle-tested approach:

High-Level Architecture to Process 1 Million Transactions

1. Source File

Could be JSON, CSV, Avro, etc.

Stored locally or in cloud storage (e.g., S3)

2. Producer App

Reads file line-by-line (or record-by-record)

Sends each record to Kafka

3. Kafka Cluster

Topic with multiple partitions for parallel processing

If order is important → use a consistent key per stream (e.g., fileId or userId)

4. Consumer App(s)

Reads messages from Kafka

Processes each transaction (e.g., writes to DB, applies business rules, audits, etc.)

Can scale horizontally using consumer groups

Step-by-Step: Processing 1M Transactions

Step 1: Producer

java

CopyEdit

try (BufferedReader br = new BufferedReader(new FileReader("transactions.json"))) { String line; while ((line = br.readLine()) != null) { ProducerRecord<String, String> record = new ProducerRecord<>("transactions", "file123", line); producer.send(record); // use .get() if strict order needed } } producer.flush();

file123 as key → ensures same partition → maintains order

Step 2: Kafka Configuration

Use a topic like transactions

If strict ordering is required, use 1 partition (limits parallelism)

If high throughput is needed, use multiple partitions, and shard by some key like userId or accountId

Step 3: Consumer Processing

java

CopyEdit

while (true) { ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100)); for (ConsumerRecord<String, String> record : records) { Transaction txn = objectMapper.readValue(record.value(), Transaction.class); process(txn); // e.g., insert into DB, call service, update ledger } consumer.commitSync(); // or use auto-commit }

You can also use a thread pool to process records in parallel, or break records into batch inserts for DBs.

Batching Best Practice

To optimize I/O:

Collect transactions into a list

Insert/process in batches of 100–1000 (depending on your DB/API)

java

CopyEdit

List<Transaction> batch = new ArrayList<>(); for (ConsumerRecord<String, String> record : records) { batch.add(parse(record)); if (batch.size() == 1000) { processBatch(batch); batch.clear(); } }

Handling Failures

TypeStrategyKafka unavailableRetries, exponential backoffMessage badSend to Dead Letter Topic or logConsumer crashedKafka rebalances partitions, resumes from offsetDB/API failsRetry with backoff, circuit breaker

Scale Up Processing

You can horizontally scale consumers:

10 partitions → up to 10 consumers processing in parallel

Each consumer gets assigned different partitions

text

CopyEdit

Partition 0 → Consumer A Partition 1 → Consumer B ...

Optional Enhancements

Use Avro/Protobuf → smaller payloads, schema validation

Add monitoring → track offset lag, failures, throughput

Compress data → faster network throughput (compression.type=snappy)

Use idempotent producer and exactly-once semantics if needed

Conclusion

To process a million transactions:

Use Kafka for streaming and durability

Batch processing for performance

Partition wisely for parallelism

Add retries and failure handling

Ensure order with consistent keys or partitions

Would you like:

Code to generate 1M sample records?

Architecture diagram for this setup?

A version using Spring Boot or Quarkus Kafka?

Let me know your stack — I’ll tailor the solution.

4o

You said:

What is a functional interface and commonly used interfaces?

ChatGPT said:

Great question!

What is a Functional Interface in Java?

A functional interface is an interface that has exactly one abstract method.

It may have:

Default methods

Static methods

But only one abstract method — which makes it suitable for lambda expressions and method references.

Declared with @FunctionalInterface (optional but recommended):

java

CopyEdit

@FunctionalInterface public interface MyFunction { void apply(String input); }

Then you can use:

java

CopyEdit

MyFunction func = (input) -> System.out.println("Hello " + input); func.apply("World");

Why Functional Interfaces Matter?

They are the foundation of functional programming in Java, especially with lambda expressions and streams introduced in Java 8.

Commonly Used Functional Interfaces (from java.util.function)

1. Function<T, R>

Takes input of type T, returns result of type R.

java

CopyEdit

Function<String, Integer> length = str -> str.length(); System.out.println(length.apply("hello")); // 5

2. Consumer<T>

Takes input, returns nothing.

java

CopyEdit

Consumer<String> printer = msg -> System.out.println(msg); printer.accept("Hi!");

3. Supplier<T>

Takes no input, returns value.

java

CopyEdit

Supplier<Double> random = () -> Math.random(); System.out.println(random.get());

4. Predicate<T>

Takes input, returns boolean.

java

CopyEdit

Predicate<String> isEmpty = str -> str.isEmpty(); System.out.println(isEmpty.test("")); // true

5. BiFunction<T, U, R>

Takes two inputs, returns result.

java

CopyEdit

BiFunction<Integer, Integer, Integer> sum = (a, b) -> a + b; System.out.println(sum.apply(5, 3)); // 8

Other Functional Interfaces

InterfaceDescriptionUnaryOperator<T>Like Function<T, T>BinaryOperator<T>Like BiFunction<T, T, T>RunnableNo input, no return valueCallable<V>No input, returns a valueComparator<T>Functional interface with compare

In Summary

A functional interface has one abstract method and is often used with lambda expressions. It helps make code cleaner, more readable, and more functional.

0 notes

Text

Java's concurrent package is very powerful and many people have not really utilized it to the fullest yet. I am trying to take a simple example to demonstrate how we can leverage this powerful implementation. Here is a brief description about concurrent Blocking Queue from Java API docs Java has implementation of BlockingQueue available since Java 1.5. Blocking Queue interface extends collection interface, which provides you power of collections inside a queue. Blocking Queue is a type of Queue that additionally supports operations that wait for the queue to become non-empty when retrieving an element, and wait for space to become available in the queue when storing an element. A typical usage example would be based on a producer-consumer scenario. Note that a BlockingQueue can safely be used with multiple producers and multiple consumers. An ArrayBlockingQueue is a implementation of blocking queue with an array used to store the queued objects. The head of the queue is that element that has been on the queue the longest time. The tail of the queue is that element that has been on the queue the shortest time. New elements are inserted at the tail of the queue, and the queue retrieval operations obtain elements at the head of the queue. ArrayBlockingQueue requires you to specify the capacity of queue at the object construction time itself. Once created, the capacity cannot be increased. This is a classic "bounded buffer" (fixed size buffer), in which a fixed-sized array holds elements inserted by producers and extracted by consumers. Attempts to put an element to a full queue will result in the put operation blocking; attempts to retrieve an element from an empty queue will be blocked. The implementation of ArrayBlockingQueue supports both blocking and non-blocking operations for publishing to queue and reading from queue. Here are few important methods to keep in mind while programming with ArrayBlockingQueue 1. Methods for Publishing The Non-blocking offer(E) method to publish - This method inserts the specified element at the tail of this queue if possible, returning immediately if this queue is full. The Timed-blocking offer(E o, long time-out, TimeUnit unit) method to publish - This method inserts the specified element at the tail of this queue, waiting if necessary up to the specified wait time for space to become available. The blocking put(E) method to publish - This method adds the specified element to the tail of this queue, waiting if necessary for space to become available. 1. Methods for Consuming The non-blocking peek() method to read Retrieves, but does not remove, the head of this queue, returning null if this queue is empty. The Non-blocking poll() method to read & remove from queue - This method retrieves and removes the head of this queue, or null if this queue is empty. The Timed-blocking poll(long time-out, TimeUnit unit) method to read & remove from queue - This method retrieves and removes the head of this queue, waiting if necessary up to the specified wait time if no elements are present on this queue. The blocking take() method to read & remove from queue - This method retrieves and removes the head of this queue, waiting if no elements are present on this queue. Below is a simple producer consumer example with various scenarios. The scenarios of producer and consumer may vary based on the speed and concurrency of producers and consumers. Consumer.java is the example code for Consumer using ArrayBlockingQueue implementation. package queue; import java.util.concurrent.BlockingQueue; /** * This is a simple Consumer example class which uses ArrayBlockingQueue. * * @author swiki * */ public class Consumer implements Runnable private final BlockingQueue queue; private volatile boolean stopConsuming = false; private int timeToConsume = 1; Consumer(BlockingQueue q) queue = q; public void run() try while (true) Object objectFromQueue = queue.poll(); /** * The non-blocking poll() method returns null if the queue is

* empty */ if (objectFromQueue == null) long start = System.currentTimeMillis(); /** * Now use the blocking take() method which can wait for the * object to be available in queue. */ objectFromQueue = queue.take(); System.out .println("It seems Producer is slow. Consumer waited for " + (System.currentTimeMillis() - start) + "ms"); if (objectFromQueue != null) consume(objectFromQueue); if (stopConsuming) break; catch (InterruptedException ex) void consume(Object x) try Thread.sleep(timeToConsume); catch (Throwable t) public void setStopConsuming(boolean stopConsuming) this.stopConsuming = stopConsuming; public void setTimeToConsume(int timeToConsume) this.timeToConsume = timeToConsume; Producer.java is the example code for Producer using ArrayBlockingQueue implementation. package queue; import java.util.concurrent.BlockingQueue; /** * This is a simple Producer example class which uses ArrayBlockingQueue. * * @author swiki * */ public class Producer implements Runnable private final BlockingQueue queue; private int timeToProduce = 1; private volatile boolean stopProducing = false; Producer(BlockingQueue q) queue = q; public void run() try while (true) Object objectForQueue = produce(); if (!queue.offer(objectForQueue)) /* * The non-blocking offer() method returns false if it was * not possible to add the element to this queue. */ long start = System.currentTimeMillis(); /* * Now use the put method as its a blocking call and it wail * until the queue space is available. */ queue.put(objectForQueue); System.out .println("It seems Consumer is slow. Producer waited for " + (System.currentTimeMillis() - start) + "ms"); if (stopProducing) break; catch (InterruptedException ex) /** * Produces something in timeToProduce ms * * @return */ public Object produce() try Thread.sleep(timeToProduce); catch (Throwable t) return "product"; public void setTimeToProduce(int timeToProduce) this.timeToProduce = timeToProduce; TestArrayBlockingQueue.java file is a example class which is used to test the producer/consumer example. package queue; import java.util.concurrent.ArrayBlockingQueue; import java.util.concurrent.BlockingQueue; /** * This is a simple example class which tests the Producer/Consumer example * using ArrayBlockingQueue. * * @author swiki * */ public class TestArrayBlockingQueue public static void main(String args[]) // testSlowProducer(); testSlowConsumer(); /** * This test uses 2 consumers and 1 producer which will make consumers * faster then producer. * * Only for demonstration purpose 1. You can also try * Consumer.setTimeToConsume() method to explicitly slow down/speed up the * consumer. * * 2. You can also try Producer.setTimeToProduce() method to explicitly slow * down/speed up the producer. * */ public static void testSlowProducer() BlockingQueue q = new ArrayBlockingQueue(100); Producer p = new Producer(q); Consumer c1 = new Consumer(q); Consumer c2 = new Consumer(q); new Thread(p).start(); new Thread(c1).start(); new Thread(c2).start(); /** * This test uses 2 producers and 1 consumer which will make consumers * slower then producer. * * Only for demonstration purpose 1. You can also try * Consumer.setTimeToConsume() method to explicitly slow down/speed up the * consumer. * * 2. You can also try Producer.setTimeToProduce() method to explicitly slow * down/speed up the producer. * */ public static void testSlowConsumer() BlockingQueue q = new ArrayBlockingQueue(100); Producer p = new Producer(q); Producer p2 = new Producer(q); Consumer c1 = new Consumer(q); new Thread(p).start(); new Thread(p2).start(); new Thread(c1).start(); Using combination of above described methods may help you get better control over the situation. If you see the Producer implementation I have first called non-blocking offer() method and if the offer fails I start my waiting counter and use the blocking put() method. Similarly in case of consumer I have called the

non-blocking method poll which returns null in case the queue is empty and then I start my waiting counter and use the blocking take() method. This way you can get live status of the producer consumer situation and take action based on which end is slow/fast. Scenario 1 - Here is a sample output when I run the TestArrayBlockingQueue class. This is a scenario when I am assuming the producer is slow and taking more time to produce then consumers are consuming. In this test I have used 2 consumers and 1 producer, which will make consumers faster then producer. Its only for demonstration purpose, so if you want to try more options you can try following. 1. You can also try Consumer.setTimeToConsume() method to explicitly speed up the consumer (say 0 ms). 2. You can also try Producer.setTimeToProduce() method to explicitly slow down the producer. (say 5 ms) It seems Producer is slow. Consumer waited for 15ms It seems Producer is slow. Consumer waited for 15ms It seems Producer is slow. Consumer waited for 0ms It seems Producer is slow. Consumer waited for 0ms It seems Producer is slow. Consumer waited for 0ms It seems Producer is slow. Consumer waited for 0ms It seems Producer is slow. Consumer waited for 16ms Scenario 2 - Here is a sample output when I run the TestArrayBlockingQueue class. This is a scenario when I am assuming the producer is faster and taking less time to produce then consumers are consuming. In this test I have used 2 producers and 1 consumer, which will make consumers faster then producer. Its only for demonstration purpose, so if you want to try more options you can try following. 1. You can try Consumer.setTimeToConsume() method to explicitly slow down the consumer(say 5 ms). 2. You can try Producer.setTimeToProduce() method to explicitly speed up the producer. (say 0 ms) It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 15ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 0ms It seems Consumer is slow. Producer waited for 16ms In this example I have used ArrayBlockingQueue implementation, you can try different implementations like DelayQueue, LinkedBlockingQueue, PriorityBlockingQueue, SynchronousQueue etc to experiment more on same. concurrent blockingqueue, java blockingqueue

0 notes

Link

0 notes

Text

A walkthrough tutorial of TLA+ and its tools: analyzing a blocking queue

https://github.com/lemmy/BlockingQueue Comments

0 notes

Link

Article URL: https://github.com/lemmy/BlockingQueue

Comments URL: https://news.ycombinator.com/item?id=22496287

Points: 2

# Comments: 0

0 notes

Text

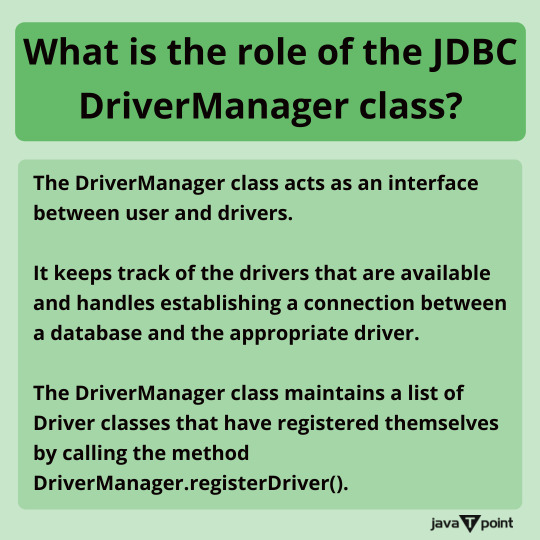

What is the role of the JDBC DriverManager class? . . . . For more questions about Java https://bit.ly/465SkSw Check the above link

#resultset#rowset#drivermanager#preparedstatement#execute#executequery#executeupdate#array#arraylist#jdbc#hashcode#collection#comparator#comparable#blockingqueue#hashSet#treeSet#set#map#hashMap#computersciencemajor#javatpoint

0 notes

Text

I was trying to do some Index writing speed improvement and thought of creating a asynchronous Lucene index writer. This writer provides a addDocument() method which can be called asynchronously by multiple threads. Here are the few scenario where you can utilize this implementation - Reading the data is slower then writing to the index. (Typical scenario where you read over network, or from database.) - Reading can be divided in multiple logical parts which can be processed in separate threads. - You are looking for asynchronous behavior to decouple reading and writing processes. This implementation is a wrapper which utilizes core methods of IndexWriter class, and does not do any change to it except making it asynchronous. It utilizes Java's java.util.concurrent.BlockingQueue for storing the documents. It can be supplied with any implementation of this class using its constructor. Below is the Java source of this implementation class This class provides multiple constructors to have better control. Few terms which are used here are as follows Sleep Milliseconds On Empty: This is the sleep duration when writer finds nothing in queue and wants to wait for some data to come in queue. () Queue Size: This is the size of the queue which can be configured as a constructor parameter input. AsynchronousIndexWriter.java package swiki.lucene.asynchronous; import java.io.IOException; import java.util.concurrent.ArrayBlockingQueue; import java.util.concurrent.BlockingQueue; import org.apache.lucene.document.Document; import org.apache.lucene.index.CorruptIndexException; import org.apache.lucene.index.IndexWriter; /** * @author swiki swiki * */ public class AsynchronousIndexWriter implements Runnable /* * A blocking queue of document to facilitate asynchronous writing. */ private BlockingQueue documents; /* * Instance of core index writer which does the actual writing task. */ private IndexWriter writer; /* * Thread which makes writing asynchronous */ private Thread writerThread; /* * We need to set this to false if the document addition is completed. This * will not immediately stop the writing as there could be some documents in * the queue. It completes once all documents are written and the queue is * empty. */ private boolean keepRunning = true; /* * This flag is set to false once writer is done with the queue data * writing. */ private boolean isRunning = true; /* * Duration in miliseconds for which the writer should sleep when it finds * the queue empty and job is still not completed */ private long sleepMilisecondOnEmpty = 100; /** * This method should be used to add documents to index queue. If the queue * is full it will wait for the queue to be available. * * @param doc * @throws InterruptedException */ public void addDocument(Document doc) throws InterruptedException documents.put(doc); public void startWriting() writerThread = new Thread(this, "AsynchronousIndexWriter"); writerThread.start(); /** * Constructor with indexwriter as input. It Uses ArrayBlockingQueue with * size 100 and sleepMilisecondOnEmpty is 100ms * * @param w */ public AsynchronousIndexWriter(IndexWriter w) this(w, 100, 100); /** * Constructor with indexwriter and queue size as input. It Uses * ArrayBlockingQueue with size queueSize and sleepMilisecondOnEmpty is * 100ms * * @param w * @param queueSize */ public AsynchronousIndexWriter(IndexWriter w, int queueSize) this(w, queueSize, 100); /** * Constructor with indexwriter, queueSize as input. It Uses * ArrayBlockingQueue with size queueSize * * @param w * @param queueSize * @param sleepMilisecondOnEmpty */ public AsynchronousIndexWriter(IndexWriter w, int queueSize, long sleepMilisecondOnEmpty) this(w, new ArrayBlockingQueue(queueSize), sleepMilisecondOnEmpty); /** * A implementation of BlockingQueue can be used * * @param w * @param queueSize * @param sleepMilisecondOnEmpty */ public AsynchronousIndexWriter(IndexWriter w, BlockingQueue queue,

long sleepMilisecondOnEmpty) writer = w; documents = queue; this.sleepMilisecondOnEmpty = sleepMilisecondOnEmpty; startWriting(); /* * (non-Javadoc) * * @see java.lang.Runnable#run() */ public void run() while (keepRunning /** * Stop the thread gracefully, wait until its done writing. */ private void stopWriting() this.keepRunning = false; try while (isRunning) //using the same sleep duration as writer uses Thread.sleep(sleepMilisecondOnEmpty); catch (InterruptedException e) e.printStackTrace(); public void optimize() throws CorruptIndexException, IOException writer.optimize(); public void close() throws CorruptIndexException, IOException stopWriting(); writer.close(); Below is a sample class which demonstrates how we can use this class. Here are few things to note, asynchronous thread is started as soon as you instantiate using new AsynchronousIndexWriter(...) TestAsyncWriter.java package swiki.lucene.asynchronous; import org.apache.lucene.analysis.standard.StandardAnalyzer; import org.apache.lucene.document.Document; import org.apache.lucene.document.Field; import org.apache.lucene.index.IndexWriter; import org.apache.lucene.store.Directory; import org.apache.lucene.store.FSDirectory; /** * @author swiki swiki * */ public class TestAsyncWriter public static void main(String[] args) try Directory fsdir = FSDirectory.getDirectory("index"); IndexWriter w = new IndexWriter(fsdir, new StandardAnalyzer(), true); AsynchronousIndexWriter writer = new AsynchronousIndexWriter(w); /* * This call can be replaced by the logic of reading * data using multiple threads */ addDocumentsInMultipleThreads(writer); writer.optimize(); writer.close(); catch (Exception e) e.printStackTrace(); private static void addDocumentsInMultipleThreads( AsynchronousIndexWriter writer) throws InterruptedException //add here the code for adding document from multiple threads. Document doc = new Document(); doc.add(new Field("content","My Content", Field.Store.YES, Field.Index.UN_TOKENIZED)); writer.addDocument(new Document()); If you find this useful or have some suggestions for improvements please leave a comment and I will try to respond. Lucene parallel writer code, lucene parallel indexing, lucene fast index creation, asynchronous index creation, parallel index creation, fast index creation code, Lucene Asynchronous Index Writer, thread based index writer, multi threaded index writing lucene indexwriter, lucene indexing , lucene fast index writer, lucene fast indexing, lucene asynchronous writer, lucene building fast index, lucene parallel index, search engine lucene, indexwriter lucene, what is lucene search, implementing search lucene, lucene indexing speed, lucene indexing performance improvement.

0 notes

Text

Data Structure Tricks For Java Developer

Java API gives worked in help to basic information structures, fundamental for composing programs for example cluster, connected rundown, map, set, stack and line. You don't have to actualize this information structure independent from anyone else, you can legitimately utilize it in your program, because of rich and proficient usage given by Java API. This is likewise one reason, to take Java Training in Bangalore. Since data structure is central to any program and decision of a specific information structure enormously influences both usefulness and execution of Java applications, it merits a push to investigate various data structure accessible in Java.

A considerable lot of this data structure is a piece of massively mainstream Java Collection Framework, and practically all Java projects might be, aside from his world utilize Collection in some structure.

In this Java instructional exercise, we will investigate regular information structure for example Cluster, connected rundown, Stack, Queue, Map, Set and How they are actualized in Java, alongside how to utilize them.

In the event that you are a finished amateur in the realm of information structure and calculations, at that point, I additionally propose you to initially experience a far-reaching course like Java Courses in Bangalore to learn nuts and bolts and ace it.

Information Structure and Algorithms are key for improving as a designer and any venture you make as far as your time, cash and learning exertion will pay you for quite a while to come.

Essential Data Structure in Java:

Here is my rundown of the principal information structure from standard Java API and programming language itself, since an exhibit is a piece of the programming language itself while others are a piece of the prominent Java Collection system.

With Java 8 thinking of Lambda articulation, Functional Interface, and Streams, which is going to give another life to Java Collection Framework, particularly with regards to utilizing the different center design of present-day CPU.

Ample opportunity has already past that fledglings make themselves mindful of essential information structure accessible in Java programming and utilize them.

1. Cluster :

Java programming language gives worked in help to the exhibit in the language itself. It has an exceptional language structure to proclaim exhibit, for example, int[], which is a variety of int crude sort. You can make a variety of both reference type and natives.

Additionally dissimilar to C programming language, an exhibit in Java is limited and you will get ArrayIndexOutOfBoundException on the off chance that you are working with an invalid record.

Exhibit in Java are likewise homogeneous, you cannot store various kinds of the article in a cluster, for example, you can just store a String in a String[], in the event that you will attempt to store Integer, you will get ArrayStoreException at runtime.

You can check further check Java Training in Bangalore to study cluster information structure and how to utilize it in Java.

2. Connected List:

Aside from the exhibit, a connected rundown is another essential information structure in programming. Java gives a doubly connected rundown usage as java.util.LinkedList, this class can be utilized, at whatever point a connected rundown information structure is required.

Since LinkedList is a piece of the Collection system, it executes Collection and Iterable interface too, which permits repeating over them. You can check this article to become familiar with LinkedList in Java.

3. Hash table:

The Hash table, guide or word reference is a standout amongst the most adaptable information structure I have seen. I happen to utilize Map from time to time and luckily, Java API gives a few executions of Map information structure for various needs like HashMap, Hashtable, and ConcurrentHashMap.

It's otherwise called guide or word reference information structure, you may have found out about Dictionary in Python, which is equivalent to Map in Java.

A guide gives you O(1) usefulness for recovering a worth in the event that you know the key, which is an extremely common use case in the majority of the java application.

You can further check the Java Courses in Bangalore to become familiar with the Hash table, guide, or lexicon information structure in Java.

4. Stack:

Java API additionally gives a Stack information structure executed as java.util.Stack. This class expands the heritage Vector class for putting away components. Since the stack is a LIFO (Last In, First Out) information structure, it gives push() technique to embed articles and pop() strategy to expend components from the top.

The stack is very prominent in various programming task, for example, assessing articulations. By the way don't befuddle Stack information structure with stack memory, which is utilized to store nearby factor and strategy outlines in Java.

Btw, in the event that you are reviving your information structure ideas for Interviews, I likewise propose you experience the Java Training in Bangalore to get ready well for your meeting.

5. Queue:

The line information structure is additionally accessible in Java accumulation system as interface and few solid executions, for example, ArrayBlockingQueue, LinkedList and PriorityQueue.

In spite of the fact that you can likewise actualize Queue by utilizing LinkedList or exhibit, it's greatly improved to utilize existing classes, which are attempted and tried.

This diminishes improvement time as well as general code quality and execution of your application. BlockingQueue is a string safe augmentation of Queue interface and can be utilized to actualize produce shopper design in Java.

6. Set:

Set is an extraordinary information structure, which doesn't permit copies. It's a decent information structure to store one of kind components for example IDs. Java Collection API gives two or three usages of Set like HashSet, TreeSet and LinkedHashSet, which is all that could possibly be needed for general circumstances. Those accumulations, separated structure start set additionally gives arranging and inclusion request.

That is about the absolute most fundamental Data Structure for Java engineers. Aside from these fundamental information structures, there are significantly more in the Java gathering system, including simultaneous information structure like BlockingQueue and ConcurrentHashMap. For a Java designer with any experience level, it's great to investigate new gathering classes presented in Java 5 and 6 for utilizing Java API.

#Java Training in Bangalore#Java courses in Bangalore#java training center Bangalore#best java training institute Marathahalli

0 notes

Text

BlockingQueue Interface in Java https://t.co/Etuwz9BfA8

BlockingQueue Interface in Java https://t.co/Etuwz9BfA8

— Dave Epps (@dave_epps) November 18, 2018

from Twitter https://twitter.com/dave_epps

0 notes

Text

Groovy: Multi threaded producer consumer program

Groovy: Multi threaded producer consumer program

import java.util.concurrent.BlockingQueue import java.util.concurrent.LinkedBlockingQueue BlockingQueue queue = new LinkedBlockingQueue(); Thread.start('Producer') { 10.times { println(Thread.currentThread().name + " adding $it") queue << it sleep(100) } } Thread.start('Consumer') { for(i in 0..9){ sleep(200) print(Thread.currentThread().name) println("consuming ${queue.take()}") } }

View On WordPress

0 notes

Text

http://www.sudotutorials.com

Check http://www.sudotutorials.com/ for all java tutorials

http://sudotutorials.com/tutorials/java/basics/contents.html : Table of Contents http://sudotutorials.com/tutorials/java/basics/java-hello-world.html : Write a Java Program http://sudotutorials.com/tutorials/java/basics/java-operator.html : Learn Java Operators http://sudotutorials.com/tutorials/java/basics/java-literals.html : Java Literals http://sudotutorials.com/tutorials/java/basics/java-datatypes.html : Java Data Types http://sudotutorials.com/tutorials/java/basics/java-class-object.html : Create Class and Object in Java http://sudotutorials.com/tutorials/java/basics/java-constructor.html : Java Constructor http://sudotutorials.com/tutorials/java/basics/java-control-statement.html : Java Control Statement http://sudotutorials.com/tutorials/java/basics/java-access-control.html : Access Control in Java http://sudotutorials.com/tutorials/java/basics/java-inheritance.html : Java Inheritance Example http://sudotutorials.com/tutorials/java/basics/java-method-overloading.html : Java Method Overloading Example http://sudotutorials.com/tutorials/java/basics/java-method-overriding.html : Java Method Overriding Example http://sudotutorials.com/tutorials/java/basics/java-package.html : Java Package Example http://sudotutorials.com/tutorials/java/basics/java-interface.html : Java Interface Example http://sudotutorials.com/tutorials/java/basics/java-abstract-class.html : Java Abstract Class http://sudotutorials.com/tutorials/java/basics/java-composition.html : Java Composition Example http://sudotutorials.com/tutorials/java/basics/java-exception-handling.html : Exception Handling in Java http://sudotutorials.com/tutorials/java/basics/java-custom-exception.html : Create Custom Exception Class in Java http://sudotutorials.com/tutorials/java/basics/java-chained-exception.html : Chained Exception in Java http://sudotutorials.com/tutorials/java/basics/java-static-method.html : Static Method in Java http://sudotutorials.com/tutorials/java/basics/java-final-keyword.html : Use of final Keyword in Java http://sudotutorials.com/tutorials/java/collections/contents.html : Table of Contents http://sudotutorials.com/tutorials/java/collections/java-collection-interface.html : Java Collection Interface http://sudotutorials.com/tutorials/java/collections/java-set-interface.html : Java Set Interface http://sudotutorials.com/tutorials/java/collections/java-hashset-class.html : Java HashSet Class http://sudotutorials.com/tutorials/java/collections/java-treeset-class.html : Java TreeSet Class http://sudotutorials.com/tutorials/java/collections/java-linkedhashset-class.html : Java LinkedHashSet Class http://sudotutorials.com/tutorials/java/collections/java-navigableset-interface.html : Java NavigableSet Interface http://sudotutorials.com/tutorials/java/collections/java-sortedset-interface.html : Java SortedSet Interface http://sudotutorials.com/tutorials/java/collections/java-queue-interface.html : Java Queue Interface http://sudotutorials.com/tutorials/java/collections/java-deque-interface.html : Java Deque Interface http://sudotutorials.com/tutorials/java/collections/java-list-interface.html : Java List Interface http://sudotutorials.com/tutorials/java/collections/java-arraylist-class.html : Java ArrayList Class http://sudotutorials.com/tutorials/java/collections/java-linkedlist-class.html : Java LinkedList Class http://sudotutorials.com/tutorials/java/collections/java-hashmap-class.html : Java HashMap Class http://sudotutorials.com/tutorials/java/collections/how-hashmap-works-internally-in-java.html : How HashMap Works Internally in Java http://sudotutorials.com/tutorials/java/collections/java-linkedlist-vs-arraylist.html : Java LinkedList vs ArrayList Class http://sudotutorials.com/tutorials/java/collections/java-comparable-interface.html : Java Comparable Interface http://sudotutorials.com/tutorials/java/collections/use-comparator-for-custom-sorting.html : Use Comparator For Custom Sorting http://sudotutorials.com/tutorials/java/collections/comparator-vs-comparable-in-java.html : Difference Between Comparator and Comparable Interface http://sudotutorials.com/tutorials/java/java-file-handling/contents.html : Table of Contents http://sudotutorials.com/tutorials/java/java-file-handling/what-is-stream-in-java.html : What is Stream in Java http://sudotutorials.com/tutorials/java/java-file-handling/byte-stream-in-java.html : Java Byte Stream Classes http://sudotutorials.com/tutorials/java/java-file-handling/java-inputstream-class.html : Java InputStream Class http://sudotutorials.com/tutorials/java/java-file-handling/java-outputstream-class.html : Java OutputStream Class http://sudotutorials.com/tutorials/java/java-file-handling/java-fileinputstream-class.html : Java FileInputStream Class http://sudotutorials.com/tutorials/java/java-file-handling/java-fileoutputstream-class.html : Java FileOutputStream Class http://sudotutorials.com/tutorials/java/java-file-handling/java-bytearrayinputstream-class.html : Java ByteArrayInputStream Class http://sudotutorials.com/tutorials/java/java-file-handling/character-stream-in-java.html : Java Character Stream Classes http://sudotutorials.com/tutorials/java/java-file-handling/java-reader-class.html : Java Reader Class http://sudotutorials.com/tutorials/java/java-file-handling/java-writer-class.html : Java Writer Class http://sudotutorials.com/tutorials/java/java-file-handling/java-filereader-class.html : Java FileReader Class http://sudotutorials.com/tutorials/java/java-file-handling/java-filewriter-class.html : Java FileWriter Class http://sudotutorials.com/tutorials/java/multithreading-in-java/contents.html : Table of Contents http://sudotutorials.com/tutorials/java/multithreading-in-java/create-thread-in-java.html : Create Thread in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/create-multiple-thread-in-java.html : Create Multiple Thread in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-thread-lifecycle.html : Java Thread Lifecycle http://sudotutorials.com/tutorials/java/multithreading-in-java/thread-priority-in-java.html : Thread Priority in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/what-is-daemon-thread-in-java.html : Daemon Thread in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/synchronization-in-java.html : Thread Synchronization in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/difference-between-synchronized-block-and-method-in-Java.html : Difference Between Synchronized Block and Method in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-thread-interthread-communication.html : Java Interthread Communiction http://sudotutorials.com/tutorials/java/multithreading-in-java/java-thread-join-isalive-method.html : Java Thread join(), isAlive() Method http://sudotutorials.com/tutorials/java/multithreading-in-java/java-use-of-volitile-variable-in-thread.html : Use of Volatile Variable in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/object-level-locking-vs-class-level-locking-in-java.html : Object Level Locking and Class Level Locking in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/difference-between-wait-sleep-yield-in-java.html : Difference Between wait(), sleep() and yield() in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/how-to-create-a-immutable-class-in-java.html : How to Create Immutable Class in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-concurrent-api.html : Java Concurrent API http://sudotutorials.com/tutorials/java/multithreading-in-java/java-semaphore.html : Semaphore in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-countdownlatch.html : Java CountdownLatch Class http://sudotutorials.com/tutorials/java/multithreading-in-java/java-cyclicbarrier.html : Java CyclicBarrier Class http://sudotutorials.com/tutorials/java/multithreading-in-java/java-thread-exchanger.html : Java Exchanger in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-phaser.html : The Java Phaser Class http://sudotutorials.com/tutorials/java/multithreading-in-java/java-executor-interface.html : Java Executor Interface http://sudotutorials.com/tutorials/java/multithreading-in-java/java-concurrent-collection.html : Java Concurrent Collection http://sudotutorials.com/tutorials/java/multithreading-in-java/java-blocking-queue.html : Java BlockingQueue Interface http://sudotutorials.com/tutorials/java/multithreading-in-java/java-concurrent-map.html : Java Concurrent Map http://sudotutorials.com/tutorials/java/multithreading-in-java/java-concurrentnavigablemap.html : Java ConcurrentNavigableMap Concurrent Collection http://sudotutorials.com/tutorials/java/multithreading-in-java/java-fork-join-framework.html : Java Fork/Join Framework http://sudotutorials.com/tutorials/java/multithreading-in-java/java-locks.html : The Lock object in Java http://sudotutorials.com/tutorials/java/multithreading-in-java/java-atomicinteger.html : Java AtomicInteger Class

0 notes

Text

非同期処理

private static final BlockingQueue>Runnable> sPoolWorkQueue = new LinkedBlockingQueue>Runnable>(10); mThreadPool = new ThreadPoolExecutor( 5, 10, 1, TimeUnit.SECONDS, sPoolWorkQueue); for(int i = 0; i < 5; i++){ mThreadPool.execute(new Runnable(){ public void run() { // } }); }

private static int NUMBER_OF_CORES = Runtime.getRuntime().availableProcessors();

0 notes

Text

What are the differences between ResultSet and RowSet? . . . . For more questions about Java https://bit.ly/465SkSw Check the above link

#resultset#rowset#drivermanager#preparedstatement#execute#executequery#executeupdate#array#arraylist#jdbc#hashcode#collection#comparator#comparable#blockingqueue#hashSet#treeSet#set#map#hashMap#computersciencemajor#javatpoint

0 notes

Text

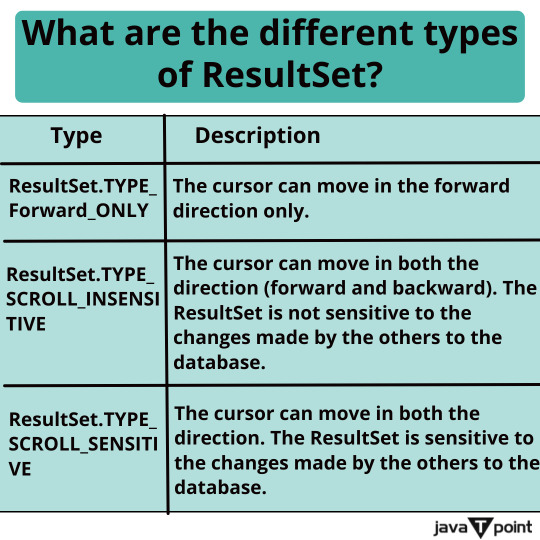

What are the different types of ResultSet? . . . . For more questions about Java https://bit.ly/465SkSw Check the above link

#preparedstatement#execute#executequery#executeupdate#resultset#array#arraylist#jdbc#hashcode#collection#comparator#comparable#blockingqueue#hashSet#treeSet#set#map#hashMap#iterator#listiterator#enumeration#list#collectionframework#ArrayList#thiskeyword#computersciencemajor#javatpoint

0 notes

Text

What are the differences between execute, executeQuery, and executeUpdate? . . . . For more questions about Java https://bit.ly/465SkSw Check the above link

#preparedstatement#execute#executequery#executeupdate#resultset#array#arraylist#jdbc#hashcode#collection#comparator#comparable#blockingqueue#hashSet#treeSet#set#map#hashMap#iterator#listiterator#enumeration#list#collectionframework#ArrayList#thiskeyword#computersciencemajor#javatpoint

0 notes