#but it's a proprietary format with a closed source server

Explore tagged Tumblr posts

Text

furiously researching mint's ban on the snap store even though i didnt know it existed yesterday and probably have zero need of it

#i think it's basically like the steam deck discover thingy or the mint software manager#which are like. the form factor of an app store for open source linux-friendly programs#but it's a proprietary format with a closed source server#that has full access to your root and installs shit without your permission#and malware has been found on it though rarely#so#fuck it

0 notes

Text

In which Arilin says pointed things about open source, open data, and social media

N.B.: As I was writing this, an entirely different kerfluffle started about Meta, Facebook's parent company, working on their own ActivityPub-compatible microblogging service. While that may be a field of land mines topic worth writing about, it's a topic for a different day.

Cohost's recent financial update revealing they are running on fumes can't help but bring to my mind what I wrote back in December:

[Cohost is] still a centralized platform, and that represents a single point of failure. If Cohost takes off, it's going to require a lot more money to run than they have right now, and it's hard to know if "Cohost Plus" will be enough to offset those costs.

I have a few friends who are Cohost partisans. While its "cram posts into 40% of the browser window width" aesthetic is claws on a chalkboard to me, it's easy to see why people like the service. They have the nice things from Twitter and Tumblr, but without the ads and the NSFW policies and the right-wing trolls and the Elons. They don't have the annoying things from Mastodon---the nerdy fiddliness around "instances" endemic to any such service, and the prickly, change-resistant and mansplainy culture endemic to Mastodon in particular. They even have a manifesto! (Who doesn't like a good manifesto?) Unfortunately, what they don't have is a business model---and unlike the vast majority of Mastodon instances, they need one.

To the degree I've become a social media partisan, it's for Mastodon, despite its cultural and technical difficulties. I'm not going to beat the federation drum again, though---not directly. Instead, I'd like to discuss "silos": services whose purpose is to share user-generated content, from tweets to photos to furry porn, but that largely lock that data in.

Let's pick two extremes that aren't social media: the blogging engine WordPress, and the furry story/image archive site Fur Affinity.

WordPress itself is open source. You can put up your own WordPress site or use any number of existing commercial hosts if you like.

WordPress has export and import tools: when you change WordPress hosts, you can bring everything with you.

WordPress has open APIs: you can use a variety of other tools to make and manage your posts, not just WordPress's website.

Fur Affinity is not open source. If FA goes away, there won't be another FA, unless they transfer the assets to someone else.

You can't move from another archive site to FA or move from FA to another archive site without doing everything manually. You can't even export lists of your social graph to try to rebuild it on another site.

FA has no API, so there's no easy way for anyone else to build third-party tools to work with it.*

Cohost is, unfortunately, on the FA side of the equation. It has no official, complete API, no data export function, no nothing. If it implodes, it's taking your data down with it.

Mastodon, for all of its faults, is open-source software built on an open protocol. Anyone with sufficient know-how and resources can spin up a Mastodon (or Mastodon-compatible) server, and if you as a user need to move to a different instance for any reason, you can. And I know there are a lot of my readers who don't dig Mastodon ready to point out all the asterisks there, the sharp edges, the failures in practice. But if you as a user need to move to a different Cohost instance for any reason, there is only one asterisk there and the asterisk is "sorry, you're fucked".

I've often said that I'm more interested in open data than open source, and that's largely true: since I write nearly everything in plain text with Markdown, my writing won't be trapped in a proprietary format if the people who make my closed-source editors go under or otherwise stop supporting them. But, I'm not convinced that a server for a user-generated content site doesn't ultimately need both data and source to be open. A generation ago, folks abandoning LiveJournal who wanted to keep using an LJ-like system could migrate to Dreamwidth nearly effortlessly. Dreamwidth was a fork of LJ's open-source server, and LJ had a well-documented API for posting, reading, importing, and exporting.

To be clear, I hope Cohost pulls out of their current jam. They seem to have genuinely good motivations. But even the best of intentions can't guarantee…well, anything. Small community-driven sites have moderation faceplants all the damn time. And sites that get big enough that they can no longer be run as a hobby will need revenue. If they don't have a plan to get that revenue, they're going to be in trouble; if they do have a plan, they face the danger of enshittification. Declaring your for-profit company to be proudly anti-capitalist is not, in the final analysis, a solution to this dilemma.

And yet. I can't help but read that aspirational "against all things Silicon Valley" manifesto, look at the closed source, closed data, super-siloed service they actually built in practice, and wonder just how those two things can be reconciled. At the end of the day, there's little more authentically Silicon Valley than lock-in.

------

*I know you're thinking "what about PostyBirb"; as far as I know, PostyBirb is basically brute-forcing it by "web scraping". This works, but it's highly fragile; a relatively small change on FA's front end, even a purely aesthetic one, might break things until PostyBirb can figure out how to brute-force the new design.

13 notes

·

View notes

Text

The Dark Side

I hate Hewlett-Packard's PC division. I hate it with the fury of a thousand suns.

Long vent post. Logorrhea below. Clicky.

My go-to portable is 2022's edition of the Razer Blade 14. It's small, reasonably lightweight, it has an admitted paucity of ports, but at least two of them are USB-A 3.0. It's not terribly modular, but the hard drive is easily accessible and replaceable with any commodity M.2 or NVME solution you could think of. Thankfully, all the vendors that served as sources for the hardware are covered by Windows Update for drivers. The keyboard's the perfect size for someone like me who tends to stick his hands in one position near the Home row and that occasionally shifts up and down, and the Chicklet keys feel clicky, tactile and responsive. I can feel my typos as they happen, so I don't typically miss a lot of 'em.

Compare and contrast with what happens when a Boomer decides to buy a slab on the cheap. The HP CN000x "notebook" is a heaving monster that claims to be portable but that has the form factor of a Desktop Replacement, with seventeen inches of screen that more or less scream overkill for anything clerical. The keycaps are mushy and massive, they're spaced too far apart from one another to allow for comfortable muscle-memory typing or for sniping around your home row without looking down - and the Power button is somehow wedged between the F7 and F8 keys, all but guaranteeing that you're going to put your machine to sleep inadvertantly.

All of the above amounts to annoyances, however. What really gets me is what the vendor assumes to be entreprise-grade hardware and security features, when in fact it all amounts to a collection of bloatware and generally inexplicable features crammed at an entry-level price point.

Hardware-level encryption on top of the base TPM layer? She's gonna be fetching emails from her ISP's server and watching cat videos. Entreprise-grade offsite storage shoved in your face upon boot-up? I wouldn't give this shitty keyboard to anyone Corporate even if I actively hated a suit. Dedicated hybrid SSDs? We're in 2023, memory is dirt fucking cheap; there's no real excuse to be pushing spinning rust on someone who has about zero mass storage needs.

Then, there's the kicker: this is an HP laptop, and you cannot, in fact, simply flash a new OS on there and just expect it to recognize everything. You cannot, because the Live media you'll create also needs to receive driver packages specifically from HP in order for the rig to recognize its own hard-mounted and unremovable hard drives.

The only problem? Each fucking SKU has its own driver solution. You need to hit up HP's Support site, let it scan your hardware (rootkits, ew) and then narrow down its fuckjillion "matches" to the one missing driver file it has in its database. A driver file that doesn't, in fact, relate to those drives you're trying to format. A driver that relates to the equally-useless FC reader and that somehow also allows the hybrid drive to show up on the Drives list in a new Windows 11 install.

So, I found the driver, installed it, crossed my fingers... and it still didn't work.

Hunting online got me to see that while HP had shipped this particular line of 17-inch laptops with Windows 11, not all of the hardware was compliant with Windows installs that start at 22H2.

So, knowing I have some spare SSDs that won't throw a fit, I add one in. Zippo. This particular model only accepts proprietary parts. That's without mentioning how the four plus-sized Philips screws that held up the bottom plate were a lie, more or less promising some degree of accessibility only to expose a fucking moron's take on Apple's own closed-sourced engineering.

I mean, I have to hand this to Apple, at least - its hardware plans are graceful. Every PCB goes where it's supposed to and its connectors aren't unnaturally long in order to account for some oversight in general placement. There's no globs, no wasted space - and even if you're not supposed to, you get the sense that any sane person with a screwdriver can at least disassemble and reassemble your average iDevice without making too much of a mess.

HP? Fuck, HP is so concerned with being "helpful" that it buries base components you'd want to easily access - like the SSD or RAM - under a mess of daughterboards.

By this point, I'm on the verge of hunting down who sold that poor lady this hunk of junk and braining them with it. My one and only option is to submit to HP's in-built Recovery partition, which probably runs on the Devil's idea of soldered-on EMMC.

Flashing the SSD portion of a hybrid drive, on the 25th of July, 2023, on a reasonably modern processor and 16 GBs of RAM, took all of eight hours. Eight hours of waiting while Walt swallowed flies, as HP opted, in its infinite wisdom, to hack up Windows 11's installation wizard with a bevy of paid offers that obviously had to include a fucking McAffee antivirus trial. After digging, I realized I'd dodged about ten gigs' worth of bloatware.

Windows is running and so am I, as I'm the abomination's temporary Administrator, while I sort everything out. The second part of my woes is going to unfold tomorrow, as I have to explain to a senior citizen that Microsoft actually wanting to text its userbase to authenticate them at common access portals is actually normal. Couple that with the fact that she's so concerned with security that she's set in passwords consisting of Complete fucking sentences she hasn't bothered to note down anywhere, and restoring her accounts is going to be such a PITA I'm going to stop for schwarma after two hours.

Be a good Admin, if you can, and take a blowtorch to anything HP-branded that isn't a printer or a flat-bed scanner. Oh, and don't be afraid to remind tech-averse Boomers that if you aren't going to be taking this particular machine online and only need to fetch codecs and word processor downloads, then there's nothing wrong with having "123456" as an account password. They're not going to remember anything else anyway, and expecting them to make sense of alphanumerics or special characters in a password is a fool's fucking errand. They won't note it down properly and then they'll call you six months to a year later, complaining that their system is bricked.

Hewlett-Packard computers are bloated and heaving paperweights.

That is all.

1 note

·

View note

Text

Trade The Information - Profiting From Trading With Low Latency News Feeds

Experienced traders acknowledge the impacts of international adjustments on Foreign Exchange (Forex/FX) markets, stock exchange, and also futures markets. Aspects such as interest rate decisions, the rising cost of living, retail sales, unemployment, industrial productions, customer self-confidence studies, service view surveys, trade equilibrium, and also manufacturing surveys influence currency motion.

While investors could check this info by hand making use of conventional information sources, profiting from automated or algorithmic trading utilizing reduced latency news feeds is a typically extra predictable and reliable trading method that can boost earnings while decreasing risk.

The faster a trader can receive economic information, assess the data, make decisions, use danger monitoring models, and carry out professions, the extra successful they can come to be. Automated traders are typically a lot more successful than hand-operated traders because the automation will certainly use an examined rules-based trading method that uses money management as well as risk administration techniques.

The approach will refine trends, analyze data, and perform trades much faster than a human without emotion. To make use of the reduced latency news feeds it is essential to have the right reduced latency information feed supplier, have an appropriate trading approach and also the appropriate network facilities to make certain the fastest feasible latency to the news source to defeat the competition on order access and loads of implementation.

How Do Reduced Latency News Feeds Work?

Reduced latency information feeds offer vital financial data to advanced market individuals for whom speed is a top concern. While the remainder of the globe gets financial information via accumulated news feeds, bureau services, or electronic media such as news web sites, radio or tv reduced latency information investors depend on the lightning-quick shipment of key financial releases.

These include task figures, inflation information, and also producing indexes, directly from the Bureau of Labor Data, Business Division, and the Treasury Press Space in a machine-readable feed that is enhanced for algorithmic traders.

One method of controlling the launch of news is a stoppage. After the embargo is raised for news occasions, press reporters enter the release information right into a digital format, which is immediately distributed in an exclusive binary layout. The data is sent over exclusive networks to numerous circulation factors near numerous large cities around the world.

To receive the news data as swiftly as feasible, a trader must use a legitimate low latency information service provider that has invested heavily in modern technology infrastructure. Embargoed data is requested by a source not to be released before a certain date and also time or unless particular conditions have been fulfilled. The media is provided innovative notice to get ready for the release.

Information companies additionally have press reporters in closed Federal government press rooms during a specified lock-up duration. Lock-up information durations manage the release of all information so that every information outlet releases it concurrently. This can be done in 2 ways: "Finger Press" and also "Switch over Release" are utilized to manage the launch.

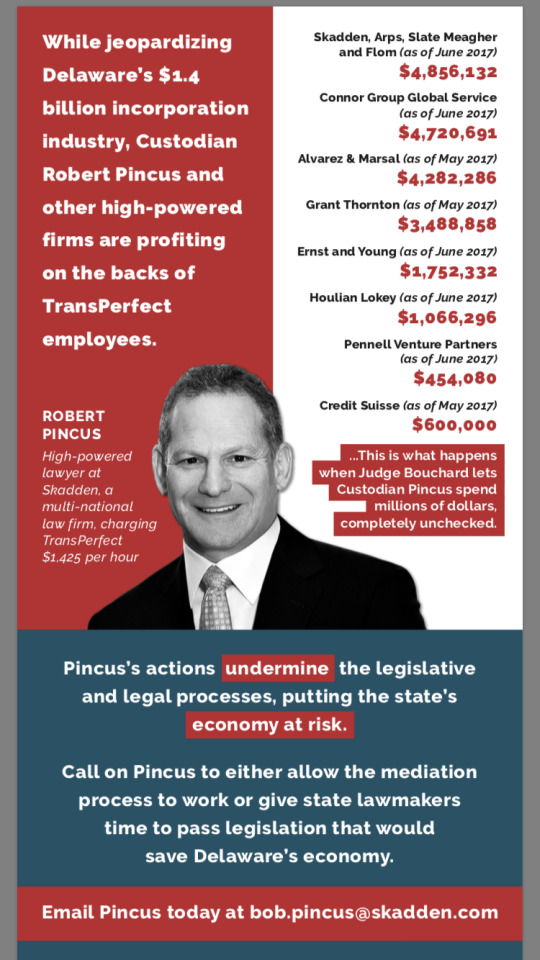

Information feeds attribute economic as well as corporate news that affects trading activity worldwide. Economic signs are made use of to assist in trading decisions. The news is fed into an algorithm that parses, settles, evaluates, and also makes trading suggestions based upon the information Andre Bouchard. The algorithms can filter the information, create indicators as well as assist investors in making split-second decisions to prevent significant losses.

Automated software application trading programs make it possible for faster trading choices. Choices made in microseconds might correspond to a significant side in the marketplace.

News is a good indicator of the volatility of a market as well as if you trade the information, chances will provide themselves. Investors tend to panic when a news report is released, and under-react when there is little news. Maker legible information offers historical data with archives that enable traders to back examination price motions versus details economic indications.

Each nation releases essential financial information during certain times of the day. Advanced investors examine and execute trades practically instantaneously when the announcement is made. Instantaneous evaluation is implemented with automated trading with low latency news feed. Automated trading can play a part in a trader's danger monitoring as well as loss avoidance method. With automated trading, historical backtests, as well as formulas, are used to pick optimum entrance as well as leave points.

Traders must understand when the data will certainly be released to understand when to keep an eye on the market. For instance, important financial data in the United States is launched in between 8:30 AM and also 10:00 AM EST. Canada releases details in between 7:00 AM as well as 8:30 AM. Since currencies cover the world, traders may always discover a market that is open and prepared for trading.

A SAMPLE of Major Financial Indicators

Customer Price Index

Employment Cost Index

Work Scenario

Producer-Consumer Price Index

Efficiency and also Prices

Genuine Revenues

UNITED STATE Import and also Export Costs

Employment & Unemployment

Where Do You Place Your Web servers? Important Geographical Places for algorithmic trading Strategies

Most of the capitalists that trade the information sought to have their algorithmic trading systems hosted as close as possible to the information source and also the implementation place as possible. General circulation locations for reduced latency news feed carriers include globally: New York, Washington DC, Chicago, and also London.

The ideal areas to position your web servers are in well-connected datacenters that allow you to straight link your network or servers to the real information feed source and execution venue. There has to be an equilibrium of distance and also latency between both. You need to be close enough to the news to act on the launches nonetheless, close sufficient to the broker or exchange to get your order in ahead of the masses seeking the best fill.

Low Latency Information Feed Providers

Thomson Reuters makes use of proprietary, state of the modern art technology to create a low latency information feed. The information feed is developed specifically for applications and also is maker readable. Streaming XML program is made use of to produce a complete message and also metadata to make certain that financiers never miss an event.

One more Thomson Reuters information feed includes macro-economic occasions, all-natural calamities, and also physical violence in the nation. An evaluation of the news is launched. When the category gets to a limit, the financier's trading and also danger management system is notified to activate access or exit point from the marketplace.

Thomson Reuters has a one-of-a-kind edge on global information compared to other service providers being one of the most recognized organization news firms on the planet, if not one of the most revered beyond the USA. They have the benefit of consisting of global Reuters Information to their feed in addition to third-party newswires as well as Financial data for both the USA and also Europe. The College of Michigan Study of Consumers record is also another major information event and also launches data twice monthly. Thomson Reuters has special media civil liberties to The University of Michigan information.

Other low latency information providers consist of: Required to Know Information, Dow Jones News, and Rapidata, which we will discuss further when they make details regarding their services much more available.

Examples of Information Impacting the Markets

A news feed might show a change in the unemployment rate. For the sake of the circumstance, unemployment rates will reveal a positive change. The historical evaluation might reveal that the change is not due to seasonal results. Newsfeeds program that customer confidence is enhancing due to the decline in unemployment rates. Reports offer a solid indication that the unemployment price will stay low.

With these details, evaluation might show that traders must short the USD. The algorithm might figure out that the USD/JPY set would produce one of the most profits. An automated profession would be executed when the target is reached, and the trade will certainly be on auto-pilot till completion.

The dollar could remain to drop in spite of reports of unemployment improvement supplied from the news feed. Financiers must keep in mind that multiple variables affect the activity of the United States Buck. The unemployment rate might drop, but the overall economic climate might not boost if bigger financiers do not transform their perception of the buck; after that, the buck may continue to fall.

The huge gamers will generally make their decisions before the majority of the retail or smaller sized investors. Large player choices might impact the market in an unforeseen method. If the decision is made on only info from the joblessness, the assumption will be wrong. Non-directional predisposition assumes that any major news concerning a nation will develop a trading chance. Directional-bias trading makes up all possible financial indications consisting of responses from major market gamers.

Trading The News - The Bottom Line

News relocates the marketplaces, and also, if you trade the news, you can exploit. There are very few of us that can argue against that. There is no doubt that the investor obtaining news data in advance of the curve has the side on obtaining a solid short-term profession on momentum sell different markets, whether FX, Equities, or Futures.

The cost of reduced latency infrastructure has dropped over a previous couple of years making it feasible to subscribe to a reduced latency news feed and obtain the information from the source providing a tremendous side over traders viewing tv, the Internet, radio or basic information feeds. In a market-driven by large banks and also hedge funds, reduced latency information feeds provide the large company side to also private traders.

1 note

·

View note

Text

Best AutoCAD Training Course Institute

Our Institute provides Best Autocad Training in as per the current industry standards. Our training programs will enable professionals to secure placements in MNCs. Our Institute is one of the most recommended Autocad Training Institute in that offers hands on practical knowledge / practical implementation on live projects and will ensure the job with the help of advance level Autocad Training Courses. At Our Institute Autocad Training in is conducted by specialist working certified corporate professionals having 8+ years of experience in implementing real-time Autocad.

ENQUIRY NOW

Our Institute is well-equipped Autocad Training Center. Candidates will implement the following concepts – Coordinate Systems, Drawing Lines & Circles., Erasing Object., Canceling & Undoing A Command, Inputting Data, Creating Basic Objects, Using Object Snaps, Using Polar Tracking And Polar Snap, Using Object Snap Tracking, Working With Units etc. on real time projects along with Autocad Placement Training modules like aptitude test preparation etc.

Our Institute is the well-known Autocad Training Center in with high tech infrastructure and lab facilities. We also provide online access of servers so that candidates will implement the projects at their home easily. Our Institute in mentored more than 3000+ candidates with Autocad Certification Training in at very reasonable fee. The course curriculum is customized as per the requirement of candidates/corporates.

In addition to this, our classrooms are built-in with projectors that facilitate our students to understand the topic in a simple manner.

Our Institute is one of the best Autocad Training Institutes in with 100% placement support. We are following the below “P3-Model (Placement Preparation Process)” to ensure the placement of our candidates: View Our Latest Placement Record.

Our strong associations with top organizations like HCL, Wipro, Dell, Birlasoft, TechMahindra, TCS, IBM etc. makes us capable to place our students in top MNCs across the globe. We have placed thousands of students according to their skills and area of interest that makes us preferred Autocad Training Institute in . Next, we closely monitor the growth of students during training program and assist them to increase their performance and level of knowledge.

Autocad Training Overview

AutoCAD is a computer-aided design (CAD) program used for 2-D and 3-D design and drafting. AutoCAD is developed and marketed by Autodesk Inc. and was one of the first CAD programs that could be executed on personal computers.

CONTACT US

AutoCAD was initially derived from a program called Interact, which was written in a proprietary language. The first release of the software used only primitive entities such as polygons, circles, lines, arcs and text to construct complex objects. Later, it came to support custom objects through a C++ application programming interface. The modern version of the software includes a full set of tools for solid modeling and 3-D. AutoCAD also supports numerous application program interfaces for automation and customization.

DWG (drawing) is the native file format for AutoCAD and a basic standard for CAD data interoperability. The software has also provided support for Design Web Format (DWF), a format developed by Autodesk for publishing CAD data.

Why you should Join Our Institute for Autocad Training in Reasons which makes us best among all others:

We provide video recording tutorials of the training sessions, so in case if candidate missed any class he/she can utilize those video tutorials.

All our training programs are based on live industry projects.

All our training programs are based on current industry standards.

Our training curriculum is approved by our placement partners.

Training will be conducted on daily& weekly basis and also, we can customize the training schedule as per the candidate requirements.

Live Project based training with trainers having 5 to 15 years of Industry Experience.

Training will be conducted by certified professionals.

Our Labs are very well-equipped with latest version of hardware and software.

Our classrooms are fully geared up with projectors &Wi-Fi access. 100 % free personality development classes which includes Spoken English, Group Discussions, Mock Job interviews & Presentation skills.

You will get study material in form of E-Book’s, Online Videos, Certification Handbooks, Certification Dumps and 500 Interview Questions along with Project Source material.

Worldwide Recognized Course Completion Certificate, once you’ve completed the course.

Flexible Payment options such as Cheque, EMI, Cash, Credit Card, Debit Card and Net Banking.

#Best AutoCAD Training Course Institute#AutoCAD Training#AutoCAD Course#AutoCAD Institute#AutoCAD Training Course#AutoCAD Training institute#AutoCAD Course institute

1 note

·

View note

Text

CD To MP3

To transform a video you don't have to make use of a pc or a web-based service. Its interface is sort of identical to Free YouTube to MP3 converter - a clear, self-explanatory affair with extra advanced settings tucked away in an Choices menu. There, you can choose to close down your COMPUTER as soon as the obtain is completed, download through a proxy, and paste URLs from the clipboard automatically. It's able to converting your audio file to MP3 in high quality. No matter you need to convert video or audio, it will possibly add all of the files with an on the spot. What's more, you can also extract music track to MP3. It could actually assist more than 50 supply formats and also MP2 to MP3 is included. Having a attempt will not be dangerous. Youzik is the fastest on-line website allowing you to obtain Youtube movies in mp3, no third occasion program installation is required, no plugin, not even a sign up, you simply have to go looking or instantly copy an url of your alternative within the above enter. Bigasoft FLAC Splitter helps to seamlessly break up FLAC recordsdata with CUE and convert FLAC to MP3, M4A, WAV, ALAC, etc in one step. Go to detailed information now. Batch conversion in VLC works the same regardless of whether you're converting audio or video. The process is exactly the identical and comprises only some steps. The precise conversion process may take time although - video information especially are very large and even highly effective computers need time to work on them. Much is dependent upon the type of file, the dimensions of it, the format you're changing from and to and the specs of your computer. Level MP3 is designed for simplicity, fluidity, and distinctive efficiency. In the event you need the most trending movies transferred from YouTube to your device within seconds, Point MP3 is your saving grace. The result's unparalleled, uninterrupted audio and video anytime, anyplace with out making a gap in your pocket. The right way to convert MP2 to MP3 with Video Converter Ultimate? Simply be taught more about the detailed process to transform MP2 to MP3 as beneath. Free Video to MP3 converter is an easy application, and with its pleasant user interface even probably the most fundamental users can extract audio from video recordsdata. The appliance is free to use, but it comes with certain limitations that you would be able to remove by buying the Premium model. You may also want to try Freemake Video Converter , one other program from the same builders as Freemake Audio Converter that supports audio codecs too. It even helps you to convert local and on-line videos into other formats. Nevertheless, mp2 to mp3 converter free download full version for windows 10 whereas Freemake Audio Converter does assist MP3s, their video software program does not (until you pay for it). FLAC to MP3 Converter converts your FLAC files into MP3 format for lowering file size, saving disk house and taking part in them in your MP3 participant and iPod.

No tech information required. Intuitive interface makes it straightforward for everyone to be the master of audio conversions. Should you have no idea what bit fee or frequency to choose the wizard of this system will routinely set the most acceptable. The clear, easy interface makes changing information quick and easy. And it additionally comes with a primary participant to take heed to tracks. Nevertheless, the free model doesn't support lossless formats like FLAC until you upgrade. But, if all you want to do is convert to MP3 for instance then it's still a great tool. Anything2MP3 is a free online SoundCloud and YouTube to MP3 conversion tool which allows you to convert and obtain SoundCloud and YouTube movies to MP3. All you want is a URL and our software program will download the SoundCloud or YouTube video to your browser, convert it after which help you save the converted file. Most individuals use our service to convert SoundCloud and YouTube to mp3, however we have now many supported services. Home windows Integration: Property, Thumbnail Handlers and Shell Integration extend home windows to offer tag modifying inside of Home windows explorer. Especially useful is the flexibility for dBpoweramp to add help for file types Windows doesn't natively help, or prolong support comparable to mp3 IDv2.four tags. Album artwork show and intensive popup info tips are also supplied, dBpoweramp is so much more than a easy mp3 converter. OGG Vorbis (generally just known as Vorbis) is an open source patent-free audio compression format, developed as a alternative for proprietary digital audio encoding formats, akin to MP3, VQF, and AAC. Whenever you obtain the sound file with OGG format and your MP3 Player cannot import and play it, you'd be so depressed. Now, you need to do is to transform OGG to MP3 after which for playback on your MP3 Gamers. Thus chances are you'll want a simple but powerful application that may remodel your OGG information to those encoded within the MP3 format. Nowadays you possibly can at all times convert one or two audio recordsdata easily with an internet audio converter however what if you have to convert lots of(or much more) of audio files? It is not like you possibly can upload all of them to or obtain from online audio converters' server in a second - it is not realistic, particularly if you end up in an urgent want of the work. All the MP3 converter software we picked can batch convert MP3 information at a satisfying speed(150 recordsdata transformed inside 1 hour). Click on Convert button to start to convert MP3 to MP2 or different audio format you desired. A conversion dialog will seem to indicate the progress of mp3 to mp2 to mp3 audio converter conversion If you want to stop the method, please click on Stop button. After the conversion, you presumably can click on the Output Folder button to get the transformed recordsdata and swap to your iPod, iPad, iPhone, mp3 player or laborious driver. By converting the unique file to MP3, users can freely put the converted MP3 files to portable units to take pleasure in. Wondershare Video Converter Ultimate can convert audio for standard audio gamers equivalent to iPod classic, iPod contact, Zune, and every kind of other MP3 players. It will probably additionally present 300% changing pace, which is much faster than every other video converter program on the Internet. Video Converter Final additionally converts between standard audio codecs including convert MP3 to AAC, convert WMA to MP3, convert WAV to MP3, convert MP3 to MKA, convert wma to OGG, convert audio to AAC, M4A, APE, AIFF, and mp2 to mp3 converter mac many others. Furthermore, this program means that you can alter audio bitrate, audio channel, pattern rate and let you select an audio encoder. This guide will show you the best way to use this audio converter program intimately.

1 note

·

View note

Text

Trade The News - Profiting From Trading With Low Latency News Feeds

Experienced traders identify the effects of global changes about Foreign Exchange (Forex/FX) markets, stock markets and futures markets. Factors such as interest rate decisions, inflation, retail sales, unemployment, industrial productions, consumer confidence surveys, business sentiment surveys, trade balance and manufacturing surveys impact currency movement. While traders could monitor this information manually using traditional news sources, profiting from automated or algorithmic trading utilizing low latency news feeds is an often more predictable and effective trading method that can increase profitability while reducing risk.

The faster a trader can receive economic news, analyze the data, make decisions, apply risk management models and execute trades, the more lucrative they can become. Automated traders are generally more successful than manual traders because the automation will use a tested rules-based trading strategy that employs money management and risk management techniques. The strategy will process trends, analyze data and execute trades faster than a human with no emotion. In order to take advantage of the low latency news feeds it is essential to have the right low latency news feed provider, have a proper trading strategy and the correct network infrastructure to ensure the quickest possible latency to the news source in order to beat the competition on order entries and fills or execution.

How Do Low Latency News Feeds Work?

Low latency news feeds provide key economic data to sophisticated market participants for whom speed is definitely a top priority. While the rest of the world receives economic news through aggregated news feeds, bureau services or mass media such as news web sites, television or radio low latency news traders count on lightning fast delivery of key financial releases. These include jobs numbers, inflation data, and developing indexes, from the Bureau of Labor Statistics straight, Commerce Division, and the Treasury Press Space in a machine-readable feed that's optimized for algorithmic investors.

One technique of controlling the launch of news can be an embargo. Following the embargo can be lifted for information event, reporters enter the launch data into electronic file format which is distributed in a proprietary binary file format immediately. The info is sent over personal networks to many distribution points near different large cities all over the world. In order to have the information data as feasible quickly, it is essential a trader make use of a valid low latency information provider which has invested seriously in technology infrastructure. Embargoed data can be requested by a resource not to be released before a particular date and period or unless certain circumstances have already been met. The press is given advanced see in order to plan the release.

News agencies likewise have reporters in sealed Authorities press rooms throughout a defined lock-up period. Lock-up data intervals simply regulate the launch of most news data to ensure that every news store releases it concurrently. This is often done in two methods: "Finger push" and " Change Release" are accustomed to regulate the release.

News feeds feature corporate and financial news that influence trading activity worldwide. Economic indicators are accustomed to facilitate trading decisions. The news headlines is usually fed into an algorithm that parses, consolidates, analyzes and makes trading suggestions centered when the news headlines. The algorithms can filtration system the news, create help and indicators investors make split-second preferences in order to avoid substantial losses.

Automated software trading programs allow faster trading decisions. Decisions manufactured in microseconds might mean a sizeable edge on the market.

News is an excellent indicator of the volatility of market and if you trade the information, opportunities shall present themselves. Traders have a tendency to overreact whenever a news record is normally released, and under-react when there is quite little information. Machine readable information provides traditional data through archives that allow traders to back check price movements against particular economical indicators.

Each national country releases important financial news during certain times of the day. Advanced investors analyze and execute trades nearly when the announcement is manufactured instantaneously. Instantaneous analysis is manufactured feasible through automated trading with low latency information feed. Automated trading can play a role of a trader's risk administration and loss avoidance technique. With automated trading, traditional back algorithms and exams are utilized to choose optimum entry and exit factors.

Traders got to know when the data will be released to learn when to monitor the marketplace. For instance, important financial data in the usa is released between 8: 30 AM and 10: 00 AM EST. Canada releases information between 7: 00 AM and 8: 30 AM. Since currencies span the world, traders may look for a market that is open and ready for trading always.

A SAMPLE of Main Economic Indicators Consumer Price Index Employment Cost Index Employment Situation Producer Price Index Productivity and Costs Real Earnings U. S. Export and import Prices Employment + Unemployment

Where Do You Put Your Servers? Essential Geographic Places for algorithmic trading Strategies

Nearly all investors that trade the news headlines seek to possess their algorithmic trading platforms hosted as close as possible to news source and the execution venue as possible. General distribution places for low latency information feed suppliers include globally: NY, Washington DC, London and chicago.

The ideal places to put your servers are in well-connected datacenters that permit you to directly join your network or servers to the actually news feed source and execution venue. There has to be a balance of distance and between both latency. You should be close more than enough to the news headlines in order to do something about the releases nevertheless, close more than enough to the broker or exchange to really get your purchase in prior to the masses looking to find the best fill.

Low News Feed Providers Latency

Thomson Reuters uses proprietary, state of the innovative art technology to produce a low latency news feed. The news feed is made for applications and is machine readable particularly. Streaming XML broadcast can be used to produce full text message and metadata to make sure that investors hardly ever miss an event.

Another Thomson Reuters information feed features macro- financial events, organic disasters and violence in the national country. An evaluation of the news headlines is released. Whenever a threshold is normally reached by the category, the investor's trading and risk administration program is notified to result in an access or exit stage from the marketplace. Thomson Reuters includes an unique advantage on global news in comparison to other suppliers being probably the most respected business news organizations in the globe if not the esteemed outside of america. They have the benefit of including global Reuters News with their feed furthermore to third-party newswires and Economic data for both USA and Europe. The University of Michigan Study of Customers report is another main news event and releases data twice regular also. Thomson Reuters has exceptional mass media rights to The University of Michigan data.

Various other low latency news suppliers include: Have to know News, Dow Jones News and Rapidata which we will discuss if they make details regarding their services even more available further.

Types of News Affecting the Markets

A news feed might indicate a noticeable alter in the unemployment price. With regard to the scenario, unemployment prices shall present a positive change. Historical analysis may present that the change isn't because of seasonal effects. News feeds show that buyer confidence is increasing due the decrease in unemployment rates. Reports provide a strong indication that the unemployment rate will remain low.

With this information, analysis may indicate that traders should short the USD. The algorithm may determine that the USD/JPY pair would yield the most profits. An automatic trade would be executed when the target is reached, and the trade will be on auto-pilot until completion.

The dollar could continue to fall despite reports of unemployment improvement provided from the news feed. Investors must keep in mind that multiple factors affect the movement of the United States Dollar. The unemployment rate may drop, but the overall economy may not improve. If larger investors do not change their perception of the dollar, then the dollar may continue to fall.

The big players will typically make their options prior to most of the retail or smaller traders. Big player options may affect the market in an unexpected way. If the decision is made on only information from the unemployment, the assumption will be incorrect. Non-directional biassumes that any major news about a country will create a trading opportunity. Directional-bias trading accounts for all possible economic indicators including responses from major market players.

Trading The News - The Bottom Line

News moves the markets and if you trade the news, you can capitalize. There are very few of us that can argue against that fact. There is no doubt that the trader receiving news data ahead of the curve has the edge on getting a solid short-term trade on momentum trade in various markets whether FX, Equities or Futures. The price of low latency infrastructure offers dropped over the past few years making it possible to subscribe to a low latency news feed and receive the data from the source giving a tremendous edge over traders watching television, the Internet, radio or standard news feeds. In a market driven by large banks and hedge funds, low latency news feeds certainly give the big company edge to even individual traders .

Get to know more about ICO News

1 note

·

View note

Text

World After Capital: Bots for All of Us (Informational Freedom)

NOTE: I have been posting excerpts from my book World After Capital. Currently we are on the Informational Freedom section and the previous excerpt was on Internet Access. Today looks at the right to be represented by a bot (code that works on your behalf).

Bots for All of Us

Once you have access to the Internet, you need software to connect to its many information sources and services. When Sir Tim Berners-Lee first invented the World Wide Web in 1989 to make information sharing on the Internet easier, he did something very important [95]. He specified an open protocol, the Hypertext Transfer Protocol or HTTP, that anyone could use to make information available and to access such information. By specifying the protocol, Berners-Lee opened the way for anyone to build software, so-called web servers and browsers that would be compatible with this protocol. Many did, including, famously, Marc Andreessen with Netscape. Many of the web servers and browsers were available as open source and/or for free.

The combination of an open protocol and free software meant two things: Permissionless publishing and complete user control. If you wanted to add a page to the web, you didn't have to ask anyone's permission. You could just download a web server (e.g. the open source Apache), run it on a computer connected to the Internet, and add content in the HTML format. Voila, you had a website up and running that anyone from anywhere in the world could visit with a web browser running on his or her computer (at the time there were no smartphones yet). Not surprisingly, content available on the web proliferated rapidly. Want to post a picture of your cat? Upload it to your webserver. Want to write something about the latest progress on your research project? No need to convince an academic publisher of the merits. Just put up a web page.

People accessing the web benefited from their ability to completely control their own web browser. In fact, in the Hypertext Transfer Protocol, the web browser is referred to as a “user agent” that accesses the Web on behalf of the user. Want to see the raw HTML as delivered by the server? Right click on your screen and use “view source.” Want to see only text? Instruct your user agent to turn off all images. Want to fill out a web form but keep a copy of what you are submitting for yourself? Create a script to have your browser save all form submissions locally as well.

Over time, popular platforms on the web have interfered with some of the freedom and autonomy that early users of the web used to enjoy. I went on Facebook the other day to find a witty note I had written some time ago on a friend's wall. It turns out that Facebook makes finding your own wall posts quite difficult. You can't actually search all the wall posts you have written in one go; rather, you have to go friend by friend and scan manually backwards in time. Facebook has all the data, but for whatever reason, they've decided not to make it easily searchable. I'm not suggesting any misconduct on Facebook's part—that's just how they've set it up. The point, though, is that you experience Facebook the way Facebook wants you to experience it. You cannot really program Facebook differently for yourself. If you don't like how Facebook's algorithms prioritize your friends' posts in your newsfeed, then tough luck, there is nothing you can do.

Or is there? Imagine what would happen if everything you did on Facebook was mediated by a software program—a “bot”—that you controlled. You could instruct this bot to go through and automate for you the cumbersome steps that Facebook lays out for finding past wall posts. Even better, if you had been using this bot all along, the bot could have kept your own archive of wall posts in your own data store (e.g., a Dropbox folder); then you could simply instruct the bot to search your own archive. Now imagine we all used bots to interact with Facebook. If we didn't like how our newsfeed was prioritized, we could simply ask our friends to instruct their bots to send us status updates directly so that we can form our own feeds. With Facebook on the web this was entirely possible because of the open protocol, but it is no longer possible in a world of proprietary and closed apps on mobile phones.

Although this Facebook example might sound trivial, bots have profound implications for power in a networked world. Consider on-demand car services provided by companies such as Uber and Lyft. If you are a driver today for these services, you know that each of these services provides a separate app for you to use. And yes you could try to run both apps on one phone or even have two phones. But the closed nature of these apps means you cannot use the compute power of your phone to evaluate competing offers from the networks and optimize on your behalf. What would happen, though, if you had access to bots that could interact on your behalf with these networks? That would allow you to simultaneously participate in all of these marketplaces, and to automatically play one off against the other.

Using a bot, you could set your own criteria for which rides you want to accept. Those criteria could include whether a commission charged by a given network is below a certain threshold. The bot, then, would allow you to accept rides that maximize the net fare you receive. Ride sharing companies would no longer be able to charge excessive commissions, since new networks could easily arise to undercut those commissions. For instance, a network could arise that is cooperatively owned by drivers and that charges just enough commission to cover its costs. Likewise, as a passenger using a bot could allow you to simultaneously evaluate the prices between different car services and choose the service with the lowest price for your current trip. The mere possibility that a network like this could exist would substantially reduce the power of the existing networks.

We could also use bots as an alternative to anti-trust regulation to counter the overwhelming power of technology giants like Google or Facebook without foregoing the benefits of their large networks. These companies derive much of their revenue from advertising, and on mobile devices, consumers currently have no way of blocking the ads. But what if they did? What if users could change mobile apps to add Ad-Blocking functionality just as they can with web browsers?

Many people decry ad-blocking as an attack on journalism that dooms the independent web, but that's an overly pessimistic view. In the early days, the web was full of ad-free content published by individuals. In fact, individuals first populated the web with content long before institutions joined in. When they did, they brought with them their offline business models, including paid subscriptions and of course advertising. Along with the emergence of platforms such as Facebook and Twitter with strong network effects, this resulted in a centralization of the web. More and more content was produced either on a platform or moved behind a paywall.

Ad-blocking is an assertion of power by the end-user, and that is a good thing in all respects. Just as a judge recently found that taxi companies have no special right to see their business model protected, neither do ad-supported publishers [96]. And while in the short term this might prompt publishers to flee to apps, in the long run it will mean more growth for content that is paid for by end-users, for instance through a subscription, or even crowdfunded (possibly through a service such as Patreon).

To curtail the centralizing power of network effects more generally, we should shift power to the end-users by allowing them to have user agents for mobile apps, too. The reason users don't wield the same power on mobile is that native apps relegate end-users once again to interacting with services just using our eyes, ears, brain and fingers. No code can execute on our behalf, while the centralized providers use hundreds of thousands of servers and millions of lines of code. Like a web browser, a mobile user-agent could do things such as strip ads, keep copies of my responses to services, let me participate simultaneously in multiple services (and bridge those services for me), and so on. The way to help end-users is not to have government smash big tech companies, but rather for government to empower individuals to have code that executes on their behalf.

What would it take to make bots a reality? One approach would be to require companies like Uber, Google, and Facebook to expose all of their functionality, not just through standard human usable interfaces such as apps and web sites, but also through so-called Application Programming Interfaces (APIs). An API is for a bot what an app is for a human. The bot can use it to carry out operations, such as posting a status update on a user's behalf. In fact, companies such as Facebook and Twitter have APIs, but they tend to have limited capabilities. Also, companies presently have the right to control access so that they can shut down bots, even when a user has clearly authorized a bot to act on his or her behalf.

Why can't I simply write code today that interfaces on my behalf with say Facebook? After all, Facebook's own app uses an API to talk to their servers. Well in order to do so I would have to “hack” the existing Facebook app to figure out what the API calls are and also how to authenticate myself to those calls. Unfortunately, there are three separate laws on the books that make those necessary steps illegal.

The first is the anti-circumvention provision of the DMCA. The second is the Computer Fraud and Abuse Act (CFAA). The third is the legal construction that by clicking “I accept” on a EULA (End User License Agreement) or a set of Terms of Service I am actually legally bound. The last one is a civil matter, but criminal convictions under the first two carry mandatory prison sentences.

So if we were willing to remove all three of these legal obstacles, then hacking an app to give you programmatic access to systems would be possible. Now people might object to that saying those provisions were created in the first place to solve important problems. That's not entirely clear though. The anti circumvention provision of the DMCA was created specifically to allow the creation of DRM systems for copyright enforcement. So what you think of this depends on what you believe about the extent of copyright (a subject we will look at in the next section).

The CFAA too could be tightened up substantially without limiting its potential for prosecuting real fraud and abuse. The same goes for what kind of restriction on usage a company should be able to impose via a EULA or a TOS. In each case if I only take actions that are also available inside the company's app but just happen to take these actions programmatically (as opposed to manually) why should that constitute a violation?

But, don't companies need to protect their encryption keys? Aren't “bot nets” the culprits behind all those so-called DDOS (distributed denial of service) attacks? Yes, there are a lot of compromised machines in the world, including set top boxes and home routers that some are using for nefarious purposes. Yet that only demonstrates how ineffective the existing laws are at stopping illegal bots. Because those laws don't work, companies have already developed the technological infrastructure to deal with the traffic from bots.

How would we prevent people from adopting bots that turn out to be malicious code? Open source seems like the best answer here. Many people could inspect a piece of code to make sure it does what it claims. But that's not the only answer. Once people can legally be represented by bots, many markets currently dominated by large companies will face competition from smaller startups.

Legalizing representation by a bot would eat into the revenues of large companies, and we might worry that they would respond by slowing their investment in infrastructure. I highly doubt this would happen. Uber, for instance, was recently valued at $50 billion. The company's “takerate” (the percentage of the total amount paid for rides that they keep) is 20%. If competition forced that rate down to 5%, Uber's value would fall to $10 billion as a first approximation. That is still a huge number, leaving Uber with ample room to grow. As even this bit of cursory math suggests, capital would still be available for investment, and those investments would still be made.

That's not to say that no limitations should exist on bots. A bot representing me should have access to any functionality that I can access through a company's website or apps. It shouldn't be able to do something that I can't do, such as pretend to be another user or gain access to private posts by others. Companies can use technology to enforce such access limits for bots; there is no need to rely on regulation.

Even if I have convinced you of the merits of bots, you might still wonder how we might ever get there from here. The answer is that we can start very small. We could run an experiment with the right to be represented by a bot in a city like New York. New York's municipal authorities control how on demand transportation services operate. The city could say, “If you want to operate here, you have to let drivers interact with your service programmatically.” And I'm pretty sure, given how big a market New York City is, these services would agree.

2 notes

·

View notes

Text

Website Progress Specialized Capabilities and Interacts With the Consumer at All Phases

Data technological know-how (IT) is 1 of the most booming sectors of the entire world financial state. In truth, the properly-becoming of this sector is critical to the overall performance of the economy as a full, with swings in the sector tremendously impacting the economic properly-currently being all around the planet. IT-associated services can be broadly divided into distinct, precise disciplines. A single of the most critical of this kind of IT company is web development. Net growth services can be outlined as any exercise carried out by qualified internet designers, in purchase to build a internet site. The world-wide-web page is meant for publication on the Globe Huge World wide web (i.e., the Net). On the other hand, there is a complex change concerning web improvement and world wide web designing solutions. When the latter will involve all the style and design and format elements of a website page, creating codes and building markups form significant duties underneath website improvement. Web site enhancement companies are necessary for a large assortment of IT-relate products and services. Some of the critical fields that require web growth consist of e-commerce, business development, technology of content material for the net, internet server configuration and client-aspect (or, server-facet) scripting. Though the world-wide-web enhancement teams of huge businesses can comprise of a substantial quantity of builders, it is not unheard of for lesser business enterprise to have a one contracting webmaster. It ought to also be comprehended that, though world wide web development demands specialised capabilities, it is commonly a collaborative work of the distinctive departments of a firm that make it a good results. For those who have virtually any queries about exactly where and also how you can employ Web Developer profile, it is possible to email us in the web site. The website growth method is a comprehensive just one, and can be broadly divided into distinct, more compact sections. In get to understand the mechanism of world-wide-web development, a single needs to search at the hierarchy of a regular these kinds of method. In general, any net progress course of action can comprise of the following sections: a) Client Side Coding -- This component of web development includes the usage of various computer languages. Such languages include: i) AJAX -- involving an up-gradation of Javascript or PHP (or, any similar languages). The focus is on enhancement of the end-user experience. ii) CSS -- involving usage of stylesheets, iii) Flash -- Commonly used as the Adobe Flash Player, this provides a platform on the client side, iv) JavaScript -- The programming language and different forms of coding, v) Microsoft SilverLight -- This, however, works only with the latest win9x versions, vi) XHTML -- This is used as a substitute for HTML 4. With the acceptance of HTML 5 by the international browser community, this would gain more in popularity. b) Server Side Coding -- A wide range of computer languages can be used in the server side coding component of a web development process. Some of them are: i) ASP -- this is proprietary product from Microsoft, ii) Coldfusion -- also known as Macromedia (its formal official name), iii) Perl and/or CGI -- an open source programming language, iv) Java -- including J2EE and/or WebObjects, v) PHP -- another open source language, vi) Lotus Domino, vii) Dot Net ( .NET) -- a proprietary language from Microsoft, viii) Websphere -- owned by IBM, ix) SSJS (a server-side JavaScript) -- including Aptana Jaxer and Mozilla Rhino, x) Smalltalk -- including Seaside and AIDA/Web, xi) Ruby -- comprising of Ruby on Rails, xii) Python -- this has web framework called Django. The consumer aspect coding is mostly connected to the structure and developing of internet webpages. On the other hand, server aspect coding makes sure that that all back again close systems work thoroughly, and the functionality of the web-site is suitable. These two parts of coding want to be put together in a skilled, qualified method in purchase to make world-wide-web growth an powerful process. Web site improvement is speedily gaining in attractiveness all in excess of the globe, in the IT sector. In this context, Australia, and in certain, Sydney, justifies a distinctive point out. There are really a amount of Sydney net development businesses. Net advancement in Sydney is an extremely very well-recognized provider and the builders from this space are comprehensive professionals in this field .

0 notes

Text

World wide web Improvement Specialized Expertise and Interacts With the Buyer at All Levels

Info technology (IT) is a single of the most booming sectors of the planet financial system. Without a doubt, the well-staying of this sector is very important to the general performance of the overall economy as a entire, with swings in the sector considerably affecting the financial well-currently being all over the earth. IT-relevant companies can be broadly divided into distinct, distinct disciplines. One particular of the most vital of these kinds of IT services is web advancement. Net enhancement assistance can be defined as any activity undertaken by professional world-wide-web designers, in get to build a world wide web webpage. The web webpage is meant for publication on the Planet Large World wide web (i.e., the World wide web). Having said that, there is a technological big difference in between internet improvement and web coming up with providers. Whilst the latter involves all the layout and layout features of a world wide web page, creating codes and creating markups variety crucial responsibilities beneath net development. Web site improvement companies are required for a huge vary of IT-relate providers. Some of the critical fields that entail net enhancement contain e-commerce, organization enhancement, technology of content for the internet, net server configuration and shopper-side (or, server-aspect) scripting. Although the internet advancement teams of significant providers can comprise of a significant quantity of builders, it is not unheard of for lesser organization to have a single contracting webmaster. It ought to also be recognized that, despite the fact that website advancement involves specialised competencies, it is generally a collaborative effort and hard work of the distinctive departments of a business that make it a achievements. The world wide web advancement procedure is a in depth one particular, and can be broadly divided into unique, more compact sections. In buy to have an understanding of the mechanism of web development, a single demands to search at the hierarchy of a usual such procedure. In common, any website progress approach can comprise of the next sections: a) Client Side Coding -- This component of web development includes the usage of various computer languages. When you adored this information and also you want to obtain more info about Web Developer profile i implore you to go to our own web-site. Such languages include: i) AJAX -- involving an up-gradation of Javascript or PHP (or, any similar languages). The focus is on enhancement of the end-user experience. ii) CSS -- involving usage of stylesheets, iii) Flash -- Commonly used as the Adobe Flash Player, this provides a platform on the client side, iv) JavaScript -- The programming language and different forms of coding, v) Microsoft SilverLight -- This, however, works only with the latest win9x versions, vi) XHTML -- This is used as a substitute for HTML 4. With the acceptance of HTML 5 by the international browser community, this would gain more in popularity. b) Server Side Coding -- A wide range of computer languages can be used in the server side coding component of a web development process. Some of them are: i) ASP -- this is proprietary product from Microsoft, ii) Coldfusion -- also known as Macromedia (its formal official name), iii) Perl and/or CGI -- an open source programming language, iv) Java -- including J2EE and/or WebObjects, v) PHP -- another open source language, vi) Lotus Domino, vii) Dot Net ( .NET) -- a proprietary language from Microsoft, viii) Websphere -- owned by IBM, ix) SSJS (a server-side JavaScript) -- including Aptana Jaxer and Mozilla Rhino, x) Smalltalk -- including Seaside and AIDA/Web, xi) Ruby -- comprising of Ruby on Rails, xii) Python -- this has web framework called Django. The consumer facet coding is generally connected to the format and planning of net pages. On the other hand, server side coding guarantees that that all back again close devices work adequately, and the features of the web-site is correct. These two places of coding require to be blended in a qualified, skilled method in purchase to make internet enhancement an powerful process. Website growth is swiftly gaining in acceptance all over the earth, in the IT sector. In this context, Australia, and in specific, Sydney, justifies a particular point out. There are very a variety of Sydney web advancement organizations. World-wide-web progress in Sydney is an exceptionally very well-acknowledged company and the developers from this place are thorough industry experts in this discipline.

0 notes

Text

Released in 2008, OPC Unified Architecture (OPC UA) is the data exchange standard and OPC machine-to-machine communication protocol to facilitate industrial automation. It enables users to facilitate safe data exchange between different industrial products and across operating systems. The specification of the OPC UA standard is as per the specifications developed in close co-operation between manufacturers, users, research institutes to enable safe information exchange in heterogeneous systems.

Why Choose OPC UA?

OPC is fast becoming an accepted technology in areas like the industrial Internet of Things (IIoT). With the introduction of Service Oriented Architecture (SOA) in industrial automation systems, OPC UA started offering a platform-independent solution by creating a mix of benefits of web services and integrated security with a consistent information model of data. The IEC standard OPC UA is suitable for interoperability with other industry standards.

OPC UA offers a standard interface for data exchange across the different levels of the communication pyramid. Another critical advantage of OPC UA is the ease of deployment, as it accommodates legacy systems and existing infrastructure to offer scalability. Apart from this, OPC UA acts as a bridge between the high-end servers and low level field systems that includes sensors, embedded devices, controllers etc.

Business Benefits of OPC UA:

Nowadays, businesses are focussing more on enhancing connectivity and communication in the manufacturing sector. Devices like servers no longer operate in isolation. Different devices like PLCs, HMIs, and machines produce data in their formats, and every device's data interpretation is needed for accurate operations. Here, OPC UA works as a translator to understand data from different sources (devices) and send it back and forth in every device's format.

In simple words, OPC removes the barriers between proprietary factory floor devices, systems, and manufacturing software in the facility. It reduces system integration, installation costs of factory automation, and process control systems. In short, OPC UA can help business owners solve real manufacturing problems today.

How OPC UA will Revolutionize Industrial Automation

The design of OPC UA is suitable for connecting the factory floor to the enterprise. Your servers will support the data transport used in traditional IT-type applications and connect with IT applications over Simple Object Access Protocol (SOAP) or HTTP. However, mapping the transport layer depends on the OPC UA services, data, and object models. Meaning, if other transport protocols are incorporated in the future, the same OPC UA Information Model services can be applied for that newly added transport.

OPC UA simplifies machine to machine (M2M) communication and is explicitly designed for industrial automation. Thus, enabling data exchange on devices and bridges the gap between information technology and operational technology.

Businesses would not reap the benefits of the Internet of Things (IoT) and Industry 4.0 without using OPC UA. Additionally, for SMBs, OPC UA provides an easier way to leverage the data generated by small businesses from existing technologies, especially machine vision.

OPC UA and Industry 4.0: A detailed discussion

The main challenge of Industry 4.0 and the industrial Internet of Things (IIoT) is the security and standardized exchange of data across machines, and services from different industries. In April 2015, the Reference Architecture Model for Industry 4.0 (RAMI 4.0) listed the IEC 62541 standard OPC Unified Architecture as the most recommended solution for implementing the communication layer.

Unlike other protocols, OPC UA facilitates standard data modelling throughout the automation systems to achieve seamless interoperability. The actual power of automation lies in the meaning and description of the data. And OPC UA provides a framework and standards for data modelling.

OPC UA lays the foundation for industrial automation in the following ways:

Connecting machine data to the enterprise

Automation in industries opens doors for new business opportunities, enhancing services and productivity. The main challenge of industrial automation is to effectively transfer the raw data generated from the plant floor devices to the applications like ERPs, CRMs, and more. It is important to get insights and actionable decisions to stay at the top.

A fully automated OEM process system gives real-time and accurate data from these systems for analysis, maintenance notifications etc., depending upon your priorities. Here, OPC UA provides two ways for this integration process:

Using customized solutions through OPC UA stack APIs

Deliver COTS solutions like connectors, servers, clients, etc. for connecting machines, floor devices, and other equipment, etc. to the enterprise

Meets the Industry 4.0 requirements

Industry 4.0 demands interoperability and standardized data connectivity for meeting requirements like:

1. Integration across every level

2. Secure transfer and authentication at user levels.

3. Meeting the industry standards.

4. Scalability

5. Mapping of various data models to enable device and platform agnostic feature among the various products and their manufacturers

6. Ability to plug-and-play

These are some of the requirements for meeting industry 4.0 standards. Here, OPC UA works as the common data connectivity and communication standard for local and remote device access in IoT, M2M, and Industry 4.0 settings.

Various communication mechanisms where OPC UA works as the common data connectivity and communication standards:

1. OPC UA Client/Server Model:

It is a one-to-one communication mechanism that is used in industrial automation. Here, the OPC server transfers the data to the OPC client as per the request. This data transfer is done in an encrypted and reliable manner using the communication protocols like EtherNet/IP, Modbus, etc.

2. OPC UA PubSub Model:

Under this network, a one-to-many or many-to-one communication process is established. In this process, the information is available from the publisher and accessible by multiple subscribers. Here, OPC UA PubSub facilitates real-time communication at the control level like sensor or actuator and meets the requirements of time-critical applications.

3. OPC UA over TSN model:

OPC UA over TSN model offers bandwidth-intensive IT traffic on a single cable without interference between them. TSN provides interoperability for the data link (layer 2) of the communication network and guarantees scalable bandwidths for real-time capability and low latency.

Future of OPC UA:

The OPC Foundation has created several technical committees for extending the scope and function of OPC UA specifications. The working groups is established to make OPC UA address a broader range of manufacturing connectivity and interoperability issues. Some of the existing OPC specifications include OPC Alarms & Events, OPC Historical Data Access, and OPC Security, OPC Data eXchange (DX) and OPC XML-DA, and more. OPC DX standard offers interoperability between controllers (sensors, actuators) connected to Ethernet networks using protocols like Ethernet/IP, PROFINET, and Foundation Fieldbus High-Speed Ethernet.

OPC XML-DA provides several benefits like publishing floor data among the manufacturing enterprise and using XML, HTTP, SOAP, and more. In short, OPC UA will be a force to reckon with in the future.

Conclusion

There are several protocols, but OPC UA offers security from the ground up and offers the most secure technology for moving data between factory, enterprise applications, and the cloud. OPC UA is not limited to a "protocol"; it is an architecture that expanded to meet the market requirement. Let Utthunga help you make the smooth transition to a digitally transformed and industry 4.0 ready company with our experience and OPC UA expertise.

0 notes

Text

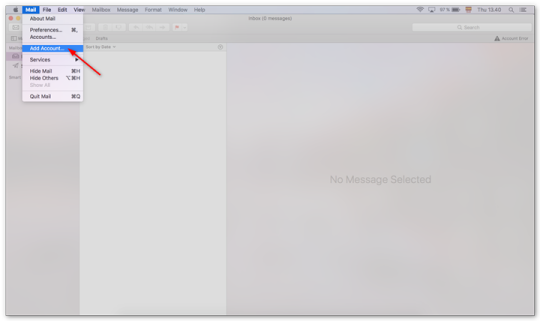

Plugins For Mac Os X Mail

Apple Mail Plugins

Mac Os X Mail Plugins

Plugins For Mac Os X Mail Free

Courier 1.0.9

This article is adapted from Josh Aas's blog post Writing an NPAPI plugin for Mac OS X. Before you go on reading this article, you may wish to grab the sample code located here, as it will be referenced during this tutorial. The sample is posted under a BSD-style license that means you can use it for anything, even if you don't plan to release your source code. Contains four plug-ins. Has a good installation tool. Have the option to add some or all. MailPluginFix is a free tool which will help you to fix any incompatible Mail.app plugin (GrowlMail for example) after an update of Mac OS X. Just start the application and you will see a list of all incompatible plugins for your current Mail.app installation. Just select the ones you would like to fix and press the start button in the toolbar.

The Courier mail transfer agent (MTA) is an integrated mail/groupwareserver based on open commodity protocols, such as ESMTP, IMAP, POP3,LDAP, SSL, and HTTP. Courier provides ESMTP, IMAP, POP3, webmail, andmailing list services within a single, consistent, framework. Individualcomponents can be enabled or disabled at will. The Courier mail servernow implements basic web-based calendaring and scheduling servicesintegrated in the webmail module. Advanced groupware calendaringservices will follow soon.

License: Freeware

Developer/Publisher: Double Precision, Inc.

Modification Date: August 28, 2019

Requirements: macOS

Download File Size: 7.5 MB

Dovecot 2.3.7.2 Dovecot is an open source IMAP and POP3 email server for Linux/UNIX-likesystems, written with security primarily in mind. Dovecot is anexcellent choice for both small and large installations. It's fast,simple to set up, requires no special administration and it uses verylittle memory.

License: Freeware

Developer/Publisher: Timo Sirainen

Modification Date: August 26, 2019

Requirements: macOS

Download File Size: 7.1 MB

Emailchemy 14.3.9 Emailchemy converts email from the closed, proprietary file formats ofthe most popular (and many of yesterday’s forgotten) email applicationsto standard, portable formats that any application can use. Thesestandard formats are ideal for importing, long term archival, databaseentry, or forensic analysis and eDiscovery.

License: Demo, $30 individual, $50 family

Developer/Publisher: Weird Kid Software